SUMMARY

Electroencephalography (EEG) – the direct recording of the electrical activity of populations of neurons – is a tremendously important tool for diagnosing, treating, and researching epilepsy. While standard procedures for recording and analyzing human EEG exist and are broadly accepted, no such standards exist for research in animal models of seizures and epilepsy – recording montages, acquisition systems, and processing algorithms may differ substantially among investigators and laboratories. The lack of standard procedures for acquiring and analyzing EEG from animal models of epilepsy hinders the interpretation of experimental results and reduces the ability of the scientific community to efficiently translate new experimental findings into clinical practice. Accordingly, the intention of this report is twofold: 1) to review current techniques for the collection and software-based analysis of neural field recordings in animal models of epilepsy, and 2) to offer pertinent standards and reporting guidelines for this research. Specifically, we review current techniques for signal acquisition, signal conditioning, signal processing, data storage, and data sharing, and include applicable recommendations to standardize collection and reporting. We close with a discussion of challenges and future opportunities, and include a supplemental report of currently available acquisition systems and analysis tools. This work represents a collaboration on behalf of the International League Against Epilepsy (ILAE)-American Epilepsy Society (AES) Translational Research Task Force (TASK1-Workgroup 5), and is part of a larger effort to harmonize video-electroencephalography interpretation and analysis methods across studies using in vivo and in vitro seizure and epilepsy models.

Keywords: Signal processing, Data sharing, Data storage, electroencephalography, electrocorticography

INTRODUCTION

Direct recording of the electrical activity of the brain has been an indispensable tool for the diagnosis, treatment, and research of seizures and epilepsy for several decades1; 2. Over time, clinicians have developed standard procedures for the recording and analysis of human neurological signals, including electrode placement3, signal interpretation4; 5, and device design6. By contrast, no such standards exist for research in animal models of epilepsy – electrode placement, recording montages, acquisition systems, and processing algorithms are independently developed by researchers according to their specific interests and thus may differ substantially.

Ongoing advances in experimental techniques and computational power have provided increasingly sophisticated analytic tools and algorithms, many of which rely on complex mathematical processing of large amounts of data. Software-based analysis is thus both a powerful tool for improving the yield of studies leveraging neural data and a dangerous weapon that can irreversibly distort the signal if used improperly. Researchers wishing to perform software-based analysis of recorded neural data may consult a number of excellent resources in the literature and may utilize highly refined software packages available in the online community. Here, our goal is to supplement these resources with a general overview of modern concepts in the acquisition and software-based analysis of neural data, including analog and digital signal acquisition, processing, storage, and analysis techniques used in the study of epilepsy. Adopting these concepts should improve the validity of acquired data and enhance effective translation of experimental results into clinical practice. A glossary of the technical terms used in this manuscript appears in Table 1.

Table 1.

Definitions of technical terms used throughout the manuscript.

| Term | Definition |
|---|---|
| Grounding and referencing | |
| Ground | Used in a general sense to refer to the reference point for an electrical circuit. |
| Electrical isolation | The physical and electrical separation of the animal circuit from the mains earth (equipment) circuit. |
| Earth ground | The reference point for the equipment electrical circuit – equivalent to the earth ground in the wall outlet. |
| Animal common | The reference point for the isolated portion of the equipment. |
| Ground loop | An equipment setup in which two or more ground points on a circuit are at different voltage potentials. |
| Star topology | A setup in which equipment connected to an animal converges to a single earth connection. |
| Amplification | |
| Preamplifier | A low-gain amplifier that converts the neural signal from high-impedance to low-impedance, also called the headstage or jackbox. |
| Common-mode rejection | The removal of signals common to both inputs of an amplifier in order to reject ambient noise from the recorded signal. |
| Differential recording | Recording a neural signal using a reference relatively close to the signal of interest. |
| Referential recording | Recording a neural signal using a common reference located relatively far from the signal of interest. |
| Recording montage | The grouping of source and reference electrodes used for collecting and reviewing data. |
| Video Monitoring | |
| Video-EEG | Video monitoring in combination with EEG acquisition. |
| Signal Digitization | |
| Analog-to-digital converter | An electronic component that samples a continuous input signal and converts it to a series of discrete measurements. |
| Sampling frequency | The frequency at which the continuous input signal is converted to discrete measurements. |
| Nyquist rate | The minimum sampling frequency required for a given application, equal to twice the maximum frequency content of the input signal. |
| Aliasing | Signal distortion occurring when high frequency signal content incorrectly appears as lower frequency signal content during data acquisition or review. |
| Nyquist frequency | The maximum input frequency that may be accurately captured at a given sampling frequency, equal to one half of the sampling frequency. |
| Downsampling | Reducing the sampling frequency of data. |
| Bit resolution | Refers to the number of steps the analog-to-digital converter will use to digitize the input signal – calculated as two to the power of the number of bits. |
| Dynamic range | The ratio between the largest signal a system can process and the noise floor. |
| Voltage conversion factor | A constant scaling factor used to reconstruct a digitized signal (stored as integers) to a signal represented in volts. |
| Filtering | |
| Finite impulse response (FIR) filter | A filter with a time-limited response to a very brief input. |
| Infinite impulse response (IIR) filter | A filter with a non-time-limited response to a very brief input. |
| Low-pass filter | A filter that preferentially passes frequency content below a specified cutoff frequency, while removing frequency content above the cutoff frequency. |
| High-pass filter | A filter that preferentially passes frequency content above a specified cutoff frequency, while removing frequency content below the cutoff frequency. |
| Band-pass filter | A filter that preferentially passes frequency content between two cutoff frequencies, while removing all other frequency content. |
| Group delay | The time delay of different frequency components of a filtered signal, equal to the derivative of the phase versus frequency response. |
| Filter Design | |
| Sinc function | A function commonly used to build digital FIR filters, defined as sinc(x) = sin(x)/x. |
| Notch filter | A filter that removes a specific frequency band, usually 50 or 60 Hz (the frequency of the mains power supply). |
| Spectral Analysis | |
| Fast Fourier Transform (FFT) | An efficient algorithm for decomposing a signal into a series of sine waves of different frequencies, often used for spectral analysis. |
| Joint time-frequency analysis (JTFA) | A class of techniques that express a signal in both time and frequency domains simultaneously, most commonly in order to track the evolution of the signal spectral content over time. |
| Short-term Fourier transform (STFT) | A JTFA algorithm in which an FFT is repeatedly calculated for brief, non-overlapping segments of the recorded signal. |
| Spectrogram | A two-dimensional heatmap plot of the frequency content of a signal versus time, calculated using Fourier analysis. |
| Scalogram | A two-dimensional heatmap plot of the frequency content of a signal versus time, calculated using wavelets. |
| Stationarity | An assumed property of time-series data which posits that the statistical properties of a signal (mean, variance, etc.) do not fundamentally change over the duration of recording. |
| Artifact Recognition and Rejection | |
| Features | Quantitative measures of a recorded signal. |
| Data Storage and Data Sharing | |
| Metadata | Information describing stored data, such as sampling frequency, date, method of collection, etc. |
| Header | A section of a data file, usually placed at the beginning of a file, which contains information (metadata) explaining the rest of the data in the file. |

TECHNIQUES

Data acquisition

Grounding and referencing

Meaningful software-based analysis of electrophysiological brain data is predicated on the acquisition of high-quality signals. Likewise, the acquisition of high-quality electroencephalography (EEG), electrocorticography (ECoG), intracranial EEG (iEEG), and stereoEEG (SEEG) data is critically dependent on proper recording setup. This section provides a brief overview of some of the important considerations for ensuring proper recording setup, including grounding, electrical isolation, signal referencing, amplification, and video monitoring in an experimental setting.

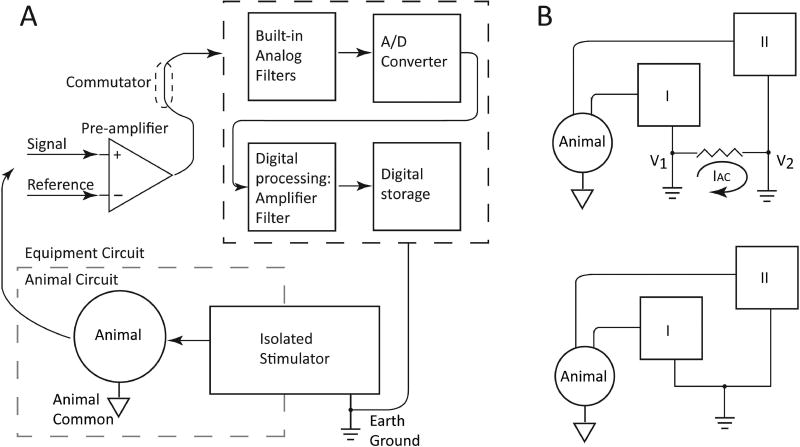

Proper subject and equipment grounding is the single most important consideration for acquiring high-quality neurophysiological recordings7; 8. In electrophysiology, ground is a somewhat ambiguous term that is used to generally refer to the reference point for an electrical circuit. Since there are two electrical circuits to consider in electrophysiology – the animal circuit and the equipment circuit – ground may refer to either animal common (for the animal circuit) or earth ground (for the equipment circuit). We define these terms below and will be careful to distinguish between the two when relevant.

It is important to distinguish between earth ground and animal common because some recording systems (and most electrical stimulators) are electrically isolated (Figure 1A). Electrical isolation is the physical and electrical separation of the animal circuit from the mains earth (equipment) circuit – this hinders current flow across the isolation barrier and reduces the risk of inadvertent shock hazards and leakage current9; 10. It also prevents multiple devices connected to the same recording subject from sitting at different ground potentials, which again prevents a shock hazard and also prevents ground loops (see discussion on ground loops, below). Because of the isolation barrier, earth ground and animal common are distinct reference points. Earth ground is the ground reference for the equipment circuit and is the same as the earth ground in the wall outlet. Animal common, or animal ground, is the “floating” potential of the animal and is to be used as the common reference point for all electrophysiological signal acquisition (see discussion on referential recording, below).

Figure 1. Proper equipment setup and grounding.

A) Block diagram of sample equipment setup for electrophysiology recording. Electrical signals from recording electrodes are referenced and amplified by the pre-amplifier, then filtered, digitized, processed, and stored by the recording system (black dashed box at upper right). Note that the animal circuit (gray dashed box at lower left) is referenced to the animal common and is electrically isolated from the equipment circuit, which is referenced to the earth ground. B) Block diagram illustrating how proper grounding technique can prevent a ground loop. Top, connecting systems I and II to earth ground at different points (V1 and V2) may enable unwanted current (IAC) to flow between V1 and V2, introducing electromagnetic artifact on both systems and severely degrading recording quality. Bottom, connecting both systems I and II to earth ground at a single point prevents a ground loop by eliminating the voltage drop between the two system grounds.

While all clinical recording systems are required to be electrically isolated for patient safety10; 11, some recording systems for use with animals are not isolated because of the added design complexity and reduced likelihood of many systems being connected to the same recording subject. Therefore, in more complicated experimental setups, it is important to consider not only the proper equipment and animal grounding setup, but also the need for electrical isolation of various pieces of equipment. If the recording system is not itself electrically isolated, ensure that all other connected systems (e.g., stimulators) are electrically isolated. If electrical isolation is not built into a given device, one can use a stand-alone isolation transformer to isolate the device.

Proposal -> If using non-isolated equipment, consider the need for a stand-alone isolation transformer for the non-isolated piece of equipment – especially if connecting more than one piece of equipment to the animal at a time. If using a stand-alone isolation transformer, report the manufacturer and model number.

A reliable, low-impedance electrical connection must be established and maintained between the animal and the animal common input of the recording system to ensure noise-free recordings9. This connection establishes the animal common reference for the animal circuit (Figure 1A), and is important for ensuring the stability and overall quality of the recording9. Vendors will be able to provide guidance on the best method for establishing the animal common connection between the animal and a particular recording system.

Ensuring proper recording setup becomes much more complicated when multiple pieces of equipment – for example, a stimulator and a recording system – are connected to the animal simultaneously. It is imperative to avoid a ground loop7; 12. A ground loop occurs when there are two or more ground points on a circuit that are at different voltage potentials (Figure 1B), resulting in a current flow between them that will appear on the recorded signal as unwanted noise (almost always as 50 or 60 Hz line noise). Ground loops may occur when multiple animal common connections are in place, but more often occur when multiple earth grounds are in place. To avoid a ground loop, ensure that animal common connections converge to a single connection at the equipment animal common input. Likewise, ensure that earth ground connections converge to a single earth connection (Figure 1B), for example a single power strip or a single wall outlet – this is commonly called a star topology.

Proposal -> Specifically state the placement of the common connection on the animal when reporting data, as it is an important consideration in the quality of the data obtained.

Amplification

In order to obtain usable neurophysiological data, the signal must be appropriately amplified before digitization. The first stage of signal processing is the preamplifier, also called headstage or jackbox (Figure 1A). The headstage is a low-gain amplifier that converts the neural signal from high-impedance to low-impedance12. Practically speaking, the headstage improves signal transmission and reduces noise pickup on the recording. Placing the headstage close to the signal source is recommended in order to reduce the length of the high-impedance cable run7. A high-impedance cable run will function as an antenna, picking up movement artifacts and line noise artifacts. Most commercially available systems are carefully calibrated to limit noise pickup and maintain a high signal-to-noise ratio while still providing a flexible interface for connection with the recording subject.

Proposal -> To prevent noise pickup, place the headstage as close as possible to the animal and utilize sufficient shielding on the cable leads. Perform the recording in a Faraday cage if possible, keeping the animal and any unshielded connections and wires inside the cage.

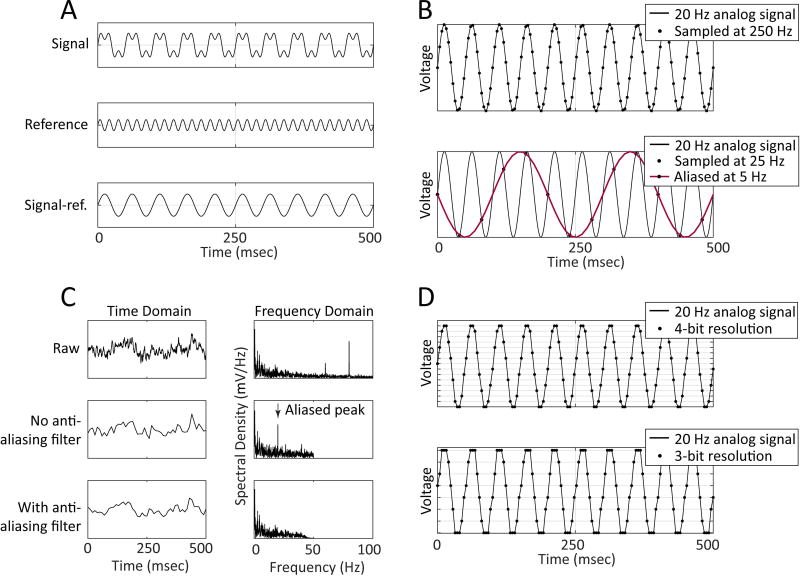

An important technique for removing noise from electrophysiological recordings is common-mode rejection13. This technique relies on the ability of differential amplifiers to reject signals common to both inputs – since noise is ambient while the neural signal is localized, noise appears on both inputs to the amplifier but the signal appears on just one (Figure 2A). Therefore subtracting one input from the other removes noise but spares the signal. To do this, electrophysiology systems subtract the signal at the reference electrode from the signal at the source electrode. The reference electrode may be another electrode located close (several mm) to the source electrode (called differential recording) or it may be the animal common connection which is generally located somewhat further away (called referential recording). The particular grouping of source and reference electrodes for collecting and reviewing data is called a recording montage. While differential recording usually provides a better signal-to-noise ratio and generally enhances the ability to quickly interpret the EEG, referential recording offers the ability to re-montage signals offline using different signal-reference electrode groupings, thus increasing the flexibility of the system14. It is important to note, however, that re-montaging is only possible if the desired reference signal is free of noise and/or amplifier saturation.

Figure 2. Analog-to-digital conversion.

A) In differential recording, a reference signal (middle) is compared to the acquired signal (top), and information common to both inputs is removed (“common mode rejection”). The resulting signal (bottom) is free of noise components appearing on both channels. B) Top, sampling a 20 Hz signal (black dots) at or above the Nyquist rate (sampled at 250 Hz here) enables the original signal to be accurately represented in digital form. Bottom, sampling a 20 Hz signal (black dots) below the Nyquist rate causes the signal to alias at a lower frequency (red line). C) Taking a raw signal (top row) and then downsampling (middle row) without first low-pass filtering the data may induce aliasing in the resulting signal (added peak in frequency domain at ~20 Hz). Low-pass filtering prior to downsampling (bottom row) prevents aliasing. D) Bit resolution determines the precision of the signal digitization on the voltage scale. Whereas sampling with 4-bit resolution (top) uses 2^4=16 voltage levels to store data, sampling with 3-bit resolution (bottom) uses only 2^3=8 voltage levels to store data – reducing the resolution of the acquired signal.

Proposal -> Collect and store data referentially, using the animal common as the reference for all recording electrodes. This way, data can be digitally re-montaged after collection, permitting more flexible and in-depth offline analysis.
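As a minimal sketch of offline re-montaging, the example below stores two channels referentially and derives a differential channel by subtraction. The channel names, sampling frequency, amplitudes, and the helper `remontage` are hypothetical and chosen only for illustration. The sketch assumes the idealized case in which line noise appears identically on both channels, which real recordings only approximate.

```python
import math

def remontage(referential, source, reference):
    """Derive a differential channel offline by subtracting one
    referentially recorded channel from another, sample by sample."""
    return [s - r for s, r in zip(referential[source], referential[reference])]

fs = 500          # hypothetical sampling frequency (Hz)
n_samples = 500   # one second of data

# Idealized assumption: 60 Hz noise is identical on both channels,
# while the 8 Hz "neural" signal is seen only by ch1.
line_noise = [0.5 * math.sin(2 * math.pi * 60 * i / fs) for i in range(n_samples)]
neural = [0.1 * math.sin(2 * math.pi * 8 * i / fs) for i in range(n_samples)]

# Both channels were recorded against the animal common (referential recording).
referential = {
    "ch1": [s + q for s, q in zip(neural, line_noise)],
    "ch2": list(line_noise),
}

# Re-montage after collection: ch1 referenced to ch2.
bipolar = remontage(referential, "ch1", "ch2")
max_residual = max(abs(b - s) for b, s in zip(bipolar, neural))
print(f"largest deviation from the noise-free signal: {max_residual:.2e}")
```

Because the referential channels are kept, any other source-reference pairing can be derived later in the same way, provided the chosen reference channel is not noisy or saturated.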

Video monitoring

Video-EEG, or video monitoring in combination with EEG acquisition, is highly recommended in order to characterize the epileptic phenotype in animal models. Video-EEG enables seizure confirmation in the case of focal seizures without an obvious motor pattern, and enables the exclusion of various types of artifact associated with a given EEG event15; 16. The extent of video monitoring is dependent on the needs of the study and should be reported in manuscripts17. That said, with modern technology it is relatively straightforward and cost-effective to obtain and store continuous, long-term EEG and video data. Therefore, we recommend capturing simultaneous EEG and video data continuously for the duration of the experiment in almost all circumstances. To obtain a useful video-EEG, it is critical to synchronize the video monitoring system with the EEG system. This can be accomplished in a variety of ways – the most straightforward being to use the same acquisition computer to run both the video and the EEG capture. However, even if using the same acquisition computer for video and EEG recording, it is advised to test the synchronization routinely by generating a video-EEG artifact (e.g., connecting or disconnecting the animal under video-capture).

Proposal -> Video monitoring should be incorporated with simultaneous EEG recording to help classify motor seizures and identify non-motor seizures or behavioral artifacts appearing on the EEG.

Proposal -> Continuously capture and store video and EEG data for the duration of the experiment in almost all circumstances. Report the extent of video-EEG recording in manuscripts.

Proposal -> Ensure that the video monitoring and the EEG acquisition systems are synchronized throughout the duration of the experiment.
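One possible way to quantify synchronization from routine test artifacts is sketched below. It assumes that each deliberately generated artifact has been time-stamped once on the EEG clock and once on the video clock. The timestamps and the helper `fit_clock_mapping` are hypothetical. An ordinary least-squares line then gives a fixed offset and a drift estimate between the two clocks.

```python
def fit_clock_mapping(eeg_times, video_times):
    """Least-squares fit of video_time = slope * eeg_time + offset."""
    n = len(eeg_times)
    mean_x = sum(eeg_times) / n
    mean_y = sum(video_times) / n
    sxx = sum((x - mean_x) ** 2 for x in eeg_times)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(eeg_times, video_times))
    slope = sxy / sxx
    offset = mean_y - slope * mean_x
    return slope, offset

# Hypothetical times (s) at which the same test artifacts were seen
# in the EEG file and in the video file.
eeg_times = [10.0, 3610.0, 7210.0, 10810.0]
video_times = [10.25, 3610.286, 7210.322, 10810.358]

slope, offset = fit_clock_mapping(eeg_times, video_times)
drift_ppm = (slope - 1.0) * 1e6  # relative clock drift in parts per million

print(f"offset at EEG time zero: {offset:.4f} s, drift: {drift_ppm:.1f} ppm")
```

A drift that stays near zero and an offset that is stable across tests suggest the two systems remain synchronized. A growing offset indicates that timestamps need correcting before video and EEG events are compared.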

Signal Conditioning

Signal digitization

Signal conditioning, in this context, refers to the preparation of the neural signal for storage in a digital format. After preamplification, the signal will pass through an analog-to-digital converter (ADC). The ADC samples the electrode signal at a given sampling frequency and bit resolution (Figure 2B–C), converting the continuous electrode signal into a discrete digitized signal by taking measurements of the incoming signal at evenly spaced time steps18. Digitized signals afford the system several advantages, including ease of signal compression, speed of processing and transmission, and immunity to several forms of noise19; 20. Following digitization, the signal may be further amplified, filtered, and otherwise processed as needed.

The most critical consideration for analog-to-digital conversion is the Nyquist or Nyquist-Shannon sampling theorem21; 22. This theorem states that a signal at a given frequency must be sampled at least twice per period in order to be accurately represented7; 23. By extension, the Nyquist rate is the minimum sampling frequency required for a given application and is equal to twice the maximum frequency content of the input signal. If the sampling frequency is set below the Nyquist rate, high-frequency signals will appear as lower-frequency signals that are not actually present in the signal (Figure 2B) – this is called aliasing. One may prevent aliasing by using a sufficiently high sampling rate and by using an anti-aliasing low-pass filter to remove signal content above the Nyquist frequency prior to sampling. The Nyquist frequency is equal to one half of the sampling rate, and typically anti-aliasing filters are set to have a cutoff frequency well below the Nyquist frequency to account for the rolloff of the filters. For most recording systems, the anti-aliasing filters are not user-configurable, as they are implemented in hardware – likewise, most recording systems will restrict the sampling frequency to an appropriate range based on the anti-aliasing filter settings. Importantly, note that optical aliasing may also occur during visual review of the recorded EEG as a result of limitations in the resolution of the display.
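The folding behaviour described above can be sketched numerically. The helper names below are illustrative, and the example considers only a single pure sinusoid. It reports the frequency at which a sinusoid appears after sampling, then checks that a 20 Hz sine sampled at 25 Hz yields exactly the same samples as an inverted 5 Hz sine.

```python
import math

def nyquist_frequency(fs):
    """Highest frequency that can be represented at sampling frequency fs."""
    return fs / 2.0

def apparent_frequency(f_signal, fs):
    """Frequency at which a sinusoid of f_signal appears after sampling at fs."""
    folded = f_signal % fs
    return min(folded, fs - folded)

# 20 Hz sampled at 250 Hz is below the Nyquist frequency and is preserved.
print(apparent_frequency(20, 250), "Hz, Nyquist frequency", nyquist_frequency(250), "Hz")
# 20 Hz sampled at 25 Hz exceeds the Nyquist frequency and folds to a lower one.
print(apparent_frequency(20, 25), "Hz, Nyquist frequency", nyquist_frequency(25), "Hz")

# Numerical check: the undersampled 20 Hz sine is indistinguishable,
# sample for sample, from an inverted 5 Hz sine.
fs = 25
true_samples = [math.sin(2 * math.pi * 20 * n / fs) for n in range(50)]
alias_samples = [-math.sin(2 * math.pi * 5 * n / fs) for n in range(50)]
max_difference = max(abs(a - b) for a, b in zip(true_samples, alias_samples))
print(f"largest sample difference: {max_difference:.2e}")
```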

It is preferable to collect data using a sampling frequency well above the Nyquist rate, not only to prevent aliasing but also to collect higher resolution signals. While higher sampling frequencies come with the tradeoff of requiring more storage space and more time to process, continuing advances in computational power and technology reduce this concern. Additionally, data can often be downsampled to a lower sampling frequency to improve the speed of processing. Importantly, in order to avoid aliasing, it is imperative to low-pass filter the signal prior to downsampling (Figure 2C)7.
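The effect of skipping the low-pass step can be illustrated with a short sketch. All parameter values here are assumptions chosen for illustration: a 90 Hz sinusoid sampled at 1000 Hz is reduced to 100 Hz, once by simply keeping every tenth sample and once after a Hamming-windowed sinc low-pass filter with a 40 Hz cutoff. The hand-written filter is a stand-in for whatever validated routine a laboratory normally uses.

```python
import math

def lowpass_fir(cutoff_hz, fs, num_taps):
    """Hamming-windowed sinc low-pass kernel with unity gain at 0 Hz."""
    fc = cutoff_hz / fs                 # cutoff in cycles per sample
    mid = (num_taps - 1) / 2.0
    taps = []
    for n in range(num_taps):
        x = n - mid
        ideal = 2.0 * fc if x == 0 else math.sin(2.0 * math.pi * fc * x) / (math.pi * x)
        window = 0.54 - 0.46 * math.cos(2.0 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]

def fir_filter(signal, taps):
    """Causal FIR filtering with zero initial conditions."""
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, h in enumerate(taps):
            if i - k >= 0:
                acc += h * signal[i - k]
        out.append(acc)
    return out

def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))

fs, factor = 1000, 10                   # new sampling frequency is 100 Hz
raw = [math.sin(2 * math.pi * 90 * i / fs) for i in range(2000)]

# Naive downsampling: 90 Hz lies above the new Nyquist frequency (50 Hz),
# so it aliases to 10 Hz at full amplitude.
naive = raw[::factor]

# Low-pass below the new Nyquist frequency first, then downsample.
taps = lowpass_fir(cutoff_hz=40.0, fs=fs, num_taps=101)
filtered = fir_filter(raw, taps)[::factor]
steady = filtered[len(taps) // factor + 1:]   # skip the filter start-up transient

print(f"RMS without filtering: {rms(naive):.3f}")
print(f"RMS with filtering:    {rms(steady):.5f}")
```

The unfiltered path retains the out-of-band component as a spurious 10 Hz oscillation, whereas the filtered path suppresses it before it can fold into the band of interest.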

Proposal -> Low-pass filter all data prior to digitization to avoid the aliasing of signals above the Nyquist frequency. Use a cutoff frequency for the filter of at most one-third of the sampling frequency.

Proposal -> Report the sampling frequency of data acquisition. Set the sampling frequency at least 2× the maximum frequency of the signal. Higher sampling frequencies (at least 4×; optimally 8–10× maximum frequency of the signal) may improve signal detection.

Proposal -> Always low-pass filter the signal prior to downsampling to avoid aliasing.

Proposal -> Detail filtering and downsampling steps when reporting the signal processing procedure. Include a thorough description of filters used and the sampling frequencies.

Just as the sampling frequency specifies the resolution of the digitization in the time domain, the bit resolution specifies the resolution of the digitization in the voltage domain (Figure 2D) and also determines the dynamic range. Bit resolution refers to the number of steps the ADC will use to digitize the incoming signal, calculated as two to the power of the number of bits – for example, a 12-bit system will digitize the signal into 2^12 = 4,096 steps24. Dynamic range is defined as the ratio between the largest signal a system can process and the noise floor18 – therefore, systems with a larger dynamic range can tolerate a wider variation in the amplitude of the input signal. The digitized signal is stored as a series of integers with a constant voltage conversion factor and sampling frequency. Almost all A/D converters currently on the market offer 16-bit resolution, which is sufficient for most users. A/D converters with higher bit resolution are not necessary for most applications.
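The arithmetic above can be sketched as follows. The ±5 mV input range and the function names are hypothetical. The dynamic range figure is an idealized upper bound that treats a single quantization step as the noise floor, and real systems will report a lower value set by amplifier noise.

```python
import math

def adc_steps(bits):
    """Number of discrete levels available to an ADC with the given bit resolution."""
    return 2 ** bits

def conversion_factor(v_min, v_max, bits):
    """Volts represented by one integer step for an ADC spanning v_min to v_max."""
    return (v_max - v_min) / adc_steps(bits)

def ideal_dynamic_range_db(bits):
    """Full scale relative to one quantization step, in decibels.
    Assumption: the noise floor equals one step (about 6.02 dB per bit)."""
    return 20.0 * math.log10(adc_steps(bits))

def counts_to_volts(counts, factor):
    """Reconstruct a signal in volts from stored integers."""
    return [c * factor for c in counts]

bits = 16
factor = conversion_factor(-5e-3, 5e-3, bits)    # hypothetical +/-5 mV input range
stored = [0, 1000, -1000, 32767]                 # example signed 16-bit integers
volts = counts_to_volts(stored, factor)

print(f"12-bit steps: {adc_steps(12)}")
print(f"16-bit step size: {factor * 1e6:.4f} microvolts")
print(f"16-bit ideal dynamic range: {ideal_dynamic_range_db(bits):.2f} dB")
```

Storing the conversion factor alongside the integer data, for example in the file header, is what allows the recording to be expressed in volts again later.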

Proposal -> Use a 16-bit recording system – higher resolutions are unlikely to be necessary. Include the bit resolution of all acquired data in published reports.

Filtering

Filtering is the process of attenuating specific frequency content in a recorded signal and is a critical component of signal conditioning and signal analysis18; 25; 26. It is important to note that filtering by definition distorts the recorded signal (see27, for example) and may actually introduce artifacts into the data. Accordingly, it is imperative to filter data only as needed, using appropriately designed filters, and to accurately and thoroughly describe filters and their application in published reports. Importantly, there is no single filter or filter type that may be universally applied – each has its own particular advantages and disadvantages and requires a reasonable understanding of the constraints involved.

Filters used for signal conditioning and signal analysis will be digital filters, i.e., filters defined in software or firmware and applied to the digitized signal. We will not discuss analog filters in detail, as these are defined in the hardware of the system and will be appropriately specified by the manufacturer of the recording and digitization equipment.

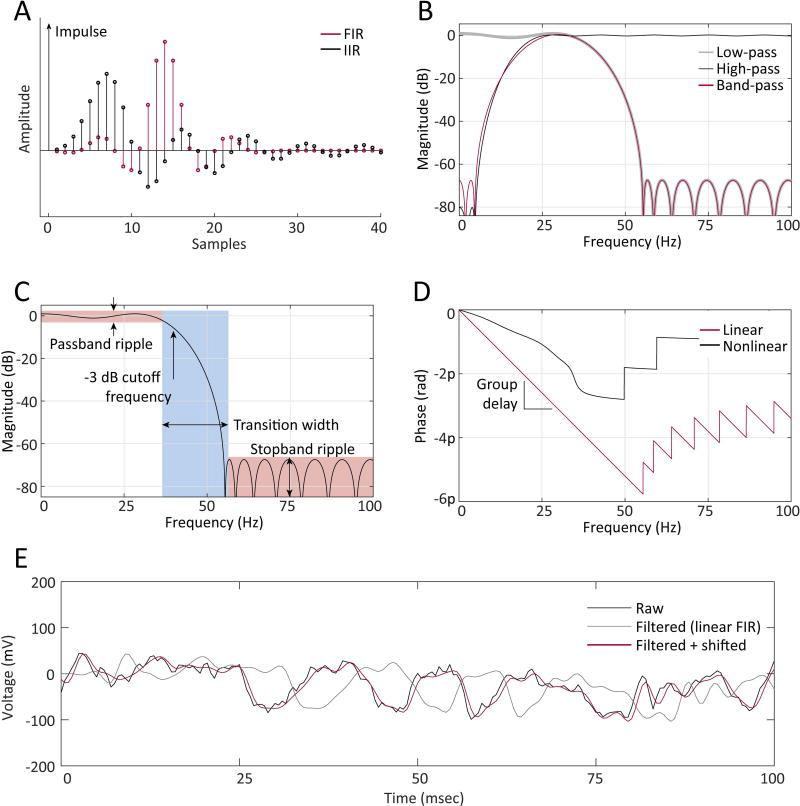

The most important way to classify filters is based on their response to an impulse, or very brief input (Figure 3A). Finite impulse response (FIR) filters will produce an output of limited duration, while infinite impulse response (IIR) filters will produce an output of unlimited duration, although the response will decay asymptotically towards zero18. Low-pass (sometimes called high-frequency or high-cut) filters allow frequencies lower than the filter cutoff frequency to pass. In contrast, high-pass (also called low-frequency or low-cut) filters pass frequencies above the filter cutoff frequency. Band-pass filters allow a specific range of frequencies to pass (Figure 3B), while notch filters remove a specific range of frequencies, e.g. 50/60 Hz generated by the mains power supply. Note that the impulse response describes the filter’s response in the time domain, while the low-pass/high-pass/band-pass descriptors describe the filter’s response in the frequency domain (Figure 3A–B).
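The distinction between finite and infinite impulse responses can be made concrete with two deliberately simple filters. These are illustrative choices rather than filters recommended for EEG: a five-point moving average (FIR) and a single-pole recursive smoother (IIR), each fed a unit impulse.

```python
def fir_moving_average(signal, length=5):
    """FIR example: each output is the mean of the current and previous samples
    (zero initial conditions)."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - length + 1): i + 1]
        out.append(sum(window) / length)
    return out

def iir_single_pole(signal, a=0.8):
    """IIR example: the output feeds back on itself, y[n] = a*y[n-1] + (1-a)*x[n]."""
    out, prev = [], 0.0
    for v in signal:
        prev = a * prev + (1.0 - a) * v
        out.append(prev)
    return out

impulse = [1.0] + [0.0] * 49            # a very brief input
fir_response = fir_moving_average(impulse)
iir_response = iir_single_pole(impulse)

# The FIR response is exactly zero once the impulse leaves the 5-sample window.
print("FIR response after 5 samples:", fir_response[5:8])
# The IIR response keeps decaying geometrically and never reaches exactly zero here.
print("IIR response at sample 49:", iir_response[49])
```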

Figure 3. Filter design and use.

A) The response of a sample finite impulse response (FIR) filter (red) returns to zero 26 samples after a very brief input (“impulse”), while the response of a sample infinite impulse response (IIR) filter (black) decays asymptotically to zero. B) Low-pass (gray), high-pass (black), and band-pass (red) filters preferentially pass different frequency bands. C) Cutoff frequency, passband/stopband ripple (pink boxes), and transition width (gray box) are important characteristics of filters, as illustrated in a magnitude vs. frequency plot. D) Linear-phase filters (red) provide the same group delay (slope of phase vs. frequency relationship) for all frequencies, whereas nonlinear-phase filters (black) do not. E) Filtering a signal (black trace) with a linear-phase filter (gray trace) introduces a constant delay (“group delay”) to all frequency components. Therefore, correcting for the delay introduced by a linear-phase filter is simple: shift the filtered signal earlier in time by the group delay (red trace).

There are five important design characteristics25 of filters to consider (Figure 3C): the (1) cutoff frequency, (2) phase, (3) transition width, and (4) peak passband/stopband ripple of the frequency response of the filter (ripple refers to the variation in the filter’s response in the pass- and stop-bands). The (5) filter order measures the complexity of the filter and is either the number of filter coefficients (IIR) or length of the filter (FIR) minus one. Transition width, ripple performance, and filter order are interrelated in that improving performance in one of these characteristics decreases the performance in the other two, analogous to adjusting the angles in a triangle28. For instance, reducing the transition width of a filter requires an increase in the filter order, with the requisite increased complexity and (potentially dramatically) increased processing time. Note that the order of FIR and IIR filters cannot be directly compared, since they are implemented differently.

In practice, FIR filters are preferable to IIR filters26, as they may be easily designed to provide a linear phase response, i.e. a constant group delay (Figure 3D), and are always computationally stable. In comparison, IIR filters offer narrower transition bandwidth and improved computational performance18, but with a non-linear phase-delay relationship25. Additionally, IIR filters may be unstable – that is, they may incur underflow or overflow errors as a result of accumulated rounding errors. Correcting for the phase delay introduced by filtering is much simpler and faster with a linear-phase filter (Figure 3E): simply left-shift the output signal by the group delay (the derivative of the phase-frequency response of the filter). For a non-linear phase filter, the most practical approach is to two-pass filter the signal, i.e., filter in both the forward and backward directions, using for example the MATLAB command filtfilt. Unfortunately, this doubles the amount of computation needed and also changes the functional properties of the filter25 (the magnitude response is applied twice, so the attenuation at every frequency is squared). Note that all of the FIR design methods described in this report will implement linear-phase filters.
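Both delay-correction strategies can be sketched on a toy signal. The filters and the triangular test event below are assumptions chosen for illustration. The sketch relies on the fact that a symmetric FIR filter with N taps delays every frequency by (N − 1)/2 samples, and it implements the two-pass approach by hand rather than calling a library routine such as filtfilt.

```python
def fir_filter(signal, taps):
    """Causal FIR filtering with zero initial conditions."""
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, h in enumerate(taps):
            if i - k >= 0:
                acc += h * signal[i - k]
        out.append(acc)
    return out

def single_pole_lowpass(signal, a=0.8):
    """Simple IIR filter with a non-linear phase response."""
    out, prev = [], 0.0
    for v in signal:
        prev = a * prev + (1.0 - a) * v
        out.append(prev)
    return out

def two_pass(signal, a=0.8):
    """Filter forward, then filter the time-reversed result and reverse it back."""
    forward = single_pole_lowpass(signal, a)
    backward = single_pole_lowpass(forward[::-1], a)
    return backward[::-1]

def peak_index(signal):
    return max(range(len(signal)), key=lambda i: signal[i])

# Toy event: a triangular deflection peaking at sample 50.
event = [max(0.0, 10.0 - abs(i - 50)) for i in range(101)]

# Linear-phase FIR (symmetric 11-tap moving average): constant delay of 5 samples.
taps = [1.0 / 11] * 11
delay = (len(taps) - 1) // 2
fir_out = fir_filter(event, taps)
fir_corrected = fir_out[delay:] + [0.0] * delay   # left-shift by the group delay

# Non-linear-phase IIR: one pass delays the peak, two passes restore its timing.
iir_one_pass = single_pole_lowpass(event)
iir_two_pass = two_pass(event)

print("peak of raw event:         ", peak_index(event))
print("peak after FIR:            ", peak_index(fir_out))
print("peak after FIR + shift:    ", peak_index(fir_corrected))
print("peak after one-pass IIR:   ", peak_index(iir_one_pass))
print("peak after two-pass IIR:   ", peak_index(iir_two_pass))
```

The two-pass output is smoother than the one-pass output because the signal has effectively been filtered twice, which is the change in filter properties noted above.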

Filter design

FIR and IIR filters may be designed using a number of different methods, each with specific advantages (Table 2). Two common methods for designing FIR filters are the equiripple (also called Parks-McClellan) and least-squares methods28. Equiripple FIR filters offer a constant ripple in the pass- and stop-bands and can be designed using the smallest filter order of all FIR filters. In comparison, least-squares FIR filters optimize signal rejection in the stop-band, but provide a slightly wider transition band compared to the equiripple. Another common method for designing FIR filters utilizes the sinc function to approximate the frequency response of an ideal filter18. However, the filter must be modified using one of various windows to improve passband and stopband performance25, with the result called a “windowed-sinc” filter. Common windows are the Hamming (trade-off between rolloff, stopband attenuation, passband ripple), the rectangular (sharpest rolloff, least stopband attenuation, largest passband ripple), and the Kaiser (shallowest rolloff, greatest stopband attenuation, smallest passband ripple).
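A minimal sketch of the windowed-sinc approach follows, comparing the rectangular and Hamming windows only. The Kaiser window is omitted because it requires a Bessel function. The cutoff, sampling frequency, filter length, and function names are assumptions for illustration, and established filter-design routines should be preferred for real analyses.

```python
import cmath
import math

def windowed_sinc_lowpass(cutoff_hz, fs, num_taps, window="hamming"):
    """Low-pass FIR kernel: truncated sinc multiplied by a window,
    normalized to unity gain at 0 Hz."""
    fc = cutoff_hz / fs                  # cutoff in cycles per sample
    mid = (num_taps - 1) / 2.0
    taps = []
    for n in range(num_taps):
        x = n - mid
        ideal = 2.0 * fc if x == 0 else math.sin(2.0 * math.pi * fc * x) / (math.pi * x)
        if window == "hamming":
            w = 0.54 - 0.46 * math.cos(2.0 * math.pi * n / (num_taps - 1))
        elif window == "rectangular":
            w = 1.0
        else:
            raise ValueError("unsupported window: " + window)
        taps.append(ideal * w)
    total = sum(taps)
    return [t / total for t in taps]

def gain_at(taps, freq_hz, fs):
    """Magnitude of the filter's frequency response at one frequency."""
    omega = 2.0 * math.pi * freq_hz / fs
    return abs(sum(h * cmath.exp(-1j * omega * n) for n, h in enumerate(taps)))

def peak_gain(taps, fs, start_hz, stop_hz):
    """Largest gain found on a 1 Hz grid between start_hz and stop_hz."""
    return max(gain_at(taps, f, fs) for f in range(start_hz, stop_hz + 1))

fs, cutoff, num_taps = 1000, 40.0, 101
hamming = windowed_sinc_lowpass(cutoff, fs, num_taps, "hamming")
rectangular = windowed_sinc_lowpass(cutoff, fs, num_taps, "rectangular")

hamming_stop = peak_gain(hamming, fs, 70, 500)
rect_stop = peak_gain(rectangular, fs, 70, 500)

print(f"Hamming passband gain at 10 Hz:     {gain_at(hamming, 10, fs):.4f}")
print(f"Hamming peak gain, 70-500 Hz:       {hamming_stop:.5f}")
print(f"Rectangular peak gain, 70-500 Hz:   {rect_stop:.5f}")
```

Under these settings the rectangular window leaks noticeably more energy into the stopband than the Hamming window, consistent with the trade-offs listed above.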

Table 2.

Relative advantages and disadvantages of digital filters commonly used in neuroscience.

| Filter type | Filter name | Transition width | Passband ripple | Stopband performance | Computational efficiency | Phase delay |
|---|---|---|---|---|---|---|
| FIR | Equiripple | ++ | +++ | ++ | ++ | Linear |
| FIR | Least-squares | + | ++ | +++ | + | Linear |
| FIR | Windowed sinc: Hamming | ++ | ++ | ++ | + | Linear |
| FIR | Windowed sinc: rectangular | +++ | + | + | + | Linear |
| FIR | Windowed sinc: Kaiser | + | +++ | +++ | + | Linear |
| IIR | Butterworth | + | ++ | ++ | +++ | Nonlinear |
| IIR | Chebyshev I | ++ | + | +++ | +++ | Nonlinear |
| IIR | Chebyshev II | ++ | +++ | + | +++ | Nonlinear |
| IIR | Elliptic | +++ | + | + | +++ | Nonlinear |

+++ = excellent performance; ++ = moderate performance; + = relatively poor performance.

Common IIR filters28 include the Butterworth filter (wide transition band, smallest passband/stopband ripple), Chebyshev (shorter transition band, ripple in either the passband (Chebyshev I) or the stopband (Chebyshev II)), and elliptic (narrowest transition band, ripple in both passband and stopband).
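For IIR filters such as these, the two-pass (forward and backward) approach mentioned earlier can be sketched as follows. A first-order low-pass filter stands in here for a Butterworth, Chebyshev, or elliptic design, and the smoothing constant is an arbitrary assumption. Unlike MATLAB's filtfilt, this sketch does not pad the signal to suppress edge transients.

```python
def single_pole_lowpass(x, alpha):
    """Causal first-order IIR: y[n] = alpha * x[n] + (1 - alpha) * y[n - 1]."""
    y, prev = [], 0.0
    for sample in x:
        prev = alpha * sample + (1.0 - alpha) * prev
        y.append(prev)
    return y

def forward_backward(x, alpha):
    """Two-pass filtering: filter forward, then filter the reversed output."""
    forward = single_pole_lowpass(x, alpha)
    backward = single_pole_lowpass(forward[::-1], alpha)
    return backward[::-1]

def centroid(x):
    """Amplitude-weighted mean sample index, used here as a timing measure."""
    return sum(i * v for i, v in enumerate(x)) / sum(x)

# Unit impulse at sample 200, far from both edges of the record.
x = [0.0] * 400
x[200] = 1.0

one_pass = single_pole_lowpass(x, alpha=0.2)
two_pass = forward_backward(x, alpha=0.2)

delay_one_pass = centroid(one_pass) - centroid(x)   # about 4 samples late
delay_two_pass = centroid(two_pass) - centroid(x)   # about 0 samples
```

The single pass smears the impulse towards later samples, whereas the two-pass output is symmetric around the original impulse, at the cost of applying the filter's attenuation twice.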

Notch filters may be implemented to remove 50- or 60-Hz line noise. Adaptive line noise filters are a more powerful, though more complicated, type of notch filter – these types of filters create a template of the line noise artifact and remove it from the incoming signal29. This has the advantage of being able to adjust to subtle changes in the line noise shape and frequency while preserving more of the incoming signal. Proper setup (grounding, referencing, and shielding) is always preferred to filtering in order to reduce line noise, since filtering distorts the signal and eliminates information from the recording. With proper setup and grounding, in fact, a line noise filter may not even be necessary.
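A fixed (non-adaptive) notch can be sketched in a few lines of Python. This is a generic second-order IIR notch, not the adaptive template-subtraction method cited above; the pole radius r, the sampling rate, and the test signals are assumptions for illustration.

```python
import math

def notch_coefficients(f0_hz, fs_hz, r=0.98):
    """Second-order IIR notch: zeros on the unit circle at +/- f0, poles at radius r."""
    w0 = 2.0 * math.pi * f0_hz / fs_hz
    b = [1.0, -2.0 * math.cos(w0), 1.0]
    a = [1.0, -2.0 * r * math.cos(w0), r * r]
    gain = sum(a) / sum(b)            # scale for unit gain at 0 Hz
    return [c * gain for c in b], a

def biquad(x, b, a):
    """Direct form I: y[n] = b0 x[n] + b1 x[n-1] + b2 x[n-2] - a1 y[n-1] - a2 y[n-2]."""
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for x0 in x:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        y.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return y

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

fs = 1000.0
t = [n / fs for n in range(4000)]
slow = [math.sin(2.0 * math.pi * 10.0 * ti) for ti in t]   # 10 Hz test rhythm
line = [math.sin(2.0 * math.pi * 60.0 * ti) for ti in t]   # 60 Hz line noise

b, a = notch_coefficients(60.0, fs)
slow_out = biquad(slow, b, a)
line_out = biquad(line, b, a)

# Compare amplitudes after the start-up transient has decayed.
slow_ratio = rms(slow_out[2000:]) / rms(slow[2000:])
line_ratio = rms(line_out[2000:]) / rms(line[2000:])
```

Moving r closer to 1 narrows the notch and preserves more of the neighbouring signal, but lengthens the start-up transient.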

We recommend utilizing FIR filters for offline processing, except for large datasets when the improved computational performance of IIR filters is required. It is critical to carefully compare the filtered signal to the raw signal to confirm that the appropriate frequency bands are being removed, and that the signal is not being distorted in unexpected ways. Note that the filtering of recorded artifacts (for instance, step discontinuities or general increases in activity) may introduce physiological-looking activity patterns or increases in the band of interest – therefore, it is imperative to identify and remove artifacts from the analysis prior to filtering. It is best to band-pass filter in two stages, i.e. use a low-pass filter and then a high-pass filter, as utilizing two filters allows one to design more appropriate filters for both stages.

Proposal -> Fully report filter characteristics, including: type of filter, filter order, filter cutoff frequency (specify -3 dB or -6 dB point), and filter transition width or rolloff.

Proposal -> Report the type of software used to construct and apply filters to the data (e.g., MATLAB, Spike2, etc). Specify the commands used to construct and apply the filter, for example, butter() and filtfilt() in MATLAB.

Proposal -> FIR filters are preferable to IIR filters, as they are guaranteed to be stable and (if linear phase) may be easily corrected to zero-phase delay without additional computation.

Proposal -> Always examine the magnitude and phase response of a filter prior to applying it to data, using tools such as fdatool and fvtool in MATLAB.

Proposal -> Always directly compare the filtered signal to the original, unfiltered signal – this will confirm that the filter is functioning as expected and not introducing unanticipated artifact.

Proposal -> Report use of a line noise filter. Report settings of the line noise filter as appropriate.

Proposal -> Minimize the use of line noise filters during acquisition, since data signal content removed by a filter cannot be restored.
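Where graphical tools are not available, the magnitude and phase response recommended for inspection in the proposals above can be computed directly from the filter coefficients. The sketch below is a minimal stand-in, with hypothetical function names, that evaluates the transfer function on the unit circle for two toy filters.

```python
import cmath
import math

def frequency_response(b, a, freq_hz, fs_hz):
    """Complex response H(e^{jw}) of a filter with numerator b and denominator a."""
    z_inv = cmath.exp(-2j * math.pi * freq_hz / fs_hz)
    num = sum(bk * z_inv ** k for k, bk in enumerate(b))
    den = sum(ak * z_inv ** k for k, ak in enumerate(a))
    return num / den

def magnitude_db(h):
    """Magnitude in decibels (undefined where the response is exactly zero)."""
    return 20.0 * math.log10(abs(h))

def phase_delay_samples(b, a, freq_hz, fs_hz):
    """Phase delay in samples, computed from the principal value of the phase.

    Only valid while the true phase lag stays below pi radians, which holds
    for the short filters used in this example.
    """
    w = 2.0 * math.pi * freq_hz / fs_hz
    return -cmath.phase(frequency_response(b, a, freq_hz, fs_hz)) / w

fs = 1000.0
fir_b, fir_a = [0.5, 0.5], [1.0]          # two-point moving average (FIR)
iir_b, iir_a = [0.2], [1.0, -0.8]         # first-order low-pass (IIR)

fir_db_250 = magnitude_db(frequency_response(fir_b, fir_a, 250.0, fs))
fir_delays = [phase_delay_samples(fir_b, fir_a, f, fs) for f in (50.0, 250.0)]
iir_delays = [phase_delay_samples(iir_b, iir_a, f, fs) for f in (50.0, 250.0)]
```

In this example the FIR filter delays both test frequencies by the same half sample, whereas the IIR filter delays 50 Hz by roughly 2.5 samples and 250 Hz by less than half a sample, which is the non-linear phase behavior described earlier.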

Signal processing and analysis

Spectral analysis

The determination of the frequency content of the recorded signal, more specifically called spectral analysis, is a critical component of software-based analysis of EEG. Spectral analysis is accomplished by transforming the signal from the time domain into the frequency domain23; 26. The transformation between these domains may be accomplished using Fourier analysis, most commonly via the Fast Fourier Transform (FFT). The FFT is an efficient algorithm for expressing a signal as a composition of sine waves of different frequencies (a Fourier series), making it straightforward to examine the relative contribution of each frequency to the overall signal by comparing the amplitude of each sine wave. However, it is generally more useful to examine the evolution of a signal’s spectral content over time – for this, one of many joint time-frequency analysis (JTFA) techniques may be employed30; 31. Probably the most common JTFA algorithm is the short-time Fourier transform (STFT), in which one repeatedly applies an FFT to short clips of the original signal, taken either back-to-back or with some overlap between successive clips. The result of a JTFA algorithm is usually plotted in a two-dimensional heatmap called a spectrogram (if calculated using Fourier analysis) or a scalogram (if calculated using wavelets)24.
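The STFT procedure can be sketched in Python as follows. For brevity the sketch uses a direct discrete Fourier transform, which returns the same values as an FFT but more slowly; the Hann window, clip length, hop size, and two-tone test signal are assumptions for illustration. Setting hop equal to window_len gives back-to-back clips, while smaller values give overlapping clips.

```python
import cmath
import math

def hann(n_samples):
    """Periodic Hann window, applied to each clip to reduce spectral leakage."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * n / n_samples)
            for n in range(n_samples)]

def dft_power(segment):
    """One-sided power spectrum via a direct DFT (O(N^2))."""
    n = len(segment)
    power = []
    for k in range(n // 2 + 1):
        coeff = sum(v * cmath.exp(-2j * math.pi * k * i / n)
                    for i, v in enumerate(segment))
        power.append(abs(coeff) ** 2)
    return power

def stft_power(x, fs_hz, window_len, hop):
    """Return (times_s, freqs_hz, power), with power indexed as [clip][frequency]."""
    win = hann(window_len)
    times, rows = [], []
    for start in range(0, len(x) - window_len + 1, hop):
        seg = [x[start + i] * win[i] for i in range(window_len)]
        rows.append(dft_power(seg))
        times.append((start + window_len / 2) / fs_hz)   # clip centre
    freqs = [k * fs_hz / window_len for k in range(window_len // 2 + 1)]
    return times, freqs, rows

fs = 128.0
# Test signal: 2 s of an 8 Hz rhythm followed by 2 s of a 20 Hz rhythm.
x = [math.sin(2.0 * math.pi * (8.0 if n < 256 else 20.0) * n / fs)
     for n in range(512)]

times, freqs, power = stft_power(x, fs, window_len=128, hop=64)
peak_freqs = [freqs[max(range(len(row)), key=row.__getitem__)] for row in power]
```

Plotting power as an image, with times on the horizontal axis and freqs on the vertical axis, yields the spectrogram; here the dominant frequency moves from 8 Hz in the early clips to 20 Hz in the late clips.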

Wavelet analysis is conceptually similar to Fourier analysis – however, in Fourier analysis one transforms the recorded signal into sine waves, while in wavelet analysis one transforms the signal into wavelets24; 32. Like sine waves, wavelets are signals of a single specific frequency, but wavelets are finite in duration whereas sine waves are infinite in duration. Therefore, in comparison to Fourier analysis, wavelets perform better with non-stationary signals (i.e., signals that change over time). Accordingly, wavelet analysis is particularly useful for signals that are relatively brief in duration or that have a sudden onset/offset – for instance, identifying artifacts33 and detecting spikes, sharp waves, and HFOs34; 35.
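To make the contrast with sine waves concrete, the sketch below convolves a signal with a complex Morlet wavelet, one commonly used wavelet family, to track the amplitude of a single frequency over time. The number of cycles, the truncation at three standard deviations, and the test burst are assumptions chosen for illustration.

```python
import cmath
import math

def morlet(freq_hz, fs_hz, n_cycles=6):
    """Complex Morlet wavelet: a sine wave at freq_hz under a Gaussian envelope."""
    sigma_t = n_cycles / (2.0 * math.pi * freq_hz)   # envelope SD in seconds
    half = int(round(3.0 * sigma_t * fs_hz))         # truncate at +/- 3 SD
    w = []
    for n in range(-half, half + 1):
        t = n / fs_hz
        envelope = math.exp(-t * t / (2.0 * sigma_t ** 2))
        w.append(cmath.exp(2j * math.pi * freq_hz * t) * envelope)
    norm = sum(abs(c) for c in w)
    return [c / norm for c in w]

def wavelet_amplitude(x, wavelet):
    """Amplitude envelope at the wavelet frequency (same length as x)."""
    half = len(wavelet) // 2
    out = []
    for n in range(len(x)):
        acc = 0j
        for k, c in enumerate(wavelet):
            i = n - (k - half)
            if 0 <= i < len(x):
                acc += c * x[i]
        out.append(abs(acc))
    return out

fs = 500.0
# Test signal: silence except for a 0.2 s burst of 40 Hz activity around 1 s.
x = [math.sin(2.0 * math.pi * 40.0 * n / fs) if 450 <= n < 550 else 0.0
     for n in range(1000)]

amplitude = wavelet_amplitude(x, morlet(40.0, fs))
peak_index = max(range(len(amplitude)), key=amplitude.__getitem__)
```

With this normalization a unit-amplitude sine wave at the wavelet frequency yields an envelope of about 0.5; the envelope is zero away from the burst and rises only where the 40 Hz activity occurs.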

There are several important tips to bear in mind when performing spectral analysis. First, note that electrophysiological spectra will exhibit what is termed 1/f falloff (“one over f”) – i.e., the power of the signal will decrease as frequency increases18. Second, while spectral analysis decomposes the recorded signal (most often) into sine waves, many rhythmic activities in the raw data will not be sinusoidal in nature. Such non-sinusoidal activities will be represented in the frequency domain by a sine wave at the fundamental frequency, with several additional sine waves at harmonics (integer multiples) of the fundamental frequency. Third, when spectral analysis is used to identify noise, it is only suited to line noise or other “human-made” noise occurring at a particular frequency. Biological noise, such as movement artifact or scratching, comprises a broad range of frequencies from across the spectrum, making it indistinguishable from biological signal in the frequency domain. Fourth, spectral analysis is only informative if it is applied to a data epoch of appropriate duration. One needs several cycles’ worth of data in order to accurately calculate the relative contributions of each frequency band – this is especially important to consider when analyzing lower frequencies, since lower frequencies have longer periods. Therefore, we recommend applying spectral analysis to data segments lasting at least five cycles of the lowest frequency of interest, and preferably more. That said, spectral analysis relies on the assumption of signal stationarity, that is, that a signal does not fundamentally change over the duration of the data segment. Therefore, it is also important to limit the duration of a data segment to an appropriate amount of time, depending on the signal of interest. In most cases, the most effective (and most important) way to determine the appropriate duration of a data segment for spectral analysis will be to simply visually inspect the raw recording to identify the onset and offset of a particular pattern of interest.

Proposal -> Perform spectral analysis on data epochs of appropriate duration – at least five cycles of the lowest frequency of interest, and limited to the onset and offset of the signal of interest (as identified by visual analysis of the raw data).
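The five-cycle guideline translates into a simple calculation, sketched below with hypothetical helper names. The frequency resolution helper reflects the general property that an epoch of T seconds resolves frequencies spaced 1/T Hz apart.

```python
import math

def min_epoch_seconds(lowest_freq_hz, n_cycles=5):
    """Shortest epoch containing n_cycles of the lowest frequency of interest."""
    return n_cycles / lowest_freq_hz

def min_epoch_samples(lowest_freq_hz, fs_hz, n_cycles=5):
    """The same minimum duration expressed in samples, rounded up."""
    return math.ceil(n_cycles * fs_hz / lowest_freq_hz)

def frequency_resolution_hz(epoch_seconds):
    """Spacing between adjacent frequency bins for an epoch of this length."""
    return 1.0 / epoch_seconds

# Example: delta activity down to 0.5 Hz needs at least 10 s of data.
delta_epoch_s = min_epoch_seconds(0.5)
# Example: theta activity down to 4 Hz, sampled at 1 kHz.
theta_epoch_samples = min_epoch_samples(4.0, 1000.0)
```

These values are lower bounds only; the upper bound is set by how long the signal of interest remains stationary.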

Artifact recognition and rejection

Artifact recognition and rejection is a critical component of software-based EEG analysis (see also ILAE-AES TASK1-WG1 publication). Artifacts pertinent to software-based analysis of EEG can be roughly divided into two categories: external electromagnetic interference and biophysical sources. A third category, which might be loosely termed internal noise, arises from factors inherent to the design and specification of the recording equipment itself and will not be discussed here (though see7 for an excellent discussion). Because biophysical artifacts can be quite difficult to differentiate from epileptiform activity, it is extremely useful to have time-synchronized video available during the analysis of the EEG.

Artifacts from external electromagnetic (EM) interference derive from electrical or mechanical equipment generating an electromagnetic field in the vicinity of the recording equipment. Prevent electromagnetic interference by ensuring proper setup, grounding, and shielding on all equipment. By far the most common type of EM interference is line noise from the mains power supply.

Biophysical artifacts derive from the animal, rather than the environment. Common examples are movement artifact, respiratory artifact, cardiac artifact, scratching artifact, and grooming artifact. As with EM artifact, the best way to prevent biophysical artifact is to ensure proper setup and grounding – including making sure that all cables are firmly connected and the headstage is located as close as possible to the animal. Additionally, in order to reduce movement artifact, it may help to allow the animal a 20-minute adaptation period in the recording cage before initiating the EEG data acquisition36.

Since artifacts cannot be completely prevented during recording, it is also required to detect and remove them during analysis. While manual review of the data is probably the most widely accepted technique for artifact rejection, it may be infeasible for large data sets (and it is certainly tedious for any size data set). Accordingly, researchers have developed a number of algorithms to identify and remove artifacts from EEG recordings37–39. Because different datasets may be susceptible to different types of artifact, there is probably not a single optimal artifact rejection algorithm that may be utilized for all needs. Rather, it is likely that each researcher may need to customize an artifact rejection algorithm for his or her needs. Many techniques for artifact detection and removal in EEG utilize independent component analysis (ICA) at some stage in the analysis40–42. This technique decomposes the EEG into multiple independent sources, with the goal being to separate sources of artifact from sources of clean neural signal. However, it is very difficult to control or validate how the ICA performs, and it is dependent upon the noise and neural signals being separable. Another challenge for artifact rejection algorithms in general is that the artifact itself may evolve with time, or may take several related forms – for instance, many algorithms rely on the characterization of high amplitude signals, but perhaps at the expense of identifying lower amplitude artifacts from the same source. Note that regardless of the approach used for artifact rejection, it is important to report the success rate for rejection of artifacts (percent false rejections, percent correct rejections), against the “gold standard” of visual screening.
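One way to compute the success rates mentioned above is sketched below. The denominators are an assumption, since several conventions are possible: here, percent correct rejections is taken relative to the epochs the expert marked as artifact, and percent false rejections relative to the epochs the expert marked as clean. Whichever convention is used should be stated in the report.

```python
def rejection_rates(expert_is_artifact, algorithm_rejected):
    """Score an artifact rejection algorithm against expert visual review.

    Both arguments are equal-length lists of booleans, one entry per epoch.
    """
    if len(expert_is_artifact) != len(algorithm_rejected):
        raise ValueError("label lists must have the same length")
    pairs = list(zip(expert_is_artifact, algorithm_rejected))
    n_artifact = sum(1 for expert, _ in pairs if expert)
    n_clean = len(pairs) - n_artifact
    correct = sum(1 for expert, rejected in pairs if expert and rejected)
    false = sum(1 for expert, rejected in pairs if not expert and rejected)
    nan = float("nan")
    return {
        # Share of expert-marked artifact epochs that the algorithm rejected.
        "percent_correct_rejections": 100.0 * correct / n_artifact if n_artifact else nan,
        # Share of expert-marked clean epochs that the algorithm rejected.
        "percent_false_rejections": 100.0 * false / n_clean if n_clean else nan,
    }

# Hypothetical labels for ten epochs.
expert = [True, True, True, True, False, False, False, False, False, False]
algorithm = [True, True, True, False, True, False, False, False, False, False]
rates = rejection_rates(expert, algorithm)
```

In this toy example the algorithm rejects three of the four artifact epochs (75%) and one of the six clean epochs (about 17%).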

It is increasingly common for researchers to utilize various types of machine learning algorithms for artifact rejection and/or epileptiform event detection. While there are many machine learning algorithms available for use in EEG analysis, all of them leverage a set of features to classify segments of data as artifact, epileptiform, or neither. Features are measured properties of the signal, and are often analogous to the characteristics that neurologists use when interpreting EEG, such as increase in background activity, correlation across channels, and change in spectral content43. A machine-learning algorithm can only be as effective as the features it utilizes – therefore, proper feature selection is a critical consideration for artifact rejection using machine learning techniques. It is also very important to prevent overfitting by properly utilizing training and testing data sets, along with techniques such as cross-validation.
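The sketch below illustrates two of the ingredients discussed above: explicitly defined features and a k-fold split of the epochs into training and testing sets. Line length and root-mean-square amplitude are used only as example features, and the function names are hypothetical; the classifier itself is omitted.

```python
import math
import random

def line_length(epoch):
    """Feature: sum of absolute differences between successive samples."""
    return sum(abs(epoch[i] - epoch[i - 1]) for i in range(1, len(epoch)))

def rms_amplitude(epoch):
    """Feature: root-mean-square amplitude of the epoch."""
    return math.sqrt(sum(v * v for v in epoch) / len(epoch))

def k_fold_indices(n_epochs, k, seed=0):
    """Yield (train_indices, test_indices); each epoch is tested exactly once."""
    indices = list(range(n_epochs))
    random.Random(seed).shuffle(indices)      # fixed seed for reproducibility
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test

# Example: split ten epochs into three folds.
splits = list(k_fold_indices(n_epochs=10, k=3))
```

For each split, the algorithm would be fitted using only the training indices and scored on the held-out testing indices; consistent scores across folds suggest that the algorithm is not overfitted to a particular subset of the data.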

Proposal -> Report all features used for artifact rejection, explicitly including the equations used for each.

Proposal -> Include a detailed description of the data processing algorithm used, including steps for preprocessing, artifact rejection, and statistical analysis.

Proposal -> Divide data epochs into training and testing data sets before developing a machine learning algorithm for artifact rejection. Utilize techniques such as cross-validation to help prevent overfitting and to ensure the algorithm is consistent.

Proposal -> Report the success rate of an artifact rejection algorithm (percent false and percent correct rejections) against a standard, i.e., visual review of the video-EEG file by expert readers.

Proposal -> Acquisition, conversion, and analysis scripts should be made available at the time of publication. Include a link to the repository in the publication.

Data storage and data sharing

The choice of data format for storage is a fundamental consideration in neurophysiology. Countless options exist, yet no single data format is optimal for all purposes – instead, the research team must choose a format that ensures long-term accessibility of the data while also meeting data storage and sharing constraints.

The simplest data storage strategy stores recorded values as integers written out as text, most commonly in ASCII or Unicode encoding. In this case, the recorded signal may be reconstructed by multiplying the integer values by a constant scaling factor, often called a voltage calibration constant. Commonly these types of files are stored as .txt or .csv files. This approach maximizes accessibility of the data – the files are human-readable, and can be opened using any simple text editor software – but is the least efficient for storage and processing. This strategy is most appropriate for sharing short clips of the recorded signal. A more efficient strategy is to store data in binary format, often using an extension such as .bin. Whereas a text file encodes each digit of a value as a separate character, a binary file stores each value directly as a fixed-width pattern of 1’s and 0’s – more efficient for storage and processing, but not directly readable by humans. That said, binary format is still relatively easy to edit, using a hex-editor or analysis environment capable of importing and exporting binary files (such as MATLAB).
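The relationship between stored integers, the voltage calibration constant, and the two storage strategies can be sketched as follows. The 16-bit little-endian layout and the 0.5 µV-per-count calibration constant are assumptions for illustration; real acquisition systems define their own layouts.

```python
import struct

def encode_int16(samples_uv, uv_per_count):
    """Convert microvolt samples to 16-bit integer counts and pack as binary."""
    counts = [int(round(v / uv_per_count)) for v in samples_uv]
    if any(c < -32768 or c > 32767 for c in counts):
        raise ValueError("sample out of range for 16-bit storage")
    # "<" = little-endian byte order, "h" = signed 16-bit integer.
    return counts, struct.pack("<%dh" % len(counts), *counts)

def decode_int16(raw_bytes, uv_per_count):
    """Unpack 16-bit counts and rescale to microvolts."""
    n = len(raw_bytes) // 2
    counts = struct.unpack("<%dh" % n, raw_bytes)
    return [c * uv_per_count for c in counts]

uv_per_count = 0.5                       # hypothetical calibration constant
samples_uv = [-100.0, 0.0, 12.5, 250.0]

counts, raw = encode_int16(samples_uv, uv_per_count)
as_text = ",".join(str(c) for c in counts)      # comma-separated text form
restored_uv = decode_int16(raw, uv_per_count)
```

Here the four samples occupy 8 bytes in binary form and 13 bytes as comma-separated text; the difference grows with larger values. Without the calibration constant, sample width, and byte order, the binary file cannot be interpreted, which is why such details belong in the metadata.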

Some data storage schemes utilize compression algorithms to significantly reduce the file size of the data, with the drawback of making data interpretation and processing somewhat more complicated. Probably the most common formats for storing compressed neurophysiological data are the MEF44–46 and HDF5 (http://www.hdfgroup.org/) formats. It is also reasonable to archive stored data in a format such as .zip, though the compression achieved with this approach is not as appreciable as it is for .mef.

It is crucial to store metadata, or information describing the acquired neurophysiological data, in a related file. Metadata should include information relevant for the interpretation of the recorded data, such as acquisition system settings, electrode placement, recording montage, and experimental protocol. Commonly this type of information is stored in a header, or block of data placed at the beginning of a data file.
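When metadata are kept in a separate human-readable file rather than a header, a plain JSON file is one simple option. The field names and values below are hypothetical placeholders, not a proposed standard; they merely mirror the kinds of information listed above.

```python
import json

# Hypothetical list of fields a laboratory might require for every recording.
REQUIRED_FIELDS = [
    "sampling_frequency_hz",
    "bit_resolution",
    "filter_settings",
    "acquisition_system",
    "electrode_placement",
    "recording_montage",
    "animal_model",
    "date_obtained",
]

def missing_fields(metadata):
    """Return the required fields that are absent from a metadata record."""
    return [field for field in REQUIRED_FIELDS if field not in metadata]

# Placeholder values for illustration only.
metadata = {
    "sampling_frequency_hz": 2000,
    "bit_resolution": 16,
    "filter_settings": {"high_pass_hz": 0.1, "low_pass_hz": 500},
    "acquisition_system": "example amplifier",
    "electrode_placement": ["left hippocampus", "right frontal cortex"],
    "recording_montage": "referential",
    "animal_model": "example model",
    "date_obtained": "2016-07-31",
}

sidecar_text = json.dumps(metadata, indent=2, sort_keys=True)
restored = json.loads(sidecar_text)
```

The text in sidecar_text would be written to a file stored alongside the recording, and a check such as missing_fields can be run before archiving to catch incomplete records.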

Digital EEG acquisition systems usually store recorded data/metadata in their own specific binary data format, thus requiring either a format-specific file reader or knowledge of the precise file structure for import. There are some commercially available software products, such as Spike2 and Persyst, which enable opening and converting between several file formats.

While several versatile formats for neurophysiological data exchange and storage have been developed19; 47, our group recommends utilizing the European Data Format (EDF/EDF+, http://www.edfplus.info/index.html) for most applications. EDF is probably the most commonly used format in the field of epilepsy research, with a well-documented file structure48; 49 and many freely available tools for importing and exporting EDF files. However, the EDF format may not be suitable for complex, high-bandwidth, high-sampling-rate datasets that are becoming increasingly more common in experimental neurophysiology – in such cases, the MEF format is likely preferable.

Fortunately, powerful platforms already exist to enable the sharing of neural data50. General-purpose cloud-based storage utilities (Amazon S3, Google Drive, Dropbox, Box, Microsoft OneDrive, Backblaze) currently enable one to store several GB in the cloud for free, with larger storage amounts available with a paid subscription. Additionally, neurophysiology-specific data storage tools exist, such as the iEEG Portal (www.ieeg.org), Epilepsiae (www.epilepsiae.eu), Physionet (www.physionet.org), and Blackfynn (www.blackfynn.com). Some of these databases are grant-funded platforms for storing, sharing, and annotating arbitrarily large neurophysiology datasets.

Proposal -> Store data in raw or at least minimally processed form (i.e., in referential montage, without filtering or postprocessing).

Proposal -> Store all acquisition system settings along with the data: sampling frequency, bit resolution, filter settings, acquisition system, research center/principal investigator, date obtained, animal model, etc. As applicable, store this data in the header of the file (EDF+ or MEF) or in a separate text or comma-separated value file – or store in both the header and a separate human-readable file.

Proposal -> For small- to medium-sized datasets (<0.5 TB) that will be accessed regularly, use the EDF+ format if feasible. If it is necessary to use a proprietary format (i.e., a format unique to your recording system), ensure that it can be easily converted to EDF+ for long-term storage.

Proposal -> For large datasets (>0.5 TB) that are accessed regularly, upload data to a cloud-based storage system.

Proposal -> For long-term storage of small or medium-sized datasets, use either EDF+ or MEF format. For archiving of large-sized datasets, use MEF format.

Proposal -> When possible, make the raw data publicly available using a cloud-based sharing platform.

Challenges and opportunities

Many fundamental questions in the field of epilepsy (and neuroscience in general) remain unanswered. Similarly, there are significant technical barriers to obtaining high-quality data and performing rigorous analyses necessary to answer these questions. Fortunately, the most challenging obstacles represent the greatest opportunities for advancing the field. Here, we have briefly discussed some of the latest trends in the field relevant to data acquisition and software analysis of electrophysiological signals in epilepsy, noting challenges that must be addressed and opportunities that may be available.

One of the most fundamental challenges in experimental neurophysiology is improving the quality of the hardware used for data acquisition. Opportunities in this realm include the development of recording systems with improved signal isolation capabilities, enhanced processing power, and advanced filtering algorithms to optimize the extraction of biological signals, even in noisy or suboptimal experimental conditions. For example, new wireless neuro-telemetry systems51; 52 facilitate the acquisition of relatively artifact-free data and minimize animal discomfort for long-term recordings53. Similarly, recent advances in the design and fabrication of electrodes have enabled higher resolution, higher density recording, and in some cases have permitted the simultaneous acquisition of multiple modalities of information (e.g., MRI, calcium dye imaging)54. With these advances, however, comes the challenge of developing new methods for processing and visualizing such high-dimensional data.

Improvements in hardware – faster processors, smaller devices, and new implantables – should facilitate the development of more advanced algorithms for the analysis of neurophysiological data. For instance, improved real-time automated seizure detection and prediction algorithms would be useful not only for the investigation of the mechanisms of seizures and epileptogenesis in animal models, but would also be quite valuable for the development of on-demand treatment/neuromodulation devices in humans. A significant challenge in this area is the lack of a “gold standard” for what constitutes a seizure – even among experts, inter-observer agreement hovers around 85%, so it is difficult to expect a device to improve upon this rate. A major opportunity here is the development of a large, annotated data set, hosted on the cloud, and openly accessible by the community and usable for the development and testing of new detection and prediction algorithms. Another opportunity is the leveraging of crowdsourcing platforms to facilitate the analysis of neural data by experts in other fields. For example, in a recent seizure detection competition hosted on the Kaggle website (https://www.kaggle.com/c/seizure-detection), the winning algorithm achieved a detection accuracy of 0.96 (area under the curve).

Another area of application for advanced data analysis is “wide-band” EEG – that is, EEG signals at the extreme low and high ends of the frequency spectrum. In humans, slow activity transients (<0.5 Hz) have been described in premature neonates55 and infantile spasms56, while very low frequency activity (<1 Hz) coincides with burst periods in post-asphyxia human neonates57 and lateralizes with the seizure onset zone in adults with temporal lobe epilepsy58. High frequency oscillations, including ripples (80–250 Hz) and fast ripples (250–500 Hz), have been suggested as a novel epileptogenic biomarker not only in humans but also in animals59.

Finally, significant opportunities are available in the realm of data sharing and data storage50, largely because of new possibilities afforded by the development of cloud-based computing. For instance, it seems likely that in the near future, researchers will be able to upload their data to the cloud and process it using standardized analysis and detection algorithms, without the need to write customized analysis scripts or maintain expensive computing infrastructure. The cloud might also allow the field to circumvent the wide variety of file formats currently used for data storage, many of which are proprietary to individual vendors. This is an important challenge to address, since even though several attempts have been made over time to develop a “universal” format44; 46; 48; 49; 60, the field is still nowhere close to a consensus. It is also critical for the field to develop a universal standard for the storage of metadata. Hopefully, ongoing efforts towards developing a universal data storage format47 will be successful and thus drastically lower the barrier to sharing data and reproducing analyses.

Conclusion

In stark contrast to clinical practice, widely accepted standards and experimental protocols do not exist for epilepsy research utilizing animal models. In truth, it is probably not possible to develop universal standards for all animal-based research on epilepsy, since the scope and intent of studies may vary drastically among laboratories. Instead, researchers will likely need to develop experimental procedures and protocols as appropriate for their needs, but must focus on appropriately documenting and reporting the specifics of their setup and analysis to ensure reproducibility and to facilitate translation to the clinic.

There are many important questions that researchers must consider when designing their recording setup and experimental protocol. For instance, is it preferable to record from many channels for a short period of time, or to record from fewer channels for a longer period of time? Is the intent of the experiment to establish that the subject does at some point develop seizures, or is the intent to document the number and severity of seizures? Is it necessary to obtain very high-resolution recordings (e.g., high sampling frequency), for example to investigate high frequency activity in the model, or would a lower sampling frequency suffice? Our hope is that the present paper spurs investigators to consider such questions carefully while developing and implementing their experimental setup and analysis.

Supplementary Material

KEY POINTS.

In collaboration with the International League Against Epilepsy (ILAE) and American Epilepsy Society (AES), this work is part of a larger effort to harmonize video-electroencephalography interpretation and analysis methods across studies using in vivo and in vitro seizure and epilepsy models.

This manuscript describes standard data acquisition and data analysis techniques for use in the analysis of neural field recordings, specifically, electroencephalographic (EEG), electrocorticographic (ECoG), and stereo-EEG (SEEG) recordings.

For each topic addressed, this report lays out proposals with regard to data collection, data analysis, and documentation in an effort to specify analysis and reporting standards for high-quality research.

The goal of this workgroup is to develop and optimize depositories of annotated video-EEG data and software tools, accessible for all interested investigators, for the screening and analysis of epileptic or non-epileptic patterns of interest.

Acknowledgments

This manuscript is a product of the working group 5 of the TASK1 of the AES/ILAE Translational Research Task Force of the ILAE. We thank the members of the TASK1 and the Task Force for their feedback and reviewing this manuscript, and Dr. Lauren Harte-Hargrove for assistance during the preparation of the manuscript. Vadym Gnatkovsky acknowledges grant support from the Italian Ministry of Health. Tomonori Ono acknowledges grant support from the Japan Epilepsy Research Foundation (2016–2018). Jakub Otáhal acknowledges grant support by Project No. 15-08565S from Czech Science Foundation and No.15-33115A from Czech Health Research Council. Joost Wagenaar acknowledges grant support by NIH 1K01ES025436. William Stacey acknowledges grant support by NIH 1K08NS069783. Akio Ikeda acknowledges endowment from GlaxoSmithKline K.K., Nihon Kohden Corporation, Otsuka Pharmaceutical Co., and UCB Japan Co., Ltd. Brian Litt acknowledges grant support by NIH U24 NS063930. Aristea Galanopoulou acknowledges grant support by NINDS R01 NS091170, the US Department of Defense (W81XWH-13-1-0180), the CURE Infantile Spasms Initiative and research funding from the Heffer Family and the Segal Family Foundations and the Abbe Goldstein/Joshua Lurie and Laurie Marsh/ Dan Levitz families. We confirm that we have read the Journal’s position on issues involved in ethical publication and affirm that this report is consistent with those guidelines.

Footnotes

Conflict disclosures

This report was written by experts selected by the International League Against Epilepsy (ILAE) and was approved for publication by the ILAE. Opinions expressed by the authors, however, do not necessarily represent the policy or position of the ILAE. Reference to websites, products or systems that are being used for EEG acquisition, storage or analysis was based on the resources known to the co-authors of this manuscript and is done only for informational purposes. The AES/ILAE Translational Research Task Force of the ILAE is a non-profit society that does not preferentially endorse certain of these resources, but it is the readers’ responsibility to determine the appropriateness of these resources for their specific intended experimental purposes.

Jason Moyer is currently an employee of UCB, Inc; this position has no direct conflict of interest with the content of this manuscript. Joost Wagenaar is a founder and employee of Blackfynn Inc and a founder of IEEG.org. Brian Litt is a founder of Blackfynn Inc and IEEG.org. The remaining authors have no conflicts of interest.

References

- 1.Niedermeyer E. Historical aspects. In: Niedermeyer E, Lopes da Silva F, editors. Electroencephalography: Basic principles, clinical applications, and related fields. Lippincott Williams and Wilkins; Philadelphia, PA, USA: 2005. pp. 1–16. [Google Scholar]

- 2.Westbrook G. Seizures and epilepsy. In: Kandel E, Schwartz T, Jessell T, et al., editors. Principles of neural science. McGraw Hill Professional; 2013. pp. 1116–1139. [Google Scholar]

- 3.American Clinical Neurophysiology S. Guideline 6: A proposal for standard montages to be used in clinical eeg. J Clin Neurophysiol. 2006;23:111–117. doi: 10.1097/00004691-200604000-00007. [DOI] [PubMed] [Google Scholar]

- 4.Hirsch LJ, LaRoche SM, Gaspard N, et al. American clinical neurophysiology society's standardized critical care eeg terminology: 2012 version. J Clin Neurophysiol. 2013;30:1–27. doi: 10.1097/WNP.0b013e3182784729. [DOI] [PubMed] [Google Scholar]

- 5.Noachtar S, Binnie C, Ebersole J, et al. A glossary of terms most commonly used by clinical electroencephalographers and proposal for the report form for the eeg findings. The international federation of clinical neurophysiology. Electroencephalogr Clin Neurophysiol Suppl. 1999;52:21–41. [PubMed] [Google Scholar]

- 6.Institution IE. Iec 60601-1: Medical electrical equipment - part 1: General requirements for basic safety and essential performance. Editor (Ed)^(Eds) Book Iec 60601-1: Medical electrical equipment - part 1: General requirements for basic safety and essential performance. 2005 [Google Scholar]

- 7.Sherman-Gold R. The axon guide for electrophysiology and biophysics: Laboratory techniques. Foster City, CA, USA: 1993. [Google Scholar]

- 8.Isley MR, Krauss GL, Levin KH, et al. Electromyography/electroencephalography. SpaceLabs Medical. 1993 [Google Scholar]

- 9.Nagel JH. Biopotential amplifiers. In: Bronzino JD, Peterson DR, editors. The biomedical engineering handbook, vol. 4: Medical devices and engineering. CRC Press, LLC; Boca Raton, FL, USA: 2014. [Google Scholar]

- 10.Olson WH. Electrical safety. In: Webster JG, editor. Medical instrumentation: Application and design. John Wiley & Sons; New York, NY, USA: 2010. pp. 638–675. [Google Scholar]

- 11.Mathew G. Medical devices isolation: How safe is safe enough. [Accessed July 31, 2016];2002 Available at: https://www.wipro.com/documents/whitepaper/Whitepaper-MedicalDevicesIsolation-%C3%B4Howsafeissafeenough%C3%B6.pdf.

- 12.Prutchi D, Norris M. Design and development of medical electronic instrumentation: A practical perspective of the design, construction, and test of medical devices. John Wiley & Sons; New York, NY, USA: 2005.

- 13.Webster JG. Amplifiers and signal processing. In: Webster JG, editor. Medical instrumentation: Application and design. John Wiley & Sons; New York, NY, USA: 2010. pp. 91–125.

- 14.Reilly EL. EEG recording and operation of the apparatus. In: Niedermeyer E, Lopes da Silva F, editors. Electroencephalography: Basic principles, clinical applications, and related fields. Lippincott Williams and Wilkins; Philadelphia, PA, USA: 2005. pp. 139–159.

- 15.Bertram EH. Monitoring for seizures in rodents. In: Pitkanen A, Schwartzkroin PA, Moshe SL, editors. Models of seizures and epilepsy. Elsevier Academic Press; Burlington, MA, USA: 2006. pp. 569–582.

- 16.Rensing NR, Guo D, Wong M. Video-EEG monitoring methods for characterizing rodent models of tuberous sclerosis and epilepsy. In: Weichhart T, editor. mTOR: Methods and protocols. Humana Press; New York, NY, USA: 2012. pp. 373–391.

- 17.Galanopoulou AS, Kokaia M, Loeb JA, et al. Epilepsy therapy development: Technical and methodologic issues in studies with animal models. Epilepsia. 2013;54(Suppl 4):13–23. doi: 10.1111/epi.12295.

- 18.Smith SW. The scientist and engineer's guide to digital signal processing. California Technical Publishers; 1997.

- 19.Krauss GL, Webber WRS. Digital EEG. In: Niedermeyer E, Lopes da Silva F, editors. Electroencephalography: Basic principles, clinical applications, and related fields. Lippincott Williams and Wilkins; Philadelphia, PA, USA: 2005. pp. 797–813.

- 20.Lesser RP, Webber WRS. Principles of computerized epilepsy monitoring. In: Niedermeyer E, Lopes da Silva F, editors. Electroencephalography: Basic principles, clinical applications, and related fields. Lippincott Williams and Wilkins; Philadelphia, PA, USA: 2005. pp. 791–796.

- 21.Shannon CE. Communication in the presence of noise. Proceedings of the Institute of Radio Engineers. 1949;37:10–21.

- 22.Nyquist H. Certain topics in telegraph transmission theory. Transactions of the American Institute of Electrical Engineers. 1928;47:617–644.

- 23.Mainardi LT, Bianchi AM, Cerutti S. Digital biomedical signal acquisition and processing. In: Bronzino JD, Peterson DR, editors. The biomedical engineering handbook, vol. 3: Biomedical signals, imaging, and informatics. CRC Press LLC; 2014.

- 24.van Drongelen W. Signal processing for neuroscientists: An introduction to the analysis of physiological signals. Academic Press; Burlington, MA, USA: 2006.

- 25.Widmann A, Schroger E, Maess B. Digital filter design for electrophysiological data--a practical approach. J Neurosci Methods. 2015;250:34–46. doi: 10.1016/j.jneumeth.2014.08.002.

- 26.Ifeachor E, Jervis B. Digital signal processing: A practical approach. 2002.

- 27.Gliske SV, Irwin ZT, Chestek C, et al. Effect of sampling rate and filter settings on high frequency oscillation detections. Clin Neurophysiol. 2016;127:3042–3050. doi: 10.1016/j.clinph.2016.06.029.

- 28.Practical introduction to digital filter design. [Accessed July 31, 2016]; Available at: http://www.mathworks.com/help/signal/examples/practical-introduction-to-digital-filter-design.html?requestedDomain=www.mathworks.com.

- 29.Wesson KD, Ochshorn RM, Land BR. Low-cost, high-fidelity, adaptive cancellation of periodic 60 Hz noise. J Neurosci Methods. 2009;185:50–55. doi: 10.1016/j.jneumeth.2009.09.008.

- 30.Northrop RB. Signals and systems analysis in biomedical engineering. CRC Press, Taylor & Francis Group; Boca Raton, FL: 2010.

- 31.Qian S, Chen D. Joint time-frequency analysis: Methods and applications. PTR Prentice Hall; Upper Saddle River, NJ: 1996.

- 32.Walker JS. A primer on wavelets and their scientific applications. Chapman and Hall/CRC; 2008.

- 33.Castellanos NP, Makarov VA. Recovering EEG brain signals: Artifact suppression with wavelet enhanced independent component analysis. J Neurosci Methods. 2006;158:300–312. doi: 10.1016/j.jneumeth.2006.05.033.

- 34.Wilson SB, Emerson R. Spike detection: A review and comparison of algorithms. Clin Neurophysiol. 2002;113:1873–1881. doi: 10.1016/s1388-2457(02)00297-3.

- 35.Latka M, Was Z, Kozik A, et al. Wavelet analysis of epileptic spikes. Phys Rev E Stat Nonlin Soft Matter Phys. 2003;67:052902. doi: 10.1103/PhysRevE.67.052902.

- 36.del Campo CM, Velazquez JL, Freire MA. EEG recording in rodents, with a focus on epilepsy. Curr Protoc Neurosci. 2009;Chapter 6:Unit 6.24. doi: 10.1002/0471142301.ns0624s49.

- 37.Nolan H, Whelan R, Reilly RB. FASTER: Fully automated statistical thresholding for EEG artifact rejection. J Neurosci Methods. 2010;192:152–162. doi: 10.1016/j.jneumeth.2010.07.015.

- 38.Kelleher D, Temko A, O'Regan S, et al. Parallel artefact rejection for epileptiform activity detection in routine EEG. Conf Proc IEEE Eng Med Biol Soc. 2011;2011:7953–7956. doi: 10.1109/IEMBS.2011.6091961.

- 39.LeVan P, Urrestarazu E, Gotman J. A system for automatic artifact removal in ictal scalp EEG based on independent component analysis and Bayesian classification. Clin Neurophysiol. 2006;117:912–927. doi: 10.1016/j.clinph.2005.12.013.

- 40.Chaumon M, Bishop DV, Busch NA. A practical guide to the selection of independent components of the electroencephalogram for artifact correction. J Neurosci Methods. 2015;250:47–63. doi: 10.1016/j.jneumeth.2015.02.025.

- 41.Delorme A, Sejnowski T, Makeig S. Enhanced detection of artifacts in EEG data using higher-order statistics and independent component analysis. Neuroimage. 2007;34:1443–1449. doi: 10.1016/j.neuroimage.2006.11.004.

- 42.Hamaneh MB, Chitravas N, Kaiboriboon K, et al. Automated removal of EKG artifact from EEG data using independent component analysis and continuous wavelet transformation. IEEE Trans Biomed Eng. 2014;61:1634–1641. doi: 10.1109/TBME.2013.2295173.

- 43.Lopes da Silva F. EEG analysis: Theory and practice. In: Niedermeyer E, Lopes da Silva F, editors. Electroencephalography: Basic principles, clinical applications, and related fields. Lippincott Williams and Wilkins; Philadelphia, PA, USA: 2005. pp. 1199–1231.

- 44.Brinkmann BH, Bower MR, Stengel KA, et al. Multiscale electrophysiology format: An open-source electrophysiology format using data compression, encryption, and cyclic redundancy check. Conf Proc IEEE Eng Med Biol Soc. 2009;2009:7083–7086. doi: 10.1109/IEMBS.2009.5332915.

- 45.Brinkmann BH, Bower MR, Stengel KA, et al. Large-scale electrophysiology: Acquisition, compression, encryption, and storage of big data. J Neurosci Methods. 2009;180:185–192. doi: 10.1016/j.jneumeth.2009.03.022.

- 46.Stead M, Halford JJ. A proposal for a standard format for neurophysiology data recording and exchange. J Clin Neurophysiol. 2016. doi: 10.1097/WNP.0000000000000257.

- 47.Teeters JL, Godfrey K, Young R, et al. Neurodata without borders: Creating a common data format for neurophysiology. Neuron. 2015;88:629–634. doi: 10.1016/j.neuron.2015.10.025.

- 48.Kemp B, Varri A, Rosa AC, et al. A simple format for exchange of digitized polygraphic recordings. Electroencephalogr Clin Neurophysiol. 1992;82:391–393. doi: 10.1016/0013-4694(92)90009-7.

- 49.Kemp B, Olivan J. European data format 'plus' (EDF+), an EDF alike standard format for the exchange of physiological data. Clin Neurophysiol. 2003;114:1755–1761. doi: 10.1016/s1388-2457(03)00123-8.

- 50.Wagenaar JB, Worrell GA, Ives Z, et al. Collaborating and sharing data in epilepsy research. J Clin Neurophysiol. 2015;32:235–239. doi: 10.1097/WNP.0000000000000159.

- 51.Liang SF, Shaw FZ, Young CP, et al. A closed-loop brain computer interface for real-time seizure detection and control. Conf Proc IEEE Eng Med Biol Soc. 2010;2010:4950–4953. doi: 10.1109/IEMBS.2010.5627243.

- 52.Nelson TS, Suhr CL, Freestone DR, et al. Closed-loop seizure control with very high frequency electrical stimulation at seizure onset in the GAERS model of absence epilepsy. Int J Neural Syst. 2011;21:163–173. doi: 10.1142/S0129065711002717.

- 53.Zayachkivsky A, Lehmkuhle MJ, Dudek FE. Long-term continuous EEG monitoring in small rodent models of human disease using the EPOCH wireless transmitter system. J Vis Exp. 2015:e52554. doi: 10.3791/52554.

- 54.Kuzum D, Takano H, Shim E, et al. Transparent and flexible low noise graphene electrodes for simultaneous electrophysiology and neuroimaging. Nat Commun. 2014;5:5259. doi: 10.1038/ncomms6259.

- 55.Vanhatalo S, Palva JM, Andersson S, et al. Slow endogenous activity transients and developmental expression of K+-Cl- cotransporter 2 in the immature human cortex. Eur J Neurosci. 2005;22:2799–2804. doi: 10.1111/j.1460-9568.2005.04459.x.

- 56.Myers KA, Bello-Espinosa LE, Wei XC, et al. Infraslow EEG changes in infantile spasms. J Clin Neurophysiol. 2014;31:600–605. doi: 10.1097/WNP.0000000000000109.

- 57.Thordstein M, Lofgren N, Flisberg A, et al. Infraslow EEG activity in burst periods from post asphyctic full term neonates. Clin Neurophysiol. 2005;116:1501–1506. doi: 10.1016/j.clinph.2005.02.025.

- 58.Vanhatalo S, Holmes MD, Tallgren P, et al. Very slow EEG responses lateralize temporal lobe seizures: An evaluation of non-invasive DC-EEG. Neurology. 2003;60:1098–1104. doi: 10.1212/01.wnl.0000052993.37621.cc.

- 59.Bragin A, Engel J, Jr, Staba RJ. High-frequency oscillations in epileptic brain. Curr Opin Neurol. 2010;23:151–156. doi: 10.1097/WCO.0b013e3283373ac8.

- 60.Open, industry-standard file format for neurophysiological data. [Accessed July 31, 2016];2000 Available at: http://neuroshare.sourceforge.net/API-Documentation/sfn00meeting_agenda.pdf.

Associated Data
