Abstract

Computational models of classical conditioning have made significant contributions to the theoretical understanding of associative learning, yet they still struggle when the temporal aspects of conditioning are taken into account. Interval timing models have contributed a rich variety of time representations and provided accurate predictions for the timing of responses, but they usually have little to say about associative learning. In this article we present a unified model of conditioning and timing that is based on the influential Rescorla-Wagner conditioning model and the more recently developed Timing Drift-Diffusion model. We test the model by simulating 10 experimental phenomena and show that it can provide an adequate account of 8 and a partial account of the other 2. We argue that the model can account for more phenomena in the chosen set than other models of similar scope: CSC-TD, MS-TD, Learning to Time and Modular Theory. A comparison and analysis of the mechanisms in these models is provided, with a focus on the types of time representation and associative learning rule used.

Author summary

How does the time of events affect the way we learn about associations between these events? Computational models have made great contributions to our understanding of associative learning, but they usually do not perform very well when time is taken into account. Models of timing have reached high levels of accuracy in describing timed behaviour, but they usually do not have much to say about associations. A unified approach would involve combining associative learning and timing models into a single framework. This article takes just this approach. It combines the influential Rescorla-Wagner associative model with a timing model based on the Drift-Diffusion process, and shows how the resultant model can account for a number of learning and timing phenomena. The article also compares the new model to others that are similar in scope.

Introduction

Classical conditioning theories aim to understand how associations between stimuli are learned. Ever since Pavlov [1], the process of association formation has been understood to depend crucially on the temporal relations between stimuli [2, 3, 4]. Yet classical conditioning theories have so far struggled to incorporate time as an attribute of the stimulus representation. The study of time as a mental representation is the object of a separate field known as interval timing. Interval timing theories have produced a rich variety of time representations [5, 6, 7, 8, 9], and are therefore a natural place to look for ways to integrate time into classical conditioning. In this paper we first analyse previous efforts in this direction before introducing a new hybrid classical conditioning and timing model.

The process of association formation is understood to be of fundamental survival value for both human and non-human animals. Prediction, which forms the core of classical conditioning, allows the organism to adapt to significant events in its surroundings. A prototypical experiment in classical conditioning, a type of associative learning, involves a neutral stimulus and an unconditioned stimulus (US) which is capable of eliciting an unconditioned response (UR). After repeated pairings of both stimuli in a specified order and temporal distance, the neutral stimulus comes to elicit a response similar to the UR. This response is called the conditioned response (CR) and the neutral stimulus is said to have become a conditioned stimulus (CS). Classical conditioning theories typically conceptualize this process as the formation of a link (association) between the internal representations of CS and US. Their basic building blocks are [10, 11]: (a) the representations of stimuli, and (b) a learning rule to update the association weights between these representations. Although most theories do not attempt to find neurophysiological correlates, these constructs are nonetheless commonly assumed to be instantiated by (a) neural activity in the form of spike rates, and (b) synaptic plasticity [12, 13, 14]. These assumptions have found some support in the neuroscientific literature, particularly in studies of the role of dopamine in reward prediction [15, 16, 17, 18]. However, it is important to note that there is still no widely accepted, complete neural mechanism for classical conditioning and that most theories stay at the computational level of explanation.

Stimulus representations are generally thought of as neural activation that is elicited by the stimulus, which may linger for a short time as a ‘trace’ after stimulus offset. Representations are commonly one of two types: molar or componential. Molar (or elemental) trace theories treat the stimulus as a single conceptualized unit whose activity is usually assumed to peak quite early following stimulus onset, and then gradually decrease [19, 20, 21, 22, 23, 24]. In contrast, componential trace theories break down the CS representation into smaller units, each capable of being associated with the US, with some units more active early during the CS and others late, but all leaving a trace after activation [25, 26, 27, 28].

Learning rules may be classified according to different criteria, one of which arose from an important period in the recent history of the field. Prior to the 1970s, conditioning theory was rooted in the stimulus-response tradition, which attributed crucial importance to the temporal pairing, or contiguity, of stimuli for the development of associations. The linear operator learning rule [19] is one of the products of that period. In the late 1960s and early 1970s, important experimental discoveries using compound stimuli, that is, stimuli formed by combining other individual stimuli, showed the contiguity view to be incomplete [29, 30]. These compound experiments indicated that the formation of associations also depended on the reinforcement history of the individual elements forming the compound. This led to the development of new learning rules [31, 32, 33] capable of combining individual reinforcement histories in compounds, which the linear operator rule cannot do. The first, and arguably still the most influential, of these learning rules is the Rescorla-Wagner rule [RW, 31]. It became famous as the first model able to account for the blocking effect [34], in which a novel CS does not become associated with the US if it is reinforced only in compound with a previously conditioned CS.

The CR is usually not a single event. Organisms time their responses so that they emerge gradually during the CS and reach maximum frequency or intensity around the time of reinforcement. Interval timing theories have attempted to account for this timing of the CR. One of the fundamental properties of timing behaviour is that it is approximately timescale invariant, i.e. the whole response distribution scales with the interval being timed [35, 36]. One consequence of timescale invariance is that the coefficient of variation (the standard deviation divided by the mean) of the dependent measure of timing is approximately constant. A number of timing models have put forward explanations for timescale invariance and other timing properties (how time is encoded, how it is stored in memory and how it is translated into behaviour) by recourse to an internal pacemaker. The most influential pacemaker-based timing theory to date is Scalar Expectancy Theory [SET, 5, 37]. The pacemaker is supposed to mark the passage of time by emitting pulses. These pulses can be gated to an accumulator via a switch which closes at the start of a relevant interval and opens when the interval is finished. The accumulator count is kept in working memory. At the end of the interval the current count is transferred to a long-term reference memory. Behaviour is guided by a comparator which compares the count in working memory to the one retrieved from reference memory.
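The pacemaker-switch-accumulator-comparator pipeline can be sketched in a few lines. This is a simplified illustration under stated assumptions, not SET's full specification: the pacemaker is taken to be perfectly regular, reference memory is distorted by multiplicative noise, and the comparator uses a ratio rule with threshold `b` (a formulation often used with SET). The function name `set_response_window` and all parameter values are hypothetical.

```python
import random


def set_response_window(interval, rate=10.0, b=0.3, mem_cv=0.0, seed=0):
    """Simplified SET-style pacemaker-accumulator with a ratio comparator.

    interval: reinforced interval (s) stored in reference memory in training
    rate:     pacemaker pulses per second (assumed regular, not Poisson)
    b:        relative threshold of the comparator (assumed ratio rule)
    mem_cv:   CV of multiplicative noise on the reference-memory sample
    Returns (start, stop) of the period in which the comparator says "respond".
    """
    rng = random.Random(seed)
    # Reference memory: pulse count at reinforcement, scaled by memory noise.
    reference = interval * rate * rng.gauss(1.0, mem_cv)
    start = stop = None
    # Test trial: the switch closes at stimulus onset and pulses accumulate.
    for count in range(1, int(3 * interval * rate) + 1):
        t = count / rate
        # Comparator: respond while working and reference memory are close,
        # relative to the reference value.
        responding = abs(reference - count) / reference < b
        if responding and start is None:
            start = t
        elif not responding and start is not None:
            stop = t
            break
    return start, stop


# Without memory noise the response window scales with the interval.
print(set_response_window(10.0))  # (7.1, 13.0)
print(set_response_window(20.0))  # (14.1, 26.0)
```

Because the comparison is relative to the stored count, doubling the interval roughly doubles both edges of the response window, which is the scalar property in its simplest form.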

In spite of the considerable overlap, interval timing and classical conditioning are not easily integrated. Most conditioning theories are trial-based; that is, they treat the trial as the unit of time. A trial is generally taken to be the state in which a CS (or a compound of CSs) is present and which may or may not contain a US (or USs). The most influential model in this category is the Rescorla-Wagner model [RW, 31]. In order to account for different stimulus durations, trial-based theories like RW must resort to some sort of time discretization, usually by subdividing the trial into ‘mini-trials’. Each mini-trial is treated as a trial in its own right and used to update associative links. This gives rise to the problem of choosing a particular discretization. Also, given that humans experience the passage of time as a continuous flow, it seems unlikely that animals discretize their conditioning experience in such a way. A more realistic approach to timing is taken by real-time theories, which attempt to formalize the concept of a continuous flow of time.

The Temporal Difference model [TD, 38, 39] was one of the earliest, and is still one of the most influential, real-time classical conditioning models. It may be thought of as a real-time version of RW. When used with stimulus representations such as the Complete Serial Compound [CSC, 40], Microstimuli [MS, 28, 41] and the Simultaneous and Serial Configural-cue Compound [SSCC, 42], it is capable of reproducing some timing phenomena, like the gradual increase in anticipatory responding that occurs before a signalled reinforcer, and the lower response rates observed during longer CSs. However, only MS-TD has a time representation capable of approximating the most fundamental property of timing, timescale invariance. Another issue with the stimulus representations for TD is that their approach to timing resembles the strategy used by trial-based models, i.e. they all split the stimulus into a number of smaller units or states, their number being directly proportional to the duration of the stimulus. Given that conditioning is observed on timescales ranging from milliseconds to hours [43, p. 189], this can lead to a very large number of units being required. The stimulus as a whole is no doubt a complex entity, and the brain may employ a large number of neurons to represent it, but dedicating so many resources to timing alone might not be the most energy-efficient strategy. Also, TD and its stimulus representations do not usually account for changes in timing that are not tied to reinforcement. Animals time the occurrence of different events, such as the onset and offset of stimuli [see for example 44], but TD usually only allows for the timing of rewards.

Timing models, for their part, have made even fewer attempts at integrating aspects of classical conditioning. A notable exception is the Learning to Time [LeT, 7, 45] model. It represents the passage of time by transitioning between internal states according to a stochastic pacemaker, an idea borrowed from an earlier timing model called the Behavioural Theory of Time [6]. Learning takes place by associating reinforcement presentation with the current internal state according to the linear operator, a standard classical conditioning rule. LeT offers an account of the basic dynamics of association formation, but it cannot explain cue-competition phenomena like blocking. In a blocking procedure, a CS is first paired with a US until a CR is acquired. The same CS is then presented together with a novel CS and both are paired with the US for a few trials. If the novel CS is then presented alone, it elicits little or no responding, and so it is said to be blocked by the first CS. LeT’s learning rule, the linear operator, has largely been supplanted by RW in classical conditioning modelling precisely because it cannot explain cue-competition phenomena. Like TD, LeT also employs a representation that requires as many units as time steps, making it a resource-intensive model.

Modular Theory [MoT, 46, 47] is a timing model which, because of its explicit goal of integrating timing and learning, may be called a hybrid theory. MoT has introduced novelties that allow it to account for some aspects of the dynamics of classical conditioning that LeT cannot. Its architecture is different from the connectionist one (states or units connected by modifiable links) assumed by RW, TD and LeT. Instead, it uses a more cognitive architecture, with separate information processing stages that deal with perception, memory and decision. It postulates two separate memories: a pattern memory, which stores CS durations, and a strength memory, which stores the associative strength between each pattern memory and the US. This separation allows MoT to deal with more complex situations involving the dynamics of learning during acquisition and extinction. However, MoT also relies on the linear operator to update its strength memory, which, as with LeT, prevents it from accounting for cue-competition phenomena.

Although the models mentioned above, namely TD, LeT and MoT, have accomplished a great deal in terms of bringing together timing and conditioning, each has its own strengths and weaknesses, as outlined above. In this paper we introduce a model that tries to address some of these weaknesses while preserving the strengths. More specifically, the model has the following strengths. It represents time in real time. Like MoT and unlike LeT and TD, its time representation does not require an arbitrarily large number of units or states. Similarly to TD but unlike LeT and MoT, it uses a learning rule that preserves the main features of RW which allow it to account for compound phenomena. It can time the onset and offset of all stimuli, not only of rewards, and store a memory for each. It includes two update rules: one for timing, driven by time markers, and another for associations, driven by the US. Hence, mere exposure to a stimulus causes the model to learn and store its duration. This capability is not present in models that depend only on an associative learning rule to also learn about time, such as TD and LeT.

This new model is essentially a way to connect one of the most influential classical conditioning theories, the Rescorla-Wagner model [31], with a recently developed timing theory called Timing Drift-Diffusion Model [TDDM, 48, 49]. The TDDM is based on the drift-diffusion model, widely used in decision making theory, and it provides an adaptive time representation that has commonalities with pacemaker-based models like SET and LeT [50]. These models postulate the existence of a pacemaker that emits pulses at a regular rate, which are then counted to mark the passage of time. To preserve timescale invariance they either postulate a specific type of noise in the memory saved for intervals and a ratio-based decision process (SET) or adapt the rate of pulses (LeT). The TDDM takes the latter route but sets a fixed threshold on pulse counting. To emphasize the unification of these two theories we call our proposal the Rescorla-Wagner Drift-Diffusion Model (RWDDM).

We evaluate RWDDM based on how well it can simulate the behaviour of animals in a number of experimental procedures. Many classical conditioning phenomena have been identified which collectively represent a significant challenge for any single model to explain. A recent list [51] has compiled 12 categories, which include acquisition, extinction, conditioned inhibition, stimulus competition, preexposure effects, temporal properties, among others. Of particular interest to a theory of timing and conditioning are phenomena that involve elements of both timing and conditioning. As we detail later, we have searched the literature for documented effects that can challenge the main mechanisms embodied in RWDDM.

We proceed by first introducing the new model. We compare its formalism with four models that have similar scope, namely CSC-TD, MS-TD, MoT and LeT. In the results section we present the phenomena we will simulate, followed by the results of our simulations, and compare them to the current explanations given by LeT, MoT and TD.

Model

We follow most classical conditioning theories in conceptualizing the conditioning process as the formation of an association between the internal representations of CS and US. Arguably, one of the most influential rules describing the evolution of this association through training is the Rescorla-Wagner [31] rule. As mentioned previously, other models exist which have a similar scope to RW, both trial-based [32, 33] and real-time [52, 23, 53]. However, our goal was to take advantage of TDDM’s time representation, so we sought a theoretical associative framework that could incorporate such a representation. Since trial-based conditioning theories lack any time representation, they are a natural place to start. Of these theories, RW is perhaps the simplest whilst retaining great explanatory power. Its basic formalism consists of the following rule for updating associative strength:

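$$V_i(n+1) = V_i(n) + \alpha\beta\, x_i(n)\left[\lambda - \sum_{j=1}^{l} V_j(n)\, x_j(n)\right] \qquad (1)$$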

where Vi(n) denotes the associative strength of CSi at trial n, λ is the asymptote of learning, which is set by the US representation, xi(n) marks the presence (xi = 1) or absence (xi = 0) of the i-th CS representation at trial n, 0 < α < 1 is a learning rate set by the CS and 0 < β < 1 is a learning rate set by the US. The summation term in eq (1) sums over all CSs present in the trial. The top panel of Fig 1 shows a diagram of a basic neural net for classical conditioning which serves as the architectural framework for both RW and RWDDM. The RW rule is used to update the links V1, …, Vl that connect the CS input nodes CS1, …, CSl to a summing junction Σ, which represents the summation term of the RW rule and sums the inputs it receives from the CSs j = 1, …, l present in the trial. This sum allows RW to combine (additively) the reinforcement history of each individual CS present in a compound trial. In the neural network literature, eq (1) is also known as the Widrow-Hoff rule [54] or the least-mean-squares rule [LMS; 55]. The relationship to the LMS rule is easier to see if we let y(n) = Σj Vj(n)xj(n) be the output of a learning unit that aims to predict a target λ given inputs xi by adapting the weights Vi. In classical conditioning, λ represents the maximum learning driven by a given outcome (the US), xi is the CS and Vi the associative strength. If we let δ(n) = λ − y(n) be the error between output and US, eq (1) can be obtained with the method of gradient descent by minimizing the squared error δ²(n) with respect to the weight Vi.
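The role of the summation term can be made concrete with a small blocking simulation. The sketch below applies eq (1) on a trial-by-trial basis and contrasts it with the linear operator rule mentioned in the Introduction, in which each CS is corrected only by its own error. It assumes equal learning rates for all CSs, λ = 1 on reinforced trials, and illustrative trial counts; the function names are hypothetical.

```python
def rw_update(V, present, lam, alpha=0.3, beta=0.5):
    """One Rescorla-Wagner trial (eq 1); absent CSs (x_i = 0) are unchanged."""
    total = sum(V[cs] for cs in present)  # summed prediction of all present CSs
    error = lam - total                   # shared prediction error
    for cs in present:
        V[cs] += alpha * beta * error


def linear_operator_update(V, present, lam, alpha=0.3, beta=0.5):
    """Linear operator: each present CS is corrected by its own error only."""
    for cs in present:
        V[cs] += alpha * beta * (lam - V[cs])


def blocking(update, phase1=50, phase2=10, lam=1.0):
    """Phase 1: A+ trials. Phase 2: AB+ compound trials. Returns final V."""
    V = {"A": 0.0, "B": 0.0}
    for _ in range(phase1):
        update(V, ["A"], lam)
    for _ in range(phase2):
        update(V, ["A", "B"], lam)
    return V


print(blocking(rw_update))               # B stays near zero: blocked
print(blocking(linear_operator_update))  # B acquires substantial strength
```

Under RW, A already predicts the US by the start of phase 2, so the shared error is close to zero and B gains almost nothing; under the linear operator, B's own error is large and it is not blocked.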

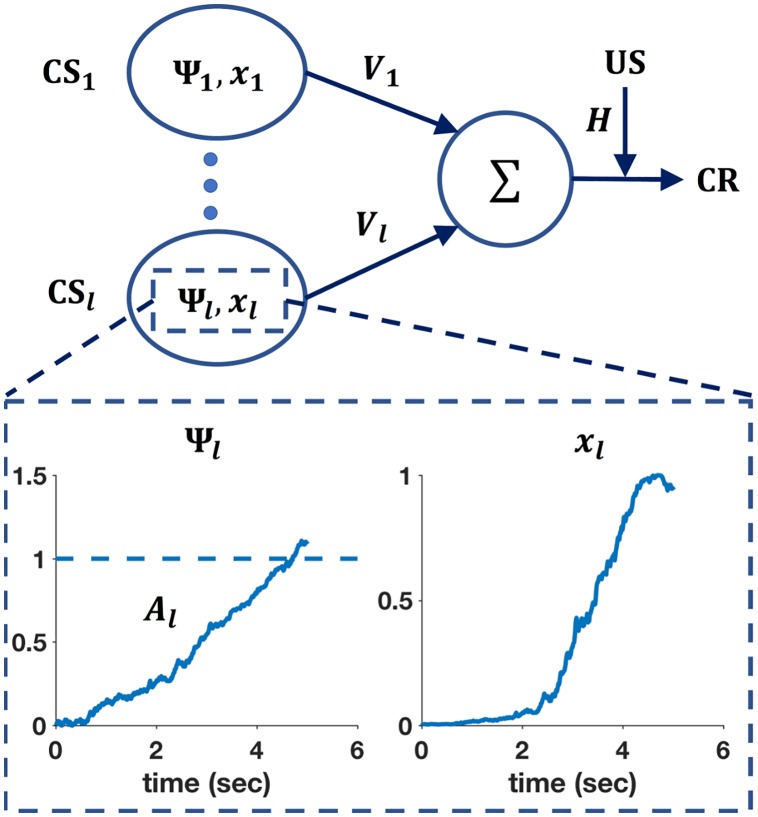

Fig 1. Connectionist diagram of RWDDM.

Each CS unit is connected to a summing junction (labelled Σ) via a modifiable link V. The output of the summing junction is the CR. The US is represented as a teaching signal with a fixed weight H. Each CS unit has its own timer Ψ and representation x. The bottom panel shows a zoomed-in view of the timer Ψl and CS representation xl associated with CSl. The timer slope Al is tuned to a 5-second CS duration.

In spite of its relative success in explaining a wide range of conditioning phenomena [for a list of successes, and failures, see 56], the Rescorla-Wagner rule lacks a mechanism to account for the microstructure of real-time responding during conditioning procedures. In terms of the order of CS-US presentation, conditioning procedures may be either forward (CS followed by US) or backward (US followed by CS). Two common types of forward conditioning are delay and trace. In delay conditioning the US always occurs a fixed time after CS onset. In trace conditioning the US occurs a fixed time after CS offset. After sufficient training with delay or trace conditioning, responding begins some time after CS onset and increases rapidly in frequency until it reaches a maximum level, where it stays until US onset [57]. The RW rule alone does not account for CR level as a function of time; this role is usually fulfilled by the choice of CS representation. We base our choice on a timing model called the Timing Drift-Diffusion Model [TDDM, 49, 48, 58, 59]. We chose the TDDM because it possesses a number of interesting features. It belongs to the family of pacemaker-based models, like SET and LeT [50], which are arguably two of the most successful timing theories to date. The TDDM is a modified version of the drift-diffusion models that have been very successful at modelling reaction times in decision-making tasks [60, 61]. Evidence of climbing neural activity related to timing that resembles the TDDM has been extensively reported [62, 63, 64, 65, 66]. The TDDM consists of a drift-diffusion process with an adaptive drift or rate. The drift-diffusion process is defined by a continuous random walk called a Wiener diffusion process, whose two main components are the drift and normally distributed noise. The Wiener diffusion process may be visualized by imagining a two-dimensional grid with time on the horizontal axis and displacement on the vertical axis.
If we imagine a purely linear, non-random walk that starts at the origin and moves up at a constant rate, the resulting walk is a straight line and the drift is equal to the slope of the line. With normally distributed noise added, the walk becomes a random walk that looks like a jagged curve, since at each time step the displacement is perturbed up or down by a random amount. For the purposes of timing, the slope is always positive and the random walk can be interpreted as a noisy accumulator (or timer) Ψ(t), which starts at the onset of a salient stimulus and stops (and resets) at its offset. In a conditioning experiment the CS is usually the most salient stimulus in the uneventful context of the conditioning chamber, so it is well placed to serve as a time marker. When timing starts, the accumulator is incremented at each time step according to

$$\Psi_i(t + \Delta t) = \Psi_i(t) + A_i(n)\,\Delta t + m\,\epsilon\,\sqrt{A_i(n)\,\Delta t} \qquad (2)$$

where Ai(n) is the rate (slope) of accumulation for CSi in trial n, m is a noise factor, Δt is the time-step size and ε ∼ N(0, 1) denotes a sample drawn from the standard normal distribution. An interval is timed by the rise of the accumulator to a fixed threshold, say Ψi(t) = θ. The TDDM adjusts to new intervals by keeping the threshold fixed and adapting the rate of accumulation Ai(n). The bottom left panel of Fig 1 shows a typical trajectory (or realization) of a CS’s TDDM timer after one 5-second trial.
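A single timer trajectory can be simulated by iterating the accumulator update until it first reaches θ. The sketch below assumes the noise term has standard deviation m√(A Δt) per step (our reading of eq (2)); the function name `time_interval`, the step size and the seed are illustrative choices.

```python
import math
import random


def time_interval(A, theta=1.0, m=0.15, dt=0.01, rng=None):
    """Run the accumulator of eq (2) from zero until it first reaches theta.

    Returns the first-passage time, which serves as the timer's estimate of
    the interval it is tuned to (about theta / A on average).
    """
    rng = rng if rng is not None else random.Random()
    psi, steps = 0.0, 0
    while psi < theta:
        eps = rng.gauss(0.0, 1.0)  # sample from the standard normal
        psi += A * dt + m * eps * math.sqrt(A * dt)
        steps += 1
    return steps * dt


rng = random.Random(42)
A = 1.0 / 5.0  # slope tuned to a 5 s CS with theta = 1
estimates = [time_interval(A, rng=rng) for _ in range(1000)]
print(sum(estimates) / len(estimates))  # close to 5 s
```

Individual estimates scatter around θ/A; only their average matches the tuned interval, which is the source of the timing variability discussed below.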

In its original formulation [48, 49] the accumulation process was not allowed to continue beyond the threshold value θ, a constraint that gave rise to two distinct rules for rate adaptation: one for when the US arrived earlier than expected and another for when it arrived later. The constraint fixing a maximum level of accumulation was driven by the neurophysiological assumption that a linear neural accumulator is unlikely to keep performing effectively beyond a certain level. The neural implementation so far proposed for TDDM’s linear accumulator [49] is based on a feedback control mechanism that is tuned to balance excitation and inhibition in a neuron population. Tuning of this kind requires great computational precision, which may be difficult to maintain for long in a biological system. Neurophysiology notwithstanding, we drop that requirement here for simplicity and instead use a single update rule, which we derive by the method of gradient descent. The model learns a new interval by adapting its slope Ai so that the accumulator Ψi reaches the threshold value θ at the target time t*, which may be, for example, the time of reinforcement. The target slope is therefore θ/t*. The error δ(n) between the target slope and the current slope is δ(n) = θ/t* − Ai(n). By minimizing the squared error δ²(n) using gradient descent we can derive the slope update rule. The squared error as a function of Ai forms a parabola. Moving in the direction opposite to its gradient, with a step of size αt/2, we obtain:

$$A_i(n+1) = A_i(n) - \frac{\alpha_t}{2}\,\frac{\partial \delta^2(n)}{\partial A_i(n)} \qquad (3)$$

Evaluating the derivative, ∂δ²(n)/∂Ai(n) = −2δ(n), yields

$$A_i(n+1) = A_i(n) + \alpha_t\left(\frac{\theta}{t^*} - A_i(n)\right) \qquad (4)$$

Since the organism only has access to the psychological time given by its internal timing mechanism, and not to the physical time t*, we assume that an internal estimate of t* is formed by dividing the current pacemaker count by the current slope, t* = Ψi(t*)/Ai(n). Substituting this estimate into eq (4) we get:

$$A_i(n+1) = A_i(n) + \alpha_t\left(\frac{\theta\,A_i(n)}{\Psi_i(t^*)} - A_i(n)\right) \qquad (5)$$

Hence, the update rule for slope Ai to be applied at target time t* (the end of the trial or of the interval being timed) is

$$A_i(n+1) = A_i(n) + \alpha_t\,A_i(n)\,\frac{\theta - \Psi_i(t^*)}{\Psi_i(t^*)} \qquad (6)$$

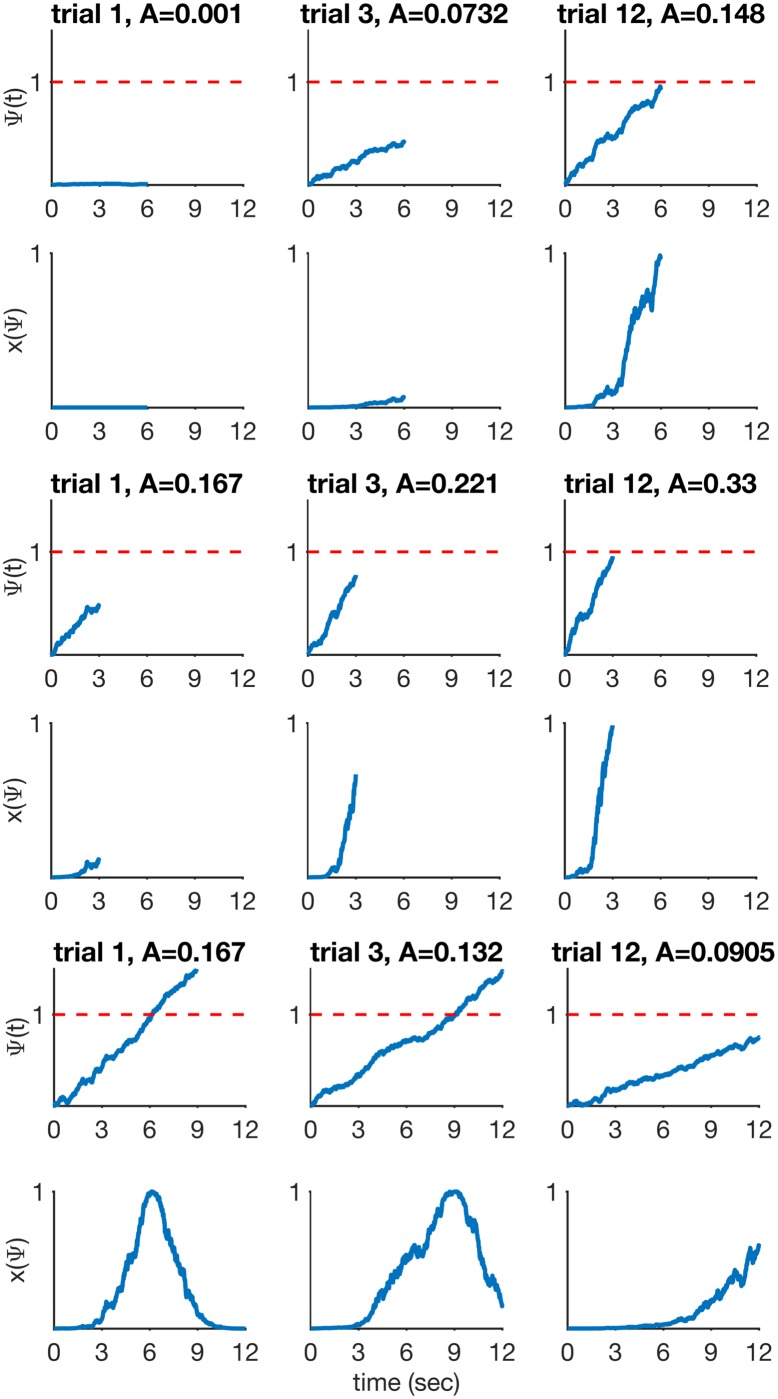

Eq (6) is the slope update rule we use. Note that n above indexes the occurrences of the specific interval that the timer is timing. These intervals may be the duration between CS onset and US onset (the usual ‘trial’ in delay conditioning, for example), but they may also be any other salient time interval, such as the CS or intertrial duration. Fig 2 shows timer slope adaptation in three timing scenarios: timing a novel stimulus (row 1), timing a long-to-short change in stimulus duration (row 3), and timing a short-to-long change in stimulus duration (row 5).

Fig 2. RWDDM timer and CS representation during three 12-trial timing scenarios.

Top two rows: timing a novel 6-second stimulus. The timer starts with a low baseline slope (A = 0.001) on trial 1 and gradually adapts over training to approximately the required slope. Middle two rows: stimulus duration change from 6 to 3 seconds. Bottom two rows: stimulus duration change from 6 to 12 seconds. Parameters: αt = 0.215, θ = 1, σ = 0.25, m = 0.15.

In the top row of Fig 2, and throughout the paper, we assume that the initial value of slope A for a novel stimulus is so low as to overestimate the stimulus duration. This overestimation will only last for a few trials, the number of which can be made arbitrarily small by choosing a high adaptation rate αt. Alternatively, it would be possible to use a very high initial value for A so as to underestimate the stimulus duration. However, this alternative does not seem neurophysiologically plausible, as the brain would need to keep a pool of neurons firing very rapidly as its ‘standby’ timer.
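The slope dynamics of eq (6) can be traced with a noise-free version of the timer (m = 0 in eq (2)), where Ψ(t*) is simply A·t*. This deterministic simplification is our assumption for illustration; it reproduces the qualitative shape of the Fig 2 scenarios (a novel 6 s stimulus from A = 0.001, then a change to 3 s) using αt = 0.215 and θ = 1 from the figure caption. The function names are hypothetical.

```python
def slope_update(A, psi_target, theta=1.0, alpha_t=0.215):
    """Eq (6): one slope update, applied at the target time t*.

    psi_target is the accumulator value Psi_i(t*) read out at t*.
    """
    return A + alpha_t * A * (theta - psi_target) / psi_target


def adapt(A, duration, n_trials, theta=1.0, alpha_t=0.215):
    """Noise-free timer (m = 0 in eq 2), so Psi(t*) = A * duration exactly."""
    history = []
    for _ in range(n_trials):
        psi_target = A * duration  # accumulator at stimulus offset
        A = slope_update(A, psi_target, theta, alpha_t)
        history.append(A)
    return history


# Novel 6 s stimulus from a low baseline slope, then a change to 3 s.
hist_6 = adapt(0.001, 6.0, 20)
hist_3 = adapt(hist_6[-1], 3.0, 20)
print(hist_6[-1], 1 / 6)
print(hist_3[-1], 1 / 3)
```

In the noise-free case Ψ(t*) = A·t*, so eq (6) reduces exactly to eq (4) and the slope approaches θ/t* geometrically, with the remaining error shrinking by a factor (1 − αt) per trial. A larger αt therefore shortens the initial overestimation phase.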

In TDDM, timescale invariance arises from the nature of the noise in the accumulator. After repeated training, say in delay conditioning with a CS of fixed duration, eq (6) will converge to a value of Ai which will make the accumulator reach the threshold value θ at the time of stimulus offset, but only on average. In some trials the accumulator will reach the threshold sooner, in which case the organism will underestimate the stimulus duration. In other trials the accumulator will reach the threshold later, causing overestimation. The variability of this time estimate relative to the mean is given by the coefficient of variation (CV). It has been well established experimentally that the CV of time estimates in humans and other animals is approximately constant over a wide timescale [35, 67, 36]. The CV of TDDM’s time estimate is [see equation 3 in 68]

$$\mathrm{CV} = \frac{m}{\sqrt{\theta}} \qquad (7)$$

which depends only on the choice of threshold θ and noise factor m. As these are constant, the CV of TDDM’s time estimate is also constant. Note that because the timer adapts its slope gradually, if the duration of a CS is changed, CV measurements will only match the one given by eq (7) after the slope has finished adapting. The number of trials to adaptation will vary depending on the adaptation rate αt.
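Timescale invariance can be checked numerically by timing two very different durations with a fully adapted slope (A = θ/t*) and comparing the CV of the first-passage times. The sketch assumes a per-step noise standard deviation of m√(A Δt), under which the CV of the first-passage time is m/√θ (0.15 here, with θ = 1 and m = 0.15); sample size, step size and seed are arbitrary illustrative choices.

```python
import math
import random
import statistics


def first_passage(A, theta, m, dt, rng):
    """Time for the eq (2) accumulator to first reach theta."""
    psi, steps = 0.0, 0
    while psi < theta:
        psi += A * dt + m * rng.gauss(0.0, 1.0) * math.sqrt(A * dt)
        steps += 1
    return steps * dt


def timer_cv(duration, theta=1.0, m=0.15, dt=0.01, n=1000, seed=0):
    """Empirical CV of the time estimate for a slope adapted to `duration`."""
    rng = random.Random(seed)
    A = theta / duration  # converged slope: reaches theta at t* on average
    times = [first_passage(A, theta, m, dt, rng) for _ in range(n)]
    return statistics.stdev(times) / statistics.mean(times)


cvs = {T: timer_cv(T) for T in (2.0, 8.0)}
print(cvs, 0.15 / math.sqrt(1.0))
```

Both durations give approximately the same CV, because the noise variance grows with the slope in a way that exactly offsets the change in mean duration.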

We replace the presence representation used in the original RW model with a Gaussian radial basis function whose input is provided by the TDDM accumulator:

$$x_i(\Psi_i) = \exp\left(-\frac{\left(\Psi_i(t) - \theta\right)^2}{2\sigma^2}\right) \qquad (8)$$

This representation may be interpreted as the receptive field of time-sensitive neurons that read the signal coming from the accumulator neurons. Their receptive fields are tuned to the accumulator threshold value θ. The bottom right panel in Fig 1 shows the representation for CSl generated from the input provided by the timer on the left. Note how xl reaches its maximum value at the same time that Ψl crosses the threshold at 1. Fig 2 shows x(Ψ) adapting in the three different timing scenarios explained previously. As can be seen, xi is a dynamic representation of CSi that adapts to the temporal information conveyed by the stimulus. Other representation shapes could be used, like a sigmoid for example, but a Gaussian is mathematically simple and has been used before by at least one other timing model [MS-TD, 28].
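The Gaussian read-out can be evaluated along a noise-free timer to show that the representation peaks when the accumulator crosses θ, which for an adapted slope is at the end of the timed interval. Parameter values (θ = 1, σ = 0.25) follow the Fig 2 caption; the 5 s duration mirrors Fig 1, and the function name `representation` is hypothetical.

```python
import math


def representation(psi, theta=1.0, sigma=0.25):
    """Gaussian radial basis function of the accumulator value (eq 8)."""
    return math.exp(-((psi - theta) ** 2) / (2.0 * sigma ** 2))


# A noise-free timer tuned to a 5 s CS (slope A = theta / 5).
A, dt = 1.0 / 5.0, 0.1
times = [k * dt for k in range(81)]  # 0 to 8 s
xs = [representation(A * t) for t in times]
t_peak = times[xs.index(max(xs))]
print(round(t_peak, 1))  # 5.0
```

Early in the CS the accumulator is far below θ, so x is close to zero. As a result the representation, and with it responding, rises towards the expected time of the US.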

We follow Gibbon and Balsam [35, 69] in assuming that time sets the asymptote of learning, λ, in eq (1). They were led to this hypothesis by investigating CR timing in fixed-interval conditioning schedules, a type of delay conditioning. After enough training in this procedure, subjects begin responding some time after CS onset, at a slow rate at first, which then increases rapidly until it reaches an asymptotic level some time before reinforcement delivery. Gibbon [35] proposed that subjects make an estimate of the time to reinforcement, which is used to generate an expectancy of reinforcement. The expectancy hi for a particular CSi with duration t* was hypothesised to be hi = H/t*, where H is a motivational parameter assumed to depend on the reinforcing properties of the US. The reinforcing value of the US is thus spread evenly over the CS length. This expectancy was assumed to be updated as time elapses during the CS, such that hi(t) = H/(t* − t). Hence, expectancy increases hyperbolically as t approaches the estimated time of reinforcement t*. Responding reaches its asymptotic level when the expectancy crosses a threshold value, hi(t) = b.
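Gibbon's hyperbolic expectancy and its threshold crossing can be written out directly. Solving H/(t* − t) = b for t gives the onset of asymptotic responding at t = t* − H/b. The sketch below encodes only that arithmetic; the values of H and b are illustrative assumptions, and the function names are hypothetical.

```python
def expectancy(t, t_star, H=1.0):
    """Gibbon's updated expectancy h(t) = H / (t* - t), for 0 <= t < t*."""
    return H / (t_star - t)


def response_onset(t_star, H=1.0, b=0.5):
    """Time at which h(t) first reaches threshold b: t = t* - H / b (>= 0)."""
    return max(0.0, t_star - H / b)


t_star = 10.0
print(expectancy(0.0, t_star))  # initial expectancy H / t*
print(response_onset(t_star))   # expectancy reaches b here
```

The initial value H/t* is the quantity that RWDDM retains as the asymptote of learning λ, while the hyperbolic rise within the CS is not used in RWDDM.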

Here we do not use Gibbon’s concept of expectancy update, since a similar role is fulfilled by the TDDM accumulator in our formalization. But we retain his argument that the reinforcing value of the US is spread over the CS length. Within the Rescorla-Wagner modelling framework, Gibbon’s expectancy value may be interpreted as setting the asymptotic level of learning in eq (1), namely λ = H/t*. Under this interpretation, λ may be said to implement hyperbolic delay discounting of rewards. Following the argument used above in the derivation of the slope update rule, we use the psychological time estimate from TDDM in place of the physical time t*, such that t* = Ψi(t*)/Ai(n). The value we use is then λ = HAi(n)/Ψi(t*). Another possibility would be simply λ = HAi(n). Both alternatives yield the same asymptotic value when θ = 1 (since Ψi(t*) converges to θ on average), but HAi(n) converges gradually (at a rate set by αt), whilst HAi(n)/Ψi(t*) converges immediately. Our version of eq (1) for updating associative strength then becomes:

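$$V_i(n+1) = V_i(n) + \alpha_V\, x_i(\Psi_i)\left[\frac{H\,A_i(n)}{\Psi_i(t^*)} - \sum_{j} V_j(n)\, x_j(\Psi_j)\right] \qquad (9)$$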

In the trial-based RW model, eq (1) is applied at the end of a ‘trial’, which is usually taken to be the period starting at CS onset and ending at US delivery. We follow the same practice here and apply eq (9) at the end of a trial, i.e. at US delivery. Note that because xi(Ψi) is a dynamic CS representation, its activation (or strength) level at the end of the trial will vary from trial to trial, as can be seen in Fig 2. Eq (9) is applied using the activation level of xi(Ψi) at the end of the trial.

We assume that real-time responding to CSi is determined by the product of its associative strength Vi(n) and its representation xi(Ψi), so that the overall response is the output of the summing junction in Fig 1:

$$\mathrm{CR}(t) = \sum_{i} V_i(n)\, x_i\big(\Psi_i(t)\big) \qquad (10)$$

Eqs (2), (6), (8), (9) and (10) fully define the basic model. Its six free parameters are: m, αt, θ, σ, αV, H.
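Putting the pieces together, the sketch below runs single-CS delay conditioning (US at CS offset) through eqs (2), (6), (8), (9) and (10). To keep it deterministic it sets m = 0, which removes the variability behind timescale invariance. It also assumes that the associative update of eq (9) is applied before the slope update of eq (6) on each trial, uses λ = HAi(n)/Ψi(t*), and takes αV = 0.3 and H = 1 as illustrative values. The function names are hypothetical.

```python
import math


def rwddm_delay_conditioning(duration, n_trials, H=1.0, theta=1.0, sigma=0.25,
                             alpha_t=0.215, alpha_v=0.3, A0=0.001):
    """Single-CS delay conditioning with a noise-free RWDDM sketch (m = 0).

    Each trial the timer runs from CS onset to US delivery at t* = duration;
    V is updated with eq (9) and the slope A with eq (6), both at t*.
    """
    A, V = A0, 0.0
    for _ in range(n_trials):
        psi_star = A * duration                                       # eq (2), m = 0
        x_star = math.exp(-(psi_star - theta) ** 2 / (2 * sigma ** 2))  # eq (8)
        lam = H * A / psi_star                                        # H / estimated t*
        V += alpha_v * x_star * (lam - V * x_star)                    # eq (9), one CS
        A += alpha_t * A * (theta - psi_star) / psi_star              # eq (6)
    return A, V


def response_curve(A, V, duration, theta=1.0, sigma=0.25, dt=0.5):
    """Eq (10) for one CS: CR(t) = V * x(Psi(t)) along a noise-free timer."""
    ts = [k * dt for k in range(int(duration / dt) + 1)]
    return [(t, V * math.exp(-(A * t - theta) ** 2 / (2 * sigma ** 2))) for t in ts]


A5, V5 = rwddm_delay_conditioning(5.0, 300)
A10, V10 = rwddm_delay_conditioning(10.0, 300)
print(V5, V10)  # the longer CS supports a lower asymptote (about H / t*)
for t, cr in response_curve(A5, V5, 5.0):
    print(t, round(cr, 3))
```

With a single CS, eq (9) converges to V = λ/x(Ψ(t*)), which equals H/t* once the timer has adapted. The response curve then rises over the CS and peaks at the time of the US.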

Relationship with other models

Among the theories capable of providing an account of both timing and conditioning, arguably four stand out for their scope or influence. They are CSC-TD, MS-TD, LeT and MoT.

TD was developed primarily as a learning model, without the explicit intention of addressing timing. It may be visualized as a real-time rendition of the RW rule. Its basic learning algorithm is given by:

| (11) |

| (12) |

| (13) |

where Vt is the US prediction at time t, formed by a linear combination of the weights w(i) and the CS representation values x(i). This update is performed at every time step, not only at the end of a trial as in RW and RWDDM. Another important difference is that eq (12) computes the difference between the current US value and the temporal difference between predictions. Hence, δt > 0 if the US is greater than this temporal difference in prediction, and δt < 0 if it is smaller. The constant 0 < γ < 1 is termed a discount factor. Eq (13) updates the weights for the next time step. The vector et stores eligibility traces, which are functions describing the activation and decay of representations xt. The three most commonly used eligibility traces are accumulating, bounded accumulating and replacing traces. All three accumulate activation in the presence of the CS and discharge slowly in its absence: the first accumulates with no upper bound, the second only up to the upper bound, and the third is always at the upper bound whilst the CS is present [39, pp. 162-192].
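The three trace types can be sketched for a single CS element as follows. This is an illustrative Python sketch, not code from the paper: the per-step decay factor (playing the role of γλ in TD(λ)), the bound of 1 and the helper name `eligibility_trace` are assumptions.

```python
def eligibility_trace(x, decay=0.9, kind="accumulating", bound=1.0):
    """Eligibility trace of one CS element over time steps (hypothetical helper).

    x     : CS activation at each time step (0 when the CS is absent)
    decay : per-step decay factor, e.g. gamma * lambda in TD(lambda)
    kind  : "accumulating", "bounded" (bounded accumulating) or "replacing"
    """
    e, out = 0.0, []
    for xt in x:
        if kind == "accumulating":
            e = decay * e + xt                   # grows without an upper bound
        elif kind == "bounded":
            e = min(decay * e + xt, bound)       # grows only up to the bound
        elif kind == "replacing":
            e = bound if xt > 0 else decay * e   # pinned at the bound while the CS is on
        else:
            raise ValueError(f"unknown trace kind: {kind}")
        out.append(e)
    return out


cs = [0.3] * 10 + [0.0] * 10  # CS on for 10 steps, then off for 10 steps
traces = {k: eligibility_trace(cs, kind=k) for k in ("accumulating", "bounded", "replacing")}
```

All three traces decay by the same factor once the CS is off; they differ only in how they behave while it is on.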

The richness of TD’s timing account relies on the choice of CS representation x. The Complete Serial Compound representation [CSC, 40] postulates one CS element x(i) per time unit of CS duration. Each element is switched on only at its activation time unit, and then decays according to its eligibility trace e(i) (usually an exponential decay function). This componential representation, which grows linearly with CS duration, should be contrasted with RWDDM’s molar representation (eq (8)), which requires only one element. CSC may be called a time-static representation, whilst RWDDM is a time-adaptive representation, with a rule to change its structure based on a change in time (eqs (6) and (8)). CSC-TD also lacks any mechanism to explain timescale invariance of the response curve, which is present in RWDDM. A modification of CSC has recently been developed, the Simultaneous and Serial Configural-Cue Compound [SSCC, 42]. SSCC-TD formalizes the idea that when multiple stimuli are presented together in time, a configural cue (a novel stimulus that is unique to the current set of present stimuli) is formed. SSCC builds on the CSC representation but, unlike any other TD model, it allows for the representation of compounds and configurations of stimuli. Because SSCC-TD is a real-time model, it also allows for the simulation of CR timing during compounds and configurations. However, its approach to timing is still the same as CSC, i.e. it breaks down the stimuli into a series of elemental units which are activated in series. Therefore, with respect to timing only, we will consider SSCC to belong to the family of CSC representations.
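A minimal CSC-TD sketch may help fix ideas. It uses textbook TD(λ) with accumulating traces (as in Sutton and Barto), one CSC element per time step, and a US of value 1 at the end of the ISI, after which the trial ends. The indexing may differ from eqs (11)-(13), and the function name and parameter values are hypothetical.

```python
import numpy as np


def train_csc_td(isi_steps=10, gamma=0.95, lam=0.9, alpha=0.1, n_trials=1000):
    """TD(lambda) with a Complete Serial Compound representation (illustrative sketch)."""
    w = np.zeros(isi_steps)                  # one weight per CSC element
    for _ in range(n_trials):
        e = np.zeros(isi_steps)              # eligibility traces, reset each trial
        for t in range(isi_steps):
            x_t = np.zeros(isi_steps)
            x_t[t] = 1.0                     # only the element for this time step is on
            v_t = w @ x_t                    # prediction V_t = sum_i w(i) x_t(i)
            last = t == isi_steps - 1
            r = 1.0 if last else 0.0         # US delivered at the end of the ISI
            v_next = 0.0 if last else w[t + 1]
            delta = r + gamma * v_next - v_t  # TD error
            e = gamma * lam * e + x_t        # accumulating trace
            w += alpha * delta * e
    return w


w = train_csc_td()
print(np.round(w, 3))  # predictions ramp up towards the time of the US
```

After training, element t should carry a weight close to γ^(T−1−t), where T is the number of CS time steps, so the prediction ramps up towards US time. The ramp is tied to one particular ISI and has to be relearned element by element if the ISI changes, which is the sense in which CSC is time-static.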

The Microstimuli representation [28, 41] introduced a more realistic description of time. Unlike CSC, it uses a fixed number of elements x(i) per stimulus. The ith microstimulus is given by:

| (14) |

where m is the total number of microstimuli and y is an exponentially decaying time trace set to 1 at CS onset. A microstimulus is thus a Gaussian curve modulated by the decaying trace yt. The set of microstimuli generated by the CS will then give rise to partially overlapping Gaussians, with decreasing heights and increasing widths across time. Requiring only a fixed number of microstimuli per CS is an improvement over the potentially large number of elements in CSC. The MS representation tries to capture the idea that, as time elapses, the stimulus leaves a more diffuse and faint impression. However, even though it is more realistic than CSC, it still lacks a mechanism to produce exact timescale invariance.
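The following Python sketch generates a set of microstimuli. We assume the form used by Ludvig and colleagues, in which each microstimulus is a Gaussian of the trace height y centred at i/m and multiplied by y; the constants in eq (14) may differ, and σ, the decay rate and the function name are illustrative choices.

```python
import numpy as np


def microstimuli(n_steps=500, m=10, decay=0.99, sigma=0.08):
    """Microstimulus activations x[t, i - 1] for i = 1..m (assumed MS-TD form)."""
    y = decay ** np.arange(n_steps)                # exponentially decaying trace, 1 at CS onset
    centres = np.arange(1, m + 1) / m              # Gaussian centres i/m in trace-height space
    gauss = np.exp(-(y[:, None] - centres[None, :]) ** 2 / (2 * sigma ** 2)) / np.sqrt(2 * np.pi)
    return gauss * y[:, None]                      # each Gaussian modulated by the trace


x = microstimuli()
peak_times = x.argmax(axis=0)   # microstimuli with smaller i peak later ...
peak_heights = x.max(axis=0)    # ... and reach lower heights
```

Because y shrinks geometrically, equal spacing of the centres in trace height turns into increasingly spread-out and flatter curves in real time, giving the decreasing heights and increasing widths described above.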

Learning to Time is primarily a theory of interval timing which can also account for some aspects of conditioning. Here we will deal with its most recent version in [45], which differs somewhat from the earlier version in [7]. Its CS representation resembles CSC in postulating a long series of elements (or states) that span the whole stimulus duration. Unlike CSC, it transitions from state to state at a rate that varies from trial to trial and is normally distributed across trials. Within a trial, however, time is represented as a noiseless linear progression through states n = 1, 2, 3, … (one per time-step) at a fixed rate. This linear time representation resembles the linear accumulator in RWDDM, except that the latter has noise built into the accumulator, whilst LeT assumes noise only at the intertrial level. Each state n is associated with the US via an associative link. At the end of a trial, the strength w of these links is updated as follows:

- For the active state at reinforcement, n*, the update rule is

  $$\Delta w(n^*) = \beta\,[1 - w(n^*)] \qquad (15)$$

  where β is a constant.
- For states that were active earlier in the trial but are inactive at reinforcement, n < n*, the update rule is

  $$\Delta w(n) = -\alpha\, w(n) \qquad (16)$$

  where α is a constant.
- For states that did not become active during the trial, n > n*, the rule is

  $$\Delta w(n) = 0 \qquad (17)$$
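The LeT update can be sketched in Python as below. This is a minimal illustration assuming eqs (15)-(17) in the form given above; the helper names `let_update` and `reinforced_state`, the normal per-trial rate and the parameter values are hypothetical. Note that each link is updated from its own strength only, with no summation over stimuli.

```python
import numpy as np


def let_update(w, n_star, alpha, beta):
    """End-of-trial LeT update following eqs (15)-(17) as written above.

    w[k] holds the link strength of state n = k + 1; n_star is the (1-based)
    state active at reinforcement. Returns a new array.
    """
    w = w.copy()
    k = n_star - 1
    w[:k] -= alpha * w[:k]        # n < n*: states passed through without reinforcement weaken
    w[k] += beta * (1.0 - w[k])   # n = n*: the state active at reinforcement strengthens
    return w                      # n > n*: never active on this trial, left unchanged


def reinforced_state(rng, t_star, mean_rate=1.0, sd_rate=0.15):
    """Hypothetical helper: one transition rate is drawn per trial from a normal
    distribution, and states advance linearly at that rate within the trial."""
    rate = max(rng.normal(mean_rate, sd_rate), 1e-6)  # guard against non-positive rates
    return max(1, int(round(rate * t_star)))


rng = np.random.default_rng(0)
w = np.zeros(60)
for _ in range(500):
    n_star = min(reinforced_state(rng, t_star=20.0), len(w))
    w = let_update(w, n_star, alpha=0.02, beta=0.2)
```

All of the trial-to-trial variability enters through n*, which is the intertrial noise LeT relies on for timescale invariance.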

Note that, unlike RWDDM’s associative update rule, eqs (15) to (17) do not include a summation term. This places a severe limitation on the ability of LeT to deal with compound conditioned stimuli. LeT’s strength lies in its ability to explain timescale invariance of the response curve. Machado and colleagues [45] showed that it is possible to derive timescale invariance using only the assumption of intertrial normality of the state transition rate. Finally, LeT assumes that responses are emitted at a constant rate if the currently active state has associative strength w(n) greater than a threshold θ. The fact that responding depends on the associative strength of the current state, and that this strength only changes with US associations, prevents LeT from accounting for changes in timing that are not related to US occurrence. For example, there is evidence that animals learn the timing of a preexposed CS [70] and are sensitive to changes in timing during extinction [71], two situations that do not involve the occurrence of a US.

Modular Theory is another theory that is primarily about timing but can also deal with some aspects of conditioning. It treats the onset of a stimulus as signalling an expected time to reinforcement. Its time representation T is, like LeT’s, linear: an accumulator that increases with time t, T = ct, where c is a constant. When reinforcement is delivered, the current reading of the accumulator is stored in what is called pattern memory. Pattern memory is updated at each trial n according to

| (18) |

where α is a learning rate and T* is the reinforcement time. Eq (18) may be contrasted with eq (6) from RWDDM. The main difference is that pattern memory in MoT stores an exponential moving average of intervals, whilst the slope in RWDDM stores an exponential moving average of rates, so that its reciprocal is a moving harmonic average of intervals. However, both models are similar in that they can potentially time the occurrence of any event, not only rewards. MoT’s pattern memory and RWDDM’s slope can be made, for example, to adapt to mark the end of stimuli that are not necessarily paired with a reward.
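To illustrate the difference between an arithmetic and a harmonic moving average, the Python sketch below feeds both rules the same mixed sequence of 15-sec and 75-sec intervals. The RWDDM side assumes a noise-free accumulator with θ = 1, in which case the slope tracks the rate 1/T* and 1/A is a moving harmonic average; the helper name and the shared learning rate are assumptions.

```python
def pattern_vs_slope(intervals, alpha=0.01, theta=1.0, m_init=0.0, A_init=1e-6):
    """Run MoT pattern memory and a noise-free RWDDM slope on the same intervals.

    MoT, eq (18): m <- m + alpha * (T* - m)          (moving average of intervals)
    RWDDM sketch:  A <- A + alpha * (theta / T* - A)  (moving average of rates)
    Returns the (m, A) pair after each trial. Hypothetical helper.
    """
    m, A, history = m_init, A_init, []
    for T in intervals:
        m += alpha * (T - m)
        A += alpha * (theta / T - A)
        history.append((m, A))
    return history


history = pattern_vs_slope([15.0, 75.0] * 2000)  # alternating 15-sec and 75-sec trials
(m1, A1), (m2, A2) = history[-2], history[-1]
arithmetic = (m1 + m2) / 2         # MoT: close to (15 + 75) / 2
harmonic = 1.0 / ((A1 + A2) / 2)   # RWDDM: 1/A close to the harmonic mean of 15 and 75
print(round(arithmetic, 2), round(harmonic, 2))
```

The MoT memory should settle around the arithmetic mean (45 sec), whereas 1/A should settle around the harmonic mean 2/(1/15 + 1/75) = 25 sec, i.e. closer to the shorter interval.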

A stochastic threshold b is used to mark response initiation. The threshold distribution is set so as to yield timescale invariance of the response curve. Its mean, B, is a fixed proportion of the value in pattern memory, B = km(n), where k is the proportionality constant, and its standard deviation is γB, where γ is the coefficient of variation of b. Hence, the coefficient of variation of the threshold, i.e. of response initiation, is the same for all intervals, which yields timescale invariance of the response curve. RWDDM, in contrast, derives timescale invariance of the response curve from noise in the accumulator (eq (2)), not from the threshold.
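The two routes to timescale invariance can be compared numerically. The Python sketch below assumes, for RWDDM, that eq (2) is a TDDM-style drift-diffusion process dΨ = A dt + σ√A dW starting at 0 and read out when it first reaches θ, for which the coefficient of variation of the crossing time is σ/√θ whatever the slope; for MoT it samples the threshold b directly, taking pattern memory to equal the interval. Function names and parameter values are illustrative assumptions.

```python
import numpy as np


def mot_start_times(interval, k=1.0, gamma=0.2, c=1.0, n=100_000, seed=0):
    """MoT sketch: threshold b ~ Normal(B, gamma * B) with B = k * m(n), where the
    pattern memory m(n) is taken to equal the interval; the accumulator T = c t
    reaches b at t = b / c."""
    rng = np.random.default_rng(seed)
    B = k * interval
    b = rng.normal(B, gamma * B, size=n)
    return b / c


def ddm_crossing_times(A, sigma=0.3, theta=1.0, dt=0.01, n=4000, seed=0):
    """RWDDM sketch, assuming a TDDM-style accumulator dPsi = A dt + sigma sqrt(A) dW
    that starts at 0; returns the first times (sec) at which Psi >= theta
    (Euler-Maruyama with step dt)."""
    rng = np.random.default_rng(seed)
    psi = np.zeros(n)
    t_cross = np.full(n, np.nan)
    active = np.ones(n, dtype=bool)
    t = 0.0
    while active.any():
        t += dt
        idx = np.flatnonzero(active)
        psi[idx] += A * dt + sigma * np.sqrt(A * dt) * rng.standard_normal(idx.size)
        crossed = idx[psi[idx] >= theta]
        t_cross[crossed] = t
        active[crossed] = False
    return t_cross


def coeff_var(samples):
    return samples.std() / samples.mean()


for interval in (5.0, 20.0):
    cv_mot = coeff_var(mot_start_times(interval))
    cv_ddm = coeff_var(ddm_crossing_times(1.0 / interval))  # slope tuned to the interval
    print(interval, round(cv_mot, 3), round(cv_ddm, 3))
```

In both cases the printed coefficient of variation should stay roughly constant (near γ for MoT and near σ/√θ for the accumulator) when the interval changes from 5 to 20 sec, which is the scalar property; the difference lies only in where the noise is placed.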

This account of time from MoT is an instantiation of Scalar Expectancy Theory, arguably one of the most successful timing models to date. Being a purely timing theory, SET does not address associative learning directly, so it does not have a rule for changes in association between stimuli. MoT bridges this gap by adding a rule to update what is termed strength memory, w(n). Strength memory holds the associative strength between stimulus and reinforcement. The rule consists of a linear operator:

| (19) |

with β a constant that can determine different rates of update for acquisition (βr) and extinction (βe). Eq (19) may be compared with (9). Note that, unlike RWDDM, eq (19) does not contain the summation term from RW based rules.

MoT also includes a rule for response rate that is more realistic than RWDDM’s given by (10). It is partly derived from an empirical analysis of real-time responding in animals. We refer the interested reader to [46] for a fuller description. We will only mention here that MoT generates a two-state response pattern, low and high. The transition between states is determined by the crossing of threshold B, and the high state is proportional to strength memory w(n).

Other theories exist which are similar in scope to CSC-TD, MS-TD, LeT and MoT. Two notable examples are the Componential version of the Sometimes Opponent Process model [C-SOP, 72] and the Adaptive Resonance Theory–Spectral Timing Model [ART-STM, 26]. C-SOP builds a CS representation based on two sets of elements, or components: one whose elements are activated as a function of time and another whose elements are randomly activated. Associative strength for each element is updated using the standard trial-based RW rule. Simulations in [72] have demonstrated that C-SOP can produce some degree of timescale invariance. ART-STM is a neural net with an input layer and one hidden layer, which allows it to explain nonlinear conditioning phenomena (such as negative patterning) that a single-layer RW neural net cannot. It employs a CS representation that is very similar to the microstimuli used in MS-TD, so it also shows a degree of timescale invariance. Other theories could be mentioned [for two influential examples see 52, 23, 53], but we will limit the analysis to CSC-TD, MS-TD, LeT and MoT for two reasons: a) these four models collectively embody most of the conditioning and timing mechanisms used in modelling these areas, and b) our goal here is not to provide a comprehensive review, but rather to focus on the mechanisms that are shared by our proposed model and the others.

Table 1 summarizes the main mechanisms and features of the models described above. In terms of the type of time representation, the models fall roughly into two categories: (a) those that employ a chain of units or states activated sequentially (CSC-TD, MS-TD, LeT), and (b) those that employ an accumulator (MoT and RWDDM). Those in category (b) may be considered more economical both computationally and biologically, as they do not require a number of units that increases with time. In terms of what the representations can time, two categories may be discerned: (a) those that time only rewards (CSC-TD, MS-TD and LeT), and (b) those that can time any stimuli (MoT and RWDDM). Models in category (b) have more flexibility to create a temporal map involving all stimuli present, including those not signalling reward. In terms of timescale invariance, the models are basically divided between those that can account for it (MS-TD approximately; LeT, MoT and RWDDM fully) and the one that cannot (CSC-TD). Finally, in terms of the type of associative learning rule used, models are divided between those that use a RW-type rule (CSC-TD, MS-TD, RWDDM) and those that use the linear operator (LeT and MoT). The ones that use RW are wider in scope, being able to account for cue-competition phenomena, which form the core of classical conditioning.
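The consequence of the summation term can be illustrated with blocking. The Python sketch below, with a hypothetical helper name and illustrative parameter values, pretrains cue A and then reinforces the AB compound, once with a RW update that shares a single prediction error between the cues and once with a linear operator that updates each cue separately.

```python
def blocking(rule, alpha=0.2, lam=1.0, n_pre=100, n_compound=50):
    """Associative strength of the added cue B after A+ pretraining and AB+ compound trials.

    rule = "rw":     Rescorla-Wagner, one shared error lam - (V_A + V_B)
    rule = "linear": linear operator, each cue has its own error lam - V_cue
    """
    V = {"A": 0.0, "B": 0.0}
    for _ in range(n_pre):                     # phase 1: A alone, reinforced
        V["A"] += alpha * (lam - V["A"])
    for _ in range(n_compound):                # phase 2: A and B together, reinforced
        if rule == "rw":
            error = lam - (V["A"] + V["B"])    # summation term: the cues compete
            V["A"] += alpha * error
            V["B"] += alpha * error
        elif rule == "linear":
            V["A"] += alpha * (lam - V["A"])   # no summation term: no competition
            V["B"] += alpha * (lam - V["B"])
        else:
            raise ValueError(f"unknown rule: {rule}")
    return V["B"]


print(round(blocking("rw"), 4), round(blocking("linear"), 4))
```

Under RW, B acquires almost no strength because A already predicts the US; under the linear operator, B is learned as if it had been trained alone.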

Table 1. Summary of the main features of the models.

| model | type of time representation | what it can time | timescale invariant | associative learning rule |
|---|---|---|---|---|
| CSC-TD | units/states, one per time step | only rewards | no | TD/RW, cue competition |
| MS-TD | units/states, fewer than one per time step | only rewards | approximately | TD/RW, cue competition |
| LeT | units/states, one per time step | only rewards | yes | linear operator, no cue competition |
| MoT | linear accumulator | any stimuli, not only rewards | yes | linear operator, no cue competition |
| RWDDM | noisy linear accumulator | any stimuli, not only rewards | yes | RW, cue competition |

The main innovation of RWDDM over its predecessors is the combination of a noisy linear accumulator for timing with the RW rule for associative learning. As Table 1 shows, among the previous models in our sample only those with a linear time representation (LeT and MoT) can fully account for timescale invariance. But because they rely on the linear operator rule, they cannot account for cue competition and other compound-stimulus phenomena in conditioning. RWDDM therefore extends the application of the linear accumulator to compound stimuli, covering a wider range of conditioning phenomena.

In summary, the model we propose is, to the best of our knowledge, the only one that unites the flexibility, computational economy and timescale invariance of the linear accumulator as a time representation with the RW associative learning rule, which accounts for many more conditioning phenomena than the linear operator. In the next section we evaluate the models against a number of phenomena in conditioning and timing.

Results

The long history of experimental work in classical conditioning has allowed the discovery of a rich variety of phenomena–a recent review [51] has catalogued approximately 87. This forces theorists to be selective when deciding which phenomena to simulate when presenting a new model. We searched the literature for phenomena that could test each feature of the model. Table 2 lists the main RWDDM features, together with the corresponding phenomena found in the literature that can test each.

Table 2. Model features and the experimental findings they can explain.

| RWDDM feature | phenomenon for which it can account |

|---|---|

| independent update rules for time and associative strength | faster reacquisition, time change in extinction, latent inhibition and timing |

| RW rule for associative strength | blocking with different durations, time specificity of conditioned inhibition |

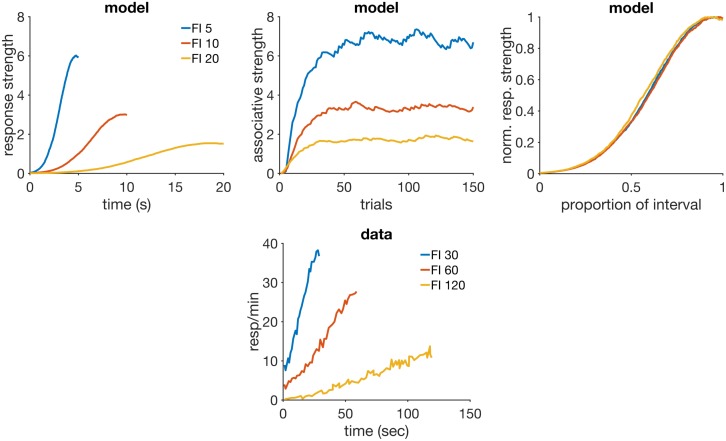

| intertrial variability in time estimation asymptote of associative strength set by time | compound peak procedure ISI effect, mixed FI |

| a memory that learns the rate of reinforcement | VI and FI, temporal averaging |

Table 3 contains the design for each simulation performed with the model. The model parameters used in all simulations were kept almost constant but in some cases a few adjustments were found necessary to obtain a better agreement between model and data. We report their values in each simulation below. The time-step was the same for all simulations: Δt = 10 msec. Simulations were performed using MATLAB version R2016b. The code to generate the figures in each result section is available for download at https://github.com/ndrluzardo/RWDDM-PLOSCB.

Table 3. Simulation designs.

| Simulation | Group | Phase 1 | Phase 2 | Phase 3 |

|---|---|---|---|---|

| Acquisition, extinction and reacquisition | FI 5 | 80 CS+ | 100 CS- | 80 CS+ |

| Extinction with diff. duration | FI 20-40 | 150 CS(20)+ | 150 CS(40)- | — |

| FI 20-10 | 150 CS(20)+ | 150 CS(10)- | — | |

| ISI effect | FI 5 | 150 CS+ | — | — |

| FI 10 | — | — | ||

| FI 20 | — | — | ||

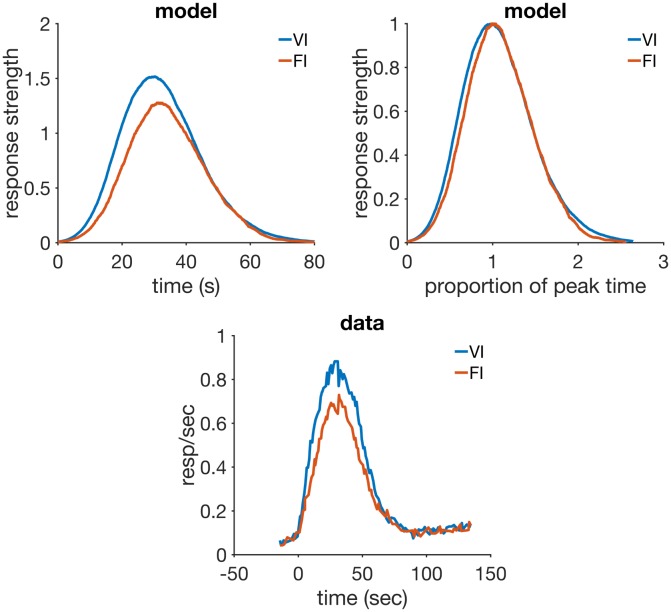

| VI vs FI | VI 30 | mixed 1500 CS+, 375 peak | — | — |

| FI 30 | mixed 500 CS+, 125 peak | — | — | |

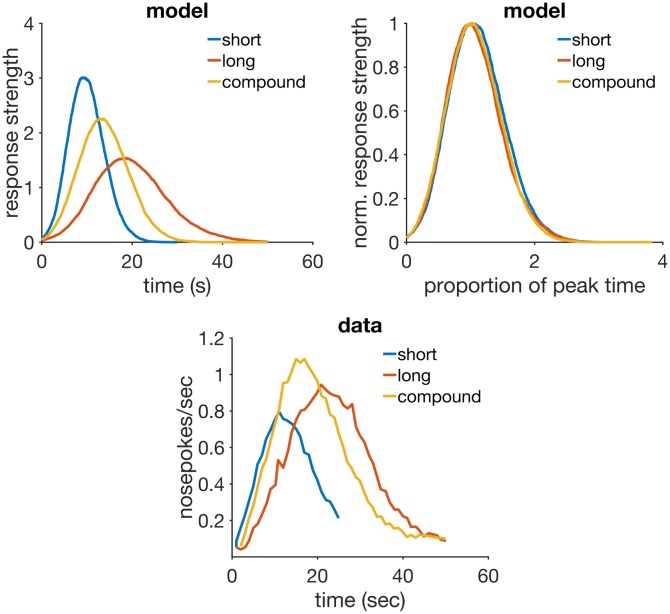

| Mixed FI | MFI 15-75 | mixed 200 A(15)+, 200 A(75)+ | — | — |

| Latent inhibition | Preexposed A | 80 A- | 120 A+ | — |

| Control C | — | 120 C+ | — | |

| Blocking diff. durations | Blocking A | 120 A(10 or 15)+ | 60 A(10)B1(15)+ or 60 A(15)B1(10)+ | — |

| Blocked B1, B2 | — | — | ||

| Control C | — | 60 C(10)B2(15)+ or 60 C(15)B2(10)+ | — | |

| Disinhibition of delay | FI 30 | 100 A+, 100 B- | mixed 300 A+, 300 B+, 100 AB+ | — |

| Compound peak | FI 50 | 100 A+, 100 B+ | mixed 300 A+, 300 B+, 100 AB peak | — |

| Conditioned inhibition | 1 group | mixed 300 each E1(10)+, E2(30)+, E1(10)I1(10)-, E2(30)I2(30)- | mixed 300 each E3(10)+, E3(30)+ | 100 each peak E3I1, E3I2, E3 |

| Temporal averaging | FI 10-20 | 700 L(20)+, 700 S(10)+, 154 L peak, 154 S peak, 154 SL peak | — | — |

Faster reacquisition

A conditioned response emerges gradually over the course of several trials where the CS signals the arrival of a US. If a measure of CR strength (such as rate or magnitude) is plotted against the number of trials, the shape and rate of this acquisition curve will depend largely on the CR and organism, but it usually follows a negatively accelerated curve [1, 43]. Pavlov [1] believed timing of the CR would emerge only later in acquisition, through a process he described as inhibition of delay whereby the initial part of the CS would become inhibitory. Recent and more detailed analyses suggest that an estimate for the time to reinforcement is acquired very early in training, possibly even after one or two trials, although the expression of such estimation may not be observable until later in training [73, 74, 75, 76].

If the CS no longer signals reinforcement, CR strength gradually decreases over the course of these extinction trials, until it finally disappears. If the CS is made to signal the US again, the CR returns, a process that is called reacquisition. It is a consistent finding that reacquisition is faster than acquisition [77, 46, 43, p. 185].

Learning is loosely defined as an enduring change in behaviour as a result of experience. Acquisition of a CR is the most basic demonstration that classical conditioning is a form of learning. As such, all classical conditioning models provide an account of it.

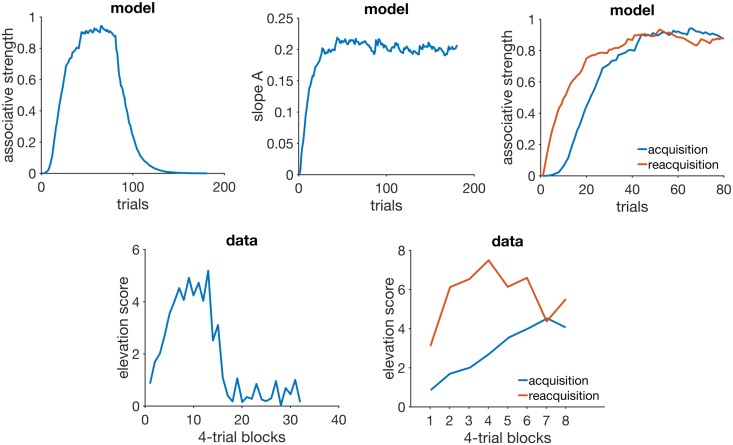

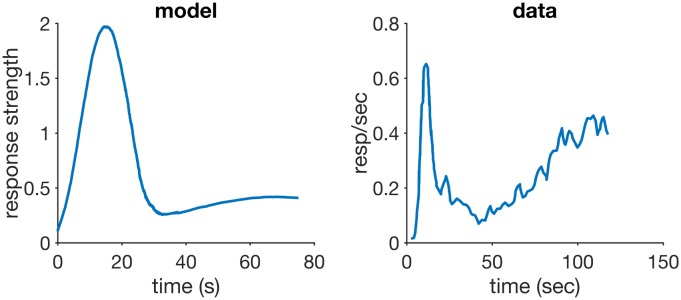

Simulations

Fig 3 (top left panel) shows a plot of RWDDM’s associative strength as given by eq (9), in a simulation of acquisition and extinction. Acquisition consisted of 80 presentations of a 5-sec CS followed by reinforcement, after which there were 100 extinction trials in which H was set to zero. The simulated curves match the experimental data from acquisition and extinction (bottom left panel of Fig 3). The simulated acquisition curve asymptotes around the theoretical value given by setting ΔV(n) = 0 in eq (9) and solving for V, yielding

| (20) |

which in this particular case is V∞ ≈ 1, since H = 5, A∞ ≈ 1/5 s⁻¹ (with time measured in seconds), Ψt* = Ψ(t*) ≈ 1 and x(Ψt*) ≈ 1, where t* is the time of reinforcement. Because Ψ(t*) is a random variable, x(Ψt*) and V∞ are also random variables, and the values given here are approximations to their expected values rather than the exact expected values.

Fig 3. Acquisition and reacquisition.

Top left: simulated associative strength V in acquisition and extinction. Top middle: adaptation of RWDDM slope A. CR extinction began at trial 80 but has no effect on the RWDDM slope. Top right panel: simulated V curves in acquisition and reacquisition. Bottom left panel: response strength data from an experiment in acquisition and extinction, redrawn from Fig 1 in [77]. Bottom right panel: data from an experiment in acquisition and reacquisition, redrawn from the top panel of Fig 3 in [77]. Model parameters: m = 0.15, θ = 1, σ = 0.3, αt = 0.1, αV = 0.1, H = 4 in acquisition and H = 0 in extinction.

Fig 3 (top middle panel) shows the adaptation of timer slope A given by eq (6). This equation precludes the initial value of A from being zero, so we set it to the very low value of A(1) = 10⁻⁶. We also set the threshold θ = 1, which by eq (6) means that Ai(n) encodes the exponential moving average of the rate of reinforcement signalled by CSi or, equivalently, that 1/Ai(n) encodes the moving harmonic average of the intervals since the last reinforcement during CSi. In this simulation, since there is only one US, which is always delivered at the same time at CS offset (5000 msec), A converges to A∞ = 1/5000 msec⁻¹. Note that the value of A does not decline after extinction begins at trial 80. It continues to be updated since the stimulus is still present, even if its presence no longer signals reinforcement.

The top right panel of Fig 3 shows the acquisition and reacquisition curves produced by RWDDM. Simulated reacquisition is evidently faster than simulated acquisition, but not as fast as the reacquisition seen in the data in the bottom right panel of Fig 3.

Discussion

In RWDDM, acquisition and extinction of associative strength follow from the same mechanism as in RW. The only difference is the noisy stimulus representation x(Ψt*), which introduces noise into the acquisition curve. Changes in associative strength and timing are treated independently. In particular, the memory for time encoded by the slope A is not affected by extinction, and this leads to faster reacquisition following extinction: RWDDM’s time-adaptive CS representation x(Ψt*) reaches its maximum activation value right from the beginning of reacquisition, since the timer slope A is already tuned to the current CS duration (see eq (8)).
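The mechanism can be illustrated with a deliberately simplified Python sketch. Eq (8) is not reproduced here, so x(Ψ) = min(Ψ/θ, 1) is a hypothetical stand-in that is low while the slope is untuned and equal to 1 once it is tuned. The accumulator is noise-free, the slope is updated as an exponential moving average on every CS trial, reinforced or not, and V is updated as ΔV = αV x(λ − Vx) with λ = HA/Ψ on reinforced trials and λ = 0 in extinction. None of these forms should be read as the paper's exact equations, and the helper names are hypothetical.

```python
def acquisition_extinction_reacquisition(n_acq=80, n_ext=100, n_reacq=80, t_star=5.0,
                                         H=5.0, alpha_t=0.1, alpha_V=0.1, theta=1.0):
    """Noise-free sketch of faster reacquisition; returns V after each trial."""
    A, V, history = 1e-6, 0.0, []
    phases = [True] * n_acq + [False] * n_ext + [True] * n_reacq
    for reinforced in phases:
        psi = A * t_star                         # accumulator reading at trial end
        x = min(psi / theta, 1.0)                # hypothetical stand-in for eq (8)
        lam = H * A / psi if reinforced else 0.0
        V += alpha_V * x * (lam - V * x)         # RW-style update weighted by x
        A += alpha_t * (theta / t_star - A)      # timing keeps adapting in extinction
        history.append(V)
    return history


def trials_to_criterion(values, criterion=0.9):
    """Index of the first trial on which V reaches the criterion."""
    return next(i for i, v in enumerate(values) if v >= criterion)


V = acquisition_extinction_reacquisition()
acq, reacq = V[:80], V[180:]
acq_trials, reacq_trials = trials_to_criterion(acq), trials_to_criterion(reacq)
print(acq_trials, reacq_trials)
```

Because A is retained through extinction, x is already at its maximum when reinforcement resumes, so the reacquisition curve should reach the criterion in fewer trials than the acquisition curve, even though V itself was extinguished almost to zero.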

Modular theory [46] is another model that treats timing and associative strength separately. It postulates two memories, one for the pattern of reinforcement and another for the strength of the association between CS and US. The pattern memory stores an exponential moving average of the intervals to reinforcement which, like RWDDM, does not change with extinction. However, its strength memory w(n) is updated according to the linear operator rule,

| (21) |

which, unlike RWDDM, does not include a term for a time-adaptive CS representation. Thus, the way MoT accounts for rapid reacquisition is by using different learning rates β for acquisition and reacquisition. The same strategy may be employed with the TD and LeT models.

In summary, RWDDM explains reacquisition as the persistence of a memory for time, whilst TD, LeT and MoT explain it as a permanent change in the learning rate for associative strength.

Time change in extinction

When a previously conditioned stimulus is no longer followed by reinforcement, the conditioned response gradually decreases. An important theoretical question for hybrid timing/conditioning models concerns what happens to the timing of responses in extinction. Using the peak procedure, Ohyama and colleagues [78] found that although the maximum (peak) response rate decreased in extinction, peak time and sensitivity (measured by the coefficient of variation) remained virtually unchanged. Drew and colleagues [79] investigated behaviour in extinction by changing CS duration between acquisition and extinction. Groups where the CS changed to a shorter or longer duration were compared with another where the duration did not change. They found that CS duration had little effect on the rate of extinction, with all groups taking about the same number of trials to achieve CR extinction. However, when the CS used in extinction was considerably longer (4 times) than the one acquired, extinction was facilitated. Guilhardi and Church [71] performed a similar experiment (experiment 2) and observed that when stimulus duration is changed from acquisition to extinction, the pattern of responding during extinction gradually shifts to the new duration over extinction trials. Following the same procedure, Drew and colleagues [80] also used partial reinforcement to slow down the rate of acquisition, and thus to observe whether response patterns really do shift gradually to the new duration. They confirmed that when CS duration was increased from acquisition to extinction, the within-trial response peak shifted gradually to the right over the course of extinction. When the CS was shortened, the results were not conclusive. Also, when CS duration was changed from training to extinction, the speed of extinction increased, but this appeared to be explained at least in part by the shifting of response patterns.

In summary: a) peak timing and CV are not altered in extinction when using a peak procedure, b) changing the CS duration from training to extinction causes the within-trial response peak to shift to the new duration, and c) changing the CS duration in extinction can speed up extinction, but this may be due to the shifting of the response peak and not to changes in associative strength. These results pose a challenge to the models analysed here. Out of CSC-TD, MS-TD, LeT and MoT, only MoT has a mechanism that would allow it to account for time change in extinction.

Simulations

RWDDM provides an account for these findings as follows. In the case of the peak procedure, the occurrence of the longer peak trials may be considered too infrequent to cause a shift to the longer time. In this case, eq (6) is not applied in peak trials so RWDDM predicts that both slope A and CV will remain unaltered in extinction. In the case of a permanent change in CS duration from acquisition to extinction, the slope update rule is applied and the response peak will shift gradually to the new duration.
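The gradual shift of the time estimate can be sketched in Python as follows, again assuming a noise-free accumulator and a slope update that is an exponential moving average of the rate θ/T, with αt = 0.08 as in Fig 4. The helper name is hypothetical; the point is only that the slope keeps adapting while H = 0.

```python
def time_estimate_in_extinction(t_train=20.0, t_ext=40.0, alpha_t=0.08, theta=1.0, n_ext=150):
    """Noise-free sketch: the slope starts fully trained on t_train and is then updated
    towards theta / t_ext on every extinction trial, even though no US occurs.
    Returns the time estimate theta / A after each extinction trial."""
    A = theta / t_train
    estimates = []
    for _ in range(n_ext):
        A += alpha_t * (theta / t_ext - A)
        estimates.append(theta / A)
    return estimates


short_long = time_estimate_in_extinction(t_ext=40.0)   # 20 sec -> 40 sec
long_short = time_estimate_in_extinction(t_ext=10.0)   # 20 sec -> 10 sec
print(round(short_long[9], 1), round(short_long[-1], 1))
print(round(long_short[9], 1), round(long_short[-1], 1))
```

In both directions the estimate moves monotonically from the trained duration to the extinction duration over trials, which is the gradual rescaling of the response curve described in the text.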

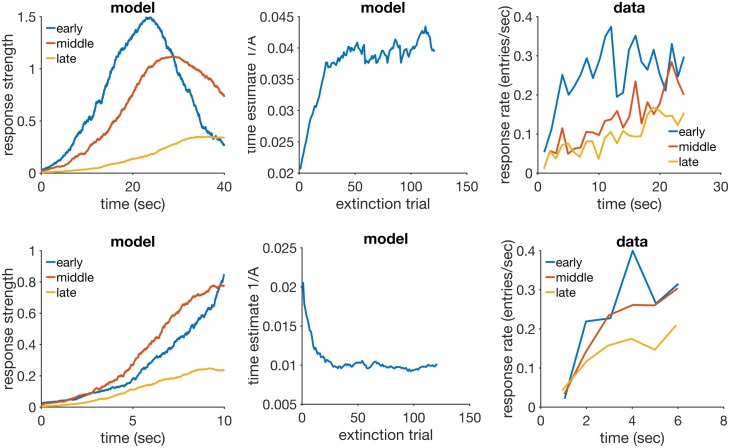

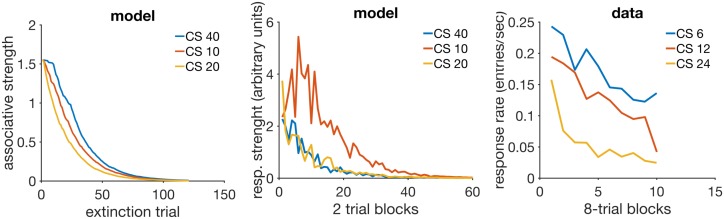

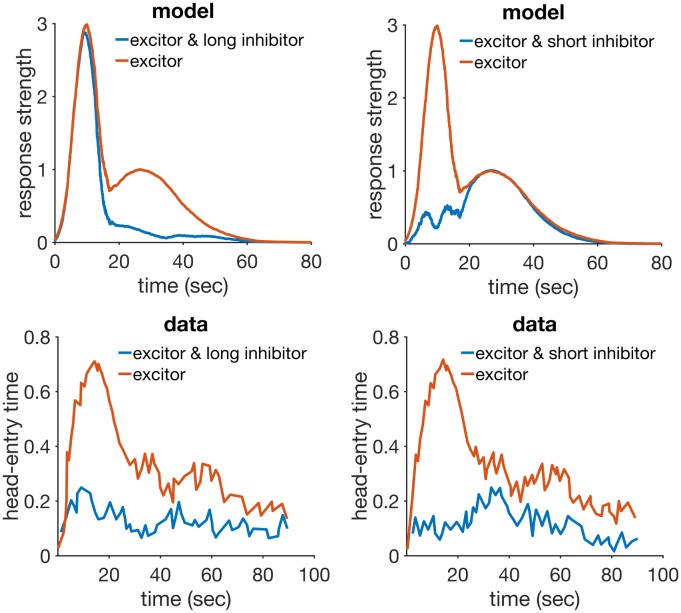

We have simulated RWDDM in two extinction conditions, one where the CS presented in extinction was longer than the one acquired (20 sec to 40 sec, short-long) and another where the extinction CS was shorter than the acquired CS (20 sec to 10 sec, long-short). Fig 4 summarizes the main results.

Fig 4. Time change in extinction.

Left column: simulated response strength averaged over trials in extinction short-long (top) and long-short (bottom). Middle column: time estimate adaptation of the model during extinction short-long (top) and long-short (bottom). Right column: experimental data from an experiment where the CS duration changed from 12-sec in acquisition to either 24-sec (top) or 6-sec (bottom) in extinction. Data plots redrawn from Figure 10 in [80]. Model parameters: m = 0.25, θ = 1, σ = 0.35, αt = 0.08, αV = 0.09, H = 30.

The panels in the left column show response strength during a trial in conditions short-long (top) and long-short (bottom). In the early stages of extinction, the response curves peak around the time of US arrival in acquisition (20 sec). This is more evident in the short-long condition (top left) because in the long-short condition (bottom left) the trial ends 10 seconds before the peak at 20 seconds occurs. Had the stimulus remained on for a full 20 seconds, the response curve in the early stages of long-short would have continued to increase until the 20-second mark. In middle and late extinction the response peak gradually shifts to the new duration in both conditions, and its height decreases. Compare the simulated curves in the left column of Fig 4 with the experimental data in the right column. The panels in the middle column of Fig 4 show the adaptation of the time estimate 1/A in conditions short-long (top) and long-short (bottom). They show that RWDDM’s time estimate converges to the new CS duration during extinction.

To investigate whether the rate of extinction changes with CS duration, we have plotted the extinction curves for each CS duration in the left panel of Fig 5. Decreasing CS duration from acquisition to extinction slightly facilitates extinction, but increasing CS duration markedly delays extinction. However, these are only the V values, a theoretical construct that accounts for the associative strength of the stimulus as a whole. Actual behavioural measurements of extinction are based on how much response frequency changes from trial to trial. But response frequency also changes within the trial. As pointed out by [80], the value obtained for the rate of extinction may be affected by which portion of the CS was measured. To analyse this, they [80] measured response frequency only during the first 6 sec (half the duration of the CS in acquisition) of each CS duration in extinction. We have followed the same procedure, and the results can be seen in the middle panel of Fig 5. They show a marked delay of extinction when the CS duration was shortened, but not when it was lengthened. Compare these curves with the actual data analysed by [80] and displayed in the rightmost panel of Fig 5. The simulations partly conflict with the same analysis in [80], which showed no delay of extinction, only facilitation in the case of extending CS duration.

Fig 5. Extinction curves.

Left panel: model V values for each CS duration in extinction. Middle panel: simulated CR values calculated only for the first 10 seconds of the CS. Each data point is calculated by summing the output of eq (10) over the first 10 sec of each trial, then averaging these trial values two by two, and dividing by 100 to rescale. Right panel: actual CR data for the first 6 sec of the CS in extinction, redrawn from Figure 8 (C) in [80].

Discussion

RWDDM predicts that a change in CS duration from acquisition to extinction will always cause a rescaling of the response curves in extinction. This is largely in agreement with the data. However, RWDDM seems to predict a degree of delay of extinction, whilst the data seem to point to a facilitation of extinction when the CS changes duration. When only the first half of each CS response curve is analysed, the data suggest that extending CS duration in extinction can speed up extinction, whilst RWDDM predicts that shortening CS duration will delay extinction.

RWDDM’s prediction for a delay in extinction following a change in CS duration is due to the shifting of the response curve. At the beginning of extinction, a trial ends either before the CS representation has reached its peak (CS shortening) or after its peak (CS lengthening). This makes eq (9) update with a small value for x(Ψ), resulting in a smaller update than with the higher x(Ψ) value of the unchanged CS.

As mentioned above, time change in extinction is a difficult phenomenon for the current models to explain. CSC-TD does not have a mechanism to change the peak of responding when a US is not present. Nor do MS-TD and LeT. These models assume that extinction can only weaken existing links between CS and US representations. Because in these models timing usually depends on the sequential activation of these links, changing the CS duration in extinction would not alter the timing but only the magnitude of responding. RWDDM explains time change in extinction because its rule for time adaptation is independent of changes in associative strength. Thus, when the duration changes in extinction, RWDDM’s accumulator slope tracks this change, whilst associative strength decays as a function of US absence. As for the facilitation of extinction caused by a change in CS duration, none of the models analysed here currently has a mechanism to explain it either.

It would be possible to allow the average rate of state transition in LeT to vary as a function of CS duration, which would cause timing to adapt to the new time in extinction. However, in its latest formulation [45] LeT relies on a fixed average rate of state transition to explain timescale invariance. Thus, if the rate is made to change as a function of CS duration, this would break timescale invariance.

As for MS-TD, one interesting modification that would likely allow it to explain time change in extinction is to make the microstimuli themselves time-adaptive. Like RWDDM’s time-adaptive CS representation, the microstimuli could be made to ‘stretch’ when stimulus duration lengthens and ‘compress’ when it shortens.

Modular Theory is likely to account for time change in extinction, since its pattern memory for time could be made to update even in extinction. That would shift the response pattern to the new time whilst strength memory, which depends only on US presentation, would decay.

Latent inhibition and timing

When a subject is exposed to repeated, nonreinforced presentations of a stimulus it has never encountered before, the procedure is called preexposure. If reinforcement is subsequently paired with the preexposed CS, the initial rate of CR acquisition is usually lower than acquisition to a nonpreexposed stimulus, a phenomenon called latent inhibition [81]. The asymptotic level of conditioning, however, is not normally affected by preexposure [82]. Latent inhibition is an important representative of a class of phenomena involving latent effects. Collectively, these phenomena demonstrate that something is learned about the stimulus even when it does not signal reinforcement. Latent inhibition therefore cannot be accounted for by the Rescorla-Wagner model, since that theory only captures learning that changes associative strength: nonreinforced presentations of a novel CS, whose associative strength starts at zero, leave that strength unchanged.

A question relevant for real-time conditioning models is what happens to timing when a preexposed stimulus is conditioned. To answer this question, Bonardi and colleagues [70] used CSs of variable and fixed durations (the mean duration of the variable CS equalled the duration of the fixed CS) to vary the temporal conditions between the preexposure and conditioning phases. Latent inhibition was observed even when the temporal information differed between the two phases. Crucially, timing, as measured by the response gradient within a trial, appeared to improve for the preexposed CS even when the temporal information differed between the two phases.

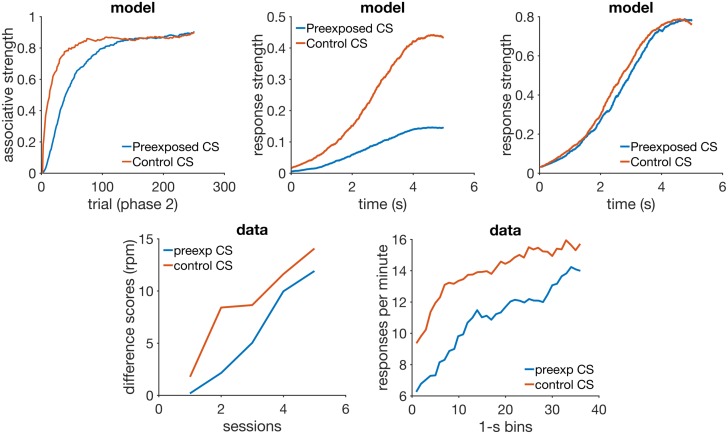

As noted above, latent inhibition cannot be accounted for by the associative learning rule used in RWDDM, the Rescorla-Wagner rule. However, we show here that RWDDM is compatible with the Pearce-Hall rule [33, 83], one of the most widely used models for explaining latent inhibition and other latent learning effects. We demonstrate that this modification maintains the basic framework of RWDDM, and that it can account for latent inhibition and improved timing with preexposure. None of the other models analysed here can account for latent inhibition without modifications. Improved timing with preexposure could be accounted for by Modular Theory, but not by the current versions of the other models.

Simulations

The Pearce-Hall model is essentially a rule for adapting the learning rate αV based on the error δ between the predicted and the actual US outcome. It was originally formulated in [33] and updated in [83]. We have kept eq (9) for associative strength, but update αV on every trial n according to

| (22) |

| (23) |

where 0 < γ < 1 is a parameter that sets the rate of learning rate adaptation. Eq (22) is basically the Pearce-Hall rule, except that instead of using 1 as the asymptote of learning we use .

We simulated latent inhibition with a 5-sec CS. Preexposure consisted of 80 trials of the CS without reinforcement (H = 0). The preexposed CS was then reinforced for 250 trials. Fig 6 (top left panel) compares the acquisition curves for the preexposed CS and a control CS during the reinforced trials. The acquisition curve of the preexposed CS rises more slowly than that of the control CS, which is the latent inhibition effect (see data from a corresponding experiment in the bottom left panel of Fig 6).
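A minimal Python sketch of the latent inhibition simulation described above. It assumes the standard Pearce-Kaye-Hall form of the learning-rate update, α(n+1) = γ|δ(n)| + (1 − γ)α(n), and replaces eq (9) with a plain delta rule on a single CS whose step size is a fixed `salience` times α; both are assumptions, not the paper's equations. Trial counts and H follow the text, and the remaining values are borrowed from the Fig 6 caption, although how they map onto this simplified sketch is our guess.

```python
def simulate(n_pre, n_cond=250, H=4.0, alpha0=0.4, gamma=0.03, salience=0.08):
    """Hypothetical Pearce-Hall-style sketch of latent inhibition.

    alpha is the adaptive learning rate, assumed to follow
    alpha <- gamma * |delta| + (1 - gamma) * alpha.
    V follows a delta rule scaled by a fixed salience (a stand-in for eq 9).
    """
    v, alpha = 0.0, alpha0
    # Preexposure: CS alone, so the target is 0 and delta = 0 - V = 0;
    # alpha therefore decays geometrically by (1 - gamma) per trial.
    for _ in range(n_pre):
        delta = 0.0 - v
        v += salience * alpha * delta
        alpha = gamma * abs(delta) + (1 - gamma) * alpha
    alpha_after_pre = alpha
    curve = []
    # Conditioning: CS followed by a US of magnitude H.
    for _ in range(n_cond):
        delta = H - v
        v += salience * alpha * delta
        alpha = gamma * abs(delta) + (1 - gamma) * alpha
        curve.append(v)
    return curve, alpha_after_pre

preexposed, alpha_pre = simulate(n_pre=80)
control, _ = simulate(n_pre=0)
print(f"trial 10: preexposed V = {preexposed[9]:.2f}, control V = {control[9]:.2f}")
print(f"trial 250: preexposed V = {preexposed[-1]:.2f}, control V = {control[-1]:.2f}")
```

Preexposure leaves V at zero but drives α down, so the preexposed CS starts conditioning with a small learning rate that γ raises only gradually; both curves still approach the same asymptote H, in line with the observation that preexposure does not normally affect asymptotic conditioning.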

Fig 6. Latent inhibition.

Top row: simulated associative strength in latent inhibition (left), simulated CR averaged over the first 30 trials of conditioning phase (middle), and simulated CR averaged over the last 30 trials of conditioning phase (right). Bottom row: acquisition curves from an actual experiment in latent inhibition (left), and response rate data during the CS (right). Data plots redrawn from Figures 1 and 2 respectively in [70]. Model parameters: αt = 0.1, αV = 0.08, μ = 1, σ = 0.6 to 0.35, m = 0.2, H = 4, αPH = 0.4, γ = 0.03.

Improved timing with preexposure follows directly from the fact that RWDDM adapts its accumulator slope A to the CS duration during preexposure. However, our choice of a Gaussian stimulus representation does not allow this change to become visible. Bonardi and colleagues [70] demonstrated improved timing by showing that, in the first few trials of acquisition, the response curve for the preexposed CS had a higher slope than that for the control CS (see bottom right panel of Fig 6). In general, animal response curves tend to be quite flat at the beginning of acquisition. There is evidence that response curves change from a negatively accelerated to a sigmoidal shape over the course of training (see Figure 1 in [44] for an example). In the early stages of acquisition, within-trial response frequency increases very early in the trial and then stays at a constant level until the end. As training progresses, the increase in frequency moves slowly to the right, giving rise to the sigmoidal shape that peaks just before the end of the trial. In such cases, a higher slope of the response curve indicates improved timing.

In our model, however, the curves are sigmoidal from the start of acquisition, so they always peak at the end of the trial, even if the timer slope has not yet adapted to the interval, as is the case with a novel stimulus. Therefore, during the acquisition phase of latent inhibition, RWDDM predicts that only the peaks of the response curves will gradually increase over trials. Because of the learning decrement caused by preexposure, the peak for the control CS will increase faster than the peak for the preexposed CS, as the top middle panel of Fig 6 demonstrates. The response curve of the control CS will therefore have a higher slope than that of the preexposed CS, even though the preexposed CS’s timer slope has already adapted to its duration. Hence, the improved timing found in the data is explained by adaptation of RWDDM’s timer slope, but RWDDM’s CS representation cannot make it visible.

We also tried making σ in eq (8) adaptable, so that the width of the Gaussian curve decreases gradually over trials. We chose a simple linear operator rule to adapt the Gaussian width:

| (24) |

and set σ(1) = 0.6 and ασ = 0.025.
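One linear-operator rule consistent with σ(1) = 0.6, ασ = 0.025 and the range quoted in the Fig 6 caption is sketched below. It assumes that σ moves towards a floor of 0.35 by a fraction ασ per trial, and that the CS representation is an unnormalised Gaussian of the timer value centred on μ = 1 (the CS offset); both are assumptions about the exact form of eqs (8) and (24).

```python
import math

def adapt_sigma(n_trials, sigma1=0.6, sigma_min=0.35, alpha_sigma=0.025):
    """Hypothetical linear-operator rule for the Gaussian width.

    sigma(n+1) = sigma(n) + alpha_sigma * (sigma_min - sigma(n)),
    with sigma_min = 0.35 assumed from the range in the Fig 6 caption.
    """
    sigmas = [sigma1]
    for _ in range(n_trials - 1):
        s = sigmas[-1]
        sigmas.append(s + alpha_sigma * (sigma_min - s))
    return sigmas

def gaussian_rep(x, mu=1.0, sigma=0.35):
    """Unnormalised Gaussian of timer value x (assumed form of the CS representation)."""
    return math.exp(-(x - mu) ** 2 / (2 * sigma ** 2))

sigmas = adapt_sigma(250)
for n in (0, 29, 249):
    s = sigmas[n]
    # Activation halfway through the CS (x = 0.5) relative to its peak at x = mu = 1.
    print(f"trial {n + 1}: sigma = {s:.3f}, mid-CS activation = {gaussian_rep(0.5, sigma=s):.2f}")
```

A narrower σ lowers activation halfway through the CS relative to the peak, which sharpens the rise of the response curve towards CS offset.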

Fig 6 (top middle panel) shows the response strength of the control and preexposed CSs averaged over the first 30 trials of the conditioning phase. The preexposed CS already shows a clear sigmoidal shape, whilst the control curve is slightly wider and closer to linear. However, the effect is too small to account for the one seen in the data from [70]. Towards the end of the conditioning phase, the two curves converge (Fig 6, top right panel).

Discussion

The simulations show that the model can account for latent inhibition adequately if the Pearce-Hall rule is used (in which case the model would be more appropriately named PHDDM). The PH rule adapts the learning rate αV based on the prediction error between the expected and the actual US. When the subject encounters a novel stimulus, αV is assumed to have some non-zero starting value, which allows learning in eq (9) to take place. If this novel stimulus does not signal reward, as in the preexposure phase of latent inhibition, δ = 0 and eq (22) simply decays the learning rate across trials towards zero. If the stimulus then begins to be followed by reward, δ > 0 and eq (22) begins to raise the learning rate, which in turn allows eq (9) to increase the value of V. Since the learning rate rises gradually, at a pace set by γ, there will be a number of trials at the beginning of the conditioning phase in which the learning rate of the preexposed CS remains below that of a non-preexposed CS. This produces the initial impairment in the learning curve relative to a non-preexposed CS, as seen in the top left panel of Fig 6.

The separate rule for time adaptation allows the model to account for improved timing after preexposure, but the model cannot make this effect visible, even when the Gaussian width is allowed to adapt. In view of this, a two-state CS representation may be a better solution. As mentioned above, Figure 1 in [44] suggests that, during the initial stages of training, a CS representation may be modelled by the following leaky integrator

| (25) |

where Ii is the indicator function marking the presence of CSi, and τ is a time constant. In the later stages of training, when timing is expressed, the organism switches to the Gaussian representation given by eq (8). When, and how abruptly, this switch between representations occurs remains to be investigated.
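A forward-Euler sketch of such a trace, assuming the common leaky-integrator form τ dx_i/dt = I_i(t) − x_i (the exact form of eq (25) is an assumption here). The function name, step size `dt` and default values are illustrative.

```python
import math

def leaky_integrator(cs_duration=5.0, trial_length=5.0, tau=1.0, dt=0.01):
    """Forward-Euler sketch of an assumed leaky-integrator CS trace.

    Assumes tau * dx/dt = I(t) - x, where I(t) = 1 while the CS is present.
    """
    n_steps = int(round(trial_length / dt))
    x, trace = 0.0, []
    for k in range(n_steps):
        t = k * dt
        indicator = 1.0 if t < cs_duration else 0.0  # I_i(t): 1 while CS_i is on
        x += dt / tau * (indicator - x)
        trace.append(x)
    return trace

trace = leaky_integrator()
# Rises steeply at CS onset, then levels off: a negatively accelerated curve.
print(f"{trace[49]:.2f} at 0.5 s, {trace[-1]:.2f} at 5 s")
```

With the CS on throughout, x rises steeply at onset and then levels off towards 1 − exp(−t/τ), the negatively accelerated shape described above, in contrast with a Gaussian that peaks at CS offset.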

Latent inhibition cannot be accounted for by any of the other models analysed here without modifications. Also, models that rely on the US for time adaptation, like CSC-TD, MS-TD and LeT, cannot account for improved timing after preexposure. Modular Theory is the only one that, like RWDDM, can time a stimulus whether or not it is followed by a US, so it could account for the improved timing. However, it would also need a modification like eq (22) to adapt its learning rate in order to account for latent inhibition.

Blocking with different durations

Arguably, the most important compound conditioning phenomenon is blocking. It belongs to a class of cue competition and compound phenomena discovered in the late 1960s that challenged the view that conditioning was driven by the pairing, or contiguity, of CS and US. These results suggested that conditioning with compound stimuli was influenced by the reinforcement histories of the elements forming the compound [29, 30]. This led to the development of a new generation of models that could account for those findings [31, 32, 33]. The rule we use, the Rescorla-Wagner rule, explains blocking through the summation term in eq (1).

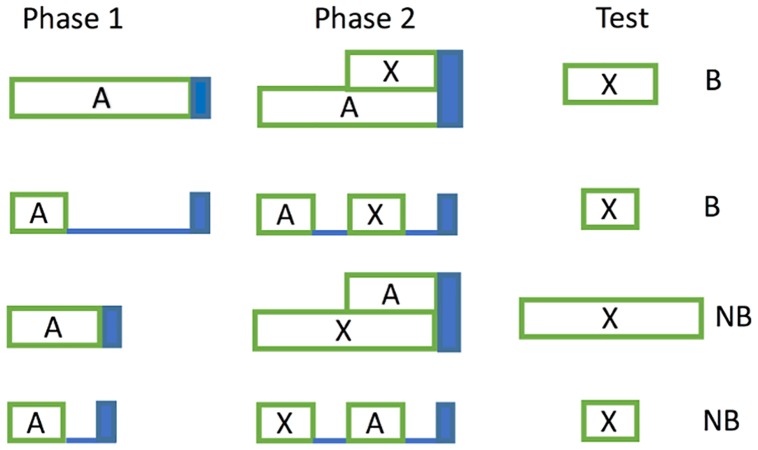

In a blocking procedure, a CS is first paired with a US in phase 1 of training. During phase 2, a novel CS is presented in compound with the phase 1 CS and paired with the US for just a few trials. When subsequently tested alone, the novel CS elicits less responding than if it had been trained in compound with another novel stimulus [34]. The previously reinforced CS is said to block the novel CS. The temporal information encoded by each CS affects the amount of blocking observed. Schreurs and Westbrook [84] varied the ISI in the pre-training and compound phases, and observed less blocking when the ISIs differed between the two phases than when they were the same. Barnet and colleagues [85] performed a similar experiment, but with forward and simultaneous conditioning varying between phases, and also found that blocking was stronger when the blocked and blocking CSs had the same temporal history. Jennings and Kirkpatrick [86] used compounds whose elements had different durations. They observed that a long blocking CS could block a co-terminating short CS, but a short blocking CS failed to block a co-terminating long CS (see rows 1 and 3 in Fig 7). Amundson and Miller [87] performed four blocking experiments using trace conditioning. In two of them the trace duration of the blocking CS changed between phases, and blocking was not observed. In the other two, the trace duration was held fixed between phases, and the blocking and blocked CSs were presented serially rather than in compound (see rows 2 and 4 of Fig 7). Blocking was observed when the blocking CS followed the blocked CS, but not in the reverse condition.

Fig 7. Experimental designs from two blocking experiments.

CS X was blocked (B) in rows 1 and 2, and not blocked (NB) in rows 3 and 4. Blue bar indicates US presence.

The studies reviewed above appear to show that changing the ISI of the blocking CS between phases may attenuate blocking. Another finding is the apparent asymmetry of blocking when the ISI of the blocking CS is kept constant between phases. Rows 1 and 2 of Fig 7 suggest that a long blocking ISI can block a short blocked ISI. Rows 3 and 4 suggest that a short blocking ISI does not block a long blocked ISI.

As mentioned above, RWDDM can account for blocking because it uses the RW rule. The summation term in eq (1) formalizes the widely held view that a given US can support only a limited amount of associative strength, for which CSs must compete. Other theories take different approaches to blocking [see for example 32, 88, 89], but among the models analysed here (chosen because they also handle timing), only CSC-TD and MS-TD are equipped to deal with it. We show next that RWDDM can account for the blocking of a short CS by a long CS and, with the reasonable additional assumption of second-order conditioning, for the lack of blocking of a long CS by a short CS. CSC-TD and MS-TD are also capable of providing an account of both blocking conditions.

Simulations

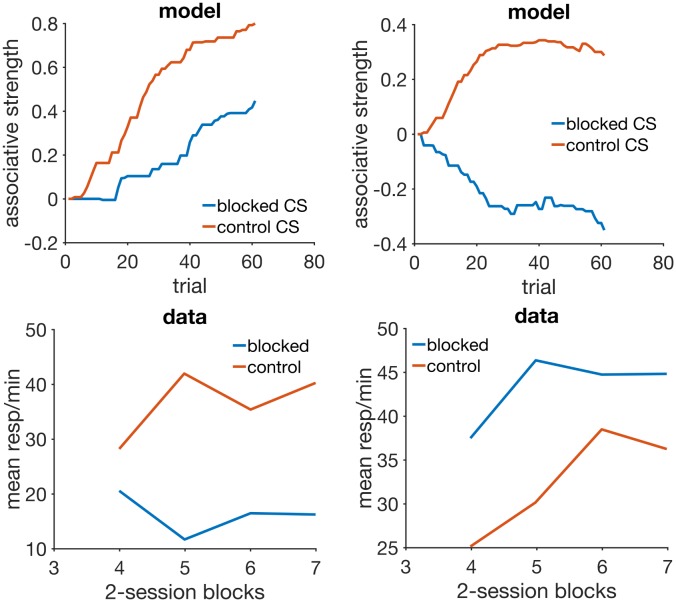

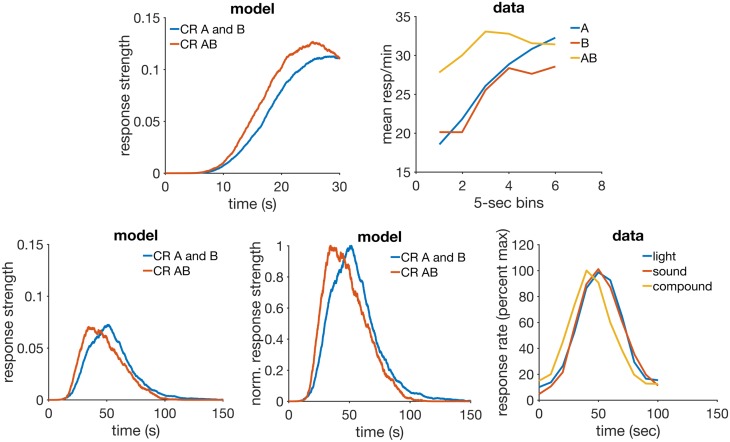

Because RWDDM is based on the RW rule, it produces virtually the same results as the latter when the CSs have the same duration. Our interest here is to test whether it can reproduce the finding that a long CS can block a shorter CS but a shorter CS does not block a longer one. We performed a simulation following the design in rows 1 and 3 of Fig 7. In the first phase, CSA (the blocking CS), lasting either 10 or 15 seconds, was reinforced until its associative strength V reached asymptote. In phase 2, CSA was combined with CSX (the blocked CS), lasting either 15 or 10 seconds respectively, in a co-terminating compound followed by the US. The top left panel of Fig 8 shows the acquisition of associative strength for CSX and its control during phase 2 for the condition CSA-15sec and CSX-10sec. A considerable amount of blocking is observed, matching the data (bottom left panel).

Fig 8. Blocking with different durations.

Left column: simulation (top) with a 15 sec blocking CS and 10 sec blocked CS, and animal data (bottom) from an experiment with the same design. Right column: simulation (top) with a 10 sec blocking CS and 15 sec blocked CS, and animal data (bottom) from an experiment with the same design. Data panels redrawn from the top right panel in Figure 5 in [86]. Model parameters: αt = 0.2, αV = 0.1, μ = 1, σ = 0.35, m = 0.2, H = 10.

The top right panel of Fig 8 shows the results for condition CSA-10sec and CSX-15sec. In this condition the model diverges considerably from the data (bottom right panel) and predicts that CSX should actually become inhibitory.

Discussion

The blocking and inhibition seen in Fig 8 are the result of a discrepancy in the asymptote of learning between the CSs. After phase 1, CSA has associative strength V_A ≈ H·A_A. During phase 2, CSX’s associative strength changes according to:

and since (A_X − A_A) < 0 in the CSA-10sec and CSX-15sec condition, V_X becomes negative.
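The sign argument can be checked with a toy compound-conditioning sketch. It assumes (i) that each CS's asymptote is H·A_i, as suggested by V_A ≈ H·A_A, (ii) that the accumulator slope is A_i = 1/duration_i, so the longer CS has the smaller slope, and (iii) that both CSs share one summed prediction error, as in the Rescorla-Wagner summation term. None of these is RWDDM's eq (9) verbatim; H and `alpha_v` follow the Fig 8 caption, and the control group, in which CS_A's partner is novel, is hypothetical.

```python
def blocking_sketch(dur_a, dur_x, H=10.0, alpha_v=0.1, n_phase1=200, n_phase2=10):
    """Toy sketch of the asymptote discrepancy (assumptions in the lead-in).

    Returns V_X after phase 2 for the blocking group and for a hypothetical
    control group whose partner CS (same duration as A) starts with V = 0.
    """
    a_a, a_x = 1.0 / dur_a, 1.0 / dur_x  # assumed slopes: inverse of duration
    # Phase 1: CS_A alone is trained to (near) its asymptote H * A_A.
    v_a = 0.0
    for _ in range(n_phase1):
        v_a += alpha_v * (H * a_a - v_a)

    def phase2(v_partner):
        v_x = 0.0
        for _ in range(n_phase2):
            total = v_partner + v_x  # summed prediction shared by both CSs
            v_partner += alpha_v * (H * a_a - total)
            v_x += alpha_v * (H * a_x - total)
        return v_x

    return phase2(v_a), phase2(0.0)

for dur_a, dur_x in [(15.0, 10.0), (10.0, 15.0)]:
    blocked, control = blocking_sketch(dur_a, dur_x)
    print(f"A = {dur_a:.0f} s, X = {dur_x:.0f} s: "
          f"V_X blocked = {blocked:.2f}, control = {control:.2f}")
```

With a 15-sec CS_A and a 10-sec CS_X the shared error is small but positive, so V_X grows less than in the control (partial blocking); with a 10-sec CS_A and a 15-sec CS_X the error for CS_X starts at H(A_X − A_A) < 0, so V_X turns negative.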

However, it could be argued that the short CSA becomes a secondary reinforcer signalled by the onset of the long CSX. In this case, the onset of CSX would serve as the time marker for the onset of CSA, and not for the onset of the US. Hence, during the first 5 seconds of CSX, responding would be controlled by this 5-sec stimulus representation, which would not overlap, and thus not compete, with CSA’s later representation. It would follow from this account that no blocking would be observed, and that responding to CSX during the test phase would peak at the 5-sec mark. This is a testable prediction that, if confirmed, would support RWDDM’s account.