Abstract

Peer review of research articles is a core part of our scholarly communication system. In spite of its importance, the status and purpose of peer review are often contested. What is its role in our modern digital research and communications infrastructure? Does it perform to the high standards with which it is generally regarded? Studies of peer review have shown that it is prone to bias and abuse in numerous dimensions, is frequently unreliable, and can fail to detect even fraudulent research. With the advent of Web technologies, we are now witnessing a phase of innovation and experimentation in our approaches to peer review. These developments prompted us to examine emerging models of peer review from a range of disciplines and venues, and to ask how they might address some of the issues with our current systems of peer review. We examine the functionality of a range of social Web platforms, and compare these with the traits underlying a viable peer review system: quality control, quantified performance metrics as engagement incentives, and certification and reputation. Ideally, any new systems will demonstrate that they out-perform current models while avoiding as many of the biases of existing systems as possible. We conclude that there is considerable scope for new peer review initiatives to be developed, each with its own potential issues and advantages. We also propose a novel hybrid platform model that, at least partially, resolves many of the technical and social issues associated with peer review, and can potentially disrupt the entire scholarly communication system. Success for any such development relies on reaching a critical threshold of research community engagement with both the process and the platform, and therefore cannot be achieved without a significant change of incentives in research environments.

Keywords: Open Peer Review, Social Media, Web 2.0, Open Science, Scholarly Publishing, Incentives, Quality Control

1 Introduction

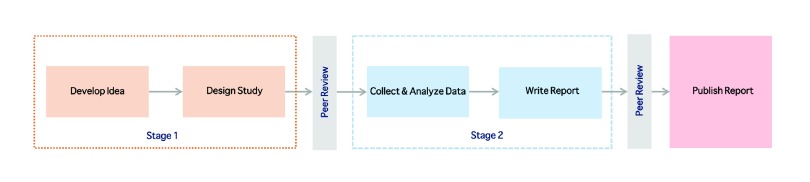

Peer review is the process in which experts are invited to assess the quality, novelty, validity, and potential impact of research by others, typically while it is in the form of a manuscript for an article, conference, or book (Spier, 2002). For the purposes of this article, we are exclusively addressing peer review in the context of manuscripts for research articles, unless specifically indicated; different forms of peer review are used in other contexts such as hiring, promotion, tenure, or awarding research grants (see, e.g., Fitzpatrick, 2011b, p. 16). Peer review comes in various flavors that result from different approaches to the relative timing of the review (with respect to article drafting, submission, or publication) and the transparency of the process (what is known to whom about submissions, authors, reviewers, and reviews) (Ross-Hellauer, 2017). The criteria used for evaluation, including methodological soundness or expected impact, are also important variables to consider. In spite of the diversity of the process, researchers and the wider public alike generally perceive it as the gold standard that defines scholarly publishing, and it is often deemed the primary determinant of scientific, theoretical, and empirical validity (Kronick, 1990). Consequently, peer review is a vital component at the core of research communication processes, with repercussions for the very structure of academia, which largely operates through a peer reviewed publication-based reward and incentive system (Moore et al., 2017). However, peer review is applied inconsistently both in theory and practice (Pontille & Torny, 2015), and generally lacks any form of transparency or formal standardization. As such, it remains difficult to know what we actually mean when we identify something as a “peer reviewed publication.”

Traditionally, the function of peer review has been as a vetting procedure or gatekeeper to assist the distribution of limited resources, for instance space in peer reviewed print publication venues, research time at specialized research facilities, or competitive research funds. Nowadays, it is also used to assess whether and how a given piece of research fits into the overall body of existing scholarly knowledge, and which journal it is suitable for and should appear in. This has consequences for whether the body of published research produced by an individual merits consideration for a more advanced position within academic or industrial research. With the advent of the Internet, the physical constraints on distribution are no longer present, and, at least in theory, we are now able to disseminate research content rapidly and at relatively negligible cost (Moore et al., 2017). This has led to the increasing popularity of digital-only publication venues that vet submissions based on the soundness of the research (e.g., PLOS, PeerJ). Such flexibility in the filter function of peer review reduces, but does not eliminate, the role of peer review as a selective gatekeeper. Due to such innovations, ongoing discussions about peer review are intimately linked with contemporaneous developments in Open Access (OA) publishing and with broader changes in open research (Tennant et al., 2016).

The goal of this article is to investigate the historical evolution in the theory and application of peer review in a socio-technological context. We use this as the basis to consider how specific traits of consumer social Web platforms can be combined to create an optimized hybrid peer review model that is more efficient, democratic, and accountable than the traditional process.

1.1 The evolution of peer review

Any discussion of innovations in peer review must take into account its historical context. By understanding the history of scholarly publishing and the interwoven evolution of peer review, we recognize that neither is a static entity; rather, the two covary with each other and should be treated as such. By learning from historical experiences, we can also become more aware of how to shape future directions of peer review evolution and gain insight into what the process should look like in an optimal world. The actual term “peer review” only appears in the scientific press in the 1960s. Even in the 1970s, it was associated with grant review and not with evaluation and selection for publishing (Baldwin, 2017a). However, the history of evaluation and selection processes for publication clearly predates the 1970s.

1.1.1 The early history of peer review. The origins of scholarly peer review of research articles are commonly associated with the formation of national academies in 17th-century Europe, although some have found foreshadowing of the practice ( Al-Rahawi, c900; Spier, 2002). We call this period the primordial time of peer review ( Figure 1). Biagioli (2002) described in detail the gradual differentiation of peer review from book censorship, and the role that state licensing and censorship systems played in 16th-century Europe; a period when monographs were the primary mode of communication. Several years after the Royal Society of London (1660) was established, it created its own in-house journal, Philosophical Transactions; around the same time, Denis de Sallo published the first issue of Journal des Sçavans. Both of these journals were first published in 1665. In London, Henry Oldenburg was appointed Secretary to the Royal Society and became the founding editor of Philosophical Transactions. Here, he took on the role of gathering, reporting, critiquing, and editing the work of others, as well as initiating the process of peer review as it is now commonly performed ( Manten, 1980; Oldenburg, 1665). Due to this origin, peer review emerged as part of the social practices of gentlemanly learned societies. These social practices also included organizing meetings and arranging the publications of society members, while being responsible for editorial curation, financial protection, and the assignment of individual prestige ( Moxham & Fyfe, 2016). The development of these prototypical scientific journals gradually replaced the exchange of experimental reports and findings through correspondence, formalizing a process that had been essentially personal and informal until then. “Peer review”, during this time, was more of a civil, collegial discussion in the form of letters between authors and the publication editors ( Baldwin, 2017b). 
Social pressures of generating new audiences for research, as well as new technological developments such as the steam-powered press, were also crucial. The purpose of developing peer reviewed journals became part of a process to deliver research to both generalist and specialist audiences, and improve the status of societies and fulfil their scholarly missions ( Shuttleworth & Charnley, 2016).

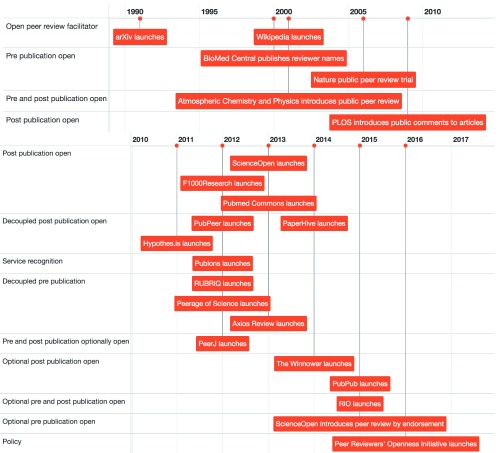

Figure 1. A brief timeline of the evolution of peer review: The primordial times.

The interactive data visualization is available at https://dgraziotin.shinyapps.io/peerreviewtimeline, and the source code and data are available at https://doi.org/10.6084/m9.figshare.5117260 ( Graziotin, 2017).

From these early developments, the process of independent review of scientific reports by acknowledged experts gradually emerged. However, the review process was more similar to non-scholarly publishing, as the editors were the only ones to appraise manuscripts before printing (Burnham, 1990). As early as 1731, the Royal Society of Edinburgh adopted a formal peer review process in which materials submitted for publication in Medical Essays and Observations were vetted and evaluated by additional knowledgeable members (Kronick, 1990; Spier, 2002). In 1752, the United Kingdom’s Royal Society created a “Committee on Papers” to review and select texts for publication in Philosophical Transactions (Fitzpatrick, 2011b, Chapter One). The primary purpose of this process was to select information for publication to account for the limited distribution capacity, and this remained the authoritative purpose of peer review for more than two centuries.

1.1.2 Adaptation through commercialisation. Through time, the diversity, quantity, and specialization of the material presented to journal editors increased. This made it necessary to seek assistance outside the immediate group of knowledgeable reviewers from the journals’ sponsoring societies (Burnham, 1990). Peer review evolved to become a largely outsourced process, which persists in scholarly publishing today, where publishers call upon external specialists to validate journal submissions. The current system of peer review only became widespread in the mid 20th century (and in some disciplines, the late 20th century or early 21st; see Graf, 2014, for an example of a major philological journal which began systematic peer review in 2011). Nature, now considered a top journal, did not implement such a formal peer review process until 1967 (nature.com/nature/history/timeline_1960s.html).

This editor-led process of peer review became increasingly important in the post-World War II decades, due to the development of a modern academic prestige economy based on the perception of quality or excellence and symbolism surrounding journal-based publications (Baldwin, 2017a; Fyfe et al., 2017). The increasing professionalism of academies enabled commercial publishers to use peer review as a way of legitimizing their journals (Baldwin, 2015; Fyfe et al., 2017), and to capitalize on the traditional perception of peer review as a voluntary duty of academics to provide these services. A consequence of this was that peer review became a more homogenized process that enabled private publishing companies to establish a dominant, oligarchic marketplace position (Larivière et al., 2015). This represented a shift from peer review as a more synergistic activity between academics, to commercial entities selling it as an added value service back to the same academic community that was performing it freely for them. The estimated cost of peer review is a minimum of US$1.9bn per year (in 2008; Research Information Network, 2008), representing a substantial vested financial interest in maintaining the current process of peer review (Smith, 2010). This figure does not even include the time spent by typically unpaid reviewers, or account for overhead costs in publisher management or the wasteful redundancy of the reject-resubmit cycle authors enter when chasing journal prestige (Jubb, 2016). The result of this is that peer review has now become enormously complicated. By allowing the process of peer review to become managed by a hyper-competitive industry, developments in scholarly publishing have become strongly coupled to the transforming nature of academic research institutes.
These have evolved into internationally competitive businesses that strive for quality through publisher-mediated journals by attempting to align these products with the academic ideal of research excellence (Moore et al., 2017). Such a development is plausibly related to, or even a consequence of, broader shifts towards a more competitive neoliberal academia and society at large. Here, emphasis is largely placed on production and standing, value, or utility (Gupta, 2016), as opposed to the original primary focus of research on discovery and novel results.

1.1.3 The peer review revolution. In the last several decades, there have been substantial efforts to decouple peer review from the publishing process (Figure 2; Schmidt & Görögh, 2017). This has typically been done either by adopting peer review as an overlay process on top of formally published research articles, or by pursuing a “publish first, filter later” protocol, with peer review taking place after the initial publication of research results (McKiernan et al., 2016; Moed, 2007). Here, the meaning of “publication” becomes “making public”, as opposed to the traditional sense in which it also implies that the work has been peer reviewed. In fields such as Physics and Mathematics, it has traditionally been commonplace for authors to send their colleagues either paper or electronic copies of their manuscripts for pre-submission evaluation. Launched in 1991, arXiv (arxiv.org) formalized this process by creating a central network for whole communities to access such e-prints. Today, arXiv has more than one million e-prints from various research fields and receives more than 8,000 monthly submissions (arXiv, 2017). Here, e-prints or pre-prints are not formally peer reviewed prior to publication, but still undergo a certain degree of moderation in order to filter out non-scientific content. This practice represents a significant shift, as public dissemination was decoupled from a traditional peer review process, resulting in increased visibility and citation rates (Davis & Fromerth, 2007; Moed, 2007). The launch of Open Journal Systems (openjournalsystems.com; OJS) in 2001 offered a step towards bringing journals and peer review back to their community-led roots. As of 2015, the OJS platform provided the technical infrastructure and editorial and peer review workflow management support to more than 10,000 journals (Public Knowledge Project, 2016).
Its exceptionally low cost was perhaps responsible for around half of these journals appearing in the developing world (Edgar & Willinsky, 2010).

Figure 2. A brief timeline of the evolution of peer review: The revolution.

The interactive data visualization is available at https://dgraziotin.shinyapps.io/peerreviewtimeline, and the source code and data are available at https://doi.org/10.6084/m9.figshare.5117260 ( Graziotin, 2017).

More recently, there has been a new wave of innovation in peer review, which we term “the revolution” phase ( Figure 2; note that this is a non-exhaustive overview of the peer review landscape). The pace of this is accelerating rapidly, with the majority of changes occurring in the last five to ten years. This could be related to initiatives such as the San Francisco Declaration on Research Assessment ( ascb.org/dora/; DORA), that called for systemic changes in the way that scientific research outputs are evaluated. Digital-born journals, such as PLOS ONE, introduced commenting on published papers. This spurred developments in cross-publisher annotation platforms like PubPeer and PaperHive. Some journals, such as F1000 Research and The Winnower, rely exclusively on a model where peer review is conducted after the manuscripts are made publicly available. Other services, such as Publons, enable reviewers to claim recognition for their activities as referees. Platforms such as ScienceOpen provide a search engine combined with peer review across publishers on all documents, regardless of whether manuscripts have been previously reviewed. Each of these innovations has partial parallels to other social Web applications or platforms in terms of transparency, reputation, performance assessment, and community engagement. It remains to be seen whether these innovations and new models of evaluation will become more popular than traditional peer review.

1.2 The role and purpose of modern peer review

Due to the increasingly systematic use of external peer review, its processes have become entwined with the core activities of scholarly communication. Without approval through peer review to assess importance, validity, and journal suitability, research articles will not be sent to print. The historical motivation for selecting amongst submitted articles for distribution was primarily economic. With scholarly publishing turning into an essentially loss-making business, the costs of printing and paper needed to be limited (Fyfe, 2015). The rising number of submissions, particularly in the 20th century, required distributing the management of this selection process. While in the digital world the costs of dissemination have dropped, the marginal cost of publishing articles is far from zero (e.g., due to time and management, hosting, marketing, technical and ethical checks, among other services). The economic motivations for still imposing selectivity in a digital environment, and applying peer review as a mechanism for this, have received limited attention or questioning, and selectivity is often regarded as just how things are done. Selectivity is now often attributed to quality control, but this is based on the false assumption that peer review requires careful selection of specific reviewers to assure a definitive level of adequate quality, termed the “Fallacy of Misplaced Focus” by Kelty et al. (2008).

In many cases, there is an attempt to link the goals of peer review processes with Mertonian norms (Lee et al., 2013; Merton, 1973) (i.e., universalism, communalism, disinterestedness, and organized skepticism) as a way of showing their relation to shared community values. The Mertonian norm of organized skepticism is the most obvious link, while the norm of disinterestedness can be linked to efforts to reduce systemic bias, and the norm of communalism to the expectation of contribution to peer review as part of community membership (i.e., duty). In contrast to the emphasis on supposedly shared social values, relatively little attention has been paid to the diversity of processes of peer review across journals, disciplines, and time. This is especially the case as the (scientific) scholarly community appears overall to have a strong investment in a “creation myth” that links the beginning of scholarly publishing (the founding of The Philosophical Transactions of the Royal Society) to the invention of peer review. The two are often regarded as coupled by necessity, largely ignoring the complex and interwoven history of peer review and publishing. This has consequences, as an individual’s identity as a scholar is strongly tied to specific forms of publication that are evaluated in particular ways (Moore et al., 2017). A scholar’s first research article, PhD thesis, or first book are significant life events. Membership of a community, therefore, is validated by the peers who review this newly contributed work. Community investment in the idea that these processes have “always been followed” appears very strong, but ultimately remains a fallacy.

As mentioned above, there is an increasing quantity and quality of research that examines how publication processes, selection, and peer review evolved from the 17th to the early 20th century, and how this relates to broader social patterns ( Baldwin, 2017a; Baldwin, 2017b; Moxham & Fyfe, 2016). However, there is much less research critically exploring the diversity of selection and peer review processes in the mid- to late-20th century. Indeed, there seems to be a remarkable discrepancy between the historical work we do have ( Baldwin, 2017a; Gupta, 2016; Shuttleworth & Charnley, 2016) and apparent community views that “we have always done it this way,” alongside what sometimes feels like a wilful effort to ignore the current diversity of practice.

Such a discrepancy between a dynamic history and remembered consistency could be a consequence of peer review processes being central to both scholarly identity as a whole and to the identity and boundaries of specific communities ( Moore et al., 2017). Indeed, this story linking identity to peer review is taught to junior researchers as a community norm, often without the much-needed historical context. More work on how peer review, alongside other community practices, contributes to community building and sustainability would be valuable. Examining criticisms of conventional peer review and proposals for change through the lens of community formation and identity may be a productive avenue for future research.

1.3 Criticisms of the conventional peer review system

In spite of its clear relevance, widespread acceptance, and long-standing practice, the academic community does not appear to have a clear consensus on the operational functionality of peer review, or on its effects in a diverse modern research world. There is a discrepancy between how peer review is regarded as a process, and how it is actually performed. While peer review is still generally perceived as key to quality control for research, others have begun to note that mistakes are becoming ever more frequent in the process (Margalida & Colomer, 2016; Smith, 2006), or at least that peer review is problematic and not being applied as rigorously as generally perceived (Cole, 2000; Eckberg, 1991; Ghosh et al., 2012; Jefferson et al., 2002; Kostoff, 1995; Ross-Hellauer, 2017; Schroter et al., 2006; Walker & Rocha da Silva, 2015). One consequence of this is that COPE, the Committee on Publication Ethics (publicationethics.org), was established in 1997 to address potential cases of abuse and misconduct during the publication process. Yet, the effectiveness of this initiative at a system-level remains unclear. A popular editorial in The BMJ stated that peer review is “slow, expensive, profligate of academic time, highly subjective, prone to bias, easily abused, poor at detecting gross defects, and almost useless at detecting fraud,” with evidence supporting each of these quite serious allegations (Smith, 2006). However, beyond editorials, there now exists a substantial corpus of studies that critically examines the technical aspects of peer review. Taken together, this should be extremely worrisome, especially given that traditional peer review is still viewed almost dogmatically as a gold standard for the publication of research results, and as the process which mediates knowledge dissemination to the public.

The issue is that, ultimately, this uncertainty in standards and implementation can potentially lead to, or at least be viewed as the cause of, widespread failures in research quality and integrity ( Ioannidis, 2005; Jefferson et al., 2002) and even the rise of formal retractions in extreme cases ( Steen et al., 2013). Issues resulting from peer review failure range from simple gate-keeping errors, based on differences in opinion of the perceived impact of research, to failing to detect fraudulent or incorrect work, which then enters the scientific record ( Baxt et al., 1998; Gøtzsche, 1989; Haug, 2015; Moore et al., 2017; Pocock et al., 1987; Schroter et al., 2004; Smith, 2006). A final issue regards peer review by and for non-native English speaking authors, which can lead to cases of linguistic inequality and language-oriented research segregation, in a world where research is increasingly becoming more globally competitive ( Salager-Meyer, 2008; Salager-Meyer, 2014). All of this suggests that, while the idea of peer review remains logical, it is the implementation of it that requires attention.

1.3.1 Peer review needs to be peer reviewed. Attempts to reproduce how peer review selects what is worthy of publication demonstrate that the process is generally adequate for detecting reliable research, but often fails to recognize the research that has the greatest impact (Mahoney, 1977; Moore et al., 2017; Siler et al., 2015). Many now regard the traditional peer review model as sub-optimal in that it causes publication delays, impacting the communication of novel research (Bornmann & Daniel, 2010; Brembs, 2015; Eisen, 2011; Jubb, 2016; Vines, 2015b). Reviewer fatigue (Breuning et al., 2015) and redundancy when articles go through multiple rounds of peer review at different journal venues (Moore et al., 2017; Jubb, 2016) are just some of the criticisms levelled at the technical implementation of peer review. In addition, some view traditional peer review as flawed because it operates within a closed and opaque system. This makes it impossible to trace the discussions that led to (sometimes substantial) revisions to the original research (Bedeian, 2003), as well as the decision process leading to the final publication.

On top of all of these potential issues, some critics go even further in stating that, at its worst, peer review can be seen as detrimental to research. By operating as a closed system, it protects the status quo and suppresses research viewed as radical, innovative, or contrary to the theoretical perspectives of referees (Alvesson & Sandberg, 2014; Benda & Engels, 2011; Horrobin, 1990; Mahoney, 1977; Merton, 1968), even though it is precisely these factors that underpin and advance research. As a consequence, questions about the competency and integrity of traditional peer review arise, such as: who are the gatekeepers and how are their gates constructed; what is the balance between author-reviewer-editor tensions; what are the inherent biases associated with this; does this enable a fair or structurally inclined system of peer review to exist; and what are the repercussions of this for our knowledge generation and communication systems?

In spite of all of these criticisms, it remains clear that the ideal of peer review still plays a fundamental role in scholarly communication ( Goodman et al., 1994; Pierie et al., 1996; Ware, 2008) and retains a high level of respect from the research community ( Bedeian, 2003; Greaves et al., 2006; Gibson et al., 2008). One primary reason why peer review has persisted is that it remains a unique way of assigning credit and differentiating research publications from other types of literature, including blogs, media articles, and books. This perception, combined with a general lack of awareness or appreciation of the historic context of peer review, research examining its potential flaws, and the conflation of the process with the ideology, has sustained its ubiquitous usage and continued proliferation in academia. This has led to the widely-held perception that peer review is a singular and static process, and to its acceptance as a social norm. It is difficult to move away from a process that has now become so deeply embedded within oligarchic research institutes. The consequence of this is that, irrespective of any systemic flaws, peer review remains one of the essential pillars of trust when it comes to scientific communication ( Haider & Åström, 2017).

In this article, we summarize the ebb and flow of the debate around the various and complex aspects of conventional (editorially-controlled) peer review. In particular, we highlight how innovative systems are attempting to resolve the major issues associated with traditional models, explore how new platforms could improve the process in the future, and consider what this means for the identity, role, and purpose of peer review within diverse research communities. The aim of this discussion is not to undermine any specific model of peer review in a quest for systemic upheaval, or to advocate any particular alternative model. Rather, we acknowledge that the idea of peer review is critical for research and advancing our knowledge, and as such we provide a foundation here for future exploration and creativity in diversifying and improving an essential component of scholarly communication.

2 The traits and trends affecting modern peer review

Over time, three principal forms of journal peer review have evolved: single blind, double blind, and open (Table 1). Of these, single blind, where reviewers are anonymous but authors are not, is the most widely used in most disciplines because the process is comparably less onerous and less expensive to operate than the alternatives. Double blind peer review, where both authors and reviewers are reciprocally anonymous, requires considerable effort to remove all traces of the author’s identity from the manuscript under review (Blank, 1991). For a detailed comparison of double versus single blind review, Snodgrass (2007) provides an excellent summary. These are generally considered to be the traditional forms of peer review, with the advent of open peer review introducing substantial additional complexity into the discussion (Ross-Hellauer, 2017).

Table 1. Types of peer review.

| Reviewer identity | Author identity: Hidden | Author identity: Known |
|---|---|---|
| Hidden | Double blind | Single blind |
| Known | – | Open |
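
The two axes of Table 1 can also be read as a simple lookup. The following is a minimal sketch in Python, offered only as an illustration of the taxonomy; the names `ReviewModel` and `classify_review` are hypothetical and do not come from any platform discussed in this article. The combination that Table 1 leaves empty (reviewer known, author hidden) is returned as `None` rather than being assigned a label.

```python
from enum import Enum
from typing import Optional


class ReviewModel(Enum):
    """The three principal forms of journal peer review named in Table 1."""
    SINGLE_BLIND = "single blind"
    DOUBLE_BLIND = "double blind"
    OPEN = "open"


def classify_review(reviewer_known: bool, author_known: bool) -> Optional[ReviewModel]:
    """Map the two identity axes of Table 1 to a review model.

    Returns None for the cell the table leaves empty
    (reviewer identity known, author identity hidden).
    """
    if not reviewer_known:
        # Reviewers are anonymous: the author's visibility decides the model.
        return ReviewModel.SINGLE_BLIND if author_known else ReviewModel.DOUBLE_BLIND
    # Reviewers are named: only the fully disclosed case is labelled in Table 1.
    return ReviewModel.OPEN if author_known else None


if __name__ == "__main__":
    for reviewer_known in (False, True):
        for author_known in (False, True):
            model = classify_review(reviewer_known, author_known)
            label = model.value if model else "not defined in Table 1"
            print(f"reviewer known={reviewer_known}, author known={author_known}: {label}")
```

This sketch captures only the identity dimension; the timing of review relative to publication, which the article treats as a separate variable, would need its own field.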

The diversification of peer review is intrinsically coupled with wider developments in scholarly publishing. When it comes to the gate-keeping function of peer review, innovation is noticeable in some digital-only, or “born open,” journals, such as PLOS ONE and PeerJ. These explicitly ask referees to ignore any notion of novelty, significance, or impact when assessing research before it becomes accessible to the research community. Instead, reviewers are asked to focus on whether the research was conducted properly and whether the conclusions are based on the presented results. This arguably more objective method has met some resistance, even receiving the somewhat derogatory term “peer review lite” from some corners of the scholarly publishing industry (Pinfield, 2016). Such a perception is largely a hangover from the commercial age of publishing, and now seems superfluous and discordant with any modern Web-based model of scholarly communication. The relative timing of peer review to publication is a further major innovation, with journals such as F1000 Research publishing prior to any formal peer review process. Some of the advantages and disadvantages of these different variations of open peer review are explored in Table 2.

Table 2. Advantages and disadvantages of the different approaches to peer review.

NPRC: Neuroscience Peer Review Consortium.

| Type | Description | Pros/Benefits | Cons/Risks | Examples |
|---|---|---|---|---|
| Pre-peer review commenting | Informal commenting and discussion on a publicly available pre-publication manuscript draft (i.e., preprints) | Rapid, transparent, public, relatively low cost (free for authors), open commenting | Variable uptake, fear of scooping, fear of journal rejection, fear of premature communication | bioRxiv, SocArXiv, engrXiv, PeerJ pre-prints, Figshare, Zenodo |
| Pre-publication | Formal and editorially-invited evaluation of a piece of research by selected experts in the relevant field | Editorial moderation, provides at least some consistent form of quality control for all published work | Non-transparent, impossible to evaluate, biased, secretive, exclusive, unclear who “owns” reviews | Nature, Science, New England Journal of Medicine, Cell, The Lancet |
| Post-publication | Formal and optionally-invited evaluation of research by selected experts in the relevant field, subsequent to publication | Rapid publication of research, public, transparent, can be editorially-moderated | Filtering of “bad research” occurs after publication, relatively low uptake | F1000Research, ScienceOpen, Research Ideas and Outcomes (RIO), The Winnower |
| Post-publication commenting | Informal discussion of published research, independent of any formal peer review that may have already occurred | Can be performed on third-party platforms, anyone can contribute, public | Comments can be rude or of low quality, comments across multiple platforms lack inter-operability, low visibility, low uptake | PubMed Commons, PeerJ, PLOS ONE, ScienceOpen |
| Collaborative | Referees, and often editors, participate in the assessment of scientific manuscripts through interactive comments to reach a consensus decision and a single set of revisions and comments | Iterative, editors sign reports, can be integrated with formal process, deters low quality submissions | Can be additionally time-consuming, discussion quality variable, peer pressure and influence can tilt the balance | eLife, Frontiers, Atmospheric Chemistry and Physics |
| Portable | Authors can take referee reports to multiple consecutive venues, often administered by a third-party service | Reduces redundancy or duplication, saves time | Low uptake by authors, low acceptance by journals, high cost | BioMed Central journals, NPRC, Rubriq, Peerage of Science, MECA |
| Recommendation services | Post-publication evaluation and recommendation of significant articles, often through a peer-nominated consortium | Crowd-sourced literature discovery, time saving, “prestige” factor when inside a consortium | Paid services (subscription only), time consuming on recommender side, exclusive | F1000Prime, CiteULike, ScienceOpen |
| De-coupled post-publication (annotation services) | Comments or highlights added directly to highlighted sections of the work. Added notes can be private or public | Rapid, crowd-sourced and collaborative, cross-publisher, low threshold for entry | Non-interoperable, multiple venues, effort duplication, relatively unused, genuine critiques reserved | PubPeer, Hypothesis, PaperHive, PeerLibrary |

2.1 The development of open peer review

Novel ideas about “Open Peer Review” (OPR) systems are rapidly emerging, and innovation has been accelerating over the last several years (Figure 2; Table 3). The advent of OPR is complex, and often multiple aspects of peer review are used interchangeably or are conflated without appropriate prior definition. Currently, there is no formally established definition of OPR that is accepted by the scholarly research and publishing community (Ford, 2013). The simplest definitions, by McCormack (2009) and Mulligan et al. (2008), presented OPR as a process that does not attempt “to mask the identity of authors or reviewers” (McCormack, 2009, p.63), thereby explicitly referring to open in terms of personal identification or anonymity. Ware (2011, p.25) expanded on reviewer disclosure practices: “Open peer review can mean the opposite of double blind, in which authors’ and reviewers’ identities are both known to each other (and sometimes publicly disclosed), but discussion is complicated by the fact that it is also used to describe other approaches such as where the reviewers remain anonymous but their reports are published.” Other authors define OPR differently, for example by including the publication of all dialogue during the process (Shotton, 2012), or running it as a publicly participative commentary (Greaves et al., 2006). A recent survey by OpenAIRE found 122 different definitions of OPR in use, exemplifying the extent of this issue. This diversity was distilled into a single proposed definition comprising seven different open traits: participation, identity, reports, interaction, platforms, pre-review manuscripts, and final-version commenting (Ross-Hellauer, 2017).

Table 3. Pros and cons of different approaches to anonymity in peer review.

| Approach | Description | Pros/Benefits | Cons/Risks | Examples |
|---|---|---|---|---|
| Single blind peer review | Referees are not revealed to the authors, but referees are aware of author identities | Allows reviewers to view full context of an author’s other work, detection of COIs, more efficient | Prone to bias, authors not protected, exclusive, non-verifiable, referees can often be identified anyway | Most biomedical and physics journals, PLOS ONE, Science |
| Double blind peer review | Authors and the referees are reciprocally anonymous | Increased author diversity in published literature, protects authors and reviewers from bias, more objective | Still prone to abuse and bias, secretive, exclusive, non-verifiable, referees can often be identified anyway, time consuming | Nature, most social sciences journals |
| Triple-blind peer review | Authors and their affiliations are reciprocally anonymous to handling editors and reviewers | Eliminates geographical, institutional, personal and gender biases, work evaluated based on merit | Incompatible with pre-prints, low uptake, non-verifiable, secretive | Science Matters |
| Private, open peer review | Referee names are revealed to the authors pre-publication, if the referees agree, either through an opt-in or opt-out mechanism | Protects referees, no fear of reprisal for critical reviews | Increases decline to review rates, non-verifiable | PLOS Medicine, Learned Publishing |
| Unattributed peer review | If referees agree, their reports are made public but anonymous when the work is published | Reports publicized for context and re-use | Prone to abuse and bias, secretive, exclusive, non-verifiable | EMBO Journal |
| Optional open peer review | As single blind peer review, except that the referees are given the option to make their review and their name public | Increased transparency | Gives an unclear picture of the review process if not all reviews are made public | PeerJ, Nature Communications |
| Pre-publication open peer review | Referees are identified to authors pre-publication, and if the article is published, the full peer review history together with the names of the associated referees is made public | Transparency, increased integrity of reviews | Fear: referees may decline to review, or be unwilling to come across too critically or positively | The medical BMC-series journals, The BMJ |
| Post-publication open peer review | The referee reports and the names of the referees are always made public regardless of the outcome of their review | Fast publication, transparent process | Fear: referees may decline to review, or be unwilling to come across too critically or positively | F1000Research, ScienceOpen, PubPub |
| Peer review by endorsement (PRE) | Pre-arranged and invited, with referees providing a “stamp of approval” on publications | Transparent, cost-effective, rapid, accountable | Low uptake, prone to selection bias, not viewed as credible | RIO Journal, ScienceOpen |

A core question is how to transform traditional peer review into a process aligned with the latest advances in what is now widely termed “open science”. This is tied to broader developments in how we as a society communicate, thanks to the inherent capacity that the Web provides for open, collaborative, and social communication. Many of the suggestions and new models for improving peer review are geared towards increasing the transparency and ultimately the reliability, efficiency, and accountability of the publishing process, and aligning peer review norms to support these aims. These traits are desired by all actors in the system, and increasing transparency moves peer review towards a more open model.

However, the context of this transparency and the implications of different levels of transparency at different stages of the review process are both very rarely explored, and achieving transparency is difficult at a variety of levels. How and where we inject transparency into the system has implications for the magnitude of transformation and, therefore, the general concept of OPR is highly heterogeneous in meaning, scope, and consequences. New suggestions to modify peer review range from fairly incremental, small-scale changes to those that encompass an almost total and radical transformation of the present system. The various parts of the “revolutionary” phase of peer review undoubtedly have different combinations of these OPR traits, and within this remains a very heterogeneous landscape. Table 3 provides an overview of the advantages and disadvantages of the different approaches to anonymity and openness in peer review.

In this article, we regard OPR as a process fulfilling any of the following three primary criteria:

1. Referee names are identified to the authors and the readership;
2. Referee reports are made publicly available under an open license;
3. Peer review is not restricted to invited referees only.
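Because this working definition is disjunctive (meeting any one criterion suffices), a process can qualify as OPR in several distinct ways. The following is a minimal sketch of that logic only; the class, field, and variable names are illustrative assumptions and do not correspond to any existing platform or dataset, and the two example processes are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewProcess:
    """Hypothetical description of a review process (illustrative only)."""
    referee_names_disclosed: bool   # criterion 1: names known to authors and readership
    reports_openly_licensed: bool   # criterion 2: reports public under an open license
    open_participation: bool        # criterion 3: not restricted to invited referees


def is_open_peer_review(process: ReviewProcess) -> bool:
    """Return True if the process meets ANY of the three criteria."""
    return any((
        process.referee_names_disclosed,
        process.reports_openly_licensed,
        process.open_participation,
    ))


# Made-up examples, not descriptions of real journals.
traditional_single_blind = ReviewProcess(False, False, False)
anonymous_published_reports = ReviewProcess(False, True, False)

print(is_open_peer_review(traditional_single_blind))
print(is_open_peer_review(anonymous_published_reports))
```

Under this reading, a process that publishes openly licensed reports while keeping referees anonymous still counts as OPR, which is one reason the term covers such a heterogeneous set of practices.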

With all of these complex evolutionary trajectories, it is clear that peer review is undergoing a phase of experimentation in line with the evolving scholarly ecosystem. However, despite the range of new innovations, engagement with these experimental open models is still far from common. Ironically, the ubiquitously practiced and much more favored “traditional” model (which, as noted above, is also diverse) is itself not especially traditional, yet it remains entrenched and revered. Practices such as self-publishing and predatory or deceptive publishing cast a shadow of doubt on the validity of research posted openly online that follows these models, including those with traditional scholarly imprints (Fitzpatrick, 2011a; Tennant et al., 2016). The resistance hindering widespread adoption of new models of peer review can be ascribed to what is often termed “cultural inertia” within scholarly research. Cultural inertia, the tendency of communities to cling to a traditional trajectory, is shaped by a complex ecosystem of individuals and groups. These often have highly polarized motivations (i.e., capitalistic commercialism versus knowledge generation versus careerism versus output measurement), and an academic hierarchy that imposes a power dynamic that can suppress innovative practices (Burris, 2004; Magee & Galinsky, 2008).

The ongoing discussions and innovations around peer review (and OPR) can be sorted into four main categories, which are examined in more detail below. Each of these feeds into the wider issues of incentivizing engagement, providing appropriate recognition and certification, and quality control and moderation:

1. How can referees receive credit or recognition for their work, and what form should this take;
2. Should referee reports be published alongside manuscripts;
3. Should referees remain anonymous or have their identities disclosed;
4. Should peer review occur prior or subsequent to the publication process (i.e., publish then filter).

2.2 Giving credit to peer reviewers

A vast majority of researchers see peer review as an integral and fundamental part of their work. They often even consider peer review to be part of an altruistic cultural duty or a quid pro quo service, closely associated with the identity of being part of their research community. Generally, journals do not provide any remuneration or compensation for these services. Notable exceptions are the UK-based publisher Veruscript ( veruscript.com/about/who-we-are) and Collabra ( collabra.org/about/our-model), published by University of California Press. To be invited to review a research article is perceived as a great honor, especially for junior researchers, due to the recognition of expertise—i.e., the attainment of the level of a peer. However, the current system is facing new challenges as the number of published papers continues to increase rapidly ( Albert et al., 2016), with more than one million articles published in peer reviewed, English-language journals every year ( Larsen & Von Ins, 2010). Some estimates are even as high as 2–2.5 million per year ( Plume & van Weijen, 2014), and this number is expected to double approximately every nine years at current rates ( Bornmann & Mutz, 2015). There are several possible solutions to this issue:

Increase the total pool of potential referees,

Increase acceptance rates to avoid review duplication,

Decrease the number of referees per paper, and/or

Decrease the time spent on peer review.

Of these, the latter two can both potentially reduce the quality of peer review, open or otherwise, and therefore affect the overall quality of published research. Paradoxically, as the Internet empowers us to communicate information virtually instantaneously, the turnaround time for peer reviewed publications is as far from this as it ever has been. One potential solution to this is to encourage referees by providing additional recognition and credit for their work. The present lack of bona fide incentives for referees is perhaps the main factor responsible for indifference to editorial outcomes, which ultimately leads to the increased proliferation of low quality research (D’Andrea & O’Dwyer, 2017).
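As a rough check on the scale of this pressure, the nine-year doubling time cited earlier in this section can be converted into an approximate annual growth rate. This is only a sketch: it assumes steady exponential growth, which is a simplification, and the symbols $N_0$ (current annual output), $r$ (annual growth rate), and $T_d$ (doubling time in years) are introduced here purely for illustration.

$$
N(t) = N_0\,(1+r)^{t}, \qquad N(T_d) = 2N_0 \;\Longrightarrow\; r = 2^{1/T_d} - 1 .
$$

$$
T_d = 9 \;\Longrightarrow\; r = 2^{1/9} - 1 \approx 0.08 .
$$

Under these assumptions, output grows by roughly 8% per year, so either the referee pool or the reviewing effort per referee would need to grow at a comparable pace simply to keep up.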

2.2.1 Traditional methods of recognition. One current way to recognize peer review is to thank anonymous referees in the Acknowledgement sections of published papers. In these cases, the referees will not receive any public recognition for their work, unless they explicitly agree to sign their reviews. Another common form of acknowledgement is a private thank you note from the journal or editor, which usually takes the form of an automated email upon completion of the review. In addition, journals often list and thank all reviewers in a special issue or on their website once a year, thus providing another way to credit reviewers. Another idea that journals and publishers have tried implementing is to list the best reviewers for their journal (e.g., by Vines (2015a) for Molecular Ecology), or, on the basis of a suggestion by Pullum (1984), naming referees who recommend acceptance in the article colophon (a single blind version of this recommendation was adopted by Digital Medievalist from 2005–2016; see Wikipedia contributors, 2017, and bit.ly/DigitalMedievalistArchive for examples preserved in the Internet Archive; Digital Medievalist stopped using this model and removed the colophon as part of its move to the Open Library of Humanities; cf. journal.digitalmedievalist.org). As such, authors can then integrate this into their scholarly profiles in order to differentiate themselves from other researchers or referees. Currently, most tenure and review committees do not consider peer review activities as required or sufficient in the process of professional advancement or tenure evaluation. Instead, it is viewed as expected or normal behaviour for all researchers to contribute in some form to peer review.

2.2.2 Increasing demand for recognition. Traditional approaches of credit fall short of any sort of systematic feedback or recognition, such as that granted through publications. A change here is clearly required for the wealth of currently unrewarded time and effort given to peer review by academics. A recent survey of nearly 3,000 peer reviewers by the large commercial publisher Wiley showed that feedback and acknowledgement for work as referees are valued far above either cash reimbursements or payment in kind ( Warne, 2016). As of today, peer review is poorly acknowledged by practically all research assessment bodies, institutions, granting agencies, as well as publishers. Wiley’s survey reports that 80% of researchers agree that there is insufficient recognition for peer review as a valuable research activity and that researchers would actually commit more time to peer review if it became a formally recognized activity for assessments, funding opportunities, and promotion ( Warne, 2016). While this may be true, it is important to note that commercial publishers, including Wiley, have a vested interest in retaining the current, freely provided service of peer review since this is what provides their journals the main stamp of legitimacy and quality (“added value”) as society-led journals. Therefore, one of the root causes for the lack of appropriate recognition and incentivization is, ironically, publishers themselves, who have strong motivations to find non-monetary forms of reviewer recognition. Indeed, the business model of almost every large scholarly publisher is predicated on free work by peer reviewers, and it is unlikely that the present system would function financially with market-rate reimbursement of peer reviewers. Hence, this survey could represent a biased view of the actual situation. 
Other research shows a similar picture, with approximately 70% of respondents to a small survey done by Nicholson & Alperin (2016) indicating that they would list peer review as a professional service on their curriculum vitae. 27% of respondents mentioned formal recognition in assessment as a factor that would motivate them to participate in public peer review. These numbers indicate that the lack of credit referees receive for peer review is a contributing factor to the perceived stagnation of the traditional models. Furthermore, acceptance rates are lower in humanities and social sciences, and higher in physical sciences and engineering journals ( Ware, 2008). This means there are distinct disciplinary variations in the number of reviews performed by a researcher relative to their publications, and suggests that there is scope for using this to either provide different incentive structures or to increase acceptance rates and therefore decrease referee fatigue ( Lyman, 2013).

2.2.3 Progress in crediting peer review. Any acknowledgement model to credit reviewers also raises the obvious question of how to facilitate this model within an anonymous peer review system. By incentivizing peer review, much of its potential burden can be alleviated by widening the potential referee pool. This can also help to diversify the process and inject transparency into peer review, a solution that is especially appealing when considering that it is often a small minority of researchers who perform the vast majority of peer reviews ( Fox et al., 2017; Gropp et al., 2017); for example, in biomedical research, only 20 percent of researchers perform 70–95 percent of the reviews ( Kovanis et al., 2016). In 2014, a working group on peer review services (CASRAI) was established to “develop recommendations for data fields, descriptors, persistence, resolution, and citation, and describe options for linking peer-review activities with a person identifier such as ORCID” ( Paglione & Lawrence, 2015). The idea here is that by being able to standardize peer review activities, it becomes easier to describe, attribute, and therefore recognize and reward them.

The Publons platform provides a semi-automated mechanism to formally recognize the role of editors and referees, who can receive due credit for their work as referees, both pre- and post-publication. Researchers can also choose if they want to publish their full reports depending on publisher and journal policies. Publons also provides a ranking for the quality of the reviewed research article, and users can endorse, follow, and recommend reviews. Other platforms, such as F1000Research and ScienceOpen, link post-publication peer review activities with CrossRef DOIs to make them more citable, essentially treating them as equivalent to a normal Open Access research paper. ORCID (Open Researcher and Contributor ID) provides a stable means of integrating with platforms such as Publons and ImpactStory in order to receive due credit for reviews. ORCID is rapidly becoming part of the critical infrastructure for OPR, and for greater shifts towards open scholarship (Dappert et al., 2017). Exposing peer reviews through these platforms links accountability to receiving credit. Therefore, they offer possible solutions to the dual issues of rigor and reward, while potentially ameliorating the growing threat of reviewer fatigue. Whether such initiatives will be successful remains to be seen, although Publons was recently acquired by Clarivate Analytics, suggesting that the process could become commercialized as this domain rapidly evolves (Van Noorden, 2017). In spite of this, the outcome is most likely to be dependent on whether funding agencies and those in charge of tenure, hiring, and promotion will use peer review activities to help evaluate candidates. This is likely dependent on whether research communities themselves choose to embrace any such crediting or accounting systems for peer review.

2.3 Publishing peer review reports

The rationale behind publishing referee reports lies in providing increased context and transparency to the peer review process (the making of the sausage, so to speak). Often, valuable insights are shared in reviews that would otherwise remain hidden if not published. By publishing reports, peer review then has the potential to become a supportive and collaborative process that is viewed more as an ongoing dialogue between groups of scientists to progressively assess the quality of research. Furthermore, the reviews themselves are opened up for analysis and inspection, including how authors respond to them, which adds an additional layer of quality control and a means for accountability and verification. There are additional educational benefits to publishing peer reviews, such as training purposes or for journal clubs. At present, some publisher policies are extremely vague about the re-use rights and ownership of peer review reports (Schiermeier, 2017).

In a study of two journals, one where reports were not published and another where they were, Bornmann et al. (2012) found that publicized comments were much longer. Furthermore, there was an increased chance that they would result in a constructive dialogue between the author, reviewers, and wider community, and might therefore be better for improving the content of a manuscript. On the other hand, unpublished reviews tended to have more of a selective function to determine whether a manuscript is appropriate for a particular journal (i.e., focusing on the editorial process). Therefore, depending on the journal, different types of peer review could be better suited to perform different functions, and therefore optimized in that direction. Transparency of the peer review process can also be used as an indicator for peer review quality, thereby potentially making it possible to predict quality in new journals in which the peer review model is known (Godlee, 2002; Morrison, 2006; Wicherts, 2016), if desired. Journals with higher transparency ratings were less likely to accept flawed papers and showed a higher impact as measured by Google Scholar’s h5-index.

It is ironic that, while assessments of articles can never be evidence-based without the publication of referee reports, they are still almost ubiquitously regarded as having an authoritative stamp of quality. The issue here is that the attainment of peer reviewed status will always be based on an undefined, and only ever relative, quality threshold due to the opacity of the process. This is quite an unscientific practice, and instead, researchers rely almost entirely on heuristics and trust for a concealed process and the intrinsic reputation of the journal, rather than anything legitimate. This can ultimately result in what is termed the “Fallacy of Misplaced Finality”, described by Kelty et al. (2008), as the assumption that research has a single, final form, to which everyone applies different criteria of quality.

Publishing peer review reports appears to have little or no impact on the overall process but may encourage more civility from referees. In a small survey, Nicholson & Alperin (2016) found that approximately 75% of survey respondents (n=79) perceived that public peer review would change the tone or content of the reviews, and 80% of responses indicated that performing peer reviews that would eventually be publicized would not require a significantly higher amount of work. However, the responses also indicated that an incentive is needed for referees to engage in open peer review. This would include recognition by performance review or tenure committees (27%), peers publishing their reviews (26%), being paid in some way such as with an honorarium or waived APC (24%), and getting positive feedback on reviews from journal editors (16%). Only 3% (one response) indicated that nothing could motivate them to participate in an open peer review of this kind. Leek et al. (2011) showed that when referees’ comments were made public, significantly more cooperative interactions were formed, while the risk of incorrect comments decreased. Moreover, referees and authors who participated in cooperative interactions had a reviewing accuracy rate that was 11% higher. On the other hand, the possibility of publishing the reviews online has also been associated with a high decline rate among potential peer reviewers, and an increase in the amount of time taken to write a review, but with no effect on review quality (van Rooyen et al., 2010). This suggests that the barriers to publishing review reports are inherently social, rather than technical.

When BioMed Central launched in 2000, it quickly recognized the value in including both the reviewers’ names and the peer review history (pre-publication) alongside published manuscripts in its medical journals. Since then, further reflections on open peer review (Godlee, 2002) led to the adoption of a variety of OPR models. For example, the Frontiers series now publishes all referee names alongside articles; EMBO journals publish a review process file with the articles, with referees remaining anonymous but editors being named; and PLOS added public commenting features to articles they published in 2009. More recently launched journals, such as PeerJ, have a system where both the reviews and the names of the referees can optionally be made public, and journals such as Nature Communications and the European Journal of Neuroscience have started to adopt this method of OPR as well.

Unresolved issues with posting review reports include whether or not it should be done for ultimately unpublished manuscripts, the impact of author identification or anonymity, and whether announcing authors’ career stages has potential consequences for their reputations. Furthermore, the actual readership and usage of published reports remains ambiguous in a world where researchers are typically already inundated with published articles to read. The benefits of publicizing reports might not be seen until further down the line from the initial publication and, therefore, their immediate value might be difficult to convey and measure in current research environments. Finally, different populations of reviewers with different cultural norms and identities will undoubtedly have varying perspectives on this issue, and it is unlikely that any single policy or solution to posting referee reports will ever be widely adopted.

2.4 Eponymous versus anonymous peer review

There are different levels of bi-directional anonymity throughout the peer review process, including whether the referees know who the authors are but not vice versa (single blind, the most common; Ware, 2008), or whether both parties remain anonymous to each other (double blind) (Table 1). Traditional double blind review is based on the idea that peer evaluations should be impartial and based on the research, not ad hominem, but there has been considerable discussion over whether reviewer identities should remain anonymous (e.g., Baggs et al. (2008); Pontille & Torny (2014); Snodgrass (2007)) (Figure 3). Models such as triple-blind peer review even go a step further, where authors and their affiliations are reciprocally anonymous to the handling editor and the reviewers. This attempts to nullify the effects of one’s scientific reputation, institution, or location on the peer review process, and is employed at the Open Access journal Science Matters (sciencematters.io), launched in early 2016.

Strong, but often conflicting arguments and attitudes exist for both sides of the anonymity debate (see e.g., Prechelt et al. (2017)). In theory, anonymous reviewers are protected from potential backlashes for expressing themselves fully and therefore are more likely to be more honest in their assessments. Further, there is some evidence to suggest that double blind review can increase the acceptance rate of women-authored articles in the published literature ( Darling, 2015). However, this kind of anonymity can be difficult to protect, as there are ways in which identities can be revealed, albeit non-maliciously, such as through language and phrasing, prior knowledge of the research and a specific angle being taken, previous presentation at a conference, or even simple Web-based searches.

While there is much potential value in anonymity, the corollary is also problematic in that anonymity can lead to reviewers being more aggressive, biased, negligent, orthodox, entitled, and politicized in their language and evaluation, as they have no fear of negative consequences for their actions other than from the editor (Lee et al., 2013; Weicher, 2008). Furthermore, by protecting the referees’ identities, journals lose an aspect of the prestige, quality, and validation in the review process, leaving researchers to guess or assume this important aspect post-publication. The transparency associated with signed peer review aims to avoid competition and conflicts of interest that can potentially arise due to the fact that referees are often the closest competitors to the authors, as they will naturally tend to be the most competent to assess the research (Campanario, 1998a; Campanario, 1998b). Eponymous peer review has the potential to encourage increased civility, accountability, and more thoughtful reviews (Boldt, 2011; Cope & Kalantzis, 2009; Fitzpatrick, 2010; Janowicz & Hitzler, 2012; Lipworth et al., 2011; Mulligan et al., 2013), as well as extending the process to become more of an ongoing, community-driven dialogue rather than a singular, static event (Bornmann et al., 2012; Maharg & Duncan, 2007). However, there is scope for the peer review to become less critical, skewed, and biased by community selectivity. If the anonymity of the reviewers is removed while maintaining author anonymity at any time during peer review, a skew and extreme accountability are imposed upon the reviewers, while authors remain relatively protected from any potential prejudices against them. However, such transparency provides, in theory, a mode of validation and should mitigate corruption as any association between authors and reviewers would be exposed. Yet, this approach has a clear disadvantage, in that accountability becomes extremely one-sided. Another possible result of this is that reviewers could be stricter in their appraisals within an already conservative environment, and thereby further prevent the publication of research.

2.4.1 Reviewing the evidence. Baggs et al. (2008) investigated the beliefs and preferences of reviewers about blinding. Their results showed double blinding was preferred by 94% of reviewers, although some identified advantages to an un-blinded process. When author names were blinded, 62% of reviewers could not identify the authors, while 17% could identify authors ≤10% of the time. Walsh et al. (2000) conducted a survey in which 76% of reviewers agreed to sign their reviews. In this case, signed reviews were of higher quality, were more courteous, and took longer to complete than unsigned reviews. Reviewers who signed were also more likely to recommend publication. In their study to explore the review process from the reviewers’ perspectives, Snell & Spencer (2005) found that reviewers would be willing to sign their reviews and feel that the process should be transparent. Yet, a similar study by Melero & Lopez-Santovena (2001) found that 75% of surveyed respondents were in favor of reviewer anonymity, while only 17% were against it.

A randomized trial showed that blinding reviewers to the identity of authors improved the quality of the reviews (McNutt et al., 1990). This trial was repeated on a larger scale by Justice et al. (1998) and Van Rooyen et al. (1999), with neither study finding that blinding reviewers improved the quality of reviews. These studies also showed that blinding is difficult in practice, as many manuscripts include clues on authorship. Jadad et al. (1996) analyzed the quality of reports of randomized clinical trials and concluded that blind assessments produced significantly lower and more consistent scores than open assessments. The majority of additional evidence suggests that anonymity has little impact on the quality or speed of the review or on acceptance rates (Isenberg et al., 2009; Justice et al., 1998; van Rooyen et al., 1998), but revealing the identity of reviewers may lower the likelihood that someone will accept an invitation to review (Van Rooyen et al., 1999). Revealing the identity of the reviewer to a co-reviewer also has a small, editorially insignificant, but statistically significant beneficial effect on the quality of the review (van Rooyen et al., 1998). Authors who are aware of the identity of their reviewers may also be less upset by hostile and discourteous comments (McNutt et al., 1990). Other research found that signed reviews were more polite in tone, of higher quality, and more likely to ultimately recommend acceptance (Walsh et al., 2000).

2.4.2 The dark side of identification. The debate over signed versus unsigned reviews is not to be taken lightly. Early career researchers in particular are some of the most conservative in this area as they may be afraid that by signing overly critical reviews (i.e., those which investigate the research more thoroughly), they will become targets for retaliatory backlashes from more senior researchers. In this case, the justification for reviewer anonymity is to protect junior researchers, as well as other marginalized demographics, from bad behaviour. Furthermore, author anonymity could potentially save junior authors from public humiliation from more established members of the research community, should they make errors in their evaluations. These potential issues are at least part of the reason for a general attitude of conservatism within the research community towards OPR. Indeed, they come up as the most prominent resistance factor in almost every formal discussion on the topic of open peer review (e.g., Darling (2015); Godlee et al. (1998); McCormack (2009); Pontille & Torny (2014); Snodgrass (2007); van Rooyen et al. (1998)). However, it is not immediately clear how this widely-exclaimed but poorly documented potential abuse of signed reviews is any different from what would occur in a closed system anyway, as anonymity provides a potential mechanism for referee abuse. The fear that most backlashes would be external to the peer review itself, and indeed occur in private, is probably the main reason why such abuse has not been widely documented. However, it can also be argued that by reviewing with the prior knowledge of open identification, such backlashes are prevented since researchers do not want to tarnish their reputations in a public forum. Under these circumstances, openness becomes a means to hold both referees and authors accountable for their public discourse, as well as making the editors’ decisions on referee and publishing choice public.
Either way, there is little documented evidence that such retaliations actually occur either commonly or systematically. If they did, then publishers that employ this model such as Frontiers or BioMed Central would be under serious question, instead of thriving as they are.

In an ideal world, we would expect that strong, honest, and constructive feedback is well received by authors, no matter their career stage. Yet, it seems that this is not the case, or at least there seems to be the very real perception that it is not, and this is just as important from a social perspective. Retaliation against referees in such a negative manner represents a serious case of academic misconduct (Fox, 1994; Rennie, 2003). It is important to note, however, that this is not a direct consequence of OPR, but instead a failure of the general academic system to mitigate and act against inappropriate behavior. Increased transparency can only aid in preventing and tackling the potential issues of abuse and publication misconduct, something which is almost entirely absent within a closed system. COPE provides advice to editors and publishers on publication ethics, and on how to handle cases of research and publication misconduct, including during peer review. COPE could be used as the basis for developing formal mechanisms adapted to innovative models of peer review, including those outlined in this paper. Any new OPR ecosystem could also draw on the experience accumulated by Online Dispute Resolution (ODR) researchers and practitioners over the past 20 years. ODR can be defined as “the application of information and communications technology to the prevention, management, and resolution of disputes” (Katsh & Rule, 2015), and could be implemented to prevent, mitigate, and deal with any potential misconduct during peer review alongside COPE. Therefore, the perceived danger of author backlash is highly unlikely to be acceptable in the current academic system, and if it does occur, it can be dealt with through increased transparency. Furthermore, bias and retaliation exist even in a double blind review process (Baggs et al., 2008; Snodgrass, 2007; Tomkins et al., 2017), which is generally considered to be more conservative or protective.
Such widespread identification of bias highlights this as a more general issue within peer review and academia more broadly, and we should be careful not to attribute it to any particular mode or trait of peer review. This is particularly relevant for more specialized fields, where the pool of potential authors and reviewers is relatively small (Riggs, 1995). Nonetheless, careful engagement with researchers, especially high-risk or marginalized communities, should be a necessary and vital step prior to implementation of any system of reviewer transparency.

2.4.3 The impact of identification and anonymity on bias. One of the biggest criticisms levelled at peer review is that, like many human endeavours, it is intrinsically biased and not the objective and impartial process many regard it to be. The question is no longer about whether or not it is biased, but to what extent it is in different social dimensions. One of the major issues is that peer review suffers from systemic confirmatory bias, with only results that are deemed as significant, statistically or otherwise, being selected for publication (Mahoney, 1977). This causes a distinct bias within the published research record (van Assen et al., 2014), as a consequence of perverting the research process itself by creating an incentive system that is almost entirely publication-oriented. Others have described the issues with such asymmetric evaluation criteria as lacking the core values of a scientific process (Bon et al., 2017).

The evidence on whether there is bias in peer review against certain author demographics is mixed, but overwhelmingly in favor of systemic bias against women in article publishing (Budden et al., 2008; Darling, 2015; Grivell, 2006; Helmer et al., 2017; Lerback & Hanson, 2017; Lloyd, 1990; McKiernan, 2003; Roberts & Verhoef, 2016; Smith, 2006; Tregenza, 2002) (although see Blank (1991); Webb et al. (2008); Whittaker (2008)). After the journal Behavioural Ecology adopted double blind peer review in 2001, there was a significant increase in accepted manuscripts by women first authors; an effect not observed in similar journals that did not change their peer review policy (Budden et al., 2008). One of the most recent public examples of this bias is the case where a reviewer told the authors that they should add more male authors to their study (Bernstein, 2015). More recently, it has been shown in the Frontiers journal series that women are under-represented in peer review and that editors of both genders operate with substantial same-gender preference (Helmer et al., 2017). The most famous piece of evidence on bias against authors comes from a study by Peters & Ceci (1982) using psychology journals. They took 12 studies from prestigious institutions that had already been published in psychology journals. They retyped the papers, made minor changes to the titles, abstracts, and introductions but changed the authors’ names and institutions. The papers were then resubmitted to the journals that had first published them. In only three cases did the journals realize that they had already published the paper, and eight of the remaining nine were rejected, not because of lack of originality but because of the perception of poor quality. Peters & Ceci (1982) concluded that this was evidence of bias against authors from less prestigious institutions, although the deeper causes of this bias remain unclear at present.
A similar effect was found in an orthopaedic journal by Okike et al. (2016), where reviewers were more likely to recommend acceptance when the authors’ names and institutions were visible than when they were redacted. Further studies have shown that peer review is substantially positively biased towards authors from top institutions (Ross et al., 2006; Tomkins et al., 2017), due to the perception of prestige of those institutions and, consequently, of the authors as well. Further biases based on nationality and language have also been shown to exist (Dall’Aglio, 2006; Ernst & Kienbacher, 1991; Link, 1998; Ross et al., 2006; Tregenza, 2002).

While there are relatively few large-scale investigations of the extent and mode of bias within peer review (although see Lee et al. (2013) for an excellent overview of the different levels at which bias can potentially be injected into the process), these studies together indicate that inherent biases are systemically embedded within the process, and must be accounted for prior to any further developments in peer review. This range of population-level investigations into attitudes and applications of anonymity, and the extent of any biases resulting from this, exposes a highly complex picture, and there is little consensus on its impact at a system-wide scale. However, based on these often polarised studies, the conclusion is inescapable that peer review is highly subjective, rarely impartial, and definitely not as homogeneous as it is often regarded.

Applying a single, blanket policy regarding anonymity would greatly degrade the ability of science to move forward, especially without the flexibility to manage exceptions. The reasons to avoid one definite policy are the inherent complexity of peer review systems, the interplay with different cultural aspects within the various sub-sectors of research, and the difficulty in identifying whether anonymous or identified works are objectively better. As a general overview of the current peer review ecosystem, Nobarany & Booth (2017) recently recommended that, due to this inherent diversity, peer review policies and support systems should remain flexible and customizable to suit the needs of different research communities. We expect that, by emphasizing the different shared values across research communities, as well as their commonalities, we will see a new diversity of OPR processes developed across disciplines in the future. Remaining ignorant of this diversity of practices and inherent biases in peer review, as both social and physical processes, would be an unwise approach for future innovations.

2.5 Decoupling peer review from publishing

One proposal to transform scholarly publishing is to decouple the concept of the journal and its functions (e.g., archiving, registration and dissemination) from peer review and the certification that this provides. Some even hail this decoupling process as the “paradigm shift” that scholarly publishing needs (Priem & Hemminger, 2012). Some publishers, journals, and platforms are now taking a more adventurous approach by exploring peer review that occurs subsequent to publication (Figure 3). Here, the principle is that all research deserves the opportunity to be published (usually pending some form of initial editorial selectivity), and that filtering through peer review occurs subsequent to the actual communication of research articles (i.e., a publish then filter process). This is often termed “post-publication peer review”, a confusing terminology based on what constitutes “publication” in the digital age, depending on whether it occurs on manuscripts that have been previously peer reviewed or not (blogs.openaire.eu/?p=1205). Numerous venues now provide inbuilt systems for post-publication peer review, including RIO, PubPub, ScienceOpen, The Winnower, and F1000 Research. In addition to the systems adopted by journals, other post-publication annotation and commenting services exist independently of any specific journal or publisher and operate across platforms, such as hypothes.is, PaperHive, and PubPeer.

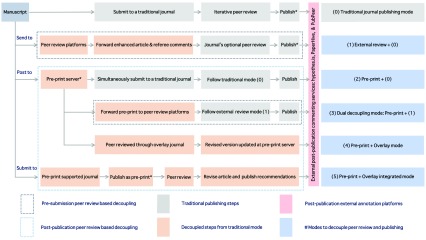

Figure 3. Traditional versus different decoupled peer review models: Under a decoupled model, peer review either happens pre-submission or post-publication.