Abstract

Several challenges exist in carrying out nation‐wide epidemiological surveys in the Kingdom of Saudi Arabia (KSA) due to the unique characteristics of its population. The objectives of this report are to review these challenges and the lessons learnt about best practices in meeting these challenges from the extensive piloting of the Saudi National Mental Health Survey (SNMHS), which is being carried out as part of the World Mental Health (WMH) Survey Initiative. We focus on challenges involving sample design, instrumentation, and data collection procedures. The SNMHS will ultimately provide crucial data for health policy‐makers and mental health specialists in KSA.

Keywords: epidemiology, mental health, psychiatry, survey, WHO‐CIDI diagnostic interview

1. INTRODUCTION

Mental disorders are a major public health problem, affecting people of all ages, cultures and socio‐economic levels (Whiteford et al., 2013). The World Health Organization (WHO) established the World Mental Health (WMH) Survey Initiative to increase awareness of this fact among governments throughout the world and to provide sufficiently textured information on prevalence, treatment, and correlates to help government health policy planners address the disparity between need for and use of mental health services (Demyttenaere et al., 2004; Kessler & Üstün, 2004).

Recognizing the potentially great importance of mental disorders, the Saudi National Mental Health Survey (SNMHS) was launched in 2011 as part of the WMH Initiative. SNMHS is the first population‐based epidemiological survey of mental disorders ever undertaken in the Kingdom of Saudi Arabia (KSA). In addition to assessing prevalence and unmet need for treatment of mental disorders, the survey will generate data on risk and protective factors for mental disorders and modifiable barriers to receiving treatment that can help guide intervention planning.

The main SNMHS survey is being administered to a nationally representative target sample of 4000 Saudis selected using a multistage clustered area probability household sample design stratified across various administrative areas of the Kingdom. Formidable logistical challenges exist in carrying out such a survey in KSA due to the relatively unique constellation of geographic, demographic, and cultural characteristics of the population. As a result, a comprehensive pilot study was built into the design guided by, but going well beyond the piloting carried out in previous WMH surveys (e.g. Ghimire, Chardoul, Kessler, Axinn, & Adhikari, 2013; Slade, Johnston, Oakley Browne, Andrews, & Whiteford, 2009; Xavier, Baptista, Mendes, Magalhães, & Caldas‐de‐Almeida, 2013). This paper discusses lessons learnt from this extensive piloting. We focus especially on challenges involving sample design, instrumentation, and data collection procedures, addressing issues related to the adapted research instrument, the Composite International Diagnostic Interview (CIDI 3.0), interviewer screening and training, field operation, saliva sample collection, and respondent attitudes.

2. METHODS

2.1. Pilot study design overview

The pilot study was conducted between May 25, 2011 and June 19, 2011 in Riyadh. The field procedures and instrumentation mirrored those in the planned main survey, while the sample was designed to reflect the types of respondents to be included in the main study (i.e. respondents of both genders, belonging to varied age groups and of varying socio‐economic statuses). A strict probability sample of households was not used in the pilot phase, but the within‐household probability sampling design used in the main survey was implemented.

2.2. Field staff and sample selection

The pilot study team included the principal investigators, project manager, project coordinator, information technology (IT) support staff and data managers. The project manager and the project coordinator were responsible for managing the day‐to‐day activities in the field and monitoring the interviewers as they collected the data.

As one of the project collaborators, the Ministry of Health (MOH) facilitated logistical aspects of the survey. Most importantly, the interviewers recruited for the pilot were all physicians from MOH primary health care centers located throughout the city of Riyadh. These interviewers were paid per hour of work, as opposed to per completed interview, based on extensive previous evidence (Kessler, 2007; Kessler et al., 2004) that paying interviewers by number of completed interviews leads to reduced data quality. A total of 19 interviewers (10 male and nine female) conducted the interviews using a design in which one randomly selected male and one randomly selected female in each household were interviewed by an interviewer of the same gender. This gender‐specific interviewing is required by the cultural norms of KSA.

Prior to the data collection phase, interviewers attended a standard six‐day intensive WMH survey interviewer training course at the King Salman Center for Disability Research in Riyadh. The interviewers who completed this training successfully were then divided into data collection teams, where each team consisted of one male interviewer, one female interviewer, and a male driver provided by the MOH.

A list of 190 households was randomly selected from a purposive sample of neighborhoods in Riyadh obtained from the Saudi Ministry of Economy and Planning (General Authority for Statistics, 2007). Interviewer teams were assigned households from this list. Before data collection was officially launched, the support of the police chief, Health Affairs, MOH and the Ministry of Interior in Riyadh was obtained; they provided the study with official letters to prove its credibility to respondents. The study was also advertised in newspapers.

Interviewers were required to make up to 10 in‐person visits to each household, contact a household member (the “informant”), introduce the study, and generate a listing of all non‐institutionalized, ambulatory Arabic‐speaking Saudi nationals between the ages of 15 and 65 who resided in that household as potential respondents. One eligible male and one eligible female were then randomly selected from this household listing as the designated household respondents. Family members reported by the household informant to have physical and/or mental disabilities that would not prevent them from participating in the study were oversampled, while information about household members whose health would, in the view of the informant, prevent them from participating was also recorded to provide a sampling frame for informant interviews (discussed later).
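The within‐household selection step described above can be illustrated with a minimal Python sketch. It assumes simple equal‐probability selection within each gender, which the text does not specify, and it omits the oversampling of members with disabilities. The `Member` fields and the `select_respondents` function are hypothetical names introduced only for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Member:
    name: str
    sex: str                 # "M" or "F"
    age: int
    saudi_national: bool
    speaks_arabic: bool
    institutionalized: bool = False
    ambulatory: bool = True


def is_eligible(m: Member) -> bool:
    """Eligibility rules as stated in the text (ages 15 to 65, inclusive)."""
    return (
        m.saudi_national
        and m.speaks_arabic
        and not m.institutionalized
        and m.ambulatory
        and 15 <= m.age <= 65
    )


def select_respondents(listing, rng):
    """Pick at most one eligible male and one eligible female at random.

    Assumption: equal selection probability within each gender.
    """
    selected = {}
    for sex in ("M", "F"):
        pool = [m for m in listing if m.sex == sex and is_eligible(m)]
        selected[sex] = rng.choice(pool) if pool else None
    return selected
```

Passing a seeded generator, for example `select_respondents(listing, random.Random(2011))`, makes a selection reproducible for auditing. A household with no eligible member of a given gender simply yields `None` for that gender.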

Interviewers then approached the designated household respondents and invited them to participate in the survey, explaining the study purposes, providing information on risks and benefits, and answering all questions before obtaining written informed consent. Respondents also received a 100 Riyal grocery coupon as an incentive for participation.

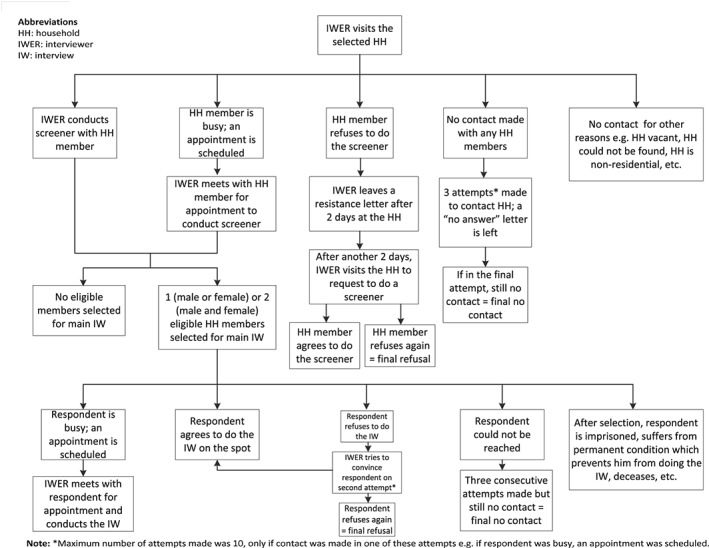

When the interviewer was not able to contact any household member after three attempts to visit, a “no answer” letter was left at the household to encourage the household's cooperation and to provide a study telephone number that potential respondents could call to make an appointment for a household visit. Seven additional interviewer visits to the household were made before the household was closed out as a final no‐contact residence. If the selected respondent refused to participate, a standard resistance letter was sent that thanked the selected respondent for their consideration and asked them to reconsider their decision. Interviewers then revisited the household after a few days to check whether the selected respondent had changed his/her mind about participating in the survey. These recruitment and consent procedures were approved by the Institutional Review Board committee at King Faisal Specialist Hospital and Research Center, Riyadh. See Figure 1 for an overview of interview scenarios in the field.

Figure 1.

Field procedures
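The no‐contact part of these field procedures (a “no answer” letter after three failed visits, then seven further visits before close‐out) can be sketched as a small decision function. This is only an illustration of the rule as described in the text; the function name and the returned action labels are hypothetical and are not taken from the SNMHS sample management system.

```python
MAX_VISITS = 10          # maximum in-person visits per household (from the text)
LETTER_AFTER_VISITS = 3  # "no answer" letter is left after three failed visits


def next_action(failed_visits: int) -> str:
    """Return the next field step for a household with no contact so far."""
    if failed_visits < 0:
        raise ValueError("failed_visits must be non-negative")
    if failed_visits >= MAX_VISITS:
        # Three initial visits plus seven additional visits have been used up.
        return "close_out_no_contact"
    if failed_visits == LETTER_AFTER_VISITS:
        return "leave_no_answer_letter_then_revisit"
    return "revisit"
```

For example, `[next_action(n) for n in (0, 3, 9, 10)]` walks through a first visit, the letter step, the last permitted revisit, and the final close‐out.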

2.3. Study instrument and data collection

The interview used in the pilot study was a KSA translation of the WHO Composite International Diagnostic Interview (CIDI) Version 3.0 (Kessler & Üstün, 2004), a fully‐structured psychiatric diagnostic interview designed to be used by trained lay interviewers and to generate diagnoses according to the definitions and criteria of ICD‐10 (International Classification of Diseases, 10th revision) and DSM‐IV (Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition) (Kessler et al., 1994). The TRAPD (translation, review, adjudication, pretesting and documentation) model (Harkness, 2011) was implemented to carry out the Saudi adaptation of CIDI. The pretesting component included conducting cognitive interviews using “think aloud” and scripted probes to identify comprehension, recall, and sensitivity concerns, as the prevailing culture in KSA is traditionally conservative and its population could construe some questions to be offensive or insensitive. A more detailed description of this process is provided elsewhere (Ghimire et al., 2013).

Pilot interviews were conducted on laptops using a Computer Assisted Personal Interview (CAPI) system that included an Audio Computer Assisted Self Interview (ACASI) component with a recorded voice that matched the gender of the respondent and allowed respondents to answer sensitive questions privately via a laptop. Previous methodological research has shown that this approach leads to significant increases in reports of embarrassing behaviors compared to more conventional face‐to‐face survey methods (Brown, Swartzendruber, & DiClemente, 2013; Fairley, Sze, Vodstrcil, & Chen, 2010; Gnambs & Kaspar, 2015; Turner et al., 1998). The instrument was administered as a face‐to‐face interview and its duration varied widely (3.5 hours on average) depending on the number of disorders reported. The Appendix lists the different sections of CIDI that were used in the pilot study.

At the completion of the interview, consent was sought from respondents to collect saliva samples using Oragene DNA Saliva kits for purposes of studying genetic risk factors for mental disorders. Interviewers then asked respondents to give their feedback about the study and the questionnaire by filling out a respondent debriefing form. Interviewers were also asked to document their observations from their contact attempts with respondents in addition to observations regarding the household and the surrounding neighborhood.

As shown in Table 1, the average age of the respondents was 33.9 years; the majority were male (60%), married (57.56%) and educated up to 12th grade (58.75%). These demographics cover only respondents who completed the main interview. They exclude incomplete interviews, refusals, respondents with a permanent condition that prevented them from participating, and other non‐interview cases (e.g. the respondent resided elsewhere or was unavailable due to time restrictions or any other reason).

Table 1.

Socio‐demographic characteristics of respondents (N = 80)

| Characteristic | Category | Value |
|---|---|---|
| Age (years) | Average | 33.9 |
| | Minimum | 15 |
| | Maximum | 65 |
| Gender (%) | Male | 60 |
| | Female | 40 |
| Marital status (%) | Married | 57.56 |
| | Separated | 1.25 |
| | Widowed | 2.50 |
| | Never married | 38.75 |
| Education (%) | Did not answer | 8.75 |
| | None | 6.25 |
| | Primary school (up to 6th grade) | 5 |
| | Secondary school (up to 11th grade) | 1.25 |
| | Secondary school (up to 12th grade) | 58.75 |
| | Undergraduate degree | 18.75 |
| | Graduate (MA/PhD) | 1.25 |

3. DATA PROCESSING, MANAGEMENT AND QUALITY CONTROL

Each administered interview was encrypted and transferred wirelessly from laptops (fitted with wireless network cards) to a secure online server. Wireless data transfer also allowed selected households or selected respondents to be reassigned between interviewers after approval from the field manager. Along with the computerized version of the CIDI, the laptops contained a proprietary sample management software system developed and tailored to the needs of the SNMHS by the Survey Research Center, Institute for Social Research, University of Michigan. The sample management system displayed information in a user‐friendly fashion to allow interviewers, supervisors, field managers, the field coordinator, and the quality control team to monitor the status of selected cases, call attempts, call notes and send/receive dates. The system also had a search function that allowed querying the database on a number of dimensions at both the level of the individual respondent (e.g. sample ID, interviewer ID, result code, result date, interview length, etc.) and the level of the interviewer aggregated over respondents by time (e.g. distribution of interview length, cooperation rate, response rate, etc. by week and overall) to facilitate supervisor monitoring.

After the interview data had been transferred by the interviewer to the secure server, the data manager evaluated the raw data and sent weekly reports to the survey central office and headquarters regarding specific cases that needed closer inspection. Such problematic cases included interviews conducted in a very short period of time, inconsistent result codes entered by interviewers for interview or contact attempt outcomes, and interview data entered for the wrong address.
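The kinds of checks that fed the weekly quality control reports can be illustrated with a short Python sketch. It is not the SNMHS sample management system: the `Case` fields, the result code labels, the 45‐minute cut‐off, and the consistency rule are all hypothetical assumptions chosen only to show how the three problem types named above might be flagged.

```python
from dataclasses import dataclass
from typing import List

MIN_PLAUSIBLE_MINUTES = 45  # hypothetical cut-off, not taken from the paper


@dataclass
class Case:
    sample_id: str
    interviewer_id: str
    result_code: str          # hypothetical codes: "complete", "refusal", "no_contact"
    interview_minutes: float
    assigned_address: str
    recorded_address: str


def qc_flags(case: Case) -> List[str]:
    """Return the list of quality control flags raised by one case."""
    flags = []
    # Completed interviews that finished implausibly quickly.
    if case.result_code == "complete" and case.interview_minutes < MIN_PLAUSIBLE_MINUTES:
        flags.append("very_short_interview")
    # Assumed consistency rule: a non-interview outcome should carry no interview time.
    if case.result_code != "complete" and case.interview_minutes > 0:
        flags.append("inconsistent_result_code")
    # Data entered against an address other than the assigned one.
    if case.assigned_address != case.recorded_address:
        flags.append("wrong_address")
    return flags
```

A weekly report could then list every case for which `qc_flags` returns a non‐empty list, grouped by `interviewer_id`, so that supervisors can follow up with individual interviewers.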

3.1. Instrument challenges

3.1.1. Translation

Because Arabic dialects differ across regions in KSA, it was challenging to translate the instrument in a way that would be understood by everyone in the Saudi population. Initially, an attempt was made to address this issue by translating the instrument into Classical Arabic and adding some Saudi nuances to key questions, but respondents still reported in debriefing that they had difficulty understanding some key questions. Based on these concerns, regional modifications were developed for the main survey. In retrospect, a dedicated translation, back‐translation, and harmonization process that involved regional representatives from the outset would have been more efficient.

3.1.2. Complexity of questions

Debriefing showed that some sections of the CIDI were worded in a complex way that made it difficult for the respondent to understand. Similar issues have also been reported in previous pilot studies in the WMH Survey Initiative (Slade et al., 2009; Xavier et al., 2013). This problem was addressed by revising complex questions to simplify wording and to add question‐by‐question specifications to explain the intended meaning of especially complicated questions and phrases.

3.1.3. Instrument length

Interviews were longer than expected and much longer than the average length of the English version (approximately two hours; Kessler & Üstün, 2004). Although the increased duration could be partly attributed to the additional sections added to the Saudi CIDI, it is important to note that the cultural attitude and customs of Saudi respondents contributed to the interview length. Respondents tended to take multiple breaks, including for prayer, to show hospitality to the interviewer, or to attend to their family's needs. Some misunderstood the purpose of the study and asked questions about unrelated health issues, deviating from the main questions and lengthening the average time taken to complete the interview. Moreover, some respondents became restless as the interview progressed and tended to cut short their answers or not pay attention to the questions asked, especially those placed towards the end of the interview, consistent with findings from previous literature (Caspar & Couper, 1997; Couper, 1998).

Based on the average pilot interview length being longer than expected, the threshold for administering certain sections and subsections (e.g. the decision whether or not to assess subthreshold manifestations of disorders among respondents that failed to meet full diagnostic criteria) was increased in order to reduce the average length of the questionnaire. Some sections were also shortened by removing questions that did not affect diagnoses. Finally, increased computerization was used to improve flow of the instrument in an effort to reduce administration time. For example, automatic fills were computerized for questions to make them gender‐specific and easier to administer for the interviewer. The word “spouse” in Arabic, for example, has to be translated using two separate words instead of one to describe a female and a male. For these types of questions, the computer automatically filled out the correct gender in the “his/her” field.
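The idea behind such automatic fills can be sketched in a few lines of Python. The sketch uses English placeholder words rather than the Arabic wording of the instrument, and the `FILLS` table, the placeholder names, and the `fill_question` function are hypothetical; it assumes only that the fill is chosen from the respondent's gender.

```python
# Hypothetical fill table keyed by respondent gender ("M" or "F").
# A married male respondent is asked about his wife, a female respondent
# about her husband, so the fills refer to the spouse's gender.
FILLS = {
    "M": {"spouse": "wife", "poss": "her"},
    "F": {"spouse": "husband", "poss": "his"},
}


def fill_question(template: str, respondent_sex: str) -> str:
    """Substitute gender-specific words into a question template."""
    return template.format(**FILLS[respondent_sex])
```

For instance, `fill_question("Does your {spouse} discuss {poss} worries with you?", "F")` produces a question about the respondent's husband, so the interviewer never has to choose between alternative wordings while reading.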

3.2. The interviewer model

The typical approach to carrying out national surveys in most countries around the world is to hire interviewers in regional areas who work only in those areas, for the most part returning home each night after they have completed their interviews. In some cases interviewers working under this model do travel, such as when some segment of the population lives in remote areas, or when the best regional interviewers from around the country are sent near the end of the field period to regions with low response rates to help improve response there. This approach requires regional hiring and training. However, when the data collection protocol is very complex, as it is in the CIDI, it sometimes makes sense to build a highly skilled centralized data collection team that travels throughout the country to administer the survey. This approach has been used, for example, in CIDI surveys carried out in Europe (Alonso et al., 2002) as well as in the National Health and Nutrition Examination Survey in the United States (Zipf et al., 2013). Based on the substantial variability in interviewer performance found in the SNMHS pilot study, the latter approach was used in the main survey.

A related issue is that the interviewers in the pilot study, as noted earlier, were physicians from the MOH who were assigned to the project on a part‐time basis to carry out interviews. As it turned out, the schedules of these clinicians were too hectic for them to work efficiently on the project. Moreover, as the SNMHS team had been cautioned by the staff of the WMH Data Collection Coordination Center, the pilot study found that there were substantial difficulties getting interviewers with a medical background to adhere to the standardized interviewing protocol required in the survey. The physician‐interviewers sometimes used their judgment rather than reading questions as required, potentially biasing the data collected. Furthermore, due to the nature of their profession, some clinician‐interviewers were not used to venturing into certain areas of the city because of safety concerns. Thus, for the main survey, the research staff decided to hire lay interviewers who were more available, affordable, and appropriate for the interviewing tasks. Most of them turned out to be recent university graduates who were intelligent, motivated, and had a lifestyle that allowed them to travel across the country for a year to work on the project.

3.3. Field operation challenges

3.3.1. Accessing and locating selected households

As in most large‐scale community surveys in many parts of the world, the pilot experienced problems of access to areas under military and governmental security, such as the Royal Commission, and to private gated communities. In addition, similar to some other national surveys in the WMH Consortium (e.g. Nepal), interviewers reported difficulties locating certain households. This was attributed to: (a) street names missing from the maps, (b) discrepancies between the listed names of the household occupants provided to the interviewer and the names of current residents provided by selected families, (c) selected households with multiple numbers on their doors, and (d) harsh climate conditions that affected the morale of the field staff and further slowed the process. To address these challenges, the main survey recruited skilled interviewers and provided each interviewer with a global positioning system (GPS) device for orientation.

3.3.2. Respondent attitude

Because of their conservative upbringing, some respondents were reserved in their answers, while others asked to terminate the interview when they heard the questions. Some respondents continued to doubt the credibility of the study even after being shown official papers and IDs. Additionally, male family members of female participants sometimes asked to stay during the interview even though the interviewer was female. The use of ACASI was especially helpful in dealing with the latter situations, as this procedure allows respondents to put on headphones connected to the survey laptop, have questions read by a digital voice, and enter responses directly into the computer from the keypad without other people in the room knowing the nature of the questions. Respondents also tended not to keep the appointments arranged with the interviewers. Despite these difficulties, the response rate for the pilot was 81.6%.

4. THE MAIN STUDY

The pilot study successfully tested all the aspects of the main survey, including the methods and systems employed. The vast majority of randomly selected respondents were receptive and cooperative despite the issues noted in the previous section.

Based on the considerations described in this paper, the instrument and field procedures for the main SNMHS were modified in important ways. The experience gained from the main study, which has as of now completed data collection in more than half the regions of KSA, suggests that these modifications were successful, as field quality and efficiency have been excellent and much improved over those in the pilot. These results demonstrate that careful and thoughtful pilot testing in settings that lack a prior track record of carrying out health surveys can be of great value in improving survey quality and efficiency.

DECLARATION OF INTEREST STATEMENT

In the past 3 years, Dr. Kessler received support for his epidemiological studies from Sanofi Aventis; was a consultant for Johnson & Johnson Wellness and Prevention, Shire, Takeda; and served on an advisory board for the Johnson & Johnson Services Inc. Lake Nona Life Project. Kessler is a co‐owner of DataStat, Inc., a market research firm that carries out healthcare research.

ACKNOWLEDGEMENTS

The Saudi National Mental Health Survey (SNMHS) is under the patronage of the King Salman Center for Disability Research. It is funded by Saudi Basic Industries Corporation (SABIC), King Abdulaziz City for Science and Technology (KACST), Abraaj Capital, Ministry of Health (Saudi Arabia), and King Saud University; King Faisal Specialist Hospital and Research Center, and Ministry of Economy and Planning, General Authority for Statistics are its supporting partners. The SNMHS is carried out in conjunction with the World Health Organization World Mental Health (WMH) Survey Initiative which is supported by the National Institute of Mental Health (NIMH; R01 MH070884), the John D. and Catherine T. MacArthur Foundation, the Pfizer Foundation, the US Public Health Service (R13‐MH066849, R01‐MH069864, and R01 DA016558), the Fogarty International Center (FIRCA R03‐TW006481), the Pan American Health Organization, Eli Lilly and Company, Ortho‐McNeil Pharmaceutical, GlaxoSmithKline, and Bristol‐Myers Squibb. The authors thank the staff of the WMH Data Collection and Data Analysis Coordination Centers for assistance with instrumentation, fieldwork, and consultation on data analysis. None of the funders had any role in the design, analysis, interpretation of results, or preparation of this paper. A complete list of all within‐country and cross‐national WMH publications can be found at http://www.hcp.med.harvard.edu/wmh/

The authors also thank Beth‐Ellen Pennell, Steve Heeringa, Zeina Mneimneh, Yu‐chieh Lin and other staff at the Survey Research Center, University of Michigan for their advice and support on the design and implementation of the SNMHS. Additionally, the authors acknowledge the work of all field staff that participated in the study, as well as input of members from the National Guard Hospital (Saudi Arabia), King Salman bin Abdulaziz Hospital and Blaise. Finally, the authors are grateful to Noha Kattan, the SNMHS team members and all the participants.

APPENDIX 1. ADAPTED CIDI 3.0 SECTIONS USED IN THE PILOT SURVEY

| Sections |
|---|
| 0. Household listing (HHL) |
| 1. Chronic Conditions (CC) |
| 2. 30 Day Functioning (FD) |
| 3. 30 Day Symptoms (NSD) |
| 4. Screening (SC) |
| 5. Depression (D) |
| 6. Mania (M) |
| 7. Panic Disorder (PD) |
| 8. Specific Phobia (SP) |
| 9. Social Phobia (SO) |
| 10. Agoraphobia (AG) |
| 11. Generalized Anxiety Disorder (G) |
| 12. Intermittent Explosive Disorder (IED) |
| 13. Marriage (MR) |
| 14. Suicidality (SD) |
| 15. PTSD (PT) |
| 16. Services (SR) |
| 17. Personality (PEA) |
| 18. Neurasthenia (N) |
| 19. Tobacco (TB) |
| 20. Eating Disorders |
| 21. Premenstrual Syndrome (PR) |
| 22. Obsessive–Compulsive Disorder (O) |
| 23. Psychosis (PS) |
| 24. Employment (EM) |
| 25. Finances (FN) |
| 26. Children (CN) |
| 27. Social Networks (SN) |
| 28. Long Demographics (DE) |
| 29. Childhood (CH) |
| 30. Social Satisfaction (Z) |
| 31. Attitudes towards Substance/Alcohol Use (Z) |
| 32. Alcohol (AU) |
| 33. Illegal Substance Use (IU) |
| 34. Conduct Disorder (CD) |
| 35. Attention‐Deficit/Hyperactivity (AD) |
| 36. Oppositional‐Defiant Disorder (OD) |
| 37. Separation Anxiety Disorder (SA) |
| 38. Disability ADL/IADL (self) |
| 39. Disability ADL/IADL (informant) |
| 40. Dementia FAQ (self) |
| 41. Dementia FAQ (informant) |
| 42. Disability Burden (informant) |
| 43. Family Burden (FB) |
| 44. Religiosity |
| 45. Interviewer's Observation (IO) |
| 46. Contact Observation (CO) |

Shahab M, Al‐Tuwaijri F, Bilal L, et al. The Saudi National Mental Health Survey: Methodological and logistical challenges from the pilot study. Int J Methods Psychiatr Res. 2017;26:e1565 10.1002/mpr.1565

REFERENCES

- Alonso, J., Ferrer, M., Romera, B., Vilagut, G., Angermeyer, M., Bernert, S., … Polidori, G. (2002). The European study of the epidemiology of mental disorders (ESEMeD/MHEDEA 2000) project: Rationale and methods. International Journal of Methods in Psychiatric Research, 11(2), 55–67. https://doi.org/10.1002/mpr.123

- Brown, J. L., Swartzendruber, A., & DiClemente, R. J. (2013). Application of audio computer‐assisted self‐interviews to collect self‐reported health data: An overview. Caries Research, 47(1), 40–45. https://doi.org/10.1159/000351827

- Caspar, R. A., & Couper, M. P. (1997). Using keystroke files to assess respondent difficulties with an ACASI instrument. Paper presented at the Joint Statistical Meetings of the American Statistical Association.

- Couper, M. P. (1998). Measuring survey quality in a CASIC environment. Paper presented at the Proceedings of the Survey Research Methods Section of the American Statistical Association.

- Demyttenaere, K., Bruffaerts, R., Posada‐Villa, J., Gasquet, I., Kovess, V., Lepine, J. P., … WHO World Mental Health Survey Consortium (2004). Prevalence, severity, and unmet need for treatment of mental disorders in the World Health Organization world mental health surveys. JAMA, 291(21), 2581–2590. https://doi.org/10.1001/jama.291.21.2581

- Fairley, C. K., Sze, J. K., Vodstrcil, L. A., & Chen, M. Y. (2010). Computer‐assisted self interviewing in sexual health clinics. Sexually Transmitted Diseases, 37(11), 665–668. https://doi.org/10.1097/OLQ.0b013e3181f7d505

- General Authority for Statistics (2007). Population and Housing Characteristics in the Kingdom of Saudi Arabia: Demographic Survey. Riyadh: Central Department of Statistics and Information, Ministry of Economy and Planning.

- Ghimire, D. J., Chardoul, S., Kessler, R. C., Axinn, W. G., & Adhikari, B. P. (2013). Modifying and validating the composite international diagnostic interview (CIDI) for use in Nepal. International Journal of Methods in Psychiatric Research, 22(1), 71–81. https://doi.org/10.1002/mpr.1375

- Gnambs, T., & Kaspar, K. (2015). Disclosure of sensitive behaviors across self‐administered survey modes: A meta‐analysis. Behavior Research Methods, 47(4), 1237–1259. https://doi.org/10.3758/s13428-014-0533-4

- Harkness, J. (2011). Translation. Cross‐Cultural Survey Guidelines. http://ccsg.isr.umich.edu/translation.cfm [12 April 2015].

- Kessler, R. C. (2007). The global burden of anxiety and mood disorders: Putting ESEMeD findings into perspective. The Journal of Clinical Psychiatry, 68(Suppl 2), 10–19.

- Kessler, R. C., Berglund, P., Chiu, W. T., Demler, O., Heeringa, S., Hiripi, E., … Zheng, H. (2004). The US National Comorbidity Survey Replication (NCS‐R): Design and field procedures. International Journal of Methods in Psychiatric Research, 13(2), 69–92. https://doi.org/10.1002/mpr.167

- Kessler, R. C., McGonagle, K. A., Zhao, S., Nelson, C. B., Hughes, M., Eshleman, S., & Kendler, K. S. (1994). Lifetime and 12‐month prevalence of DSM‐III‐R psychiatric disorders in the United States: Results from the National Comorbidity Survey. Archives of General Psychiatry, 51(1), 8–19. https://doi.org/10.1001/archpsyc.1994.03950010008002

- Kessler, R. C., & Üstün, T. (2004). The world mental health (WMH) survey initiative version of the World Health Organization (WHO) composite international diagnostic interview (CIDI). International Journal of Methods in Psychiatric Research, 13(2), 93–121. https://doi.org/10.1002/mpr.168

- Slade, T., Johnston, A., Oakley Browne, M. A., Andrews, G., & Whiteford, H. (2009). 2007 National Survey of mental health and wellbeing: Methods and key findings. Australian and New Zealand Journal of Psychiatry, 43(7), 594–605. https://doi.org/10.1080/00048670902970882

- Turner, C. F., Ku, L., Rogers, S. M., Lindberg, L. D., Pleck, J. H., & Sonenstein, F. L. (1998). Adolescent sexual behavior, drug use, and violence: Increased reporting with computer survey technology. Science, 280(5365), 867–873.

- Whiteford, H. A., Degenhardt, L., Rehm, J., Baxter, A. J., Ferrari, A. J., Erskine, H. E., … Vos, T. (2013). Global burden of disease attributable to mental and substance use disorders: Findings from the global burden of disease study 2010. The Lancet, 382(9904), 1575–1586. https://doi.org/10.1016/S0140-6736(13)61611-6

- Xavier, M., Baptista, H., Mendes, J. M., Magalhães, P., & Caldas‐de‐Almeida, J. M. (2013). Implementing the world mental health survey initiative in Portugal – Rationale, design and fieldwork procedures. International Journal of Mental Health Systems, 7(1), 19. https://doi.org/10.1186/1752-4458-7-19

- Zipf, G., Chiappa, M., Porter, K. S., Ostchega, Y., Lewis, B. G., & Dostal, J. (2013). National health and nutrition examination survey: Plan and operations, 1999–2010. Vital and Health Statistics. Series 1, Programs and Collection Procedures, 56, 1–37.