What do contribution statements on articles tell us that author order does not—and how can they be improved?

Abstract

Most scientific research is performed by teams, and for a long time, observers have inferred individual team members’ contributions by interpreting author order on published articles. In response to increasing concerns about this approach, journals are adopting policies that require the disclosure of individual authors’ contributions. However, it is not clear whether and how these disclosures improve upon the conventional approach. Moreover, there is little evidence on how contribution statements are written and how they are used by readers. We begin to address these questions in two studies. Guided by a conceptual model, Study 1 examines the relationship between author order and contribution statements on more than 12,000 articles to understand what information is provided by each. This analysis quantifies the risk of error when inferring contributions from author order and shows how this risk increases with team size and for certain types of authors. At the same time, the analysis suggests that some components of the value of contributions are reflected in author order but not in currently used contribution statements. Complementing the bibliometric analysis, Study 2 analyzes survey data from more than 6000 corresponding authors to examine how contribution statements are written and used. This analysis highlights important differences between fields and between senior versus junior scientists, as well as strongly diverging views about the benefits and limitations of contribution statements. On the basis of both studies, we highlight important avenues for future research and consider implications for a broad range of stakeholders.

INTRODUCTION

Scientific research has become the domain of teams, yet rewards and sanctions are still directed at individual scientists (1–4). Therefore, external stakeholders such as scientific peers, potential collaborators, tenure committees, and funding agencies need information on who did what and which team member deserves how much of the credit. Historically, the primary mechanism to obtain this information has been to infer contributions from authors’ presence and position on the byline (5, 6). However, there are widespread concerns that authorship conveys insufficient information, especially given the increasing size of teams and specialization of team members (6–9). In response to these concerns, a growing number of journals now require that teams disclose which authors made which contributions (Table 1). Yet, many stakeholders continue to use authorship order as the primary proxy for authors’ contributions, raising the question of how the information content of contribution disclosures compares to that of authorship order. Moreover, we need a better understanding of how authors decide on contribution statements and how authors as well as readers think about the value—and limitations—of these statements. In addition to being of interest in their own right, these insights may point toward important implications for using and improving contribution disclosures.

Table 1. Top 15 journals in the multidisciplinary sciences and their approaches to contribution disclosures.*

Journals with the 15 highest 2014 impact factors in the category “multidisciplinary sciences” (source: Journal Citation Reports/Web of Science). Journal policies as of August 2017.

| Journal rank | Journal title | Impact factor | Statements “required,” “encouraged,” or no policy | Contributions standardized/offers template | Asks for level of contributions | Information made public in paper |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | Nature | 41.456 | Required | No | No | Yes |
| 2 | Science | 33.611 | Required | Yes | Yes | Optional |
| 3 | Nature Communications | 11.470 | Required | No | No | Yes |
| 4 | PNAS | 9.674 | Required | No | No | Yes |
| 5 | Scientific Reports | 5.578 | Required | No | No | Yes |
| 6 | Annals of the New York Academy of Sciences | 4.383 | Required | No | No | Yes |
| 7 | Journal of the Royal Society Interface | 3.917 | Required | No | No | Yes |
| 8 | Research Synthesis Methods | 3.898 | No policy | — | — | — |
| 9 | PLOS ONE | 3.234 | Required | Yes | No | Yes |
| 10 | Proceedings of the Japan Academy Series B: Physical and Biological Sciences | 2.652 | No policy | — | — | — |
| 11 | Proceedings of the Royal Society A: Mathematical Physical and Engineering Sciences | 2.192 | Required | No | No | Yes |
| 12 | Philosophical Transactions of the Royal Society A: Mathematical Physical and Engineering Sciences | 2.147 | Required | No | No | Yes |
| 13 | PeerJ | 2.112 | Required | Yes | No | Yes |
| 14 | Naturwissenschaften | 2.098 | No policy | — | — | — |
| 15 | Proceedings of the Romanian Academy Series A: Mathematics Physics Technical Sciences | 1.658 | No policy | — | — | — |

*This information was accurate at the time of submission.

Here, we first conceptualize the total value of a team member’s substantive contributions to a project as consisting of various components. By comparing author order and contribution disclosures on more than 12,000 articles in the biological and life sciences, we then show what information about these components can be inferred from authorship order and from currently used contribution disclosures. We also quantify the risk of error when using conventional approaches to interpreting author order and show how this risk increases with team size and for certain types of authors. Although explicit contribution statements provide important complementary information, they cannot—in their current form—substitute for author order. We complement this analysis with insights from a large-scale survey of more than 6000 authors on papers that include contribution statements across a broader range of fields. The survey data support many of the findings from our bibliometric analysis. Moreover, they provide additional quantitative and qualitative evidence on the process by which contribution statements are written and used. Both studies highlight important challenges and concerns regarding contribution statements. They also suggest a number of improvements to journal policies as well as the need for future research and discussions about the use and impact of contribution disclosures.

CONCEPTUAL MODEL

Authorship as an aggregate indicator

Scientists consider multiple factors when deciding which individuals to include as co-authors and where to place them on the byline (6). Hence, authorship aggregates information on a number of different aspects, and different types of external observers face the challenge of extracting the particular information they need. Some observers seek information on the specific types of contributions made by a co-author (for example, conceptualization versus data analysis), which is helpful when searching for collaborators with particular competences or investigating the source of problems and misconduct (8). Others are interested in the overall “value” of an author’s contributions and the resulting share of credit and recognition this author should receive (2, 10). As described in more detail in the Supplementary Materials, we conceptualize the overall value of an author’s contributions as reflecting four components: (i) the count or breadth of contributions made, (ii) the particular types of contributions, (iii) the level of involvement in particular contributions, and (iv) the importance of different contributions for achieving project objectives (fig. S1). Author order may allow partial inferences about all four components; currently used contribution statements provide explicit information primarily on the first two. Although most readers are interested in authors’ substantive contributions, authorship decisions are also influenced by social dynamics that exacerbate the challenge of inferring actual contributions (7, 8).

In the following Study 1, we examine how well author order predicts the number and types of contributions made by co-authors—the two elements typically captured in explicit contribution statements. This analysis reveals what information about these aspects of contributions can be inferred from author order, what errors are likely when making these inferences, and how much additional information is provided by explicit contribution statements. At the same time, the analysis may point toward important components of the value of contributions that are reflected in author order but not in currently used contribution statements, such as the level of an author’s involvement in a particular type of contribution.

STUDY 1: RESULTS FROM BIBLIOMETRIC ANALYSIS

Measuring contributions

We analyze data from articles published in PLOS ONE between 2007 and 2011. PLOS ONE is considered by some to be a leader in requiring contribution disclosures (11) and has an impact factor in the top quartile in its field. The journal publishes research primarily in the biological and life sciences, the domain in which discussions around authorship and contribution disclosures are most active (6, 8, 12). To address the concern that PLOS ONE publishes a smaller share of high-impact papers than more selective journals such as Science or Proceedings of the National Academy of Sciences (PNAS), we also performed our analyses using the top 10% of papers in terms of average annual citations, with very similar results (see the Supplementary Materials). Because the division of labor in very large teams may not be comparable to that in typical teams and to facilitate the analysis, we focus on papers with 2 to 14 authors.

PLOS ONE data provide a novel opportunity for quantitative analysis because the journal requires that articles disclose the types of contributions made by each co-author using predefined categories (conceived the idea, performed experiments, contributed reagents/materials/analysis tools, analyzed data, and wrote the paper), as well as an open-ended field for “other” contributions. For comparison, Table 1 provides an overview of the top 15 multidisciplinary sciences journals and their respective approaches to contribution disclosures. Eleven of the journals require contribution statements. PLOS ONE, PeerJ, and Science collect information using predefined categories of contributions, although Science does not disclose these data routinely. Other journals use open-entry fields but often mention in their instructions contributions similar to those used by PLOS ONE. All journals with contribution disclosures focus on whether or not individual authors were involved in different types of contributions [components (i) and (ii) in our conceptual model], and no journal systematically discloses the level of authors’ involvement in a particular contribution or the importance of contributions for project success [components (iii) and (iv)].

Although contribution statements published on papers may also be shaped by factors other than actual contributions, Study 1 is based on the premise that contribution statements are highly correlated with actual contributions and can thus serve as a meaningful proxy (8, 12, 13). Study 2 generally supports this view while also providing novel insights into potential social dynamics. We partly address confounding effects of social factors using controls and robustness checks discussed below.

Author order and contributions

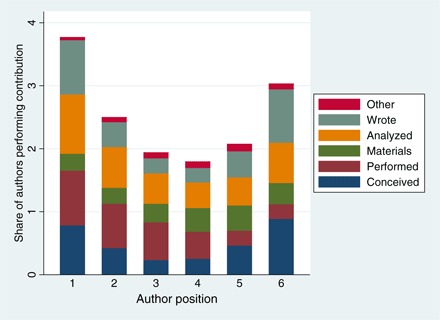

Table S1 shows summary statistics. Figure 1 visualizes the shares of authors in a particular author position who made a particular contribution, focusing on teams with six members (the median team size). First authors made the broadest range of different contributions (average, 3.77), followed by last authors (3.03) and middle authors (ranging from 2.50 to 1.80). Figure 1 also shows differences in the particular contributions made. For example, 94% of first authors in teams of six were involved in analyzing data, 87% in performing experiments, 86% in writing the paper, and 78% in conceiving the study. In contrast, 89% of last authors conceived the study, 85% wrote the paper, 64% analyzed data, and 23% performed experiments. Middle authors listed earlier (that is, second or third position) tend to be more involved in empirical activities than those listed later (that is, fourth or fifth position), but they are similarly likely to be involved in conception or writing. Regression models that use teams of all sizes and control for detailed scientific field as well as affiliation in single versus multiple laboratories (table S2) show that the differences between first, last, and middle authors are qualitatively the same as in Fig. 1. At the same time, these differences partly depend on the size of the team, highlighting the need to take team size into account when interpreting author positions (table S3). Expanding on the analysis of individual contributions, we also examined which combinations of contributions tend to be made by authors in different positions. We find that first authors are more likely to have conceived&written&analyzed as well as performed&analyzed than middle and last authors. Last authors are more likely to have conceived&written than first or middle authors (table S2, models 8 to 10).

Fig. 1. Share of authors performing a particular contribution; stacked for each author position.

Teams with six authors. For example, 78% of first authors conceived (blue segment), 87% performed (brown), 27% provided materials (green), 94% analyzed (orange), 86% wrote (turquoise), and 5% contributed “other” (red). Summing these percentages (377%) shows that the average first author made 3.77 different contributions.
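The arithmetic behind this caption can be checked directly: because each share is the fraction of first authors reporting a given contribution, the shares sum to the average number of distinct contributions per first author. The following is a minimal sketch using only the six percentages quoted in the caption; the dictionary keys and the function name are illustrative labels, not names used in the study.

```python
# Shares of first authors (teams of six) reporting each contribution,
# taken from the Fig. 1 caption. Keys are illustrative short labels.
first_author_shares = {
    "conceived": 0.78,
    "performed": 0.87,
    "materials": 0.27,
    "analyzed": 0.94,
    "wrote": 0.86,
    "other": 0.05,
}


def mean_contribution_count(shares):
    """Average number of distinct contributions per author.

    Each share is the mean of a 0/1 indicator, so the sum of the shares
    equals the mean of the per-author contribution count.
    """
    return sum(shares.values())


print(round(mean_contribution_count(first_author_shares), 2))  # 3.77
```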

The information content of authorship order may be different when authors are listed alphabetically (14). Only 7.04% of papers in our sample use alphabetical authorship, consistent with the notion that alphabetical authorship is the exception in the biological and life sciences. Even the observed cases of alphabetical authorship may reflect a contribution-based assignment, where alphabetical order emerges by chance (that is, an author has made the most contributions and also happens to have the name with the earliest letter in the alphabet). Consistent with this idea, table S4 shows that the rate of alphabetical order declines markedly with team size and does not differ from what would be expected if alphabetical order emerged simply by chance from a contribution-based assignment of positions (the number of possible permutations of x different names is x!). For example, 50.58% of articles with two authors use alphabetical authorship, which is not significantly different from the 50% predicted by chance. Models 11 and 12 in table S2 show that papers using alphabetical and non-alphabetical author order show similar differences in the count of contributions between first, middle, and last authors. These regressions are estimated using only articles with fewer than six authors because alphabetical authorship is virtually nonexistent in larger teams (table S4). Together, author order provides similar information on contributions in the biological and life sciences even when authors are listed alphabetically.
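The chance benchmark used in this comparison follows from the permutation count: if positions are assigned by contribution and every ordering of x distinct names is equally likely to result, exactly one of the x! orderings is alphabetical. The sketch below computes that benchmark; the equal-likelihood and distinct-names assumptions are simplifications made here for illustration, and the function name is hypothetical.

```python
from math import factorial


def chance_alphabetical_rate(team_size):
    """Probability that a contribution-based byline is alphabetical by chance.

    Assumes all names are distinct and every ordering is equally likely,
    so exactly one of team_size! permutations is alphabetical.
    """
    if team_size < 1:
        raise ValueError("team_size must be a positive integer")
    return 1 / factorial(team_size)


# Benchmark rates for small teams; for two authors the rate is 50%,
# which is the value the observed 50.58% is compared against.
for n in range(2, 7):
    print(n, f"{chance_alphabetical_rate(n):.4%}")
```

The rapid decline of 1/x! with team size is consistent with the observation that alphabetical order is virtually nonexistent in larger teams.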

Authors can also be designated as “corresponding author.” We find that 32% of corresponding authors were also first authors, 9% were middle authors, and 59% were last authors (table S5). Corresponding authors made an average of 3.47 contributions, significantly (P < 0.01) more than noncorresponding authors (2.28). This higher contribution count largely reflects greater involvement in conceiving the study and in writing the paper (table S6). Being designated as corresponding author is associated with a significantly greater count of contributions even for a given author position, and this effect is most pronounced for corresponding authors who are also middle authors (table S6, models 8 to 10).

Reliability of author order as indicator of contributions

The previous analysis suggests that author position allows useful inferences about author contributions, consistent with common practice. However, these inferences are only probabilistic and will often be wrong. This is most obvious with respect to the types of contributions: Assuming that contribution statements are a reasonable proxy for actual contributions, our observation that 80% of first authors are reported to have conceived the study (table S1) suggests that inferring this contribution from first authorship will be incorrect roughly 20% of the time. The error rate will be lowest when inferring contributions that are typically made by a very large (or very small) share of the authors in a particular position. The error rate will be highest when inferring contributions that are made by roughly half of the authors in a particular position. For example, 49% of middle authors are involved in data analysis, and inferring that a middle author was involved in data analysis will be correct only about half the time. Although this example uses raw sample means, the implied error rates are largely the same if we use predicted probabilities of having made particular contributions from regressions that control for field and other factors (table S7).
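The error-rate reasoning in this paragraph can be written out as a short calculation. The sketch below treats the share of authors in a position who report a contribution as the probability that the inference is correct; it assumes, as the text does, that contribution statements proxy for actual contributions. Both function names are illustrative.

```python
def error_if_assumed_made(share):
    """Error rate when inferring that every author in a position made the contribution."""
    return 1 - share


def error_of_best_guess(share):
    """Error rate when guessing the more common outcome (made versus not made).

    This is largest at share = 0.5 and approaches zero as the share
    approaches 0 or 1.
    """
    return min(share, 1 - share)


# Shares reported in the text (raw sample means).
first_conceived = 0.80   # first authors who conceived the study
middle_analyzed = 0.49   # middle authors involved in data analysis

print(round(error_if_assumed_made(first_conceived), 2))  # 0.2
print(round(error_if_assumed_made(middle_analyzed), 2))  # 0.51
print(round(error_of_best_guess(middle_analyzed), 2))    # 0.49
```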

To explore how reliably author order informs about the breadth of authors’ involvement in the project, fig. S2 shows the distribution of the count of different contributions for first, last, and middle authors in teams of six. We find considerable heterogeneity even for the same author position. For example, although 22.69% of first authors make five or six contributions, 45.44% make four contributions and 31.88% make only three or fewer contributions.

Finally, we examine how reliably author position informs about authors’ contributions relative to each other. We start from two empirical “conventions” observed earlier (Fig. 1 and table S2): First authors typically have broader involvement in the study than last authors, and last authors have broader involvement than middle authors. We then examine how many papers with at least three authors deviate from these conventions. We find that 45.59% of papers deviate from at least one of the two conventions, whereby 15.94% deviate in that first authors have a lower count of contributions than last authors, and 30.32% deviate in that last authors have a lower contribution count than at least one middle author.
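One way to operationalize the two conventions is sketched below. It takes the contribution counts of a paper's authors in byline order and flags a deviation when the first author's count is strictly lower than the last author's, or when the last author's count is strictly lower than that of at least one middle author. Treating ties as consistent with the conventions is an assumption based on the wording above; the function name and the example counts are hypothetical.

```python
def convention_deviations(counts):
    """Flag deviations from the two conventions for one paper.

    counts: contribution counts in byline order (first, middle..., last).
    Ties are not counted as deviations (an assumption of this sketch).
    """
    if len(counts) < 3:
        raise ValueError("need at least three authors (first, middle, last)")
    first, *middle, last = counts
    first_below_last = first < last
    last_below_middle = any(last < m for m in middle)
    return {
        "first_below_last": first_below_last,
        "last_below_middle": last_below_middle,
        "any_deviation": first_below_last or last_below_middle,
    }


# Hypothetical papers, not data from the study.
print(convention_deviations([4, 2, 1, 3]))  # follows both conventions
print(convention_deviations([3, 2, 5, 4]))  # deviates from both
```

Because a single paper can deviate from both conventions at once, the share deviating from at least one convention can be smaller than the sum of the two individual shares.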

Taken together, authorship order provides some information about the underlying number and types of scientists’ contributions. However, the required inferences will often be wrong, suggesting that explicit contribution disclosures provide important additional information. In the next analysis, we examine whether the correspondence between author order and stated contributions differs by team size, possibly reflecting differences in teams’ organization but also the increasing complexity of aggregating different aspects of individuals’ contributions into unidimensional authorship order (fig. S1).

Team size

Figure S3 shows that the count of contributions decreases with team size for first and middle authors but remains largely stable for last authors. This result holds when we control for detailed scientific field and other project attributes (tables S8 and S9). The lower contribution count of first authors in larger teams reflects a lower likelihood of being involved in all of the different activities. For middle authors, the lower count of contributions in larger teams reflects that they are less likely to be involved in conception, analysis, and writing; their likelihood of performing experiments remains stable across team size (table S8).

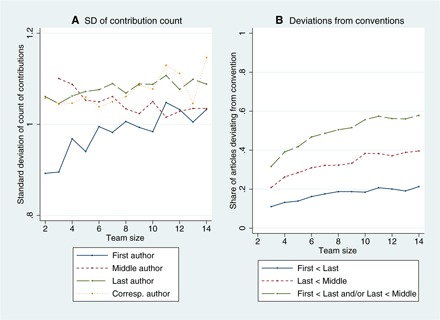

Team size also has implications for the reliability of author order as an indicator of contributions. Figure 2A shows that the SD of the contribution count increases with team size for first authors, suggesting more error when estimating the breadth of first authors’ contributions in larger teams. This measure is more stable across team size for middle and last authors. Figure 2B shows the share of teams deviating from the “convention” that first authors have a higher count of contributions than last authors and last authors have a higher count of contributions than middle authors. This share increases from 31.66% in teams of 3 to 57.87% in teams of 14, largely reflecting an increasing likelihood that one of the middle authors made a broader set of contributions than the last author. Overall, author order is less reliable as an indicator of the breadth of authors’ contributions in larger teams.

Fig. 2. Variation in author contributions by team size.

(A) SD of the count of contributions, by position and team size. (B) Share of articles deviating from conventions regarding count of contributions, by position and team size.

Inclusion as an author

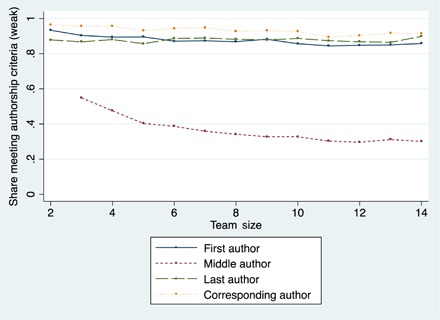

What contributions can be inferred from the fact that a person is listed as an author on the paper at all? The submission instructions of many journals, including Science and PLOS ONE, refer to the authorship requirements established by the International Committee of Medical Journal Editors (ICMJE) (15). The ICMJE was founded in 1978 by editors of leading medical journals to develop guidelines for the conduct, reporting, editing, and publication of scholarly work. It is also intended to establish best practices and ethical standards. According to the ICMJE, authorship requires that an individual fulfills all four of the following criteria: (i) substantial contribution to conception and design, acquisition of data, or analysis and interpretation of data; (ii) drafting the article or revising it critically for important intellectual content; (iii) final approval of the version to be published; and (iv) agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. Although we cannot explore the last two requirements, we can explore whether authorship at least reflects the contributions highlighted in requirements (i) and (ii) (see Materials and Methods for details). Consistent with previous work using smaller samples (6, 12), we find that for a large share of authors (47.66%), authorship does not reflect the contributions stated in the ICMJE guidelines, primarily because they were not involved in writing. This share is highest among middle authors (64.5%) but is nontrivial also among first authors (11.6%) and last authors (12.3%). Moreover, this share is higher in larger teams (Fig. 3, fig. S4, and table S10).

Fig. 3. Share of authors in each position that meet criteria (i) and (ii) of the ICMJE requirements for authorship (in their less strict interpretation, see Materials and Methods for details).
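To make the check against criteria (i) and (ii) concrete, the sketch below maps PLOS ONE contribution categories onto the two criteria. The mapping is an assumption made here for illustration (criterion (i) is met by conceiving, performing experiments, or analyzing data; criterion (ii) is met by writing); the definition actually used in the study is the one given in its Materials and Methods. The category labels and the function name are hypothetical.

```python
# Assumed mapping of contribution categories to ICMJE criterion (i).
# This is an illustrative choice, not the study's documented definition.
CRITERION_I_CATEGORIES = {"conceived", "performed", "analyzed"}
CRITERION_II_CATEGORY = "wrote"


def meets_icmje_i_and_ii(contributions):
    """Return True if an author's stated contributions satisfy both criteria."""
    stated = set(contributions)
    criterion_i = bool(stated & CRITERION_I_CATEGORIES)
    criterion_ii = CRITERION_II_CATEGORY in stated
    return criterion_i and criterion_ii


# Hypothetical authors: one who performed experiments but did not write,
# and one who conceived the study and wrote the paper.
print(meets_icmje_i_and_ii({"performed"}))           # False
print(meets_icmje_i_and_ii({"conceived", "wrote"}))  # True
```

Under this assumed mapping, an author who did not take part in writing fails the check regardless of other contributions, which matches the observation that missing involvement in writing is the primary reason authors do not meet the criteria.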

STUDY 2: RESULTS FROM AUTHOR SURVEY

Study 1 provides insights into the relationship between author order and contribution statements and highlights potential errors when using the former to infer the latter. However, bibliometric data provide little insight into how contribution statements are written, what benefits and challenges scientists see, and how statements might be improved. To gain deeper insights into these issues, we analyze more than 6000 responses from a survey of corresponding authors on papers published in two journals that require contribution disclosures: PLOS ONE and PNAS. Details on the sampling and survey methodology are provided in Materials and Methods.

One part of the survey was designed to gather information on respondents’ opinions about contribution statements, as well as their impression of common practices in their fields. In a second part of the survey, we asked specifically about the paper on which the respondent was a corresponding author, and we included the paper title and publication date in the survey to facilitate respondents’ recall. We first provide descriptive results, supplemented by an econometric analysis that examines the statistical significance of observed differences in a multivariate regression context. We then draw on open-ended responses to provide additional insights into perceived benefits and challenges of contribution statements, as well as potential improvements. We note that all survey responses are from corresponding authors and are thus not necessarily representative of team members in general.

Quantitative Analysis

Perceived informational value of contribution statements

Study 1 suggests that contribution statements provide important information that cannot be inferred from author order. To assess whether this claim is consistent with scientists’ perceptions, we asked respondents “Compared to author order, how much information do you feel contribution statements give to readers about the following,” specifying four aspects: “The particular types of contributions made by a co-author (e.g., author contributed to experiments and writing),” “A co-author’s share of effort toward particular contributions relative to other co-authors (e.g., author did 80% of the writing),” “How important a co-author’s contributions were for the success of the project,” and “The share of ‘credit’ the co-author should get for the paper.” Respondents answered on a three-point scale anchored by “Less information than author order” (coded as 1), “About the same” (2), and “More information than author order” (3).

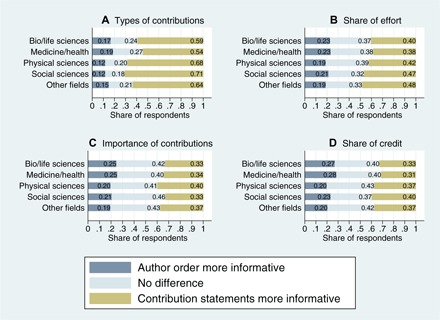

Means greater than two indicate that respondents perceive contribution statements to be more informative with respect to these aspects of contributions (table S11). Consistent with our earlier conjectures, however, the extent of this advantage depends on the particular type of information: Although a large majority of respondents felt that contribution statements provide more information than author order about the types of contributions made (60.71% versus 23.36% who saw no difference and 15.93% who perceived author order to be more informative), only 33.87% of respondents felt that contribution statements provide more information about deserved credit (versus 40.55% who saw no difference and 25.59% who perceived author order to be more informative). Figure 4 shows that these qualitative patterns hold across fields. At the same time, regressions show that perceived informational advantages of contribution statements tend to be higher in the physical and social sciences than in the bio/life sciences, perhaps reflecting that the norms regarding the “meaning” of author positions are less defined in the former fields, making explicit contribution statements relatively more informative (table S12, models 1 to 4).

Fig. 4. Informational advantages of author order and contribution statements.

Ratings with respect to types of contributions (A), share of effort (B), importance of contributions (C), and share of credit (D).
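The three-point coding makes it straightforward to relate the reported response shares to a mean rating. The sketch below recomputes approximate means from the percentages quoted in the text for two of the four aspects; these are derived values for illustration, not the figures reported in table S11, and the variable and function names are hypothetical.

```python
# Response shares from the text, keyed by scale code:
# 1 = less information than author order, 2 = about the same,
# 3 = more information than author order.
types_of_contributions = {1: 0.1593, 2: 0.2336, 3: 0.6071}
share_of_credit = {1: 0.2559, 2: 0.4055, 3: 0.3387}


def mean_rating(shares):
    """Weighted mean of the scale codes.

    Normalizes by the total share because rounded percentages
    may not sum to exactly 1.
    """
    total = sum(shares.values())
    return sum(code * share for code, share in shares.items()) / total


for label, shares in [
    ("types of contributions", types_of_contributions),
    ("share of credit", share_of_credit),
]:
    print(label, round(mean_rating(shares), 2))
```

Both derived means lie above the scale midpoint of 2, with the mean for types of contributions clearly higher than the mean for share of credit, in line with the pattern described in the text.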

In a related question, we asked “Overall, how much information do you feel typical contribution statements provide readers above and beyond the information provided by author order?,” with responses scored on a four-point scale ranging from “No additional information” to “A lot of additional information.” More than 90% of respondents perceived at least some additional information, with roughly 40% seeing considerable or a lot of additional information. The regressions show no significant field differences (table S12, model 5) but significantly higher value perceived by junior compared to senior scientists.

Perceived value of additional detail

Our conceptual discussion and Fig. 4 suggested that although contribution statements provide explicit information about the types of contributions made, they are less informative about individuals’ level of involvement in particular contributions or about the importance of the contributions made. We explored how useful this information would be by asking respondents “In addition to knowing whether a co-author has made a particular contribution at all, how useful would you find knowing ‘What share of each contribution was made by the co-author (e.g., 80% of writing)’ and ‘How important different types of contributions were for the success of the project.’” Respondents rated both items on four-point scales ranging from “Not useful” to “Extremely useful.” Whereas 20.68% of respondents would find information on shares of effort not useful, 46% would find it somewhat useful, 27.46% very useful, and 5.87% extremely useful. The results for additional information on the importance of contributions are quite similar, with 20.32% finding it not useful, 42.79% somewhat useful, 29.53% very useful, and 7.35% extremely useful. The regressions show few field differences, but junior scientists would find both types of information significantly more useful than senior scientists (table S12, models 6 and 7).

Who decides and agrees on contribution statements?

The informational value of contribution statements depends not only on their format but also on the process by which they are decided. Although a large body of previous work has examined the determinants of authorship decisions (6), little is known about how contribution statements are written. Hence, we asked respondents to think specifically about the focal paper on which they were a corresponding author. We then asked “Which co-authors were involved in discussing the final contribution statements?,” with the response options “All co-authors discussed,” “Some but not all co-authors discussed (please specify who),” “No discussion (corresponding author just submitted),” and “I don’t remember.” In a second question, we asked “Which co-authors explicitly approved the final contribution statements (e.g., verbally or by email)?,” providing equivalent response options.

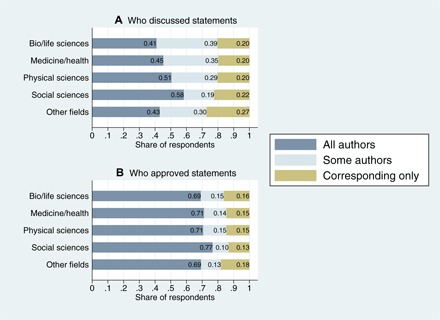

The results suggest that 43.47% of papers had all authors involved in discussing contribution statements, whereas 35.59% of papers had several but not all authors involved (Fig. 5). The open-ended entries suggest that these were primarily first and last authors. In 20.94% of papers, the corresponding author decided on the contribution statements alone. Rates of explicit approval are significantly higher: All authors approved statements on 69.85% of papers, some authors approved on 14.25% of papers, whereas no other team members approved statements on 15.90% of the papers (that is, the corresponding author submitted without others’ explicit approval).

Fig. 5. Process by which contribution statements are made.

Authors who discussed statements (A) and authors who explicitly approved statements (B).

We estimate multinomial logit regressions for these two variables, with “All co-authors” as the omitted category of the dependent variable. These regressions show that compared to the bio/life sciences, papers in the other major fields are significantly more likely to have all authors involved in the discussion (table S13, model 1). However, the involvement in the form of explicit approval differs little between fields (table S13, model 2). We find strong differences by team size: The larger the team, the higher the likelihood that not all team members were involved in discussing and approving the contribution statements.

Importance of contribution statements

A potential concern when interpreting contribution statements is that they do not “matter” as much as authorship and are completed without much thought by corresponding authors just to fulfill journal requirements. Somewhat mitigating this concern, the previous analyses suggest that respondents do see considerable value in contribution statements and that statements reflect discussions between, and approval by, large shares of the team members. To further examine this issue, we also asked respondents “How important was it for you where you appear on the contribution statements?” using a four-point scale ranging from “Not at all important” to “Extremely important” as well as the option “I don’t remember.” Among those respondents who did remember (98.86%), only a small minority (13.87%) indicated that their statements were not at all important to them, 34.29% found them somewhat important, 32.29% found them very important, and 19.54% found them extremely important. Thus, most corresponding authors seem to take contribution statements quite seriously.

Model 3 of table S13 shows that contribution statements were less important for corresponding authors in the physical sciences than for those in the other fields. We also find large differences by status of the respondent: Junior scientists assign significantly higher importance to their contribution statements than senior scientists.

Guest and ghost contributorship

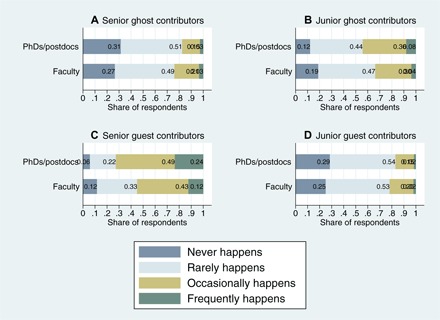

The literature on authorship has highlighted undeserved authorship (“guest authorship”) or unjustified exclusion of individuals who contributed to a project (“ghost authorship”) as important problems (16). To explore how much a similar concern may apply to contribution statements, we asked “In general, how common do you think the following are among teams publishing in PLOS ONE (PNAS)?,” including “Senior authors are not listed with contributions they have made,” “Junior authors are not listed with contributions they have made,” “Senior authors are listed with contributions they have not made,” and “Junior authors are listed with contributions they have not made.” Note that we asked about respondents’ general perceptions of others’ practices in a particular journal rather than about their own practices to reduce social desirability bias (17). Of course, responses do not necessarily reflect the true incidence of certain behaviors but rather respondents’ estimates of these behaviors. Figure 6 shows that “ghost” contributorship is considered quite rare, although it is perceived as less rare for junior contributors.

Fig. 6. Perceived incidence of ghost and guest contributorship.

Incidence of ghost contributorship (authors not listed with contributions they have made) by senior authors (A) and junior authors (B). Incidence of guest contributorship (authors listed with contributions not made) by senior authors (C) and junior authors (D). By seniority of respondent.

Models 8 to 11 in table S12 show that estimates regarding the incidence of “guest” and “ghost” contributorship differ little between fields. Consistent with Fig. 6, however, junior scientists believe that seniors are advantaged (more guest and fewer ghost contributorships), whereas seniors believe that juniors are advantaged. Although we cannot compare these perceptions to actual rates, the considerable differences in junior and senior scientists’ perceptions point toward potential sources of tensions and conflict that deserve study in future work.

Use of contribution statements when evaluating others

We showed above that scientists see considerable informational value in contribution statements. Does this perception translate into a frequent use in addition to, or perhaps even instead of, author order? To examine this question, we asked respondents “Assume you are asked to evaluate a postdoctoral researcher who has co-authored a paper that includes contribution statements. How much weight would you give to: ‘His/her author position (e.g., first, middle, last author)’ and ‘His/her contribution statements.’” The four-point scale for these two items was anchored by “No weight” and “Great weight.” Note that we specified the role of the person to be evaluated (postdoctoral researcher) because criteria may differ depending on who is being evaluated and to ensure consistency across respondents.

Table S11 shows that, on average, respondents would continue to evaluate others primarily based on author position rather than contribution statements; this holds across all fields as well as for junior and senior respondents. When comparing the two ratings for each individual, 45.41% of respondents would give greater weight to author position, 36.91% would give the same weight to both, and 17.68% would give greater weight to contribution statements. This continued emphasis on author order is consistent with our earlier argument that author order conveys information that is not captured in contribution statements, including levels of effort and the importance of contributions. With respect to differences across subsamples, regressions show that respondents in the physical and social sciences would place lower weight on author position than those in the bio/life sciences, whereas physical scientists would place greater weight on contribution statements. Junior scientists would place smaller weight on author order and greater weight on contribution statements than senior scientists (table S12, models 12 and 13).

Comparing responses from PLOS ONE and PNAS authors

Given the different history and status of PNAS and PLOS ONE, we also examine how responses to journal- and article-specific questions differ between respondents from the two journals. Table S11 shows only small differences with respect to the share of papers on which all authors were involved in discussing contribution statements (44% in PLOS ONE versus 42% in PNAS) and in approving statements (68% versus 72%). The importance assigned to contribution statements by the corresponding authors does not differ (table S13, model 3). When asked about the perceived incidence of guest/ghost contributorship in the respective journals, PNAS respondents are somewhat more concerned about ghost contributorship of senior authors than PLOS ONE authors, but there are no differences with respect to the other types of perceived guest/ghost contributorship in the two journals (table S12, models 8 to 11). Together, these comparisons raise our confidence in the results from Study 1: Although Study 1 is based on data from PLOS ONE only, the survey gives no indication that contribution statements play a significantly different role—or are less reliable—in PLOS ONE than in higher status journals, such as PNAS. Nevertheless, future work that directly replicates Study 1 using data from other journals would be highly desirable.

Qualitative insights: Open-ended questions

To supplement the quantitative measures, we also asked respondents two open-ended questions that provide additional qualitative insights. A first question was directed only at respondents who indicated that they would give no or only some weight to contribution statements when evaluating a postdoctoral researcher (see above). This question asked “Why would you not pay more attention to contribution statements?” More than 2000 respondents answered this question. All respondents received a second question at the end of the survey: “Do you have any other comments on this topic that you would like to share? How do you think contribution statements could be improved?” More than 1400 respondents provided additional comments in response to this question. Although a formal analysis of these data is beyond the scope of this paper, we list a number of illustrative responses in tables S14 and S15. We selected responses pertaining to areas or ideas that appeared particularly salient in the body of responses, but we emphasize that the listed responses are not necessarily representative and should not be interpreted in a quantitative way. In addition to revealing fascinating insights into scientists’ experiences with contribution statements, the responses highlight how diverse—and often conflicting—opinions about these statements are.

DISCUSSION

Summary

The bibliometric analysis in Study 1 shows significant relationships between author order and contribution statements, consistent with the view that authorship and author order can allow readers to infer co-authors’ individual contributions. However, author order and contribution statements are not always aligned, suggesting that they also provide different—and complementary—information. The key advantage of contribution disclosures is that they provide more information about the breadth and types of authors’ contributions, reducing the risk of erroneous inferences regarding these aspects based on author order, which is especially useful for middle authors and in larger teams. Yet, contribution statements provide little information about two other important aspects. First, they do not inform about an author’s level of involvement in particular contributions, which is particularly problematic when several authors are listed with the same contributions. Second, they provide little information on the importance of different contributions to project success. Differences in the importance of particular contributions across projects may explain, for example, why some teams assign prominent author positions to individuals who made primarily empirical contributions, whereas others assign these positions to members whose contributions were conceptual.

Survey responses from corresponding authors in Study 2 provide further insights into the informational value of contribution statements: A large majority of respondents indicate that contribution statements provide them with information above and beyond that provided by authorship order. Consistent with our conceptual model, however, contribution statements are considered to be more informative about types of contributions made than about shares of effort or the overall credit an author should receive. The survey also provides insights into the process by which contribution statements are made, suggesting that many respondents, especially those who are more junior, care strongly about where they appear in contribution statements and that participation in discussing and approving these statements is broad. Despite the high perceived informational value, however, most respondents pay more attention to author order than to contribution statements when evaluating others. Reasons include, among others, concerns about biases due to social influence and lack of attention, greater difficulty of accessing and processing contribution statements compared to authorship information, as well as the lack of detail and the inability of standardized contribution statements to reflect the complexity of teamwork.

Future research

Before we turn to implications of the findings, we highlight three fundamental issues that emerged from our analyses and that suggest important avenues for future research. First, contribution statements are based on the premise that scientific projects involve different types of tasks and activities, and that team members’ contributions can, at least to some extent, be differentiated and assessed relative to each other. However, there are strong complementarities between contributions, and even seemingly minor aspects may ultimately be essential for project success. As illustrated in the open responses to our survey, some scientists believe that efforts to differentiate author contributions are therefore futile and may even be detrimental to collaborative efforts. This tension suggests the need for future conceptual and empirical work on the division of labor in teams, the degree to which contributions can and should be modularized, and on how we can compare the value of contributions that critically depend upon each other. This work should also theorize and empirically evaluate potential differences across fields. Research in other domains such as organizational theory, the economics of bargaining, and the sociology of teams may be useful for studies on these issues (18–21).

Second, it is clear that authorship and contribution disclosures not only reflect objective contributions but also are shaped by important social dynamics. Although a considerable body of work has examined these issues for authorship (6, 7, 22), our understanding of the role of social factors in shaping contribution statements remains limited. Similarly, future work is needed on the social dynamics that influence the adoption and use of contribution statements by journals and the broader scientific community. Descriptive work such as our survey will be an important step, but future work may also usefully draw on related literature on the role of status and social norms, cognitive biases in estimating one’s own contributions, or the diffusion of innovations (23–26). Insights from these literatures may also help understand how social biases can be reduced, how contribution statements can be made more informative, and how the adoption of improved contribution disclosures can be accelerated.

Third, although contribution disclosures report on the past, the presence and design of these statements are likely to affect scientists’ future behaviors. For example, contribution disclosures not only convey credit for work well done but also assign responsibility for errors and potential misconduct. Hence, they may encourage greater effort to avoid mistakes and reduce the incentives for misconduct (8). However, explicit contribution statements may also lead scientists to crowd into activities that are perceived to be valued more highly while avoiding activities that are considered less important or expose them to greater risks of errors. Understanding any such longer-term effects seems particularly important to gain a more holistic view of the benefits and challenges of contribution statements and of opportunities for improvements. Economic frameworks can be used to study these issues, but research in other areas, such as the psychology of accountability and blame, may also provide useful guidance (27–30).

Implications and recommendations

Notwithstanding the need for future research on several fundamental issues, our results suggest opportunities for improvements of contribution disclosures as well as the need for discussion around specific design parameters. First, the results from both studies point to merits of a contributorship system that discloses information not only on the types of contributions made but also on the level of authors’ involvement in each contribution. Science already asks what share of a particular contribution was made by each co-author (ranging from 0 to 100%), although the resulting information is not publicly disclosed (Table 1). Of course, reporting this detailed information requires additional effort and may expose disagreements among co-authors, and reported contributions may not always be accurate (see table S15). Without this detail, however, evaluators are forced to infer important aspects of the value of authors’ contributions from author order, despite the considerable risk of errors. As noted in some open-ended responses to the survey, detailed contribution statements may also encourage more explicit discussions about team members’ contributions, potentially leading to more accurate assessments, more transparency, and less influence of implicit assumptions and social norms that hamper the traditional authorship system (8, 13). This may ultimately increase the likelihood that all individual team members—not just first and last authors—receive adequate recognition for their respective contributions.

Second, public discussions are needed on whether and how contribution disclosures can be standardized. Some journals currently ask authors to use predefined categories of contributions, but different journals use different categories. Other journals ask for open-ended statements (Table 1). A standardized approach may increase the consistency of disclosures and facilitate comparisons across journals (13, 31). It may also allow aggregation and the development of contribution-based indices to complement authorship-based indices (32). At the same time, a standardized approach would have to provide enough flexibility to accommodate heterogeneity across projects and fields. It should also anticipate changes to scientific activity, such as growing team size and specialization, automation and commoditization of certain research activities, as well as broader participation by nonprofessional scientists (1, 33, 34).

Finally, several of our respondents indicated that—in their role as evaluators—they paid little attention to contribution statements because these statements are not provided in all journals, are difficult to find, and are not aggregated in mechanisms such as resumés or publication databases. Hence, editors, funding agencies, administrators, and database providers should consider how the visibility of contribution statements can be increased and users’ costs of accessing and processing this new information can be reduced. Of course, readers may also find it easier to access and process contribution information as their experience with this relatively new mechanism accumulates. However, lower costs of access and information processing may not increase the use of contribution statements significantly unless some of the more fundamental hurdles such as a perceived lack of detail or concerns about accuracy can be addressed.

MATERIALS AND METHODS: STUDY 1

Data

We analyzed data from articles published in PLOS ONE. This large Open Access peer-reviewed journal was started in 2006 by the Public Library of Science. We obtained data for 14,602 research articles published from February 2007 to September 2011 by downloading article xml files available on the PLOS ONE website. Because we are interested in the relationships between contributions and author order, we dropped 233 single-authored papers, 169 papers that did not disclose the contributions of one or more authors, 54 papers that did not use the standard classification of contributions or listed only “other contributions,” and 61 papers that did not list any authors as having “written” (because each paper needs to be written, the contribution statements of these papers are likely incomplete). We excluded papers with more than 14 authors (the 95th percentile) because the organization of knowledge production may be qualitatively different in big science projects (2) and because small cell sizes make analyses of very large teams difficult. Because authorship norms may differ across fields (5, 14), we also excluded a small share of papers that do not have at least one field categorization (see below) in the biological or life sciences. Although Study 1 focuses on data from the biological and life sciences, we examined potential field differences using a different data set in Study 2 below. Overall, Study 1 analyzes data from 12,772 articles that list 79,776 authors.

Measures

We used a number of individual-level (i_) and team/article-level (t_) variables. Summary statistics are reported in table S1.

Measures of contributions

When submitting a manuscript to PLOS ONE, authors state the particular contributions made by each individual author. The journal offers a template with five predefined types of contributions: (i) conceived and designed the study (i_conceived), (ii) performed the experiments (i_performed), (iii) analyzed the data (i_analyzed), (iv) contributed reagents/materials/analysis tools (i_materials), and (v) wrote the paper (i_wrote). An open-text field “other” allows authors to list additional contributions that may not fall in the five predefined types. We created a dummy variable, indicating whether a particular individual was listed as having made some other contribution (i_other). Other contributions were manually checked and recoded if they fell in one of the five predefined categories. We also created the variable i_countcontributions, which captures the total count of contributions for each author and thus the breadth of his or her involvement in the project.
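The coding of the individual-level contribution measures can be illustrated with a minimal sketch. Only the i_ variable names come from the text; the function name `code_author`, the input format (a list of contribution labels per author), and the choice to include "other" in the count are assumptions made for illustration and are not the authors' actual code.

```python
# Hypothetical helper: turns one author's listed contributions into the
# i_* dummies and the i_countcontributions breadth measure described above.
CONTRIBUTION_TYPES = ("conceived", "performed", "analyzed",
                      "materials", "wrote", "other")


def code_author(listed):
    """Return a dict of contribution dummies plus their total count."""
    listed = set(listed)
    unknown = listed - set(CONTRIBUTION_TYPES)
    if unknown:
        # Free-text entries would first need manual recoding into a category.
        raise ValueError(f"unrecognized contribution labels: {sorted(unknown)}")
    row = {f"i_{c}": int(c in listed) for c in CONTRIBUTION_TYPES}
    # Assumption: the count covers all six types, including "other".
    row["i_countcontributions"] = sum(row.values())
    return row


example = code_author(["conceived", "analyzed", "wrote"])
print(example["i_countcontributions"])  # 3
```

Keeping the dummies and the count in one record mirrors how the measures are used later: the dummies describe the type of involvement, and the count describes its breadth.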

We recognize that listed contributions are imperfect measures of the activities performed by project participants. In particular, they do not capture work done by any “ghost authors,” individuals who made significant contributions but are not listed as authors (7, 22). This limitation also applies to authorship order and thus should not affect our comparisons between the two. More importantly, the listing of author contributions may reflect not only objective contributions but also a social process of negotiation among team members, with more powerful or accomplished team members potentially negotiating to be listed as having made contributions they did not actually make (there may also be reasons to inflate the contributions of junior members, although Fig. 6 suggests that this is less common). Assuming that in the biological and life sciences, senior authors tend to be the last authors on papers (table S1), inflated contribution statements for senior authors may mean that differences in the actual contributions of first and last authors are even greater than estimated in our analyses (whereas differences in the actual contributions of last and middle authors may be smaller). These biases should be less problematic for our analyses of errors when interpreting author order because these analyses primarily focus on the distribution of contributions for a given author position (for example, Fig. 2). Similarly, they should not have a significant impact on our analyses of the relationships between contributions and team size. Nevertheless, a clearer understanding of the potential role of social factors in shaping contribution statements will be critical for future research using these statements and for their actual use in the scientific community. Although Study 2 suggests that some of our respondents are concerned about social influences, it also shows that contribution statements are generally perceived to be quite informative about actual contributions. We seek to partly address social factors through control variables as well as additional analyses reported in the Supplementary Materials.

Despite their limitations, the contribution measures have key advantages over available alternatives. Most importantly, they allow insights into a large sample of projects, complementing previous qualitative work using small numbers of cases (23). By using predefined categories, we obtained measures that are easily compared across teams while relying on the scientists themselves (rather than less knowledgeable coders) to decide which categories best fit the contributions made by the various team members. Finally, although information about author contributions can also be obtained through surveys distributed to individual authors, individuals may overestimate their contributions to a team effort (25). The contributions listed on published papers should be less affected by these biases to the extent that they reflect a collective assessment by team members. Study 2 suggests that contribution statements tend to be collective decisions and approved by all authors on the majority of papers.

Author position

Depending on the order of authorship, each author is coded as first, last, or middle author. Because we analyzed papers with 2 to 14 authors, all papers have a first author and a last author, and the number of middle authors per paper ranges from 0 to 12.
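A short sketch of this position coding, assuming the byline is available as an ordered list; the function name `author_positions` is hypothetical.

```python
def author_positions(n_authors):
    """Label each byline slot as 'first', 'middle', or 'last'."""
    if n_authors < 2:
        # Single-authored papers were dropped from the sample.
        raise ValueError("position coding requires at least two authors")
    return ["first"] + ["middle"] * (n_authors - 2) + ["last"]


print(author_positions(2))                    # ['first', 'last']
print(author_positions(14).count("middle"))   # 12
```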

Corresponding author

We created an indicator variable that takes on the value of one if the author is designated as the corresponding author. Nine percent of papers list more than one corresponding author.

ICMJE criteria fulfilled

We coded two binary variables reflecting the fulfillment of ICMJE authorship criteria (i_icmjefulfilled_weak and i_icmjefulfilled_strong). These variables are explained in more detail below.

Team size

t_teamsize is a count of the number of authors on the paper.

Alphabetical author order

The indicator variable t_alphaorder equals one if the authors are listed in alphabetical order.

Controls

To account for the fact that not all papers list all six types of contributions, we created the variable t_totalactivitieslisted, which indicates how many of the six possible contributions are listed on the paper at all. Each article is classified by the authors using field classifications provided by PLOS ONE, whereby an article can be classified under multiple fields. We used 34 indicator variables to control for these fields of research (for example, f_biochemistry and f_biophysics; see table S1). We also controlled for the paper’s publication date (t_published).
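As an illustration of the article-level control t_totalactivitieslisted, the sketch below counts how many of the six contribution types appear at least once on a paper. The function name and the input format (one list of labels per author) are assumptions for this example only.

```python
SIX_TYPES = {"conceived", "performed", "analyzed", "materials", "wrote", "other"}


def total_activities_listed(paper):
    """Count contribution types listed by at least one author on the paper."""
    listed_anywhere = set()
    for author_contributions in paper:
        listed_anywhere.update(author_contributions)
    return len(listed_anywhere & SIX_TYPES)


paper = [["conceived", "wrote"], ["performed", "analyzed"], ["analyzed", "wrote"]]
print(total_activities_listed(paper))  # 4
```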

Social dynamics may be particularly relevant if all authors are from the same laboratory, potentially giving the laboratory head particularly great power in deciding contribution statements. Hence, we included a dummy variable (t_affiliations_d), indicating whether all authors on the paper share the same affiliation (coded as 0; 25% of the sample) or not (coded as 1). In a robustness check reported in the Supplementary Materials, we also control for the quantity and quality of co-authors’ prior publications.

Statistical analyses

Author order and contributions

Figure 1 visualizes the relationships between author position and contributions for teams of six co-authors. For each author position, we computed the share of individuals who made a particular type of contribution and visualized the result by stacking the shares for all contribution types. For example, 94% of first authors were involved in analyzing data, 86% were involved in performing experiments, 88% were involved in writing the paper, 80% were involved in conceiving the study, 28% supplied materials, and 5% made other contributions. The total height of the bar is 381%, which means that the average first author made 3.81 different contributions.
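The link between the stacked bar height and the average contribution count follows from the fact that each author's count is the sum of six 0/1 indicators, so the mean count equals the sum of the six shares. The arithmetic for first authors in six-author teams can be checked directly; the variable names are illustrative.

```python
# Shares (in percent) of first authors with each contribution, as reported above.
first_author_shares = {
    "analyzed": 94,
    "performed": 86,
    "wrote": 88,
    "conceived": 80,
    "materials": 28,
    "other": 5,
}

bar_height = sum(first_author_shares.values())   # total height of the stacked bar
mean_contributions = bar_height / 100            # average count per first author

print(bar_height)          # 381
print(mean_contributions)  # 3.81
```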

In table S2, we examined the correspondence between author position and contributions using regression analysis on the full sample. SEs are clustered at the level of the article. All regressions include a number of control variables, such as team size and detailed field fixed effects. Note that these and subsequent regressions examine differences in the contributions made by authors listed in different positions and are purely correlational in nature. The objective is to explore the degree to which author position allows observers to infer or “predict” the number or types of authors’ contributions. These regressions are not designed to examine the causal nature of any observed relationships; in particular, we do not seek to determine whether particular contributions “cause” individuals to be placed in particular positions on the byline.

We also explored to what extent author order informs about certain combinations of contributions. In a first step, we performed a factor analysis (using promax rotation) to examine which contributions tend to co-occur as sets. This approach shows two factors. The contributions that clearly load on factor one are conceived (rotated factor loading, 0.81), wrote (0.85), and—less strongly—analyzed (0.57). Factor two consists of performed (0.79) and analyzed (0.37). In a second step, we estimated three additional regression models using these common combinations of contributions as dependent variables (table S2, models 8 to 10). Model 8 shows whether an author conceived&wrote (the dependent variable is one for authors who have made at least these two contributions and is zero for authors without this combination of contributions), model 9 uses conceived&wrote&analyzed, and model 10 uses performed&analyzed. Last authorship is most strongly associated with conceived&wrote, whereas first authorship is most strongly associated with conceived&wrote&analyzed. The latter finding is consistent with our earlier observation that first authors typically have the highest count of contributions (table S2, model 1).
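The dependent variables for models 8 to 10 can be sketched as subset checks: an author scores one on a combination if all of its component contributions are listed, regardless of any additional contributions. The dictionary and function names below are hypothetical.

```python
COMBINATIONS = {
    "conceived&wrote": {"conceived", "wrote"},
    "conceived&wrote&analyzed": {"conceived", "wrote", "analyzed"},
    "performed&analyzed": {"performed", "analyzed"},
}


def combination_dummies(listed):
    """Return 1 for each combination whose contributions are all listed."""
    listed = set(listed)
    return {name: int(required <= listed)
            for name, required in COMBINATIONS.items()}


print(combination_dummies(["conceived", "wrote", "materials"]))
# {'conceived&wrote': 1, 'conceived&wrote&analyzed': 0, 'performed&analyzed': 0}
```

Because the checks are "at least these contributions", an author who scores one on conceived&wrote&analyzed also scores one on conceived&wrote.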

Corresponding authors

Table S5 shows the relationships between author position and corresponding author status. Corresponding authors are more likely to also be last authors (59%) or first authors (32%) than middle authors (9%).

Regressions reported in table S6 examine how corresponding authorship is related to the count of contributions (model 1) and to the likelihood that an author made particular contributions (models 2 to 7). Even controlling for author order (first, middle, and last), corresponding authors are involved in a broader range of activities, particularly conceptual activities and writing. Models 8 to 10 show that the additional contribution count for corresponding authors is particularly large among middle authors (who are generally less likely to be corresponding authors; see table S5).

Deviations from conventions

We examined how reliably author position informs about authors’ contributions relative to each other. We started from two empirical conventions observed in table S2: First authors tend to have broader involvement in the project (higher contribution count) than last authors, and last authors have broader involvement than middle authors. We then examined how many papers with at least three authors deviate from these conventions in that first authors have a lower contribution count than last authors or that last authors have a lower contribution count than at least one of the middle authors. Results are discussed in the main text.
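A minimal sketch of the two deviation checks, assuming each paper is represented by its authors' contribution counts in byline order; the function name is hypothetical and ties are not treated as deviations, following the "lower than" wording above.

```python
def deviates_from_conventions(counts):
    """Check one paper against the two conventions.

    counts: contribution counts in byline order (at least three authors).
    Returns (first_below_last, last_below_some_middle).
    """
    if len(counts) < 3:
        raise ValueError("the checks are defined for papers with >= 3 authors")
    first, *middle, last = counts
    first_below_last = first < last
    last_below_some_middle = last < max(middle)
    return first_below_last, last_below_some_middle


print(deviates_from_conventions([4, 1, 2, 3]))  # (False, False)
print(deviates_from_conventions([2, 1, 3]))     # (True, False)
print(deviates_from_conventions([4, 3, 2]))     # (False, True)
```

Averaging each flag over papers of a given team size would give the kind of deviation shares plotted against team size.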

Contributions and team size

We regressed the count of contributions and individual contribution measures on a series of team size dummies as well as article controls. These regressions were estimated separately for first, middle, last, and corresponding authors (tables S8 and S9). We see that authors in larger teams tend to have a lower count of contributions, consistent with increasing specialization in larger teams. Similarly, the likelihood that first, middle, and last authors have made particular contributions also depends on the size of the team.

Reliability of inferences by team size

Figure 2A plots for each author position the SD of individuals’ count of contributions against team size, showing an increase with team size especially for first authors. Figure 2B shows the share of papers deviating from the conventions that first authors have a higher contribution count than last authors and last authors have a higher contribution count than middle authors by team size. The share of papers deviating from the conventions increases with team size. Both panels highlight that inferences about the breadth of authors’ contributions based on author position will have a higher error rate in larger teams.

Fulfillment of ICMJE authorship criteria

According to the ICMJE, authorship requires that an individual fulfill all four of the following criteria: (i) substantial contribution to conception and design, acquisition of data, or analysis and interpretation of data; (ii) drafting the article or revising it critically for important intellectual content; (iii) final approval of the version to be published; and (iv) agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. Because criteria (iii) and (iv) are not observed in the data, we focused on criteria (i) and (ii).

On the basis of each author’s stated contributions, we coded two binary variables indicating whether the ICMJE criteria for authorship are fulfilled. The first variable, i_icmjefulfilled_strong, uses a strict interpretation and is coded as 1 if an author was involved in writing the paper and at least one of the following: conceived, performed, analyzed. The second variable, i_icmjefulfilled_weak, is coded as 1 if an author was involved in writing the paper and at least one of the following: conceived, performed, analyzed, materials. It is also coded as 1 if an author is listed with an “other” contribution because this may include aspects that satisfy the ICMJE criteria. The share of authors fulfilling the requirements in the strong interpretation is 44% and that in the weak interpretation is 52%.
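The two coding rules can be expressed compactly as follows. This is a sketch of the rules as stated in the text, not the authors' code; the function name `icmje_flags` and the label-list input are assumptions.

```python
def icmje_flags(listed):
    """Code the strong and weak ICMJE indicators from listed contributions."""
    listed = set(listed)
    wrote = "wrote" in listed
    # Strong: writing plus at least one of conceived, performed, analyzed.
    strong = wrote and bool(listed & {"conceived", "performed", "analyzed"})
    # Weak: writing plus at least one of those three or materials,
    # or any "other" contribution.
    weak = (wrote and bool(listed & {"conceived", "performed",
                                     "analyzed", "materials"})) \
        or "other" in listed
    return {"i_icmjefulfilled_strong": int(strong),
            "i_icmjefulfilled_weak": int(weak)}


print(icmje_flags(["wrote", "materials"]))
# {'i_icmjefulfilled_strong': 0, 'i_icmjefulfilled_weak': 1}
```

By construction, every author who satisfies the strong rule also satisfies the weak rule, which is consistent with the larger share reported for the weak interpretation.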

Figure 3 shows the share of authors in each position who fulfill the ICMJE criteria using the weak (that is, more permissive) interpretation by team size. Table S10 shows regressions of the two variables on author position and team size.

MATERIALS AND METHODS: STUDY 2

Sample and survey development

We surveyed corresponding authors on recent papers in PLOS ONE and PNAS. PNAS provides a useful complement to PLOS ONE because it is a traditional journal (started in 1915) that consistently publishes high-impact work and ranks in the top 5 of interdisciplinary sciences journals (Table 1). Moreover, PNAS publishes research across a broader range of fields than PLOS ONE, allowing us to explore potential differences across fields. Unlike Science or Nature, PNAS makes article and author information publicly available, allowing us to use this journal in the current study. Because PNAS does not use standardized contribution statements, we were not able to replicate Study 1 using PNAS data. The survey was approved by the Georgia Institute of Technology Institutional Review Board (IRB #H17208).

To obtain the initial sample of PLOS ONE authors, we scraped the PLOS ONE website and downloaded article information and corresponding author names and email addresses for all articles published between January 2016 and April 2017. After removing duplicate contacts, we randomly selected 17,000 of these corresponding authors for our survey. For PNAS (which publishes fewer papers than PLOS ONE), we collected contact information for papers published between January 2014 and April 2017 and randomly selected 10,000 corresponding authors for this study.

We pretested the survey in direct interactions with individual scientists and revised it according to their feedback. We then piloted the survey with 4000 of the PLOS ONE corresponding authors. The pilot led to the addition and removal of some questions, but the pilot survey was otherwise identical to the main survey; we used the combined data where possible.

We invited the corresponding authors to participate in an online survey implemented in the Qualtrics software suite between May and July 2017. Respondents received a personalized invitation and up to three reminders and were offered entry into a drawing for Amazon gift certificates. We received 3980 usable responses from PLOS ONE authors and 2448 from PNAS authors, corresponding to response rates of 23.41% and 24.48%, respectively (not adjusted for undeliverable emails). These response rates are comparable to those of other recent online surveys (35). To analyze nonresponse, we examined the relationships between response status and key variables coded from the original articles (see the next section). Compared to authors on articles in the biological/life sciences, social scientists and authors in “other” fields had significantly higher response rates. Corresponding authors from smaller teams were more likely to respond than those from larger teams. The response rate was lower for authors on older articles, likely because contact information on older articles is more likely to be outdated. Corresponding authors who were first authors on the focal paper were significantly more likely to respond than those who were second or last authors. Finally, PNAS authors were more likely to respond than PLOS ONE authors. To address these differences in response rates, we report descriptive statistics separately by field and author status in table S11, and we additionally estimated regression models with the relevant control variables.

For this study, we dropped responses from corresponding authors on single-authored papers (n = 64) and on papers with more than 14 authors (n = 362), leaving a final sample of 6002 responses. The sample size for some questions is smaller because not all respondents answered each question, and the respondents to the pilot did not receive some of the questions included in the final survey. Table S11 shows descriptive statistics for all variables for the full sample, as well as by major field, by junior versus senior status of the respondent, and by journal.
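The sample arithmetic reported in this subsection can be checked with a short sketch. All counts come from the text; the variable names are ours and purely illustrative.

```python
# Invited corresponding authors and usable responses, as reported in the text.
invited = {"PLOS ONE": 17_000, "PNAS": 10_000}
usable = {"PLOS ONE": 3_980, "PNAS": 2_448}

# Response rates in percent, not adjusted for undeliverable emails.
response_rate = {
    journal: round(100 * usable[journal] / invited[journal], 2)
    for journal in invited
}

# Exclusions applied before analysis.
single_authored = 64      # responses on single-authored papers
more_than_14_authors = 362  # responses on papers with more than 14 authors

total_usable = sum(usable.values())
final_sample = total_usable - single_authored - more_than_14_authors

print(response_rate)
print(total_usable, final_sample)
```

The computed rates and final sample size match the figures stated above (23.41%, 24.48%, and 6002 responses).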

Measures (if not discussed in the results section)

Field

PNAS papers list up to two field classifications using the major fields of biological sciences, physical sciences, and social sciences as well as more detailed subfields within. For our analyses, we classified papers using the first listed broad field. More than 85% of PNAS respondents were on papers that were classified using only one of the broad field classifications. PLOS ONE papers include a list of very specific “subject areas.” Using a classification tree available on the PLOS ONE website, we related these subject areas back to their major “root” fields, including biological/life sciences, medical/health sciences, physical sciences, social sciences, as well as “other” fields (for example, earth sciences, engineering and technology, and research methods). For this study, we used the major root field of the first listed subject area.

Junior versus senior status

In the module that asked respondents about the particular paper from which their contact information was obtained, we asked “At the time of the publication of this paper (date of publication), which of the following best describes your position?,” with options including “PhD or undergraduate student,” “Postdoc,” “Faculty member but not lab head,” “Faculty member and lab head,” and “Other (please specify).” For some comparisons, we collapsed PhDs and Postdocs into the category “Junior scientist” and both types of faculty members into “Senior scientist.”
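A minimal sketch of this recoding is shown below. The option strings are taken from the survey wording above; the function name and the treatment of “Other” responses (left uncoded here) are assumptions, because the text does not say how those responses were handled.

```python
from typing import Optional

# Survey options grouped as described in the text.
JUNIOR_OPTIONS = {"PhD or undergraduate student", "Postdoc"}
SENIOR_OPTIONS = {"Faculty member but not lab head", "Faculty member and lab head"}


def seniority(position: str) -> Optional[str]:
    """Collapse the survey position into junior versus senior status.

    Returns None for "Other (please specify)" or any unrecognized value;
    this handling is an assumption, not something stated in the source.
    """
    if position in JUNIOR_OPTIONS:
        return "Junior scientist"
    if position in SENIOR_OPTIONS:
        return "Senior scientist"
    return None
```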

Team size

Team size is the number of authors listed on the focal publication.

Article age

Article age is computed as the difference between the publication date of the youngest article included in the data set (published on 12 April 2017) and the publication date of the focal article, in days.
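The definition above amounts to a simple date difference, sketched here. The reference date is the one given in the text; the function and constant names are illustrative.

```python
from datetime import date

# Publication date of the youngest article in the data set (from the text).
REFERENCE_DATE = date(2017, 4, 12)


def article_age_days(published: date) -> int:
    """Article age in days relative to the youngest article in the data set."""
    return (REFERENCE_DATE - published).days
```

Under this definition, the youngest article has an age of 0, and an article published on 12 April 2016 has an age of 365 days.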

Remember this paper

In the module that asked respondents about the particular paper from which their contact information was obtained, we asked “How well do you remember your work on this paper?,” with options including “Not at all,” “Somewhat,” “Quite well,” and “Very well.”

Regression analyses

Our regressions include full sets of controls, as shown in tables S12 and S13. Models were estimated using either ordered logit or multinomial logit, as indicated in the table headings. Standard errors are robust to heteroscedasticity.
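For reference, the textbook forms of the two model types are given below. The notation (outcome y_i, covariate vector x_i, coefficients β, cut points κ_j, and the numbers of categories J and K) is ours and is assumed for illustration; the text names the model types but does not state the specification beyond the controls shown in the tables.

```latex
% Ordered logit: cumulative probability of responding in category j or below
\Pr(y_i \le j \mid x_i) = \Lambda\!\left(\kappa_j - x_i^{\top}\beta\right),
\quad j = 1, \dots, J-1,
\qquad \Lambda(z) = \frac{1}{1 + e^{-z}}

% Multinomial logit: probability of choosing category k, with category 1 as the base
\Pr(y_i = k \mid x_i) =
\frac{\exp\!\left(x_i^{\top}\beta_k\right)}{\sum_{m=1}^{K} \exp\!\left(x_i^{\top}\beta_m\right)},
\qquad \beta_1 = 0
```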

Acknowledgments

Funding: The authors acknowledge that they received no external funding in support of this research. Author contributions: Conceived the study: H.S. (50%) and C.H. (50%); collected PLOS ONE article data: H.S. (100%); collected Scopus data on authors’ previous publications: C.H. (100%); analyzed PLOS ONE data: H.S. (50%) and C.H. (50%); developed and implemented survey: H.S. (70%) and C.H. (30%); analyzed survey data: H.S. (100%); wrote the paper: H.S. (70%) and C.H. (30%). Competing interests: The authors declare that they have no competing interests. Data and materials availability: The data needed to evaluate the conclusions in the paper are presented in the paper and the Supplementary Materials. IRB protocol required participant confidentiality and nondisclosure of individual-level data. Scopus data can be obtained under the same conditions and as described by authors for this study. Interested readers should direct questions regarding the data to the corresponding author.

SUPPLEMENTARY MATERIALS

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/3/11/e1700404/DC1

Supplementary Text

fig. S1. Team members and their respective contributions (schematic).

fig. S2. Distribution of the count of contributions by position (teams of six).

fig. S3. Count of contributions by team size.

fig. S4. Share of authors who fulfill ICMJE authorship criteria.

table S1. Summary statistics for Study 1.

table S2. Authorship positions and contributions.

table S3. Authorship and contributions for teams with 2, 6, and 10 authors.

table S4. Incidence of alphabetical authorship.

table S5. Authorship position and corresponding author status.

table S6. Corresponding author status and contributions.

table S7. Predicted likelihood of particular contributions and predicted contribution counts.

table S8. Author contributions by position and team size.

table S9. Types of contributions by position and team size.

table S10. ICMJE authorship criteria fulfilled by position and team size.

table S11. Summary statistics for Study 2.

table S12. Regression analyses of survey responses on general opinions regarding contribution statements.

table S13. Regression analyses of survey responses on specific articles.

table S14. Illustrative responses to the question “Why would you not pay more attention to contribution statements?”

table S15. Illustrative responses to the question “Do you have any other comments on this topic that you would like to share? How do you think contribution statements could be improved?”

table S16. Authorship positions and contributions controlling for quantity and quality of previous publications.

table S17. Authorship positions and contributions using data from papers in the top 10% of article impact (citations).

REFERENCES AND NOTES

1. Wuchty S., Jones B. F., Uzzi B., The increasing dominance of teams in the production of knowledge. Science 316, 1036–1039 (2007).

2. P. Stephan, How Economics Shapes Science (Harvard University Press, 2012).

3. Fiore S. M., Interdisciplinarity as teamwork: How the science of teams can inform team science. Small Group Res. 39, 251–277 (2008).

4. Weinberg B. A., Owen-Smith J., Rosen R. F., Schwarz L., Allen B. M., Weiss R. E., Lane J., Science funding and short-term economic activity. Science 344, 41–43 (2014).

5. Maciejovsky B., Budescu D. V., Ariely D., The researcher as a consumer of scientific publications: How do name-ordering conventions affect inferences about contribution credits? Market. Sci. 28, 589–598 (2009).

6. Marušić A., Bošnjak L., Jerončić A., A systematic review of research on the meaning, ethics and practices of authorship across scholarly disciplines. PLOS ONE 6, e23477 (2011).

7. Haeussler C., Sauermann H., Credit where credit is due? The impact of project contributions and social factors on authorship and inventorship. Res. Policy 42, 688–703 (2013).

8. Rennie D., Yank V., Emanuel L., When authorship fails: A proposal to make contributors accountable. JAMA 278, 579–585 (1997).

9. Bates T., Anić A., Marušić M., Marušić A., Authorship criteria and disclosure of contributions: Comparison of 3 general medical journals with different author contribution forms. JAMA 292, 86–88 (2004).

10. M. Biagioli, in Scientific Authorship—Credit and Intellectual Property in Science, M. Biagioli, P. Galison, Eds. (Routledge, 2003), pp. 253–279.

11. Bohannon J., Who’s afraid of peer review? Science 342, 60–65 (2013).

12. Yank V., Rennie D., Disclosure of researcher contributions: A study of original research articles in The Lancet. Ann. Intern. Med. 130, 661–670 (1999).

13. Allen L., Scott J., Brand A., Hlava M., Altman M., Publishing: Credit where credit is due. Nature 508, 312–313 (2014).

14. Engers M., Gans J. S., Grant S., King S. P., First-author conditions. J. Polit. Econ. 107, 859–883 (1999).

15. ICMJE, http://www.icmje.org/recommendations/browse/roles-and-responsibilities/defining-the-role-of-authors-and-contributors.html (Archived prior versions: http://www.icmje.org/recommendations/archives/) (2010), vol. 2010.

16. S. Wood, in Heartwire News, https://www.medscape.com/viewarticle/708781 (2009).

17. Moorman R. H., Podsakoff P. M., A meta-analytic review and empirical test of the potential confounding effects of social desirability response sets in organizational behavior research. J. Occup. Organ. Psychol. 65, 131–149 (1992).

18. von Hippel E., Task partitioning: An innovation process variable. Res. Policy 19, 407–418 (1990).

19. Shibayama S., Baba Y., Walsh J. P., Organizational design of university laboratories: Task allocation and lab performance in Japanese bioscience laboratories. Res. Policy 44, 610–622 (2015).

20. Hamilton B. H., Nickerson J. A., Owan H., Team incentives and worker heterogeneity: An empirical analysis of the impact of teams on productivity and participation. J. Polit. Econ. 111, 465–497 (2003).

21. Haeussler C., Jiang L., Thursby J., Thursby M., Specific and general information sharing among competing academic researchers. Res. Policy 43, 465–475 (2014).

22. Lissoni F., Montobbio F., Zirulia L., Inventorship and authorship as attribution rights: An enquiry into the economics of scientific credit. J. Econ. Behav. Organ. 95, 49–69 (2013).

23. Owen-Smith J., Managing laboratory work through skepticism: Processes of evaluation and control. Am. Sociol. Rev. 66, 427–452 (2001).

24. R. K. Merton, The Sociology of Science: Theoretical and Empirical Investigations (University of Chicago Press, 1973).

25. Ross M., Sicoly F., Egocentric biases in availability and attribution. J. Pers. Soc. Psychol. 37, 322–336 (1979).

26. E. M. Rogers, Diffusion of Innovations (Free Press, ed. 5, 2003).

27. Lacetera N., Zirulia L., The economics of scientific misconduct. J. Law Econ. 27, 568–603 (2011).

28. Prendergast C., The provision of incentives in firms. J. Econ. Lit. 37, 7–63 (1999).

29. Lerner J. S., Tetlock P. E., Accounting for the effects of accountability. Psychol. Bull. 125, 255–275 (1999).

30. Alicke M. D., Culpable control and the psychology of blame. Psychol. Bull. 126, 556–574 (2000).

31. Marušić A., Bates T., Anić A., Marušić M., How the structure of contribution disclosure statements affects validity of authorship: A randomized study in a general medical journal. Curr. Med. Res. Opin. 22, 1035–1044 (2006).

32. Hirsch J. E., An index to quantify an individual’s scientific research output. Proc. Natl. Acad. Sci. U.S.A. 102, 16569–16572 (2005).

33. Bonney R., Shirk J. L., Phillips T. B., Wiggins A., Ballard H. L., Miller-Rushing A. J., Parrish J. K., Next steps for citizen science. Science 343, 1436–1437 (2014).

34. Cummings J. N., Kiesler S., Organization theory and the changing nature of science. J. Organ. Des. 3, 1–16 (2014).