Abstract

Background

A better understanding of the optimal “dose” of behavioral interventions to effect change in weight-related outcomes is a critical topic for childhood obesity intervention research. The objective of this review was to quantify the relationship between dose and outcome in behavioral trials targeting childhood obesity to guide future intervention development.

Methods

A systematic review and meta-regression included randomized controlled trials published between 1990 and June 2017 that tested a behavioral intervention for obesity among children 2–18 years old. Searches were conducted among PubMed (Web-based), Cumulative Index to Nursing and Allied Health Literature (EBSCO platform), PsycINFO (Ovid platform) and EMBASE (Ovid Platform). Two coders independently reviewed and abstracted each included study. Dose was extracted as intended intervention duration, number of sessions, and length of sessions. Standardized effect sizes were calculated from change in weight-related outcome (e.g., BMI-Z score).

Results

Of the 258 studies identified, 133 had sufficient data to be included in the meta-regression. Average intended total contact (# sessions x length of sessions) was 27.7 (SD 32.2) hours and average duration was 26.0 (SD 23.4) weeks. When controlling for study covariates, a random-effects meta-regression revealed no significant association between contact hours, intended duration or their interaction and effect size.

Conclusions

This systematic review identified wide variation in the dose of behavioral interventions to prevent and treat pediatric obesity, but was unable to detect a clear relationship between dose and weight-related outcomes. There is insufficient evidence to provide quantitative guidance for future intervention development. One limitation of this review was the limited ability to quantify dose uniformly, owing to a wide range of reporting strategies. Future trials should report dose intended, delivered, and received to facilitate quantitative evaluation of optimal dose.

Trial registrations

The protocol was registered on PROSPERO (Registration #CRD42016036124).

Electronic supplementary material

The online version of this article (10.1186/s12966-017-0615-7) contains supplementary material, which is available to authorized users.

Keywords: Dose, Behavioral intervention, Childhood obesity, Health behavior, Systematic review

Background

In the last several decades there have been a significant number of behavioral interventions designed to support healthy childhood growth and prevent or treat childhood obesity. Systematic reviews that have attempted to draw meaningful conclusions from randomized controlled trials of these interventions have pointed to modestly efficacious results [1–6]. These same reviews highlight a wide range of variability both in the approach taken (e.g., type of intervention, setting, and age group) and in the evaluative methods employed (e.g., strength of trial designs, measurement approaches, and reporting of processes). The difficulty in synthesizing such a voluminous and varied literature leaves the public health community—researchers, clinicians, policy-makers, and patients—without a clear consensus as to the best approach to implementing both efficacious and sustainable solutions to the childhood obesity epidemic.

Establishing the optimum dose of behavioral interventions to prevent and treat childhood obesity is a critical question for addressing the obesity epidemic [7]. Unlike in drug trials, the dose of a behavioral intervention is not easily quantified, and there is a paucity of data on how the dose of a behavioral intervention is related to its outcome. Available literature from behavioral interventions to reduce adult obesity and its associated complications (i.e., diabetes incidence, glycemic control, hypertension, and hyperlipidemia) suggests that there is an association between higher dose and better efficacy [8]. Furthermore, a recent meta-analysis of 20 studies by Janicke et al. found that the dose of comprehensive behavioral family lifestyle interventions in the community or in outpatient clinical settings was associated with their efficacy at supporting healthy childhood growth [9]. With several areas of research supporting the notion that dose is related to efficacy in behavioral interventions, the pediatric research community is left with a critical unanswered question: how much exposure to a behavioral intervention, over what period of time, is necessary to effect sustainable behavior change and reduce childhood obesity?

In the context of behavioral obesity interventions dose can be characterized as a function of the following attributes: intervention duration, the number of sessions, and the length of sessions [10–12]. Duration is the time over which the active components of an intervention are implemented, and it can be measured in days, weeks, months, or years. The number of sessions/contacts with participants occurs over the intervention duration (e.g., one session per week over three months, or 12 sessions). The length of sessions refers to the amount of time each contact lasts between the interventionist and the participant and generally is measured in minutes or hours. These dose parameters collectively determine a cumulative amount of intervention or “dose”. Furthermore, an optimal dose can be defined as either the maximally efficacious dose that is not conditional on patient adherence (intended dose) or the maximally effective (actual received or observed) dose that is conditional on adherence [13].

Developing a clear understanding of how the dose of a behavioral intervention is related to the outcome is critical for both causal inference and for developing and disseminating future interventions that support healthy childhood growth. The purpose of this study is to review the existing literature on behavioral interventions to prevent and treat childhood obesity and to use quantitative methods to better understand how dose is related to outcome. Our aims were 1) to describe the distribution of dose (i.e., duration, number of sessions, and length of sessions) in existing behavioral trials for childhood obesity in a range of settings, and then 2) to provide researchers with guidance, as future interventions are developed and implemented, regarding the minimum dose of a behavioral intervention that would likely be necessary to achieve meaningful and sustainable improvements in childhood obesity. This approach fills the gap in the existing literature by attempting to quantify the “dose” of a behavioral intervention that is needed to effect change in childhood obesity.

Methods

Protocol and registration

We conducted a systematic review of the literature using a pre-specified protocol and in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [14]. The protocol was registered on PROSPERO (Registration #CRD42016036124). The initial literature search was completed in March 2014, with an update in June 2017.

Information sources and search strategy

The full methods of the search strategy used in this systematic review have been previously published, and complete search terms and parameters are available on PROSPERO [11]. Briefly, the PubMed (Web-based), Cumulative Index to Nursing and Allied Health Literature (EBSCO platform), PsycINFO (Ovid platform) and EMBASE (Ovid Platform) databases were searched by a trained health sciences librarian to identify randomized controlled trials (RCTs) or intervention studies on the treatment or prevention of childhood obesity. To search PubMed we used the medical subject headings (MeSH) to define the concepts of obesity, overweight or body mass index; treatment, therapy, or prevention; children, childhood, adolescents or pediatric (under 18 years of age); and RCTs or intervention studies.

To augment this search strategy, bibliographies from selected key systematic reviews were scanned to identify additional publications.

Study selection process

A team of eight coders participated in a two-step selection process. First, the title and abstract of each identified article was independently reviewed by two coders to make initial exclusions. For the remaining articles, two coders independently read the full text to determine final inclusion or exclusion. The lead reviewer adjudicated discrepancies during both steps of the process, involving the larger group of coders when necessary. The initial literature search resulted in 4692 articles, and the updated literature search resulted in 876 additional articles. The updated literature search also identified 10 new articles associated with previously included studies, 6 of which resulted in updating data in the analysis.

Studies were included if they were 1) randomized controlled trials, 2) tested a behavior change intervention to treat or prevent obesity (i.e., focused on healthy behaviors like diet, physical activity, sleep or media use without meeting exclusion criteria below), 3) included children between ages 2 and 18 years old at the time of randomization, 4) had sufficient data to calculate the main analytic variables of dose and a weight-related outcome (e.g., BMI-Z score), and 5) were published in English between January 1990 and June 2017. Studies were excluded if they included 1) an intervention targeted at pregnant women, 2) children who were underweight, 3) children who were hospitalized, in residential overnight camps, or in assisted living, 4) pharmacologic or surgical interventions, 5) prescribed diet or exercise interventions without a behavior change component, or 6) interventions delivered only during the school day or community-wide interventions (where individual dose was not measured). If a trial contained more than one intervention arm, only one was selected for review based on how many of the following features it had: 1) in person or individually delivered, 2) enhanced or multicomponent, (i.e., elements added to the basic intervention being tested), 3) parent and child participants. Because the unit of analysis for this approach is the study, if multiple articles were identified for a single study, then all identified articles were used to extract data for any given study [11].

Data extraction process and elements

Two coders independently reviewed all included studies to determine study characteristics. Several characteristics were collected for each study, including study year, participant age (coded: only 2–5 or 6–11 or both, only 12–14 or 15–18, or both, and all other combinations of 2–5, 6–11, 12–14, and 15–18), intervention mode (coded: in person only, and combination of in person and either phone, printed material, computer/app/video game, email/text, or other), intervention setting (coded: clinic or university only, school or community only, and all other combinations of home, school, clinic, community, university, environmental, or other), intervention format (coded: individual only, and group only, or individual + group), and intervention participants (coded: child only, and parent only, or parent + child, or parent + child + other family). The Delphi checklist was used to measure study quality, which includes nine items that assess various aspects of study quality with a yes or no response [15]. Because several of these criteria were necessarily met by virtue of the inclusion/exclusion criteria, we included only whether studies used an intention-to-treat analysis (coded: not intention to treat, and intention to treat) in our analytic model (described below). Decisions regarding coding of covariates were initially based on theory and revised based on the uniformity of reporting across the included studies.

Data elements

Dose variables

A single coder conducted subsequent extraction of quantitative dose and outcome components with 20% of the studies reviewed by a second coder (>95% concordance). Three components of dose were extracted: duration of intervention, the number of in person sessions, and the length of sessions in hours. The primary exposure variable of total in person contact hours was then calculated from the product of number of sessions and length of sessions. For example, a study with 12 weekly, 60-min sessions over 6 months would have the following parameters: 12 contact hours with a duration of 6 months. Of the 258 studies identified by the systematic review, 193 had complete data on at least one in-person dose component. Because only 28 studies had data on the dose of a phone component and only 40 studies had data on the dose of print materials, these did not factor into the numerical calculation of dose. We included data only on the intended dose and not the dose received, as the vast majority of studies did not report sufficient quantitative measures of dose received. For the 41 studies where it was possible to compare the dose intended with the dose received, the intended-received correlation was 0.95 (p < 0.001). This correlation suggests that the reported dose received was highly related to the reported dose intended, noting the potential bias where studies of lower quality or studies that suffered from lower rates of fidelity to the intended dose may not have reported the dose received as frequently as higher quality studies.
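The primary exposure calculation described above can be sketched in a few lines. This is only an illustration of the arithmetic; the function name `contact_hours` and its parameters are hypothetical and do not come from the study's analysis code.

```python
def contact_hours(n_sessions: int, session_minutes: float) -> float:
    """Intended in-person contact hours: number of sessions x session length.

    Hypothetical helper; session length is given in minutes and converted
    to hours before multiplying.
    """
    return n_sessions * (session_minutes / 60.0)


# The worked example from the text: 12 weekly, 60-minute sessions.
example_hours = contact_hours(n_sessions=12, session_minutes=60)
print(example_hours)
```

Duration is tracked separately from contact hours, so the same 12 contact hours could be spread over 3 months or 6 months without changing this quantity.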

BMI outcome variables

Because dose is typically only delivered to the intervention participants, the primary effect size was derived from change in weight-related outcome in the intervention group only. In studies where multiple follow-up times were reported, we used the first follow-up with complete data. Furthermore, there was significant variability in control or comparator arms in these studies, making a quantitative comparison between the primary intervention and comparator infeasible. Because different types of outcomes were reported in the literature, all weight-related measurements were recorded for each study, most commonly weight, body mass index (BMI), BMI Z-score, and % over BMI. The standardized mean effect size was calculated to allow comparability across the different types of weight-related outcomes. However, non-standardized weight outcomes are highly sensitive to individual participant age. Therefore, when multiple outcome types were available, we prioritized hierarchically as follows: BMI-Z, BMI percentile, % over BMI, weight, followed by raw BMI. We also used meta-regression to test whether effect size magnitude was related to the type of outcome used with each type of outcome dummy coded and compared to BMI-Z score as the reference group. This process demonstrated that the distribution of effect sizes from raw BMI were significantly different than those calculated from BMI-Z scores. Therefore, studies that reported raw BMI only were not analyzed (N = 27), and coded as missing the outcome. None of the other effect size types were significantly different from BMI-Z score.

Statistical analysis

Because we were interested in the effect of the intervention dose on pre-post differences within the intervention group, effect sizes were calculated for each study using the standardized mean pre-post difference methodology [16]. In this context, the formula for the sample estimate of the standardized mean difference ($d$) is

$$d = \frac{M_{\text{diff}}}{SD_{\text{within}}} = \frac{M_{\text{post}} - M_{\text{pre}}}{SD_{\text{within}}}$$

where $M_{\text{diff}}$ is the mean outcome change, i.e., the mean difference between the pre and post outcome in the intervention group. $M_{\text{pre}}$ and $M_{\text{post}}$ are the mean outcome at baseline and follow up, respectively, and $SD_{\text{within}}$ is the sample standard deviation between time periods (i.e., pre-post). If $SD_{\text{within}}$ was not reported, then it was imputed from the pre-post BMI correlation and either the standard deviation of the outcome differences ($SD_{\text{diff}}$) when possible, or the pre and post standard deviations ($SD_{\text{pre}}$ and $SD_{\text{post}}$, respectively) when $SD_{\text{diff}}$ was not reported. Because $SD_{\text{pre}}$ and $SD_{\text{post}}$ were correlated at 0.94 (p < 0.001), when only one of $SD_{\text{pre}}$ or $SD_{\text{post}}$ was available, the SD that was present was imputed for the one that was missing [17]. Finally, when it was not possible to calculate the pre-post BMI correlation for a study (which was necessary to impute the SD of BMI for the effect size calculation), the following schema was used to estimate it: r = 0.90 for studies 26 weeks or shorter; r = 0.80 for studies 52 weeks or shorter, but longer than 26 weeks; and r = 0.70 for studies longer than 52 weeks.
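A minimal sketch of this effect size calculation follows. The text does not print the imputation formulas, so two standard conversions for matched pre-post designs are assumed here: SD_diff = sqrt(SD_pre² + SD_post² − 2·r·SD_pre·SD_post) and SD_within = SD_diff / sqrt(2(1 − r)). The function names are hypothetical, and the sign convention (post minus pre) is chosen so that a decrease in the outcome gives a negative effect size, as the text specifies.

```python
import math


def default_prepost_r(duration_weeks: float) -> float:
    """Fallback pre-post correlation, following the schema stated in the text."""
    if duration_weeks <= 26:
        return 0.90
    if duration_weeks <= 52:
        return 0.80
    return 0.70


def sd_within(r, sd_diff=None, sd_pre=None, sd_post=None):
    """Impute SD_within from r and whichever SDs were reported.

    Assumed formulas (not printed in the source):
      SD_diff   = sqrt(SD_pre^2 + SD_post^2 - 2*r*SD_pre*SD_post)
      SD_within = SD_diff / sqrt(2*(1 - r))
    """
    if sd_diff is None:
        if sd_pre is None and sd_post is None:
            raise ValueError("need sd_diff, sd_pre, or sd_post")
        # When only one of the two SDs was reported, reuse it for the other.
        sd_pre = sd_post if sd_pre is None else sd_pre
        sd_post = sd_pre if sd_post is None else sd_post
        sd_diff = math.sqrt(sd_pre**2 + sd_post**2 - 2 * r * sd_pre * sd_post)
    return sd_diff / math.sqrt(2 * (1 - r))


def standardized_change(m_pre, m_post, sd_w):
    """d = (M_post - M_pre) / SD_within; negative means the outcome decreased."""
    return (m_post - m_pre) / sd_w


# Hypothetical 16-week study reporting only the baseline SD of BMI-Z.
r = default_prepost_r(16)
d = standardized_change(m_pre=2.0, m_post=1.8, sd_w=sd_within(r, sd_pre=0.5))
print(round(d, 3))
```

Under these assumed formulas, SD_within reduces to the common SD whenever the pre and post SDs are equal, which is why reusing a single reported SD for the missing one has a simple interpretation.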

We conducted a random-effects meta-regression using inverse variance weighting to characterize the relationship between the total in-person contact hours, total duration and intervention group effect size [18]. The unit of analysis was the individual study. Random-effects modeling was used to allow random error to occur at both the subject and study levels. Random-effects modeling is generally preferred when it is expected that the effect sizes will be heterogeneous or when it is desired to generalize the results beyond the subsample of analyzed studies [19]. All effect sizes were calculated so that a negative value indicated that the outcome decreased at follow up when compared to baseline, while a positive effect size indicated that the outcome increased over the course of the study. Cochran’s Q and the I² index were used to examine the degree of effect size heterogeneity.
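The heterogeneity statistics and the random-effects pooled estimate can be illustrated with a short sketch. It assumes the DerSimonian and Laird estimator named in the footnote to Table 1 and the usual definition I² = (Q − df)/Q; the function names and the toy inputs are hypothetical, and this is not the authors' Stata or R code.

```python
def i_squared(q: float, df: int) -> float:
    """I^2 as a percentage: share of variation attributable to heterogeneity."""
    return max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0


def dersimonian_laird(effects, variances):
    """Random-effects pooling with the DerSimonian-Laird tau^2 estimator."""
    w = [1.0 / v for v in variances]          # inverse-variance weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    c = sw - sum(wi**2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    w_re = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    return {"fixed": fixed, "pooled": pooled, "Q": q, "df": df,
            "tau2": tau2, "I2": i_squared(q, df)}


# Toy data (hypothetical): two studies with equal sampling variance.
result = dersimonian_laird(effects=[0.0, 2.0], variances=[1.0, 1.0])
print(result)
```

Plugging the reported Q = 4868 with 132 degrees of freedom into `i_squared` gives approximately 97.3%, which agrees with the heterogeneity reported in the Results.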

The base model evaluating the relationship between dose and effect size contained three independent variables: mean-centered contact hours, mean-centered intervention duration, and the interaction of centered contact hours and centered intervention duration. The interaction was included to account for the possibility that the relationship between contact hours and effect size may depend on study duration. For example, the effect of contact hours in a study with 40 contact hours over 6 months might be expected to be different than the effect of contact hours in a study with 40 contact hours over 2 years. The full model controlled for additional covariates that were theorized to be confounders of the relationship between dose variables and change in the outcome. These covariates were coded as follows: 1) study year (to account for temporal trends in efficacy of studies; coded continuously) 2) intention to treat (Yes vs. No), 3) participant age group (12–18 years vs. 2–11 years), 4) intervention mode (multi-modality vs. in person only), 5) setting (school/community vs. multi-location vs. clinic/university), 6) intervention format (group vs. individual/group or individual only), 7) intervention participants (parent or parent/child vs. child only), and 8) baseline weight status of study participants (normal + overweight or obese vs. overweight or obese only). We used the Delphi criteria to code for study quality, and included intention to treat as a surrogate for study quality in our analytic model. We also included baseline weight status as a covariate to control for studies primarily aimed at obesity treatment. Finally, separate stratified meta-regressions were run on studies that had specific characteristics to explore for possible dose effects specific to different types of interventions (Additional file 1: Table S2). 
This additional analysis using stratified meta-regressions should be interpreted with caution due to potential bias and power issues related to sample restriction, but was done for exploratory purposes. All data were extracted into a secure REDCap database and subsequent analyses were conducted using Stata version 14.2 and R version 3.4.0 [20, 21].
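To make the base model concrete, the sketch below builds the three dose predictors (centered contact hours, centered duration, and their product) for a handful of hypothetical studies. It only constructs the design rows; fitting would be done in a statistics package with inverse-variance weights, and the function name and data are assumptions for illustration.

```python
def dose_design_rows(contact_hours, duration_weeks):
    """Return (intercept, centered hours, centered duration, interaction) per study."""
    mean_h = sum(contact_hours) / len(contact_hours)
    mean_d = sum(duration_weeks) / len(duration_weeks)
    rows = []
    for h, d in zip(contact_hours, duration_weeks):
        ch = h - mean_h   # mean-centered contact hours
        cd = d - mean_d   # mean-centered duration
        rows.append((1.0, ch, cd, ch * cd))
    return rows


# Hypothetical studies: contact hours and duration in weeks.
rows = dose_design_rows(contact_hours=[10, 20, 30], duration_weeks=[12, 26, 52])
for row in rows:
    print(row)
```

Centering before forming the product means each main-effect coefficient describes the slope at the average value of the other dose component, which keeps the main effects interpretable alongside the interaction term.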

Following recommendations in the Cochrane Handbook for Systematic Reviews of Interventions [17], we created a funnel plot to visually assess whether bias from small-study effects may have been present. Additionally, because visual inspection is largely subjective, we conducted the regression test for funnel-plot asymmetry proposed by Egger et al. [22]. To further evaluate the influence of small-study effects, we then compared the fixed- and random-effects estimates of the overall effect size. To remove bias that can occur when estimating effect size in studies with small sample sizes, the standardized mean difference effect size, d, was converted to Hedges’ g via a correction factor, J [16].
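The two small-study tools mentioned here can be sketched as follows. The correction factor is assumed to be the common approximation J = 1 − 3/(4·df − 1), with df = n − 1 for a single pre-post group, and the Egger bias coefficient is taken as the intercept from regressing the standardized effect (effect / SE) on precision (1 / SE). The source states neither formula explicitly, the function names are hypothetical, and the unweighted least-squares fit here omits the significance test.

```python
def hedges_j(df: float) -> float:
    """Small-sample correction factor (assumed approximation)."""
    return 1.0 - 3.0 / (4.0 * df - 1.0)


def hedges_g(d: float, n: int) -> float:
    """Convert d to Hedges' g, assuming df = n - 1 for one pre-post group."""
    return hedges_j(n - 1) * d


def egger_intercept(effects, std_errors):
    """Intercept of the regression of effect/SE on 1/SE (the bias coefficient)."""
    x = [1.0 / se for se in std_errors]                   # precision
    y = [e / se for e, se in zip(effects, std_errors)]    # standardized effect
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    return my - slope * mx


# Hypothetical data: identical effects across study sizes imply no asymmetry.
bias = egger_intercept(effects=[-0.25, -0.25, -0.25], std_errors=[0.1, 0.2, 0.4])
print(round(hedges_g(-0.5, n=21), 4), round(bias, 6))
```

An intercept near zero indicates a symmetric funnel; an intercept away from zero suggests that smaller studies report systematically different effects than larger ones.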

Results

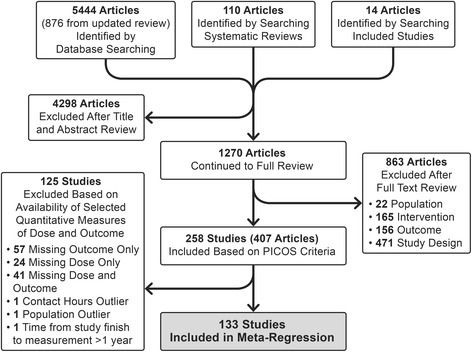

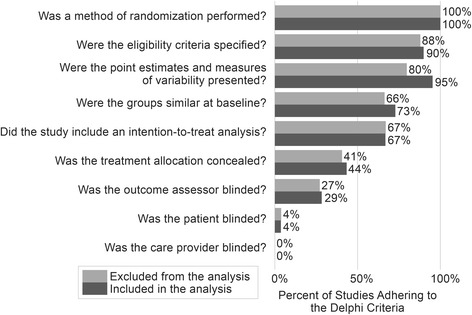

The literature search returned 1270 articles for full review, 863 of which were excluded (471 for study design, 165 for intervention type, 156 for outcome measure, and 22 for population studied), resulting in 258 studies that were abstracted. Of the 258 studies identified by the systematic review, 125 were excluded from the meta-regression. We excluded 123 studies because of incomplete data on effect size, dose, or both. We excluded two additional studies: one study because its initial follow-up time point was one year after the study was completed, and one study because its target population differed greatly from the target populations of the other included studies. This led to the final analytic sample of 133 studies that had acceptable data on dose and a calculable effect size (Fig. 1). See the supplementary material (Additional file 1: Table S1) for full list of reviewed studies. Using the Delphi Criteria, there were no differences in study quality (i.e., internal validity) between the studies included vs. excluded in the analytic sample (Fig. 2).

Fig. 1.

Flow Diagram

Fig. 2.

Assessment of Study Quality using the Delphi Criteria. The percent of studies adhering to the Delphi criteria is shown, comparing studies included in the analytic sample vs. studies excluded from the analytic sample. It is important to note that if a study did not specifically report adherence, then the study would be coded as non-adherent

The distribution of dose components is shown in Table 1. The average intended total contact hours (# sessions x length of sessions) of the 133 studies included was 27.7 (SD 32.2) hours, and the median was 18 (IQR 10, 36) hours. Based on previously published cut-points, 23% of the included studies were categorized as low intensity (<10 h), 49% were categorized as medium intensity (≥10 h & <36 h), and 28% were categorized as high intensity (≥ 36 h) [8]. The average intervention duration for included studies was 26.0 (SD 23.4) weeks and the median intervention duration was 17 (IQR 12, 26) weeks. The intervention duration was as follows: 37% of studies were <3 months, 41% were ≥3 and <6 months, 5% were ≥6 and <9 months, and 17% were ≥9 months.
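The intensity cut-points quoted above translate directly into a small classifier. The function name is hypothetical; the thresholds are those stated in the text (low < 10 h, medium ≥ 10 h and < 36 h, high ≥ 36 h).

```python
def intensity_category(contact_hours: float) -> str:
    """Classify intended contact hours using the cut-points quoted in the text."""
    if contact_hours < 10:
        return "low"
    if contact_hours < 36:
        return "medium"
    return "high"


# The median study in the analytic sample (18 contact hours).
print(intensity_category(18))
```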

Table 1.

Descriptive Statistics of Included and Excluded Studies

| | Analytic Sample (n = 133): Median or Frequency | Analytic Sample (n = 133): IQR or Percent | Excluded Sample (n = 125): Median or Frequency | Excluded Sample (n = 125): IQR or Percent |
|---|---|---|---|---|
| Hedges’ g effect sizeᵃ | −0.25 | [−0.83, 0.33] | −0.40ᵇ | [−1.09, 0.29] |
| Contact hours | 18.0 | [10.0, 36.0] | 12.5ᶜ | [7.6, 27.0] |
| Duration (in weeks) | 17.3 | [12.0, 26.0] | 25.5ᵈ | [12.0, 35.0] |
| Study Year | 2010 | [2012, 2014] | 2012 | [2008, 2014] |
| Intention to treat | | | | |
| Yes | 89 | 67% | 84 | 67% |
| No | 44 | 33% | 41 | 33% |
| Participant age group | | | | |
| 2–11 only | 52 | 39% | 49 | 39% |
| 12–18 only | 22 | 17% | 23 | 18% |
| Other combination | 58 | 44% | 53 | 42% |
| Intervention mode | | | | |
| In-person only | 57 | 43% | 42 | 34% |
| In-person plus other | 76 | 57% | 65 | 52% |
| No in-person dose | 0 | 0% | 18 | 14% |
| Settingᵉ | | | | |
| School/community only | 27 | 22% | 34 | 29% |
| Clinic/university only | 55 | 46% | 26 | 22% |
| All other combinations | 38 | 32% | 59 | 50% |
| Formatᶠ | | | | |
| Individual only | 26 | 20% | 56 | 45% |
| Group only or both | 106 | 80% | 68 | 55% |
| Participants | | | | |
| Child only | 24 | 18% | 28 | 22% |
| Parent only, or parent and child, or parent and child and other family | 109 | 82% | 97 | 78% |
| Weight Status | | | | |
| Normal weight and overweight or obese | 28 | 21% | 45 | 36% |
| Overweight or obese only | 105 | 79% | 77 | 62% |
| Normal weight only | 0 | 0% | 3 | 2% |

ᵃ The pooled effect size was estimated using the DerSimonian and Laird methodology for random-effects meta-analysis. The variance of the effect sizes is described by the interval of ±2τ around the random-effects pooled estimate, which is an approximate 95% range of the effect sizes

ᵇ Effect size was missing in 98 of the excluded studies (n = 27)

ᶜ Contact hours was missing in 63 of the excluded studies (n = 62)

ᵈ Duration was missing in 25 of the excluded studies (n = 100)

ᵉ Setting was missing in 13 of the analyzed studies (n = 120) and 6 of the excluded studies (n = 119)

ᶠ Format was missing for 1 of the analyzed studies (n = 132) and 1 of the excluded studies (n = 124)

There was significant variability of the effect sizes (Additional file 1: Figure S1). Based on the results of a random effects meta-analysis, heterogeneity was significant (χ² = 4868; d.f. = 132; p < 0.001) with 97.3% of the variation in effect size attributable to heterogeneity (I²). The estimate of between-study variance was 0.08 (τ²). At the 95% confidence level, 75 studies (56%) demonstrated a statistically significant decrease in the standardized outcome (e.g., BMI-Z), 53 studies (40%) demonstrated no significant change, and 5 studies (4%) demonstrated a significant increase in the standardized outcome.

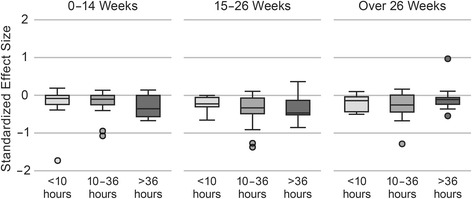

In the base model that examined the relationship between intended dose and effect size, there was no detectable relationship between standardized effect size and the total contact hours (β = −0.001; 95% CI [−0.003, 0.002]; p = 0.5), the duration of the intervention (β = 0.0003; 95% CI [−0.002, 0.003]; p = 0.8) or their interaction (β = 0.00005; 95% CI [−0.000008, 0.0001]; p = 0.09). When treating dose categorically, there was no apparent trend toward an association between any component of dose and the effect size (Fig. 3). In the full model that accounted for both dose and additional study characteristics, there was not a statistically significant association between contact hours, duration or their interaction and effect size (Table 2). When evaluating for any association between dose and effect size in certain types of studies (i.e., stratified analyses), we found a very small but statistically significant association between longer studies and worse outcomes when studies recruited normal weight individuals in addition to overweight/obese individuals (Additional file 1: Table S2).

Fig. 3.

Box Plot of Standardized Effect Size by Dose Components. For each category of duration (0–14 weeks, 15–26 weeks, over 26 weeks) the standardized effect sizes are shown for each category of contact hours (<10 h, 10–36 h, over 36 h). There does not appear to be a trend by duration or contact hours that would suggest an association between either component and effect size. Note that this approach does not take into account the weighting of studies that is possible through meta-regression techniques. A negative standardized effect size represents a decrease in weight-related outcome and a positive standardized effect size represents an increase in weight-related outcome

Table 2.

Meta-regression comparing the dose of the interventions with the standardized effect size, controlling for study characteristics

| n = 119 | Coefficient | 95% CI | p-value |
|---|---|---|---|
| Contact Hours (centered) | 0.000 | −0.003, 0.002 | 0.79 |
| Duration (centered) | 0.001 | −0.002, 0.003 | 0.44 |
| Contact Hours x Duration | 0.000 | 0.000, 0.000 | 0.29 |
| Article Year | −0.007 | −0.025, 0.011 | 0.46 |
| Intention to Treat | | | |
| No | – | – | – |
| Yes | −0.004 | −0.137, 0.129 | 0.95 |
| Age | | | |
| 2–11 years | – | – | – |
| 12–18 years | 0.123 | −0.044, 0.290 | 0.15 |
| Combination | 0.032 | −0.098, 0.161 | 0.63 |
| Mode | | | |
| In Person Only | – | – | – |
| In Person + Other Mode | 0.040 | −0.087, 0.166 | 0.53 |
| Setting | | | |
| Clinic or University | – | – | – |
| School or Community | 0.107 | −0.053, 0.267 | 0.19 |
| Combination | 0.021 | −0.117, 0.159 | 0.76 |
| Format | | | |
| Individual Only | – | – | – |
| Group or Individual + Group | −0.106 | −0.258, 0.045 | 0.17 |
| Participants | | | |
| Child Only | – | – | – |
| Parent or Parent + Child | −0.083 | −0.239, 0.073 | 0.30 |
| Weight Status | | | |
| Normal + Overweight or Obese | – | – | – |
| Overweight or Obese Only | −0.277 | −0.425, −0.130 | <0.001 |

N = 119 with complete data on all covariates

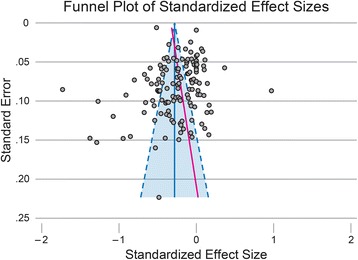

The funnel plot (Fig. 4) demonstrated minimal visual asymmetry. This was supported by the estimated bias coefficient from the Egger test (bias = 1.6, p = 0.03). To further evaluate for potential small-study bias, we compared the estimate from the random effects model used in Table 1 with that from a fixed-effects model (in which, relative to the random effects model, smaller studies are given smaller weights and larger studies are given larger weights). The fixed effects model yielded an estimate of −0.28 (p < 0.001), which is very similar to the random effects estimate (−0.25, p < 0.001). Taken together, these results suggest that the meta-regression estimates are not biased from the lack of inclusion of small studies with “negative findings” (i.e., weight gain).

Fig. 4.

Funnel Plot of Standardized Effect Sizes. The funnel plot demonstrates minimal visual asymmetry, in the direction of excess small studies that resulted in a “negative” outcome (i.e., weight gain = positive effect size). This is supported by the estimated bias coefficient from the Egger test (bias = 1.6, p = 0.03, shown as red solid line). Dotted lines represent the pseudo-95% confidence interval

Discussion

This systematic review identified wide variation in the dose of behavioral interventions to prevent and treat pediatric obesity. In addition, there was no association between dose and changes in weight-related outcomes. Based on the results of these analyses we are unable to provide guidance to the field regarding a minimally effective dose that should be targeted as new behavioral interventions are developed and implemented to treat or prevent childhood obesity. We hypothesize that one of the main reasons we did not find an association between treatment intensity and effect size is that behavior change occurs at a different threshold of exposure to the intervention for each person. Thus, for some people a low “dose” will result in rapid behavior change, but for others an extensive “dose” will be required to even begin the process of behavior change. This level of detail could not be explored using meta-regression techniques, as we did not have individual-level data. Future research should consider focusing on non-linear modeling of dose components as they relate to change in weight-related outcomes. It may have also been that many of the studies had inadequate intervention content/behavioral change strategies. Finally, an individual’s healthy behaviors are the product of multiple levels of influence, including family, environment, communities, and local policies [23]. The current literature does not often report on the complex environmental determinants of a person’s behavior, which makes knowing how to apply these results in a specific context challenging, though this would be an important future direction to consider.

Our study adds to the literature on how the dose of a behavioral intervention is related to pediatric obesity outcomes; however, the existing literature provides limited context for these findings. In one previous meta-analysis of 20 behavioral interventions to treat pediatric obesity in community/outpatient settings, dose was found to be a moderator of the relationship between study and outcome [9]. Several systematic reviews have been unable to assess dose or have pointed to the challenges associated with measuring dose appropriately in these contexts [1, 2, 24, 25]. In adult behavior change literature, there has been some preliminary indication that more intensive (i.e., more contact hours) interventions are related to a higher degree of reduction in cardiovascular disease risk [8]. In addition, the smoking cessation literature has demonstrated a dose-response relationship for behavioral interventions in clinic, where more intensive interventions are both better received and more effective at achieving smoking cessation [26, 27]. But these same relationships between dose and outcome in behavioral trials have also been challenging to characterize in other fields, including medication adherence in chronic medication conditions and HIV-related research [28–30]. Taken together, these bodies of literature suggest that while there may be a relationship between the dose of behavior-change interventions and health behaviors, understanding how the dose of a behavioral intervention is related to the target outcome is challenging in any behavior-change context.

The reasons why a relationship was not found between dose and effect size in the current analysis are unclear. We found that there were several limitations to conducting the analysis as we had planned. First, there was wide variation in reporting on the dose of an intervention, making it challenging to appropriately and uniformly quantify the dose delivered and/or received in the intervention. While the underlying characteristics of the studies that were included and excluded based on availability of a dose or outcome measure were not substantially different from each other, we cannot exclude a potential selection bias, which would likely bias the results toward the null. Furthermore, many studies did not include sufficient information on multi-modal components (e.g., phone calls, web-based material) to include in a quantitative evaluation of dose of all intervention components. While the multi-modal nature of interventions was not associated with the effect size in the adjusted meta-regression, we could have misclassified the primary exposure for multi-modal studies, which again may have biased the results toward the null. The testing of novel trial designs, including the multiphase optimization strategy (MOST) and sequential multiple assignment randomized trials (SMART), may be particularly well suited to characterizing these relationships between dose and outcome [31]. We examined the potential for small-study or publication bias, which did not appear to exert a significant effect. The potential for bias from study quality was partially mitigated by the RCT inclusion criterion and by controlling for intent-to-treat study design. However, we primarily used measures of internal validity to measure study quality. As research in the field of behavioral obesity moves from an efficacy approach (where these results are most applicable) to a dissemination and implementation framework, future attempts at systematic reviews should evaluate studies based on external validity (e.g., the RE-AIM framework) [32–34] to help guide translation of results into communities. An additional limitation of this study is that we may not have identified all studies relevant to the literature review, excluding relevant findings that could have influenced results. Finally, we could not report on the dose actually received by participants because it was not reported consistently across the literature.

Conclusions

In conclusion, the available literature does not provide sufficient evidence to suggest a minimum behavioral dose to prevent or treat childhood obesity. It is not clear whether the lack of an association between dose intensity and change in weight-related outcome is because behavior change is non-linear or whether variability in the reporting of both dose and outcome obscured a relationship. Careful attention to dose reporting in large ongoing randomized trials may be an effective approach to understanding the complex issue of dose in behavioral interventions. Consistent with ongoing work from the Template for Intervention Description and Replication (TIDieR) and the NIH Behavior Change Consortium, future researchers should quantitatively identify dose intended, dose delivered, and dose received across each intervention format (individual, group, online, text, phone call, mailing) in addition to effect size when reporting results of childhood weight management trials to facilitate quantitative evaluation of how dose is associated with behavior change [35].
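As an illustration of the reporting recommendation above, the following minimal Python sketch shows one way a trial could record dose intended, delivered, and received for each intervention format and derive total contact hours as number of contacts multiplied by contact length, the definition used in this review. The class, field, and function names are hypothetical, the example values are invented, and treating every format as having a per-contact length in minutes is a simplifying assumption.

```python
from dataclasses import dataclass
from typing import Iterable

# Formats and dose stages named in the text; all identifiers are hypothetical.
FORMATS = ("individual", "group", "online", "text", "phone call", "mailing")
STAGES = ("intended", "delivered", "received")


@dataclass(frozen=True)
class DoseComponent:
    """Dose for one intervention format, as a count of contacts per stage."""
    fmt: str                    # one of FORMATS
    minutes_per_contact: float  # assumed length of a single contact
    intended: float             # contacts planned in the protocol
    delivered: float            # contacts actually offered by staff
    received: float             # mean contacts completed per participant

    def __post_init__(self):
        if self.fmt not in FORMATS:
            raise ValueError(f"unknown format: {self.fmt!r}")

    def contact_hours(self, stage: str) -> float:
        """Contacts at the given stage times contact length, in hours."""
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage!r}")
        return getattr(self, stage) * self.minutes_per_contact / 60.0


def total_contact_hours(components: Iterable[DoseComponent], stage: str) -> float:
    """Sum contact hours across all formats for one dose stage."""
    return sum(c.contact_hours(stage) for c in components)


if __name__ == "__main__":
    # Invented example trial: 12 group sessions of 90 min, 6 calls of 15 min.
    trial = [
        DoseComponent("group", 90, intended=12, delivered=11, received=8.5),
        DoseComponent("phone call", 15, intended=6, delivered=6, received=4.0),
    ]
    for stage in STAGES:
        print(stage, total_contact_hours(trial, stage))
```

Reporting all three stages per format in this way would let a later review compute dose uniformly across trials rather than relying on intended dose alone.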

Acknowledgements

Not applicable

Funding

This research was supported by the National Heart, Lung, and Blood Institute, the Eunice Kennedy Shriver National Institute of Child Health and Human Development, and the NIH Office of Behavioral and Social Sciences Research (Grant Numbers U01HL103561, U01HL103620, U01HL103622, U01HL103629, and U01HD068890). Dr. Heerman’s time was supported by a K23 grant from the NHLBI (K23 HL127104). Dr. Hardin’s time was supported by a T32 grant from the NINR (T32 NR015433–01). Dr. Barkin’s time was partially supported by the NIDDK (P30DK092986).

The project described was also supported by Award Number UL1TR000114 from the National Center for Advancing Translational Sciences (NCATS) of the National Institutes of Health (NIH). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Center for Research Resources or the National Institutes of Health. Study data were collected and managed using REDCap electronic data capture tools hosted at the University of Minnesota.

The funders of the study provided support for investigator time but did not contribute directly to carrying out the review, interpreting the results, or approving the content.

Availability of data and materials

The data used to conduct this review are available in the additional file.

Additional file

Figure S1. Forest plot demonstrating a visual representation of the variability in standardized effect size. Table S1. Dose of behavioral intervention and intervention characteristics by study and a full reference list of all studies reviewed. Table provides details of all articles reviewed in this meta-regression. Table S2. Meta-regression evaluating the relationship between dose and standardized effect size stratified by study characteristics. (PDF 1938 kb)

Authors’ contributions

All authors were substantively involved in the conceptualization of the manuscript, the interpretation of the results, and the revision of the manuscript. WH was responsible for overseeing data abstraction and the analysis plan, and drafted the manuscript. WH, MJ, JB, ET, NJ, AK, and HH all participated in data abstraction. ES and LS were responsible for conducting the analysis. BO was responsible for the search strategy. All authors read and approved the final manuscript.

Ethics approval and consent to participate

This manuscript represents a systematic review of the literature, and was not human subjects research.

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Footnotes

Electronic supplementary material

The online version of this article (10.1186/s12966-017-0615-7) contains supplementary material, which is available to authorized users.

Contributor Information

William J. Heerman, Email: Bill.Heerman@vanderbilt.edu

Meghan M. JaKa, Email: Meghan.JaKa@allina.com

Jerica M. Berge, Email: jberge@umn.edu

Erika S. Trapl, Email: erika.trapl@case.edu

Evan C. Sommer, Email: evan.c.sommer@Vanderbilt.Edu

Lauren R. Samuels, Email: lauren.samuels@vanderbilt.edu

Natalie Jackson, Email: natalie.jackson@vanderbilt.edu

Jacob L. Haapala, Email: haap0016@umn.edu

Alicia S. Kunin-Batson, Email: Alicia.S.KuninBatson@HealthPartners.Com

Barbara A. Olson-Bullis, Email: Barbara.A.OlsonBullis@healthpartners.com

Heather K. Hardin, Email: hkh10@case.edu

Nancy E. Sherwood, Email: sherw005@umn.edu

Shari L. Barkin, Email: Shari.Barkin@vanderbilt.edu

References

- 1. Waters E, de Silva-Sanigorski A, Hall BJ, et al. Interventions for preventing obesity in children. Cochrane Database Syst Rev. 2011;12:CD001871. doi: 10.1002/14651858.CD001871.pub3.

- 2. Oude Luttikhuis H, Baur L, Jansen H, et al. Interventions for treating obesity in children. Cochrane Database Syst Rev. 2009;1:CD001872. doi: 10.1002/14651858.CD001872.pub2.

- 3. Boon CS, Clydesdale FM. A review of childhood and adolescent obesity interventions. Crit Rev Food Sci Nutr. 2005;45(7–8):511–525. doi: 10.1080/10408690590957160.

- 4. Young KM, Northern JJ, Lister KM, Drummond JA, O'Brien WH. A meta-analysis of family-behavioral weight-loss treatments for children. Clin Psychol Rev. 2007;27(2):240–249. doi: 10.1016/j.cpr.2006.08.003.

- 5. Seo DC, Sa J. A meta-analysis of obesity interventions among U.S. minority children. J Adolesc Health. 2010;46(4):309–323. doi: 10.1016/j.jadohealth.2009.11.202.

- 6. Stice E, Shaw H, Marti CN. A meta-analytic review of obesity prevention programs for children and adolescents: the skinny on interventions that work. Psychol Bull. 2006;132(5):667–691. doi: 10.1037/0033-2909.132.5.667.

- 7. Warren SF, Fey ME, Yoder PJ. Differential treatment intensity research: a missing link to creating optimally effective communication interventions. Ment Retard Dev Disabil Res Rev. 2007;13(1):70–77. doi: 10.1002/mrdd.20139.

- 8. Lin JS, O'Connor E, Evans CV, Senger CA, Rowland MG, Groom HC. Behavioral counseling to promote a healthy lifestyle in persons with cardiovascular risk factors: a systematic review for the U.S. Preventive Services Task Force. Ann Intern Med. 2014;161(8):568–578. doi: 10.7326/M14-0130.

- 9. Janicke DM, Steele RG, Gayes LA, et al. Systematic review and meta-analysis of comprehensive behavioral family lifestyle interventions addressing pediatric obesity. J Pediatr Psychol. 2014;39(8):809–825. doi: 10.1093/jpepsy/jsu023.

- 10. Voils CI, Chang Y, Crandell J, Leeman J, Sandelowski M, Maciejewski ML. Informing the dosing of interventions in randomized trials. Contemp Clin Trials. 2012;33(6):1225–1230. doi: 10.1016/j.cct.2012.07.011.

- 11. JaKa MM, Haapala JL, Trapl ES, et al. Reporting of treatment fidelity in behavioural paediatric obesity intervention trials: a systematic review. Obes Rev. 2016;17(12):1287–1300. doi: 10.1111/obr.12464.

- 12. Bellg AJ, Borrelli B, Resnick B, et al. Enhancing treatment fidelity in health behavior change studies: best practices and recommendations from the NIH behavior change consortium. Health Psychol. 2004;23(5):443–451. doi: 10.1037/0278-6133.23.5.443.

- 13. Voils CI, King HA, Maciejewski ML, Allen KD, Yancy WS, Jr, Shaffer JA. Approaches for informing optimal dose of behavioral interventions. Ann Behav Med. 2014;48(3):392–401. doi: 10.1007/s12160-014-9618-7.

- 14. Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Int J Surg. 2010;8(5):336–341. doi: 10.1016/j.ijsu.2010.02.007.

- 15. Verhagen AP, de Vet HC, de Bie RA, et al. The Delphi list: a criteria list for quality assessment of randomized clinical trials for conducting systematic reviews developed by Delphi consensus. J Clin Epidemiol. 1998;51(12):1235–1241. doi: 10.1016/S0895-4356(98)00131-0.

- 16. Cooper HM, Hedges LV, Valentine JC. The handbook of research synthesis and meta-analysis. 2nd ed. New York: Russell Sage Foundation; 2009.

- 17. Higgins J, Green S. Cochrane Handbook for Systematic Reviews of Interventions. 2011; http://www.handbook.cochrane.org, Version 5.1.0 [updated March 2011]. Accessed 2 Oct 2017.

- 18. DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986;7(3):177–188. doi: 10.1016/0197-2456(86)90046-2.

- 19. Lipsey MW, Wilson DB. Practical meta-analysis. Thousand Oaks, Calif: Sage Publications; 2001.

- 20. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–381. doi: 10.1016/j.jbi.2008.08.010.

- 21. R: A language and environment for statistical computing. [computer program]. Vienna, Austria: R Foundation for Statistical Computing; 2017.

- 22. Egger M, Davey Smith G, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ. 1997;315(7109):629–634. doi: 10.1136/bmj.315.7109.629.

- 23. Huang TT, Drewnowski A, Kumanyika S, Glass TA. A systems-oriented multilevel framework for addressing obesity in the 21st century. Prev Chronic Dis. 2009;6(3):A82.

- 24. Marshall SJ, Simoes EJ, Eisenberg CM, et al. Weight-related child behavioral interventions in Brazil: a systematic review. Am J Prev Med. 2013;44(5):543–549. doi: 10.1016/j.amepre.2013.01.017.

- 25. Tate DF, Lytle LA, Sherwood NE, et al. Deconstructing interventions: approaches to studying behavior change techniques across obesity interventions. Transl Behav Med. 2016;6(2):236–243. doi: 10.1007/s13142-015-0369-1.

- 26. Martin Cantera C, Puigdomenech E, Ballve JL, et al. Effectiveness of multicomponent interventions in primary healthcare settings to promote continuous smoking cessation in adults: a systematic review. BMJ Open. 2015;5(10):e008807. doi: 10.1136/bmjopen-2015-008807.

- 27. Secades-Villa R, Alonso-Perez F, Garcia-Rodriguez O, Fernandez-Hermida JR. Effectiveness of three intensities of smoking cessation treatment in primary care. Psychol Rep. 2009;105(3 Pt 1):747–758. doi: 10.2466/PR0.105.3.747-758.

- 28. Lyles CM, Kay LS, Crepaz N, et al. Best-evidence interventions: findings from a systematic review of HIV behavioral interventions for US populations at high risk, 2000-2004. Am J Public Health. 2007;97(1):133–143. doi: 10.2105/AJPH.2005.076182.

- 29. Rotheram-Borus MJ, Lee MB, Murphy DA, et al. Efficacy of a preventive intervention for youths living with HIV. Am J Public Health. 2001;91(3):400–405. doi: 10.2105/AJPH.91.3.400.

- 30. Kripalani S, Yao X, Haynes RB. Interventions to enhance medication adherence in chronic medical conditions: a systematic review. Arch Intern Med. 2007;167(6):540–550. doi: 10.1001/archinte.167.6.540.

- 31. Howard MC, Jacobs RR. The multiphase optimization strategy (MOST) and the sequential multiple assignment randomized trial (SMART): two novel evaluation methods for developing optimal training programs. J Organ Behav. 2016;37(8):1246–1270.

- 32. Gaglio B, Shoup JA, Glasgow RE. The RE-AIM framework: a systematic review of use over time. Am J Public Health. 2013;103(6):e38–e46. doi: 10.2105/AJPH.2013.301299.

- 33. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–1327. doi: 10.2105/AJPH.89.9.1322.

- 34. Jilcott S, Ammerman A, Sommers J, Glasgow RE. Applying the RE-AIM framework to assess the public health impact of policy change. Ann Behav Med. 2007;34(2):105–114. doi: 10.1007/BF02872666.

- 35. Borek AJ, Abraham C, Smith JR, Greaves CJ, Tarrant M. A checklist to improve reporting of group-based behaviour-change interventions. BMC Public Health. 2015;15:963. doi: 10.1186/s12889-015-2300-6.
