Abstract

In medical image processing, robust segmentation of inhomogeneous targets is a challenging problem. Because of the complexity and diversity of medical images, the commonly used semiautomatic segmentation algorithms usually fail to segment inhomogeneous objects. In this study, we propose a novel algorithm embedded with seed point autogeneration for random walks segmentation enhancement, namely SPARSE, for better segmentation of inhomogeneous objects. With a few user‐labeled points, SPARSE is able to generate extended seed points by estimating the probability of each voxel with respect to the labels. The random walks algorithm is then applied to the extended seed points to achieve an improved segmentation result. SPARSE is implemented under the compute unified device architecture (CUDA) programming environment on a graphics processing unit (GPU) hardware platform. Quantitative evaluations are performed using clinical homogeneous and inhomogeneous cases. It is found that SPARSE can greatly decrease the sensitivity to the location and quantity of the initial seed points, as well as to the selection of the parameter in the edge weighting function. The evaluation results also demonstrate substantial improvements in accuracy and robustness for inhomogeneous target segmentation over the original random walks algorithm.

PACS number: 87.57.nm

Keywords: segmentation, inhomogeneous target, random walks, seed point, autogeneration

I. INTRODUCTION

Segmentation of medical images is a significant and challenging task for disease diagnosis (1,2) and treatment planning. (3‐5) Segmentation methods can be roughly classified into three categories, namely, manual, semiautomatic (interactive), and automatic segmentation. (6) Manual segmentation is usually time‐consuming and experience dependent. Automatic segmentation cannot guarantee optimal results due to the complexity and diversity of medical images, and often requires a physician's further intervention. Interactive segmentation, (7) which allows physicians to incorporate their professional knowledge and the specific clinical criteria while saving their time with computer aid, is more attractive than the other two.

In the past decade, efforts have continued towards developing interactive segmentation methods. One of the popular interactive segmentation methods is random walks (RW), (8) in which segmentation is achieved by estimating the probability that a random walker starting at each voxel will first reach the labeled initial seed points (SPs). The RW algorithm has several drawbacks in practice. Firstly, RW relies to a large extent on the location and quantity of the initial SPs. It has been demonstrated that RW is stable to changes of the SPs only when the changes of location and quantity are below 10% and 50%, respectively. (9) Moreover, to obtain satisfactory segmentation results, the initial SPs should be numerous enough to represent almost all intensity levels in the target. However, in practice, it is tedious and laborious to place sufficient SPs on the target, especially in a 3D scenario. In this sense, a stable segmentation result may not be guaranteed when insufficient SPs are used, especially for inhomogeneous medical targets, which are common in clinical studies.

Great efforts have been made to improve RW by reducing its reliance on the SPs. Dong et al. (10) proposed a novel SP selection method composed of a region growing technique and a morphological operation to promote the RW segmentation of the ventricle in 3D datasets. However, it is limited to cavity or cavity wall extraction. Li et al. (11) extended the RW by incorporating a prior shape to relieve the requirement of the SPs. However, this method works under the assumptions of slight occlusion, similar background, and illumination changes in the pedestrian images, which limits its application in medical images. Moreover, a proper prior shape is usually difficult to obtain because of the diversified shapes of clinical targets. Onoma et al. (12) proposed an improved RW by initializing the SPs automatically via fuzzy‐C means to yield better segmentation of lung tumors. However, it fails in the segmentation of lesions with complex shapes and inhomogeneous uptake. Cui et al. (13) described an automatic SP selection strategy for RW‐based segmentation of lung tumors in computed tomography (CT) images by using the positron emission tomography (PET) image as the prior. However, the need for a PET image may restrict its application to segmentation tasks in other image modalities.

In this paper, we propose a novel algorithm embedded with seed point autogeneration for random walks segmentation enhancement (named SPARSE). A previous study (9) has shown that more SPs generally provide more useful information in the target region and background, and thus more robust segmentation results can be guaranteed. With a limited number of user‐labeled initial SPs, we have developed an SP autogeneration scheme to obtain extended seed points (ESPs) by estimating the probability of each voxel with respect to a certain label. The RW algorithm is then applied to the ESPs to achieve an improved segmentation result. SPARSE is implemented using compute unified device architecture (CUDA) on a graphics processing unit (GPU) platform to improve computation efficiency. The performance of SPARSE is evaluated using homogeneous and inhomogeneous cases. It is found that SPARSE is robust to the location and quantity of the initial SPs and to the selection of the parameter β in the edge weighting function. Furthermore, SPARSE improves the segmentation accuracy when compared with the original RW and another state‐of‐the‐art interactive segmentation algorithm, the graph cut (GC) method. (14)

II. MATERIALS AND METHODS

A. Review of the RW

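RW (8,15) is used to solve the segmentation problem by calculating the probability that a random walker starting at each voxel will first reach one of the labeled SPs. A graph $G = (V, E)$, consisting of a pair with vertices (nodes) $v \in V$ and edges $e \in E$, is first created based on the image I to be segmented. The edge e spanning two vertices, $v_i$ and $v_j$, is denoted by $e_{ij}$. The connectivity of two adjacent vertices on an edge is weighted by $\omega_{ij}$, such as

$$\omega_{ij} = \exp\left(-\beta\,(g_i - g_j)^2\right) \tag{1}$$

where $g_i$ and $g_j$ indicate the image intensity at voxels i and j, respectively, and β represents the only free parameter.

The desired random walker probabilities x on vertices can be obtained by solving the following combinatorial Dirichlet problem:

$$D[x] = \frac{1}{2}\, x^T L\, x = \frac{1}{2} \sum_{e_{ij} \in E} \omega_{ij}\,(x_i - x_j)^2 \tag{2}$$

where $x_i$ and $x_j$ are the random walker probabilities on vertices $v_i$ and $v_j$, respectively. L is the Laplacian matrix:

$$L_{ij} = \begin{cases} d_i & \text{if } i = j \\ -\omega_{ij} & \text{if } v_i \text{ and } v_j \text{ are adjacent} \\ 0 & \text{otherwise} \end{cases} \tag{3}$$

where $d_i = \sum_j \omega_{ij}$ is the degree of vertex $v_i$ for all edges $e_{ij}$ incident on $v_i$. The Laplacian matrix is a sparse matrix. It is built according to four‐connectivity and six‐connectivity for 2D and 3D scenarios, respectively.

The vertices can be partitioned into two sets, $V_M$ (labeled vertices) and $V_U$ (unlabeled vertices), such that $V_M \cup V_U = V$ and $V_M \cap V_U = \emptyset$. In this way, Eq. (2) can be decomposed into

$$D[x_U] = \frac{1}{2} \begin{bmatrix} x_M^T & x_U^T \end{bmatrix} \begin{bmatrix} L_M & B \\ B^T & L_U \end{bmatrix} \begin{bmatrix} x_M \\ x_U \end{bmatrix} = \frac{1}{2} \left( x_M^T L_M x_M + 2\, x_U^T B^T x_M + x_U^T L_U x_U \right) \tag{4}$$

where $x_M$ and $x_U$ correspond to the probabilities of the labeled and unlabeled voxels, respectively, and $L_M$, $L_U$, and $B$ are the corresponding blocks of L. Differentiating $D[x_U]$ with respect to $x_U$ and finding the critical point yields

$$L_U\, x_U = -B^T x_M \tag{5}$$

The probabilities for the unlabeled voxels can be easily calculated by solving the above sparse, positive definite linear system, Eq. (5). Denoting the probability assumed at node $v_i$ for each label s by $x_i^s$, the final segmentation is obtained by assigning each node $v_i$ the label corresponding to the maximum probability $\max_s(x_i^s)$. Usually, at least two groups of labels need to be supplied manually, i.e., SPs inside the region to be segmented (named foreground points) and SPs in the background (named background points).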
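As a small illustration of the RW review above, the following pure‐Python sketch solves the two‐label problem on a tiny 2D image. It assumes the standard Gaussian edge weight of the RW literature, exp(−β(g_i − g_j)²), with intensities scaled to [0, 1], and it replaces the sparse solver by plain Gauss‐Seidel sweeps (each unlabeled probability is repeatedly set to the weighted mean of its four neighbors, which is the row‐wise form of the linear system for the unlabeled voxels). The function names, the toy image, and the value β = 10 are illustrative assumptions, not the authors' implementation.

```python
import math


def edge_weight(g_i, g_j, beta):
    """Gaussian edge weight between two neighbouring voxels (intensities in [0, 1])."""
    return math.exp(-beta * (g_i - g_j) ** 2)


def random_walker_2d(image, seeds, beta, max_sweeps=5000, tol=1e-10):
    """Foreground probability for every pixel of a small 2D image.

    image: list of rows of intensities scaled to [0, 1].
    seeds: dict mapping (row, col) -> 1.0 (foreground) or 0.0 (background).
    Unlabeled pixels are updated by Gauss-Seidel sweeps: each probability
    becomes the edge-weighted mean of its four-connected neighbours.
    """
    rows, cols = len(image), len(image[0])
    x = [[seeds.get((r, c), 0.5) for c in range(cols)] for r in range(rows)]
    for _ in range(max_sweeps):
        largest_change = 0.0
        for r in range(rows):
            for c in range(cols):
                if (r, c) in seeds:
                    continue  # labeled vertices keep their fixed value
                weighted_sum = 0.0
                degree = 0.0
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        w = edge_weight(image[r][c], image[rr][cc], beta)
                        weighted_sum += w * x[rr][cc]
                        degree += w
                new_value = weighted_sum / degree
                largest_change = max(largest_change, abs(new_value - x[r][c]))
                x[r][c] = new_value
        if largest_change < tol:
            break
    return x


# Toy image: dark left half, bright right half (hypothetical data).
image = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]
seeds = {(1, 0): 1.0, (1, 3): 0.0}  # one foreground and one background seed
probabilities = random_walker_2d(image, seeds, beta=10.0)
segmentation = [[1 if p > 0.5 else 0 for p in row] for row in probabilities]
```

With two labels, thresholding the foreground probability at 0.5 is equivalent to assigning each node the label of maximum probability, because the background probability is one minus the foreground probability.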

B. Seed point autogeneration

In the 2D homogeneous scenario, only a small number of SPs need to be labeled to yield a satisfactory segmentation. For the 3D segmentation task, it would be tedious and sometimes impractical to specify all the SPs on each slice of a 3D volume. Moreover, if the target to be segmented is not homogeneous, but contains highly diversified materials instead, a small number of SPs usually fails to provide enough information about the target, and thus a satisfactory result cannot be obtained. In this study, we propose an SP autogeneration scheme to obtain the ESPs for RW by estimating the probability of each voxel with respect to a certain label.

Specifically, let us assume that there are labels , and denote the labeled SPs and the corresponding intensities as respectively, where c is the number of the labeled SPs . The intensity distribution probability of voxel i in image I with respect to label is thus given by

| (6) |

where σ is a tuning parameter that reflects the rigor level of the similarity criteria, and is a normalizing constant for label :

| (7) |

where .

Once is available, the probability of voxel i to the chain of label can be simply calculated as

| (8) |

with 1 representing the highest probability. In this way, we can obtain the probability maps with respect to label for each voxel in image I.

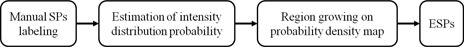

Region growing (16) is then performed on the probability maps with the initially labeled SPs as the SPs and as the growing condition to yield the ESPs , where is the threshold to filter those points with high similarity to the label . For simplicity, we set for in this study. The final segmentation is then completed by performing the RW with for each label . The above ESPs generation scheme is summarized in Fig. 1.

Figure 1.

The flowchart of ESPs generation.
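The seed expansion idea of this subsection can be sketched as follows. This is only a hedged illustration: the similarity measure used here (the largest Gaussian kernel value between a voxel intensity and the intensities of the labeled seeds, which equals 1 at the seeds themselves) is an assumption standing in for Eqs. (6) to (8), and the names `probability_map` and `grow_seeds`, the toy image, σ = 0.05, and the threshold 0.8 are all hypothetical. The region growing step uses four‐connectivity and accepts a neighbor only when its probability reaches the threshold PT.

```python
import math
from collections import deque


def probability_map(image, seed_intensities, sigma):
    """Assumed similarity of every pixel to one label, in (0, 1]; 1 is the highest."""
    return [
        [max(math.exp(-((g - gk) ** 2) / sigma) for gk in seed_intensities) for g in row]
        for row in image
    ]


def grow_seeds(prob, seeds, pt):
    """Region growing from the user-labeled seeds over pixels with prob >= pt."""
    rows, cols = len(prob), len(prob[0])
    grown = set(seeds)
    queue = deque(seeds)
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):  # four-connectivity
            rr, cc = r + dr, c + dc
            inside = 0 <= rr < rows and 0 <= cc < cols
            if inside and (rr, cc) not in grown and prob[rr][cc] >= pt:
                grown.add((rr, cc))
                queue.append((rr, cc))
    return grown


# Toy image with intensities in [0, 1] (hypothetical data).
image = [
    [0.20, 0.25, 0.90],
    [0.20, 0.60, 0.90],
    [0.22, 0.60, 0.90],
]
foreground_seeds = [(0, 0)]
seed_intensities = [image[r][c] for r, c in foreground_seeds]
prob = probability_map(image, seed_intensities, sigma=0.05)
extended_seeds = grow_seeds(prob, foreground_seeds, pt=0.8)
```

In this toy case the single foreground seed grows into the four pixels of similar intensity, while the pixels at 0.60 and 0.90 are rejected. The same routine would be run once per label, and the extended seeds would then be passed to RW in place of the original ones.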

C. Implementation

The proposed algorithm is implemented on a platform equipped with an NVIDIA Tesla C1060 card with 240 processors running at 1.3 GHz and 4 GB of DDR3 memory shared by all processors. This platform enables parallel processing of the same operations on different CUDA threads simultaneously, which speeds up the entire algorithm. In order to efficiently parallelize RW in the CUDA environment, the data parallel portions of the algorithm are identified and grouped into the following kernels: 1) an edge kernel to build a graph with vertices and edges; 2) a weighting kernel to compute ωij; 3) a normalization kernel to normalize ; and 4) a Laplacian kernel to create the sparse Laplacian matrix. Moreover, we adopt CUSP (a CUDA‐based library for sparse linear algebra and graph computations) (17) to solve the sparse linear system in Eq. (5). Sparse matrices are stored using the Coordinate (COO) format. The conjugate gradient (CG) method is used as the iterative solver, with a relative tolerance of $10^{-6}$ and a maximum of 1000 iterations as the stopping criteria.
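To make the solver settings concrete, the sketch below stores a small symmetric positive definite matrix in COO form (parallel lists of row indices, column indices, and values) and solves it with a textbook conjugate gradient loop using the stopping criteria quoted above (relative residual of 10⁻⁶, at most 1000 iterations). It is a CPU illustration in pure Python, not the CUSP API; the function names and the 3×3 test matrix are assumptions made for the example.

```python
import math


def coo_matvec(rows, cols, vals, x):
    """Multiply a COO-format sparse matrix by a dense vector."""
    y = [0.0] * len(x)
    for r, c, v in zip(rows, cols, vals):
        y[r] += v * x[c]
    return y


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def conjugate_gradient(rows, cols, vals, b, rel_tol=1e-6, max_iter=1000):
    """Solve A x = b for a symmetric positive definite A stored in COO format."""
    x = [0.0] * len(b)
    residual = list(b)          # r = b - A x with x = 0
    direction = list(residual)
    rs_old = dot(residual, residual)
    b_norm = math.sqrt(dot(b, b))
    if b_norm == 0.0:
        return x, 0
    for iteration in range(1, max_iter + 1):
        a_dir = coo_matvec(rows, cols, vals, direction)
        alpha = rs_old / dot(direction, a_dir)
        x = [xi + alpha * di for xi, di in zip(x, direction)]
        residual = [ri - alpha * adi for ri, adi in zip(residual, a_dir)]
        rs_new = dot(residual, residual)
        if math.sqrt(rs_new) <= rel_tol * b_norm:
            return x, iteration
        direction = [ri + (rs_new / rs_old) * di for ri, di in zip(residual, direction)]
        rs_old = rs_new
    return x, max_iter


# Tridiagonal test matrix [[2, -1, 0], [-1, 2, -1], [0, -1, 2]] in COO form.
rows = [0, 0, 1, 1, 1, 2, 2]
cols = [0, 1, 0, 1, 2, 1, 2]
vals = [2.0, -1.0, -1.0, 2.0, -1.0, -1.0, 2.0]
solution, iterations = conjugate_gradient(rows, cols, vals, [1.0, 0.0, 1.0])
```

COO keeps one (row, column, value) triple per nonzero entry, so the matrix‐vector product is a single pass over the triples; this access pattern is what makes the format convenient to assemble from independent per‐edge computations.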

D. Evaluation data

The performance of SPARSE is evaluated using a synthetic phantom and four 2D (cases 1‐4) and twenty 3D (cases 5‐24) clinical CT/MR images. The synthetic phantom (Fig. 2) contains a ground truth target which is made up of piecewise blocks with different intensity values representing inhomogeneous anatomical structures. The image resolution of the phantom is , and Gaussian noise with variance of 20 is added to the phantom, yielding a signal‐to‐noise ratio of roughly 25 in it. For clinical cases, cases 1‐4 are 2D cases including: a fibula CT image of resolution (case 1); a lung CT image of resolution (case 2); a corpus callosum T2 MR image of resolution (case 3); and an abdomen CT image of resolution (case 4). Cases 5‐24 are 3D cases including: three high‐dose‐rate (HDR) brachytherapy CT images with tandem and cylinder (T&C) applicator of resolution (cases 5, 11), (case 12); five HDR CT images with tandem and ovoid (T&O) applicator of resolution (case 6), (case 13), (case 14), (case 15), and (case 16); three T1c MR images of resolution (cases 7, 17‐18); three T2, T1c, and FLAIR MR image series of resolution (cases 8‐10, 19‐24). In this study, we classify all the evaluated cases into two categories, namely “homogeneous” and “inhomogeneous”, according to the variance of the intensity levels of the segmentation target. Specifically, given the ground truth segmentation, the intensity of the target is first normalized to [0,1], and the standard deviation (SD) is calculated. Cases with target SD values smaller than a predefined threshold (0.1 is used in this study) are regarded as “homogeneous”; otherwise, they are classified as “inhomogeneous”. The target SD value for the synthetic phantom is around 0.22, and the target SD values for the clinical cases are listed in Table 1. By this metric, we classify cases 3, 7, and 17‐18 as homogeneous cases, and the other cases, including the synthetic phantom, as inhomogeneous cases.
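The homogeneity criterion described above amounts to a short calculation, sketched below. Two details are assumptions of this sketch because the text does not specify them: the intensities are normalized by an intensity range passed in as `lo` and `hi` (here the 0 to 255 range of an 8‐bit image), and the population standard deviation is used. The function name and sample values are hypothetical; only the 0.1 threshold comes from the text.

```python
import statistics


def classify_target(target_intensities, lo, hi, threshold=0.1):
    """Return (SD of normalized target intensities, category label)."""
    normalized = [(g - lo) / (hi - lo) for g in target_intensities]
    sd = statistics.pstdev(normalized)
    label = "homogeneous" if sd < threshold else "inhomogeneous"
    return sd, label


# Hypothetical target voxels taken from inside a ground truth contour.
flat_sd, flat_label = classify_target([100, 102, 98, 101], lo=0, hi=255)
mixed_sd, mixed_label = classify_target([25, 255, 120, 200], lo=0, hi=255)
```

A nearly uniform target yields an SD far below 0.1, whereas a target mixing dark and bright blocks, like the synthetic phantom, exceeds the threshold.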

Figure 2.

The synthetic phantom segmentation results. (a) A synthetic phantom with an inhomogeneous ground truth target (green contour). The overlaid digits indicate the intensities in each piecewise block. (b) Initial foreground (green) and background (red) SPs; (c) segmentation by RW; (d) segmentation by GC; (e) segmentation by SPARSE. The segmentations in (c) to (e) are shown as red contours.

Table 1.

The target SD values of all clinical cases

| Case # | SD | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1‐12 | | | | | | | | | | | | |
| 13‐24 | | | | | | | | | | | | |

E. Quantifications of segmentation performance

To quantitatively evaluate the segmentation performance, we employ three similarity metrics: the Dice's coefficient (DC), (18,19) the percent error (PE), and the Hausdorff distance (HD). (20) Given the ground truth segmentation region A and the calculated segmentation region B, and their corresponding boundary point sets and , the DC is defined as $DC = 2|A \cap B| / (|A| + |B|)$, which ranges from 0 to 1, corresponding to the worst and the best segmentation, respectively. The PE is defined as with 0 representing the best segmentation. The HD is defined as , where and is the norm on the points of and . For all the evaluated cases (except for cases 7‐10 and cases 17‐24, where the ground truths are available), the targets are delineated manually by three experienced physicians, and the optimal combination of these three raters is estimated using the STAPLE algorithm (21) and serves as the surrogate of the ground truth segmentation for each case. All the generated ground truth segmentations are then used for segmentation accuracy studies between RW, GC, and SPARSE with the above quantitative metrics.
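Two of these metrics can be sketched in a few lines using their standard textbook definitions: the Dice coefficient 2|A ∩ B| / (|A| + |B|) on sets of voxel coordinates, and the symmetric Hausdorff distance with the Euclidean norm on boundary point sets. The Euclidean norm and the function names are assumptions of this sketch; PE is not included. The toy regions are hypothetical.

```python
import math


def dice_coefficient(region_a, region_b):
    """Dice coefficient between two sets of voxel coordinates (1 is a perfect match)."""
    if not region_a and not region_b:
        return 1.0
    return 2.0 * len(region_a & region_b) / (len(region_a) + len(region_b))


def directed_hausdorff(points_x, points_y):
    """Largest distance from a point of points_x to its nearest point of points_y."""
    return max(min(math.dist(p, q) for q in points_y) for p in points_x)


def hausdorff_distance(points_x, points_y):
    """Symmetric Hausdorff distance between two boundary point sets."""
    return max(directed_hausdorff(points_x, points_y),
               directed_hausdorff(points_y, points_x))


# Two 2x2 blocks overlapping in one column; every pixel of a 2x2 block lies on its boundary.
region_a = {(0, 0), (0, 1), (1, 0), (1, 1)}
region_b = {(0, 1), (1, 1), (0, 2), (1, 2)}
dc = dice_coefficient(region_a, region_b)
hd = hausdorff_distance(region_a, region_b)
```

The brute‐force nearest‐point search is quadratic in the number of boundary points, which is adequate for a check on small contours but would need a spatial index or distance transform for full 3D volumes.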

III. RESULTS

A. Synthetic phantom results

Figure 2 compares the segmentation results of the synthetic phantom using the RW, GC, and SPARSE algorithms. This simple phantom contains an inhomogeneous target made up of nine blocks with different intensities ranging from 25 to 255 (Fig. 2(a)), and the same SPs for RW, GC, and SPARSE are seeded in only five of them (Fig. 2(b)). We can see that the RW and GC algorithms both fail to segment the entire target (Fig. 2(c) and (d)). SPARSE, in contrast, yields a successful segmentation (Fig. 2(e)).

B. Influence of initial seed points: quantity and location

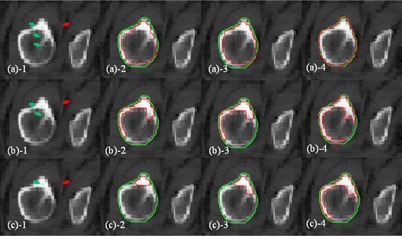

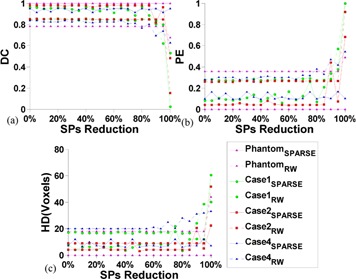

Figure 3 demonstrates an example segmentation of the fibula using RW, GC, and SPARSE with different seed points (case 1). Figure 3(a) shows that RW, GC, and SPARSE can yield satisfactory segmentation results with sufficient SPs. When one (Fig. 3(b)) or two SPs (Fig. 3(c)) inside the fibula are removed, keeping the others at their original positions, the results of RW, GC, and SPARSE all deteriorate, although SPARSE behaves much better than RW and GC. Figure 4 shows another comparison of segmenting a lung tumor with SPs of different locations and quantities (case 2). Figure 4(a) shows that RW, GC, and SPARSE can yield similar satisfactory results when sufficient SPs are labeled. However, when the number of SPs is reduced and their locations are changed (Figs. 4(b) and (c)), the segmentation by RW and GC deteriorates (Fig. 4(b)), or even fails (Fig. 4(c)). In contrast, SPARSE produces more robust segmentation results (Figs. 4(a) to (c)).

Figure 3.

Fibula segmentation with different SPs (case 1). Segmentation of the fibula with one background SP outside of the fibula (red point), and three/two/one foreground SPs (green points in (a), (b), and (c), respectively) inside the fibula. For each seeding in (a), (b), and (c), the red curves represent the segmentation results using RW, GC, and SPARSE. The green curves are the ground truth segmentation.

Figure 4.

Lung tumor segmentation with different SPs (case 2). Segmentation of a lung tumor with sufficient ((a)), limited ((b)) and insufficient ((c)) foreground (green) and background (red) SPs. For each seeding in (a) to (c), the red curves represent the segmentation results using RW, GC, and SPARSE. The green curves are the ground truth segmentation. The yellow arrows indicate the undersegmentation ((a), (b)), oversegmentation ((b)), and failed segmentation ((c)).

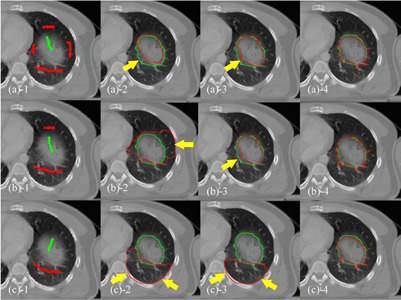

The synthetic phantom and three challenging cases (cases 1, 2, and 4) with inhomogeneous targets are used for further assessment of the impact of the SPs' quantity and location on segmentation performance. To analyze the sensitivity to the SPs' quantity, a certain number of initial SPs are first labeled on the images, and then gradually reduced by random down‐sampling in steps of 5% of the original number. The corresponding segmentation results are tracked at each reduction step and quantitatively measured by three similarity metrics: DC, PE, and HD. Figure 5 compares the segmentation response to SP reduction for RW and SPARSE. For all the evaluated cases, an SP reduction of less than 80% is seen to produce only minor changes in the resulting segmentation using both RW and SPARSE, while sharp drops in segmentation quality occur when the reduction is larger than 80%. The perturbation of segmentation performance in SPARSE is slightly larger than in RW; however, SPARSE always yields better segmentation results than RW for all three metrics.

Figure 5.

Sensitivity analysis of SPs' quantity using the synthetic phantom and cases 1, 2 and 4. DC (a), PE (b), and HD (c) are comparisons of the segmentation response to SPs reduction between RW and SPARSE, respectively. The x‐axis indicates the percentage of SPs reduction (5% interval) with respect to the initial seeding. Note that the 100% value corresponds to the scenario when only one SP is left.
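The seed reduction protocol can be sketched as below. The sketch assumes nested subsets (seeds removed at one step stay removed at later steps), which the text does not state explicitly; the function name, the fixed random seed, and the 40 placeholder seed coordinates are hypothetical. As in the caption above, the 100% step keeps a single seed.

```python
import random


def reduction_schedule(seeds, step_percent=5, rng_seed=0):
    """List of (percent removed, remaining seeds) from 0% to 100% in fixed steps."""
    order = list(seeds)
    random.Random(rng_seed).shuffle(order)  # random removal order, reproducible
    total = len(order)
    schedule = []
    for percent in range(0, 101, step_percent):
        removed = round(total * percent / 100)
        keep = max(1, total - removed)  # at 100% exactly one seed is left
        schedule.append((percent, order[:keep]))
    return schedule


initial_seeds = [(i, 0) for i in range(40)]  # placeholder seed coordinates
schedule = reduction_schedule(initial_seeds)
```

Each entry of `schedule` would be fed to the segmentation algorithm in turn, and DC, PE, and HD recorded against the percentage removed.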

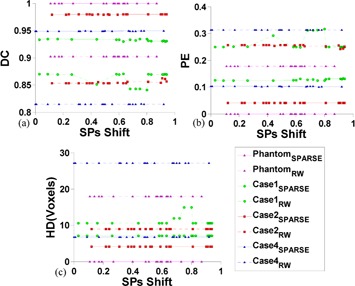

To analyze the sensitivity to the SPs' location, arbitrary SP placement is simulated by shifting the labeled SPs with a random direction and amplitude. Specifically, given certain initial labeled SPs, the shift amplitude of each SP is obtained by multiplying the minimum distance from its current location to the boundary points on the ground truth segmentation by a random number in the range (0,1). The shift direction is randomly assigned. Perturbed in this way, the initial SPs can only move within a reasonable distance without crossing the boundary (e.g., moving the foreground points into the background). The segmentation results are tracked for each random SP location and quantitatively measured by DC, PE, and HD. Figure 6 compares the segmentation performance of RW and SPARSE relative to the SP position changes. In RW, a small variation of segmentation performance is observed in the synthetic phantom and in cases 1 and 2, but a large perturbation is seen in case 4. In contrast, segmentation via SPARSE is stable for all the evaluated cases. Moreover, SPARSE behaves much better than RW in terms of segmentation accuracy.

Figure 6.

Sensitivity analysis of SPs' location using the synthetic phantom and cases 1, 2 and 4. DC (a), PE (b), and HD (c) are comparisons of the segmentation response to random SP locations between RW and SPARSE, respectively. Given certain initial labeled SPs, the shift amplitude of each SP is obtained by multiplying the minimum distance from its current location to the boundary points on the ground truth segmentation by a random number in the range (0,1), which is the x‐axis. The shift direction is randomly assigned.
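The location perturbation described above can be sketched in 2D as follows. The function name, the square boundary, and the continuous (unrounded) output coordinates are assumptions of this sketch; in practice the shifted position would be rounded to a voxel. Note that Python's `random()` draws from [0, 1), which differs from the open interval (0,1) only at the endpoint 0.

```python
import math
import random


def perturb_seed(seed, boundary_points, rng):
    """Shift one 2D seed in a random direction by a fraction of its distance to the boundary."""
    min_distance = min(math.dist(seed, b) for b in boundary_points)
    amplitude = min_distance * rng.random()      # random fraction of the minimum distance
    angle = rng.uniform(0.0, 2.0 * math.pi)      # random shift direction
    return (seed[0] + amplitude * math.cos(angle),
            seed[1] + amplitude * math.sin(angle))


# Hypothetical ground truth boundary: the perimeter of a 10x10 square.
boundary = ([(0, y) for y in range(11)] + [(10, y) for y in range(11)]
            + [(x, 0) for x in range(11)] + [(x, 10) for x in range(11)])
rng = random.Random(1)
moved_seed = perturb_seed((5.0, 5.0), boundary, rng)
```

Because the shift never exceeds the distance to the nearest boundary point, a foreground seed stays inside the target and a background seed stays outside it.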

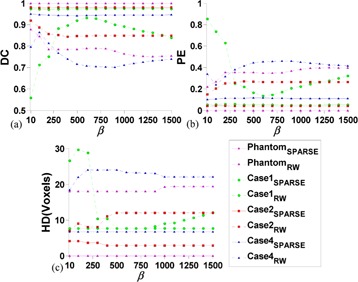

C. Influence of parameter β

Since the number of SPs is largely increased via SPs autogeneration, the influence of β is weakened in SPARSE. Figure 7 shows experiments of the segmentation response to different β. It is shown that the segmentation results vary greatly when different β is used in RW, and the optimal β is case‐dependent, since no fixed perturbation pattern is observed in all the three cases. In contrast, the segmentations are stable relative to different β used in SPARSE for the synthetic phantom and all the three clinical cases, implying that the segmentation is essentially independent of the selection of β. Similar results are also obtained in other evaluated cases, though only three outputs are depicted in Fig. 7 for the clarity of comparison. Therefore, we empirically set for SPARSE, and the optimal ones are used for RW for all the other comparison studies in this study.

Figure 7.

Sensitivity analysis of β using the synthetic phantom and case 1, 2 and 4. DC (a), PE (b), and HD (c) are comparisons of the segmentation response to different β (with same SPs) between RW and SPARSE, respectively. β increases from 10 to 1500 with interval size of 100.

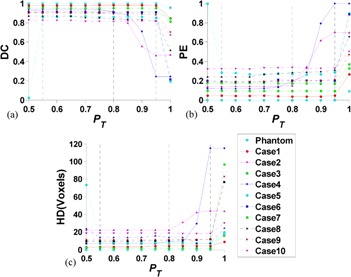

D. Influence of parameter PT

Figure 8 presents the segmentation response to different PT in the synthetic phantom and ten segmentation cases (cases 1‐10). We have the following two observations. First, the performance of SPARSE is stable when a small PT is used, and degenerates as PT increases beyond a certain point in all evaluated cases. The turning points are around 0.8 and 0.95 for 2D and 3D, respectively. Theoretically, a larger PT implies a stricter growing condition; in other words, fewer points will be included into the ESPs chain. If no new points are grown into ESPs, SPARSE will degenerate to RW. Secondly, when a small PT is used (PT ≤ 0.5), the ESPs might be overgrown, or one point might be assigned to more than one label. Case 5 in Fig. 8 is a typical example in which a small PT leads to a failed segmentation. Based on these observations from both the phantom and clinical cases, we heuristically assume that an appropriate range for PT is [0.6, 0.9], and we empirically set a single PT value for all the evaluated cases in this study.

Figure 8.

The DC (a), PE (b), and HD (c) by SPARSE with different PT for the synthetic phantom and cases 1‐10. The solid (phantom and cases 1‐4) and dashed (cases 5‐10) lines correspond to the 2D and 3D cases, respectively.

E. More clinical cases

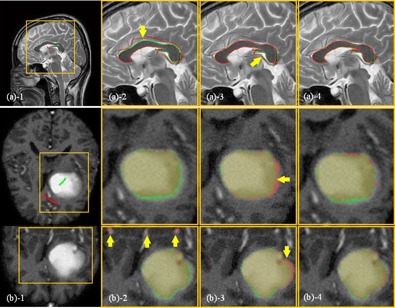

Figure 9 illustrates the comparison of results of segmenting relatively homogeneous targets. Figure 9(a) shows the segmentation comparisons of the corpus callosum in a T2 MR image (case 3). Since only limited SPs are labeled in this case, oversegmentation is observed in RW and GC (arrow in Fig. 9(a) ), while the SPARSE yields a much better segmentation result (Fig. 9(a) ). Figure 9(b) shows a case of segmenting a glioma in a T1c MR image (case 7). We can see that oversegmentations are sparsely distributed in some voxels outside of the target region in RW (arrows in Fig. 9(b) ), and slight oversegmentation is also observed in GC (arrows in Fig. 9(b) ). Comparatively, the SPARSE can generate more accurate results (Fig. 9(b) ).

Figure 9.

Segmentation of homogeneous targets: (a) the corpus callosum in a T2 MR image (case 3) and (b) the glioma in a T1c MR image (case 7). The ROIs, which are indicated by the yellow boxes, are shown in the zoomed‐in views for clarity. The panels in (a) show, in order, the SPs labeled inside (green) and outside (red) of the corpus callosum, and the segmentations by RW, GC, and SPARSE; the red and green curves represent the calculated and ground truth segmentations, respectively, and the yellow arrow indicates the oversegmentation. The panels in (b) show, in order, the SPs labeled inside (green) and outside (red) of the brain glioma on only one transversal slice, and the segmentations by RW, GC, and SPARSE; the upper and lower rows are the transversal and coronal slices, respectively, the red and green masks represent the calculated and ground truth segmentations, and the yellow arrows indicate the oversegmentation.

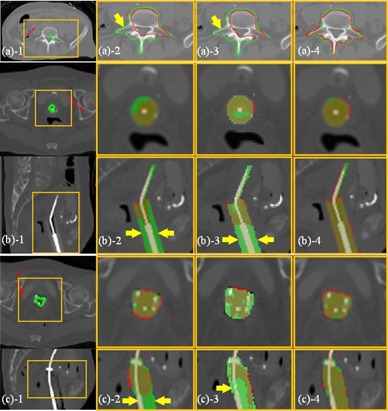

Figure 10 compares the results of segmenting inhomogeneous targets in CT images. Figure 10(a) shows the segmentation of the vertebra (case 4). Undersegmentation is observed in RW and GC (arrow in Fig. 10(a)), while SPARSE yields a satisfactory result. Figures 10(b) and (c) show the segmentations of two different types of applicator in CT images: the T&C applicator and the T&O applicator, respectively (cases 5, 6). RW and GC produce severe undersegmentation, while SPARSE generates accurate results (Figs. 10(b) and (c)).

Figure 10.

Segmentation of inhomogeneous targets (CT). The ROIs, which are indicated by the yellow boxes, are shown in the zoomed‐in views for clarity. (a) The vertebra in a CT image (case 4); the panels show, in order, the SPs labeled inside (green) and outside (red) of the vertebra, and the segmentations (red curves) by RW, GC, and SPARSE. The green curves in (a) represent the ground truth segmentation, and the yellow arrow in (a) indicates undersegmentation in RW. (b) and (c) The T&C applicator and the T&O applicator in CT images, respectively (cases 5, 6); the panels show, in order, the SPs labeled inside (green) and outside (red) of the applicator on only one transversal slice, and the segmentations (red masks) by RW, GC, and SPARSE. Rows in (b) and (c) are transversal and sagittal slices, respectively. The green masks in (b) and (c) represent the ground truth segmentation. The yellow arrows in (b) and (c) indicate the undersegmentation in RW and GC.

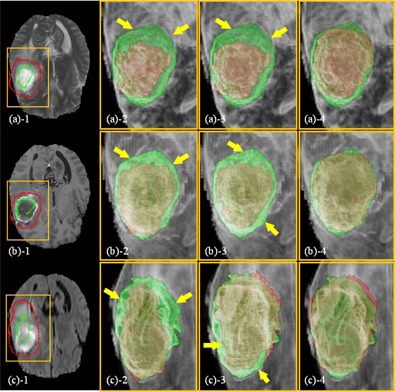

Figure 11 shows further inhomogeneous cases: segmenting a glioma in T2 and T1c MR images (cases 8, 9) (Fig. 11(a) and (b)), and a glioma and edema in a FLAIR MR image (case 10) (Fig. 11(c)). Undersegmentation is also observed in RW and GC (Figs. 11(a) and (c)); in contrast, SPARSE generates more accurate results (Figs. 11(a) to (c)).

Figure 11.

Segmentation of inhomogeneous targets (MR): (a) the glioma in T2 MR image (case 8), (b) T1c MR image (case 9), and (c) the glioma and edema in FLAIR MR image (case 10). The ROIs, which are indicated by the yellow boxes, are shown in the zoomed‐in views for clarity. First column: SPs labeled inside (green) and outside (red) of the targets on one transversal slice of T2 ((a)), T1c ((b)), and FLAIR ((c)) MR image. Second column: segmentation by RW. Third column: segmentation by GC. Fourth column: segmentation by SPARSE. In the second, third, and fourth columns, the red and green masks represent the calculated and the ground truth segmentations, respectively, and the images are rendered in 3D.

F. Quantitative evaluations

The quantitative comparison results for all the 3D cases (cases 5‐24) are listed in Table 2. We can see that SPARSE is superior to RW and GC in terms of segmentation accuracy for all the evaluated cases in all three metrics. For RW, the mean DC, PE, and HD are 0.47, 0.69, and 50.74 voxels, respectively. For GC, the mean DC, PE, and HD are 0.59, 0.58, and 15.62 voxels, respectively, which are better than those of RW. For SPARSE, in contrast, the mean DC increases to 0.89, and the mean PE and HD decrease to 0.23 and 8.24 voxels, respectively.

Table 2.

Comparisons of DC, PE, and HD between RW, GC, and SPARSE of 3D cases

| Case # | Image Type | DC: RW | DC: GC | DC: SPARSE | PE: RW | PE: GC | PE: SPARSE | HD (voxels): RW | HD (voxels): GC | HD (voxels): SPARSE |
|---|---|---|---|---|---|---|---|---|---|---|
| 7, 17, 18 | T1c MR | 0.61 () | 0.67 () | 0.89 () | 0.54 () | 0.48 () | 0.22 () | 42.78 () | 11.38 () | 6.31 () |
| 5, 11, 12 | HDR CT with T&C applicator | 0.44 () | 0.49 () | 0.89 () | 0.72 () | 0.67 () | 0.21 () | 70.72 () | 10.10 () | 6.90 () |
| 6, 13‐16 | HDR CT with T&O applicator | 0.37 () | 0.47 () | 0.90 () | 0.79 () | 0.68 () | 0.23 () | 63.45 () | 17.68 () | 4.85 () |
| 8, 19, 22 | T2 MR | 0.40 () | 0.70 () | 0.92 () | 0.75 () | 0.53 () | 0.17 () | 40.83 () | 16.05 () | 8.70 () |
| 9, 20, 23 | T1c MR | 0.54 () | 0.57 () | 0.88 () | 0.63 () | 0.60 () | 0.26 () | 35.56 () | 16.70 () | 12.66 () |
| 10, 21, 24 | FLAIR MR | 0.50 () | 0.50 () | 0.86 () | 0.65 () | 0.65 () | 0.28 () | 37.86 () | 20.42 () | 12.37 () |
| Mean | | 0.47 () | 0.59 () | 0.89 () | 0.69 () | 0.58 () | 0.23 () | 50.74 () | 15.62 () | 8.24 () |

G. Computational efficiency

All the experiments in this study were conducted on a GPU platform with an NVIDIA Tesla C1060 card with 240 processors running at 1.3 GHz. It is also equipped with 4 GB of DDR3 memory, shared by all processors. The mean computation time is s for four 2D cases (cases 1‐4) and s for twenty 3D cases (cases 5‐24), respectively. Table 3 lists the computational times for all the 3D cases. It can be seen that the computational time depends on the complexity of the cases tested.

Table 3.

Computational times for all the 3D cases

| Image Size (case #) | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Time (s) | 22.33 | 23.17 | 24.55 | 31.20 | 32.85 | 39.18 | 40.46 | 46.02 |

IV. DISCUSSION & CONCLUSIONS

In this paper, we presented an RW‐based segmentation algorithm, SPARSE, which incorporates a novel SP autogeneration scheme for segmentation of inhomogeneous targets. Evaluations of segmentation tasks in clinical images reveal that SPARSE decreases the sensitivity to the location and quantity of the initial seed points, as well as the dependence on the free parameter β in the edge weighting function. With the GPU implementation, the robustness and accuracy of the proposed method have been demonstrated on various test cases in the segmentation of inhomogeneous objects, especially for 3D cases.

One merit of the proposed SP autogeneration in SPARSE is that the influence of the initial SP placement on the final segmentation can be reduced. The sensitivities to both SP quantity and location are evaluated by visual inspection, as well as by quantitative analysis. It has been shown that the variation of segmentation performance in SPARSE is negligible when the number of SPs is reduced or the locations of SPs are changed randomly, and SPARSE generally produces segmentation results superior to those of RW when SPs are perturbed. Another gain of the SP expansion strategy is the reduced workload and labor in manual SP selection, making the SPARSE algorithm a practical tool for segmentation tasks in clinics. Manual SP labeling is tedious and usually time‐consuming for most interactive segmentation algorithms. In SPARSE, however, the user only needs to specify several SPs on one or a few slices of a 3D volume, instead of carefully seeding through all the slices, to obtain a satisfactory segmentation. This will significantly facilitate the clinical workflow.

In practice, more SPs usually imply more target/background intensity information, which can theoretically maximize the performance of RW. SPARSE facilitates collecting such intensity information to a feasible extent with only limited user interaction, and it is because of this “incremented” information, obtained by incrementing the SPs, that more robust segmentation can be expected. Although SPARSE depends less on the initial SPs, to guarantee appropriate SP autogeneration the initial SPs still need to be labeled at locations that are representative of the different intensity levels in the target/background, instead of being seeded arbitrarily. Furthermore, SP autogeneration eases the selection of β. Choosing an appropriate β for RW is a nontrivial task, since β is essentially case dependent and the segmentation performance can vary dramatically when different β values are used. SPARSE, however, weakens this dependence through the incremental SPs, as has been demonstrated in this study.

In Eqs. (6) and (7), the tuning parameter σ is set according to the mean intensity difference between the labeled SPs inside and outside the target, so that the choice of σ remains tied to the intensity contrast between the target and the background. This approach is shown to be effective in distinguishing low‐contrast targets.
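The contrast quantity mentioned above can be sketched as follows. The sketch only computes a mean intensity difference between inside and outside SPs, taken here as the absolute difference of the two group means (an assumption); it does not reproduce how σ is derived from that value. The function names and the toy 2D image are hypothetical.

```python
def mean_intensity(image, seeds):
    """Average intensity over a list of (row, col) seed positions."""
    return sum(image[r][c] for r, c in seeds) / len(seeds)


def seed_contrast(image, inside_seeds, outside_seeds):
    """Absolute difference between mean inside and mean outside intensity (assumed definition)."""
    return abs(mean_intensity(image, inside_seeds) - mean_intensity(image, outside_seeds))


# Toy 2D image: dark background on the left, brighter target on the right.
image = [
    [10, 12, 50],
    [11, 13, 52],
]
inside = [(0, 2), (1, 2)]   # hypothetical SPs labeled inside the target
outside = [(0, 0), (1, 1)]  # hypothetical SPs labeled in the background

contrast = seed_contrast(image, inside, outside)
print(contrast)
```

A low-contrast target yields a small value, which is the situation in which tying σ to this quantity is meant to help.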

In SPARSE, the threshold PT controlling the SPs growth is chosen empirically, based on experiments with ten segmentation tasks. A larger PT usually implies a stricter growing condition. However, PT is not the only factor contributing to the SPs growth. Connectivity of voxels in the probability map is another factor, and it depends purely on the textural characteristics of the image. Therefore, overgrowth of SPs is theoretically possible even if a small PT is used, in which case manual intervention (e.g., deleting certain undesired incremented SPs) becomes necessary. According to our observation, satisfactory segmentation performance can be obtained when PT lies in the range [0.6, 0.9]. We have used a relatively strict PT to select only those points with high similarity to the user‐labeled SPs and include them in the SPs chain. For simplicity, we heuristically use the same PT for each label s, though this value might not be optimal for all labels. More sophisticated methods (e.g., adaptive approaches) need to be investigated for a better selection of PT, and we plan to address this in future work.
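The interplay between the threshold and connectivity can be sketched with a simple flood fill. This is a simplified 2D illustration under stated assumptions (4-connectivity, a precomputed probability map for one label, a breadth-first traversal), not the GPU implementation used in SPARSE. The function name `grow_seeds` and the toy probability map are hypothetical.

```python
from collections import deque


def grow_seeds(prob, seeds, pt):
    """Grow seeds through 4-connected pixels whose probability is at least pt."""
    rows, cols = len(prob), len(prob[0])
    grown = set(seeds)      # user-labeled seeds are always kept
    queue = deque(seeds)
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside_image = 0 <= nr < rows and 0 <= nc < cols
            if inside_image and (nr, nc) not in grown and prob[nr][nc] >= pt:
                grown.add((nr, nc))
                queue.append((nr, nc))
    return grown


# Toy probability map: the right-hand column has high probability
# but is separated from the seed by a low-probability column.
prob = [
    [0.95, 0.85, 0.30, 0.92],
    [0.88, 0.65, 0.20, 0.91],
    [0.10, 0.15, 0.25, 0.90],
]
strict = grow_seeds(prob, [(0, 0)], pt=0.8)
loose = grow_seeds(prob, [(0, 0)], pt=0.6)
print(sorted(strict))
print(sorted(loose))
```

Lowering the threshold admits one more pixel next to the seed, while the high-probability column on the right is never reached because it is not connected to the seed region. In an image where such regions are connected, the same rule would let the seeds spread, which is the overgrowth case that requires manual correction.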

ACKNOWLEDGMENTS

This work is supported in part by the National Natural Science Foundation of China (No. 81301940 and No. 81428019). Brain tumor image data used in this work were obtained from the MICCAI 2012 Challenge on Multimodal Brain Tumor Segmentation (http://www.imm.dtu.dk/projects/BRATS2012) organized by B. Menze, A. Jakab, S. Bauer, M. Reyes, M. Prastawa, and K. Van Leemput. The challenge database contains fully anonymized images from the following institutions: ETH Zurich, University of Bern, University of Debrecen, and University of Utah.

Contributor Information

Xin Zhen, Email: xinzhen@smu.edu.cn.

Linghong Zhou, Email: smart@smu.edu.cn.

REFERENCES

- 1. Jung I‐S, Thapa D, Wang G‐N. Automatic segmentation and diagnosis of breast lesions using morphology method based on ultrasound. In: Wang L, Jin Y, editors. Fuzzy systems and knowledge discovery. Lecture Notes in Computer Science, 3614. Berlin: Springer; 2005. p. 1079–88.

- 2. Ito M, Sato K, Fukumi M, Namura I, editors. Brain tissues segmentation for diagnosis of Alzheimer‐type dementia. Nuclear Science Symposium and Medical Imaging Conference (NSS/MIC), Oct 23‐29, 2011. Piscataway, NJ: IEEE; 2011.

- 3. Popple R, Griffith H, Sawrie S, Fiveash J, Brezovich I. Implementation of Talairach atlas based automated brain segmentation for radiation therapy dosimetry. Technol Cancer Res Treat. 2006;5(1):15–21.

- 4. Pejavar S, Yom SS, Hwang A, et al. Computer‐assisted, atlas‐based segmentation for target volume delineation in whole pelvic IMRT for prostate cancer. Technol Cancer Res Treat. 2013;12:199–206.

- 5. Schreibmann E, Marcus DM, Fox T. Multiatlas segmentation of thoracic and abdominal anatomy with level set‐based local search. J Appl Clin Med Phys. 2014;15(4):4468. Retrieved September 10, 2014 from http://www.jacmp.org

- 6. Pham DL, Xu C, Prince JL. Current methods in medical image segmentation. Annu Rev Biomed Eng. 2000;2(1):315–37.

- 7. Olabarriaga SD, Smeulders AWM. Interaction in the segmentation of medical images: a survey. Med Image Anal. 2001;5(2):127–42.

- 8. Grady L, Funka‐Lea G. Multi‐label image segmentation for medical applications based on graph‐theoretic electrical potentials. In: Sonka M, Kakadiaris I, Kybic J, editors. Computer vision and mathematical methods in medical and biomedical image analysis. Lecture Notes in Computer Science, 3117. Berlin: Springer; 2004. p. 230–45.

- 9. Grady L, Schiwietz T, Aharon S, Westermann R. Random walks for interactive organ segmentation in two and three dimensions: implementation and validation. In: Duncan J, Gerig G, editors. Medical image computing and computer‐assisted intervention–MICCAI 2005. Lecture Notes in Computer Science, 3750. Berlin: Springer; 2005. p. 773–80.

- 10. Dong L, Wang X, Tong T, et al., editors. Left ventricle segmentation from MSCT data based on random walks approach. 3rd International Congress on Image and Signal Processing (CISP), October 16‐18, 2010.

- 11. Li K‐C, Su H‐R, Lai S‐H. Pedestrian image segmentation via shape‐prior constrained random walks. In: Ho Y‐S, editor. Advances in image and video technology. Lecture Notes in Computer Science, 7088. Berlin: Springer; 2011. p. 215–26.

- 12. Onoma DP, Ruan S, Gardin I, Monnehan GA, Modzelewski R, Vera P, editors. 3D random walk based segmentation for lung tumor delineation in PET imaging. 9th IEEE International Symposium on Biomedical Imaging (ISBI), May 2‐5, 2012.

- 13. Cui H, Wang X, Fulham M, Feng DD, editors. Prior knowledge enhanced random walk for lung tumor segmentation from low‐contrast CT images. 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), July 3–7, 2013.

- 14. Boykov Y, Funka‐Lea G. Graph cuts and efficient N‐D image segmentation. Int J Comput Vision. 2006;70(2):109–31.

- 15. Grady L. Random walks for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2006;28(11):1768–83.

- 16. Adams R, Bischof L. Seeded region growing. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1994;16(6):641–47.

- 17. Dalton S, Bell N. CUSP: A C++ templated sparse matrix library. Available from: http://cusplibrary.github.io/.

- 18. Dice L. Measures of the amount of ecologic association between species. Ecology. 1945;26(3):297–302.

- 19. Zou K, Warfield S, Bharatha A, et al. Statistical validation of image segmentation quality based on a spatial overlap index. Acad Radiol. 2004;11(2):178–89.

- 20. Huttenlocher DP, Klanderman GA, Rucklidge WJ. Comparing images using the Hausdorff distance. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1993;15(9):850–63.

- 21. Warfield SK, Zou KH, Wells WM. Simultaneous truth and performance level estimation (STAPLE): an algorithm for the validation of image segmentation. IEEE Trans Med Imaging. 2004;23(7):903–21.