Significance

We find that very young children make fine-grained distinctions among positive emotional expressions and connect diverse emotional vocalizations to their probable eliciting causes. Moreover, when infants see emotional reactions that are improbable, given observed causes, they actively search for hidden causes. The results suggest that early emotion understanding is not limited to discriminating a few basic emotions or contrasts across valence; rather, young children’s understanding of others’ emotional reactions is nuanced and causal. The findings have implications for research on the neural and cognitive bases of emotion reasoning, as well as investigations of early social relationships.

Keywords: emotion understanding, emotional vocalizations, causal knowledge, infants, preschoolers

Abstract

The ability to understand why others feel the way they do is critical to human relationships. Here, we show that emotion understanding in early childhood is more sophisticated than previously believed, extending well beyond the ability to distinguish basic emotions or draw different inferences from positively and negatively valenced emotions. In a forced-choice task, 2- to 4-year-olds successfully identified probable causes of five distinct positive emotional vocalizations elicited by what adults would consider funny, delicious, exciting, sympathetic, and adorable stimuli (Experiment 1). Similar results were obtained in a preferential looking paradigm with 12- to 23-month-olds, a direct replication with 18- to 23-month-olds (Experiment 2), and a simplified design with 12- to 17-month-olds (Experiment 3; preregistered). Moreover, 12- to 17-month-olds selectively explored when the observed causes of positive emotional reactions were improbable (Experiments 4 and 5; preregistered). The results suggest that by the second year of life, children make sophisticated and subtle distinctions among a wide range of positive emotions and reason about the probable causes of others’ emotional reactions. These abilities may play a critical role in developing theory of mind, social cognition, and early relationships.

Emotions, in my experience, aren’t covered by single words. I don’t believe in “sadness,” “joy,” or “regret” … I’d like to show how “intimations of mortality brought on by aging family members” connects with “the hatred of mirrors that begins in middle age.” I’d like to have a word for “the sadness inspired by failing restaurants” as well as for “the excitement of getting a room with a minibar.”

Jeffrey Eugenides, Middlesex (1)

Few abilities are more fundamental to human relationships than our ability to understand why other people feel the way that they do. Insight into the causes of people’s emotional reactions allows us to empathize with, predict, and intervene in others’ experiences of the world. Unsurprisingly, therefore, the conceptual and developmental bases of emotion perception and understanding have been topics of recent interest in a wide range of disciplines, including psychology, anthropology, neuroscience, and computational cognitive science (2–7). Reasoning about emotion has also been a central goal of recent efforts in artificial intelligence (8, 9). However, much remains to be learned even about how young humans understand others’ emotional reactions.

As adults, our understanding of emotion is sufficiently sophisticated that English speakers can appreciate the distinction between “sadness” and “regret” or between “joy” and “excitement.” To the degree that we make such distinctions, we represent not only the meaning of emotion words but also the causes and contexts that elicit them and the expressions and vocalizations that accompany them. Nonetheless, as the quotation by Eugenides (1) suggests, even the abundance of English emotion words may be insufficient to capture the fine-grained relationship between events in the world and our emotional responses. By the same token, however, emotion words may not be necessary to such fine-grained representations; even preverbal children may represent not only what other people feel but also why. Here, we ask to what extent very young children make fine-grained, within-valence distinctions among emotions, and both infer and search for probable causes of others’ emotional reactions. [Note that our focus here is on children’s intuitive theory of emotions rather than scientific theories of what causes or constitutes an emotion. Thus, for simplicity we refer to “emotions” throughout, although we recognize that scientific theories make important distinctions between, for instance, emotion and affect (e.g., ref. 10).]

Newborns respond differently to different emotional expressions within hours of birth (11). By 7 months, infants represent emotional expressions cross-modally and distinguish emotional expressions within valence [e.g., matching happy faces to happy voices and interested faces to interested ones (12–14)]. This early sensitivity might reflect only a low-level ability to distinguish characteristic features of emotional expressions rather than any understanding of emotion per se (e.g., ref. 15). However, by the end of the first year, infants connect emotional expressions to goal-directed actions. Ten-month-olds look longer when an agent expresses a negative (versus positive) emotional reaction to achieving a goal (16), and 12-month-olds approach or retreat from ambiguous stimuli depending on whether the parent expresses a positive or negative emotion (e.g., ref. 17). Two-year-olds and 3-year-olds explicitly predict that an agent will express positive emotions when her desires are fulfilled and negative ones when her desires are thwarted (18), and can guess whether someone is looking at something desirable or undesirable based on whether she reacts positively or negatively (19).

Nonetheless, some work suggests that infants and toddlers often fail to connect others’ emotional reactions to specific events in the world. Nine-month-olds use novel words, but not differently valenced emotional reactions, to distinguish object kinds (20), and 14-month-olds use the direction of someone’s eye gaze, but not her emotional expressions, to predict the target of her reach (21). Similarly, if an experimenter frowns at a food a child likes but smiles at a food she dislikes, 14-month-olds fail to use the valenced reactions to infer that the experimenter’s preferences differ from the child’s own (22). The interpretation of such failures is ambiguous: Object labels and direction of gaze may be more reliable than emotional expressions as cues to object individuation and direction of reach; similarly, infants may understand that emotions indicate preferences while resisting the idea that others’ preferences differ from their own. Critically, however, whether implying precocious or protracted emotion understanding, prior work on early emotion understanding has focused almost exclusively on children’s ability to distinguish a few basic emotions or draw different inferences from positively and negatively valenced emotional expressions. Thus, it is unclear to what extent young children make more fine-grained distinctions.

Indeed, some researchers have proposed that children initially categorize emotions only as “feeling good” and “feeling bad” (reviewed and discussed in ref. 2). Children struggle with explicitly labeling and sorting emotional expressions well into middle childhood, and are often more successful at identifying emotion labels given information about the cause and behavioral consequences of the emotion than given the emotional expression itself (23, 24). Consistent with this, preschoolers know numerous “scripts” connecting familiar emotions and events [e.g., getting a puppy and happiness, dropping an ice cream cone and sadness (25–27)].

Insofar as individuals’ emotional reactions depend on their appraisal of events (28–30), such relationships hold only in probability. However, children’s later developing ability to integrate their understanding of emotion with information about others’ beliefs and desires (reviewed in refs. 31, 32) might be supported by an earlier ability to connect emotional reactions to their probable causes just as causal knowledge supports categorization and conceptual enrichment in other domains (33, 34). Indeed, arguably, it would be surprising if infants were entirely insensitive to such predictive relationships, given their general abilities at statistical learning (e.g., ref. 35) and the sophistication of early social cognition overall (reviewed in ref. 36). To the degree that particular kinds of events are reliably associated with particular kinds of emotional reactions within a given cultural context (37), young children might learn relationships between eliciting events and emotional responses just as they learn probabilistic relationships in other domains (38, 39). In this way, children might make nuanced distinctions among emotions well before they learn the words, if any, corresponding to such distinctions.

Connecting Diverse Positive Emotional Vocalizations to Their Probable Causes

To look at young children’s understanding of relatively fine-grained relationships between eliciting events and others’ emotional reactions, we present participants with generative causes of five distinct positive emotional vocalizations and ask whether children can link the vocalization with the probable eliciting cause. We focus on positive emotions because little is known about children’s ability to make these kinds of discriminations (i.e., none of the contrasts tested here are represented in distinctions among basic emotions like happiness, sadness, anger, fear, and disgust).

Eliciting Cause Stimuli and Emotional Vocalizations.

Two female adults blind to the study design were asked to vocalize a nonverbal emotional response to images from five categories: funny (children making silly faces), delicious (desserts), exciting (light-up toys), adorable (cute babies), and sympathetic (crying babies). The categories were chosen semiarbitrarily, constrained by the criteria that the images had to be recognizable to young children and had to elicit distinct positive emotional reactions from adults. With respect to crying babies, note that although the image is negative, the adult response was positive and consoling. Four individual pictures were chosen from each of the categories, resulting in a set of 20 different images. Representative images are shown in SI Appendix, Fig. S1. One vocalization was chosen for each image (audio files are available at https://osf.io/an57k/?view_only=def5e66600b0441482c10763541e3ac2). Note that we cannot do justice here to the interesting question of when spontaneous emotional responses to stimuli become paralinguistic or entirely lexicalized parts of speech (e.g., the involuntary cry of pain to “ouch!”, the gasp of surprise to “oh!”; reviewed in ref. 40); however, as Scherer (40) notes, there may be points on the continuum where no clear distinction can be made. Given the conditions under which they were elicited, we believe it is reasonable to treat the current stimuli as intentional communicative (albeit nonverbal) affect bursts. However, for the current purposes, nothing rests on drawing a sharp distinction between involuntary and voluntary exclamations.

Experiment 1: Children Aged 2 to 4 Years and Adults.

On each trial, one image from each of two different categories was randomly selected and presented on different sides of a screen. The vocalization elicited by one of the images was randomly selected and played. Each image was seen on exactly two test trials, once as the target and once as the distractor; each vocal expression was played on only a single test trial. Children were told the sound was made by a doll (Sally) who sat facing the screen and were asked “Which picture do you think Sally is looking at?”
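The pairing constraints just described (each image serves once as the target and once as the distractor, and the two images on a trial come from different categories) can be sketched as follows. This is a minimal illustration and not the authors' stimulus script: the category labels come from the text, whereas the image identifiers, the function name `make_trials`, and the use of rejection sampling are assumptions. Left/right placement is not modeled.

```python
import random

CATEGORIES = ["funny", "delicious", "exciting", "adorable", "sympathetic"]
IMAGES_PER_CATEGORY = 4  # 5 categories x 4 images = 20 images


def make_trials(rng):
    """Return 20 (target, distractor) pairs of (category, index) images.

    Each image is a target exactly once and a distractor exactly once, and
    the two images on a trial always come from different categories.
    """
    images = [(c, i) for c in CATEGORIES for i in range(IMAGES_PER_CATEGORY)]
    while True:  # rejection sampling: reshuffle until the constraints hold
        distractors = images[:]
        rng.shuffle(distractors)
        if all(t[0] != d[0] for t, d in zip(images, distractors)):
            trials = list(zip(images, distractors))
            rng.shuffle(trials)  # randomize presentation order
            return trials


trials = make_trials(random.Random(0))
```

Because one vocalization was chosen per image, using each image as the target exactly once also means each vocalization is played on a single trial.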

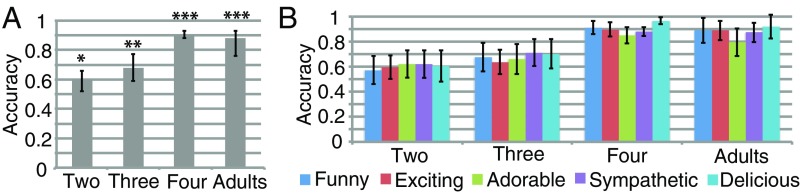

Accuracy was calculated as the number of correct responses over the total number of trials completed. Overall, children successfully matched the vocalizations to their probable eliciting causes [mean (M) = 0.73, SD = 0.192, 95% confidence interval (CI) [0.67, 0.78], t(47) = 8.18, P < 0.001, d = 1.18; one-sample t test, two-tailed]. A mixed-effects model showed no main effect of the category of the eliciting cause [F(4, 188) = 0.93, P = 0.449] but a main effect of age [F(1, 46) = 32.77, P < 0.001]. More information about the model is provided in SI Appendix, section 1.1 and Tables S1 and S2. Post hoc analyses found that children in every age bin succeeded [collapsing across categories, 2-year-olds: M = 0.60, SD = 0.143, 95% CI [0.52, 0.66], t(15) = 2.75, P = 0.015, d = 0.69; 3-year-olds: M = 0.68, SD = 0.194, 95% CI [0.58, 0.77], t(15) = 3.64, P = 0.002, d = 0.91; 4-year-olds: M = 0.90, SD = 0.055, 95% CI [0.88, 0.93], t(15) = 29.59, P < 0.001, d = 7.40]. A group of adults also succeeded [M = 0.88, SD = 0.153, 95% CI [0.76, 0.93], t(15) = 9.82, P < 0.001, d = 2.45]. Two-year-olds and 3-year-olds performed similarly to each other (P = 0.466, Tukey’s test); neither age group reached adult-like performance (P < 0.001). By contrast, 4-year-olds differed from younger children (P < 0.001) and did not differ significantly from adults (P = 0.950) (Fig. 1).
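As a worked check on the arithmetic, the effect size and t statistic for a one-sample test against chance (0.5, given two pictures per trial) can be approximated from the reported summary statistics. The function name is ours, and because the inputs are rounded, the outputs only approximate the reported values.

```python
import math


def one_sample_from_summary(mean, sd, n, chance=0.5):
    """Cohen's d and t for a one-sample test against `chance`."""
    d = (mean - chance) / sd
    t = d * math.sqrt(n)
    return d, t


# Overall child sample in Experiment 1: M = 0.73, SD = 0.192, n = 48 (df = 47).
d, t = one_sample_from_summary(0.73, 0.192, 48)
print(f"d = {d:.2f}, t({48 - 1}) = {t:.2f}")
```

With these rounded inputs the sketch gives d of about 1.20 and t of about 8.30, close to the reported d = 1.18 and t(47) = 8.18; the small gap is consistent with rounding of M and SD.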

Fig. 1.

Results of Experiment 1. (A) Accuracy by age group. (B) Accuracy by age group and the category of the eliciting cause. Error bars indicate 95% confidence intervals. *P < 0.05; **P < 0.01; ***P < 0.001.

Experiments 2 and 3: Children Aged 12 to 23 Months.

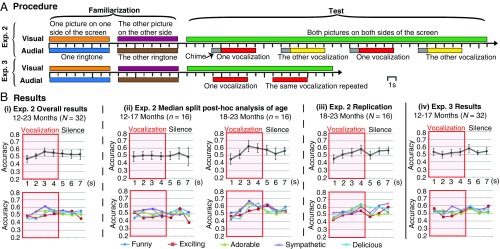

Given that even 2-year-olds selected the target picture above chance, we asked whether younger children (n = 32) might succeed at a nonverbal version of the task. The materials were identical to those in Experiment 1. On each trial, two images were presented on the screen. A vocalization corresponding to one image was played for 4 s, followed by a 3-s pause; then, the other vocalization was played (Fig. 2A).

Fig. 2.

Procedure and results of Experiments 2 and 3. (A) Procedure of each trial of the preferential looking task. (B) Mean proportion of accurate looking to the corresponding picture over total looking to both pictures during the 7-s intervals. (Upper) Accuracy collapsing across categories. (Lower) Accuracy by category. Error bars indicate 95% confidence intervals. Exp., Experiment.

Overall, children preferentially looked at the picture corresponding to the vocalization [M = 0.53, SD = 0.070, 95% CI [0.50, 0.55], t(31) = 2.05, P = 0.049, d = 0.36]. A mixed-effects model, with a 7 (second) by 5 (category) by 32 (subject) data matrix showed no effect of age [F(1, 30) = 1.79, P = 0.191] or category [F(4, 980) = 1.39, P = 0.235], but a main effect of time [F(6, 980) = 3.34, P = 0.003], consistent with children shifting their gaze toward the correct picture over each 7-s interval (Fig. 2 B, i). We also found a significant interaction between age and time [F(6, 980) = 2.84, P = 0.010] (SI Appendix, section 1.2 and Tables S3 and S4). As an exploratory analysis, we performed a median split and looked separately at the 12- to 17-month-olds and the 18- to 23-month-olds. The 12- to 17-month-olds performed at chance [M = 0.50, SD = 0.075, 95% CI [0.47, 0.54], t(15) = 0.17, P = 0.866, d = 0.04], and there was a main effect of neither category [F(4, 486) = 1.02, P = 0.396] nor time [F(6, 486) = 0.74, P = 0.621]. In contrast, the 18- to 23-month-olds successfully matched the vocalization to the corresponding picture [M = 0.55, SD = 0.059, 95% CI [0.52, 0.57], t(15) = 3.23, P = 0.006, d = 0.81]. There was no main effect of category [F(4, 490) = 0.69, P = 0.596], but there was a significant main effect of time [F(6, 490) = 6.55, P < 0.001] (Fig. 2 B, ii). More information is provided in SI Appendix, section 1.2 and Table S5.
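The dependent measure here, the proportion of looking to the matching picture over total looking to both pictures within each 7-s interval, can be illustrated with a short sketch. The 1-s binning mirrors the "7 (second)" dimension of the data matrix, but the function name, the input layout, and the example values are hypothetical and are not taken from the authors' coding scheme or data.

```python
def looking_proportions(target_s, distractor_s):
    """Per-second and overall proportion of looking directed at the target.

    Both arguments list looking time (in seconds) within each 1-s bin of a
    7-s interval. Bins with no looking at either picture yield None.
    """
    per_second = []
    for t, d in zip(target_s, distractor_s):
        total = t + d
        per_second.append(t / total if total > 0 else None)
    total_target = sum(target_s)
    total_both = total_target + sum(distractor_s)
    overall = total_target / total_both if total_both > 0 else None
    return per_second, overall


# Hypothetical trial: gaze drifts toward the target across the interval.
target = [0.2, 0.3, 0.4, 0.5, 0.6, 0.6, 0.7]
distractor = [0.6, 0.5, 0.4, 0.4, 0.3, 0.2, 0.0]
per_second, overall = looking_proportions(target, distractor)
```

A value of 0.5 corresponds to chance, and a rising per-second profile is the pattern that a main effect of time would reflect.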

Children’s average looking time at the target was only slightly above chance. This is perhaps unsurprising, given a number of factors: the fine-grained nature of the distinctions, that the relationships between eliciting causes and reactions hold only in probability, that children had to move their gaze to the target over the 7-s interval (Fig. 2 B, ii), and that the visual stimuli were not matched for salience; in particular, children sometimes observed an object and an agent on the screen simultaneously. However, because the effect was subtle and the age split was post hoc, we replicated the experiment with a separate group of 18- to 23-month-olds (n = 16). As in the initial sample, participants preferentially looked at the target picture [M = 0.53, SD = 0.045, 95% CI [0.51, 0.56], t(15) = 3.04, P = 0.008, d = 0.76]; there was no main effect of category [F(4, 527) = 2.02, P = 0.090], but there was a significant main effect of time [F(6, 527) = 6.96, P < 0.001], consistent with children moving their gaze toward the target (Fig. 2 B, iii). More information is provided in SI Appendix, section 1.2 and Table S6. Across the initial sample and the replication, a preference for the target across trials was observed in most of the 18- to 23-month-olds tested (27 of 32 children).

As noted, in Experiment 2, the vocalizations alternated on each trial such that children had to switch from looking at one picture to looking at the other. These task demands may have overwhelmed the younger children; thus, in Experiment 3 (preregistered at https://osf.io/m3u67/?view_only=3da43a84fd004f4095ac65ae298c567c), we tested 12- to 17-month-olds (n = 32) using a simpler design in which only a single vocalization was played repeatedly on each trial (Fig. 2A). Infants looked at the matched picture above chance across trials [M = 0.53, SD = 0.055, 95% CI [0.51, 0.55], t(31) = 3.14, P = 0.004, d = 0.55]. The mixed-effects model showed no main effect of age [F(1, 30) = 0.03, P = 0.858] or category [F(4, 1,052) = 0.50, P = 0.736], but a main effect of time [F(6, 1,052) = 2.54, P = 0.019], consistent with infants shifting their looking toward the target picture (Fig. 2 B, iv). More information is provided in SI Appendix, section 1.3 and Tables S7 and S8.

Searching for Probable Eliciting Causes of Emotional Vocalizations

Experiments 4 and 5: Children Aged 12 to 17 Months.

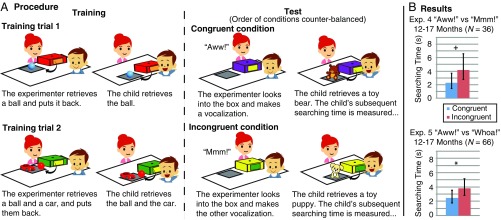

To validate the previous results with converging measures, and also to look at the extent to which infants might actively search for causes of others’ emotional reactions, in Experiments 4 and 5, we tested 12- to 17-month-olds using a manual search task (adapted from refs. 41, 42) (Fig. 3A). The experimenter peeked through a peep hole on the top of a box and made one of two vocalizations (Experiment 4: “Aww!” or “Mmm!”; Experiment 5: “Aww!” or “Whoa!”). Infants were encouraged to reach into a felt slit on the side of the box. They retrieved a toy that was either congruent or incongruent with the vocalization (Experiment 4: half of the infants retrieved a stuffed animal, and half retrieved a toy fruit; Experiment 5: half of the infants retrieved a stuffed animal, and half retrieved a toy car). The experimenter took the retrieved toy away and looked down for 10 s. We coded whether infants reached into the box again and how long they searched. A new box was introduced for a second trial. Infants who had retrieved a congruent toy on the first test trial retrieved an incongruent toy on the second test trial, and vice versa. We were interested in whether infants would search longer on the incongruent trial than on the congruent trial. Because the effect of congruency was the primary question of interest (rather than the effect of the particular emotion contrast tested in each experiment), we report the results of each individual experiment and then a summary analysis of the effect of congruency across both experiments.

Fig. 3.

Procedure and results of Experiments 4 and 5. (A) Example of the procedure of the manual search task. (B) Infants’ searching time in the congruent and incongruent conditions. Error bars indicate 95% confidence intervals. +P < 0.10; *P < 0.05. Exp., experiment.

Experiment 4 Results.

One analysis was preregistered: a permutation test on the search time (https://osf.io/9qwcp/?view_only=25bb71fc775748f3a6cab34cf6734dae). There was a trend for infants to search longer in the incongruent condition than in the congruent condition (incongruent: M = 4.18 s, SD = 5.600, 95% CI [2.76, 6.61]; congruent: M = 2.29 s, SD = 3.436, 95% CI [1.40, 3.76]; Z = 1.91, P = 0.053, permutation test) (Fig. 3B).
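For readers unfamiliar with permutation tests on within-subject data, the following is a minimal sketch of one common variant, a two-sided sign-flip test on paired differences. It is not the authors' analysis code (their R code is linked in Materials and Methods), it does not compute the Z statistic reported above, and the search times below are invented for illustration.

```python
import random


def paired_permutation_test(incongruent, congruent, n_perm=10000, seed=0):
    """Two-sided sign-flip permutation test on paired differences."""
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(incongruent, congruent)]
    observed = sum(diffs) / len(diffs)
    extreme = 0
    for _ in range(n_perm):
        # Under the null, each infant's condition labels are exchangeable,
        # so the sign of each paired difference is flipped at random.
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(sum(flipped) / len(flipped)) >= abs(observed) - 1e-12:
            extreme += 1
    # Add-one correction keeps the estimated p-value above zero.
    return observed, (extreme + 1) / (n_perm + 1)


# Hypothetical search times in seconds for eight infants (not real data).
incongruent = [5.0, 0.0, 7.5, 3.0, 9.0, 0.0, 4.5, 6.0]
congruent = [2.0, 0.0, 3.0, 3.5, 4.0, 1.0, 0.0, 2.5]
observed, p_value = paired_permutation_test(incongruent, congruent)
```

The sign-flip scheme respects the within-subject design: each infant contributes one congruent and one incongruent trial, so only the labels within an infant are permuted.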

Experiment 5 Results.

Four analyses were preregistered: (i) a mixed-effects model of the raw data, (ii) a permutation test of the raw data, (iii) a permutation test of the proportional searching, and (iv) the nonparametric McNemar’s test of the number of children searching in each condition (https://osf.io/knb7t/?view_only=afbf701855934195b313c31ad5821dcf; also SI Appendix, section 2). The mixed-effects model revealed no main effect of category [F(1, 63) = 0.20, P = 0.656] but a main effect of age [F(1, 63) = 4.27, P = 0.043], suggesting that older infants searched longer overall than younger ones, and a significant main effect of congruency [F(1, 65) = 4.47, P = 0.038]. The interaction between age and congruency did not survive model selection (SI Appendix, section 1.4 and Tables S9 and S10); thus, no further age analyses were conducted. Infants searched longer in the incongruent condition (M = 3.82 s, SD = 4.818, 95% CI [2.81, 5.17]) than in the congruent condition (M = 2.48 s, SD = 3.557, 95% CI [1.77, 3.52]; Z = 2.06, P = 0.038, permutation test) (Fig. 3B). Neither the proportional search time nor the number of infants who searched at all differed by condition.

Summary Analysis.

A meta-analysis (43) across both experiments found that infants searched longer in the incongruent condition than in the congruent condition (effect: 1.51, 95% CI [0.48, 2.54]; I²: 0.00, 95% CI [0.00, 13.76]). They also spent proportionally more time searching the incongruent box than the congruent box (effect: 0.18, 95% CI [0.06, 0.29]; I²: 31.93, 95% CI [0.00, 92.92]). Finally, they were more likely to search again after retrieving the first toy given the incongruent box than the congruent box (effect: 0.18, 95% CI [0.01, 0.36]; I²: 58.13, 95% CI [0.00, 88.07]) (SI Appendix, Fig. S2).
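To make the pooled effect and the heterogeneity index concrete, here is a minimal sketch of inverse-variance pooling with Cochran's Q and the I² statistic. It assumes a fixed-effect model, which may differ from the model the authors fit (the model is not specified in this section), and the per-experiment effects and standard errors are placeholders rather than values from the paper.

```python
import math


def pool_fixed_effect(effects, std_errors):
    """Inverse-variance pooled estimate, 95% CI, and I^2 (in percent)."""
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    se_pooled = math.sqrt(1.0 / sum(weights))
    ci = (pooled - 1.96 * se_pooled, pooled + 1.96 * se_pooled)
    # Cochran's Q measures between-study variation around the pooled estimate.
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, ci, i2


# Placeholder inputs for two experiments (hypothetical, not from the paper).
pooled, ci, i2 = pool_fixed_effect(effects=[2.0, 1.0], std_errors=[1.0, 0.5])
```

I² is truncated at zero whenever Q falls below its degrees of freedom, which is how a value of 0.00 can arise when two experiments yield similar effects.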

Note that in the incongruent condition of Experiments 4 and 5, the probable eliciting cause was never observed. Thus, the results cannot be due to infants merely associating the stimulus and the emotional reaction, or generating their own first-person response to the eliciting cause. Rather, the results suggest that infants represent probable causes of others’ emotional reactions, and actively search for unobserved causes when observed candidate causes are implausible.

General Discussion

Across five experiments, we found that very young children make nuanced distinctions among positive emotional vocalizations and connect them to probable eliciting causes. The results suggest that others’ emotional reactions provide a rich source of data for early social cognition, allowing children to recover the focus of others’ attention in cases otherwise underdetermined by the context (Experiments 1–3) and to search for plausible causes of others’ emotional reactions, even when the causes are not in the scene at all (Experiments 4 and 5).

As noted, the vast majority of previous work on early emotion understanding has focused on children’s understanding of a few basic emotions or on distinctions between positively and negatively valenced emotions (reviewed in ref. 2). Similarly, influential accounts of emotional experience in both adults and children have often focused exclusively on dimensions of valence and arousal (e.g., refs. 44–46). Our results go beyond previous work in suggesting that very young children make nuanced distinctions among positive emotional reactions and have a causal understanding of emotion: They recognize that events in the world generate characteristic emotional responses. These findings have a number of interdisciplinary implications, raising questions about how the findings might generalize to other sociocultural contexts and other species, constraining hypotheses about the neural and computational bases of early emotion understanding, and suggesting new targets for infant-inspired artificial intelligence systems.

In representing the relationship between emotional vocalizations and probable eliciting causes, what are children representing? One possibility is that infants deploy their general ability to match meaningful auditory and visual stimuli (e.g., refs. 38, 47) to connect emotional vocalizations with eliciting events, and to search for plausible elicitors when they are otherwise unobserved, but without representing emotional content per se. On this account, children might learn predictive relationships between otherwise arbitrary classes of stimuli or they might represent the vocalizations as having nonemotional content. For instance, they might assume the vocalizations identify, rather than react to, the targets (i.e., like object labels). We think this is unlikely, however, given that neither natural nor artifact kinds capture the distinctions infants made in this study (e.g., grouping light-up toys and toy cars together on the one hand and grouping stuffed animals and babies on the other hand). Alternatively, children might treat the vocalizations adjectivally. However, this too seems unlikely, given that sensitivity to modifiers is rare in the second year of life and that the vocalizations were uttered in isolation, not in noun phrases [“Aww…,” not “the aww bunny” (48)].

Note, however, that even considering only the five positive emotions distinguished here, many other eliciting events were possible (e.g., cooing over pets, clucking over skinned knees, ahhing over athletic events). Given myriad possible combinations, inferring abstract relations may simplify a difficult learning problem and may indeed be easier than learning individual pairwise mappings (e.g., ref. 49). Thus, another explanation, and one we favor, is that infants represent, at the very least, protoemotion concepts. Given that infants engage in sophisticated social cognition in many domains [e.g., distinguishing pro- and antisocial others, in-group and out-group members, and the equitable and inequitable distribution of resources (36, 50, 51)], it is at least conceivable that infants also represent the fact that some stimuli elicit affection, others excitement, and others sympathy, for example. However, the degree to which infants’ early representations have relatively rich information content even in infancy, or serve primarily as placeholders to bootstrap the development of richer intuitive theories later on (e.g., ref. 33), remains a question for further research.

As noted at the outset, these data bear upon the development of children’s intuitive theory of emotions and are orthogonal to debates over what emotion is and how it is generated (also refs. 5–7). That adults may be capable of nuanced distinctions among emotions does not, in itself, invalidate the possibility that there is a small set of innate, evolutionarily specified, universal emotions [“basic emotions” (52)]; that emotions can be characterized primarily on dimensions of valence and arousal (53); that emotions depend on individuals’ appraisal of events (54); or that emotions arise, as other concepts do, from cultural interactions that cannot be reduced to any set of physiological and neural responses (4). Finding that young children are sensitive to some of the same distinctions as adults similarly does not resolve these disputes one way or the other. In addition, these findings are not in tension with the finding that it may take many years for children to learn explicit emotion categories (reviewed in ref. 2). Indeed, arguably, the very richness of infants’ early representations may make it particularly challenging for infants to isolate the specific emotion concepts reified within any given culture. These results do, however, suggest that early in development, infants make remarkably fine-grained distinctions among emotions and represent causal relationships between events and emotional reactions in a manner that could support later conceptual enrichment.

Finally, we note that even in adult judgment, there may be some dispute about the degree to which the response to each of the eliciting causes investigated here qualifies as an emotional reaction per se. People may say they feel “excited” on seeing a toy car or light-up toy or “amused” when they see silly faces; however, there is no simple English emotion word that captures the response to seeing a cute baby (endeared? affectionate?), a crying baby (sympathetic? tender?), or delicious food (delighted? anticipatory?). We believe this may speak more to the impoverished nature of English emotion labels than to the absence of emotional responses to such stimuli. As our opening quotation illustrates, there are myriad emotion words in English, but even these are not exhaustive. The fact that cultures vary in both the number and kind of emotional concepts they label (37) suggests that we may be capable of experiencing more than any given language can say. The current results, however, suggest that at least some of the subtleties and richness of our emotional responses to the world are accessible, even in infancy.

Materials and Methods

Participants.

Child participants were recruited from a children’s museum, and adults were recruited on Amazon Mechanical Turk. Experiment 1 included 48 children (M: 3.4 years, range: 2.0–4.8 years) and 16 adults. Experiment 2 included 32 children aged 12 to 23 months (M: 17.8 months, range: 12.2–23.3 months). An additional 16 children aged 18 to 23 months (M: 21.2 months, range: 18.1–23.9 months) were recruited for a replication study. Experiment 3 included 32 children aged 12 to 17 months (M: 14.8 months, range: 12.1–17.8 months). Experiment 4 included 36 children aged 12 to 17 months (M: 14.8 months, range: 12.0–17.9 months). The sample size was determined by a power analysis, using the effect size found in a pilot study (SI Appendix, section 3) and setting alpha = 0.05 and power = 0.80. In Experiment 5, we increased our power to 0.90 and ran a power analysis based on the effect size found in 15- to 17-month-olds in Experiment 4, while also providing sufficient power to test for the age difference revealed in an exploratory analysis (SI Appendix, section 4). This resulted in a sample size of 33 children aged 12 to 14 months (M: 13.7 months, range: 12.2–14.8 months) and 33 children aged 15 to 17 months (M: 16.3 months, range: 15.0–17.6 months). Exclusion criteria are provided in SI Appendix, section 5. Parents provided informed consent, and the MIT Institutional Review Board approved the research. Data and R code for analyzing the data can be found at https://osf.io/ru2t4/?view_only=54d9cf5b3a1141729a4e5b3d0a1e01a6.
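The logic of choosing a sample size from alpha, power, and an effect size can be sketched with the standard normal approximation for a two-sided one-sample or paired comparison. This is an illustration only: the effect size of 0.5 is a placeholder (the pilot effect sizes are reported in the SI Appendix, not here), the function name is ours, and the authors' actual procedure may have used a different method.

```python
import math
from statistics import NormalDist


def approx_sample_size(effect_size, alpha=0.05, power=0.80):
    """Normal-approximation n for a two-sided one-sample or paired test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / effect_size) ** 2)


# d = 0.5 is a placeholder, not the pilot effect size (which is in the SI).
n_80 = approx_sample_size(0.5, power=0.80)
n_90 = approx_sample_size(0.5, power=0.90)
```

Raising power from 0.80 to 0.90 at a fixed effect size increases the required sample, which is the direction of the change between Experiments 4 and 5. The normal approximation slightly underestimates the n that a t-based calculation would give.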

Materials.

In Experiment 1, images were presented on a 15-inch laptop and vocalizations were played on a speaker. A doll was placed on the speaker. A warm-up trial used a picture of a beautiful beach and a picture of a dying flower. Training vocalizations were elicited as for test stimuli. In Experiments 2 and 3, images were presented on a monitor (93 cm × 56 cm) and vocalizations were played on a speaker. Two 7-s ringtones were used for familiarization, and a chime preceded the presentation of the vocalizations in Experiment 2 only. A multicolored pinwheel was used as an attention getter. In Experiment 4, four differently colored cardboard boxes (27 cm × 26 cm × 11 cm) were used. A 4-cm-diameter hole was cut in the top of each box, allowing a partial view of the interior; a 19-cm × 8-cm opening was cut on the side of each box. The opening was covered by two pieces of felt. Velcro on the table and the bottom of the boxes was used to standardize the placement of the boxes. Two boxes were used in the training phase to teach infants that there could be either one (a 5-cm blue ball) or two (a 7-cm red ball and an 11-cm × 5-cm × 5-cm toy car) objects inside. The other two boxes were used in the test phase. For half of the participants, a stuffed animal was inside each box (a bear in one and a puppy in the other; each was ∼14 cm × 9 cm × 7 cm in size). For the remaining participants, a toy fruit was inside each box (an 8-cm orange in one and a 19-cm banana in the other). A black tray was used to hold the items retrieved from the boxes. A timer was used in the test phase. The same materials were used in Experiment 5 except that one training box contained a blue ball, while the other contained a red ball and a toy banana. For half of the children, both test boxes contained cute stuffed animals (a bear in one and a puppy in the other), and for the remaining children, both test boxes contained toy cars (one red and one blue).

Procedure.

In Experiment 1, the experimenter introduced the doll, saying, “Hi, this is Sally! Today we’ll play a game with Sally!” The experimenter placed the doll on the speaker, facing the laptop screen. A practice trial (SI Appendix, section 6.1) preceded the test trials. On each test trial, the experimenter pushed a button on the keyboard to trigger the presentation of two pictures and said: “Here are two new pictures, and Sally makes this sound.” She then pushed a button on the keyboard to trigger the vocalization. She asked the child: “Which picture do you think Sally is looking at?” A total of 20 trials were presented in a random order.

Adults were tested online. They were told that the vocalization on each trial was someone’s response to one of the pictures and their task was to guess which picture the person was looking at. We generated a randomly ordered set of 10 picture pairs. Half of the adults were given the vocalization corresponding to one picture in each pair, and the other half were given the vocalization corresponding to the other picture. Adults had 10 trials rather than 20.
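The split of adults into two complementary stimulus lists can be sketched as follows. This is a minimal illustration, not the authors' stimulus-generation code. It assumes that the 10 picture pairs are the 10 unordered pairings of the five stimulus categories, which is an inference from the trial counts rather than something stated here. The category labels, the function name `make_adult_lists`, and the random choice of target within each pair are likewise hypothetical.

```python
import itertools
import random

# Category labels are placeholders for the five kinds of eliciting stimuli.
CATEGORIES = ["funny", "delicious", "exciting", "sympathetic", "adorable"]


def make_adult_lists(seed: int = 0):
    """Build two complementary 10-trial lists over the same picture pairs.

    For every pair, list A plays the vocalization matching one picture and
    list B plays the vocalization matching the other picture, so the two
    lists together cover all 20 pair-by-vocalization combinations.
    """
    rng = random.Random(seed)
    pairs = list(itertools.combinations(CATEGORIES, 2))  # 10 unordered pairs
    rng.shuffle(pairs)  # one random presentation order shared by both lists

    list_a, list_b = [], []
    for first, second in pairs:
        target = rng.choice((first, second))
        other = second if target == first else first
        list_a.append({"pair": (first, second), "vocalization": target})
        list_b.append({"pair": (first, second), "vocalization": other})
    return list_a, list_b


if __name__ == "__main__":
    list_a, list_b = make_adult_lists(seed=1)
    for trial_a, trial_b in zip(list_a, list_b):
        print(trial_a["pair"], "| A:", trial_a["vocalization"], "| B:", trial_b["vocalization"])
```

Under this scheme each adult completes 10 trials, while the two lists jointly cover the same 20 combinations that each child saw.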

In Experiment 2, the child’s parent sat in a chair with her eyes closed. The child sat on the parent’s lap, ∼63 cm in front of the screen. The experimenter could see the child on a camera but was blind to the visual stimuli throughout. At the beginning of each trial, the attention getter was displayed. When the child looked at the screen, the experimenter pressed a button to initiate the familiarization phase. The computer randomly selected one image (17 cm × 12 cm) from one category and presented it on one side of the screen (left/right counterbalanced), accompanied by one of the two ringtones. After 7 s, the image disappeared. Then, the computer randomly selected another image (17 cm × 12 cm) from a different category and presented it on the other side of the screen, accompanied by the other ringtone. After 7 s, the image disappeared. For the test phase, both pictures were presented simultaneously. A chime was played to attract the child’s attention, and the vocalization corresponding to either the left or right picture (randomized) was played for 4 s, followed by 3 s of silence. The chime was played again, and the vocalization corresponding to the other picture was played for 4 s, followed by 3 s of silence. This was repeated. The computer then moved on to the next trial (Fig. 2A).

In Experiment 3, the procedure was identical to that of Experiment 2 except that during the test phase, a single vocalization (corresponding to one image, chosen at random) was played for 4 s, followed by a 3-s pause. This was then repeated (Fig. 2A).

In Experiment 4, the experimenter played with the child using some warm-up toys and then initiated the training phase (SI Appendix, section 6.2). After the training phase, she introduced the test box containing either a toy bear or a toy fruit, counterbalanced across participants. The experimenter said: “Here is another box. Let me take a look.” She looked into the box and said either “Aww!” (as if seeing something adorable) or “Mmm!” (as if seeing something yummy), counterbalanced across participants. She looked at the child, looked back into the box, and then repeated the vocalization. She repeated this a third time. The experimenter then affixed the box to the table with the felt opening facing the child and encouraged the child to reach into the box, retrieve the toy, and put the toy in the tray. Once the child did, the experimenter removed the toy, set the timer for 10 s, and looked down at her lap. After 10 s, the experimenter looked up. If the child was still searching, the experimenter looked down for another 10 s. She repeated this until the child was not searching when she looked up. (The box was always empty at this point, but it was hard for the infant to discover this, given the size of the box, peep hole, and felt opening.) The experimenter then moved on to the second test box. This box contained a toy similar to the one in the previous box (i.e., a puppy if the previous one was a bear, a banana if the previous one was an orange), but the experimenter made the other vocalization (i.e., “Mmm!” if she had said “Aww!” or “Aww!” if she had said “Mmm!”). Thus, within participants, the object the child retrieved was congruent with the vocalization on one trial and incongruent on the other (order counterbalanced). In Experiment 5, the procedure was identical except that the “Mmm!” was replaced with a “Whoa!” Children’s looking and searching behaviors were coded offline from video clips. Details are provided in SI Appendix, section 7.
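The counterbalancing logic of Experiment 4 can be made concrete with a minimal Python sketch. It crosses toy type (between participants) with the first vocalization and confirms that every participant receives one congruent and one incongruent trial. The labels, function names, and round-robin assignment are hypothetical. The sketch also ignores which specific toy (e.g., bear vs. puppy) appeared first, which is not specified here.

```python
import itertools
from collections import Counter

# A vocalization counts as congruent when it matches the kind of toy retrieved:
# "Aww!" for a stuffed animal, "Mmm!" for a toy fruit.
CONGRUENT = {("animal", "Aww!"), ("fruit", "Mmm!")}


def experiment4_conditions():
    """Return the four cells: toy type x first vocalization.

    Each cell is a list of two trials; the second trial uses the other
    vocalization with a similar toy of the same type.
    """
    cells = []
    for toy_type, first_voc in itertools.product(("animal", "fruit"), ("Aww!", "Mmm!")):
        second_voc = "Mmm!" if first_voc == "Aww!" else "Aww!"
        trials = [
            {
                "trial": index + 1,
                "toy_type": toy_type,
                "vocalization": voc,
                "congruent": (toy_type, voc) in CONGRUENT,
            }
            for index, voc in enumerate((first_voc, second_voc))
        ]
        cells.append(trials)
    return cells


def assign(participant_index: int, cells):
    """Hypothetical round-robin assignment of participants to cells."""
    return cells[participant_index % len(cells)]


if __name__ == "__main__":
    cells = experiment4_conditions()
    # Every cell has exactly one congruent and one incongruent trial.
    assert all(sum(t["congruent"] for t in cell) == 1 for cell in cells)
    # With 36 participants, round-robin assignment fills the cells evenly.
    counts = Counter(
        (assign(i, cells)[0]["toy_type"], assign(i, cells)[0]["vocalization"])
        for i in range(36)
    )
    print(counts)
```

Because congruence is manipulated within participants, each child serves as their own control, and crossing toy type with vocalization order keeps either factor from being confounded with congruence.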

Acknowledgments

We thank the Boston Children’s Museum and participating parents and children. We also thank Rebecca Saxe, Josh Tenenbaum, Elizabeth Spelke, and members of the Early Childhood Cognition Lab at MIT for helpful comments. This study was supported by the Center for Brains, Minds and Machines, which is funded by National Science Foundation Science and Technology Center Award CCF-1231216.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1707715114/-/DCSupplemental.
