Abstract

The use of imaging markers to predict clinical outcomes can have a great impact on public health. The aim of this paper is to develop a class of generalized scalar-on-image regression models via total variation (GSIRM-TV), in the sense of generalized linear models, for a scalar response and an imaging predictor in the presence of scalar covariates. A key novelty of GSIRM-TV is the assumption that its slope function (or image) belongs to the space of functions of bounded total variation, which explicitly accounts for the piecewise smooth nature of most imaging data. We develop an efficient penalized total variation optimization to estimate the unknown slope function and other parameters. We also establish nonasymptotic error bounds on the excess risk. These bounds are explicitly specified in terms of sample size, image size, and image smoothness. Our simulations demonstrate the superior performance of GSIRM-TV over many existing approaches. We apply GSIRM-TV to the analysis of hippocampus data obtained from the Alzheimer's Disease Neuroimaging Initiative (ADNI) dataset.

Keywords: Excess risk, Functional regression, Generalized scalar-on-image regression, Prediction, Total variation

1 Introduction

The aim of this paper is to develop generalized scalar-on-image regression models via total variation (GSIRM-TV) with a scalar response and imaging and/or scalar predictors. This development is motivated by studying the predictive value of ultra-high-dimensional imaging data and/or other scalar predictors (e.g., cognitive scores) for clinical outcomes, including diagnostic status and response to treatment, in the study of neurodegenerative and neuropsychiatric diseases such as Alzheimer’s disease (AD) (Mu and Gage 2011). For instance, the growing public health threat of AD has raised the urgency to discover and validate prognostic biomarkers that may identify subjects at greatest risk of future cognitive decline and accelerate the testing of preventive strategies. In this regard, prior studies of subjects at risk for AD have examined the utility of various individual biomarkers, such as cognitive tests, fluid markers, imaging measurements, or individual genetic markers (e.g., the APOE4 gene), to capture the heterogeneity and multifactorial complexity of AD (reviewed in Weiner et al. 2012).

Our GSIRM-TV uses an imaging predictor X and/or scalar predictors Z to predict a scalar response Y. In practice, imaging data are often represented as a 2-dimensional matrix or a 3-dimensional array. Assume that X ∈ ℝ^{N×N} is an N × N matrix observed without error and that Z ∈ ℝ^p is a p × 1 vector whose first component is the constant one. Our GSIRM-TV assumes that Y given (X, Z) follows

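$$Y \mid (X, Z) \sim \text{an exponential family distribution with parameters } (\mu, \phi), \qquad g(\mu) = Z^{\top}\theta_0 + \langle X, \beta_0 \rangle, \tag{1}$$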

where μ and ϕ are, respectively, the canonical and scale parameters, 〈U, V〉 = Σ_{i,j} u_{ij}v_{ij} for U = (u_{ij}) ∈ ℝ^{N×N} and V = (v_{ij}) ∈ ℝ^{N×N}, and g(·) is a known link function. Moreover, θ0 and β0(·) are unknown parameters of interest, and β0(·) is called the coefficient image/function. Throughout the paper, we assume that images are observed without error; if images are measured with error, one may first apply smoothing methods to reduce that error (Li et al. 2010).

GSIRM-TV can be regarded as an extension of the well-known functional linear model (FLM) and the high-dimensional linear model (HLM), both of which have been extensively studied in the literature. If we regard 〈U, V〉 as an approximation of a two-dimensional integral, then GSIRM-TV is an approximate version of FLM. The literature on FLM is too vast to summarize here; see the well-known monographs of Ramsay and Silverman (2005) and Ferraty and Vieu (2006). Functional principal component analysis (fPCA) and various penalization methods have been developed to estimate the coefficient function. For example, the fPCA method has been discussed by James (2002), Müller and Stadtmüller (2005), Hall and Horowitz (2007), Reiss and Ogden (2007, 2010), James et al. (2009), and Goldsmith et al. (2010), and penalized methods have been studied by Crambes et al. (2009), Yuan and Cai (2010), and Du and Wang (2014). On the other hand, if we vectorize X and β0(·) as N² × 1 vectors, model (1) takes the form of a high-dimensional generalized linear regression. To achieve sparsity in β0, various penalization methods, such as Lasso and SCAD, have been developed; see Tibshirani (1996), Chen et al. (1998), Fan and Li (2001), and references therein.
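As a minimal NumPy sketch (the helper name `inner` is hypothetical, not from the paper), the inner product 〈X, β〉 computed entrywise coincides with vec(X)ᵀvec(β) as long as X and β are vectorized in the same (here row-major) order. This is the sense in which model (1) becomes a high-dimensional GLM with N² coefficients.

```python
import numpy as np

def inner(U, V):
    """<U, V> = sum_{i,j} u_ij * v_ij for two N x N matrices."""
    return float(np.sum(U * V))

rng = np.random.default_rng(0)
n, N = 5, 4
X = rng.standard_normal((n, N, N))        # n image predictors
beta = rng.standard_normal((N, N))        # coefficient image

eta_entrywise = np.array([inner(Xi, beta) for Xi in X])
A_X = X.reshape(n, N * N)                 # row i is vec(X_i), row-major
eta_vectorized = A_X @ beta.reshape(N * N)
```

Mixing vectorization orders (e.g. column-major for β, row-major for X) would silently break the equivalence, which is why the same reshape is used for both.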

Compared with FLM and HLM, a key novelty of GSIRM-TV is that the coefficient image β0(·) in model (1) is assumed to be a piecewise smooth image with unknown jumps and edges. Such an assumption is not only widely used in the imaging literature but also critical for addressing various scientific questions, such as the identification of brain regions associated with AD. As an illustration, we consider a data set with n = 300 subjects simulated from a functional linear model, which is a special case of (1). The first row of Figure 1 presents the true 64 × 64 coefficient image β0, an image predictor X, and the responses Y, from left to right. We used fPCA for FLM, Lasso for HLM (after vectorizing X), and GSIRM-TV to estimate the coefficient image, and present the estimated coefficient images in the second row of Figure 1. Both FLM and HLM fail to capture the main feature of the true coefficient image because of two key limitations. First, fPCA requires that β0 be well represented by the eigenfunctions of X, which is not the case in Figure 1. Second, existing regularization methods can have difficulty recovering β0, since the true coefficient image is not sparse. Moreover, most regularization methods for FLM assume that the unknown coefficient function is one-dimensional and belongs to a smooth function space, such as a Sobolev space, so they cannot preserve the edge and boundary information in the data set presented in Figure 1. In contrast, the GSIRM-TV estimate developed in this paper preserves the sharp edges of the original image.

Figure 1.

Results from a simulated data set. The top row includes the true 64 × 64 coefficient image β0 in the left panel, one realization of a 64 × 64 image predictor X in the middle panel, and the responses Y of the n = 300 subjects in the right panel. The bottom row includes the estimated coefficient functions obtained from fPCA (left), Lasso (middle), and Total Variation (right).

In this paper, we make two important contributions: a new estimation method based on total variation analysis, and nonasymptotic error bounds on the risk under the framework of GSIRM-TV. Total variation analysis has played a fundamental role in image analysis since the path-breaking works of Rudin, Osher and Fatemi (1992) and Rudin and Osher (1994). The total variation penalty has proved to be quite effective for preserving the boundaries and edges of images (Rudin et al. 1992). Michel et al. (2011) proposed a similar total variation method for image regression and image classification, but they focused on developing algorithms for the TV optimization problem. To the best of our knowledge, this is the first paper to develop a statistical analysis of the total variation method for GSIRM-TV. The fused lasso (Tibshirani et al. 2005; Friedman et al. 2007) uses a similar penalty function, but for a 2-dimensional parameter the fused lasso and the TV penalty can be quite different. For example, the isotropic total variation penalty uses the Euclidean norm of the first differences of the parameter rather than the sum of the absolute values of the first differences. A few papers have used two-dimensional or three-dimensional imaging predictors in FLM (Guillas and Lai 2010; Reiss and Ogden 2010; Zhou et al. 2013; James et al. 2009; Goldsmith et al. 2010; Gertheiss et al. 2013; Wang et al. 2014; Reiss et al. 2015), but none of them considers piecewise smooth coefficient functions with jumps and edges or total variation analysis. We also derive nonasymptotic error bounds on the risk of the estimated coefficient image under the total variation penalty; these finite-sample bounds are specified explicitly in terms of the sample size n, the image size N × N, and the image smoothness.

The rest of the paper is organized as follows. Section 2 considers the linear scalar-on-image regression model, proposes the TV optimization framework to estimate the unknown coefficient image, and establishes a nonasymptotic error bound for the prediction error. Section 3 extends the linear scalar-on-image regression model to generalized scalar-on-image regression models. Section 4 examines the finite-sample performance of GSIRM-TV and compares it with several state-of-the-art methods, such as regularized matrix regression (Zhou and Li 2014). Section 5 applies GSIRM-TV to hippocampus imaging data for a binary classification problem. Future research directions are discussed in Section 6. The technical proofs of the main theorems are given in the Appendix.

2 Linear scalar-on-image regression model

We start with considering a linear scalar-on-image regression model, which is the simplest case of GSIRM-TV (1), as follows:

$$Y = \langle X, \beta_0 \rangle + \varepsilon, \tag{2}$$

where ε is the random error with 𝔼(ε | X) = 0 and 𝔼(ε² | X) = σ², and, without loss of generality, both X and Y are assumed to be centered, with 𝔼(Y) = 𝔼(X) = 0. Model (2) may be treated as a special case of FLM, since discrete images are isometric to the space of piecewise-constant functions defined as

$$X(u, v) = \sum_{j,k=1}^{N} N X_{jk}\, I\big\{(u, v) \in [(j-1)/N,\, j/N) \times [(k-1)/N,\, k/N)\big\},$$

where Xjk is the (j, k)-th pixel value of the image X and Ω = [0, 1]². By treating β0 as a square-integrable function on Ω, that is, β0 ∈ L²(Ω), model (2) can be rewritten as

$$Y = \int_{\Omega} X(u, v)\,\beta_0(u, v)\,du\,dv + \varepsilon.$$
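The isometry can be checked numerically. The sketch below assumes the identification c_jk = N X_jk (each pixel becomes the constant N·X_jk on a cell of area 1/N², as in Section 2.3) and confirms that the integral of the product of two such step functions equals 〈X, β〉. The function names are illustrative only.

```python
import numpy as np

def to_piecewise_constant(img, refine):
    """Sample the step function equal to N * img[j, k] on cell (j, k) of [0, 1)^2
    on a (refine*N) x (refine*N) sub-grid."""
    N = img.shape[0]
    return np.kron(N * img, np.ones((refine, refine)))

def integral_over_unit_square(samples):
    """Midpoint rule; exact here because the integrand is constant on every sub-cell."""
    M = samples.shape[0]
    return float(samples.sum() / M ** 2)

rng = np.random.default_rng(1)
N = 8
X = rng.standard_normal((N, N))
beta = rng.standard_normal((N, N))
fX = to_piecewise_constant(X, refine=4)
fb = to_piecewise_constant(beta, refine=4)

integral = integral_over_unit_square(fX * fb)   # int_Omega X(u, v) beta(u, v) du dv
inner = float(np.sum(X * beta))                 # <X, beta>
```

The factor N on each pixel is what makes the map norm-preserving: N² (from the product) times the cell area 1/N² leaves exactly Σ X_jk β_jk.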

2.1 The space of bounded variation

Throughout the paper, we assume that β0 is a function of bounded variation in Ω, that is, its total variation in Ω, denoted by ||β0||TV and defined by

$$\|\beta_0\|_{TV} = \sup\Big\{\int_{\Omega} \beta_0(u, v)\,\mathrm{div}\, f(u, v)\,du\,dv \;:\; f \in C_c^{\infty}(\Omega, \mathbb{R}^2),\ |f|_{\infty} \le 1\Big\},$$

is finite. Here |f|∞ = sup_{(u,v)∈Ω} |f(u, v)|, and C_c^∞(Ω, ℝ²) denotes the set of infinitely differentiable vector fields with values in ℝ² and compact support in Ω. Moreover, f(u, v) = (f1(u, v), f2(u, v)) and div f(u, v) = ∂uf1(u, v) + ∂vf2(u, v), where ∂u = ∂/∂u and ∂v = ∂/∂v. The vector space of functions of bounded variation in Ω is denoted by BV(Ω). For example, if β0 is differentiable in Ω, then ||β0||TV reduces to ∫_Ω |∇β0(u, v)| du dv, where |∇β0| is the Euclidean norm of the gradient. In this case, β0 belongs to the Sobolev space W^{1,1}(Ω), i.e., the space of functions with integrable first-order partial derivatives. The power of total variation in image analysis, however, arises precisely from relaxing this constraint: BV(Ω) is much larger than W^{1,1}(Ω) and contains many interesting piecewise continuous functions with jumps and edges. This is the main advantage of TV regularization over more familiar regularization methods in the nonparametric literature; for example, the smoothing spline penalty is not sensitive enough to capture sharp edges and jumps.

There are at least two additional advantages of using bounded variation functions in model (2). First, many real images with edges have small total variation, since image edges usually occupy a low-dimensional subset of pixels. As an illustration, in Figure 2, the left panel displays the Shepp-Logan phantom image, while the middle and right panels show the two components of its discrete gradient, which are clearly sparse. Second, BV(Ω) is mathematically tractable even though it contains many more functions with edges and jumps than W^{1,1}(Ω).
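A quick numerical illustration of the first point, using a synthetic piecewise-constant image (a disk containing a rectangle) as a stand-in for the Shepp-Logan phantom. The sketch assumes forward differences with a zero difference on the last row and column as the discrete gradient: roughly 30% of the pixels are nonzero, yet only a small fraction of the gradient entries are.

```python
import numpy as np

def discrete_gradient(beta):
    """Forward differences along rows/columns; zero on the last row/column (assumed convention)."""
    g = np.zeros(beta.shape + (2,))
    g[:-1, :, 0] = beta[1:, :] - beta[:-1, :]
    g[:, :-1, 1] = beta[:, 1:] - beta[:, :-1]
    return g

N = 64
u, v = np.meshgrid(np.arange(N), np.arange(N), indexing="ij")
image = np.zeros((N, N))
image[(u - 32) ** 2 + (v - 32) ** 2 < 20 ** 2] = 1.0   # disk
image[20:30, 25:40] = 0.5                             # rectangle inside the disk

grad = discrete_gradient(image)
frac_nonzero_pixels = np.count_nonzero(image) / image.size
frac_nonzero_gradient = np.count_nonzero(grad) / grad.size
```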

Figure 2.

Left: the Shepp-Logan phantom image; Middle and Right: the two components of the discrete gradient of the phantom image.

2.2 Estimation

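On the basis of model (2) and BV(Ω), we propose to solve the following TV minimization:

$$\min_{\beta}\ \|\beta\|_{TV} \quad \text{subject to} \quad \Big\{\sum_{i=1}^{n}\big(Y_i - \langle X_i, \beta \rangle\big)^2\Big\}^{1/2} \le \lambda, \tag{3}$$

where λ is a smoothing parameter that reflects the noise level. For a suitable choice of λ̌, the above minimization problem is equivalent to the penalized optimization

$$\min_{\beta}\ \sum_{i=1}^{n}\big(Y_i - \langle X_i, \beta \rangle\big)^2 + \check{\lambda}\,\|\beta\|_{TV}, \tag{4}$$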

where λ̌ is a different smoothing parameter. TV optimization has been widely used to reconstruct images in the compressive sensing literature (see e.g., Candès et al. 2006a; Candès et al. 2006b; Needell and Ward 2013). TV optimization for one-dimensional regression has been studied by Mammen and van de Geer (1997) and Tibshirani (2014), and Michel et al. (2011) discussed some algorithms to solve a similar optimization problem. To the best of our knowledge, however, the statistical properties of the TV estimator for scalar-on-image regression models have not been studied.

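To solve the TV minimization (3) (or (4)), we treat β = (βjk) ∈ ℝ^{N×N} as an N × N block of pixels with βjk as its (j, k) element and define the discrete total variation of β. For any β ∈ ℝ^{N×N}, the discrete gradient ∇: ℝ^{N×N} → ℝ^{N×N×2} is defined by

$$(\nabla\beta)_{jk,1} = \begin{cases} \beta_{j+1,k} - \beta_{jk}, & j < N,\\ 0, & j = N, \end{cases} \qquad (\nabla\beta)_{jk,2} = \begin{cases} \beta_{j,k+1} - \beta_{jk}, & k < N,\\ 0, & k = N. \end{cases}$$

Based on (∇β)jk = ((∇β)jk,1, (∇β)jk,2)ᵀ, the anisotropic version of the total variation norm ||β||TV can be written as

$$\|\beta\|_{TV} = \sum_{j,k}\big(|(\nabla\beta)_{jk,1}| + |(\nabla\beta)_{jk,2}|\big) = \|\nabla\beta\|_1.$$

On the other hand, its isotropic version is defined by

$$\|\beta\|_{TV,\mathrm{iso}} = \sum_{j,k}\big\{(\nabla\beta)_{jk,1}^2 + (\nabla\beta)_{jk,2}^2\big\}^{1/2}.$$

The anisotropic and isotropic total variation norms are equivalent up to a factor of √2, i.e.,

$$\|\beta\|_{TV,\mathrm{iso}} \le \|\beta\|_{TV} \le \sqrt{2}\,\|\beta\|_{TV,\mathrm{iso}}.$$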

We will write all results in terms of the anisotropic total variation seminorm, but our results also extend to the isotropic version.
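A minimal sketch of the two discrete TV norms, assuming forward differences with a zero difference on the last row and column. For a k × k square of ones away from the border, the anisotropic TV equals its perimeter 4k, and the random images at the end can be used to check the √2 equivalence. Function names are illustrative.

```python
import numpy as np

def discrete_gradient(beta):
    """Forward differences; the difference is set to zero on the last row/column."""
    g = np.zeros(beta.shape + (2,))
    g[:-1, :, 0] = beta[1:, :] - beta[:-1, :]
    g[:, :-1, 1] = beta[:, 1:] - beta[:, :-1]
    return g

def tv_anisotropic(beta):
    """||grad beta||_1: sum of absolute values of both gradient components."""
    return float(np.abs(discrete_gradient(beta)).sum())

def tv_isotropic(beta):
    """Sum over pixels of the Euclidean norm of the 2-vector (grad beta)_jk."""
    g = discrete_gradient(beta)
    return float(np.sqrt((g ** 2).sum(axis=-1)).sum())

square = np.zeros((10, 10))
square[2:6, 2:6] = 1.0                  # 4 x 4 square of ones, away from the border
rng = np.random.default_rng(3)
random_images = rng.standard_normal((20, 10, 10))
```

For the square, `tv_anisotropic(square)` is 16 = 4k with k = 4, while the isotropic value is 14 + √2: only the bottom-right corner pixel has two nonzero gradient components, and there the Euclidean norm counts √2 instead of 2.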

Let A_X be an n × N² design matrix whose ith row is the vectorized Xi. With a slight abuse of notation, we use β to denote both the coefficient matrix and its vectorized form. We may rewrite (3) in matrix form as

$$\min_{\beta}\ \|\beta\|_{TV} \quad \text{subject to} \quad \|A_X\beta - Y\|_2 \le \lambda, \tag{5}$$

where Y = (Y1, …, Yn)ᵀ.

We adapt an algorithm called TVAL3 based on the augmented Lagrangian method (Hestenes 1969; Powell 1969; Li 2013). Specifically, we solve an equivalent optimization problem given by

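where Dl is a 2 × N² matrix of constants associated with the discrete gradient. As an illustration, consider the simple case N = 2, in which β = (β11, β12, β21, β22)ᵀ. We may choose

$$D_1 = \begin{pmatrix} -1 & 0 & 1 & 0\\ -1 & 1 & 0 & 0 \end{pmatrix},\quad D_2 = \begin{pmatrix} 0 & -1 & 0 & 1\\ 0 & 0 & 0 & 0 \end{pmatrix},\quad D_3 = \begin{pmatrix} 0 & 0 & 0 & 0\\ 0 & 0 & -1 & 1 \end{pmatrix},\quad D_4 = \begin{pmatrix} 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 \end{pmatrix},$$

so that D1β = (∇β)11, D2β = (∇β)12, D3β = (∇β)21, and D4β = (∇β)22.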
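The matrices Dl can be generated for any N. This sketch assumes forward differences with zero on the last row and column, uses the row-major vectorization β = (β11, β12, …)ᵀ, and indexes pixels by l = jN + k (0-based in code). It then verifies that Dlβ reproduces (∇β)jk. For N = 2 it yields D4 = 0, because the last pixel has no forward neighbours. The function name `gradient_operators` is hypothetical.

```python
import numpy as np

def discrete_gradient(beta):
    """Forward differences; zero on the last row (component 0) and last column (component 1)."""
    g = np.zeros(beta.shape + (2,))
    g[:-1, :, 0] = beta[1:, :] - beta[:-1, :]
    g[:, :-1, 1] = beta[:, 1:] - beta[:, :-1]
    return g

def gradient_operators(N):
    """D[l] is the 2 x N^2 matrix with D[l] @ vec(beta) = (grad beta)_{jk}, l = j*N + k."""
    D = np.zeros((N * N, 2, N * N))
    for j in range(N):
        for k in range(N):
            l = j * N + k
            if j < N - 1:                    # vertical difference beta[j+1, k] - beta[j, k]
                D[l, 0, (j + 1) * N + k] = 1.0
                D[l, 0, l] = -1.0
            if k < N - 1:                    # horizontal difference beta[j, k+1] - beta[j, k]
                D[l, 1, l + 1] = 1.0
                D[l, 1, l] = -1.0
    return D

D2 = gradient_operators(2)                   # the N = 2 illustration
b5 = np.random.default_rng(7).standard_normal((5, 5))
D5 = gradient_operators(5)
stacked = np.einsum("lrc,c->lr", D5, b5.ravel())   # row l holds D_l @ vec(beta)
```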

Its corresponding augmented Lagrangian function is given by

where the vl are Lagrange multipliers and αl and γ are penalty (tuning) parameters. We find the minimizer iteratively: the subproblem at each iteration of TVAL3 is minwl,β ℒA(wl, β), and the multipliers vl are updated at each iteration. The parameters αl and γ act as smoothing parameters and could be selected by the Cp criterion or K-fold cross-validation (CV), but such a search can be computationally expensive even with current computing facilities. In our numerical examples, we therefore fix αl = 2^5 for l = 1, …, N² and γ = 2^8. A simple way to choose γ is to try values from 2^4 up to 2^13 and compare the recovered images; the results are much less sensitive to αl than to γ. We leave the optimization of tuning parameters for future research.

We describe the complete algorithm as follows.

Step 1. Initialize β(0) and the multipliers vl(0), l = 1, …, N².

Step 2. Given β = β(k) and vl(k), solve for wl(k+1), l = 1, …, N², by minimizing ℒA(wl, β(k)) over wl; the minimizer has an explicit component-wise form.

Step 3. Given wl(k+1) and vl(k), l = 1, …, N², solve for β(k+1) by minimizing ℒA(wl(k+1), β) over β; the minimizer has an explicit closed form.

Step 4. Given β = β(k+1) and wl(k+1), update the multipliers vl(k+1).

Step 5. Iterate Steps 2–4 until convergence.
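To make Steps 1–5 concrete, here is a compact alternating-direction (ADMM) sketch of the same splitting idea. It is not TVAL3 itself and is only an illustration under stated assumptions: it uses a single penalty ρ in place of the αl, treats the data fit as the quadratic term (γ/2)||A_Xβ − Y||²₂ (a penalized form in the spirit of (4)), and solves the β-step with a precomputed dense inverse, which is only reasonable for small N. The w-step is the component-wise soft-thresholding produced by the ℓ1 (anisotropic) splitting, and the last line of the loop is the multiplier update. All names and tuning values are hypothetical.

```python
import numpy as np

def gradient_matrix(N):
    """2N^2 x N^2 forward-difference operator for row-major vec(beta); zero past the last row/column."""
    d = np.diag(-np.ones(N)) + np.diag(np.ones(N - 1), 1)
    d[-1, :] = 0.0
    eye = np.eye(N)
    return np.vstack([np.kron(d, eye),      # beta[j+1, k] - beta[j, k]
                      np.kron(eye, d)])     # beta[j, k+1] - beta[j, k]

def soft_threshold(x, t):
    """Component-wise shrinkage: argmin_w ||w||_1 + (1 / (2t)) ||w - x||^2."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def tv_admm(A, y, N, gamma, rho=5.0, iters=5000):
    """ADMM for min_beta (gamma/2)||A beta - y||^2 + ||D beta||_1 with the split w = D beta."""
    D = gradient_matrix(N)
    M_inv = np.linalg.inv(gamma * A.T @ A + rho * D.T @ D)   # fixed matrix of the beta-step
    Aty = gamma * A.T @ y
    w = np.zeros(D.shape[0])
    u = np.zeros(D.shape[0])            # scaled multiplier (multiplier divided by rho)
    beta = np.zeros(N * N)
    for _ in range(iters):
        beta = M_inv @ (Aty + rho * D.T @ (w - u))   # quadratic subproblem in beta
        Dbeta = D @ beta
        w = soft_threshold(Dbeta + u, 1.0 / rho)      # shrinkage subproblem in w
        u = u + Dbeta - w                             # multiplier update
    return beta.reshape(N, N)

def objective(A, y, beta, N, gamma):
    b = beta.ravel()
    return 0.5 * gamma * np.sum((A @ b - y) ** 2) + np.abs(gradient_matrix(N) @ b).sum()

rng = np.random.default_rng(0)
N, n, gamma = 16, 150, 200.0
beta0 = np.zeros((N, N))
beta0[4:12, 4:12] = 1.0                                   # piecewise-constant truth
A = rng.standard_normal((n, N * N)) / np.sqrt(n)          # rows play the role of vec(X_i)
y = A @ beta0.ravel() + 0.01 * rng.standard_normal(n)
beta_hat = tv_admm(A, y, N, gamma)
rel_err = np.linalg.norm(beta_hat - beta0) / np.linalg.norm(beta0)
```

With fewer measurements (150) than pixels (256), plain least squares is not identifiable here; the TV term is what lets the iterates lock onto the square's edges. For realistic N, the dense inverse should be replaced by a fast solver for the β-step.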

2.3 The error bound

In this subsection, we establish nonasymptotic error bounds for the TV estimator β̂ based on model (2). We consider two distances to measure the error. The first one is the weighted L2 distance

$$\|\hat\beta - \beta_0\|_{X,2} = \big\{\mathbb{E}^{*}\langle X_{n+1}, \hat\beta - \beta_0 \rangle^2\big\}^{1/2},$$

where 𝔼* denotes expectation with respect to (Yn+1, Xn+1) only. The second one is the TV distance between β̂ and β0, ||β̂ − β0||TV.

We derive both error bounds by means of the Haar wavelet basis. Wavelet bases are commonly used to represent images efficiently, and the Haar wavelet is the simplest possible wavelet. The bivariate Haar wavelet basis for L²(Ω) can be constructed as follows. Let ϕ0(t) = I_{[0,1)}(t) be the indicator function, and let the mother wavelet be ϕ1(t) = 1 for t ∈ [0, 1/2) and ϕ1(t) = −1 for t ∈ [1/2, 1). Starting from the bivariate functions

$$\phi_{d}(u, v) = \phi_{d_1}(u)\,\phi_{d_2}(v), \qquad d = (d_1, d_2) \in \{0, 1\}^2,$$

the bivariate Haar basis functions include the indicator function I_{[0,1)²} and the functions

$$\phi^{d}_{j,k}(u, v) = 2^{j}\,\phi_{d}\big(2^{j}(u, v) - k\big), \qquad d \in \{(0,1), (1,0), (1,1)\},\ j \ge 0,\ k \in \mathbb{Z}^2 \cap 2^{j}[0,1)^2.$$

The bivariate Haar wavelet basis is an orthonormal basis for L²([0, 1)²). Note that discrete images are isometric to the space ℐ_N ⊂ L²([0, 1)²) of piecewise constant functions via the identification c_jk = N X_jk. Letting N = 2^J, the bivariate Haar basis restricted to the N² basis functions {I_{[0,1)²}, ϕ^d_{j,k} : j ≤ J − 1, d ∈ {(0, 1), (1, 0), (1, 1)}, k ∈ ℤ² ∩ 2^j[0, 1)²} forms an orthonormal basis for ℝ^{N×N}. Denote by Φ the discrete bivariate Haar transformation and by {ϕl} the Haar basis, so that Φβ ∈ ℝ^{N×N} contains the bivariate Haar wavelet coefficients of β. Next, we review a deep and nontrivial result of Petrushev et al. (1999) on BV(Ω): the Haar wavelet coefficients of β0 ∈ BV(Ω) are in weak ℓ1. That is, if the Haar coefficients are sorted in decreasing order of their absolute values, then the l-th rearranged coefficient is bounded in absolute value by c||β0||BV/l, where c is an absolute constant.
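A minimal sketch of the discrete orthonormal 2-D Haar transform Φ, assuming N = 2^J and the standard multilevel scheme (one averaging/differencing step on rows and on columns, then recursion on the low-pass block). It checks perfect reconstruction, energy preservation, and orthonormality of the N² basis images for N = 8, and computes max_l l·|c_(l)|, the weak-ℓ1 quantity in the result of Petrushev et al. (1999), for a piecewise-constant disk. The helper names are our own.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar2d(img):
    """Orthonormal multilevel 2-D Haar transform of an N x N array, N = 2**J."""
    a = np.array(img, dtype=float)
    n = a.shape[0]
    while n > 1:
        blk = a[:n, :n]
        blk = np.hstack([(blk[:, 0::2] + blk[:, 1::2]) / SQRT2,    # averages | details
                         (blk[:, 0::2] - blk[:, 1::2]) / SQRT2])
        blk = np.vstack([(blk[0::2, :] + blk[1::2, :]) / SQRT2,    # averages / details
                         (blk[0::2, :] - blk[1::2, :]) / SQRT2])
        a[:n, :n] = blk
        n //= 2
    return a

def ihaar2d(coef):
    """Inverse of haar2d: undo the column step, then the row step, from coarse to fine."""
    a = np.array(coef, dtype=float)
    N, n = a.shape[0], 2
    while n <= N:
        h = n // 2
        blk = a[:n, :n]
        rows = np.empty((n, n))
        rows[0::2, :] = (blk[:h, :] + blk[h:, :]) / SQRT2
        rows[1::2, :] = (blk[:h, :] - blk[h:, :]) / SQRT2
        out = np.empty((n, n))
        out[:, 0::2] = (rows[:, :h] + rows[:, h:]) / SQRT2
        out[:, 1::2] = (rows[:, :h] - rows[:, h:]) / SQRT2
        a[:n, :n] = out
        n *= 2
    return a

x = np.random.default_rng(6).standard_normal((16, 16))
x_coef = haar2d(x)
B = np.stack([ihaar2d(e.reshape(8, 8)).ravel() for e in np.eye(64)])  # rows = basis images

N = 32
u, v = np.meshgrid(np.arange(N), np.arange(N), indexing="ij")
disk = ((u - 15.5) ** 2 + (v - 15.5) ** 2 < 10.0 ** 2).astype(float)
sorted_abs = np.sort(np.abs(haar2d(disk)).ravel())[::-1]        # |c_(1)| >= |c_(2)| >= ...
weak_l1 = float(np.max(np.arange(1, N * N + 1) * sorted_abs))   # max_l l * |c_(l)|
```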

Invoking Haar wavelets is only for theoretical investigation and we do not estimate the Haar coefficients directly. We now introduce the main assumptions of this paper:

A1. Assume that the coefficient image β0 lies in the space of N × N blocks of pixel values with bounded variation, and that the error ε is sub-Gaussian.

A2. Assume that the discrete Haar representation of the image predictor X is X = Σ_{l=1}^{N²} ρl^{1/2} ξl ϕl, where the ρl are positive constants and the ξl are independent and identically distributed sub-Gaussian random variables with zero mean and unit variance.

A3. For any β ∈ BV(Ω), write β = Σl γl ϕl, where the γl are the Haar basis coefficients of β. Arrange the γl in decreasing order of their absolute values and denote the sorted coefficients by γ(l). Assume that the correspondingly sorted ρ(s), associated with the same basis functions, satisfy c1 s^{−2q} ≤ ρ(s) ≤ c2 s^{−2q} with q > 1/2 for each s and two positive constants c1 and c2.

Assumption A2 on the wavelet representation of X is reasonable because the discrete wavelet transformation approximately decorrelates or “whitens” data (Vidakovic 1999). Although we could use the Karhunen-Loève expansion of X, we do not adopt this approach, in order to avoid the additional complexity of estimating eigenfunctions. When we sort the Haar wavelet coefficients of both β and X, the corresponding basis functions may not follow the same order. Assumption A3 specifies the decay rate of the Haar wavelet coefficients of X. From A2, the predictor images Xi can be written as Xi = Σl ρl^{1/2} ξil ϕl. Let Ã be an n × N² matrix with (i, l)-th element n^{−1/2} ξil. It is well known that Ã satisfies the restricted isometry property (RIP) with high probability (Candès et al. 2006a, 2006b). Specifically, if n ≥ Cδ^{−2} s log(N²/s), then with probability exceeding 1 − 2e^{−Cn}, we have

$$(1 - \delta)\,\|u\|_2^2 \le \|\tilde{A}u\|_2^2 \le (1 + \delta)\,\|u\|_2^2 \tag{6}$$

for all s-sparse vectors u ∈ ℝ^{N²}, with a small RIP constant δ < C.
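Sampling random sparse vectors gives only a lower bound on the RIP constant δ (certifying (6) would require checking all s-sparse vectors), but it illustrates the concentration behind it. The sketch assumes Gaussian ξil and the n^{−1/2} scaling of Ã; n, N² = 1024, and s are arbitrary toy values.

```python
import numpy as np

rng = np.random.default_rng(4)
n, p, s, trials = 400, 1024, 10, 200               # p plays the role of N^2
A_tilde = rng.standard_normal((n, p)) / np.sqrt(n)  # entries xi_il / sqrt(n), i.i.d. N(0, 1/n)

ratios = np.empty(trials)
for t in range(trials):
    u = np.zeros(p)
    support = rng.choice(p, size=s, replace=False)  # random s-sparse support
    u[support] = rng.standard_normal(s)
    ratios[t] = np.sum((A_tilde @ u) ** 2) / np.sum(u ** 2)

delta_lower_bound = float(np.max(np.abs(ratios - 1.0)))  # any valid delta must exceed this
```

For a fixed u, ||Ãu||²/||u||² is distributed as χ²_n/n, so its spread around 1 shrinks like (2/n)^{1/2}.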

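Let {γ̂l} and {γl} be, respectively, the wavelet coefficients of β̂ and β0. Because the ϕl are orthonormal and the ξl in A2 are uncorrelated with unit variance, ||β̂ − β0||X,2 = {Σl ρl(γ̂l − γl)²}^{1/2}, which is the weighted L2-norm of the wavelet coefficient difference. On the other hand, since ||ϕl||TV ≤ 8 (Needell and Ward 2013),

$$\|\hat\beta - \beta_0\|_{TV} \le \sum_{l} |\hat\gamma_l - \gamma_l|\,\|\phi_l\|_{TV} \le 8\sum_{l} |\hat\gamma_l - \gamma_l|,$$

which is bounded by a multiple of the L1-norm of the wavelet coefficient difference. We obtain the following theorem, whose detailed proof can be found in the Appendix.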
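The constant 8 can be checked numerically for the discrete Haar basis. Assuming forward-difference anisotropic TV (zero difference past the last row and column), an interior (1, 1)-type basis image supported on an m × m block takes values ±1/m, and its jumps sum to exactly 8; every other basis image has smaller TV. The sketch builds all N² basis images by inverting unit coefficient vectors with a hypothetical `ihaar2d` helper.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def ihaar2d(coef):
    """Inverse orthonormal multilevel 2-D Haar transform (N = 2**J)."""
    a = np.array(coef, dtype=float)
    N, n = a.shape[0], 2
    while n <= N:
        h = n // 2
        blk = a[:n, :n]
        rows = np.empty((n, n))
        rows[0::2, :] = (blk[:h, :] + blk[h:, :]) / SQRT2
        rows[1::2, :] = (blk[:h, :] - blk[h:, :]) / SQRT2
        out = np.empty((n, n))
        out[:, 0::2] = (rows[:, :h] + rows[:, h:]) / SQRT2
        out[:, 1::2] = (rows[:, :h] - rows[:, h:]) / SQRT2
        a[:n, :n] = out
        n *= 2
    return a

def tv_anisotropic(beta):
    """Sum of |forward differences| along both axes (nothing counted past the border)."""
    return float(np.abs(np.diff(beta, axis=0)).sum() + np.abs(np.diff(beta, axis=1)).sum())

N = 16
tvs = np.array([tv_anisotropic(ihaar2d(e.reshape(N, N))) for e in np.eye(N * N)])
max_tv = float(tvs.max())
```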

Theorem 2.1

Suppose that Assumptions A1–A3 hold. Let C be an absolute constant and λ = Cn^{1/2}. If n ≥ Cs^{2q+1} log(N²/s^{2q+1}) and δ < 1/3 in (6), then with probability greater than 1 − 2 exp(−Cn), we have

| (7) |

and

| (8) |

where (∇β0)_s = arg min_{u: s-sparse} ||∇β0 − u||1 is the best s-sparse approximation to the discrete gradient ∇β0.
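The best s-sparse approximation keeps the s largest entries in absolute value, so ||∇β0 − (∇β0)_s||1 is the sum of the remaining absolute entries. It is zero whenever ∇β0 has at most s nonzero entries. A small sketch (function names hypothetical):

```python
import numpy as np

def best_s_sparse(v, s):
    """Keep the s entries of v with largest |value| and zero out the rest."""
    v = np.asarray(v, dtype=float)
    out = np.zeros_like(v)
    if s > 0:
        keep = np.argsort(np.abs(v), axis=None)[-s:]   # flat indices of the s largest |v|
        out.flat[keep] = v.flat[keep]
    return out

def sparse_approx_error(v, s):
    """||v - v_s||_1, the L1 mass outside the s largest entries."""
    return float(np.abs(np.asarray(v, dtype=float) - best_s_sparse(v, s)).sum())

v = np.array([3.0, -1.0, 0.5, -4.0, 2.0])
err2 = sparse_approx_error(v, 2)     # keeps -4 and 3, leaving 2 + 1 + 0.5

g = np.zeros((8, 8, 2))              # a gradient field with exactly four nonzero entries
g[3, 2:6, 0] = 1.0
```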

Theorem 2.1 provides non-asymptotic error bounds for ||β̂ − β0||X,2 and ||β̂ − β0||TV, which are specified explicitly in terms of sample size n and image size N × N, and the underlying smoothness of the true coefficient image based on the discrete gradient.

Remark 2.1

We call a prediction “stable” if ||β̂ − β0||X,2 ≤ Cσ holds with high probability. Assume that the coefficient image has a sparse discrete gradient, i.e., ∇β0 is supported on S0 with |S0| ≤ s. If λ = Cn^{1/2}, then Theorem 2.1 shows that ||β̂ − β0||X,2 ≤ Cσ, which indicates that our prediction procedure is stable. Furthermore, in the extreme case of noiseless data, our prediction is exact. The required sample size n is of order s^{2q+1} log(N²/s^{2q+1}), which depends on the smoothness of the true coefficient image β0, the relative smoothness between β0 and X, and the image size N × N.

Remark 2.2

The parameter q characterizes the decay rate of the wavelet coefficients of X. The larger q is, the larger the required sample size. Theorem 2.1 also shows that the larger q is, the smaller the prediction error. When q = 0, we recover the special case discussed in Needell and Ward (2013).

3 Generalized scalar-on-image regression models

In this section, we extend the developments for model (2) to GSIRM-TV (1). Given X ∈ ℝ^{N×N} and Z ∈ ℝ^p, the response variable Y is assumed to follow an exponential family distribution

$$f(Y \mid X, Z) = \exp\Big\{\frac{Y\eta - b(\eta)}{a(\psi)} + c(Y, \psi)\Big\}, \tag{9}$$

where a(·), b(·), and c(·) are known functions, and ψ is either known or treated as a nuisance parameter. GSIRM-TV also assumes β0 ∈ BV(Ω). It can be shown (Nelder and Wedderburn 1972) that

$$\mathbb{E}(Y \mid X, Z) = \dot{b}(\eta), \qquad \mathrm{Var}(Y \mid X, Z) = a(\psi)\,\ddot{b}(\eta),$$

where ḃ(η) and b̈(η) are, respectively, the first and second derivatives of b(η) with respect to η. Moreover, η = η(X, Z; β0, θ0) = Zᵀθ0 + 〈X, β0〉 is the canonical parameter of (9). A Gaussian distribution with variance σ² has a(ψ) = σ² and b(η) = η²/2, a Bernoulli distribution has a(ψ) = 1 and b(η) = log(1 + e^η), and a Poisson distribution has a(ψ) = 1 and b(η) = e^η.
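The three examples can be checked numerically. The sketch below encodes b(η), ḃ(η), and b̈(η) for the Gaussian, Bernoulli, and Poisson cases and returns the conditional mean ḃ(η) and variance a(ψ)b̈(η); central finite differences can be used to confirm the derivatives. The dictionary layout and function name are our own choices.

```python
import numpy as np

# (b, b_dot, b_ddot) for three exponential families.
FAMILIES = {
    "gaussian": (lambda e: e ** 2 / 2.0, lambda e: e, lambda e: np.ones_like(e)),
    "bernoulli": (lambda e: np.log1p(np.exp(e)),
                  lambda e: 1.0 / (1.0 + np.exp(-e)),
                  lambda e: np.exp(-e) / (1.0 + np.exp(-e)) ** 2),
    "poisson": (np.exp, np.exp, np.exp),
}

def conditional_mean_var(family, eta, a_psi=1.0):
    """E(Y | X, Z) = b'(eta) and Var(Y | X, Z) = a(psi) * b''(eta)."""
    _, b_dot, b_ddot = FAMILIES[family]
    eta = np.asarray(eta, dtype=float)
    return b_dot(eta), a_psi * b_ddot(eta)

eta = np.linspace(-3.0, 3.0, 13)
h = 1e-5                                       # finite-difference step
mean_b, var_b = conditional_mean_var("bernoulli", np.array([0.0]))
mean_g, var_g = conditional_mean_var("gaussian", eta, a_psi=2.0)   # sigma^2 = 2
```

For the Bernoulli case at η = 0 the mean is 1/2 and the variance 1/4, matching p(1 − p) with p = 1/2.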

3.1 Estimation

Let ξ = (θ, β) ∈ ℝ^p × BV(Ω). Given the observed data, we propose to find estimates ξ̂ by minimizing a penalized likelihood function given by

| (10) |

We calculate ξ̂ = (θ̂, β̂) by a modification of the standard iteratively reweighted least squares (IRLS) algorithm for GLMs that adds a TV penalty. Given a trial estimate of ξ, denoted by ξ̂I, we introduce the iterative weights and the working dependent variable as

| (11) |

where μ̂i,I = μ(Xi, Zi; ξ̂I), ġ(μ) = dg(μ)/dμ, and η̂i,I = η(Xi, Zi; ξ̂I). Then, we can calculate the next estimate of ξ, denoted by ξ̂I+1, by minimizing

| (12) |

where ∂ξ = ∂/∂ξ. The optimization in (12) can be solved efficiently by the TVAL3 algorithm discussed in Section 2. We repeat this update until ξ̂I converges.

We provide the complete algorithm as follows.

Step 1. Initialize ξ(0) = (θ(0), β(0)).

Step 2. For each k, define the weights and the working dependent variable in (11), and define the objective function in (12). Use TVAL3 algorithm to solve for ξ(k+1) = (θ(k+1), β(k+1)).

Step 3. Repeat Step 2 until convergence.

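We consider the logistic scalar-on-image regression model as an example. Specifically, Yi given (Xi, Zi) follows a Bernoulli distribution with success probability pi and log{pi/(1 − pi)} = Ziᵀθ0 + 〈Xi, β0〉 for i = 1, …, n. Given the current estimate ξ̂I, let p̂i,I = exp(η̂i,I)/{1 + exp(η̂i,I)}. It is easy to obtain the iterative weight and the working response variable, respectively, as

$$w_{i,I} = \hat p_{i,I}\,(1 - \hat p_{i,I}), \qquad \tilde Y_{i,I} = \hat\eta_{i,I} + \frac{Y_i - \hat p_{i,I}}{\hat p_{i,I}\,(1 - \hat p_{i,I})}.$$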

Therefore, the estimate ξ̂I+1 can be obtained by solving a weighted penalized least squares in (12).
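As a minimal sketch of the reweighting step, the code below uses the standard logistic quantities w = p̂(1 − p̂) and working response η̂ + (Y − p̂)/w. To keep it short, it drops the image and the TV term, so that each update is an ordinary weighted least squares fit in θ; in GSIRM-TV that weighted least squares step would instead be the TV-penalized problem (12) solved by TVAL3. Convergence is checked through the score Zᵀ(Y − p̂). All names and the simulated data are illustrative.

```python
import numpy as np

def logistic_working_quantities(eta_hat, y):
    """IRLS weight w = p(1 - p) and working response z = eta + (y - p) / w."""
    p_hat = 1.0 / (1.0 + np.exp(-eta_hat))
    w = p_hat * (1.0 - p_hat)
    z = eta_hat + (y - p_hat) / w
    return w, z

def irls_logistic(Z, y, iters=25):
    """Unpenalized IRLS for logistic regression on scalar covariates Z only."""
    theta = np.zeros(Z.shape[1])
    for _ in range(iters):
        w, z = logistic_working_quantities(Z @ theta, y)
        ZtW = Z.T * w                                   # Z^T W with W = diag(w)
        theta = np.linalg.solve(ZtW @ Z, ZtW @ z)       # weighted least squares step
    return theta

rng = np.random.default_rng(2)
n = 200
Z = np.column_stack([np.ones(n), rng.standard_normal((n, 2))])   # first column is constant one
theta0 = np.array([-0.5, 1.0, -1.0])
y = rng.binomial(1, 1.0 / (1.0 + np.exp(-Z @ theta0))).astype(float)

theta_hat = irls_logistic(Z, y)
score = Z.T @ (y - 1.0 / (1.0 + np.exp(-Z @ theta_hat)))         # zero at the MLE
```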

3.2 The error bound

We establish a nonasymptotic prediction error bound for GSIRM-TV under the following additional assumptions.

B1. Assume that η(X, Z; β0, θ0) is bounded almost surely. Given (X, Z), the response Y is sub-Gaussian, i.e., 𝔼{exp(t[Y − ḃ(η(X, Z; β0, θ0))]) | X, Z} ≤ exp(t²σ̃²/2) for some σ̃² > 0 and all t ∈ ℝ.

B2. The function ḃ(·) is monotonic with inf_t b̈(t) ≥ c3 and sup_t b̈(t) ≤ c4 for two positive constants c3 and c4.

The sub-Gaussian assumption B1 holds for many well-known distributions, such as the Gaussian distribution. Assumption B2 requires that the second derivative of b(·) be bounded above and bounded away from zero.

Theorem 3.1

Suppose that Assumptions A1–A3 and B1–B2 hold. Let λ = Cn^{−1/2}, where C is a positive constant. If n ≥ Cs^{2q+1} log(N²/s^{2q+1}) and δ < 1/3 in (6), then with probability greater than 1 − 2 exp(−Cn), we have ||θ̂ − θ0||2 ≤ Cn^{−1/2},

| (13) |

and

| (14) |

The conditional mean of Yn+1 given Xn+1 is ḃ(η(Xn+1, β0)). We may measure the accuracy of β̂ by 𝔼*[ḃ(η(Xn+1, β̂)) − ḃ(η(Xn+1, β0))]². By the mean value theorem and B2, this risk is bounded by c4²||β̂ − β0||²X,2, so it is reasonable to study the nonasymptotic behavior of ||β̂ − β0||X,2. Theorem 2.1 is a special case of Theorem 3.1 when the responses in Theorem 2.1 are assumed to follow a normal distribution. If the coefficient image has a sparse discrete gradient, Theorem 3.1 shows that ||β̂ − β0||X,2 is bounded by a constant, which is proportional to σ under the assumptions of Theorem 2.1. This shows that our prediction procedure is also stable for GSIRM-TV.

4 Simulation Studies

In this section, we conduct a set of Monte Carlo simulations to examine the finite-sample performance of the TV estimate β̂ and compare it with five competing methods. The first approach (Lasso) computes the Lasso estimate of β0. The second (Lasso-Haar) computes the Lasso estimates of the Haar coefficients of β0 and then applies the inverse discrete wavelet transform to estimate β0. The third (Matrix-Reg) estimates β0 by regularized matrix regression (Zhou and Li 2014), which treats the coefficient image as a matrix and penalizes its nuclear norm. The fourth (FPCR) is the functional principal component regression approach (Reiss and Ogden 2007, 2010), which uses tensor-product cubic B-splines to approximate the coefficient function. The fifth (WNET) performs scalar-on-image regression in the wavelet domain by the naive elastic net (Zhao et al. 2014). Among these six approaches, the TV, Lasso, Lasso-Haar, and Matrix-Reg methods were implemented in MATLAB, and the FPCR and WNET methods are implemented in the R packages ‘refund’ and ‘refund.wave’ (see Reiss et al. 2015), respectively. For FPCR and WNET, we used the default settings of both packages; the wavelet basis in WNET is the Daubechies basis.

We present results based on the linear scalar-on-image regression model (2). Specifically, the Xi were simulated on a 64 × 64 phantom map (N = 64, i.e., 4,096 pixels) according to the spatially correlated random process Xi = Σl l^{−q/2} ξil ϕl with q = 0, 0.5, and 1, where the ξil are standard normal random variables and the ϕl are bivariate Haar wavelet basis functions. We consider four different β0 images: triangle, oval, T-shape, and checkerboard (Figure 3). The triangle and oval are convex, while the other two shapes are not. The errors εi were independently generated from N(0, 1). We set n1 = 300 for the training set and n2 = 100 for the test set, and repeated each setting 100 times. We used the root mean squared prediction error (RMSPE) to compare the finite-sample performance of the six estimation methods. Let β̂ be the coefficient image estimated from the training set and Ŷi = 〈β̂, Xi〉 the predicted responses for the test set. For each test set, RMSPE is defined by

$$\mathrm{RMSPE} = \Big\{\frac{1}{n_2}\sum_{i=1}^{n_2}\big(Y_i - \hat{Y}_i\big)^2\Big\}^{1/2}.$$

Figure 3.

The true coefficient images used for the simulation study.

We also calculated the means and standard errors of RMSPEs for the 100 testing datasets.
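A sketch of the simulation design and the RMSPE, under stated assumptions: the index l in Xi = Σl l^{−q/2} ξil ϕl is taken to run over Haar basis functions from coarse to fine scale (the ordering is our assumption), N = 16 instead of 64 to keep it fast, and the inverse Haar transform is the orthonormal multilevel scheme. With q = 0 the pixels are i.i.d. N(0, 1), since an orthonormal transform of white noise is white noise; q = 1 puts more weight on coarse, spatially extended basis functions. All helper names are hypothetical.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def ihaar2d(coef):
    """Inverse orthonormal multilevel 2-D Haar transform (N = 2**J)."""
    a = np.array(coef, dtype=float)
    N, n = a.shape[0], 2
    while n <= N:
        h = n // 2
        blk = a[:n, :n]
        rows = np.empty((n, n))
        rows[0::2, :] = (blk[:h, :] + blk[h:, :]) / SQRT2
        rows[1::2, :] = (blk[:h, :] - blk[h:, :]) / SQRT2
        out = np.empty((n, n))
        out[:, 0::2] = (rows[:, :h] + rows[:, h:]) / SQRT2
        out[:, 1::2] = (rows[:, :h] - rows[:, h:]) / SQRT2
        a[:n, :n] = out
        n *= 2
    return a

def coarse_to_fine_rank(N):
    """Rank 1..N^2 of each Haar coefficient position, coarse scales first (assumed ordering)."""
    r, c = np.meshgrid(np.arange(N), np.arange(N), indexing="ij")
    m = np.maximum(r, c)
    level = np.zeros_like(m)                                    # level 0 = scaling coefficient
    level[m > 0] = np.floor(np.log2(m[m > 0])).astype(int) + 1
    order = np.lexsort((c.ravel(), r.ravel(), level.ravel()))  # sort by level, then position
    rank = np.empty(N * N, dtype=int)
    rank[order] = np.arange(1, N * N + 1)
    return rank.reshape(N, N)

def simulate_predictors(n, N, q, rng):
    """X_i = sum_l l^(-q/2) xi_il phi_l with xi_il i.i.d. N(0, 1)."""
    weights = coarse_to_fine_rank(N).astype(float) ** (-q / 2.0)
    return np.stack([ihaar2d(weights * rng.standard_normal((N, N))) for _ in range(n)])

def rmspe(y, y_hat):
    """Root mean squared prediction error over a test set."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    return float(np.sqrt(np.mean((y - y_hat) ** 2)))

rng = np.random.default_rng(5)
X_white = simulate_predictors(500, 16, q=0.0, rng=rng)
X_corr = simulate_predictors(500, 16, q=1.0, rng=rng)
beta0 = np.zeros((16, 16))
beta0[4:8, 4:8] = 1.0
signal = np.einsum("ijk,jk->i", X_corr, beta0)           # <beta0, X_i>
y_test = signal + rng.standard_normal(500)               # N(0, 1) errors
oracle_rmspe = rmspe(y_test, signal)                      # predicting with the true beta0
```

Even the oracle β̂ = β0 has RMSPE near 1 here, the error standard deviation, which is a useful floor when reading Table 1.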

Figures 4 and 5 present the estimated β0 from a randomly selected training dataset with q = 0 and q = 0.5, respectively, for sample size n = 300. For all four shapes, our TV estimates capture the sharp boundaries of the underlying shapes. In contrast, the Lasso method fails for all shapes, since the predictor images Xi are highly correlated. The Lasso estimates of the Haar coefficients roughly capture the true shapes. However, this method cannot faithfully recover the sharp boundaries of the triangle, oval, and T shapes, whereas it works very well for the checkerboard shape, since the checkerboard is exactly one of the bivariate Haar wavelet basis functions. The matrix regression approach roughly captures the true shapes when q = 0, but it fails when q = 0.5, for which the entries of X are spatially correlated. The FPCR approach uses splines to approximate the predictor images and cannot preserve the sharp edges of the coefficient image in our examples. The WNET method fails when q = 0, but it captures the shapes of the true coefficient image when the predictors are more spatially correlated.

Figure 4.

The estimated coefficient images from six estimation methods when q = 0 and n = 300: TV (Top row); Lasso (Second row); Lasso-Haar (Third row); Matrix regression (Fourth row); FPCR (Fifth row); and WNET (Sixth row).

Figure 5.

The estimated coefficient images from six methods when q = 0.5 and n = 300: TV (Top row); Lasso (Second row); Lasso-Haar (Third row); Matrix regression (Fourth row); FPCR (Fifth row); and WNET (Sixth row).

Table 1 presents the RMSPEs of all six methods across all shapes. Overall, our TV method has much smaller prediction errors, in particular for q = 0; the exception is the checkerboard shape, for which Lasso-Haar performs best because the checkerboard is itself a Haar basis function. As expected, the Lasso method generally leads to the largest prediction errors. For all methods, the larger q is, the smaller the RMSPE. For larger q, i.e., more spatially correlated predictor images, the performances of TV, Lasso-Haar, FPCR, and WNET are similar to each other.

Table 1.

The RMSPEs of six methods including TV, Lasso, Lasso-Haar, Matrix-Reg, FPCR, and WNET for four different shapes: the numbers in brackets are the corresponding standard errors of those RMSPEs.

| Shape | TV, q = 0 | TV, q = 0.5 | TV, q = 1 | Lasso, q = 0 | Lasso, q = 0.5 | Lasso, q = 1 | Lasso-Haar, q = 0 | Lasso-Haar, q = 0.5 | Lasso-Haar, q = 1 |
|---|---|---|---|---|---|---|---|---|---|
| Triangle | 3.20 (0.70) | 1.53 (0.12) | 1.18 (0.08) | 34.02 (2.25) | 13.12 (0.95) | 3.96 (0.32) | 18.76 (1.43) | 5.08 (0.52) | 1.98 (0.17) |
| Oval | 1.69 (0.22) | 1.49 (0.13) | 1.22 (0.09) | 31.16 (2.00) | 11.83 (0.95) | 3.57 (0.25) | 14.99 (1.24) | 3.82 (0.28) | 1.67 (0.15) |
| T-shape | 2.04 (0.51) | 1.47 (0.09) | 1.23 (0.10) | 30.10 (2.41) | 18.81 (0.81) | 3.34 (0.28) | 19.65 (1.84) | 4.62 (0.46) | 1.69 (0.16) |
| Checkerboard | 6.27 (1.09) | 1.45 (0.11) | 1.10 (0.08) | 49.00 (3.54) | 25.46 (1.65) | 9.08 (0.83) | 1.43 (0.14) | 1.06 (0.08) | 1.05 (0.07) |

| Shape | Matrix-Reg, q = 0 | Matrix-Reg, q = 0.5 | Matrix-Reg, q = 1 | FPCR, q = 0 | FPCR, q = 0.5 | FPCR, q = 1 | WNET, q = 0 | WNET, q = 0.5 | WNET, q = 1 |
|---|---|---|---|---|---|---|---|---|---|
| Triangle | 23.13 (1.78) | 6.46 (0.55) | 4.00 (0.34) | 10.89 (0.76) | 2.27 (0.18) | 1.19 (0.09) | 28.42 (2.45) | 2.10 (0.17) | 1.28 (0.10) |
| Oval | 18.81 (1.58) | 5.58 (0.37) | 3.41 (0.30) | 9.28 (0.68) | 2.12 (0.15) | 1.19 (0.09) | 24.74 (2.40) | 2.03 (0.16) | 1.26 (0.10) |
| T-shape | 20.97 (1.45) | 5.49 (0.38) | 3.57 (0.33) | 11.69 (0.82) | 3.42 (0.26) | 1.64 (0.12) | 24.41 (2.18) | 2.52 (0.21) | 1.46 (0.13) |
| Checkerboard | 35.33 (2.89) | 12.80 (0.95) | 6.35 (0.38) | 10.70 (0.83) | 3.05 (0.22) | 1.14 (0.10) | 44.24 (3.23) | 5.41 (0.55) | 2.03 (0.15) |

5 Real data analysis

To illustrate the usefulness of our proposed model, we consider anatomical MRI data collected at baseline by the Alzheimer’s Disease Neuroimaging Initiative (ADNI) study, which is a large-scale multisite study collecting clinical, imaging, and laboratory data at multiple time points from healthy controls, individuals with amnestic mild cognitive impairment, and subjects with Alzheimer’s disease (AD). “Data used in the preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). The ADNI was launched in 2003 by the National Institute on Aging (NIA), the National Institute of Biomedical Imaging and Bioengineering (NIBIB), the Food and Drug Administration (FDA), private pharmaceutical companies and non-profit organizations, as a $60 million, 5-year public-private partnership. The primary goal of ADNI has been to test whether serial magnetic resonance imaging (MRI), positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment can be combined to measure the progression of mild cognitive impairment (MCI) and early Alzheimer’s disease (AD). Determination of sensitive and specific markers of very early AD progression is intended to aid researchers and clinicians to develop new treatments and monitor their effectiveness, as well as lessen the time and cost of clinical trials. The Principal Investigator of this initiative is Michael W. Weiner, MD, VA Medical Center and University of California, San Francisco. ADNI is the result of efforts of many co-investigators from a broad range of academic institutions and private corporations, and subjects have been recruited from over 50 sites across the U.S. and Canada. The initial goal of ADNI was to recruit 800 subjects but ADNI has been followed by ADNI-GO and ADNI-2. To date these three protocols have recruited over 1500 adults, ages 55 to 90, to participate in the research, consisting of cognitively normal older individuals, people with early or late MCI, and people with early AD. The follow up duration of each group is specified in the protocols for ADNI-1, ADNI-2 and ADNI-GO. Subjects originally recruited for ADNI-1 and ADNI-GO had the option to be followed in ADNI-2. For up-to-date information, see www.adni-info.org.”

Alzheimer’s disease, an age-related neurodegenerative brain disorder, is often characterized by progressive memory loss and deterioration of cognitive function (De La Torre 2010; Weiner et al. 2012). Important neuropathological hallmarks of AD are the gradual intraneuronal accumulation of neurofibrillary tangles formed as a result of abnormal hyperphosphorylation of the cytoskeletal tau protein, the extracellular deposition of amyloid-β (Aβ) protein as senile plaques, and massive neuronal death. These pathologies are evident in the hippocampus, which is located in the medial temporal lobe beneath the cortical surface, and in other vulnerable brain areas. The hippocampus belongs to the limbic system and plays important roles in spatial navigation and in consolidating information from short-term to long-term memory (Colom et al. 2013; Fennema-Notestine et al. 2009; Luders et al. 2013).

Given the MRI scans, hippocampal substructures were segmented with FSL FIRST (Patenaude et al. 2011), and hippocampal surfaces were automatically reconstructed with the marching cubes method (Lorensen and Cline 1987). We adopted a hippocampal subregional analysis package based on surface fluid registration (Shi et al. 2013), which uses isothermal coordinates and fluid registration to generate one-to-one hippocampal surface registration for computing surface statistics. The package introduces two cuts, at the front and back of each hippocampal surface, to convert it into a genus-zero surface with two open boundaries. Through conformal parameterization, it essentially converts a 3D surface registration problem into a two-dimensional (2D) image registration problem. The flow induced in the parameter domain establishes high-order correspondences between 3D surfaces. Finally, various surface statistics were computed on the registered surface, such as multivariate tensor-based morphometry (mTBM) statistics (Wang et al. 2010), which retain the full tensor information of the deformation Jacobian matrix, together with the radial distance (Pizer et al. 1999). This software package and the associated image processing methods have been adopted and described in various studies (Shi et al. 2014).

We applied GSIRM-TV to the hippocampus data set derived from ADNI. The sample in our investigation includes n = 403 subjects: 223 healthy controls (HC) (107 females and 116 males) and 180 individuals with AD (87 females and 93 males). The response is the binary disease status, coded 0 for HC and 1 for AD. The image predictor Xi is the 2D representation of the left hippocampus. The covariate vector Zi includes an intercept (constant 1), gender (female = 0, male = 1), age (55–92 years), and behavior score (1–36). Given (Xi, Zi), Yi is assumed to follow a Bernoulli distribution with success probability pi satisfying

$$
\log\frac{p_i}{1-p_i} = Z_i^{T}\theta_0 + \langle X_i, \beta_0 \rangle .
$$

We used the iteratively reweighted algorithm described above to estimate the unknown parameters.
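The iteratively reweighted algorithm itself is not reproduced in this section. As a reminder of its mechanics, here is a minimal sketch of plain iteratively reweighted least squares (IRLS) for a Bernoulli response with a logit link. It rests on our own simplifying assumption that the TV penalty is replaced by a tiny ridge term, so each reweighted step has a closed form; in GSIRM-TV each reweighted step would instead be a TV-penalized problem. The function name `irls_logistic` and the `ridge` parameter are hypothetical.

```python
import numpy as np


def irls_logistic(D, y, ridge=1e-6, max_iter=100, tol=1e-8):
    """Fit logit(p) = D @ b by iteratively reweighted least squares (IRLS).

    D: (n, p) design matrix (e.g., scalar covariates and vectorized pixels).
    y: (n,) array of 0/1 responses.
    The small ridge term stands in for the TV penalty: it only keeps
    each weighted least-squares system well conditioned.
    """
    n, p = D.shape
    b = np.zeros(p)
    for _ in range(max_iter):
        eta = D @ b                                   # linear predictor
        mu = 1.0 / (1.0 + np.exp(-eta))               # fitted probabilities
        w = np.clip(mu * (1.0 - mu), 1e-10, None)     # IRLS weights
        z = eta + (y - mu) / w                        # working response
        # Weighted least-squares step: (D^T W D + ridge I) b = D^T W z
        A = D.T @ (w[:, None] * D) + ridge * np.eye(p)
        b_new = np.linalg.solve(A, D.T @ (w * z))
        converged = np.max(np.abs(b_new - b)) < tol
        b = b_new
        if converged:
            break
    return b


# Toy usage on simulated data (intercept plus two covariates).
rng = np.random.default_rng(0)
n = 2000
D = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
true_b = np.array([-0.5, 1.0, -2.0])
y = rng.binomial(1, 1.0 / (1.0 + np.exp(-D @ true_b)))
b_hat = irls_logistic(D, y)
```

At convergence the score equation D^T(y − p̂) = ridge · b̂ holds, so with a negligible ridge the result is essentially the maximum likelihood estimate.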

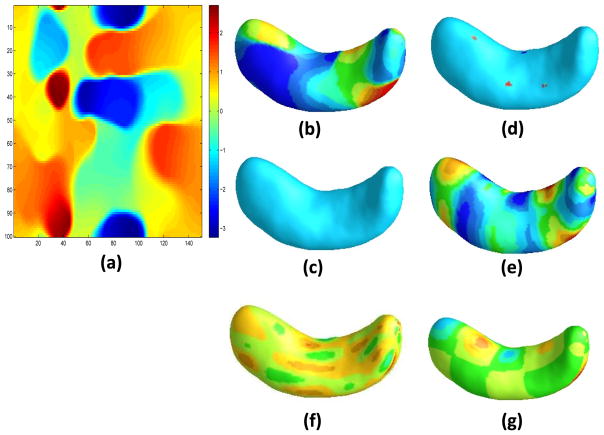

Table 2 presents the estimates of θ0 and their standard deviations, which were calculated by the bootstrap method. Figure 7 shows the estimated coefficient images obtained by the six estimation methods. The effects around pixels (5, 40), (40, 40), and (95, 40) appear to be captured well by our TV estimate. A confidence band for the coefficient image can also be obtained by the bootstrap method.

To compare prediction accuracy, we randomly partitioned the hippocampus data set into a training set with n1 = 203 and a test set with n2 = 200. We repeated this random partition 100 times and computed 100 classification errors. The average classification error of TV is 8.13%, with a standard error of 1.56%. The average classification errors (standard errors) of the other five methods are 12.23% (7.36%), 21.65% (15.56%), 12.03% (11.55%), 17.13% (3.27%), and 16.45% (15.57%) for Lasso, Lasso-Haar, matrix regression, FPCR, and WNET, respectively. Because the WNET R code requires the image size to be a power of 2, we zero-padded the images to 256 × 256, as suggested by one of the referees. Inspecting Table 2 reveals that sex and age are not significant in GSIRM-TV. We reran the same procedure without sex and age and obtained classification results similar to those of the full model; these results are omitted from the paper.
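The evaluation protocol described above (100 random splits of the 403 subjects into 203 training and 200 test subjects) can be sketched as follows. This is an illustration under stated assumptions: `fit_predict` is a hypothetical placeholder for any of the compared classifiers, the toy nearest-centroid classifier and simulated data exist only to exercise the code, and we assume the figure reported in parentheses is the standard deviation of the 100 test errors.

```python
import numpy as np


def repeated_split_error(X, y, fit_predict, n_train=203, n_rep=100, seed=0):
    """Test misclassification rate over repeated random train/test splits.

    fit_predict(X_tr, y_tr, X_te) must return predicted 0/1 labels for X_te;
    it is a hypothetical placeholder for GSIRM-TV, Lasso, FPCR, etc.
    Returns the mean error and the standard deviation of the errors.
    """
    rng = np.random.default_rng(seed)
    n = len(y)
    errors = np.empty(n_rep)
    for r in range(n_rep):
        perm = rng.permutation(n)
        tr, te = perm[:n_train], perm[n_train:]   # 203 training, 200 test
        y_pred = fit_predict(X[tr], y[tr], X[te])
        errors[r] = np.mean(y_pred != y[te])
    return errors.mean(), errors.std(ddof=1)


def nearest_centroid(X_tr, y_tr, X_te):
    """Toy classifier used only to exercise the protocol."""
    c0 = X_tr[y_tr == 0].mean(axis=0)
    c1 = X_tr[y_tr == 1].mean(axis=0)
    d0 = ((X_te - c0) ** 2).sum(axis=1)
    d1 = ((X_te - c1) ** 2).sum(axis=1)
    return (d1 < d0).astype(int)


# Simulated stand-in with the same class sizes as the ADNI sample.
rng = np.random.default_rng(1)
y = np.r_[np.zeros(223, dtype=int), np.ones(180, dtype=int)]
X = rng.normal(size=(403, 5)) + 1.5 * y[:, None]
mean_err, sd_err = repeated_split_error(X, y, nearest_centroid)
```

Fixing the seed makes every method see the same sequence of splits, which keeps the comparison across methods paired.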

Table 2.

ADNI hippocampus data set: the estimated coefficients of the four scalar covariates and their standard deviations in parentheses.

| | intercept | sex | age | behavior score |
|---|---|---|---|---|
| θ̂ | −1.807 (3.186) | −0.533 (0.590) | −0.093 (0.043) | 0.869 (0.111) |
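The standard deviations in Table 2 come from refitting the model on bootstrap resamples. A generic nonparametric bootstrap sketch follows, assuming subjects are resampled with replacement; `bootstrap_sd` and `estimator` are hypothetical names. In GSIRM-TV the estimator would be a full refit returning θ̂; here a sample mean is used as a stand-in so the result can be checked against the textbook formula s/√n.

```python
import numpy as np


def bootstrap_sd(data, estimator, n_boot=500, seed=0):
    """Nonparametric bootstrap standard deviation of an estimator.

    data: array whose first axis indexes subjects; subjects are resampled
    with replacement. estimator(data) returns a 1-D array of estimates.
    """
    rng = np.random.default_rng(seed)
    n = data.shape[0]
    reps = np.array([estimator(data[rng.integers(0, n, size=n)])
                     for _ in range(n_boot)])
    return reps.std(axis=0, ddof=1)


# Check on a case with a known answer: the SD of a sample mean is s / sqrt(n).
rng = np.random.default_rng(2)
data = rng.normal(loc=1.0, scale=2.0, size=(400, 1))
sd_boot = bootstrap_sd(data, lambda d: d.mean(axis=0))
sd_formula = data.std(axis=0, ddof=1) / np.sqrt(data.shape[0])
```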

Figure 7.

Estimated coefficient images for the hippocampus data based on six methods: the 2D representation (a) and the surface representation (b) of the TV estimator, the Lasso estimator (c), the Lasso-wavelet estimator (d), the matrix regression estimator (e), the FPCR estimator (f), and the WNET estimator (g).

6 Conclusion

We have developed a class of GSIRM-TVs for scalar response and imaging and/or scalar predictors, while explicitly assuming that the slope function belongs to BV(Ω). We have developed an efficient penalized total variation minimization algorithm to estimate the coefficient image. We have used simulations and real data analysis to show that GSIRM-TV is quite efficient for estimating the slope function while preserving its edges and jumps. We have also established nonasymptotic error bounds on the excess risk of the TV estimate.

Many images have small total variation and are compressible with respect to wavelet transforms. Therefore, we may generalize our approach to include both a total variation penalty and a Lasso penalty on the wavelet coefficients. Specifically, let Φ be the wavelet transformation operator and γ be the wavelet coefficients of the coefficient image β0. We may calculate γ by minimizing

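$$
-M_n(\theta, \Phi^{-1}\gamma) + \lambda_1 \|\Phi^{-1}\gamma\|_{\mathrm{TV}} + \lambda_2 \|\gamma\|_1, \qquad (15)
$$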

where Φ−1 is the inverse discrete wavelet transform and β = Φ−1γ. Problem (15) involves two smoothing parameters, λ1 and λ2, which need to be selected, and an efficient algorithm for solving (15) remains to be developed. We leave these questions for future research.
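To make the combined penalty concrete, the sketch below evaluates λ1‖β‖TV + λ2‖γ‖1 for a coefficient image given through its wavelet coefficients, with β = Φ−1γ. The choices are ours and purely illustrative: a single-level orthonormal 2-D Haar transform plays the role of Φ, the TV seminorm is the anisotropic one, all coefficients (including the coarse ones) enter the ℓ1 term, λ1 is paired with the TV term, and the likelihood part is omitted. All function names are hypothetical.

```python
import numpy as np


def haar2d(beta):
    """One level of the orthonormal 2-D Haar transform (even side lengths)."""
    a, b = beta[0::2, 0::2], beta[0::2, 1::2]
    c, d = beta[1::2, 0::2], beta[1::2, 1::2]
    return np.stack([(a + b + c + d) / 2,    # coarse (LL) coefficients
                     (a - b + c - d) / 2,    # detail across columns
                     (a + b - c - d) / 2,    # detail across rows
                     (a - b - c + d) / 2])   # diagonal detail


def ihaar2d(gamma):
    """Inverse of haar2d; plays the role of Phi^{-1}."""
    ll, lh, hl, hh = gamma
    m, k = ll.shape
    beta = np.empty((2 * m, 2 * k))
    beta[0::2, 0::2] = (ll + lh + hl + hh) / 2
    beta[0::2, 1::2] = (ll - lh + hl - hh) / 2
    beta[1::2, 0::2] = (ll + lh - hl - hh) / 2
    beta[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return beta


def tv_aniso(beta):
    """Anisotropic TV: sum of absolute forward differences in both directions."""
    return np.abs(np.diff(beta, axis=0)).sum() + np.abs(np.diff(beta, axis=1)).sum()


def combined_penalty(gamma, lam1, lam2):
    beta = ihaar2d(gamma)
    return lam1 * tv_aniso(beta) + lam2 * np.abs(gamma).sum()


# A piecewise-constant image: one 4 x 4 block of ones in an 8 x 8 image.
beta = np.zeros((8, 8))
beta[:4, :4] = 1.0
penalty = combined_penalty(haar2d(beta), lam1=1.0, lam2=0.5)
```

For this block image the TV term is 8 (four unit jumps along each edge of the block) and the Haar coefficients are four coarse values of 2, so the penalty is 1.0 · 8 + 0.5 · 8 = 12; a piecewise-constant image is cheap under both penalties, which is the motivation for combining them.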

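We have so far focused on two-dimensional (2-D) images. It would be interesting to extend our method to k-dimensional (k-D) images for k ≥ 2 (Zhou et al., 2013; Zhu et al., 2014). For example, consider a 3-D image $f \in \mathbb{R}^{N^3}$, where $f = (f_e)$ with $e = (e_1, e_2, e_3) \in \{1, 2, \dots, N\}^3$. The inner product can be defined as

$$
\langle f, g \rangle = \sum_{e \in \{1, \dots, N\}^3} f_e \, g_e .
$$

For ℓ = 1, 2, and 3, the discrete derivative of f in the direction $r_\ell$ is $f_{r_\ell} \in \mathbb{R}^{N^{\ell-1} \times (N-1) \times N^{3-\ell}}$, with entries

$$
(f_{r_1})_e = f_{e_1+1,\,e_2,\,e_3} - f_{e_1,\,e_2,\,e_3}, \quad
(f_{r_2})_e = f_{e_1,\,e_2+1,\,e_3} - f_{e_1,\,e_2,\,e_3}, \quad
(f_{r_3})_e = f_{e_1,\,e_2,\,e_3+1} - f_{e_1,\,e_2,\,e_3},
$$

and the ℓ-th component of the 3-D discrete gradient is $(\nabla f)_{e,\ell} = (f_{r_\ell})_e$ for $e_\ell \le N - 1$ and zero elsewhere. The 3-D anisotropic and isotropic total variation seminorms can then be defined as in the 2-D case. We may consider a total variation optimization similar to (4) to estimate the 3-D coefficient image. This research is ongoing and will be presented in a separate report.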
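The 3-D discrete gradient and both TV seminorms are straightforward to compute with forward differences. A minimal NumPy sketch, assuming the forward-difference convention (difference along axis ℓ, set to zero where eℓ = N) and the usual definitions: the anisotropic seminorm sums the absolute values of all gradient components, and the isotropic seminorm sums, over voxels, the Euclidean norm of the gradient. The function names are hypothetical.

```python
import numpy as np


def grad3d(f):
    """Forward-difference gradient of a 3-D array.

    Returns an array of shape (3,) + f.shape; component l holds the
    difference along axis l and is zero where e_l equals the last index.
    """
    g = np.zeros((3,) + f.shape)
    for axis in range(3):
        d = np.diff(f, axis=axis)                 # length N-1 along `axis`
        idx = [slice(None)] * 3
        idx[axis] = slice(0, f.shape[axis] - 1)
        g[(axis,) + tuple(idx)] = d
    return g


def tv_anisotropic(f):
    """Sum of |(f_{r_l})_e| over all voxels e and directions l."""
    return np.abs(grad3d(f)).sum()


def tv_isotropic(f):
    """Sum over voxels of the Euclidean norm of the gradient vector."""
    return np.sqrt((grad3d(f) ** 2).sum(axis=0)).sum()


# A unit jump across a plane: 16 jumps along axis 0 only.
plane = np.zeros((4, 4, 4))
plane[2:] = 1.0
# A single bright corner voxel: one voxel with jumps in all three directions.
spike = np.zeros((3, 3, 3))
spike[0, 0, 0] = 1.0
```

The two seminorms agree for the plane (both equal 16), while for the corner voxel the anisotropic value is 3 and the isotropic value is √3, illustrating that the isotropic version does not favor axis-aligned edges.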

Figure 6.

Observed left hippocampus images.

Acknowledgments

The authors would like to thank Dr. Yalin Wang for sharing the processed data. We would also like to thank the Editor, Associate Editor, and referees for constructive comments. The research of Xiao Wang is supported by NSF grants DMS1042967 and CMMI1030246. The research of Hongtu Zhu is partially supported by NIH grants MH086633 and 1UL1TR001111, NSF grants SES-1357666 and DMS-1407655, and a grant from Cancer Prevention Research Institute of Texas. This material was based upon work partially supported by the NSF grant DMS-1127914 to the Statistical and Applied Mathematical Science Institute.

7 Appendix

In this appendix, we provide proofs of Theorems 2.1 and 3.1. The constant C denotes a universal constant independent of everything else, whose value may differ from line to line.

7.1 Proof of Theorem 2.1

We prove the theorem by extending the arguments of Candès, Romberg, and Tao (2006a, 2006b) and Needell and Ward (2013). In what follows, a ≲ b means that there exists a constant C such that a ≤ Cb.

Recall that {ϕl} is a set of discrete bivariate Haar wavelet basis functions. Write , β0 = Σl γlϕl, and β̂ = Σl γ̂lϕl. We aim to derive error bounds for Σl ρl(γl − γ̂l)² and Σl |γl − γ̂l|. Denote α = β0 − β̂ and write α = Σl hlϕl, where hl = γl − γ̂l are the wavelet coefficients of the difference between the true and the estimated coefficient images. We sort the hl in descending order of absolute value and denote the sorted coefficients by h(l). The weight ρl attached to the same basis function as h(l) is denoted by ρ(l). Note that the ρ(l) are not necessarily sorted, but they are assumed to satisfy Condition A3.

Let S denote the support of the s largest entries of α in absolute value. As shown in Lemma 9 of Needell and Ward (2013), the set K of wavelets that are nonconstant over S has cardinality at most 8s log N. With an abuse of notation, let K = 8s log N. Lemma 7.1 derives cone constraints for the wavelet coefficients h(j) and the weighted wavelet coefficients .

In the following, we focus on for l = K + 1, …, N2. Let

We may write Kc = K1 ∪ K2 ∪ ⋯ ∪ Kd, where K1 consists of the indices of the 4s̃ largest |h(l)| within Kc, K2 consists of the indices of the next 4s̃ largest |h(l)|, and so on. Since ρ(l) is of order l−2q and the magnitude of each in Kj−1 is larger than that in Kj up to a constant, we have

Combining this result with Lemma 7.1 yields

| (16) |
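The partition of Kc used above is purely combinatorial: remove the indices in K, sort the remaining coefficients by magnitude, and cut them into consecutive blocks of size 4s̃. The sketch below builds such a partition and checks the standard block inequality that this ordering yields, ‖h_{Kj}‖2 ≤ ‖h_{Kj−1}‖1 / √|Kj−1|, which holds because every entry of Kj is at most the average magnitude in Kj−1. It is an illustration under our own assumptions (heavy-tailed random coefficients, hypothetical names `partition_complement` and `block`), not a reproduction of the missing display.

```python
import numpy as np


def partition_complement(h, K_idx, block):
    """Split the indices outside K_idx into consecutive blocks by |h|.

    The first block holds the `block` largest |h| outside K_idx, the
    second the next `block` largest, and so on (the last may be shorter).
    """
    mask = np.ones(h.size, dtype=bool)
    mask[K_idx] = False
    rest = np.flatnonzero(mask)
    rest = rest[np.argsort(-np.abs(h[rest]), kind="stable")]
    return [rest[i:i + block] for i in range(0, rest.size, block)]


def block_inequality_holds(h, blocks):
    """Check ||h_{K_j}||_2 <= ||h_{K_{j-1}}||_1 / sqrt(|K_{j-1}|) for j >= 2."""
    for prev, cur in zip(blocks[:-1], blocks[1:]):
        lhs = np.linalg.norm(h[cur])
        rhs = np.abs(h[prev]).sum() / np.sqrt(prev.size)
        if lhs > rhs + 1e-12:
            return False
    return True


rng = np.random.default_rng(3)
h = rng.standard_cauchy(size=200)                # heavy-tailed toy coefficients
K_idx = rng.choice(200, size=24, replace=False)  # stands in for the set K
blocks = partition_complement(h, K_idx, block=16)
```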

Recall that β̂ is calculated by solving (5). Let à be an n × N2 matrix with the (i, l)th element being n−1/2ξil, ρ be a diagonal matrix with the l-th diagonal element ρl, and γ and h be the wavelet coefficients of β0 and α, respectively. Therefore, and . Let . With probability more than 1 − e−Cn, . This gives

| (17) |

Following the argument in Candès et al. (2006a, 2006b), if $n \ge C\delta^{-2} s \log(N^2/s)$, then à satisfies the restricted isometry property (RIP) with high probability: with probability exceeding $1 - 2e^{-C\delta^2 n}$,

$$
(1-\delta)\|u\|_2^2 \le \|\tilde{A}u\|_2^2 \le (1+\delta)\|u\|_2^2 \qquad (18)
$$

for all s-sparse vectors u ∈ ℝN2. Therefore,

Since δ < 1/3, we have

| (19) |

Further, it follows from (16) and (19) that

We arrive at

This gives

which provides the weighted L2 error bound.

Finally, because

we have

Combining this with (20) leads to the L1 error bound since

This completes the proof of the theorem.
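The RIP condition (18) used in this proof is a high-probability property of random matrices scaled by n−1/2. The sketch below gives a quick empirical illustration, assuming i.i.d. standard Gaussian entries (our assumption; the proof only states that the (i, l)th element is n−1/2ξil). It computes ‖Au‖²/‖u‖² for random s-sparse vectors u; checking random vectors only illustrates concentration around 1 and does not certify the RIP, which must hold uniformly over all s-sparse vectors. The function name is hypothetical.

```python
import numpy as np


def isometry_ratios(n, p, s, trials=200, seed=0):
    """Ratios ||A u||^2 / ||u||^2 for random s-sparse u and a Gaussian A.

    A has i.i.d. N(0, 1) entries scaled by n^{-1/2}, mirroring the
    n^{-1/2} scaling of A-tilde in the proof.
    """
    rng = np.random.default_rng(seed)
    A = rng.normal(size=(n, p)) / np.sqrt(n)
    ratios = np.empty(trials)
    for t in range(trials):
        u = np.zeros(p)
        support = rng.choice(p, size=s, replace=False)
        u[support] = rng.normal(size=s)
        ratios[t] = np.sum((A @ u) ** 2) / np.sum(u ** 2)
    return ratios


ratios = isometry_ratios(n=400, p=1024, s=5)
```

For a fixed u the ratio is distributed as a χ²n variable divided by n, so its spread around 1 shrinks like √(2/n); this is the concentration that, combined with a union bound over supports, drives the sample-size condition n ≥ Cδ−2s log(N²/s).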

Lemma 7.1

Let K = 8s log N. Then

| (20) |

| (21) |

Proof

Let α = β0 − β̂. We first derive a cone constraint for α. Let S̃ be the support of the s largest-magnitude entries of ∇β0. Observe that

and on the other hand,

Combining these two inequalities yields

| (22) |

The cone constraint on the discrete gradient can be transferred to a cone constraint on the wavelet coefficients. Write

where the wavelet coefficients are nonconstant over S which has cardinality at most K = 8s log N. Recall that |hj| ≤ Cj−1||∇α||1. From (22) we have

where the last inequality holds because ||∇ϕj||1 ≤ 8 (Needell and Ward, 2013), and

Furthermore, since ρ(j) is of order j−2q for some q > 0 by Condition A3, we have

| (23) |

which completes the proof of the lemma.

7.2 Proof of Theorem 3.1

Write ξ = (θ, β), W = (X, Z), and Wi = (Xi, Zi), i = 1, …, n. Denote ηi(ξ) = η(Wi; ξ), ηW(ξ) = η(W; ξ), and

Recall that ξ̂ minimizes −Mn(ξ) + λ||β||TV. We have

Direct calculation yields

| (24) |

where ηi(ξ − ξ0) = (θ − θ0)TZi + 〈Xi, β − β0〉, and

| (25) |

and is a number between ηi(ξ) and ηi(ξ0). Therefore,

where the last inequality is because of Lemma 7.2.

Let be the least favorable direction such that, for any g = (g1(X1,…, Xn), …, gn(X1, …, Xn))T, we have

Note that

Assuming that is non-singular, we conclude that ||θ̂ − θ0||2 ≲ n−1/2 by choosing λ = Cn−1/2, and , which gives an equation similar to (17). The remainder of the proof follows the same arguments as the proof of Theorem 2.1. This completes the proof of Theorem 3.1.

Lemma 7.2

Assume B1–B2 hold. Let r = q − 1/2 > 0. Let Bδ = {ξ: δ/2 ≤ dW(ξ, ξ0) ≤ δ}, where

Then,

| (26) |

Proof

Recall that Hn(ξ) = − (Pn − ℙ) fξ(W, Y), where

Consider ℳδ = {fξ(W, Y) : ξ ∈ Bδ}, with L2(P) norm, i.e., for any f ∈ ℳδ, .

Let and ||𝔾n||ℳδ = supf∈ℳδ |𝔾nf|. Then,

Therefore, it suffices to show that

We prove this result by using Theorem 2.14.1 of van der Vaart and Wellner (1996) and bounding the covering numbers of ℳδ (for statistical applications of covering numbers, see Chapter 2 of van der Vaart and Wellner 1996). The result follows once we show that

Suppose that there exist ξ1, …, ξm ∈ Bδ such that, for any ξ ∈ Bδ, min1≤i≤m ||ξ − ξi||P,2 ≤ ε. Observe that

Therefore, the covering number for ℳδ is of the same order as that for Bδ; specifically,

Write β = Σk γkϕk, , and . Hence, . For any β = Σk γkϕk, ∈ Bδ, let β* = Σk≤M γ(k)ϕ(k) with M = ε−1/(r+1). Since ρ(l) is of order l−2q, Σℓ>s ρ(ℓ) is of order s−2r with r = q − 1/2 > 0. Therefore,

So if we can find , where , satisfying for all ,

it also guarantees that, for any β ∈ Bδ,

In addition, since θ ∈ Θ, a bounded subset of ℝp, we may find θ1, …, θm̃ such that, for any θ ∈ Θ, .

Since it is known that the covering number for a ball in ℝM is , it follows from the above arguments that

We calculate J(1, ℳδ) by

By Theorem 2.14.1 of van der Vaart and Wellner (1996), we have , which completes the proof of the lemma.
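The order claim used in this proof, that Σℓ>s ρ(ℓ) is of order s−2r when ρ(ℓ) is of order ℓ−2q with r = q − 1/2 > 0, follows from comparing the sum with an integral. A short derivation, reading "of order" as the two-sided bound c ℓ−2q ≤ ρ(ℓ) ≤ C ℓ−2q (our assumption about the form of Condition A3). Because x−2q is decreasing, each term ℓ−2q lies between the integrals of x−2q over [ℓ, ℓ + 1] and [ℓ − 1, ℓ], so

$$
\sum_{\ell>s}\rho_{(\ell)} \le C\sum_{\ell>s}\ell^{-2q} \le C\int_{s}^{\infty}x^{-2q}\,dx = \frac{C}{2q-1}\,s^{-(2q-1)} = \frac{C}{2r}\,s^{-2r},
$$

$$
\sum_{\ell>s}\rho_{(\ell)} \ge c\sum_{\ell>s}\ell^{-2q} \ge c\int_{s+1}^{\infty}x^{-2q}\,dx = \frac{c}{2r}\,(s+1)^{-2r} \ge \frac{c}{2r}\,2^{-2r}s^{-2r},
$$

where the last step uses s + 1 ≤ 2s for s ≥ 1. Both bounds are therefore of order s−2r, and the condition r > 0 (that is, q > 1/2) is exactly what makes the tail integral finite.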

Footnotes

Data used in preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at: http://adni.loni.usc.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf.

Contributor Information

Xiao Wang, Associate Professor of Statistics, Department of Statistics, Purdue University, West Lafayette, IN 47907.

Hongtu Zhu, Professor of Biostatistics, Department of Biostatistics, The University of Texas MD Anderson Cancer Center, Houston, TX 77230, and University of North Carolina, Chapel Hill, NC 27599.

References

- 1. Candès EJ, Romberg J, Tao T. Stable signal recovery from incomplete and inaccurate measurements. Comm Pure Appl Math. 2006a;59:1207–1223.

- 2. Candès EJ, Romberg J, Tao T. Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans Inform Theory. 2006b;52:489–509.

- 3. Chen S, Donoho DL, Saunders M. Atomic decomposition for basis pursuit. SIAM J Sci Comput. 1998;20:33–61.

- 4. Colom R, Stein JL, Rajagopalan P, Martínez K, Hermel D, Wang Y, Alvarez Linera J, Burgaleta M, Quiroga AM, Shih PC, Thompson PM. Hippocampal structure and human cognition: key role of spatial processing and evidence supporting the efficiency hypothesis in females. Intelligence. 2013;41:129–140. doi: 10.1016/j.intell.2013.01.002.

- 5. Crambes C, Kneip A, Sarda P. Smoothing splines estimators for functional linear regression. Annals of Statistics. 2009;37:35–72.

- 6. De La Torre JC. Alzheimer’s disease is incurable but preventable. Journal of Alzheimer’s Disease. 2010;20:861–870. doi: 10.3233/JAD-2010-091579.

- 7. Du P, Wang X. Penalized likelihood functional regression. Statistica Sinica. 2014;24:1017–1041.

- 8. Efron B. How biased is the apparent error rate of a prediction rule? J Amer Statist Assoc. 1986;81:461–470.

- 9. Efron B. The estimation of prediction error: covariance penalties and cross-validation (with discussion). J Amer Statist Assoc. 2004;99:619–642.

- 10. Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J Amer Statist Assoc. 2001;96:1348–1360.

- 11. Fennema-Notestine C, Hagler DJ Jr, McEvoy LK, Fleisher AS, Wu EH, Karow DS, Dale AM; Alzheimer’s Disease Neuroimaging Initiative. Structural MRI biomarkers for preclinical and mild Alzheimer’s disease. Human Brain Mapping. 2009;30:3238–3253. doi: 10.1002/hbm.20744.

- 12. Ferraty F, Vieu P. Nonparametric Functional Data Analysis: Theory and Practice. Springer-Verlag; New York: 2006.

- 13. Friedman J, Hastie T, Höfling H, Tibshirani R. Pathwise coordinate optimization. Annals of Applied Statistics. 2007;1:302–332.

- 14. Gertheiss J, Maity A, Staicu AM. Variable selection in generalized functional linear model. Stat. 2013;2:86–101. doi: 10.1002/sta4.20.

- 15. Goldsmith J, Bobb J, Crainiceanu CM, Caffo B, Reich D. Penalized functional regression. Journal of Computational and Graphical Statistics. 2010;20:830–851. doi: 10.1198/jcgs.2010.10007.

- 16. Goldsmith J, Huang L, Crainiceanu CM. Smooth scalar-on-image regression via spatial Bayesian variable selection. Journal of Computational and Graphical Statistics. 2014;23:46–64. doi: 10.1080/10618600.2012.743437.

- 17. Guillas S, Lai MJ. Bivariate splines for spatial functional regression models. Journal of Nonparametric Statistics. 2010;22:477–497.

- 18. Hall P, Horowitz JL. Methodology and convergence rates for functional linear regression. Annals of Statistics. 2007;35:70–91.

- 19. James GM. Generalized linear models with functional predictors. Journal of the Royal Statistical Society, Series B. 2002;64:411–432.

- 20. James GM, Wang J, Zhu J. Functional linear regression that’s interpretable. Annals of Statistics. 2009;37:2083–2108.

- 21. Hestenes MR. Multiplier and gradient methods. Journal of Optimization Theory and Applications. 1969;4:303–320.

- 22. Li Y, Wang N, Carroll RJ. Generalized functional linear models with semiparametric single-index interactions. Journal of the American Statistical Association. 2010;105:621–633. doi: 10.1198/jasa.2010.tm09313.

- 23. Li C. Compressive Sensing for 3D Data Processing Tasks: Applications, Model and Algorithms. PhD Dissertation. Rice University; 2013.

- 24. Lorensen WE, Cline HE. Marching cubes: a high resolution 3D surface construction algorithm. Proceedings of the 14th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’87); 1987. pp. 163–169.

- 25. Luders E, Thompson PM, Kurth F, Hong JY, Phillips OR, Wang Y, Gutman BA, Chou YY, Narr KL, Toga AW. Global and regional alterations of hippocampal anatomy in long-term meditation practitioners. Hum Brain Mapp. 2013;34:3369–3375. doi: 10.1002/hbm.22153.

- 26. Mammen E, van de Geer S. Locally adaptive regression splines. Annals of Statistics. 1997;25:387–413.

- 27. Michel V, Gramfort A, Varoquaux G, Eger E, Thirion B. Total variation regularization for fMRI-based prediction of behavior. IEEE Transactions on Medical Imaging. 2011;30:1328–1340. doi: 10.1109/TMI.2011.2113378.

- 28. Mu Y, Gage F. Adult hippocampal neurogenesis and its role in Alzheimer’s disease. Molecular Neurodegeneration. 2011;6:85. doi: 10.1186/1750-1326-6-85.

- 29. Müller HG, Stadtmüller U. Generalized functional linear models. Annals of Statistics. 2005;33:774–805.

- 30. Needell D, Ward R. Stable image reconstruction using total variation minimization. SIAM J Imaging Sciences. 2013;6:1035–1058.

- 31. Nelder J, Wedderburn R. Generalized linear models. Journal of the Royal Statistical Society, Series A. 1972;135:370–384.

- 32. Patenaude B, Smith SM, Kennedy DN, Jenkinson M. A Bayesian model of shape and appearance for subcortical brain segmentation. NeuroImage. 2011;56:907–922. doi: 10.1016/j.neuroimage.2011.02.046.

- 33. Petrushev PP, Cohen A, Xu H, DeVore R. Nonlinear approximation and the space BV(ℝ²). American Journal of Mathematics. 1999;121:587–628.

- 34. Powell MJD. A method for nonlinear constraints in minimization problems. In: Fletcher R, editor. Optimization. Academic Press; London, New York: 1969. pp. 283–298.

- 35. Ramsay JO, Silverman BW. Functional Data Analysis. Springer-Verlag; New York: 2005.

- 36. Reiss PT, Huo L, Zhao Y, Kelly C, Ogden RT. Wavelet-domain regression and predictive inference in psychiatric neuroimaging. Annals of Applied Statistics. 2015;9(2):1076–1101. doi: 10.1214/15-AOAS829.

- 37. Reiss PT, Ogden RT. Functional principal component regression and functional partial least squares. Journal of the American Statistical Association. 2007;102:984–996.

- 38. Reiss PT, Ogden RT. Functional generalized linear models with images as predictors. Biometrics. 2010;66:61–69. doi: 10.1111/j.1541-0420.2009.01233.x.

- 39. Rudin LI, Osher S. Total variation based image restoration with free local constraints. Proc 1st IEEE ICIP. 1994;1:31–35.

- 40. Rudin LI, Osher S, Fatemi E. Nonlinear total variation based noise removal algorithms. Physica D. 1992;60:259–268.

- 41. Shi J, Lepore N, Gutman B, Thompson PM, Baxter L, Caselli RJ, Wang Y. Genetic influence of APOE4 genotype on hippocampal morphometry: an N = 725 surface-based ADNI study. Hum Brain Mapp. 2014;35:3902–3918. doi: 10.1002/hbm.22447.

- 42. Shi J, Thompson PM, Gutman B, Wang Y. Surface fluid registration of conformal representation: application to detect disease burden and genetic influence on hippocampus. NeuroImage. 2013;78:111–134. doi: 10.1016/j.neuroimage.2013.04.018.

- 43. Tibshirani R. Regression shrinkage and selection via the Lasso. Journal of the Royal Statistical Society, Series B. 1996;58:267–288.

- 44. Tibshirani R. Adaptive piecewise polynomial estimation via trend filtering. Annals of Statistics. 2014;42:285–323.

- 45. Tibshirani R, Taylor J. Degrees of freedom in Lasso problems. Annals of Statistics. 2012;40:1198–1232.

- 46. Tibshirani R, Saunders M, Rosset S, Zhu J, Knight K. Sparsity and smoothness via the fused lasso. Journal of the Royal Statistical Society, Series B. 2005;67:91–108.

- 47. van der Vaart AW, Wellner JA. Weak Convergence and Empirical Processes: With Applications to Statistics. Springer; New York: 1996.

- 48. Vidakovic B. Statistical Modeling by Wavelets. Wiley; New York: 1999.

- 49. Wang X, Nan B, Zhu J, Koeppe R; ADNI. Regularized 3D functional regression for brain image data via Haar wavelets. Annals of Applied Statistics. 2014;8:1045–1064. doi: 10.1214/14-AOAS736.

- 50. Wang Y, Zhang J, Gutman B, Chan TF, Becker JT, Aizenstein HJ, Lopez OL, Tamburo RJ, Toga AW, Thompson PM. Multivariate tensor-based morphometry on surfaces: application to mapping ventricular abnormalities in HIV/AIDS. NeuroImage. 2010;49:2141–2157. doi: 10.1016/j.neuroimage.2009.10.086.

- 51. Weiner MW, Veitch DP, Aisen PS, Beckett LA, Cairns NJ, Green RC, Harvey D, Jack CR, Jagust W, Liu E, Morris JC, Petersen RC, Saykin AJ, Schmidt ME, Shaw L, Siuciak JA, Soares H, Toga AW, Trojanowski JQ; ADNI. The Alzheimer’s Disease Neuroimaging Initiative: a review of papers published since its inception. Alzheimers Dement. 2012;8:S1–S68. doi: 10.1016/j.jalz.2011.09.172.

- 52. Yuan M, Cai TT. A reproducing kernel Hilbert space approach to functional linear regression. Annals of Statistics. 2010;38:3412–3444.

- 53. Zhao Y, Ogden RT, Reiss PT. Wavelet-based LASSO in functional linear regression. Journal of Computational and Graphical Statistics. 2012;21:600–617. doi: 10.1080/10618600.2012.679241.

- 54. Zhou H, Li L. Regularized matrix regression. Journal of the Royal Statistical Society, Series B. 2014;76:463–483. doi: 10.1111/rssb.12031.

- 55. Zhou H, Li L, Zhu H. Tensor regression with applications in neuroimaging data analysis. Journal of the American Statistical Association. 2013;108:540–552. doi: 10.1080/01621459.2013.776499.

- 56. Zhu H, Fan J, Kong L. Spatially varying coefficient model for neuroimaging data with jump discontinuities. Journal of the American Statistical Association. 2014;109:977–990. doi: 10.1080/01621459.2014.881742.