Abstract

We investigated the application of a non-linear sigma-point Kalman filter (SPKF) to a motor imagery brain computer interface (BCI). A square root central difference Kalman filter (SR-CDKF) was used for brain state estimation during motor imagery task performance, using scalp electroencephalography (EEG) signals. Healthy human subjects imagined left vs. right hand movements and tongue vs. bilateral toe movements while scalp EEG signals were recorded. Offline data analysis was conducted both to train the model and to decode the imagined movements. Preliminary results indicate the feasibility of this approach, with a decoding accuracy of 78%–90% for the hand movements and 70%–90% for the tongue-toes movements. Ongoing research includes online BCI applications of this approach as well as combined state and parameter estimation using this algorithm with different system dynamic models.

I. Introduction

In brain computer interface (BCI) development, neuronal signals are translated into commands to build a direct interface between the brain and a device. Although invasive techniques have shown promise in BCI applications, non-invasive scalp EEG-based methods can be applied more easily. Feature extraction and pattern discrimination are commonly used in the design of motor imagery-based BCI paradigms, where subjects perform imagery tasks in response to audio-visual cues on a computer screen. Recently, model-based BCI applications have emerged in which a generative model that correlates brain activity with intended tasks is developed. Martens and Leiva [1] developed such a model for decoding the visual event-related potential-based brain-computer speller. Geronimo et al. [2] presented a simplified generative model for motor imagery BCI applications and reported significantly higher BCI accuracy.

The Kalman filter (KF) has been applied in an encoding-decoding framework in a number of neural interface applications using intracranial signals. Wu et al. [3–5] used the KF for neural decoding of motor cortical activity and cursor motion. In motor imagery BCI applications, the KF has been applied mainly for optimizing model parameter estimation.

We applied a KF as a model-based approach for brain state estimation during motor imagery task performance, using non-invasive scalp electroencephalography (EEG) signals. This approach enables encoding imagined hand, tongue, and bilateral toe movements in motor cortex and decoding those movements from recorded EEG signals. A model-based approach has the added benefit of offering insight into brain dynamics that can inform further BCI applications. The Kalman filter is also expected to handle noisy brain signals effectively.

II. Methods

A. Experimental Paradigm

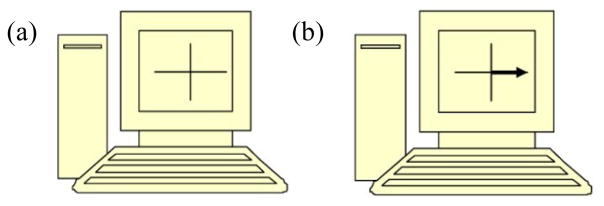

Five healthy human subjects (four male, one female; 25–32 years old), none of whom were taking any medication, participated in the motor imagery tasks. The experiments were conducted under Institutional Review Board approval at Penn State University. Each subject completed one session consisting of four runs of 40 trials each. Each trial proceeded as follows: the subject sat quiet and relaxed; a cross appeared on the computer screen; a left, right, up, or down arrow, depending on the task to be performed, then appeared, during which time the subject imagined the task; finally, both the cross and the arrow disappeared to end the trial. Of the four runs, the first two were designed for imagery of left or right hand movements and the last two for imagery of tongue or bilateral toe movements. Of the 40 trials in each left-right hand movement run, 20 randomly permuted trials showed “left” arrows, cueing imagined left hand movements, and the other 20 showed “right” arrows, cueing imagined right hand movements. Similarly, “up” and “down” arrows were used for the tongue-toes tasks.
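The balanced, randomly permuted cue order described above can be sketched as follows. This is only an illustration: the function name `make_run`, the seed argument, and the use of Python's `random` module are assumptions, not details of the original stimulus software.

```python
import random

def make_run(labels=("left", "right"), trials_per_label=20, seed=None):
    """Return a randomly permuted cue sequence with equal counts per label.

    Hypothetical helper: the text only states that each 40-trial run
    contained 20 trials of each cue in random order.
    """
    rng = random.Random(seed)
    cues = [label for label in labels for _ in range(trials_per_label)]
    rng.shuffle(cues)
    return cues

# Two hand-movement runs and two tongue-toes runs, 40 trials each.
hand_run = make_run(("left", "right"), seed=0)
tongue_toes_run = make_run(("up", "down"), seed=1)
```

Shuffling a pre-built balanced list, rather than drawing each cue independently, guarantees exactly 20 trials per class in every run.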

B. Data Acquisition and Processing

Nineteen monopolar electrode positions (FP1, FP2, F7, F3, Fz, F4, F8, T7, C3, Cz, C4, T8, P7, P3, Pz, P4, P8, O1, and O2 as per the International 10–20 standard electrode locations) referenced to linked earlobe electrodes were selected for acquiring EEG under open loop conditions while the participants performed the imagery tasks. Data were passed through a fourth order band-pass Butterworth filter of 0.5–60 Hz and sampled at 256 Hz. Data were recorded with g.tec amplifier systems [6]. The same experimental paradigm with the same montage was used in our previous work [7].

Data were epoched from 2 s before to 4 s after the presentation of each arrow cue. Recordings were visually inspected for artifacts, and trials that exceeded an amplitude threshold of 55 μV were excluded from further analysis. For each subject, 70–80 of the 80 recorded trials per class remained after artifact exclusion.
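A minimal sketch of the epoching and threshold-based rejection step is given below. The sampling rate, epoch window, and threshold come from the text; the rejection rule (any sample whose absolute value exceeds the threshold) and the helper names are assumptions, since the exact criterion is not specified.

```python
FS = 256               # sampling rate in Hz (from the text)
PRE_S, POST_S = 2, 4   # epoch window around the cue, in seconds
THRESHOLD_UV = 55.0    # amplitude threshold in microvolts

def extract_epoch(signal, cue_index):
    """Slice one channel from 2 s before to 4 s after the cue sample."""
    start = cue_index - PRE_S * FS
    stop = cue_index + POST_S * FS
    if start < 0 or stop > len(signal):
        return None  # cue too close to the edge of the recording
    return signal[start:stop]

def is_clean(epoch, threshold=THRESHOLD_UV):
    """Assumed rule: keep the epoch only if no sample exceeds the threshold."""
    return all(abs(v) <= threshold for v in epoch)

# Synthetic 12 s single-channel recording with one artifact spike at 5 s.
signal = [10.0] * (12 * FS)
signal[5 * FS] = 80.0
cues = [3 * FS, 8 * FS]  # cue onsets at 3 s and 8 s

epochs = [extract_epoch(signal, c) for c in cues]
kept = [e for e in epochs if e is not None and is_clean(e)]
```

Here the first epoch (1 s to 7 s) contains the spike and is rejected, while the second (6 s to 12 s) is kept.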

Laplacian derivations [8–11] were computed for the nine inner channels (F3, Fz, F4, C3, Cz, C4, P3, Pz, P4), using the four channels surrounding each active channel to derive the weighted average. The Laplacian is a discrete second spatial derivative, calculated as the difference between an electrode potential and a weighted average of the surrounding electrode potentials, and is commonly applied to increase the spatial resolution of EEG signals.
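The Laplacian derivation can be illustrated with a short sketch. Equal weights of 1/4 for the four neighbors and the neighbor set for Cz (Fz, C3, C4, Pz) are assumptions made for the example; the weights actually used may depend on inter-electrode distances.

```python
def laplacian(center, neighbors, weights=None):
    """Subtract a weighted average of neighboring channels from a channel.

    center:    list of samples for the active electrode
    neighbors: list of sample lists, one per surrounding electrode
    weights:   optional weights summing to 1 (equal weights assumed if None)
    """
    n = len(neighbors)
    if weights is None:
        weights = [1.0 / n] * n
    return [
        c - sum(w * nb[t] for w, nb in zip(weights, neighbors))
        for t, c in enumerate(center)
    ]

# Toy two-sample example for Cz with assumed neighbors Fz, C3, C4, Pz.
cz = [4.0, 8.0]
fz, c3, c4, pz = [1.0, 2.0], [3.0, 2.0], [1.0, 2.0], [3.0, 2.0]
cz_lap = laplacian(cz, [fz, c3, c4, pz])
```

Because activity common to the center and its neighbors cancels, the derived signal emphasizes sources local to the active electrode.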

C. Dynamic Modeling and Kalman Filter Application

The KF is “essentially a set of mathematical equations that implement a predictor-corrector type estimator that is optimal in the sense that it minimizes the estimated error covariance—when some presumed conditions are met” [12]. Although the linear KF, in its original format introduced by Kalman [13], works for many applications, a number of nonlinear filters have been developed for non-linear processes. A KF can be applied in order to estimate states or model parameters as well as to estimate both.

We begin with a dynamic state space model (DSSM) with unobserved states $x_k$ that evolve over time (discrete time steps designated by $k$) and observed outputs $y_k$ that are conditionally independent given the states, as:

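$$
\begin{aligned}
x_k &= f(x_{k-1}, u_{k-1}, v_{k-1}; w) \\
y_k &= g(x_k, n_k; w)
\end{aligned}
\tag{1}
$$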

where $v_k$ and $n_k$ are the process and measurement noises, respectively, and $u_k$ are external inputs. The state transition function $f$ and the observation function $g$ are parameterized by $w$, in an augmented format.
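To make the roles of the state transition function, the observation function, and the two noise terms concrete, the sketch below simulates a scalar state space model of this general form. The particular functions (a tanh state update and an additive-noise observation), the noise levels, and the name `simulate_dssm` are illustrative assumptions, not the model used in this study.

```python
import math
import random

def simulate_dssm(f, g, x0, inputs, process_std, meas_std, seed=0):
    """Simulate x_k = f(x_{k-1}, u, v) and y_k = g(x_k, n) for scalar signals."""
    rng = random.Random(seed)
    x, states, outputs = x0, [], []
    for u in inputs:
        v = rng.gauss(0.0, process_std)  # process noise sample
        n = rng.gauss(0.0, meas_std)     # measurement noise sample
        x = f(x, u, v)                   # hidden state evolves
        states.append(x)
        outputs.append(g(x, n))          # only the output is observed
    return states, outputs

# Hypothetical nonlinear transition and additive-noise observation.
f = lambda x, u, v: math.tanh(0.9 * x + u) + v
g = lambda x, n: x + n

states, outputs = simulate_dssm(f, g, 0.0, [0.1] * 50, 0.05, 0.1)
```

A filter sees only `outputs` and must infer `states`; that inference is the estimation problem the Kalman framework addresses.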

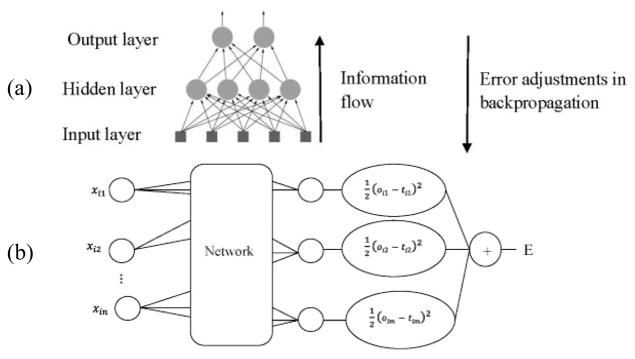

We used a multilayer perceptron (MLP) artificial neural network (ANN) model for each of the nine Laplacian electrodes as our system dynamic model for motor imagery task performance. An ANN is a computational model based on biological neural networks and consists of an interconnected group of artificial neurons [7–10]. It can be treated as a non-linear statistical data modeling tool that models complex relationships between inputs and outputs or finds patterns in data. Within a layer of an ANN, a single neuron computes a weighted sum of the incoming signals plus a bias term and passes the result through an activation function to produce its output value (Fig. 2). A popular activation function in dynamic modeling is the non-linear hyperbolic tangent (tanh). An ANN is trained mainly by one of two learning processes: supervised learning or unsupervised learning. In supervised learning, for a given set of input-output pairs, the aim is to find a function that maps the inputs to the outputs by minimizing a cost function related to the mismatch between the mapped outputs and the target outputs. A commonly used objective is to minimize the average squared error between the network’s outputs and the target values. Minimizing this mean-squared error cost function by gradient descent yields the well-known backpropagation algorithm for training neural networks. Typically, the objective is to make the outputs $o_i$ and targets $t_i$ identical for $i = 1, \ldots, p$, for $p$ variables, by using a learning algorithm. More precisely, the objective is to minimize the error function of the network, defined as

$$
E = \frac{1}{2}\sum_{i=1}^{p} (o_i - t_i)^2 \tag{7}
$$

Fig. 2.

(a) A simple example of an artificial neural network (ANN), (b) an example of an extended network to estimate the cost functions in ANN backpropagation.

Fig. 2 shows an example of an ANN model and the backpropagation framework. We used a 6-4-1 structure for the model, with a hyperbolic tangent (tanh) activation function in the hidden layer and a linear activation function in the output layer. This preliminary model structure and its functions were chosen on an ad hoc basis; different model structures will be introduced and compared in the ongoing study. The ANN parameters can be estimated by fitting the model, using backpropagation, to the EEG data from each electrode during motor imagery task performance. Single channels were used as an initial modeling approach, with the goal of applying a multivariable KF design in the ongoing study.
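A forward pass through a 6-4-1 network with a tanh hidden layer and a linear output can be sketched as below, together with a squared-error cost. The random initialization, the interpretation of the six inputs as six lagged samples of one channel, the factor of 1/2 in the cost, and all function names are assumptions for illustration; training by backpropagation is not shown.

```python
import math
import random

def make_mlp(n_in=6, n_hidden=4, seed=0):
    """Randomly initialize a 6-4-1 network (hypothetical initialization)."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    b2 = 0.0
    return W1, b1, W2, b2

def mlp_forward(x, W1, b1, W2, b2):
    """Hidden layer: tanh of weighted sum plus bias. Output layer: linear."""
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(W1, b1)
    ]
    return sum(w * h for w, h in zip(W2, hidden)) + b2

def half_squared_error(outputs, targets):
    """Sum of squared output-target mismatches, scaled by 1/2 (assumed form)."""
    return 0.5 * sum((o - t) ** 2 for o, t in zip(outputs, targets))

# Assumed usage: predict the next sample from six lagged samples.
W1, b1, W2, b2 = make_mlp()
lagged = [0.1, 0.2, 0.15, 0.05, -0.1, -0.2]
prediction = mlp_forward(lagged, W1, b1, W2, b2)
cost = half_squared_error([prediction], [-0.25])
```

The linear output layer leaves the prediction unbounded, which suits regression on signal amplitudes, while the tanh hidden layer supplies the nonlinearity.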

The recursive form of the optimal Kalman update of the conditional mean of the state random variable, $\hat{x}_k = E[x_k \mid y_{1:k}]$, and its covariance, $P_{x_k}$, is written as:

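$$
\hat{x}_k = \hat{x}_k^- + K_k \left( y_k - \hat{y}_k^- \right) \tag{2}
$$

$$
P_{x_k} = P_{x_k}^- - K_k P_{\tilde{y}_k} K_k^T \tag{3}
$$

The optimal terms here are:

$$
\hat{x}_k^- = E\left[ f(x_{k-1}, u_{k-1}, v_{k-1}) \right] \tag{4}
$$

$$
\hat{y}_k^- = E\left[ g(x_k^-, n_k) \right] \tag{5}
$$

$$
K_k = E\left[ (x_k - \hat{x}_k^-)(y_k - \hat{y}_k^-)^T \right] E\left[ (y_k - \hat{y}_k^-)(y_k - \hat{y}_k^-)^T \right]^{-1} = P_{x_k \tilde{y}_k} P_{\tilde{y}_k}^{-1} \tag{6}
$$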
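For a scalar state and a scalar measurement, the recursive update reduces to a few lines. The sketch below assumes the standard Kalman form: the gain is the state-measurement cross-covariance divided by the innovation covariance, the mean is corrected by the gain times the innovation, and the covariance is reduced accordingly. The function and argument names are hypothetical.

```python
def kalman_update(x_prior, P_prior, y, y_pred, P_xy, P_yy):
    """Scalar Kalman measurement update.

    x_prior, P_prior: predicted state mean and variance
    y, y_pred:        actual and predicted measurement
    P_xy:             cross-covariance of state and measurement errors
    P_yy:             innovation (measurement prediction error) variance
    """
    K = P_xy / P_yy                        # Kalman gain
    x_post = x_prior + K * (y - y_pred)    # corrected mean
    P_post = P_prior - K * P_yy * K        # corrected variance
    return x_post, P_post, K

# Linear toy case y = x + n with unit prior variance and unit noise variance:
# y_pred = x_prior, P_xy = P_prior = 1, P_yy = P_prior + R = 2.
x_post, P_post, K = kalman_update(0.0, 1.0, 2.0, 0.0, 1.0, 2.0)
```

With equally uncertain prior and measurement, the gain is 0.5, so the estimate moves halfway toward the measurement and the variance is halved. In a sigma-point filter the expectations behind `y_pred`, `P_xy`, and `P_yy` are approximated from weighted sample points rather than computed analytically.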

Sigma-point Kalman filters (SPKFs) are a group of Kalman filter algorithms that fall into a general deterministic sampling framework, known as the sigma-point approach, for calculating the posterior mean and covariance of the pertinent Gaussian approximate densities in the Kalman framework recursion [14, 15]. The SPKF was introduced as a better alternative to the extended Kalman filter (EKF) for Gaussian approximate probabilistic inference in general nonlinear DSSMs. The sigma-point approach common to all SPKFs was introduced as a method for calculating the statistics of a random variable that undergoes a nonlinear transformation. These calculations form the core of the optimal Kalman time and measurement update equations, which are simply the original (optimal) recursive Bayesian estimation integral equations recast under a Gaussian assumption.

The unscented KF (UKF) [16–18] and the central difference KF (CDKF) [14, 15], although derived from different starting assumptions, both employ the sigma-point approach as their core algorithmic components for calculating the posterior Gaussian statistics necessary for Gaussian approximate inference in a Kalman framework.

In practice, there are many situations where linearizing the underlying model equations or the control law, as is done in the extended Kalman filter (EKF), is not suitable. The central difference filter (CDF) of Ito and Xiong [19] and the divided difference filter (DDF) of Nørgaard [20] are based on polynomial approximations of the nonlinear transformations, obtained with a multidimensional extension of Stirling's polynomial interpolation formula [21, 22]. The CDKF (either CDF or DDF) is a derivative-free Kalman filter for nonlinear estimation, based on polynomial interpolation and on approximating the derivatives with (central or divided) difference equations. A particularly useful idea of the CDKF is to directly update the Cholesky factors of the covariance matrices, which are used in the a priori and a posteriori updates of the outputs and states. Nørgaard et al. [20] showed that the CDKF has a slightly smaller absolute error than the UKF in the fourth-order term and also guarantees positive semi-definiteness of the posterior covariance. The CDKF and UKF perform comparably, with negligible difference in estimation accuracy, and both have been found to be superior to the EKF.

The square-root form of the CDKF increases the numerical robustness of the filter and reduces the computational cost for certain DSSMs. This form propagates and updates the square root of the state covariance directly in Cholesky-factored form, using the sigma-point approach and linear algebra techniques such as QR decomposition, Cholesky factor updating, and pivot-based least squares [14]. The choice between the SR-UKF and the SR-CDKF is largely a matter of implementation preference. However, the CDKF has one advantage over the UKF: it uses only a single scalar scaling parameter, the central difference interval size $h$, as opposed to three parameters in the UKF [14]. This parameter determines the spread of the sigma points around the prior mean. For Gaussian random variables, the optimal value is $h = \sqrt{3}$.
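The role of the interval size h can be illustrated by generating central-difference sigma points and using them to approximate the mean of a nonlinear function of a Gaussian variable. The sketch below is not the square-root filter itself: it only shows the sigma-point set (the mean plus and minus h times each column of the Cholesky factor) and the standard central-difference mean weights, with h set to the square root of 3, the usual choice for Gaussian priors. The hand-written `cholesky` helper and the function names are assumptions made to keep the example dependency-free.

```python
import math

def cholesky(P):
    """Lower-triangular Cholesky factor S of a symmetric positive-definite P."""
    n = len(P)
    S = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(S[i][k] * S[j][k] for k in range(j))
            if i == j:
                S[i][j] = math.sqrt(P[i][i] - s)
            else:
                S[i][j] = (P[i][j] - s) / S[j][j]
    return S

def cdkf_sigma_points(mean, cov, h=math.sqrt(3.0)):
    """Return 2L+1 sigma points and their mean weights for dimension L."""
    L = len(mean)
    S = cholesky(cov)
    points = [list(mean)]
    for sign in (1.0, -1.0):
        for i in range(L):  # step along the i-th column of S
            points.append([mean[r] + sign * h * S[r][i] for r in range(L)])
    w0 = (h * h - L) / (h * h)
    wi = 1.0 / (2.0 * h * h)
    return points, [w0] + [wi] * (2 * L)

def sigma_point_mean(func, mean, cov, h=math.sqrt(3.0)):
    """Approximate E[func(x)] for x ~ N(mean, cov) from the sigma points."""
    points, weights = cdkf_sigma_points(mean, cov, h)
    return sum(w * func(p) for w, p in zip(weights, points))

# Standard normal scalar: the exact moments are E[x^2] = 1 and E[x^4] = 3.
second = sigma_point_mean(lambda p: p[0] ** 2, [0.0], [[1.0]])
fourth = sigma_point_mean(lambda p: p[0] ** 4, [0.0], [[1.0]])
```

In this scalar case the three sigma points reproduce both the second and the fourth moment of the standard normal (up to rounding), which is one way to see why this value of h suits Gaussian variables.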

We applied the square root CDKF (SR-CDKF) algorithm, a member of the sigma-point KF family developed by Van der Merwe [14, 23], to the state estimation of brain dynamics corresponding to motor imagery. The essential steps and detailed derivations of the SR-CDKF algorithm can be found in [14].

We used a state dimension of six, Gaussian noise with a signal-to-noise ratio (SNR) of 5 dB, a zero initial state, and unit initial noise variance. The ReBEL toolkit [24] was used for the KF application.

D. Motor Imagery Task Performance

To evaluate the decoding performance of the KF approach, we applied the left, right, tongue, and toes state models to offline EEG signals. For each signal, we calculated its distance from the model corresponding to each task and assigned the signal to the task whose model had the shortest distance. The first-order norm of the residual (the error between the model and the EEG signal) was used as the distance measure.
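The nearest-model decision rule can be sketched as follows. The "first-order norm" is interpreted here as the 1-norm (sum of absolute residuals), which is an assumption; the per-task model outputs are represented by plain lists, and the function names are hypothetical.

```python
def l1_norm(residual):
    """Sum of absolute residual values (assumed meaning of first-order norm)."""
    return sum(abs(r) for r in residual)

def classify(signal, model_estimates):
    """Assign the signal to the task whose model estimate is closest.

    signal:          list of EEG samples for one trial
    model_estimates: dict mapping task name to that model's estimate
                     of the same samples
    """
    distances = {
        task: l1_norm([s - e for s, e in zip(signal, estimate)])
        for task, estimate in model_estimates.items()
    }
    best_task = min(distances, key=distances.get)
    return best_task, distances

# Toy trial: the "left" model tracks the signal more closely than "right".
trial = [1.0, 2.0, 3.0]
estimates = {"left": [1.0, 2.0, 2.5], "right": [0.0, 0.0, 0.0]}
decoded, distances = classify(trial, estimates)
```

For a two-class comparison such as left vs. right, decoding accuracy is then the fraction of trials whose decoded label matches the cue.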

III. Results and Discussion

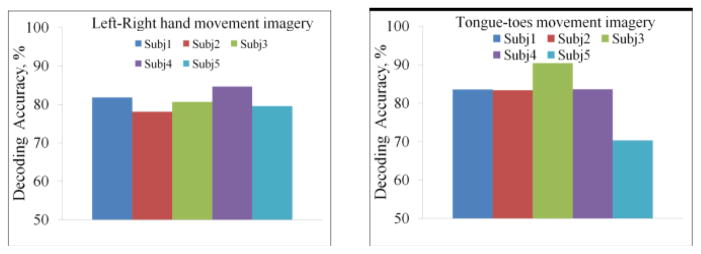

Fig. 3 presents the SR-CDKF results for decoding motor imagery task performance for Subjects 1–5. For the limited number of subjects in this study, the results show a decoding accuracy of 78%–90% for the hand movements and 70%–90% for the tongue-toes movements, which is promising for the online BCI application currently under study.

Fig. 3.

Decoding accuracy of motor imagery tasks using the square root central difference Kalman Filter estimation algorithm for five subjects. Two pairs of tasks, left vs. right hand movements and tongue vs. bilateral toe movements are shown.

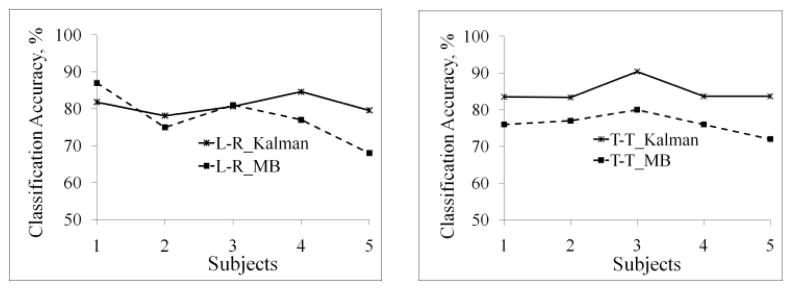

Classification accuracies for the four imagery tasks (left vs. right hand and tongue vs. toes movements) obtained with the state estimation model for five subjects were compared with those of a commonly applied discriminative approach, which uses spectral powers in the mu (8–12 Hz) and beta (14–20 Hz) frequency bands as features and linear discriminant analysis (LDA) as the classifier [25–29]. The best (i.e., highest) classification accuracies (among the four time segments) by each method for all five subjects are shown in Fig. 4.
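The band-power features used by the comparison method can be sketched with a direct discrete Fourier transform over the bins of interest. The band edges and sampling rate come from the text; the normalization, the one-second segment length, and the function name are assumptions, and the LDA classification step is not shown.

```python
import cmath
import math

def band_power(x, fs, f_lo, f_hi):
    """Sum of squared DFT magnitudes (normalized by n^2) within a band."""
    n = len(x)
    power = 0.0
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            coeff = sum(
                x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
            )
            power += abs(coeff) ** 2 / n ** 2
    return power

FS = 256
# One second of a unit-amplitude 10 Hz sinusoid, i.e. a pure mu-band rhythm.
segment = [math.sin(2 * math.pi * 10 * t / FS) for t in range(FS)]

mu_power = band_power(segment, FS, 8, 12)     # close to 0.25
beta_power = band_power(segment, FS, 14, 20)  # close to 0
features = [mu_power, beta_power]
```

In a motor imagery setting, such mu and beta power values per channel would form the feature vector passed to the classifier.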

Fig. 4.

Classification accuracy of motor imagery tasks using the square root central difference Kalman Filter estimation algorithm in comparison to the accuracy using mu-beta power spectra features. Two pairs of tasks, left vs. right hand movements and tongue vs. bilateral toe movements are shown.

An analysis of variance (ANOVA) showed that the classification accuracy using the Kalman model was significantly better (p = 0.006) than the accuracy using the mu-beta (MB) power spectra features.

The advantage of this model-based approach with dynamic state estimation is that it can help us better understand brain dynamics while effectively handling noisy brain signals through the application of a Kalman filter.

IV. Summary

A square root central difference Kalman filter (SR-CDKF), a member of the non-linear sigma-point Kalman filter (SPKF) family, was applied to a brain computer interface (BCI) for brain state estimation during motor imagery task performance, using scalp electroencephalography (EEG) signals. For left vs. right hand movements and tongue vs. bilateral toe movements, preliminary results of offline data analysis indicate the feasibility of this approach, with a decoding accuracy of 78%–90% for the hand movements and 70%–90% for the tongue-toes movements, for the limited number of subjects in this study. A comparison of the imagery classification accuracy of the proposed approach with that of the commonly applied mu-beta (MB) power spectra features shows that the proposed algorithm performs significantly better than the discriminative approach using MB features. Ongoing research includes online BCI applications of this approach as well as combined state and parameter estimation using this algorithm with different system dynamic models.

Fig. 1.

(a) Fixation cross and (b) Arrow cue for motor imagery tasks.

Acknowledgments

This work was supported by the NINDS (NIH) grant K25NS061001(MK).

Contributor Information

M. Kamrunnahar, Center for Neural Engineering, Dept. of Engineering Science and Mechanics, The Pennsylvania State University, University Park, PA 16803 USA

S. J. Schiff, Center for Neural Engineering, Depts. of Engineering Science and Mechanics, Neurosurgery, and Physics, The Pennsylvania State University, University Park, PA 16803 USA

References

1. Martens SMM, Leiva JM. A generative model approach for decoding in the visual event-related potential-based brain-computer interface speller. Journal of Neural Engineering. 2010 Apr;7(2). doi: 10.1088/1741-2560/7/2/026003.

2. Geronimo A, Kamrunnahar M, Schiff S. A generative model approach to motor-imagery brain-computer interfacing.

3. Wu W, Black MJ, Gao Y, et al. Neural decoding of cursor motion using a Kalman filter. Advances in Neural Information Processing Systems. 2003;15:133–140.

4. Wu W, Gao Y, Bienenstock E, et al. Bayesian Population Decoding of Motor Cortical Activity Using a Kalman Filter. Neural Computation. 2006;18:80–118. doi: 10.1162/089976606774841585.

5. Wu W, Shaikhouni A, Donoghue JR, et al. Closed-loop neural control of cursor motion using a Kalman filter. :4126–4129. doi: 10.1109/IEMBS.2004.1404151.

6. g.tec medical engineering. 1999–2006. http://gtec.at/Products/Hardware-and-Accessories/g.USBamp-Specs-Features.

7. Kamrunnahar M, Dias N, Schiff S. Toward a Model-Based Predictive Controller Design in Brain–Computer Interfaces. Annals of Biomedical Engineering. 2011:1–11. doi: 10.1007/s10439-011-0248-y.

8. Nunez PL, Srinivasan R. Electric Fields of the Brain: The Neurophysics of EEG. 2. New York: Oxford University Press; 2006.

9. Nunez PL. Electric fields of the brain. Oxford University Press; 1981.

10. Srinivasan R. Methods to Improve the Spatial Resolution of EEG. International Journal of Bioelectromagnetism. 1999;1(1):102–111.

11. Srinivasan R, Winter WR, Nunez PL. Source analysis of EEG oscillations using high-resolution EEG and MEG. In: Neuper C, Klimesch W, editors. Event-Related Dynamics of Brain Oscillations; Progress in Brain Research. Elsevier; 2006. pp. 29–42.

12. Welch G, Bishop G. An Introduction to the Kalman Filter. http://www.cs.unc.edu/~tracker/media/pdf/SIGGRAPH2001_CoursePack_08.pdf.

13. Kalman RE. A New Approach to Linear Filtering and Prediction Problems. Transactions of the ASME - Journal of Basic Engineering. 1960;82:35–45.

14. Van der Merwe R. PhD. OGI School of Science & Engineering, Oregon Health & Science University; Portland, OR: 2004. Sigma-Point Kalman Filters for Probabilistic Inference in Dynamic State-Space Models.

15. Van der Merwe R, Wan E. Efficient Derivative-Free Kalman Filters for Online Learning. :205–210.

16. Julier S, Uhlmann J. A new extension of the Kalman filter to nonlinear systems.

17. Julier SJ, Uhlmann JK. A General Method for Approximating Nonlinear Transformations of Probability Distributions. Dept. of Engineering Science, University of Oxford; Nov, 1996.

18. Julier SJ, Uhlmann JK, Durrant-Whyte HF. A new approach for filtering nonlinear systems. 3:1628–1632.

19. Ito K, Xiong KQ. Gaussian filters for nonlinear filtering problems. IEEE Transactions on Automatic Control. 2000 May;45(5):910–927.

20. Nørgaard M, Poulsen NK, Ravn O. New developments in state estimation for nonlinear systems. Automatica. 2000;36(11):1627–1638.

21. Fröberg CE. Introduction to numerical analysis. Reading, MA: Addison-Wesley; 1970.

22. Steffensen JF. Interpolation. Baltimore, MD: Williams & Wilkins; 1927.

23. Van der Merwe R, Wan E. The Square-Root Unscented Kalman Filter for State- and Parameter-Estimation. :3461–3464.

24. Van der Merwe R, Wan EA. ReBEL Toolkit in MATLAB. School of Science & Engineering at Oregon Health & Science University (OHSU). http://choosh.csee.ogi.edu/rebel/

25. Lotte F, Congedo M, Lecuyer A, et al. A review of classification algorithms for EEG-based brain-computer interfaces. Journal of Neural Engineering. 2007 Jun;4(2):R1–R13. doi: 10.1088/1741-2560/4/2/R01.

26. McFarland DJ, Krusienski DJ, Wolpaw JR. Brain-computer interface signal processing at the Wadsworth Center: mu and sensorimotor beta rhythms. Prog Brain Res. 2006;159:411–9. doi: 10.1016/S0079-6123(06)59026-0.

27. Millan J, Franze M, Mourino J, et al. Relevant EEG features for the classification of spontaneous motor-related tasks. Biol Cybern. 2002 Feb;86(2):89–95. doi: 10.1007/s004220100282.

28. Neuper C, Pfurtscheller G. ERD and ERS during motor behavior in man. Journal of Psychophysiology. 1997;11(4):375–375.

29. Pregenzer M, Pfurtscheller G. Frequency component selection for an EEG-based brain to computer interface. IEEE Trans Rehabil Eng. 1999 Dec;7(4):413–9. doi: 10.1109/86.808944.