Abstract

Learning to read causes the development of a letter- and word-selective region known as the visual word form area (VWFA) within the human ventral visual object stream. Why does a reading-selective region develop at this anatomical location? According to one hypothesis, the VWFA develops at the nexus of visual inputs from retinotopic cortices and linguistic input from the frontotemporal language network because reading involves extracting linguistic information from visual symbols. Surprisingly, the anatomical location of the VWFA is also active when blind individuals read Braille by touch, suggesting that vision is not required for the development of the VWFA. In this study, we tested the alternative prediction that VWFA development is in fact influenced by visual experience. We predicted that in the absence of vision, the “VWFA” is incorporated into the frontotemporal language network and participates in high-level language processing. Congenitally blind (n = 10, 9 female, 1 male) and sighted control (n = 15, 9 female, 6 male) participants each took part in two functional magnetic resonance imaging experiments: (1) word reading (Braille for blind and print for sighted participants), and (2) listening to spoken sentences of different grammatical complexity (both groups). We find that in blind, but not sighted participants, the anatomical location of the VWFA responds both to written words and to the grammatical complexity of spoken sentences. This suggests that in blindness, this region takes on high-level linguistic functions, becoming less selective for reading. More generally, the current findings suggest that experience during development has a major effect on functional specialization in the human cortex.

SIGNIFICANCE STATEMENT The visual word form area (VWFA) is a region in the human cortex that becomes specialized for the recognition of written letters and words. Why does this particular brain region become specialized for reading? We tested the hypothesis that the VWFA develops within the ventral visual stream because reading involves extracting linguistic information from visual symbols. Consistent with this hypothesis, we find that in congenitally blind Braille readers, but not sighted readers of print, the VWFA region is active during grammatical processing of spoken sentences. These results suggest that visual experience contributes to VWFA specialization, and that different neural implementations of reading are possible.

Keywords: blindness, language, orthography, plasticity, vision, VWFA

Introduction

Reading depends on a consistent network of cortical regions across individuals and writing systems (Baker et al., 2007; Krafnick et al., 2016). A key part of this network is the so-called visual word form area (VWFA), located in the left ventral occipitotemporal cortex (vOTC). The VWFA is situated among regions involved in visual object recognition, such as the fusiform face area (FFA) and the parahippocampal place area (PPA), and is thought to support the recognition of written letters and word forms (Cohen et al., 2000). The VWFA is an example of experience-dependent cortical specialization: preferential responses to letters and words are only found in literate adults (Dehaene et al., 2010; Cantlon et al., 2011). Furthermore, the historically recent invention of written symbols makes it unlikely that this region evolved specifically for reading. An important outstanding question is which aspects of experience are relevant to the development of the VWFA.

According to one hypothesis, the VWFA develops in the ventral visual processing stream because reading involves the extraction of linguistic information from visual symbols. Consistent with this idea, the VWFA has strong functional and anatomical connectivity with early visual areas on the one hand, and with the frontotemporal language network on the other (Behrmann and Plaut, 2013; Dehaene and Dehaene-Lambertz, 2016). In apparent contradiction to this idea, several studies find that the vOTC is active while blind individuals read Braille, a tactile reading system in which words are written as patterns of raised dots (Sadato et al., 1996; Büchel et al., 1998; Burton et al., 2002; Reich et al., 2011). Each Braille character consists of dots positioned in a three-rows-by-two-columns matrix. The vOTC of individuals who are blind responds more when reading Braille than when touching meaningless patterns, and responses peak in the canonical location of the sighted VWFA (Reich et al., 2011). These results suggest that visual experience with print is not necessary for the development of reading selectivity in the vOTC. It has been proposed that specialization instead results from an area-intrinsic preference for processing shapes, independent of modality (Hannagan et al., 2015).

However, there remains an alternative, and until now untested, interpretation of the available findings. We hypothesize that in the absence of visual input, the vOTC of blind individuals becomes involved in language processing more generally, and not in the recognition of written language in particular. Recent evidence suggests that even before literacy acquisition the vOTC has strong anatomical connectivity to frontotemporal language networks (Saygin et al., 2016). In the absence of bottom-up inputs from visual cortex, such connectivity could drive this region of vOTC to become incorporated into the language network. This hypothesis predicts that unlike responses to written language in the sighted, responses to Braille in the vOTC of blind individuals reflect semantic and grammatical processing of the linguistic information conveyed by Braille, rather than the recognition of letter and word forms.

Indirect support for this idea comes from studies of spoken language comprehension in blind individuals. In this population, multiple regions within the visual cortices, including primary visual cortex (V1) and parts of the ventral visual stream, respond to high-level linguistic information (Burton et al., 2002; Röder et al., 2002; Noppeney et al., 2003; Amedi et al., 2003; Bedny et al., 2011; Lane et al., 2015). For example, larger responses are observed to spoken sentences than to unconnected lists of words, and responses are larger still for sentences that are more grammatically complex (Röder et al., 2002; Bedny et al., 2011; Lane et al., 2015). Whether Braille-responsive vOTC in particular responds to high-level linguistic information is not known.

We addressed this question by asking the same congenitally blind and sighted participants to perform both reading tasks and spoken sentence comprehension tasks while undergoing functional magnetic resonance imaging (fMRI). We predicted that in blind Braille readers, but not sighted readers of print, the same part of the vOTC that responds to written language also responds to the grammatical complexity of spoken sentences.

Materials and Methods

Participants

Ten congenitally blind (9 females, M = 41 years-of-age, SD = 17) and 15 sighted (9 females, M = 23 years-of-age, SD = 6) native English speakers participated in the study. All blind participants were fluent Braille readers who began learning Braille in early childhood (M = 4.9 years-of-age, SD = 1.2), rated their own proficiency as high, and reported reading Braille at least 1 h per day (M = 3.1 h, SD = 2.6; see Table 1 for further participant information). All blind participants had at most minimal light perception since birth and blindness due to pathology anterior to the optic chiasm. Sighted participants had normal or corrected-to-normal vision and were blindfolded during the auditory sentence comprehension experiment (Experiment 3). None of the sighted or blind participants suffered from any known cognitive or neurological disabilities. Participants gave informed consent and were compensated $30 per hour. All procedures were approved by the Johns Hopkins Medicine Institutional Review Board. One additional blind participant was scanned (participant 11) but their data were not included due to technical issues related to the Braille display. Behavioral results for the reading experiment from one sighted participant were not recorded due to button-box failure.

Table 1.

Participant characteristics

| Participant No. | B1 | B2 | B3 | B4 | B5 | B6 | B7 | B8 | B9 | B10 | Sighted |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Age | 26 | 49 | 39 | 35 | 46 | 32 | 25 | 22 | 70 | 67 | M = 23, SD = 6 |
| Gender | F | F | F | F | F | F | F | M | F | F | 9 F/6 M |
| Handedness (Edinburgh) | R | Am | R | R | R | R | Am | R | R | Am | 14 R/1 L |
| Level of education | MA | MA | PhD | MA | BA | BA | MA | BA | HS | MA | 1 PhD/14 BA |
| Cause of blindness | ROP | LCA | ROP | LCA | ROP | ROP | LCA | LCA | ROP | ROP | |
| Age started reading Braille, y | 4 | 7 | 5 | 4 | 4 | 4 | 5 | 4 | 7 | 5 | |
| Self-reported Braille ability (1–5) | 4 | 5 | 5 | 5 | 5 | 4 | 5 | 5 | 4 | 5 | |
| Hours of reading Braille per day | 8 | 1–2 | 2 | 2 | N/A | 2 | 6–8 | 2–4 | 1 | 1 | |

Handedness: left (L), ambidextrous (Am), or right (R), based on Edinburgh Handedness Inventory. ROP, Retinopathy of prematurity; LCA, Leber's congenital amaurosis. For Braille ability, participants were asked: “On a scale of 1 to 5, how well are you able to read Braille, where 1 is ‘not at all’, 2 is ‘very little’, 3 is ‘reasonably well’, 4 is ‘proficiently’, and 5 is ‘expert’?” Hours of reading Braille per day were not available for participant B5 (marked N/A).
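As a cross-check, the group summaries quoted in the Participants paragraph can be recomputed from the per-participant values in Table 1. The sketch below is illustrative only; it assumes that the reported SDs are sample standard deviations (n − 1 denominator), which the text does not state explicitly.

```python
from statistics import mean, stdev

# Values transcribed from Table 1 (blind participants B1-B10).
ages = [26, 49, 39, 35, 46, 32, 25, 22, 70, 67]
braille_start_age = [4, 7, 5, 4, 4, 4, 5, 4, 7, 5]


def summarize(values):
    """Return (mean, sample SD) of a list of numbers.

    Assumption: SD uses the n - 1 denominator (statistics.stdev).
    """
    return mean(values), stdev(values)


age_m, age_sd = summarize(ages)
start_m, start_sd = summarize(braille_start_age)

print(f"Age: M = {age_m:.0f}, SD = {age_sd:.0f}")
print(f"Age started reading Braille: M = {start_m:.1f}, SD = {start_sd:.1f}")
```

Under that assumption, the rounded values agree with those reported for the blind group (M = 41, SD = 17 for age; M = 4.9, SD = 1.2 for the age at which Braille reading began).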

Experimental design and statistical analyses

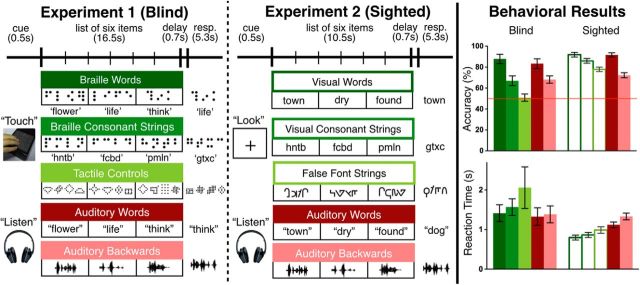

Experiment 1: Braille reading and auditory word experiment (blind).

Blind participants performed a memory probe task with tactile and auditory stimuli in five conditions [three tactile: Braille words (BWs), Braille consonant strings (CSs), tactile control stimuli (TC), and two auditory: spoken words (AWs) and backward speech sounds (ABs)] while undergoing fMRI (Fig. 1). For each trial, participants heard or felt a list of six items from the same condition presented one at a time, and indicated whether a single probe item that appeared after the list had been present in the list. Tactile stimuli were presented using an MRI-compatible refreshable Braille and tactile graphic display consisting of an array of 32 by 35 pins (spaced 2.4 mm apart; Piezoelectric Tactile Stimulus Device, KGS; Bauer et al., 2015). On auditory trials, participants listened to lists of words or the auditory words played backward, presented over MRI-compatible Sensimetrics earphones.

Figure 1.

Experiments 1 and 2 experimental paradigms, example stimuli, and behavioral results. Accuracy is shown in percentage correct and reaction time in seconds (mean ± SEM). Thin red line indicates chance level (50%).

Each trial began with a short auditory cue prompting to “Listen” or to “Touch” (0.5 s). Participants then heard or felt six items, one at a time. In the tactile trials, each item appeared on the Braille display for 2 s, followed by a 0.75 s blank period before the next item appeared. Participants were instructed to read naturally. After all six items had appeared, there was a brief delay (0.2 s), followed by a short beep (0.5 s), and the probe item (2 s). Participants judged whether or not the probe had been present in the original list by pressing one of two buttons using the non-reading hand (5.3 s to respond). The timing and sequence of events (cue, six items, beep, probe, response) were identical for the auditory trials. The auditory words and words played backward were on average 0.41 s long (SD = 0.03 s).

Word items consisted of 120 common nouns, adjectives, and verbs, which were on average five letters long (SD = 1.4 letters). The Braille word and spoken word conditions contained the same words across participants. Half of the participants read a given word in Braille and the other half heard it spoken. All BWs were presented in Grade-II contracted English Braille (the most common form of Braille read by proficient Braille readers in the United States). In Grade-II contracted Braille, single characters represent either single letters or frequent letter combinations (e.g., “ch” and “ing”), as well as some frequent whole words (e.g., the letter “b” can stand for “but”). With contractions, the length of each Braille word varied between one and eight Braille characters, with an average length of four characters (SD = 2.1 characters) and 11 tactile pins. Contractions therefore reduced the number of characters in each word by one on average.

Consonant strings were created using all 20 English consonants. Each Braille consonant string contained four consonant characters to match the average length of contracted BWs. For each participant, 24 unique CSs were repeated five times throughout the experiment. Consonant strings contained 14 pins on average.

Tactile control stimuli each consisted of four abstract shapes created using Braille pins. Pilot testing with a blind participant suggested that any shape confined to the 2 by 3 Braille cell dimensions was still perceived as a Braille character. Therefore, shapes were varied in size (smallest: 5 × 4, largest: 7 × 7) and designed so that they were not readily verbalizable. The average number of tactile pins for shape strings was 58. Note that although the TC were designed to consist of four individual shapes, participants may have perceived them as whole textures (see Results for details).

Auditory words were recorded in a quiet room by a female native English speaker. ABs were created by playing the AWs in reverse.

The experiment consisted of five runs each with 20 experimental trials (23.16 s each) and six rest periods (16 s each). The order of conditions was counterbalanced across runs.

Experiment 2: visual reading and auditory words (sighted).

In an analogous reading experiment (Experiment 2), sighted participants either viewed lists of visually presented words (VWs), CSs or false-font strings (FFs), or listened to AWs or ABs while performing the same memory probe task as in the Braille experiment (Experiment 1; Fig. 1). Visual stimuli were presented by a projector and reflected with a mirror onto a screen inside the scanner.

Visually presented words (120) were selected to match the character length of the contracted BWs (M = 4 letters; range = 3–5 letters, SD = 0.7 letters). This new set of words was matched to the BWs on raw frequency per million, averaged frequency per million of orthographic neighbors, and averaged frequency of bigrams (all comparisons p > 0.4, obtained from the MCWord Orthographic Wordform Database; Medler and Binder, 2005). As with the Braille experiment, 24 unique CSs (the same set of strings presented to the blind participants) were repeated five times throughout the experiment.

Twenty FF letters were created as a control for the 20 consonant letters. A FF letter was matched to each consonant in the number of strokes, presence of ascenders and descenders, and stroke thickness. Twenty-four strings of FFs were then created to match the 24 CSs. Each FF character was repeated five times during the experiment, analogous to the tactile control task.

The trial sequence of the visual experiment was identical to Experiment 1 (Braille/audio). Each trial began with an auditory instruction prompt, which was either “Listen” or “Look” (0.5 s). Participants were next presented with six items in sequence (single words, CSs, or FF strings). Note, however, that printed words were presented for only 1 s, which is half as long as the presentation duration of Braille stimuli (Veispak et al., 2013). During all delay periods, a black fixation cross remained on the center of the screen, and participants were instructed to keep their gaze fixed on the center throughout the experiment.

Like Experiment 1, Experiment 2 consisted of five runs each with 20 experimental trials (17 s) and six rest periods (16 s). The length of each experimental trial was shorter than in Experiment 1 (Braille reading) due to shorter presentation times. The order of conditions was counterbalanced across runs. The screen was black during all the auditory trials and half of the rest periods. The screen was white during all the visual trials and the remaining half of the rest periods. Participants were told to close their eyes if the screen went black, and to open their eyes if the screen was white.
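For orientation, the nominal length of a run in each reading experiment follows directly from the trial and rest durations given above. The short sketch below simply adds them up; it assumes that a run contains nothing other than the listed trials and rest periods (e.g., no additional lead-in or lead-out time), which is not stated in the text.

```python
def run_duration_s(n_trials, trial_s, n_rests, rest_s):
    """Nominal run length in seconds: trials plus rest periods only."""
    return n_trials * trial_s + n_rests * rest_s


# Experiment 1 (Braille/auditory): 20 trials of 23.16 s, six 16 s rests.
exp1_run_s = run_duration_s(20, 23.16, 6, 16.0)
# Experiment 2 (visual/auditory): 20 trials of 17 s, six 16 s rests.
exp2_run_s = run_duration_s(20, 17.0, 6, 16.0)

print(f"Experiment 1 run: {exp1_run_s:.1f} s ({exp1_run_s / 60:.1f} min)")
print(f"Experiment 2 run: {exp2_run_s:.1f} s ({exp2_run_s / 60:.1f} min)")
```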

Experiment 3: auditory sentence processing (blind and sighted).

Both blind and sighted participants performed an auditory sentence comprehension task and a control task with nonwords (Lane et al., 2015). The grammatical complexity of sentences was manipulated by including syntactic movement in half of the sentences, rendering these more syntactically complex (Chomsky, 1977; King and Just, 1991). There were a total of 54 +Movement/−Movement sentence pairs. Two sentences in a given pair were identical save for the presence or absence of syntactic movement (e.g., a −Movement sentence: “The creator of the gritty HBO crime series admires that the actress often improvises her lines”, and its +Movement counterpart: “The actress that the creator of the gritty HBO crime series admires often improvises her lines”). Each participant only heard one sentence from each pair. In the nonwords control condition, participants performed a memory probe task and were required to remember the order of the nonword stimuli.

In a given sentence trial, participants first heard a tone (0.5 s) followed by a sentence (recorded by a male native English speaker) for the duration of the sound (average of 6.7 s). Next, participants heard a probe question (2.9 s) asking who did what to whom (e.g., “Is it the actress who admires the HBO show creator?”). Participants had until the end of the trial (16 s) to respond “Yes” or “No” by pressing one of two buttons. Sentences (+Move and −Move) and nonwords were presented in a pseudorandom order (no trial type repeated >3 times in a row). Blind participants underwent six runs, while sighted participants each underwent three runs.
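One simple way to obtain a pseudorandom order that satisfies the repetition constraint described above is rejection sampling: shuffle the trial list and keep the first shuffle in which no trial type occurs more than three times in a row. The sketch below illustrates this idea only. The per-run trial counts and the function names are hypothetical, and the procedure actually used to generate the experimental orders is not described in the text.

```python
import random


def max_run_length(seq):
    """Length of the longest stretch of identical consecutive items."""
    longest = current = 1
    for prev, cur in zip(seq, seq[1:]):
        current = current + 1 if cur == prev else 1
        longest = max(longest, current)
    return longest


def constrained_shuffle(trial_types, max_repeat=3, seed=0, max_tries=10000):
    """Shuffle until no trial type repeats more than max_repeat times in a row."""
    rng = random.Random(seed)
    seq = list(trial_types)
    for _ in range(max_tries):
        rng.shuffle(seq)
        if max_run_length(seq) <= max_repeat:
            return seq
    raise RuntimeError("no valid order found; relax the constraint")


# Hypothetical run: six trials of each type (counts are illustrative only).
trials = ["+Move"] * 6 + ["-Move"] * 6 + ["nonword"] * 6
order = constrained_shuffle(trials)
print(order)
```

Rejection sampling is adequate here because, with three trial types of similar frequency, most random shuffles already satisfy the constraint.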

fMRI data acquisition and analysis

All MRI data were acquired at the F. M. Kirby Research Center for Functional Brain Imaging on a 3T Philips scanner. T1-weighted structural images were collected in 150 axial slices with 1 mm isotropic voxels. Functional BOLD scans were collected in 26 axial slices (2.4 × 2.4 × 3 mm voxels, TR = 2 s). Analyses were performed using FSL, FreeSurfer, the HCP workbench, and in-house software. For each subject, cortical surface models were created using the standard FreeSurfer pipeline (Dale et al., 1999; Glasser et al., 2013).

Functional data were motion-corrected, high-pass filtered (128 s cutoff), and resampled to the cortical surface. On the surface, the data were smoothed with a 6 mm FWHM Gaussian kernel. Cerebellar and subcortical structures were excluded.

The five conditions of the reading experiments (three visual or tactile and two auditory) or the three conditions of the auditory sentence experiment (+Movement sentences, −Movement sentences, nonwords lists) were entered as covariates of interest into general linear models. Covariates of interest were convolved with a standard hemodynamic response function, with temporal derivatives included. All data were pre-whitened to remove temporal autocorrelation. Response periods and trials in which participants failed to respond were modeled with separate regressors, and individual regressors were used to model out time points with excessive motion [FDRMS > 1.5 mm; average number of time points excluded per run: 0.95 (blind) and 0.1 (sighted)].
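To make the motion-censoring step concrete, the sketch below builds one indicator regressor for each time point whose framewise displacement exceeds the 1.5 mm threshold, so that those time points can be modeled out of the GLM. It is a minimal illustration and not the in-house implementation: the function name and the example displacement trace are hypothetical.

```python
def scrub_regressors(fd_mm, threshold_mm=1.5):
    """Build one indicator column per high-motion time point.

    fd_mm: framewise displacement (mm) for each time point of a run.
    Returns (indices of flagged time points, list of 0/1 columns).
    """
    n = len(fd_mm)
    flagged = [t for t, fd in enumerate(fd_mm) if fd > threshold_mm]
    columns = []
    for t in flagged:
        column = [0.0] * n
        column[t] = 1.0  # this regressor absorbs the signal at time point t
        columns.append(column)
    return flagged, columns


# Hypothetical displacement trace for a short run (values in mm).
fd_trace = [0.10, 0.20, 0.15, 1.80, 0.30, 0.25]
flagged, nuisance = scrub_regressors(fd_trace)
print(flagged, len(nuisance))
```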

Runs were combined within subjects using fixed-effects models. Data across participants were combined using a random-effects analysis. Group-level analyses were corrected for multiple comparisons at the vertex level (p < 0.05) for false discovery rate (FDR). Five runs for blind and three runs for sighted participants were dropped due to equipment failure.
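The text does not name the specific FDR procedure used. As an illustration of how vertex-level FDR control works in general, the sketch below implements the widely used Benjamini-Hochberg step-up rule; treating this as the procedure applied here is an assumption, and the p values in the example are made up.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans marking tests significant at FDR level q.

    Step-up rule: find the largest rank k such that p_(k) <= (k / m) * q,
    then declare the k smallest p values significant.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= k_max:
            significant[idx] = True
    return significant


# Hypothetical vertex-wise p values (illustrative only).
p_vals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
print(benjamini_hochberg(p_vals))
```

In this toy example only the two smallest p values survive, even though five fall below an uncorrected threshold of 0.05.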

Functional ROI analysis

We defined individual reading-responsive functional regions-of-interest (ROIs) in the left vOTC. In blind participants, reading-responsive functional ROIs were defined based on the Braille CSs > tactile controls contrast. Analogous ROIs were defined in sighted participants using the visual CSs > FF strings contrast. ROIs were defined as the top 5% of vertices activated in each individual within a ventral occipitotemporal mask.

A ventral occipitotemporal mask was created based on the peaks of vOTC responses to written text in sighted individuals across 28 studies, summarized in a meta-analysis of reading experiments in sighted individuals (Jobard et al., 2003). Across studies, activation was centered at MNI coordinates (−44, −58, −15; Jobard et al., 2003; note that coordinates throughout the paper are reported in MNI space). The rectangular mask drawn around the 28 coordinates extended from (−43, −38, −22) most anteriorly to (−37, −75, −14) posteriorly (see Fig. 3B).
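The ROI definition described above (top 5% of vertices within the vOTC mask, ranked by the localizer contrast) can be sketched as follows. This is an illustrative outline rather than the analysis code: the data structures, the function name, and the use of ceiling rounding for the vertex count are assumptions.

```python
import math


def top_percent_roi(stat_by_vertex, mask_vertices, percent=5.0):
    """Select the mask vertices with the highest contrast statistic.

    stat_by_vertex: mapping from vertex index to contrast statistic.
    mask_vertices: vertex indices inside the anatomical search space.
    Assumption: the number of vertices kept is rounded up, minimum one.
    """
    ranked = sorted(mask_vertices, key=lambda v: stat_by_vertex[v], reverse=True)
    n_keep = max(1, math.ceil(len(ranked) * percent / 100.0))
    return set(ranked[:n_keep])


# Toy example: 100 vertices whose statistic equals their index,
# with a search space covering vertices 20-59 (40 vertices).
stats = {v: float(v) for v in range(100)}
mask = list(range(20, 60))
roi = top_percent_roi(stats, mask)
print(sorted(roi))
```

Because selection is restricted to the mask, vertices with higher statistics outside the search space (here, vertices 60-99) never enter the ROI.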

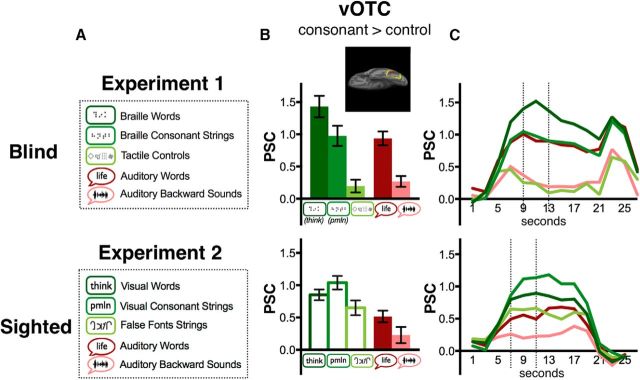

Figure 3.

Responses of vOTC to written and AWs in blind and sighted individuals. A, Experimental conditions and sample stimuli for Experiments 1 and 2 for blind and sighted groups, respectively. B, Bar graph depicts results from functional ROI analyses in vOTC. ROIs were defined as top 5% of vertices activated for Braille CSs > TC (blind) or visual CSs > FF strings (sighted) using a leave-one-run-out procedure. Yellow outline in brain inlay illustrates search space within which individual functional ROIs were defined, and red indicates one sample participant's ROI. PSC is on the y-axis and error bars represent the SEM. C, PSC over time. Dotted lines indicate the peak duration across which PSC was averaged to obtain mean PSC in B.

The resulting individual functional ROIs had average coordinates of (−46, −51, −13) for the blind group and (−45, −51, −14) for the sighted group. Because the response of the vOTC to orthographic and non-orthographic linguistic information may vary along the anterior to posterior axis, we additionally defined a more posterior vOTC mask by excluding all points anterior to y = −54. This new mask generated individual functional ROIs with average coordinates (−42, −61, −11) for the blind and (−42, −60, −10) for the sighted.

We next extracted percentage signal change (PSC) from each ROI. PSC was calculated as [(Signal condition − Signal baseline)/Signal baseline], where baseline is the activity during blocks of rest. PSC was extracted from the stimulus portions of trials in all three experiments, avoiding activity related to decision or response processes (Experiment 1: 9–13 s, Experiment 2: 7–11 s, Experiment 3: 7–11 s; see Fig. 3C). Initial TRs were dropped to allow the hemodynamic response to rise. The number of TRs dropped is different for visual and tactile experiments to account for the different presentation durations of visual and tactile word stimuli. PSC was averaged across vertices within an ROI.
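The PSC computation can be illustrated with a short sketch. It applies the formula above, multiplied by 100 to express the result as a percentage, and averages over the samples that fall inside the analysis window. The mapping of samples to times (sample i at i × TR after trial onset), the function names, and the example time course are assumptions made for illustration.

```python
TR_S = 2.0  # repetition time reported in the acquisition section


def percent_signal_change(signal, baseline):
    """(signal - baseline) / baseline, scaled by 100 to give a percentage."""
    return 100.0 * (signal - baseline) / baseline


def mean_psc_in_window(timecourse, baseline, window_s, tr_s=TR_S):
    """Average PSC over samples whose time falls inside window_s.

    Assumption: sample i occurs i * tr_s seconds after trial onset.
    """
    start_s, end_s = window_s
    selected = [
        percent_signal_change(value, baseline)
        for i, value in enumerate(timecourse)
        if start_s <= i * tr_s <= end_s
    ]
    return sum(selected) / len(selected)


# Hypothetical trial-locked time course (arbitrary units), one value per TR.
timecourse = [100.0, 100.0, 101.0, 102.0, 103.0, 104.0, 103.0, 102.0]
rest_baseline = 100.0

# Experiment 1 window (9-13 s); Experiments 2 and 3 use 7-11 s.
exp1_psc = mean_psc_in_window(timecourse, rest_baseline, window_s=(9.0, 13.0))
exp2_psc = mean_psc_in_window(timecourse, rest_baseline, window_s=(7.0, 11.0))
print(exp1_psc, exp2_psc)
```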

For the sentence comprehension experiment (Experiment 3 for both groups), PSC for the three auditory conditions was extracted from reading-responsive ROIs defined based on data from Experiments 1 and 2 (described above). To avoid potential bias due to the unequal number of runs across groups in the sentence processing Experiment 3 (3 runs in sighted, 6 in blind), we additionally re-ran the analysis extracting PSC from just the first three runs for the blind group.

To examine responses in the reading experiments (Experiment 1 for blind; Experiment 2 for sighted), we redefined the functional ROIs using the same mask and procedure as above, but with a leave-one-run-out cross-validation procedure. Cross-validation was used to define independent functional ROIs and to avoid using the same data for defining ROIs and testing hypotheses (Kriegeskorte et al., 2009; Glezer and Riesenhuber, 2013). ROIs were defined based on data from all but one run, and PSC for the left-out run was then extracted. This was repeated iteratively across all runs (i.e., leaving each run out), and PSC was averaged across iterations.
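The leave-one-run-out logic can be summarized in a few lines. In the sketch below, the ROI is defined from all runs except one, PSC is read out from the held-out run, and the result is averaged over folds. The run data structure, the helper functions, and the toy values are hypothetical stand-ins for the actual surface-based analysis.

```python
def define_roi(training_runs, n_keep=1):
    """Pick the n_keep vertices with the highest mean localizer contrast."""
    n_vertices = len(training_runs[0]["contrast"])
    mean_contrast = [
        sum(run["contrast"][v] for run in training_runs) / len(training_runs)
        for v in range(n_vertices)
    ]
    ranked = sorted(range(n_vertices), key=lambda v: mean_contrast[v], reverse=True)
    return ranked[:n_keep]


def extract_psc(run, roi):
    """Mean PSC of the held-out run across ROI vertices."""
    return sum(run["psc"][v] for v in roi) / len(roi)


def leave_one_run_out(runs):
    """Define the ROI on all-but-one run, test on the left-out run, average."""
    fold_values = []
    for held_out in range(len(runs)):
        training = [run for i, run in enumerate(runs) if i != held_out]
        roi = define_roi(training)
        fold_values.append(extract_psc(runs[held_out], roi))
    return sum(fold_values) / len(fold_values)


# Toy data: three runs, four vertices each (values are illustrative only).
runs = [
    {"contrast": [3.0, 1.0, 0.5, 0.2], "psc": [1.0, 0.2, 0.1, 0.0]},
    {"contrast": [2.5, 0.8, 0.9, 0.1], "psc": [0.8, 0.3, 0.2, 0.1]},
    {"contrast": [2.8, 1.2, 0.4, 0.3], "psc": [1.2, 0.1, 0.0, 0.2]},
]
cv_psc = leave_one_run_out(runs)
print(cv_psc)
```

The key property is that the data used to select vertices never overlap with the data from which the response is measured.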

To examine responses to sentences in the inferior frontal gyrus (IFG), we defined individual ROIs as top 5% of vertices responsive in the BWs > TCs or VWs > FF strings contrasts within a published IFG mask (Fedorenko et al., 2011).

Results

Behavioral performance

Both blind and sighted participants were more accurate on more word-like items across tactile, visual, and auditory conditions (Fig. 1; blind: one-way ANOVA for BW > CS > TC: F(2,18) = 28.98, p < 0.001; two-tailed t tests for BW > CS: t(9) = 4.48, p = 0.002; CS > TC: t(9) = 3.34, p = 0.009; sighted: one-way ANOVA for VW > CS > FF: F(2,26) = 11.33, p = 0.003; two-tailed t tests for VW > CS: t(13) = 2.15, p = 0.05; CS > FF: t(13) = 2.75, p = 0.02; blind: AW > AB, t(9) = 3.12, p = 0.01; sighted: AW > AB, t(13) = 5.31, p < 0.001). Blind participants were at chance on the tactile controls condition (t(9) = 0.2, p = 0.8).

We tested for a group difference in the effect of lexicality on accuracy. Because blind participants performed at chance on the tactile control condition, we excluded this condition as well as the FF string condition from the lexicality comparison. The effect of lexicality (i.e., difference between words and CSs) was larger in the tactile reading task of the blind group than in the visual reading task of the sighted (group-by-condition, 2 × 2 ANOVA interaction: F(1,22) = 8.5, p = 0.008).

For the tactile task (blind group), reaction time did not differ across conditions (one-way ANOVA for BW < CS < TC: F(2,18) = 2.52, p = 0.11; AW < AB: t(9) = 1.6, p = 0.14). In the visual reading task (sighted group) reaction times were in the same direction as accuracy, such that participants were faster on more word-like stimuli (one-way ANOVA for VW < CS < FF: F(2,26) = 11.63, p < 0.001; VW < CS: t(13) = 3.01, p = 0.01; CS < FF: t(13) = 3.02, p = 0.01; AW < AB: t(13) = 3.27, p = 0.006). As with accuracy, there was a group-by-condition interaction (again 2 × 2 ANOVA) for reaction time (F(1,22) = 4.55, p = 0.04). A simple main effects test revealed that responses to tactile stimuli (blind) were in general slower than responses to visual stimuli (sighted; words: F(1,22) = 24.35, p < 0.001; CSs: F(1,22) = 6.43, p = 0.02).

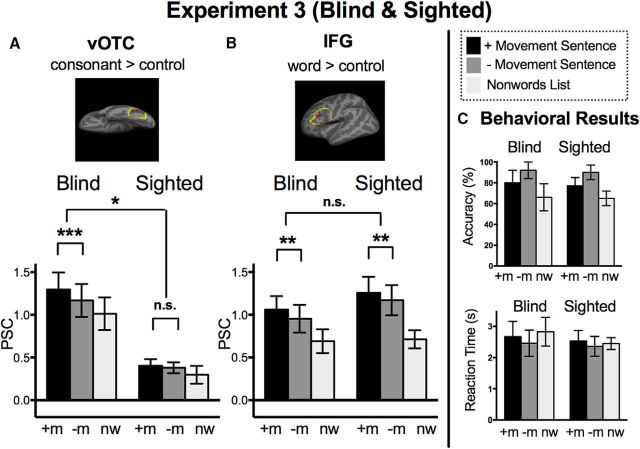

In Experiment 3, both groups were more accurate and faster for sentences without movement than for complex sentences containing movement (see Fig. 4; two-tailed t tests for −Movement > +Movement in accuracy, sighted: t(14) = 7.03, p < 0.001; blind: t(9) = 5.22, p < 0.001; for +Movement > −Movement in reaction time, sighted: t(14) = 3.79, p = 0.002; blind: t(9) = 5.29, p = 0.001). There were no group-by-condition interactions or main effects of group for accuracy or reaction time for comparing +Movement and −Movement sentences (for accuracy, group-by-condition 2 × 2 ANOVA interaction: F(1,23) = 0.07, p = 0.8; main effect of group: F(1,23) = 0.99, p = 0.3; for reaction time, interaction: F(1,23) = 0.29, p = 0.6, main effect of group: F(1,23) = 0.56, p = 0.5).
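The degrees of freedom in the behavioral statistics above follow from the sample sizes: 10 blind participants, 14 sighted participants with reading data (one data set was lost to button-box failure), and 15 sighted participants in Experiment 3. The sketch below makes this bookkeeping explicit. It assumes that the one-way ANOVAs are within-subject (repeated-measures) designs, which is inferred from the reported degrees of freedom rather than stated in the text.

```python
def paired_t_df(n_subjects):
    """Degrees of freedom for a paired (within-subject) t test."""
    return n_subjects - 1


def rm_anova_df(n_subjects, n_conditions):
    """(effect df, error df) for a one-way repeated-measures ANOVA."""
    effect = n_conditions - 1
    return effect, effect * (n_subjects - 1)


def mixed_2x2_interaction_df(n_total, n_groups=2):
    """(effect df, error df) for the group-by-condition term of a 2 x 2 design."""
    return 1, n_total - n_groups


N_BLIND = 10
N_SIGHTED_READING = 14  # behavioral data missing for one sighted participant
N_SIGHTED_SENTENCES = 15

print(rm_anova_df(N_BLIND, 3))            # blind, BW/CS/TC
print(rm_anova_df(N_SIGHTED_READING, 3))  # sighted, VW/CS/FF
print(mixed_2x2_interaction_df(N_BLIND + N_SIGHTED_READING))
print(mixed_2x2_interaction_df(N_BLIND + N_SIGHTED_SENTENCES))
```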

Figure 4.

Behavioral performance and vOTC and IFG responses to sentence structure during auditory sentence task. A, vOTC functional ROI results. ROIs defined based on Braille CSs > TC (blind) or visual CSs > FF strings (sighted). B, IFG ROIs based on BWs > TC (blind) or VWs > FFs (sighted). PSC is on the y-axis and error bars represent the SEM. C, Behavioral results during Experiment 3. Accuracy is shown in percentage correct and reaction time in seconds (mean ± SEM).

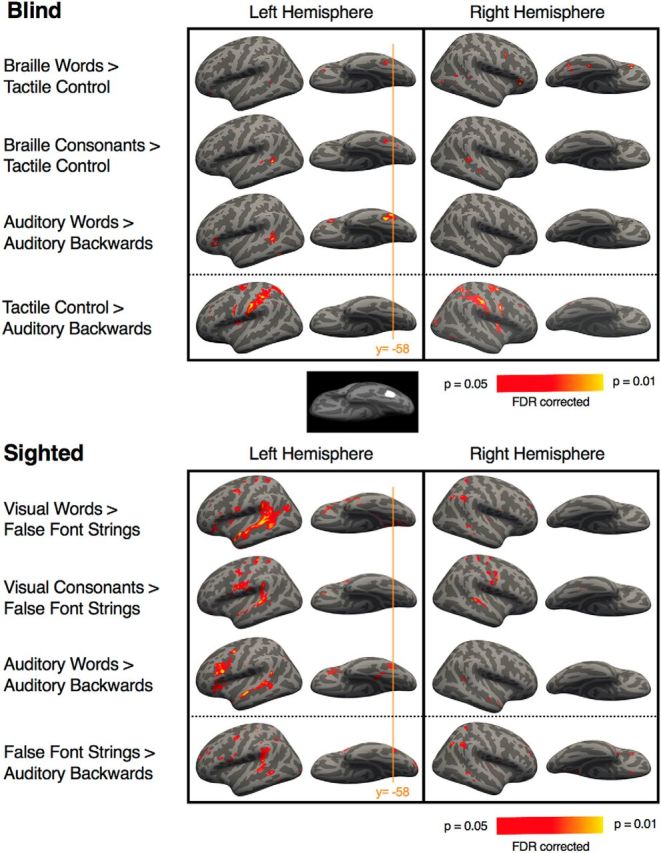

Responses to Braille and AWs peak in the VWFA

Whole-brain analysis revealed activation in the left and right vOTC in the blind group for BWs > TCs [vertex-wise FDR: p < 0.05, corrected; Fig. 2; Table 2; peaks of activation: MNI coordinates (−43, −45, −13) and (34, −54, −15)]. A similar vOTC region was also active more for Braille CSs than for the tactile control condition (−44, −45, −15). The AWs > AB speech contrast (blind group) elicited activation in the same region of left vOTC (−41, −44, −17). The left vOTC peaks for BWs, Braille CSs, and AWs were within 5 mm of each other.
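The statement that the three left vOTC peaks lie within 5 mm of each other can be checked directly from the coordinates reported above, using the Euclidean distance between each pair of peaks. The sketch below is a simple illustration; the dictionary labels are shorthand introduced here.

```python
from itertools import combinations
from math import dist

# Left vOTC peak coordinates (MNI, mm) as reported in the text and Table 2.
peaks = {
    "BW > TC": (-43, -45, -13),
    "CS > TC": (-44, -45, -15),
    "AW > AB": (-41, -44, -17),
}

# Euclidean distance between every pair of peaks.
distances = {
    (a, b): dist(peaks[a], peaks[b]) for a, b in combinations(peaks, 2)
}

for (a, b), d in distances.items():
    print(f"{a} vs {b}: {d:.1f} mm")
```

The largest pairwise distance is between the BW > TC and AW > AB peaks and remains below 5 mm.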

Figure 2.

BOLD Responses to words, CSs, AWs, and control conditions in blind and sighted individuals (Experiments 1 and 2). Whole-brain analyses (corrected vertex-level, FDR p < 0.05). The orange line denotes the y-coordinate of the mean VWFA response across studies of reading in sighted individuals (Jobard et al., 2003). Brain inlay shows 10 mm radius circle around MNI (−43, −60, −6; Cohen et al., 2000). Note the slightly anterior location of the Braille response peak (by 1.3 cm). Whether this somewhat anterior localization of vOTC responses to language reflects differences between blind and sighted groups, Braille and print reading, or noise across studies is not clear.

Table 2.

Peaks of activation for Experiment 1 (blind group)

| Region | x | y | z | Size | Peak p | Peak t |
|---|---|---|---|---|---|---|
| Braille words > tactile controls | | | | | | |
| Left hemisphere | | | | | | |
| Fusiform/inferior temporal | −43 | −45 | −13 | 24 | 0.00002 | 8.33 |
| Pars orbitalis | −39 | 34 | −12 | 15 | 0.00004 | 7.73 |
| Superior temporal sulcus | −57 | −49 | 15 | 15 | 0.00003 | 7.44 |
| Right hemisphere | | | | | | |
| Pars triangularis | 45 | 30 | 2 | 70 | 0.000001 | 11.97 |
| Lateral occipital | 48 | −74 | 3 | 14 | 0.000003 | 10.29 |
| Fusiform/inferior temporal | 41 | −29 | −21 | 59 | 0.00001 | 8.80 |
| | 34 | −54 | −15 | 12 | 0.00007 | 6.71 |
| | 41 | −58 | −12 | 36 | 0.00002 | 5.26 |
| Middle frontal gyrus | 40 | 5 | 37 | 22 | 0.00002 | 8.23 |
| Superior temporal sulcus | 58 | −34 | 6 | 18 | 0.00003 | 7.56 |
| Middle temporal gyrus | 56 | −55 | 9 | 13 | 0.0001 | 6.50 |
| Braille consonant strings > tactile controls | | | | | | |
| Left hemisphere | | | | | | |
| Superior temporal sulcus | −52 | −46 | 5 | 68 | 0.000004 | 9.96 |
| Fusiform/inferior temporal | −44 | −45 | −15 | 23 | 0.00001 | 8.89 |
| Right hemisphere | | | | | | |
| Superior temporal sulcus | 48 | −36 | 7 | 58 | 0.00004 | 7.42 |
| | 46 | −23 | 7 | 13 | 0.00004 | 7.52 |
| Pars triangularis | 45 | 30 | 2 | 10 | 0.0006 | 5.13 |
| Auditory words > auditory backward | | | | | | |
| Left hemisphere | | | | | | |
| Fusiform/inferior temporal | −41 | −44 | −17 | 63 | 0.0000004 | 12.82 |
| | −46 | −54 | −9 | 70 | 0.000001 | 11.50 |
| Superior temporal sulcus | −50 | −46 | 6 | 129 | 0.00001 | 9.31 |
| Pars triangularis | −37 | 33 | 1 | 77 | 0.00001 | 8.55 |
| Tactile controls > auditory backward | | | | | | |
| Left hemisphere | | | | | | |
| Postcentral/supramarginal | −55 | −19 | 32 | 631 | 0.0000001 | 14.57 |
| Superior parietal | −20 | −65 | 55 | 456 | 0.000002 | 8.99 |
| Precentral | −55 | 8 | 31 | 200 | 0.00002 | 8.11 |
| Insula | −37 | −4 | 17 | 55 | 0.00008 | 6.75 |
| Rostral middle frontal | −39 | 34 | 32 | 13 | 0.0002 | 6.09 |
| Superior frontal | −7 | 9 | 46 | 132 | 0.0002 | 5.94 |
| Caudal anterior cingulate | −9 | 11 | 38 | 22 | 0.0005 | 5.26 |
| Right hemisphere | | | | | | |
| Precentral | 55 | 9 | 32 | 324 | 0.0000002 | 13.76 |
| Superior parietal | 37 | −49 | 60 | 494 | 0.000005 | 9.63 |
| Caudal middle frontal | 27 | −2 | 47 | 15 | 0.00001 | 9.54 |
| Insula | 37 | −1 | 12 | 104 | 0.00001 | 9.35 |
| Postcentral/supramarginal | 52 | −20 | 35 | 700 | 0.00001 | 9.26 |
| Inferior parietal | 40 | −52 | 41 | 56 | 0.00004 | 7.32 |
| Inferior temporal | 51 | −58 | −7 | 33 | 0.0001 | 7.10 |
| Superior frontal | 13 | 8 | 42 | 37 | 0.0001 | 6.96 |
| Lateral occipital | 29 | −86 | 11 | 65 | 0.0006 | 5.13 |
| Rostral middle frontal | 39 | 30 | 33 | 14 | 0.001 | 4.59 |

Peaks of regions activated for Braille words, consonant strings, auditory words, and tactile controls (vertex-wise, FDR, p < 0.05 corrected for multiple comparisons, minimum 10 vertices). Peak coordinates are reported in MNI space. Cluster sizes are given in vertices. Uncorrected p values and t statistics are reported for the maximum peak.

The locations of the Braille letter- and word-responsive vOTC peaks are within the range of previously reported VWFA responses to written words in the sighted [e.g., MNI coordinates; Cohen et al., 2000: (−44, −57, −13); Szwed et al., 2011: (−47, −41, −18)] and blind [Reich et al., 2011: (−39, −63, −8); Büchel et al., 1998: (−37, −43, −23)]. However, the current peaks (averaged) are 1.3 cm anterior to the “canonical” VWFA response to written words in sighted readers of print [Cohen et al., 2000: (−44, −57, −13); Fig. 2].
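The anterior displacement described above is a difference along the MNI y axis. The following sketch shows the arithmetic under stated assumptions: the helper name `anterior_offset_cm` is hypothetical, and the input is a single left fusiform/inferior temporal peak from the table used purely for illustration. The exact set of peaks that was averaged is not restated here, so the output need not equal the reported 1.3 cm.

```python
from statistics import mean

# Canonical VWFA coordinate as cited in the text (Cohen et al., 2000).
CANONICAL_VWFA = (-44, -57, -13)


def anterior_offset_cm(peaks, reference=CANONICAL_VWFA):
    """Mean y coordinate of `peaks` minus the reference y, in centimeters.

    Positive values mean the peaks lie anterior to the reference,
    because the MNI y axis increases toward the front of the head.
    """
    mean_y = mean(p[1] for p in peaks)
    return (mean_y - reference[1]) / 10.0  # MNI coordinates are in mm


# Illustrative input only: one peak listed in the table.
example_peaks = [(-44, -45, -15)]
offset = anterior_offset_cm(example_peaks)
```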

By contrast, the response to the tactile control condition compared with the AB condition (blind group) did not elicit significant activation in the left vOTC. Instead, we observed a peak in the right hemisphere (51, −58, −7), in a location close to what has been termed the lateral-occipital tactile-visual area [(49, −65, −9); Amedi et al., 2001].

In addition, although in the blind group responses to AWs and BWs peaked in the vOTC, we also observed responses to BWs and AWs in other parts of the visual cortices, including lateral occipital cortex and V1, at a lower statistical threshold (uncorrected, p < 0.05). Even at this lowered threshold, responses to the TC condition did not overlap with Braille or AW responses in the vOTC.

In contrast to the blind group, in the sighted, we did not observe extensive visual cortex activation for either the VWs > FFs, AWs > AB, or even AWs > rest contrasts, even at the lowered threshold. Responses to VWs > FFs and CSs > FFs in the vOTC did not reach significance at a corrected threshold in the sighted group (Fig. 2). Responses to CSs > FFs emerged in the vOTC at p < 0.05 uncorrected, at (−49, −52, −15). The AWs > AB contrast additionally elicited a vOTC peak at the corrected threshold at (−42, −53, −9).

Different responses to words as opposed to CSs in vOTC of blind and sighted participants (Experiments 1 and 2)

ROI analyses revealed different response profiles in the vOTC of sighted and blind readers. During the reading task, the vOTC of blind subjects (individual ROIs defined Braille consonants > TCs) responded most to BWs, followed by Braille CSs and finally by TCs (one-way ANOVA for BW > CS > TC: F(2,18) = 54.77, p < 0.001; two-tailed t tests for BW > CS: t(9) = 3.92, p = 0.004; CS > TC: t(9) = 8.31, p < 0.001; Fig. 3B). By contrast, in sighted participants the vOTC ROIs (defined visual consonants > FF) responded most to written CSs, followed by written words, and finally FFs (CS > VW > FF: F(2,28) = 8.32, p = 0.001; CS > VW: t(14) = 2.11, p = 0.05; VW > FF: t(14) = 2.04, p = 0.06). Note that the low responses to TC strings in the blind group may be driven in part by poor performance in this condition.
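The degrees of freedom reported above follow from a one-way repeated-measures design: with k = 3 conditions and n participants, the F test has (k − 1) and (k − 1)(n − 1) degrees of freedom, giving F(2,18) for 10 blind and F(2,28) for 15 sighted participants. The sketch below computes such an F statistic from per-participant condition means. The function name `rm_anova` and all data values are hypothetical, and the study's actual analysis software is not specified here.

```python
from statistics import mean


def rm_anova(data):
    """One-way repeated-measures ANOVA.

    `data` is a list of per-participant rows with one value per condition.
    Returns (F, df_condition, df_error).
    """
    n, k = len(data), len(data[0])
    grand = mean(v for row in data for v in row)
    cond_means = [mean(row[j] for row in data) for j in range(k)]
    subj_means = [mean(row) for row in data]
    # Partition the total sum of squares into condition, subject, and error.
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((v - grand) ** 2 for row in data for v in row)
    ss_error = ss_total - ss_cond - ss_subj
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f_stat = (ss_cond / df_cond) / (ss_error / df_error)
    return f_stat, df_cond, df_error


# Hypothetical responses (rows: participants; columns: three conditions).
toy = [[1, 2, 3], [2, 4, 3], [3, 3, 6]]
f_stat, df1, df2 = rm_anova(toy)
```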

For the auditory conditions, the response of the vOTC was similar across blind and sighted groups. The vOTC responded more to AWs than to ABs (AW > AB blind: t(9) = 8.34, p < 0.001; sighted: t(14) = 3.03, p = 0.009; Fig. 3B). In the blind group, the difference between BWs and TCs was larger than the difference between AWs and ABs [modality-by-lexicality interaction in 2 × 2 ANOVA comparing (BW vs TC) versus (AW vs AB): F(1,9) = 21.46, p = 0.001]. The modality-by-lexicality interaction was not significant in the sighted [interaction in 2 × 2 ANOVA comparing (CS vs FF) versus (AW vs AB): F(1,14) = 0.35, p = 0.6].
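In a fully within-subject 2 × 2 design, the interaction F(1, n − 1) is equal to the square of a one-sample t statistic computed on each participant's difference of differences. The sketch below illustrates this equivalence. The function names and the response values are hypothetical and are not data from the study.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values):
    """t statistic and degrees of freedom for the null hypothesis mean == 0."""
    n = len(values)
    return mean(values) / (stdev(values) / sqrt(n)), n - 1


def within_interaction(bw, tc, aw, ab):
    """Modality-by-lexicality interaction for paired per-participant responses.

    Returns (F, df1, df2), where F is the squared t statistic on the
    per-participant contrast (bw - tc) - (aw - ab).
    """
    diffs = [(w - c) - (a - b) for w, c, a, b in zip(bw, tc, aw, ab)]
    t, df = one_sample_t(diffs)
    return t * t, 1, df


# Hypothetical percent-signal-change values for four participants.
bw = [1.9, 2.3, 1.7, 2.1]
tc = [0.4, 0.6, 0.5, 0.3]
aw = [1.0, 1.2, 0.9, 1.1]
ab = [0.5, 0.6, 0.4, 0.7]
f_stat, df1, df2 = within_interaction(bw, tc, aw, ab)
```

With 10 participants the same computation yields F(1,9), and with 15 participants F(1,14), matching the degrees of freedom reported for the blind and sighted groups.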

The vOTC of blind but not sighted individuals is sensitive to grammatical structure

The vOTC of sighted participants did not respond to syntactic complexity of sentences in the auditory sentence comprehension task (Fig. 4; sighted: +Movement > −Movement sentences in ROIs defined CSs > FFs; t(14) = 0.58, p = 0.6). By contrast, in blind participants, the Braille-responsive vOTC region responded more to syntactically complex sentences than to less syntactically complex sentences (ROIs defined Braille CSs > TCs; +Movement > −Movement sentences: t(9) = 5.03, p = 0.001; group-by-condition interaction for the movement effect: F(1,23) = 5.39, p = 0.03). A similar pattern was observed when we restricted the ROIs to a smaller and more posterior vOTC mask (+Movement > −Movement; sighted: t(14) = 0.23, p = 0.8; blind: t(9) = 4.49, p = 0.002; group-by-condition interaction: F(1,23) = 3.78, p = 0.06) and when only examining the first three runs of data for the blind group (larger mask: +Movement > −Movement; blind: t(9) = 3.35, p = 0.009; group-by-condition interaction: F(1,23) = 3.76, p = 0.07; smaller posterior mask: +Movement > −Movement; sighted: t(14) = 0.23, p = 0.8; blind: t(9) = 7.18, p < 0.001; group-by-condition interaction: F(1,23) = 9.03, p = 0.006).
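For a 2 (group) × 2 (condition) mixed design, the group-by-condition interaction can be expressed as a pooled-variance two-sample t test on each participant's condition difference, with F = t² and n₁ + n₂ − 2 error degrees of freedom (10 + 15 − 2 = 23, matching F(1,23) above). The following sketch shows that computation. The function name and all data values are hypothetical; only the group sizes mirror the study.

```python
from math import sqrt
from statistics import mean, variance


def group_by_condition_f(diff_a, diff_b):
    """Interaction F for a 2 (group) x 2 (condition) mixed design.

    Inputs are per-participant difference scores (for example, +Movement
    minus -Movement) for each group. Returns (F, df1, df2), with F = t**2
    from a pooled-variance two-sample t test.
    """
    na, nb = len(diff_a), len(diff_b)
    df = na + nb - 2
    pooled_var = ((na - 1) * variance(diff_a) + (nb - 1) * variance(diff_b)) / df
    t = (mean(diff_a) - mean(diff_b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t * t, 1, df


# Hypothetical movement effects; group sizes mirror the study (10 and 15).
blind = [0.30, 0.42, 0.25, 0.38, 0.51, 0.29, 0.33, 0.47, 0.36, 0.40]
sighted = [0.02, -0.05, 0.10, 0.04, -0.01, 0.07, 0.00, 0.03,
           -0.04, 0.06, 0.01, 0.05, -0.02, 0.08, 0.02]
f_stat, df1, df2 = group_by_condition_f(blind, sighted)
```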

To verify that the results were not due to a failure of sighted subjects to show a syntactic complexity effect at all, we examined language activity in the IFG (Fig. 4). In both sighted and blind groups the word-responsive (written words > controls) IFG responded to syntactic complexity (+Movement > −Movement sentences; sighted: t(14) = 4.06, p = 0.001; blind: t(9) = 4.41, p = 0.002). There was no group by condition interaction in the IFG (F(1,23) = 0.4, p = 0.5).

Discussion

We find that the left vOTC of blind but not sighted individuals responds to the grammatical complexity of spoken sentences. In blind individuals, the very same occipitotemporal region that responds to Braille also responds to syntax. In contrast, the VWFA of sighted participants is insensitive to grammatical structure. These results suggest that blindness decreases the selectivity of the VWFA for orthography.

We also observed different responses to words and CSs across blind and sighted groups when they performed analogous Braille and visual print reading tasks. The VWFA of sighted subjects responded more to CSs than to words, whereas the vOTC of blind participants responded more to words than to CSs. Previous studies with sighted individuals found that the relative VWFA responses to letters, nonwords, and CSs are task-dependent. Although the VWFA responds more to whole words when presentation times are short and the task is relatively automatic (e.g., passive reading), larger or equal responses to consonants are observed during attentionally demanding tasks (Cohen et al., 2002; Vinckier et al., 2007; Bruno et al., 2008; Schurz et al., 2010). It has been suggested that stronger VWFA responses for CSs and nonwords are observed when these demand greater attention to orthographic information than words do (Cohen et al., 2008; Dehaene and Cohen, 2011). In the current study, both blind and sighted participants were less accurate at recognition memory with CSs compared with words, and this effect was even more pronounced in the blind group. Yet CSs relative to words produced larger responses only in the VWFA of sighted participants. This result is consistent with the idea that in blind individuals, no amount of attention to orthography can override a vOTC preference for high-level linguistic content, which is present in words but not in CSs (i.e., the blind vOTC will respond more to words regardless of task demands because words are linguistically richer). Alternatively, the differences in VWFA responses to written words and letter strings across blind and sighted groups could result from the different temporal dynamics of tactile as opposed to visual reading (Veispak et al., 2013). Such a difference could also account for larger vOTC activity overall during Braille reading. By contrast, differential responses of vOTC to spoken sentences across groups in an identical task are more clearly related to between-group selectivity differences.

The hypothesis that the vOTC of blind individuals responds to multiple levels of linguistic information is consistent with prior evidence that in this population, the visual cortices are sensitive to semantics and syntax (Röder et al., 2002; Burton et al., 2002, 2003; Amedi et al., 2003; Noppeney et al., 2003; Bedny et al., 2011, 2015; Watkins et al., 2012; Lane et al., 2015). Notably, the vOTC is not the only part of visual cortex in blind individuals that responds to language. The current study, as well as prior investigations, reports responses in V1, extrastriate, and lateral occipital cortices (Sadato et al., 1996; Burton et al., 2002, 2003; Röder et al., 2002; Amedi et al., 2003; Noppeney et al., 2003; Bedny et al., 2011; Lane et al., 2015). On the other hand, it is significant that within visual cortex, the vOTC constitutes a peak of language responsiveness across blind and sighted groups. The VWFA has strong anatomical connectivity with the frontotemporal language network (Yeatman et al., 2013; Epelbaum et al., 2008; Bouhali et al., 2014; Saygin et al., 2016). For sighted readers, this connectivity is suited to the demands of visual reading (i.e., enabling a link between visual symbols and language). In individuals who are blind, such connectivity may instead render the vOTC a gateway for linguistic information into the visual system.

The present results raise several important questions to be addressed in future research. To fully characterize the similarities and differences in the function of the VWFA across blind and sighted groups, it will be important to compare VWFA responses between blind and sighted individuals across a range of tasks and stimuli. Furthermore, a key outstanding question concerns the behavioral role of the vOTC in blind individuals. Previous studies suggest that the “visual” cortices are functionally relevant for nonvisual behaviors in blindness, including for higher cognitive tasks such as Braille reading and verb generation (Cohen et al., 1997; Amedi et al., 2004). Whether the vOTC becomes functionally relevant for syntactic processing specifically remains to be tested. Regardless of the outcomes of these future investigations, the present results illustrate that the function of the vOTC develops differently in the presence, as opposed to in the absence, of vision.

Future investigations are further needed to disentangle the influence of visual experience (even before literacy) on vOTC function from the effect of reading modality (i.e., visual vs tactile). In sighted adults, Braille training enhances responses to Braille in the VWFA, and TMS to the VWFA disrupts Braille reading (Debowska et al., 2016; Siuda-Krzywicka et al., 2016). However, the vOTC of blind children learning to read Braille, unlike that of sighted adults, has not received massive amounts of visual input before literacy acquisition. Furthermore, adult sighted learners of Braille have a reading-responsive area within the vOTC before Braille learning, unlike congenitally blind children, and are learning to read Braille at a later age. Any one of these factors could lead to different neural bases of Braille reading between blind and sighted individuals. Consistent with the possibility of distinct neural mechanisms, reading strategies and levels of proficiency differ substantially across late sighted learners and early blind learners of Braille (Siuda-Krzywicka et al., 2016). Future studies directly comparing Braille reading in blind and sighted individuals have the potential to provide insight into how learning-based plasticity is shaped by preceding experiences.

The current results highlight that much remains to be understood about the neural mechanisms that support orthographic processing in blind readers of Braille. One important question concerns the neural instantiation of abstract orthographic knowledge in blindness. Blind and sighted readers alike acquire modality-independent orthographic knowledge, such as knowledge of frequent letter combinations and spellings of irregular words (Rapp et al., 2001; Fischer-Baum and Englebretson, 2016). How such information is represented in blind individuals remains to be tested. One possibility is that in blindness, orthographic processes are supported by language-responsive regions that also represent grammatical and semantic information. This could include regions in the vOTC and other language-responsive visual areas, as well as parts of the frontotemporal language network.

Blind individuals may also develop regions that are specialized for the recognition of written Braille that are outside of general language processing regions and in a different location from the vOTC. Somatosensory cortex is one candidate region in which VWFA-like specialization for Braille may yet be found. Learning Braille improves tactile acuity for recognizing the types of perceptual features that are found in Braille characters (Stevens et al., 1996; Van Boven et al., 2000; Wong et al., 2011), and these behavioral improvements are associated with plasticity within the somatosensory cortices. For example, representation of the Braille reading finger is expanded in blind Braille readers, relative to sighted non-readers of Braille (Pascual-Leone and Torres, 1993; Sterr et al., 1999; Burton et al., 2004). FMRI and PET studies have observed responses to Braille in somatosensory and parietal cortices of blind individuals, in addition to responses in the visual cortices, including in the vOTC (Sadato et al., 2002; Debowska et al., 2016; Siuda-Krzywicka et al., 2016). However, unlike in the ventral visual stream, there are no known regions specialized for a particular category of stimuli in the somatosensory system. Rather, organization of the somatosensory cortex is based on maps of body parts or distinctions between types of tactile perception, such as texture versus shape perception (Dijkerman and de Haan, 2007). Whether knowledge about Braille is stored in general somatosensory areas responsible for sensing touch on the Braille reading fingers or in dedicated Braille-specific regions remains to be determined.

Beyond providing insights into the neural basis of reading, the present results speak more generally to the mechanisms of development and plasticity in the human cortex. A key outstanding question concerns how different aspects of innate physiology and experience interact during functional specialization. Previous investigations of cross-modal plasticity in blindness find examples of both drastic functional change (e.g., responses to language in visual cortex) and preserved function (e.g., recruitment of MT/MST during auditory motion processing in blindness; Saenz et al., 2008; Wolbers et al., 2011; Collignon et al., 2011). Here, we report a case where the canonical function of a region within the visual cortex is neither wholly changed nor wholly preserved in blindness. The vOTC performs language-related processing in both blind and sighted individuals, but is more specialized specifically for written letter- and word-recognition in the sighted. These findings suggest that the function of human cortical areas is flexible, rather than intrinsically fixed to a particular class of cognitive representations (e.g., shape) by area-intrinsic cytoarchitecture (Amedi et al., 2017; Bedny, 2017). We hypothesize that similar intrinsic connectivity to frontotemporal language networks in blind and sighted individuals at birth leads to the development of related but distinct functional profiles in the context of different experiences. The findings thus illustrate how intrinsic constraints interact with experience during cortical specialization.

Footnotes

This work was supported by a grant from the Johns Hopkins Science of Learning Institute, and NIH/NEI Grant R01 EY019924 to L.B.M. We thank all our participants and the Baltimore blind community for making this research possible, the Kennedy Krieger Institute for neuroimaging support, Lindsay Yazzolino for help in the design and piloting of this experiment, and Connor Lane for assistance with data collection and analysis.

The authors declare no competing financial interests.

References

- Amedi A, Malach R, Hendler T, Peled S, Zohary E (2001) Visuo-haptic object-related activation in the ventral visual pathway. Nat Neurosci 4:324–330. doi:10.1038/85201

- Amedi A, Raz N, Pianka P, Malach R, Zohary E (2003) Early “visual” cortex activation correlates with superior verbal memory performance in the blind. Nat Neurosci 6:758–766. doi:10.1038/nn1072

- Amedi A, Floel A, Knecht S, Zohary E, Cohen LG (2004) Transcranial magnetic stimulation of the occipital pole interferes with verbal processing in blind subjects. Nat Neurosci 7:1266–1270. doi:10.1038/nn1328

- Amedi A, Hofstetter S, Maidenbaum S, Heimler B (2017) Task selectivity as a comprehensive principle for brain organization. Trends Cogn Sci 21:307–310. doi:10.1016/j.tics.2017.03.007

- Baker CI, Liu J, Wald LL, Kwong KK, Benner T, Kanwisher N (2007) Visual word processing and experiential origins of functional selectivity in human extrastriate cortex. Proc Natl Acad Sci U S A 104:9087–9092. doi:10.1073/pnas.0703300104

- Bauer C, Yazzolino L, Hirsch G, Cattaneo Z, Vecchi T, Merabet LB (2015) Neural correlates associated with superior tactile symmetry perception in the early blind. Cortex 63:104–117. doi:10.1016/j.cortex.2014.08.003

- Bedny M, Pascual-Leone A, Dodell-Feder D, Fedorenko E, Saxe R (2011) Language processing in the occipital cortex of congenitally blind adults. Proc Natl Acad Sci U S A 108:4429–4434. doi:10.1073/pnas.1014818108

- Bedny M, Richardson H, Saxe R (2015) “Visual” cortex responds to spoken language in blind children. J Neurosci 35:11674–11681. doi:10.1523/JNEUROSCI.0634-15.2015

- Bedny M. (2017) Evidence from blindness for a cognitively pluripotent cortex. Trends Cogn Sci 21:637–648. doi:10.1016/j.tics.2017.06.003

- Behrmann M, Plaut DC (2013) Distributed circuits, not circumscribed centers, mediate visual recognition. Trends Cogn Sci 17:210–219. doi:10.1016/j.tics.2013.03.007

- Bouhali F, de Schotten MT, Pinel P, Poupon C, Mangin JF, Dehaene S, Cohen L (2014) Anatomical connections of the visual word form area. J Neurosci 34:15402–15414. doi:10.1523/JNEUROSCI.4918-13.2014

- Bruno JL, Zumberge A, Manis FR, Lu ZL, Goldman JG (2008) Sensitivity to orthographic familiarity in the occipito-temporal region. Neuroimage 39:1988–2001. doi:10.1016/j.neuroimage.2007.10.044

- Büchel C, Price C, Friston K (1998) A multimodal language region in the ventral visual pathway. Nature 394:274–277. doi:10.1038/28389

- Burton H, Snyder AZ, Conturo TE, Akbudak E, Ollinger JM, Raichle ME (2002) Adaptive changes in early and late blind: a fMRI study of Braille reading. J Neurophysiol 87:589–607. doi:10.1152/jn.00285.2001

- Burton H, Diamond JB, McDermott KB (2003) Dissociating cortical regions activated by semantic and phonological tasks: a FMRI study in blind and sighted people. J Neurophysiol 90:1965–1982. doi:10.1152/jn.00279.2003

- Burton H, Sinclair RJ, McLaren DG (2004) Cortical activity to vibrotactile stimulation: an fMRI study in blind and sighted individuals. Hum Brain Mapp 23:210–228. doi:10.1002/hbm.20064

- Cantlon JF, Pinel P, Dehaene S, Pelphrey KA (2011) Cortical representations of symbols, objects, and faces are pruned back during early childhood. Cereb Cortex 21:191–199. doi:10.1093/cercor/bhq078

- Chomsky N. (1977) On WH-movement. In: Formal syntax (Culicover PW, Wasow T, Akmajian A, eds), pp 71–132. New York: Academic.

- Cohen LG, Celnik P, Pascual-Leone A, Corwell B, Faiz L, Dambrosia J, Honda M, Sadato N, Gerloff C, Catalá MD, Hallett M (1997) Functional relevance of cross-modal plasticity in blind humans. Nature 389:180–183. doi:10.1038/38278

- Cohen L, Dehaene S, Naccache L, Lehéricy S, Dehaene-Lambertz G, Hénaff MA, Michel F (2000) The visual word form area: spatial and temporal characterization of an initial stage of reading in normal subjects and posterior split-brain patients. Brain 123:291–307. doi:10.1093/brain/123.2.291

- Cohen L, Lehéricy S, Chochon F, Lemer C, Rivaud S, Dehaene S (2002) Language specific tuning of visual cortex? Functional properties of the visual word form area. Brain 125:1054–1069. doi:10.1093/brain/awf094

- Cohen L, Dehaene S, Vinckier F, Jobert A, Montavont A (2008) Reading normal and degraded words: contribution of the dorsal and ventral visual pathways. Neuroimage 40:353–366. doi:10.1016/j.neuroimage.2007.11.036

- Collignon O, Vandewalle G, Voss P, Albouy G, Charbonneau G, Lassonde M, Lepore F (2011) Functional specialization for auditory–spatial processing in the occipital cortex of congenitally blind humans. Proc Natl Acad Sci U S A 108:4435–4440. doi:10.1073/pnas.1013928108

- Dale AM, Fischl B, Sereno MI (1999) Cortical surface-based analysis: I. Segmentation and surface reconstruction. Neuroimage 9:179–194. doi:10.1006/nimg.1998.0395

- Debowska W, Wolak T, Nowicka A, Kozak A, Szwed M, Kossut M (2016) Functional and structural neuroplasticity induced by short-term tactile training based on Braille reading. Front Neurosci 10:460. doi:10.3389/fnins.2016.00460

- Dehaene S, Cohen L (2011) The unique role of the visual word form area in reading. Trends Cogn Sci 15:254–262. doi:10.1016/j.tics.2011.04.003

- Dehaene S, Dehaene-Lambertz G (2016) Is the brain prewired for letters? Nat Neurosci 19:1192–1193. doi:10.1038/nn.4369

- Dehaene S, Pegado F, Braga LW, Ventura P, Nunes Filho G, Jobert A, Dehaene-Lambertz G, Kolinsky R, Morais J, Cohen L (2010) How learning to read changes the cortical networks for vision and language. Science 330:1359–1364. doi:10.1126/science.1194140

- Dijkerman HC, de Haan EH (2007) Somatosensory processes subserving perception and action. Behav Brain Sci 30:189–201; discussion 201–239. doi:10.1017/S0140525X07001392

- Epelbaum S, Pinel P, Gaillard R, Delmaire C, Perrin M, Dupont S, Dehaene S, Cohen L (2008) Pure alexia as a disconnection syndrome: new diffusion imaging evidence for an old concept. Cortex 44:962–974. doi:10.1016/j.cortex.2008.05.003

- Fedorenko E, Behr MK, Kanwisher N (2011) Functional specificity for high-level linguistic processing in the human brain. Proc Natl Acad Sci U S A 108:16428–16433. doi:10.1073/pnas.1112937108

- Fischer-Baum S, Englebretson R (2016) Orthographic units in the absence of visual processing: evidence from sublexical structure in Braille. Cognition 153:161–174. doi:10.1016/j.cognition.2016.03.021

- Glasser MF, Sotiropoulos SN, Wilson JA, Coalson TS, Fischl B, Andersson JL, Xu J, Jbabdi S, Webster M, Polimeni JR, Van Essen DC, Jenkinson M (2013) The minimal preprocessing pipelines for the Human Connectome Project. Neuroimage 80:105–124. doi:10.1016/j.neuroimage.2013.04.127

- Glezer LS, Riesenhuber M (2013) Individual variability in location impacts orthographic selectivity in the “visual word form area”. J Neurosci 33:11221–11226. doi:10.1523/JNEUROSCI.5002-12.2013

- Hannagan T, Amedi A, Cohen L, Dehaene-Lambertz G, Dehaene S (2015) Origins of the specialization for letters and numbers in ventral occipitotemporal cortex. Trends Cogn Sci 19:374–382. doi:10.1016/j.tics.2015.05.006

- Jobard G, Crivello F, Tzourio-Mazoyer N (2003) Evaluation of the dual route theory of reading: a metanalysis of 35 neuroimaging studies. Neuroimage 20:693–712. doi:10.1016/S1053-8119(03)00343-4

- King J, Just MA (1991) Individual differences in syntactic processing: the role of working memory. J Mem Lang 30:580–602. doi:10.1016/0749-596X(91)90027-H

- Krafnick AJ, Tan LH, Flowers DL, Luetje MM, Napoliello EM, Siok WT, Perfetti C, Eden GF (2016) Chinese character and English word processing in children's ventral occipitotemporal cortex: fMRI evidence for script invariance. Neuroimage 133:302–312. doi:10.1016/j.neuroimage.2016.03.021

- Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI (2009) Circular analysis in systems neuroscience: the dangers of double dipping. Nat Neurosci 12:535–540. doi:10.1038/nn.2303

- Lane C, Kanjlia S, Omaki A, Bedny M (2015) “Visual” cortex of congenitally blind adults responds to syntactic movement. J Neurosci 35:12859–12868. doi:10.1523/JNEUROSCI.1256-15.2015

- Medler DA, Binder JR (2005) MCWord: an on-line orthographic database of the English language. http://www.neuro.mcw.edu/mcword/. Retrieved

- Noppeney U, Friston KJ, Price CJ (2003) Effects of visual deprivation on the organization of the semantic system. Brain 126:1620–1627. doi:10.1093/brain/awg152

- Pascual-Leone A, Torres F (1993) Plasticity of the sensorimotor cortex representation of the reading finger in Braille readers. Brain 116:39–52. doi:10.1093/brain/116.1.39

- Rapp B, Folk JR, Tainturier MJ (2001) Word reading. In: The handbook of cognitive neuropsychology: what deficits reveal about the human mind, pp 233–262. Philadelphia, PA: Psychology.

- Reich L, Szwed M, Cohen L, Amedi A (2011) A ventral visual stream reading center independent of visual experience. Curr Biol 21:363–368. doi:10.1016/j.cub.2011.01.040

- Röder B, Stock O, Bien S, Neville H, Rösler F (2002) Speech processing activates visual cortex in congenitally blind humans. Eur J Neurosci 16:930–936. doi:10.1046/j.1460-9568.2002.02147.x

- Sadato N, Pascual-Leone A, Grafman J, Ibañez V, Deiber MP, Dold G, Hallett M (1996) Activation of the primary visual cortex by Braille reading in blind subjects. Nature 380:526–528. doi:10.1038/380526a0

- Sadato N, Okada T, Honda M, Yonekura Y (2002) Critical period for cross-modal plasticity in blind humans: a functional MRI study. Neuroimage 16:389–400. doi:10.1006/nimg.2002.1111

- Saenz M, Lewis LB, Huth AG, Fine I, Koch C (2008) Visual motion area MT+/V5 responds to auditory motion in human sight-recovery subjects. J Neurosci 28:5141–5148. doi:10.1523/JNEUROSCI.0803-08.2008

- Saygin ZM, Osher DE, Norton ES, Youssoufian DA, Beach SD, Feather J, Gaab N, Gabrieli JD, Kanwisher N (2016) Connectivity precedes function in the development of the visual word form area. Nat Neurosci 19:1250–1255. doi:10.1038/nn.4354

- Schurz M, Sturm D, Richlan F, Kronbichler M, Ladurner G, Wimmer H (2010) A dual-route perspective on brain activation in response to visual words: evidence for a length by lexicality interaction in the visual word form area (VWFA). Neuroimage 49:2649–2661. doi:10.1016/j.neuroimage.2009.10.082

- Siuda-Krzywicka K, Bola Ł, Paplińska M, Sumera E, Jednoróg K, Marchewka A, Śliwińska MW, Amedi A, Szwed M (2016) Massive cortical reorganization in sighted Braille readers. eLife 5:e10762. doi:10.7554/eLife.10762

- Sterr A, Müller M, Elbert T, Rockstroh B, Taub E (1999) Development of cortical reorganization in the somatosensory cortex of adult Braille students. Electroencephalogr Clin Neurophysiol Suppl 49:292–298.

- Stevens JC, Foulke E, Patterson MQ (1996) Tactile acuity, aging, and Braille reading in long-term blindness. J Exp Psychol 2:91–106. doi:10.1037/1076-898X.2.2.91

- Striem-Amit E, Cohen L, Dehaene S, Amedi A (2012) Reading with sounds: sensory substitution selectively activates the visual word form area in the blind. Neuron 76:640–652. doi:10.1016/j.neuron.2012.08.026

- Szwed M, Dehaene S, Kleinschmidt A, Eger E, Valabrègue R, Amadon A, Cohen L (2011) Specialization for written words over objects in the visual cortex. Neuroimage 56:330–344. doi:10.1016/j.neuroimage.2011.01.073

- Van Boven RW, Hamilton RH, Kauffman T, Keenan JP, Pascual-Leone A (2000) Tactile spatial resolution in blind Braille readers. Neurology 54:2230–2236. doi:10.1212/WNL.54.12.2230

- Veispak A, Boets B, Ghesquière P (2013) Differential cognitive and perceptual correlates of print reading versus braille reading. Res Dev Disabil 34:372–385. doi:10.1016/j.ridd.2012.08.012

- Vinckier F, Dehaene S, Jobert A, Dubus JP, Sigman M, Cohen L (2007) Hierarchical coding of letter strings in the ventral stream: dissecting the inner organization of the visual word-form system. Neuron 55:143–156. doi:10.1016/j.neuron.2007.05.031

- Watkins KE, Cowey A, Alexander I, Filippini N, Kennedy JM, Smith SM, Ragge N, Bridge H (2012) Language networks in anophthalmia: maintained hierarchy of processing in “visual” cortex. Brain 135:1566–1577. doi:10.1093/brain/aws067

- Wolbers T, Zahorik P, Giudice NA (2011) Decoding the direction of auditory motion in blind humans. Neuroimage 56:681–687. doi:10.1016/j.neuroimage.2010.04.266

- Wong M, Gnanakumaran V, Goldreich D (2011) Tactile spatial acuity enhancement in blindness: evidence for experience-dependent mechanisms. J Neurosci 31:7028–7037. doi:10.1523/JNEUROSCI.6461-10.2011

- Yeatman JD, Rauschecker AM, Wandell BA (2013) Anatomy of the visual word form area: adjacent cortical circuits and long-range white matter connections. Brain Lang 125:146–155. doi:10.1016/j.bandl.2012.04.010