Abstract

Objective. To develop a comprehensive instrument specific to student pharmacist-patient communication skills, and to determine face, content, construct, concurrent, and predictive validity and reliability of the instrument.

Methods. A multi-step approach was used to create and validate an instrument, including the use of external experts for face and content validity, students for construct validity, comparisons to other rubrics for concurrent validity, comparisons to other coursework for predictive validity, and extensive reliability and inter-rater reliability testing with trained faculty assessors.

Results. The Patient-centered Communication Tools (PaCT) instrument achieved face and content validity and performed well on multiple correlation tests, with significant findings in reliability testing and in comparison with an alternate rubric.

Conclusion. PaCT is a useful instrument for assessing student pharmacist communication skills with patients.

Keywords: communication tools, provider-patient relationship, patient-centered, pharmacist-patient instrument

INTRODUCTION

Effective communication requires active participation by patients and health care providers to ensure that messages are received and interpreted accurately by all parties. This is especially true for pharmacists, as reflected in a World Health Organization (WHO) report that identifies “communicator” as one of the seven roles of the pharmacist.1 The Accreditation Council for Pharmacy Education (ACPE) Standards 2016 for Doctor of Pharmacy degree programs explicitly define expectations for communication.2 Standard 3 (Approach to Practice and Care), Key Element 3.6, states that “graduates must be able to effectively communicate verbally and nonverbally when interacting with individuals, groups, and organizations.” Additionally, Appendix 1 of the Standards describes professional communication as a required element of the didactic curriculum.2 The educational outcomes of the Center for the Advancement of Pharmacy Education (CAPE) are recognized by schools and colleges of pharmacy, the American Association of Colleges of Pharmacy (AACP), and ACPE as the foundational driver for curricular design, mapping, and setting program expectations. The updated CAPE outcomes released in 2013 address communication specifically in Domain 3 (3.6 Communicator) and indirectly in the description of collaboration (3.4 Collaborator).3

Studies have shown that pharmacist communication skills can be improved with education and training.4,5 A recent review of communications training and assessment in pharmacy education by Wallman and colleagues found that most education and training occurs through patient-focused communication activities, such as learning interviewing techniques, patient counseling, or public health promotion.6 Several published articles describe objective assessment of student pharmacists’ oral communication with a patient, such as structured examinations, pre/post evaluations, and expert or professor assessment of skills. Other articles describe subjective assessment of the student through methods such as self-assessment, course evaluation questionnaires, and measures of student satisfaction.7-28 Most of these studies used simulated or standardized patients (SPs) both as an educational tool and as an assessment method. In general, much of the published research describes innovative additions to courses; however, there has been no systematic assessment of whether any of these activities increase learning or, when multiple activities are used, what their optimal order or combination should be. Wallman and colleagues also note that inconsistencies in assessment are due to a lack of skilled evaluators. This highlights the need for further research to develop and evaluate more accurate assessment methods, as well as the importance of training evaluators. Additionally, although many communication tools have been developed and validated for other health professions such as nursing and medicine, these tools are generally specific to their discipline and do not contain the criteria needed to fully assess a student pharmacist on all components of a pharmacist-patient encounter.29,30

Prior to development of our instrument, a validated communication framework for student pharmacists had not yet been published. In the absence of a pharmacist-based instrument for students, the faculty at St. Louis College of Pharmacy previously used the Four Habits Model (FHM), which is a framework designed for use by physicians. The FHM contains 23 aspects of clinician communication behaviors organized into four “habits.”31-33 The FHM was chosen because of its significant emphasis on relationship-building behaviors within a patient-provider interaction. This framework was used to teach and assess student pharmacists’ communication abilities with SPs from 2009-2012. While useful for general communication behaviors, our experience with the FHM highlighted the need for a validated instrument specifically designed to teach and assess student pharmacists in a pharmacist-patient encounter since many of the criteria within the FHM relate to skills specific to physician scope of practice.34

Based on our experience with the FHM and the lack of a published pharmacy-specific framework, the goals of this study were to develop a comprehensive instrument specific to student pharmacist-patient communication skills and to determine the face, content, construct, concurrent, and predictive validity and the reliability of the instrument. This study was part of a larger project to develop, implement, and evaluate curricular changes to improve the health literacy-related abilities of student pharmacists at St. Louis College of Pharmacy. This project was funded in part by the Missouri Foundation for Health.

METHODS

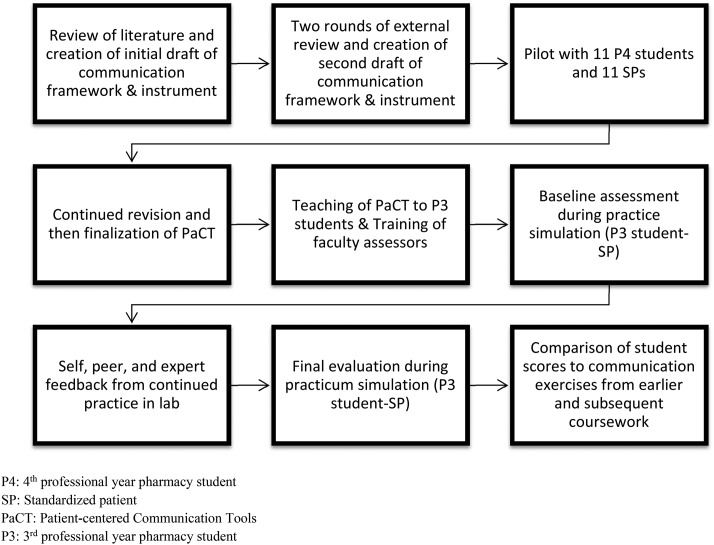

A multi-step process was used to develop and validate a new communication framework and instrument for use with student pharmacists (Figure 1). This study was approved by the St. Louis College of Pharmacy Institutional Review Board, and informed consent was obtained in advance from all participants. All students (n=216) were invited to participate in the study at the beginning of the third professional year (P3) and were informed that consent would also permit researchers to review data from their other coursework.

Figure 1.

Study Methods.

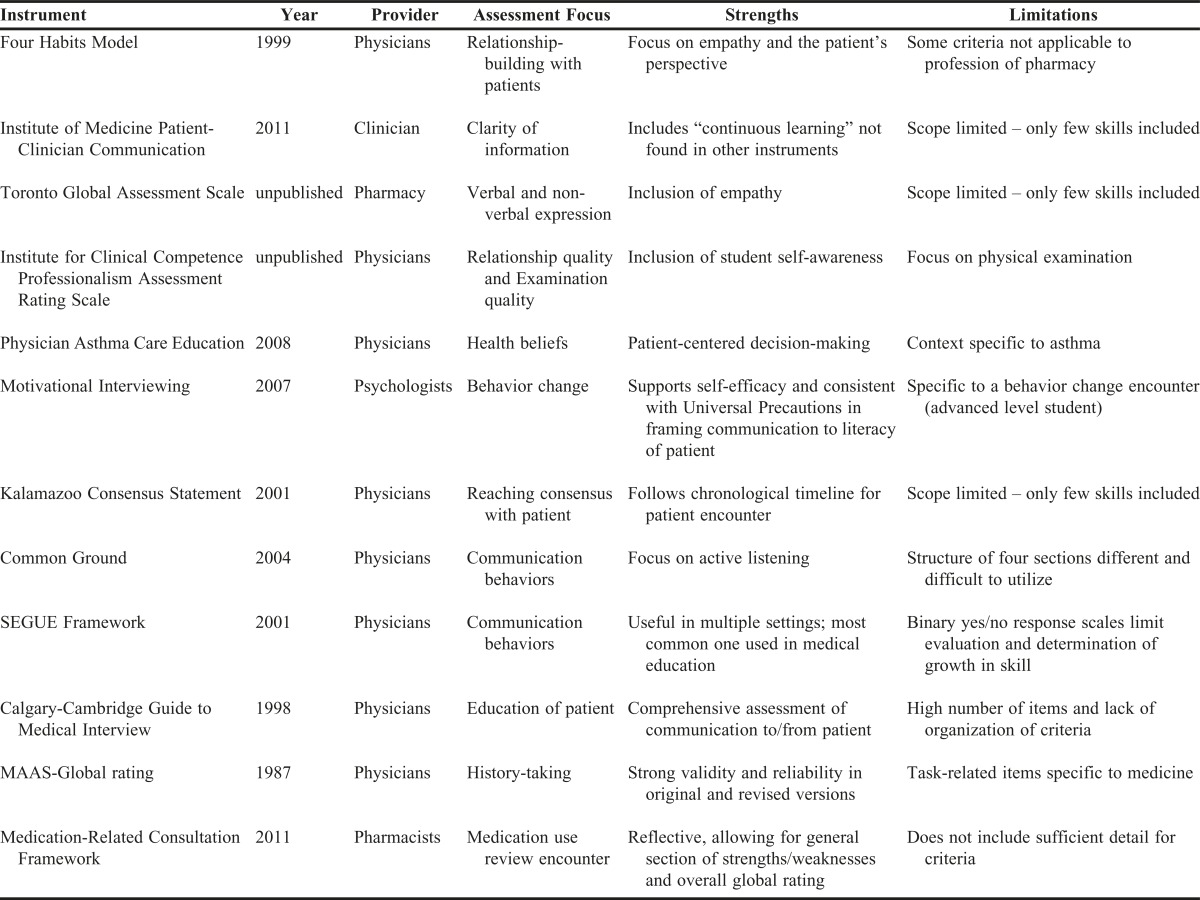

Prior to development of PaCT in 2012, a comprehensive review of the literature identified eight clinician-patient communication instruments that were most closely suited to the education and assessment of student pharmacists (Table 1).31-33,35-41 Other communication tools were also reviewed, including the Valid Assessment of Learning in Undergraduate Education (VALUE) rubric for communication, the Developing a Curriculum (DACUM) document reflecting communication outcomes, and a previous self-developed instrument.42,43 Tools reviewed but not considered in depth for development of PaCT included the SEGUE Framework, the Calgary-Cambridge Guide to Medical Interview, and the MAAS-Global rating list for doctor-patient communication skills.44-46 The SEGUE Framework is the most commonly used validated communication instrument in medical education; however, its binary “yes/no” response scale does not indicate a learner’s degree of effectiveness on each criterion and therefore could not effectively measure differences or growth in skill.44 The Calgary-Cambridge Guide to Medical Interview is another comprehensive communication instrument; however, its high number of items (71) and lack of organization of the criteria made it cumbersome to use.45 The MAAS-Global rating list, while much more manageable, has several task-related items specific to medicine, such as diagnostic plans and evaluation, and was deemed less relevant to pharmacy.46

Table 1.

Clinician-Patient Communication Instruments

Faculty members (n=4) experienced in teaching clinical communication and health literacy compared each instrument with the FHM. Each instrument was evaluated to identify items that captured distinct and important aspects of communication for pharmacists. Items considered both distinct and important by consensus of all pharmacy faculty associated with this research were added to the draft of the new framework. When more than one instrument contained items about a similar concept, the faculty compared and contrasted the wording of those items and developed a new item that integrated the best features of each. The authors considered using a rating scale versus a descriptive rubric and elected to use a five-point rating scale to keep the instrument shorter for faculty and student use.

Two rounds of feedback on the draft framework and instrument were incorporated from an external, interdisciplinary panel of 10 communication and health literacy experts. Seventeen experts were identified by searching the pharmacy literature for well-published authors (of research and/or books) in the area of communication skills in the United States and Canada, and were invited to participate. The authors of the FHM were also invited to review PaCT as experts. Ten experts, including one of the authors of the FHM, accepted the invitation and participated in both rounds of review.

The first round of review was done by email and included a series of questions about the draft framework, such as: What criteria are missing? What criteria are unnecessary? Are the criteria clear, and if not, which ones are unclear? Are the criteria well organized within the categories defined? Do you feel this list of criteria is an improvement?

For the instrument, experts were asked to comment on its practicality; its format (whether they preferred the general rating scale used in the draft or descriptors of each criterion’s behavior, as in a traditional rubric); the descriptions and category titles of the rating scale; and whether they would use it to assess a student-patient encounter (if not, why not; if so, for which type of encounter). Lastly, they were asked to respond to three general questions: Do these criteria actually measure communication and relationship-building characteristics of a student pharmacist-patient encounter? What did you like most about the criteria and instrument? What specific changes would you suggest to the criteria and instrument?

During the first round of review, feedback was collected from all expert reviewers. The authors discussed, compiled, and incorporated all feedback into a second draft of the framework and instrument. A summary of the feedback, along with the authors’ responses, was distributed to the expert panel together with the revised framework and instrument. The second round of expert feedback took place one month later in a live meeting, in which experts were asked similar questions about the updated framework and instrument. Qualitative data provided by each expert in each round were grouped into themes and addressed by the authors to further refine the instrument.

The framework and instrument were initially piloted with 11 student pharmacists in the fourth professional year (P4). The pilot was conducted in a simulation center with multiple exam rooms at a neighboring medical school. Students were asked to interview an SP with asthma and to educate the patient about a new inhaler within 30 minutes. Eleven SPs were used because the standardized patient simulation center had 11 exam rooms and only 11 student pharmacists could be recruited for the pilot. All 11 students had been instructed on pharmacist-patient communication earlier in their training, and their performance had been evaluated the year before using the FHM. Following the interview, the 11 SPs were asked to review the instrument and give their opinion of it from the patient’s perspective. All SPs were asked to comment on criteria that measured communication and relationship-building, as well as on criteria that were unnecessary. Additionally, SPs were asked to rate how accurately PaCT described their expectations of a pharmacist, using a Likert scale from 1=least accurate list of criteria to 5=most accurate list of criteria. Finally, the volunteer student pharmacists were also asked to provide feedback on the criteria and instrument. Qualitative analysis of these data provided additional information for further refinement of the instrument.

All P3 students were taught the framework in a two-hour interactive lecture in the Advanced Pharmacy Practice (APP) course. For this session, the class was divided into five sections, and each section was assigned one of the five Tools. Students were instructed to review the criteria within their assigned Tool and work with their peers to identify good examples of each criterion. Afterward, a pair of students from each section was invited to the front of the class to act out a scenario using good examples of the criteria within their Tool, followed by a class-wide discussion. Students had multiple opportunities to practice during student role-play activities in the associated laboratory sessions and received formative self- and peer feedback using the instrument. Students received formal feedback from expert faculty using the instrument during a structured 30-minute student pharmacist-SP simulation early in the semester (pre). At the end of the semester, student performances were evaluated for the final practicum and grade (post) in another structured 30-minute student pharmacist-SP simulation.

Prior to the first structured simulation, 10 faculty assessors were trained to provide feedback on and evaluate student performances using the new instrument during a two-hour session in which all faculty assessors observed and rated one student video from a prior semester. Faculty assessors were instructed to select one of the five Likert scale options (half-increments were not permitted) and to provide justification or comments supporting their assessments. Scores and justifications for each item were discussed as a large group, and scores were calibrated to within one point of one another on the Likert scale until all faculty assessors understood each item and the examples of behaviors expected for each. Faculty assessors were also asked for open-ended qualitative feedback about the content of the framework and the ease of use of the instrument. Faculty assessors who completed the training then assessed videos of student pharmacist performances during the early simulation (pre) and final practicum (post).
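The calibration rule described above can be expressed as a simple per-item check. The sketch below is illustrative only and is not the procedure used in the study, where calibration was achieved through group discussion. It assumes ratings are coded 1 (unsatisfactory) through 5 (proficient), interprets “within one point” as the highest and lowest assessor ratings for an item differing by at most one, and uses hypothetical item labels and ratings.

```python
from typing import Dict, List


def items_needing_discussion(
    ratings_by_item: Dict[str, List[int]], tolerance: int = 1
) -> List[str]:
    """Return items whose assessor ratings span more than `tolerance` points.

    Assumption (not from the study): ratings are coded 1-5, and an item is
    "calibrated" when max(rating) - min(rating) <= tolerance across assessors.
    """
    flagged = []
    for item, ratings in ratings_by_item.items():
        if not ratings:
            raise ValueError(f"no ratings recorded for item {item}")
        if max(ratings) - min(ratings) > tolerance:
            flagged.append(item)
    return flagged


# Hypothetical ratings from three assessors for two items of a training video.
training_ratings = {
    "A1": [4, 5, 4],  # spread of 1 point: calibrated
    "B2": [2, 4, 3],  # spread of 2 points: discuss and re-rate
}
print(items_needing_discussion(training_ratings))  # ['B2']
```

In a training session, flagged items would be the ones to revisit as a group before assessors rate student videos independently.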

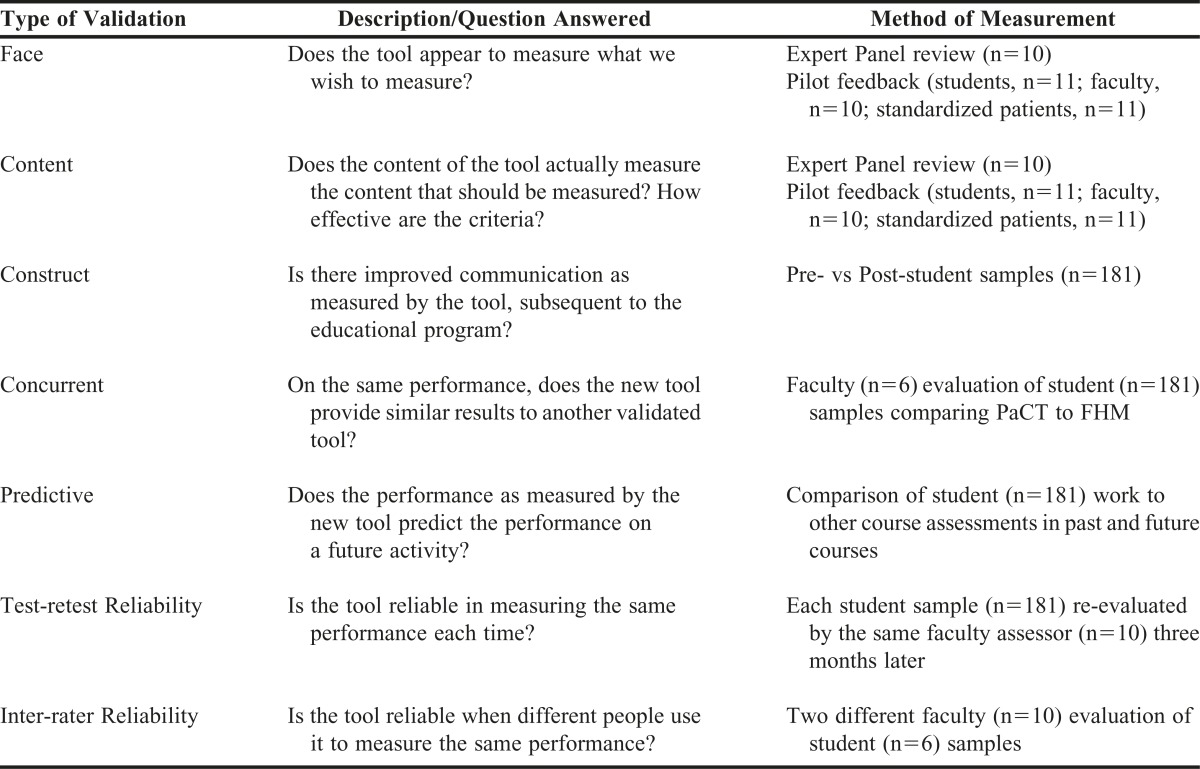

Methods used to validate the instrument are outlined in Table 2. Face and content validation methods are described above as part of instrument revision and testing. To assess construct validity, faculty assessments of pre- and post-student performances were compared. To assess concurrent validity (tool sensitivity/specificity), the same student performances were scored by six faculty assessors trained in using both PaCT and the FHM, and the scores were compared.

Table 2.

Multi-step Validation Process

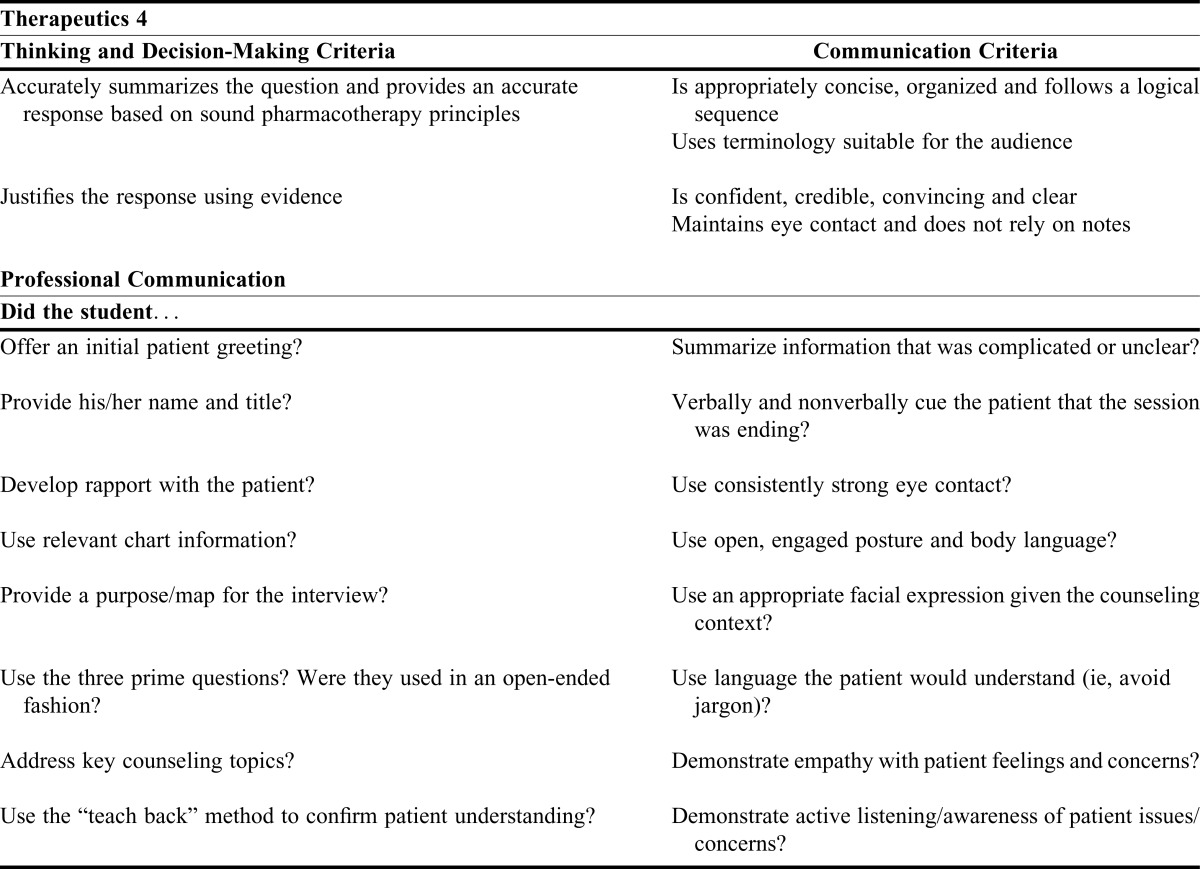

To determine predictive validity, students’ PaCT scores were compared with the same students’ scores, as they progressed through the curriculum, in two other courses that used different communication assessment forms. Professional year 1 (P1) students performed a 10-minute patient counseling activity in Professional Communication (PC), a semester-long class with sections of 20 students taught by four non-pharmacist communication faculty members (data from three of the four faculty members were available for this research). P3 students conducted a 30-minute patient encounter in APP, scored using PaCT as described earlier. Later in the P3 year, students completed a verbal communication exercise in Therapeutics 4 (T4) in which they had 10 minutes to research a question using in-class materials and prepare a response, and a maximum of five minutes to deliver their answer. Their responses were presented to faculty members, some of whom were involved in this study. Non-validated, internally developed assessment forms were used for the PC and T4 exercises (Table 3).

Table 3.

Criteria for Therapeutics 4 (T4) and Professional Communication (PC) Activities

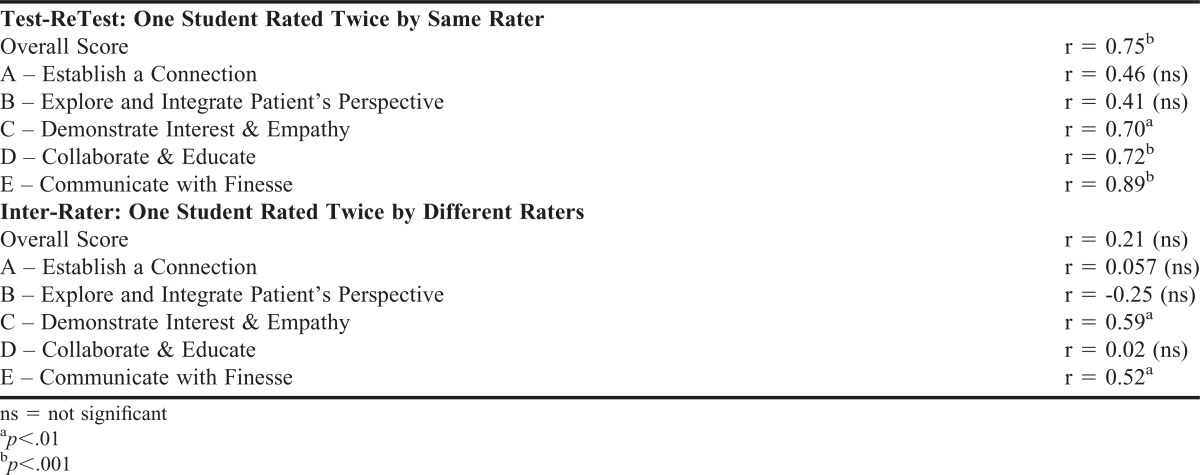

Two methods were used to evaluate reliability in a total of nine student samples: test-retest reliability, in which the same faculty assessor rated a video and re-rated it three months later, and inter-rater reliability, in which pairs of faculty assessors rated the same video for the first time.

In the absence of a standard statistical approach to testing reliability, and given that faculty calibration was intended to yield consistency rather than absolute consensus, the Pearson correlation coefficient was used throughout the project, calculated using IBM SPSS Statistics (Version 20, 2011, Chicago, IL).47,48 Pearson’s correlation was considered appropriate because, although faculty assessors had only five categories to select from, composite total scores were used, making the data behave more like interval data. In addition, the data were normally distributed. Standard guidelines for interpreting Pearson’s correlation coefficient are: 0.1-0.3, small association; 0.3-0.5, medium association; and 0.5-1.0, large association. These general cutoffs were used to determine the strength of correlation.
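To illustrate the analysis described above, the sketch below computes Pearson’s r from paired scores (for example, a first rating and a re-rating of the same videos) and labels its magnitude using the stated cutoffs. It is a minimal Python sketch, not the SPSS procedure used in the study, and it does not compute p values. The score lists are hypothetical. Because the stated ranges overlap at their endpoints, assigning a value of exactly 0.3 or 0.5 to the higher category is an assumption, as is the “negligible” label for |r| below 0.1.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient for paired scores."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squares about the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant scores")
    return sxy / math.sqrt(sxx * syy)


def association_strength(r: float) -> str:
    """Label |r| with the small/medium/large cutoffs given in the text.

    Assumptions: boundary values go to the higher category, and
    |r| < 0.1 is labeled "negligible" (the text gives no label for it).
    """
    magnitude = abs(r)
    if magnitude >= 0.5:
        return "large"
    if magnitude >= 0.3:
        return "medium"
    if magnitude >= 0.1:
        return "small"
    return "negligible"


# Hypothetical total scores (%) for four videos, rated and later re-rated.
first_rating = [60, 70, 80, 90]
second_rating = [70, 60, 90, 80]
r = pearson_r(first_rating, second_rating)
print(round(r, 2), association_strength(r))  # 0.6 large
```

Under these cutoffs, the correlations of 0.71 and 0.75 reported in the Results fall in the large range, and 0.18 falls in the small range.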

RESULTS

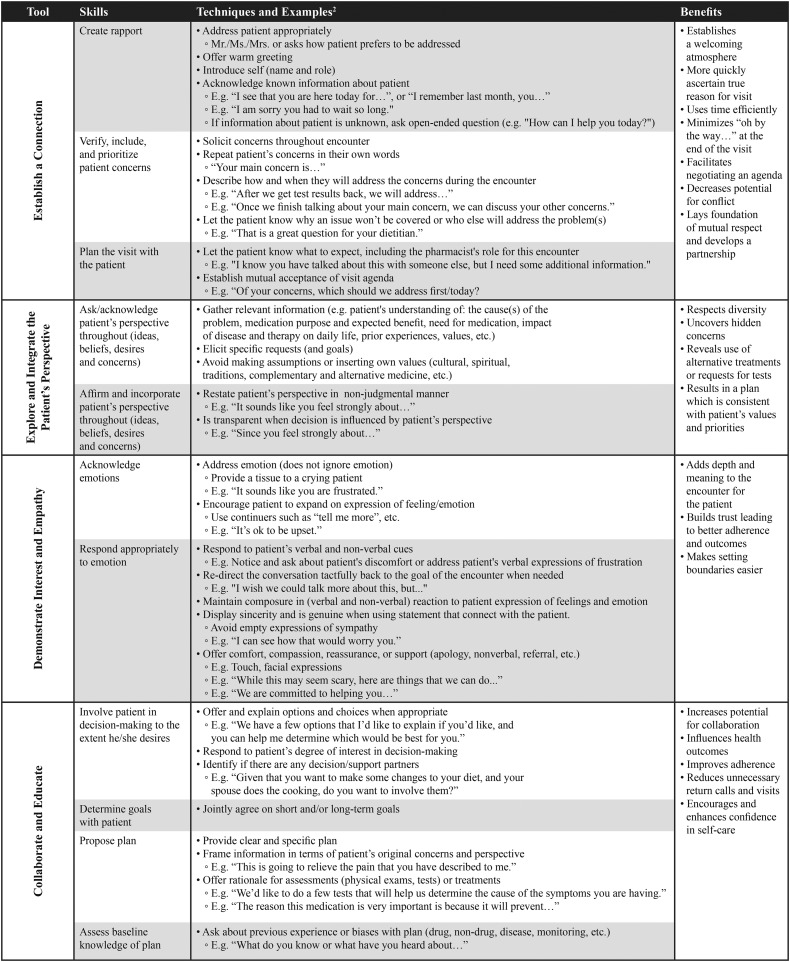

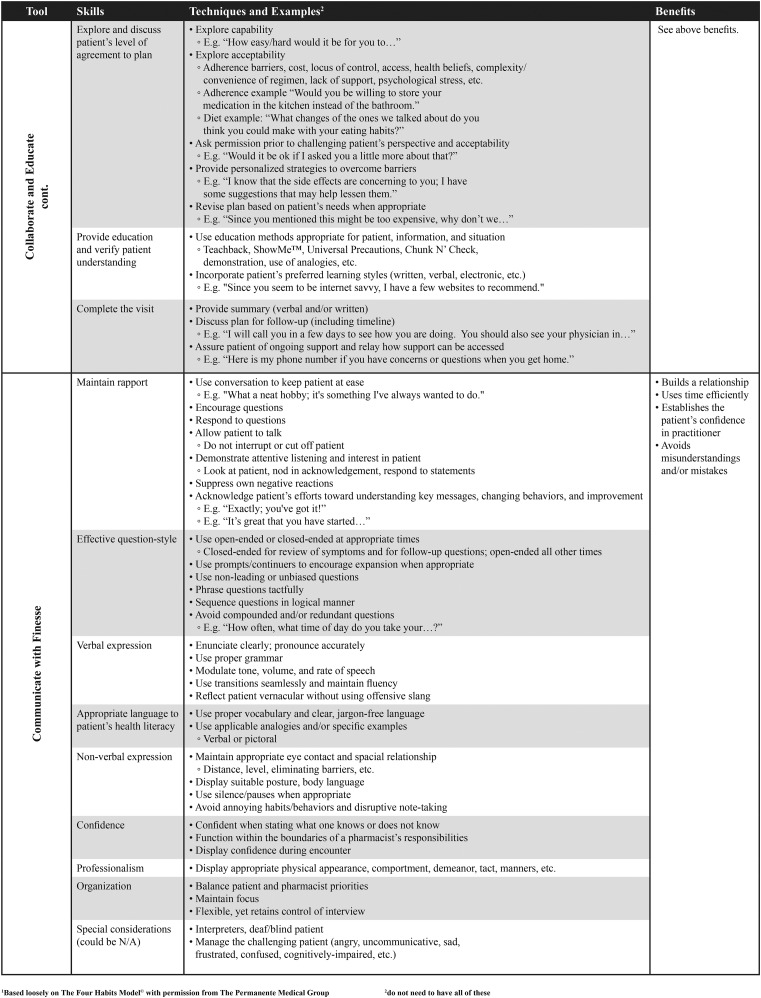

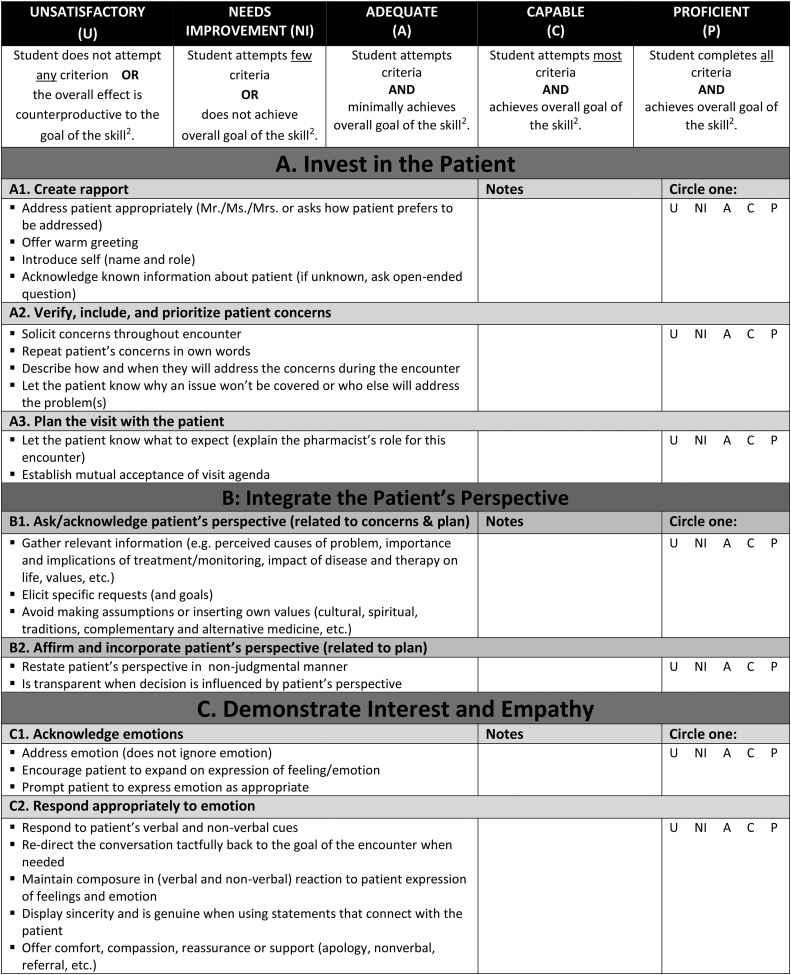

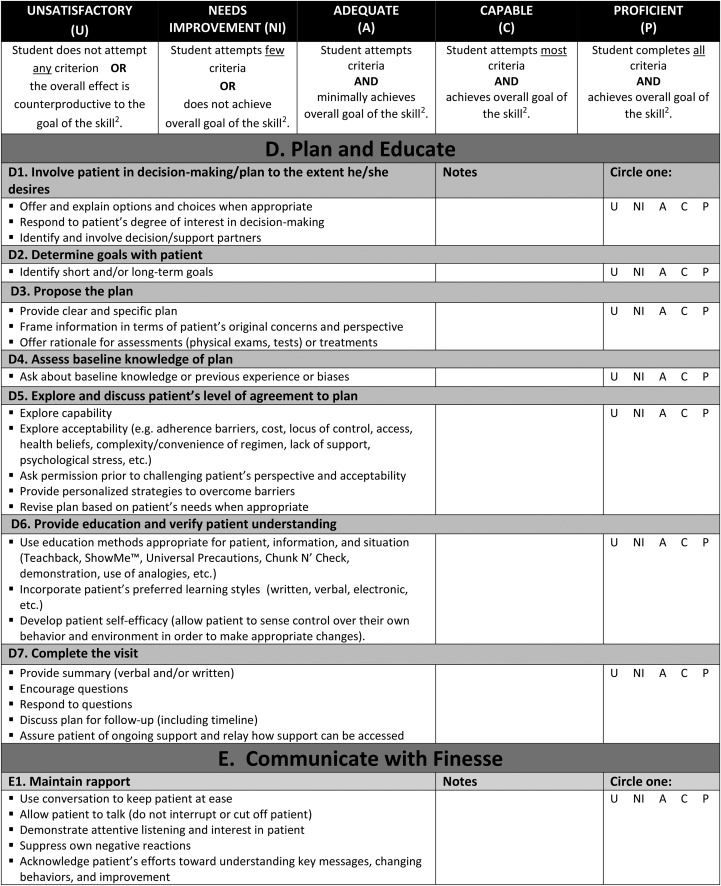

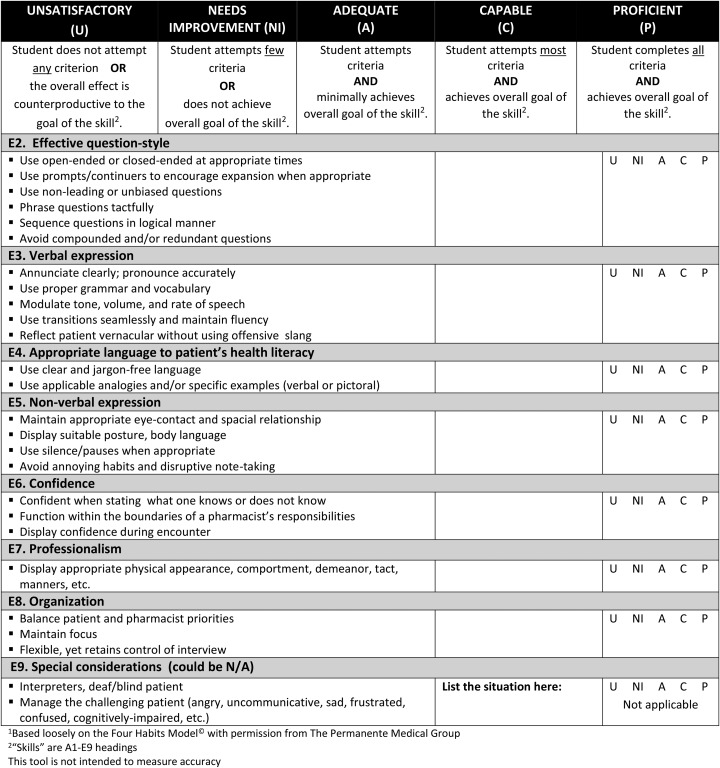

One of the authors of the FHM reviewed our instrument, considered it to be substantially different from the FHM, and supported pursuit of this independent instrument for use in pharmacy. The new framework and instrument were named Patient-centered Communication Tools (PaCT). PaCT includes 23 skills organized into five general “Tools”: Tool A=Establish a Connection; Tool B=Explore and Integrate the Patient’s Perspective; Tool C=Demonstrate Interest and Empathy; Tool D=Collaborate and Educate; Tool E=Communicate with Finesse (Appendix 1). In the PaCT instrument, each item is rated on a 5-point scale: unsatisfactory, needs improvement, adequate, capable, and proficient (Appendix 2).

Face and content validity were supported by the external panel, SPs, and faculty assessors. The experts felt that no criteria were missing other than a measure of the accuracy of information, that the criteria were well organized, and that the instrument offered a meaningful contribution to pharmacy. Experts expressed concerns about the practicality of the instrument’s length, and there was a lack of consensus on whether to use a descriptive rubric format or a rating scale format. Experts indicated that they would use this instrument for wellness student-patient encounters, medication therapy management cases, objective structured clinical examinations, role-playing exercises, initial and follow-up encounters with longitudinal patients, and medication history taking plus counseling on a new medicine. All experts agreed that this instrument measured communication and relationship-building characteristics of a student pharmacist-patient encounter. The experts considered P3 or P4 students to be the ideal learners and a minimum of 30 minutes to be needed for such an encounter.

Additionally, all SPs indicated that the criteria measured communication and relationship-building characteristics of a student pharmacist-patient encounter, although they considered a few items in Tool D unnecessary. Nine SPs (82%) rated PaCT a 5 on the 5-point Likert scale for accuracy, one (9%) rated it a 4.5, and one abstained. Finally, feedback from faculty assessors and the volunteer pilot P4 students affirmed that the instrument was comprehensive and tested communication skills. All faculty agreed that assessments became faster with experience, that the examples in the framework made it quicker to provide feedback on the instrument, and that consistent use of “not applicable” (N/A) and training of SPs and faculty were critical elements in the success of PaCT.

Although all student pharmacists received the same instruction on the communication framework, practice opportunities, feedback, and evaluation, only data from consenting students were included (n=181, 84%). Overall student scores improved significantly, averaging 80.7% on the early simulation and 90.0% on the final practicum (p<.001). To determine overall scores, each skill was weighted equally. Scores improved for 21 of 22 analyzed skills (the 23rd skill, “Special Considerations,” was not applicable in this simulation) and improved significantly for 18 skills (p<.05). Correlations between first and second interview scores were significant for all five Tools and for 16 of 22 analyzed skills (p<.05). Performance on Tools A, B, C, and E improved significantly (p<.05).
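The equal weighting described above can be sketched as follows. This is a hypothetical illustration, not the study’s scoring procedure: it assumes each skill is coded 1 (unsatisfactory) through 5 (proficient), that skills marked N/A (such as “Special Considerations” in this simulation) are dropped from both earned and possible points, and that the overall score is earned points as a percentage of the maximum possible.

```python
from typing import Optional, Sequence

# Assumed numeric coding of the five rating categories (hypothetical):
# 1 = unsatisfactory, 2 = needs improvement, 3 = adequate,
# 4 = capable, 5 = proficient.
MAX_RATING = 5


def composite_percent(ratings: Sequence[Optional[int]]) -> float:
    """Equal-weight overall score as a percentage of possible points.

    Each applicable skill contributes equally; skills marked N/A are
    passed as None and excluded from both earned and possible points.
    """
    rated = [r for r in ratings if r is not None]
    if not rated:
        raise ValueError("no applicable skills were rated")
    for r in rated:
        if not 1 <= r <= MAX_RATING:
            raise ValueError(f"rating out of range: {r}")
    return 100.0 * sum(rated) / (MAX_RATING * len(rated))


# Hypothetical four-skill example with one N/A skill:
# (5 + 4 + 3) / (3 * 5) = 12 / 15 = 80%.
print(composite_percent([5, 4, None, 3]))  # 80.0
```

Excluding N/A items, rather than scoring them as zero, keeps a student from being penalized for a skill that the scenario did not call for; whether the study handled N/A this way is not stated.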

The total PaCT and FHM scores on the same performance were significantly correlated (r=0.71, p<.05), supporting the concurrent validity of PaCT when compared with the instrument designed for physicians.

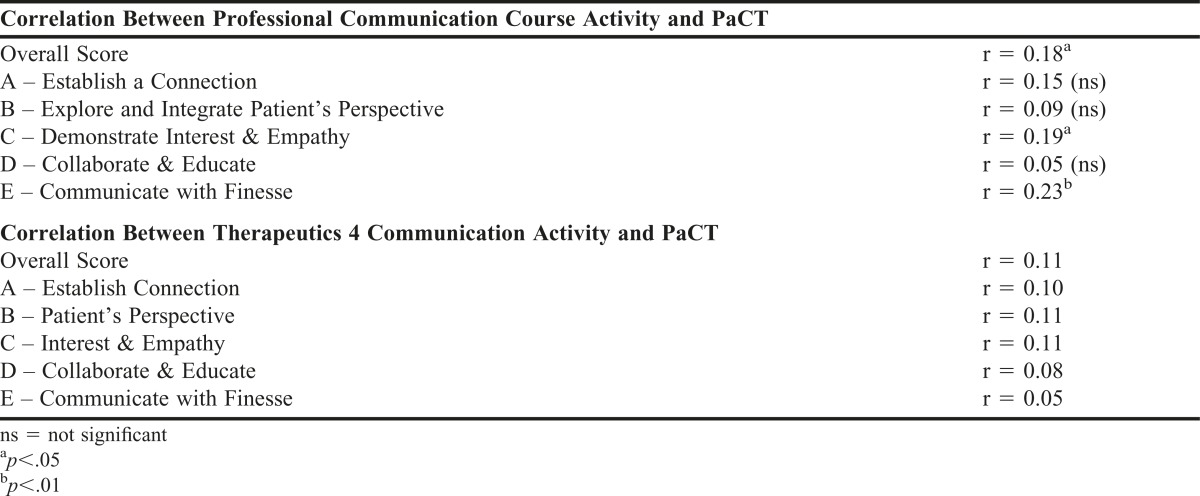

Scores on the PC performance predicted PaCT performance scores (r=0.18, p<.05), although the correlation was small. PaCT Tools C and E were also positively correlated with PC scores (r=0.19 and 0.23, respectively; p<.05), reflecting similar skills performed in the PC and APP exercises, although these correlations were also small. PaCT scores did not predict performance on the T4 exercise: there was no correlation between overall T4 and PaCT scores (r=0.11, p>.05) or between any PaCT Tool and T4 scores. However, performance on three individual PaCT items did predict T4 scores: D2=Determine Goals (r=0.23), E6=Confidence (r=0.12), and E7=Professionalism (r=-0.20) (Table 4).

Table 4.

Validity of PaCT Compared to Other Internal Communication Rubrics

For test-retest reliability analysis, total scores (Table 5) were significantly correlated (r=0.75, p<.001). Correlations were significant for Tools C, D, and E (p<.01), and the correlations for Tools A and B approached significance (p<.10). For inter-rater reliability, total scores were not correlated, and two Tools showed significant correlations: Tool C (p<.01) and Tool E (p<.05).

Table 5.

Reliability of PaCT

DISCUSSION

Multiple methodologies were used to strengthen face and content validity, including review by internal and external experts, students, faculty assessors, and SPs, who represented a sample of the general patient population that interacts with pharmacists. The study also used numerous additional validation methods. As noted by Moskal and Leydens, the three types of evidence commonly used to support the validity of an assessment rubric are content, construct, and criterion-related evidence.49 For our instrument, we examined all three: content validity, construct validity, and criterion-related (concurrent and predictive) validity.

The Medication-Related Consultation Framework (MRCF) was published in 2011, around the time PaCT was being developed.50 Although the MRCF is a validated framework, its design and focus differ considerably from those of the Patient-centered Communication Tools (PaCT): it was developed in and for the United Kingdom setting and has fewer detailed criteria, so it would not achieve the same objectives for student development and assessment. PaCT addresses the need for a framework and instrument specifically designed for student pharmacist clinical communication skills.

The PaCT instrument can be used to measure student pharmacist communication with SPs during clinical interviews and to provide feedback for improvement in specific skills. Other advantages include its level of detail, the examples provided, the pharmacy-specific considerations, and its comprehensiveness in describing what student pharmacists should include in patient encounters. Disadvantages include some differences in how assessors interpret and score the instrument and its limited applicability to brief patient encounters or provider interactions. Given its comprehensiveness, PaCT may be best reserved for upper-level pharmacy coursework involving a patient encounter scenario. This is supported by the finding that Tool D and certain skills within it did not improve significantly from the first student performance to the second, which may indicate that the skills required for Tool D are complex, high-level skills that require more practice.

Results support the ability of the PC assessment form to predict performance on a later patient encounter activity as measured by PaCT, with the strongest correlation for items within Tool E (ie, maintaining rapport, question style, verbal and non-verbal expression, language, confidence, organization, and professionalism). Although these correlations were small, they suggest that students are applying foundational skills from the PC course in later professional coursework, which supports the appropriate sequencing of curricular experiences. This finding could be used to prospectively identify students who, before APP, need additional assistance in developing communication skills for patient encounters.

The lack of correlation between total PaCT and T4 scores is not surprising given differences in the skills emphasized (provider communication in T4 versus patient communication in APP), the length of the encounter (5 minutes versus 30 minutes), and the primary assessment criteria (thinking and decision-making in T4 versus communication in APP, as well as differences in the number of criteria on each form). However, a greater correlation was anticipated between PaCT Tool E and total T4 scores, since both instruments assess general communication skills. The lack of correlation suggests that students may not be transferring general communication skills learned in patient encounters to other situations, in this case provider encounters. An area for future research would be to assess whether PaCT performance in APP correlates with student pharmacists’ communication skills during patient encounters in Advanced Pharmacy Practice Experiences. A positive correlation would mean that PaCT scores could be used to prospectively identify students who need more assistance or practice in communicating with patients before or during these experiences.

There are several limitations to this research. Data for validation came from a single school and from a similar cohort of P3 students. Also, multiple confounders of communication performance in T4 and PC exist and are related to the diverse nature of the required student curriculum. Inter-rater reliability was weak: total scores were not correlated, and only Tools C and E showed significant correlations. Better agreement would likely require more comprehensive orientation and training (longer than 2 hours, with calibration by review of multiple videos), which should be implemented for any communication assessment. Although the expert panel did not reach consensus on whether to use a rating scale or a descriptive rubric, partly because of concerns about the instrument’s length, future work could explore expanding the instrument into a descriptive rubric, which may also improve inter-rater reliability.51 Not all sections of PaCT met test-retest reliability criteria; however, the correlation of PaCT with a previously validated tool (the FHM) suggests that PaCT measures skills similar to those of accepted professional communication instruments. Future research can focus on adapting the form to measure communication skills more effectively while increasing evaluator consistency. Additionally, because communication tools in other disciplines were validated using an objective structured clinical examination (OSCE) format, using an OSCE for validity testing and subsequent modification could strengthen the validity results. It is important to note that, to be adaptable to various activities, this instrument will need to be combined with additional rubrics for knowledge assessment. Even so, having a separate communication instrument allows for flexibility in the design and assessment of assignments.

CONCLUSION

There are important skills applicable across health care disciplines that foster effective communication during patient encounters. PaCT was developed on the premise that instruments developed for other disciplines may not capture skills that are unique to pharmacists (eg, changing focus from diagnosis to medication-related issues). PaCT is a useful instrument, specifically developed to assess student pharmacist communication with patients, with significant evidence of face, content, and construct validity and of test-retest reliability. The total scores on PaCT and the FHM were significantly correlated. Further work is needed to improve inter-rater reliability.

ACKNOWLEDGMENTS

The authors wish to acknowledge and thank Vibhuti Arya, Lynette Bradley-Baker, Robert Beardsley, Lisa Guirguis, Carole Kimberlin, Dee Dee McEwen, Nathaniel Rickles, Sharon Youmans, and Elizabeth Young for their generous time and support as expert panelists in the development of the PaCT framework and assessment form.

Appendix 1. Patient-centered Communication Tools (PaCT)1

Appendix 2. Patient-centered Communication Tools (PaCT)1 Assessment Form

REFERENCES

- 1. WHO. The role of the pharmacist in the health care system: preparing the future pharmacist: curricular development: report of a third WHO Consultative Group on the Role of the Pharmacist, Vancouver, Canada, August 27-29, 1997, WHO/Pharm/97/599. Vancouver; 1997.

- 2.Accreditation Council for Pharmacy Education. Accreditation standards and guidelines for the professional program in pharmacy leading to the doctor of pharmacy degree. Standards 2016. https://www.acpe-accredit.org/pdf/Standards2016FINAL.pdf. Accessed June 22, 2016.

- 3.Medina MS, Plaza CM, Stowe CD, et al. Center for the Advancement of Pharmacy Education 2013 educational outcomes. Am J Pharm Educ. 2013;77(8):Article 162. doi: 10.5688/ajpe778162. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Kimberlin CL. Communicating with patients: skills assessment in US colleges of pharmacy. Am J Pharm Educ. 2006;70(3):Article 67. doi: 10.5688/aj700367. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Mesquita AR, Lyra DP, Jr, Brito GC, Balisa-Rocha BJ, Aguiar PM, de Almeida Neto AC. Developing communication skills in pharmacy: a systematic review of the use of simulation patient methods. Patient Educ Couns. 2010;78(2):143–148. doi: 10.1016/j.pec.2009.07.012. [DOI] [PubMed] [Google Scholar]

- 6.Wallman A, Vaudan C, Sporrong SK. Communications training in pharmacy education, 1995-2010. Am J Pharm Educ. 2013;77(2):Article 36. doi: 10.5688/ajpe77236. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Boesen KP, Herrier RN, Apgar DA, Jackowski RM. Improvisational exercises to improve pharmacy students’ professional communication skills. Am J Pharm Educ. 2009;73(2):Article 35. doi: 10.5688/aj730235. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Nestel D, Calandra A, Elliott RA. Using volunteer simulated patients in development of pre-registration pharmacists: learning from the experience. Pharm Educ. 2007;7(1):35–42. [Google Scholar]

- 9.Rickles NM, Tieu P, Myers L, Galal S, Chung V. The impact of a standardized patient program on student learning of communication skills. Am J Pharm Educ. 2009;73(1):Article 4. doi: 10.5688/aj730104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Barlow JW, Strawbridge JD. Teaching and assessment of an innovative and integrated pharmacy undergraduate module. Pharm Educ. 2007;7(2):193–195. [Google Scholar]

- 11.Mackellar A, Ashcroft DM, Bell D, James DH, Marriott J. Identifying criteria for the assessment of pharmacy students communication skills with patients. Am J Pharm Educ. 2007;71(3):Article 50. doi: 10.5688/aj710350. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Purkerson DL, Mason HL, Chalmers RK, Popovich NG, Scott SA. Evaluating pharmacy students’ ability-based educational outcomes using an assessment center approach. Am J Pharm Educ. 1996;60(3):239–247. [Google Scholar]

- 13. Mort JR, Hansen DJ. First-year pharmacy students’ self-assessment of communication skills and the impact of video review. Am J Pharm Educ. 2010;74(5):Article 78. doi: 10.5688/aj740578.

- 14. Greene RJ, Cavel GF, Jackson SHD. Interprofessional clinical education of medical and pharmacy students. Med Educ. 1996;30(2):129–133. doi: 10.1111/j.1365-2923.1996.tb00730.x.

- 15. Kilminster S, Hale C, Lascelles M. Learning for real life: patient-focused interprofessional workshops offer added value. Med Educ. 2004;38(7):717–726. doi: 10.1046/j.1365-2923.2004.01769.x.

- 16. Shah R. Improving undergraduate communication and clinical skills: personal reflections of a real world experience. Pharm Educ. 2004;4(1):1–6.

- 17. Westberg SM, Adams J, Thiede K, Stratton TP, Bumgardner MA. An interprofessional activity using standardized patients. Am J Pharm Educ. 2006;70(2):Article 34. doi: 10.5688/aj700234.

- 18. Villaume WA, Berger BA, Barker BN. Learning motivational interviewing: scripting a virtual patient. Am J Pharm Educ. 2006;70(2):Article 33. doi: 10.5688/aj700233.

- 19. Begley K, Haddad AR, Christensen C, Lust E. A health education program for underserved community youth led by health professions students. Am J Pharm Educ. 2009;73(6):Article 98. doi: 10.5688/aj730698.

- 20. Boyatzis M, Batty KT. Domiciliary medication reviews by fourth year pharmacy students in Western Australia. Int J Pharm Pract. 2004;12(2):73–81.

- 21. Lawrence L. Applying transactional analysis and personality assessment to improve patient counseling and communication skills. Am J Pharm Educ. 2007;71(4):Article 81. doi: 10.5688/aj710481.

- 22. Sause RB, Galizia VJ. An undergraduate research project: multicultural aspects of pharmacy practice. Am J Pharm Educ. 1996;60:173–179.

- 23. Hyvärinen M-L, Tanskanen P, Katajavuori N, Isotalus P. A method for teaching communication in pharmacy in authentic work situations. Comm Educ. 2010;59(2):124–145.

- 24. Thomas SG. A continuous community pharmacy practice experience: design and evaluation of instructional materials. Am J Pharm Educ. 1996;60(1):4–12.

- 25. Kearney KR. A service-learning course for first-year pharmacy students. Am J Pharm Educ. 2008;72(4):Article 86. doi: 10.5688/aj720486.

- 26. Awaisu A, Abd Rahman NS, Nik Mohamed MH, Bux Rahman Bux SH, Mohamed Nazar NI. Malaysian pharmacy students’ assessment of an objective structured clinical examination (OSCE). Am J Pharm Educ. 2010;74(2):Article 34. doi: 10.5688/aj740234.

- 27. Awaisu A, Mohamed MH, Al-Efan QAM. Perception of pharmacy students in Malaysia on the use of objective structured clinical examinations to evaluate competence. Am J Pharm Educ. 2007;71(6):Article 118. doi: 10.5688/aj7106118.

- 28. Taylor J, Smith A. Pharmacy student attitudes to patient education: a longitudinal study. Can Pharm J. 2010;143(5):234–239.

- 29. Pagano MP, O’Shea ER, Campbell SH, Currie LM, Chamberlin E, Pates CA. Validating the Health Communication Assessment Tool (HCAT). Clin Sim Nurs. 2015;11(9):402–410.

- 30. Zill JM, Christalle E, Müller E, Härter M, Dirmaier J, Scholl I. Measurement of physician-patient communication – a systematic review. PLoS One. 2014;9(12):e112637. doi: 10.1371/journal.pone.0112637.

- 31. Stein T, Frankel RM, Krupat E. Enhancing clinician communication skills in a large health care organization: a longitudinal case study. Patient Educ Couns. 2005;58(1):4–12. doi: 10.1016/j.pec.2005.01.014.

- 32. Krupat E, Frankel RM, Stein T, Irish J. The Four Habits Coding Scheme: validation of an instrument to assess clinicians’ communication behavior. Patient Educ Couns. 2006;62(1):38–45. doi: 10.1016/j.pec.2005.04.015.

- 33. Frankel RM, Stein TM. Getting the most out of the clinical encounter: the Four Habits Model. J Med Pract Manage. 2001;16(4):184–191.

- 34. Grice GR, Gattas NM, Sailors J, et al. Health literacy: use of the Four Habits Model to improve student pharmacists’ communication. Patient Educ Couns. 2013;90(1):23–28. doi: 10.1016/j.pec.2012.08.019.

- 35. Paget L, Han P, Nedza S, et al. Patient-clinician communication: basic principles and expectations. Institute of Medicine discussion paper. 2011. https://www.accp.com/docs/positions/misc/IOMPatientClinicianDiscussionPaper.pdf. Accessed June 22, 2016.

- 36. Global Assessment for Student Pharmacist. Leslie Dan Faculty of Pharmacy, University of Toronto.

- 37. Professionalism Assessment Rating Scale (PARS). Institute for Clinical Competence. New York College of Osteopathic Medicine. 2009. (unpublished).

- 38. PACE Training Manual & Speaker’s Guide. http://www.nhlbi.nih.gov/files/docs/resources/lung/manual.pdf. Accessed June 22, 2016.

- 39. Levensky ER, Forcehimes A, O’Donohue T, Beitz K. Motivational interviewing: an evidence-based approach to counseling helps patients follow treatment recommendations. Am J Nurs. 2007;107(10):50–58. doi: 10.1097/01.NAJ.0000292202.06571.24.

- 40. Makoul G. Essential elements of communication in medical encounters: the Kalamazoo consensus statement. Acad Med. 2001;76(4):390–393. doi: 10.1097/00001888-200104000-00021.

- 41. Lang F, McCord R, Harvill L, Anderson DS. Communication assessment using the common ground instrument: psychometric properties. Fam Med. 2004;36(3):189–198.

- 42. Association of American Colleges and Universities. Oral communication VALUE rubric. http://www.aacu.org/value/rubrics/pdf/OralCommunication.pdf. Accessed June 22, 2016.

- 43. Developing a curriculum (DACUM). http://www.dacum.org/. Accessed June 22, 2016.

- 44. Makoul G. The SEGUE framework for teaching and assessing communication skills. Patient Educ Couns. 2001;45(1):23–34. doi: 10.1016/s0738-3991(01)00136-7.

- 45. Silverman J, Kurtz S, Draper J. Skills for Communicating With Patients. Abingdon, Oxon, UK: Radcliffe Medical Press; 1998.

- 46. Van Thiel J, Kraan HF, van der Vleuten CPM. Reliability and feasibility of measuring medical interviewing skills: the revised Maastricht history-taking and advice checklist. Med Educ. 1991;25(3):224–229. doi: 10.1111/j.1365-2923.1991.tb00055.x.

- 47. Kottner J, Audige L, Brorson S, et al. Guidelines for reporting reliability and agreement studies (GRRAS) were proposed. J Clin Epidemiol. 2011;64(1):96–106. doi: 10.1016/j.jclinepi.2010.03.002.

- 48. Stemler SE. A comparison of consensus, consistency, and measurement approaches to estimating interrater reliability. Pract Assess Res Eval. 2004;9(4):1–11.

- 49. Moskal BM, Leydens JA. Scoring rubric development: validity and reliability. Pract Assess Res Eval. 2000;7(10):1–6.

- 50. Abdel-Tawab R, James DH, Fichtinger A, Clatworthy J, Horne R, Davies G. Development and validation of the medication-related consultation framework (MRCF). Patient Educ Couns. 2011;83(3):451–457. doi: 10.1016/j.pec.2011.05.005.

- 51. Jonsson A, Svingby G. The use of scoring rubrics: reliability, validity and educational consequences. Educ Res Rev. 2007;2(2):130–144.

- 52. Huntley CD, Salmon P, Fisher PL, Fletcher I, Young B. LUCAS: a theoretically informed instrument to assess clinical communication in objective structured clinical examinations. Med Educ. 2012;46:267–276. doi: 10.1111/j.1365-2923.2011.04162.x.