Abstract

Background

One of the key strategies for successful implementation of effective health-related interventions is targeting improvements in stakeholder engagement. The discrete choice experiment (DCE) is a stated preference technique for eliciting individual preferences over hypothetical alternative scenarios that is increasingly being used in health-related applications. DCEs offer a systematic approach to measuring health preferences that can be applied to enhance stakeholder engagement. However, a knowledge gap exists in characterizing the extent to which DCEs are used in implementation science.

Methods

We conducted a systematic search of the English-language literature (up to December 2016) to identify and describe the use of DCEs in engaging stakeholders as an implementation strategy. We searched the following electronic databases: MEDLINE, EconLit, PsycINFO, and CINAHL, using MeSH terms. Studies were categorized according to application type, stakeholder(s), healthcare setting, and implementation outcome.

Results

Seventy-five publications were selected for analysis in this systematic review. Studies were categorized by application type: (1) characterizing demand for therapies and treatment technologies (n = 32), (2) comparing implementation strategies (n = 22), (3) incentivizing workforce participation (n = 11), and (4) prioritizing interventions (n = 10). Stakeholders included providers (n = 27), patients (n = 25), caregivers (n = 5), and administrators (n = 2). The remaining studies (n = 16) engaged multiple stakeholders (i.e., combination of patients, caregivers, providers, and/or administrators). The following implementation outcomes were discussed: acceptability (n = 75), appropriateness (n = 34), adoption (n = 19), feasibility (n = 16), and fidelity (n = 3).

Conclusions

The number of DCE studies engaging stakeholders as an implementation strategy has been increasing over the past decade. As DCEs are more widely used as a healthcare assessment tool, there is a wide range of applications for them in stakeholder engagement. The DCE approach could serve as a tool for engaging stakeholders in implementation science.

Electronic supplementary material

The online version of this article (10.1186/s13012-017-0675-8) contains supplementary material, which is available to authorized users.

Keywords: Discrete choice, Conjoint analysis, Preferences, Stakeholder, Engagement

Background

Implementation science promotes methods to integrate scientific evidence into healthcare practice and policy. Traditionally, it has taken 15–20 years for academic research to translate into evidence-based programs and policies, and implementation science focuses on narrowing the time needed to translate knowledge into practice [1]. One major component of implementation science is stakeholder engagement [2]. Successful implementation of healthcare interventions relies on stakeholder engagement at every stage, ranging from assessing and improving the acceptability of innovations to the sustainability of implemented interventions. In order to optimize the implementation of healthcare interventions, researchers, administrators, and policymakers must weigh the benefits and costs of complex multidimensional arrays of healthcare policies, strategies, and treatments.

As the field of implementation science matures, conceptualizing and measuring implementation outcomes becomes essential, particularly for understanding the demand for evidence-based programs [3, 4]. One strategy for systematically evaluating implementation outcomes involves assessing patient health preferences. As a multidisciplinary field, implementation science should leverage health economics tools that assess alternative implementation strategies and communicate the preferences of relevant stakeholders regarding the characteristics of healthcare programs and interventions.

One dynamic tool for appraising choices in health-related settings is the discrete choice experiment (DCE), which elicits preferences from individual decision makers over alternative scenarios, goods, or services. Each alternative is characterized by several attributes, and respondents' choices reveal how each attribute influences preferences, as well as the relative importance of the attributes. Health economists increasingly rely on DCEs (also referred to as conjoint analysis) [5] to elicit preferences for healthcare products and programs, which then can be used in outcome measurement for economic evaluation [6]. Despite their utility in improving our understanding of health-related choices, the extent to which DCEs have been applied in implementation research is unknown. In this paper, we explore and document potential applications of DCEs and how these applications can contribute to the field of implementation science by enhancing stakeholder engagement.

There is limited guidance on how to tailor implementation strategies in order to address the contextual needs of change efforts in health-related settings [7]. A recent study identified four methods to improve the selection and tailoring of implementation strategies: (1) concept mapping (i.e., visual mapping using mixed methods); (2) group model building (i.e., causal loop diagrams of complex problems); (3) intervention mapping (i.e., systematic multi-step development of interventions); and (4) DCEs [7]. Although all four methods could be used to match implementation strategies to recognized barriers and facilitators for a particular evidence-based practice or process change being implemented in a given setting, DCEs were identified as having the clear advantages of (1) providing a clear stepwise method for selecting and tailoring strategies to unique settings, while (2) guiding stakeholders to consider attributes of strategies at a granular level, enhancing the precision with which strategies are tailored to context.

Discrete choice experiments are a commonly used technique for addressing a range of important healthcare questions. DCEs constitute an attribute-based measure of benefit that rests on two assumptions: first, that healthcare interventions, services, or policies can be described by their attributes or characteristics; and second, that the levels of these attributes drive an individual's valuation of the healthcare good. Within a DCE, respondents are asked to choose between two or more alternatives. The resulting choices reveal an underlying utility function (i.e., an economic measure of preferences over a given set of goods or services). The approach combines econometric analysis with experimental design theory, consumer theory, and random utility theory, which posits that consumers generally choose what they prefer and that, where they do not, this can be explained by random factors [6, 8, 9]. Conjoint analysis, by contrast, originated in psychology as a way to represent mathematically the rankings observed when multiple factors are systematically manipulated in a factorial design. Although there is a distinction between conjoint analysis and DCEs, many researchers use the two terms interchangeably [5].
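To make the random utility framework concrete, the Python sketch below assumes a utility that is linear in the attributes and random error terms that are independent and identically distributed type I extreme value, which yields the widely used conditional (multinomial) logit choice probabilities. The attribute names (`wait_weeks`, `cost_usd`, `home_visit`), their level ranges, and the coefficients are hypothetical; in an actual DCE the coefficients would be estimated from respondents' choices. Relative importance is computed with one common convention: each attribute's coefficient magnitude times the span of its levels, normalized to sum to 1.

```python
import math

# Hypothetical part-worth coefficients (utility change per unit of each attribute).
# In a real DCE these would be estimated from observed choices, e.g. by conditional logit.
BETA = {"wait_weeks": -0.30, "cost_usd": -0.02, "home_visit": 0.80}

# Hypothetical (min, max) attribute levels used in the experimental design.
LEVEL_RANGE = {"wait_weeks": (1, 8), "cost_usd": (0, 50), "home_visit": (0, 1)}


def utility(alternative):
    """Systematic (deterministic) utility V = sum_k beta_k * x_k."""
    return sum(BETA[name] * value for name, value in alternative.items())


def choice_probabilities(choice_set):
    """Logit probabilities P_j = exp(V_j) / sum_i exp(V_i).

    This closed form follows from random utility theory when the random
    error terms are i.i.d. type I extreme value (Gumbel).
    """
    v = [utility(alt) for alt in choice_set]
    v_max = max(v)  # subtract the maximum before exponentiating, for numerical stability
    expv = [math.exp(x - v_max) for x in v]
    total = sum(expv)
    return [e / total for e in expv]


def relative_importance():
    """Share of the total utility span attributable to each attribute."""
    spans = {name: abs(BETA[name]) * (hi - lo) for name, (lo, hi) in LEVEL_RANGE.items()}
    total = sum(spans.values())
    return {name: span / total for name, span in spans.items()}


if __name__ == "__main__":
    # One hypothetical choice task with two alternatives.
    option_a = {"wait_weeks": 2, "cost_usd": 20, "home_visit": 0}
    option_b = {"wait_weeks": 6, "cost_usd": 10, "home_visit": 1}
    print(choice_probabilities([option_a, option_b]))
    print(relative_importance())
```

With these illustrative numbers, option A is slightly more likely to be chosen (about 0.55), and waiting time accounts for the largest share of the utility span.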

Advancing methods to capture stakeholder perspectives is essential for implementation science [10], and consequently, research is needed to document choice experiment methods for assessing the feasibility, acceptability, and validity of stakeholder perspectives. Whereas the use of DCEs in healthcare settings is well documented, there is a knowledge gap in characterizing whether DCE methodology is being applied to improve stakeholder engagement in implementation science. Therefore, the aim of this systematic review was to provide a synthesis of the use of DCEs as a stakeholder engagement tool. Specific objectives were to (1) identify published studies using DCEs in stakeholder engagement; (2) categorize these studies by application subtype, stakeholder group, and healthcare setting; and (3) provide recommendations for future use of DCEs in implementation science.

Methods

Identification of eligible publications

To be included, studies must have reported on original research using the DCE methodology and included a discussion of at least one implementation outcome. Studies must also have been available in English and have been conducted in a health-related setting. Duplicate abstracts were excluded from the review, as were abstracts describing reviews, editorials, commentaries, protocols, conference abstracts, and dissertations.

Search strategy

A search of MEDLINE, EconLit, PsycINFO, and CINAHL databases was conducted using the following search terms: (“discrete choice” OR “discrete rank” OR “conjoint analysis”) AND (implement*). These four databases were selected as they index journals from the fields of implementation science and include applications of DCEs across a range of health-related contexts or environments including the following: healthcare practice (e.g., clinical, public health, community-based health settings), health policy (e.g., interactions with health decision-makers at local, regional, provincial/state, federal, or international levels), health education (e.g., interactions with health educators in clinical or academic settings), and healthcare administration (e.g., interactions with health system organizations). The keyword search terms were repeated for all four databases. Keyword searches were limited to the English language, covering all published work available up to December 2016 (Additional file 1).

Coding and data synthesis

Retrieved abstracts were initially assessed against the eligibility criteria by one reviewer (RS) and rejected if the reviewer determined from the title and abstract that the study did not meet inclusion criteria. Full-text copies of the remaining publications were retrieved and further assessed against eligibility criteria to confirm or refute inclusion. Studies meeting the eligibility criteria were then coded by two reviewers. Disagreements were resolved by consensus or by a third reviewer. For all included studies, we recorded the mode of administration (i.e., electronic, paper-based, or via telephone), whether ethics board approval was obtained, the study sponsor, the incentives provided to participants, and the average duration of surveys. Included studies were categorized as follows:

Application type: A formative process was used to identify categories of applications used in the studies that met inclusion criteria. All studies were classified according to one of four application types, as follows: (1) characterizing demand for therapies and treatment technologies; (2) comparing implementation strategies; (3) incentivizing workforce participation; and (4) prioritizing interventions. Studies were further coded based on whether implementation science was a primary focus of the research or whether the study only incidentally discussed one or more implementation outcomes.

Implementation outcome and stage: All studies were classified based on one or more implementation outcomes discussed in the paper. The implementation outcomes were derived from Proctor's Conceptual Framework for Implementation Outcomes [3, 4] and include acceptability, adoption, appropriateness, feasibility, fidelity, implementation cost, penetration, and sustainability. We also assessed the implementation stage (early, mid, or late).

Stakeholder: All studies were classified according to the stakeholder(s) involved. These included the patient, caregiver, provider (including physician, nurse, community health worker, and health educator), and administrator (including health system leader, information technology administrator, and policy maker). Sample size (i.e., number of participants in the DCE) was also recorded.

Setting: Studies were further classified based on the healthcare setting where the research was conducted, as either primary care (including community-based settings), specialty care, or research that involved the broader health system (including research related to health information technology). Studies were also classified based on the country or countries where the research was conducted. Countries were then categorized as either “high income” or “low and middle income” according to the World Bank income classification [11].

Results

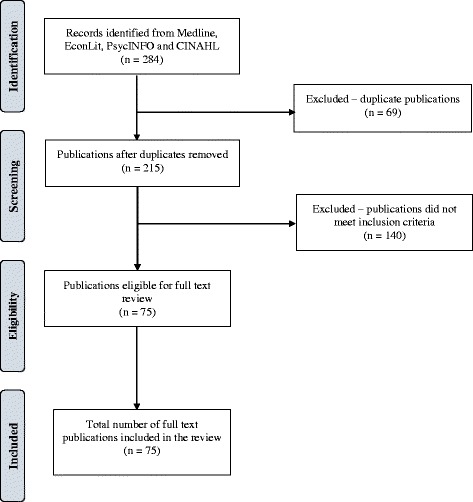

An electronic search yielded a total of 284 titles and abstracts which were judged to be potentially relevant based on title and abstract reading. Of these, 69 records were excluded for being duplicates. Full texts of the remaining 215 articles were reviewed. We finally selected 75 studies that met our inclusion criteria and excluded 140 studies. A flow chart through the different steps of study selection is provided in Fig. 1.

Fig. 1.

PRISMA flow diagram of study selection

Excluded studies

A total of 140 studies were excluded. Of these, 47 were not conducted in health-related settings, 38 did not discuss any implementation outcomes, 23 were either commentaries or systematic reviews, 13 were methodological studies without empirical applications, 12 were study protocols, and 7 did not use the DCE methodology. A table with references and reasons for exclusion can be found in Additional file 2.
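As a consistency check, the selection counts reported above can be re-derived with simple arithmetic. The Python sketch below only restates the published numbers (284 records identified, 69 duplicates, and the six exclusion reasons); the variable names are illustrative.

```python
# Counts as reported in the Results text (study selection and excluded studies).
records_identified = 284
duplicates_removed = 69
excluded_by_reason = {
    "not conducted in a health-related setting": 47,
    "no implementation outcome discussed": 38,
    "commentary or systematic review": 23,
    "methodological study without empirical application": 13,
    "study protocol": 12,
    "did not use the DCE methodology": 7,
}

full_text_reviewed = records_identified - duplicates_removed  # records left after de-duplication
full_text_excluded = sum(excluded_by_reason.values())         # total excluded at full-text review
included = full_text_reviewed - full_text_excluded            # studies retained for synthesis

print(full_text_reviewed, full_text_excluded, included)
```

The three derived quantities match the 215 full texts reviewed, 140 exclusions, and 75 included studies stated in the text.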

Summary of included publications

Of the 75 included studies, 38 were administered as paper-based surveys, 23 were administered electronically, 5 were available in both paper and electronic formats, and 3 were administered via telephone. Administration mode was missing for 6 studies. Overall, 57 studies received institutional review board approval and 38 were exempted.

In terms of sponsorship, 37 studies were supported with government funding, 17 received funding from non-profit organizations, 3 were funded by healthcare delivery systems, and 2 had industry funding. No funding source was listed for the remaining 16 studies. In addition, only 10 studies reported the distribution of financial incentives to participants. The incentives ranged from US$1 or equivalent to US$25 (mean = US$12). Only 7 studies reported the average time it took participants to complete the survey (range 15–30 min).

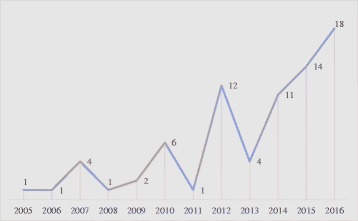

Summary of publications over time

The earliest DCE study addressing stakeholder engagement in our systematic review was published in 2005. The annual number of publications has steadily increased over the past decade to reach 18 articles in 2016 (Fig. 2).

Fig. 2.

Number of studies, by year: 2005–2016

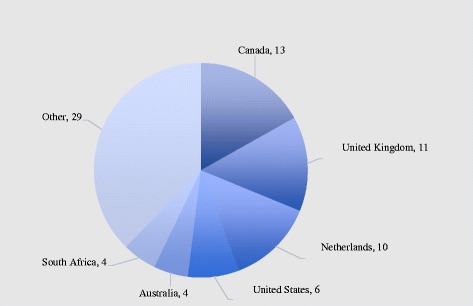

Summary of publications by country

Figure 3 shows the distribution of publications by country. Canada had the largest number of studies that met our inclusion criteria (n = 13), followed by the UK (n = 11), the Netherlands (n = 10), the USA (n = 6), Australia (n = 4), and South Africa (n = 4). Overall, 56 studies were conducted in high-income countries and 19 studies were from low- and middle-income countries (results not shown).

Fig. 3.

Number of studies, by country

Summary of publications by healthcare setting

Table 1 shows the number of studies by application type and healthcare setting. The majority of included studies were conducted in the primary care setting (n = 46), followed by specialty care (n = 22), and across the broader healthcare system (n = 7). Primary care studies were distributed as follows, according to application type: characterizing demand for therapies and treatment technologies (n = 21); incentivizing workforce participation (n = 11); comparing implementation strategies (n = 8); and prioritizing interventions (n = 6). The most common application in specialty care was comparing implementation strategies (n = 12) followed by characterizing demand (n = 10). The majority of health system studies focused on prioritizing interventions (n = 4), followed by comparing implementation strategies (n = 2), and characterizing demand (n = 1).

Table 1.

Summary of studies, by application type and setting

| Application type | Health system | Primary care | Specialty care | Total |
|---|---|---|---|---|
| Characterizing demand for therapies/treatment technologies | 1 | 21 | 10 | 32 |
| Comparing implementation strategies | 2 | 8 | 12 | 22 |
| Incentivizing workforce participation | | 11 | | 11 |
| Prioritizing interventions | 4 | 6 | | 10 |
| Total | 7 | 46 | 22 | 75 |

Summary of publications by stakeholder

Table 2 shows the number of studies by stakeholder type and healthcare setting. A total of 59 studies involved one stakeholder group, distributed as follows: provider (n = 27; mean sample size = 408), patient (n = 25; mean sample size = 717), caregiver (n = 5; mean sample size = 408), and administrator (n = 2; mean sample size = 60). The remainder (n = 16) involved multiple stakeholders. These were distributed as follows: patient and provider (n = 9; mean sample size = 740); provider and administrator (n = 3; mean sample size = 532); patient and caregiver (n = 2; mean sample size = 492); patient, caregiver, and provider (n = 2; mean sample size = 393); and patient, caregiver, provider, and administrator (n = 1; sample size = 102). Only 7 of the 46 studies (15%) in primary care involved more than one stakeholder, whereas 9 of 22 studies (41%) in specialty care had multiple stakeholders.

Table 2.

Summary of studies, by stakeholder and setting

| Setting | Sample size | |||||

|---|---|---|---|---|---|---|

| Stakeholder | Health system | Primary care | Specialty care | Mean | Range | Total |

| Patient | 3 | 14 | 7 | 717 | (35–3372) | 24 |

| Caregiver | 5 | 334 | (48–820) | 5 | ||

| Provider | 2 | 20 | 5 | 408 | (45–1720) | 27 |

| Administrator | 2 | 60 | (41–78) | 2 | ||

| Patient + caregiver | 2 | 492 | (112–873) | 2 | ||

| Patient + caregiver + provider | 2 | 393 | (224–562) | 2 | ||

| Patient + caregiver + provider + administrator | 1 | 102 | 1 | |||

| Patient + provider | 3 | 6 | 740 | (144–3911) | 9 | |

| Provider + administrator | 2 | 1 | 532 | (66–1379) | 3 | |

| Total | 7 | 46 | 22 | 535 | (35–3911) | 75 |

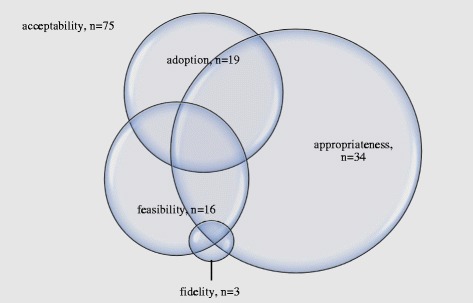

Summary of publications by implementation outcome

Figure 4 shows the implementation outcomes documented across the included studies. All included studies were conducted prior to implementation and focused on outcomes associated with early phases of implementation [4]. All 75 publications discussed acceptability. Other outcomes that were discussed include appropriateness (n = 34), adoption (n = 19), feasibility (n = 16), and fidelity (n = 3). Outcomes associated with later phases of implementation (i.e., implementation cost, penetration, and sustainability) were not discussed.

Fig. 4.

Number of studies, by implementation outcome

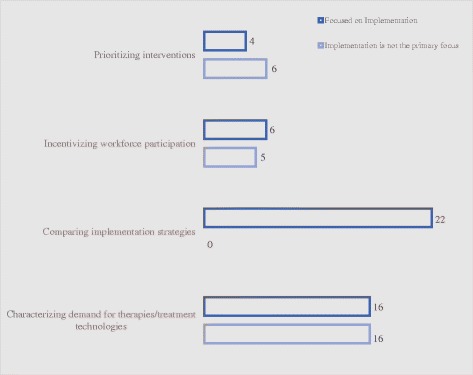

Summary of publications by application type

In terms of application type (Fig. 5), 32 studies were classified as characterizing demand for therapies and treatment technologies [12–43] (16 of 32 [50%] had a primary focus on implementation) [13, 15, 17–19, 21, 22, 25, 26, 29, 33–37, 43]; 22 studies compared implementation strategies [44–65] (22 of 22 [100%] had a primary focus on implementation) [44–65]; 11 studies were concerned with incentivizing workforce participation [66–76] (6 of 11 [55%] had a primary focus on implementation) [66, 68, 71, 74–76]; and 10 studies involved prioritizing health-related interventions [77–86] (4 of 10 [40%] had a primary focus on implementation) [80, 82, 83, 85]. Overall, 48 of the 75 studies (64%) had a primary focus on implementation. The following paragraphs summarize findings by application type:

Fig. 5.

Number of studies, by application type

Application 1: characterizing demand for therapies and treatment technologies

Characterizing demand was the most common application among studies included in the current systematic review (n = 32) [12–43]. In these studies, decision makers used DCEs to predict demand for new healthcare products and services prior to implementation. Because DCEs rely on hypothetical (but realistic) scenarios, they have been used to model the demand for treatment options before they become available to healthcare consumers. Advances in medical technology require patients and their caregivers to choose among alternative scenarios (e.g., traditional therapy vs. new innovation). Forecasting demand for new healthcare technologies has been of great interest to various stakeholders, including public and private payers, healthcare systems, and various health programs and implementing agencies. These studies focused overwhelmingly on exploring the acceptability and appropriateness of health-related products or services, and all 32 of them included the perspectives of patients or caregivers [12–43].
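Demand forecasting of this kind typically rests on simulating choice shares from an estimated choice model. The Python sketch below is a minimal illustration, not the method of any included study: it assumes a conditional logit model with hypothetical attributes (`efficacy_pct`, `monthly_cost_usd`, `injection`), hypothetical coefficients, and an opt-out alternative normalized to zero utility, and it compares predicted uptake before and after a hypothetical new therapy becomes available.

```python
import math

# Hypothetical coefficients, for illustration only; a real DCE would estimate these
# from respondents' choices. "asc_treatment" is an alternative-specific constant
# capturing a general preference for any treatment over opting out.
COEF = {"asc_treatment": 0.5, "efficacy_pct": 0.04, "monthly_cost_usd": -0.03, "injection": -0.6}


def utility(option):
    """Systematic utility of a treatment profile; opting out (None) is normalized to 0."""
    if option is None:
        return 0.0
    return COEF["asc_treatment"] + sum(COEF[name] * level for name, level in option.items())


def predicted_shares(options):
    """Logit choice shares over a set of options (max-shifted for numerical stability)."""
    v = [utility(o) for o in options]
    v_max = max(v)
    expv = [math.exp(x - v_max) for x in v]
    total = sum(expv)
    return [e / total for e in expv]


# Hypothetical profiles: an existing therapy and a new one not yet available.
current = {"efficacy_pct": 60, "monthly_cost_usd": 20, "injection": 1}
new = {"efficacy_pct": 75, "monthly_cost_usd": 40, "injection": 0}

before = predicted_shares([current, None])      # [current, opt-out]
after = predicted_shares([current, new, None])  # [current, new, opt-out]
print("before launch:", [round(s, 3) for s in before])
print("after launch: ", [round(s, 3) for s in after])
```

Under the conditional logit, a new option draws share from each existing alternative in proportion to that alternative's current share (the independence of irrelevant alternatives property); mixed logit or latent class models are commonly used when that assumption is implausible.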

Application 2: comparing implementation strategies

Although the bulk of health-related DCEs examine healthcare preferences and resource allocation, DCEs have also been used to produce decision-making information that guides organizational strategies for implementing evidence-based practices. Of the 22 studies comparing implementation strategies that were included in the systematic review [44–65], 13 examined the perspective of the provider only [44, 45, 48, 49, 51–54, 57–59, 62, 63], 2 focused only on the patient perspective [56, 60], and 7 examined the perspectives of multiple stakeholders [46, 47, 50, 55, 61, 64, 65]. Furthermore, implementing patient-centered healthcare and integrating patient priorities into healthcare decision-making require methods for measuring patients' preferences with respect to health and process outcomes. DCEs can therefore be used within the implementation process as tools that elicit stakeholder feedback to support the adoption of effective implementation strategies.

Application 3: incentivizing workforce participation

Our review found 11 publications that examined the question of incentivizing workforce participation [66–76]. Healthcare providers, including healthcare organizations and the health professionals employed within them, represent key stakeholders in the implementation and delivery of effective interventions. Providers play leading roles in activities that are essential to implementation, including training, supervision, quality assurance, and improvement. All 11 publications in this category took the provider perspective and were conducted in the primary care setting. The range of topics in these studies covered strategies to incentivize community health personnel in low resource settings within low-income countries [66–68, 70, 71, 74, 76] and primary care providers in rural settings within high-income countries [69, 72, 73, 75]. These studies were mainly concerned with investigating the acceptability and appropriateness of their proposed solutions.

Application 4: prioritizing delivery of evidence-based interventions

The systematic review found 10 studies that used DCEs to inform the prioritization of health-related interventions [77–86]. Policy makers have long used economic tools, such as cost-effectiveness analysis, to prioritize healthcare service delivery [87]. However, prioritizing healthcare services on the basis of cost-effectiveness alone overlooks other important factors. Among the 10 studies in this category, 4 were conducted at the health system level [79, 82–84] and 6 were in the primary care setting [77, 78, 80, 81, 85, 86]. In terms of stakeholder engagement, 5 studies involved providers [77, 78, 80, 85, 86], 4 involved administrators [77–79, 84], and 3 involved patients [81–83]. This category encompassed a wide range of implementation science topics, including the examination of strategies for approving new medicines in Wales [79], strategies for improving treatment of acute respiratory infections in the USA [86], and priority setting for HIV/AIDS interventions in Thailand [78].

Discussion

This systematic review identified and synthesized the literature on the use of DCEs to enhance stakeholder engagement as a strategy to improve implementation. Findings suggest that the use of DCE methodology in implementation science has been scarce but growing steadily over the past decade. The current review documented research studies investigating multiple applications of DCEs, namely characterizing demand for therapies and treatment technologies, comparing implementation strategies, incentivizing workforce participation, and prioritizing interventions. The studies were conducted across diverse primary care and specialty care settings and involved several stakeholder groups, including patients, caregivers, providers, and administrators. All studies included in this systematic review were conducted pre-implementation and therefore focused on the investigation of early-stage implementation outcomes (e.g., acceptability and appropriateness).

The systematic review included studies that engaged various stakeholders, including patients, caregivers, providers, and administrators. Successful implementation of evidence-based strategies and programs depends largely on the fit of the interventions with the values and priorities of stakeholders who are shaping and participating in healthcare service delivery and consumption [1]. For example, healthcare recipients and their family members contribute a wide range of perspectives to the evaluation of healthcare services [88], underscoring the importance of systematically assessing their perspectives with respect to evidence-based alternatives. Choosing which evidence-based programs to implement and how to implement them are key decision points for health systems.

Moreover, the preferences of healthcare providers, administrators, and payers within the context of stakeholder engagement inevitably impact the priority attached to healthcare decisions. Effective implementation efforts focusing on individual providers require changes in professional norms and changes in individual providers’ knowledge and beliefs, economic incentives, and other factors [89, 90]. Both financial and non-financial job characteristics can influence the recruitment and retention, as well as the attitudes and perceptions of healthcare professionals toward emerging evidence and innovations. Therefore, understanding the preferences of individual providers can improve the effectiveness of such efforts. However, existing data using revealed preferences are limited in their ability to address provider-level characteristics, and DCEs can be used to better inform this issue [91, 92].

Preference measurement approaches, such as DCEs, are effective instruments for understanding stakeholders’ decision-making. DCEs have been used to engage patients prior to the implementation of cancer screening and tobacco cessation programs [93, 94]. In such studies, researchers were able to gain valuable information about the demand for healthcare services prior to their provision and implementation. Although the choices presented to participants are hypothetical and the responses to them are potentially different from actual behavior, this hypothetical nature has its advantages over actually exposing the participant to the condition, with the researcher having complete control over the experimental design. Combined with advanced statistical techniques, the ability to model hypothetical conditions within the experimental design of DCEs ensures statistical robustness [95]. DCEs also allow the inclusion of attribute levels that do not yet exist, and are ideal for pre-intervention testing. Accordingly, marketing professionals have widely used DCEs in new product development, pricing, market segmentation, and advertising processes [96].

The aforementioned features of DCE studies can be useful in the design of interventions because they can enhance concordance with stakeholder preferences prior to and during implementation. The process of integrating research findings into population-level behaviors occurs in context [97]. Context in many healthcare systems includes scarce resources, variability in adoption of existing innovations, and ways of changing behavior that often incur their own costs but are rarely factored into the final estimate of the cost-effectiveness of innovation adoption [98, 99]. DCEs can integrate the assessment of contextual factors, including cost, in the implementation of evidence-based prevention programs.

As the DCE becomes more widely used in healthcare preference assessment, the potential arises for a broad range of applications in implementation science. This systematic review sheds light on the current applications that have been documented in the peer-reviewed literature to date. Implementation science and DCEs are both rapidly emerging concepts in health services research. DCEs are becoming more accepted as an evaluation tool in healthcare while implementation science is now a growing scientific field with funding announcements, annual conferences, training programs, and a growing portfolio of studies globally [100]. Nevertheless, the two areas seldom cross paths. As implementation science advances, there is an opportunity for the field to harness the power of DCEs as a widely accepted tool for engaging stakeholders. The ability of DCEs to present and evaluate attributes and strategies prior to implementation, and their robustness in simultaneously examining these criteria within a decision framework can greatly enhance their value for implementation science.

Strengths and limitations

To our knowledge, our study is the first to highlight the use of DCEs as a stakeholder engagement strategy to improve implementation. Our study has several strengths, including its explicit and transparent methodology. We conducted a systematic search of the peer-reviewed literature across the relevant databases, which yielded a comprehensive representation of the published research in this area. Further, articles included in this systematic review were categorized into application types and classified using the Implementation Outcomes framework to shed light on practical applications for DCEs in implementation science. Identifying these application types and linking them with an implementation science framework provides guidance for future studies in this area.

However, our results should be considered in light of several limitations. First, gray literature such as reports, policy documents, and dissertations was not included in the review, nor were protocol papers. Although such reports may be relevant to the topic of interest, gray literature is not peer-reviewed and therefore may not meet the quality standards associated with peer-reviewed publications. Inclusion of gray literature could also have biased the results, because work known to the authors and their networks would have been more likely to be identified than other work. Second, there are limitations to using volume of research output as a measure of research effort. Due to publication bias, studies with unfavorable results may not be published, leading to under-representation of the actual volume of work carried out in the field. Finally, it is unclear whether DCE use effectively influenced implementation strategies and subsequent outcomes, due to the lack of follow-up data in these studies. Whether stakeholder DCEs direct implementation activities to the best approach or outcome remains to be demonstrated in future studies.

Conclusion

DCEs offer an opportunity to address an underrepresented challenge in implementation science—that of the “demand” side. By bringing key stakeholders to the forefront, we can not only focus on the push of scientific innovations but also understand how best they may be desired, demanded, and valued by patients, families, providers, and administrators. Understanding these dimensions will help us improve how to implement evidence-based interventions and programs in such ways that they will be effectively taken up and the gap in translation of evidence to practice and policy will be shortened.

Additional files

List of included studies. (DOCX 40 kb)

List of excluded studies based on full text evaluation. (DOCX 62 kb)

Acknowledgements

We are grateful to Laura Ramirez, Evan Johnson, and Antonio Laracuente for their contributions during the data synthesis phase of this study. We thank Dr. Angela Stover for her comments on a prior draft.

Funding

This research was supported in part by the Mentored Training for Dissemination and Implementation Research in Cancer (MT-DIRC) Program, National Cancer Institute grant R25-CA171994. Dr. Chambers is an MT-DIRC faculty member. Dr. Salloum is an MT-DIRC fellow (2016 to 2018). The content of this paper is solely the responsibility of the authors and does not necessarily represent the official views of the funding agencies. No financial disclosures were reported by the authors of this paper.

Availability of data and materials

Please contact the authors for data requests.

Abbreviation

- DCE: Discrete choice experiment

Authors’ contributions

All authors were involved in various stages of the study design. RS and DC conceptualized the study. RS wrote the first draft. JL, ES, and DC gave methodological advice and commented on subsequent drafts of the paper. All authors read and approved the final manuscript.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Footnotes

Electronic supplementary material

The online version of this article (10.1186/s13012-017-0675-8) contains supplementary material, which is available to authorized users.

References

- 1. Brownson RC, Colditz GA, Proctor EK. Dissemination and implementation research in health: translating science to practice. 2012.

- 2. Lobb R, Colditz GA. Implementation science and its application to population health. Annu Rev Public Health. 2013;34:235–251. doi: 10.1146/annurev-publhealth-031912-114444.

- 3. Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B. Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Adm Policy Ment Health. 2009;36(1):24–34. doi: 10.1007/s10488-008-0197-4.

- 4. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65–76. doi: 10.1007/s10488-010-0319-7.

- 5. Louviere JJ, Flynn TN, Carson RT. Discrete choice experiments are not conjoint analysis. J Choice Model. 2010;3(3):57–72. doi: 10.1016/S1755-5345(13)70014-9.

- 6. Lancsar E, Louviere J. Conducting discrete choice experiments to inform healthcare decision making: a user’s guide. PharmacoEconomics. 2008;26(8):661–677. doi: 10.2165/00019053-200826080-00004.

- 7. Powell BJ, Beidas RS, Lewis CC, et al. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. 2015;44(2):177–194.

- 8. Louviere JJ, Pihlens D, Carson R. Design of discrete choice experiments: a discussion of issues that matter in future applied research. J Choice Model. 2011;4(1):1–8. doi: 10.1016/S1755-5345(13)70016-2.

- 9. Lancaster KJ. A new approach to consumer theory. J Polit Econ. 1966;74(2):132–157. doi: 10.1086/259131.

- 10. Chambers DA. Advancing the science of implementation: a workshop summary. Adm Policy Ment Health. 2008;35(1–2):3–10. doi: 10.1007/s10488-007-0146-7.

- 11. World Bank Data Team. World Bank country and lending groups–World Bank data help desk. World Bank. 2017:1–8. https://datahelpdesk.worldbank.org/knowledgebase/articles/906519. Accessed 15 Oct 2017.

- 12. Marshall DA, Johnson FR, Phillips KA, Marshall JK, Thabane L, Kulin NA. Measuring patient preferences for colorectal cancer screening using a choice-format survey. Value Health. 2007;10(5):415–430. doi: 10.1111/j.1524-4733.2007.00196.x.

- 13. Fitzpatrick E, Coyle DE, Durieux-Smith A, Graham ID, Angus DE, Gaboury I. Parents’ preferences for services for children with hearing loss: a conjoint analysis study. Ear Hear. 2007;28(6):842–849. doi: 10.1097/AUD.0b013e318157676d.

- 14. Bridges JF, Searle SC, Selck FW, Martinson NA. Engaging families in the choice of social marketing strategies for male circumcision services in Johannesburg, South Africa. Soc Mar Q. 2010;16:60–76. doi: 10.1080/15245004.2010.500443.

- 15. Eisingerich AB, Wheelock A, Gomez GB, Garnett GP, Dybul MR, Piot PK. Attitudes and acceptance of oral and parenteral HIV preexposure prophylaxis among potential user groups: a multinational study. PLoS One. 2012;7(1). doi: 10.1371/journal.pone.0028238.

- 16. Naik-Panvelkar P, Armour C, Rose JM, Saini B. Patients’ value of asthma services in Australian pharmacies: the way ahead for asthma care. J Asthma. 2012;49(3):310–316. doi: 10.3109/02770903.2012.658130.

- 17. Naik-Panvelkar P, Armour C, Rose JM, Saini B. Patient preferences for community pharmacy asthma services: a discrete choice experiment. PharmacoEconomics. 2012;30(10):961–976. doi: 10.2165/11594350-000000000-00000.

- 18. Benning TM, Kimman ML, Dirksen CD, Boersma LJ, Dellaert BGC. Combining individual-level discrete choice experiment estimates and costs to inform health care management decisions about customized care: the case of follow-up strategies after breast cancer treatment. Value Health. 2012;15(5):680–689. doi: 10.1016/j.jval.2012.04.007.

- 19. Hill M, Fisher J, Chitty LS, Morris S. Women’s and health professionals’ preferences for prenatal tests for Down syndrome: a discrete choice experiment to contrast noninvasive prenatal diagnosis with current invasive tests. Genet Med. 2012;14(11):905–913. doi: 10.1038/gim.2012.68.

- 20. Rennie L, Porteous T, Ryan M. Preferences for managing symptoms of differing severity: a discrete choice experiment. Value Health. 2012;15(8):1069–1076. doi: 10.1016/j.jval.2012.06.013.

- 21. Wheelock A, Eisingerich AB, Ananworanich J, et al. Are Thai MSM willing to take PrEP for HIV prevention? An analysis of attitudes, preferences and acceptance. PLoS One. 2013;8(1). doi: 10.1371/journal.pone.0054288.

- 22. Burton CR, Fargher E, Plumpton C, Roberts GW, Owen H, Roberts E. Investigating preferences for support with life after stroke: a discrete choice experiment. BMC Health Serv Res. 2014;14(1):63. doi: 10.1186/1472-6963-14-63.

- 23. Baxter J-AB, Roth DE, Al Mahmud A, Ahmed T, Islam M, Zlotkin SH. Tablets are preferred and more acceptable than powdered prenatal calcium supplements among pregnant women in Dhaka, Bangladesh. J Nutr. 2014;144(7):1106–1112. doi: 10.3945/jn.113.188524.

- 24. Pechey R, Burge P, Mentzakis E, Suhrcke M, Marteau TM. Public acceptability of population-level interventions to reduce alcohol consumption: a discrete choice experiment. Soc Sci Med. 2014;113:104–109. doi: 10.1016/j.socscimed.2014.05.010.

- 25. Deal K, Keshavjee K, Troyan S, Kyba R, Holbrook AM. Physician and patient willingness to pay for electronic cardiovascular disease management. Int J Med Inform. 2014;83(7):517–528. doi: 10.1016/j.ijmedinf.2014.04.007.

- 26. Veldwijk J, Lambooij MS, Bruijning-Verhagen PCJ, Smit HA, de Wit GA. Parental preferences for rotavirus vaccination in young children: a discrete choice experiment. Vaccine. 2014;32(47):6277–6283. doi: 10.1016/j.vaccine.2014.09.004.

- 27. Fraenkel L, Cunningham M, Peters E. Subjective numeracy and preference to stay with the status quo. Med Decis Making. 2015;35(1):6–11. doi: 10.1177/0272989X14532531.

- 28. Hollin IL, Peay HL, Bridges JFP. Caregiver preferences for emerging Duchenne muscular dystrophy treatments: a comparison of best-worst scaling and conjoint analysis. Patient. 2014;8(1):19–27. doi: 10.1007/s40271-014-0104-x.

- 29. Zickafoose JS, DeCamp LR, Prosser LA, et al. Parents’ preferences for enhanced access in the pediatric medical home. JAMA Pediatr. 2015;169(4):358. doi: 10.1001/jamapediatrics.2014.3534.

- 30. Agyei-Baffour P, Boahemaa MY, Addy EA. Contraceptive preferences and use among auto artisanal workers in the informal sector of Kumasi, Ghana: a discrete choice experiment. Reprod Health. 2015;12(1):32. doi: 10.1186/s12978-015-0022-y.

- 31. Powell G, Holmes EAF, Plumpton CO, et al. Pharmacogenetic testing prior to carbamazepine treatment of epilepsy: patients’ and physicians’ preferences for testing and service delivery. Br J Clin Pharmacol. 2015;80(5):1149–1159. doi: 10.1111/bcp.12715.

- 32. Spinks J, Janda M, Soyer HP, Whitty JA. Consumer preferences for teledermoscopy screening to detect melanoma early. J Telemed Telecare. 2015;0(0):1–8. doi: 10.1177/1357633X15586701.

- 33. Becker MPE, Christensen BK, Cunningham CE, et al. Preferences for early intervention mental health services: a discrete-choice conjoint experiment. Psychiatr Serv. 2015;67:appips201400306. doi: 10.1176/appi.ps.201400306.

- 34. Tang EC, Galea JT, Kinsler JJ, et al. Using conjoint analysis to determine the impact of product and user characteristics on acceptability of rectal microbicides for HIV prevention among Peruvian men who have sex with men. Sex Transm Infect. 2016;92(3):200–205. doi: 10.1136/sextrans-2015-052028.

- 35. Hill M, Johnson J-A, Langlois S, et al. Preferences for prenatal tests for Down syndrome: an international comparison of the views of pregnant women and health professionals. Eur J Hum Genet. 2015;44:968–975. doi: 10.1038/ejhg.2015.249.

- 36. Lock J, de Bekker-Grob EW, Urhan G, et al. Facilitating the implementation of pharmacokinetic-guided dosing of prophylaxis in haemophilia care by discrete choice experiment. Haemophilia. 2016;22(1):e1–e10. doi: 10.1111/hae.12851.

- 37. Herman PM, Ingram M, Cunningham CE, et al. A comparison of methods for capturing patient preferences for delivery of mental health services to low-income Hispanics engaged in primary care. Patient. 2016;9(4):293–301. doi: 10.1007/s40271-015-0155-7.

- 38. Morel T, Ayme S, Cassiman D, Simoens S, Morgan M, Vandebroek M. Quantifying benefit-risk preferences for new medicines in rare disease patients and caregivers. Orphanet J Rare Dis. 2016;11(1):70. doi: 10.1186/s13023-016-0444-9.

- 39. Johnson DC, Mueller DE, Deal AM, et al. Integrating patient preference into treatment decisions for men with prostate cancer at the point of care. J Urol. 2016;196(6):1640–1644. doi: 10.1016/j.juro.2016.06.082.

- 40. Uemura H, Matsubara N, Kimura G, et al. Patient preferences for treatment of castration-resistant prostate cancer in Japan: a discrete-choice experiment. BMC Urol. 2016;16(1):63. doi: 10.1186/s12894-016-0182-2.

- 41. Determann D, Lambooij MS, Gyrd-Hansen D, et al. Personal health records in the Netherlands: potential user preferences quantified by a discrete choice experiment. J Am Med Inform Assoc. 2016;0(0):ocw158. doi: 10.1093/jamia/ocw158.

- 42. Harrison M, Marra CA, Bansback N. Preferences for “new” treatments diminish in the face of ambiguity. Health Econ. 2016;26(6):743–752.

- 43. Bridges JFP, Searle SC, Selck FW, Martinson NA. Designing family-centered male circumcision services: a conjoint analysis approach. Patient. 2012;5(2):101–111. doi: 10.2165/11592970-000000000-00000.

- 44. Huis in’t Veld MHA, van Til JA, Ijzerman MJ, Vollenbroek-Hutten MMR. Preferences of general practitioners regarding an application running on a personal digital assistant in acute stroke care. J Telemed Telecare. 2005;11(Suppl 1):37–39. doi: 10.1258/1357633054461615.

- 45. Berchi C, Dupuis JM, Launoy G. The reasons of general practitioners for promoting colorectal cancer mass screening in France. Eur J Health Econ. 2006;7(2):91–98. doi: 10.1007/s10198-006-0339-0.

- 46. Oudhoff JP, Timmermans DRM, Knol DL, Bijnen AB, Van der Wal G. Prioritising patients on surgical waiting lists: a conjoint analysis study on the priority judgements of patients, surgeons, occupational physicians, and general practitioners. Soc Sci Med. 2007;64(9):1863–1875. doi: 10.1016/j.socscimed.2007.01.002.

- 47. Goossens A, Bossuyt PMM, de Haan RJ. Physicians and nurses focus on different aspects of guidelines when deciding whether to adopt them: an application of conjoint analysis. Med Decis Making. 2008;28(1):138–145. doi: 10.1177/0272989X07308749.

- 48. Van Helvoort-Postulart D, Dellaert BGC, Van Der Weijden T, Von Meyenfeldt MF, Dirksen CD. Discrete choice experiments for complex health-care decisions: does hierarchical information integration offer a solution? Health Econ. 2009;18(8):903–920. doi: 10.1002/hec.1411.

- 49. van Helvoort-Postulart D, van der Weijden T, Dellaert BGC, de Kok M, von Meyenfeldt MF, Dirksen CD. Investigating the complementary value of discrete choice experiments for the evaluation of barriers and facilitators in implementation research: a questionnaire survey. Implement Sci. 2009;4(1):10. doi: 10.1186/1748-5908-4-10.

- 50. Davison SN, Kromm SK, Currie GR. Patient and health professional preferences for organ allocation and procurement, end-of-life care and organization of care for patients with chronic kidney disease using a discrete choice experiment. Nephrol Dial Transplant. 2010;25(7):2334–2341. doi: 10.1093/ndt/gfq072.

- 51. Hinoul P, Goossens A, Roovers JP. Factors determining the adoption of innovative needle suspension techniques with mesh to treat urogenital prolapse: a conjoint analysis study. Eur J Obstet Gynecol Reprod Biol. 2010;151(2):212–216. doi: 10.1016/j.ejogrb.2010.03.026.

- 52. Grindrod KA, Marra CA, Tsuyuki RT, Lynd LD. Pharmacists’ preferences for providing patient-centered services: a discrete choice experiment to guide health policy. Ann Pharmacother. 2010;44:1554–1564. doi: 10.1345/aph.1P228.

- 53. Wen K-Y, Gustafson DH, Hawkins RP, et al. Developing and validating a model to predict the success of an IHCS implementation: the readiness for implementation model. J Am Med Inform Assoc. 2010;17(6):707–713. doi: 10.1136/jamia.2010.005546.

- 54. Lagarde M, Paintain LS, Antwi G, et al. Evaluating health workers’ potential resistance to new interventions: a role for discrete choice experiments. PLoS One. 2011;6(8). doi: 10.1371/journal.pone.0023588.

- 55. Cunningham CE, Henderson J, Niccols A, et al. Preferences for evidence-based practice dissemination in addiction agencies serving women: a discrete-choice conjoint experiment. Addiction. 2012;107(8):1512–1524. doi: 10.1111/j.1360-0443.2012.03832.x.

- 56. Philips H, Mahr D, Remmen R, Weverbergh M, De Graeve D, Van Royen P. Predicting the place of out-of-hours care: a market simulation based on discrete choice analysis. Health Policy. 2012;106(3):284–290. doi: 10.1016/j.healthpol.2012.04.010.

- 57. Deuchert E, Kauer L, Meisen Zannol F. Would you train me with my mental illness? Evidence from a discrete choice experiment. J Ment Health Policy Econ. 2013;16(2):67–80.

- 58. Cunningham CE, Barwick M, Short K, et al. Modeling the mental health practice change preferences of educators: a discrete-choice conjoint experiment. School Ment Health. 2014;6(1):1–14. doi: 10.1007/s12310-013-9110-8.

- 59. Struik MHL, Koster F, Schuit AJ, Nugteren R, Veldwijk J, Lambooij MS. The preferences of users of electronic medical records in hospitals: quantifying the relative importance of barriers and facilitators of an innovation. Implement Sci. 2014;9(1):1–11. doi: 10.1186/1748-5908-9-69.

- 60. Dixon PR, Grant RC, Urbach DR. The impact of marketing language on patient preference for robot-assisted surgery. Surg Innov. 2015;22(1):15–19. doi: 10.1177/1553350614537562.

- 61. Nicaise P, Soto VE, Dubois V, Lorant V. Users’ and health professionals’ values in relation to a psychiatric intervention: the case of psychiatric advance directives. Adm Policy Ment Health. 2014:384–393. doi: 10.1007/s10488-014-0580-2.

- 62. Grudniewicz A, Bhattacharyya O, McKibbon KA, Straus SE. Redesigning printed educational materials for primary care physicians: design improvements increase usability. Implement Sci. 2015;10(1):156. doi: 10.1186/s13012-015-0339-5.

- 63. Bailey K, Cunningham C, Pemberton J, Rimas H, Morrison KM. Understanding academic clinicians’ decision making for the treatment of childhood obesity. Child Obes. 2015;11(6):696–706. doi: 10.1089/chi.2015.0031.

- 64. Barrett AN, Advani HV, Chitty LS, et al. Evaluation of preferences of women and healthcare professionals in Singapore for implementation of noninvasive prenatal testing for Down syndrome. Singapore Med J. 2016:1–31. doi: 10.11622/smedj.2016114.

- 65. Whitty JA, Spinks J, Bucknall T, Tobiano G, Chaboyer W. Patient and nurse preferences for implementation of bedside handover: do they agree? Findings from a discrete choice experiment. Health Expect. 2016;1(9):1–9. doi: 10.1111/hex.12513.

- 66. Lagarde M, Blaauw D, Cairns J. Cost-effectiveness analysis of human resources policy interventions to address the shortage of nurses in rural South Africa. Soc Sci Med. 2012;75(5):801–806. doi: 10.1016/j.socscimed.2012.05.005.

- 67. Miranda JJ, Diez-Canseco F, Lema C, et al. Stated preferences of doctors for choosing a job in rural areas of Peru: a discrete choice experiment. PLoS One. 2012;7(12). doi: 10.1371/journal.pone.0050567.

- 68. Huicho L, Miranda JJ, Diez-Canseco F, et al. Job preferences of nurses and midwives for taking up a rural job in Peru: a discrete choice experiment. PLoS One. 2012;7(12). doi: 10.1371/journal.pone.0050315.

- 69. Li J, Scott A, McGrail M, Humphreys J, Witt J. Retaining rural doctors: doctors’ preferences for rural medical workforce incentives. Soc Sci Med. 2014;121:56–64. doi: 10.1016/j.socscimed.2014.09.053.

- 70. Yaya Bocoum F, Koné E, Kouanda S, Yaméogo WME, Bado A. Which incentive package will retain regionalized health personnel in Burkina Faso: a discrete choice experiment. Hum Resour Health. 2014;12(Suppl 1):S7. doi: 10.1186/1478-4491-12-S1-S7.

- 71. Song K, Scott A, Sivey P, Meng Q. Improving Chinese primary care providers’ recruitment and retention: a discrete choice experiment. Health Policy Plan. 2015;30(1):68–77. doi: 10.1093/heapol/czt098.

- 72. Holte JH, Kjaer T, Abelsen B, Olsen JA. The impact of pecuniary and non-pecuniary incentives for attracting young doctors to rural general practice. Soc Sci Med. 2015;128:1–9. doi: 10.1016/j.socscimed.2014.12.022.

- 73. Kjaer NK, Halling A, Pedersen LB. General practitioners’ preferences for future continuous professional development: evidence from a Danish discrete choice experiment. Educ Prim Care. 2015;26(1):4–10. doi: 10.1080/14739879.2015.11494300.

- 74. Kasteng F, Settumba S, Källander K, Vassall A. Valuing the work of unpaid community health workers and exploring the incentives to volunteering in rural Africa. Health Policy Plan. 2016;31(2):205–216. doi: 10.1093/heapol/czv042.

- 75. Chen TT, Lai MS, Chung KP. Participating physician preferences regarding a pay-for-performance incentive design: a discrete choice experiment. Int J Qual Health Care. 2016;28(1):40–46. doi: 10.1093/intqhc/mzv098.

- 76. Shiratori S, Agyekum EO, Shibanuma A, et al. Motivation and incentive preferences of community health officers in Ghana: an economic behavioral experiment approach. Hum Resour Health. 2016:1–26. doi: 10.1186/s12960-016-0148-1.

- 77. Baltussen R, ten Asbroek AHA, Koolman X, Shrestha N, Bhattarai P, Niessen LW. Priority setting using multiple criteria: should a lung health programme be implemented in Nepal? Health Policy Plan. 2007;22(3):178–185. doi: 10.1093/heapol/czm010.

- 78. Youngkong S, Baltussen R, Tantivess S, Koolman X, Teerawattananon Y. Criteria for priority setting of HIV/AIDS interventions in Thailand: a discrete choice experiment. BMC Health Serv Res. 2010;10(1):197. doi: 10.1186/1472-6963-10-197.

- 79. Linley WG, Hughes DA. Decision-makers’ preferences for approving new medicines in Wales: a discrete-choice experiment with assessment of external validity. PharmacoEconomics. 2013;31(4):345–355. doi: 10.1007/s40273-013-0030-0.

- 80. Farley K, Thompson C, Hanbury A, Chambers D. Exploring the feasibility of conjoint analysis as a tool for prioritizing innovations for implementation. Implement Sci. 2013;8:56. doi: 10.1186/1748-5908-8-56.

- 81. Seghieri C, Mengoni A, Nuti S. Applying discrete choice modelling in a priority setting: an investigation of public preferences for primary care models. Eur J Health Econ. 2014;15(7):773–785. doi: 10.1007/s10198-013-0542-8.

- 82. Erdem S, Thompson C. Prioritising health service innovation investments using public preferences: a discrete choice experiment. BMC Health Serv Res. 2014;14(1):360. doi: 10.1186/1472-6963-14-360.

- 83. Honda A, Ryan M, Van Niekerk R, McIntyre D. Improving the public health sector in South Africa: eliciting public preferences using a discrete choice experiment. Health Policy Plan. 2015;30(5):600–611. doi: 10.1093/heapol/czu038.

- 84. Paolucci F, Mentzakis E, Defechereux T, Niessen LW. Equity and efficiency preferences of health policy makers in China: a stated preference analysis. Health Policy Plan. 2015;30(8):1059–1066. doi: 10.1093/heapol/czu123.

- 85. Ammi M, Peyron C. Heterogeneity in general practitioners’ preferences for quality improvement programs: a choice experiment and policy simulation in France. Health Econ Rev. 2016;6(1):44. doi: 10.1186/s13561-016-0121-7.

- 86. Gong CL, Hay JW, Meeker D, Doctor JN. Prescriber preferences for behavioural economics interventions to improve treatment of acute respiratory infections: a discrete choice experiment. BMJ Open. 2016;6(9):e012739. doi: 10.1136/bmjopen-2016-012739.

- 87. Drummond MF, Sculpher MJ, Torrance GW, O’Brien BJ, Stoddart GL. Methods for the economic evaluation of health care programmes. 3rd ed. Oxford: Oxford University Press; 2005. http://econpapers.repec.org/RePEc:oxp:obooks:9780198529453. Accessed 1 Dec 2016.

- 88. Aarons GA, Covert J, Skriner LC, et al. The eye of the beholder: youths and parents differ on what matters in mental health services. Adm Policy Ment Health. 2010;37(6):459–467. doi: 10.1007/s10488-010-0276-1.

- 89. Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: the Evidence-Based Practice Attitude Scale (EBPAS). Ment Health Serv Res. 2004;6(2):61–74. doi: 10.1023/B:MHSR.0000024351.12294.65.

- 90. Chaudoir SR, Dugan AG, Barr CH. Measuring factors affecting implementation of health innovations: a systematic review of structural, organizational, provider, patient, and innovation level measures. Implement Sci. 2013;8(22):20. doi: 10.1186/1748-5908-8-22.

- 91. Lagarde M, Blaauw D. A review of the application and contribution of discrete choice experiments to inform human resources policy interventions. Hum Resour Health. 2009;7:62. doi: 10.1186/1478-4491-7-62.

- 92. Scott A. Eliciting GPs’ preferences for pecuniary and non-pecuniary job characteristics. J Health Econ. 2001;20(3):329–347. doi: 10.1016/S0167-6296(00)00083-7.

- 93. Pignone MP, Crutchfield TM, Brown PM, et al. Using a discrete choice experiment to inform the design of programs to promote colon cancer screening for vulnerable populations in North Carolina. BMC Health Serv Res. 2014;14(1):611–629. doi: 10.1186/s12913-014-0611-4.

- 94. Salloum RG, Abbyad CW, Kohler RE, Kratka AK, Oh L, Wood KA. Assessing preferences for a university-based smoking cessation program in Lebanon: a discrete choice experiment. Nicotine Tob Res. 2015;17(5):580–585. doi: 10.1093/ntr/ntu188.

- 95. De Bekker-Grob EW, Ryan M, Gerard K. Discrete choice experiments in health economics: a review of the literature. Health Econ. 2012;21(2):145–172. doi: 10.1002/hec.1697.

- 96. Schlereth C, Eckert C, Schaaf R, Skiera B. Measurement of preferences with self-explicated approaches: a classification and merge of trade-off- and non-trade-off-based evaluation types. Eur J Oper Res. 2014;238(1):185–198. doi: 10.1016/j.ejor.2014.03.010.

- 97. Tomoaia-Cotisel A, Scammon DL, Waitzman NJ, et al. Context matters: the experience of 14 research teams in systematically reporting contextual factors important for practice change. Ann Fam Med. 2013;11(Suppl 1). doi: 10.1370/afm.1549.

- 98. Mason J, Freemantle N, Nazareth I, Eccles M, Haines A, Drummond M. When is it cost-effective to change the behavior of health professionals? JAMA. 2001;286(23):2988–2992. doi: 10.1001/jama.286.23.2988.

- 99. Sculpher M. Evaluating the cost-effectiveness of interventions designed to increase the utilization of evidence-based guidelines. Fam Pract. 2000;17(Suppl 1):S26–S31. doi: 10.1093/fampra/17.suppl_1.S26.

- 100. Sampson UKA, Chambers D, Riley W, Glass RI, Engelgau MM, Mensah GA. Implementation research: the fourth movement of the unfinished translation research symphony. Glob Heart. 2016;11(1):153–158. doi: 10.1016/j.gheart.2016.01.008.
