Abstract

Assessment has always been an essential component of postgraduate medical education and for many years focused predominantly on various types of examinations. While examinations of medical knowledge and, more recently, of clinical skills with standardized patients can assess learner capability in controlled settings and provide a level of assurance for the public, persistent and growing concerns regarding quality of care and patient safety worldwide have raised the importance of, and need for, better work-based assessments. Work-based assessments, when done effectively, can more authentically capture the abilities of learners to actually provide safe, effective, patient-centered care. Furthermore, we have entered the era of interprofessional care, where effective teamwork among multiple health care professionals is now paramount. Work-based assessment methods are now essential in an interprofessional healthcare world.

To better prepare learners for these newer competencies and the ever-growing complexity of healthcare, many postgraduate medical education systems across the globe have turned to outcomes-based models of education, codified through competency frameworks. This commentary provides a brief overview of key methods of work-based assessment, such as direct observation, multisource feedback, patient experience surveys and performance measures, that are needed in a competency-based world that places a premium on educational and clinical outcomes. However, the full potential of work-based assessments will only be realized if postgraduate learners play an active role in their own assessment program. This will require a substantial culture change, and culture change only occurs through actions and changed behaviors. Co-production offers a practical and philosophical approach to engaging postgraduate learners to be active, intrinsically motivated agents for their own professional development, to help change learning culture and to contribute to improving programmatic assessment in postgraduate training.

Keywords: competency-based medical education models, Co-production of assessment, programmatic assessment

Zusammenfassung

Prüfungen waren schon immer ein wesentlicher Bestandteil der postgraduierten medizinischen Weiterbildung und haben sich über viele Jahre vorwiegend auf verschiedene Prüfungsformen konzentriert. Durch die Prüfung medizinischen Wissens und neuerdings klinischer Fertigkeiten mit Hilfe von standardisierten Patienten können die Leistungen des Lernenden unter kontrollierten Bedingungen bewertet und eine gewisse Qualitätssicherung für die Öffentlichkeit gewährleistet werden. Anhaltende und wachsende Bedenken bezüglich der Versorgungsqualität und Patientensicherheit weltweit haben die Bedeutung und Notwendigkeit von besseren arbeitsbasierten Bewertungen erhöht. Effektiv durchgeführt können diese die Fähigkeit der Lernenden, eine sichere, wirksame und patientenorientierte Versorgung durchzuführen, verlässlicher erfassen. Außerdem befinden wir uns in einem Zeitalter der interprofessionellen medizinischen Versorgung, in der effektive Teamarbeit zwischen verschiedenen Gesundheitsberufen ganz im Vordergrund steht. Arbeitsbasierte Bewertungsmethoden sind in einer interprofessionellen Welt der Gesundheitsversorgung unverzichtbar.

Um Lernende besser auf diese neuen Kompetenzen und die zunehmende Komplexität der Gesundheitsversorgung vorzubereiten, haben viele postgraduierte medizinische Weiterbildungssysteme weltweit Outcome-basierte Weiterbildungsmodelle aufgegriffen, die sich an kompetenzorientierten Rahmenstrukturen orientieren. Dieser Artikel bietet einen kurzen Überblick über Schlüsselmethoden der arbeitsbasierten Bewertung, wie etwa die direkte Beobachtung, Multisource-Feedback, Patientenerfahrungsumfragen und Leistungsbewertungen, die in einer kompetenzbasierten Weiterbildung erforderlich sind, in der klinischen und bildungsorientierten Ergebnissen (Outcomes) ein besonderer Wert zukommt. Allerdings wird das volle Potenzial arbeitsbasierter Bewertungen erst dann ausgeschöpft, wenn postgraduierte Lernende selbst eine aktive Rolle in ihrem Prüfungsprogramm übernehmen. Voraussetzung ist ein entscheidender Kulturwandel, der nur durch aktives Handeln und eine Verhaltensänderung eintreten kann. Das Modell der Co-Produktion bietet eine praktische und philosophische Herangehensweise, um postgraduierte Lernende für eine aktive und intrinsisch motivierte Partizipation an ihrer eigenen professionellen Entwicklung zu gewinnen, dadurch die Lernkultur zu verändern und zur Verbesserung eines programmatischen Prüfungskonzeptes für die postgraduierte Weiterbildung beizutragen.

Background

Assessment has always been an essential aspect of learning across all health professions. Assessment of learning, or more specifically of knowledge, dominated medical education in the last century [1]. Toward the latter quarter of the 20th century, important advances were made in simulation, to the point that approaches such as the objective structured clinical examination (OSCE) became a core component of some national licensing processes [2]. In 1990 George Miller published his now iconic assessment pyramid highlighting the levels of knows, knows how, shows how and does [3]. Assessments such as knowledge examinations and OSCEs address the knows, knows how and shows how levels and have been important in assuring the public of a minimal level of competence among health professionals.

However, as the use of examinations and OSCEs expanded, evidence of major problems and deficiencies in the delivery of health care was also emerging. Over the last 40-plus years, multiple studies have demonstrated substantial issues in patient safety, medical errors, underuse of evidence-based therapies, lack of patient-centeredness and overuse of tests, procedures and therapies that have limited to no medical value [4], [5], [6], [7]. For example, the Commonwealth Fund routinely publishes comparative data from multiple countries that show all health systems have substantial room to improve [8]. Recently, Makary and Daniel found that medical errors may be the third leading cause of death in the United States [9].

It was against this backdrop of quality and safety concerns that the outcomes-based education movement began to take hold in a number of countries [10], [11], [12], [13]. Medical education began to shift from a process model (i.e. simply completing a course of instruction and experiential activities) to an outcomes model focused on determining what graduates can actually do when caring for patients and families. This trend became particularly evident in postgraduate training programs, where the predominant form of learning is experiential, through caring for patients and families. Educational outcomes in postgraduate training were subsequently codified as competencies [12], [13]. A competency is simply an observable ability of a health professional, integrating multiple components such as knowledge, skills, values and attitudes [14]. Competencies provide the framework for curricula and assessment to ensure health professionals can perform the key clinical activities of their discipline.

The rapidly increasing complexity of both basic medical and healthcare delivery sciences mandated a reexamination of what abilities were needed by professionals for modern and future practice. In the United States, specialty postgraduate training, called residency, typically follows four years of college and four years of medical school. Residency education in the United States has been guided by six general competencies, or competency domains, since 2001: patient care, medical knowledge, professionalism, interpersonal skills and communication, practice-based learning and improvement and systems-based practice [13]. The competencies of practice-based learning and improvement and systems-based practice were specifically created to target areas such as evidence-based practice and use of clinical decision support systems and resources, quality improvement, patient safety science, care coordination, interprofessional teamwork, effective use of health information technology and stewardship of resources. Greater attention was also directed toward communication skills such as shared and informed decision making, health literacy and numeracy.

It became increasingly clear that traditional methods of assessment that targeted the knows, knows how and shows how levels were insufficient to assess these critical new competencies and meet the needs of patients [15], [16]. Furthermore, decades of not adequately observing and assessing the core clinical skills of medical interviewing, physical examination, informed decision making and clinical reasoning have had a major negative impact on quality of care [17], [18]. This was also part of George Miller’s message about the importance of the does level of the pyramid: determining what health professionals can actually do is the ultimate goal of education and assessment [3].

Competencies have also been difficult to implement. Faculty often weight competencies idiosyncratically, too many assessment tools presumably designed for a competency-based approach are overly reductionist (e.g. a tick box exercise) and there has been a general lack of a programmatic approach to assessment [19], [20]. Finally, evidence is beginning to emerge on the role of culture and the factors that enable learner engagement, both of which will have a major impact on how learners embrace assessment as active participants [21], [22]. These factors, among others, have led to the push for more and better work-based assessments.

Emergence of Work-based Assessment

While work-based assessments (WBAs) have been used for a long time, they have been, and in many cases still are, used poorly and ineffectively [17]. One example is the faculty evaluation form typically completed at the end of a clinical experience or rotation. Multiple past studies show rating problems such as range restriction, halo and leniency effects, accompanied by few to no narrative comments for feedback [16], [23]. Many of the ratings were, and often still are, based on proxies such as case presentations and rounds away from the bedside, with little direct observation of the learner with patients and families. This lack of effective assessment can also lead to the “failure to fail” phenomenon, where residents are promoted despite possessing significant dyscompetencies that potentially lead to patient harm [24].

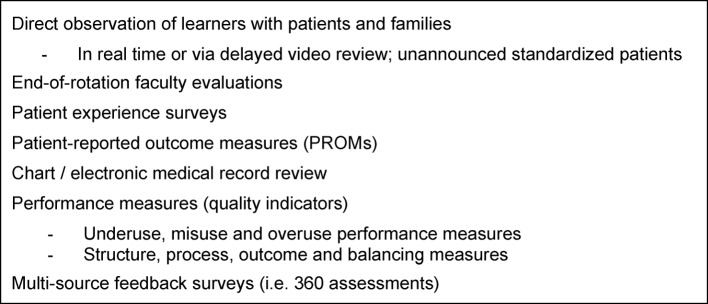

However, the last 15 years have seen the emergence of a number of new work-based assessment tools and approaches (see Figure 1). First, research on rater cognition has deepened our understanding of what affects faculty observations and has identified promising techniques to help faculty perform observation more effectively [25]. For example, Kogan and colleagues found that using interactive, conversational methods to deepen understanding of key clinical competencies, known as performance dimension and frame of reference training, can empower faculty in their observations of medical residents and may even transfer to improving their own clinical skills [26]. While much research and evaluation remains to be done, research is beginning to provide helpful guidance on how to better prepare faculty to assess through direct observation.

Figure 1. Examples of Work-based Assessments.

Another important development is the use of construct-aligned scales, especially those using entrustment-type anchors involving developmental stages, supervision or both. For example, Crossley and colleagues found that using scales focused on the constructs of “developing clinical sophistication and independence, or ‘entrustability’” in three assessment tools for the United Kingdom Foundation program led to better reliability and higher satisfaction among the faculty using these tools [27]. Weller and colleagues found similar effects in anesthesia training when using an entrustment supervision scale [28].

Multisource feedback (MSF), including the increasing use of patient experience surveys, is another important development in work-based assessment [29]. Feedback from peers, patients, families and other members of the healthcare team can be a useful and powerful aid to professional development and is especially important in reconfigured healthcare delivery that recognizes the critical importance of interprofessional teamwork in delivering safe, effective, patient-centered care. Another growing area is the use of patient reported outcome measures (PROMs) that specifically target functional outcomes for patients [30]. For example, while avoiding deep venous thrombosis and infection is paramount in the peri-operative period after joint arthroplasty, the ultimate goal is to improve physical function. The Oxford hip and knee scales are examples of PROMs currently in use and can be useful adjuncts to a program of assessments [30].

Performance Measures in Postgraduate Education

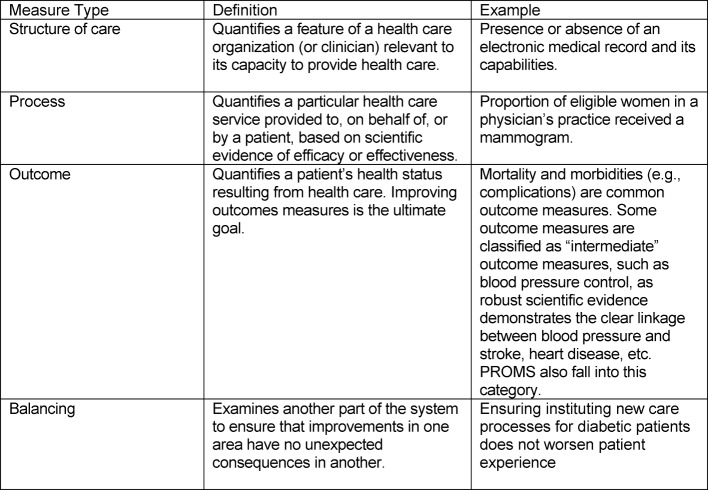

Table 1. Categories and Definitions of Performance Measures.

Performance measures “are designed to measure systems of care and are derived from clinical or practice guidelines. Data that are defined into specific measurable elements provides an organization with a meter to measure the quality of its care.” [31]. Performance measures typically fall into four main categories (see Table 1) [31], [32]. In addition to patient experience measures, measurement and assessment of clinical performance is now an essential component of both quality assurance and improvement efforts and will increasingly need to find its way into health professions training. One method to assess clinical performance, in addition to direct observation, patient surveys and MSF, is review, or audit, of the medical record [33]. Medical records serve a number of important functions:

- as an archive of important patient medical information for use by other healthcare providers and patients;

- as a source of data to assess performance in practice in areas such as chronic medical conditions (e.g. diabetes), post-operative care or prevention;

- monitoring of patient safety and complications; and

- documentation of diagnostic and therapeutic decisions.

One can readily see how the medical record can be used for educational and assessment purposes [33], [34], [35], [36], [37].

For health professions training programs, the first three types of measures are important to examine the relationships between the quality of care provided and educational outcomes and ensure the learning environment is performing optimally. For more advanced training programs, additional experience in balanced measures (“balanced scorecards”) [31] may be useful. For the individual learner, process and outcome measures will usually be the most useful types of measures for assessment, feedback and continuous professional development.

Practice reviews are an essential method in the evaluation of the competencies of Practice-based Learning and Improvement (PBLI) and Systems-based Practice (SBP) in the United States general competency framework [13] and the Leader role in CanMEDS [38]. These competencies require that residents be actively involved in monitoring their own clinical practice and improving the quality of care based on a systematic review of the care they provide. Practice review, using the medical record, can promote self-reflection and support self-regulated learning, both important skills for life-long learning. The key message here is that review of clinical performance is something that learners can potentially do as a self-directed activity.

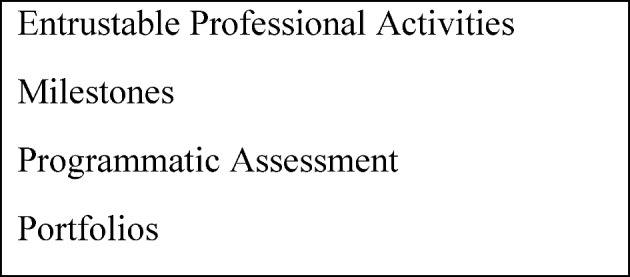

New Concepts to Guide Assessments

While the above work-based assessments are improving and being increasingly used, training programs have struggled to synthesize and integrate all this assessment data into judgments about learner progress. Four major developments have occurred as attempts to guide both curriculum and assessment (see Figure 2). The first newer concept is entrustable professional activities (EPAs). As defined by ten Cate, an EPA “can be defined as a unit of professional practice that can be fully entrusted to a trainee, as soon as he or she has demonstrated the necessary competence to execute this activity unsupervised.” [39], [40], [41]. The second new concept is the use of milestones to describe developmental stages of individual competencies in narrative terms [42], [43]. EPAs describe the actual activities health professionals do, while milestones describe the abilities needed by the individual to effectively execute the activity (i.e. EPA). EPAs and competencies, described by milestone narratives, can be combined to richly define an educational trajectory and guide what assessments are needed to determine learner progression [44]. The third major development has been the push for programmatic assessment that can effectively integrate multiple assessments into decisions and judgments and that uses group process to make judgments on progress [45], [46]. Together, EPAs, milestones and programmatic assessment provide useful frameworks and approaches to enhance the development and effective use of work-based assessments. Fourth, while not necessarily a new concept, portfolios are growing in importance as an effective way to facilitate and manage programmatic assessment and track developmental progression through EPAs and milestones [47]. Portfolios can also enhance learner engagement.

However, while advances in work-based assessment methods and concepts have helped to move outcomes-based education forward, insufficient attention has been directed toward the role of the learner in assessment.

Figure 2. Important Concepts in Work-based Assessment.

Activating the Learner through Co-production

In order to achieve mastery in practice, learners need specific skills in reflection and mindful practice, self-regulated learning and informed self-assessment. Reflection on practice involves a thoughtful and deliberate review of one’s past performance (e.g. a medical record review or MSF), and is often best done in conversation with a trusted peer or advisor. This is where work-based assessment can be particularly helpful as it represents authentic work and can be reviewed in a continuous and longitudinal manner.

Self-regulated learning requires that learners set specific goals, develop strategic plans, self-monitor and self-assess as they participate in their education. Self-regulated learning thereby engages learners in the three essential elements of forethought, actual performance and subsequent self-reflection [48]. Self-regulated learning also requires awareness of the educational context and recognition that how the learner responds to and actually influences that context will affect the impact of their assessments [49]. Self-regulated learning also requires substantial intrinsic motivation [48], [49]. If intrinsic motivation is not a major driver for the learner, it is less likely assessment will have the intended effects. Learners should not rely on extrinsic motivators (e.g. rewards or penalties) as a successful career strategy. Incorporating the key tenets of self-regulated learning into plans is both logical and supported by educational theory [49].

Informed self-assessment requires that learners proactively seek out assessment from faculty and members of the healthcare team, perform aspects of their own assessment such as clinical performance reviews or evidence-based practice and actively engage their assessment data for professional development as part of their own accountability [50], [51]. In other words, learners need to be producers of their own learning and assessment in collaboration and partnership with faculty and the program. This is where the concept of “co-production” can be helpful in realizing the promise of outcomes-based education.

Co-production and Work-based Assessment

The concept of co-production was originally developed in the context of public services and can be defined as “delivering public services in an equal and reciprocal relationship between professionals, people using services, their families and their neighbours. Where activities are co-produced in this way, both services and neighbourhoods become far more effective agents of change.” [52]. More recently, Batalden and colleagues extended this concept to healthcare in a way that has important implications for medical education. They defined co-production as the “interdependent work of users and professionals to design, create, develop, deliver, assess and improve the relationships and actions that contribute to the health of individuals and populations.” [53]. In their model, professionals and patients co-produce health and healthcare through civil discourse, co-planning and co-execution within the context of supportive institutions and the larger healthcare system.

It is not a long reach to see how co-production can be applied within medical education and work-based assessment. Work-based assessment through a co-production lens could be defined as the “interdependent work of learners, faculty, health professionals and patients to design, create, develop, deliver, assess and improve the relationships and activities that contribute to the effective assessment and professional development of learners.” [53]. Combining the key principles of self-regulated learning, informed self-assessment and co-production makes it clear that learners must be “active agents” in their own assessment program.

Activated learners should be empowered to ask for direct observation, feedback and coaching. Activated learners must also perform some of their own assessment, such as progress testing, review of their own clinical practice, pursuit and documentation of answers to clinical questions through effective evidence-based practice, seeking feedback from patients and members of interprofessional care teams and developing and executing individual learning plans. Empowering residents to seek and co-produce part of their assessments is undergirded by the tenets of self-determination theory (SDT), which posits that humans possess three innate psychological needs: competence, autonomy and relatedness [54]. Equally important, SDT provides insight into how residents can internalize regulation of behavior such as assessment, which has often been driven purely by faculty and the program, or in other words, by more external motivators. The ultimate goal is to help the resident develop autonomous, self-determined activity in assessment [54]. For medicine, this will require a substantial culture change, and culture change only occurs through actions and changed behaviors.

Conclusion

With the rise of competency-based medical education has come a greater need for effective work-based assessments that can guide professional development and help improve entrustment decision making. EPAs, milestones and programmatic assessment can help to guide the appropriate choice and development of work-based assessments to determine meaningful educational outcomes. Finally, and perhaps most importantly, learners across the educational continuum must become active agents in their program of assessment if we are to realize the full promise of competency-based medical education models. This will require a substantial culture change, and culture change only occurs through actions and changed behaviors. Co-production of assessment by learners with faculty, training programs and patients provides a useful conceptual path to change assessment culture and move competency-based medical education forward.

Competing interests

Dr. Holmboe works for the Accreditation Council for Graduate Medical Education and receives royalties for a textbook on assessment from Mosby-Elsevier.

References

- 1.Cooke M, Irby DM, O'Brien BC. Educating Physicians. A call for Reform of Medical School and Residency. San Francisco: Jossey-Bass; 2010. [Google Scholar]

- 2.Swanson DB, Roberts TE. Trends in national licensing examinations in medicine. Med Educ. 2016;50(1):101–114. doi: 10.1111/medu.12810. Available from: http://dx.doi.org/10.1111/medu.12810. [DOI] [PubMed] [Google Scholar]

- 3.Miller G. The assessment of clinical skills/competence/performance. Acad Med. 1990;65(9 Suppl):S63–S67. doi: 10.1097/00001888-199009000-00045. Available from: http://dx.doi.org/10.1097/00001888-199009000-00045. [DOI] [PubMed] [Google Scholar]

- 4.National Academy of Health Sciences, Institute of Medicine. To Err is Human: Building a Safer Health System. Washington, DC: National Academy of Health Sciences, Institut of Medicine; 1999. [Google Scholar]

- 5.National Patient Safety Foundation. Free from Harm: Accelerating Patient Safety Improvement Fifteen Years after To Err Is Human. Boston: National Patient Safety Foundation; 2016. Available from: http://www.npsf.org/?page=freefromharm. [Google Scholar]

- 6.Institute of Medicine. Crossing the Quality Chasm. Washington, DC: National Academy Press; 2001. [Google Scholar]

- 7.Berwick DM, Nolan TW, Whittington L. The triple aim: care, health cost. Health Aff (Millwood) 2008;27(3):759–769. doi: 10.1377/hlthaff.27.3.759. Available from: http://dx.doi.org/10.1377/hlthaff.27.3.759. [DOI] [PubMed] [Google Scholar]

- 8.Mossialos E, Wenzl M, Osborn R, Anderson C. International Profiles of Health Care Systems, 2014. Australia, Canada, Denmark, England, France, Germany, Italy, Japan, The Netherlands, New Zealand, Norway, Singapore, Sweden, Switzerland, and the United States. New York: The Commonwealth Fund; 2015. Available from: http://www.commonwealthfund.org/publications/fund-reports/2015/jan/international-profiles-2014. [Google Scholar]

- 9.Makary MA, Daniel M. Medical error – the third leading cause of death in the US. BMJ. 2016;353:i2139. doi: 10.1136/bmj.i2139. Available from: http://dx.doi.org/10.1136/bmj.i2139. [DOI] [PubMed] [Google Scholar]

- 10.Frenk J, Chen L, Bhutta ZA, Cohen J, Crisp N, Evans T, Fineberg H, Garcia P, Ke Y, Kelly P, Kistnasamy B, Meleis A, Naylor D, Pablos-Mendez A, Reddy S, Scrimshaw S, Sepulveda J, Serwadda D, Zurayk H. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet. 2010;376(9756):1923–1958. doi: 10.1016/S0140-6736(10)61854-5. Available from: http://dx.doi.org/10.1016/S0140-6736(10)61854-5. [DOI] [PubMed] [Google Scholar]

- 11.Harden RM, Crosby JR, Davis M. An introduction to outcome-based education. Med Teach. 1999;21(1):7–14. doi: 10.1080/01421599979969. Available from: http://dx.doi.org/10.1080/01421599979969. [DOI] [PubMed] [Google Scholar]

- 12.Frank JR, Jabbour M, Tugwell P The Societal Needs Working Group. Skills for the new millennium: Report of the societal needs working group, CanMEDS 2000 Project. Ann R Coll Phys Surg Can. 1996;29:206–216. [Google Scholar]

- 13.Batalden P, Leach D, Swing S, Dreyfus H, Dreyfus S. General competencies and accreditation in graduate medical education. Health Aff (Millwood) 200;21(5):103–111. doi: 10.1377/hlthaff.21.5.103. [DOI] [PubMed] [Google Scholar]

- 14.Frank JR, Mungroo R, Ahmad Y, Wang M, De Rossi S, Horsley T. Toward a definition of competency-based education in medicine: a systematic review of published definitions. Med Teach. 2010;32(8):631–637. doi: 10.3109/0142159X.2010.500898. Available from: http://dx.doi.org/10.3109/0142159X.2010.500898. [DOI] [PubMed] [Google Scholar]

- 15.Rethans JJ, Norcini JJ, Barón-Maldonado M, Blackmore D, Jolly BC, LaDuca T, Lew S, Page G, Southgate LH. The relationship between competence and performance: implications for assessing practice performance. Med Educ. 2002;36(10):901–909. doi: 10.1046/j.1365-2923.2002.01316.x. Available from: http://dx.doi.org/10.1046/j.1365-2923.2002.01316.x. [DOI] [PubMed] [Google Scholar]

- 16.Kogan JR, Holmboe E. Realizing the promise and importance of performance-based assessment. Teach Learn Med. 2013;25(Suppl 1):S68–S74. doi: 10.1080/10401334.2013.842912. Available from: http://dx.doi.org/10.1080/10401334.2013.842912. [DOI] [PubMed] [Google Scholar]

- 17.Holmboe ES. Faculty and the observation of trainees' clinical skills: problems and opportunities. Acad Med. 2004;79(1):16–22. doi: 10.1097/00001888-200401000-00006. Available from: http://dx.doi.org/10.1097/00001888-200401000-00006. [DOI] [PubMed] [Google Scholar]

- 18.Kogan JR, Conforti LN, Iobst WF, Holmboe ES. Reconceptualizing variable rater assessments as both an educational and clinical care problem. Acad Med. 2014;89(5):721–727. doi: 10.1097/ACM.0000000000000221. Available from: http://dx.doi.org/10.1097/ACM.0000000000000221. [DOI] [PubMed] [Google Scholar]

- 19.Ginsburg S, McIlroy J, Oulanova O, Eva K, Regehr G. Toward authentic clinical evaluation: pitfalls in the pursuit of competency. Acad Med. 2010;85(5):780–786. doi: 10.1097/ACM.0b013e3181d73fb6. Available from: http://dx.doi.org/10.1097/ACM.0b013e3181d73fb6. [DOI] [PubMed] [Google Scholar]

- 20.Van der Vleuten CP. Revisiting 'assessing professional competence: from methods to programmes'. Med Educ. 2016;50(9):885–888. doi: 10.1111/medu.12632. Available from: http://dx.doi.org/10.1111/medu.12632. [DOI] [PubMed] [Google Scholar]

- 21.Iblher P, Hofmann M, Zupanic M, Breuer G. What motivates young physicians? – a qualitative analysis of the learning climate in specialist medical training. BMC Med Educ. 2015;15:176. doi: 10.1186/s12909-015-0461-8. Available from: http://dx.doi.org/10.1186/s12909-015-0461-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Heeneman S, Oudkerk Pool A, Schuwirth LW, van der Vleuten CP, Driessen EW. The impact of programmatic assessment on student learning: theory versus practice. Med Educ. 2015;49(5):487–498. doi: 10.1111/medu.12645. Available from: http://dx.doi.org/10.1111/medu.12645. [DOI] [PubMed] [Google Scholar]

- 23.Pangaro LN, Durning S, Holmboe ES. Practical Guide to the Evaluation of Clinical Competence. 2nd ed. Philadelphia: Mosby-Elsevier; Rating scales. [Google Scholar]

- 24.Albanese M. Rating educational quality: factors in the erosion of professional standards. Acad Med. 1999;74(6):652–658. doi: 10.1097/00001888-199906000-00009. Available from: http://dx.doi.org/10.1097/00001888-199906000-00009. [DOI] [PubMed] [Google Scholar]

- 25.Gingerich A, Kogan J, Yeates P, Govaerts M, Holmboe E. Seeing the 'black box' differently: assessor cognition from three research perspectives. Med Educ. 2014;48(11):1055–1068. doi: 10.1111/medu.12546. Available from: http://dx.doi.org/10.1111/medu.12546. [DOI] [PubMed] [Google Scholar]

- 26.Kogan JR, Conforti LN, Bernabeo E, Iobst W, Holmboe E. How faculty members experience workplace-based assessment rater training: a qualitative study. Med Educ. 2015;49(7):692–708. doi: 10.1111/medu.12733. Available from: http://dx.doi.org/10.1111/medu.12733. [DOI] [PubMed] [Google Scholar]

- 27.Crossley J, Johnson G, Booth J, Wade W. Good questions, good answers: construct alignment improves the performance of workplace-based assessment scales. Med Educ. 2011;45(6):560–569. doi: 10.1111/j.1365-2923.2010.03913.x. Available from: http://dx.doi.org/10.1111/j.1365-2923.2010.03913.x. [DOI] [PubMed] [Google Scholar]

- 28.Weller JM, Misur M, Nicolson S, Morris J, Ure S, Crossley J, Jolly B. Can I leave the theatre? A key to more reliable workplace-based assessment. Br J Anaesth. 2014;112(6):1083–1091. doi: 10.1093/bja/aeu052. Available from: http://dx.doi.org/10.1093/bja/aeu052. [DOI] [PubMed] [Google Scholar]

- 29.Lockyer J. Multisource feedback: can it meet criteria for good assessment? J Contin Educ Health Prof. 2013;33(2):89–98. doi: 10.1002/chp.21171. Available from: http://dx.doi.org/10.1002/chp.21171. [DOI] [PubMed] [Google Scholar]

- 30.Appleby J, Devlin N. Getting the most ourt of PROMs. London: The Kings' Fund; 2010. Available from: http://www.kingsfund.org.uk/publications/getting-most-out-proms. [Google Scholar]

- 31. Donabedian A. An Introduction to Quality Assurance in Health Care. New York: Oxford University Press; 2003.

- 32. U.S. Department of Health and Human Services, Health Resources and Services Administration. Performance management and measurement. Rockville: U.S. Department of Health and Human Services; 2011. Available from: http://www.hrsa.gov/quality/toolbox/methodology/performancemanagement/index.html.

- 33. Holmboe ES. Clinical practice review. In: Practical Guide to the Evaluation of Clinical Competence. 2nd ed. Philadelphia: Mosby-Elsevier.

- 34. Ogrinc G, Headrick LA, Morrison LJ, Foster T. Teaching and assessing resident competence in practice-based learning and improvement. J Gen Intern Med. 2004;19(5 Pt 2):496–500. doi: 10.1111/j.1525-1497.2004.30102.x. Available from: http://dx.doi.org/10.1111/j.1525-1497.2004.30102.x.

- 35. Veloski J, Boex JR, Grasberger MJ, Evans A, Wolfson DB. Systematic review of the literature on assessment, feedback and physicians' clinical performance: BEME Guide No. 7. Med Teach. 2006;28(2):117–128. doi: 10.1080/01421590600622665. Available from: http://dx.doi.org/10.1080/01421590600622665.

- 36. Ivers N, Jamtvedt G, Flottorp S, Young JM, Odgaard-Jensen J, French SD, O'Brien MA, Johansen M, Grimshaw J, Oxman AD. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;6:CD000259. doi: 10.1002/14651858.CD000259.pub3. Available from: http://dx.doi.org/10.1002/14651858.CD000259.pub3.

- 37. Brehaut JC, Colquhoun HL, Eva KW, Carroll K, Sales A, Michie S, Ivers N, Grimshaw JM. Practice feedback interventions: 15 suggestions for optimizing effectiveness. Ann Intern Med. 2016;164(6):435–441. doi: 10.7326/M15-2248. Available from: http://dx.doi.org/10.7326/M15-2248.

- 38. Royal College of Physicians and Surgeons of Canada. CanMEDS. Ottawa: Royal College of Physicians and Surgeons of Canada; 2015. Available from: http://canmeds.royalcollege.ca/uploads/en/framework/CanMEDS%202015%20Framework_EN_Reduced.pdf.

- 39. ten Cate O. Entrustability of professional activities and competency-based training. Med Educ. 2005;39(12):1176–1177. doi: 10.1111/j.1365-2929.2005.02341.x. Available from: http://dx.doi.org/10.1111/j.1365-2929.2005.02341.x.

- 40. ten Cate O, Scheele F. Competency-based postgraduate training: can we bridge the gap between theory and clinical practice? Acad Med. 2007;82(6):542–547. doi: 10.1097/ACM.0b013e31805559c7. Available from: http://dx.doi.org/10.1097/ACM.0b013e31805559c7.

- 41. ten Cate O. Nuts and bolts of entrustable professional activities. J Grad Med Educ. 2013;5(1):157–158. doi: 10.4300/JGME-D-12-00380.1. Available from: http://dx.doi.org/10.4300/JGME-D-12-00380.1.

- 42. Holmboe ES, Edgar L, Hamstra S. The Milestones Guidebook. Chicago: ACGME; 2016. Available from: http://www.acgme.org/Portals/0/MilestonesGuidebook.pdf?ver=2016-05-31-113245-103.

- 43. Holmboe ES, Yamazaki K, Edgar L, Conforti L, Yaghmour N, Miller R, Hamstra SJ. Reflections on the first 2 years of milestone implementation. J Grad Med Educ. 2015;7(3):506–511. doi: 10.4300/JGME-07-03-43. Available from: http://dx.doi.org/10.4300/JGME-07-03-43.

- 44. Ten Cate O, Chen HC, Hoff RG, Peters H, Bok H, van der Schaaf M. Curriculum development for the workplace using Entrustable Professional Activities (EPAs): AMEE Guide No. 99. Med Teach. 2015;37(11):983–1002. doi: 10.3109/0142159X.2015.1060308. Available from: http://dx.doi.org/10.3109/0142159X.2015.1060308.

- 45. Bok HG, Teunissen PW, Favier RP, Rietbroek NJ, Theyse LF, Brommer H, Haarhuis JC, van Beukelen P, van der Vleuten CP, Jaarsma DA. Programmatic assessment of competency-based workplace learning: when theory meets practice. BMC Med Educ. 2013;13:123. doi: 10.1186/1472-6920-13-123. Available from: http://dx.doi.org/10.1186/1472-6920-13-123.

- 46. Heeneman S, Oudkerk Pool A, Schuwirth LW, van der Vleuten CP, Driessen EW. The impact of programmatic assessment on student learning: theory versus practice. Med Educ. 2015;49(5):487–498. doi: 10.1111/medu.12645. Available from: http://dx.doi.org/10.1111/medu.12645.

- 47. Van Tartwijk J, Driessen EW. Portfolios for assessment and learning: AMEE Guide no. 45. Med Teach. 2009;31(9):790–801. doi: 10.1080/01421590903139201. Available from: http://dx.doi.org/10.1080/01421590903139201.

- 48. Zimmerman BJ. Investigating self-regulation and motivation: Historical background, methodological developments, and future prospects. Am Educ Res J. 2008;45:166–183. doi: 10.3102/0002831207312909. Available from: http://dx.doi.org/10.3102/0002831207312909.

- 49. Durning SJ, Cleary TJ, Sandars J, Hemmer P, Kokotailo P, Artino AR. Perspective: viewing "strugglers" through a different lens: how a self-regulated learning perspective can help medical educators with assessment and remediation. Acad Med. 2011;86(4):488–495. doi: 10.1097/ACM.0b013e31820dc384. Available from: http://dx.doi.org/10.1097/ACM.0b013e31820dc384.

- 50. Sargeant J, Armson H, Chesluk B, Dornan T, Eva K, Holmboe E, Lockyer J, Loney E, Mann K, van der Vleuten C. The processes and dimensions of informed self-assessment: a conceptual model. Acad Med. 2010;85(7):1212–1220. doi: 10.1097/ACM.0b013e3181d85a4e. Available from: http://dx.doi.org/10.1097/ACM.0b013e3181d85a4e.

- 51. Eva K, Regehr G. "I'll never play professional football" and other fallacies of self-assessment. J Contin Educ Health Prof. 2008;28(1):14–19. doi: 10.1002/chp.150. Available from: http://dx.doi.org/10.1002/chp.150.

- 52. Skills for Health. MH63.2013 Work with people and significant others to develop services to improve their mental health. Bristol: Skills for Health; 2004. Available from: https://tools.skillsforhealth.org.uk/competence/show/html/id/3833/

- 53. Batalden M, Batalden P, Margolis P, Seid M, Armstrong G, Opipari-Arrigan L, Hartung H. Coproduction of healthcare service. BMJ Qual Saf. 2016;25(7):509–517. doi: 10.1136/bmjqs-2015-004315. Available from: http://dx.doi.org/10.1136/bmjqs-2015-004315.

- 54. Ten Cate O, Kusurkar RA, Williams GC. How self-determination theory can assist our understanding of the teaching and learning processes in medical education. AMEE Guide No. 59. Med Teach. 2011;33(12):961–973. doi: 10.3109/0142159X.2011.595435. Available from: http://dx.doi.org/10.3109/0142159X.2011.595435.