Abstract

Importance

Effect sizes and confidence intervals (CIs) are critical for the interpretation of the results for any outcome of interest.

Objective

To evaluate the frequency of reporting effect sizes and CIs in the results of analytical studies.

Design, Setting, and Participants

Descriptive review of analytical studies published from January 2012 to December 2015 in JAMA Otolaryngology–Head & Neck Surgery.

Methods

A random sample of 121 articles was reviewed in this study. Descriptive studies were excluded from the analysis. Seven independent reviewers participated in the evaluation of the articles, with 2 reviewers assigned per article. The review process was standardized for each article; the Methods and Results sections were reviewed for the outcomes of interest. Descriptive statistics for each outcome were calculated and reported accordingly.

Main Outcomes and Measures

Primary outcomes of interest included the presence of effect size and associated CIs. Secondary outcomes of interest included a priori descriptions of statistical methodology, power analysis, and expectation of effect size.

Results

There were 107 articles included for analysis. The most common study design was the retrospective cohort study (n = 36 [34%]), followed by the cross-sectional study (n = 18 [17%]). A total of 58 articles (54%) reported an effect size for an outcome of interest. The most common effect size used was difference of mean, followed by odds ratio and correlation coefficient, which were reported 17 (16%), 15 (13%), and 12 times (11%), respectively. Confidence intervals were associated with 29 of these effect sizes (27%), and 9 of these articles (8%) included interpretation of the CI. A description of the statistical methodology was provided in 97 articles (91%), while 5 (5%) provided an a priori power analysis and 8 (7%) provided a description of expected effect size finding.

Conclusions and Relevance

Improving results reporting is necessary to enhance the reader’s ability to interpret the results of any given study. This can only be achieved through increasing the reporting of effect sizes and CIs rather than relying on P values for both statistical significance and clinically meaningful results.

This study evaluates the frequency of reporting effect sizes and confidence intervals in the results of analytical studies from JAMA Otolaryngology–Head & Neck Surgery.

Key Points

Question

What is the frequency of effect size and confidence interval reporting in JAMA Otolaryngology–Head & Neck Surgery from January 2012 to December 2015?

Findings

In this study of 121 articles, we found that approximately half reported an effect size and approximately half of those articles provided confidence intervals for the effect size. Less than 10% of the articles provided an a priori power analysis or an a priori mention of an effect size measure.

Meaning

This study identifies opportunities for improvements in the results reporting of otolaryngology literature through shifting away from null hypothesis statistical testing using P values to the reporting of effect sizes accompanied by confidence intervals.

Introduction

The primary outcome of interest in an analytic research investigation is inevitably a measure of effect size. An effect size is a quantifiable amount of anything that might be of interest. An effect size serves to answer the question, “How much of a change do we see?” or “How big of a difference exists between groups in the real world?” The answer to this question is essential to a reader’s ability to interpret the results of any given study.

Conventional research is largely conducted on a principle called null hypothesis significance testing (NHST), in which the P value is considered the most critical finding of a study. The predefined significance threshold of the P value, known as the α level, is typically set at .05. The P value is the most frequently used statistic in biomedical research for conveying results and the interpretation of a study. Investigators use the P value as a means to interpret both statistical significance and clinically meaningful results and often fail to distinguish between these 2 very different concepts. By reporting P values as markers of clinically meaningful results, researchers and readers of the published medical literature have indirectly created a paradigm wherein conclusions about the clinical meaning of an effect are made based on the statistical probability of the results rather than the impact these results can have on patients.

There is a clear need to shift away from this significant or nonsignificant dichotomous method of result reporting and interpretation and toward a more quantitative method. A solution to the issue of dichotomous reporting of the results is to report results as the magnitude of an effect and the precision around the magnitude of the effect. There is a growing consensus among authors, biostatisticians, and leaders in academic medicine to use effect sizes and confidence intervals (CIs) in place of P values.

This study reviews articles published in JAMA Otolaryngology–Head & Neck Surgery over a 4-year period to evaluate the frequency of effect size and confidence interval reporting among the reviewed articles. The larger purpose of this study is to contribute knowledge, which we hope will improve the quality of results reporting in the otolaryngology literature. This study will also seek to emphasize the need to incorporate effect sizes and CIs in future publications.

Methods

Article Selection

This study was conducted by reviewing original investigations published in JAMA Otolaryngology–Head & Neck Surgery from January 2012 to December 2015. The medical librarian (S.K.) collected the title and authors of 464 articles published during this time period.

All descriptive studies, defined as studies with a single cohort and without a control group, were excluded from analysis. The focus of the review was on analytical studies, for which reporting of effect sizes and CIs is more critical.

Sample Size Calculation

Due to the large number of articles identified, the study team conducted a preliminary review of 41 randomly selected articles. The preliminary review was performed to refine the methodological approach and to estimate the sample size needed for the desired precision of results. Precision of results was calculated with an online statistical calculator (Vassar Statistical Computational Tool; VassarStats). Based on the review of 41 articles and identification of 18 articles reporting an effect size (44%), we estimated that a sample of roughly 121 articles would provide a 95% CI range of 35% to 52% around the observed percentage of articles that reported effect sizes.
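The precision estimate described above can be approximated with a short calculation. The following is a minimal sketch, assuming a Wilson score interval for a single proportion; the source does not state which interval method the online calculator applied, so the function name `wilson_interval` and the choice of method are illustrative assumptions. Small differences from the reported range may reflect rounding or a different method.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(p_hat, n, confidence=0.95):
    """Wilson score interval for a proportion (assumed method, see lead-in)."""
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# Pilot estimate from the text: 44% of articles reported an effect size;
# planned sample: 121 articles.
low, high = wilson_interval(0.44, 121)
print(f"Approximate 95% CI: {low:.1%} to {high:.1%}")
```

Rerunning the function with other candidate sample sizes shows how the interval narrows as the number of reviewed articles grows, which is the trade-off the pilot review was meant to inform.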

Process of Reviewing Articles and Outcomes of Interest

A total of 7 independent reviewers participated in the evaluation of 121 randomly selected original investigation articles, with 2 reviewers assigned per article. To enhance both precision and accuracy, a standardized methodological approach to each article was created.

First, a brief overview of the goals, methods, and results of the article was obtained from the abstract, but no data could be collected directly from the abstract without confirmation from the Methods or Results sections of the paper.

Second, the Methods section was reviewed. From this section, the reviewers determined the study design and whether the authors included a section discussing statistical methodology. Next, the reviewers determined whether or not, a priori, a power analysis or an expectation or measurement of effect size was reported. When faced with ambiguous information regarding sample size or effect size, credit was given to the authors if either was mentioned.

Third, the Results section was reviewed and the reporting of effect size for any outcome of interest was recorded. A copy of Table 1.1 Common Effect Size Indexes from the book The Essential Guide to Effect Sizes was provided to each reviewer to help them identify different effect sizes. If an article described an effect size, the presence of the associated CI and its interpretation was recorded. Additionally, the reviewer rated whether the author interpreted CIs as: (1) a precision of the effect size based on the width of the CI; (2) a surrogate for statistical significance based on whether the lower bound of the CI crossed the no-effect threshold; (3) a clinically meaningful result based on the upper bound of the CI; (4) an inference to the total population; or (5) a description of the sample.

Upon completion of an article review, the reviewers sent all collected information to the first author (O.A.K.). Discrepancies between reviewers were identified by the first author and a predetermined process was used to resolve them. The 2 reviewers provided each other with a justification for their decisions and reached a consensus after discussion. If the reviewers were unable to arrive at a consensus, the final decision was made through discussion by the first author (O.A.K.) and last author (J.F.P.).

Statistical Analysis

The primary outcome of interest was the percentage of articles that reported an effect size and associated CIs. The secondary outcomes of interest were the percentage of articles that included a discussion of statistical analysis, a priori calculation of power, a priori mention of effect size or effect measure, and interpretation of CIs.

Results

The details of the reviewed articles are presented in Table 1. A total of 121 articles were reviewed, of which 14 descriptive articles were excluded, leaving 107 total articles for analysis. Overall, 36 studies (34%) were retrospective cohorts, 18 studies (17%) were cross-sectional, 14 studies (13%) were prospective cohorts, 14 studies (13%) were “other,” 10 studies (9%) were randomized clinical trials, 10 studies (9%) were case-control studies, and 5 studies (5%) were meta-analyses. “Other” studies included cadaveric studies, basic science research, and study designs that did not fit the parameters of the aforementioned study designs.

Table 1. Types of 107 Studies.

| Study Type | Frequency, No. (%) |
|---|---|
| Retrospective cohort | 36 (34) |
| Cross-sectional | 18 (17) |
| Prospective cohort | 14 (13) |
| Other | 14 (13) |
| Randomized clinical trial | 10 (9) |
| Case-control | 10 (9) |
| Meta-analysis | 5 (5) |

A total of 58 articles (54%) reported effect sizes of various types (Table 2). The most common effect size was the difference of mean, followed by odds ratio and correlation coefficient, which were reported 17 (16%), 15 (13%), and 12 times (11%), respectively. Table 3 contains the full description of the effect size findings.

Table 2. Results Rigor.

| Result | No. (%) |
|---|---|
| Effect size reported | 58 of 107 (54) |
| Confidence interval reported | 29 of 58 (50) |
| Confidence interval interpreted | 9 of 29 (31) |

Table 3. Effect Size Classification.

| Type | Frequency, No. (%) |
|---|---|
| Difference of mean | 17 (27) |
| Odds ratio | 15 (22) |
| Correlation coefficient | 13 (21) |
| Hazard ratio | 4 (6) |
| Relative risk | 4 (6) |
| Cost/time reduction | 3 (5) |
| Sensitivity/specificity/NPV/PPV | 2 (4) |
| Difference of median | 1 (2) |
| Yue-Clayton theta | 1 (2) |
| Incremental cost-effectiveness ratio | 1 (2) |
| Relative risk reduction | 1 (2) |
| Total | 62a (99) |

Abbreviations: NPV, negative predictive value; PPV, positive predictive value.

a The total number does not match the number of studies reporting effect sizes in Table 2 because 4 studies reported multiple effect sizes.

Of the 58 articles that reported effect sizes, 29 (27% of the 107 analyzed articles; 50% of those reporting an effect size) accompanied the effect sizes with CIs (Table 2). Further review determined that 9 articles (8%) included an interpretation of the CIs by the authors. Of the 9 articles that interpreted CIs, the most common interpretation of the CI was as a surrogate for statistical significance, which we found 6 times (6%). We also found that CIs were used as an inference to clinically meaningful results twice (2%) and as a precision of estimate once (1%).
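Because the text and Table 2 express the same counts against different denominators, a small calculation can make the relationship explicit. This is only an illustrative sketch; the counts are taken from the Results, while the helper name `pct` and the dictionary labels are assumptions introduced here.

```python
# Counts reported in the Results and in Table 2.
N_ANALYZED = 107        # analytical articles included
N_EFFECT_SIZE = 58      # articles reporting an effect size
N_WITH_CI = 29          # articles whose effect sizes had CIs
N_CI_INTERPRETED = 9    # articles that interpreted the CI


def pct(numerator, denominator):
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


summary = {
    "effect size, of all analyzed": pct(N_EFFECT_SIZE, N_ANALYZED),
    "CI reported, of all analyzed": pct(N_WITH_CI, N_ANALYZED),
    "CI reported, of those with an effect size": pct(N_WITH_CI, N_EFFECT_SIZE),
    "CI interpreted, of all analyzed": pct(N_CI_INTERPRETED, N_ANALYZED),
    "CI interpreted, of those with a CI": pct(N_CI_INTERPRETED, N_WITH_CI),
}

for label, value in summary.items():
    print(f"{label}: {value}%")
```

The percentages given in the running text use all 107 analyzed articles as the denominator, whereas Table 2 uses the preceding row as the denominator.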

During the review of the Methods section of the articles, we found that 97 of 107 published articles (91%) provided an a priori description of statistical methodology, 8 of 107 (7%) provided an a priori description of expected effect size findings, and 5 of 107 (5%) provided an a priori power analysis.

Discussion

In this study, which examined the use of effect size and CIs in analytical articles published in JAMA Otolaryngology–Head & Neck Surgery between January 2012 and December 2015, we found that approximately half of the articles reported an effect size, and nearly half of those articles provided CIs for the effect sizes. Less than 10% of the articles provided an a priori power analysis or an a priori mention of effect size measure.

Review of the published literature for the use of effect size and CIs is limited, which suggests greater attention should be paid to alternative forms of results reporting than the reporting of P values for statistical significance. Chavalarias et al performed an original investigation reporting the frequency of P value use in published abstracts in PubMed and MEDLINE between the years 1990 and 2015. Most of the articles included in the review were identified and analyzed using automated text mining analysis. However, a random sample of 1000 MEDLINE abstracts was reviewed by the investigators, who found that of 796 abstracts containing empirical data, only 18 (2.3%; 95% CI, 1.3%-3.6%) reported CIs, 179 (22.5%; 95% CI, 19.6%-25.5%) had at least 1 effect size, and only 1 abstract mentioned clinical significance. The authors concluded that there was rampant usage of the P value in the biomedical literature as the measure of clinical and statistical significance, with few articles reporting effect sizes or CIs. The findings from our current investigation analyzing the published otolaryngology literature for the use of effect size and CIs also suggest the need to incorporate more effect sizes and CIs.

Statistical Significance vs Clinically Meaningful Results

In the published literature, the term “statistical significance” connotes a result where the associated P value is .05 or less. The prominence of the P value in the biomedical literature is attributed to R. A. Fisher who, in Statistical Methods for Research Workers, defined the P value as “the probability of the observed result plus more extreme results, if the null hypothesis were true.” The limitations of using P values alone without indexes of clinically meaningful results are well documented. According to Fisher, the P value was intended to be a measure of statistical inference and should not be used to report the clinical importance of a result.

A clinically meaningful result, as opposed to a statistically significant result, is an outcome that a patient can experience or observe. A clinically meaningful result is also an estimate that exceeds a minimally important threshold, which is defined as the smallest result that the patient perceives as beneficial and would mandate a change in the patient’s management. A measurement of statistical significance (ie, P value) cannot provide information about the magnitude or clinical importance of a result, and for that reason it is necessary to report effect sizes and CIs.

Consider the following example from a recently published article in which the authors examined the association between ibuprofen use and surgically managed post-tonsillectomy hemorrhage (sPTH) among 6710 patients at a tertiary children’s hospital. Among the 4588 patients with no ibuprofen use, 160 (3.5%) had an sPTH and 7 received transfusion. Among the 2122 with ibuprofen use, 62 (2.9%) had an sPTH and 8 received transfusion. The P value obtained from the Fisher exact probability test for the association between ibuprofen use and transfusion among sPTH patients is .03. The effect size, as measured by the odds ratio (OR), for the association between ibuprofen use and transfusion among children who experienced sPTH is 3.16 and the 95% CI around this effect size is 1.01 to 9.91. Examination of the P value tells us that the association between ibuprofen use and transfusion is statistically significant. On the other hand, the examination of the effect size and the 95% CI tells us so much more. The OR tells us that the magnitude of the effect is large (a 3 times increase in the odds) and, given the setting, our clinical experience tells us that an increase of 3 times is clinically significant. The fact that the OR is greater than 1 tells us the direction of the effect (ibuprofen is associated with a higher transfusion rate). The inspection of the lower bound of the 95% CI tells us that this effect is statistically significant because the lower bound lies above 1. The inspection of the upper bound of the CI tells us that the data are compatible with an increase in transfusion rate of as much as 10 times among ibuprofen users as compared with nonibuprofen users, a very large clinical effect. And finally, the wide CI, which includes clinically insignificant values around 1 and values consistent with a 10 times increase, suggests imprecision in the OR and caution in the interpretation.
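To make the arithmetic behind an OR and its CI concrete, the sketch below computes a crude (unadjusted) OR with a Woolf (log-based) 95% CI from the transfusion counts quoted above. This is an illustration under stated assumptions, not a reproduction of the cited analysis: the source does not describe how the reported OR of 3.16 was derived, so the crude estimate computed here is expected to be close to, but not identical with, the published value. The function name `odds_ratio_ci` is hypothetical.

```python
from math import exp, log, sqrt
from statistics import NormalDist


def odds_ratio_ci(a, b, c, d, confidence=0.95):
    """Crude odds ratio and Woolf CI for a 2x2 table.

    a = exposed with event,    b = exposed without event
    c = unexposed with event,  d = unexposed without event
    """
    odds_ratio = (a * d) / (b * c)
    # Standard error of the log odds ratio (Woolf method).
    se_log_or = sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    lower = exp(log(odds_ratio) - z * se_log_or)
    upper = exp(log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Counts from the text, restricted to children who experienced sPTH:
# ibuprofen: 8 of 62 transfused; no ibuprofen: 7 of 160 transfused.
or_, lower, upper = odds_ratio_ci(a=8, b=62 - 8, c=7, d=160 - 7)
print(f"Crude OR = {or_:.2f}, 95% CI {lower:.2f} to {upper:.2f}")
```

As with the published estimate, the interval produced this way is wide, which carries the same message about imprecision that the paragraph above draws from the reported CI.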

Effect Sizes

Effect sizes are a diverse category of metrics for results reporting. The 2 major effect size families are the d family and the r family. The d family of effect sizes measures the amount of a difference between groups. The most prominent examples of the d family include mean or median differences, percentage difference, risk ratios, ORs, risk difference, Cohen d, Glass Δ, and Hedges g. The r family of effect sizes measures the strength of a relationship between variables. Most of these r family measures are variations on the Pearson correlation coefficient. Additional examples of these metrics include the Spearman rank correlation, Goodman and Kruskal λ, and φ coefficient. The inclusion of any of these effect size metrics contributes to the description of the clinical significance of the study.
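As a rough illustration of the 2 families, the sketch below computes one d family measure (Cohen d, with the small-sample Hedges g correction) and one r family measure (the Pearson correlation coefficient). The function names and the toy data are hypothetical and serve only to show the calculations; they are not drawn from any reviewed article.

```python
from math import sqrt
from statistics import mean, variance


def cohen_d(group_a, group_b):
    """Standardized mean difference using the pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * variance(group_a)
                  + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


def hedges_g(group_a, group_b):
    """Cohen d multiplied by the usual approximate small-sample correction."""
    df = len(group_a) + len(group_b) - 2
    correction = 1 - 3 / (4 * df - 1)
    return cohen_d(group_a, group_b) * correction


def pearson_r(x, y):
    """Pearson correlation coefficient between paired observations."""
    mean_x, mean_y = mean(x), mean(y)
    cross = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    ss_x = sum((xi - mean_x) ** 2 for xi in x)
    ss_y = sum((yi - mean_y) ** 2 for yi in y)
    return cross / sqrt(ss_x * ss_y)


# Hypothetical toy data, for illustration only.
treated = [2, 4, 6, 8]
control = [1, 3, 5, 7]
print(f"Cohen d  = {cohen_d(treated, control):.3f}")
print(f"Hedges g = {hedges_g(treated, control):.3f}")
print(f"Pearson r = {pearson_r(treated, control):.3f}")
```

A d family value answers how far apart the groups are in standard deviation units, whereas an r family value answers how tightly 2 variables move together; neither depends on a significance threshold.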

Confidence Intervals

As highlighted in the previous example, CIs provide all the information that P values do, and more. First, the reporting of a CI around a point estimate from a sample provides information about where the true value of the estimate lies in the population. When a clinically meaningful value is defined by the investigators, the CI can be used to identify whether the observed data are compatible with important clinical differences. Second, the range of the CI provides meaningful information about the precision of the observed estimate. A study reporting narrow CIs indicates to the readers high precision in measurement, while a study with wide CIs indicates a lack of precision in measurement. Third, CIs are more stable estimates of the effect than P values. For example, consider repeating a study 100 times, randomly drawing different samples from the same population and calculating a point estimate and 95% CI for each sample. On average, the true value in the total population would be included in about 95 of the 100 CIs. On the other hand, the associated P values for these 100 separate experiments could range from as low as P < .001 to P > .99. The reported 95% CI is more stable than the associated P value and therefore more informative for interpreting the result. This instability in the P value has been coined “The Dance of the P value.” Fourth, authors may choose to discuss CIs in the context of representation of the sample data or representation of the total population. In doing so, the authors provide readers with either descriptive or inferential information. And finally, the statistical significance of a point estimate can be easily inferred from CIs. A statistical threshold for an analytical test is predefined (eg, α level of .05) and readers can use the corresponding CI (eg, 95% CI) rather than rely on the P values for this information. Statistical significance can be determined by simply evaluating whether the no-effect value (eg, 0 for a difference or 1 for a ratio) is included in the range of values within the interval.

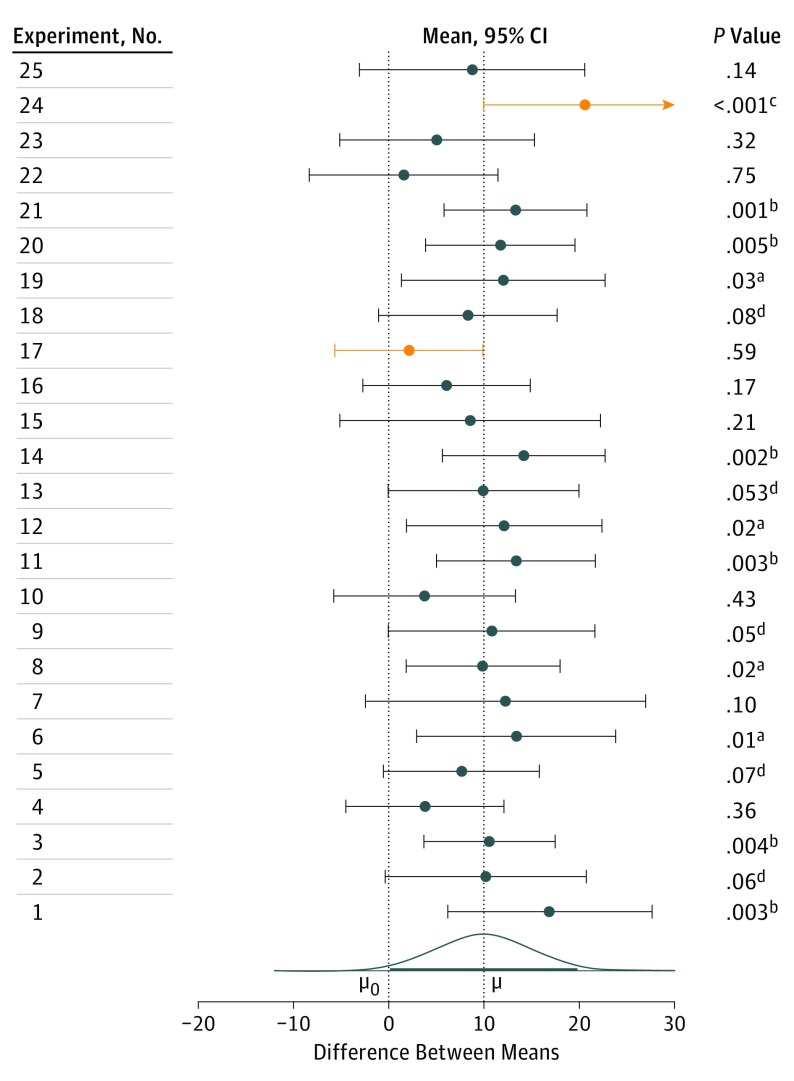

To further illustrate this, the Figure depicts the simulated results of 25 different replications of an experiment, each conducted on 2 independent samples (n = 32 each) from normally distributed populations. The populations have a standard deviation of 20 and a true mean difference of 10. During each experiment, the mean difference is calculated along with the 95% CI. On the left-hand side is the associated P value for each experiment. The mean difference calculated for each experiment is marked by a circle, and its associated CIs are also mapped. Observe the variability in the mean difference and P value calculated during each experiment that can occur through different sample selection from the same population. As can be seen, the interpretation of a single point estimate and P value could starkly vary from experiment to experiment. However, the interpretation of CIs indicates that in all but 2 experiments the CI captures the true mean difference of 10. The experiments that fail to capture the true mean difference are shaded red. This highlights the value of using CIs for interpretation, and the stability of CIs as estimates of an effect.

Figure. Simulated Results of 25 Replications of an Experiment.

The numbered experiments are on the left. Each numbered experiment in the left column comprises 2 independent samples (n = 32); the samples are from normally distributed populations with σ = 20 and means that differ by µ = 10. For each experiment, the difference between the sample means (circle) and the 95% CI for this difference are displayed. The P values listed on the left are 2-tailed, for a null hypothesis of 0 difference, µ0 = 0, with σ assumed to be not known (a: .01 < P < .05; b: .001 < P < .01; c: P < .001; d: .05 < P < .10). The population effect size is 10, or Cohen δ = 0.5, which is conventionally considered a medium-sized effect. Mean differences whose CI does not capture µ are shown in red. The curve is the sampling distribution of the difference between the sample means; the heavy line spans 95% of the area under the curve. This Figure is reproduced, with permission, from Cumming.
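The kind of simulation summarized in the Figure can be sketched in a few lines. The code below is an approximation under stated assumptions rather than the procedure used to produce the Figure: the baseline mean of 50 is arbitrary, the critical value 1.999 is an approximate 2-sided 95% value of the t distribution with 62 degrees of freedom, and the P value uses a normal approximation to the t distribution because the standard library has no t distribution. All names are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist, mean, variance

N_PER_GROUP = 32      # sample size per group, as in the Figure
SIGMA = 20.0          # population standard deviation
TRUE_DIFF = 10.0      # true difference between population means
BASELINE = 50.0       # arbitrary control mean (assumption)
T_CRIT_DF62 = 1.999   # approximate 97.5th percentile of t with 62 df


def replicate(rng):
    """Run one experiment; return (difference, CI low, CI high, approx P)."""
    control = [rng.gauss(BASELINE, SIGMA) for _ in range(N_PER_GROUP)]
    treated = [rng.gauss(BASELINE + TRUE_DIFF, SIGMA) for _ in range(N_PER_GROUP)]
    diff = mean(treated) - mean(control)
    # Equal group sizes, so the pooled variance is the simple average.
    pooled_var = (variance(treated) + variance(control)) / 2
    se = sqrt(pooled_var * 2 / N_PER_GROUP)
    low, high = diff - T_CRIT_DF62 * se, diff + T_CRIT_DF62 * se
    # Normal approximation to the 2-tailed P value for H0: difference = 0.
    p_approx = 2 * (1 - NormalDist().cdf(abs(diff / se)))
    return diff, low, high, p_approx


def simulate(n_reps, seed=0):
    rng = random.Random(seed)
    return [replicate(rng) for _ in range(n_reps)]


for i, (diff, low, high, p) in enumerate(simulate(25), start=1):
    flag = "" if low <= TRUE_DIFF <= high else "  <- CI misses true difference"
    print(f"{i:2d}  diff={diff:6.2f}  95% CI [{low:6.2f}, {high:6.2f}]  P~{p:.3f}{flag}")
```

Increasing the number of replications shows the two behaviors discussed in the text: the share of CIs that capture the true difference settles near 95%, while the P values continue to vary widely from one replication to the next.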

Conclusions

This study identified opportunities for improvement in results reporting in the otolaryngology literature. Improving results reporting in clinical research will require a paradigm shift from the method of null hypothesis statistical testing using P values to the use of effect sizes accompanied by CIs. We have shown that effect sizes accompanied by CIs are far superior in conveying information regarding the clinical significance of a result, the magnitude of a result, and the stability of a result.

References

1. Piccirillo JF. Improving the quality of the reporting of research results. JAMA Otolaryngol Head Neck Surg. 2016;142(10):937-939.
2. Cumming G. Understanding The New Statistics: Effect Sizes, Confidence Intervals, and Meta-Analysis. New York: Routledge; 2012.
3. Ellis PD. The Essential Guide to Effect Sizes. Statistical Power, Meta-Analysis, and the Interpretation of Research Results. Cambridge, UK: Cambridge University Press; 2015.
4. Goodman S. A dirty dozen: twelve p-value misconceptions. Semin Hematol. 2008;45(3):135-140.
5. Goodman SN. Toward evidence-based medical statistics. 1: The P value fallacy. Ann Intern Med. 1999;130(12):995-1004.
6. Goodman SN, Berlin JA. The use of predicted confidence intervals when planning experiments and the misuse of power when interpreting results. Ann Intern Med. 1994;121(3):200-206.
7. Chavalarias D, Wallach JD, Li AH, Ioannidis JP. Evolution of Reporting P Values in the Biomedical Literature, 1990-2015. JAMA. 2016;315(11):1141-1148.
8. Kyriacou DN. The enduring evolution of the P value. JAMA. 2016;315(11):1113-1115.
9. Cohen J. Things I have learned (so far). Am Psychol. 1990;45(12):1304-1312.
10. Cohen J. The earth is round (P<.05): rejoinder. Am Psychol. 1995;50(12):1103.
11. Cohen J. Statistical Power Analysis for the Behavioral Sciences. Taylor & Francis; 2013.
12. Goodman SN. P values, hypothesis tests, and likelihood: implications for epidemiology of a neglected historical debate. Am J Epidemiol. 1993;137(5):485-496.
13. Gardner MJ, Altman DG. Confidence intervals rather than P values: estimation rather than hypothesis testing. Br Med J (Clin Res Ed). 1986;292(6522):746-750.
14. Gardner MJ, Altman DG. Confidence—and clinical importance—in research findings. Br J Psychiatry. 1990;156:472-474.
15. Gardner MJ, Altman DG. Estimating with confidence. Br Med J (Clin Res Ed). 1988;296(6631):1210-1211.
16. Gardner MJ, Altman DG. Using confidence intervals. Lancet. 1987;1(8535):746.
17. Rosnow RL, Rosenthal R. Statistical procedures and the justification of knowledge in psychological science. Am Psychol. 1989;44(10):1276-1284.
18. Altman DG, Gardner MJ. Confidence in confidence intervals. Alcohol Alcohol. 1991;26(4):481-482.
19. Fisher RA. Statistical Methods for Research Workers. Edinburgh, Scotland: Oliver and Boyd; 1925.
20. Jaeschke R, Singer J, Guyatt GH. Measurement of health status: ascertaining the minimal clinically important difference. Control Clin Trials. 1989;10(4):407-415.
21. Crosby RD, Kolotkin RL, Williams GR. Defining clinically meaningful change in health-related quality of life. J Clin Epidemiol. 2003;56(5):395-407.
22. Mudd PA, Thottathil P, Giordano T, et al. Association between ibuprofen use and severity of surgically managed posttonsillectomy hemorrhage. JAMA Otolaryngol Head Neck Surg. 2017.
23. Cumming G. The new statistics: why and how. Psychol Sci. 2014;25(1):7-29.