Key Points

Question

When applying deep learning methods to the automated assessment of fundus images, what is the accuracy for detecting age-related macular degeneration?

Finding

This study found that the accuracy (SD) of the deep convolutional neural network method ranged between 88.4% (0.5%) and 91.6% (0.1%), with kappa scores close to or greater than 0.8, which is comparable with human expert performance levels.

Meaning

The results suggest that deep learning–based machine grading can be leveraged successfully to automatically assess age-related macular degeneration from fundus images in a way that is comparable with the human ability to grade age-related macular degeneration from these images.

Abstract

Importance

Age-related macular degeneration (AMD) affects millions of people throughout the world. The intermediate stage may go undetected, as it typically is asymptomatic. However, the preferred practice patterns for AMD recommend identifying individuals with this stage of the disease to educate them on how to monitor for the early detection of the choroidal neovascular stage before substantial vision loss has occurred and to consider dietary supplements that might reduce the risk of the disease progressing from the intermediate to the advanced stage. Identification, though, can be time-intensive and requires expertly trained individuals.

Objective

To develop methods for automatically detecting AMD from fundus images using a novel application of deep learning methods to the automated assessment of these images and to leverage artificial intelligence advances.

Design, Setting, and Participants

Deep convolutional neural networks that are explicitly trained for performing automated AMD grading were compared with an alternate deep learning method that used transfer learning and universal features and with a trained clinical grader. Age-related macular degeneration automated detection was applied to a 2-class classification problem in which the task was to distinguish the disease-free/early stages from the referable intermediate/advanced stages. In several experiments that entailed different data partitioning, the performance of the machine algorithms and human graders was evaluated on more than 130 000 images from 4613 patients, deidentified with respect to age, sex, and race/ethnicity, against a gold standard included in the National Institutes of Health Age-related Eye Disease Study data set.

Main Outcomes and Measures

Accuracy, receiver operating characteristics and area under the curve, and kappa score.

Results

The deep convolutional neural network method yielded accuracy (SD) that ranged between 88.4% (0.5%) and 91.6% (0.1%), the area under the receiver operating characteristic curve was between 0.94 and 0.96, and kappa coefficient (SD) between 0.764 (0.010) and 0.829 (0.003), which indicated a substantial agreement with the gold standard Age-related Eye Disease Study data set.

Conclusions and Relevance

Applying a deep learning–based automated assessment of AMD from fundus images can produce results that are similar to human performance levels. This study demonstrates that automated algorithms could play a role that is independent of expert human graders in the current management of AMD and could address the costs of screening or monitoring, access to health care, and the assessment of novel treatments that address the development or progression of AMD.

This study uses deep learning methods for the automated assessment of age-related macular degeneration from color fundus images.

Introduction

Age-related macular degeneration (AMD) is associated with the presence of drusen, long-spacing collagen, and phospholipid vesicles between the basement membrane of the retinal pigment epithelium and the remainder of the Bruch membrane. The intermediate stage of AMD, which often causes no visual deficit, includes eyes with many medium-sized drusen (the greatest linear dimension ranging from 63 µm to 125 µm) or at least 1 large druse (greater than 125 µm) or geographic atrophy (GA) of the retinal pigment epithelium that does not involve the fovea.

The intermediate stage often leads to the advanced stage, in which substantial damage to the macula can occur from choroidal neovascularization, also termed the wet advanced form, or GA that involves the center of the macula, which is termed the dry advanced form. Choroidal neovascularization, when not treated, often leads to the loss of central visual acuity, which affects daily activities like reading, driving, or recognizing objects. Consequently, the advanced stage can pose a substantial socioeconomic burden on society. Age-related macular degeneration is the leading cause of central vision loss among people older than 50 years in the United States; approximately 1.75 million to 3 million individuals have the advanced stage.

While AMD currently has no definite cure, the Age-related Eye Disease Study (AREDS) has suggested benefits of specific dietary supplements for slowing AMD progression among individuals with the intermediate stage in at least 1 eye or the advanced stage only in 1 eye. Additionally, vision loss because of choroidal neovascularization can be reversed, stopped, or slowed by administering antivascular endothelial growth factor intravitreous injections. Ideally, individuals with the intermediate stage of AMD should be identified, even if asymptomatic, and referred to an ophthalmologist who can monitor for the development and subsequent treatment of choroidal neovascularization. Manual screening of the entire at-risk population of individuals older than 50 years for the development of the intermediate stage of AMD in the United States is not realistic because the at-risk population is large (more than 110 million). Screening also is not feasible in US health care environments that have poor access to experts who can identify the development of the intermediate stage of AMD. These same issues may be more pronounced in low- and middle-income countries. Therefore, automated AMD diagnostic algorithms, which identify the intermediate stage of AMD, are a worthy goal for future automated screening solutions for major eye diseases.

While no treatment comparable with antivascular endothelial growth factor currently exists for GA, numerous clinical trials are being conducted to identify treatments for slowing GA growth. Automated algorithms may play a role in assessing treatment efficacy, in which it is critical to quantify disease worsening objectively under therapy; careful manual grading of this by clinicians can be costly and subjective.

Past algorithms for automated retinal image analysis generally relied on traditional approaches that consisted of manually selecting engineered image features (eg, wavelets, scale-invariant feature transform) that were then used in a classifier (eg, support vector machines [SVM] or random forests). By contrast, deep learning (DL) methods learn task-specific image features with multiple levels of abstraction without relying on manual feature selection. Recent advances in DL have improved performance levels dramatically for numerous image analysis tasks. This progress was enabled by many factors (eg, novel methods to train very deep networks or using graphics processing units). Recently, DL has been used for conducting retinal image analyses, including tasks such as classifying referable diabetic retinopathy. A previous study reported on the use of deep universal features/transfer learning for automated AMD grading. The present study expands on that work by using a data set that is approximately 10 to 20 times larger and by using the full scope of deep convolutional neural networks (DCNN).

Methods

Overview

This study aimed to solve a 2-class AMD classification problem, classifying fundus images of individuals who have either no or early stage AMD (for which dietary supplements and monitoring for progression to advanced AMD are not considered) vs those with intermediate or advanced stage AMD, for which supplements, monitoring, or both are considered. It leveraged DL and DCNN. The primary goal of this study was to measure and compare the performance of the proposed DL method vs a human clinician, and a secondary goal was to compare the performance of 2 DL approaches that entailed different levels of computational effort regarding training.

Data

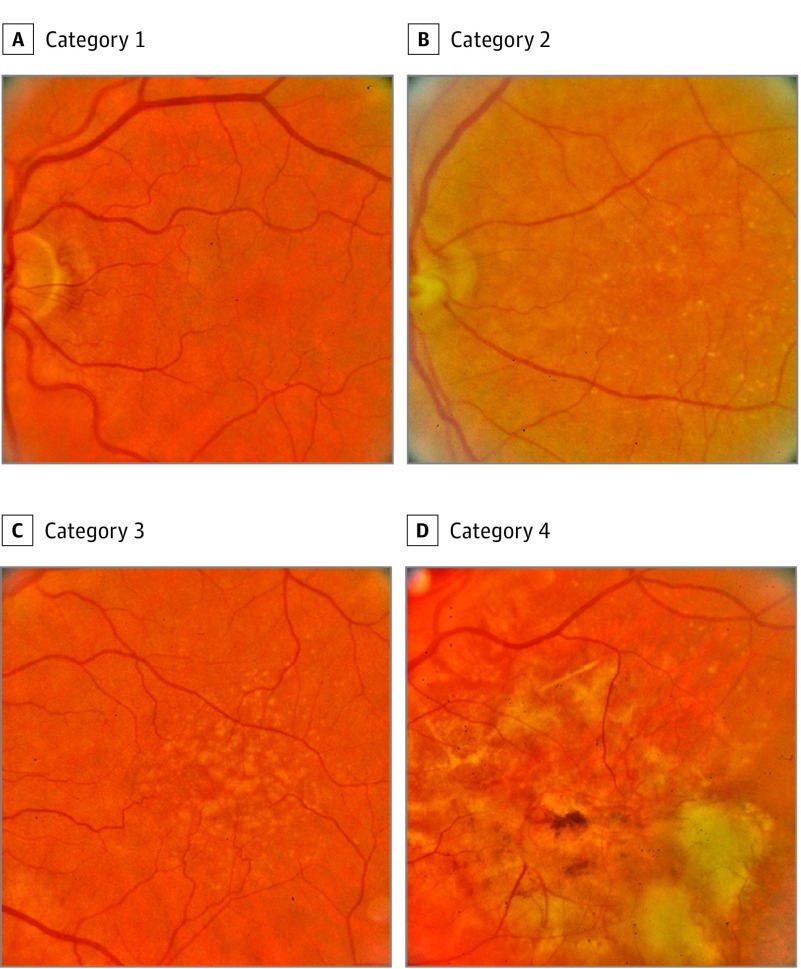

Our study used the National Institutes of Health AREDS data set collected over a 12-year period. The Age-related Eye Disease Study originally was designed to improve understanding of AMD worsening, treatment, and risk factors for worsening. It includes over 130 000 color fundus images from 4613 patients that were taken with informed consent obtained at each of the clinical sites (Table 1). Color fundus photographs were captured of each patient at baseline and follow-up visits and were subsequently digitized. These images included stereo pairs taken from both eyes. Images were carefully and quantitatively graded by experts for identifying AMD at a US fundus photograph-reading center. Graders used graduated circles to measure the location and area of drusen and other retinal abnormalities (eg, retinal elevation and pigment abnormalities) in the fundus images to determine the AMD severity level. Each image was then assigned by graders to a category reflecting AMD severity that ranged from 1 to 4, with 1 = no AMD, 2 = early stage, 3 = intermediate stage, and 4 = advanced stage (Figure 1). These severity grades were used as a “gold standard” in our study for performing a 2-class classification of no or early stage AMD (here referred to as class 0) vs potentially referable (intermediate or advanced) stage (class 1). The Age-related Eye Disease Study is a public data set that can be made available on request to the National Institutes of Health.

Table 1. Summary of Data Sets Used.

| Data set | H<sup>a</sup> | WS<sup>b</sup> | NSG<sup>c</sup> | NS<sup>d</sup> |
|---|---|---|---|---|
| Images, class 0, No. | 2779 | 74401 | 37101 | 37418 |
| Images, class 1, No. | 2221 | 59420 | 29842 | 29983 |

Abbreviations: AREDS, Age-related Eye Disease Study; DCNN, deep convolutional neural networks; H, human; NS, no stereo; NSG, no stereo gradable; WS, with stereo pairs.

<sup>a</sup> For comparing DCNN algorithms to human performance, a physician independently and manually graded a subset (n = 5000) of the AREDS images.

<sup>b</sup> This is the full set of all AREDS images (n = 133 821) including stereo pairs (taking care that stereo pair images from the same eye did not appear in the training and testing data sets).

<sup>c</sup> Because AREDS images are collected under a variety of environmental conditions (eg, lighting, patient eye orientation, etc) and therefore are not of uniform quality, an ophthalmologist was tasked to annotate a subset (n = 7775) of images for “gradability” as a basic measure of fundus image quality. This metric was extended via machine learning over the entire data set and 458 of the poorest quality images were removed from NS to form NSG.

<sup>d</sup> Only 1 of the stereo pair is kept from each eye resulting in a set comprised of 67 401 images.

Figure 1. Examples of Fundus Images Showing Age-Related Macular Degeneration (AMD).

A, category 1 or no AMD; B, category 2 or early AMD; C, category 3, intermediate AMD; and D, category 4 or advanced AMD.

DCNN Approach

This study used DCNNs. A DCNN is a deep neural network that consists of many repeated processing layers that take as input fundus images that are processed via a cascade of operations with the goal of producing an output class label for each image. One way to think about DCNNs is that they match the input image with successive convolutional filters to generate low-, mid-, and high-level representations (ie, features) of the input image. Deep convolutional neural networks also include layers that pool features together spatially, perform nonlinear operations at various levels, combine these via fully connected layers, and output a final probability value for the class label (here the AMD-referable vs not referable classification). A DCNN is trained to discover and optimize the weights of the convolutional filters that produce these image features via a backpropagation process. This optimization is done directly by using the training images. Therefore, this process is considered to be a data-driven approach and contrasts with past approaches to processing and analyzing fundus imagery that have used engineered features that resulted from an ad hoc, manual, and therefore possibly suboptimal algorithmic design and selection of such features. While the workings of DCNNs are simple to grasp at a notional level, there is currently extensive research being conducted to understand, improve, and extend the current state of the art.
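The cascade described above (convolution, nonlinearity, spatial pooling, fully connected layer, output probability) can be illustrated with a minimal pure-Python sketch. This is not the network used in the study: the 6×6 toy image, the single hand-written edge filter, the weights, and all function names are assumptions chosen for illustration, and a real DCNN learns its filter weights by backpropagation rather than having them fixed by hand.

```python
import math

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def relu(fmap):
    """Rectified linear unit: negative responses are set to 0."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool_2x2(fmap):
    """Non-overlapping 2x2 max pooling; an odd trailing row or column is dropped."""
    return [[max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
             for c in range(0, len(fmap[0]) - 1, 2)]
            for r in range(0, len(fmap) - 1, 2)]

def dense_sigmoid(features, weights, bias):
    """Fully connected layer followed by a sigmoid, giving a class-1 probability."""
    z = sum(f * w for f, w in zip(features, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def forward(image, kernel, weights, bias):
    pooled = max_pool_2x2(relu(conv2d_valid(image, kernel)))
    flat = [v for row in pooled for v in row]
    return dense_sigmoid(flat, weights, bias)

# Toy 6x6 "image" containing a vertical edge, and a vertical-edge filter.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
weights = [0.1, 0.1, 0.1, 0.1]   # 4 pooled features -> 4 illustrative weights
prob = forward(image, kernel, weights, bias=-0.5)
print(round(prob, 3))
```

The filter responds only where the edge lies, pooling keeps the strongest response in each 2×2 neighborhood, and the final layer turns the pooled features into a single probability.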

We used the AlexNet (University of Toronto) DCNN model (here called DCNN-A) in which the weights of all layers of the network are optimized via training to solve the referable AMD classification problem. This training process involved optimizing over 61 million convolutional filter weights. In addition to the layers mentioned above, this network included dropout, rectified linear unit activation, and contrast normalization steps. The dropout step consisted of arbitrarily setting to 0 some of the neuron outputs (chosen randomly) with the effect of encouraging functional redundancy in the network and acting as a regularization. Our implementation incorporated the Keras and TensorFlow DL frameworks. It used a stochastic gradient descent with a Nesterov momentum, with an initial learning rate that was set to 0.001. The training scheme used an early stopping mechanism that terminated training after 50 epochs of no improvement of the validation accuracy.
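The optimizer and stopping rule mentioned above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the study's Keras/TensorFlow code: the update shown is one common formulation of Nesterov momentum, the momentum value of 0.9 is an assumption (the text gives only the learning rate of 0.001 and the patience of 50 epochs), and the quadratic loss and function names are hypothetical.

```python
def nesterov_step(w, v, grad_fn, lr=0.001, momentum=0.9):
    """One SGD step with Nesterov momentum: the gradient is taken at the look-ahead point."""
    lookahead = w + momentum * v
    v_new = momentum * v - lr * grad_fn(lookahead)
    return w + v_new, v_new

def epochs_run(val_accuracies, patience=50):
    """Return the number of epochs run before early stopping triggers.

    Training stops once `patience` consecutive epochs bring no improvement
    over the best validation accuracy seen so far.
    """
    best, since_best = float("-inf"), 0
    for epoch, acc in enumerate(val_accuracies, start=1):
        if acc > best:
            best, since_best = acc, 0
        else:
            since_best += 1
            if since_best >= patience:
                return epoch
    return len(val_accuracies)

# Toy problem (assumption): minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w, v = 0.0, 0.0
for _ in range(5000):
    w, v = nesterov_step(w, v, lambda x: 2.0 * (x - 3.0))
print(round(w, 3))

# With a short patience of 3, a plateau after epoch 2 stops training at epoch 5.
print(epochs_run([0.80, 0.85, 0.84, 0.85, 0.83], patience=3))
```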

Universal Features/Transfer Learning Approach

For comparison, this study also used another DL approach that focused on reusing a pretrained DCNN and performing transfer learning. The idea behind transfer learning is to exploit knowledge that is learned from one source task that has a relative abundance of training data (general images of animals, food, etc.) to allow for learning in an alternative target task (AMD classification on fundus images). Here, universal features were computed by using a pretrained DCNN to solve a general classification problem on a large set of images and reuse these features for the AMD task. Our approach used the pretrained OverFeat (New York University) DCNN which was pretrained on more than a million natural images to produce a 4096 dimension feature vector, which was then used to retrain a linear SVM (LSVM) for our specific AMD classification problem from fundus images. We call this method DCNN-U.

The 2 methods (DCNN-A and DCNN-U) preprocessed the input fundus image by detecting the outer boundaries of the retina, cropping the image to the square inscribed within the retinal boundary, and resizing the square to fit the expected input size of the AlexNet or OverFeat DCNNs. Additionally, DCNN-U used a multigrid approach in which the cropped image was coupled with 2 concentric square subimages that were centered in the middle of the inscribed image. The resulting 3 images (the cropped image plus 2 centered subimages) were each fed to the OverFeat DCNN, so that the 2 subimages contributed 2 additional 4096-long feature vectors. The 3 feature vectors for the image were then concatenated to generate a single 12 288-sized feature vector as input to the LSVM. This method is further detailed in previous reports.
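The geometry of this preprocessing can be sketched as follows, assuming the retinal boundary has already been detected as a circle. The subimage scale factors (0.75 and 0.5), the function names, and the `fake_features` stand-in are hypothetical; the text specifies only that 2 concentric subimages are used and that each image yields a 4096-long feature vector.

```python
import math

FEATURE_DIM = 4096  # per-image feature length stated in the text

def inscribed_square(cx, cy, radius):
    """Return (left, top, side) of the largest axis-aligned square inside a circle."""
    side = int(radius * math.sqrt(2))
    return cx - side // 2, cy - side // 2, side

def centered_subsquare(left, top, side, scale):
    """Return a concentric square whose side is `scale` times the original side."""
    sub = int(side * scale)
    offset = (side - sub) // 2
    return left + offset, top + offset, sub

def multigrid_boxes(cx, cy, radius, scales=(0.75, 0.5)):
    """Full inscribed crop plus 2 concentric subimages (scales are an assumption)."""
    full = inscribed_square(cx, cy, radius)
    return [full] + [centered_subsquare(*full, s) for s in scales]

def fake_features(box):
    """Hypothetical stand-in for the feature vector of a pretrained network."""
    return [float(sum(box) % 7)] * FEATURE_DIM

def multigrid_feature_vector(cx, cy, radius):
    """Concatenate the per-image feature vectors into one classifier input."""
    vector = []
    for box in multigrid_boxes(cx, cy, radius):
        vector.extend(fake_features(box))
    return vector

boxes = multigrid_boxes(cx=500, cy=400, radius=300)
print(boxes)
print(len(multigrid_feature_vector(500, 400, 300)))  # 3 * 4096
```

In a real pipeline each box would be cropped from the image, resized to the network's input size, and passed through the pretrained network in place of `fake_features`.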

Data Partitioning

This study considered several experiments that used the entire AREDS fundus image data set as well as different subsets of AREDS. It also used different partitionings and groupings of the AREDS image data set. The different subsets of AREDS used are described here. The set of all AREDS images (133 821) was used, including stereo pairs (ensuring that stereo pairs from the same eye did not appear in the training and testing data sets). We called this set WS for “with stereo pairs.” We called the next set NS for “no stereo.” In this data set, only 1 of the stereo images was kept from each eye, which resulted in 67 401 images. We called the next set NSG for “no stereo, gradable.” Because AREDS images are collected under a variety of conditions (eg, lighting or eye orientation) and therefore are not of uniform quality, an ophthalmologist (KP) was tasked to annotate a subset of images (n = 7775, 5.8%) for “gradability” as a basic measure of fundus image quality. Subsequently, a machine learning method was used to extend the index of gradability over the entire image data set NS to exclude automatically the most egregious low-quality images. The NSG was derived from NS by removing 458 images (0.34% of all AREDS images) with the smallest “gradability” index. The final set was called H for human. For comparison with human performance levels, we tasked a physician to independently and manually grade a subset of AREDS images (n = 5000, 3.7%). The grades that were generated by the physician and the machine were compared with the AREDS gold standard AMD scores. The number of images that were used in each set, broken down by class, is reported in Table 1.
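The subset sizes and percentages quoted in this section can be checked with a short calculation. The sketch below uses only counts stated in the text and in Table 1; the variable and function names are illustrative. It also shows that the 458 removed images correspond to about 0.34% of all AREDS images but about 0.68% of the NS set from which they were removed.

```python
TOTAL_WS = 133_821   # all AREDS images, including stereo pairs
TOTAL_NS = 67_401    # one image kept per stereo pair
REMOVED = 458        # lowest-gradability images dropped from NS to form NSG

def percent(part, whole):
    """Percentage of `whole` represented by `part`, rounded to 2 decimals."""
    return round(100.0 * part / whole, 2)

print(percent(7_775, TOTAL_WS))    # gradability-annotated subset
print(percent(5_000, TOTAL_WS))    # human-graded subset H
print(percent(REMOVED, TOTAL_WS))  # removed images relative to all images
print(percent(REMOVED, TOTAL_NS))  # removed images relative to NS
print(TOTAL_NS - REMOVED)          # size of NSG
```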

These data sets were further subdivided into training and testing subsets. We used a conventional K-fold cross-validation performance evaluation method, with K = 5, in which 4 folds were used for training and 1 was used for testing (with a rotation of the folds). Additionally, because images from patients were collected over multiple visits, and because DCNN performance depends on having as large a number as possible of patient examples, we considered 2 types of experiments that corresponded to 2 types of data grouping and partitioning. In the baseline partitioning method (termed standard partitioning [SP]), images taken at each patient visit (occurring approximately every 2 years) were considered unique. For SP, when both stereo pairs were used (WS), care was taken that they always appeared together in the same fold. In a second partitioning method (termed patient partitioning [PP]), we ensured that all images of the same patient appeared in the same fold. Standard partitioning views each patient visit as a unique entity, while PP considers that each patient (not each visit) forms a unique entity. Therefore, PP is a more stringent partitioning method that provides fewer patients to the classifier to train on; any patient with a highly abnormal or atypical retina will be represented in only one of the folds.
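Patient partitioning amounts to a grouped K-fold split in which the patient, not the image, is the unit assigned to a fold. The following sketch shows one way to build such folds in plain Python. It is an illustration, not the study's code: the shuffling seed, the round-robin assignment, and the function names are assumptions.

```python
import random

def patient_folds(image_patient_ids, k=5, seed=0):
    """Assign every image to a fold so that all images of a patient share one fold."""
    patients = sorted(set(image_patient_ids))
    random.Random(seed).shuffle(patients)
    fold_of_patient = {p: i % k for i, p in enumerate(patients)}
    return [fold_of_patient[p] for p in image_patient_ids]

def train_test_splits(folds, k=5):
    """Yield (train_indices, test_indices), holding out each fold once for testing."""
    for held_out in range(k):
        train = [i for i, f in enumerate(folds) if f != held_out]
        test = [i for i, f in enumerate(folds) if f == held_out]
        yield train, test

# Toy data (assumption): 20 patients with 2 to 4 images each.
ids = [p for p in range(20) for _ in range(p % 3 + 2)]
folds = patient_folds(ids, k=5)
for train, test in train_test_splits(folds, k=5):
    shared = {ids[i] for i in train} & {ids[i] for i in test}
    print(len(train), len(test), len(shared))  # the last value is always 0
```

Standard partitioning would instead use the patient visit as the grouping key, so images of one patient from different visits could fall in different folds.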

Performance Metrics

The performance metrics used included accuracy, sensitivity, specificity, positive predictive value, negative predictive value, and kappa score, which accounts for the possibility of agreement by chance. Because any classifier trades off between sensitivity and specificity, to compare methods we used receiver operating characteristic (ROC) curves that plot the detection probability (ie, sensitivity) vs the false alarm rate (ie, 100% minus specificity) for each algorithm/experiment. To compare with human performance levels, we also showed the operating point that demonstrated the human clinician operating performance level. We also computed the area under the curve for each algorithm/experiment.
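These metrics all follow from the 2×2 confusion matrix of predicted vs gold standard labels, as the sketch below illustrates. The counts and scores are toy values chosen for easy arithmetic, not results from this study, and the function names are illustrative. The kappa shown is Cohen's kappa, and the area under the curve is computed from its rank interpretation (the probability that a class 1 image scores higher than a class 0 image).

```python
def binary_metrics(tp, fp, fn, tn):
    """Return the standard 2-class metrics from confusion-matrix counts."""
    n = tp + fp + fn + tn
    accuracy = (tp + tn) / n
    # Chance agreement: product of marginal rates, summed over both classes.
    p_chance = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / (n * n)
    return {
        "accuracy": accuracy,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "kappa": (accuracy - p_chance) / (1 - p_chance),
    }

def auc(scores_pos, scores_neg):
    """Area under the ROC curve as a pairwise ranking probability (ties count 0.5)."""
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0
               for p in scores_pos for q in scores_neg)
    return wins / (len(scores_pos) * len(scores_neg))

# Toy counts (assumption): 100 class 1 and 100 class 0 images.
m = binary_metrics(tp=85, fp=10, fn=15, tn=90)
print(m["accuracy"], m["sensitivity"], m["specificity"], m["kappa"])

# Toy classifier scores (assumption) for class 1 and class 0 images.
print(auc([0.9, 0.8, 0.4], [0.7, 0.3, 0.2]))
```

With these toy counts the observed agreement is 0.875 and the chance agreement is 0.5, so kappa is (0.875 − 0.5) / (1 − 0.5) = 0.75.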

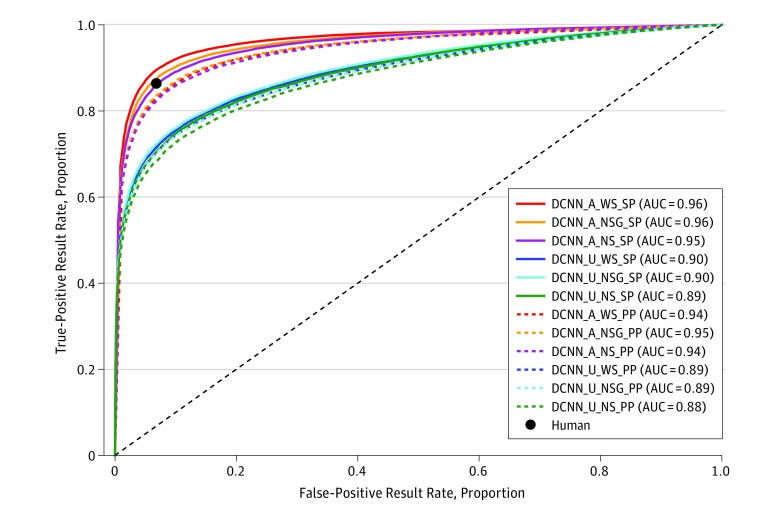

Results

The experiments used the AREDS fundus images with the different subsets and partitioning that were previously explained. Performance levels are reported in Table 2 (SP) and Table 3 (PP) for sets H, WS, NS, and NSG, and for the 2 algorithms (DCNN-A and DCNN-U) and the human performance levels. Receiver operating characteristic curves and areas under the curve are reported in Figure 2.

Table 2. Performance Levels for Human and Machine Experiments Using Standard Partitioned Data<sup>a</sup>.

| Method/Data set | Human H | DCNN-A WS | DCNN-U WS | DCNN-A NSG | DCNN-U NSG | DCNN-A NS | DCNN-U NS |
|---|---|---|---|---|---|---|---|
| Accuracy | 90.2 | 91.6 (0.1) | 83.7 (0.5) | 90.7 (0.5) | 83.9 (0.4) | 90.0 (0.6) | 83.2 (0.2) |
| Sensitivity | 86.4 | 88.4 (0.7) | 73.5 (0.9) | 87.2 (0.8) | 73.8 (0.7) | 85.7 (2.3) | 72.8 (0.2) |
| Specificity | 93.2 | 94.1 (0.6) | 91.8 (0.3) | 93.4 (1.0) | 92.1 (0.5) | 93.4 (1.0) | 91.5 (0.2) |
| PPV | 91.0 | 92.3 (0.7) | 87.7 (0.2) | 91.5 (1.2) | 88.3 (0.7) | 91.3 (1.0) | 87.3 (0.3) |
| NPV | 89.6 | 91.1 (0.4) | 81.2 (0.7) | 90.1 (0.4) | 81.4 (0.4) | 89.1 (1.4) | 80.8 (0.1) |
| Kappa | 0.800 | 0.829 (0.003) | 0.663 (0.010) | 0.810 (0.011) | 0.700 (0.008) | 0.796 (0.013) | 0.654 (0.003) |

Abbreviations: DCNN-A, deep convolutional neural network, algorithm A; DCNN-U, deep convolutional neural network, algorithm U; H, human; NS, no stereo; NSG, no stereo gradable; NPV, negative predicted value; PPV, positive predicted value; WS, with stereo pairs.

<sup>a</sup> All values indicate percentages, except for the kappa coefficient. Values in parentheses indicate standard deviations.

Figure 2. Receiver Operating Characteristic Curves.

Receiver operating characteristic curves for all experiments and algorithms showing also the corresponding area under the curve values.

A indicates algorithm A; AUC, area under the curve; DCNN, deep convolutional neural networks; NS, no stereo; NSG, no stereo gradable; PP, patient partitioning; SP, standard partitioning; WS, with stereo pairs; U, algorithm U.

In aggregate, performance results for both DL approaches show promising outcomes when considering all metrics. Accuracy (SD) ranged from 90.0% (0.6%) to 91.6% (0.1%) for DCNN-A (Table 2) and 88.4% (0.5%) to 88.8% (0.7%) (Table 3); for DCNN-U, it ranged from 83.2% (0.2%) to 83.9% (0.4%) (Table 2) and 82.4% (0.5%) to 83.1% (0.5%) (Table 3). As seen in Table 2, Table 3, and the ROCs, DCNN-A consistently outperformed DCNN-U. This can be explained by the fact that DCNN-A was specifically trained to solve the AMD classification problem by optimizing all of the DCNN weights over all layers of the network while for DCNN-U, with its simpler training requirement, the training only affected the final (LSVM) classification stage.

Table 3. Performance Levels for Human and Machine Experiments Using Patient Partitioned Data<sup>a</sup>.

| Method/Data set | Human H | DCNN-A WS | DCNN-U WS | DCNN-A NSG | DCNN-U NSG | DCNN-A NS | DCNN-U NS |
|---|---|---|---|---|---|---|---|
| Accuracy | 90.2 | 88.7 (0.7) | 83.1 (0.9) | 88.8 (0.7) | 83.1 (0.5) | 88.4 (0.5) | 82.4 (0.5) |
| Sensitivity | 86.4 | 84.6 (0.9) | 72.3 (2.2) | 85.3 (1.6) | 71.7 (1.4) | 84.5 (0.9) | 71.0 (1.3) |
| Specificity | 93.2 | 92.0 (0.7) | 91.8 (0.6) | 91.6 (1.2) | 92.2 (0.5) | 91.5 (0.7) | 91.4 (0.3) |
| PPV | 91.0 | 89.4 (1.1) | 87.5 (1.1) | 89.2 (1.1) | 88.0 (0.7) | 88.9 (1.0) | 86.9 (0.5) |
| NPV | 89.6 | 88.2 (1.0) | 80.6 (1.4) | 88.6 (1.1) | 80.2 (1.1) | 88.0 (0.5) | 79.8 (0.5) |
| Kappa | 0.800 | 0.770 (0.013) | 0.652 (0.020) | 0.773 (0.014) | 0.651 (0.010) | 0.764 (0.010) | 0.636 (0.011) |

Abbreviations: DCNN-A, deep convolutional neural network, algorithm A; DCNN-U, deep convolutional neural network, algorithm U; H, human; NS, no stereo; NSG, no stereo gradable; NPV, negative predicted value; PPV, positive predicted value; WS, with stereo pairs.

<sup>a</sup> All values indicate percentages, except for the kappa coefficient. Values in parentheses indicate standard deviations.

Table 2 and Table 3 also suggest that the DCNN-A results are comparable to human performance levels. Based on accuracy and kappa scores, in Table 3, DCNN-A performance (a = 88.7% [0.7]; κ = 0.770 [0.013]) is close to or comparable with human performance levels (a = 90.2% and κ = 0.800), and in Table 2 it slightly exceeds the human performance levels (a = 91.6% [0.1], κ = 0.829 [0.003]). In Table 2 and Table 3, the kappa scores for DCNN-A (κ, 0.764 [0.010]-0.829 [0.003]) and the human grader (κ = 0.800) show substantial to near perfect agreement with the AREDS AMD gold standard grading, while DCNN-U exhibits substantial agreement (κ, 0.636 [0.011]-0.700 [0.008]). Receiver operating characteristic curves also show similar human and machine performance levels. The other metrics in Table 2 and Table 3 also echo these observations.

To test algorithms on images that are representative of the quality that one would expect in actual practice, we did not perform extensive eliminations of images based on their quality. In particular, data sets WS and NS used all images while data set NSG removed only 458 (approximately 0.68% of NS) of the worst-quality images. Comparing NSG with NS, performance was slightly but measurably lower when the low-quality images were retained, as seen in the DCNN-A accuracy of 90.7% for NSG vs 90.0% for NS (Table 2).

Experiments that used PP showed a small degradation in performance levels when compared with experiments that used SP. This is because, for patient partitioning, the classifier was trained on 923 fewer patients (20%). The performance in SP was reflective of a scenario in which training would take advantage of knowledge that was gained during a longitudinal study, vs PP experiments that take a strict view on grouping to remove any possible correlation between fundus images across visits. In aggregate, after accounting for network and partition differences, the results that were obtained for WS, NSG, and NS were close, with a preference for WS (since there were more data to train from) and NSG (because some low-quality images were removed) over NS. For example, DCNN-A accuracies (SD) are 91.6% (0.1%) (WS), 90.7% (0.5%) (NSG), and 90.0% (0.6%) (NS) (Table 2).

Discussion

We described using DL methods for the automated assessment of AMD from color fundus images. These experimental results show promising performance levels in which deep convolutional neural networks appear to perform a screening function that has clinical relevance with performance levels that are comparable with physicians. Specifically, the AREDS data set is, to our knowledge, the largest annotated fundus image data set that is currently available for AMD. Therefore, this study may constitute a useful baseline for future machine-learning methods to be applied to AMD.

Limitations

One limitation of this data set is a mild class imbalance regarding the number of fundus images in class 1 vs 0, which may have a moderate effect on performance levels. Another potential limitation is that this data set uses digitized images that were taken from analog photographs. This possibly can negatively affect quality and machine performance when compared with digital fundus acquisition, but this possibility cannot be determined from this investigation because none of the images were digital.

Another limitation of this study is that it relies exclusively on AREDS and does not make use of a separately collected clinical data set for performance evaluation, as was done in the diabetic retinopathy studies (eg, training a model on EyePACS [EyePACS LLC] and testing on Methods to Evaluate Segmentation and Indexing Techniques in the Field of Retinal Ophthalmology [MESSIDOR]). The situation is different, however, for AMD, for which there is currently no large reference clinical data set for use other than AREDS.

Future clinical translation of DL approaches would require validation on separate clinical data sets and using more human clinicians for comparison. While this study offers a promising foray into using DL for automated AMD analysis, future work could involve using more sophisticated networks to improve performance, expanding to lesion delineation and exploiting other modalities (eg, optical coherence tomography).

Conclusions

This study showed that automated algorithms can play a role in addressing several clinically relevant challenges in the management of AMD, including cost of screening, access to health care, and the assessment of novel treatments. The results of this study, using more than 130 000 images from AREDS, suggest that new DL algorithms can perform a screening function that has clinical relevance with results similar to human performance levels to help find individuals that likely should be referred to an ophthalmologist in the management of AMD. This approach could be used to distinguish among various retinal pathologies and subsequently classify the severity level within the identified pathology.

References

- 1.Age-Related Eye Disease Study Research Group The Age-Related Eye Disease Study system for classifying age-related macular degeneration from stereoscopic color fundus photographs: the Age-Related Eye Disease Study Report Number 6. Am J Ophthalmol. 2001;132(5):668-681. [DOI] [PubMed] [Google Scholar]

- 2.Bird AC, Bressler NM, Bressler SB, et al. ; The International ARM Epidemiological Study Group . An international classification and grading system for age-related maculopathy and age-related macular degeneration. Surv Ophthalmol. 1995;39(5):367-374. [DOI] [PubMed] [Google Scholar]

- 3.Bressler NM. Age-related macular degeneration is the leading cause of blindness.... JAMA. 2004;291(15):1900-1901. [DOI] [PubMed] [Google Scholar]

- 4.Macular Photocoagulation Study Group Subfoveal neovascular lesions in age-related macular degeneration. guidelines for evaluation and treatment in the macular photocoagulation study. Arch Ophthalmol. 1991;109(9):1242-1257. [PubMed] [Google Scholar]

- 5.Bressler NM, Bressler SB, Congdon NG, et al. ; Age-Related Eye Disease Study Research Group . Potential public health impact of Age-Related Eye Disease Study results: AREDS report no. 11. Arch Ophthalmol. 2003;121(11):1621-1624. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Age-Related Eye Disease Study Research Group A randomized, placebo-controlled, clinical trial of high-dose supplementation with vitamins C and E, beta carotene, and zinc for age-related macular degeneration and vision loss: AREDS report no. 8. Arch Ophthalmol. 2001;119(10):1417-1436. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Bressler NM, Chang TS, Suñer IJ, et al. ; MARINA and ANCHOR Research Groups . Vision-related function after ranibizumab treatment by better—or worse-seeing eye: clinical trial results from MARINA and ANCHOR. Ophthalmology. 2010;117(4):747-56.e4. [DOI] [PubMed] [Google Scholar]

- 8.US Department of Commerce; United States Census Bureau Statistical abstract of the United States: 2012. https://www2.census.gov/library/publications/2011/compendia/statab/131ed/2012-statab.pdf. Accessed August 18, 2017.

- 9.Holz FG, Strauss EC, Schmitz-Valckenberg S, van Lookeren Campagne M. Geographic atrophy: clinical features and potential therapeutic approaches. Ophthalmology. 2014;121(5):1079-1091. [DOI] [PubMed] [Google Scholar]

- 10.Lim LS, Mitchell P, Seddon JM, Holz FG, Wong TY. Age-related macular degeneration. Lancet. 2012;379(9827):1728-1738. [DOI] [PubMed] [Google Scholar]

- 11.Lindblad AS, Lloyd PC, Clemons TE, et al. ; Age-Related Eye Disease Study Research Group . Change in area of geographic atrophy in the Age-Related Eye Disease Study: AREDS report number 26. Arch Ophthalmol. 2009;127(9):1168-1174. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Tolentino MJ, Dennrick A, John E, Tolentino MS. Drugs in phase II clinical trials for the treatment of age-related macular degeneration. Expert Opin Investig Drugs. 2015;24(2):183-199. [DOI] [PubMed] [Google Scholar]

- 13.Burlina P, Freund DE, Dupas B, Bressler N. Automatic screening of age-related macular degeneration and retinal abnormalities. Conf Proc IEEE Eng Med Biol Soc. 2011;2011:3962-3966. [DOI] [PubMed] [Google Scholar]

- 14.Feeny AK, Tadarati M, Freund DE, Bressler NM, Burlina P. Automated segmentation of geographic atrophy of the retinal epithelium via random forests in AREDS color fundus images. Comput Biol Med. 2015;65:124-136. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Freund DE, Bressler NM, Burlina P Automated detection of drusen in the macula. Paper presented at the Institute of Electrical and Electronics Engineers International Symposium on Biomedical Imaging; June 28-July 1, 2009; Boston, Massachusetts. http://ieeexplore.ieee.org/document/5192983/. Accessed August 18, 2017. [Google Scholar]

- 16.Vapnik VN. Statistical Learning Theory. New York, NY: Wiley; 1998:416-417.

- 17.Burlina P, Freund DE, Joshi N, Wolfson Y, Bressler NM. Detection of age-related macular degeneration via deep learning. Paper presented at the Institute of Electrical and Electronics Engineers International Symposium on Biomedical Imaging; April 13-16, 2016; Prague, Czech Republic. http://ieeexplore.ieee.org/document/7493240/. Accessed August 18, 2017.

- 18.Lowe DG. Distinctive image features from scale-invariant keypoints. Int J Comput Vis. 2004;60(2):91-110. https://doi.org/10.1023/B:VISI.0000029664.99615.94

- 19.Rajagopalan AN, Burlina P, Chellappa R. Detection of people in images. Paper presented at the International Joint Conference on Neural Networks; July 10-16, 1999; Washington, DC. http://www.ee.iitm.ac.in/~raju/conf/c15.pdf. Accessed August 18, 2017.

- 20.Trucco E, Ruggeri A, Karnowski T, et al. Validating retinal fundus image analysis algorithms: issues and a proposal. Invest Ophthalmol Vis Sci. 2013;54(5):3546-3559.

- 21.Burlina P, Pacheco KD, Joshi N, Freund DE, Bressler NM. Comparing humans and deep learning performance for grading AMD: a study in using universal deep features and transfer learning for automated AMD analysis. Comput Biol Med. 2017;82:80-86.

- 22.Girshick R, Donahue J, Darrell T, Malik J. Rich feature hierarchies for accurate object detection and semantic segmentation. https://arxiv.org/abs/1311.2524. Accessed August 18, 2017.

- 23.Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. https://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf. Accessed August 18, 2017.

- 24.Razavian AS, Azizpour H, Sullivan J, Carlsson S. CNN features off-the-shelf: an astounding baseline for recognition. Paper presented at the Institute of Electrical and Electronics Engineers Conference of Computer Vision and Pattern Recognition; May 12, 2014; Stockholm, Sweden. https://arxiv.org/pdf/1403.6382.pdf. Accessed August 18, 2017.

- 25.Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. https://arxiv.org/abs/1409.1556. Accessed August 18, 2017.

- 26.Szegedy C, Liu W, Jia Y. Going deeper with convolutions. Paper presented at the Institute of Electrical and Electronics Engineers Conference of Computer Vision and Pattern Recognition; June 7-12, 2015; Boston, MA. http://ieeexplore.ieee.org/document/7298594/. Accessed August 18, 2017.

- 27.Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316(22):2402-2410.

- 28.Quellec G, Charrière K, Boudi Y, Cochener B, Lamard M. Deep image mining for diabetic retinopathy screening. Med Image Anal. 2017;39:178-193.

- 29.Zeiler MD, Fergus R. Visualizing and understanding convolutional networks. Paper presented at the European Conference on Computer Vision; September 6-12, 2014; Zürich, Switzerland. https://arxiv.org/abs/1311.2901. Accessed August 18, 2017.

- 30.Sermanet P, Eigen D, Zhang X, Mathieu M, Fergus R, LeCun Y. OverFeat: integrated recognition, localization and detection using convolutional networks. https://arxiv.org/abs/1312.6229. Accessed August 18, 2017.

- 31.Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159-174.

- 32.Pacheco K, et al. Evaluation of automated drusen detection system for fundus photographs of patients with age-related macular degeneration. Invest Ophthalmol Vis Sci. 2016;57(12):1611. http://iovs.arvojournals.org/article.aspx?articleid=2560240&resultClick=1. Accessed August 18, 2017.