Key Points

Question

Can a computerized, objective system be developed to quantify conjunctival lissamine green staining for the diagnosis of dry eye disease?

Findings

In this cohort study, the output of a semiautomated computerized system for the objective quantification of lissamine green staining of the conjunctiva in 35 clinical digital images obtained from 11 patients with a standard protocol correlated well with the scores obtained by 2 ophthalmologists using the van Bijsterveld scale and moderately when the National Eye Institute scale was used.

Meaning

This algorithm may have potential for improving the characterization and quantification of the severity of ocular surface damage to the conjunctiva in dry eye disease.

Abstract

Importance

Lissamine green (LG) staining of the conjunctiva is a key biomarker in evaluating ocular surface disease. Such staining is currently assessed using relatively coarse subjective scales. Objective assessment would standardize comparisons over time and between clinicians.

Objective

To develop a semiautomated, quantitative system to assess lissamine green staining of the bulbar conjunctiva on digital images.

Design, Setting, and Participants

Using a standard photography protocol, 35 digital images of the conjunctiva of 11 patients with a diagnosis of dry eye disease based on characteristic signs and symptoms were obtained after topical administration of preservative-free LG, 1%, solution. Images were scored independently by 2 masked ophthalmologists in an academic medical center using the van Bijsterveld and National Eye Institute (NEI) scales. The region of interest was identified by manually marking 7 anatomic landmarks on the images. An objective measure was developed by segmenting the images, forming a vector of key attributes, and then performing a random forest regression. Subjective scores were correlated with the output from a computer algorithm using a cross-validation technique. The ranking of images from least to most staining was compared between the algorithm and the ophthalmologists. The study was conducted from April 26, 2012, through June 2, 2016.

Main Outcomes and Measures

Correlation and level of agreement among computerized algorithm scores, van Bijsterveld scale clinical scores, and NEI scale clinical scores.

Results

The scores from the automated algorithm correlated well with the mean scores obtained from the gradings of 2 ophthalmologists for the 35 images using the van Bijsterveld scale (Spearman correlation coefficient, rs = 0.79), and moderately with the NEI scale (rs = 0.61) scores. For qualitative ranking of staining, the correlations between the automated algorithm and each of the 2 ophthalmologists were rs = 0.78 and rs = 0.83, respectively.

Conclusions and Relevance

The algorithm performed well when evaluating LG staining of the conjunctiva, as evidenced by good correlation with subjective gradings using 2 different grading scales. Future longitudinal studies are needed to assess the responsiveness of the algorithm to change of conjunctival staining over time.

This cohort study describes a semiautomated system to quantitate lissamine green staining of the conjunctiva in patients with dry eye disease.

Introduction

Ocular surface staining after instillation of vital dyes is a critical component for the diagnosis and evaluation of a variety of ocular surface diseases, including dry eye disease (DED). Dyes used to evaluate the ocular surface include fluorescein to stain the cornea and rose bengal or lissamine green (LG) to assess local abnormalities of the bulbar conjunctiva. Lissamine green and rose bengal have similar staining patterns on the conjunctiva, with both highlighting epithelial cells that are damaged or dead. However, LG is less toxic to cells and causes less stinging; as a result, LG is generally preferred over rose bengal for evaluating the conjunctiva in ocular surface disorders.

Several grading scales for LG staining of the bulbar conjunctiva have been used to evaluate DED. In all of these systems, graders compare the appearance of a patient's conjunctiva with standard reference images to assign an ordinal scale number that designates the severity of staining. However, subjective grading of conjunctival staining shows variable intragrader (repeatability) and intergrader reliability.

Ocular surface staining is one of the most commonly used methods for diagnosing DED and is also commonly included among the inclusion criteria or outcome measures of clinical trials of DED treatments. Therefore, quantitative, objective assessment methods are needed to enable standardized assessment across clinicians. Improving the characterization of staining would also help to define DED more objectively and reliably, aiding in the clinical assessment of disease severity and in monitoring responses to various therapies.

Although an automated grading system for the evaluation of fluorescein staining of the cornea associated with DED has been reported, to our knowledge, an automated system for the assessment of LG staining of the conjunctiva has not been described. Herein we describe and validate a semiautomated computer-based algorithm to assess LG conjunctival staining using digital photographs acquired by a standard protocol. The study was conducted from April 26, 2012, through June 2, 2016.

Methods

Image Acquisition and Preprocessing

Eleven patients with a history of DED were recruited from the Scheie Eye Institute at the University of Pennsylvania. All tenets of the Declaration of Helsinki were followed and written informed consent was obtained from all participants. Approval was obtained from the University of Pennsylvania Institutional Review Board. Participants received financial compensation.

Preservative-free LG, 1%, solution was obtained from a compounding pharmacy (Leiter's Pharmacy). After instillation of 1 drop of LG into each eye, digital photographs of the conjunctiva were acquired 1 to 2 minutes after dye administration using a previously described standardized external photography protocol, modified to image the conjunctiva specifically. Briefly, photographs were taken using a camera (EOS Rebel T2i, Canon USA Inc) with a 100-mm macro lens equipped with image stabilization, with the camera positioned on an adjustable monopod. The camera was focused on the conjunctiva, and 1 photograph each of the nasal and temporal conjunctiva was taken for each eye. During each session, a color calibration image was also obtained, consisting of the mini (5.7 × 8.3 cm [2.25 × 3.25 in]) GretagMacbeth Color Checker Chart (X-rite GmbH) together with a white index card for luminance correction.

Thirty-five photographs were selected for this study using the criteria of best focus, visibility of LG staining, and exposure. For 7 individuals, all 4 views were included (nasal and temporal for each eye). One participant was monocular and therefore had images for only the right eye. Owing to poor exposure, the image set included only left eye images from 2 patients and temporal images from each eye for another patient.

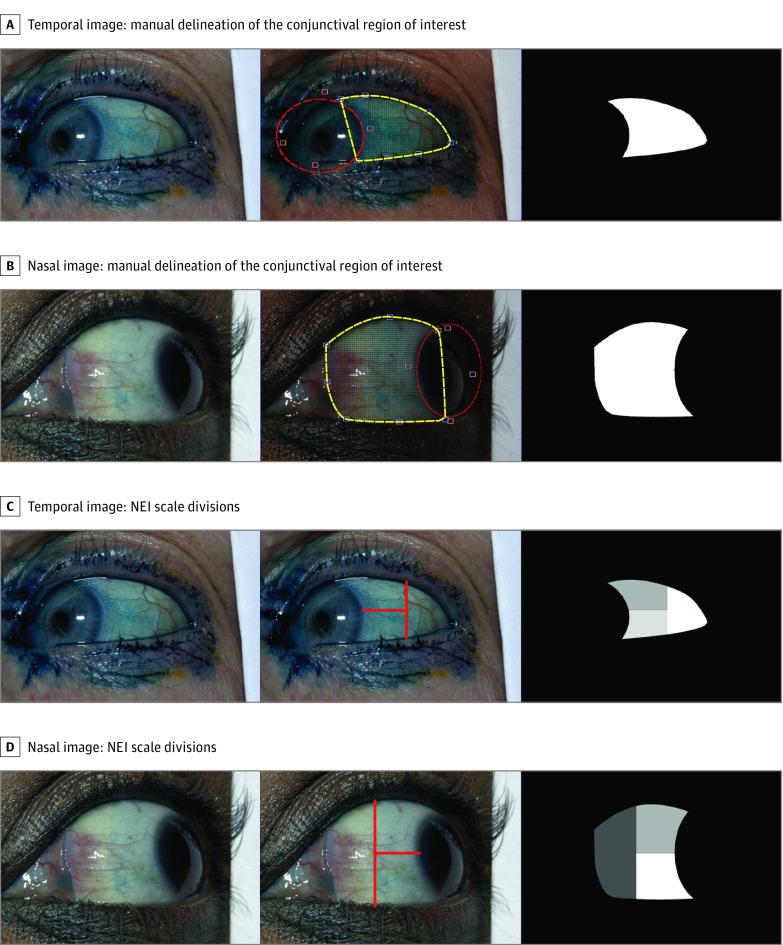

Using a custom semiautomatic marking program written in MATLAB (The MathWorks Inc), the user manually marked the region of interest (ROI) of the conjunctiva by first defining 7 anatomic landmarks on the image: 3 points along the lower eyelid, 3 points along the upper eyelid, and 1 point at the medial or lateral canthus, depending on whether the image captured the nasal or temporal conjunctiva. The program then automatically connects these landmarks with a smooth curve drawn through each landmark point. The middle panels in Figure 1A and B show examples of these landmarks and the resulting delineation in yellow. Because the upper and lower eyelid curves are joined vertically, this delineation still includes a portion of the cornea. To remove the cornea from the ROI, the user fits an ellipse (controlled by 4 points) to the boundary between the cornea and conjunctiva. The red delineation in the middle panels of Figure 1A and B shows examples of this ellipse and the 4 control points adjacent to it. The intersection of this ellipse (red) with the initial delineation (yellow) is then excluded from the ROI, removing the cornea. The rightmost panels in Figure 1A and B show the final conjunctival ROI created from these manual delineations. Manual delineation of the ROI, performed before processing, took an estimated mean of 3 minutes per image.
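The ROI construction above amounts to simple mask arithmetic: fill the region enclosed by the landmarks, then subtract the corneal ellipse. The Python/NumPy sketch below illustrates that logic under stated assumptions; it is not the authors' MATLAB program. The landmarks are joined here by straight segments rather than the smooth curve the program draws, and the ellipse is assumed to be axis-aligned, with its 4 control points taken as its left, right, top, and bottom extremes. The function names `polygon_mask`, `ellipse_mask`, and `conjunctival_roi` are hypothetical.

```python
import numpy as np


def polygon_mask(shape, vertices):
    """Pixels whose centres lie inside a closed polygon (even-odd ray casting).

    `vertices` are (x, y) landmarks in boundary order, in pixel units. Straight
    edges are an assumption; the original program draws a smooth curve.
    """
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    px, py = xs + 0.5, ys + 0.5  # pixel centres
    inside = np.zeros(shape, dtype=bool)
    pts = [tuple(map(float, v)) for v in vertices]
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        crosses = (y1 > py) != (y2 > py)  # edge straddles this pixel row
        with np.errstate(divide="ignore", invalid="ignore"):
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
        inside ^= crosses & (px < x_cross)  # toggle on each crossing to the right
    return inside


def ellipse_mask(shape, control_points):
    """Axis-aligned ellipse spanned by 4 hypothetical extreme control points."""
    pts = np.asarray(control_points, dtype=float)
    xmin, xmax = pts[:, 0].min(), pts[:, 0].max()
    ymin, ymax = pts[:, 1].min(), pts[:, 1].max()
    cx, cy = (xmin + xmax) / 2, (ymin + ymax) / 2
    ax, ay = (xmax - xmin) / 2, (ymax - ymin) / 2
    ys, xs = np.mgrid[0:shape[0], 0:shape[1]]
    return ((xs + 0.5 - cx) / ax) ** 2 + ((ys + 0.5 - cy) / ay) ** 2 <= 1.0


def conjunctival_roi(shape, landmarks, corneal_points):
    """Landmark region minus its intersection with the corneal ellipse."""
    return polygon_mask(shape, landmarks) & ~ellipse_mask(shape, corneal_points)
```

In practice the 7 landmarks would be passed in boundary order (for example, upper eyelid points, canthus, then lower eyelid points in reverse), since the fill depends on vertex order.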

Figure 1. Examples of Manual Delineation of the Conjunctival Region of Interest and Lines Corresponding to the Divisions of the National Eye Institute (NEI) Scale for Temporal and Nasal Images.

The yellow landmarks and curves in the central panels of A and B show the initial delineation of the conjunctival boundaries. The red ellipse (controlled by 4 adjacent red points) is used to remove the regions of the cornea from the yellow delineation. The 2 delineations are combined to create the final conjunctival region of interest shown in the rightmost panels. C and D, Examples of lissamine green images of the temporal (C) and nasal (D) conjunctiva with the NEI lines and the conjunctival region of interest drawn.

To permit application of the National Eye Institute (NEI) grading system, horizontal and vertical lines were manually added to the ROI using digital image software (Adobe Photoshop, Adobe Systems Inc), dividing each conjunctival image into 3 areas. The lines were drawn following the recommendations of the report of the NEI/Industry Workshop on clinical trials in dry eyes. The vertical division was placed halfway between the limbus and the lateral canthus, and the horizontal division line divided the visible conjunctiva in half (Figure 1C and D).
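One way to express the NEI division lines in code is as a split of the ROI mask into 3 pixel sets. The sketch below assumes, as one plausible reading of the description (Figure 1C and D remain the authority), that the vertical line separates a canthal area from a limbal area and that the horizontal line, placed at the vertical midpoint of the visible conjunctiva, halves the limbal area. The mapping of these parts to the superior, inferior, and lateral labels, the column positions `x_limbus` and `x_canthus`, and the function name `nei_subregions` are all hypothetical.

```python
import numpy as np


def nei_subregions(roi, x_limbus, x_canthus):
    """Split a conjunctival ROI mask into 3 NEI-style areas (hypothetical geometry)."""
    h, w = roi.shape
    ys, xs = np.mgrid[0:h, 0:w]
    # Vertical division halfway between limbus and canthus columns
    x_mid = (x_limbus + x_canthus) / 2.0
    toward_canthus = (xs >= x_mid) if x_canthus > x_limbus else (xs <= x_mid)
    # Horizontal division halves the visible conjunctiva (rows occupied by the ROI)
    rows = np.flatnonzero(roi.any(axis=1))
    y_mid = (rows.min() + rows.max()) / 2.0
    upper = ys <= y_mid
    return {
        "superior": roi & ~toward_canthus & upper,   # assumed label mapping
        "inferior": roi & ~toward_canthus & ~upper,  # assumed label mapping
        "lateral": roi & toward_canthus,             # assumed label mapping
    }
```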

All images were then balanced for color and luminance using a previously described protocol. Briefly, image data were saved in the Canon CR2 raw file format. The public domain program dcraw was used to extract the image data from the raw files into a nominal CIE XYZ tristimulus representation, which was then processed further by custom software written in MATLAB (MathWorks Inc).
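The details of the color and luminance balancing are in the previously described protocol and are not reproduced here. As a hedged illustration only, the sketch below shows one common form of white-card luminance correction (scaling the XYZ image so that the card's mean Y in the session's calibration image equals 1), followed by the standard XYZ-to-linear-sRGB matrix for a D65 white point. Whether the authors' MATLAB code converts to sRGB at all, and the function names used here, are assumptions.

```python
import numpy as np

# Standard XYZ -> linear sRGB matrix (D65 white point, IEC 61966-2-1)
XYZ_TO_LINEAR_SRGB = np.array([
    [3.2406, -1.5372, -0.4986],
    [-0.9689, 1.8758, 0.0415],
    [0.0557, -0.2040, 1.0570],
])


def white_card_luminance(calib_xyz, card_mask):
    """Mean Y (luminance) of the white-card pixels in an H x W x 3 XYZ image."""
    return float(calib_xyz[..., 1][card_mask].mean())


def normalize_luminance(xyz, white_y):
    """Scale an XYZ image so the session's white card would have Y = 1."""
    return xyz / white_y


def xyz_to_linear_srgb(xyz):
    """Apply the 3 x 3 matrix to every pixel and clip to the displayable range."""
    rgb = xyz @ XYZ_TO_LINEAR_SRGB.T
    return np.clip(rgb, 0.0, 1.0)
```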

Computerized Grading of Images

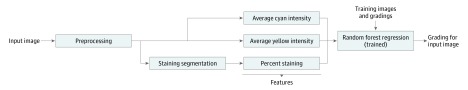

Once the ROI is selected and the lines for the NEI grading system are applied, our computerized grading is fully automated. Training the computer algorithm took 10 minutes, and the trained algorithm took a mean of less than 0.5 second to analyze each new image. Figure 2 gives an overview of our algorithm for LG staining. First, the algorithm segments the LG staining in the image. This segmentation is used to estimate the percentage of conjunctival staining, which serves as the first feature describing each image. Next, the algorithm separates the cyan and yellow channels (of the CMYK color space) from the image and calculates the mean cyan and mean yellow intensities over the conjunctiva. These 2 channels were chosen because they best exhibit the LG dye color, and their 2 mean values serve as additional image features. Finally, the percentage staining, mean cyan, and mean yellow features are concatenated to form a 3-dimensional feature vector for each image. The algorithm uses these feature vectors to train a random forest (RF) regression that produces the automatic grading for each image. The following sections describe these 3 parts of the algorithm in more detail.
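The text does not state which RGB-to-CMYK conversion was used. A minimal sketch, assuming the simple formula K = 1 - max(R, G, B), C = (1 - R - K)/(1 - K), Y = (1 - B - K)/(1 - K) applied to RGB values in [0, 1], is shown below; the function name `cyan_yellow_channels` is hypothetical.

```python
import numpy as np


def cyan_yellow_channels(rgb):
    """Naive RGB -> CMYK conversion; returns (cyan, yellow) arrays in [0, 1].

    `rgb` has shape (..., 3) with values in [0, 1].
    """
    rgb = np.asarray(rgb, dtype=float)
    k = 1.0 - rgb.max(axis=-1)          # black (key) component
    denom = 1.0 - k                     # equals max(R, G, B)
    safe = np.where(denom > 0, denom, 1.0)  # avoid 0/0 on pure black pixels
    cyan = np.where(denom > 0, (1.0 - rgb[..., 0] - k) / safe, 0.0)
    yellow = np.where(denom > 0, (1.0 - rgb[..., 2] - k) / safe, 0.0)
    return cyan, yellow
```

Under this formula, saturated red pixels (blood vessels) and white pixels (glare) both have a cyan value of exactly 0, which is consistent with the behavior the segmentation step relies on when it masks vessels and glare.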

Figure 2. Block Diagram of the Automatic Grading Algorithm.

After preprocessing, the algorithm delineates or segments lissamine green staining in the image. Next, the cyan and yellow channels (of the CMYK color space) are separated out from the image and the mean cyan and mean yellow intensities over the conjunctiva are calculated. The 2 mean values are used as 2 additional image features by the algorithm to describe each image. Finally, the percent staining, mean cyan, and mean yellow features are concatenated together to form a 3-dimensional feature vector for each image. The algorithm uses these feature vectors and manual gradings from a training data set to train a random forest regression, which is used to produce the automatic grading for each image.

Segmentation of LG Staining

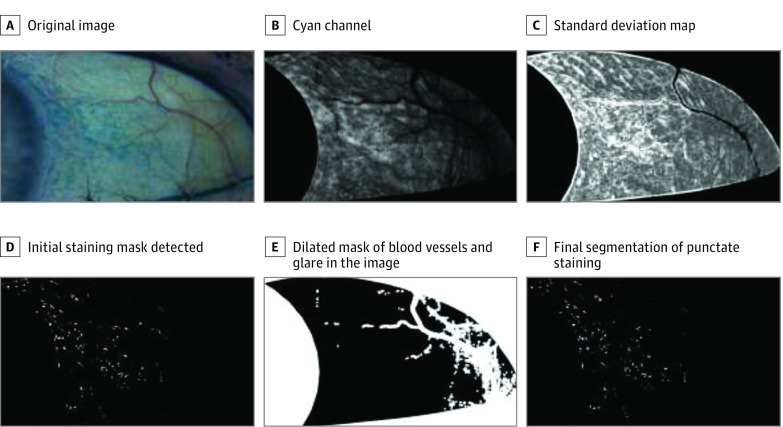

To evaluate the percentage of conjunctival staining, the algorithm first segments the punctate staining in the image, as illustrated in Figure 3. Starting from the original image (Figure 3A), we first extract the cyan channel (Figure 3B). We then apply a local standard deviation (SD) filter to the channel (Figure 3C). This filter highlights areas of high variability, which are characteristic of punctate staining, while suppressing large homogeneous regions characteristic of background staining. Because we expect staining to appear in areas of high intensity variation, we threshold the SD map at its 95th percentile intensity (Figure 3D). The algorithm features and corresponding gradings for the image in Figure 3 are reported in eTable 1 in the Supplement.
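The SD-filter-and-threshold step can be sketched with NumPy as follows. The 3 × 3 window, edge-replicating padding, population SD, and computing the 95th percentile over ROI pixels only are assumptions, since the text does not give these details (for comparison, MATLAB's `stdfilt` defaults to a 3-by-3 neighborhood). The function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_std(channel, size=3):
    """Standard deviation over a size x size neighbourhood (edge-replicated)."""
    pad = size // 2
    padded = np.pad(channel.astype(float), pad, mode="edge")
    windows = sliding_window_view(padded, (size, size))  # shape (H, W, size, size)
    return windows.std(axis=(-2, -1))


def initial_staining_mask(cyan, roi, size=3, percentile=95):
    """Keep ROI pixels whose local SD exceeds the chosen percentile of the SD map."""
    sd = local_std(cyan, size)
    threshold = np.percentile(sd[roi], percentile)
    return (sd > threshold) & roi
```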

Figure 3. Example of Staining Segmentation Method and the Intermediate Images Produced During Processing.

Shown are an original image (A), the cyan channel of the image (B), the standard deviation (SD) map (C), the initial staining mask detected (D), the dilated mask of blood vessels and glare in the image (E), and the final segmentation of the punctate staining from our algorithm (F).

In addition to LG staining, other regions can have high intensity variability and therefore be falsely detected as staining. For example, blood vessels and glare typically have zero intensity in the cyan channel, but the edges around these regions can have high intensity variability. To prevent false detection of these areas as staining, we created a mask from the pixels where the cyan channel is zero and dilated it by 5 pixels (Figure 3E). We then remove all detected staining (from the SD mask) that falls within this dilated mask, yielding the final staining segmentation mask (Figure 3F). Percent staining is then calculated by dividing the number of pixels in the segmentation by the total number of pixels in the conjunctival ROI.
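The false-positive suppression and the percent-staining calculation can be sketched as follows. The 5-pixel dilation is modeled here with an 11 × 11 square structuring element (the shape actually used is not stated; a disk would be an equally plausible choice), and percent staining is returned on a 0 to 100 scale. The function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dilate(mask, radius=5):
    """Binary dilation with a (2r+1) x (2r+1) square structuring element."""
    size = 2 * radius + 1
    padded = np.pad(mask, radius, mode="constant", constant_values=False)
    return sliding_window_view(padded, (size, size)).any(axis=(-2, -1))


def final_staining(initial_mask, cyan, roi, radius=5):
    """Drop detections near zero-cyan pixels (vessels, glare); return mask and percent."""
    exclusion = dilate(cyan == 0, radius)
    staining = initial_mask & ~exclusion & roi
    percent = 100.0 * staining.sum() / roi.sum()
    return staining, percent
```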

Feature Vector Generation and Random Forest Regression

Next, the mean cyan and mean yellow features are calculated directly from the cyan and yellow channels: for each channel, the intensity values are summed over the conjunctiva and the sum is divided by the number of conjunctival pixels. These 2 color features and the percentage staining feature are then concatenated into the 3-dimensional feature vector that describes the staining properties of a given image.
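Assembling the feature vector is a direct transcription of the description: percent staining plus the ROI means of the cyan and yellow channels. In this hypothetical helper, `cyan` and `yellow` are per-pixel channel arrays, `staining` is the final segmentation mask, and `roi` is the conjunctival ROI mask; reporting percent staining on a 0 to 100 scale is an assumption.

```python
import numpy as np


def feature_vector(staining, cyan, yellow, roi):
    """3-D feature vector: [percent staining, mean cyan, mean yellow] over the ROI."""
    n = roi.sum()                                   # number of conjunctival pixels
    percent = 100.0 * (staining & roi).sum() / n
    mean_cyan = cyan[roi].sum() / n                 # sum over conjunctiva / pixel count
    mean_yellow = yellow[roi].sum() / n
    return np.array([percent, mean_cyan, mean_yellow])
```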

Once the feature vector is calculated for an image, our algorithm applies an RF regression to estimate the grading for the image. The RF regressor is a supervised machine-learning approach that uses a training set of feature vectors and responses (in our case, the manual gradings) to build an ensemble of decision trees. Each decision tree is grown from a random sample of the training data using random subsets of the features, and makes its own prediction of the grading for an image; the final RF result is the mean of the predictions from all of the trees. The training set is created by calculating the feature vectors for a set of images whose manual gradings are known. This training process allows the algorithm to learn automatically how important each feature in the feature vector is for predicting the manual grading.
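The authors used MATLAB's `TreeBagger`; the pure NumPy sketch below is not that implementation but a minimal random forest regressor written from scratch to make the idea concrete. Each tree is grown on a bootstrap sample, each split considers a random subset of the features and minimizes the children's summed squared error, and the forest prediction is the mean over trees. The leaf size (`min_leaf=5`), the number of features sampled per split (`max_features=1`), and all names are illustrative assumptions.

```python
import numpy as np


def _grow(X, y, rng, max_features, min_leaf):
    """Grow one regression tree. A leaf is a float; a split is a tuple."""
    if len(y) < 2 * min_leaf or y.max() == y.min():
        return float(y.mean())
    best = None
    # Only a random subset of the features is considered at each split
    for f in rng.choice(X.shape[1], size=max_features, replace=False):
        values = np.unique(X[:, f])
        for thr in (values[:-1] + values[1:]) / 2.0:  # midpoints between values
            left = X[:, f] <= thr
            n_left = int(left.sum())
            if n_left < min_leaf or len(y) - n_left < min_leaf:
                continue
            # Summed squared error of the two child nodes
            sse = (((y[left] - y[left].mean()) ** 2).sum()
                   + ((y[~left] - y[~left].mean()) ** 2).sum())
            if best is None or sse < best[0]:
                best = (sse, int(f), float(thr), left)
    if best is None:
        return float(y.mean())
    _, f, thr, left = best
    return (f, thr,
            _grow(X[left], y[left], rng, max_features, min_leaf),
            _grow(X[~left], y[~left], rng, max_features, min_leaf))


def fit_forest(X, y, n_trees=100, max_features=1, min_leaf=5, seed=0):
    """Train n_trees trees, each on its own bootstrap sample of the images."""
    X, y = np.asarray(X, float), np.asarray(y, float)
    rng = np.random.default_rng(seed)
    forest = []
    for _ in range(n_trees):
        idx = rng.integers(0, len(y), size=len(y))  # sample with replacement
        forest.append(_grow(X[idx], y[idx], rng, max_features, min_leaf))
    return forest


def _predict_tree(node, x):
    while isinstance(node, tuple):
        f, thr, left, right = node
        node = left if x[f] <= thr else right
    return node


def predict_forest(forest, x):
    """Forest prediction = mean of the individual tree predictions."""
    return float(np.mean([_predict_tree(t, x) for t in forest]))
```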

Once trained, the RF regressor can be applied to the feature vector of a new image whose grading is unknown to produce an automatic grading. We used the MATLAB implementation of RF (TreeBagger) with 100 trees to create the regressor. In all of our experiments, each image was graded automatically by a regressor trained on the other 34 images in the data set. This cross-validation setup ensured that no information about the image being evaluated was provided to the regressor during training. It also provided 35 distinct training and test evaluations, which allowed us to evaluate the mean performance of the algorithm and gave us more confidence that our results were not due to an anomaly in training or to overfitting to a particular data set. For future assessments, all 35 images would be used as the default training set for the algorithm.
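The leave-1-out scheme can be written independently of the regressor. In the sketch below, `fit` and `predict` are caller-supplied functions (in the study, the random forest); a 1-nearest-neighbor regressor is included only as a hypothetical stand-in so that the example runs on its own. All names are illustrative.

```python
import numpy as np


def leave_one_out(X, y, fit, predict):
    """Grade each image with a model trained on all the *other* images."""
    X, y = np.asarray(X, float), np.asarray(y, float)
    n = len(y)
    preds = np.empty(n)
    for i in range(n):
        train = np.arange(n) != i          # hold out image i
        model = fit(X[train], y[train])
        preds[i] = predict(model, X[i])
    return preds


# Hypothetical stand-in regressor: 1-nearest neighbour on the feature vectors
def fit_1nn(X, y):
    return X, y


def predict_1nn(model, x):
    Xtr, ytr = model
    return ytr[np.argmin(((Xtr - x) ** 2).sum(axis=1))]
```

With 35 images, the loop trains 35 models, each on 34 images, which matches the 35 distinct train and test evaluations described above.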

eFigure 1 in the Supplement shows the mean importance of each feature to the regression, obtained during training. This measure was calculated by randomly swapping 1 of the features in an image's feature vector with the same feature from another image in the training data and then observing the change in grading accuracy (relative to the manual grading). If the swapped feature is important for predicting the grading, accuracy is expected to fall sharply when that feature is randomly shuffled among the input images; if the feature is unimportant, shuffling it should have no effect on the regression. Although these feature values are currently used by the algorithm only as intermediate values for calculating the overall grading, they are still output for each image and could serve as metrics for additional analysis.
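The importance measure described above is a permutation importance. A minimal sketch, assuming mean squared error as the accuracy measure and averaging over several random shuffles of each feature column, is shown below. MATLAB's `TreeBagger` can compute a related measure on out-of-bag observations; this simplified version scores the shuffled data against the same training set. The function name and defaults are hypothetical.

```python
import numpy as np


def permutation_importance(predict, X, y, n_repeats=20, seed=0):
    """Mean increase in squared error when one feature column is shuffled."""
    rng = np.random.default_rng(seed)
    X, y = np.asarray(X, float), np.asarray(y, float)
    base = np.mean((predict(X) - y) ** 2)
    importance = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        for _ in range(n_repeats):
            Xp = X.copy()
            Xp[:, j] = rng.permutation(Xp[:, j])  # swap feature j between images
            importance[j] += np.mean((predict(Xp) - y) ** 2) - base
        importance[j] /= n_repeats
    return importance
```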

Clinician Grading and Ranking of Images

Two ophthalmologists (V.Y.B. and M.M.-G.), masked to participant identity, independently graded each of the 35 conjunctival photographs using the van Bijsterveld and NEI scales. In each of these systems, observers compare the ocular surface with reference images to assign an ordinal scale number indicating staining severity. On the van Bijsterveld scale, graders rate the nasal and temporal bulbar conjunctiva each on a scale of 0 to 3. On the NEI scale, graders rate each of the 3 subareas separately on a scale of 0 to 3, for a possible total of 9 points for either the nasal or temporal conjunctiva. Both graders used the same calibrated color monitor (24-in PA241W-BK-SV; NEC Display Solutions) under identical room illumination conditions to view the images, as described previously.

The 2 graders then independently ranked printed copies of the images from least to most staining. These rankings were correlated with the algorithm values for the same images.
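Because the rankings and the algorithm values are compared with the Spearman coefficient, a short sketch of that computation may help: convert each list to ranks, giving tied values the mean of their positions, and then take the Pearson correlation of the ranks. The function names are hypothetical; `scipy.stats.spearmanr` provides an equivalent, well-tested implementation.

```python
import numpy as np


def average_ranks(values):
    """Ranks 1..n, with tied values sharing the mean of their positions."""
    values = np.asarray(values, float)
    order = np.argsort(values, kind="mergesort")
    sorted_vals = values[order]
    ranks = np.empty(len(values))
    i = 0
    while i < len(values):
        j = i
        while j + 1 < len(values) and sorted_vals[j + 1] == sorted_vals[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1  # mean of 1-based positions i..j
        i = j + 1
    return ranks


def spearman(a, b):
    """Spearman r_s = Pearson correlation of the average ranks."""
    ra, rb = average_ranks(a), average_ranks(b)
    return float(np.corrcoef(ra, rb)[0, 1])
```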

Statistical Analysis

The associations between the machine learning–based gradings and the clinical gradings were assessed with the Spearman correlation coefficient (rs), which accommodates the ordinal clinical score scale and is relatively insensitive to outliers. The following guidelines are often used to describe the strength of the relationship: none or very weak (0.00-0.10), weak (0.11-0.30), moderate (0.31-0.50), and strong (0.51-1.00). Weighted κ statistics were calculated to assess agreement between the graders' scores. Analyses were performed using Excel 2016 (Microsoft Corp) and MATLAB, version R2015b (MathWorks).
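The text does not state which weighting scheme was used for the weighted κ. The sketch below implements Cohen's weighted κ with either linear or quadratic disagreement weights for integer scores 0 to k - 1 (k = 4 for the van Bijsterveld scale, 10 for the NEI total); the function name and the choice of weights are assumptions.

```python
import numpy as np


def weighted_kappa(r1, r2, n_categories, weights="linear"):
    """Cohen's weighted kappa for two graders' ordinal scores 0..k-1."""
    r1, r2 = np.asarray(r1, int), np.asarray(r2, int)
    k = n_categories
    observed = np.zeros((k, k))
    for a, b in zip(r1, r2):
        observed[a, b] += 1
    observed /= observed.sum()
    # Expected agreement under independence: outer product of the marginals
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0))
    i, j = np.indices((k, k))
    dist = np.abs(i - j) / (k - 1)
    w = dist if weights == "linear" else dist ** 2  # disagreement weights
    return 1.0 - (w * observed).sum() / (w * expected).sum()
```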

Results

The Table summarizes the computer algorithm scores and subjective gradings of LG staining of the conjunctiva. On the van Bijsterveld scale, the median score for each grader was 2 (range, 0-3), and the median algorithm score was 1.6; on the NEI scale, the median score for each grader was 4 (range, 0-9), and the median algorithm score was 4. The assigned scores for the image set spanned the full range of severity for both the van Bijsterveld and NEI scales.

Table. Summary of 35 Computer Algorithm Scores and Subjective Gradings of Lissamine Green Staining of the Conjunctiva.

| Scale | Median (Range) |
|---|---|
| van Bijsterveld | |
| Grader 1 | 2 (1-3) |
| Grader 2 | 2 (1-3) |
| Algorithm score | 1.6 (1.1-2.6) |
| NEI Superior Staining | |
| Grader 1 | 1 (0-3) |
| Grader 2 | 1 (0-3) |
| Algorithm score | 1.71 (1.04-2.54) |
| NEI Inferior Staining | |
| Grader 1 | 2 (0-3) |
| Grader 2 | 2 (0-3) |
| Algorithm score | 0.94 (0.30-2.38) |
| NEI Lateral Staining | |
| Grader 1 | 1 (0-3) |
| Grader 2 | 1 (0-3) |
| Algorithm score | 0.88 (0.38-2.40) |
| NEI Total | |
| Grader 1 | 4 (0-9) |
| Grader 2 | 4 (0-9) |
| Algorithm score | 4 (1.9-7.4) |

Abbreviation: NEI, National Eye Institute.

Correlations Among Subjective and Automated Gradings

eTable 2 in the Supplement reports the overall correlation between the 2 graders. For the van Bijsterveld scale, the correlation was rs = 0.86; for the NEI scale, it ranged from rs = 0.84 to rs = 0.93 across regions. Weighted κ values were similar. eTable 3 in the Supplement reports the correlations between the algorithm scores and the mean of the 2 graders' scores. Each comparison was trained and tested on the gradings using leave-1-out cross-validation (ie, each image was assigned a grading by the algorithm trained on the remaining 34 images).

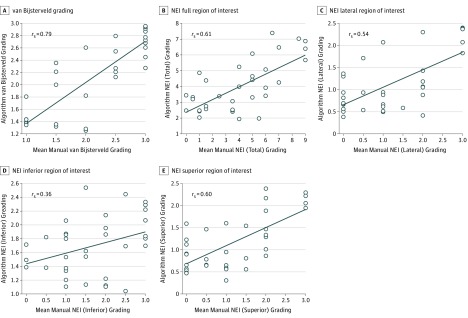

For the van Bijsterveld scale, the correlation between the mean of the 2 graders and the algorithm grading was rs = 0.79 (Figure 4). For the NEI scale, this correlation ranged from rs = 0.36 to rs = 0.61, depending on conjunctival region (Figure 4).

Figure 4. Correlation Plot Between the Mean of the Subjective Gradings and the Computer Algorithm Gradings Using All 3 Features.

Correlations for the mean van Bijsterveld (A) and National Eye Institute (NEI) (B) gradings for the full conjunctival region of interest and lateral (C), inferior (D), and superior (E) regional NEI gradings.

Ranking Analysis

eFigure 2 in the Supplement shows a ranking analysis in which the 35 images were ordered from least (1) to most (35) staining by the algorithm and by the 2 graders (without reference to their previous scores on the van Bijsterveld and NEI scales). The correlation between the 2 graders was rs = 0.97, and the correlations between the algorithm and the 2 graders were rs = 0.78 and rs = 0.83, respectively.

Discussion

We developed a semiautomated approach to quantify conjunctival LG staining from digital photographs, consisting of user-assisted selection of ROIs followed by automated quantification of LG staining. The algorithm scores correlated well with the observers' subjective gradings of the same photographs on the van Bijsterveld scale, but only moderately on the NEI scale.

To our knowledge, we are the first to describe a semiautomated system for quantifying LG staining of the conjunctiva from digital images. Others have previously described an objective image analysis technique to evaluate corneal staining and found a strong correlation of the algorithm results with clinical gradings using the Oxford and NEI scales. Developing an automated system for the quantification of LG staining of the conjunctiva may be more challenging because linear background pooling, which is not seen when fluorescein is applied to the cornea, may cause false detection of staining.

An automated system for quantifying ocular surface staining has important advantages over subjective grading scales applied either during clinical examination or to images. Variability arising from differences in observer interpretation is eliminated, and a continuous scale is more sensitive to change than an ordinal scale. Objective, automated assessments also allow for the detailed quantification and characterization of changes in ocular surface staining over time. Further refinements in the approach to automated analysis could address not only the intensity and quantity of staining, but also other staining attributes, such as recognition of distinctive patterns and spatial distribution. In addition, the feature vectors generated by this algorithm could potentially be used to perform higher-dimensional analysis of the images (eg, principal component analysis) that extends beyond a single grading for each image. Future studies should examine correlations of image analysis with clinical gradings performed at the slitlamp, assess images over time, and determine how responsive the computer algorithm is to change.

Limitations

Although the current methods and algorithm may be feasible in a research environment, further development and assessment are necessary before widespread use in a clinical setting. Using camera models other than the EOS Rebel T2i, or applying the algorithm without prior color and luminance balancing, may require retraining the algorithm on images obtained under those conditions. Use of our approach could be further facilitated by incorporating automated segmentation of the ROI, using published methods for conjunctival segmentation as a starting point.

Conclusions

To our knowledge, this is the first reported computer algorithm designed to provide an objective measurement of LG staining on digital conjunctival images. Our primary goal was to establish that a useful algorithm could be developed. Additional efforts will be directed toward broadening the applicability of the system.

Supplement

eTable 1. Algorithm Features and Corresponding Subjective Gradings for Figure 3

eTable 2. Inter-grader Correlation and Reliability for Lissamine Green Staining Scores

eTable 3. Correlation of Computer Algorithm Grading versus Subjective Gradings for Lissamine Green Staining

eFigure 1. Plot of Each Feature’s Contribution to the Regression

eFigure 2. Plot of a Comparison Between the Graders (G1 And G2) and the Algorithm (Alg) Rankings for Placing Each of the 35 Images in Rank Order

References

1. Bron AJ, Argüeso P, Irkec M, Bright FV. Clinical staining of the ocular surface: mechanisms and interpretations. Prog Retin Eye Res. 2015;44:36-61.

2. Bron AJ, Evans VE, Smith JA. Grading of corneal and conjunctival staining in the context of other dry eye tests. Cornea. 2003;22(7):640-650.

3. Savini G, Prabhawasat P, Kojima T, Grueterich M, Espana E, Goto E. The challenge of dry eye diagnosis. Clin Ophthalmol. 2008;2(1):31-55.

4. Shiboski SC, Shiboski CH, Criswell L, et al; Sjögren’s International Collaborative Clinical Alliance (SICCA) Research Groups. American College of Rheumatology classification criteria for Sjögren’s syndrome: a data-driven, expert consensus approach in the Sjögren’s International Collaborative Clinical Alliance cohort. Arthritis Care Res (Hoboken). 2012;64(4):475-487.

5. Shiboski CH, Shiboski SC, Seror R, et al; International Sjögren’s Syndrome Criteria Working Group. 2016 American College of Rheumatology/European League Against Rheumatism classification criteria for Primary Sjögren’s syndrome: a consensus and data-driven methodology involving three international patient cohorts. Arthritis Rheumatol. 2017;69(1):35-45.

6. Bunya VY, Bhosai SJ, Heidenreich AM, et al; Sjögren’s International Collaborative Clinical Alliance (SICCA) Study Group. Association of dry eye tests with extraocular signs among 3514 participants in the Sjögren’s Syndrome International Registry. Am J Ophthalmol. 2016;172:87-93.

7. Lee YC, Park CK, Kim MS, Kim JH. In vitro study for staining and toxicity of rose bengal on cultured bovine corneal endothelial cells. Cornea. 1996;15(4):376-385.

8. Manning FJ, Wehrly SR, Foulks GN. Patient tolerance and ocular surface staining characteristics of lissamine green versus rose bengal. Ophthalmology. 1995;102(12):1953-1957.

9. Nichols KK, Mitchell GL, Zadnik K. The repeatability of clinical measurements of dry eye. Cornea. 2004;23(3):272-285.

10. Berntsen DA, Mitchell GL, Nichols JJ. Reliability of grading lissamine green conjunctival staining. Cornea. 2006;25(6):695-700.

11. Rose-Nussbaumer J, Lietman TM, Shiboski CH, et al; Sjögren’s International Collaborative Clinical Alliance Research Groups. Inter-grader Agreement of the Ocular Staining Score in the Sjögren’s International Clinical Collaborative Alliance (SICCA) Registry. Am J Ophthalmol. 2015;160(6):1150-1153.e3.

12. Methodologies to diagnose and monitor dry eye disease: report of the Diagnostic Methodology Subcommittee of the International Dry Eye WorkShop (2007). Ocul Surf. 2007;5(2):108-152.

13. Design and conduct of clinical trials: report of the Clinical Trials Subcommittee of the International Dry Eye WorkShop (2007). Ocul Surf. 2007;5(2):153-162.

14. Rodriguez JD, Lane KJ, Ousler GW III, Angjeli E, Smith LM, Abelson MB. Automated grading system for evaluation of superficial punctate keratitis associated with dry eye. Invest Ophthalmol Vis Sci. 2015;56(4):2340-2347.

15. World Medical Association. World Medical Association Declaration of Helsinki: ethical principles for medical research involving human subjects. JAMA. 2013;310(20):2191-2194.

16. Bunya VY, Brainard DH, Daniel E, et al. Assessment of signs of anterior blepharitis using standardized color photographs. Cornea. 2013;32(11):1475-1482.

17. Lemp MA. Report of the National Eye Institute/Industry workshop on clinical trials in dry eyes. CLAO J. 1995;21(4):221-232.

18. Breiman L. Random forests. Mach Learn. 2001;45(1):5-32.

19. Picard RR, Cook RD. Cross-validation of regression models. J Am Stat Assoc. 1984;79(387):575-583.

20. van Bijsterveld OP. Diagnostic tests in the Sicca syndrome. Arch Ophthalmol. 1969;82(1):10-14.

21. Kendall M, Stuart A. The Advanced Theory of Statistics. Vol 2. Inference and Relationship. London, England: Charles Griffin & Co; 1973.

22. Chun YS, Yoon WB, Kim KG, Park IK. Objective assessment of corneal staining using digital image analysis. Invest Ophthalmol Vis Sci. 2014;55(12):7896-7903.

23. Sánchez Brea ML, Barreira Rodríguez N, Mosquera González A, Evans K, Pena-Verdeal H. Defining the optimal region of interest for hyperemia grading in the bulbar conjunctiva. Comput Math Methods Med. 2016;2016:3695014.

24. Bista SR, Sárándi I, Dogan S, Astvatsatourov A, Mösges R, Deserno TM. Automatic conjunctival provocation test combining Hough circle transform and self-calibrated color measurements. Proceedings of SPIE, Medical Imaging 2013: Computer-Aided Diagnosis, 2013: 86702J. http://proceedings.spiedigitallibrary.org/proceeding.aspx?articleid=1658306. Published February 28, 2013. Accessed August 10, 2017.
