Abstract

Traditional clinical examination has inherent deficiencies. Objective Structured Clinical Examination (OSCE) is considered a method of assessment that may overcome many of these deficits. OSCE is increasingly used worldwide in various medical specialities for formative and summative assessment. Although it is also used in various disciplines in our country, its use in general surgery is scarce. We report our experience of using OSCE to assess undergraduate students appearing for their pre-professional examination in general surgery. In our experience, both faculty and students considered OSCE a better assessment tool than the traditional method of examination, and it was acceptable to students and faculty alike. Conducting OSCE is feasible for the assessment of students of general surgery.

Keywords: OSCE, Surgery, Summative assessment, Undergraduate students

Introduction

For ages, students of general surgery have been assessed by conventional methods comprising long and short cases and viva voce. The shortcomings of such a traditional assessment system include, among others, poor reliability and construct validity, examiner and patient bias, and the inability to directly observe the skills being performed [1, 2]. Medical teachers have long been looking for a method of assessment that is more objective, comprehensive, consistent and free of bias, and that allows direct observation of the examinee’s skills. Objective Structured Clinical Examination (OSCE) is one such method that fulfills most of the desirable qualities of an ideal assessment tool [1–5]. Since it was first described by Harden and colleagues in 1975 and later elaborated by Harden and Gleeson [6], it has been increasingly used in various disciplines in other countries; however, its use for assessment in general surgery is limited at present.

To make the best use of this method of assessment for our students, we decided to conduct the summative pre-professional examination by OSCE. Our aim was to evaluate the experience of students and faculty with this method of assessment and to test the feasibility of conducting OSCE in general surgery.

Methods

The idea was discussed with the department faculty and a consensus on conducting OSCE was reached. Five faculty members were involved in conducting the pre-professional examination.

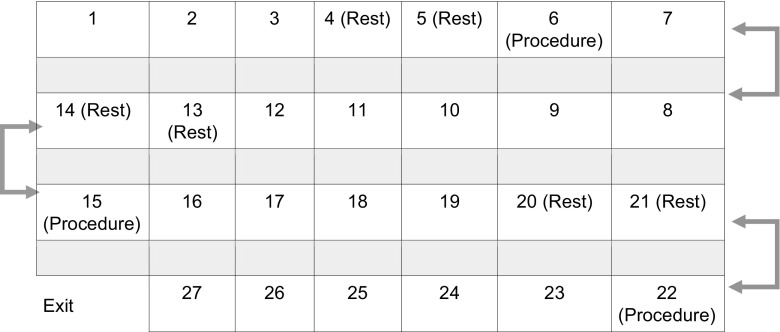

The high dependency unit (HDU) with its accompanying corridor, having a floor space of 100 m², was identified as a suitable place for conducting OSCE. The HDU offered the additional advantage of separate cubicles within the hall, which helped in creating stations that offered privacy to both the patient and the candidate. Seventy students were to appear for the examination. It was decided to create 27 stations, including procedure, question, and rest stations. Once consensus was reached on the number and types of stations, a blueprint was prepared to include questions and required competencies from varied areas of the subject (Table 1). The questions, model key answers and checklists were prepared by the faculty members involved in the examination. In the checklists prepared for the procedure stations, marks were allotted according to the weightage given to each step. The questions, model keys and checklists were peer reviewed by other faculty members for face and content validity. A station map (Fig. 1) was then prepared, and the articles required for these stations were listed.

Table 1.

Blueprint for setting questions

| Area | Examination | Interpretation | Identification | Calculation |
|---|---|---|---|---|
| Head and neck | Parotid swelling | | Specimen of multinodular goiter | Calculating GCS |
| Breast | Breast lump | Mammogram | | |
| Abdomen | Renal lump | X-ray of intestinal obstruction | Specimen of cholelithiasis, operating instruments | |
| Vascular system | Venous ulcer, peripheral vascular disease | | | |
| General | | ABG | | Fluid requirement of a postoperative patient |

Fig. 1.

Station map

The stations comprised three procedure stations, 21 question stations, and three rest stations. At the procedure stations, patients were present for clinical examination. The question stations were carefully planned to include clinical problem solving, clinical and laboratory data interpretation, radiographs, specimens, equipment, and clinical photographs representing all “must know areas” from various topics as per the blueprint. In addition, each question station following a procedure station was designed so that its questions related to the task the candidate had performed at the preceding station. We involved three different sets of patients (a total of nine patients) for the procedure stations to avoid patient fatigue and monotony, which could have compromised the examinee’s performance and assessment.

Signs were prepared for each station, and the “task to be performed” was printed clearly in bold letters on A4-size paper. A bell was used as the timing device. A time of 3 min was agreed on for each question and rest station; at procedure stations, the candidate was given 6 min. A rest station preceded each procedure station to allow adequate time for the candidate performing at the procedure station and to prevent a backlog of candidates coming from the question stations (Fig. 1).
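The station timings above imply how long each candidate spent at the stations. A worked estimate follows, assuming that every candidate visited each of the 27 stations (21 question, 3 rest and 3 procedure stations) exactly once and ignoring changeover time between stations; both are assumptions, since the circuit flow is only described by the station map:

$$
T_{\text{candidate}} = (21 + 3) \times 3\ \text{min} + 3 \times 6\ \text{min} = 72\ \text{min} + 18\ \text{min} = 90\ \text{min}
$$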

Faculty members manned the procedure stations and assessed the students using the checklist. An example of an observed task with its checklist for a procedure station is shown in Table 2. Marks for these stations were awarded on the spot. The question stations were supervised by two faculty members and three residents from the department. In addition, one resident worked as a dedicated timekeeper, and two nurses helped with other logistics.

Table 2.

Checklist for examination of an abdominal (gall bladder) lump (maximum marks 10)

| Items | Max marks | Adequate | Inadequate |
|---|---|---|---|
| Explains to the patient what he is going to do | 1 | | |
| Takes the patient’s consent for examination | 0.5 | | |
| Ensures privacy of the patient by using a screen | 1 | | |
| Exposes the abdomen from mid chest to mid thigh | 1 | | |
| Warms his hands before palpation | 0.5 | | |
| Palpates the whole abdomen gently without inflicting pain | 2 | | |
| Tests for shifting dullness and performs PR examination | 2 | | |
| Looks for supraclavicular lymph nodes | 1 | | |
| Covers the patient back and thanks him | 1 | | |
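To illustrate how a checklist such as Table 2 turns observed steps into a station score, here is a minimal Python sketch. It assumes that an item ticked “Adequate” earns its full marks and one ticked “Inadequate” earns zero; the paper does not say whether partial credit was allowed. The names `CHECKLIST`, `MAX_MARKS` and `score_station` are hypothetical and not part of the authors’ method.

```python
from typing import List, Tuple

# Hypothetical encoding of the Table 2 checklist as (item, maximum marks) pairs.
CHECKLIST: List[Tuple[str, float]] = [
    ("Explains to the patient what he is going to do", 1.0),
    ("Takes the patient's consent for examination", 0.5),
    ("Ensures privacy of the patient by using a screen", 1.0),
    ("Exposes the abdomen from mid chest to mid thigh", 1.0),
    ("Warms his hands before palpation", 0.5),
    ("Palpates the whole abdomen gently without inflicting pain", 2.0),
    ("Tests for shifting dullness and performs PR examination", 2.0),
    ("Looks for supraclavicular lymph nodes", 1.0),
    ("Covers the patient back and thanks him", 1.0),
]

# Maximum marks for the station; Table 2 states 10.
MAX_MARKS = sum((marks for _, marks in CHECKLIST), 0.0)


def score_station(adequate: List[bool]) -> float:
    """Sum the marks of the items ticked "Adequate".

    Assumption (not stated in the paper): an "Adequate" item earns its full
    marks and an "Inadequate" item earns zero, with no partial credit.
    """
    if len(adequate) != len(CHECKLIST):
        raise ValueError("expected one Adequate/Inadequate flag per checklist item")
    return sum((marks for (_, marks), ok in zip(CHECKLIST, adequate) if ok), 0.0)


if __name__ == "__main__":
    # Example candidate: does not warm hands and omits the shifting dullness/PR item.
    flags = [True] * len(CHECKLIST)
    flags[4] = False  # "Warms his hands before palpation"
    flags[6] = False  # "Tests for shifting dullness and performs PR examination"
    print(f"{score_station(flags)} / {MAX_MARKS}")  # 7.5 / 10.0
```

Encoding the weights next to the items keeps the station total checkable: the sum of the item weights must equal the stated maximum of 10 marks.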

The station setup was completed and reviewed by the faculty members an hour before the scheduled examination. Candidates were briefed on the methodology of the examination, the types of stations and how to perform at each, with special emphasis on how to move between stations. The students were then given an answer sheet.

All stations were started simultaneously, and constant vigilance over movement and timekeeping was maintained. The answer sheets were collected at the end of the circuit, and the students left through a separate door to avoid meeting those awaiting their turn for OSCE. Students were interviewed after the examination and asked to share their experience. They were asked to compare OSCE with the traditional examination, and their responses were recorded.

Results

Preparing the examination took nearly 10 h of brainstorming by five faculty members over 15 days, in addition to online discussion. Additional manpower of four residents and two nursing sisters was required for the smooth conduct of OSCE. All other resources required were the same as those for a traditional examination. Seventy students were examined for different competencies on various topics in 300 min (4.3 min per student). Student scores ranged from 45 to 90 %.
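The figure of 4.3 min per student is the total examination time divided by the number of students. It is an average throughput rather than the time each candidate spent at the stations, because all stations ran simultaneously and several candidates were in the circuit at once:

$$
\frac{300\ \text{min}}{70\ \text{students}} \approx 4.29\ \text{min} \approx 4.3\ \text{min per student}
$$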

Experience of Faculty

The faculty were satisfied with the conduct of OSCE. All involved faculty members agreed that the questions sampled a wider area of the subject than the conventional examination. They were also pleased that the examination of 70 students could be completed in a short time.

Students’ Experience

Most of the students were content with the fact that there was uniformity of questions for all the students. A student responded “I found this method of examination much better than the usual one. The best part was that all of us were assessed on the same set of questions and patients”.

They also noted that more questions from different areas were included, which gave them the opportunity to be assessed on a much wider range of topics. The students also mentioned the elimination of examiner bias, as all were examined by more than one examiner using a checklist for marking. In the words of a few other students:

I found the environment more relaxed and was not nervous during this examination.

This should replace the existing system of assessment.

Discussion

From the beginning, we had conducted the formative and summative examinations of our students in the traditional way, with short and long cases and viva voce. This may be because we were assessed in the same manner as students and, over the years as faculty, became attuned to and comfortable with this conventional way of examining. Intrigued by the shortcomings of the conventional assessment system and our students’ apprehensions over the years, we had been considering a new way of assessment. Our concerns were that assessment covered only limited areas and that examiners were overburdened, as they had to assess a large number of students in a short time. The examinee’s chance or luck in getting an “easy” or a “difficult” case, in addition to patient bias (some patients being uncooperative or poor communicators) and examiner bias, were further concerns.

We settled on OSCE because it eliminates some of the inherent shortcomings of the traditional assessment system. This assessment tool has stood the test of time for nearly 40 years since its introduction and is used across various medical specialities at undergraduate and postgraduate levels, as well as in dental and veterinary sciences [7, 8]. In various studies, OSCE has been found to be a more valid, objective, comprehensive and reliable tool than the traditional way of assessment [4, 5, 9, 10]. In addition, OSCE allows direct observation of the candidate, which is largely lacking in the conventional examination. The use of a checklist in OSCE also ensures that critical steps of a procedure are not overlooked. We could appreciate all these advantages while conducting OSCE, and our students’ affirmations further reinforced them.

In organizing OSCE, one can carefully plan in advance the types of questions and the competencies to be assessed on varied topics so that they align with the learning objectives of the course, a process called “blueprinting” [1]. This ensures the content validity of the assessment system. We performed blueprinting at the outset, once consensus on conducting OSCE was established in the department. The other concerns, primarily patient and examiner bias, were also addressed, as all the students were examined on the same set of questions and patients by different assessors. This made our students content, as most of them were happy with the uniformity of the questions and procedures on which they were assessed. Other authors have reported the same [10–12]. Assessment using the checklist at the procedure stations further eliminated examiner bias.

The scores obtained by the students in OSCE were comparable with the average scores obtained by students in preceding years in the conventional examination. This suggests that OSCE results are consistent with those of the conventional examination and supports the reliability of OSCE.

Although we were gratified by the end result of our first experience with OSCE, the journey from the inception of the idea of using OSCE for our undergraduate students to the final conduct of the examination was not straightforward. There was initial resistance from some faculty members, especially concerning the inputs needed to conduct OSCE and the uncertainty about results obtained with a relatively new tool of which we had no prior experience. It took a few sessions with faculty members to convince them to use OSCE. This may be because most faculty members are not oriented to its use, there is no formal training in assessment tools for faculty members, and academic authorities do not mandate OSCE for assessment. All this makes OSCE a less explored area, with continued hostility towards its use. In addition, the shortcomings of OSCE cited by some studies [13, 14] give nonbelievers an excuse not to try it. Thankfully, we did not have a hard time convincing our learned faculty to use OSCE, although it initially required some deliberation with them. We admit that preparing OSCE (from blueprinting to constructing checklists) required more time and labor than preparing a conventional examination. This is inherent to the use of OSCE [13, 15], and as this was our first experience, we may have taken a little more time and effort. We believe that with continued use and as we gain experience with OSCE, subsequent exercises will require less effort. In addition, the OSCE stations we prepared will enrich our question bank every year, further reducing the labor.

We are now planning to use OSCE for formative assessment of our postgraduate students. We sincerely hope that this small initial step will encourage others to use OSCE for assessment in general surgery.

Conclusion

OSCE is a comprehensive, valid, reliable and objective assessment tool that allows direct observation of procedural skills. Overall, organizing and conducting OSCE is feasible in general surgery, and it is acceptable to faculty and students alike.

Compliance with Ethical Standards

Source of Funding

None.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

1. Gupta P, Dewan P, Singh T. Objective structured clinical examination in resource poor settings. In: Singh T, Anshu, editors. Principles of assessment in medical education. New Delhi: Jaypee Brothers Medical Publishers; 2012. pp. 128–148.

2. Pandya JS, Bhagwat SM, Kini SL. Evaluation of clinical skills for first-year surgical residents using orientation programme and objective structured clinical evaluation as a tool of assessment. J Postgrad Med. 2010;56:297–300. doi: 10.4103/0022-3859.70950.

3. Nasir AA, Yusuf AS, Abdur-Rahman LO, Babalola OM, Adeyeye AA, Popoola AA, Adeniran JO. Medical students’ perception of objective structured clinical examination: a feedback for process improvement. J Surg Educ. 2014;71:701–706. doi: 10.1016/j.jsurg.2014.02.010.

4. Patrício MF, Julião M, Fareleira F, Carneiro AV. Is the OSCE a feasible tool to assess competencies in undergraduate medical education? Med Teach. 2013;35:503–514. doi: 10.3109/0142159X.2013.774330.

5. Al-Naami MY. Reliability, validity, and feasibility of the Objective Structured Clinical Examination in assessing clinical skills of final year surgical clerkship. Saudi Med J. 2008;29:1802–1807.

6. Harden RM, Gleeson FA. Assessment of clinical competence using an objective structured clinical examination (OSCE). Med Educ. 1979;13:41–54.

7. Landes CA, Hoefer S, Schuebel F, Ballon A, Teiler A, Tran A, Weber R, Walcher F, Sader R. Long-term prospective teaching effectivity of practical skills training and a first OSCE in cranio maxillofacial surgery for dental students. J Craniomaxillofac Surg. 2014;42:e97–e104. doi: 10.1016/j.jcms.2013.07.004.

8. Bark H, Shahar R. The use of the Objective Structured Clinical Examination (OSCE) in small-animal internal medicine and surgery. J Vet Med Educ. 2006;33:588–592. doi: 10.3138/jvme.33.4.588.

9. Ruesseler M, Weinlich M, Byhahn C, Müller MP, Jünger J, Marzi I, Walcher F. Increased authenticity in practical assessment using emergency case OSCE stations. Adv Health Sci Educ Theory Pract. 2010;15:81–95. doi: 10.1007/s10459-009-9173-3.

10. Bekele A, Shiferaw S, Kotisso B. First experience with OSCE as an exit exam for general surgery residency program at Addis Ababa University, School of Medicine. East Afr J Surg (online). 2012;17:112–117.

11. Piryani RM, Shankar PR, Thapa TP, Karki BM, Kafle RK, Khakurel MP, Bhandari S. Introduction of structured physical examination skills to second year undergraduate medical students. F1000Res. 2013. doi: 10.12688/f1000research.2-16.v1.

12. Huang YS, Liu M, Huang CH, Liu KM. Implementation of an OSCE at Kaohsiung Medical University. Kaohsiung J Med Sci. 2007;23:161–169. doi: 10.1016/S1607-551X(09)70392-4.

13. Turner JL, Dankoski ME. Objective structured clinical exams: a critical review. Fam Med. 2008;40:574–578.

14. Zayyan M. Objective Structured Clinical Examination: the assessment of choice. Oman Med J. 2011;26:219–222. doi: 10.5001/omj.2011.55.

15. Barman A. Critiques on the Objective Structured Clinical Examination. Ann Acad Med Singapore. 2005;34:478–482.