Abstract

The rapid extraction of facial identity and emotional expressions is critical for adaptive social interactions. These biologically relevant abilities have been associated with early neural responses on the face-sensitive N170 component. However, it remains unclear whether all facial expressions uniformly modulate the N170, and whether this effect occurs only when emotion categorization is task-relevant. To clarify this issue, we recorded high-resolution electrophysiological signals while 22 observers perceived the six basic expressions plus neutral. We used a repetition suppression paradigm, in which an adaptor was followed by a target face displaying the same identity and expression (trials of interest). We also included catch trials, to which participants had to respond, in which the target face differed from the adaptor in identity (identity-task), expression (expression-task) or both (dual-task). We extracted single-trial Repetition Suppression (stRS) responses using a data-driven spatiotemporal approach with a robust hierarchical linear model to isolate adaptation effects on the trials of interest. Regardless of the task, fear was the only expression modulating the N170, eliciting the strongest stRS responses. This observation was corroborated by distinct behavioral performance for this facial expression during the catch trials. Altogether, our data reinforce the view that fear elicits distinct neural processes in the brain, enhancing attention and facilitating the early coding of faces.

Keywords: adaptation, facial expressions, N170, single-trial repetition suppression

Introduction

Facial expressions are important visual signals that provide information about internal emotional states and affective dispositions (Jack and Schyns, 2015). The efficient and rapid categorization of these signals is thus critical for adaptive social interactions. Despite numerous studies in neuroscience and cognitive psychology, it is still debated when, where and how the brain achieves these biologically relevant tasks. The most prominent theoretical (Bruce and Young, 1986) and neuroanatomical (Haxby et al., 2000) models of face processing have posited a spatiotemporal dissociation between the processing of facial expression and identity. More precisely, at the anatomical and functional level, the decoding of facial identity takes place in a system involving the inferior occipital gyri and the lateral fusiform gyrus (including the fusiform face area), whereas facial expression categorization occurs in a separate system that includes the occipital cortex, the right posterior superior temporal sulcus (pSTS) and the amygdala (for reviews, see Calder and Young, 2005; Pessoa, 2008).

However, experimental evidence remains inconclusive as to whether this clear-cut anatomical and functional separation between identity and facial expression recognition is valid. On the one hand, some studies have reported a neural dissociation of these dimensions in brain-damaged patients (e.g. Shuttleworth et al., 1982; Bruyer et al., 1983; Tranel and Damasio, 1988; Sergent and Villemure, 1989; Haxby et al., 2000; Mattson et al., 2000; Richoz et al., 2015; Fiset et al., in press). Functional dissociations have also been found in electrophysiological studies in primates (e.g. Hasselmo et al., 1989) and in human functional neuroimaging studies (e.g. Winston et al., 2004). On the other hand, some authors have challenged this view, suggesting a complex interplay between the decoding of emotional and identity information in a network of regions within the occipital and temporal cortices. For example, several studies have shown a functional involvement of the inferior occipital gyrus in both identity and expression recognition (e.g. Adolphs, 2002; Pitcher, 2014). Similarly, the lateral fusiform gyrus seems to be involved in both tasks (e.g. Dolan et al., 2001; Williams and Mattingley, 2004; Ganel et al., 2005; Fox et al., 2009). Vuilleumier et al. (2001) demonstrated that responses in the lateral fusiform gyrus are modulated by the nature of facial expressions, with fearful faces eliciting stronger activation than neutral faces. Using intracranial local field potential recordings, Pourtois et al. (2010) also revealed an anatomical overlap between identity and emotional face processing in the right fusiform gyrus. Other functional Magnetic Resonance Imaging (fMRI) studies have also reported sensitivity to identity in the middle STS and the pSTS (Winston et al., 2004; Fox et al., 2009).
Altogether, these studies suggest the existence of a more complex and comprehensive processing system with many overlapping activities for both identity and expression recognition across those brain regions (see also D’Argembeau and Van der Linden, 2007; Todorov et al., 2007), leaving the question of an anatomical and functional dissociation still open.

Event-related potential (ERP) studies have also provided mixed results regarding the time course of identity and facial expression categorization. The face-sensitive N170 component (Bentin et al., 1996) is the most important electrophysiological signature for studying the early dynamics of face processing. The N170 is a bilateral occipito-temporal negative deflection peaking roughly 170 ms after stimulus onset, which is larger for faces than for other, non-face visual categories (Bötzel et al., 1995; George et al., 1996). Activity in this time window is associated with the early accumulation of perceptual information leading to structural encoding stages (Bentin and Deouell, 2000), such as detection (Jeffreys, 1989; Rousselet et al., 2004) and visual categorization (Eimer, 1998; Schweinberger et al., 2002; Itier et al., 2007). Many studies have convincingly shown that the N170 is also sensitive to face identity (Campanella et al., 2000; Guillaume and Tiberghien, 2001; Itier and Taylor, 2002; Jemel et al., 2005; Heisz et al., 2006; Caharel et al., 2009; Jacques and Rossion, 2009; Vizioli et al., 2010a, 2010b), as well as to other important facial information, such as gender and race (Caldara et al., 2003, 2004b). However, it remains unclear whether the N170 is also sensitive to facial expressions. Some studies have reported expression-sensitive N170 modulations (e.g. Batty and Taylor, 2003; Blau et al., 2007; Schyns et al., 2007; Morel et al., 2009; Smith, 2012; DaSilva et al., 2016), while others have not (e.g. Campanella et al., 2002; Ishai et al., 2006). A recent meta-analytic review of ERP studies investigating the sensitivity of the N170 to emotional expression (Hinojosa et al., 2015) points toward a general pattern of greater N170 responses to a subset of expressions (fear, anger, happy), with larger effect sizes for tasks in which attention to the emotional expression of the faces is indirect.
The review also raised the open question of whether each emotional expression modulates the N170 individually, or whether some expressions produce greater modulation while others produce none at all; answering this question requires paradigms that include a broad array of expressions.

Given the inconsistency of results across studies using different tasks and emotional expressions, the timing of expression processing remains unresolved: it may arise either during the structural encoding stages indexed by the N170 or at a later stage (Eimer and Holmes, 2002; Caldara et al., 2004a; Rellecke et al., 2013).

Differences in task design and methodology, as well as the use of only a subset of facial expressions, might account for some of the inconsistency in these results (e.g. Pourtois et al., 2005; Caharel et al., 2007; Langeslag et al., 2009; Righi et al., 2012; Morel et al., 2014; for a review see Hinojosa et al., 2015). Tasks are, in fact, cognitive contexts that modulate the encoding functions of high-level vision (Schyns, 1998; Kay et al., 2015). It is thus plausible that the categorization task itself drives the selective search for information in the available visual input, directing the visual system toward the features that are most useful for the task (Goffaux et al., 2003). Accordingly, it is important to investigate how emotion and identity discrimination tasks influence the early processing of faces displaying a range of emotions.

Some behavioral studies using selective attention tasks, such as the Garner interference paradigm (Garner, 1976), have also suggested that facial expression and identity are not processed independently. In this kind of paradigm, observers have to selectively attend to a relevant dimension (e.g. identity) while ignoring another, randomly varying dimension (e.g. expression). Garner interference occurs when variations in the irrelevant dimension decrease accuracy and lengthen reaction times along the relevant dimension, supporting the conclusion that the two dimensions are processed together. Garner interference has been observed with both identity and expression as the relevant dimension, suggesting that these two types of facial information are not processed entirely independently (e.g. Ganel and Goshen-Gottstein, 2004; Fisher et al., 2016). Importantly, a recent ERP study employing the Garner interference paradigm with a subset of facial expressions reported evidence for an interaction between identity and expression on the identity-sensitive N250r component (in the 220–320 ms time range; see Fisher et al., 2016), but not in the earlier N170 range.
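As a minimal illustration of how the interference described above is usually quantified, the sketch below takes it as the difference in mean reaction time between a filtering block (irrelevant dimension varies) and a baseline block (irrelevant dimension held constant). The function name and the reaction times are hypothetical, and accuracy could be treated the same way.

```python
from statistics import mean


def garner_interference(rt_baseline_ms, rt_filtering_ms):
    """Garner interference (ms): mean RT in the filtering block minus the baseline block.

    rt_baseline_ms: RTs when the irrelevant dimension is held constant.
    rt_filtering_ms: RTs when the irrelevant dimension varies randomly.
    A positive value means that variation in the irrelevant dimension slowed
    classification along the relevant dimension.
    """
    return mean(rt_filtering_ms) - mean(rt_baseline_ms)


# Hypothetical identity-classification RTs (ms); expression is the irrelevant dimension.
baseline = [512, 530, 498, 541]
filtering = [548, 571, 533, 560]
print(garner_interference(baseline, filtering))  # 32.75
```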

Since the reported N170 sensitivity to facial expressions is highly heterogeneous, more evidence is needed to clarify whether this component is sensitive to only a few emotional categories or to all basic facial expressions. In fact, some emotions are more important than others for survival and may require a prompt adaptation of behavior to salient events. In particular, expressions associated with threatening or comforting situations (e.g. fear and happy) are processed more rapidly than others (Öhman et al., 2001; Algom et al., 2004; Leppänen et al., 2007), even without conscious awareness (e.g. Smith, 2012; for a review see also Tamietto and de Gelder, 2010). In summary, it remains to be clarified whether all facial expressions of emotion uniformly modulate the N170, and whether they do so only when the categorization of emotion is task-relevant.

Repetition suppression (RS) or adaptation procedures are particularly promising for clarifying this issue. RS reflects a short-term decrease in neural activity elicited by the repetition of the same visual input, occurring selectively in the neural populations coding for this information. Given its high sensitivity, RS can be considered one of the most powerful tools for testing the coding and recognition of visual inputs during early stages of information processing (Vizioli et al., 2010b), as well as at later stages, for example during identity coding (Ramon et al., 2010). In fact, we have previously demonstrated that conventional electrophysiological paradigms might not be sensitive enough to capture subtle electrophysiological responses on the N170 when coding for the race of (upright) faces (Vizioli et al., 2010b). For identity, there is a general consensus among studies investigating the effects of repetition on the N170 component, with greater RS found for pairs of faces with the same identity (e.g. Itier and Taylor, 2002; Harris and Nakayama, 2007; Ewbank et al., 2008; Caharel et al., 2009; Ramon et al., 2010). A recent meta-analytic review of emotional expression effects on the N170 (Hinojosa et al., 2015) notes that, while most studies report this effect, its strength is modulated by the choice of reference electrode(s) during data collection and processing. As the choice of reference electrode has been found to modulate the intensity and spatial location of N170/VPP (vertex positive potential) effects (Joyce and Rossion, 2005), the ability to measure a significant N170 modulation by emotional expression may depend both on the signal-to-noise ratio afforded by the paradigm and technical setup, and on a priori expectations about where on the scalp and when such effects should manifest.
Thus, a data-driven single-trial repetition suppression (stRS) approach seems well suited to clarifying this issue, especially given that this approach revealed an effect that had otherwise been inconsistent or null with conventional analyses (see Vizioli et al., 2010b).

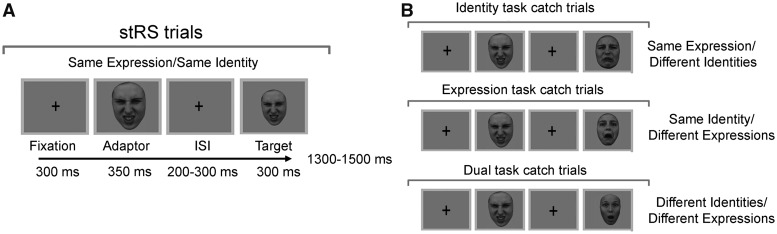

In the present study, we investigated the neural coding of all six basic facial expressions of emotion (anger, disgust, fear, happy, sad, surprise) plus neutral using electrophysiological recordings. Specifically, we investigated whether N170 amplitude modulation occurs only when emotion categorization is task-relevant. To this aim, we quantified the amount of neural adaptation triggered by the repetition of faces displaying identical facial expressions (trials of interest). High-temporal-resolution scalp EEG signals were thus recorded during the successive presentation of two faces (an “adaptor” and a “target”), always displaying the same identity and the same facial expression (i.e. trials of interest, labeled stRS trials in Figure 1A). In each sequence, the two faces displayed one of the six basic expressions or the neutral expression. Importantly, to control for task constraints, we also included catch trials to which participants had to respond (Figure 1B), in which the target face differed from the adaptor in identity (identity-task), expression (expression-task) or both (dual-task). Thus, observers were required to respond only on mismatching trials, leaving the electrophysiological trials of interest uncontaminated by behavioral responses.

Fig. 1.

Experimental procedures. Left panel (A) shows the experimental design of the trials of interest (used for single trial repetition suppression analyses, stRS). Right panel (B) shows the catch trials for the identity-, expression- and dual-task. Please note that the catch trials from identity- and expression-task were also presented during the dual-task as distractors.

We hypothesized that, if the early N170 component codes for facial expressions, RS responses would be modulated by the nature of the facial expressions, with facial identity held constant. Furthermore, if N170 coding of facial expressions occurs only when emotion categorization is task-relevant, neural differences should emerge only when attentional resources are directed toward this information (see, e.g. Pernet et al., 2007). To anticipate our findings, the single-trial data-driven spatiotemporal analysis did not reveal task-specific modulations. Instead, our data showed a general and strong neural adaptation response for the facial expression of fear, regardless of the task at hand. These observations indicate early coding of facial expressions on the N170, with fear eliciting the largest adaptation regardless of task constraints.

Materials and methods

Participants

Twenty-two (10 male) right-handed first-year students from the University of Fribourg took part in the experiment (mean age 21.5 years, range 19–32). All participants had normal or corrected-to-normal vision. They signed a consent form describing the main goals of the study and received course credits for their participation. The Ethical Committee of the Department of Psychology of the University of Fribourg approved the study reported here.

Stimuli and procedure

Seventy grayscale face images depicting 10 different identities (five female) were obtained from the Karolinska Directed Emotional Faces database (Lundqvist et al., 1998). Each identity displayed seven distinct facial expressions (anger, disgust, fear, happy, neutral, sad, surprise). After removal of external facial features, all images were normalized for low-level visual properties using the SHINE toolbox (Willenbockel et al., 2010). Face stimuli were presented at the center of the screen at a viewing distance of 75 cm.
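One step offered by SHINE is luminance matching, which equalizes mean luminance and contrast across images. The sketch below is a simplified NumPy analogue of that idea, not the SHINE code itself (which also provides histogram and Fourier-spectrum matching). The function name, the optional face mask and the use of the across-image averages as targets are assumptions made for illustration.

```python
import numpy as np


def match_mean_and_contrast(images, mask=None):
    """Give every image the same mean luminance and RMS contrast (std of pixel values).

    images: list of 2-D grayscale arrays with identical shapes.
    mask: optional boolean array selecting the pixels to normalize, e.g. the
          face region once external features are removed; other pixels are left as-is.
    Targets are the mean luminance and mean standard deviation across all images.
    Images must not be uniform inside the mask (std > 0).
    """
    if mask is None:
        mask = np.ones(images[0].shape, dtype=bool)
    means = [float(img[mask].mean()) for img in images]
    stds = [float(img[mask].std()) for img in images]
    target_mean, target_std = np.mean(means), np.mean(stds)
    matched = []
    for img, m, s in zip(images, means, stds):
        out = np.array(img, dtype=float)  # copy, so the input is not modified
        out[mask] = (out[mask] - m) / s * target_std + target_mean
        matched.append(out)
    return matched


# Usage with two synthetic "faces" that differ in brightness and contrast.
rng = np.random.default_rng(0)
faces = [rng.uniform(0, 255, size=(64, 48)) * k for k in (0.5, 1.0)]
matched = match_mean_and_contrast(faces)
```

Rescaled values can fall outside the displayable range; clipping them afterwards would slightly alter the matched statistics, which is one reason dedicated toolboxes handle this step more carefully.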

Participants sat in a dimly lit, sound-attenuated, electrically shielded booth. They performed three different tasks (expression-, identity- and dual-task) in a counterbalanced random order. All tasks followed the same RS paradigm with two types of trials: trials of interest and catch trials. Only the trials of interest were included in the EEG analysis, using the stRS approach (Vizioli et al., 2010b). These trials were identical across all tasks, with the same face stimulus presented both as adaptor and as target (Figure 1A). In catch trials, the target differed from the adaptor in its visual features (Figure 1B): in the identity-task, the target displayed a different identity with the same facial expression; in the expression-task, it displayed the same identity with a different facial expression; and in the dual-task, both identity and facial expression differed. In addition, to ensure that participants' attention was directed toward a genuine categorization of both dimensions, we also presented the catch trials from the identity-task and the expression-task as distractor trials during the dual-task. Participants had to respond only to catch trials in which both identity and expression changed, while ignoring distractor trials with a change in only one dimension (i.e. identity or expression). Participants were instructed to press the space bar whenever they saw a catch trial. No behavioral responses were expected during the trials of interest.
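The response rule described above can be summarized as a small decision function. This is an illustrative sketch: the function and argument names are hypothetical, and it covers only the trial types that were actually presented in each task.

```python
def should_respond(task, identity_changed, expression_changed):
    """Return True if pressing the space bar is the correct response.

    task: "identity", "expression" or "dual".
    identity_changed / expression_changed: whether the target differs from the
    adaptor on that dimension (both False for trials of interest).
    """
    if task == "identity":
        return identity_changed
    if task == "expression":
        return expression_changed
    if task == "dual":
        # Distractor trials change only one dimension and must be ignored.
        return identity_changed and expression_changed
    raise ValueError(f"unknown task: {task!r}")


print(should_respond("dual", True, False))  # False: a distractor trial
print(should_respond("dual", True, True))   # True: a dual-task catch trial
```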

Each of the three tasks included the same 560 trials of interest (10 identities × 7 expressions × 8 repetitions) and 70 catch trials to which participants had to respond. The dual-task additionally included 140 distractor trials, which were the catch trials from the identity- and expression-tasks. The image sequence was pseudorandomized so that each face stimulus was presented with equal probability as adaptor and as target.
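The trial counts above can be checked with a short sketch that assembles one task's trial list. A plain shuffle stands in for the authors' pseudorandomization (which additionally balanced adaptor and target roles), and catch and distractor trials are placeholders whose exact stimulus pairings are not specified here; all names are hypothetical.

```python
import random
from collections import Counter

EXPRESSIONS = ["anger", "disgust", "fear", "happy", "neutral", "sad", "surprise"]
N_IDENTITIES, N_REPETITIONS, N_CATCH = 10, 8, 70


def build_trial_list(task, seed=0):
    """Return a shuffled list of (trial_type, identity, expression) tuples for one task.

    Catch and distractor trials are placeholders (None, None).
    """
    trials = [("interest", identity, expression)
              for identity in range(N_IDENTITIES)
              for expression in EXPRESSIONS
              for _ in range(N_REPETITIONS)]
    trials += [("catch", None, None)] * N_CATCH
    if task == "dual":
        # Identity- and expression-task catch trials reused as distractors.
        trials += [("distractor", None, None)] * (2 * N_CATCH)
    random.Random(seed).shuffle(trials)
    return trials


for task in ("identity", "expression", "dual"):
    print(task, Counter(kind for kind, _, _ in build_trial_list(task)))
```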

The experiment was presented on a VIEWPixx/3D display system [resolution 1920 (H) × 1080 (V) pixels, refresh rate 120 Hz]. Each trial started with a black fixation cross (≈0.3° of visual angle) presented at the center of the screen for 300 ms. The adaptor face was then presented for 350 ms, followed by a black fixation cross for an interval of random duration (200, 250 or 300 ms). The target face was then presented for 300 ms. The offset of the target face was followed by a randomized inter-trial interval of 1300 to 1500 ms.
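At 120 Hz one frame lasts about 8.33 ms, so each presentation duration above should correspond to a whole number of frames. The hypothetical helpers below check this and derive the range of trial durations, from fixation onset to the end of the inter-trial interval, implied by the reported timings.

```python
REFRESH_HZ = 120  # VIEWPixx/3D refresh rate reported above


def ms_to_frames(duration_ms, refresh_hz=REFRESH_HZ):
    """Convert a duration to a whole number of video frames, or raise if impossible."""
    frames = duration_ms * refresh_hz / 1000
    if abs(frames - round(frames)) > 1e-9:
        raise ValueError(f"{duration_ms} ms is not a whole number of frames at {refresh_hz} Hz")
    return round(frames)


FIXATION_MS, ADAPTOR_MS, TARGET_MS = 300, 350, 300
ISI_MS = (200, 250, 300)
ITI_RANGE_MS = (1300, 1500)


def trial_duration_range_ms():
    """Shortest and longest possible trial, from fixation onset to the end of the ITI."""
    fixed = FIXATION_MS + ADAPTOR_MS + TARGET_MS
    return fixed + min(ISI_MS) + ITI_RANGE_MS[0], fixed + max(ISI_MS) + ITI_RANGE_MS[1]


print({name: ms_to_frames(ms) for name, ms in
       [("fixation", FIXATION_MS), ("adaptor", ADAPTOR_MS), ("target", TARGET_MS)]})
# {'fixation': 36, 'adaptor': 42, 'target': 36}
print([ms_to_frames(ms) for ms in ISI_MS], trial_duration_range_ms())
# [24, 30, 36] (2450, 2750)
```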

To minimize low-level adaptation, we manipulated stimulus size between adaptor and target: the adaptor face measured 11.8 cm × 15 cm (9.636° × 12.231°), whereas the target was smaller, measuring 10.7 cm × 13.5 cm (8.74° × 11.016°). The whole experiment was programmed in MATLAB using the Psychophysics Toolbox (PTB-3; Brainard, 1997; Kleiner et al., 2007). EEG triggers were sent from the VIEWPixx system and synchronized with stimulus presentation using the DataPixx Toolbox.
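The visual angles above follow from the standard formula θ = 2·arctan(s / 2d) for an object of size s centred at distance d. Applying it, the reported values (9.636° × 12.231° and 8.74° × 11.016°) are reproduced with a viewing distance of 70 cm; at the 75 cm stated in the stimulus description, the adaptor would subtend roughly 9.0° × 11.4°. The sketch makes this check easy to repeat; the function name is illustrative.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Full visual angle (degrees) subtended by a centred object of the given size."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


for label, (w, h) in {"adaptor": (11.8, 15.0), "target": (10.7, 13.5)}.items():
    for d in (70, 75):
        print(label, d, round(visual_angle_deg(w, d), 3), round(visual_angle_deg(h, d), 3))
```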

EEG recording

EEG data were acquired with a 128-channel Biosemi Active Two EEG system (BioSemi, Amsterdam, Netherlands). The recording reference and ground consisted of two active electrodes (CMS, Common Mode Sense; DRL, Driven Right Leg). The analog signal was digitized at 1024 Hz. Electrode offsets were kept within ±25 μV, with a mean offset of around 15 μV. Participants were asked to minimize blinking, head movements and swallowing.

Data analysis

We applied mixed-effects logistic regression analyses to the behavioral responses (Jaeger, 2008). We analyzed the frequency of hits (correctly identified catch trials) and false alarms (responses during the trials of interest and, in the dual-task, responses to distractor trials) using GeneralizedLinearMixedModel in MATLAB R2014b with a binomial distribution. After model fitting, hypothesis tests on the model coefficients were performed using coefTest in MATLAB. The fixed effects were the Expression of the adaptor face (7 levels: angry, disgust, fear, happy, neutral, sad, surprise), Task (3 levels: identity, expression, dual) and their interaction. Subject was modeled as a random effect. We predicted the worst performance in the dual-task, as this task makes it particularly difficult for participants to ignore the identity- and expression-task catch trials presented as distractors. To further investigate the modulation of performance by facial expression in this task, we conducted a separate mixed-effects logistic regression analysis on the false alarm rates of the dual-task.
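Before model fitting, the two dependent variables can be tabulated per Task × Expression cell, as sketched below with a hypothetical trial-record format. The model itself was fit in MATLAB; with `fitglme`, a specification such as `fitglme(tbl, 'Response ~ Expression*Task + (1|Subject)', 'Distribution', 'Binomial')` would match the fixed and random effects listed above, although the exact formula used is an assumption.

```python
from collections import defaultdict


def response_rates(trials):
    """Hit and false-alarm rates per (task, adaptor expression) cell.

    trials: iterable of dicts with keys "task", "expression" (adaptor),
    "kind" ("catch", "interest" or "distractor") and "responded" (bool).
    Hits are responses to catch trials; false alarms are responses to trials
    of interest or to dual-task distractor trials.
    """
    counts = defaultdict(lambda: {"hits": 0, "catch": 0, "fas": 0, "noise": 0})
    for trial in trials:
        cell = counts[(trial["task"], trial["expression"])]
        if trial["kind"] == "catch":
            cell["catch"] += 1
            cell["hits"] += int(trial["responded"])
        else:
            cell["noise"] += 1
            cell["fas"] += int(trial["responded"])
    nan = float("nan")
    return {key: {"hit_rate": c["hits"] / c["catch"] if c["catch"] else nan,
                  "fa_rate": c["fas"] / c["noise"] if c["noise"] else nan}
            for key, c in counts.items()}
```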

We used EEGLAB (Delorme and Makeig, 2004) for EEG pre-processing. Only trials of interest without a behavioral response (i.e. correct rejections) were analyzed (i.e. valid trials). The EEG signal was low-pass filtered at 40 Hz with a slope of 6 dB/octave and high-pass filtered at 1 Hz. Trials were segmented into epochs from −100 ms to +550 ms, and the mean of the 100 ms pre-stimulus activity was subtracted from every time point, independently for each electrode and each single trial.
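The epoching and baseline-correction steps above can be sketched in NumPy as follows. The sketch assumes the continuous signal has already been filtered; function and variable names are illustrative, and at 1024 Hz the −100 to +550 ms window spans 102 + 563 = 665 samples after rounding.

```python
import numpy as np

FS = 1024  # sampling rate (Hz), as reported for the recordings


def epoch_and_baseline(data, onsets, fs=FS, tmin=-0.100, tmax=0.550):
    """Cut epochs around stimulus onsets and remove each epoch's pre-stimulus mean.

    data: array (n_channels, n_samples) of continuous EEG that is already filtered.
    onsets: sample indices of stimulus onset (each must leave room for the epoch).
    Returns an array (n_trials, n_channels, n_times); for every trial and channel
    the mean over the baseline [tmin, 0) has been subtracted from all time points.
    """
    n_pre = int(round(-tmin * fs))   # 102 samples at 1024 Hz
    n_post = int(round(tmax * fs))   # 563 samples at 1024 Hz
    epochs = np.stack([data[:, s - n_pre:s + n_post] for s in onsets])
    baseline = epochs[:, :, :n_pre].mean(axis=2, keepdims=True)
    return epochs - baseline


rng = np.random.default_rng(0)
eeg = rng.normal(size=(128, 10_000)) + 20.0  # synthetic data with a DC offset
epochs = epoch_and_baseline(eeg, onsets=[2000, 5000, 8000])
print(epochs.shape)  # (3, 128, 665)
```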

We used the same approach as Vizioli et al. (2010b) for statistical inference on the valid trials (stRS). As RS refers to a stimulus-specific reduction of neural activity, the stRS consists of subtracting, on each trial, the activity elicited by the target face from the activity elicited by the adaptor. This data-driven approach was used primarily to map significant electrophysiological effects at all electrodes and time points, treating the response to the target as dependent on the response to the adaptor. Importantly, this analysis makes no a priori assumption about where and when to look for effects in the ERP signals (Vizioli et al., 2010b).

Both the adaptor and the target epochs of a trial were rejected from the analysis if either of them was contaminated by artifacts (e.g. blinks). During the stRS analysis, we used a variance-based algorithm (for details see Vizioli et al., 2010b) to exclude artifact-contaminated epochs on a subject-by-subject basis. The minimum number of accepted trials across all participants and tasks was 401; only trials of interest were counted. The mean number of accepted trials per expression across all tasks was 67.92 (s.d. = 3.56).
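Following the definition above (stRS = adaptor minus target, computed per trial, with both epochs discarded when either is contaminated), a minimal NumPy sketch is shown below. The published variance-based rejection algorithm is described in Vizioli et al. (2010b); the median/MAD criterion used here is a hypothetical stand-in chosen only for illustration.

```python
import numpy as np


def robust_variance_outliers(epochs, z_max=3.0):
    """Flag epochs whose variance is far above the median (hypothetical criterion).

    epochs: array (n_trials, n_channels, n_times). Uses a median/MAD z-score of
    per-epoch variance; this is a stand-in, not the published algorithm.
    """
    v = epochs.var(axis=(1, 2))
    med = np.median(v)
    mad = max(1.4826 * np.median(np.abs(v - med)), np.finfo(float).eps)
    return (v - med) / mad > z_max


def single_trial_rs(adaptor, target, z_max=3.0):
    """Single-trial RS = adaptor minus target, keeping only trials clean in both epochs.

    adaptor, target: arrays (n_trials, n_channels, n_times), paired by trial.
    Returns (strs, keep) where keep is the boolean mask of retained trials.
    """
    bad = robust_variance_outliers(adaptor, z_max) | robust_variance_outliers(target, z_max)
    keep = ~bad
    return adaptor[keep] - target[keep], keep
```

Because rejection is decided jointly, a blink in either the adaptor or the target epoch removes the whole pair, so every retained stRS value compares two clean responses from the same trial.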

We then applied a hierarchical linear model to the EEG data using functions from the LIMO EEG toolbox (Pernet et al., 2011). Statistical analyses of stRS were performed first within single subjects (level 1) and then at the group level (level 2). At the first level, we modeled the stRS amplitudes for each categorical condition independently for each participant, using a general linear model (GLM) across trials at all time points and all electrodes. At the second level, we modeled the stRS amplitudes with a GLM as follows:

$$\mathrm{stRS}(t, e) = X\,\beta(t, e) + \varepsilon(t, e)$$

where stRS is the response matrix containing the amplitudes for each time frame (t) and electrode (e), X is the design matrix coding the 21 experimental conditions [all possible combinations of expressions (7) and tasks (3)], β are the model parameters and ε is the residual error. The beta parameters (β) were estimated using ordinary least squares. Hypothesis testing was performed by conducting a 7 × 3 ANOVA (Expression: 7 levels: angry, disgust, fear, happy, neutral, sad, surprise; Task: 3 levels: identity, expression, dual) on the model coefficients (β).
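With a design matrix that has one indicator column per condition, the ordinary least squares estimate of β at each time point and electrode is simply the condition mean. The sketch below illustrates such a mass-univariate fit across trials; it is not LIMO EEG code, and the condition indexing (expression × 3 + task) is an assumption.

```python
import numpy as np


def fit_condition_glm(y, conditions, n_conditions):
    """OLS fit of y = X @ beta + error at every (time, electrode) pair.

    y: array (n_trials, n_times, n_electrodes) of stRS amplitudes.
    conditions: integer label in [0, n_conditions) for each trial, e.g.
                expression_index * 3 + task_index for the 7 x 3 = 21 cells.
    Returns beta with shape (n_conditions, n_times, n_electrodes).
    """
    conditions = np.asarray(conditions)
    n_trials = y.shape[0]
    X = np.zeros((n_trials, n_conditions))
    X[np.arange(n_trials), conditions] = 1.0   # one-hot ("cell means") design matrix
    Y = y.reshape(n_trials, -1)                # one column per time x electrode pair
    beta, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return beta.reshape((n_conditions,) + y.shape[1:])


rng = np.random.default_rng(0)
y = rng.normal(size=(210, 5, 3))     # 210 trials, 5 time points, 3 electrodes
cond = np.arange(210) % 21           # each of the 21 cells gets 10 trials
beta = fit_condition_glm(y, cond, 21)
print(beta.shape)  # (21, 5, 3)
```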

Multiple comparison correction was performed using a bootstrap spatiotemporal clustering technique (Wilcox, 2005; Maris and Oostenveld, 2007; Vizioli et al., 2010b; Lao et al., 2013). We first centered the stRS so that each condition had a mean of zero. We then bootstrapped the centered stRS by resampling subjects with replacement and performed the same linear contrasts as in the statistical testing. This procedure was repeated 500 times; each time, we recorded the maximum sum of F values within a significant cluster (cluster mass). This yielded an estimate of the cluster-mass distribution under the null hypothesis. The 95th percentile of the bootstrapped cluster-mass distribution was set as the cluster threshold at P < 0.01. The cluster mass in the original result was then compared with this threshold to assess significance after multiple comparison correction.
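To make the logic concrete, the following is a deliberately simplified version of the bootstrap cluster-mass procedure. It clusters along time only (no spatial neighbours) and uses the F statistic of a one-sample test on a single difference wave (F = t²) in place of the 7 × 3 ANOVA; the cluster-forming threshold, α and all function names are assumptions.

```python
import numpy as np


def f_values(d):
    """F = t**2 of a one-sample t test at each time point; d: (n_subjects, n_times)."""
    n = d.shape[0]
    t = d.mean(axis=0) / (d.std(axis=0, ddof=1) / np.sqrt(n))
    return t ** 2


def cluster_masses(f, f_thresh):
    """Sum of F over each run of consecutive time points with F above f_thresh."""
    masses, current = [], 0.0
    for value in f:
        if value > f_thresh:
            current += value
        elif current > 0:
            masses.append(current)
            current = 0.0
    if current > 0:
        masses.append(current)
    return masses


def bootstrap_cluster_test(d, f_thresh, n_boot=500, alpha=0.05, seed=0):
    """Cluster-mass test with a bootstrap null built from mean-centred data."""
    rng = np.random.default_rng(seed)
    centred = d - d.mean(axis=0)              # impose the null hypothesis: zero mean effect
    n = d.shape[0]
    max_mass = np.empty(n_boot)
    for b in range(n_boot):
        sample = centred[rng.integers(0, n, size=n)]   # resample subjects with replacement
        max_mass[b] = max(cluster_masses(f_values(sample), f_thresh), default=0.0)
    threshold = np.quantile(max_mass, 1 - alpha)       # e.g. 95th percentile of null masses
    observed = cluster_masses(f_values(d), f_thresh)
    return observed, threshold, [m > threshold for m in observed]


rng = np.random.default_rng(1)
diff = rng.normal(size=(22, 100))   # 22 subjects x 100 time points (synthetic)
diff[:, 40:60] += 1.5               # simulated effect
# 4.32 approximates the F(1, 21) critical value at P < 0.05 (assumed cluster-forming threshold).
observed, thr, significant = bootstrap_cluster_test(diff, f_thresh=4.32)
```

Recording only the maximum cluster mass in each bootstrap sample is what controls the family-wise error across all time points at once.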

Finally, we used a conventional RS analysis to clarify the mechanism underlying the stRS effect (i.e. whether the ERP modulations were triggered by the presentation of the adaptors, of the targets, or both). Within each significant spatial cluster, we applied a one-way ANOVA with Expression as factor (7 levels: angry, disgust, fear, happy, neutral, sad, surprise), separately for the adaptor and target conditions. Paired t tests were also performed to determine which facial expressions triggered significant N170 effects at the adaptor and target levels. Importantly, these analyses were applied to the same trials as those included in the stRS analysis.
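The post hoc comparisons can be sketched as paired t statistics of fear against each other expression, with a Bonferroni-adjusted α (α divided by the number of comparisons). P-values would come from a t distribution with n − 1 degrees of freedom (for example via `scipy.stats.ttest_rel`, which returns the same statistic); the function names here are hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic (df = n - 1) for per-subject values x and y."""
    diffs = [a - b for a, b in zip(x, y)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


def fear_vs_others(amplitudes, alpha=0.05):
    """Paired t of fear against every other expression, with a Bonferroni alpha.

    amplitudes: dict mapping expression -> list of per-subject mean amplitudes
                (same subject order in every list).
    """
    others = [e for e in amplitudes if e != "fear"]
    t_values = {e: paired_t(amplitudes["fear"], amplitudes[e]) for e in others}
    return t_values, alpha / len(others)


t_vals, alpha_corr = fear_vs_others({"fear": [1, 2, 3], "sad": [0, 0, 1], "happy": [0, 1, 1]})
print(t_vals, alpha_corr)
```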

Results

Behavioral results

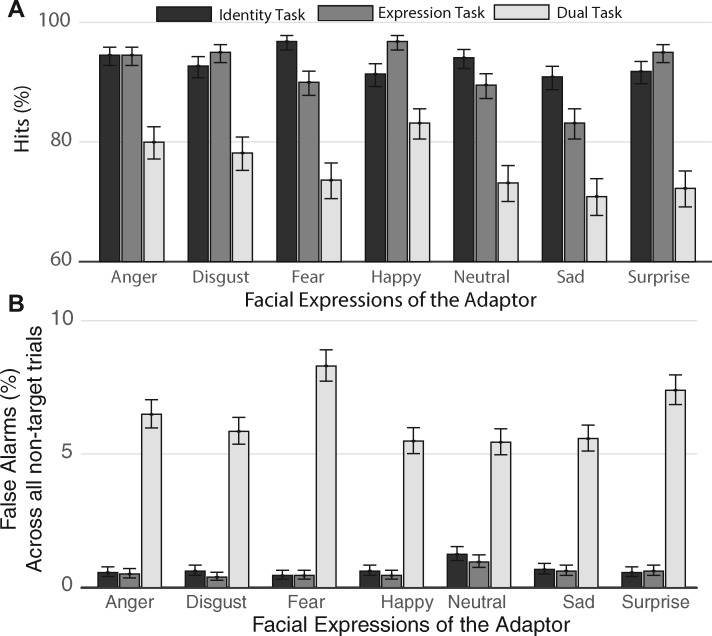

We found a significant main effect of Task for both hits and false alarms. As predicted, participants performed worse in the dual-task than in the other two tasks [hits: F(2, 441) = 114.61, P < 0.05, Figure 2A; false alarms: F(2, 441) = 355.43, P < 0.05, Figure 2B]. The main effect of Expression was also significant for hits [F(6, 441) = 5.15, P < 0.05], as was the interaction between Task and Expression for both hits and false alarms [hits: F(12, 441) = 2.47, P < 0.05; false alarms: F(12, 441) = 1.98, P = 0.024]. A separate mixed-effects logistic regression on the dual-task false alarm rate (Figure 2B) showed that participants made significantly more false alarms when adapted to fear than to sad [t(147) = 3.53, P = 0.0005, significant after Bonferroni correction], disgust [t(147) = 3.16, P = 0.0019], happy [t(147) = 3.66, P = 0.0003] and neutral [t(147) = 3.72, P = 0.0003] expressions.

Fig. 2.

Behavioral results obtained for the catch trials during the identity-, expression- and dual-task. The bar plots show the estimated coefficients from the logistic mixed model, and error bars show their 95% confidence intervals (95% CI). (A) Percentage of hits (i.e. correct responses to catch trials) for the three tasks and the six facial expressions plus neutral. (B) Percentage of false alarms (i.e. responses to trials of interest and, in the dual-task, to distractor trials) for the three tasks and the six facial expressions plus neutral. Asterisks indicate statistical significance.

Electrophysiological results

stRS results

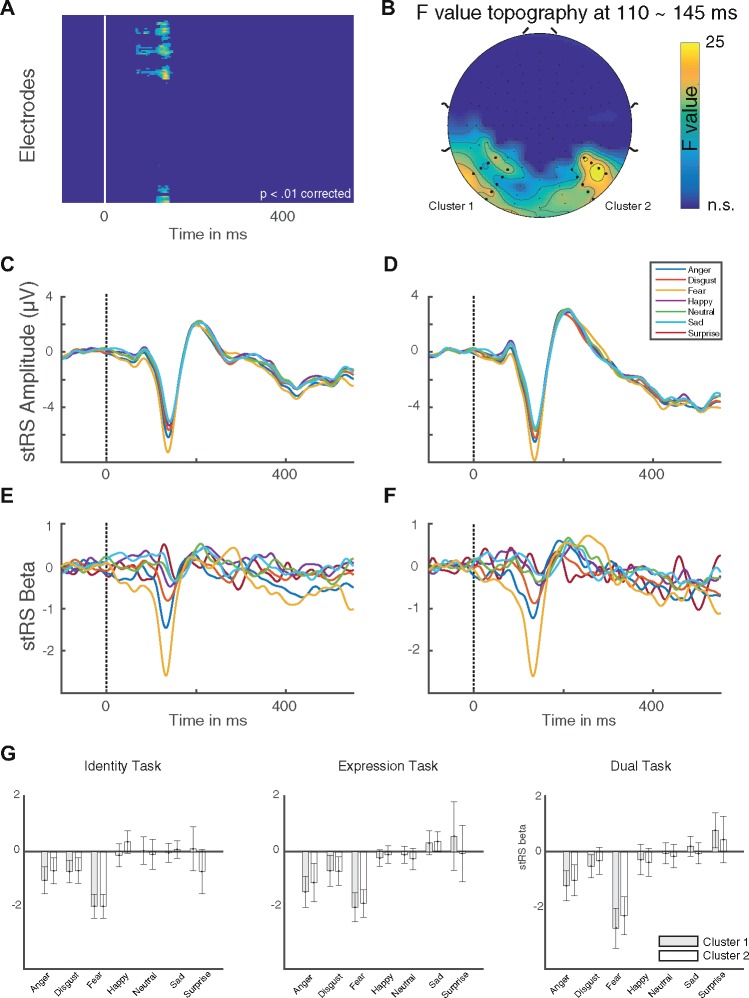

The 7 × 3 (Expression × Task) ANOVA carried out on the model coefficients (β) revealed only a significant main effect of Expression over bilateral occipito-temporal electrodes (Figure 3A and B, P < 0.05 corrected for multiple comparisons). The time courses of the stRS amplitudes and beta coefficients are shown separately for the left and right occipito-temporal clusters in Figure 3C–F. The stRS responses were time-locked to the onset of the N170 component.

Fig. 3.

N170 repetition suppression effects. (A) Significant F values across time (ms) for all electrodes after bootstrap cluster-based correction for multiple comparisons. (B) Topography of the significant F values at around 130 ms (maximum F value). (C) Average stRS in μV for the left occipito-temporal cluster (Cluster 1 in B). (D) Average stRS in μV for the right occipito-temporal cluster (Cluster 2 in B). (E) Average beta coefficients for the left occipito-temporal cluster (Cluster 1 in B). (F) Average beta coefficients for the right occipito-temporal cluster (Cluster 2 in B). All six facial expressions plus neutral are depicted (anger, blue line; disgust, red line; fear, yellow line; happy, purple line; neutral, green line; sad, light blue line; surprise, dark red line). (G) Bar plots of the mean stRS beta values within the significant whole cluster (i.e. left and right occipito-temporal electrodes) for the identity-, expression- and dual-task. Error bars show standard errors of the mean.

The F value in both clusters reached its maximum at ∼134 ms [left cluster maximum at electrode P9: F(6, 441) = 20.35; right cluster maximum at electrode P8: F(6, 441) = 30.96; P < 0.05, cluster-corrected]. Post hoc paired-sample t tests on the model coefficients, performed separately within each cluster (i.e. the left and right occipito-temporal electrodes), revealed that repetitions of pairs of faces expressing fear elicited the largest stRS responses across all tasks [βleft = –2.11, 95% CI (–2.438, –1.775); βright = –1.92, 95% CI (–2.253, –1.587); Figure 3G].

Post hoc ERP peak analysis within cluster

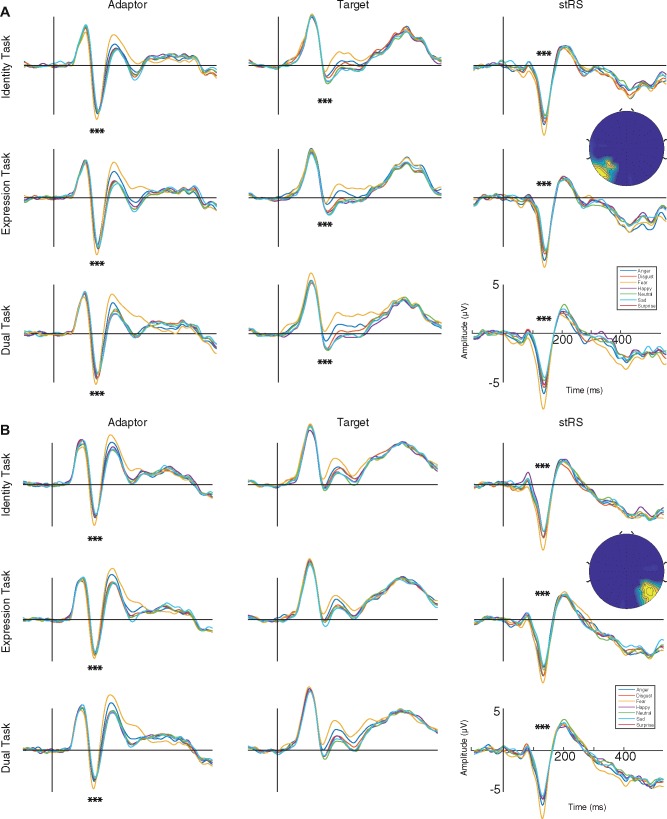

To pinpoint the contributions of the different facial expressions in the adaptor and target conditions to the stRS responses during each task, we analyzed the mean ERP amplitude within each significant spatiotemporal cluster in the 110–145 ms time window (as revealed by the data-driven stRS analysis; see Figure 4).
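The dependent measure used here, the mean amplitude over the cluster electrodes between 110 and 145 ms, can be computed as in the sketch below. The channel indices and the sample-time vector are assumptions about how the data are stored; at 1024 Hz, for instance, sample latencies would be `(np.arange(665) - 102) * 1000 / 1024` ms.

```python
import numpy as np


def window_mean_amplitude(erp, times_ms, channels, t_start=110.0, t_end=145.0):
    """Mean amplitude over a set of channels and a latency window (inclusive).

    erp: array (n_channels, n_times); times_ms: latency of each sample in ms;
    channels: indices of the cluster electrodes.
    """
    in_window = (times_ms >= t_start) & (times_ms <= t_end)
    return float(erp[np.ix_(channels, np.flatnonzero(in_window))].mean())


times = np.arange(-100, 551, dtype=float)   # 1 ms steps for readability
erp = np.tile(times, (4, 1))                # toy ERP whose value equals its latency
print(window_mean_amplitude(erp, times, [0, 2]))  # 127.5
```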

Fig. 4.

ERPs for the adaptor and target faces, and stRS elicited at the left (A, electrodes POz, POO3h, O1, Oz, OI2h, OI1h) and the right occipito-temporal clusters (B, electrodes PO4, PO6, PO8, POO10h, PO10, PPO10h) for the seven Expressions (anger, blue line; disgust, red line; fear, yellow line; happy, purple line; neutral, green line; sad, light blue line; surprise, dark red line) in each Task (identity-, expression- and dual-task). Asterisks indicate statistical significance.

We found a significant main effect of Expression within the left occipito-temporal cluster for both the adaptor [F(6, 453) = 18.48, P < 0.05] and the target [F(6, 453) = 2.23, P = 0.04]. Post hoc paired t tests revealed that fearful faces elicited significantly different responses from the other facial expressions in all tasks (see Figure 4A). At the adaptor level, fear elicited significantly larger (negative) ERP responses than all other expressions (tmax = –8.49, tmin = –3.20, P < 0.05 Bonferroni corrected). At the target level, fear elicited a significantly larger (positive) ERP amplitude than surprise only (t = –3.41, P < 0.05 Bonferroni corrected).

Within the right occipito-temporal cluster, the Expression effect was significant only for the adaptor [F(6, 453) = 11.05, P < 0.05] and not for the target [F(6, 453) = 1.05, P = 0.395] in all tasks (see Figure 4B). Post hoc paired t tests revealed that fear elicited a larger N170 than all other expressions (tmax = –6.77, tmin = –3.09, P < 0.05 Bonferroni corrected).

Discussion

We investigated the electrophysiological coding of facial expressions of emotion using an RS paradigm with three tasks directing attention toward identity, expression or both types of information (i.e. dual-task). We measured neural RS responses following the repetition of pairs of faces displaying the same identity and expression, for all basic facial expressions plus neutral. Importantly, by manipulating task demands while keeping the visual inputs identical, we aimed to assess and quantify whether facial expressions modulate information processing, especially on the early face-sensitive N170 component. We applied a logistic mixed model to the behavioral data acquired on catch trials to isolate task effects, and a spatiotemporal data-driven analysis to the stRS ERP data from the trials of interest to quantify task-specific neural modulations (Vizioli et al., 2010b; Lao et al., 2013).

Our data revealed a distinctive signature for the facial expression of fear at both the behavioral and electrophysiological levels. The presentation of fear as an adaptor induced significantly higher false alarm rates in the dual-task (i.e. incorrect responses to distractor trials), as well as stronger stRS signals over a bilateral cluster of typical face-sensitive N170 electrodes (left and right occipito-temporal sites), regardless of the task (i.e. identity, expression or both). To further clarify the mechanisms underlying these adaptation responses, we also performed conventional analyses on the average ERP in these two clusters, separately for the adaptor and target amplitudes. These analyses revealed that ERP modulations were already present for the adaptor faces over both left and right occipito-temporal electrodes, whereas significant effects for both adaptor and target faces were found only over left occipito-temporal electrodes. Although the behavioral performance and the stRS electrophysiological signals arise from two dissociable sources of information (i.e. catch trials and trials of interest) and cannot be straightforwardly compared, both results corroborate the hypothesis of distinct neural processing for fear compared with other facial expressions (see, e.g. Vuilleumier et al., 2001; Batty and Taylor, 2003; Öhman, 2005; Blau et al., 2007).

The central and novel finding of our study is the strong electrophysiological stRS response for fear. In line with many studies showing early coding of, and sensitivity to, facial expressions on the N170 component (e.g. Schyns et al., 2007), our study contributes to the debate on the N170's sensitivity to facial expressions by further challenging the view that this component is not modulated by emotional content (Münte et al., 1998; Krolak-Salmon et al., 2001; Eimer and Holmes, 2007; Fisher et al., 2016). In particular, we found an RS modulation specific to fear, which suggests that this emotional information is coded discretely at the N170 level. Indeed, RS has been interpreted as a sharpening mechanism within the neural population engaged in processing the repeated stimulus (Wiggs and Martin, 1998; Grill-Spector et al., 2006). The amount of suppression can thus be taken as an index of the ability of face-sensitive neural populations to discriminate between different visual information. Our spatiotemporal data-driven approach indicates that the expression of fear boosts the early coding of individual faces, regardless of the attentional constraints imposed by the categorization of identity, expression or both. Our findings are also consistent with an event-related fMRI study by Vuilleumier et al. (2001) investigating the role of spatial attention in modulating neural responses to fearful and neutral faces, which revealed that fear elicited stronger activations in the fusiform gyrus independently of attention. From a sociobiological perspective, the expression and perception of fear are highly advantageous for human survival (LoBue, 2010) and can thus trigger enhanced processing of perceptual events (e.g. Phelps et al., 2006). Indeed, fearful faces automatically modulate attention (Fox et al., 2001; Pourtois et al., 2005; Carlson and Mujica-Parodi, 2015), producing a greater bias than happy faces (e.g. de Haan et al., 2003; Leppänen et al., 2007) and interfering with behavioral performance by generating a specific attentional narrowing (Eastwood et al., 2003). In our experiment, we observed stronger occipito-temporal responses for fear in the N170 time window, not only in the stRS responses but also during the presentation of the adaptor and target faces. Notably, these electrophysiological modulations were observed under comparable attentional task demands across trials of interest. In line with previous reports, these observations suggest that fear might indeed automatically and uniquely enhance attention.

As reported above, the novelty of the current study lies in the stRS paradigm and analysis, which take into account the combined electrophysiological effects triggered by both the adaptor and the target. This approach does not ignore the responses to adaptor or target faces but incorporates both, and it represents a new way to tap the neural computations underlying RS (Vizioli et al., 2010b). While the stRS analysis revealed that the N170 RS response was sensitive to fearful expressions, the responses to the adaptor and target faces remained available for conventional ERP analyses, which also confirmed an effect of emotional expression on the N170. Although the precise neural computations of RS are not yet fully understood and remain debated, the adaptation elicited by two stimuli presented in rapid succession is currently interpreted as reflecting the engagement of the same neural population in processing both the adaptor and the target (Vizioli et al., 2010b; Grill-Spector et al., 2006). As expected, we found that the stRS for fearful faces was driven in both clusters by the adaptor presentation (for a similar result, see Williams et al., 2006). Since fearful faces led to greater RS over both hemispheres, the processing of fearful expressions appears to be bilateral (e.g. Alves et al., 2008).
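
To make the logic of the stRS measure more concrete, the following Python sketch computes a trial-by-trial difference between adaptor and target epochs and averages it over an electrode cluster and a time window for each adaptor expression. It is a simplified illustration under stated assumptions, not the analysis pipeline used in this study: it assumes that stRS is the adaptor response minus the target response on each trial, that epochs are stored as NumPy arrays of shape (trials, channels, time points), and the helper names (`single_trial_rs`, `cluster_window_mean`, `mean_by_condition`) are hypothetical. The spatiotemporal data-driven approach and the robust hierarchical linear model are not reproduced here.

```python
import numpy as np


def single_trial_rs(adaptor, target):
    """Return the per-trial stRS signal (hypothetical helper).

    Assumption: stRS is the adaptor response minus the target response,
    computed trial by trial at every channel and time point. Both inputs
    have shape (n_trials, n_channels, n_times).
    """
    adaptor = np.asarray(adaptor, dtype=float)
    target = np.asarray(target, dtype=float)
    if adaptor.shape != target.shape:
        raise ValueError("adaptor and target epochs must have the same shape")
    # The sign depends on component polarity: for a negative-going N170,
    # a suppressed (less negative) target yields a negative difference.
    return adaptor - target


def cluster_window_mean(strs, channels, times, window):
    """Average stRS over a channel cluster and a time window, one value per trial."""
    times = np.asarray(times, dtype=float)
    in_window = (times >= window[0]) & (times <= window[1])
    if not in_window.any():
        raise ValueError("time window contains no samples")
    # Select the cluster channels first, then the samples inside the window.
    return strs[:, channels][:, :, in_window].mean(axis=(1, 2))


def mean_by_condition(values, labels):
    """Mean of per-trial values for each condition label (e.g. adaptor expression)."""
    values = np.asarray(values, dtype=float)
    labels = np.asarray(labels)
    return {str(lab): float(values[labels == lab].mean()) for lab in np.unique(labels)}


if __name__ == "__main__":
    # Toy data (not real recordings): 4 trials, 3 channels, 5 time points (s).
    times = np.array([0.10, 0.13, 0.16, 0.19, 0.22])
    adaptor = np.full((4, 3, 5), -5.0)
    target = np.full((4, 3, 5), -3.0)
    target[2:] = -4.5  # weaker suppression on the last two trials
    labels = ["fear", "fear", "neutral", "neutral"]

    strs = single_trial_rs(adaptor, target)
    per_trial = cluster_window_mean(strs, [0, 2], times, (0.13, 0.19))
    print(mean_by_condition(per_trial, labels))  # {'fear': -2.0, 'neutral': -0.5}
```

Keeping one value per trial, rather than averaging adaptor and target ERPs first, is what allows single-trial statistics to be run on the resulting stRS values.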

The increased sensitivity we observed uniquely for fear might be related to the rapid perception of potentially threatening situations, supporting coping strategies such as fighting, freezing or rapid escape (e.g. Armony and LeDoux, 2000; Calder et al., 2001). Although all facial expressions of threat are evolutionarily significant and more likely to capture attention (the threat-superiority effect; see, e.g. Öhman et al., 2001; Smith et al., 2003; Blanchette, 2006), in a task requiring a very rapid response only fear enhanced neural modulations at the early stage of visual processing in our study. It is therefore possible that identity and emotion are processed in parallel through overlapping structures or cognitive modules, but only for certain evolutionarily important emotions such as fear, rather than being either clearly separated or generally overlapping.

While we observed an early distinct electrophysiological response to fear, other researchers have reported greater N170 modulations for other facial expressions, such as anger and happiness (e.g. Williams et al., 2006; Almeida et al., 2016; see Hinojosa et al., 2015 for a review). Theoretically, early effects for anger and happiness could be expected given their behavioral significance (see, e.g. Calvo and Beltrán, 2013). Compared with the meta-analysis by Hinojosa et al. (2015), the present results differ regarding angry and happy expressions, which may mostly be accounted for by differences in experimental design. The current study used the full set of basic facial expressions of emotion, involved a repetition paradigm and relied on a different EEG analysis approach; this combination of factors may explain the differences between the current and previous studies. For example, sensitivity to anger has been reported during orthogonal or passive viewing tasks, whereas the processing advantage for happy faces has mostly been demonstrated in long-term memory tasks. Interestingly, a recent ERP study found this advantage (compared with neutral and pride expressions, the latter also positive), but only at later stages of face processing (from 800 ms onwards; DaSilva et al., 2016). Here, by contrast, we used a facial adaptation paradigm, which might have increased the sensitivity to early neural responses, particularly during the adaptor presentations. Adaptors could in fact drive a general category-adaptation mechanism acting at a very early stage of visual processing (Eimer et al., 2010); in our study, fearful face adaptors triggered N170 modulations in both the left and right hemispheres.

Contrary to previous reports (Williams et al., 2006; Smith et al., 2003), we did not find modulations (e.g. enhanced amplitudes) of the C1 and P100 (or P1), or of later components (i.e. P2, N2). These components have been associated in particular with automatic attentional orienting (e.g. Luck et al., 2000; Taylor, 2002) towards threatening stimuli (e.g. Pourtois and Vuilleumier, 2006). However, the early enhancements of the P100 elicited by fearful expressions might be linked to differences in low-level properties across stimuli and facial expressions, such as luminance and brightness (Puce et al., 2013). In our study, all stimuli were normalized for low-level visual properties, and such a control might explain the absence of modulations on this component. The P2, N2 and C1 components have been related to both automatic task evaluation and controlled cognitive processing in a wide variety of tasks. For instance, modulations of these components have been reported in subliminal/masking or shifting paradigms, in which emotional faces were processed without awareness (Eimer and Kiss, 2008; Pegna et al., 2008), or when covert attention was shifted towards emotional face cues (e.g. during a bar-probe task; Pourtois et al., 2004). Similar results have been observed over occipito-temporal regions during visual search for emotional stimuli (i.e. a negative deflection on the N2pc; Luck and Hillyard, 1994), and have been related to attentional shifts (e.g. Eimer and Kiss, 2008). Such modulations occurred at about the same latency as a prefrontal positivity (the P2a), a neural index of attentional capture and stimulus evaluation (e.g. Kanske et al., 2011).

Following this logic, the lack of modulations on these components in our study might relate to a decrease in the allocation of attentional resources, since no active behavioral response was required on the trials used to extract the neural adaptation signals (i.e. the trials of interest). Therefore, although these studies are broadly consistent with the current findings, in that fearful expressions generally boost early electrophysiological effects, differences in paradigm may once again underlie the absence of an effect on these components.
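
The low-level stimulus control mentioned above can be illustrated with a minimal Python sketch that rescales a set of grayscale images to a common mean luminance and RMS contrast (the standard deviation of pixel intensities), using the set averages as targets. This is only one generic way of equating such properties; the exact normalization procedure applied to the stimuli is not described in this section, and the function name `match_mean_and_contrast` is hypothetical.

```python
import numpy as np


def match_mean_and_contrast(images, target_mean=None, target_std=None):
    """Rescale grayscale images to a common mean luminance and RMS contrast.

    images: sequence of 2-D arrays of pixel intensities (e.g. in [0, 1]).
    By default the targets are the averages across the whole set.
    """
    imgs = [np.asarray(im, dtype=float) for im in images]
    means = np.array([im.mean() for im in imgs])
    stds = np.array([im.std() for im in imgs])
    if np.any(stds == 0):
        raise ValueError("cannot rescale an image with zero contrast")
    if target_mean is None:
        target_mean = means.mean()
    if target_std is None:
        target_std = stds.mean()
    # z-score each image, then map it onto the common mean and contrast.
    # Values may leave the displayable range; clipping them afterwards
    # would slightly break the match, so the output range should be checked.
    return [(im - m) / s * target_std + target_mean
            for im, m, s in zip(imgs, means, stds)]


if __name__ == "__main__":
    a = np.array([[0.2, 0.4], [0.6, 0.8]])
    b = np.array([[0.1, 0.1], [0.5, 0.9]])
    out = match_mean_and_contrast([a, b])
    print([round(float(im.mean()), 3) for im in out],
          [round(float(im.std()), 3) for im in out])
```

Matching only the first two moments of the intensity distribution leaves other properties, such as the spatial frequency spectrum, uncontrolled.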

Regarding the emotional state and intentions conveyed by facial expressions, an early posterior negativity (occipito-temporal EPN; 150–300 ms) has also been observed, in particular for angry compared with neutral and happy faces (Sato et al., 2001; Liddell et al., 2004; Schupp et al., 2004; Williams et al., 2006; Holmes et al., 2008). Importantly, these effects have been shown to be more pronounced in socially anxious participants (Moser et al., 2008; Sewell et al., 2008) and in participants undergoing socially mediated aversive anticipation (Wieser et al., 2010; Bublatzky and Schupp, 2012). In addition, stimulus arousal contributes strongly to the EPN, since highly arousing pictures (mutilations and erotica) elicit larger EPNs than less arousing pictures, for both unpleasant and pleasant categories (Schupp et al., 2004). The absence of an EPN effect in our study could therefore simply reflect the fact that participants were not selected according to their anxiety levels and that only face stimuli were used.

In contrast to the task-independent electrophysiological results, at the behavioral level participants made more false alarms on catch trials with fearful adaptors than on those with disgusted, happy, sad and neutral adaptors, but only during the dual-task. As expected, this task was cognitively more demanding (Pashler, 1992, 1994). To engage participants in a genuine categorization of a simultaneous violation of both identity and expression, the dual-task also contained catch trials with a single violation of either identity or expression (i.e. identity-only and expression-only catch trials), which participants were required to ignore.¹ At first sight, this suggests that fearful adaptors were more distracting. However, this result might also indirectly support the hypothesis of an activation of the ‘fear module’, eliciting greater reactivity (i.e. more false alarms) in our participants. Alternatively, fearful faces may have influenced performance by decreasing participants' ability to disengage attention from these faces (Fox et al., 2002). Indeed, it is well established that fearful expressions play an important role in the allocation and capture of attentional resources (Fenske and Eastwood, 2003).
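
The false alarm measure discussed above can be made explicit with a short Python sketch that tallies, for each adaptor expression, the proportion of catch trials to be ignored on which a response was nevertheless given. The trial format (dictionaries with the hypothetical keys `adaptor`, `go` and `responded`) is an assumption for illustration. The sketch computes descriptive rates only; it does not reproduce the logistic mixed model applied to the behavioral data (see Jaeger, 2008, for this class of models).

```python
from collections import defaultdict


def false_alarm_rates(trials):
    """Proportion of no-go catch trials with a response, per adaptor expression.

    Each trial is a dict with hypothetical keys:
      'adaptor'   -> expression label of the adaptor face (e.g. 'fear')
      'go'        -> True if the trial required a response
      'responded' -> True if the participant responded
    """
    counts = defaultdict(lambda: [0, 0])  # label -> [false alarms, no-go trials]
    for trial in trials:
        if trial["go"]:
            continue  # a response here is a hit, not a false alarm
        tally = counts[trial["adaptor"]]
        tally[1] += 1
        if trial["responded"]:
            tally[0] += 1
    return {label: fa / n for label, (fa, n) in counts.items()}


if __name__ == "__main__":
    # Toy dual-task data (not real results): identity-only or expression-only
    # violations are no-go trials that participants should ignore.
    trials = (
        [{"adaptor": "fear", "go": False, "responded": r} for r in (True, True, False, False)]
        + [{"adaptor": "neutral", "go": False, "responded": r} for r in (True, False, False, False)]
        + [{"adaptor": "fear", "go": True, "responded": True}]
    )
    print(false_alarm_rates(trials))  # {'fear': 0.5, 'neutral': 0.25}
```

Go trials are skipped because a response on them is a correct detection, so only trials that should be ignored enter the denominator.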

Further studies are needed to clarify this observation, using either an active task on all trials (not only on catch trials) or a design involving the repetition of pairs of faces with different identities but the same expression under similar task constraints. Such studies should also clarify the role of a potential interaction between identity and expression information during the first stages of face processing, as recently reported by Fisher et al. (2016). It must be noted again that the use of different paradigms (i.e. Garner vs. RS) could account for the differences in time course between the current study and this earlier one. Overall, the current pattern of results positions the facial expression of fear as one of the most significant for human adaptive functioning.

Conclusions

Our data show that the facial expression of fear influences early neural responses during face processing, regardless of whether attention is directed towards identity, expression or both. Fear elicited stronger stRS responses than any other facial expression of emotion, an electrophysiological effect rooted in both the adaptor and the target faces over bilateral occipito-temporal regions. Fear also modulated categorization efficiency in the most demanding task, the dual-task. These findings suggest that the expression of fear triggers distinct mechanisms, enhancing attention and leading to better coding of facial information (i.e. greater adaptation). Such a unique role for fear echoes the modern theory of threat detection, which highlights the evolutionary significance of communicating this expression.

Funding

This publication was supported by the Conseil de l'Université de Fribourg.

Conflict of interest. None declared.

Footnotes

Please note that these trials were discarded from the electrophysiological data analyses.

References

- Adolphs R. (2002). Neural systems for recognizing emotion. Current Opinion in Neurobiology 12(2),169–77.

- Algom D., Chajut E., Lev S. (2004). A rational look at the emotional Stroop phenomenon: a generic slowdown, not a Stroop effect. Journal of Experimental Psychology: General 133(3),323–38.

- Almeida P.R., Ferreira-Santos F., Chaves P.L., Paiva T.O., Barbosa F., Marques-Teixeira J. (2016). Perceived arousal of facial expressions of emotion modulates the N170, regardless of emotional category: time domain and time–frequency dynamics. International Journal of Psychophysiology 99, 48–56.

- Alves N.T., Fukusima S.S., Aznar-Casanova J.A. (2008). Models of brain asymmetry in emotional processing. Psychology & Neuroscience 1(1),63–6.

- Armony J.L., LeDoux J.E. (2000). How danger is encoded: towards a system, cellular and computational understanding of cognitive-emotional interactions. In: Gazzaniga M.S. (ed). The New Cognitive Neurosciences. 2nd ed. Cambridge: The MIT Press; p. 1067–80.

- Batty M., Taylor M.J. (2003). Early processing of the six basic facial emotional expressions. Cognitive Brain Research 17(3),613–20.

- Bentin S., Allison T., Puce A., Perez E., McCarthy G. (1996). Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience 8(6),551–65.

- Bentin S., Deouell L.Y. (2000). Structural encoding and identification in face processing: ERP evidence for separate mechanisms. Cognitive Neuropsychology 17(1-3),35–55.

- Blanchette I. (2006). Snakes, spiders, guns, and syringes: how specific are evolutionary constraints on the detection of threatening stimuli? The Quarterly Journal of Experimental Psychology 59(8),1484–504.

- Blau V.C., Maurer U., Tottenham N., McCandliss B.D. (2007). The face-specific N170 component is modulated by emotional facial expression. Behavioral and Brain Functions 3, 7.

- Bötzel K., Schulze S., Stodieck S.R. (1995). Scalp topography and analysis of intracranial sources of face-evoked potentials. Experimental Brain Research 104, 135–43.

- Brainard D.H. (1997). The psychophysics toolbox. Spatial Vision 10(4),433–6.

- Bruce V., Young A. (1986). Understanding face recognition. British Journal of Psychology 77(3),305–27.

- Bruyer R., Laterre C., Seron X., et al. (1983). A case of prosopagnosia with some preserved covert remembrance of familiar faces. Brain and Cognition 2(3),257–84.

- Bublatzky F., Schupp H.T. (2012). Pictures cueing threat: brain dynamics in viewing explicitly instructed danger cues. Social Cognitive and Affective Neuroscience 7(6),611–22.

- Caharel S., Bernard C., Thibaut F., et al. (2007). The effects of familiarity and emotional expression on face processing examined by ERPs in patients with schizophrenia. Schizophrenia Research 95(1-3),186–96.

- Caharel S., d’Arripe O., Ramon M., Jacques C., Rossion B. (2009). Early adaptation to repeated unfamiliar faces across viewpoint changes in the right hemisphere: evidence from the N170 ERP component. Neuropsychologia 47(3),639–43.

- Caldara R., Thut G., Servoir P., Michel C., Bovet P., Renault B. (2003). Face versus non-face object perception and the “other-race” effect: a spatio-temporal ERP study. Clinical Neurophysiology 114(3),515–28.

- Caldara R., Jermann F., Lopez G., Van der Linden M. (2004). Is the N400 modality specific? A language and face processing study. Neuroreport 15(17),2589–93.

- Caldara R., Rossion B., Bovet P., Hauert C.-A. (2004). Event-related potentials and time course of the “other-race” face classification advantage. Neuroreport 15(5),905–10.

- Calder A.J., Burton A.M., Miller P., Young A.W., Akamatsu S. (2001). A principal component analysis of facial expressions. Vision Research 41(9),1179–208.

- Calder A.J., Young A.W. (2005). Understanding the recognition of facial identity and facial expression. Nature Reviews Neuroscience 6(8),641–51.

- Calvo M.G., Beltrán D. (2013). Recognition advantage of happy faces: tracing the neurocognitive processes. Neuropsychologia 51(11),2051–61.

- Campanella S., Hanoteau C., Depy D., et al. (2000). Right N170 modulation in a face discrimination task: an account for categorical perception of familiar faces. Psychophysiology 37(6),796–806.

- Campanella S., Quinet P., Bruyer R., Crommelinck M., Guerit J.-M. (2002). Categorical perception of happiness and fear facial expressions: an ERP study. Journal of Cognitive Neuroscience 14(2),210–27.

- Carlson J.M., Mujica-Parodi L.R. (2015). Facilitated attentional orienting and delayed disengagement to conscious and nonconscious fearful faces. Journal of Nonverbal Behavior 39(1),69–77.

- D’Argembeau A., Van der Linden M. (2007). Facial expressions of emotion influence memory for facial identity in an automatic way. Emotion 7, 507–15.

- DaSilva E.B., Crager K., Puce A. (2016). On dissociating the neural time course of the processing of positive emotions. Neuropsychologia 83, 1–15.

- de Haan M., Johnson M.H., Halit H. (2003). Development of face-sensitive event-related potentials during infancy: a review. International Journal of Psychophysiology 51(1),45–58.

- Delorme A., Makeig S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods 134(1),9–21.

- Dolan R.J., Morris J.S., de Gelder B. (2001). Crossmodal binding of fear in voice and face. Proceedings of the National Academy of Sciences 98(17),10006–10.

- Eastwood J.D., Smilek D., Merikle P.M. (2003). Negative facial expression captures attention and disrupts performance. Perception & Psychophysics 65(3),352–8.

- Eimer M. (1998). Does the face-specific N170 component reflect the activity of a specialized eye processor? Neuroreport 9(13),2945–8.

- Eimer M., Holmes A. (2002). An ERP study on the time course of emotional face processing. Neuroreport 13(4),427–31.

- Eimer M., Holmes A. (2007). Event-related brain potential correlates of emotional face processing. Neuropsychologia 45(1),15–31.

- Eimer M., Kiss M. (2008). Involuntary attentional capture is determined by task set: evidence from event-related brain potentials. Journal of Cognitive Neuroscience 20(8),1423–33.

- Eimer M., Kiss M., Nicholas S. (2010). Response profile of the face-sensitive N170 component: a rapid adaptation study. Cerebral Cortex 20(10),2442–52.

- Ewbank M.P., Smith W.A.P., Hancock E.R., Andrews T.J. (2008). The M170 reflects a viewpoint-dependent representation for both familiar and unfamiliar faces. Cerebral Cortex 18(2),364–70.

- Fenske M.J., Eastwood J.D. (2003). Modulation of focused attention by faces expressing emotion: evidence from flanker tasks. Emotion 3(4),327–43.

- Fiset D., Blais C., Royer J., Richoz A.-R., Dugas G., Caldara R. (2017). Mapping the impairment in decoding static facial expressions of emotion in prosopagnosia. Social Cognitive and Affective Neuroscience 12(8),1334–41.

- Fisher K., Towler J., Eimer M. (2016). Facial identity and facial expression are initially integrated at visual perceptual stages of face processing. Neuropsychologia 80, 115–25.

- Fox C., Moon S., Iaria G., Barton J. (2009). The correlates of subjective perception of identity and expression in the face network: an fMRI adaptation study. Neuroimage 44(2),569–80.

- Fox E., Russo R., Dutton K. (2002). Attentional bias for threat: evidence for delayed disengagement from emotional faces. Cognition and Emotion 16, 355–79.

- Fox E., Russo R., Bowles R., Dutton K. (2001). Do threatening stimuli draw or hold visual attention in subclinical anxiety? Journal of Experimental Psychology: General 130(4),681–700.

- Ganel T., Goshen-Gottstein Y. (2004). Effects of familiarity on the perceptual integrality of the identity and expression of faces: the parallel-route hypothesis revisited. Journal of Experimental Psychology: Human Perception & Performance 30(3),583–97.

- Ganel T., Valyear K.F., Goshen-Gottstein Y., Goodale M.A. (2005). The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia 43(11),1645–54.

- Garner W.R. (1976). Interaction of stimulus dimensions in concept and choice processes. Cognitive Psychology 8(1),98–123.

- George N., Evans J., Fiori N., Davidoff J., Renault B. (1996). Brain events related to normal and moderately scrambled faces. Cognitive Brain Research 4(2),65–76.

- Goffaux V., Jemel B., Jacques C., Rossion B., Schyns P.G. (2003). ERP evidence for task modulations on face perceptual processing at different spatial scales. Cognitive Science 27(2),313–25.

- Grill-Spector K., Henson R., Martin A. (2006). Repetition and the brain: neural models of stimulus-specific effects. Trends in Cognitive Sciences 10(1),14–23.

- Guillaume F., Tiberghien G. (2001). An event-related potential study of contextual modifications in a face recognition task. Neuroreport 12(6),1209–16.

- Harris A., Nakayama K. (2007). Rapid face-selective adaptation of an early extrastriate component in MEG. Cerebral Cortex 17(1),63–70.

- Hasselmo M.E., Rolls E.T., Baylis G.C. (1989). The role of expression and identity in the face-selective responses of neurons in the temporal visual cortex of the monkey. Behavioural Brain Research 32(3),203–18.

- Haxby J.V., Hoffman E.A., Gobbini M.I. (2000). The distributed human neural system for face perception. Trends in Cognitive Sciences 4(6),223–33.

- Heisz J.J., Watter S., Shedden J.M. (2006). Automatic face identity encoding at the N170. Vision Research 46(28),4604–14.

- Hinojosa J.A., Mercado F., Carretié L. (2015). N170 sensitivity to facial expression: a meta-analysis. Neuroscience & Biobehavioral Reviews 55, 498–509.

- Holmes A., Nielsen M.K., Green S. (2008). Effects of anxiety on the processing of fearful and happy faces: an event-related potential study. Biological Psychology 77(2),159–73.

- Ishai A., Bikle P.C., Ungerleider L.G. (2006). Temporal dynamics of face repetition suppression. Brain Research Bulletin 70(4-6),289–95.

- Itier R.J., Alain C., Sedore K., McIntosh A.R. (2007). Early face processing specificity: it’s in the eyes! Journal of Cognitive Neuroscience 19(11),1815–26.

- Itier R.J., Taylor M.J. (2002). Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: a repetition study using ERPs. Neuroimage 15(2),353–72.

- Jack R.E., Schyns P.G. (2015). The human face as a dynamic tool for social communication. Current Biology 25(14),R621–34.

- Jacques C., Rossion B. (2009). The initial representation of individual faces in the right occipito-temporal cortex is holistic: electrophysiological evidence from the composite face illusion. Journal of Vision 9(6),8, 1–16.

- Jaeger T.F. (2008). Categorical data analysis: away from ANOVAs (transformation or not) and towards logit mixed models. Journal of Memory and Language 59(4),434–46.

- Jeffreys D.A. (1989). A face-responsive potential recorded from the human scalp. Experimental Brain Research 78(1),193–202.

- Jemel B., Pisani M., Rousselle L., Crommelinck M., Bruyer R. (2005). Exploring the functional architecture of person recognition system with event-related potentials in a within- and cross-domain self-priming of faces. Neuropsychologia 43(14),2024–40.

- Joyce C., Rossion B. (2005). The face-sensitive N170 and VPP components manifest the same brain processes: the effect of reference electrode site. Clinical Neurophysiology 116(11),2613–31.

- Kanske P., Plitschka J., Kotz S.A. (2011). Attentional orienting towards emotion: P2 and N400 ERP effects. Neuropsychologia 49(11),3121–9.

- Kay K.N., Weiner K.S., Grill-Spector K. (2015). Attention reduces spatial uncertainty in human ventral temporal cortex. Current Biology 25(5),595–600.

- Kleiner M., Brainard D., Pelli D., Ingling A., Murray R., Broussard C. (2007). What's new in Psychtoolbox-3. Perception 36, 1–16.

- Krolak-Salmon P., Fischer C., Vighetto A., Mauguiere F. (2001). Processing of facial emotional expression: spatio-temporal data as assessed by scalp event-related potentials. European Journal of Neuroscience 13(5),987–94.

- Langeslag S.J.E., Morgan H.M., Jackson M.C., Linden D.E.J., Van Strien J.W. (2009). Electrophysiological correlates of improved short-term memory for emotional faces. Neuropsychologia 47, 887–96.

- Lao J., Vizioli L., Caldara R. (2013). Culture modulates the temporal dynamics of global/local processing. Culture and Brain 1(2-4),158–74.

- Leppänen J.M., Moulson M.C., Vogel-Farley V.K., Nelson C.A. (2007). An ERP study of emotional face processing in the adult and infant brain. Child Development 78(1),232–45.

- Liddell B.J., Williams L.M., Rathjen J., Shevrin H., Gordon E. (2004). A temporal dissociation of subliminal versus supraliminal fear perception: an event-related potential study. Journal of Cognitive Neuroscience 16(3),479–86.

- LoBue V. (2010). What's so scary about needles and knives? Examining the role of experience in threat detection. Cognition and Emotion 24(1),180–7.

- Luck S.J., Hillyard S.A. (1994). Spatial filtering during visual search: evidence from human electrophysiology. Journal of Experimental Psychology: Human Perception and Performance 20, 1000–14.

- Luck S.J., Woodman G.F., Vogel E.K. (2000). Event-related potential studies of attention. Trends in Cognitive Sciences 4(11),432–40.

- Lundqvist D., Flykt A., Öhman A. (1998). The Karolinska Directed Emotional Faces (KDEF). CD ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet, ISBN 91-630-7164-9.

- Maris E., Oostenveld R. (2007). Nonparametric statistical testing of EEG- and MEG-data. Journal of Neuroscience Methods 164(1),177–90. [DOI] [PubMed] [Google Scholar]

- Mattson A., Levin H., Grafman J. (2000). A case of prosopagnosia following moderate closed head injury with left hemisphere focal lesion. Cortex 36(1),125–37. [DOI] [PubMed] [Google Scholar]

- Morel S., George N., Foucher A., Chammat M., Dubal S. (2014). ERP evidence for an early emotional bias towards happy faces in trait anxiety. Biological Psychology 99, 183–92. [DOI] [PubMed] [Google Scholar]

- Morel S., Ponz A., Mercier M., Vuilleumier P., George N. (2009). EEG-MEG evidence for early differential repetition effects for fearful, happy and neutral faces. Brain Research 1254, 84–98. [DOI] [PubMed] [Google Scholar]

- Moser J.S., Huppert J.D., Duval E., Simons R.F. (2008). Face processing biases in social anxiety: an electrophysiological study. Biological Psychology 78(1),93–103. [DOI] [PubMed] [Google Scholar]

- Münte T.F., Brack M., Grootheer O., Wieringa B.M., Matzke M., Johannes S. (1998). Brain potentials reveal the timing of face identity and expression judgments. Neuroscience Research 30(1),25–34. [DOI] [PubMed] [Google Scholar]

- Öhman A. (2005). The role of the amygdala in human fear: automatic detection of threat. Psychoneuroendocrinology 30(10),953–8. [DOI] [PubMed] [Google Scholar]

- Öhman A., Flykt A., Esteves F. (2001). Emotion drives attention: detecting the snake in the grass. Journal of Experimental Psychology: General 130(3),466–78. [DOI] [PubMed] [Google Scholar]

- Pashler H. (1992). Attentional limitations in doing two tasks at the same time. Current Directions in Psychological Science 1(2),44–8. [Google Scholar]

- Pashler H. (1994). Dual-task interference in simple tasks: data and theory. Psychological Bulletin 116(2),220–44. [DOI] [PubMed] [Google Scholar]

- Pegna A.J., Landis T., Khateb A. (2008). Electrophysiological evidence for early non-conscious processing of fearful facial expressions. International Journal of Psychophysiology 70(2), 127–36.

- Pernet C., Schyns P.G., Demonet J.-F. (2007). Specific, selective or preferential: comments on category specificity in neuroimaging. Neuroimage 35(3), 991–7.

- Pernet C.R., Chauveau N., Gaspar C., Rousselet G.A. (2011). LIMO EEG: a toolbox for hierarchical LInear MOdeling of ElectroEncephaloGraphic data. Computational Intelligence and Neuroscience 2011, 831409.

- Pessoa L. (2008). On the relationship between emotion and cognition. Nature Reviews Neuroscience 9(2), 148–58.

- Phelps E.A., Ling S., Carrasco M. (2006). Emotion facilitates perception and potentiates the perceptual benefits of attention. Psychological Science 17(4), 292–9.

- Pitcher D. (2014). Facial expression recognition takes longer in the posterior superior temporal sulcus than in the occipital face area. Journal of Neuroscience 34(27), 9173–7.

- Pourtois G., Grandjean D., Sander D., Vuilleumier P. (2004). Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cerebral Cortex 14(6), 619–33.

- Pourtois G., de Gelder B., Bol A., Crommelinck M. (2005). Perception of facial expressions and voices and of their combination in the human brain. Cortex 41(1), 49–59.

- Pourtois G., Spinelli L., Seeck M., Vuilleumier P. (2010). Modulation of face processing by emotional expression and gaze direction during intracranial recordings in right fusiform cortex. Journal of Cognitive Neuroscience 22(9), 2086–107.

- Pourtois G., Vuilleumier P. (2006). Dynamics of emotional effects on spatial attention in the human visual cortex. Progress in Brain Research 156, 67–91.

- Puce A., McNeely M.E., Berrebi M.E., Thompson J.C., Hardee J., Brefczynski-Lewis J. (2013). Multiple faces elicit augmented neural activity. Frontiers in Human Neuroscience 7, 282.

- Ramon M., Dricot L., Rossion B. (2010). Personally familiar faces are perceived categorically in face-selective regions other than the fusiform face area. European Journal of Neuroscience 32(9), 1587–98.

- Rellecke J., Sommer W., Schacht A. (2013). Emotion effects on the N170: a question of reference? Brain Topography 26(1), 62–71.

- Richoz A.-R., Jack R.E., Garrod O.G.B., Schyns P.G., Caldara R. (2015). Reconstructing dynamic mental models of facial expressions in prosopagnosia reveals distinct representations for identity and expression. Cortex 65, 50–64.

- Righi S., Marzi T., Toscani M., Baldassi S., Ottonello S., Viggiano M.P. (2012). Fearful expressions enhance recognition memory: electrophysiological evidence. Acta Psychologica 139(1), 7–18.

- Rousselet G.A., Macé M.J.M., Fabre-Thorpe M. (2004). Spatiotemporal analyses of the N170 for human faces, animal faces and objects in natural scenes. Neuroreport 15(17), 2607–11.

- Sato W., Kochiyama T., Yoshikawa S., Matsumura M. (2001). Emotional expression boosts early visual processing of the face: ERP recording and its decomposition by independent component analysis. Neuroreport 12(4), 709–14.

- Schupp H.T., Öhman A., Junghöfer M., Weike A.I., Stockburger J., Hamm A.O. (2004). The facilitated processing of threatening faces: an ERP analysis. Emotion 4(2), 189–200.

- Schweinberger S.R., Pickering E.C., Jentzsch I., Burton A.M., Kaufmann J.M. (2002). Event-related brain potential evidence for a response of inferior temporal cortex to familiar face repetitions. Cognitive Brain Research 14(3), 398–409.

- Schyns P.G. (1998). Diagnostic recognition: task constraints, object information, and their interactions. Cognition 67(1–2), 147–79.

- Schyns P.G., Petro L.S., Smith M.L. (2007). Dynamics of visual information integration in the brain for categorizing facial expressions. Current Biology 17(18), 1580–5.

- Sergent J., Villemure J.-G. (1989). Prosopagnosia in a right hemispherectomized patient. Brain 112(4), 975–95.

- Sewell C., Palermo R., Atkinson C., McArthur G. (2008). Anxiety and the neural processing of threat in faces. Neuroreport 19(13), 1339–43.

- Shuttleworth E.C., Syring V., Allen N. (1982). Further observations on the nature of prosopagnosia. Brain and Cognition 1(3), 307–22.

- Smith M.L. (2012). Rapid processing of emotional expressions without conscious awareness. Cerebral Cortex 22(8), 1748–60.

- Smith N.K., Cacioppo J.T., Larsen J.T., Chartrand T.L. (2003). May I have your attention, please: electrocortical responses to positive and negative stimuli. Neuropsychologia 41(2), 171–83.

- Tamietto M., de Gelder B. (2010). Neural bases of the non-conscious perception of emotional signals. Nature Reviews Neuroscience 11(10), 697–709.

- Taylor M.J. (2002). Non-spatial attentional effects on P1. Clinical Neurophysiology 113(12), 1903–8.

- Todorov A., Gobbini M.I., Evans K.K., Haxby J.V. (2007). Spontaneous retrieval of affective person knowledge in face perception. Neuropsychologia 45(1), 163–73.

- Tranel D., Damasio A.R. (1988). Non-conscious face recognition in patients with face agnosia. Behavioural Brain Research 30(3), 235–49.

- Vizioli L., Foreman K., Rousselet G.A., Caldara R. (2010a). Inverting faces elicits sensitivity to race on the N170 component: a cross-cultural study. Journal of Vision 10, 15.1–23.

- Vizioli L., Rousselet G.A., Caldara R. (2010b). Neural repetition suppression to identity is abolished by other-race faces. Proceedings of the National Academy of Sciences 107(46), 20081–6.

- Vuilleumier P., Armony J.L., Driver J., Dolan R.J. (2001). Effects of attention and emotion on face processing in the human brain. Neuron 30(3), 829–41.

- Wieser M.J., Pauli P., Reicherts P., Mühlberger A. (2010). Don't look at me in anger! Enhanced processing of angry faces in anticipation of public speaking. Psychophysiology 47(2), 271–80.

- Wiggs C.L., Martin A. (1998). Properties and mechanisms of perceptual priming. Current Opinion in Neurobiology 8(2), 227–33.

- Wilcox R. (2005). Introduction to Robust Estimation and Testing. San Diego: Academic Press.

- Willenbockel V., Sadr J., Fiset D., Horne G.O., Gosselin F., Tanaka J.W. (2010). Controlling low-level image properties: the SHINE toolbox. Behavior Research Methods 42(3), 671–84.

- Williams L.M., Palmer D., Liddell B.J., Song L., Gordon E. (2006). The “when” and “where” of perceiving signals of threat versus non-threat. Neuroimage 31(1), 458–67.

- Williams M.A., Mattingley J.B. (2004). Unconscious perception of non-threatening facial emotion in parietal extinction. Experimental Brain Research 154(4), 403–6.

- Winston J.S., Henson R.N.A., Fine-Goulden M.R., Dolan R.J. (2004). fMRI-adaptation reveals dissociable neural representations of identity and expression in face perception. Journal of Neurophysiology 92(3), 1830–9.