Abstract

How different visual systems process images and make perceptual errors can inform us about cognitive and visual processes. One of the strongest geometric errors in perception is a misperception of size depending on the size of surrounding objects, known as the Ebbinghaus or Titchener illusion. The ability to perceive the Ebbinghaus illusion appears to vary dramatically among vertebrate species, and even populations, but this may depend on whether the viewing distance is restricted. We tested whether honeybees perceive contextual size illusions, and whether errors in perception of size differed under restricted and unrestricted viewing conditions. When the viewing distance was unrestricted, there was an effect of context on size perception and thus, similar to humans, honeybees perceived contrast size illusions. However, when the viewing distance was restricted, bees were able to judge absolute size accurately and did not succumb to visual illusions, despite differing contextual information. Our results show that accurate size perception depends on viewing conditions, and thus may explain the wide variation in previously reported findings across species. These results provide insight into the evolution of visual mechanisms across vertebrate and invertebrate taxa, and suggest convergent evolution of a visual processing solution.

Keywords: Ebbinghaus illusion, Delboeuf illusion, Titchener illusion, optical illusion, size perception, Apis mellifera

1. Introduction

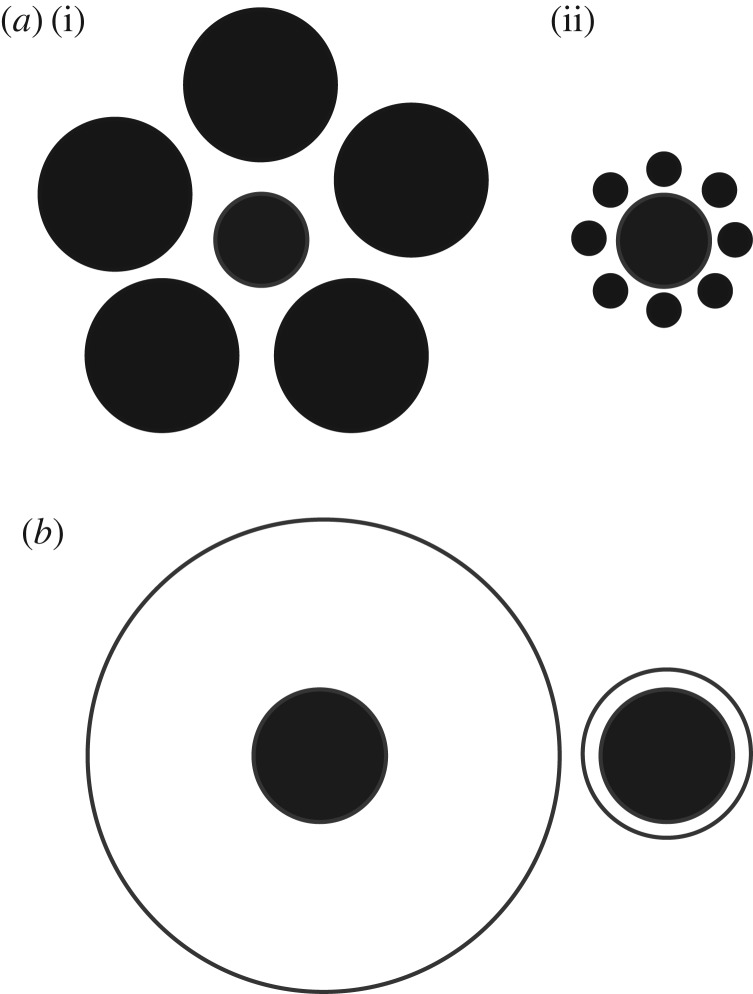

Our visual system allows us to process and assess our environment by providing information such as object size, shape, texture, colour and movement [1]. Visual illusions, classified as errors of perception, are informative for understanding variation in visual processing in both human and non-human animals [1]. One illusion that has been studied extensively in humans is the Ebbinghaus illusion (e.g. [2,3]). It is considered one of the strongest geometric illusions that humans perceive [1,4–6]. It occurs when environmental context causes an object to appear relatively larger when surrounded by smaller objects, or relatively smaller when surrounded by larger objects [1,4,7] (figure 1). Humans generally perceive the world using global processing. This is the tendency to process the overall image of a scene rather than the individual elements that form it. Processing those elements separately is known as local processing [8]. Global processing has been proposed to promote the perception of illusions, whereas local processing does not [1].

Figure 1.

(a) A well-known example of the Ebbinghaus or Titchener circle illusion: two identical central targets are made to look smaller (i) when surrounded by large, distant circles (inducers) than when surrounded by small and close inducers (ii). (b) A representation of the Delboeuf illusion: a larger circle (annulus) surrounds a central target, making it appear smaller than when it is surrounded by a smaller annulus [7].

The ability to perceive contextual size illusions varies across vertebrates. Species currently known to perceive the Ebbinghaus illusion include bottlenose dolphins [9], redtail splitfins [10], bowerbirds [11,12] and domestic chicks [13]. Baboons, however, do not perceive this illusion, and can therefore judge size accurately regardless of context [6]. Interestingly, some species such as pigeons, bantams and domestic dogs perceive the opposite illusion, an assimilation illusion. In this illusion, the central target is perceived as being closer in size to the inducers that surround it [14–16]. Remarkably, the Ebbinghaus illusion varies not only between species but also within a single species. The Himba, a remote and isolated human culture from northern Namibia, experience a strongly reduced effect of the size illusion compared with Western and urbanized populations [17–19].

Similar in nature to the Ebbinghaus illusion is the Delboeuf illusion [7] (figure 1b), which likewise relies on the misperception of size due to context [7]. A well-known example of this illusion is the tendency for identical meal portions to look smaller on a large plate and larger on a small plate [20,21]. Humans, chimpanzees [20,22], capuchin monkeys and rhesus monkeys [23] are susceptible to this illusion, whereas domestic dogs are not [16,24]. In humans, this size illusion is thought to involve cortical representations of target size and context in region V1 [25]. The differences in susceptibility to size illusions observed between species are potentially due to the ability of species to process visual images locally or globally [8]. Baboons and Himba people do not perceive the illusion [6,17], and both show a local precedence [17,18,26]. Interestingly, pigeons can flexibly shift between local and global processing [27]. As mentioned, they perceive the illusion as an assimilation illusion [15].

Another potential explanation for differences in the perception of these size illusions is variation in testing methods among studies, specifically whether the viewing distance was restricted (as discussed in [10]). For example, studies on pigeons, bantams and domestic dogs required subjects to touch the correct stimulus with their nose or beak (dogs [16,24]; birds [14,15]), forcing them to view the illusions at close range [10]. For baboons, the viewing distance was restricted to 49 cm from the screen displaying the illusions [6]. Indeed, in humans, the Ebbinghaus illusion is reduced, or reversed to an assimilation illusion, when participants are forced to view the illusions at close range [28,29]. Thus, imposing a restricted viewing distance on animals and humans may have a significant impact on whether size illusions are perceived. However, this has not been formally evaluated in a within-species study.

The honeybee is an important model species for studying visual and cognitive tasks because individuals can be readily trained [30–34]. This permits valuable comparisons with vertebrate systems [35]. Honeybees can accurately discriminate stimulus sizes presented on homogeneous backgrounds, and can learn and apply ‘larger/smaller’ size rules [36,37]. This demonstrates sophisticated visual cognition regarding size perception in a miniature brain. However, the stimuli in both previous size discrimination experiments were presented on a background of constant colour, shape and size [36,37]. Thus, the bee's ability to judge size in variable contexts remains unresolved, even though bees forage in complex, dynamic environments where the context in which flowers are encountered often changes. Honeybees show a preference for global processing [38] when viewing complex stimuli made up of multiple elements. They may therefore be sensitive to size illusions arising from variation in the surrounding contextual cues.

In this study, we use contextual size illusions based on the Delboeuf illusion to determine how context and self-regulation of viewing distance affect a bee's ability to judge size accurately. We trained bees to choose larger- or smaller-sized stimuli. We then tested contextual size judgement under either restricted or unrestricted viewing conditions, using stimuli that could potentially induce size illusions.

2. Material and methods

(a). Study site and species

Experiments were conducted at the University of Melbourne between April 2015 and May 2017. Free-flying Apis mellifera foragers (experiment 1: n = 10; experiment 2: n = 10) were marked with a coloured mark on the thorax to identify individuals used in respective experiments [38].

(b). Experimental procedure

Training and test stimuli were composed of a central black square target presented on a white square acting as the surrounding annulus/inducer (figure 2). All stimuli were covered with 80 µm Lowell laminate. One bee was tested at a time during the training and testing phases. A counterbalanced design was used for both the unrestricted and restricted viewing distance experiments (see below), where in each experiment one group of bees was trained to associate larger stimulus sizes with a reward (n = 5), while a second group was trained to associate smaller stimulus sizes with a reward (n = 5), on a background of constant size. Previous work established that bees learn either size relation to a similar performance level [36,37]. Thus, the pseudorandomized counterbalance was done to exclude any potential preference effects on the test results. We used a rotating screen apparatus to promote an unrestricted viewing condition and a Y-maze apparatus to create the restricted viewing condition. Previous work has demonstrated that there is no significant difference in results for processing of complex visual patterns (non-illusionary stimuli) between a rotating screen and Y-maze [39].

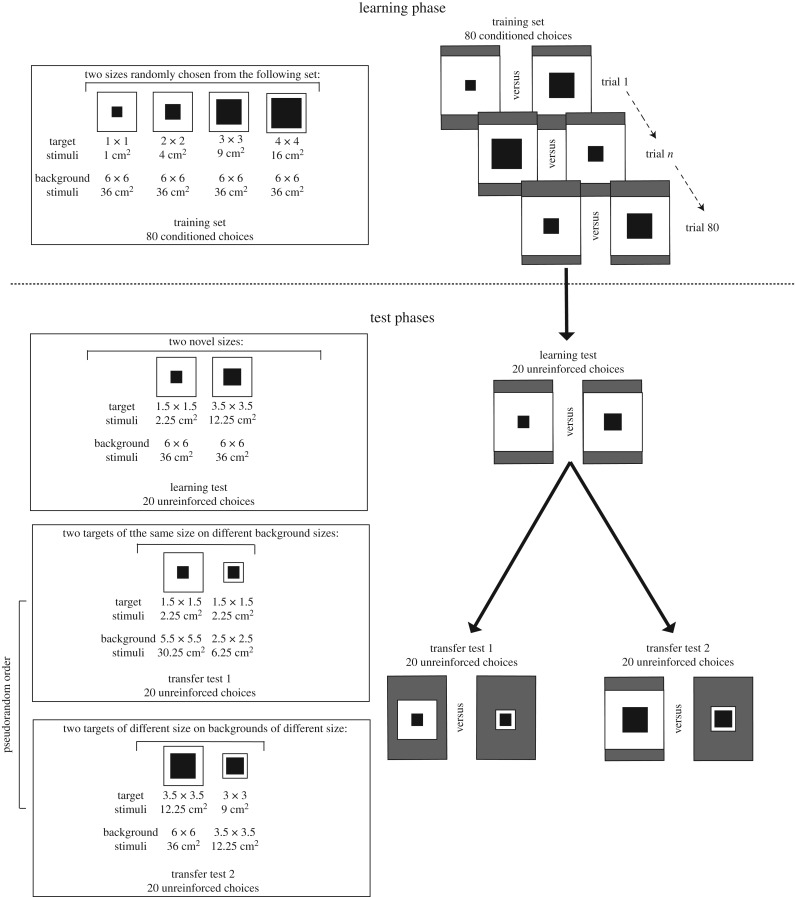

Figure 2.

The stimuli used for the training and testing phases of both experiments showing the dimensions and surface area of target and background stimuli. In the learning phase, 80 appetitive and aversive choices were conducted with four stimulus sizes (side edges: 1, 2, 3, 4 cm) presented on a consistent background (side edge: 6 cm). Two different sizes of target stimuli were simultaneously presented to bees during the learning and test phases. The unreinforced learning test presented bees with novel sizes (side edges: 1.5, 3.5 cm). In transfer test 1, two central targets of the same size (side edges: 1.5 cm) were presented to bees on a background of different sizes (side edges: 2.5, 5.5 cm) to create the effect of a visual illusion where the central target on the larger background appeared smaller in context, and the central target on the smaller background appeared larger in context. In transfer test 2, a larger central target (side edge: 3.5 cm) was displayed on a larger background (side edge: 6 cm), while a smaller central target (side edge: 3 cm) was displayed on a background which was small (side edge: 3.5 cm) in order to create the effect of the smaller central target appearing larger and the larger central target appearing smaller due to the surrounding context.
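The areas summarized in Figure 2 follow directly from the side edges in the caption, and a short Python sketch makes that arithmetic explicit. The dictionary simply transcribes the caption. The names `STIMULI` and `areas` are illustrative, and the sketch assumes each black target is centred on and fully contained within its white background.

```python
# Stimulus dimensions (side edges in cm) transcribed from the Figure 2 caption.
# Each stimulus is a black square target centred on a white square background.
STIMULI = {
    "training": [(1, 6), (2, 6), (3, 6), (4, 6)],
    "learning test": [(1.5, 6), (3.5, 6)],
    "transfer test 1": [(1.5, 2.5), (1.5, 5.5)],
    "transfer test 2": [(3, 3.5), (3.5, 6)],
}


def areas(target_edge: float, background_edge: float) -> tuple[float, float, float]:
    """Return (black area, visible white area, black fraction of the whole stimulus) in cm^2."""
    black = target_edge ** 2
    white = background_edge ** 2 - black  # the target covers part of the background
    return black, white, black / background_edge ** 2


for phase, pairs in STIMULI.items():
    for target, background in pairs:
        black, white, frac = areas(target, background)
        print(f"{phase:16s} target {target} cm on {background} cm: "
              f"black {black:.2f} cm^2, white {white:.2f} cm^2, black fraction {frac:.3f}")
```

For example, the 1.5 cm learning-test target covers 2.25 cm² of its 36 cm² stimulus, which is a black fraction of 0.0625.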

(i). Experiment 1: unrestricted viewing distance

Apparatus

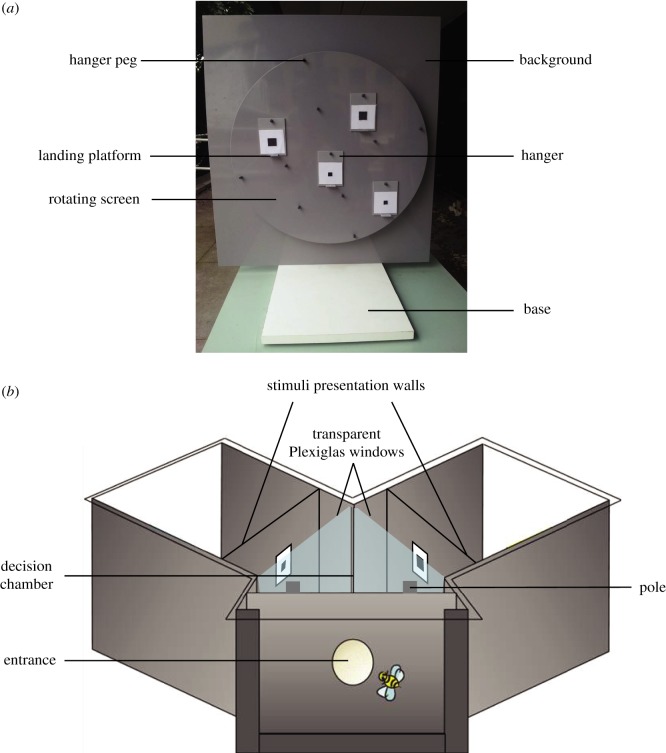

Honeybees were trained to visit a vertical rotating screen made of grey Plexiglas, 50 cm in diameter (figure 3a). With this screen, the spatial arrangement of stimulus choices could be randomly changed, which excluded positional cues. Stimuli were presented vertically on 6 × 8 cm grey Plexiglas hangers, with a landing platform attached below the presentation area. Hangers and surrounding screen areas were washed with 30% ethanol between foraging bouts and before each test to prevent the use of olfactory cues. Consistent with the protocol for the rotating screen [39], four stimuli were presented simultaneously above the landing platforms on the hangers: two identical correct stimuli and two identical incorrect stimuli. The spatial positions of the hangers were randomly changed between choices [36].

Figure 3.

(a) An image of the rotating screen used for Experiment 1 with labels to show basic parts of the apparatus. (b) A diagram of the Y-maze used in Experiment 2 with labels showing the basic parts of the apparatus. A bee enters through the small hole into the decision chamber where it is presented with two stimuli behind Plexiglas windows and must make a decision on which pole to land on for a reward. (Online version in colour.)

Procedure

Bees were first trained to land on platforms without stimuli present. Once individual bees were able to land on the platforms, the training stimuli were introduced. By using the rotating screen, bees were able to make choices at any distance from the stimuli, flying as far away or as close as they elected prior to making a decision on where to land. By using this design, we intentionally did not control the viewing distance for bees, but allowed individuals to self-regulate their distance prior to making a decision (figure 3a).

The experiment consisted of four parts (figure 2). During the learning phase, the target stimuli varied in size (side edges: 1, 2, 3, 4 cm; figure 2) but were displayed on a background of constant size (side edge: 6 cm). Bees were presented with two different target sizes during each bout (a return trip from the hive to the apparatus). We recorded each correct or incorrect response for a total of 80 appetitive and aversive choices. Stimulus sizes and positions were pseudorandomized and changed between bouts. The sizes of the target stimuli were determined by rolling a die, and stimulus positions were changed by rotating the screen. Stimulus sizes always maintained the size rule for the respective group. During the training phase, a 10 µl drop of either 50% sucrose solution (correct choice) or 60 mM quinine solution (incorrect choice) was used as the rewarding or punishing outcome, respectively (figure 2). This combination promotes enhanced visual discrimination performance in free-flying honeybees [40]. The procedure followed the logic of size-rule learning [36]. Target stimulus sizes were pseudorandomly allocated so that any given stimulus (e.g. side edge 2 or 3 cm) could be correct or incorrect, depending on whether it was larger or smaller than the alternative stimulus presented in a given phase of the conditioning. This training protocol is a form of differential conditioning that promotes processing of the entire image [38,41,42]. Once a bee made a correct choice, it was collected onto a Plexiglas spoon providing 50% sucrose solution. It was then placed behind an opaque barrier 1 m from the screen while stimulus sizes and positions were pseudorandomly changed, and while the platforms and surrounding areas were cleaned. If a bee made an incorrect choice, it tasted the bitter quinine solution. It was then allowed to continue making choices until it made a correct choice, at which point the procedure for a correct choice was followed.
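The relational logic of this training, where a given target can be rewarded in one bout and punished in the next, can be sketched in a few lines of Python. The paper does not describe how the die roll was mapped onto the four training sizes. For that reason, `draw_pair` is a hypothetical stand-in that draws two distinct sizes uniformly at random. It ignores any balancing constraints the original pseudorandomization may have imposed.

```python
import random

TRAINING_SIZES = [1, 2, 3, 4]  # target side edges (cm) used in the learning phase


def draw_pair(rng: random.Random) -> tuple[int, int]:
    """Hypothetical stand-in for the die roll: two different target sizes for one bout."""
    a, b = rng.sample(TRAINING_SIZES, 2)
    return a, b


def rewarded_size(pair: tuple[int, int], rule: str) -> int:
    """Return the target size that carries sucrose under a 'larger' or 'smaller' rule."""
    if rule == "larger":
        return max(pair)
    if rule == "smaller":
        return min(pair)
    raise ValueError(f"unknown rule: {rule!r}")


rng = random.Random(1)
for _ in range(3):
    pair = draw_pair(rng)
    correct = rewarded_size(pair, "larger")
    incorrect = pair[0] if pair[1] == correct else pair[1]
    print(f"targets {pair}: sucrose on {correct} cm, quinine on {incorrect} cm")
```

For a bee trained to the ‘larger’ rule, `rewarded_size((1, 2), "larger")` returns 2 but `rewarded_size((2, 3), "larger")` returns 3. The 2 cm target is therefore rewarded in one bout and punished in another. Only the size relation, not any absolute size, predicts reward.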

Following the learning phase, we conducted an unreinforced learning test for 20 choices to determine if honeybees had learnt the relational size rule (figure 2). Stimulus target sizes were chosen to be novel and interpolated from the training set (side edges: target, 1.5, 3.5 cm; background, 6 cm). Novel sizes were used to determine if rule learning, rather than a simple associative mechanism [36], was responsible for observed performance.

Following the learning test, two transfer tests were conducted in pseudorandom order. The two sets of stimuli in the transfer tests were designed to induce the potential perception of an illusionary image, as demonstrated in the other animal models described above (figures 1 and 2). In transfer test 1, bees were presented with central targets of the same size (side edges: 1.5 cm) displayed on backgrounds of different sizes (side edges: 2.5, 5.5 cm). We hypothesized that if bees could accurately judge size regardless of context, there would be no significant difference in the number of choices for the two stimuli. However, if bees trained to associate smaller-sized stimuli with a reward perceived an illusion, they should choose the central target on the larger background, as it looks smaller in context. Similarly, if bees trained to associate larger stimuli with a reward perceived an illusion, they should choose the central target on the smaller background, as it looks larger in context.

In transfer test 2, bees were presented with a small central target on a small background (side edges: target 3 cm, background 3.5 cm) against a larger central target on a larger background (side edges: target 3.5 cm, background 6 cm). This test was designed to assess the potential strength of the illusion in bees. In the first transfer test, the target sizes were identical and therefore ambiguous for the bees, which could potentially facilitate the perception of the illusion. In the second transfer test, a difference in size between the targets was maintained, but this difference might be offset by the illusion triggered by the difference in background sizes. If bees trained to either the smaller or the larger rule did not perceive an illusion, they should choose the stimulus in which the central target maintains the correct size relationship, regardless of background size. However, if bees perceived a size illusion, those trained to a ‘smaller than’ rule should choose the stimulus on the larger background, as it looks smaller in context. Bees trained to a ‘larger than’ rule should choose the stimulus on the smaller background, as it appears larger in context [43–45]. If bees perceived an assimilation illusion, central targets would appear more similar in size to their surroundings (inducers). Thus, in both transfer tests, bees trained to larger sizes would choose the stimulus with the larger surround, because its target would also appear larger. The reverse would hold for bees trained to smaller sizes.
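The predictions above can be encoded compactly in Python. This sketch uses hypothetical function names. It scores each transfer-test stimulus ordinally under three hypotheses: absolute size, a contrast illusion, and an assimilation illusion. It then returns the stimulus that a bee from each training group should prefer. The sketch captures only the direction of each predicted effect, not its magnitude. Stimulus dimensions are taken from Figure 2.

```python
# Transfer-test stimuli as (target side edge, background side edge) in cm, from Figure 2.
TRANSFER_TESTS = {
    "transfer test 1": ((1.5, 2.5), (1.5, 5.5)),
    "transfer test 2": ((3.0, 3.5), (3.5, 6.0)),
}


def apparent_size(stimulus, hypothesis):
    """Ordinal score: a higher value means the target should look larger under the hypothesis."""
    target, background = stimulus
    if hypothesis == "absolute":
        return target        # only the target itself matters
    if hypothesis == "contrast":
        return -background   # a smaller surround makes the target look larger
    if hypothesis == "assimilation":
        return background    # a larger surround makes the target look larger
    raise ValueError(f"unknown hypothesis: {hypothesis!r}")


def predicted_choice(stimuli, rule, hypothesis):
    """Stimulus a bee trained to `rule` should prefer, or None if chance is predicted."""
    if rule not in ("larger", "smaller"):
        raise ValueError(f"unknown rule: {rule!r}")
    a, b = stimuli
    score_a, score_b = apparent_size(a, hypothesis), apparent_size(b, hypothesis)
    if score_a == score_b:
        return None  # no basis for a preference: choices should sit at 50%
    if rule == "larger":
        return a if score_a > score_b else b
    return a if score_a < score_b else b


for test, stimuli in TRANSFER_TESTS.items():
    for rule in ("larger", "smaller"):
        for hypothesis in ("absolute", "contrast", "assimilation"):
            print(f"{test} | {rule} rule | {hypothesis}: {predicted_choice(stimuli, rule, hypothesis)}")
```

In transfer test 2, the contrast hypothesis predicts the opposite choice to the absolute-size hypothesis for both training groups. This is what allows that test to separate accurate size judgement from a contrast illusion.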

During all three tests (learning test, transfer test 1 and transfer test 2), stimuli were presented without rewarding or punishing outcomes, and drops of water were used as a neutral substance. We recorded 20 choices (touches of a platform) for each of the three tests. The sequence of the transfer tests was randomized. Refresher choices were given between tests for the duration of one bout to maintain bee motivation [36,46].

(ii). Experiment 2: restricted viewing distance

The procedure for experiment 2, testing potential illusionary perception with a restricted viewing distance, was largely the same as for experiment 1. The only difference was the apparatus mediating viewing conditions. Honeybees were trained to enter a Y-maze (figure 3b; as described in [47,48]). Stimuli were presented on grey backgrounds located 6 cm from the decision lines. At the position of the decision lines, a transparent Plexiglas barrier was placed so that individual bees could view stimuli at the set distance of 6 cm but could not fly any closer. This restricted their viewing distance to 6 cm (the potential maximum distance from the entrance hole is 12 cm). Sucrose or quinine was placed on the respective poles directly in front of the Plexiglas barrier so that bees would learn to associate stimuli with either a reward or a punishment. Poles were replaced once touched by a bee and cleaned with ethanol to exclude olfactory cues. Two stimuli, one correct and one incorrect, were presented simultaneously on the grey plastic background, one in each arm of the Y-maze. The size and side of the correct and incorrect stimuli were randomly changed between choices. If a bee made an incorrect choice and started to imbibe the quinine, it was allowed to fly to the pole in front of the correct stimulus to collect sucrose to maintain motivation. Only the first choice was recorded. Once the bee had finished drinking the sucrose, it was free to fly back to the hive or to make another decision by re-entering the maze. During the unreinforced tests, a drop of water was placed on each of the poles in front of the stimuli, and twenty choices (touches of the poles) were recorded.
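To give a sense of the image that the restricted condition presents, the Python sketch below computes the visual angle subtended by each stimulus edge at the 6 cm viewing distance. It uses the standard geometric formula θ = 2·arctan(s / 2d). The sketch assumes the bee views each stimulus face-on and on-axis from the Plexiglas barrier. The paper does not report these angles, so the output is illustrative geometry rather than measured data.

```python
import math

VIEWING_DISTANCE_CM = 6.0  # distance from the Plexiglas barrier to the stimuli in the Y-maze


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) of a face-on object viewed from its centre line."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


# Side edges of targets and backgrounds used in the tests (Figure 2).
for edge in (1.5, 2.5, 3.0, 3.5, 5.5, 6.0):
    angle = visual_angle_deg(edge, VIEWING_DISTANCE_CM)
    print(f"{edge} cm edge at {VIEWING_DISTANCE_CM} cm: {angle:.1f} deg")
```

At this distance the 6 cm background subtends about 53°. Because bees could not approach closer than 6 cm, the angles in this condition could not be adjusted by changing viewing distance.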

(c). Statistical analysis

(i). Learning phase

To test for the effect of training on bee performance (number of correct choices), data from the learning phase of 80 choices were analysed with a generalized linear mixed-effects model (GLMM) with a binomial distribution. This model was fitted using the ‘glmer’ function (‘lme4’ package) within the R environment for statistical analysis [49]. We initially fitted a full model with trial number as a continuous predictor and group as a categorical predictor with two levels (trained to larger or smaller). The model also included the interaction between the two predictors, with subject as a random factor to account for repeated choices by individual bees. As the interaction term was not statistically significant in experiment 2, it was excluded from the final model for that experiment.
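Written out, the full learning-phase model described above corresponds to a random-intercept logistic specification. The notation below and the 0/1 coding of group are our own assumptions, not taken from the original analysis scripts. Here, y_ij = 1 if choice i of bee j was correct, t_ij is the trial number (1 to 80), g_j indicates the training group (0 = larger, 1 = smaller), and u_j is the random intercept for bee j:

$$
\operatorname{logit}\,\Pr(y_{ij} = 1) = \beta_0 + \beta_1\, t_{ij} + \beta_2\, g_j + \beta_3\, t_{ij}\, g_j + u_j, \qquad u_j \sim \mathcal{N}(0, \sigma_u^2)
$$

The reduced model used for experiment 2 sets β3 = 0. In lme4 formula notation, with hypothetical column names, the full model would read roughly `correct ~ trial * group + (1 | bee)` with `family = binomial`.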

(ii). Testing phase

To determine whether bees had learnt the ‘smaller than’ and ‘larger than’ size rules, we analysed the learning test data with a GLMM including the intercept term as a fixed factor and subject as a random factor. The proportion of correct choices recorded in the learning test was used as the response variable. The Wald statistic (z) tested whether the mean proportion of correct choices (MPCC) in the learning test differed significantly from chance expectation (i.e. 50% correct choices). The MPCC is represented by the coefficient of the intercept term.
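This test of the intercept against chance can be made concrete with a short Python sketch. The sketch assumes that the intercept estimate β0 and its standard error have already been obtained from a fitted intercept-only binomial GLMM, for example from the fixed-effect table of an lme4 fit. Chance performance (a proportion of 0.5) corresponds to β0 = 0 on the logit scale, so the Wald statistic is simply z = β0 / SE. The function names and the input values in the usage line are hypothetical, not estimates from this study.

```python
import math


def inv_logit(x: float) -> float:
    """Map a log-odds value back to a proportion."""
    return 1.0 / (1.0 + math.exp(-x))


def intercept_vs_chance(beta0: float, se: float, z_crit: float = 1.959964):
    """Wald test of H0: beta0 = 0 (a proportion of 0.5) for an intercept-only binomial GLMM.

    Returns (z, two-sided p, MPCC, CI lower, CI upper). The 95% CI is built on the
    logit scale and then back-transformed, so it need not be symmetric around the MPCC.
    """
    z = beta0 / se
    p_two_sided = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return (
        z,
        p_two_sided,
        inv_logit(beta0),
        inv_logit(beta0 - z_crit * se),
        inv_logit(beta0 + z_crit * se),
    )


# Hypothetical inputs for illustration only (not estimates from this study).
z, p, mpcc, lo, hi = intercept_vs_chance(beta0=0.70, se=0.15)
print(f"z = {z:.3f}, p = {p:.2g}, MPCC = {mpcc:.3f}, 95% CI = ({lo:.3f}, {hi:.3f})")
```

In a mixed model, inv_logit(β0) is the proportion for a typical bee (random effect at zero). It can therefore differ slightly from the raw mean proportion across bees.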

The two unreinforced transfer tests were analysed in the same way as the learning test. In transfer test 1, targets of the same size were presented on different background sizes. In transfer test 2, a large target on a large background was presented against a small target on a small background. For these analyses, a ‘correct choice’ was defined as a choice for the stimulus consistent with the perception of a contrast illusion.

(iii). Comparison between experiments

To determine whether the learning curves of the learning phases differed significantly between experiments 1 and 2, we used a GLMM. Bee response (correct or incorrect) was the binary response variable, with trial number, viewing condition and their interaction as fixed factors. Subject (bee) was included as a random factor. We also tested for differences between experiments in each of the unreinforced tests (learning test, transfer test 1 and transfer test 2). These comparisons used the same model structure, with bee response as the response variable and experiment (unrestricted or restricted viewing distance) as a factor. All statistical analyses were performed in the R environment using the ‘nlme’ and ‘MASS’ packages [49].
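Under the same notation assumptions as a random-intercept logistic model, the two comparisons can be written as follows. The indicator c_j codes the viewing condition of bee j (0 = unrestricted, 1 = restricted; this coding is ours), and u_j ~ N(0, σ_u²) is the bee-level random intercept:

$$
\operatorname{logit}\,\Pr(y_{ij} = 1) = \beta_0 + \beta_1\, t_{ij} + \beta_2\, c_j + \beta_3\, t_{ij}\, c_j + u_j \quad \text{(learning phase)}
$$

$$
\operatorname{logit}\,\Pr(y_{ij} = 1) = \gamma_0 + \gamma_1\, c_j + u_j \quad \text{(each unreinforced test, fitted separately)}
$$

Under this reading, the ‘viewing condition × trial number’ term reported in the Results corresponds to β3. A difference between experiments in a given test corresponds to γ1 ≠ 0.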

3. Results

(a). Experiment 1: unrestricted viewing distance

There was a significant increase in the number of correct choices made over the 80 conditioned choices during the learning phase (trial number: z = 3.823, p < 0.001; figure 4a), with a significant interaction between group and trial (z = −2.087, p = 0.037) and no significant effect of group (z = 1.184, p = 0.236). For individual bee performance see electronic supplementary material, figure S1.

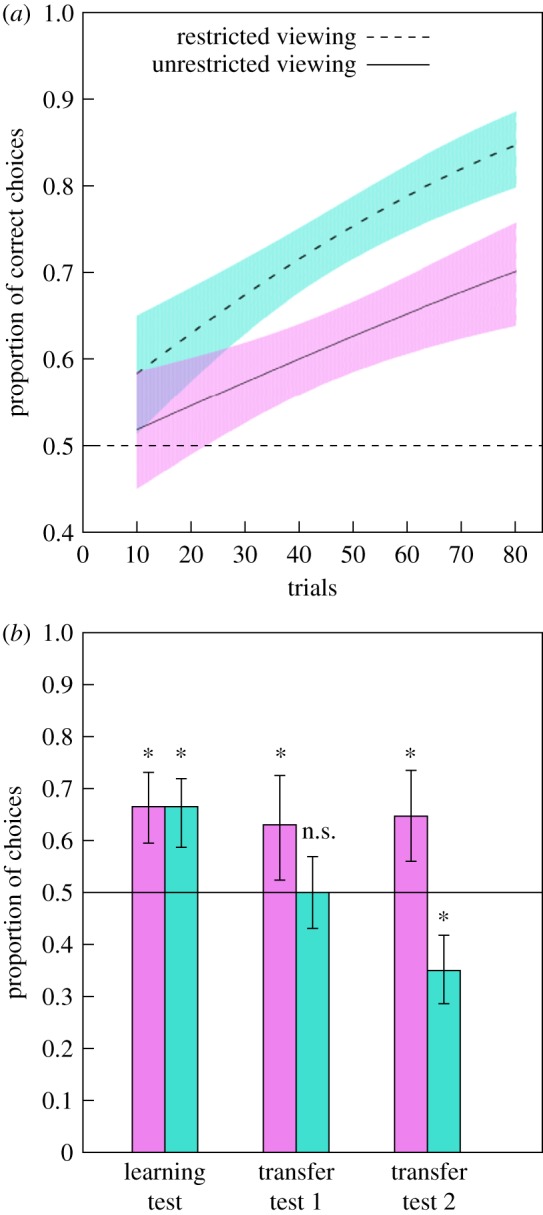

Figure 4.

(a) Performance during the learning phase in experiments 1 and 2. Dashed line at 0.5 indicates the chance level performance. Solid black line indicates the line of best fit for data points in the unrestricted viewing condition and the dashed black line indicates the line of best fit for the restricted viewing condition. The surrounding violet (unrestricted condition; solid line) and blue (restricted condition; dashed line) areas indicate 95% CI boundaries. Increase in performance during the learning phase was significant for both experiments but learning regression lines were not significantly different from each other. (b) Performance during the three testing phases: learning test, transfer test 1, and transfer test 2 for bees in experiments 1 (violet, left bars) and 2 (blue, right bars). For the learning test, performance is measured by proportion of choices for the correct size option; for the transfer tests, performance is measured by the proportion of choices for the illusionary option. Dashed line at 0.5 indicates chance level performance and * indicates performance significantly different from chance. Data shown are means ± 95% CI boundaries for all three unreinforced tests. Ten bees were used for each test in each experiment. (Online version in colour.)

In the learning test, bees consistently chose the correct stimulus in 66.5 ± 3.0% (mean ± s.e. of the mean) of choices, significantly higher than chance expectation (z = 4.577, p < 0.001; mean proportion of correct choices (MPCC) = 0.665, 95% confidence intervals (CIs): 0.595, 0.731; figure 4b).

During transfer test 1 presenting target stimuli of identical sizes, bees chose the stimulus suggesting contrast illusionary perception in 63.0 ± 3.8% of choices, significantly higher than chance (z = 2.592, p < 0.010; MPCC = 0.630, CIs: 0.524, 0.725; figure 4b).

Likewise, in transfer test 2, bees presented with two central targets of different sizes on backgrounds of different sizes chose the contrast illusion stimulus in 64.7 ± 4.1% of choices (z = 3.506, p < 0.001; MPCC = 0.647, CIs: 0.565, 0.735; figure 4b). For individual bee performance see electronic supplementary material, figure S2.

Group was not a significant factor for any of the tests (p > 0.05 in all cases).

(b). Experiment 2: restricted viewing distance

As in experiment 1, there was a significant increase in the number of correct choices made over the 80 conditioned choices during the learning phase (trial number: z = 5.411, p < 0.001; figure 4a) and no difference between groups (z = 0.321, p = 0.748). For individual bee performance see electronic supplementary material, figure S3.

In the learning test, bees selected the correct stimulus in 66.5 ± 2.0% of choices. The mean proportion of correct choices was significantly different from chance (z = 4.310, p < 0.001; MPCC = 0.655, CIs: 0.587, 0.719; figure 4b).

During transfer test 1 presenting target stimuli of identical size on backgrounds of different sizes, bees chose the contrast illusion stimulus in 50.0 ± 1.1% of choices, which did not differ significantly from chance expectation (z = 0.000, p = 0.944; MPCC = 0.500, CIs: 0.431, 0.569; figure 4b).

During transfer test 2 presenting two central targets of different size on backgrounds of different size, bees chose the contrast illusion option based on their training group in 35.0 ± 2.2% of choices (z = −4.176, p < 0.001; MPCC = 0.350, CIs: 0.286, 0.418; figure 4b), thus choosing the correct relative target size in 65.0 ± 2.2% of choices regardless of the annulus size. For individual bee performance see electronic supplementary material, figure S4.

Group was not a significant factor for any of the tests (p > 0.05).

(c). Comparison of experiments

There was no significant difference between experiments 1 and 2 in the slopes of the learning phase (viewing condition × trial number: z = −1.749, p = 0.080; figure 4a). Nor was there a significant difference in overall bee performance during the training phase (z = −1.023, p = 0.306; figure 4b). There was, however, a significant effect of trial on bee performance (z = 3.520, p < 0.001). There was a significant difference between experiments in the results of the transfer tests (transfer test 1: z = −2.406, p < 0.020; transfer test 2: z = −5.824, p < 0.001; figure 4b). Bees trained using the rotating screen perceived illusions in both tests, whereas bees trained using the Y-maze did not.

4. Discussion

The ability to perceive size illusions varies across vertebrate species. However, it appears that the experimental method, specifically the restriction of the viewing distance, may influence results in some experiments [6,14–16,24]. We formally tested and compared the potential ability of honeybees to perceive size illusions under restricted or unrestricted viewing conditions. Bees in the unrestricted viewing condition perceived contrast illusions. In contrast, a separate group of bees tested under a restricted viewing distance did not make choices consistent with the perception of an illusion. These results demonstrate that visual perception is influenced by the ability of bees to choose their own viewing distance. They also show that context is a relevant factor in accurate size discrimination.

Differences in perception can potentially be explained by the capacity of a species or individual to process visual cues locally or globally [6,8,26]. Local (or featural) processing seems to allow species to accurately judge size by ignoring surrounding information (inducers), while global processing allows the perception of illusions whereby the surrounding information is incorporated into the overall image [1,6]. Honeybees have demonstrated the ability to process both locally and globally, but do show a preference for global processing [38,50]. The honeybee's preference to process globally could explain why bees were able to perceive illusions in an unrestricted viewing context. Indeed, the current study shows that illusion effects are influenced by viewing conditions, and thus suggests that local–global processing effects observed in different animal species may be strongly influenced by viewing context.

Illusionary size perception in the unrestricted viewing condition may also be influenced by visual angle. During the transfer tests, bees in the unrestricted viewing condition could have regulated their viewing distance so that the white square surroundings subtended equivalent visual angles. They could then have chosen the target with the larger or smaller visual angle [51] (see electronic supplementary material, S1). However, this is very unlikely. Matching the visual angles of the white surroundings would have required bees to view the stimuli from very large or very small distances, producing visual angles below the minimum threshold for detection [52]. In addition, if bees were regulating their distance to match the visual angles of the backgrounds, they would have been ‘fooled’ into matching visual angles to make decisions about relative size. We could therefore still conclude that context is a relevant factor when free-flying bees judge size. The ratio of white to black area could also potentially have been a cue for bees; however, we consider this unlikely for three reasons. First, bees were trained on differences in the local cues (targets) with a white background of constant size, which promoted size-rule learning of the target [36,37]. Second, during the learning test, bees would have needed to discriminate a very small difference of 5.7% between black–white ratios to succeed in this experiment, which is unlikely in a rule-learning context. Finally, bees in both viewing conditions were trained using the same stimuli and conditioning framework, yet the test results from the two viewing conditions differed significantly. The bees in the restricted viewing condition could not have been using the white–black ratio. It therefore seems likely that the bees in the unrestricted viewing condition were learning the same cues as those in the restricted condition.
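The geometric constraint behind this visual-angle argument can be sketched in Python. The angle subtended by a square of side s at distance d is 2·arctan(s / 2d). Two backgrounds therefore subtend equal angles only when s/d is equal. In transfer test 1, a bee would have to view the 2.5 cm background from 2.5/5.5 ≈ 0.45 of the distance used for the 5.5 cm background. The sketch assumes face-on, on-axis viewing and uses an arbitrary 10 cm reference distance. It does not model the detection threshold [52]. It only illustrates that, once the backgrounds are matched, the identical 1.5 cm target on the smaller background subtends the larger angle.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) of a face-on object viewed from its centre line."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def matching_distance(size_ref_cm: float, distance_ref_cm: float, size_other_cm: float) -> float:
    """Distance at which size_other subtends the same angle as size_ref seen from distance_ref.

    The angle depends only on the ratio size/distance, so equal angles need equal ratios.
    """
    return distance_ref_cm * size_other_cm / size_ref_cm


# Transfer test 1 backgrounds (5.5 and 2.5 cm) carrying identical 1.5 cm targets.
# The 10 cm reference distance is an arbitrary illustrative value, not a measured one.
ref_distance = 10.0
near_distance = matching_distance(5.5, ref_distance, 2.5)
print(f"2.5 cm background viewed from {near_distance:.2f} cm matches 5.5 cm at {ref_distance} cm")
print(f"background angles: {visual_angle_deg(5.5, ref_distance):.2f} vs "
      f"{visual_angle_deg(2.5, near_distance):.2f} deg")
print(f"1.5 cm target angles: {visual_angle_deg(1.5, ref_distance):.2f} (large background) vs "
      f"{visual_angle_deg(1.5, near_distance):.2f} deg (small background)")
```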

Studying the comparative perception of visual illusions allows us to make inferences about the evolution of the visual sensory system. Parallels across species in the ability to perceive similar illusions suggest a conserved or convergent visual processing solution [5,10]. In mammals and primates, current research suggests that the ability to perceive the Ebbinghaus illusion through specific perceptual mechanisms is due to the recent evolution of this trait [6,10,15]. Illusionary perception in primates is potentially due to a neural substrate in the neocortex. There, the dorsal and ventral streams, two independent neural pathways, are responsible for visual awareness and action control [53]. In non-mammalian species such as birds, these neural circuits are organized differently, perhaps owing to evolutionary differences [54]. This may also have produced differences among species in the ability to perceive a size illusion, and in the type of illusion perceived (contrast or assimilation) in the Ebbinghaus illusion [10]. However, as discussed, these differences may simply reflect differences in testing procedure [5,10]. Some research suggests that the perception of visual illusions is indeed a conserved ability in both ‘lower’ and ‘higher’ vertebrates [5,10]. Honeybees can now be added to the species known to perceive size illusions. We therefore suggest that convergent evolution of a visual processing solution is the more likely explanation for why this error of perception occurs in both vertebrates and an invertebrate. However, this hypothesis requires testing, particularly of the ability of other invertebrate species to perceive the Ebbinghaus illusion.

Coupled with studies of other illusions perceived by bees, our research provides additional insight into the honeybee's visual system and cognitive processing. Honeybees perceive illusory contours [55–57], the Benham illusion, in which movement induces illusory colours [58], and the Craik–O'Brien–Cornsweet illusion [59]. Illusionary perception may be important for how honeybees assess the complex, dynamic environments in which they live. For example, relative object size is an important cue for assessing distance, so manipulating object size can bias distance estimation [1]. Illusions may also arise because it is difficult to process all of the sensory information available in a complex environment. Animals can instead rely on a small number of reliable cues to inform behaviour; as a result, the information immediately surrounding an object of interest can distort the perception of properties such as size [1]. Size perception is a classic problem in animal perception [6,36,37,60], and our finding that viewing conditions produce very different outcomes within a single species opens new avenues for future studies.

Supplementary Material

Acknowledgements

We thank Martin Giurfa for comments on the initial results.

Compliance with ethical standards

All applicable international, national and/or institutional guidelines for the care and use of animals were followed.

Data accessibility

Behavioural data are available from the Dryad Digital Repository: https://doi.org/10.5061/dryad.mc02p [61].

Authors' contributions

All authors contributed to experimental design. S.R.H. and A.G.D. collected the data. J.E.G. and S.R.H. analysed the data. S.R.H. wrote the first draft of the manuscript. All the authors contributed to interpretation of the data and editing the manuscript. All the authors gave their final approval for submission.

Competing interests

No competing interests declared.

Funding

A.G.D. acknowledges the Australian Research Council (ARC), grant no. 130100015. S.R.H. acknowledges the Australian Government Research Training Program Scholarship (RTP).

References

- 1. Kelley LA, Kelley JL. 2014. Animal visual illusion and confusion: the importance of a perceptual perspective. Behav. Ecol. 25, 450–463. (doi:10.1093/beheco/art118)

- 2. Choplin JM, Medin DL. 1999. Similarity of the perimeters in the Ebbinghaus illusion. Percept. Psychophys. 61, 3–12. (doi:10.3758/BF03211944)

- 3. de Grave DD, Biegstraaten M, Smeets JB, Brenner E. 2005. Effects of the Ebbinghaus figure on grasping are not only due to misjudged size. Exp. Brain Res. 163, 58–64. (doi:10.1007/s00221-004-2138-0)

- 4. Ebbinghaus H. 1902. Grundzüge der Psychologie. Leipzig, Germany: Verlag von Veit & Comp.

- 5. Salva OR, Sovrano VA, Vallortigara G. 2014. What can fish brains tell us about visual perception? Front. Neural Circuits 8, 119. (doi:10.3389/fncir.2014.00119)

- 6. Parron C, Fagot J. 2007. Comparison of grouping abilities in humans (Homo sapiens) and baboons (Papio papio) with the Ebbinghaus illusion. J. Comp. Psychol. 121, 405–411. (doi:10.1037/0735-7036.121.4.405)

- 7. Girgus JS, Coren S, Agdern M. 1972. The interrelationship between the Ebbinghaus and Delboeuf illusions. J. Exp. Psychol. 95, 453–455. (doi:10.1037/h0033606)

- 8. Navon D. 1977. Forest before trees: the precedence of global features in visual perception. Cognit. Psychol. 9, 353–383. (doi:10.1016/0010-0285(77)90012-3)

- 9. Murayama T, Usui A, Takeda E, Kato K, Maejima K. 2012. Relative size discrimination and perception of the Ebbinghaus illusion in a bottlenose dolphin (Tursiops truncatus). Aquat. Mammals 38, 333–342. (doi:10.1578/AM.38.4.2012.333)

- 10. Sovrano VA, Albertazzi L, Salva OR. 2014. The Ebbinghaus illusion in a fish (Xenotoca eiseni). Anim. Cogn. 18, 533–542. (doi:10.1007/s10071-014-0821-5)

- 11. Endler JA, Endler LC, Doerr NR. 2010. Great bowerbirds create theaters with forced perspective when seen by their audience. Curr. Biol. 20, 1679–1684. (doi:10.1016/j.cub.2010.08.033)

- 12. Kelley LA, Endler JA. 2012. Illusions promote mating success in great bowerbirds. Science 335, 335–338. (doi:10.1126/science.1212443)

- 13. Salva OR, Rugani R, Cavazzana A, Regolin L, Vallortigara G. 2013. Perception of the Ebbinghaus illusion in four-day-old domestic chicks (Gallus gallus). Anim. Cogn. 16, 895–906. (doi:10.1007/s10071-013-0622-2)

- 14. Nakamura N, Watanabe S, Fujita K. 2014. A reversed Ebbinghaus–Titchener illusion in bantams (Gallus gallus domesticus). Anim. Cogn. 17, 471–481. (doi:10.1007/s10071-013-0679-y)

- 15. Nakamura N, Watanabe S, Fujita K. 2008. Pigeons perceive the Ebbinghaus-Titchener circles as an assimilation illusion. J. Exp. Psychol. Anim. Behav. Processes 34, 375–387. (doi:10.1037/0097-7403.34.3.375)

- 16. Byosiere S-E, Feng LC, Woodhead JK, Rutter NJ, Chouinard PA, Howell TJ, Bennett PC. 2016. Visual perception in domestic dogs: susceptibility to the Ebbinghaus–Titchener and Delboeuf illusions. Anim. Cogn. 20, 435–448. (doi:10.1007/s10071-016-1067-1)

- 17. de Fockert J, Davidoff J, Fagot J, Parron C, Goldstein J. 2007. More accurate size contrast judgments in the Ebbinghaus Illusion by a remote culture. J. Exp. Psychol. Hum. Percept. Perform. 33, 738–742. (doi:10.1037/0096-1523.33.3.738)

- 18. Davidoff J, Fonteneau E, Goldstein J. 2008. Cultural differences in perception: observations from a remote culture. J. Cogn. Cult. 8, 189–209. (doi:10.1163/156853708X358146)

- 19. Caparos S, Ahmed L, Bremner AJ, de Fockert JW, Linnell KJ, Davidoff J. 2012. Exposure to an urban environment alters the local bias of a remote culture. Cognition 122, 80–85. (doi:10.1016/j.cognition.2011.08.013)

- 20. Parrish AE, Beran MJ. 2014. When less is more: like humans, chimpanzees (Pan troglodytes) misperceive food amounts based on plate size. Anim. Cogn. 17, 427–434. (doi:10.1007/s10071-013-0674-3)

- 21. Van Ittersum K, Wansink B. 2012. Plate size and color suggestibility: the Delboeuf Illusion's bias on serving and eating behavior. J. Consum. Res. 39, 215–228. (doi:10.1086/662615)

- 22. Parrish AE, Beran MJ. 2014. Chimpanzees sometimes see fuller as better: judgments of food quantities based on container size and fullness. Behav. Processes 103, 184–191. (doi:10.1016/j.beproc.2013.12.011)

- 23. Parrish AE, Brosnan SF, Beran MJ. 2015. Do you see what I see? A comparative investigation of the Delboeuf illusion in humans (Homo sapiens), rhesus monkeys (Macaca mulatta), and capuchin monkeys (Cebus apella). J. Exp. Psychol. Anim. Learn. Cogn. 41, 395–405. (doi:10.1037/xan0000078)

- 24. Petrazzini MEM, Bisazza A, Agrillo C. 2016. Do domestic dogs (Canis lupus familiaris) perceive the Delboeuf illusion? Anim. Cogn. 20, 427–434. (doi:10.1007/s10071-016-1066-2)

- 25. Moutsiana C, De Haas B, Papageorgiou A, Van Dijk JA, Balraj A, Greenwood JA, Schwarzkopf DS. 2016. Cortical idiosyncrasies predict the perception of object size. Nat. Commun. 7, 12110. (doi:10.1038/ncomms12110)

- 26. Fagot J, Deruelle C. 1997. Processing of global and local visual information and hemispheric specialization in humans (Homo sapiens) and baboons (Papio papio). J. Exp. Psychol. Hum. Percept. Perform. 23, 429–442. (doi:10.1037/0096-1523.23.2.429)

- 27. Fremouw T, Herbranson WT, Shimp CP. 2002. Dynamic shifts of pigeon local/global attention. Anim. Cogn. 5, 233–243. (doi:10.1007/s10071-002-0152-9)

- 28. Aglioti S, DeSouza JF, Goodale MA. 1995. Size-contrast illusions deceive the eye but not the hand. Curr. Biol. 5, 679–685. (doi:10.1016/S0960-9822(95)00133-3)

- 29. Danckert JA, Sharif N, Haffenden AM, Schiff KC, Goodale MA. 2002. A temporal analysis of grasping in the Ebbinghaus illusion: planning versus online control. Exp. Brain Res. 144, 275–280. (doi:10.1007/s00221-002-1073-1)

- 30. Dyer AG. 2012. The mysterious cognitive abilities of bees: why models of visual processing need to consider experience and individual differences in animal performance. J. Exp. Biol. 215, 387–395. (doi:10.1242/jeb.038190)

- 31. Avarguès-Weber A, Giurfa M. 2013. Conceptual learning by miniature brains. Proc. R. Soc. B 280, 20131907. (doi:10.1098/rspb.2013.1907)

- 32. Avarguès-Weber A, Deisig N, Giurfa M. 2011. Visual cognition in social insects. Annu. Rev. Entomol. 56, 423–443. (doi:10.1146/annurev-ento-120709-144855)

- 33. Zhang S. 2006. Learning of abstract concepts and rules by the honeybee. Int. J. Comp. Psychol. 19, 318–341.

- 34. Srinivasan MV. 2010. Honey bees as a model for vision, perception, and cognition. Annu. Rev. Entomol. 55, 267–284. (doi:10.1146/annurev.ento.010908.164537)

- 35. Chittka L, Niven J. 2009. Are bigger brains better? Curr. Biol. 19, R995–R1008. (doi:10.1016/j.cub.2009.08.023)

- 36. Avarguès-Weber A, d'Amaro D, Metzler M, Dyer AG. 2014. Conceptualization of relative size by honeybees. Front. Behav. Neurosci. 8, 1–8. (doi:10.3389/fnbeh.2014.00080)

- 37. Howard SR, Avarguès-Weber A, Garcia J, Dyer AG. 2017. Free-flying honeybees extrapolate relational size rules to sort successively visited artificial flowers in a realistic foraging situation. Anim. Cogn. 20, 627–638. (doi:10.1007/s10071-017-1086-6)

- 38. Avarguès-Weber A, Dyer AG, Ferrah N, Giurfa M. 2015. The forest or the trees: preference for global over local image processing is reversed by prior experience in honeybees. Proc. R. Soc. B 282, 20142384. (doi:10.1098/rspb.2014.2384)

- 39. Avarguès-Weber A, Portelli G, Benard J, Dyer A, Giurfa M. 2010. Configural processing enables discrimination and categorization of face-like stimuli in honeybees. J. Exp. Biol. 213, 593–601. (doi:10.1242/jeb.039263)

- 40. Avarguès-Weber A, de Brito Sanchez MG, Giurfa M, Dyer AG. 2010. Aversive reinforcement improves visual discrimination learning in free-flying honeybees. PLoS ONE 5, e15370. (doi:10.1371/journal.pone.0015370)

- 41. Stach S, Benard J, Giurfa M. 2004. Local-feature assembling in visual pattern recognition and generalization in honeybees. Nature 429, 758–761. (doi:10.1038/nature02594)

- 42. Giurfa M, Hammer M, Stach S, Stollhoff N, Müller-Deisig N, Mizyrycki C. 1999. Pattern learning by honeybees: conditioning procedure and recognition strategy. Anim. Behav. 57, 315–324. (doi:10.1006/anbe.1998.0957)

- 43. Doherty MJ, Campbell NM, Tsuji H, Phillips WA. 2010. The Ebbinghaus illusion deceives adults but not young children. Dev. Sci. 13, 714–721. (doi:10.1111/j.1467-7687.2009.00931.x)

- 44. Knol H, Huys R, Sarrazin J-C, Jirsa VK. 2015. Quantifying the Ebbinghaus figure effect: target size, context size, and target-context distance determine the presence and direction of the illusion. Front. Psychol. 6, 1679. (doi:10.3389/fpsyg.2015.01679)

- 45. Roberts B, Harris MG, Yates TA. 2005. The roles of inducer size and distance in the Ebbinghaus illusion (Titchener circles). Perception 34, 847–856. (doi:10.1068/p5273)

- 46. Avarguès-Weber A, Dyer AG, Combe M, Giurfa M. 2012. Simultaneous mastering of two abstract concepts by the miniature brain of bees. Proc. Natl Acad. Sci. USA 109, 7481–7486. (doi:10.1073/pnas.1202576109)

- 47. Srinivasan MV, Zhang S, Rolfe B. 1993. Is pattern vision in insects mediated by 'cortical' processing? Nature 362, 539–540. (doi:10.1038/362539a0)

- 48. Avarguès-Weber A, Dyer AG, Giurfa M. 2011. Conceptualization of above and below relationships by an insect. Proc. R. Soc. B 278, 898–905. (doi:10.1098/rspb.2010.1891)

- 49. R Core Development Team. 2016. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing.

- 50. Zhang S, Srinivasan M, Horridge G. 1992. Pattern recognition in honeybees: local and global analysis. Proc. R. Soc. London B 248, 55–61. (doi:10.1098/rspb.1992.0042)

- 51. Horridge G, Zhang S, Lehrer M. 1992. Bees can combine range and visual angle to estimate absolute size. Phil. Trans. R. Soc. London B 337, 49–57. (doi:10.1098/rstb.1992.0082)

- 52. Giurfa M, Vorobyev M, Kevan P, Menzel R. 1996. Detection of coloured stimuli by honeybees: minimum visual angles and receptor specific contrasts. J. Comp. Physiol. A 178, 699–709. (doi:10.1007/BF00227381)

- 53. Goodale MA, Milner AD. 1992. Separate visual pathways for perception and action. Trends Neurosci. 15, 20–25. (doi:10.1016/0166-2236(92)90344-8)

- 54. Shimizu T, Bowers AN. 1999. Visual circuits of the avian telencephalon: evolutionary implications. Behav. Brain Res. 98, 183–191. (doi:10.1016/S0166-4328(98)00083-7)

- 55. Van Hateren J, Srinivasan M, Wait P. 1990. Pattern recognition in bees: orientation discrimination. J. Comp. Physiol. A 167, 649–654. (doi:10.1007/BF00192658)

- 56. Horridge G, Zhang S, O'Carroll D. 1992. Insect perception of illusory contours. Phil. Trans. R. Soc. London B 337, 59–64. (doi:10.1098/rstb.1992.0083)

- 57. Nieder A. 2002. Seeing more than meets the eye: processing of illusory contours in animals. J. Comp. Physiol. A 188, 249–260. (doi:10.1007/s00359-002-0306-x)

- 58. Srinivasan M, Lehrer M, Wehner R. 1987. Bees perceive illusory colours induced by movement. Vision Res. 27, 1285–1289. (doi:10.1016/0042-6989(87)90205-7)

- 59. Davey M, Srinivasan M, Maddess T. 1998. The Craik–O'Brien–Cornsweet illusion in honeybees. Naturwissenschaften 85, 73–75. (doi:10.1007/s001140050455)

- 60. Martin NH. 2004. Flower size preferences of the honeybee (Apis mellifera) foraging on Mimulus guttatus (Scrophulariaceae). Evol. Ecol. Res. 6, 777–782.

- 61. Howard SR, Avarguès-Weber A, Garcia JE, Stuart-Fox D, Dyer AG. 2017. Data from: Perception of contextual size illusions by honeybees in restricted and unrestricted viewing conditions. Dryad Digital Repository. (doi:10.5061/dryad.mc02p)
