Abstract

Background

Laboratory testing is an important clinical act with a valuable role in screening, diagnosis, management and monitoring of diseases or therapies. However, inappropriate laboratory test ordering is frequent, burdening health care spending and negatively influencing quality of care. Inappropriate tests may also result in false-positive results and potentially cause excessive downstream activities. Clinical decision support systems (CDSSs) have shown promising results in influencing the test-ordering behaviour of physicians and improving appropriateness. Order sets are a form of CDSS in which a limited set of evidence-based tests is proposed for a series of indications. When integrated in a computerised physician order entry (CPOE), they have been shown to reduce the volume of ordered laboratory tests, but convincing evidence that they improve appropriateness is lacking. The aim of this study is to evaluate the effect of order sets on the quality and quantity of laboratory test orders by physicians. We also aim to evaluate the effect of order sets on diagnostic error and explore the effect on downstream or cascade activities.

Methods

We will conduct a cluster randomised controlled trial in Belgian primary care practices. The study is powered for two outcomes. Primarily, we will measure the influence of our CDSS on the appropriateness of laboratory test ordering. We will also measure its influence on diagnostic error. Finally, we will explore the effects of our intervention on cascade activities triggered by abnormal results of inappropriate tests.

Discussion

We have designed a study that should be able to demonstrate whether a CDSS aimed at diagnostic testing not only improves appropriateness but is also safe with respect to diagnostic error. These findings will inform a larger, nationwide implementation of this CDSS.

Trial registration

ClinicalTrials.gov, NCT02950142.

Background

Laboratory testing is an important clinical act with a valuable role in screening, diagnosis, management and monitoring of diseases or therapies. Thirty percent of patient contacts in primary care result in ordering one or more laboratory tests [1, 2]. With 370 million tests annually, laboratory testing is the most frequent medical activity in Belgium [3]. There is large variation in the appropriateness of these orders [4–7]. Rates of inappropriate laboratory test ordering have been estimated to be as high as 30% [8, 9]. Besides the burden this places on health care spending, inappropriate ordering also negatively affects quality of care. Inappropriate tests may result in false-positive results and potentially cause excessive downstream activities. Downstream or cascade activities are those medical acts that result from abnormal test results. This phenomenon is often referred to as the Ulysses effect, and it is generally assumed that the consequences of inappropriate test ordering are larger for the downstream activities than for the tests themselves [10]. To date, little research has been done on these cascades, and the true extent of the Ulysses effect in primary care remains unclear [11].

Education-based interventions, feedback-based interventions and clinical decision support systems (CDSSs) have shown promising results in influencing the test-ordering behaviour of physicians and improving appropriateness [1, 9, 12, 13]. These findings, however, tend not to be generalisable because many studies either focus on very limited indications or measure testing volume rather than appropriateness. Order sets, a form of CDSS in which a limited set of evidence-based tests is proposed for a series of indications, have been shown to be effective in reducing the volume of ordered laboratory tests when integrated in a computerised physician order entry (CPOE) [14, 15]. However, good evidence that the use of order sets aimed at multiple indications improves the appropriateness of laboratory test ordering is still lacking. A barrier to adhering to evidence-based policy is the fear of missing important pathology and the liability this may create [2]. There is currently no evidence on whether increasing the appropriateness of laboratory testing influences morbidity through diagnostic error or delay. The aim of this study is to evaluate the effect of order sets on the quality and quantity of laboratory test orders by physicians. We also aim to evaluate the effect of order sets on diagnostic error and explore the effect on downstream or cascade activities.

Methods

Trial design

To evaluate this intervention, we will conduct a cluster randomised controlled trial in Belgian primary care practices. The participants will be general practitioners (GPs) working in primary care practices (PCPs) affiliated with one of three collaborating laboratories in the Leuven, Ghent or Antwerp regions. These laboratories are currently starting to implement web-based CPOEs integrated in the electronic health record (EHR) of primary care physicians.

Participants

PCPs will be considered eligible if all the physicians active in the practice agree to be involved in the study. Individual physicians will be considered eligible if they:

Work with one of the three collaborating laboratories: Medisch Centrum Huisartsen (MCH), Anacura or Algemeen Medisch Laboratorium (AML)

Agree to use the online CPOE for their laboratory test orders

Use a computerised EHR for patient care

Have little or no experience in the use of order sets within a CPOE

Agree to the terms in the clinical study agreement

We will primarily recruit GPs with no prior experience in the use of order sets. The rationale for this exclusion criterion is that GPs who already use some form of order set would not stop doing so if they were allocated to the control group. Experience in the use of a CPOE will not be an exclusion criterion, as we wish to include GPs with varying experience in the use of IT.

No GPs will be excluded on grounds other than those above. Age, demographics, prior use of a CPOE (without order sets), prior laboratory ordering behaviour and similar characteristics will not be used to exclude eligible GPs. This will provide us with a real-life, representative sample of GPs.

Interventions

Currently, most laboratory tests are ordered through a paper-based system. GPs request or take a blood sample from a patient, order tests manually on a paper form by ticking boxes next to each test, manually add the patient's contact details to the form and send both the form and the test tubes in a plastic bag to the laboratory. Ambulatory laboratories in primary care have gradually started adopting CPOEs for ordering laboratory tests. CPOEs have several benefits for both laboratories and GPs: they reduce mistakes during the pre-analytical phase, improve timeliness of reporting, reduce mistakes during additional orders on the same sample and reduce the overall turnaround time of samples [16, 17]. The adoption of CPOEs also creates the opportunity to provide CDSS through order sets.

We will use two different types of CPOE in our study:

Lab Online (Moonchase) implemented at AML and MCH and

E-Lab implemented at Anacura

Both systems are online platforms that allow the ordering of laboratory tests and the review of lab results through a web-based interface. They are linked to the EHR and integrate patient contact details through an Extensible Markup Language (XML) message. To date, no patient-specific medical data is shared between the EHR and the CPOE. When a physician initiates a laboratory test order through the EHR, a web browser opens in which the physician can order laboratory tests. Currently, users are guided to an overview of commonly used laboratory tests, very much like a paper-based form. In our intervention, physicians will be prompted to enter the indication(s) for ordering laboratory tests, either through a searchable drop-down menu of common indications or by ticking boxes in a list of indications. Selecting one or more of these indications opens a new window showing the appropriate tests for these indications. In this window, the user can accept the panel without changes, cancel one or more of the proposed tests or order additional tests. The user will not be restricted from ordering any test but will be ‘nudged’ towards ordering only the appropriate tests.

We developed a series of order sets based on recommendations available through the EBMPracticeNet platform [18]. We chose this database because it contains context-specific guidelines on more than 1000 conditions or situations, including guidelines from the Flemish College of Family Physicians on laboratory testing for 20 different indications commonly seen in general practice [19–21]. From these guidelines, we extracted recommendations on 17 common indications to be investigated in this trial. These order sets were translated into decision support rules that suggest a panel of recommended tests when the physician records the indications or conditions for testing.
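The indication-driven ordering flow described above can be sketched as a small rule table. This is a minimal sketch under stated assumptions: the indication and test names (`indication_A`, `test_1` and so on) are hypothetical placeholders, not the EBMPracticeNet recommendations, and `suggest_panel` and `finalise_order` are illustrative names, not part of the Lab Online or E-Lab interfaces.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# HYPOTHETICAL rule table: each indication maps to a recommended panel.
# Placeholder names only; these are not the guideline-derived order sets.
ORDER_SETS: dict[str, frozenset[str]] = {
    "indication_A": frozenset({"test_1", "test_2", "test_3"}),
    "indication_B": frozenset({"test_2", "test_4"}),
}


@dataclass
class LabOrder:
    suggested: frozenset[str]
    ordered: set[str] = field(default_factory=set)

    @property
    def outside_panel(self) -> set[str]:
        """Tests added beyond the suggested panel: allowed, but identifiable."""
        return self.ordered - self.suggested


def suggest_panel(indications: list[str]) -> frozenset[str]:
    """Union of the recommended panels for all selected indications.

    Unknown indications contribute no tests (an assumption of this sketch).
    """
    panel: set[str] = set()
    for indication in indications:
        panel |= ORDER_SETS.get(indication, frozenset())
    return frozenset(panel)


def finalise_order(indications, cancelled=(), added=()) -> LabOrder:
    """Start from the suggested panel; the user may cancel or add tests freely.

    Nothing is blocked, which mirrors the 'nudge' rather than a restriction.
    """
    suggested = suggest_panel(indications)
    ordered = (set(suggested) - set(cancelled)) | set(added)
    return LabOrder(suggested=suggested, ordered=ordered)


order = finalise_order(["indication_A", "indication_B"],
                       cancelled=["test_3"], added=["test_9"])
```

Taking the union of panels is one plausible way to combine several selected indications; the protocol does not state how overlapping order sets are merged.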

Implementation

To maximise the effects of our intervention, we planned an implementation strategy using the GUIDES checklist as a reference [22]. We conducted interviews and panel meetings with clinical biologists and information technologists to validate the usability of each order set. We conducted focus group interviews with GPs to identify barriers to and facilitators of the use of our intervention in daily practice, and we used these findings to tailor our intervention where feasible. Currently, the intervention is being tested in three GP practices and evaluated for usability and acceptability. At the end of the trial, all participating GPs will be surveyed to identify any remaining factors that may have influenced its use.

Outcomes

Primary outcome

The definition of (in)appropriateness is broad and can be interpreted in various ways. In this study, we will use a restrictive definition of appropriateness, in which a test is considered inappropriate if there is no clear indication for ordering it [8]. For instance, if five tests are considered appropriate for condition A, then any additional test ordered for condition A is considered inappropriate. We will also count underutilisation as inappropriate, that is, a test that is indicated but not ordered within a certain time frame for a given condition. For instance, if test X is considered appropriate for condition A when it is ordered once per year, then failing to order test X in that year counts as an inappropriate (missing) test.
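The two halves of this definition (overutilisation and underutilisation) can be made concrete for a single patient and condition. This is a minimal sketch: the test names are hypothetical, and counting each missing test once in the denominator of the proportion is an assumption, since the protocol does not spell out the exact denominator.

```python
from __future__ import annotations


def classify_order(ordered: set[str], appropriate: set[str],
                   yearly_required: set[str],
                   ordered_this_year: set[str]) -> dict[str, int]:
    """Count appropriate, overused and missing tests for one patient and condition."""
    return {
        # Ordered tests that belong to the appropriate set for the condition.
        "appropriate": len(ordered & appropriate),
        # Overutilisation: ordered without a clear indication for this condition.
        "overused": len(ordered - appropriate),
        # Underutilisation: required once per year but not ordered within that year.
        "missing": len(yearly_required - ordered_this_year),
    }


def proportion_appropriate(counts: dict[str, int]) -> float:
    # ASSUMPTION: each missing test enters the denominator as one inappropriate test.
    total = counts["appropriate"] + counts["overused"] + counts["missing"]
    return counts["appropriate"] / total if total else float("nan")


# Hypothetical condition A with five appropriate tests; t5 is required once per year.
counts = classify_order(
    ordered={"t1", "t2", "t6"},
    appropriate={"t1", "t2", "t3", "t4", "t5"},
    yearly_required={"t5"},
    ordered_this_year={"t1", "t2", "t6"},
)
share = proportion_appropriate(counts)
```

Here t6 is counted as overused and the absent yearly test t5 as missing, so two of the four counted tests are appropriate.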

Secondary outcomes

Because GPs act as gatekeepers and care for a variety of complex patients, they are vulnerable to diagnostic errors [23]. Diagnostic errors are defined as diagnoses that were unintentionally delayed, wrong or missed [24]. In a classification of diagnostic error, among the most important reasons for diagnostic error in laboratory testing were the failure or delay in ordering needed tests and the ordering of the wrong tests [25]. Despite this vulnerability, reported incidences of diagnostic error in primary care remain low. Few reliable figures on diagnostic error due to laboratory testing exist, but several studies estimate it at between less than 0.1% and 2.5% [26, 27]. An important concern in interpreting these results is that they are often based on retrospective analyses subject to hindsight bias [28]. Despite these apparently low figures, fear of diagnostic error (and the related liability) is an important concern for physicians when ordering laboratory tests [2]. Our secondary aim is to demonstrate that improving the appropriateness of laboratory testing does not result in more diagnostic errors.

We will define diagnostic error as a diagnosis that was unintentionally delayed (sufficient information was available earlier), wrong (another diagnosis was made before the correct one) or missed (no diagnosis was made), in accordance with the definition of diagnostic error by Graber et al. [24]. Our order sets recommend tests for initial testing (when a condition or disease is suspected) or for monitoring (when a condition or disease has been diagnosed, but follow-up for the early detection of potential side-effects of treatment or to monitor the evolution of the condition is warranted). In both situations, the potential for diagnostic error is present. We present some examples of diagnostic error in the Appendix.

Measuring this outcome is a challenge. We will use a stepped approach, combining physicians’ reporting of events, chart review and direct patient interviews of a sample of patients. The rationale for adding patient interviews is that some diagnostic errors do not result in additional visits, further investigations or change in practice and will not be recorded in the EHR. Interviewing the patient is the only way to detect these diagnostic errors. The outcome will be measured as the number of diagnostic errors in each arm.

We will also evaluate the effect of evidence-based order sets on test volume. Inappropriateness is not only a result of overutilisation but also of underutilisation, and improving appropriateness may not necessarily result in reducing test volume [8]. We will measure this outcome to contribute to this discussion. This outcome will be measured as the number of tests ordered in each arm for the 17 indications individually and for all laboratory tests ordered by physicians.

Exploratory outcomes

In a subset of 250 laboratory panels, we will review the corresponding patient charts and identify all those activities originating directly from the results of the ordered laboratory tests. We will use the methods by Houben et al. [11] as a guide to identify those laboratory panels that could potentially lead to downstream or cascade activities. We will focus on inappropriate tests in both the control and intervention arm to evaluate the extent of downstream activities and compare the difference in downstream or cascade activities between abnormal and normal results.

Sample size

Our study involves multiple levels of clustering, which are not independent of each other. The lowest level of analysis is the individual laboratory test included in our study. We must account for the fact that tests are not ordered entirely independently of each other for a given patient; for instance, a white blood cell count is often ordered together with a white blood cell differential. Additionally, there is clustering at the level of the physician. When the same physician orders tests for various patients over time, these orders are not independent of each other. For instance, if a physician often requests chloride in patients taking diuretics, this will probably be the case for all of that physician's patients taking diuretics. Finally, GPs often work together in primary care practices. Physicians working in the same practice tend to have similar ordering behaviour, implying that the orders made by two physicians in the same practice are not independent of each other. We therefore have four-level clustering comprising test, patient, physician and primary care practice, with the following assumptions: multiple study tests per patient, assumed to be 5 per patient; multiple patients per physician, assumed to be 42 per 3 months assuming 70% use of the online intervention [14]; and multiple physicians per primary care practice (PCP), assumed to be 2.35 [29].

Each of these clustering levels inflates the required sample size [30]. Estimates of intracluster correlation coefficients (ICCs) for process of care measures in primary care are between 0.05 and 0.15 [31, 32]; however, estimates for ICCs regarding the appropriateness of laboratory tests have been shown to vary between 0.04 and 0.288 [33]. To our knowledge, no ICCs have been published for clustering on the various levels observed in our trial; therefore, we have chosen to use a very conservative ICC estimate of 0.2 for each level of clustering in our sample size calculations, probably overestimating the design effect.
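Why each clustering level inflates the sample size can be illustrated with the classic single-level design effect, 1 + (m − 1) × ICC, where m is the cluster size. This is a sketch under an assumption: the protocol does not state how the four levels were combined, so this formula is not expected to reproduce the 7305-test figure; it only shows the size of the inflation for two of the stated levels at the conservative ICC of 0.2.

```python
def design_effect(cluster_size: float, icc: float) -> float:
    """Classic variance inflation for one level of clustering: 1 + (m - 1) * ICC."""
    return 1.0 + (cluster_size - 1.0) * icc


ICC = 0.2  # conservative value used in the protocol for each level

# 5 study tests per patient (tests clustered within patients).
tests_within_patient = design_effect(5, ICC)

# 42 patients per physician over 3 months (patients clustered within physicians).
patients_within_physician = design_effect(42, ICC)
```

With these inputs the factors are 1.8 and 9.2, which shows why the choice of a conservative ICC dominates the final sample size.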

A trial with 80% power to detect a 10% difference in appropriateness (in this case, from 70 to 80%) at a significance level of 5% would require 586 tests in total across both arms. Adjusting for the multilevel clustering inflates this to 7305 tests, or 35 physicians in a trial lasting 3 months.
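The unclustered figure can be checked with the standard normal-approximation formula for comparing two proportions, using a pooled variance under the null hypothesis and a two-sided α. The protocol does not name the formula it used, so this is an assumption; with p1 = 0.70 and p2 = 0.80 it gives about 293 tests per arm, or about 586 in total, consistent with the stated figure.

```python
from math import sqrt
from statistics import NormalDist


def n_per_arm_two_proportions(p1: float, p2: float,
                              alpha: float = 0.05, power: float = 0.80) -> float:
    """Unclustered per-arm sample size for two proportions (two-sided alpha).

    Uses the pooled variance under H0 for the alpha term and the unpooled
    variance under H1 for the power term; no continuity correction.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return numerator / (p1 - p2) ** 2


n_arm = n_per_arm_two_proportions(0.70, 0.80)
n_total = 2 * n_arm
```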

We also aim to power our study for its secondary outcome. Assuming 2.5% diagnostic errors in primary care, we calculated that a trial with 80% power to demonstrate non-inferiority with a margin of 1% at a significance level of 5% would need 6032 patients in total. Previous studies have shown that in primary care, ICCs for clinical outcomes are lower than those for process outcomes, and have estimated the ICC for adverse effects to be around 0.025 [34]. Although this may seem counterintuitive, a physician is less likely to consistently miss the same diagnosis than to consistently order the same test for the same indication: a diagnostic error is more likely to lead to a change in practice than over- or underutilisation of a laboratory test is. For this outcome, the unit of analysis is the individual patient rather than the individual test as for appropriateness, which removes one level of clustering. Assuming that each physician orders laboratory tests for 42 patients over a 3-month period, we would need to recruit 290 physicians. Our aim is to recruit 300 physicians, and we expect the trial to include around 12,600 patients and a total of 63,000 tests.
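The secondary-outcome figures can be approximated with a textbook non-inferiority calculation followed by a physician-level design effect. This sketch rests on assumptions the protocol does not state: a one-sided α of 0.05, equal true error rates of 2.5% in both arms, and a single-level design effect 1 + (42 − 1) × 0.025 that ignores practice-level clustering. Under these assumptions it lands close to, but not exactly on, the reported 6032 patients and 290 physicians.

```python
from math import ceil
from statistics import NormalDist


def n_per_arm_noninferiority(p: float, margin: float,
                             alpha: float = 0.05, power: float = 0.80) -> float:
    """Per-arm n for non-inferiority of two proportions.

    ASSUMPTIONS: same true rate p in both arms, one-sided alpha.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha)
    z_beta = NormalDist().inv_cdf(power)
    return (z_alpha + z_beta) ** 2 * 2 * p * (1 - p) / margin ** 2


def design_effect(cluster_size: float, icc: float) -> float:
    return 1 + (cluster_size - 1) * icc


PATIENTS_PER_PHYSICIAN = 42   # per 3-month period, as in the protocol
ICC_CLINICAL = 0.025          # ICC for adverse effects cited in the protocol

patients_total = 2 * ceil(n_per_arm_noninferiority(0.025, 0.01))
inflated = patients_total * design_effect(PATIENTS_PER_PHYSICIAN, ICC_CLINICAL)
physicians = ceil(inflated / PATIENTS_PER_PHYSICIAN)

# Expected enrolment for 300 recruited physicians and 5 study tests per patient.
expected_patients = 300 * PATIENTS_PER_PHYSICIAN
expected_tests = expected_patients * 5
```

The last two lines reproduce the protocol's enrolment arithmetic (12,600 patients and 63,000 tests) directly from its stated assumptions.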

Assignment of interventions

Randomisation of PCPs will be done using an electronic random number generator, and the allocation will be concealed from the research facility. The research facility will remain blinded to the allocation, but the collaborating laboratories will be able to identify intervention and control PCPs. Allocation will be concealed from all PCPs until the start of the study. At the start of the study, GPs will be aware of the intervention and of their allocation to either the intervention or control arm; however, patients and the research facility will remain blinded to this allocation during the study and until after the data analysis.
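Because whole practices are randomised, every GP in a practice shares that practice's arm. A seeded shuffle is one simple way to produce a reproducible cluster allocation list. This is a minimal sketch assuming 1:1 allocation without stratification, hypothetical practice identifiers and an arbitrary seed; the protocol does not describe its generator or any stratification.

```python
import random


def randomise_practices(pcp_ids, seed):
    """Allocate whole practices (clusters) 1:1 to intervention or control.

    With an odd number of practices, control receives the extra practice.
    """
    rng = random.Random(seed)        # seeded so the list can be regenerated and audited
    shuffled = sorted(pcp_ids)       # sort first so the result ignores input order
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {pcp: ("intervention" if i < half else "control")
            for i, pcp in enumerate(shuffled)}


# Hypothetical practice identifiers and seed.
practices = [f"PCP{i:03d}" for i in range(11)]
allocation = randomise_practices(practices, seed=2017)
```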

Data collection, management and analysis

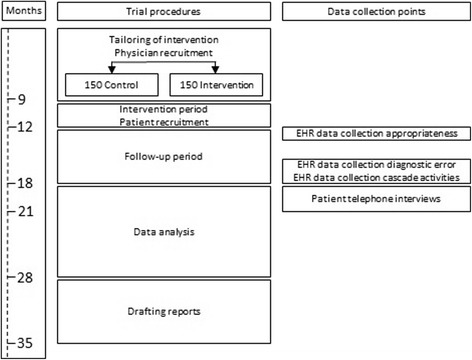

The Scientific Institute for Public Health will facilitate data collection in compliance with privacy legislation through the Healthdata platform. This platform allows secure, encrypted and pseudonymised data transfer from multiple sources into a central data warehouse. Coded data from a single patient can be collected through this system from more than one source. The HD4DP (Healthdata for data providers) tool captures the data from within the EHR or LIS (laboratory information system) and allows the data provider to supplement it with additional data that is not stored in the EHR in a structured format. The source data remains in the information system of the data provider, and only an excerpt of this source data is transferred to the platform. Data can be collected continuously or in a one-time fashion depending on the resource. All data is transferred through encrypted channels, using highly secured national eHealth encryption algorithms. The research facility can view the coded data using the HD4RES (Healthdata for researchers) tool. The Scientific Institute for Public Health will maintain the data warehouse, including all coded data. The data warehouse is highly secured, and access to the database is only possible through an extranet connection that does not allow full access to the data. Only a small number of members of the Scientific Institute for Public Health are authorised to access the full data. Figure 1 illustrates the various phases of the trial, including the data collection points.

Fig. 1.

Flow of the study including data collection points, assessments and reports. EHR: electronic health record
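The idea that coded data from one patient can be linked across several sources, without the researcher seeing the identifier, can be illustrated with a keyed hash. This is only an illustrative stand-in: the protocol does not describe the Healthdata or eHealth coding mechanism, so HMAC-SHA256, the secret key and the record fields below are all assumptions, and `pseudonymise` and `merge_sources` are hypothetical helpers.

```python
import hashlib
import hmac


def pseudonymise(patient_id: str, secret_key: bytes) -> str:
    """Deterministic keyed hash (HMAC-SHA256, an illustrative stand-in).

    The same identifier always yields the same code, but the code cannot be
    recomputed without the secret key.
    """
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def merge_sources(ehr_rows, lis_rows, secret_key):
    """Link records from two data providers on the pseudonymised code only.

    ASSUMPTION: at most one row per patient per source (later rows overwrite).
    """
    merged = {}
    for source, rows in (("ehr", ehr_rows), ("lis", lis_rows)):
        for row in rows:
            code = pseudonymise(row["patient_id"], secret_key)
            # Drop the direct identifier before the record leaves the provider.
            record = {k: v for k, v in row.items() if k != "patient_id"}
            merged.setdefault(code, {})[source] = record
    return merged


KEY = b"hypothetical-demo-key"
linked = merge_sources([{"patient_id": "P1", "indication": "x"}],
                       [{"patient_id": "P1", "test": "y"}], KEY)
```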

Baseline data to compare participating physicians will be obtained at the start of the study. This data includes the size of the PCP (how many active physicians), age of the participating physician at the start of the study, sex of the participating physician, number of active years in general practice of the participating physician at the start of the study, average number of tests per laboratory order in the 3 months prior to the study and the level of experience in the use of a CPOE prior to the study.

Physician characteristics will be compared between groups using generalised estimating equations (GEEs), with PCP as the clustering variable. An independent working correlation matrix will be used to account for correlations within the clusters. For continuous variables, an identity link and normal distribution will be used; for binary variables, a logit link and binomial distribution; and for categorical variables, a cumulative logit link and multinomial distribution. Means and proportions per group will be estimated from the model. Patient characteristics will be compared using the same methodology, but with the physician as the clustering variable.

Primary outcome analysis

To assess differences between the allocated groups in the proportion of appropriate tests, a logistic GEE model will be used, as the marginal proportions are of interest rather than the individual probabilities of a test being appropriate.

The logistic GEE model will include the allocated group as a factor and patients as the clustering variable. The effect of the intervention will be expressed as the difference in proportions and will be presented together with its associated 95% confidence interval. The proportion of appropriate tests in the two allocated groups will also be estimated from the GEE model and presented with their 95% confidence intervals.
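For a model whose only covariate is the allocated group, with an independence working correlation, the GEE marginal proportion in each arm reduces to the pooled proportion in that arm, and the robust (sandwich) variance reduces, via the delta method, to a sum of squared cluster-level residuals divided by the squared number of tests. The sketch below implements only that special case, without small-sample corrections; it is not the authors' analysis code, and in practice a full GEE routine would be used. Each cluster would be one patient here, and the counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def robust_proportion(clusters):
    """clusters: list of (n_appropriate, n_tests), one tuple per cluster.

    Returns the marginal proportion and its cluster-robust (sandwich) variance.
    """
    total_y = sum(y for y, _ in clusters)
    total_n = sum(n for _, n in clusters)
    p = total_y / total_n
    # Squared cluster-level residuals, summed and scaled by N^2.
    meat = sum((y - p * n) ** 2 for y, n in clusters)
    return p, meat / total_n ** 2


def difference_in_proportions(intervention, control, level=0.95):
    p1, v1 = robust_proportion(intervention)
    p0, v0 = robust_proportion(control)
    diff = p1 - p0
    se = sqrt(v1 + v0)  # the arms contain disjoint clusters, so estimates are independent
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return diff, (diff - z * se, diff + z * se)


# Hypothetical (appropriate, total) test counts per patient.
diff, (lo, hi) = difference_in_proportions(
    intervention=[(4, 5), (5, 5), (3, 5)],
    control=[(3, 5), (4, 5), (3, 5)],
)
```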

Appropriateness for the composite of all study tests will be compared between the intervention and control groups. Furthermore, an analysis will be performed that only includes patients who have no indications other than the 17 study indications. When a patient also has an indication that is not under evaluation, tests ordered for that indication would be classified as inappropriate even though they may be appropriate; this additional analysis corrects for that overestimation of inappropriate tests. The analyses will be performed on all patients from all physicians according to their allocated group.

Secondary outcome analysis: diagnostic error

The proportion of patients with a missed diagnosis will be analysed by means of a logistic GEE model that includes a factor for allocated group and uses PCP as the clustering variable. An independent working correlation matrix will be used. The proportion of patients with a missed diagnosis and associated 95% confidence intervals will be estimated from the model.

The difference in proportions will be obtained by subtracting the two proportions. The associated standard error will be calculated from the rules for the variance of a difference between two independent estimates. The 95% confidence interval for the difference will be calculated.

The non-inferiority limit for missed diagnoses is 1%, i.e. the intervention will be deemed non-inferior if the difference between the allocated groups (intervention − control) is less than 1 percentage point. Therefore, the intervention will be deemed non-inferior if the upper limit of the 95% confidence interval for the difference lies below 1%.
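The decision rule in the two paragraphs above (subtract the proportions, combine the standard errors as for two independent estimates, compare the upper 95% limit with the 1% margin) can be written out directly. The input proportions and standard errors below are hypothetical illustrations, not trial results.

```python
from math import sqrt
from statistics import NormalDist


def noninferior(p_int, se_int, p_ctrl, se_ctrl, margin=0.01, level=0.95):
    """Return (decision, difference, upper CI limit) for intervention - control."""
    diff = p_int - p_ctrl
    se = sqrt(se_int ** 2 + se_ctrl ** 2)  # variance of a difference of independent estimates
    upper = diff + NormalDist().inv_cdf(0.5 + level / 2) * se
    return upper < margin, diff, upper


# Hypothetical missed-diagnosis proportions and standard errors.
ok, diff_a, upper_a = noninferior(0.022, 0.002, 0.020, 0.002)
not_ok, diff_b, upper_b = noninferior(0.027, 0.002, 0.020, 0.002)
```

In the first case the upper limit is about 0.0075, below the margin; in the second it is about 0.0125, so non-inferiority would not be shown.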

As for the primary endpoint, the analysis will be performed for all 17 study indications together, and an additional analysis will include only patients who have no indications other than the 17 study indications.

Secondary outcome analysis: test volume and cascade activities

The total number of tests and cascade activities will be analysed using a Poisson GEE model that includes the allocated group as the factor in the model and the physician as the clustering variable. No offset will be used. The number of tests per patient for each group will be estimated from the model and presented together with their associated 95% confidence intervals. The effect of the intervention will be presented as the ratio between the two numbers with its 95% confidence interval. Statistical significance will be assessed at a significance level of 5%.
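In the same special case as for the proportions (allocated group as the only factor, no offset), a Poisson GEE gives each arm's mean count per patient as the pooled mean, and its robust variance on the log scale is the sum of squared cluster residuals divided by (N × mean)². This sketch assumes an independence working correlation, which the protocol does not state for this model, and uses hypothetical per-patient test counts grouped by physician.

```python
from math import exp, log, sqrt
from statistics import NormalDist


def robust_mean_count(clusters):
    """clusters: list of per-physician lists, one test count per patient.

    Returns the pooled mean count and the sandwich variance of log(mean).
    ASSUMPTION: the mean is positive.
    """
    total = sum(sum(c) for c in clusters)
    n = sum(len(c) for c in clusters)
    mu = total / n
    meat = sum((sum(c) - len(c) * mu) ** 2 for c in clusters)
    return mu, meat / (n * mu) ** 2


def rate_ratio(intervention, control, level=0.95):
    """Ratio of mean counts (intervention / control) with a Wald CI on the log scale."""
    mu1, v1 = robust_mean_count(intervention)
    mu0, v0 = robust_mean_count(control)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    log_rr = log(mu1 / mu0)
    half_width = z * sqrt(v1 + v0)
    return mu1 / mu0, (exp(log_rr - half_width), exp(log_rr + half_width))


# Hypothetical test counts per patient, grouped by physician.
rr, (rr_lo, rr_hi) = rate_ratio(
    intervention=[[4, 5, 3], [5, 4]],
    control=[[6, 5], [5, 6, 7]],
)
```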

Discussion

The effects of decision support have been shown to be modest and often inconsistent [35, 36]. Some CDSSs have been implemented in settings not receptive to these systems, lacked essential technical qualities or standards, suffered from usability issues or were not trustworthy enough [37, 38]. In this study, we used insights into the mechanisms that influence the effectiveness of CDSSs to tailor our intervention where feasible. Moreover, given the high rates of inappropriateness, the potential for improvement is substantial. We developed 41 different order sets (including sets with optional tests according to specific patient characteristics) for a total of 18 different indications.

The results of this study will reflect the true effect of a CDSS in regular practice, because we aim to include a large sample of GPs with varying degrees of experience in the use of a CPOE. We realise that a feature critical to the success of our CDSS is the degree to which physicians use the laboratory CPOE. In the tailoring strategy for our study, we focussed on this issue and aimed to improve the order sets in a way that would make the care processes involved in ordering laboratory tests more efficient. We will investigate any shortcomings of our intervention at the end of the trial to further improve its efficiency and effectiveness. Additionally, we have designed a study that should be able to demonstrate whether a CDSS aimed at diagnostic testing not only improves appropriateness but is also safe with respect to diagnostic error. Increasing attention is being paid to patient safety as an important goal for health care, and recommendations have been made on implementing safety systems [39]. Our study will contribute to the discussion on how CDSSs can support not only the process of care but also safe care. These findings will inform a larger, nationwide implementation of this CDSS. These plans are facilitated by the fact that most laboratories in Belgium use the same software for their CPOE.

Acknowledgements

We would like to thank Mario Berth, An De Vleeschouwer and Eric De Schouwer for their input and trial preparations.

Funding

Funding for this study was obtained through the Belgian Health Care Knowledge Centre Clinical Trials Programme under research agreement KCE16011. The trial sponsor is the University Hospital Leuven.

Availability of data and materials

The data that support the findings of this study are available from University Hospital Leuven, but restrictions apply to the availability of these data, which are to be used under licence for the current study and so will not be publicly available. Data are however available from the authors upon reasonable request and with permission of University Hospital Leuven and the Belgian Health Care Knowledge Centre.

Abbreviations

- AML

Algemeen Medisch Laboratorium

- CDSSs

Clinical decision support systems

- CPOE

Computerised physician order entry

- EHR

Electronic health record

- GP

General practitioner

- HD4DP

Healthdata for data providers

- HD4RES

Healthdata for researchers

- MCH

Medisch Centrum Huisartsen

- PCP

Primary care practice

- XML

Extensible Markup Language

Appendix: examples of diagnostic error

Example of diagnostic error in initial testing (wrong test)

A physician suspects that his patient, who presented with a swollen knee, suffers from an acute attack of gout. He decides to test his patient for plasma uric acid to confirm his suspicion. Plasma uric acid levels are elevated, apparently confirming his suspicion, and he prescribes the patient a non-steroidal anti-inflammatory drug. Several days later, the patient returns with fever, increased swelling of the knee and intensified pain. The physician aspirates some synovial fluid and notices pus in the sample. The patient did not suffer from a gout attack but from a septic arthritis. A diagnostic aspiration of synovial fluid would have diagnosed the condition earlier.

Example of diagnostic error due to not testing

A female patient consults with general fatigue. The GP, knowing that the patient has a stressful life, explains the fatigue as a reaction to this stress. He considers laboratory testing not relevant in this case. A few months later the patient is diagnosed with hypothyroidism.

Example of diagnostic error due to superfluous testing

A young adult patient consults because of decreased power during his weekly football training. The GP performs a laboratory test which shows increased Epstein-Barr antibodies (EBV-IgG). The GP ascribes the complaints to a recovering mononucleosis infection and misses the real reason for the complaints, which is overtraining. EBV-IgG indicates an old infection and has no diagnostic value in this case but led the GP to a wrong conclusion.

Example of diagnostic error in follow-up testing

A patient consults his physician for a yearly check-up of hypertension, for which he has been prescribed a thiazide diuretic. The physician checks his blood pressure and finds it well controlled. He confirms the treatment and prescribes refills without performing any laboratory tests. Several weeks later, the patient presents to the emergency department because of a sudden syncope. In the hospital, the physicians note that he has hypokalaemia due to the thiazide treatment. This is an example of a missed diagnosis that should have been made during the yearly check-up of the hypertension treatment.

Authors’ contributions

All authors contributed to framing the research question and designing the study and its methodology. ND drafted the protocol under the supervision of BA. SF was involved in drafting the statistical analysis. All authors commented on subsequent drafts of the protocol and approved the final version.

Ethics approval and consent to participate

Before the start of the study, we will propose a research agreement to the physicians that stipulates the trial objectives and the formalities on data collection. Study physicians will seek informed consent from all patients for whom laboratory tests are ordered for one or more of the 17 study indications. Only data from patients who consent to the terms of the informed consent form will be included in the study.

Ethics committee approval for this study was obtained from the University Hospital Leuven Medical Ethics Committee on 26 July 2017. Additional authorisation for the use of medical and personal data was requested from the Commission for the Protection of Privacy Sector Committee Health.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Cadogan SL, Browne JP, Bradley CP, Cahill MR. The effectiveness of interventions to improve laboratory requesting patterns among primary care physicians: a systematic review. Implement Sci. 2015;10:167. doi: 10.1186/s13012-015-0356-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Hickner J, Thompson PJ, Wilkinson T, Epner P, Shaheen M, Pollock AM, et al. Primary care physicians’ challenges in ordering clinical laboratory tests and interpreting results. J Am Board Fam Med. 2014;27:268–274. doi: 10.3122/jabfm.2014.02.130104. [DOI] [PubMed] [Google Scholar]

- 3.Statistieken terugbetaalde bedrage en akten van artsen en tandartsen. RIZIV. http://www.riziv.fgov.be/nl/statistieken/geneesk-verzorging/2015/Paginas/terugbetaalde_bedragen_akten_arts_tandarts.aspx#.WecMTlu0O71. Accessed 18 Oct 2017.

- 4.Driskell OJ, Holland D, Hanna FW, Jones PW, Pemberton RJ, Tran M, et al. Inappropriate requesting of glycated hemoglobin (Hb A1c) is widespread: assessment of prevalence, impact of national guidance, and practice-to-practice variability. Clin Chem. 2012;58:906–915. doi: 10.1373/clinchem.2011.176487. [DOI] [PubMed] [Google Scholar]

- 5. Davis P, Gribben B, Lay-Yee R, Scott A. How much variation in clinical activity is there between general practitioners? A multi-level analysis of decision-making in primary care. J Health Serv Res Policy. 2002;7:202–208. doi: 10.1258/135581902320432723.

- 6. Leurquin P, Van Casteren V, De Maeseneer J. Use of blood tests in general practice: a collaborative study in eight European countries. Eurosentinel Study Group. Br J Gen Pract. 1995;45:21–25.

- 7. O’Kane MJ, Casey L, Lynch PLM, McGowan N, Corey J. Clinical outcome indicators, disease prevalence and test request variability in primary care. Ann Clin Biochem. 2011;48(Pt 2):155–158. doi: 10.1258/acb.2010.010214.

- 8. Zhi M, Ding EL, Theisen-Toupal J, Whelan J, Arnaout R. The landscape of inappropriate laboratory testing: a 15-year meta-analysis. PLoS One. 2013;8:e78962. doi: 10.1371/journal.pone.0078962.

- 9. De Sutter A, Van den Bruel A, Devriese S, Mambourg F, Van Gaever V, Verstraete A, et al. Laboratorium testen in de huisartsgeneeskunde [Laboratory tests in general practice]. KCE reports. Federaal Kenniscentrum voor de Gezondheidszorg (KCE); 2007.

- 10. Rang M. The Ulysses syndrome. Can Med Assoc J. 1972;106:122–123.

- 11. Houben PHH, van der Weijden T, Winkens RAG, Grol RPTM. Cascade effects of laboratory testing are found to be rare in low disease probability situations: prospective cohort study. J Clin Epidemiol. 2010;63:452–458. doi: 10.1016/j.jclinepi.2009.08.004.

- 12. Kobewka DM, Ronksley PE, McKay JA, Forster AJ, van Walraven C. Influence of educational, audit and feedback, system based, and incentive and penalty interventions to reduce laboratory test utilization: a systematic review. Clin Chem Lab Med. 2014;53:157–183. doi: 10.1515/cclm-2014-0778.

- 13. Delvaux N, Van Thienen K, Heselmans A, Van de Velde S, Ramaekers D, Aertgeerts B. The effects of computerized clinical decision support systems on laboratory test ordering: a systematic review. Arch Pathol Lab Med. 2017;141:585–595. doi: 10.5858/arpa.2016-0115-RA.

- 14. van Wijk MAM, van der Lei J, Mosseveld M, Bohnen AM, van Bemmel JH. Assessment of decision support for blood test ordering in primary care: a randomized trial. Ann Intern Med. 2001;134:274–281. doi: 10.7326/0003-4819-134-4-200102200-00010.

- 15. Chan AJ, Chan J, Cafazzo JA, Rossos PG, Tripp T, Shojania K, et al. Order sets in health care: a systematic review of their effects. Int J Technol Assess Health Care. 2012;28:235–240. doi: 10.1017/S0266462312000281.

- 16. Westbrook JI, Georgiou A, Dimos A, Germanos T. Computerised pathology test order entry reduces laboratory turnaround times and influences tests ordered by hospital clinicians: a controlled before and after study. J Clin Pathol. 2006;59:533–536. doi: 10.1136/jcp.2005.029983.

- 17. Thompson W, Dodek PM, Norena M, Dodek J. Computerized physician order entry of diagnostic tests in an intensive care unit is associated with improved timeliness of service. Crit Care Med. 2004;32:1306–1309. doi: 10.1097/01.CCM.0000127783.47103.8D.

- 18. Van de Velde S, Vander Stichele R, Fauquert B, Geens S, Heselmans A, Ramaekers D, et al. EBMPracticeNet: a bilingual national electronic point-of-care project for retrieval of evidence-based clinical guideline information and decision support. JMIR Res Protoc. 2013;2. doi: 10.2196/resprot.2644.

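- 19. Avonts M, Cloetens H, Leyns C, Delvaux N, Dekker N, Demulder A, et al. Aanbeveling voor goede medische praktijkvoering: Aanvraag van laboratoriumtests door huisartsen [Recommendation for good medical practice: ordering of laboratory tests by general practitioners]. Huisarts Nu. 2011;40:S1–55.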

- 20. Leysen P, Avonts M, Cloetens H, Delvaux N, Koeck P, Saegeman V, et al. Richtlijn voor goede medische praktijkvoering: Aanvraag van laboratoriumtests door huisartsen - deel 2 [Guideline for good medical practice: ordering of laboratory tests by general practitioners, part 2]. Antwerpen: Domus Medica vzw; 2012.

- 21. Delvaux N, Van de Velde S, Aertgeerts B, Goossens M, Fauquert B, Kunnamo I, et al. Adapting a large database of point of care summarized guidelines: a process description. J Eval Clin Pract. 2017;23:21–28. doi: 10.1111/jep.12426.

- 22. Van de Velde S, Roshanov P, Kortteisto T, Kunnamo I, Aertgeerts B, Vandvik PO, et al. Tailoring implementation strategies for evidence-based recommendations using computerised clinical decision support systems: protocol for the development of the GUIDES tools. Implement Sci. 2016;11:29. doi: 10.1186/s13012-016-0393-7.

- 23. Kostopoulou O, Delaney BC, Munro CW. Diagnostic difficulty and error in primary care: a systematic review. Fam Pract. 2008;25:400–413. doi: 10.1093/fampra/cmn071.

- 24. Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165:1493–1499. doi: 10.1001/archinte.165.13.1493.

- 25. Schiff GD, Hasan O, Kim S, Abrams R, Cosby K, Lambert BL, et al. Diagnostic error in medicine: analysis of 583 physician-reported errors. Arch Intern Med. 2009;169:1881–1887. doi: 10.1001/archinternmed.2009.333.

- 26. Singh H, Giardina TD, Meyer AND, Forjuoh SN, Reis MD, Thomas EJ. Types and origins of diagnostic errors in primary care settings. JAMA Intern Med. 2013;173:418–425. doi: 10.1001/jamainternmed.2013.2777.

- 27. Panesar SS, de Silva D, Carson-Stevens A, Cresswell KM, Salvilla SA, Slight SP, et al. How safe is primary care? A systematic review. BMJ Qual Saf. 2016;25:544–553. doi: 10.1136/bmjqs-2015-004178.

- 28. Zwaan L, Schiff GD, Singh H. Advancing the research agenda for diagnostic error reduction. BMJ Qual Saf. 2013;22(Suppl 2):ii52–ii57. doi: 10.1136/bmjqs-2012-001624.

- 29. Heselmans A, Van de Velde S, Ramaekers D, Vander Stichele R, Aertgeerts B. Feasibility and impact of an evidence-based electronic decision support system for diabetes care in family medicine: protocol for a cluster randomized controlled trial. Implement Sci. 2013;8:83. doi: 10.1186/1748-5908-8-83.

- 30. Teerenstra S, Moerbeek M, van Achterberg T, Pelzer BJ, Borm GF. Sample size calculations for 3-level cluster randomized trials. Clin Trials. 2008;5:486–495. doi: 10.1177/1740774508096476.

- 31. Campbell M, Steen N, Grimshaw J, Eccles M, Mollison J, Lombard C. Design and statistical issues in implementation research. In: Changing professional practice. Copenhagen: DSI: Danish Institute for Health Services Research and Development; 1999. p. 57–76.

- 32. Campbell M, Grimshaw J, Steen N. Sample size calculations for cluster randomised trials. Changing Professional Practice in Europe Group (EU BIOMED II Concerted Action). J Health Serv Res Policy. 2000;5:12–16. doi: 10.1177/135581960000500105.

- 33. Littenberg B, MacLean CD. Intra-cluster correlation coefficients in adults with diabetes in primary care practices: the Vermont Diabetes Information System field survey. BMC Med Res Methodol. 2006;6:20. doi: 10.1186/1471-2288-6-20.

- 34. Adams G, Gulliford MC, Ukoumunne OC, Eldridge S, Chinn S, Campbell MJ. Patterns of intra-cluster correlation from primary care research to inform study design and analysis. J Clin Epidemiol. 2004;57:785–794. doi: 10.1016/j.jclinepi.2003.12.013.

- 35. Bright TJ, Wong A, Dhurjati R, Bristow E, Bastian L, Coeytaux RR, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157:29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

- 36. Roshanov PS, You JJ, Dhaliwal J, Koff D, Mackay JA, Weise-Kelly L, et al. Can computerized clinical decision support systems improve practitioners’ diagnostic test ordering behavior? A decision-maker-researcher partnership systematic review. Implement Sci. 2011;6:88. doi: 10.1186/1748-5908-6-88.

- 37. Roshanov PS, Fernandes N, Wilczynski JM, Hemens BJ, You JJ, Handler SM, et al. Features of effective computerised clinical decision support systems: meta-regression of 162 randomised trials. BMJ. 2013;346:f657. doi: 10.1136/bmj.f657.

- 38. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330:765. doi: 10.1136/bmj.38398.500764.8F.

- 39. Kohn L, Corrigan J, Donaldson M. To err is human: building a safer health system. Washington, DC: National Academy Press; 2000.

Data Availability Statement

The data that support the findings of this study are available from University Hospital Leuven, but restrictions apply to the availability of these data, which are used under licence for the current study and are therefore not publicly available. Data are, however, available from the authors upon reasonable request and with the permission of University Hospital Leuven and the Belgian Health Care Knowledge Centre.