Abstract

Not all of the variance in speech-recognition performance of cochlear implant (CI) users can be explained by biographic and auditory factors. In normal-hearing listeners, linguistic and cognitive factors determine most of speech-in-noise performance. The current study explored specifically the influence of visually measured lexical-access ability compared with other cognitive factors on speech recognition of 24 postlingually deafened CI users. Speech-recognition performance was measured with monosyllables in quiet (consonant-vowel-consonant [CVC]), sentences-in-noise (SIN), and digit-triplets in noise (DIN). In addition to a composite variable of lexical-access ability (LA), measured with a lexical-decision test (LDT) and word-naming task, vocabulary size, working-memory capacity (Reading Span test [RSpan]), and a visual analogue of the SIN test (text reception threshold test) were measured. The DIN test was used to correct for auditory factors in SIN thresholds by taking the difference between SIN and DIN: SRTdiff. Correlation analyses revealed that duration of hearing loss (dHL) was related to SIN thresholds. Better working-memory capacity was related to SIN and SRTdiff scores. LDT reaction time was positively correlated with SRTdiff scores. No significant relationships were found for CVC or DIN scores with the predictor variables. Regression analyses showed that together with dHL, RSpan explained 55% of the variance in SIN thresholds. When controlling for auditory performance, LA, LDT, and RSpan separately explained, together with dHL, respectively 37%, 36%, and 46% of the variance in SRTdiff outcome. The results suggest that poor verbal working-memory capacity and to a lesser extent poor lexical-access ability limit speech-recognition ability in listeners with a CI.

Keywords: cochlear implants, speech-in-noise recognition, lexical access, working memory, linguistic skills

A better understanding of the underlying factors that explain the wide range of speech-recognition performance in postlingually deafened cochlear implant (CI) users is increasingly important to improve preoperative CI counseling and optimize rehabilitation programs. Several factors have been the subject of investigation, but a major part of the variance in speech-recognition outcomes in CI users still cannot be explained (e.g., Blamey et al., 2013; Lazard et al., 2012; Roditi, Poissant, Bero, & Lee, 2009). The current study aimed to determine the influence of lexical-access ability and cognitive factors on speech-recognition performance in CI users.

It is well recognized that various factors play a role in speech-recognition performance of CI users: for example, duration of severe to profound hearing loss before implantation (Blamey et al., 1996, 2013; Budenz et al., 2011; Holden et al., 2013; Mosnier et al., 2014; Roditi et al., 2009), the position of the electrodes (e.g., Finley et al., 2008; Lazard et al., 2012), residual hearing, and preoperative speech recognition (Lazard et al., 2012; Leung et al., 2005). Besides these specific CI-related factors, some studies have shown the importance of cognitive skills in speech-recognition tasks in CI users (Heydebrand, Hale, Potts, Gotter, & Skinner, 2007; Lyxell, Andersson, Borg, & Ohlsson, 2003; Pisoni, 2000) as well as for people with normal hearing (NH) or a mild hearing loss (Akeroyd, 2008; Van Rooij & Plomp, 1990; Zekveld, George, Kramer, Goverts, & Houtgast, 2007).

Moreover, there is evidence that linguistic factors play a role in speech recognition as well, especially in demanding listening situations. This has been demonstrated for CI users (Heydebrand et al., 2007; Kaandorp, Smits, Merkus, Goverts, & Festen, 2015; Lyxell et al., 1996), and also for children (Munson, 2001), nonnative listeners (Kaandorp, De Groot, Festen, Smits, & Goverts, 2016; Van Wijngaarden, Steeneken, & Houtgast, 2002), and congenitally hearing-impaired listeners (Huysmans, de Jong, van Lanschot-Wery, Festen, & Goverts, 2014). Lyxell et al. (1996) found in a group of 11 CI candidates that preoperatively measured verbal information processing speed and working-memory capacity were predictors of CI users’ levels of speech understanding. Heydebrand et al. (2007) found in a study sample of 33 postlingually deafened CI recipients that improvement in spoken word recognition in the first 6 months after cochlear implantation was not correlated with processing speed or general cognitive ability, but it was associated with higher verbal learning scores and better verbal working-memory capacity measured before implantation. In a larger study with 114 postlingually deafened adult CI users, Holden et al. (2013) also found a positive correlation between a composite measure of cognition (including verbal learning and working memory) with monosyllable word recognition scores with CI, although after controlling for age, cognition no longer significantly affected CI outcome. Thus, possibly, age-related declines in cognition were responsible for the lower speech-recognition performance in that study.

Although several studies support the assumption that cognitive and linguistic factors are relevant in CI speech-recognition performance, results vary among studies and do not point to a test or combination of tests that can be easily used in the clinic for evaluation of CI candidacy or following rehabilitation progress. Some of these studies examine only the influence of cognitive and linguistic factors on recognition of words and sentences in quiet, which may be less cognitively and linguistically demanding than daily life speech understanding. Kaandorp et al. (2016) recently showed a relationship between linguistic skills and speech recognition of sentences in noise in three groups of 24 young adult NH listeners with varying linguistic skills: native Dutch listeners with high education, native listeners with lower education, and nonnative listeners with high education. Visually measured lexical-access ability (which we consider a mainly fluid cognitive ability, i.e., the capacity for processing information or reasoning) was a better predictor of speech-in-noise recognition than vocabulary size (VS; a type of cognitive ability based on accumulated knowledge, referred to as a crystallized ability; Horn & Cattell, 1967). In the Kaandorp et al. (2016) study, speech-recognition abilities in noise were assessed by measuring the speech reception threshold (SRT; Plomp & Mimpen, 1979) with the sentence-in-noise (SIN) test (Versfeld, Daalder, Festen, & Houtgast, 2000) and a digits-in-noise (DIN) test (Smits, Goverts, & Festen, 2013). It was found that the DIN test measures mainly auditory performance and minimal linguistic aspects because it was less related to the linguistic variables.

Based on the findings of Kaandorp et al. (2016), the current study explored the predictive value of visual lexical-access ability and cognitive measures on speech-recognition performance both in quiet and in noise of postlingually deafened CI users. VS was included as a second measure of linguistic skills because crystallized knowledge is known to be preserved or even improve with age, whereas fluid abilities (such as lexical access) tend to decline with increasing age (Horn & Cattell, 1967). In the current study with a larger range of ages, vocabulary knowledge might play a bigger role than in the Kaandorp et al. (2016) study with young adult listeners. Two cognitive measures that are known to relate to speech recognition (in noise) were included to evaluate their predictive value in CI performance compared with that of lexical access: the Reading Span test (RSpan; Besser, Zekveld, Kramer, Ronnberg, & Festen, 2012) as a test of verbal working-memory capacity and the text reception threshold test (TRT; Zekveld et al., 2007) as a visual analogue of the SRT for sentences in noise. Zekveld et al. found that, in a group of 34 NH listeners aged 19 to 78 years, 30% of the variance in TRT was shared with variance in SRT, indicating that the same modality-aspecific cognitive skills were needed in both tests to recover written text or speech from noise. Haumann et al. (2012) found a relation between presurgical TRT measures and postsurgical SRT results in postlingually deafened adults using a CI.

We were essentially interested in predicting sentence recognition in noise with predictors that can be measured preoperatively, like cognitive and linguistic factors. We assumed that these visually measured skills would not change significantly after surgery. However, when measuring these factors in speech-recognition performance in CI users, we expected a large additional influence of auditory factors (e.g., temporal and spectral resolution), compared with the study in listeners with NH (Kaandorp et al., 2016). To investigate the explanatory value for performance with CI postoperatively, we chose to use the DIN scores to correct for auditory factors, as DIN performance is expected to represent mostly auditory performance. We hypothesized that controlling for auditory performance by looking at the difference between SIN and DIN thresholds after implantation might give a clearer view of the influence of cognitive and linguistic ability in SIN test results.

Method

Participants

Twenty-four postlingually deafened CI users (5 men, 19 women) participated in the study. Table 1 lists characteristics of the participants. No dyslexia, reading problems, or relevant medical problems were reported in an interview prior to participation. Etiology of hearing loss was hereditary (n = 13), meningitis (n = 1), measles (n = 1), hearing loss after brain tumor surgery (n = 1), otosclerosis (n = 1), sudden deafness while giving birth (n = 1), or unknown (n = 6). Participants were all patients of the Otolaryngology department, Section Ear & Hearing of the VU University Medical Center, Amsterdam, The Netherlands, who responded to an e-mail invitation to participate in the study. CI users with known relevant medical problems (i.e., that would influence their participation) or prelingually deaf patients (i.e., profound hearing loss before the age of four) were not invited. All patients had at least 1 year of experience with their CIs. The majority used Cochlear™ devices (Sydney, Australia; n = 23), and there was one Advanced Bionics user (Valencia, CA). During the tests, they used their device with the program and setting they preferred for everyday use. If participants wore a contralateral hearing aid, they were allowed to wear it during testing. All were native Dutch speakers. Participants’ vision, with corrective eyewear if needed, was checked with a near-vision screening test (Bailey & Lovie, 1980). All participants were able to read the words of a chart with different print sizes down to a size of at least 16 points at approximately 50 cm from the screen and were assumed to have good visual ability.

Table 1.

Characteristics of the Participants.

| Participant number | Age (years) | Age at implantation (years) | Age at onset of HL (years) | Duration of HL (years) | Duration of SHL (years) | Duration of CI use (years) | Aided preoperative CVC score (% correct phonemes) | Sound field detection thresholds (dB HL) | Etiology | Implant and processor type | Strategy, no. of active electrodes | Contralateral hearing aid |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 84 | 80 | 63 | 17 | 3 | 3 | 36 | 35 | Unknown | CI24RE, Freedom | ACE, 22 | No |
| 2 | 74 | 69 | 19 | 50 | <1 | 4 | 0 | 22 | Hereditary | CI24RE, CP810 | MP3000, 22 | No |
| 3 | 80 | 74 | 9 | 66 | 6 | 5 | 30 | 39 | Unknown | CI24RE, Freedom | ACE(RE), 22 | No |
| 4 | 79 | 76 | 35 | 42 | 7 | 2 | 33 | 35 | Hereditary | CI24RE, Freedom | ACE, 22 | No |
| 5 | 53 | 51 | 12 | 40 | 5 | 1 | 43 | 20 | Measles | CI512, CP810 | ACE, 22 | No |
| 6 | 56 | 50 | 35 | 15 | 1 | 5 | 50 | 33 | Hereditary | CI24RE, CP810 | ACE, 20 | No |
| 7 | 59 | 54 | 36 | 18 | 2 | 4 | 26 | 24 | Hereditary | CI24RE, Freedom | ACE(RE), 22 | No |
| 8 | 62 | 56 | 50 | 7 | 1 | 4 | 50 | 39 | Hereditary | CI24RE, Freedom | ACE(RE), 22 | No |
| 9 | 61 | 59 | 52 | 7 | 4 | 1 | 43 | 23 | Unknown | CI24RE, Freedom | ACE, 22 | Yes |
| 10 | 78 | 74 | 57 | 18 | 2 | 3 | 45 | 31 | Unknown | HR90K HiFocus1J, Harmony | HiRes-S/Fidelity 120, 16 | Yes |
| 11 | 70 | 65 | 26 | 40 | 40 | 3 | 12 | 16 | SD after giving birth | CI24RE, Freedom | ACE, 22 | No |
| 12 | 58 | 52 | 0 | 53 | 47 | 5 | 42 | 29 | Hereditary | CI24RE, CP810 | ACE, 22 | Yes |
| 13 | 64 | 60 | 27 | 33 | 1 | 3 | 47 | 24 | Hereditary | CI24RE, Freedom | ACE, 22 | No |
| 14 | 63 | 60 | 14 | 47 | 1 | 1 | 51 | 28 | Otosclerosis | CI24RE, Freedom | ACE, 22 | Yes |
| 15 | 63 | 60 | 8 | 53 | 35 | 2 | 43 | 38 | Hereditary | CI24RE, Freedom | ACE, 22 | Yes |
| 16 | 50 | 47 | 23 | 24 | 16 | 2 | 42 | 23 | After brain tumor surgery | HR90K HiFocus1J, Harmony | HiRes-S/Fidelity 120, 16 | No |
| 17 | 72 | 68 | 40 | 29 | 2 | 2 | 43 | 35 | Hereditary | CI24RE, Freedom | ACE, 22 | No |
| 18 | 73 | 69 | 55 | 14 | 4 | 3 | 45 | 26 | Unknown | CI24RE, Freedom | ACE, 22 | No |
| 19 | 42 | 39 | 6 | 33 | 8 | 2 | 45 | 31 | Hereditary | CI24RE, Freedom | ACE, 22 | No |
| 20 | 66 | 62 | 2 | 61 | 3 | 2 | 30 | 26 | Meningitis | CI24RE, Freedom | ACE, 22 | No |
| 21 | 64 | 62 | 52 | 11 | <1 | 1 | 52 | 19 | Hereditary | CI512, CP810 | ACE, 22 | Yes |
| 22 | 67 | 66 | 41 | 25 | 1 | 1 | 50 | 21 | Hereditary | CI512, CP810 | ACE, 22 | No |
| 23 | 57 | 56 | 35 | 21 | <1 | 1 | 48 | 21 | Unknown | CI512, CP810 | ACE, 22 | Yes |
| 24 | 67 | 58 | 26 | 33 | 10 | 8 | 25 | 26 | Hereditary | CI24R(CS), Freedom | ACE, 20 | No |
| M (SD) | 64 (10) | 61 (10) | 30 (19) | 32 (17) | 8 (13) | 3.3 (1.8) | 39 (13) | 28 (7) | | | | |

Note. Duration of hearing loss (HL) and duration of severe to profound hearing impairment (SHL) were obtained from questionnaires and medical records. SHL was defined as either not being able to use the telephone or aided monosyllable (CVC) recognition scores of less than 50% phonemes correct at 65 dB SPL. Participants in Group 2 (Participants 3, 6, 7, and 11; see Table 3) were marked gray in the original table. SD after giving birth = sudden deafness after giving birth; SD = standard deviation; CI = cochlear implant; CVC = consonant-vowel-consonant.

Participants provided informed consent before participating, in accordance with the Declaration of Helsinki, and they received reimbursement for travel costs and additionally a fee of 7.50 euros per hour for their contribution to the study. The study was approved by the Medical Ethics Committee of VU University Medical Center Amsterdam (reference number: 2010/246).

Tests

Speech-recognition measures

Speech recognition was measured using monosyllables, sentences, and digit-triplets.

Monosyllabic word lists (NVA; Bosman & Smoorenburg, 1995) consisting of 12 meaningful consonant-vowel-consonant (CVC) monosyllables were used. The first word was used to focus the listener’s attention. CVCs were produced by a female speaker and presented in quiet at 65 dB sound pressure level. The test score was defined as the percentage of phonemes correctly reproduced from the last 11 words.

Recognition of sentences in noise was measured with sentence lists (VU98; Versfeld et al., 2000) consisting of 13 short meaningful sentences, produced by a female speaker, that were eight or nine syllables in length. They were presented in steady-state long-term average speech spectrum (LTASS) masking noise (Versfeld et al., 2000). The SRT in noise, defined as the signal-to-noise ratio (SNR) at which on average 50% of the sentences were repeated correctly, was measured by the adaptive procedure described by Plomp and Mimpen (1979). The speech level was kept constant at 65 dB A, and the noise level was varied. The first sentence of each trial was presented at 0 dB SNR and was repeatedly presented with a 4-dB increase of SNR until the participant responded correctly. All subsequent sentences were presented only once with SNRs depending on the response to the previous sentence. A response was considered correct if all two to seven predefined keywords in the sentence were repeated correctly in the presented order (Kaandorp et al., 2016). After a correct response, the SNR was lowered by 2 dB, and after an incorrect response, the SNR was raised by 2 dB. The SIN score was calculated by taking the average SNR for Sentences 5 to 14 (where Sentence 14 does not exist, but its SNR was calculated from the response to Sentence 13; Plomp & Mimpen, 1979). In a previous study, we concluded that not all CI users were able to obtain reliable results on the SIN test (Kaandorp et al., 2015). Nevertheless, in the current study, the SIN test was used again because we aimed to match the test battery that is regularly used in our clinic at present, and there is no common alternative for this relevant test. Participants were not screened before inclusion on SIN performance to obtain a representative group of postlingually deafened CI users. It is known that the SIN test can be difficult for CI users. There is no commonly used criterion to identify unreliable SRTs. 
The speech intelligibility index model (ANSI, 1997) assumes that all speech information is available when the SNR in steady-state LTASS noise is higher than +15 dB SNR. Thus, in theory, SRTs higher than +15 dB SNR do not reflect the ability to recognize speech in noise, and these SRTs should be classified as unreliable because (a) the adaptive procedure does not work properly and (b) the SRT reflects no longer the construct of speech-in-noise ability. The upper limit of +15 dB SNR (based on the speech intelligibility index) could be different for signals processed by hearing aids or cochlear implants, and this complex topic could be the subject of future research. Nevertheless, we considered SRTs higher than 15 dB SNR as unreliable in line with a previous study (Kaandorp et al., 2015).
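The adaptive track described above can be sketched in a few lines of Python. This is a minimal illustration, not the test software used in the study: the function names, the `first_item_repeats` argument, and the example response patterns are hypothetical. It assumes that the first item counts as correct at the SNR where it was finally repeated correctly, so that the second item is presented 2 dB lower.

```python
from statistics import mean
from typing import List, Sequence


def adaptive_srt(later_correct: Sequence[bool],
                 first_item_repeats: int = 0,
                 start_snr: float = 0.0,
                 initial_step: float = 4.0,
                 step: float = 2.0,
                 first_scored: int = 5) -> float:
    """Return the SRT (dB SNR) of one simple up-down track.

    later_correct      -- responses to items 2..N (True = correct)
    first_item_repeats -- how often item 1 had to be re-presented,
                          each time with the SNR raised by initial_step
    """
    # SNR at which item 1 was finally repeated correctly.
    snrs: List[float] = [start_snr + initial_step * first_item_repeats]
    # Item 1 always ends with a correct response (assumption, see lead-in).
    outcomes = [True] + list(later_correct)
    for correct in outcomes:
        # Harder (lower SNR) after a correct response, easier after an error.
        snrs.append(snrs[-1] - step if correct else snrs[-1] + step)
    # snrs holds items 1..N plus the virtual item N+1, whose SNR follows
    # from the response to item N. Average from item `first_scored` onward.
    return mean(snrs[first_scored - 1:])


def is_reliable(srt: float, ceiling: float = 15.0) -> bool:
    """Apply the +15 dB SNR criterion used to flag unreliable SRTs."""
    return srt <= ceiling


# Hypothetical 13-sentence list: item 1 needed two repeats (0 -> 8 dB SNR),
# then the listener alternated between incorrect and correct responses.
sin_srt = adaptive_srt([False, True] * 6, first_item_repeats=2)
```

With 13 sentences the average runs over Sentences 5 to 14; passing 23 later responses (24 items in total) gives the average over items 5 to 25.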

Recognition of digit-triplets in noise was measured using the DIN test (Smits et al., 2013) that uses digit-triplet lists containing 24 broadband, homogeneous digit-triplets. The digits were produced by a male speaker and were presented in steady-state LTASS masking noise (Smits et al., 2013). The same adaptive procedure was used for the DIN test as for the SIN test. Here, the overall intensity level was kept constant at 65 dB A, and the first digit-triplet was presented at 0 dB SNR. The SRT was calculated by taking the average SNRs of triplets 5 to 25. All three digits had to be repeated correctly for the response to be considered correct.

All speech-recognition tests were administered three times, where the first list was used as a practice list. Scores on the second and third runs were averaged for the analyses. Recognition of whole sentences will show larger differences between listeners because of differences in cognitive and linguistic abilities, whereas recognition of closed set words (digits) reflects phoneme recognition and is (mainly) associated with auditory capacity (Kaandorp et al., 2016). Therefore, we used the DIN test to eliminate the major auditory effects in speech recognition in noise, and hence isolate the additional role of cognitive and linguistic skills. For this purpose, a derived variable SRTdiff (SIN-DIN) was calculated.
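As a rough sketch of the scoring described here, the snippet below averages the second and third runs and derives SRTdiff. The function names and the threshold values are hypothetical. The optional `ceiling` argument reflects the +15 dB SNR reliability criterion for the SIN test; dropping a single run above the ceiling and scoring the remaining run follows the handling reported in the Results, and returning `None` when no run remains is an assumption of this sketch.

```python
from statistics import mean
from typing import Optional, Sequence


def score_from_runs(runs: Sequence[float],
                    ceiling: Optional[float] = None) -> Optional[float]:
    """Average runs 2 and 3; run 1 is treated as practice.

    If a ceiling is given, runs above it are dropped as unreliable.
    Returns None when no usable run remains.
    """
    scored = list(runs[1:3])
    if ceiling is not None:
        scored = [r for r in scored if r <= ceiling]
    return mean(scored) if scored else None


def srt_diff(sin: Optional[float], din: float) -> Optional[float]:
    """SRTdiff = SIN - DIN (dB); undefined without a usable SIN score."""
    return None if sin is None else sin - din


# Hypothetical thresholds in dB SNR for one listener (three runs each).
sin = score_from_runs([9.0, 7.0, 8.0], ceiling=15.0)   # mean of runs 2 and 3
din = score_from_runs([1.0, -1.0, 0.0])
diff = srt_diff(sin, din)
```

A larger SRTdiff means that the listener needs a more favorable SNR for sentences than for digit-triplets.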

Cognitive and linguistic measures

Linguistic skills were measured with a VS test and two tests of lexical access: a lexical-decision test (LDT) and a word-naming (WN) test. Two commonly used tests that measure combinations of linguistic and nonverbal aspects of cognition were also included: the TRT and the RSpan. Each test is described below.

VS was measured with a subtest of the Groningen Intelligence Test-II (Luteijn & Barelds, 2004), which uses a list of 20 visually presented items. For each test word, the participants had to choose the correct synonym out of five alternatives. In this test, raw scores were used to permit direct comparison of participants.

For the LDT (Rubenstein, Lewis, & Rubenstein, 1971), the measurement protocol of Kaandorp et al. (2016) was used. Words were used from a previous study by De Groot, Borgwaldt, Bos, and Van Den Eijnden (2002). Two lists were used for each of three different average word frequencies: low-frequent (LDTLF), middle-frequent (LDTMF), and high-frequent (LDTHF). The lists were presented in two test blocks of three lists (in the order: LDTMF, LDTHF, and LDTLF), each containing 30 words and 20 pseudowords. The pseudowords were constructed from words of the CELEX database (Baayen, Piepenbrock, & Van Rijn, 1993) that were altered by changing at least one letter in such a way they represent orthographically correct, but meaningless letter strings. All words and pseudowords were four to seven letters long and were presented in the middle of the screen. Participants were instructed to press a green button with their right hand for each word and a red button with their left hand for each pseudoword and to respond as quickly and accurately as possible. Reaction times (RTs) for correct responses to words and pseudowords were used as well as the number of errors. RTs under 300 ms or above 1,500 ms as well as RTs that deviated more than 2.5 SDs from the participants’ resulting list average were omitted. The average RT for words of all six lists was used as the test score.
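The RT trimming rule can be illustrated with the following sketch. It is not the original analysis script: the function names and example values are hypothetical. It assumes that the absolute cutoffs are applied first, that the 2.5 SD criterion uses the sample SD of the remaining RTs in a list, and that the test score is the mean of the six per-list means. The text does not specify these details.

```python
from statistics import mean, stdev
from typing import List, Sequence


def trim_list(rts_ms: Sequence[float], low: float = 300.0,
              high: float = 1500.0, n_sd: float = 2.5) -> List[float]:
    """Trim the correct-response RTs (ms) of one LDT list."""
    # Step 1: drop RTs under 300 ms or above 1,500 ms.
    kept = [rt for rt in rts_ms if low <= rt <= high]
    if len(kept) < 2:
        return kept  # an SD cannot be computed from fewer than two values
    # Step 2: drop RTs more than n_sd SDs from the resulting list average.
    m, sd = mean(kept), stdev(kept)
    return [rt for rt in kept if abs(rt - m) <= n_sd * sd]


def ldt_score(lists_of_rts: Sequence[Sequence[float]]) -> float:
    """Average RT for words across lists (mean of per-list means, assumed)."""
    return mean([mean(trim_list(rts)) for rts in lists_of_rts])


# Hypothetical RTs: 250 ms and 1,600 ms fall outside the absolute window.
print(trim_list([250, 500, 520, 540, 1600]))
```

Only RTs for correct responses to words should be passed in when computing the test score.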

WN was measured with a short test with 30 words simultaneously presented on the screen (Kaandorp et al., 2016). Participants were instructed to read the words out loud as quickly as possible. As soon as the words appeared, a timer was started. The timer was stopped by the experimenter at the offset of the last word. The total time needed to read all the words was used as the test score. A combined variable of LDT and WN was calculated by converting the scores of both measures (LDT RT and WN time) into z scores and averaging them. For calculation of the z scores, the mean and SD of the NH data of Kaandorp et al. (2016) were used (LDT: M = 550 ms, SD = 115 ms; WN: M = 15.6 s, SD = 6.4 s). This combined variable was used as a purer measure of lexical-access ability (LA).
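The composite can be written out as a short sketch. The norms are the NH means and SDs given above; the function and variable names are hypothetical.

```python
# NH reference values from the text: (mean, SD).
NH_NORMS = {
    "ldt_ms": (550.0, 115.0),  # lexical-decision RT in ms
    "wn_s": (15.6, 6.4),       # word-naming time in s
}


def z_score(value: float, norm_mean: float, norm_sd: float) -> float:
    """Standardize a score against the NH reference group."""
    return (value - norm_mean) / norm_sd


def lexical_access(ldt_ms: float, wn_s: float) -> float:
    """LA = mean of the LDT and WN z scores (higher = slower lexical access)."""
    z_ldt = z_score(ldt_ms, *NH_NORMS["ldt_ms"])
    z_wn = z_score(wn_s, *NH_NORMS["wn_s"])
    return (z_ldt + z_wn) / 2


# A listener exactly at the NH means gets LA = 0.
la_at_norm = lexical_access(550.0, 15.6)
```

For example, applying this formula to an LDT score of 670 ms and a WN score of 20.4 s (the values listed for Participant 3 in Table 3) gives z scores of about 1.04 and 0.75, and thus an LA of about 0.90.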

The TRT test (Besser et al., 2012; Zekveld et al., 2007) was used as a visual analogue of the SIN test. Three lists of 13 sentences (Versfeld et al., 2000) were used, which did not overlap with the sentences used in the SIN test. Sentences were partly masked by a vertical bar pattern and were presented on a computer screen. Participants were instructed to read the sentence out loud as accurately as possible. The test result indicated the percentage of unmasked text at which the participant was able to read 50% of the sentences correctly. Masking patterns were, analogous to the SIN test, adaptively changed depending on the response. The first sentence was initially presented at a level of 40% unmasked text. It was then repeated with a decrease in masking of 12% for every repetition until the participant was able to read the complete sentence correctly. All subsequent sentences were presented only once. For each following sentence, masking was increased by 6% after a correct response and decreased by 6% after an incorrect response. The test result was the mean percentage of unmasked text of Sentences 5 to 14. Instead of the original TRT (Zekveld et al., 2007), the TRTcenter was used because of its higher correlation with the SRT for sentences in stationary noise (Besser et al., 2012). In the TRTcenter test, sentences are presented word by word in the center of the screen. The presentation time of each word corresponded to the duration of the word in the respective audio recording of the sentence. Each participant did three runs. Scores on the second and third runs were averaged for the analyses.

Verbal working memory was measured with the RSpan test (Besser et al., 2012). In this complex dual task, test sets of sentences were presented on a computer screen. Sentences constructed of five words in past tense were presented in three parts (subject – verb – object) in the center of the screen. Half of the sentences were semantically sensible; the other half were absurd. Twelve sets of sentences in increasing set-size order were used, with each set-size presented three times (3 × 3, 3 × 4, 3 × 5, 3 × 6). After every sentence, the participant had to indicate whether it was semantically sensible or absurd. At the end of every set, participants were asked to recall either all first words (subjects) or all last words (objects) in the set. Which words to recall was unknown in advance. Participants were given a maximum of 80 s to recall the requested subjects or objects. The test result was the total number of correctly recalled target words.
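The set structure implies a maximum score that is easy to verify with a small sketch. The names and the example words below are hypothetical. The scoring function assumes that recall order is ignored, which the text does not specify.

```python
from typing import List, Sequence

SET_SIZES = [3, 4, 5, 6]   # sentences per set, in increasing order
REPEATS_PER_SIZE = 3       # each set size is presented three times


def build_set_sizes() -> List[int]:
    """Return the sizes of the 12 sets in presentation order."""
    return [size for size in SET_SIZES for _ in range(REPEATS_PER_SIZE)]


def rspan_score(targets: Sequence[Sequence[str]],
                recalled: Sequence[Sequence[str]]) -> int:
    """Total number of correctly recalled target words across sets.

    Order within a set is ignored here (an assumption of this sketch).
    """
    return sum(len(set(t) & set(r)) for t, r in zip(targets, recalled))


sizes = build_set_sizes()
max_score = sum(sizes)  # one target word per sentence
```

With 12 sets and one target word per sentence, at most 3 × (3 + 4 + 5 + 6) = 54 words can be recalled.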

Procedure

Tests were presented in a fixed order with the same lists and test setup for each participant to enable comparisons between listeners. Each participant completed a single 2-hr test session with a 15-min break. The order of tests was as follows: Sound field thresholds, CVCs, RSpan, LDT, DIN, SIN, TRT, VS, and WN. Tests were performed in a sound-treated booth by a trained experimenter. Sound field thresholds were measured with the aid of a clinical audiometer (Decos Audiology Workstation, Decos Systems, Noordwijk, The Netherlands) and a loudspeaker (Yamaha MSP5 Studio). Speech-in-noise tests were measured using a Soundblaster Audigy soundcard and a Soundblaster T20 loudspeaker. The signal was calibrated with a sound level meter at the expected position of the participants’ heads. Participants were seated facing the loudspeaker at a distance of approximately 70 cm or at a comfortable distance from the display monitor.

The effects of several personal factors and test scores on speech recognition were analyzed first with correlation analyses. Second, variables that showed significant correlations were included in multiple linear regression analyses. Also, the data of CI users were compared with the data of listeners with NH with a wide range of linguistic abilities (Kaandorp et al., 2016) to show the effect of lexical-access ability on speech-in-noise performance for these groups.

Results

Outcome Measures and Predictors

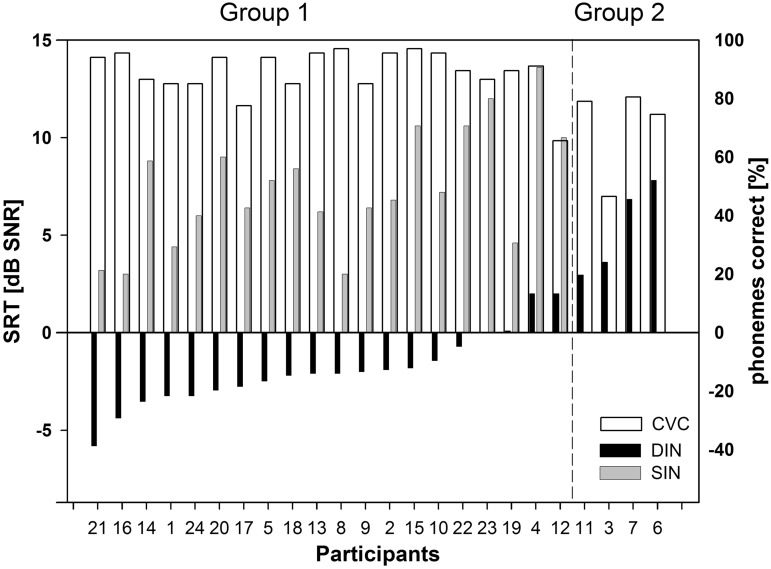

CVC, DIN, and SIN scores are shown in Figure 1 for each participant. The variables were approximately normally distributed (checked by visual inspection of histograms and quantile–quantile [Q-Q] plots), except for the CVC phoneme scores. CVC phoneme scores ranged from 47% to 97% with a mean of 86%. DIN thresholds ranged from −5.8 to 7.8 dB SNR with a mean of −0.7 dB SNR. For the SIN test, there were useful data for only 20 CI users. For the remaining four CI users, both SIN thresholds were higher than 15 dB SNR. For two CI users, one SIN threshold was higher than 15 dB and so their result was based on one list. For the 20 participants, SIN thresholds ranged from 3.0 to 13.6 dB SNR with a mean of 7.4 dB SNR. To examine whether the poor sentence recognition performance of the four participants with unreliable SIN thresholds was related to cognitive or linguistic abilities, participants were divided into two performance groups (see Figure 1), and the individual data of participants in Group 2 were also examined.

Figure 1.

Speech reception thresholds (left axis) for sentences (SIN) and digit-triplets (DIN) in stationary noise and % phonemes correct (right axis) for monosyllables in quiet (CVC). Participants were arranged in order of DIN threshold (from best to poorest) and divided into two performance groups.

SRT = speech reception threshold; SNR = signal-to-noise ratio; DIN = digits-in-noise; SIN = sentences-in-noise; CVC = consonant-vowel-consonant.

Summary data of all cognitive and linguistic measures are shown in Table 2. All variables were approximately normally distributed (checked by visual inspection of histograms and Q-Q plots).

Table 2.

Means, Standard Deviations, and Ranges Are Given for Scores on the Linguistic and Cognitive Tests.

| Predictor variables | NH data (from literature): M (range) | NH data (from literature): SD | Total CI group (n = 24): M (range) | Total CI group (n = 24): SD | t tests: t | t tests: p |
|---|---|---|---|---|---|---|
| LDT (ms) | 550 (419–965) | 115 | 585 (439–825) | 101 | −1.51 | .134 |
| WN (s) | 15.6 (8.4–40.2) | 6.4 | 16.8 (10.5–29.2) | 3.8 | −0.86 | .394 |
| VS (# correct) | 11.8 (3–19) | 3.5 | 13.1 (7–18) | 3.0 | −1.58 | .117 |
| RSpan (# correct) | 19.7 (4–34) | 6.1 | 16.8 (5–33) | 7.6 | 1.80 | .076 |
| TRT (% unmasked text) | 59.7 (49–75) | 5.4 | 59.8 (53–74) | 5.2 | 0.08 | .939 |

Note. Data of normal-hearing (NH) listeners from previous studies are also given. Independent t tests were done to compare the groups. LDT = lexical-decision test; WN = word-naming test; VS = vocabulary size test; RSpan = Reading Span test; TRT = text reception threshold test; CI = cochlear implant. NH data were obtained from Kaandorp et al. (2016) for LDT, WN, and VS (n = 72); and from Besser et al. (2012) for TRT and RSpan (n = 55).

Results for LDT, WN, and VS for CI users were comparable with the results of the study with 72 young NH listeners (Kaandorp et al., 2016), with a wide range of linguistic skills and different educational levels. Independent-samples t tests (Table 2) confirmed that results for the CI users were not statistically different from the total group of NH listeners in that study. Also, results for the RSpan test and TRT of the CI users were not statistically different from the results of the 55 NH listeners with an average age of 44 years (range 18–78 years) tested by Besser et al. (2012). In the Kaandorp et al. (2016) study, a correlation of .69 was found between WN and LDT in NH listeners. In the current study population, we found a moderate correlation that did not reach significance, possibly because of the small sample size (r = .34, p = .16). Nevertheless, the results of LDT and WN were, in line with the NH study, combined into a composite measure LA and included in the analyses, in addition to WN and LDT RTs.

Table 3 shows the individual test results of the participants in Group 2. Inspection of the individual data showed that participants in Group 2 performed more poorly than Group 1 with respect to DIN thresholds. Participant 3 performed poorly on all tests, auditory and linguistic/cognitive. The other three participants all had varying but not clearly poor results on the cognitive/linguistic tests. For the following analyses, only participants with SIN scores were included (n = 20). Because a high number of correlations were calculated, results have to be interpreted carefully as Type I errors might occur.

Table 3.

Individual Data of the Participants With Unreliable SIN Thresholds, Group 2 (n = 4, SIN > 15 dB SNR).

| Participant | 3 | 6 | 7 | 11 |
|---|---|---|---|---|
| DIN (dB SNR) | 3.6 | 7.8 | 6.9 | 3.0 |
| CVC (% correct phonemes) | 47 | 75 | 81 | 79 |
| LDT (ms) | 670 | 494 | 493 | 652 |
| WN (s) | 20.4 | 17.0 | 21.6 | 17.3 |
| VS (# correct) | 10 | 11 | 11 | 14 |
| RSpan (# correct) | 8 | 27 | 21 | 19 |
| TRT (% unmasked text) | 62 | 56 | 59 | 58 |
| SFT (dB HL) | 39 | 33 | 24 | 16 |

Note. SIN = sentences-in-noise test in dB SNR; SNR = signal-to-noise ratio; DIN = digits-in-noise test in dB SNR; CVC = consonant-vowel-consonant monosyllable test, in % correct phonemes; LDT = lexical-decision test, in ms; WN = word-naming test, in s; VS = vocabulary size test, in number of correct responses; RSpan = Reading Span test, in number of correct responses; TRT = text reception threshold test, in % unmasked text needed to reach 50% correct responses; SFT = sound field threshold, in dB HL.

Biographic and Audiologic Factors

The influence of biographic and audiologic factors on speech-recognition outcomes was analyzed first. Pearson’s correlations were computed between speech-recognition variables and age, age at onset of hearing loss, age at implantation, duration of hearing loss (dHL), duration of severe to profound hearing loss, years of experience with CI, and aided preoperative CVC phoneme score. Correlations were not significant, except for dHL with SIN threshold (r = .49, p = .029), suggesting that poorer SIN results were related to a longer dHL.

Cognitive and Linguistic Factors

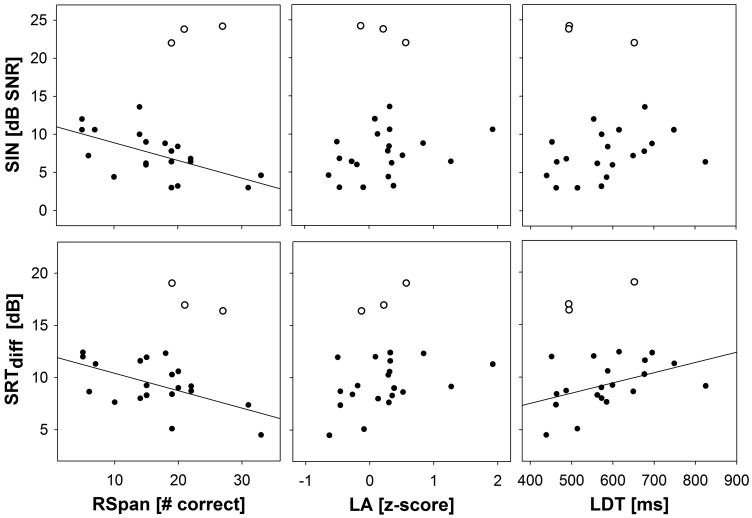

Next, Pearson’s correlations were computed between the speech-recognition outcome variables and the cognitive and linguistic variables as well as the sound field thresholds (Table 4). There were no significant correlations of CVC or DIN scores with any of the cognitive and linguistic tests. For the SIN test and SRTdiff, significant strong correlations were found with the RSpan test (r = −.59, p = .006 and r = −.57, p = .009, respectively). Also, a significant correlation was found for SRTdiff with LDT score (r = .45, p = .047). Relations of RSpan, LA, and LDT with SIN and SRTdiff are shown in Figure 2 (Group 2 data are shown by open symbols).

Table 4.

Pearson’s Correlation Coefficients for Speech Recognition Measures and Cognitive and Linguistic Factors for CI Users (n = 20) Without Poorest Performers.

| Variables (n = 20) | Word naming | LDT | LA | VS | RSpan | TRT | SFT |
|---|---|---|---|---|---|---|---|
| CVC | −0.02 | −0.25 | −0.19 | −0.22 | 0.03 | −0.13 | −0.08 |
| DIN | −0.03 | 0.07 | 0.03 | −0.19 | −0.26 | 0.03 | 0.27 |
| SIN | 0.12 | 0.38† | 0.34 | −0.33 | −0.59** | 0.08 | 0.02 |
| SRTdiff | 0.18 | 0.45* | 0.42† | −0.27 | −0.57** | 0.08 | −0.20 |

Note. Significance levels are given. CI = cochlear implant; CVC = consonant-vowel-consonant monosyllables; DIN = digits-in-noise test; SIN = sentences-in-noise test; SRTdiff = difference measure (SIN-DIN); LDT = lexical-decision test; LA = lexical access; VS = vocabulary size; RSpan = Reading Span test; TRT = text reception threshold; SFT = sound field thresholds.

†p ≤ .10. *p ≤ .05. **p ≤ .01.
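The significance markers in Table 4 can be cross-checked from the coefficients alone, because a Pearson r with n observations maps onto a t statistic with n − 2 degrees of freedom, t = r·√(n − 2)/√(1 − r²). The sketch below applies this standard conversion to a few coefficients copied from Table 4. The critical value 2.101 (two-sided, α = .05, df = 18) is taken from a standard t table and is not part of the original article; the helper name `t_from_r` is chosen here for illustration.

```python
import math

def t_from_r(r: float, n: int) -> float:
    """t statistic for testing a Pearson correlation against zero (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)

N = 20             # Group 1 sample size, as stated in Table 4
T_CRIT_05 = 2.101  # two-sided critical t for df = 18 at alpha = .05 (standard table value)

# Coefficients copied from the SIN and SRTdiff rows of Table 4
reported = {
    ("SIN", "LDT"): 0.38,
    ("SIN", "RSpan"): -0.59,
    ("SRTdiff", "LDT"): 0.45,
    ("SRTdiff", "LA"): 0.42,
    ("SRTdiff", "RSpan"): -0.57,
}

significant = {}
for pair, r in reported.items():
    t = t_from_r(r, N)
    significant[pair] = abs(t) > T_CRIT_05
    label = "p < .05" if significant[pair] else "n.s. at .05"
    print(f"{pair[0]:8s} x {pair[1]:6s} r = {r:+.2f}  t(18) = {t:+.2f}  {label}")
```

Under these assumptions, the coefficients marked * or ** exceed the critical value, whereas those marked † (a trend) do not, which agrees with the markers in the table.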

Figure 2.

Relations of working-memory capacity (RSpan) and of lexical-access ability, measured with a composite measure (LA) and with a lexical-decision test (LDT), with sentence-in-noise recognition (SIN) and the derived variable SRTdiff (difference between sentence-in-noise recognition and digits-in-noise recognition). Open symbols reflect the unreliable SIN results of listeners in Group 2. Lines represent significant correlations.

SRT = speech reception threshold; SNR = signal-to-noise ratio; SIN = sentences-in-noise; LA = lexical access; RSpan = Reading Span test.

Because age is related to some cognitive and linguistic measures, Pearson’s correlations were computed for the total group (N = 24) between age and each of the cognitive and linguistic measures. Age correlated significantly with RSpan (r = −.54, p = .006) and LDT (r = .47, p = .019), indicating poorer performance on both tests with higher age.
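The caution about Type I errors can be made concrete with a little arithmetic. Table 4 alone contains 4 outcome measures × 7 predictors = 28 coefficients. The sketch below computes how many false positives would be expected at α = .05 if every null hypothesis were true, along with two common reference values. Treating the 28 tests as independent is a simplifying assumption made here (SIN and SRTdiff are clearly related), and the Bonferroni threshold is shown only as a conservative reference point, not as a correction the authors applied.

```python
ALPHA = 0.05
N_OUTCOMES = 4    # CVC, DIN, SIN, SRTdiff (rows of Table 4)
N_PREDICTORS = 7  # word naming, LDT, LA, VS, RSpan, TRT, SFT (columns of Table 4)

n_tests = N_OUTCOMES * N_PREDICTORS

# Expected number of spurious "significant" results if all nulls were true
expected_false_positives = ALPHA * n_tests

# Chance of at least one false positive, ASSUMING independent tests (a simplification)
fwer_if_independent = 1.0 - (1.0 - ALPHA) ** n_tests

# Conservative per-test threshold that keeps the family-wise rate at ALPHA
bonferroni_alpha = ALPHA / n_tests

print(f"number of tests:            {n_tests}")
print(f"expected false positives:   {expected_false_positives:.2f}")
print(f"family-wise error (indep.): {fwer_if_independent:.2f}")
print(f"Bonferroni threshold:       {bonferroni_alpha:.4f}")
```

Under this conservative reference, even the strongest reported correlation (RSpan with SIN, p = .006) would not fall below the Bonferroni threshold, which illustrates why the text treats the correlations as exploratory.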

Regression Analyses

Multiple linear regression analyses were done to evaluate the predictive value of the cognitive and linguistic variables for sentence recognition performance in addition to other relevant factors. Predictor variables that showed significant strong correlations with SIN threshold or SRTdiff score in the correlation analyses were considered: dHL, RSpan, and LDT score. Because we expected a relationship with the composite measure LA based on Kaandorp et al. (2016) and there was a trend for LA with SRTdiff in the correlation analyses, LA was also examined as a predictor variable. First, regression models were examined that could be used preoperatively, for instance when determining CI candidacy. Separate regression analyses were performed for Group 1 (n = 20) with SIN as the dependent variable and LA, LDT, or RSpan as independent factors to compare the predictive value of these measures, each time with dHL entered first in a separate block. Table 5 shows that the model with dHL and RSpan together explains 55% of the variance in SIN outcome. LA or LDT did not significantly improve the model on top of dHL.

Table 5.

Multiple Regression Analyses for Preoperative Prediction of SIN Performance of CI Users (n = 20) at 1 Year or More Post CI Activation.

| Predictor | B | SE | p | R2 change | R2 |
|---|---|---|---|---|---|
| Model with LA | | | | | |
| dHL | 0.09 | 0.04 | .022 | .215 | .37 |
| LA | 1.87 | 0.94 | .065 | .155 | |
| Model with LDT | | | | | |
| dHL | 0.09 | 0.04 | .026 | .238 | .37 |
| LDT | 0.01 | 0.01 | .082 | .128 | |
| Model with RSpan | | | | | |
| dHL | 0.08 | 0.03 | .013 | .238 | .55 |
| RSpan | −0.22 | 0.06 | .003 | .314 | |

Note. Separate regression analyses were performed for LA, LDT, and RSpan after controlling for duration of hearing loss. SIN = sentences-in-noise test; CI = cochlear implant; dHL = duration of hearing loss; LA = lexical access; LDT = lexical-decision test; RSpan = Reading Span test; B = unstandardized regression coefficient; SE = standard error; p = level of significance; R2 = proportion of variance.
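The blockwise procedure behind Table 5 (dHL entered first, a cognitive or linguistic predictor added second, and the gain in explained variance reported as R2 change) can be sketched as follows. This is a minimal illustration in plain Python, not the authors' analysis: the data are synthetic, the generating coefficients and noise level are arbitrary assumptions, and the helper names `solve` and `r_squared` are hypothetical.

```python
import random

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def r_squared(predictors, y):
    """R^2 of an ordinary least-squares fit of y on the predictor columns plus an intercept."""
    n = len(y)
    rows = [[1.0] + [col[i] for col in predictors] for i in range(n)]
    k = len(rows[0])
    xtx = [[sum(row[p] * row[q] for row in rows) for q in range(k)] for p in range(k)]
    xty = [sum(row[p] * yi for row, yi in zip(rows, y)) for p in range(k)]
    beta = solve(xtx, xty)
    fitted = [sum(bj * xj for bj, xj in zip(beta, row)) for row in rows]
    mean_y = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot

# SYNTHETIC data for 20 hypothetical listeners (not the study data)
rng = random.Random(0)
dhl = [rng.uniform(5, 60) for _ in range(20)]    # duration of hearing loss, years
rspan = [rng.uniform(8, 35) for _ in range(20)]  # Reading Span score
sin = [0.08 * d - 0.2 * r + rng.gauss(0, 1.5) for d, r in zip(dhl, rspan)]

r2_block1 = r_squared([dhl], sin)         # Block 1: dHL only
r2_block2 = r_squared([dhl, rspan], sin)  # Block 2: dHL + RSpan
r2_change = r2_block2 - r2_block1         # variance explained on top of dHL

print(f"R2 (dHL) = {r2_block1:.3f}, R2 (dHL + RSpan) = {r2_block2:.3f}, R2 change = {r2_change:.3f}")
```

Because the second model nests the first, R2 change cannot be negative. Whether it is significant would be assessed with an F test on the change, which is omitted from this sketch.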

Next, multiple linear regression models were examined that could be used to explain results postoperatively. The regression analyses were repeated using the DIN thresholds to correct for auditory factors, with SRTdiff as the dependent variable. Table 6 shows that LA, LDT, and RSpan accounted for an additional 22%, 18%, and 29% of the variance in SRTdiff scores, respectively, after forcing dHL first into the regression. Together with dHL, the models with LA, LDT, or RSpan explained 37%, 36%, and 46% of the variance in SRTdiff scores, respectively.

Table 6.

Multiple Regression Analyses for Postoperative Prediction of SRTdiff Performance of CI Users (n = 20) at 1 Year or More Post CI Activation.

| Variables | B | SE | p | R2 change | R2 |
|---|---|---|---|---|---|
| Model with LA | | | | | |
| dHL | 0.06 | 0.03 | .04 | .156 | .37 |
| LA | 1.68 | 0.72 | .032 | .216 | |
| Model with LDT | | | | | |
| dHL | 0.06 | 0.03 | .058 | .173 | .36 |
| LDT | 0.01 | <0.01 | .041 | .184 | |
| Model with RSpan | | | | | |
| dHL | 0.05 | 0.03 | .048 | .173 | .46 |
| RSpan | −0.16 | 0.05 | .007 | .291 | |

Note. Separate analyses were performed for LA, LDT, and RSpan after controlling for duration of hearing loss. CI = cochlear implant; SRTdiff = difference between sentences-in-noise (SIN) and digits-in-noise (DIN) thresholds; dHL = duration of hearing loss; LA = lexical access; LDT = lexical-decision test; RSpan = Reading Span test; B = unstandardized regression coefficient; SE = standard error; p = level of significance; R2 = proportion of variance.
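In Tables 5 and 6, the total R2 of each model should equal the R2 change of the dHL block plus the R2 change of the added predictor, up to rounding. The short check below uses only the values printed in the two tables. The tolerance of .005 reflects the two-decimal rounding of the reported totals and is an assumption of this sketch.

```python
# Per model: (R2 change for dHL block, R2 change for added predictor, reported total R2)
TABLE_5 = {  # dependent variable: SIN
    "LA": (0.215, 0.155, 0.37),
    "LDT": (0.238, 0.128, 0.37),
    "RSpan": (0.238, 0.314, 0.55),
}
TABLE_6 = {  # dependent variable: SRTdiff
    "LA": (0.156, 0.216, 0.37),
    "LDT": (0.173, 0.184, 0.36),
    "RSpan": (0.173, 0.291, 0.46),
}

def check(table, tol=0.005):
    """Per model, return the summed R2 changes and whether they match the reported total."""
    result = {}
    for model, (r2_dhl, r2_added, r2_reported) in table.items():
        total = r2_dhl + r2_added
        result[model] = (round(total, 3), abs(total - r2_reported) <= tol)
    return result

for name, table in (("Table 5", TABLE_5), ("Table 6", TABLE_6)):
    for model, (total, ok) in check(table).items():
        print(f"{name}, model with {model}: sum of R2 changes = {total:.3f}, consistent = {ok}")
```

The percentages quoted in the text (for example, an additional 22%, 18%, and 29% in Table 6) are the rounded R2 changes of the second block.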

Comparison to NH Listeners

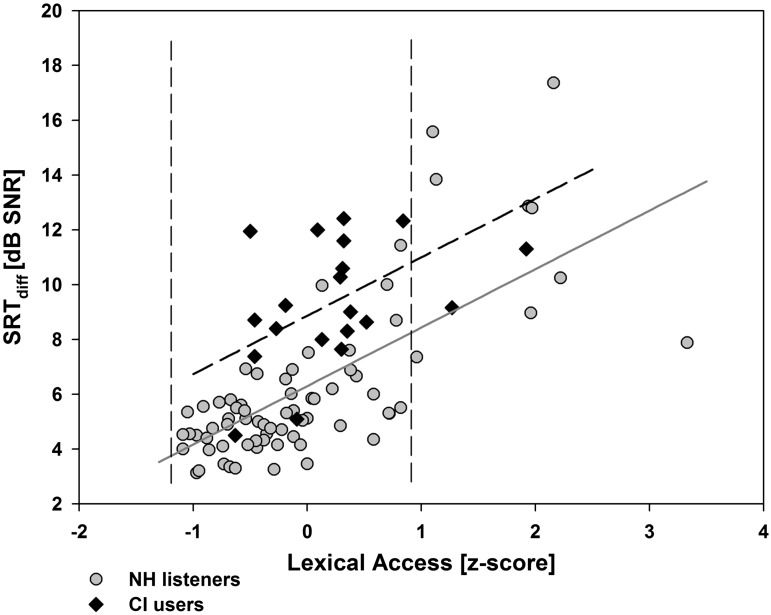

Figure 3 shows that the LA scores of the CI users were similar to those of the NH group from the Kaandorp et al. (2016) study.

Figure 3.

Difference in speech reception threshold for sentences-in-noise and digits-in-noise (SRTdiff) for cochlear implant users (diamonds) together with the normal-hearing (NH) data of listeners with various levels of linguistic skills and nonnative listeners of Kaandorp et al. (2016; circles). Lines represent regression lines for both groups (black dashed for CI users, gray solid for NH listeners). The area between the vertical dashed lines represents the range of native NH listeners.

SRT = Speech Reception Threshold; SNR = signal-to-noise ratio; CI = cochlear implant.

To examine the relation between LA and speech recognition in noise for CI users compared with NH listeners, a multiple linear regression analysis was done on the total of NH and CI data (n = 92). For this analysis, we used SRTdiff values instead of the SIN thresholds to eliminate auditory factors from SIN results. In this combined group, SRTdiff was positively skewed. Log transformation, however, did not result in a normal distribution. Dummy variables were used to evaluate group differences (“CI” and “No CI”). The results showed that the slope of the regression line for CI users was not statistically different from that of the group of NH listeners, but on average, CI users had a 2.7 dB poorer SRTdiff than NH listeners with the same LA performance (Figure 3, Table 7). Thus, LA seems to predict differences between SRTdiff scores in CI users comparable with the effect that LA has in NH listeners.

Table 7.

Multiple Regression Analyses for Prediction of SRTdiff Scores of CI Users (n = 20) and Normal-Hearing Listeners (n = 72).

| Variables | B | SE | p | R2 change | R2 |
|---|---|---|---|---|---|
| LA | 2.23 | 0.25 | <.001 | .44 | |
| CI | 2.66 | 0.52 | <.001 | .13 | .57 |

Note. SRTdiff = difference between sentences-in-noise (SIN) and digits-in-noise (DIN) thresholds; LA = lexical access; CI = dummy variable for cochlear implant use; B = unstandardized regression coefficient; SE = standard error; p = level of significance; R2 = proportion of variance.
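The model in Table 7 can be read as two parallel regression lines: one LA slope shared by both groups, plus a constant offset for CI users coded by the dummy variable. The sketch below evaluates such a model with the coefficients from Table 7. The intercept is not reported in the table, so the values used here are placeholders, and the LA values are arbitrary illustration points rather than study data.

```python
B_LA = 2.23  # Table 7: unstandardized coefficient for LA
B_CI = 2.66  # Table 7: unstandardized coefficient for the CI dummy (1 = CI user, 0 = NH)

def predicted_srtdiff(la: float, ci: int, intercept: float = 0.0) -> float:
    """Parallel-lines model: shared LA slope, CI users shifted by B_CI.

    The intercept is NOT reported in the source; 0.0 is only a placeholder.
    """
    return intercept + B_LA * la + B_CI * ci

# Group difference at several hypothetical LA values
gaps = []
for la in (-1.0, 0.0, 1.0):
    gap = predicted_srtdiff(la, ci=1) - predicted_srtdiff(la, ci=0)
    gaps.append(gap)
    print(f"LA = {la:+.1f}: predicted CI minus NH difference = {gap:.2f} dB")
```

Because the slope is shared, the predicted difference between a CI user and an NH listener with the same LA score is the dummy coefficient itself, whatever the intercept or the LA value. This corresponds to the roughly 2.7 dB poorer SRTdiff described in the text.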

Discussion

The results of this study, with 20 postlingually deafened CI users, support the hypothesis that there is an influence of linguistic and cognitive abilities on speech recognition in noise performance in CI users. Verbal working-memory capacity was a stronger predictor of CI SIN thresholds than lexical-access ability. Lexical-access ability, measured with the LDT test, was only correlated with the derived speech-in-noise measure SRTdiff. The role of lexical access comes on top of auditory factors that account for most of the variance in performance as was expected. Analyses of speech-recognition outcome measures included in this study showed that word recognition in quiet and digit-in-noise recognition were not influenced by cognitive and linguistic measures. However, for sentence recognition with the SIN test, 55% of the variance in results was predicted by verbal working memory together with dHL (both variables that can be determined preoperatively). When controlling for both auditory performance (with the DIN score that can be measured postoperatively) and dHL, lexical-access ability accounted for an additional 22% using the combined variable LA or 18% using LDT score and verbal working memory for an additional 29% of the variance in SRTdiff.

Outcome Measures

The word recognition results (CVC phoneme scores, a commonly used CI outcome measure) showed relatively good performance in quiet for the current study group with an average score of 85% of phonemes correct. Only two participants had CVC scores lower than 77%. For speech-recognition performance in noise, there was a much larger range of outcomes. For four participants, the SIN test produced results in an unreliable range, reflecting very poor performance. This poor speech-recognition performance might be caused by poor cognitive and linguistic processing but could also originate from poor auditory processing. Only one of the four participants had scores on the linguistic or cognitive tests that were among the poorest scores (Table 3), but this participant also had the lowest CVC score and the highest SFT. It is, thus, difficult to say what might have caused the poor results. Table 1 shows no clearly deviating or extreme variables for these poor performers, except that one CI user had a long duration of severe hearing loss (40 years). For Participant 7, an implant failure was identified as the cause of a gradually decreasing speech-in-noise recognition performance about two years after data collection for this study. Six months after reimplantation, her speech-in-noise recognition thresholds had drastically improved, which agrees with our assumption that her poor performance in noise during the study was primarily caused by a very poor auditory input.

We expect that the other three poor performers in noise also received a more degraded input. Although the speech recognition in quiet was for three listeners near that of the other participants, their received spectral detail could be much less due to differences in for instance electrode placement, neural survival, and the amount of channel interactions by spread of current in the cochlea. Several studies have shown that poor speech recognition in noise is not necessarily related to poor speech recognition in quiet. For instance, Shannon, Fu, and Galvin (2004) concluded, in a CI simulation study, that speech recognition in quiet requires only four spectral channels, whereas more complex materials can require 30 or more channels for the same level of performance. Results of Friesen, Shannon, Baskent, and Wang (2001) suggested that not all CI users were able to use all channels of spectral information provided by the implant, resulting in poorer speech recognition especially at lower SNR. They hypothesized that the use of multiple electrodes was limited by electrode interactions in these listeners. Thus, for some listeners with reasonable speech recognition in quiet, the available spectral detail can be limiting speech-recognition performance in noise. A very poor quality of auditory input in some CI recipients may result in a different effect of cognitive and linguistic factors. Very degraded signals might not contain enough usable information to put good cognitive or linguistic skills into action. The results of a study on phonemic restoration (Baskent, Eiler, & Edwards, 2010) indicated that listeners with mild hearing loss were able to benefit from top-down processing, while listeners with moderate hearing loss were not. 
Collison, Munson, and Carney (2004) also studied a group of CI users and hypothesized that differences in signal perception elicited by the heterogeneity of the study group might explain the lack of a relation of linguistic and cognitive skills with speech recognition in their study. They concluded that predictive relations of cognitive and linguistic variables with spoken word recognition might exist only in groups of listeners that are homogeneous with respect to other variables that affect implant use. In addition, Baskent et al. (2016) concluded that the interaction between bottom-up information in case of degraded speech and how this degradation can be compensated for using cognitive mechanisms is complex. In the current study, more clear relations were indeed found for the subset of CI users without the poorest performers on speech-in-noise recognition. Thus, the degree of degradation of the auditory signal seems to interact with the influence of top-down processes on speech recognition in noise. This limits some of our conclusions on the relations discussed later only to relatively good performing CI users.

Predictor Variables for Speech Recognition

In many studies, duration of (severe) hearing loss has been found to predict CI outcome (e.g., Blamey et al., 2013; Holden et al., 2013; Mosnier et al., 2014). In the current study, this variable was related to sentence recognition in noise, but not to phoneme recognition in quiet or digit-triplet recognition in noise. Compared with those studies, the range of scores and the sample size of the current study were smaller; the sample was sufficient to detect strong correlations but could be too small for milder effects to reach significance. Another explanation could be that a longer duration of deafness has a stronger impact on the linguistic and more central auditory system, which is mostly reflected in SIN thresholds, than on peripheral auditory pathways. The other biographic or audiologic variables that were found to influence CI outcome in other studies (with very large study samples) were not significantly correlated with any of the speech-recognition scores in the current study sample.

Cognitive and linguistic abilities of the studied CI users did not clearly deviate from NH listeners with a broad range of cognitive and linguistic abilities from previous studies. This could be expected because these CI users acquired their linguistic skills with NH, and the tests were presented visually. For instance, only two CI users had LA scores worse than the native young listeners of Kaandorp et al. (2016), in the range of the nonnative highly educated young listeners performance (see Figure 3). Other studies concerning postlingually deafened adult CI users found similar results (e.g., Collison et al., 2004). Correlation analyses showed that word recognition in quiet and DIN threshold were not correlated with any of the cognitive and linguistic measures. For word recognition in quiet, this could be expected because most CVC phoneme scores were relatively high, possibly causing a ceiling effect at the chosen presentation level. Heydebrand et al. (2007) did find correlations in 33 participants between improvement of CVC word scores in quiet 6 months postoperatively and some cognitive measures, possibly because of the larger range in scores. For DIN thresholds, the absence of a correlation confirms the earlier findings that the DIN test is mainly associated with auditory capacity with only a small cognitive component (Kaandorp et al., 2016). This was also shown by Moore et al. (2014), who found only a 0.7 dB better DIN threshold for listeners with higher cognitive function in a Biobank study with a very large group (n > 500,000) of listeners.

SIN thresholds and the derived variable SRTdiff were, however, influenced by verbal working memory, which is in line with the findings of, for instance, Akeroyd (2008) for hearing-impaired listeners. In the current study sample, the correlation between SIN thresholds and the composite measure LA was not significant, possibly because auditory factors caused more variation in results and because the age range of the CI users was much larger than that of the NH listeners in our previous study (Kaandorp et al., 2016). For SRTdiff (SIN threshold, corrected for auditory performance by the DIN threshold), a correlation was found with lexical-access ability when measured with the LDT. The composite measure LA was not significantly correlated with SRTdiff. Thus, although this finding has to be interpreted carefully because of the multiple correlations and the risk of Type I errors, it suggests that lexical-access ability, measured with the LDT, does also relate to sentence recognition in noise of CI users, but the relation is not as clear as in NH listeners. After controlling for auditory factors by predicting SRTdiff, LA or LDT did add to the prediction model with dHL, which could be used to explain performance after implantation. Lyxell et al. (1996) also found a relation between lexical access measured preoperatively with a lexical-decision task and subjective speech understanding of CI users. They had a study sample with a larger range of performance than the current sample. Four of the 11 participants in their study had only environmental awareness or improved speechreading with their implants, while others could understand a conversation over the telephone. When we compared the current results with the NH data of Kaandorp et al. (2016), we found that the slope of the regression line was not different between the groups, but SRTdiff was on average 2.7 dB poorer for CI users. 
Part of this difference may be attributed to the fact that SRTdiff increases for larger hearing losses due to a less steep slope of the speech information function for listeners with hearing loss (see, e.g., Smits & Festen, 2011). Understanding the exact meaning of this difference requires further research on this topic. To conclude, the influence of LA on CI speech recognition seems comparable with that for NH listeners but comes on top of auditory factors, thereby showing comparatively lower predictive power.

The TRT scores of our CI users were comparable with those of the NH listeners of Besser et al. (2012). A similar influence of TRT scores on SIN thresholds could thus be expected, which would suggest TRT to be a valuable predictor of CI outcome before implantation. Unlike Haumann et al. (2012), we did not find a relation between the TRT test and speech-recognition scores. The group of CI users in Haumann’s study was larger than our study group (96 participants), and still they found only a moderate correlation of r = .27 (p = .012), which might explain the difference in findings. Another explanation for the absence of a relation between TRT and SIN threshold can be the use of TRTcenter, which is more difficult than the original TRT test, because words are presented one by one instead of the whole sentence at once. In future studies, the original TRT might be a better choice, also to allow for comparison of results with previous studies. The size of the vocabulary was also not correlated with speech-recognition scores. This corresponds to findings of, for instance, Heydebrand et al. (2007), who did not find a correlation between vocabulary size and improvement of word recognition at 6 months after activation. Results of other studies, on the other hand, suggest that better receptive vocabulary knowledge is related to better speech recognition of NH listeners in adverse conditions (e.g., Benard, Mensink, & Baskent, 2014). We hypothesized that VS (a crystallized ability) might improve with age, and thus might show a larger range and on average better results in the current study group compared with the young listeners of Kaandorp et al. (2016), but this was not the case. In the current study, verbal working memory and lexical-decision performance (more fluid abilities) were both correlated with age (RSpan: r = −.54, p = .006; LDT: r = .47, p = .019), with older age yielding poorer performance on both tests. 
Besser et al. (2012) also found a significant correlation (r = −.52, p < .01) between RSpan and age for 55 NH listeners. Some studies on lexical access suggest that in lexical decision, the slower responses of older people are not a result of a lower quality of information processing with older age, but a result of factors like motor movement and degree of cautiousness in responding (Ramscar, Hendrix, Love, & Baayen, 2013). Nevertheless, age alone was not correlated with speech-recognition performance in the current study. Because age can relate to both higher and lower performance on some cognitive tasks, the variation in age in this study might have obscured the influence of these abilities on speech-recognition performance. Future research should focus either on groups of CI users that are homogeneous with respect to CI-related factors and age, or on very large groups of listeners, to possibly find concealed relations.

To conclude, auditory factors play a major role in speech recognition of CI users. But, of the cognitive and linguistic tests that can be measured preoperatively, RSpan in particular and LA or LDT to a lesser extent (more fluid abilities) might help to predict and understand SIN thresholds after 12 months of listening experience in CI users.

Lexical Access—Word Naming and Lexical Decision

The relation between visual lexical-access ability and SIN threshold was not as clear for CI users alone compared with the NH listeners of Kaandorp et al. (2016). Examining the two lexical-access tests separately, Pearson’s correlations with speech-recognition measures showed only a significant correlation for LDT with SRTdiff (r = .45, p = .047). The WN test did not correlate with any of the speech-recognition measures, nor did the composite measure LA. In the Kaandorp et al. (2016) study, both the LDT scores and WN scores were significantly correlated with SIN thresholds in the total NH group as well as in the native group. The range of WN scores was smaller in this native CI group than in that diverse NH group, which included second language users. This could render our WN test a test of less additive value to the composite measure LA. We used a very simple version of the WN test, measuring a total RT for reading 30 items in one run. A more precise WN test, that measures response times to the onset of each response with a voice key, excluding the time to produce the word, might be needed to indicate differences in lexical-access ability in this population.

In the current study, we used visual tests to assess lexical-access ability because we wanted to be able to predict speech-recognition outcomes with CI before implantation. A few studies on auditory lexical access suggest that lexical access might be different for listeners with degraded auditory input. For example, Farris-Trimble, McMurray, Cigrand, and Tomblin (2014) studied the perception of degraded speech in 33 CI users and 57 age-matched NH listeners of which 16 in a CI simulation condition. They used a visual world paradigm eye tracking task in which fixations to a set of phonologically related items were monitored as they heard a target word being named. They found differences in lexical access for the groups listening to degraded speech relative to a NH group listening to unfiltered speech. They also found weak evidence that the process for CI users was different from that of the NH group listening to CI simulations, suggesting they are accustomed to being uncertain and having to revise their interpretations. Also, McMurray, Farris-Trimble, Seedorff, and Rigler (2016) found evidence that CI users adapt their lexical access to remain flexible in situations of potential misperceptions. McQueen and Huettig (2012) also showed that young adult NH listeners adjust their strategy when there is more uncertainty in the signal. These articles suggest that auditory lexical access might be different for CI users. In the current study, we assumed that visual lexical access is not changed because of CI use but is primarily a measure of linguistic ability. Thus, where visual lexical access is a linguistic measure that can be obtained prior to implantation, auditory lexical access could be different for that person and could better explain speech-recognition outcome during the rehabilitation stage. Auditory lexical access is a future topic of our research.

To conclude, although we found only weak evidence that lexical-access ability is related to sentence recognition in noise in CI users, the findings of this study suggest that the possible predictive value of these tests should be examined further.

Clinical Implications

For both preoperative counseling and optimizing rehabilitation programs, it is very important to have a better understanding of the influence of cognitive and linguistic factors on CI outcome. Practical tests that can be used in the clinic are needed for this purpose. The current results suggest that poor speech-recognition performance with CI was likely due to auditory factors that can degrade the auditory signal, especially in noise. However, we can conclude that CI candidates with poor verbal working-memory capacity or slow lexical-access RTs are not likely to become the best performers after implantation. The visually conducted verbal working-memory and lexical-access tests can be administered preoperatively as well as postoperatively. As in our previous study, the average RT for words of all six lists was used as LDT score. To examine the value of using only two lists, the correlation of SIN and SRTdiff with the average of the two lists with medium word frequency (LDTMF) was examined. The analyses showed Pearson’s correlations for LDTMF with SIN (r = .41; p = .076) and with SRTdiff (r = .48; p = .033), comparable with those for the average of six lists. Therefore, in future studies or in the clinic, two lists with a small range of word frequencies can be used instead of six lists, making the test quicker. For the LDT, two lists take about 5 to 8 min. Information about working-memory capacity and lexical-access ability can help to better inform CI candidates of speech-recognition outcome with a CI. During the rehabilitation period, this information can, combined with digit-in-noise recognition, help understand sentence-in-noise recognition performance and thus performance in daily life and support a more personalized rehabilitation program. 
The influence of lexical-access ability on SRTdiff suggests that CI users with lower lexical-access skills might, despite favorable DIN scores, have significantly more problems with sentence recognition in noise compared with their ability to recognize digit-triplets in noise. These findings suggest using the DIN test to evaluate the fitting of the CI. However, performance in real-life situations is probably better estimated with tests that demand more cognitive and linguistic skills.

Acknowledgments

The authors thank all participants of the study, Tine Goossens and Astrid de Vos for data collection, and Hans van Beek for technical support.

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors received no financial support for the research, authorship, and/or publication of this article.

References

- Akeroyd M. A. (2008) Are individual differences in speech reception related to individual differences in cognitive ability? A survey of twenty experimental studies with normal and hearing-impaired adults. International Journal of Audiology 47(Suppl 2): S53–S71. doi: 10.1080/14992020802301142. [DOI] [PubMed] [Google Scholar]

- ANSI S3.5-1997 (1997) Methods for the calculation of the speech intelligibility index vol. 19, New York, NY: American National Standards Institute, pp. 90–119. [Google Scholar]

- Baayen H., Piepenbrock R., Van Rijn H. (1993) The CELEX lexical data base CD-ROM, Philadelphia, PA: University of Pennsylvania, Linguistic Data Consortium. [Google Scholar]

- Bailey I. L., Lovie J. E. (1980) The design and use of a new near-vision chart. American Journal of Optometry and Physiological Optics 57(6): 378–387. doi: 10.1097/00006324-198006000-00011. [DOI] [PubMed] [Google Scholar]

- Baskent D., Clarke J., Pals C., Benard M. R., Bhargava P., Saija J., Gaudrain E. (2016) Cognitive compensation of speech perception with hearing impairment, cochlear implants, and aging: How and to what degree can it be achieved? Trends in Hearing 20: 1–16. doi: 10.1177/2331216516670279. [DOI] [Google Scholar]

- Baskent D., Eiler C. L., Edwards B. (2010) Phonemic restoration by hearing-impaired listeners with mild to moderate sensorineural hearing loss. Hearing Research 260(1–2): 54–62. doi: 10.1016/j.heares.2009.11.007. [DOI] [PubMed] [Google Scholar]

- Benard M. R., Mensink J. S., Baskent D. (2014) Individual differences in top-down restoration of interrupted speech: Links to linguistic and cognitive abilities. Journal of the Acoustical Society of America 135(2): El88–El94. doi: 10.1121/1.4862879. [DOI] [PubMed] [Google Scholar]

- Besser J., Zekveld A. A., Kramer S. E., Ronnberg J., Festen J. M. (2012) New measures of masked text recognition in relation to speech-in-noise perception and their associations with age and cognitive abilities. Journal of Speech, Language, and Hearing Research 55: 194–209. doi: 10.1044/1092-4388(2011/11-0008). [DOI] [PubMed] [Google Scholar]

- Blamey P., Arndt P., Bergeron F., Bredberg G., Brimacombe J., Facer G., Whitford L. (1996) Factors affecting auditory performance of postlinguistically deaf adults using cochlear implants. Audiology and Neuro-Otology 1(5): 293–306. doi: 10.1159/000343189. [DOI] [PubMed] [Google Scholar]

- Blamey P., Artieres F., Baskent D., Bergeron F., Beynon A., Burke E., Lazard D. S. (2013) Factors affecting auditory performance of postlinguistically deaf adults using cochlear implants: An update with 2251 patients. Audiology and Neuro-Otology 18(1): 36–47. doi: 10.1159/000343189. [DOI] [PubMed] [Google Scholar]

- Bosman A. J., Smoorenburg G. F. (1995) Intelligibility of Dutch CVC syllables and sentences for listeners with normal hearing and with three types of hearing impairment. Audiology 34: 260–284. doi: 10.3109/00206099509071918. [DOI] [PubMed] [Google Scholar]

- Budenz C. L., Cosetti M. K., Coelho D. H., Birenbaum B., Babb J., Waltzman S. B., Roehm P. C. (2011) The effects of cochlear implantation on speech perception in older adults. Journal of the American Geriatrics Society 59(3): 446–453. doi: 10.1111/j.1532-5415.2010.03310.x. [DOI] [PubMed] [Google Scholar]

- Collison E. A., Munson B., Carney A. E. (2004) Relations among linguistic and cognitive skills and spoken word recognition in adults with cochlear implants. Journal of Speech Language and Hearing Research 47(3): 496–508. doi: 10.1044/1092-4388(2004/039). [DOI] [PubMed] [Google Scholar]

- De Groot A. M. B., Borgwaldt S., Bos M., Van Den Eijnden E. (2002) Lexical decision and word naming in bilinguals: Language effects and task effects. Journal of Memory and Language 47(1): 91–124. doi: 10.1006/jmla.2001.2840.

- Farris-Trimble A., McMurray B., Cigrand N., Tomblin J. B. (2014) The process of spoken word recognition in the face of signal degradation. Journal of Experimental Psychology: Human Perception and Performance 40(1): 308–327. doi: 10.1037/a0034353.

- Finley C. C., Holden T. A., Holden L. K., Whiting B. R., Chole R. A., Neely G. J., Skinner M. W. (2008) Role of electrode placement as a contributor to variability in cochlear implant outcomes. Otology & Neurotology 29(7): 920–928. doi: 10.1097/MAO.0b013e318184f492.

- Friesen L. M., Shannon R. V., Baskent D., Wang X. (2001) Speech recognition in noise as a function of the number of spectral channels: Comparison of acoustic hearing and cochlear implants. The Journal of the Acoustical Society of America 110(2): 1150–1163. doi: 10.1121/1.1381538.

- Haumann S., Hohmann V., Meis M., Herzke T., Lenarz T., Buchner A. (2012) Indication criteria for cochlear implants and hearing aids: Impact of audiological and non-audiological findings. Audiology Research 2(1): e12. doi: 10.4081/audiores.2012.e12.

- Heydebrand G., Hale S., Potts L., Gotter B., Skinner M. (2007) Cognitive predictors of improvements in adults’ spoken word recognition six months after cochlear implant activation. Audiology and Neuro-Otology 12(4): 254–264. doi: 10.1159/000101473.

- Holden L. K., Finley C. C., Firszt J. B., Holden T. A., Brenner C., Potts L. G., Skinner M. W. (2013) Factors affecting open-set word recognition in adults with cochlear implants. Ear and Hearing 34(3): 342–360. doi: 10.1097/aud.0b013e3182741aa7.

- Horn J. L., Cattell R. B. (1967) Age differences in fluid and crystallized intelligence. Acta Psychologica 26(2): 107–129. doi: 10.1016/0001-6918(67)90011-X.

- Huysmans E., de Jong J., van Lanschot-Wery J. H., Festen J. M., Goverts S. T. (2014) Long-term effects of congenital hearing impairment on language performance in adults. Lingua 139: 102–121. doi: 10.1016/j.lingua.2013.06.003.

- Kaandorp M. W., De Groot A. M. B., Festen J. M., Smits C., Goverts S. T. (2016) The influence of lexical-access ability and vocabulary knowledge on measures of speech recognition in noise. International Journal of Audiology 55(3): 157–167. doi: 10.3109/14992027.2015.1104735.

- Kaandorp M. W., Smits C., Merkus P., Goverts S. T., Festen J. M. (2015) Assessing speech recognition abilities with digits in noise in cochlear implant and hearing aid users. International Journal of Audiology 54(1): 48–57. doi: 10.3109/14992027.2014.945623.

- Lazard D. S., Vincent C., Venail F., Van de Heyning P., Truy E., Sterkers O., Blamey P. J. (2012) Pre-, per- and postoperative factors affecting performance of postlinguistically deaf adults using cochlear implants: A new conceptual model over time. PLoS ONE 7(11): 1–11. doi: 10.1371/journal.pone.0048739.

- Leung J., Wang N. Y., Yeagle J. D., Chinnici J., Bowditch S., Francis H. W., Niparko J. K. (2005) Predictive models for cochlear implantation in elderly candidates. Archives of Otolaryngology-Head & Neck Surgery 131(12): 1049–1054. doi: 10.1001/archotol.131.12.1049.

- Luteijn F., Barelds D. (2004) Handleiding van de Groninger Intelligentie Test 2 (GIT-2). Lisse: Harcourt Test Publishers.

- Lyxell B., Andersson J., Arlinger S., Bredberg G., Harder H., Ronnberg J. (1996) Verbal information-processing capabilities and cochlear implants: Implications for preoperative predictors of speech understanding. Journal of Deaf Studies and Deaf Education 1(3): 190–201. doi: 10.1093/oxfordjournals.deafed.a014294.

- Lyxell B., Andersson U., Borg E., Ohlsson I. S. (2003) Working-memory capacity and phonological processing in deafened adults and individuals with a severe hearing impairment. International Journal of Audiology 42(Suppl 1): S86–S89. doi: 10.3109/14992020309074628.

- McMurray B., Farris-Trimble A., Seedorff M., Rigler H. (2016) The effect of residual acoustic hearing and adaptation to uncertainty on speech perception in cochlear implant users: Evidence from eye-tracking. Ear and Hearing 37(1): e37–e51. doi: 10.1097/AUD.0000000000000207.

- McQueen J. M., Huettig F. (2012) Changing only the probability that spoken words will be distorted changes how they are recognized. The Journal of the Acoustical Society of America 131(1): 509–517. doi: 10.1121/1.3664087.

- Moore D. R., Edmondson-Jones M., Dawes P., Fortnum H., McCormack A., Pierzycki R. H., Munro K. J. (2014) Relation between speech-in-noise threshold, hearing loss and cognition from 40-69 years of age. PLoS ONE 9(9): e107720. doi: 10.1371/journal.pone.0107720.

- Mosnier I., Bebear J. P., Marx M., Fraysse B., Truy E., Lina-Granade G., Sterkers O. (2014) Predictive factors of cochlear implant outcomes in the elderly. Audiology and Neuro-Otology 19(Suppl 1): 15–20. doi: 10.1159/000371599.

- Munson B. (2001) Relationships between vocabulary size and spoken word recognition in children aged 3 to 7. Contemporary Issues in Communication Science and Disorders 28: 20–29. doi: 1092-5171/01/2801-0020.

- Pisoni D. B. (2000) Cognitive factors and cochlear implants: Some thoughts on perception, learning, and memory in speech perception. Ear and Hearing 21(1): 70–78. doi: 10.1097/00003446-200002000-00010.

- Plomp R., Mimpen A. M. (1979) Improving the reliability of testing the speech reception threshold for sentences. Audiology 18(1): 43–52. doi: 10.3109/00206097909072618.

- Ramscar M., Hendrix P., Love B., Baayen R. H. (2013) Learning is not decline: The mental lexicon as a window into cognition across the lifespan. The Mental Lexicon 8(3): 450–481. doi: 10.1075/ml.8.3.08ram.

- Roditi R. E., Poissant S. F., Bero E. M., Lee D. J. (2009) A predictive model of cochlear implant performance in postlingually deafened adults. Otology & Neurotology 30(4): 449–454. doi: 10.1097/MAO.0b013e31819d3480.

- Rubenstein H., Lewis S. S., Rubenstein M. A. (1971) Evidence for phonemic recoding in visual word recognition. Journal of Verbal Learning and Verbal Behavior 10(6): 645–657. doi: 10.1016/S0022-5371(71)80071-3.

- Shannon R. V., Fu Q. J., Galvin J., III (2004) The number of spectral channels required for speech recognition depends on the difficulty of the listening situation. Acta Oto-Laryngologica Supplementum 552: 50–54. doi: 10.1080/03655230410017562.

- Smits C., Festen J. M. (2011) The interpretation of speech reception threshold data in normal-hearing and hearing-impaired listeners: Steady-state noise. The Journal of the Acoustical Society of America 130(5): 2987–2998. doi: 10.1121/1.3644909.

- Smits C., Goverts S. T., Festen J. M. (2013) The digits-in-noise test: Assessing auditory speech recognition abilities in noise. The Journal of the Acoustical Society of America 133(3): 1693–1706. doi: 10.1121/1.4789933.

- Van Rooij J. C., Plomp R. (1990) Auditive and cognitive factors in speech perception by elderly listeners. II: Multivariate analyses. The Journal of the Acoustical Society of America 88(6): 2611–2624. doi: 10.1121/1.399981.

- Van Wijngaarden S. J., Steeneken H. J. M., Houtgast T. (2002) Quantifying the intelligibility of speech in noise for non-native listeners. The Journal of the Acoustical Society of America 111(4): 1906–1916. doi: 10.1121/1.1456928.

- Versfeld N. J., Daalder L., Festen J. M., Houtgast T. (2000) Method for the selection of sentence materials for efficient measurement of the speech reception threshold. The Journal of the Acoustical Society of America 107(3): 1671–1684. doi: 10.1121/1.428451.

- Zekveld A. A., George E. L. J., Kramer S. E., Goverts S. T., Houtgast T. (2007) The development of the text reception threshold test: A visual analogue of the speech reception threshold test. Journal of Speech, Language, and Hearing Research 50(3): 576–584. doi: 10.1044/1092-4388(2007/040).