Abstract

This article describes a mixed methods study of community-based participatory research (CBPR) partnership practices and the links between these practices and changes in health status and disparities outcomes. Directed by a CBPR conceptual model and grounded in indigenous-transformative theory, our nation-wide, cross-site study showcases the value of a mixed methods approach for better understanding the complexity of CBPR partnerships across diverse community and research contexts. The article then provides examples of how an iterative, integrated approach to our mixed methods analysis yielded enriched understandings of two key constructs of the model: trust and governance. Implications and lessons learned while using mixed methods to study CBPR are provided.

Keywords: mixed methods research, community-based participatory research, complexity, pragmatism, community-engaged research

Over the past two decades, community-based participatory research (CBPR) and other forms of community-engaged and collaborative research have employed interdisciplinary mixed and multi-method research designs (Creswell & Plano Clark, 2011) to generate outcomes that are meaningful to communities (Israel, Eng, Schulz, & Parker, 2013; Trickett & Espino, 2004; Wallerstein et al., 2008). CBPR has increasingly been seen as having the potential to overcome some challenges of more standard research approaches, thereby strengthening the rigor and utility of science for community applicability. These challenges include ensuring external validity (Glasgow, Bull, Gillette, Klesges, & Dzewaltowski, 2002; Glasgow et al., 2006), translating findings to local communities (Wallerstein & Duran, 2010), improving research integrity (Kraemer-Diaz, Spears Johnson, & Arcury, 2015), and demonstrating both individual and community benefit (Mikesell, Bromley, & Khodyakov, 2013). At the nucleus of CBPR is the belief that etiologic and intervention research that incorporates community cultural values and ways of knowing is critical for improving quality of life and (specific to this article) reducing health disparities. CBPR has built on previous principles from the Centers for Disease Control participatory models and international development practice (Gaventa & Cornwall, 2001; Kindig, Booske, & Remington, 2010; Kreuter, 1992), such as respect for diversity, community strengths, cultural identities, power-sharing, and colearning (Israel et al., 2013; Israel, Schulz, Parker, & Becker, 1998; Minkler, Garcia, Rubin, & Wallerstein, 2012; White-Cooper, Dawkins, Kamin, & Anderson, 2009).

Despite the growing recognition of the potential impact CBPR and community-engaged research have on health disparities, little is known about the best practices required to maximize impact. While many individual CBPR studies have used mixed methods to evaluate their own effectiveness, the field of CBPR is still in its infancy for translating which partnering practices, such as those related to decision making, conflict mediation, and resource sharing among others, are most effective for improving health equity across initiatives. In this article, we report on a national mixed methods investigation of the character of CBPR partnership practices across varied health research contexts at a meta-level. Recognizing the variation within CBPR practices and processes, we developed a mixed methods design to capture the unique characteristics of place while developing insights for the translation of partnering processes to policy, practice, and health outcomes across diverse communities.

Research for Improved Health (RIH): A Study of Community-Academic Partnerships was a 4-year (2009–2013) study funded by the National Institutes of Health (NIH) and co-led by the National Congress of American Indians Policy Research Center, the University of New Mexico Center for Participatory Research and the University of Washington, Indigenous Wellness Research Institute. The overarching goal for the RIH study was to gain insight into how CBPR processes and community participation add value to health disparities research in American Indian/Alaska Native (AI/AN) communities and in other diverse communities that face health disparities. The RIH project proposed four specific aims; we focus on the first aim of using mixed methods to assess the variability of CBPR health research partnerships across the nation (see Hicks et al., 2012, for additional RIH study aims). Underlying our methodologic aim was our application of an indigenous-transformative lens to investigate the intricacies and character of community–academic partnered research across diverse contexts. While the actual process of conducting mixed methods research is more complex than current, often-static, descriptions of typologies suggest (Guest, 2012), we implemented a cyclic parallel and sequential mixed methods type, supporting Johnson and Onwuegbuzie’s (2004) assertion of mixed methods being “an expansive and creative form of research” (p. 20).

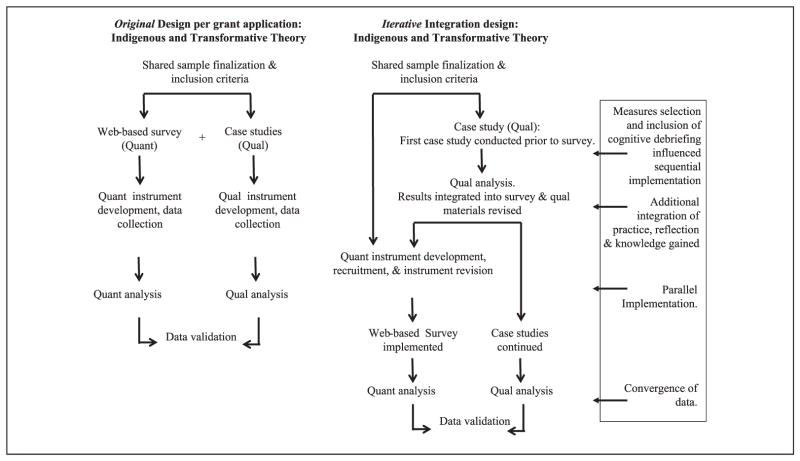

This article contributes to the current discussion on mixed methods and CBPR research in two ways. First, earlier CBPR research projects have mostly reported using mixed methods designs in individual studies. This is one of the first research projects that has used mixed methods to study CBPR processes and association with health and capacity outcomes at local and national levels. Second, while it is agreed that mixed methods involves the integration of qualitative and quantitative methods, the discussion still continues regarding the point of methodologic integration (Johnson, Onwuegbuzie, & Turner, 2007). This RIH project integrated a mixed methodology at all stages of the research process, often revisiting stages to incorporate new knowledge gained from practice. We refer to this as an iterative integration approach, in which our interdisciplinary team was grounded in an indigenous-transformative paradigm that recognized different ways of knowing at each stage and at critical decision points. This approach was adapted and expanded from the interactive approach that interrelates multiple research design components (Maxwell & Loomis, 2003): research purpose, conceptual framework, research questions, and method choice. By using the expanded iterative integration approach within our indigenous-transformative framework, we reflect on how each phase of the project was revisited to ensure operationalization of our philosophy and ways of knowing as well as related design components. Our original design was influenced by our own research team issues of partner readiness, priorities of our research questions, and roll out of data collection. Figure 1 illustrates the impact of our participatory processes on the mixed methods design, which will be explored throughout the article.

Figure 1.

Testing CBPR conceptual model with iterative mixed methods research design.

In this article, we present the development of the RIH mixed methods design as a progression from a 3-year, exploratory, theory-generating, pilot grant into a 4-year, theory-testing grant, totaling a 7-year trajectory. We will briefly describe the pilot study that resulted in a CBPR conceptual model and subsequent RIH project and partnership. We then discuss Specific Aim 1 of the national RIH study—to explicate and test the model across the variability, including intricacies and character, of CBPR practice and outcomes across the country. We show how we integrated our conceptual frameworks of indigenous and transformative theory and distinct ways of knowing into each stage of research and choice of methods over time. We narrate how we moved from the proposed streamlined parallel quantitative and qualitative design, to a sequential mixed methods design that returned to parallel data collection and analyses based on the practical unfolding of research implementation. We discuss our inquiry into two key constructs of the model, “trust” and “governance,” to illustrate the application of iterative integration of methodology, and the capacity for both localized and naturalistic generalizable knowledge. We conclude by drawing implications and lessons learned for using mixed methods within complex contexts of CBPR research.

Development of the Research for Improved Health Study

CBPR Conceptual Model Pilot Project

The RIH study was born out of a 3-year exploratory pilot study between the University of New Mexico and the University of Washington to understand what constructs matter most to CBPR partnerships. The pilot was funded as a supplement to an existing Native American Research Centers for Health (NARCH) grant (Hicks et al., 2012). The NARCH mechanism is a collaboration between the Indian Health Service and the NIH to (1) reduce distrust of research by AI/AN communities, (2) develop a cadre of AI/AN scientists, and (3) conduct rigorous research to reduce AI/AN health disparities. The NARCH perspective, therefore, significantly contributed to our indigenous and transformative research paradigm, which we applied to a diverse set of CBPR partnerships beyond AI/AN communities. We developed our research questions with the lens of understanding the role and practices of research democratization, including community ownership of research and knowledge for health equity.

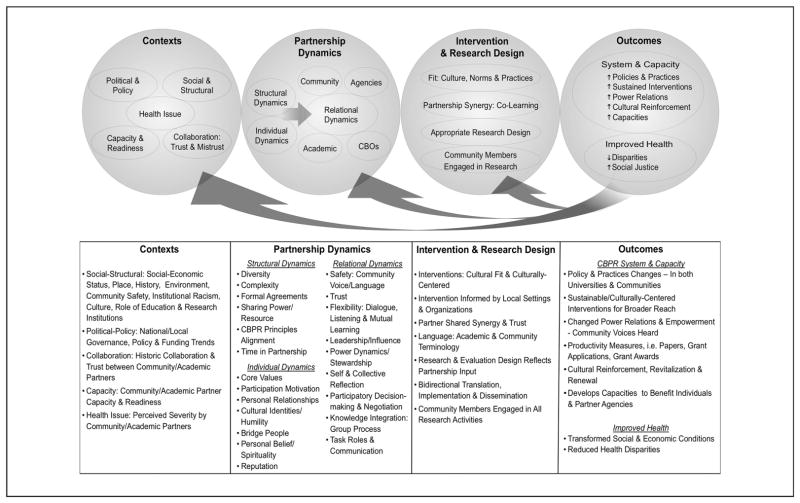

The specific purpose of the pilot project was to develop a conceptual model that identified the salient criteria required for successful CBPR partnerships. We conducted an extensive literature review, held ongoing discussion and deliberation with an advisory council comprised of community and academic CBPR experts, conducted a web-based quantitative survey to assess the appropriateness of the model constructs (Fitch et al., 2001), and held six qualitative community focus groups with local and national partnerships to validate and revise the model (Figure 2; Belone et al., 2016). The resultant CBPR conceptual model is divided into ovals representing four dimensions: context (social–political–cultural) of the research; group dynamics within partnerships; intervention and research design, which emerge from partnership processes; and CBPR and health outcomes, which are project dependent (Wallerstein & Duran, 2010; Wallerstein et al., 2008). The next step was to test the model with a larger sample.

Figure 2.

CBPR conceptual model.

Research for Improved Health Study

Toward the end of the pilot project, University of New Mexico and University of Washington researchers approached the National Congress of American Indians Policy Research Center as a national AI/AN policy partner to collaborate on a cross-site national study of CBPR by applying to the NARCH funding mechanism. The National Congress of American Indians Policy Research Center agreed to participate in the RIH study as an opportunity to contrast and compare partnership processes. Of particular interest was comparing the impact of AI/AN tribal governance and approval structures on health with other diverse communities that have more diffuse leadership (Duran, Duran, & Yellow Horse Brave Heart, 1998; Smith, 2012). As a result, our expanded partnered research team reflected a diverse mix of racial/ethnic and economic backgrounds, multiple academic disciplines, and lived experiences of oppression and privilege. Therefore, our collective epistemological background was rooted in our own experiences of the academy, our communities of origin, our extensive history of partnered research experiences, and our shared value in the power of research to effect social transformation. We further anticipated mutual benefits of colearning from diverse communities’ experiences with CBPR in order to test the CBPR model and strengthen the use of CBPR for improving health equity.

Research Purpose and Conceptual Frameworks

Undergirding our research purpose—to test the CBPR conceptual model and assess the contribution of partnering practices to health equity outcomes—was a paradigm that combined indigenous theory (Smith, 1999; Wilson, 2008) with a transformative framework (Mertens, 2007). Indigenous theories are motivated by decolonizing critiques of historic research abuses (Lomawaima, 2000; Smith, 1999; Walters et al., 2012). They honor cultural strengths and community knowledge to enhance research trust and a multidirectional learning process (Dutta & de Souza, 2008; Lomawaima, 2000; Norton & Manso, 1996). These cultural, political, and socioeconomic realities are embodied in the model’s Context dimension, which anchors all CBPR processes that follow. The concept of trust is found throughout the model: within Context, as “historic degree of collaboration and trust between community/academic” partners; in the Group Dynamics dimension as “listening, mutual learning, and dialogue”; in Intervention/ Research Design as “partnership synergy”; and in Outcomes as “sustainability.” Trust and power are inextricably linked, and one must be considered in the presence of the other.

Decolonization of research through indigenous theory can return control, and thereby power, to communities (explored later through the “trust” and “governance” examples). The central tenet of the transformative framework is that “power is an issue that must be addressed at each stage of the research process” so that research processes can impact systems to create genuine transformation (Mertens, 2007, p. 213). As found in the literature, equalizing power dynamics in research relationships is at the core of CBPR, which is founded on addressing inequitable community conditions and leveraging relational and knowledge resources for social transformation (Chilisa & Tsheko, 2014; Gaventa & Cornwall, 2001; Israel et al., 2013; Trickett, 2011; Wallerstein & Duran, 2006). Power dynamics are also laced throughout the model as “governance” within Context, “power-sharing” within Group Dynamics, “fit within the community” in Intervention/Research, and “changes in power relations” within Outcomes.

While CBPR as a whole has adopted transformative ideas of endorsing community partners as knowledge co-creators and of applying research findings toward social justice, indigenous methodologies add more focused attention to culturally driven epistemologies and community control over research, such as ensuring Tribal (or community) data ownership after research is completed. These indigenous insights can be valuable for all CBPR partnerships who seek to leverage multiple epistemologies, produce culturally centered and sustainable efforts to improve community health, develop trusting relationships, and reshape power relations within complex historic and current relationships between communities and dominant society (Mertens, 2010, 2012).

Research Design and Method

Mixed Methodology

With the indigenous-transformative framework embodied in the CBPR conceptual model, our transdisciplinary team embraced a mixed methodology to provide greater depth and breadth than a single method could provide (Mertens, 2012; Wallerstein, Yen, & Syme, 2011). We understood that methodological decisions needed to start with investigating social–political–culture contexts and ever-changing community–academic partnering relationships (Creswell & Plano Clark, 2011; Fassinger & Morrow, 2013; Israel et al., 2013; Mertens, 2012; Ponterotto, Mathew, & Raughley, 2013). While the conceptual model appears linear on paper, we expected mixed methods would enable us to pursue an investigation of partnership processes as complex ecological phenomena within dynamic contexts (Creswell & Plano Clark, 2011; van Manen, 1990). Many CBPR researchers have called for a recognition of this dynamic, ecological-systems perspective, with feedback loops in a system as opposed to separate and unrelated parts (Hawe, Shiell, & Riley, 2009; Trickett & Beehler, 2013). The conceptual model was developed as a response to this call and the complexity perspective allowed us to capture the breadth and depth of dynamic partnering processes within complex contexts and to identify transferable understandings. Because CBPR and mixed methods share complementary foundations in their approach to knowledge formation and use, the application of mixed methods to study CBPR on a meta-level was a natural fit.

Specific Methods

To widen the scope of inquiry (Greene, Caracelli, & Graham, 1989) and implement a mixed methods approach from conception to analyses, RIH originally proposed a parallel design (see Figure 1), with the intent to give equal weight to qualitative and quantitative methods. The plan was to draw a nation-wide sample of more than 300 federally funded and diverse CBPR partnerships for a cross-sectional Internet survey (for description of type of partners, see Pearson et al., 2015) and simultaneously recruit six to eight diverse qualitative case studies. Qualitative case study design was important for weakness minimization (Onwuegbuzie & Burke Johnson, 2006), in that the cross-sectional nature of the Internet survey was unable to assess the contextual, temporal, and dynamic nature of partnership processes. For example, we asked case study members to create a timeline of their partnership and identify key events. Rather than start from grant funding (as expected), all case studies took us back historically, such as to the destruction of an American Indian village by the federal army 100 years before or to the Missouri Compromise legislation on segregation. These timelines were empirical reminders of the importance of an indigenous culturally centered approach to each partnership’s context as well as the necessity of grounding partnership actions in transformation of historic inequities. As part of the iterative integrative approach, these reflections and gained knowledge were incorporated into the RIH study (see Figure 1).

In terms of parallel construction, the RIH study team delineated University of New Mexico as responsible for the qualitative design and University of Washington for the quantitative design. After design conceptualization, the next methodological integration occurred during development of the data collection instruments to ensure conceptual alignment of concepts and constructs. The National Congress of American Indians Policy Research Center monitored project administration, participated both in the qualitative and quantitative teams, and was the intellectual driver of research on governance. Since the RIH research team spanned three nonadjacent states, monthly executive calls were held to ensure ongoing communication as well as philosophical and conceptual integration. For the more in-depth collaboration, at least one member of each of the three partners participated in weekly qualitative and quantitative team meetings to create team cross-pollination.

Team cross-pollination was an important part of our iterative integration approach, which produced opportunities to contribute to each other’s data collection methods and shaped our analytic approaches. While RIH was not a CBPR project per se, we were guided by CBPR principles, and as such, the team built in consultation from our Scientific Community Advisory Council (SCAC), composed of academic and community CBPR experts (Hicks et al., 2012). SCAC members included university faculty, staff from community-based organizations, advocates, public health workers, and tribal staff and community members. From the SCAC we created qualitative and quantitative subcommittees for ongoing input into the design and methods. Input from SCAC partners expanded our capacity to integrate community voice, always challenging in interdisciplinary teams.

For the study sample, all available information on federally funded CBPR and Community-Engaged Research projects was downloaded from the 2009 NIH RePORTER database. The sample included U.S.-based, R and U mechanism research with more than 2 years of funding as well as eligible CDC-funded Prevention Research Centers projects and tribal and/or AI/AN research projects receiving NARCH funding. (For sampling details, see Pearson et al., 2015.) All identified projects were invited to participate. The qualitative strand drew from the same sample pool using a robust multiple case-study design. Purposive sampling was used for individual interviews and focus groups (Herriott & Firestone, 1983; Lindlof & Taylor, 2011; Stake, 2006; Yin, 2009). Through these case study data we sought to generate a rich understanding of how differing historical and contextual conditions interacted with partner perceptions and processes to produce a range of outcomes.

Implementation Changes: Instrument Finalization, Data Collection, and Analysis

Our original intention to construct instruments in parallel fashion was transformed into an iterative and sequential approach at a critical decision point that affected the timing of qualitative and quantitative methods implementation (Creswell & Plano Clark, 2011). Because the Quantitative Team took longer than expected to finalize the instrument scales, the Qualitative Team finished the first draft of case study interview and focus group guides and began data collection first, resulting in sequential implementation (see Figure 1). The sequencing was beneficial as the Qualitative Team was able to share preliminary results as well as reflections and knowledge from the first case study to inform the final version of the surveys and second draft of the qualitative guides. Participants in the first case study, for example, provided face validity to the trust typology (Table 2) through responses to questions about trust at different times of their relationship. The typology was then integrated into the survey instrument along with a separate trust scale from the literature to validate the new typology. Thus, the qualitative approach helped inform the survey instrument.

Table 2.

Trust Typology, Defining Characteristics, and Supporting Literature.

| Trust types | Characteristics | Supporting literature |
|---|---|---|
| Critical-reflective trust | Trust that allows for mistakes and where differences can be talked about and resolved | Identification-based: Lewicki and Bunker (1995) |
| Proxy trust | Partners are trusted because someone who is trusted invited them | Affective and cognitive: McAllister (1995) and Mayer, Davis, and Schoorman (1995); Organizational citizenship: Organ (1988); Relational: Rousseau, Sitkin, Burt, and Camerer (1998); Third-party influences: Deutsch (1958) |
| Functional trust | Partners are working together for a specific purpose and timeframe, but mistrust may still be present | Reputation: McKnight, Cummings, and Chervany (1998); Familiarity: Webber (2008); Formal agreement: Shapiro (1987) and Sitkin and Roth (1993) |
| Neutral trust | Partners are still getting to know each other; there is neither trust nor mistrust | Co-alliance: Panteli and Sockalingam (2006); Knowledge-based: Lewicki and Bunker (1995); Calculus-based: Lewicki and Bunker (1995) |
| Unearned trust | Trust is based on member’s title or role with limited or no direct interaction | Swift trust: Meyerson, Weick, and Kramer (1996); Presumptive: Webb (1996) |
| Trust deficit (suspicion or mistrust) | Partnership members do not trust each other | Role-based: Barber (1993); Suspicion: Deutsch (1958) and Luhmann (1979); Mistrust: Lewicki (2006); Cynical disposition: Hardin (1996) and Rotter (1971, 1980) |

While instrument finalization occurred at different time points, both the Quantitative and Qualitative teams sought feedback and other suggestions from SCAC advisory committee members. The final Quantitative instruments included (1) a web-based Key Informant Survey completed by the CBPR Principal Investigator (PI) that featured questions related to project-level facts, such as funding, years of partnering, partner member demographics, and so on; and (2) a web-based Community Engaged Survey that included perceptions of partnering quality, such as trust, power-sharing, governance, and multiple CBPR and health outcomes, mapped to the CBPR conceptual model domains. The final Qualitative case study instruments included (1) an individual interview guide, (2) a focus group guide, (3) a template for observation of a project partnership meeting, (4) a template for capturing historical timelines and social world map, and (5) a brief partner survey. The complete instruments can be found at http://cpr.unm.edu/research-projects/cbpr-project and http://narch.ncaiprc.org/index.cfm.

For the web-based survey there were a total of 200 PIs (68% response rate) who responded to the key informant survey and 450 participants in the community engagement survey (74% response rate). The seven case studies were the following: a substance abuse prevention partnership in the Pacific Northwest; a colorectal cancer screening project in California; a cardiovascular disease prevention project in Missouri; a cancer research project in South Dakota; an environmental justice project in New Mexico; and two projects in New York State, (1) a faith-based initiative addressing nutrition/diabetes and access to care and (2) a healthy weight project. In addition to health topics these projects served African American, Latino/a, American Indian, Deaf, and Asian communities. In total there were more than 80 individual interviews and six focus groups. The qualitative sample was purposefully diverse in key contexts, such as urban/rural demographics, geographic region, health issue, and ethnic/racial and other social–political identities.

Mixed Methods Data Analysis

As initially planned, our data analysis of the qualitative and quantitative data has been focused on co-validation, or triangulation, of results to understand the added value of participatory partnering practices for systems and health outcomes. We compared results between methods strands to validate findings in an effort to explain the phenomena and ensure the findings were not an artifact of a particular method (Johnson et al., 2007).

We provide two examples of co-validation in the next section: trust and governance. These two examples were selected as they are integral to the indigenous-transformative philosophy this project adopted and are understudied in health and CBPR research. For our analysis strategy we developed matrices of each specific aim, its research questions, and data analyses plans. Table 1 illustrates four empirical mixed methods research questions associated with our Specific Aim 1. These individual research questions reflect our capacity to benefit from the flexibility of mixed methods, with varying use of quantitative or qualitative methods to explore particular constructs in the CBPR model.

Table 1.

Mixed Methods Analysis Approach for Specific Aim 1.

| Specific Aim 1 | Research question | Quantitative data analysis | Qualitative data analysis | Relationship of quantitative and qualitative data analysis |
|---|---|---|---|---|
| Describe the variability of CBPR characteristics across dimensions in CBPR model to identify differences and commonalities across partnerships. | Research Question 1: How much variability is there in characteristics of CBPR projects? | Descriptive analysis of CBPR processes | Qualitative data to understand variability and dynamic/temporal nature of CBPR processes | Convergent Parallel: Qualitative and Quantitative to address same issue |
| | Research Question 2: How much congruence or divergence is there among partners on perceptions of context, group dynamics, research design, and outcomes? | Examine the intraclass correlation (i.e., degree of agreement of scores for members of the same partnership; and community vs. academic members) | Use qualitative data to look at variability of measures to add insight to quantitative data | Exploratory Sequential: Qualitative follows Quantitative |
| | Research Question 3: How does the concept of CBPR differ across partnership contexts? | Examine which CBPR principles are endorsed and compare across types of projects | Within a utilitarian versus worldview continuum noted in the literature, identify which characteristics differ and why. | Convergent Parallel: Qualitative and Quantitative to address same issue |
| | Research Question 4: How do group dynamics develop over time? | | Explore themes illustrating how relational dynamics shape motivation and actions of partners. Describe how groups form and what processes groups use to facilitate effective CBPR. | Exploratory: Qualitative only |

The first research question explores the variability of CBPR projects through convergence of qualitative and quantitative data. The second question explores the congruence or divergence among partners in their perceptions of context, group dynamics, intervention/research design, and outcomes. We examined the quantitative data regarding the degree of alignment among community and academic partners and then followed with qualitative data to explain reasons for more or less congruence. The third question examines how CBPR differs across partnership contexts, which was explored with both methods simultaneously for congruence. Finally, the fourth research question explores how group dynamics developed over time, which can be addressed only qualitatively because the quantitative data are cross-sectional. Although the original plan was to emphasize the methods equally, we recognized the need to vary the emphasis depending on the research question.
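As an illustration of the intraclass correlation named for Research Question 2, the following is a minimal sketch of a one-way random-effects ICC(1), computed from between- and within-partnership mean squares. The article does not state which ICC form or software the team used, so the choice of ICC(1), the function name `icc1`, and the scores below are assumptions made only for illustration.

```python
from statistics import mean


def icc1(groups):
    """One-way random-effects ICC(1).

    `groups` maps a partnership label to the list of scores given by its
    members. Values near 1 mean members of the same partnership agree
    closely relative to the differences between partnerships.
    """
    scores = [list(s) for s in groups.values()]
    sizes = [len(s) for s in scores]
    g = len(scores)
    n_total = sum(sizes)
    if g < 2 or n_total <= g:
        raise ValueError("need at least two groups and some within-group replication")

    grand_mean = sum(sum(s) for s in scores) / n_total
    ss_between = sum(len(s) * (mean(s) - grand_mean) ** 2 for s in scores)
    ss_within = sum((x - mean(s)) ** 2 for s in scores for x in s)

    ms_between = ss_between / (g - 1)
    ms_within = ss_within / (n_total - g)

    # Adjusted average group size; equals the common size when groups are balanced.
    k0 = (n_total - sum(n * n for n in sizes) / n_total) / (g - 1)
    return (ms_between - ms_within) / (ms_between + (k0 - 1) * ms_within)


# Invented scores for three hypothetical partnerships; not RIH data.
example_scores = {
    "partnership_a": [4, 5, 4],
    "partnership_b": [2, 3, 2],
    "partnership_c": [5, 5, 4],
}
print(round(icc1(example_scores), 3))
```

A low value on a given scale would flag partnerships whose members perceive the construct differently, which is the kind of divergence the qualitative data were then used to explain.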

What follows are two examples of data analysis for Specific Aim 1, Research Question 1 (see Table 1), to show how we integrated a mixed methods approach in the study of the variability of two characteristics: trust and governance. These two examples were chosen because each has been recognized as a salient characteristic essential for successful CBPR partnerships. Both, however, are understudied, requiring mixed methods to fully understand the conceptual and experiential dimensions of these constructs within and across CBPR projects. Based on our philosophical indigenous-transformative underpinnings, they provide good examples of our iterative integration and triangulation in the analysis.

Example 1: Trust Data Mixed Methods Analyses

Trust is widely accepted as a dynamic and multidimensional construct necessary for balancing power and ensuring respectful partnering, consistent with indigenous and transformative theories. Despite its importance, measures of trust in CBPR are still nascent. To address this gap, an initial version of the trust typology was developed during the pilot study and evolved through consultations with community–academic partners and through examination of the extant literature. The trust typology was created as an alternative measure for understanding the process of trust development in CBPR partnerships (Lucero, 2013). This typology represents a developmental model, though not necessarily anchored at opposite poles. It is not assumed that community–academic relationships begin at suspicion; rather, a partnership can begin at any type of trust, and it is up to the partnership to determine the type of trust necessary for its project. Wicks, Berman, and Jones (1999) refer to this as optimal trust, which focuses on finding the optimal point of partnership functioning appropriate to the context (see Table 2).

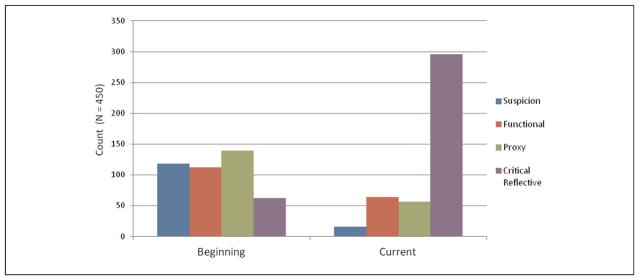

Using the research design previously mentioned, case study interviewees were asked to describe trust at the beginning of the partnership and at its current state, and to explain what caused changes to occur. The web-based survey provided the types of trust with their definitions and asked participants to select the most appropriate type of trust at the beginning of their partnership and at its current stage, and to choose the type of trust expected in the future. Quantitative and qualitative methods were given equal emphasis and data were analyzed simultaneously to create a convergence of knowledge about changes in trust and how and with what strategies these changes occur.
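The survey items described above yield, for each respondent, a pair of trust types (beginning and current). A minimal sketch of how such pairs could be tabulated is shown below. The function name `trust_transitions`, the short string codes for the six types in Table 2, and the example responses are all hypothetical; the article does not describe the team's actual tabulation procedure.

```python
from collections import Counter

# Short codes for the six trust types in Table 2 (the codes are hypothetical).
TRUST_TYPES = (
    "critical-reflective",
    "proxy",
    "functional",
    "neutral",
    "unearned",
    "deficit",
)


def trust_transitions(responses):
    """Count (beginning, current) trust-type pairs across respondents."""
    pairs = [tuple(r) for r in responses]
    for beginning, current in pairs:
        for label in (beginning, current):
            if label not in TRUST_TYPES:
                raise ValueError(f"unknown trust type: {label!r}")
    return Counter(pairs)


# Invented responses for illustration only; these are not RIH survey data.
example_responses = [
    ("deficit", "critical-reflective"),
    ("proxy", "critical-reflective"),
    ("proxy", "functional"),
    ("deficit", "critical-reflective"),
]

for (beginning, current), count in sorted(trust_transitions(example_responses).items()):
    print(f"{beginning} -> {current}: {count}")
```

Counting pairs rather than each time point separately keeps the direction of change visible, which is what allows survey patterns to be compared with interviewees' accounts of how trust changed.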

Data analysis from each method supported the theoretical notion that various trust types exist in practice. Interview participants described three types of trust that matched the typology: proxy trust, proxy mistrust, and no trust or suspicion at the beginning of their partnerships (see Figure 3). For example, an academic partner who knew the PI from past projects described proxy trust: “I trusted the PI. He was the one that I actually knew best of all the partners, ’cause I had a longer history of working with him. But he trusts the partners; and so naturally I trust the partners.”

Figure 3. Beginning and current trust types.

In communities of color suspicion often stems from institutional histories of mistrust and lack of collaboration, issues clearly articulated in the data, situating trust as key to the transformative agenda of partnership equity and shared power. On being approached by researchers, for example, one interview participant stated, “I sometimes admit that I wonder if people have a hidden agenda that they’re not sharing with us. I think it’s a habit. I think it’s ingrained.” Similarly, an academic member said,

On the one hand, there’s this huge legacy of mistrust that has all the things . . . this notion of hidden transcripts; and I would say there’s a huge amount of that that still goes on. We hear certain conversations and not others.

Quantitative data converged with qualitative outcomes by showing that partners reported that their partnerships began in either suspicion, proxy trust, or, to a lesser extent, functional trust.

Regarding current trust, the majority of interview participants described their partnerships functioning at critical reflective trust. A community member shared this view of critical reflective trust:

What has made this project successful is the same thing that happened from the beginning is the fact that you are working with people who are open to listening and to hearing, and that you feel valued, heard, and appreciated, so that your voice counts . . . oftentimes, people come into these kinds of communities . . . and they often come with their own agenda.

The quantitative data complemented qualitative assessments of current trust. Survey respondents reported that their partnerships operated within either critical reflective trust or functional trust (see Figure 3). These data demonstrate the value of mixed methods by verifying each other as well as offering distinct contributions. Both the qualitative and quantitative outcomes indicate that trust types do exist in practice. Data provided evidence that many partnerships began in mistrust/suspicion or proxy trust, and over time those same partnerships shifted to functional or critical reflective trust. In terms of unique contributions the qualitative data provided information about what contributed to the process of trust development.

A mixed methods approach to study CBPR advanced the understanding of trust in three ways. First, adding the trust typology to the literature broadens how we conceptualize trust, a complex factor that changes over time and one that is critical for equalizing voice and power within partnerships. This view lines up with trust being a highly context-specific construct and offers opportunities for partnerships to assess their own experience of trust and, through mutual reflection, identify their visions and practices toward the trust they would like to achieve. Second, convergence of data provided breadth and depth regarding trust development, important for our indigenous lens of respect and honoring community voice. The quantitative data provided a snapshot of trust at different times in the partnership relationship, whereas the qualitative data elaborated on how and why types of trust developed over time, which showcased the transformative nature of CBPR. Third, through these data we see trust functioning at the local levels, with emerging patterns that can be transferable to other contexts.

Example 2: Governance Data Mixed Methods Analysis

Governance is a complex phenomenon that involves a number of practices, policies, and functions (Cornell, Jorgensen, & Kalt, 2002). Governance of research projects involves such practices as approval to conduct research, oversight and regulation of research ethics, engagement with research design and implementation, recruitment of research staff and participants, oversight of partnerships and resources, and oversight and management of data and dissemination (Brugge & Missaghian, 2006; Colwell-Chanthaphonh, 2006; Schnarch, 2004). While governance under sovereignty is unique to AI/AN communities, other communities can benefit from increased understanding and knowledge of how governance, such as formal data agreements or approval processes, affects CBPR processes toward social transformation and shared power outcomes.

In pursuing our indigenous lens we sought to understand the role and variability of governance in CBPR projects. Quantitative methods were given priority with qualitative methods supporting the primary method. The quantitative data explored factors such as who had final approval of the project, who controlled project resources, and the nature of agreements that were developed. For example, we asked PIs to name the body that provided final approval of the project and found much diversity in our sample: (1) Community Agency: Yes = 114, No = 86; (2) Tribal government or health board: Yes = 45 (from AI/AN research projects), No = 155; (3) Individual: Yes = 59, No = 141; (4) Advisory board: Yes = 70, No = 130; (5) Other: Yes = 8, No = 175; and (6) No decision: Yes = 25, No = 175. In this research we did not attempt to identify the “gold standard” governance model but instead a spectrum of governance, with each strategy contributing to decolonization by returning some amount of control of research to the community. For example, in another publication using RIH quantitative data, Oetzel, Villegas, et al. (2015) found that approval by tribal government/health board/public health office was associated with greater community control of resources, greater data ownership, greater authority on publishing, greater share of financial resources for the community partner, and an increased likelihood of developing or revising institutional review board policies.
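As a rough illustration of how the approval counts above can be read, the following Python sketch converts each reported Yes/No pair into the percentage of PIs answering yes. The names `approval_counts` and `yes_share` are hypothetical and introduced only for this example. The sketch assumes each approval body was a separate yes/no item, so the denominator for each item is its own Yes plus No count. It is not the analysis code used in the study.

```python
# Yes/No counts exactly as reported in the text for the body giving final approval.
approval_counts = {
    "Community agency": (114, 86),
    "Tribal government or health board": (45, 155),
    "Individual": (59, 141),
    "Advisory board": (70, 130),
    "Other": (8, 175),
    "No decision": (25, 175),
}


def yes_share(yes, no):
    """Return the percentage of 'Yes' answers among responses to one item."""
    return 100.0 * yes / (yes + no)


# Percentage of 'Yes' answers for each approval body.
shares = {body: yes_share(yes, no) for body, (yes, no) in approval_counts.items()}

# Print the bodies from most to least frequently reported.
for body, pct in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    yes, no = approval_counts[body]
    print(f"{body:<36} {yes:>3} of {yes + no:>3}  ({pct:.1f}%)")
```

Reading the counts as percentages makes the spread easier to compare across approval bodies, which is the "spectrum of governance" the paragraph describes.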

The qualitative data helped illustrate the strategies across the spectrum. For example, in our case studies with tribal partnerships, formal governance structures included advisory health and cultural committees, with projects under the oversight of tribal government’s official approval, including one tribal institutional review board created through tribal council resolution. Typically, in tribal communities there are clear lines of responsibility identified (usually through Memoranda of Understanding [MOU]) for decision making, oversight, protection of tribal citizens and indigenous knowledge, and community benefit. In case studies in other communities there was more diversity, with relationships dictating the formality (with MOUs or agreements) or informality of the community governance structure. Initial approvals involved core individuals or leaders who then negotiated with academics who held decision-making authority early on. Community committees often assumed more authority over time (and with the support of the academic partners). A community partner in one case study described this change:

[The academic partner] was like, “We are in the city. You guys are down there. We can’t do all this work.” And that is when the power shifted. But it shifted to where we are now communicating, coming up with the strategies, coming up with new ideas, and then taking it to the university.

Increasingly, CBPR practitioners have acknowledged the importance of formal agreements for publications and decision making (Yonas et al., 2013), linking back to functional trust and the conscious and negotiated development of mutual benefit and equity of relationships.

A mixed methods approach to governance in CBPR projects led to a more robust and meaningful analysis in four ways. First, through engagement of our Native community partner, the National Congress of American Indians Policy Research Center, our team recognized that the theory and level of community governance needed to be a key element of the CBPR model. Through cross-team discussions, we then developed governance elements for both qualitative and quantitative instruments, demonstrating that our policy partner was not merely a project administrator but contributed significantly to the science, reflecting one of the key stances of shared power within CBPR.

Second, while academic partners typically understood governance to mean research approval, our cross-team discussions led to more nuanced understandings that were reflected by survey measures about project resourcing, partnership agreements, and power-sharing outcomes. From the qualitative data, we moved from talking about governance as a single event to understanding an ongoing negotiation about authority and accountability for research impact at the community level. These insights about continual negotiation came during mixed methods data analysis, which caused us to wish we had asked other questions in the survey about resource sharing negotiation, stewardship, and partnership decision making. Yet because of our triangulation of the data, we have returned to both datasets with a sharper sense of context and nuance of how governance matters in research partnerships, a demonstration of the interactive approach.

Third, our mixed methods approach to governance in CBPR research design caused us to frequently revisit a conversation about community benefits. Many conversations started with discussion about differences in “informal” and “formal” approval structures in the range of communities in our sample, and we constantly considered the uniqueness of tribal sovereignty in relation to other communities’ authority to set research policy. With our project being funded by the NARCH mechanism, we struggled with how to ensure insights for tribal communities while also incorporating other communities facing health disparities. As an example, AI/AN communities have the unique status of being sovereign nations with their own primary government that works on behalf of its community while managing relations with federal and/or state governments. Multiple governments govern other communities of color, and leadership is often highly distributed. Therefore, while lessons learned about governance in AI/AN communities are very different from those learned in other communities, using the broader scope of governance as a process and an arena for power sharing of data and publication, for example, provides insights for non-Native populations.

Fourth, the conversation about “formal” and “informal” approval structures manifested through the data in both tribal and non-tribal communities. We were able to see emerging patterns of multiple governance structures. Although these structures were expressed differently within local case study sites, they are potentially generalizable practices that can be analyzed, within the quantitative data, for their contributions to CBPR and health outcomes (Oetzel, Zhou, et al., 2015).

Reflections and Conclusions

In this article, we have described our mixed methods study of CBPR processes and outcomes, with the aim of testing the CBPR conceptual model and the variability of research partnerships across the United States. Our study and the CBPR model were grounded in indigenous and transformative theory and the pursuit of co-created knowledge among community and academic partners. We have described the convergent processes of drawing from a common conceptual and theoretical framework to apply qualitative and quantitative methods in data collection, analysis, and inference about CBPR processes and outcomes. Unlike much of the mixed methods literature, which articulates a defined protocol or type (Guest, 2012), we have illustrated how we combined a flexible approach with parallel and sequential iterations in design, data collection, and analyses. We have shown how our mixed methods sustained a commitment to the indigenous theoretical perspective, comparing tribal and nontribal communities, and to the transformative goal of seeking to understand interactions between equitable CBPR partnering practices and health equity outcomes. Here we discuss the implications of mixed methods for understanding our own RIH research questions and provide lessons learned to contribute to the integration of mixed methods into CBPR.

The major contribution of our RIH mixed methods research is evidence resulting in a deeper understanding of our CBPR conceptual model and of how context and relational dynamics impact research outputs and promote or constrict proximal and distal outcomes at the individual, agency, cultural-community, and health status levels. We have found mixed methods essential to study CBPR processes and outcomes, especially through our iterative integrative approach and based on our multiple epistemological perspectives. Contextual features, for example, could be explored in-depth in the case studies in ways not possible with the survey. Our qualitative timeline dialogue enabled case study partners to enrich their understanding of their partnership within historic events (i.e., decades or even a hundred years ago) and in struggles for equity over time. While the quantitative data provided evidence of associations between partner dynamics and proximal and distal CBPR outcomes, the qualitative data provided the contextual analysis for how and why individual partnering constructs may or may not be salient for particular outcomes.

We argue therefore that CBPR partnerships face challenges that are best met with mixed research methods. CBPR partnerships are framed by core contextual and partnership aspects that cannot be understood by static concepts implicit in cross-sectional survey research alone; these aspects are by nature complex and changing. We particularly sought to understand the lived realities of adverse conditions as well as the strengths of indigenous communities, communities of color, and others who face health disparities who can contribute their own intervention strategies. Given the emphases on external validity of research findings and the need to better translate research to diverse contexts (Glasgow et al., 2006), the grounding of interactive and iterative mixed methods designs in complex CBPR contexts makes sense, even as the discussion of validity in mixed methods is still emerging (Onwuegbuzie & Burke Johnson, 2006). That being said, we believe inference transferability, especially ecological transferability, results from these data (Teddlie & Tashakkori, 2003), which point to recommendations for CBPR practice, programs, and policies across multiple diverse communities.

Lessons Learned

In this last section, we articulate lessons we have learned as we practiced mixed methods within a CBPR approach and investigated CBPR partnership processes. Our lessons are fundamentally about how to embody fortitude and wisdom within a context of real partners, real suffering, real budget constraints, and real capacity limits to bring about the best short- and long-term outcomes possible.

Lesson 1

Transformative paradigms require the development of shared values and agreements to engage with multiple epistemologies that seek equity and social justice within partnered research. The values and assumptions of a transformative research paradigm helped capture the dimensions and constructs of our CBPR model and enriched our dialogue about research methods. Our indigenous-transformative paradigm grounded us in specific histories and theories of health disparities and influenced our decision making about instrumentation, sampling, and data collection methods. It influenced our decisions to spend extra time to obtain a full sample of the NARCH tribal projects (among the total RePORTER sample of all 2009 federally funded research partnerships), to delve deeply into history within our context dimension, and to focus on constructs that mattered in terms of equity, such as trust, governance, cultural-centeredness, and power- and resource-sharing. Our commitment to shared decision-making power kept us open to discussions and even admonitions from each other when our actions differed from our stated values and ideals. Because we shared fundamental values and trusted one another, we all wanted our actions to align with our deepest aspirations; we were able to move toward our own goal of partnership synergy as an important personal and professional outcome.

Lesson 2

Serendipity and flexibility are often the researcher’s friends in ensuring accountability to the research and the community. A flexible approach based on research implementation challenges enables researchers to make decisions along the way that best answer new and evolving research questions as they arise. The iterative nature of this project, to deconstruct the practice and variability of CBPR partnerships and outcomes through our theoretical lenses, ultimately led to balancing qualitative and quantitative methods in a practical and changing timeline that replicated the “true nature” of CBPR practice in real time (Howe, 1988). Furthermore, this project demonstrated that common sequential construction in mixed methods use is not always necessary (Hesse-Biber, 2010; Teddlie & Tashakkori, 2009). Hesse-Biber (2010) has argued that mixed methods projects are often driven by research techniques with little concern for theory. In contrast, this project was heavily grounded in theory and the conceptual CBPR model, providing a solid foundation for a realistic research process responsive both to our indigenous and transformative perspective and to specific research dictates.

Lesson 3

Mixed methodology is powerful for studying complex phenomena, particularly when conceptualized from beginning design to final analyses, with integration occurring in research purpose, conceptual and theoretical frameworks, research questions, and choice of methods. Although our overall purpose was to test the CBPR conceptual model, flexibility dictated being open to processes of iterative integration, and the usefulness of different methodologies for answering questions based on different ways of knowing. This flexibility allowed us to develop deeper understandings of local partnerships from the qualitative data yet also use the qualitative data to add understandings about the importance of contextual dimensions across diverse partnerships surveyed nationwide. These understandings are currently being triangulated with the quantitative data to understand the associations between and contributions of partnering practices and CBPR systems and policy outcomes, which in turn can lead to improved health equity.

In conclusion, this article has illustrated a theory-based, mixed methods research design for the study of CBPR processes and health disparities outcomes. We have illustrated both the challenges in the implementation of this design and how we are engaging in co-validation and triangulation of our data analyses and interpretation of the findings. Advancing the science of CBPR is an important aspect of improving health and reducing health inequities in underserved communities and communities of color. A rigorous mixed methods design is a key component of advancing this science.

Acknowledgments

The research team wishes to gratefully acknowledge the Scientific and Community Advisory Council as well as the Qualitative, Quantitative, and Research Ethics Special Interest Groups for their contributions to this project: Melissa Aguirre, Hector Balcazar, Eddie F. Brown, Charlotte Chang, Terry Cross, Eugenia Eng, Shelley Frazier, Greta Goto, Gloria Grim, Loretta Jones, Joseph Keawe’aimoku Kaholokula, Michele Kelley, Paul Koegel, Naema Mohammed, Freda Motton, Tassy Parker, Beverly Becenti-Pigman, Jesus Ramirez, Nicky Teufel-Shone, Lisa Rey Thomas, Edison Trickett, Derek Valdo, Don Warne, Lucille White, and Sara Young. Our gratitude also to other members of the research teams not in official authorship on this article: Greg B. Tafoya, Lorenda Belone, Emma Noyes, Andrew Sussman, Myra Parker, Lisa Vu, Elana Mainer, Leo Egashira, Belinda Vicuna, and Heather Zenone.

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This study was partially funded by the following: Supplement to Native American Research Centers for Health (NARCH) III: U26IHS300293; and NARCH V: U261IHS0036.

Footnotes

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

References

- Barber B. The logic and limits of trust. New Brunswick, NJ: Rutgers University Press; 1983.

- Belone L, Lucero JE, Duran B, Tafoya G, Baker EA, Chan D, … Wallerstein N. Community-based participatory research conceptual model: Community partner consultation and face validity. Qualitative Health Research. 2016;26(1):117–135. doi: 10.1177/1049732314557084.

- Brugge D, Missaghian M. Protecting the Navajo people through tribal regulation of research. Science and Engineering Ethics. 2006;12:491–507. doi: 10.1007/s11948-006-0047-2.

- Chilisa B, Tsheko GN. Mixed methods in indigenous research: Building relationships for sustainable intervention outcomes. Journal of Mixed Methods Research. 2014. doi: 10.1177/1558689814527878. Advance online publication.

- Colwell-Chanthaphonh C. Self-governance, self-representation, self-determination, and the questions of research ethics: Commentary on “Protecting the Navajo people through tribal regulation of research.” Science and Engineering Ethics. 2006;12:508–510. doi: 10.1007/s11948-006-0048-1.

- Cornell S, Jorgensen M, Kalt J. The First Nations Governance Act: Implications of research findings from the United States and Canada. Tucson: Udall Center for Studies in Public Policy, University of Arizona; 2002. A report to the office of the British Columbia Regional Vice-Chief Assembly of First Nations.

- Creswell JW, Plano Clark VL. Designing and conducting mixed methods research. 2nd ed. Thousand Oaks, CA: Sage; 2011.

- Deutsch M. Trust and suspicion. Journal of Conflict Resolution. 1958;2:265–279.

- Duran B, Duran E, Yellow Horse Brave Heart M. Native Americans and the trauma of history. In: Thornton R, editor. Studying Native America: Problems and prospects in Native American studies. Madison: University of Wisconsin Press; 1998. pp. 60–78.

- Dutta MJ, de Souza R. The past, present, and future of health development campaigns: Reflexivity and the critical-cultural approach. Health Communication. 2008;23:326–339. doi: 10.1080/10410230802229704.

- Fassinger R, Morrow SL. Toward best practices in quantitative, qualitative, and mixed-method research: A social justice perspective. Journal for Social Action in Counseling and Psychology. 2013;5(2):69–83.

- Fitch K, Bernstein S, Aguilar M, Burnand B, LaCalle J, Lazaro P, … Kahan J. The RAND/UCLA appropriateness method user’s manual. Santa Monica, CA: Rand Corporation; 2001. Prepared for Directorate General XII, European Commission.

- Gaventa J, Cornwall A. Power & knowledge. In: Reason P, Bradbury H, editors. Handbook of action research: Participative inquiry & practice. London, England: Sage; 2001. pp. 70–80.

- Glasgow RE, Bull SS, Gillette C, Klesges LM, Dzewaltowski DA. Behavior change intervention research in healthcare settings: A review of recent reports with emphasis on external validity. American Journal of Preventive Medicine. 2002;23:62–69. doi: 10.1016/s0749-3797(02)00437-3.

- Glasgow RE, Green LW, Klesges LM, Abrams DB, Fisher EB, Goldstein MG, … Orleans CT. External validity: We need to do more. Annals of Behavioral Medicine. 2006;31:105–108. doi: 10.1207/s15324796abm3102_1.

- Greene JC, Caracelli VJ, Graham WF. Toward a conceptual framework for mixed-method evaluation designs. Educational Evaluation and Policy Analysis. 1989;11:255–274.

- Guest G. Describing mixed methods research: An alternative to typologies. Journal of Mixed Methods Research. 2012;7(2):141–151.

- Hardin R. Trustworthiness. Ethics. 1996;107(1):26–42.

- Hawe P, Shiell A, Riley T. Theorising interventions as events in systems. American Journal of Community Psychology. 2009;43:267–276. doi: 10.1007/s10464-009-9229-9.

- Herriott RE, Firestone WA. Multisite qualitative policy research: Optimizing description and generalizability. Educational Researcher. 1983;12(2):14–19.

- Hesse-Biber SN. Mixed methods research: Merging theory with practice. New York, NY: Guilford Press; 2010.

- Hicks S, Duran B, Wallerstein N, Avila M, Belone L, Lucero J, … White Hat EW. Evaluating community-based participatory research to improve community-partnered science and community health. Progress in Community Health Partnerships: Research, Education, and Action. 2012;6:289–299. doi: 10.1353/cpr.2012.0049.

- Howe KR. Against the quantitative-qualitative incompatibility thesis or dogmas die hard. Educational Researcher. 1988;17(8):10–16.

- Israel B, Eng E, Schulz AJ, Parker EA. Methods for community-based participatory research. 2nd ed. San Francisco, CA: Jossey-Bass; 2013.

- Israel B, Schulz AJ, Parker EA, Becker AB. Review of community-based research: Assessing partnership approaches to improve public health. Annual Review of Public Health. 1998;19:173–202. doi: 10.1146/annurev.publhealth.19.1.173.

- Johnson RB, Onwuegbuzie AJ. Mixed methods research: A research paradigm whose time has come. Educational Researcher. 2004;33(7):14–26.

- Johnson RB, Onwuegbuzie AJ, Turner LA. Toward a definition of mixed methods research. Journal of Mixed Methods Research. 2007;1(2):112–133.

- Kindig DA, Booske BC, Remington PL. Mobilizing Action Toward Community Health (MATCH): Metrics, incentives, and partnerships for population health. Preventing Chronic Disease. 2010;7(4). Retrieved from http://www.cdc.gov/pcd/issues/2010/jul/10_0019.htm.

- Kraemer-Diaz AE, Spears Johnson CR, Arcury TA. Perceptions that influence the maintenance of scientific integrity in community-based participatory research. Health Education & Behavior. 2015. doi: 10.1177/1090198114560016. Advance online publication.

- Kreuter M. Patch: Its origin, basic concepts, and links to contemporary public health policy. Journal of Health Education. 1992;23(3):135–139.

- Lewicki RJ. Trust, trust development, and trust repair. In: Deutsch M, Coleman PT, Marcus EC, editors. The handbook of conflict resolution. San Francisco, CA: Jossey-Bass; 2006. pp. 92–119.

- Lewicki RJ, Bunker BB. Developing and maintaining trust in work relationships. In: Kramer RM, Tyler TR, editors. Trust in organizations: Frontiers of theory and research. Thousand Oaks, CA: Sage; 1995. pp. 114–139.

- Lindlof TR, Taylor BC. Qualitative communication research methods. 3rd ed. Thousand Oaks, CA: Sage; 2011.

- Lomawaima KT. Tribal sovereigns: Reframing research in American Indian education. Harvard Educational Review. 2000;70(1):1–23.

- Lucero JE. Trust as an ethical construct in community based participatory research partnerships. Unpublished doctoral dissertation. Albuquerque: University of New Mexico; 2013.

- Luhmann N. Trust and power. Chichester, England: Wiley; 1979.

- Maxwell J, Loomis D. Mixed methods design: An alternative approach. In: Tashakkori A, Teddlie C, editors. Handbook of mixed methods in social and behavioral research. Thousand Oaks, CA: Sage; 2003. pp. 241–272.

- Mayer RC, Davis JH, Schoorman FD. An integrated model of organizational trust. Academy of Management Review. 1995;20:709–739.

- McAllister DJ. Affect- and cognitive-based trust as foundations for interpersonal cooperation in organizations. Academy of Management Journal. 1995;38(1):24–59.

- McKnight DH, Cummings LL, Chervany NL. Initial trust formation in new organizational relationships. Academy of Management Review. 1998;23:473–490. doi: 10.5465/AMR.1998.926622.

- Mertens DM. Transformative paradigm mixed methods and social justice. Journal of Mixed Methods Research. 2007;1(3):212–225.

- Mertens DM. Transformative mixed methods research. Qualitative Inquiry. 2010;16:469–474.

- Mertens DM. Transformative mixed methods: Addressing inequities. American Behavioral Scientist. 2012;56:802–813.

- Meyerson D, Weick K, Kramer RM. Swift trust and temporary groups. In: Kramer RM, Tyler TR, editors. Trust in organizations: Frontiers of theory and research. Thousand Oaks, CA: Sage; 1996. pp. 166–195.

- Mikesell L, Bromley E, Khodyakov D. Ethical community-engaged research: A literature review. American Journal of Public Health. 2013;103(12):e7–e14. doi: 10.2105/AJPH.2013.301605.

- Minkler M, Garcia A, Rubin V, Wallerstein N. Community based participatory research: A strategy for building healthy communities and promoting health through policy change. A Report to the California Endowment. Oakland, CA: PolicyLink; 2012.

- Norton IM, Manso SM. Research in American Indian and Alaska Native communities: Navigating the cultural universe of values and process. Journal of Consulting and Clinical Psychology. 1996;64:856–860. doi: 10.1037//0022-006x.64.5.856.

- Oetzel JG, Villegas M, Zenone H, White Hat ER, Wallerstein N, Duran B. Enhancing stewardship of community-engaged research through governance. American Journal of Public Health. 2015;105:1161–1167. doi: 10.2105/AJPH.2014.302457.

- Oetzel JG, Zhou C, Duran B, Pearson C, Magarati M, Lucero J, … Villegas M. Establishing the psychometric properties of constructs on a community-based participatory research logic model. American Journal of Health Promotion. 2015;29:e188–e202. doi: 10.4278/ajhp.130731-QUAN-398.

- Onwuegbuzie AJ, Burke Johnson R. The validity issue in mixed research. Research in the Schools. 2006;13(1):48–63.

- Organ DW. Organizational citizenship behavior: The “good soldier” syndrome. Lexington, MA: Lexington Books; 1988. [Google Scholar]

- Panteli N, Sockalingam S. Trust and conflict within virtual interorganizational alliances: A framework for facilitating knowledge sharing. Decision Support Systems. 2005;39:599–617. [Google Scholar]

- Pearson CR, Duran B, Oetzel J, Margarati M, Villegas M, Lucero J, Wallerstein N. Research for improved health: Variability and impact of structural characteristics in federally funded community engaged research projects. Progress in Community Health Partnerships: Research, Education, and Action. 2015;9(1):17–29. doi: 10.1353/cpr.2015.0010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ponterotto JG, Mathew J, Raughley B. The value of mixed methods designs to social justice research in counseling and psychology. Journal for Social Action in Counseling and Psychology. 2013;5(2):42–68.

- Rotter JB. Generalized expectancies for interpersonal trust. American Psychologist. 1971;26:443–452.

- Rotter JB. Interpersonal trust, trustworthiness, and gullibility. American Psychologist. 1980;35:1–7.

- Rousseau DM, Sitkin SB, Burt RS, Camerer C. Not so different after all: A cross-discipline view of trust. Academy of Management Review. 1998;23:393–404.

- Schnarch B. Ownership, control, access, and possession (OCAP) or self-determination applied to research: A critical analysis of contemporary First Nations research and some options for First Nations communities. Journal of Aboriginal Health. 2004 Jan:80–95.

- Shapiro SP. The social control of impersonal trust. American Journal of Sociology. 1987;93:623–658.

- Smith LT. Decolonizing methodologies: Research and indigenous peoples. New York, NY: Zed Books; 1999.

- Sitkin SB, Roth NL. Explaining the limited effectiveness of legalistic “remedies” for trust/distrust. Organization Science. 1993;4:367–392.

- Smith LT. Decolonizing methodologies: Research and indigenous peoples. 2nd ed. New York, NY: Zed Books; 2012.

- Stake RE. Multiple case study analysis. New York, NY: Guilford Press; 2006.

- Teddlie C, Tashakkori A. Major issues and controversies in the use of mixed methods in the social and behavioral sciences. In: Tashakkori A, Teddlie C, editors. Handbook of mixed methods in social and behavioral research. Thousand Oaks, CA: Sage; 2003. pp. 3–50.

- Teddlie C, Tashakkori A. Foundations of mixed methods research. Thousand Oaks, CA: Sage; 2009.

- Trickett EJ. Community-based participatory research as worldview or instrumental strategy: Is it lost in translation(al) research? American Journal of Public Health. 2011;101:1353–1355. doi: 10.2105/AJPH.2011.300124.

- Trickett EJ, Beehler S. The ecology of multilevel interventions to reduce social inequalities in health. American Behavioral Scientist. 2013;57:1227–1246.

- Trickett EJ, Espino SL. Collaboration and social inquiry: Multiple meanings of a construct and its role in creating useful and valid knowledge. American Journal of Community Psychology. 2004;34(1–2):1–69. doi: 10.1023/b:ajcp.0000040146.32749.7d.

- van Manen M. Researching lived experience: Human science for an action sensitive pedagogy. Albany: State University of New York Press; 1990.

- Wallerstein NB, Duran B. Using community-based participatory research to address health disparities. Health Promotion Practice. 2006;7:312–323. doi: 10.1177/1524839906289376.

- Wallerstein NB, Duran B. Community-based participatory research contributions to intervention research: The intersection of science and practice to improve health equity. American Journal of Public Health. 2010;100:S40–S46. doi: 10.2105/AJPH.2009.184036.

- Wallerstein NB, Oetzel J, Duran B, Tafoya G, Belone L, Rae R. What predicts outcomes in CBPR? In: Minkler M, Wallerstein N, editors. Community-based participatory research for health: From process to outcomes. San Francisco, CA: Jossey-Bass; 2008. pp. 371–394.

- Wallerstein NB, Yen IH, Syme SL. Integrating social epidemiology and community-engaged interventions to improve health equity. American Journal of Public Health. 2011;101:822–830. doi: 10.2105/AJPH.2008.140988.

- Walters KL, Lamarr J, Levy RL, Pearson C, Maresca T, Mohammed SA, … Jobe JB. Project hli?dx(w)/Healthy Hearts Across Generations: Development and evaluation design of a tribally based cardiovascular disease prevention intervention for American Indian families. Journal of Primary Prevention. 2012;33:197–207. doi: 10.1007/s10935-012-0274-z.

- Webb G. Trust and crises. In: Kramer RM, Tyler TR, editors. Trust in organizations. Thousand Oaks, CA: Sage; 1996. pp. 288–302.

- Webber SS. Development of cognitive and affective trust in teams: A longitudinal study. Small Group Research. 2008;39:746–769. doi: 10.1177/1046496408323569.

- White-Cooper S, Dawkins NU, Kamin SL, Anderson LA. Community-institutional partnerships: Understanding trust among partners. Health Education & Behavior. 2009;36:334–347. doi: 10.1177/1090198107305079.

- Wicks AC, Berman SJ, Jones TM. The structure of optimal trust: Moral and strategic implications. Academy of Management Review. 1999;24(1):99–116.

- Wilson S. Research is ceremony: Indigenous research methods. Halifax, Nova Scotia, Canada: Fernwood; 2008.

- Yin R. Case study research: Design and methods. 4th ed. Thousand Oaks, CA: Sage; 2009.

- Yonas M, Aronson R, Coad N, Eng E, Petteway R, Schaal J, Webb L. Infrastructure for equitable decision-making in research. In: Israel BA, Eng E, Schulz AJ, Parker EA, editors. Methods for community-based participatory research for health. San Francisco, CA: Jossey-Bass; 2013. pp. 97–126.