Abstract

Background

Although high intensity focused ultrasound (HIFU) is a promising technology for tumor treatment, a moving abdominal target is still a challenge in current HIFU systems. In particular, respiratory‐induced organ motion can reduce the treatment efficiency and negatively influence the treatment result. In this research, we present: (1) a methodology for integration of ultrasound (US) image based visual servoing in a HIFU system; and (2) the experimental results obtained using the developed system.

Materials and methods

In the visual servoing system, target motion is monitored by biplane US imaging and tracked in real time (40 Hz) by registration with a preoperative 3D model. The distance between the target and the current HIFU focal position is calculated in every US frame and a three‐axis robot physically compensates for differences. Because simultaneous HIFU irradiation disturbs US target imaging, a sophisticated interlacing strategy was constructed.

Results

In the experiments, respiratory‐induced organ motion was simulated in a water tank with a linear actuator and kidney‐shaped phantom model. Motion compensation with HIFU irradiation was applied to the moving phantom model. Based on the experimental results, visual servoing exhibited a motion compensation accuracy of 1.7 mm (RMS) on average. Moreover, the integrated system could make a spherical HIFU‐ablated lesion in the desired position of the respiratory‐moving phantom model.

Conclusions

We have demonstrated the feasibility of our US image based visual servoing technique in a HIFU system for moving target treatment.

Keywords: high intensity focused ultrasound, motion compensation, robotic HIFU, visual servoing

1. INTRODUCTION

High intensity focused ultrasound (HIFU) is well known as a promising non‐invasive tumor treatment modality, and its applications are increasing. However, treatment for moving organs is still a challenge in currently available commercial HIFU systems due to treatment efficiency and safety. Motions of abdominal organs are caused by heartbeat, respiration, or even bowel peristalsis.1 Any of these motions can cause a mismatch of the target position and put neighboring organs at risk during lengthy HIFU interventions. Among these organ motions, respiratory‐induced motion exhibits the largest displacement, and so substantially influences the performance of HIFU treatment.

Several tracking strategies for respiratory‐induced target motion have been introduced. In refs. 2 and 3, a 2D motion model acquired in an initial learning phase was used to correct the target position during HIFU irradiation. In MR‐guided HIFU, pencil‐beam navigator echoes were used for motion compensation of both the MR thermometry and the target position.4, 5 Auboiroux et al.6 combined US imaging and MR imaging for image guidance of HIFU treatment in moving tissue. Respiratory gating for HIFU ablation was also studied in refs. 7, 8, and 9. In a comparison of model‐based motion compensation and respiratory gating, Rijkhorst et al.8 reported that the model‐based method showed lower heating rates than gating. Auboiroux et al.7 exploited an MR‐compatible digital camera to obtain the triggers for respiratory gating and applied the method in the liver and kidney of sheep in vivo. However, with respiratory gating in HIFU ablation, it is difficult to deposit the heat precisely because of the inherent heat diffusion in tissue and the reduced overall duty cycle of the gated HIFU energy delivery. Therefore, for precise ablation of a moving target, the organ motion should be monitored in real time and continuously compensated during HIFU irradiation. Diagnostic ultrasound (US) imaging is an adequate modality for real‐time monitoring of a target, is cheaper than MRI, and is easily integrated as a hardware component in HIFU systems. In ref. 10, US image based visual servoing was applied to track the respiratory motion of renal stones for lithotripsy. As a visual servoing technique for soft tissue motion, in our previous research, we made an artificial marker (visible in US imaging) in the protein phantom by initial HIFU irradiation and tracked the marker for motion compensation.11 Chanel et al.12 presented a US‐guided robotic HIFU system that applied a speckle tracking method based on normalized 1D cross‐correlation to reduce computation time. However, that method is still difficult to apply to real organ motion because of out‐of‐plane motion.

In this paper, we present a US image based visual servoing technology integrated into a HIFU system for moving target ablation. Briefly, the organ motion is monitored by biplane US imaging and the target position is calculated in real time by registration with a preoperative 3D model. The distance between the target and the current HIFU focal position becomes an error that must be compensated in the visual servoing system. In experiments, organ motion was simulated in a water tank by a linear actuator moving a kidney‐shaped phantom model. During the motion compensation by US image based visual servoing, HIFU was irradiated at the desired target position. Because simultaneous HIFU irradiation disturbs US target imaging, and particularly biplane US imaging, a sophisticated interlacing strategy of US sonication was constructed. The accuracy of the visual servoing system was evaluated by checking whether a thermal lesion was created at the desired target position and by comparing the input motion of the phantom model with the compensated motion of the end‐effector.

2. MATERIALS AND METHODS

2.1. US image based target tracking

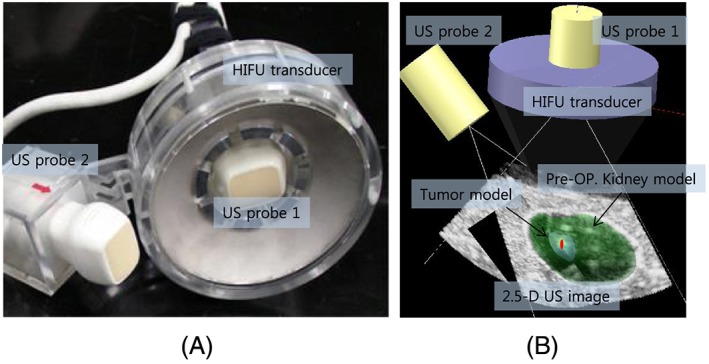

In our HIFU system, the end‐effector enables both US imaging and HIFU irradiation through the design shown in Figure 1. The design of the end‐effector is the same as that presented in our previous research.11, 13 Two US imaging probes are attached to produce biplane US images. The US probes are connected to an ultrasonic scanner (EUB‐8500, Hitachi Medical, Inc., Japan) that runs the two sector‐type probes in turn at an imaging speed of 20 frames per second. A control PC is connected to the US diagnostic machine with a frame grabber (Matrox Meteor‐Digital, Matrox Electronic System Ltd., Canada) to capture the US raw data (IQ data). The US data are then converted into a biplane B‐mode image, taking into account the geometry of the two US probes in the end‐effector shown in Figure 1. To track the target position, we register the biplane US image with a preoperative 3D organ model. The 3D model based target tracking method is fast (40 FPS in the developed system) and robust to noisy US image data. The details of the registration algorithm are explained in a previously published paper.13

Figure 1.

(A) End‐effector for biplane US imaging and HIFU, (B) result of preoperative 3D model registration with biplane US image model

2.2. Visual servoing

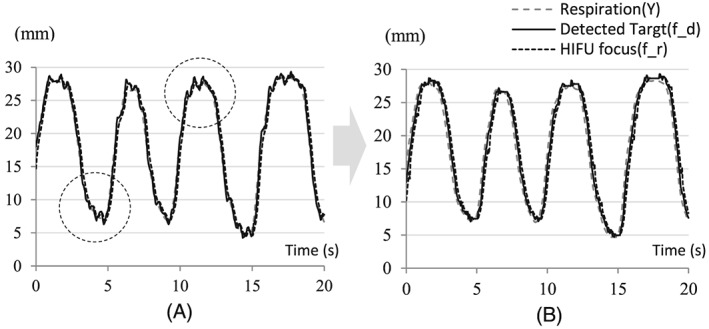

Position‐based visual servoing is implemented in a three‐axis robot system. The target position in the US image is obtained from the preoperative 3D model registration. The difference between the target position and the current HIFU focal position is considered as an error in the feedback control scheme. The control loop includes the encoder feedback (delay element Z^(−Δt)) to compensate for the end‐effector's motion during the calculation time for the current frame. The precise delay time (Δt) is measured and applied in the calculation of the new error ( ) as . When the current position is y, the desired target position to be compensated is fd, which is calculated as . This encoder feedback plays an important role in preventing overshoot in a reciprocating motion such as respiratory motion. The experimental results in Figure 2 show the differences with and without the encoder feedback. The PID gains are fixed in both cases.
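The expressions for the new error and for fd are not reproduced in the text above, so the following Python sketch only illustrates one common way to use delayed encoder feedback. It assumes that the image processed in the current cycle was captured a fixed number of control cycles earlier, that the image gives the offset between the target and the focus at capture time, and that fd is obtained by adding this offset to the encoder position stored at capture time. The class name `DelayCompensatedPID`, its parameters, and both formulas marked "assumed" are hypothetical and are not taken from the developed system.

```python
from collections import deque


class DelayCompensatedPID:
    """One-axis PID whose error is corrected with delayed encoder feedback."""

    def __init__(self, kp, ki, kd, dt, delay_steps):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        # Encoder history: once full, the oldest entry is the position
        # delay_steps control cycles ago (the assumed image capture time).
        self.history = deque(maxlen=delay_steps + 1)
        self.integral = 0.0
        self.prev_error = None

    def update(self, image_offset, y_now):
        """image_offset: target minus focus, measured in the delayed image.
        y_now: current encoder position of the axis."""
        self.history.append(y_now)
        y_at_capture = self.history[0]
        f_d = y_at_capture + image_offset  # assumed form of the desired position
        error = f_d - y_now                # assumed form of the new error
        self.integral += error * self.dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        command = self.kp * error + self.ki * self.integral + self.kd * derivative
        return command, f_d, error
```

Under these assumptions, motion that the end‐effector has already made since the image was captured is subtracted from the error, so the controller does not react twice to the same displacement. This is one plausible reading of why encoder feedback reduces overshoot for reciprocating motion.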

Figure 2.

Overshoot reduction by applying encoder feedback: (A) without encoder feedback; (B) with encoder feedback

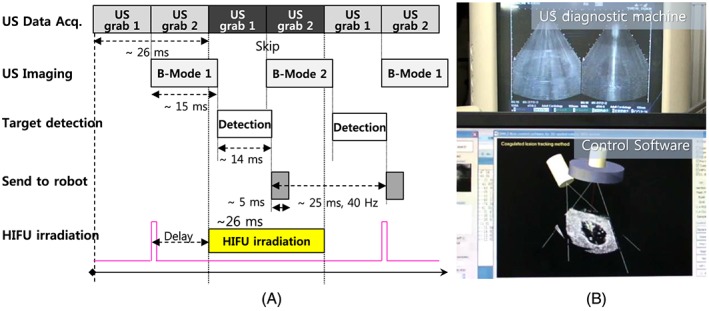

2.3. Integration with HIFU irradiation

The HIFU transducer in the end‐effector is a 100‐mm‐diameter spherical disk with a central hole of 50 mm in diameter and a spherical curvature of 100 mm for geometric focusing, as shown in Figure 1(A). Its center frequency is 1.66 MHz and the transducer is made of a single‐element piezoelectric crystal C‐213 (Fuji Ceramics, Inc., Tokyo, Japan). Since the scattered HIFU signals cause serious noise artifacts in US imaging, we need to construct a sophisticated timeline schedule in order to interlace two US sources. In particular, we need to consider the target tracking speed to secure the maximum efficiency of HIFU irradiation. A 50% duty ratio of HIFU irradiation was selected as the best setting. Therefore, we skip biplane US imaging alternately and irradiate HIFU during that time. Figure 3(A) indicates the time for each process. Based on the time schedule, the target was calculated every 25 ms, and 40 Hz target tracking was implemented. The image in Figure 3(B) is an example of interlacing based on the time schedule shown in Figure 3(A). The upper part of the image shows the noise artifacts due to HIFU irradiation in a diagnostic US machine while the lower part shows the control software displaying a B‐mode image without HIFU induced noise artifacts.
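The timing arithmetic in this paragraph can be sketched in Python as follows. The sketch assumes, for illustration only, that each 25 ms tracking cycle is split into one US imaging window followed by one HIFU window; the actual ordering and sub‐timings are those of Figure 3(A), which are not reproduced here. The function names and window labels are hypothetical.

```python
def interlace_schedule(period_ms=25.0, hifu_duty=0.5, n_cycles=4):
    """Return (start_ms, end_ms, label) windows that alternate imaging and HIFU."""
    windows = []
    for k in range(n_cycles):
        t0 = k * period_ms
        # Assumed split: imaging first, then HIFU for the rest of the cycle.
        t_switch = t0 + (1.0 - hifu_duty) * period_ms
        windows.append((t0, t_switch, "US imaging + tracking"))
        windows.append((t_switch, t0 + period_ms, "HIFU on"))
    return windows


def tracking_rate_hz(period_ms):
    """One target position per cycle gives a rate of 1000 / period (in Hz)."""
    return 1000.0 / period_ms


def hifu_fraction(windows):
    """Fraction of the scheduled time during which HIFU is on."""
    total = windows[-1][1] - windows[0][0]
    hifu = sum(end - start for start, end, label in windows if label == "HIFU on")
    return hifu / total
```

With a 25 ms cycle, `tracking_rate_hz(25.0)` gives 40 Hz, which matches the tracking rate stated above, and a 50% duty ratio leaves 12.5 ms per cycle for each of the two US sources under the assumed split.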

Figure 3.

(A) Time schedule for simultaneous HIFU irradiation with real‐time tracking. (B) Image of an experimental result of interlacing US imaging and HIFU irradiation

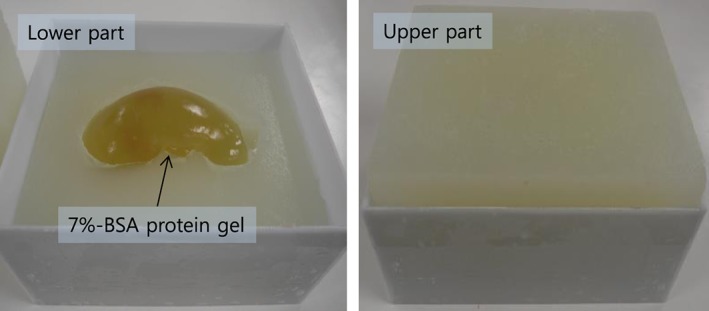

2.4. Phantom model for experiment

For the experiments on US image based visual servoing with HIFU ablation, we need a phantom model that enables US imaging as well as lesion formation by HIFU thermal ablation. In this research, we developed a new phantom model combining a rectangular tissue‐mimicking phantom and a kidney‐shaped protein gel made of 7% bovine serum albumin (BSA).14 The protein gel was formed in the shape of the preoperative 3D kidney model and sandwiched between the tissue phantoms, as shown in Figure 4. The surface between the protein gel and the tissue phantom appears as echogenic boundaries in the US image (see Figure 1B and Figure 3B). Therefore, the boundaries are utilized for registration with the preoperative 3D kidney model so that the target position in the US image can be calculated. Moreover, because BSA gel is optically transparent, the coagulated lesion can be identified visually after HIFU ablation.

Figure 4.

Kidney‐shaped BSA phantom model for US imaging and HIFU ablation

3. EXPERIMENTS AND RESULTS

3.1. Experimental conditions

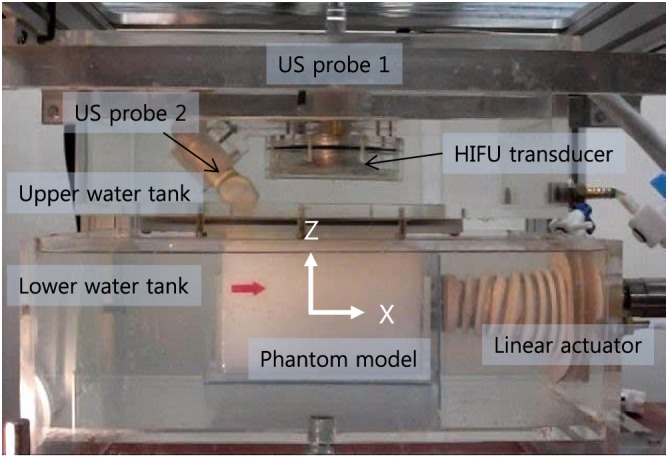

The experimental system is composed of lower and upper water tanks as shown in Figure 5.

Figure 5.

Experimental setup for biplane US image based visual servoing for HIFU irradiation

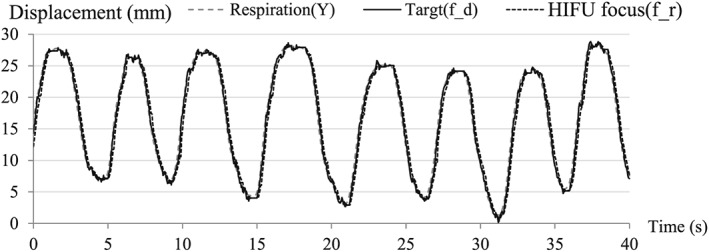

The lower water tank simulates a human body. The phantom model is submerged in the lower water tank at a water temperature maintained at 37°C. The linear actuator simulates the respiratory‐induced cranio‐caudal motion by holding the kidney phantom model. For a realistic simulation, the input motion data were captured from a real human subject: we manually aligned the US imaging plane to the cranio‐caudal axis and captured the 2D US sequence of the kidney of a healthy volunteer under free breathing. Block matching was applied to extract the displacement profile from the first frame of the 2D US sequence. Respiration (Y) in Figure 6 gives the recorded motion profile for 40 s. The maximum speed of the motion was 20 mm s−1 and the maximum amplitude was 25 mm.
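A minimal Python sketch of block matching against the first frame is given below. The matching criterion (sum of squared differences), the exhaustive integer‐pixel search, the function names, and all parameters are assumptions made for illustration; the text above does not specify which block matching variant was used.

```python
import numpy as np


def block_match(reference, frame, top, left, size, search):
    """Return the integer shift (dy, dx) of a square template by minimum SSD.

    The template is the size x size block of `reference` whose upper-left
    corner is (top, left); it is searched for in `frame` within +/- search
    pixels of its original location.
    """
    template = reference[top:top + size, left:left + size].astype(float)
    best_ssd, best_shift = np.inf, (0, 0)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            r, c = top + dy, left + dx
            # Skip candidate blocks that fall outside the frame.
            if r < 0 or c < 0 or r + size > frame.shape[0] or c + size > frame.shape[1]:
                continue
            patch = frame[r:r + size, c:c + size].astype(float)
            ssd = np.sum((patch - template) ** 2)
            if ssd < best_ssd:
                best_ssd, best_shift = ssd, (dy, dx)
    return best_shift


def displacement_profile_mm(frames, top, left, size, search, mm_per_pixel):
    """Displacement of the block in every frame relative to the first frame."""
    reference = frames[0]
    profile = []
    for frame in frames:
        dy, dx = block_match(reference, frame, top, left, size, search)
        profile.append((dy * mm_per_pixel, dx * mm_per_pixel))
    return profile
```

Because every frame is compared with the first frame rather than with its predecessor, matching errors do not accumulate over the sequence, at the cost of a search range that must cover the full motion amplitude.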

Figure 6.

Experimental result of motion compensation with HIFU irradiation (sampling time: 40 s)

The upper water tank has the end‐effector and contacts the surface of the phantom model via an acoustically transparent silicon membrane. The water in both tanks is degassed and circulated during the experiments.

3.2. Visual servoing and error analysis

To evaluate the performance of visual servoing, we simultaneously recorded the detected target position (fd), the position of the HIFU focus (fr), and the motion of the linear actuator (Y). The errors are defined as follows:

Target detection error: error between the respiratory motion and the detected target position;

Visual feedback error: error between the detected target and the current HIFU focus;

Motion compensation error: error between the respiratory motion and the HIFU focus.
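The three definitions above can be written as a short Python sketch that computes an RMS value for each error from recorded traces of Y, fd, and fr along one axis. The sign conventions, the function names, and the dictionary keys are assumptions for illustration; only the pairing of signals in each error follows the definitions.

```python
import numpy as np


def rms(x):
    """Root mean square of a 1D signal."""
    x = np.asarray(x, dtype=float)
    return float(np.sqrt(np.mean(x ** 2)))


def servoing_errors(y, f_d, f_r):
    """RMS of the three visual servoing errors for equally sampled traces.

    y:   respiratory motion of the linear actuator (Y)
    f_d: detected target position (fd)
    f_r: position of the HIFU focus (fr)
    """
    y, f_d, f_r = (np.asarray(a, dtype=float) for a in (y, f_d, f_r))
    return {
        "target_detection": rms(y - f_d),
        "visual_feedback": rms(f_d - f_r),
        "motion_compensation": rms(y - f_r),
    }
```

Since (Y − fr) = (Y − fd) + (fd − fr) sample by sample, the motion compensation error is the sum of the other two errors at every instant, although the RMS values themselves do not simply add.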

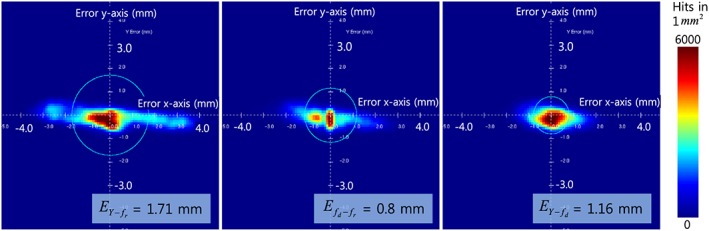

The time‐variant visual servoing errors over 40 s are shown in Figure 6. The gains were adjusted to track the target motion as closely as possible. The experiment for the motion‐compensated HIFU ablation was conducted for 5 min and motion data were sampled every 5 mins. The results for the total experimental time are represented as a 2D error histogram in Figure 7. Although the input motion of the phantom produced by the linear actuator was only along the x‐axis, the errors were 2D because the target detection method based on 3D model registration produced errors along the y‐axis as well. The errors along the z‐axis were negligible. We conducted the same experiment six times; the results are listed in Table 1. As the results show, the visual servoing system can compensate for the respiratory motion of the target with a motion compensation error of 1.73 mm (RMS) during HIFU irradiation.

Figure 7.

2D error histograms of motion compensation with HIFU irradiation (experimental time: 5 min): results of Experiment 3 in Table 1

Table 1.

Experimental results: motion compensation errors with HIFU irradiation

| Experiment No. | Target detection error (mm) | Visual feedback error (mm) | Motion compensation error (mm) |
|---|---|---|---|
| 1 | 0.77 | 1.16 | 1.74 |
| 2 | 0.86 | 1.15 | 1.78 |
| 3 | 0.80 | 1.16 | 1.71 |
| 4 | 0.77 | 1.17 | 1.72 |
| 5 | 0.81 | 1.18 | 1.76 |
| 6 | 0.76 | 1.13 | 1.69 |
| Average | 0.80 | 1.16 | 1.73 |

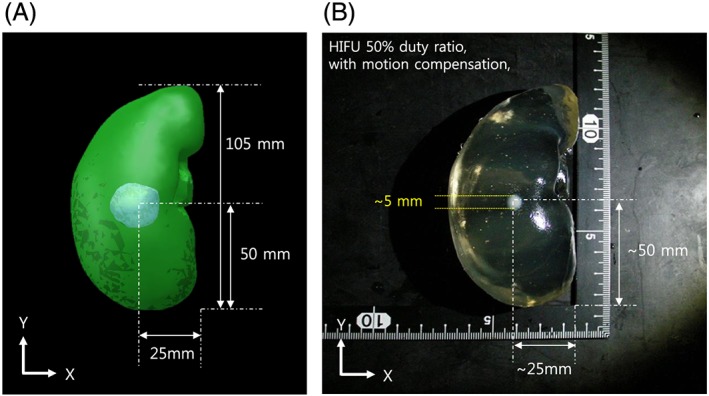

3.3. HIFU ablation for the moving target

After the visual servoing experiments, we checked whether our HIFU system was able to create a coagulated lesion at the desired position in the moving target. The accuracy of HIFU ablation for the moving target was evaluated by comparing the position of the created lesion in the phantom model with the desired target position in the preoperative kidney model. We extracted the BSA phantom from the tissue phantom model and inspected the lesion. As shown in Figure 8, the coagulated lesion was created at a position identical to that of the tumor model in the preoperative kidney phantom model. This result indicates that our visual servoing was successfully applied for HIFU ablation of the moving target.

Figure 8.

(A) Preoperative 3D kidney model with tumor volume, (B) HIFU irradiation result with motion compensation

4. DISCUSSION

4.1. Motion compensation errors with HIFU thermal ablation

Because the motion compensation error is directly related to the thermal ablation efficiency of HIFU, we need to consider the relationships among motion compensation errors, HIFU irradiation time, and the size of the unit ablation area. A larger motion compensation error requires a longer HIFU irradiation time to generate thermal ablation, which makes the unit ablation area larger. In the developed visual servoing system, the motion compensation error is a combination of the target detection error and the visual feedback error. To improve the motion compensation error, therefore, we need to reduce the target detection error and the visual feedback error. The target detection error, which depends on the speed of the target tracking, is reduced by adjusting the field of view of the US imaging or by reducing the calculation time. The visual feedback error depends on the hardware performance of the robot system. Increasing the control gain decreases this error. However, higher PID gains cause mechanical oscillation, which can in turn increase the errors. Electronic HIFU beam steering, used without mechanical servoing, would also reduce this error, but the tracking range that the system can cover would be smaller. Because these errors in visual servoing for the HIFU system are mutually dependent, we need to carefully consider their relationships when changing any feature of the system.

4.2. HIFU duty ratio in the US‐guided visual servoing system

In the experimental system, US imaging and HIFU irradiation were interlaced, because the scattered HIFU signals can be a significant source of noise in US target imaging. For the interlacing, the maximum duty ratio of HIFU was set to 50% to secure the maximum US imaging speed. A higher HIFU duty ratio reduces the US imaging speed and decreases tracking accuracy, which lowers the efficiency of HIFU treatment. Therefore, a 50% HIFU duty ratio is optimal for current US‐guided HIFU systems. However, refs. 15 and 16 proposed a method to achieve a 100% HIFU duty ratio with US imaging. The basic idea of ref. 15 is that the US imaging signal is encoded with a 13‐bit Barker code and transmitted simultaneously with the HIFU signal. The reflected HIFU signal received by the imaging array can then be removed by notch filtering and pulse compression. Based on that method, ref. 16 introduced an adaptive method to determine the parameters of notch filtering, and the reported results seem to demonstrate an improvement over the previous method.
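The pulse compression step mentioned above can be illustrated with a short Python sketch. It shows only the matched filtering of a 13‐bit Barker code on an idealized, noise‐free signal; the notch filtering of the HIFU component and the actual signal chain of refs. 15 and 16 are not modeled, and the function name `pulse_compress` is hypothetical.

```python
import numpy as np

# Standard 13-bit Barker code.
BARKER_13 = np.array([1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1], dtype=float)


def pulse_compress(received, code=BARKER_13):
    """Matched filter: correlate the received samples with the transmitted code."""
    return np.correlate(received, code, mode="full")


# Idealized echo: the code embedded at sample 20 of an otherwise silent trace.
echo = np.zeros(50)
echo[20:20 + len(BARKER_13)] = BARKER_13
compressed = pulse_compress(echo)

# In "full" mode, a lag of k samples appears at index k + len(code) - 1.
peak_index = int(np.argmax(compressed))
echo_start = peak_index - (len(BARKER_13) - 1)
```

The 13‐sample code compresses into a single peak of height 13, with sidelobes no larger than 1 in magnitude. This large peak‐to‐sidelobe ratio is the property that makes Barker codes attractive for recovering an imaging echo in the presence of other signals.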

4.3. Visual servoing induced HIFU lesion control

No current visual servoing technique can perfectly compensate for the motion of a target. Therefore, in visual servoing in the HIFU system, the error always accumulates near the target location; i.e. the heat is deposited across a region, not at a sharp point. This can be visualized by the 2D error histogram shown in Figure 7. Here, we found that the shape of the ablated lesion after motion compensation was usually spherical, as shown in Figure 9, whereas the focal region is normally a long cigar shape17 along the axis of HIFU irradiation. A larger error or a longer HIFU irradiation enlarges the lesion along the direction of the target motion rather than the direction of irradiation. However, appropriate conditions could be applied intentionally to create a spherical lesion. This irradiation shape might be very useful for small spherical targets that conventional HIFU cannot treat.

Figure 9.

Example result of HIFU ablation with US image based visual servoing (XZ‐plane view)

4.4. Future improvements to US‐guided robotic HIFU systems

In the developed system, the end‐effector is submerged in a water tank and transmits US energy to the patient via a silicon membrane covering a hole in the bottom of the water tank. In clinical applications, therefore, the water tank will be fixed on the patient's body and the end‐effector will track the target motion within the water tank. However, this design limits the range of imaging and treatment, so we are planning to redesign the end‐effector with a small water bag in the next version. The FUTURA system18, 19 utilizes two robotic arms, one for the US imaging probe and the other for holding the HIFU transducer. The system gives high flexibility and enables treatment of various targets. For both cases, however, a strategy for constant skin contact should be considered. Bell et al.20 discussed using force control to maintain the probe contact with the patient's body.

As for the US image based target tracking method, we are considering applying the methods tested by De Luca et al.21 In their tests, a large number of real US sequences of the livers of both healthy volunteers and tumor patients under free breathing were used, and six different liver‐tracking methods were directly compared by a quantitative evaluation of tracking accuracy. Although the methods tested by De Luca et al. were all for liver tracking, they are translatable to kidneys as well.

With the developed visual servoing system, we aimed to produce a single lesion in the moving target. Therefore, servoing along three translational axes was acceptable even if the target rotated during the motion. However, additional degrees of freedom should be considered when multiple thermal ablations are required in a volumetric target.

5. CONCLUSION

In this paper, a US image based visual servoing method for a HIFU system was presented. We assumed the condition of respiratory motion, and the target organ was a kidney. In experiments, we built a moving organ model with a linear actuator and a kidney‐shaped phantom model to simulate the respiratory‐induced cranio‐caudal motion of the kidney. The motion was based on real human respiration data. The kidney phantom model was monitored by biplane US imaging and the target position was calculated in real time (40 Hz) by registration with a preoperative 3D model. The distance between the target and the current HIFU focal position becomes an error that needs to be compensated in the visual servoing system. Because the motion compensation is conducted during HIFU irradiation, the system must avoid interference between the two US sources. Therefore, a sophisticated timeline strategy was applied for US signal interlacing. Six experiments of motion compensation with HIFU irradiation were conducted, and the motion compensation error was 1.73 mm (RMS) on average. As a result, thermal lesions were created at the desired position in the moving phantom by the proposed visual servoing technique. Based on the results, we have demonstrated the feasibility of our US image based visual servoing technique in a HIFU system for moving target treatment.

Seo J, Koizumi N, Mitsuishi M, Sugita N. Ultrasound image based visual servoing for moving target ablation by high intensity focused ultrasound. Int J Med Robotics Comput Assist Surg. 2017;13:e1793 https://doi.org/10.1002/rcs.1793

[The copyright line for this article was changed on 27 December 2016 after original online publication.]

REFERENCES

- 1. Zachiu C, Senneville BD, Moonen C, Ries M. A framework for slow physiological motion compensation during HIFU interventions in the liver: proof of concept. Med Phys. 2015;42(7):4137–4148.

- 2. Arnold P, Preiswerk F, Fasel B, Salomir R, Scheffler K, Cattin P. Model‐based respiratory motion compensation in MRgHIFU. International Conference on Information Processing in Computer‐Assisted Interventions; 7330:54–63.

- 3. Senneville BD, Mougenot C, Moonen C. Real‐time adaptive methods for treatment of mobile organs by MRI‐controlled high‐intensity focused ultrasound. Magn Reson Med. 2007;57(2):319–330.

- 4. Köhler MO, Senneville BD, Quesson B, et al. Spectrally selective pencil‐beam navigator for motion compensation of MR‐guided high‐intensity focused ultrasound therapy of abdominal organs. Magn Reson Med. 2011;66(1):102–111.

- 5. Ries M, Senneville BD, Roujol S, et al. Real‐time 3D target tracking in MRI guided focused ultrasound ablations in moving tissues. Magn Reson Med. 2010;64(6):1704–1712.

- 6. Auboiroux V, Petrusca L, Viallon M, et al. Ultrasonography‐based 2D motion‐compensated HIFU sonication integrated with reference‐free MR temperature monitoring: a feasibility study ex vivo. Phys Med Biol. 2012;57(10):159–171.

- 7. Auboiroux V, Petrusca L, Viallon M, et al. Respiratory‐gated MRgHIFU in upper abdomen using an MR‐compatible in‐bore digital camera. Biomed Res Int. 2014; Article ID 421726.

- 8. Rijkhorst E, Rivens I, Haar G, et al. Effects of respiratory liver motion on heating for gated and model‐based motion‐compensated high‐intensity focused ultrasound ablation. Medical Image Computing and Computer‐Assisted Intervention (MICCAI). Lecture Notes in Computer Science. 2011;6891:605–612.

- 9. Salomir R, Viallon M, Kickhefel A, et al. Reference‐free PRFS MR‐thermometry using near‐harmonic 2‐D reconstruction of the background phase. IEEE Trans on Med Imaging. 2011;31(2):287–301.

- 10. Lee D, Koizumi N, Ota K, Yoshizawa S. Ultrasound‐based visual servoing system for lithotripsy. Proc Intellig Robots Syst. (IROS). 2007;877–882.

- 11. Seo J, Koizumi N, Funamoto T, et al. Visual servoing for a US‐guided therapeutic HIFU system by coagulated lesion tracking: a phantom study. Int J Med Robot. 2011a;7(2):237–247.

- 12. Chanel L, Nageotte F, Vappou J, et al. Robotized high intensity focused ultrasound (HIFU) system for treatment of mobile organs using motion tracking by ultrasound imaging: an in vitro study. Conference of the Proceedings of the IEEE Eng Med Biol Soc. 2015 Aug;2571–2575.

- 13. Seo J, Koizumi N, Funamoto T, et al. Biplane US‐guided real‐time volumetric target pose estimation method for theragnostic HIFU system. J Robot Mechatron. 2011b;23(3):400–407.

- 14. Noble ML, Yuen JC, Kaczkowski PJ, et al. Gel phantom for use in high‐intensity focused ultrasound dosimetry. Ultrasound Med Biol. 2005;31:1383–1389.

- 15. Shung KK, Jeong JS, Chang JH. Ultrasound transducer and system for real‐time simultaneous therapy and diagnosis for noninvasive surgery of prostate tissue. IEEE Trans Ultrason Ferroelectr Freq Control. 2009;56(9):1913–1922.

- 16. Matthew JC, Jeong JS, Kirk KS. Adaptive hifu noise cancellation for simultaneous therapy and imaging using an integrated hifu/imaging transducer. Phys Med Biol. 2010;55:1889–1902.

- 17. Kennedy JE. High‐intensity focused ultrasound in the treatment of solid tumours. Nature Rev Cancer. 2005;5:321–327.

- 18. Cafarelli A, Mura M, Diodato A, et al. A computer‐assisted robotic platform for focused ultrasound surgery: assessment of high intensity focused ultrasound delivery. IEEE International Conference of Engineering in Medicine and Biology Society (EMBC), Milan, Italy, Aug. 2015.

- 19. Verbeni A, Ciuti G, Cafarelli A, et al. The FUTURA platform: a new approach merging non‐invasive ultrasound therapy with surgical robotics. IEEE International Conference of Engineering in Medicine and Biology Society (EMBC), Chicago, US, Aug. 2014.

- 20. Lediju Bell MA, Sen HT, Iordachita I, Kazanzides P, Wong J. In vivo reproducibility of robotic probe placement for a novel ultrasound‐guided radiation therapy system. J Med Imag. Bellingham. 2014 Jul;1(2):025001.

- 21. De Luca V, Benz T, Kondo S, et al. The 2014 liver ultrasound tracking benchmark. Phys Med and Biol. 2015 Jul 21;60(14):5571–5599.