Abstract

Introduction

Bringing together continuous quality improvement (CQI) data from multiple health services offers opportunities to identify common improvement priorities and to develop interventions at various system levels to achieve large-scale improvement in care. An important principle of CQI is practitioner participation in interpreting data and planning evidence-based change. This study will contribute knowledge about engaging diverse stakeholders in collaborative and theoretically informed processes to identify and address priority evidence-practice gaps in care delivery. This paper describes a developmental evaluation to support and refine a novel interactive dissemination project using aggregated CQI data from Aboriginal and Torres Strait Islander primary healthcare centres in Australia. The project aims to effect multilevel system improvement in Aboriginal and Torres Strait Islander primary healthcare.

Methods and analysis

Data will be gathered using document analysis, online surveys, interviews with participants and iterative analytical processes with the research team. These methods will enable real-time feedback to guide refinements to the design, reports, tools and processes as the interactive dissemination project is implemented. Qualitative data from interviews and surveys will be analysed and interpreted to provide in-depth understanding of factors that influence engagement and stakeholder perspectives about use of the aggregated data and generated improvement strategies. Sources of data will be triangulated to build up a comprehensive, contextualised perspective and integrated understanding of the project's development, implementation and findings.

Ethics and dissemination

The Human Research Ethics Committee (HREC) of the Northern Territory Department of Health and Menzies School of Health Research (Project 2015-2329), the Central Australian HREC (Project 15-288) and the Charles Darwin University HREC (Project H15030) approved the study. Dissemination will include articles in peer-reviewed journals, policy and research briefs. Results will be presented at conferences and quality improvement network meetings. Researchers, clinicians, policymakers and managers developing evidence-based system and policy interventions should benefit from this research.

Keywords: developmental evaluation, interactive dissemination, stakeholder engagement, primary health care, indigenous, quality improvement

Strengths and limitations of this study

Use of mixed methods and inclusion of perspectives of the research team and diverse healthcare stakeholders enhances validity and provides comprehensive data.

The developmental evaluation is being applied within an iterative dissemination project. Each iteration provides opportunities to evaluate and refine implementation processes and reports in response to researcher, participant and data collection needs.

The dissemination approach encourages stakeholders to send reports and surveys to others, limiting ability to measure the reach or response rates as part of the evaluation.

The evaluator is a team member and evaluates the research team’s work. Potential lack of objectivity is offset by continuing opportunities for reflexivity, sense-making and timely project adaptations.

Introduction

Background

Improving the implementation of evidence-based healthcare is a complex enterprise. It involves the production, translation and use of knowledge by researchers, policymakers, service providers and consumers. Using evidence to improve the quality of primary healthcare (PHC) services for Aboriginal and Torres Strait Islander people (Australia’s Indigenous nations) is critically important in Australia, where Indigenous people experience an unacceptable burden of ill health, shorter life expectancy and poorer access to PHC services compared with the general population.1 2

A number of health centre teams that serve Indigenous communities use continuous quality improvement (CQI) tools and processes to make evidence-based improvements in the care they deliver. CQI is inherently participatory; it generates and uses data and iterative processes to plan interventions, typically at the team or health centre level. It applies strategies that are known to be effective in knowledge translation, such as audit and feedback and goal setting.3–5

Improvement interventions have a higher probability of success when system changes are implemented concurrently at several levels—individual care processes, group or team work, the organisation and the larger system and policy environment.6 7 Despite developments in CQI theory and practice, there is a gap in the literature about how to engage stakeholders in wide-scale CQI processes to address improvement barriers and inform the development of system strengthening strategies. There is also a need for knowledge about how different knowledge translation strategies influence outcomes.8

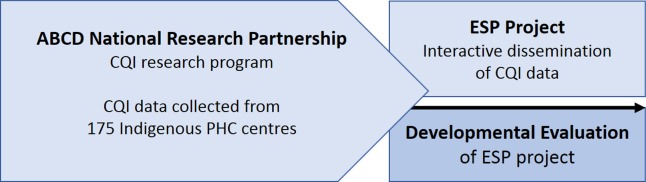

Bringing together CQI data from multiple PHC centres provides scope to use CQI in a different way. It offers opportunities to engage diverse stakeholders in identifying common priorities for improving care and interventions that target change at various levels of the health system. This paper describes the study protocol for the use of developmental evaluation (DE) to evaluate and strengthen a novel theory-informed interactive dissemination project engaging diverse stakeholders involved in Australian Indigenous healthcare. Titled ‘Engaging Stakeholders in Identifying Priority Evidence-Practice Gaps and Strategies for Improvement in Primary Health Care (ESP)’, the project disseminates aggregated CQI data from 175 PHC centres serving Indigenous people, for the purpose of informing improvement interventions. These centres contributed their data to a research programme under a partnership agreement. The relationship between the research programme, the ESP project and the DE is shown in figure 1.

Figure 1.

Relationship between the CQI research programme, ESP project and developmental evaluation. ABCD, Audit and Best Practice for Chronic Disease; CQI, continuous quality improvement; ESP, Engaging Stakeholders in Identifying Priority Evidence-Practice Gaps and Strategies for Improvement in Primary Health Care; PHC, primary health care.

The study context: Australian Indigenous PHC and quality improvement

Despite universal coverage for healthcare services through Medicare and specific funding for Indigenous PHC services, there is a significant and persistent disparity between the health and life expectancy of Indigenous and non-Indigenous Australians.2 9 The disparity is well documented. It relates to a history of colonisation and disempowerment, ongoing racial, social, educational and economic inequalities and lack of access to culturally safe service provision.1 10 Indigenous people access PHC through Indigenous community-controlled health services and government-operated PHC centres specifically established to meet the needs of Aboriginal and Torres Strait Islander people and through private general practices. PHC delivery settings are geographically diverse and vary in population density, governance arrangements and resource provision.

Reducing healthcare disparities requires CQI and system strengthening approaches that address the complexities of the PHC delivery environment and draw on data about clinical care and utilisation of services.7 11 In Indigenous PHC, this calls for approaches that incorporate the needs and values of Indigenous communities,12 make optimal use of health service performance data and use the professional and contextual knowledge of those working in the sector.5 13 It involves policy change and improvement interventions at various system levels.6 14

Developmental evaluation

DE is gaining recognition as a useful approach for implementation research.15 16 Evolving from utilisation-focused evaluation17 and drawing on tools and methods from a variety of disciplines, DE can be used to address complex health system issues that require engagement of multiple stakeholders in both the research and change processes.18

DE is typically embedded in the project context and involves continuous feedback to inform innovators, often with the evaluator positioned within a project or programme team. It is well suited to adapting projects or interventions implemented under complex conditions or emergent situations in which multiple influences make it difficult to predict what will happen as a project or strategy progresses.19 20 DE has been used, for example, to support change through team dialogue, to innovate health and recreation programmes in Indigenous communities, to develop principles and collaborative processes between agencies working to address difficult social and economic issues and to engage communities of practice in complex systems change.21 Challenges in DE include managing uncertainty and ambiguity, the volume of data and maintaining a results focus.22

To our knowledge, DE has not previously been used in a project involving CQI or dissemination of data, nor applied in order to ‘study a study’. While this made methodology development challenging, the available DE literature suggests that DE aligns well with a project that has a developmental purpose and is committed both to engaging stakeholders with research evidence and to contributing to the science of implementation. The benefits of having an ‘embedded evaluator’, such as timely feedback, discussion and sense-making to inform adaptive decision-making,15 21 were influential in selecting a DE approach.

Aims of the DE study

The aim of the DE study is to evaluate and enhance a novel interactive dissemination project designed to engage stakeholders in Indigenous PHC in wide-scale processes to interpret and use aggregated CQI data.

The objectives of the DE study are to:

develop and refine the design, reports, processes and resources used in the interactive dissemination project

explore the barriers and facilitators to stakeholder engagement in the project

identify the actual or intended use of the aggregated CQI data and coproduced knowledge by different stakeholders and factors influencing use

assess the overall effectiveness of the interactive dissemination processes used in the ESP project.

The ESP project: an opportunity for learning and innovation through DE

Described in a separate paper, the ESP project23 aims to engage stakeholders with aggregated data and promote wide-scale improvements in quality of care by applying a system-wide approach to CQI.24 The project uses a comprehensive CQI dataset collected for the Audit and Best Practice for Chronic Disease (ABCD) National Research Partnership (2010–2014).4 25

Over a decade, PHC centres participating in the ABCD National Research Partnership (Partnership) used evidence-based best practice clinical record audit and system assessment tools to assess and reflect on system performance, interpreting the data to identify improvement priorities and develop strategies appropriate to their service population and delivery contexts.5 Available ABCD CQI tools cover various aspects of PHC (eg, chronic illness, preventive and maternal care).

In addition to their routine use of these tools as part of their plan–do–study–act CQI processes, 175 PHC centres involved in the Partnership voluntarily provided service-level deidentified CQI data for analysis. These data are based on almost 60 000 audits of patient records and 492 systems assessments. They provide a unique opportunity to use aggregated health centre performance data for wide-scale system improvement and population health benefit and to explore innovative ways to engage healthcare stakeholders with evidence.

Aiming to support understanding and use of these data through an interactive exchange between healthcare researchers and stakeholders, the ESP project draws on explicit and practical knowledge, and different types of expertise, to identify improvement strategies aligned with implementation settings.23 26 27

The ESP project design is adapted from systematic methods that aim to link interventions to modifiable barriers to address evidence–practice gaps.28 Four phases of online report distribution and feedback will involve stakeholders in data interpretation and knowledge coproduction, as follows:

Phase 1: identification of priority evidence-practice gaps. Stakeholders receive a report of aggregated cross-sectional CQI data and complete an online survey.

Phase 2: identification of barriers and enablers to addressing gaps in care identified in Phase 1. Stakeholders receive a report of trend data relevant to the identified priority evidence–practice gaps. They complete an online survey about influences on individual behaviours, health centre and wider systems. The survey questions are based on the theoretical domains framework29 30 and on other models identifying barriers to the effective functioning of health centre and higher level systems.31–33

Phase 3: identification of strategies for improvement. Provided with findings from phases 1 and 2, and an evidence summary about CQI implementation, stakeholders are asked to suggest strategies likely to be effective in addressing modifiable barriers and strengthening enablers.

Phase 4: review of the draft final report. Respondents are asked to review the draft final report and provide feedback on the overall findings in the specific clinical care area.

Separate processes will be implemented using audit data collected for child, maternal, preventive and mental health, chronic illness and rheumatic heart disease care. The rationale for the ESP project is that involving diverse stakeholders in a phased approach using aggregated CQI data should stimulate discussion and information sharing and enhance ownership of the development of interventions to address system gaps. The collaboratively produced findings are intended as a resource for planning implementation interventions that fit materially, historically and culturally with organisational and local contexts.34

Methods and analysis

Using a case study approach,35 36 the DE will examine and enhance the methods through which the dissemination of aggregated data and knowledge coproduction are enacted in the ESP project. It seeks to effect changes and develop understanding as the dissemination project and concurrent evaluation proceed through iterative phases of implementation.

Systematically applying DE within the ESP project

The DE is designed to align with the aim and design of the ESP project, which will provide opportunities to collect feedback from survey respondents, to identify interview participants and to engage the research team in DE processes.

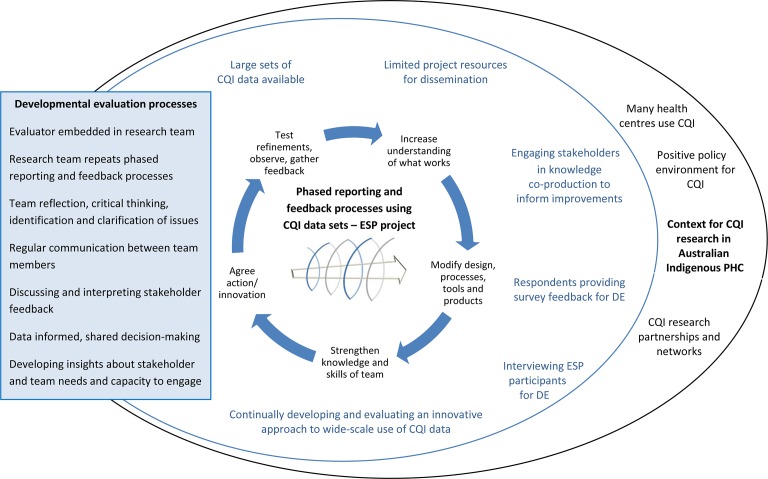

DE processes: the evaluator (AL) is embedded within the research team to support the reflective and iterative nature and the cocreation principle of DE21 and to facilitate real-time responses to project conditions and issues as they emerge. The team will discuss and interpret stakeholder feedback and use reflective critical thinking to identify and clarify issues relevant to implementing the ESP project. Through these processes, decision making for ongoing project implementation will be shared among team members and informed by data. Insights will be developed about stakeholder and team needs and capacity to engage in the collaborative processes of the ESP project.

Iterative cycles: these processes will be applied to iterative cycles of reflection through which actions will be agreed, refinements tested, results observed and feedback gathered. The systematic approach will assist in managing the high volume of data and maintaining focus. It will lead to increased understanding of what works well or poorly to elicit findings and engage project participants and the research team in collaborative processes. Project design, processes, tools and reports are expected to be continuously modified to support the presentation of data to inform wide-scale improvement. Team knowledge and skills in relation to implementing interactive dissemination in the context of Indigenous healthcare will be strengthened through the continuous cycle of learning and development, as phases of the dissemination project are repeated using sets of aggregated CQI data in different areas of clinical care.

Implementation context: the DE study is being conducted within the wider context for CQI research in Australian Indigenous PHC, where CQI is used within many health centres. There is a positive policy environment for CQI and a history of researcher-service provider partnerships for CQI development. Figure 2 illustrates how DE is applied within the ESP project.

Figure 2.

Systematically applying developmental evaluation within the ESP project. CQI, continuous quality improvement; DE, developmental evaluation; ESP, Engaging Stakeholders in Identifying Priority Evidence-Practice Gaps and Strategies for Improvement in Primary Health Care. (Adapted from Togni, Askew, Rogers, et al., 2016)50

Data collection and analysis methods

The sources of data used in this DE study include documentation, quantitative and qualitative surveys and participant interviews. A further source of evidence is participant-observation,36 that is, the actions taken by the research team following their review of evidence and experiences during project implementation. These are appropriate sources for research in which theory is nascent and research questions are exploratory.37

1. Document analysis

Administrative project records will provide information about the context, scope, early stages of ESP project development, report distribution and ongoing implementation. Data sources will include meeting minutes and recorded interactions between research team members, and between team members and other stakeholders. These documents will be used to identify and clarify key issues, dates, events and tasks and to track key decisions and developments in the ESP design, processes, reports and other resources.

2. Survey data

Online surveys designed to collect data leading to the generation of wide-scale CQI strategies (as part of the ESP project) incorporate evaluative questions. The questions will ask respondents to rate, on a Likert scale, the accessibility, content, usefulness and usability of information in the reports and the extent to which the reports promote workplace discussion about care quality. These data will be analysed using simple descriptive statistics. Respondents will also be invited to provide free-text responses suggesting ways in which the team can improve the surveys and reports and support data interpretation. Free-text responses will be integrated and analysed with other qualitative data (explicit survey items are in online supplementary files 1–4).
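As an illustration of the simple descriptive statistics mentioned above, the following minimal sketch summarises the ratings for a single evaluative item. It assumes a five-point Likert scale coded 1 to 5; the scale length, the example ratings and the function name `summarise_likert` are hypothetical and are not taken from the ESP surveys.

```python
from collections import Counter
from statistics import median

# Hypothetical ratings for one evaluative item (for example, report usefulness),
# assuming a 5-point scale coded 1 (lowest) to 5 (highest). Illustrative only.
ratings = [4, 5, 3, 4, 2, 5, 4, 4]

def summarise_likert(responses, scale=(1, 2, 3, 4, 5)):
    """Return the response count, per-point counts and percentages, and the median."""
    if not responses:
        raise ValueError("No responses to summarise")
    counts = Counter(responses)
    n = len(responses)
    return {
        "n": n,
        "counts": {point: counts.get(point, 0) for point in scale},
        "percent": {point: round(100 * counts.get(point, 0) / n, 1) for point in scale},
        "median": median(responses),
    }

summary = summarise_likert(ratings)
print(summary)
```

Reporting counts, percentages and the median, rather than the mean, treats the ratings as ordinal; this is one common choice and not necessarily the one the research team will adopt.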

As key change decisions are made, the research team will modify the surveys to seek feedback about the ESP project modifications. For example, additional questions seeking comments about newly developed resources, design innovations or changed report formats will be included.

Survey data collected as part of the ESP project will provide important evaluation data about who is engaging with project processes across Australian jurisdictions. These data will enable the team and evaluator to track stakeholder engagement through each phase and cycle for each clinical care area, including the number of responses to each survey, whether responses are from individuals or groups and how this affects responses. Respondent information requested in the surveys includes professional role, scope and location (national, Australian jurisdiction), work setting or population group served (eg, urban, rural and remote populations), type of organisation represented (eg, community controlled health centre and government health service) and group size (as relevant). This information will enable the purposive sampling of interviewees.
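The engagement tracking described above could be tallied along the following lines. This is a sketch under stated assumptions: the record fields (`care_area`, `phase`, `respondent`), the example records and the function name `tally_engagement` are hypothetical and do not reflect the actual format of the ESP survey data.

```python
from collections import defaultdict

# Hypothetical survey records. Field names and values are illustrative
# assumptions, not the actual ESP survey export format.
survey_records = [
    {"care_area": "maternal health", "phase": 1, "respondent": "individual"},
    {"care_area": "maternal health", "phase": 1, "respondent": "group"},
    {"care_area": "maternal health", "phase": 2, "respondent": "individual"},
    {"care_area": "mental health", "phase": 1, "respondent": "group"},
    {"care_area": "maternal health", "phase": 1, "respondent": "individual"},
]

def tally_engagement(records):
    """Count responses per (care area, phase), split by individual or group."""
    tally = defaultdict(lambda: {"individual": 0, "group": 0})
    for record in records:
        key = (record["care_area"], record["phase"])
        tally[key][record["respondent"]] += 1
    return dict(tally)

engagement = tally_engagement(survey_records)
for (care_area, phase), counts in sorted(engagement.items()):
    total = counts["individual"] + counts["group"]
    print(f"{care_area}, phase {phase}: {total} responses {counts}")
```

Keying the tally on care area and phase keeps each dissemination cycle separate, so changes in engagement between phases can be compared within a clinical care area.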

3. Semistructured interviews

Semistructured interviews will be conducted to provide detailed information and feedback for the DE. They will be used to explore emergent themes in the survey data and to probe factors and perspectives relating to participant engagement, use of aggregated data and findings and how to improve the project processes and presentation of information (the interview guide is available in online supplementary file 5).


A single Australian jurisdiction will be the focus of qualitative interviews, purposively selected because of its history of CQI and CQI research in Indigenous PHC. Participating health centres in this jurisdiction have contributed a significant proportion of the aggregated CQI data used in the ESP project. Further interviews will be conducted with participants who have cross-jurisdiction (national) roles. Potential interviewees will be identified from respondent information collected through the surveys; contact details are provided voluntarily by respondents. Interview participants will be purposively sampled from project participants to represent different professional roles, organisation types and work settings and participation in different ESP project cycles.

Between 25 and 30 interviews are expected to provide representative data for effective comparison between groups and settings. The aim will be to conduct sufficient interviews to build a convincing analytical narrative based on richness and detail and to achieve ‘information power’ in identifying themes in the data.38 The evaluator (AL) will conduct all interviews.

Interview transcripts will be deidentified and entered into NVivo, a computer-assisted qualitative data analysis program, to assist with coding for analysis. The evaluator will generate a priori codes derived from the literature and based on a widely used conceptual framework for knowledge translation39 and the DE research questions. This will be done ahead of identifying emergent codes to discover themes, categories and patterns in the data, to explore the relationships between them and to build theories through an inductive process.40 Coding will be checked by a research colleague to ensure coding reliability and consistency.

The aim of the analysis of interview data is to provide information for two purposes. First, the preliminary results will be reported and discussed with research team members to help inform the DE process. Together with other information, such as survey findings, the interview data will influence real-time changes to ESP project processes, tools and reports as the project is implemented.

The second purpose is for interpretation and reflection to gain deep insight and develop understanding relevant to the DE research questions. This includes understanding of the factors influencing stakeholder engagement in the interactive dissemination project, ways to support participation and the extent to which being involved influences participants’ implementation decisions. It includes insights into use of the CQI data and use of project findings about consensus priority evidence–practice gaps, barriers, enablers and strategies for improving care quality.

4. Reflective processes with the research team

As illustrated in figure 2, the research team’s learning and actions will be guided by a facilitated process of reflection and analysis, drawing on stakeholder feedback and the team’s experiences. This process will enable the team to identify emerging issues and to innovate, test and refine the elements of the interactive dissemination project. It will be based on the questions: what? (What happened?), so what? (What do the results mean or imply? How did we influence the results?) and now what? (How do we respond? What should we do differently?).41 An example of how these questions are applied is shown in table 1.

Table 1.

Reflective evaluation questions

| What? (What happened?) | So what? (What does it mean?) | Now what? (What to do differently?) |

| How many survey responses did we receive? Whose responses did we capture? What was the quality of data collected through this survey? What feedback did survey respondents and interviewees provide about: What worked well/not so well for you in terms of refinements and modifications made? | Do we need to promote and/or distribute reports in other ways and target particular people? Do we need to clarify, adjust, add or delete survey questions to elicit robust data and encourage engagement? Do we consider modifying the next phase, or the ESP process we use for the next dataset? Do we need to present or explain the data differently to enhance understanding? Do we need to modify report formats and content to make them more accessible to those targeted? Does the literature about presenting research to different user groups match respondent feedback? How does feedback and observation connect with what we know from our experience of engaging stakeholders in CQI? | Based on the explicit and experiential evidence, should we be making further changes to enhance the: How should we prioritise these changes (eg, considering resources needed, time involved, alignment with theory)? What is the plan of action for making changes? How will these changes impact on the project and others involved (eg, clinical leaders and report co-authors involved in data analysis)? |

CQI, continuous quality improvement; ESP, Engaging Stakeholders in Identifying Priority Evidence-Practice Gaps and Strategies for Improvement in Primary Health Care.

The processes will thereby reflect CQI processes (plan–do–study–act cycles). Repeating these cycles in different areas of PHC will offer opportunities to continuously gather data, to learn from each cycle of stakeholder engagement and feedback and to apply learning to improve the implementation of subsequent activities within the ESP project (figure 2). Documenting these processes, team perceptions and change decisions will enable consideration of the contribution of DE in strengthening project implementation.

Data integration and analysis

Taking a pragmatic approach, multiple sources of data will be collected, analysed and integrated42 to address the objectives of the DE. Each data source will be individually analysed then triangulated to support validation and cross-checking of findings.43 Table 2 outlines these processes.

Table 2.

Data sources and their use to address the developmental evaluation objectives

| DE objective | Data source | Analysis and use of data to address DE objective |

| Develop and refine the design, reports, processes and resources used in the interactive dissemination project | Document analysis | Identification of implementation strengths, issues and need for refinements. Tracking of actions, issues, decisions, key events, changes |

| | Survey data | Analysis of quantitative and qualitative feedback about reports, processes, resources, design |

| | Semistructured interviews | Identification of emerging data patterns, commonalities and ideas for project improvement |

| | Reflective processes and discussion among research team members to integrate, interpret and use different types of data to determine ESP refinement needs and make ongoing implementation decisions | |

| Explore the barriers and facilitators to stakeholder engagement in the project | Semistructured interviews | Coding and analysis of data to develop assertions, propositions, generalisations about factors influencing stakeholder engagement. Interpretation to develop understanding |

| | Qualitative survey data | |

| | Preliminary findings contribute to team discussions about ESP refinement and implementation. | |

| Identify actual or intended use of the aggregated CQI data and coproduced knowledge by different stakeholders and factors influencing use | Semistructured interviews | Coding and analysis of data to develop assertions, propositions, generalisations about stakeholder use of aggregated CQI data and ESP findings. Interpretation to gain insights |

| Assess the overall effectiveness of the interactive dissemination processes used in the ESP project | All | Synthesis of all data types and findings to identify key DE findings and outcomes |

DE, developmental evaluation; ESP, Engaging Stakeholders in Identifying Priority Evidence-Practice Gaps and Strategies for Improvement in Primary Health Care.

In the initial stage of the study, ESP project survey responses help to inform the development of the exploratory questions used in the semistructured interviews for the DE. Thereafter, the collection of qualitative and quantitative data will occur concurrently. Survey responses will contribute evaluation data through the ESP project phases and dissemination cycles in each area of clinical care. Semistructured interviews will be timed to capture the input of participants engaging with ESP reports and surveys (eg, for maternal health and mental health).

The continuous data collection, analysis and synthesis processes using different data sources will provide the team with opportunities to apply what is learnt, generate new avenues of enquiry and ideas and test changes made within the ESP project.

Project documents and records will be used to construct a timeline reflecting key dates, events, stakeholder feedback and participation, ideas, decisions and implementation of project refinements. The timeline will track the project, enabling the team to draw causal hypotheses and informing ongoing change decisions. Bringing together and interpreting the different types of data will help build a comprehensive picture of ESP project development and a contextualised and integrated understanding of the findings and evaluation outcomes of the ESP project.

Overall, these processes are expected to identify key issues and principles to inform future interactive dissemination efforts and wide-scale CQI in the context of Indigenous PHC and to contribute knowledge that can be transferred to other healthcare contexts and disciplines.

Ethics and dissemination

Ethics

The study has been approved by the Human Research Ethics Committee of the Northern Territory Department of Health and Menzies School of Health Research (Project No. 2015-2329), the Central Australian Human Research Ethics Committee (Project No. 15-288) and the Charles Darwin University Human Research Ethics Committee (Project No. H15030). Approval covers the period from March 2015 to 31 May 2018.

Dissemination

Findings will be disseminated through articles submitted to peer-reviewed journals, a thesis and other publications such as research briefs. Results will be presented at conferences and other forums including quality improvement research network meetings.

Discussion

The study seeks to support, develop and evaluate an interactive dissemination project (the ESP) involving stakeholders in Indigenous PHC in the novel use of aggregated CQI data to identify priority evidence-practice gaps, barriers, enablers, and strategies in different areas of clinical care. The characteristics of DE, particularly its capacity to support emergence and adaptation in complex settings, make it suitable for this purpose. The collection and analysis of DE data through iterative cycles of stakeholder feedback and team reflection will provide information and opportunities for the continual refinement of research report presentation and the adjustment of tools and processes for capturing participant knowledge. The analysis and interpretation of interview data will provide insights about ways to engage stakeholders in wide-scale CQI and build greater understanding of the implementation context, use of data and ESP project findings and implications for system improvement.

Recent knowledge translation literature indicates gaps in knowledge about how different knowledge translation strategies influence outcomes and about the relationship between their underlying logic or theory and beneficial outcomes.8 44 There is also need for detailed reporting and evaluation of such research.8 45 This study can help to address these gaps. The DE is being applied within a project that has adapted a theory-based design linking the development of interventions with modifiable barriers, enablers and identified improvement priorities.23 28 In addition to studying the application of theory in the ESP project, the DE offers scope to test, identify and document those elements essential to achieving the intended dissemination outcomes.

The ESP project acknowledges the importance of the sharing of tacit knowledge among practitioners for addressing the ‘know-do gap’.46 Consistent with approaches advocated in recent literature,47 48 it adopts a strategy that integrates knowledge production, translation and use across disciplines.49 It is being implemented with modest resources, using online methods of report distribution and feedback, and relying on stakeholder ‘buy-in’ to enhance report distribution and facilitate engagement. There is potential for the DE study to provide useful lessons about the strengths and limitations of such an approach. The study will also contribute knowledge about the conditions and factors that influence stakeholder engagement in wide-scale data interpretation and knowledge coproduction using CQI data and the use of this evidence by various PHC stakeholders and in differing contexts.

Finally, the DE study is supporting and evaluating a novel interactive dissemination project implemented in the Australian Indigenous healthcare context, in which there is an urgent need to ensure that knowledge from research drives healthcare improvements. The DE will support the coproduction and dissemination of knowledge by stakeholders working in this sector, based on recent national-level CQI data from Australian Indigenous PHC centres. This knowledge can be used to implement improvements at practitioner, team, health centre and higher system levels. The lessons learnt about the potential for using aggregated CQI data for this purpose are expected to be applicable to other healthcare contexts. Researchers, clinicians, policymakers and managers developing evidence-based system and policy interventions should benefit from this research. The study will also help to address the current gap in the scientific literature about applying DE.

Supplementary Material

bmjopen-2017-016341supp001.pdf (429KB, pdf)

bmjopen-2017-016341supp002.pdf (280.2KB, pdf)

bmjopen-2017-016341supp003.pdf (284KB, pdf)

bmjopen-2017-016341supp004.pdf (408.1KB, pdf)

Acknowledgments

The development of this manuscript would not have been possible without the active support, enthusiasm and commitment of staff in participating PHC services, and members of the ABCD National Research Partnership and the Centre for Research Excellence in Integrated Quality Improvement in Indigenous Primary Healthcare. The authors would like to thank Samantha Togni for feedback on figure 2.

Footnotes

Contributors: AL developed the protocol and manuscript drafts. All authors reviewed drafts and contributed to manuscript development. RB leads the ESP project and supervised the protocol design and writing process. JB and VM contributed to the ESP project design and will provide data for the developmental evaluation study. FC, GH and NP contributed to the methodology and provided advice during protocol development. All authors read and approved the final manuscript.

Competing interests: None declared.

Patient consent: The study does not refer to personal medical information. The participants in the study are members of the healthcare workforce.

Ethics approval: Human Research Ethics Committee of the Northern Territory Department of Health and Menzies School of Health Research; Central Australian Human Research Ethics Committee; Charles Darwin University Human Research Ethics Committee.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Australian Health Ministers’ Advisory Council. Aboriginal and Torres Strait Islander Health Performance Framework 2014 Report. Canberra: AHMAC, 2015.

- 2. Australian Institute of Health and Welfare. Australia’s health. Canberra: AIHW, 2016.

- 3. O'Neill SM, Hempel S, Lim YW, et al. Identifying continuous quality improvement publications: what makes an improvement intervention 'CQI'? BMJ Qual Saf 2011;20:1011–1019. doi:10.1136/bmjqs.2010.050880

- 4. Bailie R, Matthews V, Brands J, et al. A systems-based partnership learning model for strengthening primary healthcare. Implement Sci 2013;8:143. doi:10.1186/1748-5908-8-143

- 5. Bailie RS, Si D, O'Donoghue L, et al. Indigenous health: effective and sustainable health services through continuous quality improvement. Med J Aust 2007;186:525–7.

- 6. Ferlie EB, Shortell SM. Improving the quality of health care in the United Kingdom and the United States: a framework for change. Milbank Q 2001;79:281–315. doi:10.1111/1468-0009.00206

- 7. Starfield B, Gérvas J, Mangin D, et al. Clinical care and health disparities. Annu Rev Public Health 2012;33:89–106. doi:10.1146/annurev-publhealth-031811-124528

- 8. Gagliardi AR, Berta W, Kothari A, et al. Integrated knowledge translation (IKT) in health care: a scoping review. Implement Sci 2016;11:38. doi:10.1186/s13012-016-0399-1

- 9. Anderson I, Robson B, Connolly M, et al. Indigenous and tribal peoples' health (The Lancet-Lowitja Institute Global Collaboration): a population study. Lancet 2016;388:131–157. doi:10.1016/S0140-6736(16)00345-7

- 10. Baba JT, Brolan CE, Hill PS. Aboriginal medical services cure more than illness: a qualitative study of how Indigenous services address the health impacts of discrimination in Brisbane communities. Int J Equity Health 2014;13:56. doi:10.1186/1475-9276-13-56

- 11. Fiscella K, Sanders MR. Racial and Ethnic Disparities in the Quality of Health Care. Annu Rev Public Health 2016;37:375–394. doi:10.1146/annurev-publhealth-032315-021439

- 12. Davy C, Cass A, Brady J, et al. Facilitating engagement through strong relationships between primary healthcare and Aboriginal and Torres Strait Islander peoples. Aust N Z J Public Health 2016;40:535–41. doi:10.1111/1753-6405.12553

- 13. Kothari AR, Bickford JJ, Edwards N, et al. Uncovering tacit knowledge: a pilot study to broaden the concept of knowledge in knowledge translation. BMC Health Serv Res 2011;11:198. doi:10.1186/1472-6963-11-198

- 14. Lopez-Class M, Peprah E, Zhang X, et al. A Strategic Framework for Utilizing Late-Stage (T4) Translation Research to Address Health Inequities. Ethn Dis 2016;26:387. doi:10.18865/ed.26.3.387

- 15. Rey L, Tremblay M, Brousselle A. Managing tensions between evaluation and research: illustrative cases of developmental evaluation in the context of research. Am J Eval 2014;35:45–60.

- 16. Conklin J, Farrell B, Ward N, et al. Developmental evaluation as a strategy to enhance the uptake and use of deprescribing guidelines: protocol for a multiple case study. Implement Sci 2015;10:91. doi:10.1186/s13012-015-0279-0

- 17. Patton MQ. Essentials of Utilization-Focused Evaluation. Thousand Oaks, United States: Sage Publications, 2011.

- 18. Gagnon M. Canadian Institutes of Health Research. Section 5.1 Knowledge dissemination and exchange of knowledge. 2010. http://www.cihr-irsc.gc.ca/e/41953.html.

- 19. Hummelbrunner R. Systems thinking and evaluation. Evaluation 2011;17:395–403. doi:10.1177/1356389011421935

- 20. Patton MQ. Developmental Evaluation: Applying Complexity Concepts to Enhance Innovation and Use. New York: The Guilford Press, 2011.

- 21. Patton MQ, McKegg K, Wehipeihana N. Developmental Evaluation Exemplars: Principles in Practice. 1st ed. New York: The Guilford Press, 2016.

- 22. Dozois E, Langlois M, Blanchet-Cohen N. DE 201: a practitioner’s guide to developmental evaluation. The JW. McConnell Family Foundation and the International Institute for Child Rights and Development 2010.

- 23. Laycock A, Bailie J, Matthews V, et al. Interactive Dissemination: Engaging Stakeholders in the Use of Aggregated Quality Improvement Data for System-Wide Change in Australian Indigenous Primary Health Care. Front Public Health 2016;4:84. doi:10.3389/fpubh.2016.00084

- 24. Bailie J, Laycock A, Matthews V, et al. System-Level Action Required for Wide-Scale Improvement in Quality of Primary Health Care: Synthesis of Feedback from an Interactive Process to Promote Dissemination and Use of Aggregated Quality of Care Data. Front Public Health 2016;4:86. doi:10.3389/fpubh.2016.00086

- 25. Bailie R, Si D, Shannon C, et al. Study protocol: national research partnership to improve primary health care performance and outcomes for indigenous peoples. BMC Health Serv Res 2010;10:129. doi:10.1186/1472-6963-10-129

- 26. Grimshaw JM, Eccles MP, Lavis JN, et al. Knowledge translation of research findings. Implement Sci 2012;7:50. doi:10.1186/1748-5908-7-50

- 27. Hailey D, Grimshaw J, Eccles M, et al. Effective Dissemination of Findings from Research. Alberta, CA: Institute of Health Economics, 2008.

- 28. French SD, Green SE, O'Connor DA, et al. Developing theory-informed behaviour change interventions to implement evidence into practice: a systematic approach using the theoretical domains framework. Implement Sci 2012;7:38. doi:10.1186/1748-5908-7-38

- 29. Michie S, Johnston M, Abraham C, et al. Making psychological theory useful for implementing evidence based practice: a consensus approach. Qual Saf Health Care 2005;14:26–33. doi:10.1136/qshc.2004.011155

- 30. Huijg JM, Gebhardt WA, Crone MR, et al. Discriminant content validity of a theoretical domains framework questionnaire for use in implementation research. Implement Sci 2014;9:11. doi:10.1186/1748-5908-9-11

- 31. Wagner EH, Austin BT, Davis C, et al. Improving chronic illness care: translating evidence into action: interventions that encourage people to acquire self-management skills are essential in chronic illness care. Health Aff 2001;20:64–78.

- 32. Schierhout G, Hains J, Si D, et al. Evaluating the effectiveness of a multifaceted, multilevel continuous quality improvement program in primary health care: developing a realist theory of change. Implement Sci 2013;8:119. doi:10.1186/1748-5908-8-119

- 33. Tugwell P, Robinson V, Grimshaw J, et al. Systematic reviews and knowledge translation. Bull World Health Organ 2006;84:643–51. doi:10.2471/BLT.05.026658

- 34. Jackson CL, Greenhalgh T. Co-creation: a new approach to optimising research impact? Med J Aust 2015;203:283–4. doi:10.5694/mja15.00219

- 35. Thomas G. A typology for the Case Study in Social Science following a review of Definition, Discourse, and structure. Qualitative Inquiry 2011;17:511–21. doi:10.1177/1077800411409884

- 36. Yin R. Case Study Research: Design and Methods. 4th ed. Thousand Oaks, United States: Sage Publications, 2009.

- 37. Baker GR. The contribution of case study research to knowledge of how to improve quality of care. BMJ Qual Saf 2011;20 Suppl 1:i30–i35. doi:10.1136/bmjqs.2010.046490

- 38. Malterud K, Siersma VD, Guassora AD. Sample size in Qualitative Interview Studies: guided by Information Power. Qual Health Res 2015;1. doi:10.1177/1049732315617444

- 39. Harvey G, Kitson A. Implementing Evidence-based Practice in Healthcare: a Facilitation Guide. London, United Kingdom: Taylor & Francis Ltd, 2015.

- 40. Patton MQ. Qualitative Research and Evaluation Methods. 3rd ed. Thousand Oaks, United States: Sage Publications, 2002.

- 41. Rolfe G, Freshwater D, Jasper M. Critical reflection for nursing and the helping professions: a user's guide. Basingstoke: Palgrave Macmillan, 2001.

- 42. Fetters MD, Freshwater D. The 1 + 1 = 3 Integration Challenge. J Mix Methods Res 2015;9:115–7. doi:10.1177/1558689815581222

- 43. Creswell J, Klassen A, Clark PV, et al. Best Practices for Mixed Methods Research in the Health Sciences. USA: Office of Behavioral and Social Sciences Research (OBSSR), 2011.

- 44. Davies P, Walker AE, Grimshaw JM. A systematic review of the use of theory in the design of guideline dissemination and implementation strategies and interpretation of the results of rigorous evaluations. Implement Sci 2010;5:14. doi:10.1186/1748-5908-5-14

- 45. Greenhalgh T, Robert G, Macfarlane F, et al. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q 2004;82:581–629. doi:10.1111/j.0887-378X.2004.00325.x

- 46. Greenhalgh T, Wieringa S. Is it time to drop the 'knowledge translation' metaphor? A critical literature review. J R Soc Med 2011;104:501–9. doi:10.1258/jrsm.2011.110285

- 47. Bowen SJ, Graham ID. From knowledge translation to engaged scholarship: promoting research relevance and utilization. Arch Phys Med Rehabil 2013;94(1 Suppl):S3–S8. doi:10.1016/j.apmr.2012.04.037

- 48. Rycroft-Malone J, Burton CR, Bucknall T, et al. Collaboration and Co-Production of knowledge in Healthcare: opportunities and challenges. Int J Health Policy Manag 2016;5:221–3. doi:10.15171/ijhpm.2016.08

- 49. Kothari A, Wathen CN. A critical second look at integrated knowledge translation. Health Policy 2013;109:187–91. doi:10.1016/j.healthpol.2012.11.004

- 50. Togni S, Askew S, Rogers S, et al. Creating Safety to Explore: Strengthening Innovation in an Australian Indigenous Primary Health Care Setting through Developmental Evaluation. In: Patton MQ, McKegg K, Wehipeihana N, eds. Developmental Evaluation Exemplars: Principles in Practice. New York: Guilford Press, 2016:234–51.

Associated Data

Supplementary Materials

bmjopen-2017-016341supp005.pdf (205.6KB, pdf)
