Abstract

Economic choice behavior entails the computation and comparison of subjective values. A central contribution of neuroeconomics has been to show that subjective values are represented explicitly at the neuronal level. With this result at hand, the field has increasingly focused on the difficult question of where in the brain and how exactly subjective values are compared to make a decision. Here we review a broad range of experimental and theoretical results suggesting that good-based decisions are generated in a neural circuit within the orbitofrontal cortex (OFC). The main lines of evidence supporting this proposal include the fact that goal-directed behavior is specifically disrupted by OFC lesions, the fact that different groups of neurons in this area encode the input and the output of the decision process, the fact that activity fluctuations in each of these cell groups correlate with choice variability, and the fact that these groups of neurons are computationally sufficient to generate decisions. Results from other brain regions are consistent with the idea that good-based decisions take place in OFC, and indicate that value signals inform a variety of mental functions. We also contrast the present proposal with other leading models for the neural mechanisms of economic decisions. Finally, we indicate open questions and suggest possible directions for future research.

Introduction

Neuroeconomics has been a lively area of research since the early 2000s. The ultimate goal of this field is to understand the brain mechanisms underlying economic choices. A core idea rooted in economic theory is that choosing entails two mental stages: values are first assigned to the available options, and a decision is then made by comparing values. Thus, for the first generation of studies, the central question was whether the construct of value is valid at the neural level. The most important result of that period was the demonstration that subjective values are explicitly represented in the brain during choice behavior (Bartra et al., 2013; Clithero and Rangel, 2014; O’Doherty, 2014; Padoa-Schioppa, 2011; Wallis, 2012). With this fundamental result at hand, the field increasingly turned to the question of how subjective values are compared to make a decision.

In the past few years, different research groups have pursued different working hypotheses on the neural mechanisms generating the decision and on the brain regions participating in this process. Research foci have included the posterior parietal cortex (Glimcher et al., 2005; Louie et al., 2013), the hippocampus (Shadlen and Shohamy, 2016), and the role of visual attention (Hare et al., 2011; Krajbich et al., 2010). While these lines of investigation remain active, a series of recent breakthroughs links good-based decisions specifically to the activity of different neuronal populations in the OFC. The most notable findings have come from experiments in non-human primates and from computational modeling. Lesion studies dissociated the contribution of the OFC from that of neighboring ventromedial prefrontal cortex (vmPFC), while neurophysiology studies established strong links between the activity of neurons in the OFC and the generation of economic decisions. Complementing these results, computational models suggested that the groups of neurons identified in this area are necessary and sufficient to generate decisions. Taken together, these lines of evidence suggest that good-based decisions emerge from a neural circuit within the OFC. The purpose of this article is to review this growing literature, to discuss the proposed role of OFC in relation to other models of economic decision making, and to indicate open questions for future research.

The article is organized as follows. The first three sections review the notion of value in neuroeconomics, discuss anatomy and lesion studies implicating OFC in economic choices, and describe the neuronal representation of goods and values in this area. The next three sections describe the evidence supporting the proposal that good-based decisions (i.e., value comparisons) are generated within the OFC. One section reviews the possible contributions of other brain regions. Two other sections review other models of economic decisions, namely the distributed consensus model and the attentional drift-diffusion model. The concluding section summarizes the main points of the article and suggests directions for further investigation.

The notion of value in neuroeconomics

In the past decade, a large number of studies have provided direct or indirect evidence for an explicit neuronal representation of subjective values during economic choice behavior. Value signals have been found in numerous brain regions, most notably OFC and vmPFC (reviewed in Bartra et al., 2013; Clithero and Rangel, 2014; O’Doherty, 2014; Padoa-Schioppa, 2011; Wallis, 2012). Without revisiting that literature in detail, we limit this section to a few considerations that are particularly relevant for the rest of this article.

Work on the neuronal representation of economic value has antecedents in economics and psychology. Classic economists such as Adam Smith and Jeremy Bentham rooted their economic theories in psychological concepts of pleasure and pain. Subsequent generations of economists, however, gradually emancipated their models from psychological constructs, a process that culminated with the formulation of neoclassical, or standard, economic theory (Niehans, 1990). Standard economics is (almost) completely divorced from psychology: the theory is built entirely on “revealed preferences” and is agnostic about whether values (or utilities) are real psychological or neuronal entities. The standard theory limits itself to noting that, under well-defined conditions, choices are made “as if” based on assigned values (Kreps, 1990). In this conception, the notion of value is rather weak. Yet, economic theory offers a powerful framework to which behavioral and physiological facts can be securely anchored.

Other antecedents can be found in learning theory, an area of psychology focused on associative learning. Modern learning scholars distinguish between two concepts of value. The first, sometimes termed “cached value” (McDannald et al., 2014), drives relatively simple learning processes such as those described in classic behaviorism. The resulting behaviors, referred to as “habitual”, are well accounted for by mathematical models of reinforcement learning that define the “value” of a state as the total amount of reward the agent can expect to accumulate starting from that state (Rescorla and Wagner, 1972; Sutton and Barto, 1998). Importantly, cached values are learned as such and are thus fixed. Hence, cached values cannot account for flexible behavior such as that observed in reinforcer devaluation experiments. In these experiments, subjects initially learn to perform a task to obtain a particular reward (e.g., a particular food). Prior to testing, experimental subjects undergo a devaluation procedure, for example through selective satiation on that food. As a result, during testing, the performance of experimental subjects is degraded compared to that of control subjects (Balleine and Dickinson, 1998; Colwill and Rescorla, 1985). The drop in performance following reinforcer devaluation implies that subjects compute the value of the reward on the fly at the time of testing. This second kind of value is not learned as such; it depends on the environmental conditions, including the motivational state of the animal. Behaviors affected by reinforcer devaluation are referred to as “goal-directed” (Balleine and Dickinson, 1998; Daw et al., 2005; McDannald et al., 2014).
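The contrast between the two notions of value can be made concrete with a toy sketch. It assumes, for simplicity, a Rescorla-Wagner delta rule that learns the expected immediate reward (a one-step special case of the cumulative value defined in reinforcement learning) and a hypothetical goal-directed value equal to the outcome magnitude times the current motivational state. The function names and numbers are illustrative, not taken from the studies cited.

```python
def learn_cached_value(rewards, alpha=0.2, v0=0.0):
    """Rescorla-Wagner delta rule: V <- V + alpha * (r - V), applied trial by trial."""
    v = v0
    for r in rewards:
        v += alpha * (r - v)  # the prediction error (r - V) drives learning
    return v


def goal_directed_value(outcome_magnitude, motivation):
    """Hypothetical on-the-fly value: outcome size scaled by the current motivational state."""
    return outcome_magnitude * motivation


# Training: a food reward of magnitude 1.0 is delivered on each of 50 trials while hungry.
cached = learn_cached_value([1.0] * 50)

# Devaluation by selective satiation (assumed to cut motivation for this food to 0.1).
# No further rewarded trials occur before testing, so the cached value is not updated.
hungry_value = goal_directed_value(1.0, motivation=1.0)
sated_value = goal_directed_value(1.0, motivation=0.1)

print(f"cached value at test:         {cached:.3f}")
print(f"goal-directed value (hungry): {hungry_value:.3f}")
print(f"goal-directed value (sated):  {sated_value:.3f}")
```

In this sketch the cached value stays near its trained level after satiation, whereas the goal-directed value drops immediately, which is the behavioral signature that devaluation experiments exploit.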

The notion of value in neuroeconomics is rooted in economic concepts and is closely related to that defined for goal-directed behaviors. The focus of neuroeconomics is choice behavior (not learning). While a large number of natural behaviors can be construed as entailing a choice, scholars in neuroeconomics generally restrict the domain of interest to a class of choices defined somewhat intuitively. Specifically, it is generally understood that economic choices depend on subjective preferences (i.e., there is no intrinsically correct choice) and normally require some trade-off between desirable dimensions (e.g., quantity and probability). Furthermore, it is generally understood that economic choices entail two distinct mental processes: values are first assigned to the available options (offers) and a decision is then made by comparing these values (Kable and Glimcher, 2009; Padoa-Schioppa, 2007; Rangel et al., 2008). With these premises, neuroeconomics experiments typically let subjects choose between different goods. In many experiments, the two offers vary along two dimensions. An operational measure for the relative subjective value of the goods is derived from the observed choice pattern, and specifically from the indifference point. For example, if a subject offered one apple versus two bananas chooses either good equally often, it can be said (assuming linearity) that the value of the apple is twice the value of the banana. This measure of value is then used to interpret neural activity. Neuroeconomics studies typically define variables that neurons or neural populations might conceivably encode, such as the value of individual offers (offer value), the value of the chosen offer (chosen value), the value of the other offer (other value), the value difference (chosen value minus other value), etc. These variables are used as regressors. If a particular value variable explains the neural activity better than other variables (including non-value variables), it can be concluded that the neural activity “encodes” or “represents” that value variable (Kable and Glimcher, 2007; Padoa-Schioppa and Assad, 2006; Plassmann et al., 2007). (For further discussion, see Padoa-Schioppa (2011).)
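The indifference-point logic and the construction of value regressors can be sketched in Python with NumPy. The sketch assumes a logistic choice function of the log quantity ratio, P(choose B) = 1/(1 + exp(-(a0 + a1 log(#B/#A)))), fitted by Newton-Raphson on aggregated choice counts. The relative value ρ (1A = ρB) is then the ratio #B/#A at which P = 0.5, i.e. exp(-a0/a1). The fitting procedure, the function names and the synthetic data are illustrative assumptions, not the exact analysis of any cited study.

```python
import numpy as np


def fit_relative_value(q_a, q_b, n_trials, n_chose_b, n_iter=50):
    """Fit P(choose B) = 1 / (1 + exp(-(a0 + a1 * log(qB/qA)))) by Newton-Raphson
    on binomial counts and return rho = exp(-a0 / a1), i.e. 1A = rho B."""
    x = np.log(np.asarray(q_b, float) / np.asarray(q_a, float))
    X = np.column_stack([np.ones_like(x), x])
    n = np.asarray(n_trials, float)
    k = np.asarray(n_chose_b, float)
    beta = np.zeros(2)
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        grad = X.T @ (k - n * p)                       # score of the log-likelihood
        hess = X.T @ (X * (n * p * (1.0 - p))[:, None])  # Fisher information
        beta += np.linalg.solve(hess, grad)            # Newton step
    a0, a1 = beta
    return np.exp(-a0 / a1)  # quantity ratio #B/#A at the indifference point


def value_regressors(q_a, q_b, chose_a, rho):
    """Trial-wise value variables, all expressed in units of juice B."""
    ova, ovb = rho * np.asarray(q_a, float), np.asarray(q_b, float)
    chose_a = np.asarray(chose_a, bool)
    chosen = np.where(chose_a, ova, ovb)
    other = np.where(chose_a, ovb, ova)
    return {"offer_value_A": ova, "offer_value_B": ovb, "chosen_value": chosen,
            "other_value": other, "value_difference": chosen - other}


# Synthetic offer types (#A, #B) with expected choice counts generated from rho = 2.
rho_true, slope = 2.0, 3.0
q_a = np.array([1, 1, 1, 1, 1, 2])
q_b = np.array([1, 2, 3, 4, 6, 3])
n = np.full(6, 1000.0)
p_true = 1.0 / (1.0 + np.exp(-slope * (np.log(q_b / q_a) - np.log(rho_true))))
rho_hat = fit_relative_value(q_a, q_b, n, n * p_true)
print(f"estimated relative value: 1A = {rho_hat:.3f}B")
```

Working on the log ratio makes the indifference point a single parameter combination, so ρ falls directly out of the two fitted coefficients; the regressors then express every value variable in a common currency (units of juice B).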

The relation between the notions of value in neuroeconomics and in goal-directed behavior emerges from an additional, and crucial, layer of analysis. If a neuron or a brain area really encodes economic value, then its activity should reflect the subjective nature of value. In particular, subjective values generally vary depending on the environmental conditions, including the motivational state of the agent. This variability should also be present in the neural signal. Hence the most compelling evidence for a neural representation of subjective values comes from studies that derived a neural measure for value and showed that the neural measure and the behavioral measure co-varied (Kable and Glimcher, 2007; Padoa-Schioppa and Assad, 2006; Raghuraman and Padoa-Schioppa, 2014; Valentin et al., 2007). This condition, namely the identity between neural and behavioral measures of value in the face of individual and contextual variability, highlights the close relation between values driving economic choices and values driving goal-directed behaviors. (For further discussion on this issue, see O’Doherty (2014) and Padoa-Schioppa and Schoenbaum (2015).)
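Analogously to the behavioral measure, a neural measure of relative value can be derived from a single cell. The minimal sketch below assumes one chosen value cell with linear tuning and searches a grid of candidate ρ values for the one under which chosen value best explains the firing rate (maximal R²); comparing this neural ρ with the behavioral ρ is the kind of co-variation test described above. The grid search, the function names and the noiseless synthetic data are hypothetical.

```python
import numpy as np


def chosen_value(q_a, q_b, chose_a, rho):
    """Chosen value in units of juice B, given a candidate relative value rho (1A = rho B)."""
    return np.where(chose_a, rho * q_a, q_b)


def neural_relative_value(q_a, q_b, chose_a, rates, rho_grid):
    """Return the candidate rho for which chosen value best explains the firing rates."""
    r2 = [np.corrcoef(chosen_value(q_a, q_b, chose_a, rho), rates)[0, 1] ** 2
          for rho in rho_grid]
    return rho_grid[int(np.argmax(r2))]


# Synthetic session: every combination of 1 to 3 units of A and 1 to 10 units of B.
q_a, q_b = np.meshgrid([1, 2, 3], [1, 2, 3, 4, 6, 8, 10])
q_a, q_b = q_a.ravel().astype(float), q_b.ravel().astype(float)

rho_behavior = 2.5                                  # relative value inferred from choices
chose_a = rho_behavior * q_a > q_b                  # deterministic choices, for simplicity
rates = 2.0 + 5.0 * chosen_value(q_a, q_b, chose_a, rho_behavior)  # noiseless cell

rho_grid = np.linspace(1.0, 4.0, 301)
rho_neural = neural_relative_value(q_a, q_b, chose_a, rates, rho_grid)
print(f"behavioral rho = {rho_behavior}, neural rho = {rho_neural:.2f}")
```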

Anatomy and lesion studies

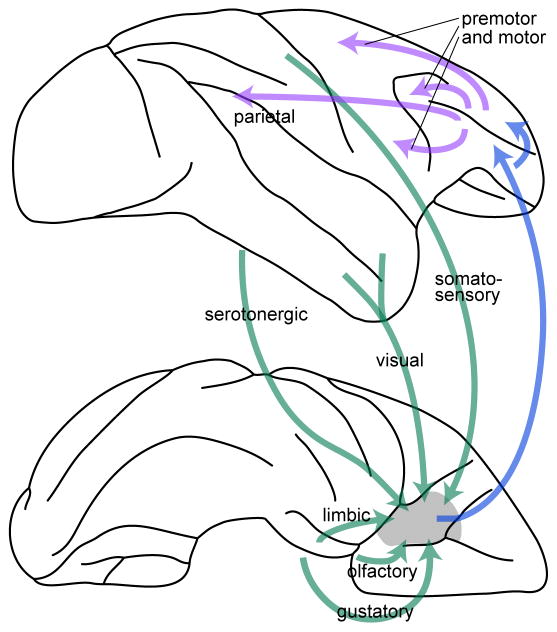

A clear link between economic choices and the OFC has historically been established by anatomy and lesion studies. The orbital surface of the frontal lobe includes a network of distinct but densely interconnected areas termed the “orbital network”. In this article, “OFC” refers to the central part of the orbital network, namely areas 13 m/l and 11l (Ongur and Price, 2000). Anatomically, OFC receives input from visual, somatosensory, olfactory and gustatory regions, from limbic regions, and from the dorsal raphe (Ongur and Price, 2000; Way et al., 2007). This pattern of connectivity seems ideally suited to compute subjective values, which require integrating sensory and motivational signals. Concurrently, OFC sends output to the lateral prefrontal cortex (Petrides and Pandya, 2006; Saleem et al., 2013), which projects widely to motor and premotor areas (Lu et al., 1994; Takada et al., 2004; Takahara et al., 2012). Thus OFC can influence a variety of mental functions including action planning and execution (Figure 1).

Figure 1.

Long-distance anatomical connections underlying economic choice behavior. Top and bottom panels represent the lateral and ventral views of a monkey brain, and the front of the brain is on the right. The figure summarizes the anatomical connections deemed the most relevant to the formation and implementation of economic decisions. Subjective values are computed by integrating input from sensory regions and limbic regions. Value comparison (the decision) takes place in OFC, where goods and values are represented independently of the spatial contingencies of the choice task (good-based representation). Ultimately, many decisions lead to some action. OFC projects to the lateral prefrontal cortex (LPFC), where neurons reflect a good-to-action transformation. In turn, LPFC projects to a variety of premotor and motor regions, where suitable movements are planned and controlled. The figure does not show other anatomical connections through which value signals computed in OFC likely inform other mental functions such as autonomic responses (connections with the medial network), emotion (projections to the amygdala), associative learning (projections to dopamine cells), perceptual attention (reciprocal projections to sensory regions), etc.

Starting with the classic case of Phineas Gage (Damasio et al., 1994), an extensive literature found that OFC dysfunction in human patients is associated with choice deficits in various domains (Cavedini et al., 2006; Heyman, 2009; Hodges, 2001; Rahman et al., 1999; Strauss et al., 2014; Volkow and Li, 2004). Notably, deficits following OFC lesions include increased violations of preference transitivity (Camille et al., 2011; Fellows and Farah, 2007). In non-human primates and rodents, numerous studies found that OFC lesions impaired performance in goal-directed behaviors. More specifically, the effects of reinforcer devaluation were significantly reduced following OFC lesions (Gallagher et al., 1999; Gremel and Costa, 2013; Izquierdo et al., 2004; West et al., 2011). These results indicate that, absent the OFC, animals fail to compute subjective values on the fly.

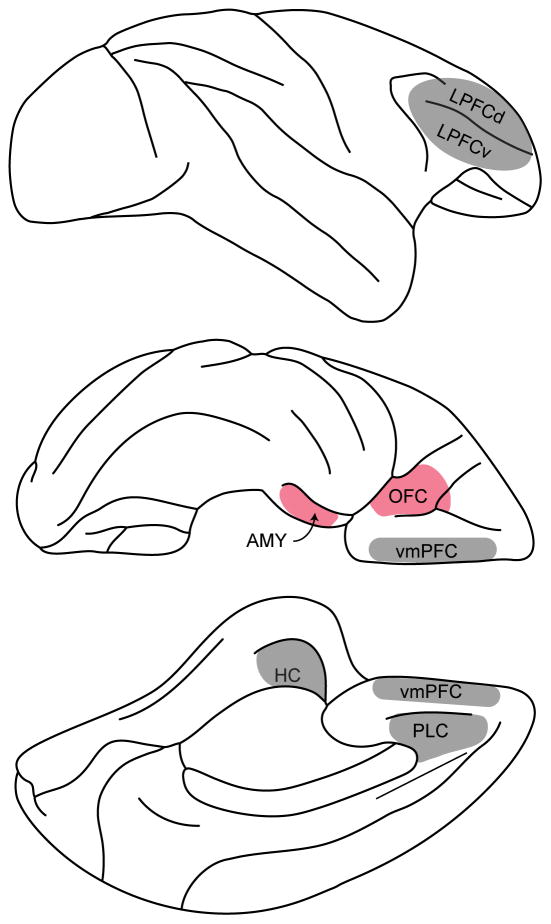

Building on this background, lesion studies conducted in recent years have shed light on two key points. First, earlier work had not clarified whether the area most relevant to value computation is OFC proper or neighboring vmPFC. More recently, however, it was shown that goal-directed behavior is specifically impaired after lesions of OFC (Rudebeck and Murray, 2011) or the amygdala (Baxter et al., 2000; Wellman et al., 2005; West et al., 2012). In contrast, goal-directed behavior is not affected by lesions of vmPFC (area 14) (Rudebeck and Murray, 2011), lateral prefrontal cortex (Baxter et al., 2008, 2009), prelimbic cortex (Rhodes and Murray, 2013), or the hippocampus (Chudasama et al., 2008) (Figure 2). Second, earlier work had also indicated that OFC lesions disrupt performance in reversal learning tasks (McDannald et al., 2014), which seem conceptually different from value-based behaviors. However, recent studies using excitotoxic agents (as opposed to aspiration) found that OFC lesions alone do not affect reversal learning, and that the performance drops observed previously were likely due to aspiration lesions damaging fibers of passage (white matter) located above the OFC (Rudebeck et al., 2013).

Figure 2.

Effects of brain lesions on goal-directed behavior. Performance in reinforcer devaluation tasks is disrupted following lesions of orbitofrontal cortex (OFC, area 13/11) and/or amygdala (AMY). In contrast, goal-directed behavior is not affected by lesions of ventromedial prefrontal cortex (vmPFC, area 14), lateral prefrontal cortex (LPFCd/v, area 46d/v), prelimbic cortex (PLC, area 32) or the hippocampus (HC). Top, center and bottom panels represent the lateral, ventral and medial views of a monkey brain, respectively.

In summary, lesion studies indicate that OFC and the amygdala are the only regions strictly necessary for goal-directed behavior. At the same time, lesion studies do not clarify whether economic decisions (i.e., value comparisons) take place in either of these areas.

The representation of goods and values in OFC: flexible but stable

This section summarizes current notions on the neuronal encoding of goods and values in OFC, including ways in which this representation adapts, and does not adapt, to the behavioral context of choice.

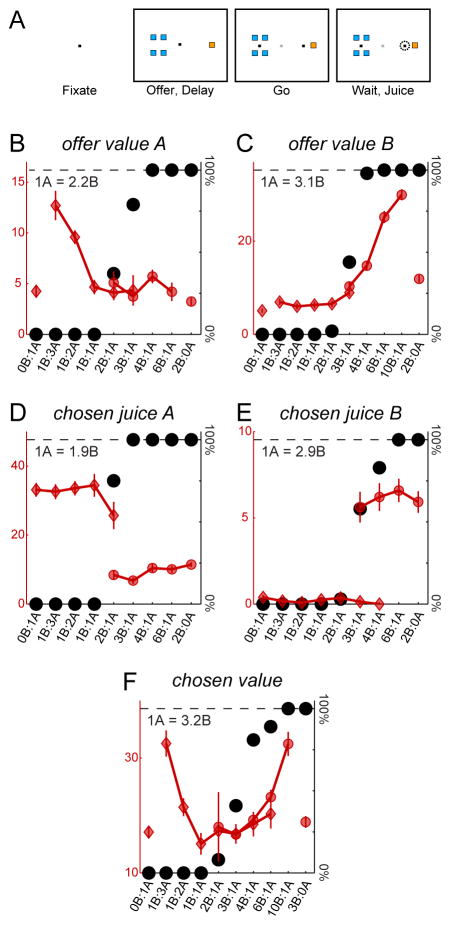

Early neurophysiology work on the primate OFC had found neurons responding to the delivery of particular foods or juices in a way that depended on the motivational state of the animal or on the behavioral context (Rolls et al., 1989; Thorpe et al., 1983; Tremblay and Schultz, 1999). Other experiments found that the activity of the same neurons was modulated both by the quantity of juice and by the delay, in a way qualitatively consistent with time-discounted values (Roesch and Olson, 2005). Along similar lines, studies in other brain regions had found neuronal activity modulated by the type, quantity or probability of rewards (Barraclough et al., 2004; Kawagoe et al., 1998; McCoy and Platt, 2005; Platt and Glimcher, 1999). The first clear evidence for neurons encoding subjective values came from a study in which monkeys chose between different juices offered in variable amounts (Padoa-Schioppa and Assad, 2006). Choice patterns presented a quality/quantity trade-off, and the relative value of the two juices was inferred from the indifference point. The study identified three groups of neurons in the OFC: offer value cells encoding the value of one of the two juices, chosen juice cells encoding the binary choice outcome, and chosen value cells encoding the value of the chosen offer (Figure 3). This neuronal representation was found to be “good-based”, meaning that individual neurons were associated with different juice types and firing rates did not depend on the spatial contingencies of the choice task. Subsequent work built on these seminal findings.

Figure 3.

Cell groups in orbitofrontal cortex. A. Task design. In each session, monkeys chose between two juices (labeled A and B, with A preferred) offered in variable amounts. Offers were represented by colored squares on a computer monitor and monkeys indicated their choice with a saccade. B–F. Cell groups. The five panels represent the activity of five neurons (recorded in different sessions). In each panel, different offer types are ranked on the x-axis by the ratio #B/#A, where #X is the quantity of juice X offered to the animal. Black dots represent the percentage of trials in which the animal chose juice B (the choice pattern). A sigmoid fit provided a measure for the relative value of the juices. Red symbols represent the neuronal activity, with diamonds and circles indicating trials in which the animal chose juice A and juice B, respectively. Neurons in the top row encode (B) the offer value A and (C) the offer value B. These variables capture the input of the decision process. Neurons in the bottom row encode (D) the chosen juice A, (E) the chosen juice B and (F) the chosen value. These variables capture the decision outcome. The fact that different neurons in OFC encode the input and the output of the decision suggests that economic decisions may be generated within a neural circuit formed by these groups of cells. Adapted from Padoa-Schioppa and Assad (2006) and Padoa-Schioppa (2013) with permission.

As one contemplates economic choices made in different behavioral contexts, it becomes apparent that the neural circuit underlying this behavior must satisfy two competing demands. On the one hand, the same subject might be faced with a potentially infinite variety of goods at different times, with values varying by many orders of magnitude. Hence, the neural circuit underlying economic choices must be flexible and adapt to the current circumstances. On the other hand, moving from one context to another, preferences should be (somewhat) consistent. Furthermore, the architecture of the neural circuit cannot change arbitrarily on a short time scale. Hence, the neural circuit underlying economic choices must be stable in the face of contextual variability. A number of studies in the past few years revealed several ways in which the neuronal representation of goods and values in OFC meets these two demands.

Two traits of offer value cells, menu invariance and range adaptation, make these neurons particularly well suited to support economic choices. Menu invariance is the property of neurons encoding the value of a particular good independently of the identity or value of the other good offered as the alternative. It was observed in a study where monkeys chose between three juices (A, B, C) offered pairwise. Trials with the three juice pairs (A:B, B:C, C:A) were interleaved. The activity of OFC neurons associated with a particular juice (e.g., offer value B cells) did not depend on the other juice offered concurrently (Padoa-Schioppa and Assad, 2008). Menu invariance is closely related to a fundamental property of economic behavior, namely preference transitivity. By definition, preferences are transitive if for any three goods A, B and C, A>B and B>C imply A>C, where “>” means “is preferred to”. To appreciate the importance of preference transitivity, consider an individual who initially owns C and pays $1 to get B, then pays $1 to get A once he has B, then pays $1 to get C once he has A. After the last transaction, the individual owns C as he did initially, but has lost $3 in the process. Furthermore, the individual could lose any amount of money if he continued to repeat this catastrophic sequence of choices. Hence, a decision circuit that ensures preference transitivity fulfills a fundamental ecological demand. Importantly, if the value assigned to a good does not depend on the good offered as the alternative, preferences are necessarily transitive (Grace, 1993; Tversky and Simonson, 1993). Thus if economic decisions are based on the activity of offer value cells in OFC, preference transitivity follows from the fact that this representation is menu invariant.
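The link between menu invariance and transitivity, and the money pump that intransitive preferences expose, can be checked with a small sketch. The two preference rules below are hypothetical: one assigns each good a fixed value, the other lets a good's value depend on the alternative it is paired with. The function names and numbers are illustrative only.

```python
from itertools import permutations


def is_transitive(prefers, goods):
    """prefers(x, y) is True if x is chosen over y; check every ordered triple."""
    for a, b, c in permutations(goods, 3):
        if prefers(a, b) and prefers(b, c) and not prefers(a, c):
            return False
    return True


def money_pump(prefers, start, goods, n_trades, fee=1):
    """Repeatedly pay `fee` dollars to swap the owned good for a preferred one."""
    owned, spent = start, 0
    for _ in range(n_trades):
        better = [g for g in goods if g != owned and prefers(g, owned)]
        if not better:
            break  # nothing is preferred to the owned good: the pump stops
        owned, spent = better[0], spent + fee
    return owned, spent


# Menu-invariant: each good has a fixed value, whatever the alternative.
fixed_value = {"A": 3.0, "B": 2.0, "C": 1.0}


def menu_invariant(x, y):
    return fixed_value[x] > fixed_value[y]


# Menu-dependent (hypothetical): the value of x depends on the alternative y.
value_given_alternative = {("A", "B"): 3, ("B", "A"): 2,
                           ("B", "C"): 3, ("C", "B"): 2,
                           ("C", "A"): 3, ("A", "C"): 2}


def menu_dependent(x, y):
    return value_given_alternative[(x, y)] > value_given_alternative[(y, x)]


print(is_transitive(menu_invariant, "ABC"))   # True
print(is_transitive(menu_dependent, "ABC"))   # False: A>B and B>C, yet C>A
print(money_pump(menu_dependent, "C", "ABC", n_trades=3))  # ('C', 3): back to C, $3 lost
print(money_pump(menu_invariant, "C", "ABC", n_trades=3))  # ('A', 1): trading stops at A
```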

Range adaptation refers to the fact that the gain of value-encoding cells is inversely related to the range of values contextually available (Cox and Kable, 2014; Kobayashi et al., 2010; Padoa-Schioppa, 2009; Saez et al., 2017). Neuronal adaptation is a ubiquitous phenomenon observed in sensory, cognitive and motor regions. In OFC, range adaptation was observed in the juice choice experiments described above (Figure 3). In each session, the value offered for each juice varied from trial to trial within a fixed range, and value ranges varied across sessions. The tuning of offer value and chosen value cells was always linear. However, a population analysis revealed that tuning slopes were inversely proportional to the range of values available in any given session. Thus the same range of firing rates represented different value ranges in different sessions (Padoa-Schioppa, 2009). Prima facie, range adaptation seems to provide an efficient neuronal representation. However, uncorrected adaptation in offer value cells would induce arbitrary choice biases (Padoa-Schioppa and Rustichini, 2014). Subsequent experiments indicated that the decision circuit corrects for the effects of range adaptation, raising the question of whether adaptation is at all advantageous to the decision (Rustichini et al., in press). To address this fundamental question, Rustichini et al. (in press) recently developed a new theory of optimal coding in economic decisions. The core idea is that the representation of offer values is optimal if it ensures maximal expected payoff. Their study shows that, for linear tuning functions, corrected range adaptation is indeed optimal. Interestingly, linearity in itself was not optimal given the sets of offers presented in the experiments, indicating that linearity is a rigid, non-adapting property of offer value coding. However, the study also showed that the benefits of range adaptation outweigh the cost of functional rigidity. In other words, a linear but range-adapting representation of offer values ensures close-to-optimal behavioral performance.
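Range adaptation, and the bias it would induce if left uncorrected, can be illustrated with a toy calculation. The sketch assumes value ranges that start at zero, linear tuning whose full firing range r_max spans each good's value range, and a hypothetical correction in which read-out weights scale with the value range (loosely, as if synaptic efficacies compensated for the adapted gain). It is not the optimal-coding model of Rustichini et al.

```python
def adapted_rate(value, value_max, r_max=30.0):
    """Linear, range-adapted tuning: firing rates 0..r_max span values 0..value_max,
    so the tuning slope r_max / value_max is inversely proportional to the value range."""
    return r_max * value / value_max


def choose_uncorrected(v_a, v_b, range_a, range_b, r_max=30.0):
    # Compares raw firing rates, so the good with the narrower range is favored.
    rate_a = adapted_rate(v_a, range_a, r_max)
    rate_b = adapted_rate(v_b, range_b, r_max)
    return "A" if rate_a > rate_b else "B"


def choose_corrected(v_a, v_b, range_a, range_b, r_max=30.0):
    # Hypothetical correction: read-out weights proportional to each value range
    # undo the adapted gain, so the comparison is again made on values.
    rate_a = adapted_rate(v_a, range_a, r_max) * range_a / r_max
    rate_b = adapted_rate(v_b, range_b, r_max) * range_b / r_max
    return "A" if rate_a > rate_b else "B"


# The same firing rate (30 spikes/s) encodes value 10 in one context and value 4 in another.
print(adapted_rate(10, value_max=10), adapted_rate(4, value_max=4))  # prints 30.0 30.0

# Offer A (value 5, range 0-10) vs offer B (value 3, range 0-4), in units of juice B.
print(choose_uncorrected(5, 3, range_a=10, range_b=4))  # prints B: arbitrary bias against A
print(choose_corrected(5, 3, range_a=10, range_b=4))    # prints A: the higher-value offer
```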

In addition to menu invariance and range adaptation, there are other ways in which the representation of goods and values in OFC adapts, and does not adapt, to the behavioral context of choice. First, as noted above, choices may involve a large variety of different goods. To examine how the neuronal representation in OFC adjusts to this aspect of context variability, a recent study let monkeys choose between different pairs of juices in two blocks of trials (A:B, C:D design). The functional role of each neuron (offer value, chosen juice, chosen value) was assessed separately in each trial block. Neurons encoding the identity or the subjective value of particular goods in a given context “remapped” and became associated with different goods when the context changed. Concurrently, the functional role of individual cells and the overall organization of the decision circuit remained stable across contexts. In other words, offer value cells remained offer value cells, and two neurons supporting the same choice in one context also supported the same choice in different contexts (Xie and Padoa-Schioppa, 2016).

Second, it has often been noted that neurons in the OFC represent options and values in a good-based reference frame (Grattan and Glimcher, 2014; Padoa-Schioppa and Assad, 2006; Roesch and Olson, 2005). For example, neurons in the juice choice study described above (Figure 3) were associated with different juice types. Possible alternative reference frames included those in which cells are associated with different locations in space (location-based), with different actions (action-based), or with different numbers (number-based). All of these representations would have been equally valid, but for reasons that are not well understood the actual reference frame was good-based. However, the reference frame in which OFC neurons represent options and values might in fact be flexible. Such a possibility first emerged from studies in which options were defined spatially and neuronal responses appeared to be spatial in nature (Abe and Lee, 2011; Tsujimoto et al., 2009; for discussion, see Padoa-Schioppa and Cai, 2011). More recently, Blanchard et al. (2015) used a choice task in which animals traded off some amount of juice to obtain earlier information about the trial outcome. Options were presented sequentially, and the authors analyzed data in an order-based reference frame. Yet, some aspects of their data suggest that the neuronal representation might have been information-based (or color-based). (This scenario would partly explain their negative results on value coding.) In any case, the study points to the possibility that reference frames are flexible.

Neuronal remapping and changes of reference frames can be thought of as “discrete” forms of context adaptation (in contrast, range adaptation is “continuous”). The presence of such discrete forms of adaptation resonates with a recent proposal in learning theory, according to which OFC plays a role in building a representation of task “states”. This representation, or cognitive map, would support the actual learning, which is thought to take place in other brain regions. By this account, OFC would be especially important when task states are only partly observable, as is the case in reinforcer devaluation experiments (Lopatina et al., 2017; Schuck et al., 2016; Wilson et al., 2014). The notion of state in learning theory is germane to that of reference frame discussed here in the sense that adopting a particular frame of reference to describe an economic choice is analogous to adopting a particular set of states to describe a learning task. In this respect, the experimental results described above support the cognitive map hypothesis. At the same time, the role played by OFC in economic choice seems broader than that discussed in relation to task states (Wilson et al., 2014). According to the present proposal, neurons in OFC do not merely build a reference frame to represent goods and values; different groups of cells in OFC, organized in a neural circuit, execute a decision process that takes as input the values of individual offers and returns the choice outcome. The next three sections describe the evidence supporting this view.

A neural circuit for economic decisions

The proposal that good-based decisions (i.e., value comparisons) take place within OFC originates from a simple observation: the three groups of neurons identified in this area (Figure 3) capture both the input (offer value) and the output (chosen juice, chosen value) of the decision process. This fact suggests that these groups of cells form a neural circuit in which decisions are generated. This working hypothesis has motivated a substantial research effort in the past few years. This and the following two sections review the most notable results emerging from this work.

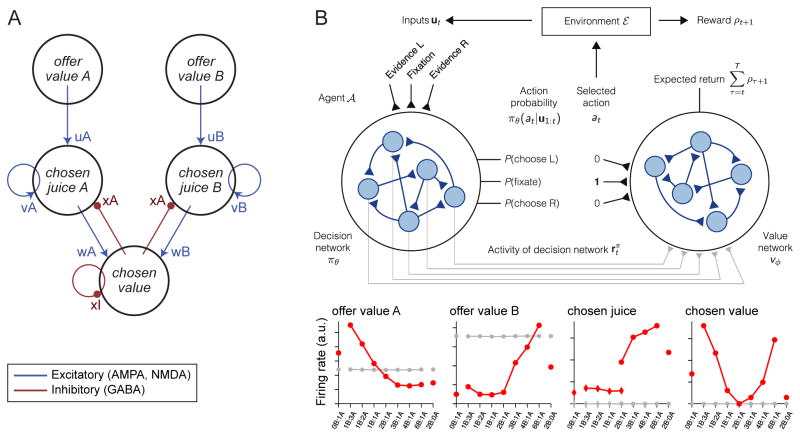

An important proof of concept supporting the idea that good-based decisions are generated within the OFC came from computational modeling. Specifically, Rustichini and Padoa-Schioppa (2015) showed that a biophysically realistic neural network composed of the three groups of cells identified in OFC can generate binary economic decisions (Figure 4A). The model was adapted from a neural network previously used to describe the activity of parietal neurons during motion perception (Wang, 2002; Wong and Wang, 2006). The model is biophysically realistic in the sense that neurons are either excitatory or inhibitory and all the parameters (synaptic weights, time constants, etc.) have values derived from or compatible with experimental measures (Brunel and Wang, 2001). Remarkably, in addition to recapitulating the groups of cells identified in OFC, the model reproduces various experimental observations, including the behavioral phenomenon of choice hysteresis, the “predictive activity” of chosen juice cells, and the “activity overshooting” of chosen value cells (see below). Corroborating results were also obtained using a reduced version of the model (Hunt et al., 2012; Jocham et al., 2012).
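To give a flavor of how such attractor dynamics turn offer values into a binary choice, the sketch below simulates the two-variable reduction of the Wang (2002) network described by Wong and Wang (2006), with parameter values commonly used for that reduced model. It is not the OFC model of Rustichini and Padoa-Schioppa (2015): the mapping from offer values to input currents (linear, range-adapted offer value cells firing up to a hypothetical r_max, coupled through J_EXT) and the read-out (whichever gating variable is larger at the end of the trial) are simplifying assumptions.

```python
import numpy as np

# Parameter values of the two-variable reduced model (Wong and Wang, 2006).
A_GAIN, B_THR, D_CURV = 270.0, 108.0, 0.154  # f-I curve: Hz/nA, Hz, s
TAU_S, GAMMA = 0.100, 0.641                  # NMDA gating time constant (s), kinetic factor
J_SELF, J_CROSS = 0.2609, 0.0497             # effective self-excitation, cross-inhibition (nA)
I_0, J_EXT = 0.3255, 5.2e-4                  # background current (nA), input coupling (nA/Hz)
TAU_NOISE, SIGMA_NOISE = 0.002, 0.02         # noise time constant (s), noise amplitude (nA)


def transfer(x):
    """Population firing rate (Hz) as a function of total synaptic input x (nA)."""
    y = A_GAIN * x - B_THR
    return y / (1.0 - np.exp(-D_CURV * y))


def fraction_chose_a(v_a, v_b, v_max, n_trials=100, r_max=50.0, t_max=1.5, dt=5e-4, seed=0):
    """Fraction of simulated trials won by pool A (offer A chosen).

    Hypothetical input: offer value cells fire r_max * v / v_max (linear, range-adapted)
    and drive the corresponding selective pool through J_EXT.
    """
    rng = np.random.default_rng(seed)
    drive = J_EXT * r_max * np.array([v_a, v_b]) / v_max  # external currents (nA)
    s = np.full((n_trials, 2), 0.1)                       # gating variables S_A, S_B
    noise = np.zeros((n_trials, 2))
    for _ in range(int(t_max / dt)):
        x_a = J_SELF * s[:, 0] - J_CROSS * s[:, 1] + I_0 + drive[0] + noise[:, 0]
        x_b = J_SELF * s[:, 1] - J_CROSS * s[:, 0] + I_0 + drive[1] + noise[:, 1]
        rates = transfer(np.column_stack([x_a, x_b]))
        s += dt * (-s / TAU_S + (1.0 - s) * GAMMA * rates)  # Euler step of gating dynamics
        # Ornstein-Uhlenbeck background noise (Euler-Maruyama step)
        noise += (-noise * dt / TAU_NOISE
                  + SIGMA_NOISE * np.sqrt(dt / TAU_NOISE) * rng.standard_normal(noise.shape))
    return float(np.mean(s[:, 0] > s[:, 1]))


# Offer values in units of juice B; v_max is the largest value available in the session.
print(fraction_chose_a(3.0, 1.0, v_max=3.0))  # offer A worth three times offer B
print(fraction_chose_a(1.0, 3.0, v_max=3.0))  # offer B worth three times offer A
```

Recurrent self-excitation within each pool, combined with effective mutual inhibition, makes the symmetric state unstable, so small differences in offer value inputs (plus noise) are amplified into a categorical winner.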

Figure 4.

Neuro-computational models of economic decisions. A. Biophysically realistic model based on pooled inhibition and recurrent excitation. In its extended form, the network includes 2,000 spiking neurons (Wang, 2002). The model is biophysically realistic in the sense that neurons are either excitatory (80% of cells) or inhibitory (20% of cells) and all the parameters (synaptic weights, time constants, etc.) have values derived from or compatible with experimental measures (Brunel and Wang, 2001). A mean-field approximation reduces the network to a dynamic system of 11 variables (Wong and Wang, 2006). The model recapitulates the groups of cells identified in OFC and reproduces second-order phenomena such as choice hysteresis, the “predictive activity” of chosen juice cells and the “activity overshooting” of chosen value cells (Rustichini and Padoa-Schioppa, 2015). B. Recurrent neural network (Song et al., 2017). The network includes two modules organized in an actor-critic architecture, namely a decision module trained to select actions that maximize rewards and a value module that predicts future rewards and guides learning. The authors trained the network to perform binary economic choices providing teaching signals similar to the choice patterns observed behaviorally (Figure 3). After training, the activity of units in the value module recapitulated the three groups of cells identified in OFC. Reproduced from Song et al. (2017) with permission.

The study described above, in which monkeys chose between different juice pairs in subsequent trial blocks (Xie and Padoa-Schioppa, 2016), provided empirical support for a neural decision circuit within OFC. As already noted, neurons maintained their functional role but became associated with one of the juices available in each behavioral context (remapping). Perhaps most importantly, the composition of neuronal pools persisted across trial blocks. In other words, two neurons supporting the same (opposite) choice in one block also supported the same (opposite) choice in the other block. These observations support the view that different groups of neurons in OFC form a stable decision circuit.

Neuronal fluctuations and choice variability

The most important lines of evidence linking the activity of neurons in OFC to economic decisions come from the analysis of firing rates in relation to choice variability. Consider, in a juice choice task, offer types for which choices are split (e.g., 3B:1A in Figure 3C). What determines which juice the monkey chooses on a given trial? Several phenomena link the choice made by the animal to trial-by-trial fluctuations in the activity of specific groups of cells.

First, taking an approach frequently used for perceptual decisions (Britten et al., 1992), the relation between fluctuations in the activity of offer value cells and the choice outcome can be quantified with an ROC analysis, which returns a “choice probability” (CP). In essence, CP is the probability with which an ideal observer could correctly infer the choice outcome from the activity of a single neuron; a CP of 0.5 indicates no relation between activity and choice. Interestingly, the CPs of offer value cells were found to be rather small (Figure 5A–C) (Conen and Padoa-Schioppa, 2015; Padoa-Schioppa, 2013) and substantially lower than the CPs typically measured for sensory neurons during perceptual decisions (Britten et al., 1996; Britten et al., 1992; Cohen and Newsome, 2009; Liu et al., 2013; Nienborg and Cumming, 2006, 2014; Romo et al., 2002). Importantly, the CP of any given neuron reflects not only the cell’s contribution to the choice (read-out weight), but also the structure and intensity of correlated variability across the neuronal population (noise correlation) (Haefner et al., 2013; Shadlen et al., 1996). Thus, a follow-up study examined correlations in neuronal variability in OFC (Conen and Padoa-Schioppa, 2015). It was found that noise correlations in this area are much lower than those typically measured in sensory areas during perceptual decisions (Cohen and Kohn, 2011; Nienborg and Cumming, 2006; Smith and Kohn, 2008; Smith and Sommer, 2013; Zohary et al., 1994; but see Ecker et al., 2010; Ecker et al., 2014). Furthermore, computer simulations showed that the noise correlations measured in OFC, combined with a plausible read-out of offer value cells, reproduce the experimental measures of CPs. In other words, measures of noise correlations and measures of CPs taken together support the hypothesis that economic decisions are primarily based on the activity of offer value cells (Conen and Padoa-Schioppa, 2015).
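
As a minimal sketch of the ROC computation, CP for one neuron and one split offer type can be computed as the area under the ROC curve: the probability that a firing rate drawn from trials in which the animal chose the encoded juice (E) exceeds one drawn from trials in which it chose the other juice (O), with ties counted as one half. The function name and firing rates below are hypothetical; pooling CPs across offer types usually also requires normalizing (e.g., z-scoring) rates within each condition, which is not shown.

```python
def choice_probability(rates_chose_e, rates_chose_o):
    """ROC area: probability that a response from choice-E trials exceeds one
    from choice-O trials (ties count as one half)."""
    if not rates_chose_e or not rates_chose_o:
        raise ValueError("need at least one trial for each choice outcome")
    wins = 0.0
    for a in rates_chose_e:
        for b in rates_chose_o:
            if a > b:
                wins += 1.0
            elif a == b:
                wins += 0.5
    return wins / (len(rates_chose_e) * len(rates_chose_o))


# hypothetical firing rates (spikes/s) from split trials of a single offer type
chose_e = [12.0, 14.5, 11.0, 15.2, 13.3]
chose_o = [11.8, 12.9, 10.4, 13.1, 12.2]
print(f"CP = {choice_probability(chose_e, chose_o):.2f}")
```

The pairwise count is equivalent to the Mann-Whitney U statistic divided by the number of pairs; for large trial counts a rank-based implementation would be faster.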

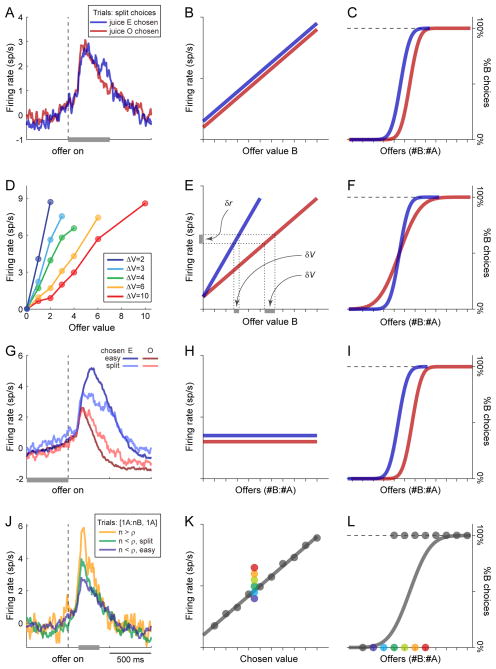

Figure 5.

Neuronal fluctuations and choice variability. The figure illustrates four phenomena relating fluctuations in neuronal activity to choice variability. Each row refers to one phenomenon. In each row, the left panel illustrates experimental data, while the cartoons in the center and right panels depict the phenomenon conceptually. In any given session, “easy” decisions are those for which the animal consistently chose the same juice; “split” decisions are those for which the animal alternated its choices between the two juices. A–C. Trial-by-trial activity fluctuations in offer value cells. Panel (A) illustrates the average baseline-subtracted activity profile of a large number of offer value cells. Only split trials were included in the figure. The two traces refer to trials in which the animal chose the juice encoded by the neurons (juice E, blue) and trials in which the animal chose the other juice (juice O, red). In the post-offer time window, the blue trace is mildly elevated compared to the red trace. Panel (B) depicts the idealized tuning of offer value B cells and panel (C) depicts two idealized choice patterns. In different trials, the neuronal activity might be slightly elevated (blue) or slightly depressed (red). This neuronal variability induces (or correlates with) a choice bias: when the activity of offer value cells is elevated, the choice pattern is displaced to the left. D–F. Range adaptation of offer value cells. In the juice choice experiments, the ranges of offer values varied from session to session. Each trace shown in panel (D) illustrates the average of a large number of offer value responses recorded with a specific value range. Panel (E) depicts the idealized tuning of offer value B cells recorded in two sessions in which the range of offer values is small (blue) or large (red). Panel (F) depicts two choice patterns presenting low (blue) and high (red) choice variability. Neuronal adaptation to larger ranges of offer values induces higher choice variability. Intuitively, this follows from the fact that neuronal firing rates are noisy. Furthermore, due to range adaptation, the same fluctuation in firing rate (δr) corresponds to a larger fluctuation in subjective value (δV) when the value range is large (red) than when it is small (blue). Finally, larger trial-by-trial fluctuations in the subjective value of any given offer induce higher choice variability. G–I. Predictive activity of chosen juice cells. Panel (G) illustrates the average baseline-subtracted activity profile of a large number of chosen juice cells. For each neuron, trials were divided into four groups depending on whether the animal chose the juice encoded by the cell (E, blue traces) or the other juice (O, red traces), and on whether decisions were easy (dark traces) or split (light traces). In split trials, the activity measured prior to the offer was correlated with the eventual decision of the animal (i.e., the light blue trace was elevated compared to the light red trace). Panel (H) depicts the idealized tuning of chosen juice B cells in the pre-offer time window. Cells are not tuned, but in different trials the neuronal activity might be slightly elevated (blue) or slightly depressed (red). This variability induces a choice bias (panel (I)). J–L. Overshooting of chosen value cells. Panel (J) illustrates the average baseline-subtracted activity profile of a large number of chosen value cells. The figure includes only trials in which the animal chose 1A (fixed chosen value). Trials were divided into three groups depending on the quantity of the other, non-chosen juice. Here “n” indicates the quantity of juice B offered and “ρ” indicates the relative value (i.e., the quantity of juice B such that the animal is indifferent between 1A and ρB). The blue, green and yellow traces refer, respectively, to trials for which n is well below ρ (easy decisions), n is just below ρ (split decisions) and n ≥ ρ. During the decision window (200–450 ms after the offer), the activity of chosen value cells presented an overshooting when n was larger (i.e., when the decision was more difficult). Panels (K) and (L) depict the idealized tuning of chosen value cells and the corresponding choice pattern. Each dot represents a trial type. In panel (L), dots on the bottom solid line are trials in which the animal chose juice A, and dots on the top dotted line are trials in which the animal chose juice B. Colored dots are trials in which the animal chose 1A over different quantities of juice B. In panel (K), the corresponding colors indicate firing rates. Panels (A), (G) and (J) are adapted from Padoa-Schioppa (2013) with permission; panel (D) is adapted from Padoa-Schioppa (2009) with permission.

Second, evidence linking activity fluctuations in offer value cells to choice variability also emerges from a recent study on optimal coding (Rustichini et al., in press). Consider the session in Figure 3B. The choice pattern may be described by a sigmoid function, and the payoff is defined as the chosen value averaged across trials. Given a set of offers, the expected payoff is an increasing function of the sigmoid steepness or, equivalently, a decreasing function of choice variability. The theory developed by Rustichini et al. (in press) links the choice outcome to the activity of offer value cells. In this construct, choice variability is due to a combination of neuronal noise, finite maximum firing rates, and non-zero value ranges. The theory describes the conditions that maximize the expected payoff, and thus makes several predictions. First, offer value cells should undergo range adaptation. Second, choice variability should increase as a function of the offer value ranges. Data from two experiments confirmed this prediction (Figure 5D–F).
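
The link between range adaptation, choice variability and expected payoff can be made concrete with a toy model. This is not the theory of Rustichini et al. (in press): we simply assume that offer value cells respond linearly over the current value range, r = r_max · V / ΔV, with additive Gaussian rate noise σ_r, and that the offer with the larger decoded value is chosen. The decoded value then carries noise σ_V = σ_r · ΔV / r_max, so the sigmoid flattens as the range ΔV grows. All parameter values and function names are hypothetical.

```python
import math


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def p_choose_b(value_a, value_b, value_range, r_max=20.0, sigma_r=2.0):
    """Probability of choosing B under a toy range-adapted code (assumption).

    Rates r = r_max * V / value_range carry Gaussian noise sigma_r; decoding back
    to value turns it into value noise sigma_v, which grows with the range.
    """
    sigma_v = sigma_r * value_range / r_max
    # the difference of two independent noisy decoded values has sd sqrt(2) * sigma_v
    return normal_cdf((value_b - value_a) / (math.sqrt(2.0) * sigma_v))


def expected_payoff(value_a, value_b, value_range):
    """Chosen value averaged over trials for a single offer pair."""
    p = p_choose_b(value_a, value_b, value_range)
    return p * value_b + (1.0 - p) * value_a


for value_range in (4.0, 10.0):
    p = p_choose_b(1.0, 1.5, value_range)
    print(value_range, round(p, 3), round(expected_payoff(1.0, 1.5, value_range), 3))
```

With the same value difference between offers, the larger range yields choices closer to chance and therefore a lower expected payoff, which is the qualitative relation shown in Figure 5D–F.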

Third, the choice made by the animal in split trials is correlated with the activity of chosen juice cells in the time window preceding the offer presentation (“predictive activity”) (Padoa-Schioppa, 2013). This phenomenon can be interpreted by assuming that the pre-offer activity of chosen juice cells represents the state of the neural circuit prior to the offer. If one of the two offer values dominates, the animal chooses it independently of the initial state. However, if the two offers are close in value, the initial state effectively imposes a choice bias (Figure 5G–I). Importantly, the predictive activity of chosen juice cells is closely related to the behavioral phenomenon of choice hysteresis, that is, the fact that, other things being equal, monkeys tend to choose on any given trial the same juice chosen in the previous trial (Padoa-Schioppa, 2013). Indeed, the predictive activity of chosen juice cells is almost entirely accounted for once the outcome of the previous trial is controlled for. In other words, the predictive activity of chosen juice cells is largely residual activity from the previous trial. This last observation suggests that the predictive activity of chosen juice cells may be the cause underlying choice hysteresis, although this hypothesis awaits testing. As already noted, choice hysteresis and the predictive activity of chosen juice cells are naturally reproduced by the neural network model illustrated in Figure 4A (Bonaiuto et al., 2016; Rustichini and Padoa-Schioppa, 2015).
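
The claim that an initial-state bias matters only when the offers are close in value can be illustrated with a logistic choice model that includes a previous-choice term. The sketch below is an assumption, not the fitted model of the cited studies: the function name, the use of a signed value difference as the main regressor, and all coefficient values are hypothetical.

```python
import math


def p_choose_b_given_history(value_difference, previous_choice_was_b,
                             bias=0.0, steepness=4.0, hysteresis=0.5):
    """Logistic choice model with a previous-choice term (hypothetical coefficients).

    value_difference is V_B - V_A; the hysteresis term adds +h after a B choice
    and -h after an A choice, shifting the sigmoid toward the previous choice.
    """
    previous = 1.0 if previous_choice_was_b else -1.0
    x = bias + steepness * value_difference + hysteresis * previous
    return 1.0 / (1.0 + math.exp(-x))


for difference in (0.0, 2.0):
    after_b = p_choose_b_given_history(difference, True)
    after_a = p_choose_b_given_history(difference, False)
    print(f"V_B - V_A = {difference}: P(B | prev B) - P(B | prev A) = {after_b - after_a:.4f}")
```

The same additive shift has a large effect near indifference and almost none when one offer dominates, because the logistic function saturates far from its midpoint.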

The fact that choice variability is accounted for partly by activity fluctuations in offer value cells and partly by activity fluctuations in chosen juice cells suggests that both of these populations are part of the neural circuit generating economic decisions. Another phenomenon, termed “activity overshooting”, suggests that the decision circuit also includes chosen value cells. This phenomenon is observed by examining the activity of these neurons for a given chosen value as a function of the other, non-chosen value. During the decision time window, firing rates present a transient overshooting reflecting the decision difficulty (Figure 5J–L) (Padoa-Schioppa, 2013). If chosen value cells did not participate in the decision process (e.g., if their firing rates simply reflected the logical product of offer value and chosen juice), their activity would presumably depend only on the chosen value and not on the decision difficulty. Conversely, the activity overshooting may be accounted for if chosen value cells are within the decision circuit, as demonstrated by the neural network model in Figure 4A (Rustichini and Padoa-Schioppa, 2015).

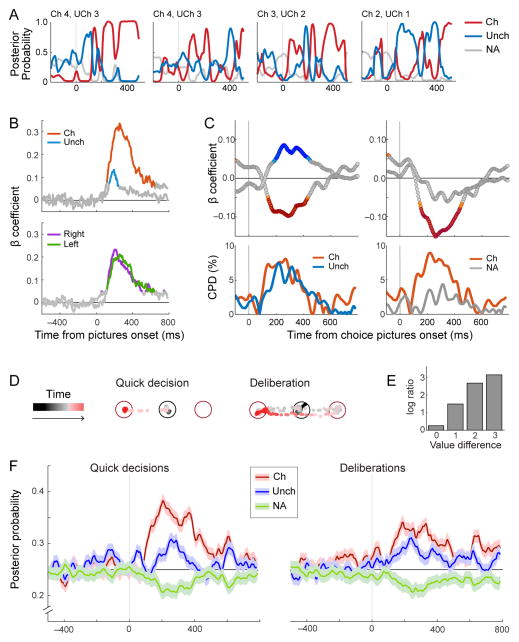

Finally, a powerful way to assess the relation between neuronal activity and the decision process is to examine ensembles of many neurons over the course of individual trials. A significant step in this direction was recently taken by Rich and Wallis (2016). In their experiments, juice was made available to monkeys in four value levels. In training trials, only one value level was offered; in choice trials, the animals chose between two value levels. The authors recorded simultaneously from ensembles of ~10 neurons in OFC. In the analysis, they trained a linear classifier to decode the value level (the “state”) in training trials, and then used the classifier to decode the state of the neuronal ensemble in choice trials. This approach yielded several interesting results. First, the decoded state alternated between the chosen and the unchosen value over the course of each trial, as though the neuronal ensemble reflected an internal deliberation (Figure 6A, B). Second, behavioral response times correlated with the relative time spent by the neuronal ensemble in the unchosen versus chosen state (Figure 6C). Third, controlling for the offer value levels and for the choice outcome, the authors compared trials in which the decision was quick versus deliberative, as revealed by the pattern of eye movements (Figure 6D, E). Averaging decoded states across trials, they obtained an estimate of how strongly the chosen value was represented compared to the unchosen value, and found that the strength of that representation was significantly higher in easy than in difficult decisions (Figure 6F). Notably, this analysis did not attempt to classify cells into different groups. Nonetheless, the results support the view that decisions are formed within the OFC.
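
The train-then-decode logic can be sketched with linear discriminant analysis (LDA), one common linear classifier; we do not claim that this matches the exact classifier or preprocessing of Rich and Wallis (2016). The ensemble below is synthetic: the tuning of each hypothetical neuron to the four value levels is drawn at random, the classifier is fit on single-offer “training trials”, and it then returns a posterior over states for each time bin of a simulated choice trial in which the ensemble hops between the two offered levels.

```python
import numpy as np


def fit_lda(X, y):
    """Linear discriminant analysis: class means plus one pooled covariance."""
    classes = np.unique(y)
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    residuals = X - means[np.searchsorted(classes, y)]
    cov = residuals.T @ residuals / (len(X) - len(classes))
    cov += 1e-6 * np.eye(X.shape[1])  # small ridge keeps the inverse stable
    log_priors = np.log(np.array([np.mean(y == c) for c in classes]))
    return classes, means, np.linalg.inv(cov), log_priors


def decode_posterior(model, X):
    """Posterior probability of each state (value level) for each population vector."""
    classes, means, inv_cov, log_priors = model
    # linear discriminant scores: x' S^-1 mu_k - 0.5 mu_k' S^-1 mu_k + log pi_k
    scores = X @ inv_cov @ means.T - 0.5 * np.sum((means @ inv_cov) * means, axis=1) + log_priors
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability before exp
    post = np.exp(scores)
    return post / post.sum(axis=1, keepdims=True)


def simulate_ensemble(rng, tuning, levels, noise_sd=1.0):
    """Synthetic population vectors, one row per time bin, for a sequence of value levels."""
    return tuning[levels] + rng.normal(0.0, noise_sd, size=(len(levels), tuning.shape[1]))


rng = np.random.default_rng(0)
n_levels, n_neurons = 4, 10
tuning = 10.0 + rng.normal(0.0, 2.0, size=(n_levels, n_neurons))  # hypothetical mean rates

# "training trials": one value level offered at a time
train_levels = np.repeat(np.arange(n_levels), 50)
model = fit_lda(simulate_ensemble(rng, tuning, train_levels), train_levels)

# "choice trial": the ensemble alternates between the two offered levels (1 and 3)
true_states = np.array([1, 1, 3, 3, 1, 3, 3, 3])
post = decode_posterior(model, simulate_ensemble(rng, tuning, true_states))
print(post.argmax(axis=1))
```

In real data the decoded sequence is the quantity of interest rather than something to score against ground truth; here the alternation is built in only to show that the decoder can track it.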

Figure 6.

Dynamics of economic decisions in OFC. The figure summarizes the results of Rich and Wallis (2016). A. Reconstruction of the internal state. Each panel refers to one trial and illustrates the posterior probability of the decoded state (y-axis) as a function of time (x-axis). B. Relation between choice and internal state. In the top panel, β coefficients were obtained by regressing the decoded state onto the chosen/unchosen value. In the bottom panel, the decoded state was regressed onto the value presented on the left/right. C. Relation between reaction times and internal state during the decision. In the top left panel, β coefficients were obtained by regressing reaction times onto the probability that the network was in the chosen or unchosen state. The bottom left panel depicts the coefficient of partial determination (CPD). The right panels illustrate the equivalent results obtained by regressing reaction times onto the probability that the network was in the chosen or other (non-offered) state. D, E. Quick versus deliberative decisions. Quick decisions were defined as those in which the animal made only one saccade. The fraction of quick decisions increased as a function of the value difference (E). F. Relation between choice and internal state as a function of the decision difficulty. Reproduced from Rich and Wallis (2016) with permission.

Computational models of goal-directed behavior

The neural network model described above (Figure 4A) indicates that the three groups of cells identified in OFC are sufficient to generate economic decisions. Other modeling work suggests that these groups of cells may also be necessary. In one study, Solway and Botvinick (2012) introduced a neuro-computational theory of goal-directed behavior. In their conception, each task is represented by a computational structure, the elements of which are states, actions, policies and rewards. Rewards are stochastic variables and the computational structure amounts to a probabilistic generative model for the rewards. In this formulation, goal-directed behavior involves the inversion of the generative model (i.e., an inverse probabilistic inference). The authors implemented their theory with a neural network. They then examined the activity of network units and the emerging performance in various tasks. For binary economic choices, the activity of network units closely resembled the firing rates of offer value and chosen value cells found in the OFC. Furthermore, the network reproduced the sigmoid shape of choice patterns and the relation between reaction time and value ratio (i.e., decision difficulty) measured in a food choice study in capuchin monkeys (Padoa-Schioppa et al., 2006).

Friedrich and Lengyel (2016) obtained similar results with a different approach. First, they showed that a biologically plausible neural network of spiking neurons can implement goal-directed behavior by solving Bellman’s optimality equation. In their network, synaptic weights are learned given an internal model of the task. As a result, the network can calculate the optimal value of each option online. The authors trained the network in several tasks, including sequential decision tasks. They specifically examined the neuronal tuning curves and the performance of the network in a binary choice task and found striking similarities with the experimental measures. Specifically, the tuning of neurons in the network closely resembled that of offer value and chosen value cells in OFC. Furthermore, neuronal activity profiles depended on the offered values in subtle ways, as indeed observed in experimental data (Padoa-Schioppa, 2013). Finally, the network reproduced the sigmoid shape of choice patterns and the relation between reaction times and value ratio observed at the behavioral level.
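
For readers unfamiliar with Bellman’s optimality equation, V(s) = max_a Σ_{s'} P(s'|s,a) [r + γ V(s')], the sketch below solves it by plain value iteration on a tiny hypothetical task. This is not the spiking network of Friedrich and Lengyel (2016); it only shows what “solving the optimality equation” computes. The task structure, state and action names, probabilities, rewards and discount factor γ are all assumptions for illustration.

```python
def value_iteration(transitions, gamma=0.9, tol=1e-10, max_iter=1000):
    """Solve V(s) = max_a sum_{s'} P(s'|s,a) [r + gamma V(s')] by repeated backups.

    transitions[state][action] is a list of (probability, next_state, reward);
    next_state None marks the end of the episode (terminal, value 0).
    """
    values = {s: 0.0 for s in transitions}

    def backup(outcomes):
        return sum(p * (r + (gamma * values[s2] if s2 is not None else 0.0))
                   for p, s2, r in outcomes)

    for _ in range(max_iter):
        delta = 0.0
        for state, actions in transitions.items():
            best = max(backup(outcomes) for outcomes in actions.values())
            delta = max(delta, abs(best - values[state]))
            values[state] = best
        if delta < tol:
            break
    # greedy policy with respect to the converged values
    policy = {s: max(acts, key=lambda a: backup(acts[a])) for s, acts in transitions.items()}
    return values, policy


# hypothetical task: take a small reward now, or wait for a larger, uncertain one
task = {
    "offer": {
        "take_A_now": [(1.0, None, 1.0)],
        "wait_for_B": [(0.8, "delay", 0.0), (0.2, None, 0.0)],
    },
    "delay": {"collect_B": [(1.0, None, 3.0)]},
}
values, policy = value_iteration(task)
print(values, policy)
```

Here the value of waiting is 0.8 × 0.9 × 3 = 2.16, which exceeds the immediate reward of 1, so the greedy policy waits; lowering γ or the success probability enough would reverse the choice.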

More recently, Song et al. (2017) took a very different route but ultimately reached similar conclusions. In essence, they trained a recurrent neural network (RNN) to perform various cognitive and value-based tasks. Importantly, the structure of their network was general and not designed to match any particular task (Figure 4B). The RNN consisted of two modules organized in an actor-critic architecture, namely a decision module trained to select actions that maximize rewards and a value module that predicts future rewards and guides learning. Most relevant here, the authors trained the RNN to perform binary economic choices, providing as teaching signals choice patterns similar to those of Figure 3. Remarkably, they found that after training the activity of units in the value module recapitulated the three groups of neurons identified in the primate OFC, namely offer value, chosen value and chosen juice. Similar results were also obtained by Zhang et al. (2017).
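
The division of labor in an actor-critic architecture can be illustrated with a deliberately minimal, single-step, tabular version applied to a binary choice. This is far simpler than the RNN of Song et al. (2017) and is not their training procedure: the “actor” is a softmax over two action preferences, the “critic” is a single running reward prediction, and the reward values, learning rates and trial count are hypothetical.

```python
import math
import random


def softmax(prefs):
    """Convert action preferences into choice probabilities."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_actor_critic(rewards, n_trials=2000, alpha_actor=0.1, alpha_critic=0.1, seed=0):
    """Single-step actor-critic on a choice between options with fixed rewards."""
    rng = random.Random(seed)
    prefs = [0.0] * len(rewards)  # actor: action preferences
    value = 0.0                   # critic: predicted reward for the choice situation
    for _ in range(n_trials):
        policy = softmax(prefs)
        action = rng.choices(range(len(rewards)), weights=policy)[0]
        delta = rewards[action] - value  # critic's reward prediction error
        value += alpha_critic * delta    # critic update
        for a in range(len(prefs)):      # actor update: policy gradient with baseline
            grad = (1.0 if a == action else 0.0) - policy[a]
            prefs[a] += alpha_actor * delta * grad
    return softmax(prefs), value


policy, value = train_actor_critic([1.0, 2.0])
print([round(p, 3) for p in policy], round(value, 3))
```

The critic’s prediction error both refines its own estimate and tells the actor whether the action just taken was better or worse than expected, which is the role the value module plays in the architecture described above.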

Of course, each of the models discussed here builds on a particular set of assumptions. However, the fact that multiple computational models based on very different premises all recapitulate the findings illustrated in Figure 3 and other experimental results suggests that the three groups of neurons identified in OFC might be necessary – and not just sufficient – to generate good-based economic decisions.

Contributions of other brain regions

The previous sections emphasize the role of OFC but do not address whether or how other brain regions might participate in value computation, economic choice and choice-guided behavior. As often noted, value signals inform a variety of cognitive functions beyond economic choice, including emotion, autonomic responses, associative learning, perceptual attention and motor control. Thus, not surprisingly, value signals have been identified in a large number of cortical and sub-cortical regions (for reviews, see O’Doherty, 2014; Padoa-Schioppa, 2011; Schultz, 2015). In this section, we focus specifically on recent results that shed light on the neural mechanisms underlying economic choices.

Outside OFC, the brain region most likely to host neural processes necessary for economic decisions is arguably the amygdala. Indeed, the amygdala is the only other brain area where lesions affect performance in reinforcer devaluation tasks, an effect observed in monkeys (Malkova et al., 1997; Rhodes and Murray, 2013; Wellman et al., 2005) and rodents (Ostlund and Balleine, 2008; Pickens et al., 2003; West et al., 2012). More specifically, Baxter et al. (2000) found that performance in a reinforcer devaluation task depends on the interaction between OFC and the amygdala. Other studies found that amygdala lesions were effective when made before but not after devaluation, while OFC lesions were effective when made either before or after devaluation (Johnson et al., 2009; Ostlund and Balleine, 2008; Pickens et al., 2003; Wellman et al., 2005; West et al., 2011; West et al., 2012). Notably, amygdala and OFC are anatomically connected (Carmichael and Price, 1995a; Ghashghaei et al., 2007). Several neurophysiology studies in monkeys (Belova et al., 2007; Bermudez and Schultz, 2010; Grabenhorst et al., 2012; Paton et al., 2006; Sugase-Miyamoto and Richmond, 2005) and rodents (Roesch et al., 2010; Schoenbaum et al., 1998) found neuronal activity broadly consistent with a neuronal representation of goods and subjective values in the amygdala. However, these studies did not analyze the large number of value- and choice-related variables tested in studies of OFC (Padoa-Schioppa and Assad, 2006), and thus did not establish whether amygdala neurons encode the same variables identified in OFC and/or other variables. Also, the relation between neuronal activity in the amygdala and choice variability has not yet been examined at the level of granularity discussed above for the OFC. In conclusion, further work is necessary to ascertain how neurons in the amygdala contribute to economic decisions.

Another strong candidate is the vmPFC. In this case, however, the evidence suggesting participation in economic decisions is not unanimous. On the one hand, the majority of functional imaging studies in humans have found subjective value signals in the vmPFC as opposed to the OFC (Bartra et al., 2013; Clithero and Rangel, 2014). In fact, some imaging studies found neural signals in this area correlated with the value difference (Boorman et al., 2009; Lim et al., 2011), which in the framework of drift-diffusion models is the variable driving the decision (more on this below). On the other hand, single-cell recordings in non-human primates indicate that the fraction of value-encoding neurons and the intensity of value modulations are lower in vmPFC than in OFC (Bouret and Richmond, 2010; Monosov and Hikosaka, 2012; Rich and Wallis, 2014; Strait et al., 2014). Furthermore, as discussed above, vmPFC lesions do not affect performance in goal-directed behavior (Rudebeck and Murray, 2011). The discrepancy between human imaging and primate neurophysiology studies is particularly striking because vmPFC and OFC are part of separate brain networks, with very different patterns of anatomical connectivity and scarce direct interconnections (Ongur et al., 2003). Possible explanations have been discussed elsewhere and include differences between species, differences in behavioral tasks, susceptibility artifacts in fMRI, and the heterogeneous nature of neuronal responses in OFC (Wallis, 2012). In addition to these considerations, it is worth noting that several imaging studies did in fact find value signals in the OFC (Arana et al., 2003; Chaudhry et al., 2009; Gottfried et al., 2003; Hare et al., 2008). These include in particular recent studies that focused on signals associated with individual goods or options (Howard et al., 2015; Howard and Kahnt, 2017; Klein-Flugge et al., 2013). Furthermore, a distinction recently drawn by San Galli et al. (2016) seems potentially revealing.
In their experiments, monkeys had to squeeze a bar (3 effort levels) to obtain a juice reward (3 quantity levels). On any given trial, the animal could choose to perform or not perform the task. Unsurprisingly, the willingness to work varied as a function of the reward level, the effort level and the trial number (a proxy for fatigue and satiety). Above and beyond these parameters, the animal’s willingness to work also presented slow fluctuations across trials (e.g., the animal might be unwilling to work for 20 trials before re-engaging in the task). The activity of neurons in vmPFC was weakly correlated with reward and effort levels, and more strongly correlated with the trial number. Most strikingly, firing rates were highly correlated with the willingness to work. In other words, neuronal activity in vmPFC seemed best explained in terms of the overall engagement in the task, as opposed to the values available for choice on any given trial (San Galli et al., 2016). Corroborating this perspective, vmPFC activity has also been linked to affective regulation (Delgado et al., 2016), and vmPFC dysfunction has been implicated in mood disorders including major depression (Price and Drevets, 2010; Ressler and Mayberg, 2007). In summary, it remains unclear whether and how neurons in vmPFC contribute to economic decisions.

Parietal regions including the lateral intraparietal area have long been hypothesized to play a central role in economic choices (Glimcher et al., 2005; Kable and Glimcher, 2009). However, it has also long been noted that parietal lesions do not affect economic choices per se, and that value modulations measured in these areas might be attributed to perceptual attention and/or motor planning, as opposed to economic decision making (Leathers and Olson, 2012; Maunsell, 2004; Padoa-Schioppa, 2011). These issues have not been resolved.

Neurophysiology studies in monkeys have found neurons encoding the chosen value in anterior cingulate cortex (ACC) (Cai and Padoa-Schioppa, 2012; Hosokawa et al., 2013; Kennerley and Wallis, 2009a), dorsal striatum and ventral striatum (Cai et al., 2011). However, these neurons become active later than neurons encoding the same variable in OFC, suggesting that these areas do not directly contribute to good-based decisions per se. Other work indicates that ACC lesions disrupt performance in tasks that include a learning component and where options are defined by actions (Kennerley et al., 2006; Rudebeck et al., 2008). Importantly, these observations may be explained, at least partly, by animals becoming less motivated to undertake physical effort, as opposed to lacking the capability to compare values (Walton et al., 2002; Walton et al., 2007).

Value signals were also found in ventro- and dorso-lateral prefrontal cortex (LPFCv and LPFCd) (Hosokawa et al., 2013; Kennerley and Wallis, 2009b; Kim et al., 2012; Kim et al., 2008; Kim et al., 2009). Notably, the fact that most decisions ultimately lead to some action implies that choice outcomes must be transformed from the space of goods to the space of actions. OFC is not directly connected with motor regions (Carmichael and Price, 1995b), but there are anatomical projections from OFC to LPFCv, from LPFCv to LPFCd, and from LPFCd to motor areas (Petrides and Pandya, 2006; Saleem et al., 2013; Takahara et al., 2012). A recent study showed that neurons in LPFCv and LPFCd reflect the good-to-action transformation. Furthermore, response latencies indicated that information about the choice outcome flowed from OFC to LPFCv to LPFCd (Cai and Padoa-Schioppa, 2014). Thus, economic decisions taking place in the OFC might be converted into actions through this circuit (Figure 2).

In summary, our understanding of other brain regions is consistent with good-based decisions taking place within the OFC. At the same time, current notions do not exclude additional or alternative scenarios, and research on the role played by other cortical and sub-cortical areas remains active.

The distributed-consensus model

The results reviewed so far suggest that economic decisions are generated in a neural circuit within the OFC. In the final section of the article, we will indicate aspects of this working hypothesis that await experimental testing. Before doing so, however, we shall discuss other models of economic decisions put forth in recent years. Two of them, viewed as the most serious contenders, are the distributed-consensus model (DCM, discussed in this section) and the attentional drift-diffusion model (ADDM, discussed in the next section).

Early models held that all decisions take place in premotor or motor regions through processes of action selection (Cisek, 2007; Glimcher et al., 2005). Subsequent work noted that neurons in areas involved in economic choices – most notably the OFC – represent options and values in a good-based reference frame. This observation led to the proposal that economic decisions take place in a good-based representation (Padoa-Schioppa, 2011). The notion of good-based decisions has been widely embraced, in the sense that most scholars agree that economic decisions can be dissociated from action planning (Cai and Padoa-Schioppa, 2014; Cisek, 2012; Glimcher, 2011; Rushworth et al., 2012; Wunderlich et al., 2010). At the same time, several authors have argued that motor systems likely participate in some types of value-based decisions (Cisek, 2012; Glimcher, 2011; Klein-Flugge and Bestmann, 2012; Rushworth et al., 2012). The DCM is essentially a unifying model that includes good-based decisions and action-based decisions as special cases. Several variants of this idea have been put forth (Hunt et al., 2014; Hunt and Hayden, 2017; Pezzulo and Cisek, 2016). Here we discuss in particular the original proposal elaborated by Cisek (2012).

In his lucid analysis, Cisek starts by pointing out that many decisions “have nothing to do with actions”. He then summarizes three arguments suggesting the existence of action-based decisions. These include (i) the fact that neurons in sensorimotor regions represent multiple target locations before the decision is completed, (ii) the fact that many decisions are influenced by action costs, and (iii) the fact that decision variables – in particular, subjective values – often modulate neuronal activity in motor regions. Cisek recognizes that these arguments do not necessarily invalidate good-based decisions. In particular, motor systems might well contribute to the computation of action costs and/or action preparation, without participating in value comparison (i.e., the decision itself). Put more bluntly, current empirical evidence does not rule out that economic decisions are always made in an abstract (i.e., good-based) representation. However, Cisek argues that dissociating between abstract and motor representations is not desirable from an ecological perspective. He presents two lines of reasoning to support this contention. First, he notes that while experimental tasks typically present offers simultaneously, foraging in nature often involves decisions between exploiting a known and easily accessible resource and exploring unknown and more distant options. Second, he points out that many natural settings require fast decisions between options that vary over time (non-static), that depend on the behavioral context, and that are partly defined by their spatial configuration. He concludes that “the challenges of a continuously changing environment demanded the evolution of a functional architecture in which the mechanisms specifying possible actions and those which evaluate how to select between them can operate in parallel” (Cisek, 2012).

These considerations motivate a unifying model, the DCM, in which the decision process takes place at multiple levels in parallel. In this view, neurons in lower levels represent specific actions (leftward saccade, rightward saccade, etc.); neurons in higher levels represent goods (one apple, two bananas, etc.). The competition happens at each level, but different levels are reciprocally interconnected and thus influence each other’s dynamics. In some cases, for example when choosing between different actions that yield the same good, the competition entirely takes place at lower levels. In other cases, for example when choosing between different dishes on a restaurant menu, the competition entirely takes place at higher levels. More generally, the competition may take place at multiple levels at once. A decision outcome eventually emerges through a distributed consensus dominated by connections within and across levels (Cisek, 2012; Pezzulo and Cisek, 2016).

One obvious merit of the DCM is that it reconciles the evidence for good-based decisions with the popular belief that motor systems participate in the decision process. Furthermore, the DCM captures the intuition that brains are highly connected machines and that no brain area operates in complete isolation. With these premises, two sets of comments are in order.

First, the lines of reasoning offered by Cisek (2012) to motivate the DCM are not conclusive. Specifically, the fact that natural foraging often involves exploration/exploitation decisions does not exclude that these decisions take place in an abstract representation. Furthermore, while it is true that natural conditions often require rapid decisions in non-static environments, the time scale of neuronal computations is short. Even for a case such as that discussed by Cisek – a lion chasing a dazzle of zebras that splits while fleeing – decisions could plausibly be made with a modular architecture. (In that particular case, behavior might also be driven by simple ad hoc heuristics.) More generally, different evolutionary considerations support different conclusions. For example, it is true that our brains are the product of evolution and that abstract representations seem less prevalent in lower species. However, it is also true that modular organizations are computationally more efficient (Pinker, 1997; Simon, 1962). Consequently, evolution likely favored modular organizations. Furthermore, for all the evolutionary continuity, species do differ from one another, and there are qualitative differences between primates and other species. For all these reasons, it seems difficult to derive any model of economic decision making from evolutionary considerations alone.

Second and most important, the proposal discussed in this article is in fact compatible with the DCM. Indeed, decisions taking place in OFC are good-based. The DCM, which includes good-based decisions as a particular case, is consistent with the hypothesis that such decisions are generated within OFC. If one accepts the DCM framework, it becomes interesting to ascertain under what circumstances exactly decisions are good-based, action-based, or distributed. As emphasized by several authors, addressing this question is not easy because the fact that neural activity in a brain region is modulated by decision variables does not imply its participation in the decision process (Cisek, 2012; Klein-Flugge and Bestmann, 2012). Furthermore, in tasks that dissociate offer presentation from the indication of the action associated with each offer, subjects make their decision shortly after the offers are presented, and thus in goods space (Cai and Padoa-Schioppa, 2014; Wunderlich et al., 2010). Hence gathering unequivocal evidence for a direct participation of motor systems in economic decisions has proven difficult so far. Looking forward, one promising direction might be to design tasks that dissociate offer presentation and action indication while also varying action costs. Indeed, choices under variable action costs are viewed as most likely to directly engage the motor systems (Klein-Flugge et al., 2016; Rangel and Hare, 2010; Rushworth et al., 2012). Another way to test the DCM would be to use optogenetics to selectively excite or inhibit neurons associated with a particular action. If motor regions and the OFC are truly part of a distributed decision network, then manipulating the activity of neurons in motor regions should predictably affect both the neuronal responses in the OFC and behavioral measures.

In summary, it is uncontroversial that many economic decisions are good-based and dissociated from action planning. Conversely, the decision processes underlying action selection undoubtedly engage motor systems. The DCM is a unifying model that includes good-based decisions and pure action selection as special cases. In general, the DCM posits that decisions take place at multiple levels in parallel. In this respect, the DCM reconciles a broad range of viewpoints. However, it is not easy to assess exactly under what conditions motor systems do or do not participate in the decision process. Most importantly for the present purposes, the DCM is consistent with the proposal that good-based decisions take place within the OFC.

The attentional drift-diffusion model

According to the ADDM, economic decisions take place through a drift-diffusion process guided by visual attention. During the decision, subjects shift their gaze or their focus of attention back and forth between the options and, at any given time, a comparator increments a decision variable in favor of the attended option (Krajbich et al., 2010). The comparator is thought to reside in the dorsomedial prefrontal cortex (dmPFC) (Hare et al., 2011). The decision ends when the decision variable reaches a threshold.
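The process just described can be sketched in a few lines. This is a minimal illustration, assuming the formulation commonly associated with Krajbich et al. (2010), in which the value of the unattended option is discounted by a factor theta; the function name, the parameter values, the fixed fixation duration and the random first fixation are assumptions made for illustration, not fitted values.

```python
import numpy as np


def simulate_addm_trial(v_left, v_right, d=0.0002, theta=0.3, sigma=0.02,
                        fixation_ms=300, max_ms=20000, rng=None):
    """Simulate one ADDM-style trial in 1-ms steps (illustrative parameters).

    Returns (choice, rt_ms, left_ms): choice is 'left' or 'right', rt_ms is the
    decision time and left_ms the time spent attending to the left option.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Pre-drawn Gaussian noise, one sample per 1-ms step.
    noise = (sigma * rng.standard_normal(max_ms)).tolist()
    looking_left = bool(rng.random() < 0.5)  # first fixation at random (assumption)
    x, left_ms, t = 0.0, 0, 0
    for t in range(1, max_ms + 1):
        if looking_left:
            # Attended option counts fully; the unattended one is discounted by theta.
            x += d * (v_left - theta * v_right)
            left_ms += 1
        else:
            x -= d * (v_right - theta * v_left)
        x += noise[t - 1]
        if abs(x) >= 1.0:  # decision threshold reached
            break
        if t % fixation_ms == 0:  # attention switches after a fixed dwell (assumption)
            looking_left = not looking_left
    # If no threshold is reached by max_ms, the sign of x decides (a simplification).
    return ("left" if x > 0 else "right"), t, left_ms


rng = np.random.default_rng(0)
trials = [simulate_addm_trial(6.0, 3.0, rng=rng) for _ in range(300)]
print("P(left):", np.mean([c == "left" for c, _, _ in trials]))
print("mean RT (ms):", np.mean([rt for _, rt, _ in trials]))
```

Replacing the fixed dwell with fixation durations sampled from data, and fitting d, theta and sigma to choices and reaction times, would be needed before comparing such a sketch with behavior.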

The ADDM is attractive for several reasons. First, it captures the simple intuition that choosing any particular option requires some degree of mental focus on that option. For example, an individual seeking a means of transportation in the city is unlikely to choose an Uber car over a taxi if she is not aware of (or not thinking about) the existence of Uber cars. Second, drift-diffusion models generate joint distributions of accuracy and reaction times. If the model is sufficiently parameterized, the correspondence with empirical measures can be very good (Milosavljevic et al., 2010). Third, the ADDM formally unifies economic decisions with perceptual decisions, which have often been modeled as drift-diffusion processes (Bogacz et al., 2006; Brunton et al., 2013; Gold and Shadlen, 2001; Mazurek et al., 2003). However, a careful evaluation reveals that the empirical evidence supporting the ADDM is not conclusive and that several of the results presented in support of the model afford alternative interpretations.
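The second point, that drift-diffusion models jointly predict accuracy and reaction times, can be illustrated by simulating a symmetric two-bound process in which the drift is assumed to scale with the value difference between the options, and comparing the result with the standard closed-form expressions for this process (see, e.g., Bogacz et al., 2006). The function names, the unit bound and noise, and the drift values are illustrative assumptions.

```python
import numpy as np


def simulate_ddm(drift, n_trials=2000, bound=1.0, sigma=1.0, dt=0.001,
                 max_t=5.0, seed=0):
    """Simulate a symmetric drift-diffusion process starting at 0.

    A positive drift favors the upper bound, taken here as the higher-valued
    option. Returns (accuracy, mean_rt) over trials that reached a bound.
    """
    rng = np.random.default_rng(seed)
    x = np.zeros(n_trials)
    rt = np.full(n_trials, np.nan)
    upper = np.zeros(n_trials, dtype=bool)
    active = np.ones(n_trials, dtype=bool)
    for step in range(1, int(round(max_t / dt)) + 1):
        idx = np.flatnonzero(active)
        if idx.size == 0:
            break
        # Euler-Maruyama step for the trials that are still deciding.
        x[idx] += drift * dt + sigma * np.sqrt(dt) * rng.standard_normal(idx.size)
        hit = idx[np.abs(x[idx]) >= bound]
        rt[hit] = step * dt
        upper[hit] = x[hit] > 0
        active[hit] = False
    finished = ~np.isnan(rt)
    return upper[finished].mean(), rt[finished].mean()


def analytic_ddm(drift, bound=1.0, sigma=1.0):
    """Closed-form accuracy and mean decision time (valid for drift > 0)."""
    accuracy = 1.0 / (1.0 + np.exp(-2.0 * drift * bound / sigma**2))
    mean_dt = (bound / drift) * np.tanh(drift * bound / sigma**2)
    return accuracy, mean_dt


k = 0.5  # hypothetical scaling from value difference to drift
for value_diff in (0.5, 4.0):
    print(value_diff, simulate_ddm(k * value_diff), analytic_ddm(k * value_diff))
```

Easier choices (larger drift) yield both higher accuracy and shorter mean decision times, so a single set of parameters constrains two behavioral measures at once.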

At the behavioral level, the main argument for the ADDM comes from studies showing that aspects of the fixation data recorded in free-viewing choice tasks – e.g., the fact that subjects tend to spend more time looking at the option they eventually choose – are well explained by the ADDM (Krajbich et al., 2010; Krajbich and Rangel, 2011). However, as the authors emphasize in their discussion, those data do not demonstrate a causal link between fixation and choice. In fact, causality might well run in the opposite direction, in the sense that subjects in any trial might tend to look longer at the good they are leaning towards. In one study, the same group sought to address the issue of causality by forcing subjects to fixate specific goods at specific times (Lim et al., 2011). However, once gaze direction was mandated, the relation between fixation times and choices vanished almost completely. Thus a simple interpretation of their original results (Krajbich et al., 2010) is that the decision takes place through yet unknown mechanisms, and that fixation only introduces a relatively small bias.
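The alternative causal reading can be checked with a toy simulation in which gaze has, by construction, no influence on choice. Choices depend only on the values through a logistic rule, and looking times are then generated so that the option the subject is leaning towards receives extra time. The function name and all numbers are hypothetical.

```python
import numpy as np


def gaze_follows_choice(n_trials=2000, bias_ms=150.0, seed=0):
    """Toy model in which gaze has NO causal effect on choice (hypothetical).

    Returns the fraction of trials in which the chosen option was looked at
    longer than the other option.
    """
    rng = np.random.default_rng(seed)
    v_left = rng.uniform(0, 10, n_trials)
    v_right = rng.uniform(0, 10, n_trials)
    # Logistic choice rule based on value alone; gaze is never consulted.
    p_left = 1.0 / (1.0 + np.exp(-(v_left - v_right)))
    chose_left = rng.random(n_trials) < p_left
    # Looking times: random baseline plus a bonus for the eventually chosen option.
    look = rng.uniform(300, 600, size=(n_trials, 2))
    look[:, 0] += np.where(chose_left, bias_ms, 0.0)
    look[:, 1] += np.where(chose_left, 0.0, bias_ms)
    chosen_longer = np.where(chose_left, look[:, 0] > look[:, 1],
                             look[:, 1] > look[:, 0])
    return chosen_longer.mean()


print(gaze_follows_choice())             # well above 0.5
print(gaze_follows_choice(bias_ms=0.0))  # close to 0.5
```

With these uniform baselines the chosen option is looked at longer in roughly 87% of trials, although gaze never entered the choice rule; the fixation pattern alone therefore cannot establish the direction of causality.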

Other behavioral observations seem to undermine the ADDM. In particular, considering decisions made in the absence of gaze shifts, it is hard to reconcile the ADDM with established notions of visual attention. Current views concur that visual attention can be entirely focused on one item or divided between two or more items, depending on the behavioral circumstances and the task demands. In the ADDM, subjects are assumed to focus their attention entirely on one item at any given time, and to switch the focus of attention multiple times in the course of a decision. Indeed, the ADDM is a random walk in which each step corresponds to an endogenously driven attentional switch. Thus the serial sampling assumption is not incidental to the model; it is a foundational aspect of the ADDM. The problem becomes apparent if one considers decision times. An extensive literature in experimental psychology has examined the dynamics of focused attention. Robust evidence indicates that whenever attention is shifted endogenously, shifts are slow, with dwell times typically on the order of 250–500 ms (Buschman and Kastner, 2015; Duncan et al., 1994; Fiebelkorn et al., 2013; Muller et al., 1998; Theeuwes et al., 2004; Ward et al., 1996). Thus the ADDM should predict relatively slow decision times: it seems reasonable to estimate that the fastest decisions made through an ADDM would take 500 ms or more. In contrast, behavioral and physiological measures indicate that economic decisions can be significantly faster. For example, Milosavljevic et al. (2010) found that subjects could reliably choose the preferred option with reaction times ranging from 401 to 433 ms depending on the decision difficulty (note that these reaction times also included the time necessary for saccade initiation). Neuronal data from juice choice experiments are consistent with these findings (Figure 5G) (Padoa-Schioppa, 2013). These measures are at odds with serial sampling and suggest that subjects in these experiments divided their attention between the offers on display.
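The estimate of 500 ms can be reproduced with simple arithmetic, under the assumption (made here for illustration) that a serial sampler must attend to each of the two offers at least once before committing, with each visit lasting at least the shortest typical dwell time. The constant and function names are hypothetical.

```python
DWELL_MS = (250, 500)        # typical dwell times of endogenous attention shifts (from the text)
OBSERVED_RT_MS = (401, 433)  # Milosavljevic et al. (2010), including saccade initiation


def serial_lower_bound_ms(min_dwell_ms, n_options=2):
    """Fastest serial decision if each option must be attended once (assumption)."""
    return n_options * min_dwell_ms


fastest = serial_lower_bound_ms(min(DWELL_MS))
print(fastest)                                     # 500
print(all(rt < fastest for rt in OBSERVED_RT_MS))  # True
```

Because the observed reaction times also include saccade initiation, the time available for the comparison itself is shorter still.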

At the neuronal level, a case for the ADDM was made by Hare et al. (2011), who used a juice choice task nearly identical to that used in primate experiments (Figure 3A). In this study, the authors defined a neural network version of the ADDM (referred to as ADDMn), fitted its parameters to the behavioral choices, derived an integrated measure of the total activity in the network (Mout), and used Mout as a regressor to interpret the BOLD signal. They found that neural activity in dmPFC and intraparietal sulcus (IPS) correlated with Mout, and they concluded that value comparisons take place in these regions through ADDM-like mechanisms. One weakness of this argument is that a correlation between aggregate measures does not necessarily imply a correspondence between the underlying elements. In other words, the fact that an aggregate measure derived from the model (Mout) has good explanatory power for the aggregate neural activity in a particular region (the BOLD signal) does not imply that neurons in that region encode the variables defined in the underlying model. Drawing such an inference is particularly problematic if neuronal responses in the brain areas of interest are heterogeneous. Furthermore, Hare et al. (2011) indicate that their dmPFC corresponds in the macaque brain to the region recorded from by Kennerley et al. (2009), namely the dorsal bank of the cingulate sulcus (ACCd). One primate neurophysiology experiment focused on this very area (Cai and Padoa-Schioppa, 2012), and since the tasks were nearly identical, the data provide an ideal opportunity to test the claims of Hare et al. (2011) at the neuronal level. The analysis of firing rates examined a large number of variables that neurons in the ACCd could potentially encode, including all the variables defined in the ADDMn. The results clearly demonstrated that neurons in the ACCd do not encode the variables defined in the ADDMn (in particular, neither action values nor value differences were encoded at the neuronal level). Moreover, the activity of neurons in the ACCd encoding the choice outcome (chosen value, chosen juice) emerged too late to contribute to the decision (Cai and Padoa-Schioppa, 2012). These same signals, measured in the same task, emerged much earlier in the OFC than in the ACCd. Thus the results seem to rule out that decisions in juice choice tasks are made through ADDM-like mechanisms taking place in dmPFC/ACCd.
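The point about aggregate measures can be made concrete with a toy simulation; all quantities are hypothetical and it is not a model of the actual data. Simulated neurons encode only the value of one offer or the other, while a hypothetical model aggregate tracks the larger of the two values. The summed activity, a crude stand-in for a BOLD-like signal, is then compared with the model aggregate and with each neuron's own variable.

```python
import numpy as np


def aggregate_vs_elements(n_trials=500, n_neurons=40, noise=0.5, seed=0):
    """Toy example: an aggregate signal can track a variable no neuron encodes.

    Returns (r_aggregate, r_own, r_model):
      r_aggregate  correlation between summed activity and the model aggregate
      r_own        per-neuron correlation with the offer value it encodes
      r_model      per-neuron correlation with the model aggregate
    """
    rng = np.random.default_rng(seed)
    offers = rng.uniform(0, 1, size=(2, n_trials))  # values of offers A and B
    # Hypothetical model aggregate: tracks the larger of the two values.
    m_out = offers.max(axis=0)
    # Half of the neurons encode offer A, half encode offer B; none encodes m_out.
    encoded = (np.arange(n_neurons) >= n_neurons // 2).astype(int)
    rates = offers[encoded] + noise * rng.standard_normal((n_neurons, n_trials))
    aggregate = rates.sum(axis=0)  # crude stand-in for a BOLD-like signal
    r_aggregate = np.corrcoef(aggregate, m_out)[0, 1]
    r_own = np.array([np.corrcoef(rates[i], offers[encoded[i]])[0, 1]
                      for i in range(n_neurons)])
    r_model = np.array([np.corrcoef(rates[i], m_out)[0, 1]
                        for i in range(n_neurons)])
    return r_aggregate, r_own, r_model


r_aggregate, r_own, r_model = aggregate_vs_elements()
print(round(r_aggregate, 2), round(r_own.mean(), 2), round(r_model.mean(), 2))
```

With these settings the summed signal correlates with the model aggregate far better than any single neuron does, while each neuron is better described by the offer value it actually encodes.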

Another argument put forth by Hare et al. (2011) is based on measures of effective connectivity (i.e., correlation). Specifically, they found increased effective connectivity between dmPFC and vmPFC during stimulus presentation, and between dmPFC and motor cortex during the delay preceding the motor response. Interestingly, these effects are well explained by the neuronal results from ACCd (Cai and Padoa-Schioppa, 2012). Indeed, in the early part of the trial (the 1 s following the offer), the dominant variable encoded by neurons in the ACCd was the chosen value, which was also encoded in the OFC. This explains the increased correlation between these two areas at that time. Later in the trial, especially in the time window preceding movement onset, many neurons in ACCd encoded the direction of the upcoming movement. This explains the correlation between ACCd and motor cortex at that time. Thus the effective connectivity patterns found by Hare et al. (2011) most likely capture real correlations in neuronal activity. However, these correlations do not imply that decisions are made in dmPFC/ACCd, and they do not support the ADDM over alternative hypotheses.
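The explanation in terms of shared encoding can be illustrated with a toy simulation of trial-by-trial signals in two time windows. The encoding assumptions (which area carries which variable in each window) are simplified for illustration and not fitted to data; the function name and noise level are hypothetical.

```python
import numpy as np


def windowed_correlations(n_trials=1000, noise=1.0, seed=0):
    """Toy trial-by-trial signals in two time windows (hypothetical encoding).

    Early window: OFC and ACCd both encode chosen value; motor carries only noise.
    Late window: OFC still encodes chosen value, while ACCd and motor cortex
    encode the direction of the upcoming movement.
    """
    rng = np.random.default_rng(seed)
    chosen_value = rng.uniform(0, 10, n_trials)
    direction = rng.choice([-1.0, 1.0], size=n_trials)  # independent of value here
    silent = np.zeros(n_trials)

    def signal(x):
        # Each area's signal is its encoded variable plus independent noise.
        return x + noise * rng.standard_normal(n_trials)

    early = {"OFC": signal(chosen_value), "ACCd": signal(chosen_value),
             "motor": signal(silent)}
    late = {"OFC": signal(chosen_value), "ACCd": signal(direction),
            "motor": signal(direction)}

    def r(window, a, b):
        return np.corrcoef(window[a], window[b])[0, 1]

    return {
        "early OFC-ACCd": r(early, "OFC", "ACCd"),
        "early ACCd-motor": r(early, "ACCd", "motor"),
        "late OFC-ACCd": r(late, "OFC", "ACCd"),
        "late ACCd-motor": r(late, "ACCd", "motor"),
    }


for pair, value in windowed_correlations().items():
    print(f"{pair}: {value:.2f}")
```

Correlations between areas appear and disappear as the encoded variables change, without any simulated area performing a value comparison.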

It may be noted that the ADDM is primarily an algorithmic model. Thus one might wonder whether good-based decisions could take place within OFC but follow the ADDM. Two observations in the juice choice experiments described above seem to argue against this hypothesis. First, according to the ADDM, one population of neurons participating in the decision should encode the difference in value between the chosen option and the other, non-chosen option. Contrary to this prediction, the variable “value difference” explained very few neuronal responses in OFC (Padoa-Schioppa and Assad, 2006). Second, as noted above, chosen juice cells encoded the binary choice outcome. If good-based decisions took place in OFC according to the ADDM, the activity of these cells should present a race-to-threshold profile similar to that observed in area LIP during perceptual decisions (Roitman and Shadlen, 2002). However, experimental measures did not match this prediction (Figure 5G) (Padoa-Schioppa, 2013).
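The race-to-threshold prediction can be visualized with a bounded accumulator whose drift stands in for the value difference between the offers. The sketch below, with hypothetical names and parameters, computes the trial-averaged decision variable over time.

```python
import numpy as np


def mean_decision_variable(drift, n_trials=2000, bound=1.0, sigma=1.0,
                           dt=0.001, t_max=1.0, seed=0):
    """Trial-averaged decision variable of a bounded accumulator over time.

    Trials that reach a bound stay there (absorbing bounds). The drift plays
    the role of the value difference between the two offers (illustrative).
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(t_max / dt))
    x = np.zeros(n_trials)
    absorbed = np.zeros(n_trials, dtype=bool)
    trace = np.empty(n_steps)
    for k in range(n_steps):
        step = drift * dt + sigma * np.sqrt(dt) * rng.standard_normal(n_trials)
        # Only trials that have not yet hit a bound keep accumulating.
        x = np.where(absorbed, x, np.clip(x + step, -bound, bound))
        absorbed |= np.abs(x) >= bound
        trace[k] = x.mean()
    return trace


small, large = mean_decision_variable(0.5), mean_decision_variable(2.0)
for ms in (100, 300, 600):
    print(ms, round(small[ms - 1], 2), round(large[ms - 1], 2))
```

The average rises gradually after offer onset, and more steeply for larger drifts (value differences). Under the ADDM, this graded, difference-dependent ramp is the profile that chosen juice cells would be expected to show if they carried the decision variable.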

In summary, the ADDM stipulates that economic decisions (i.e., value comparisons) result from random walks guided by endogenous shifts of visual attention or gaze. Empirical support for this hypothesis is weak at best. Importantly, the present considerations do not exclude that visual attention – or, more generally, mental focus – might play a role in the construction of subjective values. Some framing effects observed in behavioral economics are consistent with this view.

Conclusions