Abstract

Objectives

Substantial development assistance and research funding are invested in health research capacity strengthening (HRCS) interventions in low-income and middle-income countries, yet the effectiveness, impact and value for money of these investments are not well understood. A major constraint to evidence-informed HRCS intervention has been the disparate nature of the research effort to date. This review aims to map and critically analyse the existing HRCS effort to better understand the level, type, cohesion and conceptual sophistication of the current evidence base. The overall goal of this article is to advance the development of a unified, implementation-focused HRCS science.

Methods

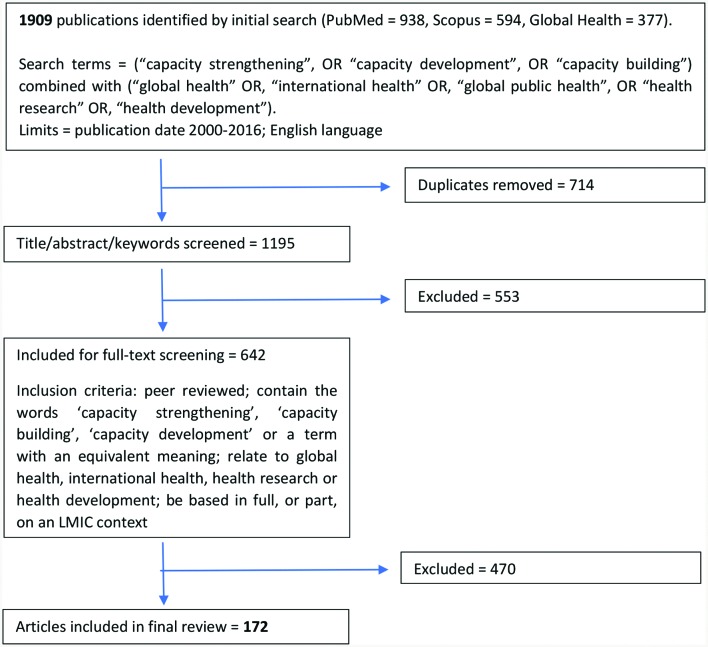

We used a scoping review methodology to identify peer-reviewed HRCS literature in the following databases: PubMed, Global Health and Scopus. HRCS publications available in English and published between 2000 and 2016 were included. Of the 1195 articles retrieved, 172 met the final inclusion criteria. An a priori thematic analysis of all included articles was completed, and a content analysis of the identified HRCS definitions was conducted.

Results

The number of HRCS publications increased exponentially between 2000 and 2016. Most publications during this period were perspective, opinion or commentary pieces; however, original research has been the primary publication type since 2013. Twenty-five different definitions of research capacity strengthening were identified, of which three aligned with current HRCS guidelines.

Conclusions

The review findings indicate that an HRCS research field with a focus on implementation science is emerging, although the conceptual and empirical bases are not yet sufficiently advanced to effectively inform HRCS programme planning. Consolidating an HRCS implementation science therefore appears to be a viable option that may accelerate the development of a useful evidence base to inform HRCS programme planning. Identifying an agreed operational definition of HRCS, standardising HRCS-related terminology, developing a needs-based HRCS-specific research agenda and synthesising currently available evidence may be useful first steps.

Keywords: capacity strengthening, lmic, scoping review

Strengths and limitations of this study.

This scoping review brings together various studies and reviews focused on health research capacity strengthening (HRCS) to provide the impetus and direction for a dedicated HRCS implementation science to emerge and to foster a common identity for HRCS researchers.

This review critically analysed current definitions of HRCS to contribute towards the identification of a consolidated, evidence-based, operational definition of HRCS on which future HRCS interventions and evaluations can be based.

Some articles published in non-Anglophone journals, in non-health related journals or in a lexicon outside of the keyword terms employed herein would not have been retrieved by the search methodology.

Relevant work that remains unpublished, published outside of academic peer-reviewed journals or published prior to 2000 would also have been omitted.

The review did not critically examine the quality of the research effort (in original research publications) or analyse the output (findings) of the collective research effort.

Introduction

Health research capacity in many low-income and middle-income countries (LMIC) is poor,1–4 undermining the ability of LMIC to identify and respond to local health needs or to participate equitably in the international response to global health challenges. Numerous health research capacity strengthening (HRCS) interventions have been employed in LMIC, ranging from simple training programmes to the currently advocated ‘systems’ approaches that focus on developing the capacity of individual researchers, research institutions and the wider research environment.5–7 The international research community has a dual role in LMIC HRCS. The first role is that of an HRCS implementer. It centres on the transfer of expertise in specialist subject areas pertinent to LMIC health research priorities, typically from higher-capacity to lower-capacity individuals or organisations, and may be facilitated through mechanisms such as scholarship schemes, technical assistance, research networks or research consortia. The second role is that of an HRCS scientist and centres on the creation of robust theory and evidence to inform optimal HRCS interventions. Here, the researcher is not an expert in the subject matter of a specific HRCS intervention (eg, increasing capacity in operational research to support national malaria control programmes) but is concerned with providing the evidence base that informs HRCS funders and implementing partners about how their respective programme goals may best be achieved (eg, which investments would produce the greatest, most sustainable gain in operational research capacity to support a national malaria control programme).

The extent to which the research community is fulfilling this latter role (ie, HRCS scientist), as compared with the former role (ie, HRCS implementer), is questionable at present. A recent paper described the existing HRCS evidence base as ‘confusing, controversial and poorly defined’8 despite a long-recognised need to support HRCS in LMIC.9 Fundamental questions remain largely unanswered, such as how to reliably assess existing capacities at different levels of a health research system, which interventions facilitate sustainable capacity gains in which circumstances and which capacity term (building, strengthening or development) is the most nuanced and appropriate to reflect developmental discourse and baseline capacities.10 The international research community is, therefore, in the awkward position of being a highly active participant in the transfer of scientific theory and method within the context of subject-specific HRCS interventions, yet largely inactive in rigorously applying scientific theory and method to the HRCS process.

The paucity of evidence available to inform HRCS implementation reflects, in part, the difficulties in measuring an inherently multifaceted, long-term, continuous process (ie, HRCS) subject to a diverse range of influences and assumptions. A greater constraint has been the sparse and disparate nature of the HRCS-related research effort to date. HRCS-related research has involved multiple academic disciplines, employing diverse frameworks, concepts, methods and terminologies, working in isolation and publishing in different fields (eg, medical education, communication, operational research and evaluation). A dedicated, multidisciplinary, implementation-focused research approach is undoubtedly required to improve the effectiveness, impact and value for money of current and future HRCS implementation activities in LMIC. However, there is little evidence of a unified HRCS implementation science emerging to date.

The overall goal of this article is to advance the development of a unified, implementation-focused HRCS science. To achieve this goal, a scoping review of HRCS-related publications for the period 2000–2016 was conducted and the operational definitions of HRCS within this literature were critically examined. The review findings are not presented as a definitive account of HRCS activity across this period, as relevant material may be unpublished, may be found in the grey literature or may be published in a lexicon outside of the search terms employed herein. The review is better understood as an attempt to critically analyse the collective HRCS effort with regard to the level, type, cohesion and conceptual sophistication of the current evidence base. It may be considered an initial attempt to map the HRCS research effort, providing the impetus and direction for a dedicated HRCS implementation science to emerge and fostering a common identity for HRCS researchers.

Methods

This review was conducted according to stages 1–5 of the advanced ‘scoping’ methodology proposed by Levac et al,11 based on the original framework of Arksey and O’Malley.12 A scoping review was considered appropriate given the primary focus was on examining the extent, range and nature of an emerging peer-reviewed literature. The critical examination of operational definitions of HRCS falls outside of the ‘scoping review’ approach, yet is included as a means of ‘revealing’ (in part) the conceptual sophistication and cohesion of the reviewed literature.

Identification of data sources

The first two steps of the scoping review method are identifying a research question and identifying relevant studies. To explore the breadth, concepts, definitions and methods currently prioritised in the peer-reviewed HRCS literature, we searched for empirical and theoretical publications in the following databases: PubMed, Global Health and Scopus. The search terms were (‘capacity strengthening’ OR ‘capacity development’ OR ‘capacity building’) combined with (‘global health’ OR ‘international health’ OR ‘global public health’ OR ‘health research’ OR ‘health development’). Additional search criteria were publication between 1 January 2000 and 31 December 2016 and availability of both the abstract and the full paper in English. Searches began in 2000 to reflect the stepwise change in the profile of, and investment in, HRCS around that time. Results were stored in an EndNote library.
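The search strategy above pairs two sets of phrases. A minimal Python sketch of how such a query string could be assembled follows, assuming that ‘combined with’ means a Boolean AND between the two OR blocks and that phrases are enclosed in double quotes. Field tags, date limits and language filters differ between PubMed, Global Health and Scopus and are deliberately left out; the helper names are hypothetical.

```python
# Hypothetical helpers that assemble the Boolean query described above.
# Assumption: the two term sets are joined with AND; database-specific
# field tags, date limits and language filters are not included.
CAPACITY_TERMS = ["capacity strengthening", "capacity development", "capacity building"]
CONTEXT_TERMS = [
    "global health",
    "international health",
    "global public health",
    "health research",
    "health development",
]


def or_block(terms: list[str]) -> str:
    """Quote each phrase and join the phrases with OR inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(capacity: list[str], context: list[str]) -> str:
    """Combine the capacity block and the context block with AND."""
    return f"{or_block(capacity)} AND {or_block(context)}"


print(build_query(CAPACITY_TERMS, CONTEXT_TERMS))
```

Keeping the two term lists separate from the query builder makes it easy to adapt the same logic to each database's own syntax.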

Selection of data sources

Study selection (step 3) was an iterative process in which selected abstracts and full texts were initially reviewed to identify and agree on inclusion criteria, which were subsequently ‘tested’ and refined through further review. All article titles, abstracts and keywords were reviewed against the final inclusion criteria (figure 1). Publications that met these criteria at abstract review were then subjected to a more intensive full-text review, as were publications for which a conclusive inclusion/exclusion decision could not be made on the basis of the abstract alone. SG and JP independently screened the publications included for full-text review, with LD providing a third review to determine inclusion/exclusion status in cases of disagreement.
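The screening and adjudication rule described above can be expressed as two small functions. This is a sketch under the assumption that each reviewer records a simple include/exclude decision, with ‘unclear’ allowed at the abstract stage; the review does not describe any screening software, and the function names are hypothetical.

```python
from typing import Literal, Optional

Decision = Literal["include", "exclude"]


def advance_to_full_text(abstract_decision: str) -> bool:
    """Abstracts meeting the criteria, or too ambiguous to judge, go to full-text review."""
    return abstract_decision in {"include", "unclear"}


def final_decision(
    screener_a: Decision,
    screener_b: Decision,
    third_reviewer: Optional[Decision] = None,
) -> Decision:
    """Full-text inclusion status for one publication.

    If the two independent screeners agree, their shared decision stands;
    otherwise the third reviewer's decision settles the disagreement.
    """
    if screener_a == screener_b:
        return screener_a
    if third_reviewer is None:
        raise ValueError("screeners disagree: a third review is required")
    return third_reviewer


# Usage with hypothetical decisions for three publications.
print(advance_to_full_text("unclear"))                  # True
print(final_decision("include", "include"))             # include
print(final_decision("include", "exclude", "exclude"))  # exclude
```

Raising an error when the third review is missing makes an unresolved disagreement visible instead of silently defaulting to either decision.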

Figure 1.

Summary of search and selection process. LMIC, low-income and middle-income countries.

Data charting and analysis

The variables extracted from each publication included in the final review were determined through an iterative ‘data charting’ process (step 4). SG and JP independently reviewed a selection of publications and identified potential variables to extract, and target variables were then agreed by consensus. Target variables included publication ‘typologies’ (box 1) and the wide range of programme-type, author-type and research-type data listed in tables 1 and 2 and online supplementary tables S1–S7. Research quality was not formally assessed; however, some aspects, such as study design, methods and analysis, were considered where appropriate. Data extraction was conducted independently by at least two reviewers, with a third providing a deciding opinion in cases of disagreement. Following data extraction, each member of the review team was assigned a subset of publications for subsequent summary analysis (step 5). Final analysis and reporting of all data were agreed by mutual consent.

Box 1. HRCS publication typologies.

Original research

Publications in which (1) a hypothesis, research question or study purpose was stated, (2) research methods described, (3) results reported and (4) the results and their possible implications discussed.

Perspectives, opinion or commentary

Publications expressing the authors’ viewpoint on some aspect of HRCS based on anecdotal evidence, personal experience and/or (in a very few cases) original data that were not presented in an ‘original research’ format (ie, did not include a formal description of the research aims, methods, results and discussion).

Systematic review

Publications in which (1) research objectives/questions were clearly stated, (2) explicit and systematic methods were used, (3) methods were limited to the systematic identification and analysis of some form of literature and (4) results were reported and discussed. Non-systematic reviews were included within the original research section.

HRCS, health research capacity strengthening.

Table 1.

Selected characteristics of reviewed publications

| Publication type | No | LMIC first author* | LMIC last author* | LMIC either author* | CB† | CD† | CS† | Oth.† | Defined HRCS‡ |
|---|---|---|---|---|---|---|---|---|---|
| Original research | 79 | 31 | 32 | 41 | 38 | 18 | 24 | 0 | 17 |
| Perspective, opinion or commentary | 88 | 36 | 42 | 56 | 63 | 6 | 19 | 0 | 16 |
| Systematic review | 5 | 3 | 1 | 3 | 1 | 1 | 2 | 0 | 0 |
| Total | 172 | 70 | 75 | 100 | 102 | 25 | 45 | 0 | 33 |

*Based on location of listed organisational affiliation of first and last authors; ‘either’=either first or last.

†Priority given to the capacity term used in the title, then to that used in the keywords (CB, capacity building; CD, capacity development; CS, capacity strengthening; Oth., other).

‡Number of papers that provided an operational definition of HRCS.

HRCS, health research capacity strengthening; LMIC, low-income and middle-income countries.

Table 2.

Selected methodological characteristics of original research publications

| Subcategory | No | Af* | Am* | Se* | Eu* | Em* | Wp* | Gl* | Quan† | Qual† | Mix† | Sur‡§ | IDI‡§ | FGD‡§ | Rev‡§ | Oth‡§ | The§¶ | Des§¶ | Inf§¶ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Learning and evaluation | 36 | 14 | 1 | 4 | 0 | 1 | 4 | 14 | 8 | 9 | 19 | 20 | 18 | 5 | 16 | 10 | 28 | 18 | 1 |
| Capacity assessment | 27 | 16 | 0 | 0 | 1 | 2 | 3 | 5 | 6 | 7 | 14 | 15 | 13 | 5 | 15 | 7 | 19 | 22 | 0 |
| HRCS methods | 7 | 6 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 5 | 1 | 2 | 1 | 1 | 4 | 4 | 6 | 1 | 1 |
| Evidence synthesis | 5 | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 0 | 4 | 1 | 1 | 0 | 0 | 5 | 4 | 5 | 0 | 0 |
| Miscellaneous | 4 | 2 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 2 | 2 | 2 | 3 | 0 | 2 | 1 | 4 | 0 | 0 |
| Total | 79 | 38 | 1 | 4 | 1 | 3 | 7 | 27 | 15 | 27 | 37 | 40 | 35 | 11 | 42 | 26 | 62 | 41 | 2 |

*WHO region where the study was located: African (Af), Americas (Am), South-East Asia (Se), European (Eu), Eastern Mediterranean (Em), Western Pacific (Wp) or Global (Gl) (defined as three or more WHO regions).

†Quantitative (Quan), qualitative (Qual) or mixed methods (Mix).

‡Survey (Sur), in-depth interview (IDI), focus group discussion (FGD), literature/document review (Rev) or other methodology (Oth).

§Categories are not mutually exclusive.

¶Thematic (The), descriptive (Des) or inferential (Inf).

HRCS, health research capacity strengthening.


During in-depth analysis of each publication, any operational definition of (health) research capacity strengthening was extracted and its content analysed. To identify commonalities, the content of each definition was independently coded by JP and SG against the a priori content criteria listed in table 3. Coding disagreements were resolved by the same process described above. A content score, defined as the number of content domains (out of 10) present, was calculated for each definition to identify the most inclusive working definition of HRCS in the current evidence base.
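The content score is simply a count of coded domains. A minimal sketch, assuming each coded definition is stored as a set of the domain abbreviations defined in the footnote to table 3; the names and the example coding are hypothetical and do not correspond to any definition in the review.

```python
# The 10 a priori content domains (abbreviations from the footnote to table 3).
DOMAINS = frozenset(
    {"Ind", "Ins", "Env", "Def", "Car", "App", "Qua", "Sus", "Pro", "Con"}
)


def content_score(coded_domains: set[str]) -> int:
    """Count how many of the 10 domains were coded as present in a definition."""
    unknown = coded_domains - DOMAINS
    if unknown:
        raise ValueError(f"unrecognised domain codes: {sorted(unknown)}")
    return len(coded_domains)


# Hypothetical coding of a single definition (not taken from the review).
example = {"Ind", "Ins", "Env", "Car", "Sus"}
print(content_score(example), "of", len(DOMAINS))  # 5 of 10
```

Rejecting unknown codes guards against typos in the coding sheet inflating a score.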

Table 3.

Content analysis of ‘capacity’ definitions*

| Subject defined | Capacity term (reference) | Content score (domains present, of 10)† |
|---|---|---|
| Health research capacity | Building (30), strengthening (70) | 6 |
| Health research capacity | Building (166), strengthening (74, 126) | 4 |
| Health research capacity | Strengthening (123) | 2 |
| Health research capacity | Development (45) | 3 |
| Health research capacity | Strengthening (48) | 2 |
| Health research capacity | Building (139) | 4 |
| Health research capacity | Building (97) | 3 |
| Research capacity | Building (164), strengthening (29, 123, 159) | 8 |
| Research capacity | Strengthening (16, 72) | 5 |
| Research capacity | Development (4), strengthening (31, 74) | 9 |
| Research capacity | Building (132) | 2 |
| Research capacity | Building (91, 96) | 5 |
| Research capacity | Building (130) | 5 |
| Research capacity | Strengthening (165) | 4 |
| Research capacity | Building (46) | 7 |
| Research capacity | Strengthening (79) | 3 |
| Research capacity | Building (166) | 3 |
| Capacity | Building (25) | 5 |
| Capacity | Building (133) | 4 |
| Capacity | Strengthening (66) | 4 |
| Capacity | Strengthening (65) | 5 |
| Capacity | Building (150) | 3 |
| Capacity | Strengthening (47) | 3 |
| Organisational capacity | Development (27) | 2 |
| Progress | Building (142), development (143) | 2 |

*Numbered citations pertain to the reference list in online supplementary table S8.

†The content of each definition was independently coded according to the following criteria: explicit reference to individual-level (Ind.), institutional-level (Ins.) or environmental-level (Env.) capacity strengthening; explicit reference to strengthening capacity in terms of defining research questions or identifying research priorities (Def.), conducting research or applying research methods (Car.) or communicating and applying research outcomes (App.) and explicit reference to facilitating an improvement in research abilities/quality (Qua.), sustainability (Sus.), reference to HRCS as a process (Pro.) and/or HRCS as a continuous activity (Con.).

HRCS, health research capacity strengthening.


Results

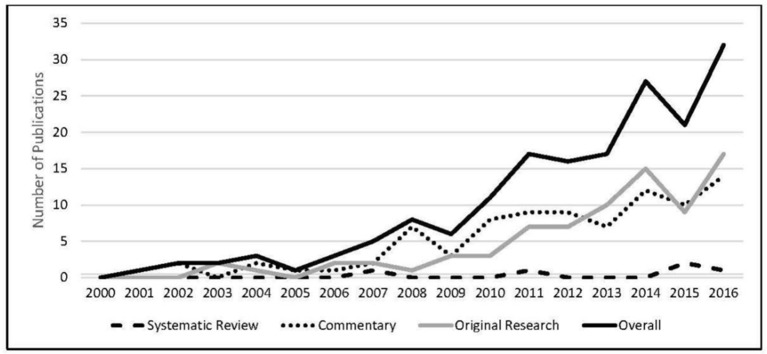

A total of 1195 papers were retrieved via the search methodology of which 172 (see online supplementary table S8) met the final inclusion criteria. The number of HRCS publications identified increased over time, from 0 in the year 2000 to a maximum of 32 in 2016 (figure 2).

Figure 2.

Number of publications per year by publication type.

HRCS publication typologies

Overall, 51% of publications presented a perspective, opinion or commentary, 46% original research and 3% findings from a systematic review (table 1). The first and/or last author was from an institute located in an LMIC in 58% of publications, ‘capacity building’ was the favoured term in 59% and 19% presented an operational definition of HRCS.
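The percentages reported above are simple proportions of the 172 included publications. The short Python sketch below recomputes them from the counts in table 1 as an arithmetic check; the variable and function names are illustrative only.

```python
TOTAL_PUBLICATIONS = 172

# Counts taken from table 1 (publication-type rows and the 'Total' row).
TABLE1_COUNTS = {
    "perspective, opinion or commentary": 88,
    "original research": 79,
    "systematic review": 5,
    "LMIC first or last author": 100,
    "'capacity building' favoured": 102,
    "operational definition of HRCS": 33,
}


def percent(count: int, total: int = TOTAL_PUBLICATIONS) -> int:
    """Percentage of all included publications, rounded to a whole number.

    round() uses round-half-to-even, but none of these counts fall exactly on .5.
    """
    return round(100 * count / total)


for label, count in TABLE1_COUNTS.items():
    print(f"{label}: {count}/{TOTAL_PUBLICATIONS} = {percent(count)}%")
```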

Original research

The 79 publications that met the ‘original research’ criteria were subcategorised into five research typologies: learning and evaluation (from research initiatives); capacity assessment; HRCS methods for implementation; evidence synthesis for HRCS implementation and evaluation; and miscellaneous. Table 2 presents selected methodological characteristics of the original research publications, both overall and by subcategory. Additional data, not all of which are described below, are included in online supplementary tables S1–S7.

Learning and evaluation

This category included 36 publications that presented findings from a formal evaluation of an HRCS initiative or described ‘learnings’ obtained from HRCS implementation (table 2 and online supplementary table S1). Sixty-four per cent concerned ‘education’-based HRCS programmes, in which some form of training (including postgraduate awards) was provided to strengthen individual capacity; in some cases, these programmes also involved developing and transferring a course curriculum at institutional level.13 Other HRCS programme types included collaborative research (n=12), time-limited work placements (n=2), strengthening the broader health research system (n=2), infrastructure development (n=1) and strengthening financial management (n=1). The respective HRCS programmes involved North–South collaboration in 83% of cases. Seventy-five per cent of programmes sought to strengthen research capacity in a specific subject area, most commonly health systems (n=6).

The objective of each ‘learning and/or evaluation’ publication was coded according to the typologies presented in box 2. Overall, 67% of the learning and evaluation publications were given a single code and 33% were given two or more codes. ‘Lessons learned’ was allocated to 44% of publications, ‘programme outputs’ to 33% and ‘programme outcomes’ to 28%, and unique codes were allocated to 33%. Quantitative outcome indicators varied among the publications that employed them but generally fell into three groups: variants of citation analysis measuring the influence on health policy of research publications that followed the HRCS intervention;14–16 measures of knowledge change before and after the HRCS intervention, or of knowledge gained from it;17–20 and some form of ‘attributional’ measure designed to assess the relationship between capacity improvement and the respective HRCS intervention.17 18 21 22

Box 2. Learning and evaluation typologies.

Lessons learned

Publications focused on broad, programme(s)-level experiences in setting up and/or participating in an HRCS initiative and/or providing a largely qualitative account of programme achievements.

Programme outputs

Publications focused on HRCS programme outputs, where outputs were defined as a quantification of activities that occurred during the programme and/or related professional activities that occurred after the programme (eg, number of publications).

Programme outcomes

Publications that focused on improvements in individual-level, institutional-level or environmental-level health research capacity following an HRCS initiative and employed quantitative measures designed to attribute improved performance to the respective HRCS intervention.

HRCS, health research capacity strengthening.

Sixty-four per cent of studies were retrospective, 64% were a type of (quasi-)formative evaluation, 53% were mixed methods and 17% were authored by individuals independent of the organisation implementing the respective HRCS initiative (study design data not presented in table 2 are shown in online supplementary table S1). Sampling was primarily purposive (n=20).

Capacity assessment

This category included 27 original research publications that presented the outcome of some form of health research capacity assessment (table 2 and online supplementary table S2). The focus of the assessments varied; the largest group (9/27) assessed capacity to carry out research. Assessments were often restricted to a specific subject area (18/27), most commonly health policy and systems research (6/27).

Capacity assessments were conducted within the context of research institutions (including universities) or research networks in 59% of publications. Eleven per cent focused on the capacities of ethics committees, and one involved healthcare providers. The remaining 26% assessed national and/or regional capacity in specific research and/or geographical areas by reviewing literature and publication trends.

Thirty-seven per cent (10/27) of capacity assessments were conducted as part of a consortium-based research programme, consisting of European and African partners.

HRCS methods for implementation

This category included seven articles that presented a methodological approach to HRCS or to the evaluation of HRCS (online supplementary table S3). Two articles focused on HRCS within the frame of North–South partnerships, and four prioritised general HRCS, often embedded in a specific subject area (eg, policy analysis). The remaining article focused on the development and validation of a questionnaire for evaluating HRCS training activities.

The number of steps in each methodological approach varied; however, a consistent phasing or process could be identified. In all publications, the purpose of the HRCS activity was established first, although this was stated as an explicit methodological step in only one paper.23 Three articles then developed bespoke ‘optimal health research’ or ‘ideal partnership capacity’ criteria through a combination of literature searches and interactions with key stakeholders. The remaining four publications adapted an existing tool or framework that could serve as a common ideal for health research or partnership capacity. Once these measures were developed, three papers described them as ‘standardised’ and the remaining four as ‘semistandardised’ to allow flexibility for context. Two papers linked this flexibility to theory of change or quality assurance cycle methodology.

Papers then presented the methods used to conduct the capacity assessment. One described a single, fixed point of quantitative measurement, and six described a phased or developmental approach to identifying both strengths and weaknesses in health research capacity, anticipating that, as HRCS methods were implemented, weaknesses might be identified and certain areas strengthened. One partnership-focused paper described using this developmental approach to ensure equity within partnership development. Two papers described assessments that were solely ‘self-assessments’ (ie, relied only on internal institution staff). Four papers described collaborative assessments between partners inside (usually LMIC) and outside (usually high-income country (HIC)) the institution. Four of the papers that took a developmental approach described the end point of this process as the collaborative development of continuously evolving capacity strengthening plans against which HRCS activities should be implemented.

Evidence synthesis for HRCS implementation and evaluation

This category included five articles that focused on the synthesis of evidence to enhance learning for the implementation or evaluation of HRCS programmes (online supplementary table S4). Four articles concentrated on understanding multiprogramme experience to harmonise learning for HRCS evaluation. All four focused on the experience of funders of HRCS activities, with three extending their exploration to the views of HRCS experts, evaluators and/or implementers. The fifth article sought to understand multiprogramme experience to support more effective HRCS programme design and implementation for nurses. All articles had a global focus, with four prioritising LMIC.

The nuanced nature of each article in this category made identification of core typologies challenging. The four articles focused on evidence harmonisation for HRCS24–27 argued that evaluations should be underpinned by theory, using logic or theory of change models. However, three articles reflected that these models are rarely employed in practice due to time constraints on the evaluation process.24 25 27 Furthermore, where potential frameworks for evaluation do exist, two articles described these as being driven by the goal of the funder with limited stakeholder engagement.26 27 Two articles linked lack of stakeholder engagement in evaluation design to issues of equity,24 26 arguing that for HRCS activities to be equitable, members of the most marginalised populations should be involved in evaluation design and indicators should reflect equity issues.

Miscellaneous

Four original research articles could not be assigned to any subcategory (table 2 and online supplementary table S5). The first publication was a qualitative cross-sectional study that investigated the challenges and benefits of research capacity strengthening through North–South research partnerships from a Ugandan perspective. The second publication was a qualitative case study of health research commissioning among different organisations in East Africa. The third investigated researchers’ (involved in collaborative networks across LMIC) experiences regarding science and ethics in global health research collaborations. The fourth publication discussed different experiences of mentoring health researchers across HICs and LMIC, as effective mentorship of researchers is crucial for research capacity strengthening.

Perspectives, opinion or commentary

The 88 ‘perspective’ publications were coded based on the primary subject matter. Codes included the three previously described in box 2 and the additional codes ‘programme description’ and ‘recommendations’. Publications were coded ‘programme description’ if they presented a description of a specific HRCS programme or activity. Publications were coded ‘recommendations’ if a primary purpose of the publication was to describe steps, processes, approaches and/or activities that, per the authors’ views and experiences, would enhance capacity strengthening initiatives. There is significant overlap between the categories ‘lessons learned’ and ‘recommendations’. The key point of difference is that the lessons or recommendations presented in publications coded ‘recommendations’ are largely based on broad experience or reading of the literature rather than reference to a specific HRCS programme or programme type (in which case they would be coded ‘lessons learned’).

Overall, 73% of the perspective, opinion or commentary publications were given a single ‘focus’ code, and 27% were given two or more codes. ‘Lessons learned’ was allocated to 49% of publications, ‘programme description’ to 26%, ‘recommendations’ to 25%, ‘programme outputs’ to 19%, ‘programme outcomes’ to 2% and unique codes were allocated to 8%. The quantitative outcome indicators included a measure of knowledge change pre-HRCS and post-HRCS intervention28 and an ‘attributional’ measure designed to assess the relationship between capacity improvement and the respective HRCS intervention.29

The content of the perspective, opinion or commentary publications was derived from HRCS experience in 76% of publications; in most of these (59/67), the commentary pertained to experience from a single HRCS programme. Content was also drawn from reviews of HRCS-related literature or documentation (12/88) and from HRCS-related workshops (5/88), and in eight cases the basis of the commentary was not stated. The HRCS programme or activity types varied widely, ranging from a broad emphasis on HRCS in LMIC to specific aspects of HRCS in specified countries.

Systematic review

Five publications fitted this category (online supplementary table S7). Two publications reviewed tools and approaches to assess capacity needs and monitor and evaluate capacity strengthening activities.30 31 Three publications did not focus on specific HRCS activities, but used bibliometric and scientometric techniques to investigate health research capacity in specific subject areas focusing on publication trends, author affiliations, geographical areas of the study, study design and thematic focus.32–34

Two publications searched a single database, two searched two and one searched three. Four publications searched PubMed as the main database. Four publications followed a single systematic search strategy, whereas one employed a systematic search and snowball sampling to identify publications after considering inclusion and exclusion criteria. The number of papers included in each review varied from 14 to 690.

HRCS definitions

Nineteen per cent (33/172) of publications presented an operational definition of ‘capacity’ (online supplementary table S9). The definition specifically pertained to ‘health research capacity’ in seven publications; in the remaining publications, broader definitions of ‘research capacity’ (n=10), ‘capacity’ (n=6) or ‘organisational capacity’ (n=1) were presented, and in two publications capacity was operationally defined as ‘progress’. Twenty-five separate definitions were presented, of which nine were original (table 3). Seven of the 25 definitions were cited by two (n=4), three (n=2) or four (n=1) publications; every other definition appeared in a single publication. Three publications presented two definitions.

Thirty-six per cent of the definitions included explicit reference to all three levels of capacity strengthening, 12% included explicit reference to all three aspects of the research process (defining research questions, conducting research and communicating/applying research outcomes) and 28% included explicit reference to at least two of the four ‘other’ content domains assessed, the most common being HRCS as improving research quality or ability (n=11) and HRCS as a process (n=9) (table 3). Of the 10 content domains assessed, the median number present across all definitions was 4 (range 2–9). Median content scores varied by definition type: 3 (range 2–6) for ‘health research capacity’ definitions, 5 (range 2–9) for ‘research capacity’ definitions, 4 (range 3–5) for ‘capacity’ definitions and 2 (range 2–2) for the ‘organisational capacity’ and ‘progress’ definitions.
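The content scoring described above can be illustrated with a short sketch. It follows the text in treating each definition's score as the number of the 10 content domains it explicitly references (three levels of capacity strengthening, three aspects of the research process and four ‘other’ domains), and in summarising scores as median (range). The coding of each domain as simply present or absent is an assumption, the domain labels are hypothetical (the text names only two of the four ‘other’ domains), and the example inputs are invented for illustration; they are not the review's data.

```python
from statistics import median

# Ten content domains, grouped as described in the text. Labels are hypothetical;
# only 'quality_or_ability' and 'process' correspond to 'other' domains named in the text.
LEVELS = ("individual", "institutional", "environmental")
RESEARCH_PROCESS = ("defining_questions", "conducting_research", "communicating_applying")
OTHER = ("quality_or_ability", "process", "other_domain_3", "other_domain_4")
DOMAINS = LEVELS + RESEARCH_PROCESS + OTHER


def content_score(present: set[str]) -> int:
    """Count how many of the 10 domains a definition explicitly references."""
    unknown = present - set(DOMAINS)
    if unknown:
        raise ValueError(f"unknown domains: {sorted(unknown)}")
    return len(present)


def criteria(present: set[str]) -> dict[str, bool]:
    """The three summary criteria reported for each definition."""
    return {
        "all_three_levels": all(d in present for d in LEVELS),
        "all_three_process_aspects": all(d in present for d in RESEARCH_PROCESS),
        "two_or_more_other": sum(d in present for d in OTHER) >= 2,
    }


def summarise(scores: list[int]) -> tuple[float, int, int]:
    """Median and range of content scores, in the 'median (range)' format."""
    return float(median(scores)), min(scores), max(scores)


# Hypothetical definition, coded for illustration only.
example = {"individual", "institutional", "environmental", "defining_questions", "process"}
print(content_score(example))  # 5
print(criteria(example))
# Hypothetical scores: an even number of scores can give a half-point median.
print(summarise([2, 2, 3, 9]))  # (2.5, 2, 9)
```

Median and range, rather than mean and standard deviation, suit small sets of integer scores that may be skewed; with an even number of definitions the median can fall on a half point, as with the 2.5 reported for ‘capacity development’.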

Where a definition had been cited by more than one paper, the capacity ‘term’ attached to it (ie, building, strengthening or development) sometimes varied. For example, ‘an ability of individuals, organisations or systems to perform and utilise health research effectively, efficiently and sustainably’35 was presented both as a definition of health research capacity ‘strengthening’35 and as a definition of health research capacity ‘building’.16

An additional content analysis examined the possible relationship between favoured capacity term and choice of capacity definition (online supplementary table S10). For the definitions used in the 14 publications that favoured the term ‘capacity building’, the median content score was 4 (range 2–8); 36% (5/14) included explicit reference to all three levels of capacity strengthening, 14% (2/14) included explicit reference to all three aspects of the research process and 21% (3/14) included explicit reference to at least two of the four ‘other’ content domains assessed. The corresponding results were 4 (range 2–9), 50% (6/12), 17% (2/12) and 33% (4/12) for the 12 publications that favoured the term ‘capacity strengthening’, and 2.5 (range 2–9), 25% (1/4), 25% (1/4) and 25% (1/4) for the four publications that favoured the term ‘capacity development’.

Discussion

The purpose of this scoping review was to map the current HRCS research effort since the year 2000 and to critically examine how HRCS has been defined within the peer-reviewed literature. With regard to the level and type of HRCS-related publication, the study revealed that the number of HRCS publications increased exponentially between 2000 and 2016. Most publications during this period were perspective, opinion or commentary pieces. Publications presenting original research findings also increased over this period and have been the primary publication type since 2013, indicating an emerging field of predominantly implementation-focused HRCS science. Almost half of the original research papers pertained to the African region, as did a large proportion of commentary papers (online supplementary table S6). An Afrocentric evidence base may reflect current HRCS funding priorities36 and need; however, such Afrocentrism makes it difficult to generalise the collective findings to LMIC settings in other geographical regions.

The findings and recommendations presented in this paper should be considered alongside limitations in the review methodology. HRCS research, reviews and commentaries published in non-Anglophone journals, in non-health-related journals or in a lexicon outside of the keyword terms employed herein would not have been retrieved by the search methodology. Relevant work that remains unpublished, was published outside of academic peer-reviewed journals or was published prior to 2000 would also have been omitted. Thus, the reported findings should not be considered a comprehensive representation of the existing literature pertaining to HRCS in LMIC. The analysis of retrieved publications was limited to identifying the typologies within, and key characteristics of, the collective peer-reviewed literature as well as the frequency and type of operational HRCS definitions. The review did not critically examine the quality of the research effort (in original research publications) or analyse the output (findings) of the collective research effort. These tasks were outside the scope of this review, but warrant future attention to inform a fuller assessment of the ‘value’ of published HRCS research. All authors of this publication have considerable experience working in and/or with health research institutions in LMIC. However, all authors originate from, were educated in and are currently based in a high-income country context. Interpretation of the reported findings may reflect this reality.

Our findings suggest that conceptual representations of HRCS within the published literature are inconsistent and infrequently applied. Capacity was rarely defined across the publications and the definitions that were presented varied widely in content and scope. Broader definitions of ‘research capacity’ or ‘capacity’, rather than specific ‘health research capacity’ definitions, were most commonly employed and no ‘one’ specific definition of health research capacity was consistently applied. There appeared to be no relationship between a favoured capacity term, such as ‘building’ or ‘strengthening’, and the type of capacity definition used or the content of that definition. There was no apparent difference between operational definitions of (health) research capacity building, strengthening or development even though distinctions between these terms and the concepts they represent have previously been drawn.8 10 37 The content analysis identified a divide between many of the capacity definitions presented and current conceptualisations of a multilevel ‘systems’ approach to HRCS.5 6 For example, only 36% of the proffered definitions made explicit reference to individual-level, institutional-level and environmental-level capacity strengthening, and only 12% explicitly applied the definition to all stages of the research process from conception to subsequent uptake.

There was little sign of cohesion or ‘connectedness’ across the HRCS-related peer-reviewed literature. Greater use of theory of change or logic models in HRCS programme and evaluation design was advocated31–34 and evident among the subset of articles focusing on HRCS methods for implementation.27 28 30 32 However, systematic reviews or syntheses of available evidence were uncommon, despite the relatively narrow focus of the collective literature, and the available conceptual models and methodologies were rarely applied in practice. For example, learning and evaluation studies were typically retrospective and capacity assessments limited to a single ‘fixed’ time point, in contrast to the prospective, phased approaches deemed necessary to advance our understanding of what works well in HRCS implementation.28 32 Furthermore, while multilevel, systems-wide HRCS interventions are increasingly advocated,5–7 learning and evaluation studies commonly centred on individual-level education-based activities. This may reflect intervention or evaluation design, but either way highlights the absence of a widely accepted overarching (H)RCS framework to promote prevailing theories and concepts or to link the increasingly active HRCS research community.

Collectively, findings suggest that the existing (published) evidence base is not yet sufficiently developed to reliably inform HRCS interventions in LMIC. The disjointed research effort is exacerbated by the absence of a recognisable HRCS research ‘field’ and the lack of a defined, needs-based HRCS-specific research agenda. Published research primarily consists of anecdotal, qualitative or descriptive accounts of single interventions not readily generalisable across different types of HRCS or to regions outside of Africa. While research quality was not formally assessed in the context of this review, the body of evidence needs further development when considered against relevant standards such as the Medical Research Council’s guidance for developing and evaluating complex interventions38 or against common hierarchies of evidence,39 inclusive of hierarchies specifically for assessing qualitative health research.40 Good research practice would further suggest that no new ‘learning’ studies should be completed without first reviewing the existing evidence of ‘what works’ or ‘lessons learned’ from previous investments or interventions.41

Three comprehensive definitions that explicitly align with current HRCS guidelines were evident across the reviewed publications, although all three pertain to the broader notion of ‘research capacity’ strengthening. These included: ‘the ongoing process of empowering individuals, institutions, organisations and nations to: define and prioritise problems systematically; develop and scientifically evaluate appropriate solutions and share and apply the knowledge generated’42; ‘the process by which individuals, organisations, and societies develop abilities (individually and collectively) to perform functions effectively, efficiently and in a sustainable manner to define problems, set objectives and priorities, build sustainable institutions and bring solutions to key national problems’43 and ‘strengthening the abilities of individuals, institutions and countries to perform research functions, defining national problems and priorities, solving national problems, utilizing the results of research in policy making and programme delivery’.44

In our opinion, the RCS definition presented by Lansang and Dennis42 is the best among those identified in this review. This definition not only reflects current HRCS ‘best practice’ (ie, it encompasses all three levels of research capacity and spans the research process from conception to uptake) but also positions RCS as an ‘ongoing process’ and places few parameters on the focus of the research to be supported (beyond defining and prioritising ‘problems’ systematically). Alternative definitions, such as those provided by the Global Forum for Health Research43 or the United Nations Development Programme,44 limit the HRCS focus to ‘(key) national problems’. While a focus on national problems is undoubtedly important, these definitions imply restrictions on the types of research capacity that should be strengthened. The more comprehensive, and more frequently used, ‘research capacity’ definitions further raise the possibility that a health-specific RCS definition may not be needed. Arguably, a comprehensive, rather than sector-specific, RCS definition would suitably reflect contemporary HRCS approaches and highlight the potential for health-specific RCS interventions to enhance capacity in additional (ie, non-health) research areas within a target institution or environment (where applicable). While discipline-specific nuance may sometimes be required, promoting this kind of intersectoral, systems-level thinking and discouraging the vertical, parallel processes that can arise from topic-specific interventions is increasingly advocated in the health sector45 46 and is equally applicable in the context of a national research system.

Determining a needs-based HRCS-specific research agenda would ideally involve input from influential HRCS funders, implementers and researchers from multiple disciplines. Technical working groups, specialist meetings and the creation of networking and resource sharing platforms would be required to establish and promote the research agenda and a common HRCS implementation science. Specialist meetings and HRCS research networks would also serve to raise the profile of HRCS science, increasing its standing and recognition as a legitimate field of scientific investigation and attracting greater involvement from the broader health research community. Funding to support these activities for strengthening research systems could be modelled on existing mechanisms for strengthening health systems, where it is recommended that global development partners involved in health systems strengthening dedicate 5%–10% of programme funds to data collection, monitoring and evaluation and implementation research.47 Without an agreed definition and understanding of HRCS, it is difficult to calculate annual investment in HRCS in LMIC, but the sum is likely to be substantial. For example, the United Kingdom’s ‘Global Challenges Research Fund’ totals £1.5 billion over a 5-year period to support cutting-edge research addressing challenges faced by developing countries, a significant proportion of which is allocated to strengthening capacity for research and innovation within LMIC (http://www.rcuk.ac.uk/funding/gcrf/). Thus, dedicating even 5% of such investment to (H)RCS implementation science could support a substantial research effort and rapidly accelerate learning about how to do HRCS more effectively.
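To give a sense of scale, the sketch below applies the 5%–10% set-aside recommended for health systems strengthening to the £1.5 billion Global Challenges Research Fund total. It is an illustrative upper bound only: it assumes the share is taken from the whole fund, whereas only part of the fund is allocated to capacity strengthening, and it assumes spending is spread evenly over the 5 years. The function name `set_aside` is hypothetical.

```python
def set_aside(total_gbp: float, share: float, years: int) -> tuple[float, float]:
    """Return (total, per-year) amount for a given share of a fund.

    Illustrative only: assumes spending is spread evenly across the period.
    """
    if not 0 <= share <= 1:
        raise ValueError("share must be a fraction between 0 and 1")
    if years <= 0:
        raise ValueError("years must be positive")
    amount = total_gbp * share
    return amount, amount / years


GCRF_TOTAL_GBP = 1.5e9  # £1.5 billion over a 5-year period, as stated in the text

# 5%-10% is the range recommended for health systems strengthening programmes.
for share in (0.05, 0.10):
    total, per_year = set_aside(GCRF_TOTAL_GBP, share, years=5)
    print(f"{share:.0%}: £{total / 1e6:.0f} million in total, £{per_year / 1e6:.0f} million per year")
```

Under these assumptions the loop prints £75 million in total (£15 million per year) at 5% and £150 million (£30 million per year) at 10%; applying the share only to the capacity-strengthening portion of the fund would give proportionally smaller figures.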

Crucially, given the aim of the HRCS research endeavour, ensuring equitable participation by LMIC partners in the development of an HRCS implementation science is essential. Metrics that better account for LMIC contribution may assist this. Despite promising findings, such as relatively high levels of LMIC authorship, questions can be raised as to what extent such indicators reliably reflect equitable contribution in HRCS implementation and research.48 Relatively few studies examined North–South HRCS partnerships (a dominant form of HRCS implementation) from an exclusively southern perspective, or contrasted North–South models with South–South variants, suggesting an absence of critical reflection on the experiences and realities of those for whom HRCS interventions are intended. Such ‘silencing’ in intervention design and development should be rectified if ownership (an essential element of sustainability for HRCS interventions)49–51 is to be promoted. Equally, it is widely acknowledged that equitable and effective partnerships should be of mutual benefit to all parties,52 yet benefits to the more strongly capacitated partners in HRCS implementation (eg, those in HIC) were rarely discussed. Consideration of such issues will likely afford deeper insights into how power and politics influence equity in the design and development of HRCS theory and implementation, as well as allowing more rigorous examination of which models of implementation provide the most equitable, efficient and sustainable gains for HRCS.

Conclusions and recommendations

The review findings indicate that an HRCS research field with a focus on implementation science is emerging, although the conceptual and empirical bases are not yet sufficiently advanced to effectively inform HRCS programme planning. The constituent parts for a coherent and conceptually driven research effort are present (if somewhat embryonic), but are not yet aligned under a recognisable ‘HRCS implementation science’ framework. Consolidating an HRCS implementation science therefore presents as a viable option that may accelerate the development of a useful evidence base to inform HRCS programme planning. Identifying an agreed operational definition of HRCS, standardising HRCS-related terminology, developing a needs-based HRCS-specific research agenda and synthesising currently available evidence may be useful first steps. Crucially, given the aim of the HRCS research endeavour, ensuring equitable participation by LMIC partners in the development of an HRCS implementation science is essential. Advancing a dedicated HRCS implementation science will require specialist meetings (eg, technical working groups and research priority setting forums) with representation from influential HRCS researchers, key LMIC partners, funders and implementers as well as the creation and maintenance of networking and resource sharing fora. The continued, substantial investment in HRCS in LMIC suggests that apportioning a fraction of the various research and development budgets to support HRCS implementation science would represent a good ‘buy’.

Supplementary Material

Acknowledgments

The authors would like to thank Helen Smith and Janet Njelesani who contributed to an earlier version of this manuscript. Rachel Tolhurst is also acknowledged for providing a critical review of the final draft.

Footnotes

Contributors: LD, SG and JP were all involved in the search, screening and analysis of research articles. IB provided technical oversight and expertise throughout the screening processes. All authors contributed to the content, drafting, review and revisions of the manuscript.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: Supplementary files as listed in the main manuscript are available to the reader. There are no other unpublished data that link to this research.

References

- 1. McKee M, Stuckler D, Basu S. Where there is no health research: what can be done to fill the global gaps in health research? PLoS Med 2012;9:e1001209. doi:10.1371/journal.pmed.1001209

- 2. Kebede D, Zielinski C, Mbondji PE, et al. Human resources in health research institutions in sub-Saharan African countries: results of a questionnaire-based survey. J R Soc Med 2014;107(Suppl 1):85–95. doi:10.1177/0141076814530602

- 3. Kebede D, Zielinski C, Mbondji PE, et al. Institutional facilities in national health research systems in sub-Saharan African countries: results of a questionnaire-based survey. J R Soc Med 2014;107:96–104. doi:10.1177/0141076813517680

- 4. Ijsselmuiden C, Marais DL, Becerra-Posada F, et al. Africa’s neglected area of human resources for health research - the way forward. S Afr Med J 2012;102:228–33.

- 5. Ghaffar A, Ijsselmuiden C, Zucker F. Changing mindsets: Research capacity strengthening in low- and middle-income countries. Geneva: COHRED, Global Forum for Health Research and UNICEF/UNDP/World Bank/WHO Special Programme for Research and Training in Tropical Diseases (TDR), 2008.

- 6. ESSENCE. Planning, monitoring and evaluation framework for research capacity strengthening. Geneva: TDR-ESSENCE on Health Research, 2016.

- 7. DFID. Capacity building in research: A DFID practice paper. London: Department for International Development, 2010.

- 8. Franzen SR, Chandler C, Lang T. Health research capacity development in low and middle income countries: reality or rhetoric? A systematic meta-narrative review of the qualitative literature. BMJ Open 2017;7:e012332. doi:10.1136/bmjopen-2016-012332

- 9. Commission on Health Research for Development. Health Research: Essential Link to Equity in Development. Cambridge, USA: Commission on Health Research for Development, 1990.

- 10. Redman-MacLaren M, MacLaren DJ, Harrington H, et al. Mutual research capacity strengthening: a qualitative study of two-way partnerships in public health research. Int J Equity Health 2012;11:79. doi:10.1186/1475-9276-11-79

- 11. Levac D, Colquhoun H, O’Brien KK. Scoping studies: advancing the methodology. Implement Sci 2010;5:69. doi:10.1186/1748-5908-5-69

- 12. Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol 2005;8:19–32. doi:10.1080/1364557032000119616

- 13. Byrne E, Donaldson L, Manda-Taylor L, et al. The use of technology enhanced learning in health research capacity development: lessons from a cross country research partnership. Global Health 2016;12:19. doi:10.1186/s12992-016-0154-z

- 14. Bennett S, Paina L, Ssengooba F, et al. The impact of Fogarty International Center research training programs on public health policy and program development in Kenya and Uganda. BMC Public Health 2013;13:770. doi:10.1186/1471-2458-13-770

- 15. Zachariah R, Guillerm N, Berger S, et al. Research to policy and practice change: is capacity building in operational research delivering the goods? Trop Med Int Health 2014;19:1068–75. doi:10.1111/tmi.12343

- 16. Mahmood S, Hort K, Ahmed S, et al. Strategies for capacity building for health research in Bangladesh: Role of core funding and a common monitoring and evaluation framework. Health Res Policy Syst 2011;9:31. doi:10.1186/1478-4505-9-31

- 17. Wilson LL, Rice M, Jones CT, et al. Enhancing research capacity for global health: evaluation of a distance-based program for international study coordinators. J Contin Educ Health Prof 2013;33:67–75. doi:10.1002/chp.21167

- 18. Goto A, Vinih NQ, Van Nguyen TT, et al. Epidemiology research training in Vietnam: evaluation at the five year mark. Fukushima J Med Sci 2010;56:63–70. doi:10.5387/fms.56.63

- 19. Thomson DR, Semakula M, Hirschhorn LR, et al. Applied statistical training to strengthen analysis and health research capacity in Rwanda. Health Res Policy Syst 2016;14:73. doi:10.1186/s12961-016-0144-x

- 20. Mahendradhata Y, Nabieva J, Ahmad RA, et al. Promoting good health research practice in low- and middle-income countries. Glob Health Action 2016;9:32474. doi:10.3402/gha.v9.32474

- 21. Minja H, Nsanzabana C, Maure C, et al. Impact of health research capacity strengthening in low- and middle-income countries: the case of WHO/TDR programmes. PLoS Negl Trop Dis 2011;5:e1351–e51. doi:10.1371/journal.pntd.0001351

- 22. Käser M, Maure C, Halpaap BM, et al. Research capacity strengthening in low and middle income countries - an evaluation of the WHO/TDR career development fellowship programme. PLoS Negl Trop Dis 2016;10:e0004631. doi:10.1371/journal.pntd.0004631

- 23. Bates I, Boyd A, Smith H, et al. A practical and systematic approach to organisational capacity strengthening for research in the health sector in Africa. Health Res Policy Syst 2014;12:11. doi:10.1186/1478-4505-12-11

- 24. Cole DC, Boyd A, Aslanyan G, et al. Indicators for tracking programmes to strengthen health research capacity in lower- and middle-income countries: a qualitative synthesis. Health Res Policy Syst 2014;12:17. doi:10.1186/1478-4505-12-17

- 25. Gadsby EW. Research capacity strengthening: donor approaches to improving and assessing its impact in low- and middle-income countries. Int J Health Plann Manage 2011;26:89–106. doi:10.1002/hpm.1031

- 26. Boyd A, Cole DC, Cho DB, et al. Frameworks for evaluating health research capacity strengthening: a qualitative study. Health Res Policy Syst 2013;11:46. doi:10.1186/1478-4505-11-46

- 27. Bates I, Boyd A, Aslanyan G, et al. Tackling the tensions in evaluating capacity strengthening for health research in low- and middle-income countries. Health Policy Plan 2015;30:334–44. doi:10.1093/heapol/czu016

- 28. Kutcher S, Horner B, Cash C, et al. Building psychiatric clinical research capacity in low and middle income countries: the Cuban-Canadian partnership project. Innovation Journal 2010;15:1–10.

- 29. Goto A, Nguyen TN, Nguyen TM, et al. Building postgraduate capacity in medical and public health research in Vietnam: an in-service training model. Public Health 2005;119:174–83. doi:10.1016/j.puhe.2004.05.005

- 30. Mugabo L, Rouleau D, Odhiambo J, et al. Approaches and impact of non-academic research capacity strengthening training models in sub-Saharan Africa: a systematic review. Health Res Policy Syst 2015;13:30. doi:10.1186/s12961-015-0017-8

- 31. Huber J, Nepal S, Bauer D, et al. Tools and instruments for needs assessment, monitoring and evaluation of health research capacity development activities at the individual and organizational level: a systematic review. Health Res Policy Syst 2015;13:80. doi:10.1186/s12961-015-0070-3

- 32. Adedokun BO, Olopade CO, Olopade OI. Building local capacity for genomics research in Africa: recommendations from analysis of publications in Sub-Saharan Africa from 2004 to 2013. Glob Health Action 2016;9:31026. doi:10.3402/gha.v9.31026

- 33. González-Block MA, Vargas-Riaño EM, Sonela N, et al. Research capacity for institutional collaboration in implementation research on diseases of poverty. Trop Med Int Health 2011;16:1285–90. doi:10.1111/j.1365-3156.2011.02834.x

- 34. San Sebastián M, Hurtig AK. Review of health research on indigenous populations in Latin America, 1995-2004. Salud Publica Mex 2007;49:316–20. doi:10.1590/S0036-36342007000400012

- 35. Bates I, Akoto AY, Ansong D, et al. Evaluating health research capacity building: an evidence-based tool. PLoS Med 2006;3:e299. doi:10.1371/journal.pmed.0030299

- 36. UKCDS. Health Research Capacity Strengthening: A UKCDS Mapping. London: United Kingdom Collaborative on Development Sciences (UKCDS), 2015.

- 37. Vasquez EE, Hirsch JS, Giang leM, et al. Rethinking health research capacity strengthening. Glob Public Health 2013;8(Suppl 1):S104–S124. doi:10.1080/17441692.2013.786117

- 38. Craig P, Dieppe P, Macintyre S, et al. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ 2008;337:a1655. doi:10.1136/bmj.a1655

- 39. Petticrew M, Roberts H. Evidence, hierarchies, and typologies: horses for courses. J Epidemiol Community Health 2003;57:527–9. doi:10.1136/jech.57.7.527

- 40. Daly J, Willis K, Small R, et al. A hierarchy of evidence for assessing qualitative health research. J Clin Epidemiol 2007;60:43–9. doi:10.1016/j.jclinepi.2006.03.014

- 41. Lund H, Brunnhuber K, Juhl C, et al. Towards evidence based research. BMJ 2016;355:i5440. doi:10.1136/bmj.i5440

- 42. Lansang MA, Dennis R. Building capacity in health research in the developing world. Bull World Health Organ 2004;82:764–70.

- 43. Global Forum for Health Research. The 10/90 Report on Health Research 2003-2004. Geneva: Global Forum for Health Research, 2004.

- 44. UNDP. Technical advisory paper, No. 2. New York: UNDP, 1999.

- 45. Ooms G, Van Damme W, Baker BK, et al. The ‘diagonal’ approach to global fund financing: a cure for the broader malaise of health systems? Global Health 2008;4:6. doi:10.1186/1744-8603-4-6

- 46. Hafner T, Shiffman J. The emergence of global attention to health systems strengthening. Health Policy Plan 2013;28:41–50. doi:10.1093/heapol/czs023

- 47. Chan M, Kazatchkine M, Lob-Levyt J, et al. Meeting the demand for results and accountability: a call for action on health data from eight global health agencies. PLoS Med 2010;7:e1000223. doi:10.1371/journal.pmed.1000223

- 48. Bradley M. North–south research partnerships: literature review and annotated bibliography. Ottawa, Canada: International Development Research Centre, 2006.

- 49. KFPE. A Guide for Transboundary Research Partnerships: 11 Principles and 7 Questions. Bern, Switzerland: Swiss Commission for Research Partnerships with Developing Countries (KFPE), 2012.

- 50. Pryor J, Kuupole A, Kutor N, et al. Exploring the fault lines of cross-cultural collaborative research. Compare 2009;39:769–82. doi:10.1080/03057920903220130

- 51. Dean L, Njelesani J, Smith H, et al. Promoting sustainable research partnerships: a mixed-method evaluation of a United Kingdom-Africa capacity strengthening award scheme. Health Res Policy Syst 2015;13:81. doi:10.1186/s12961-015-0071-2

- 52. Musolino N, Lazdins J, Toohey J, et al. COHRED Fairness Index for international collaborative partnerships. Lancet 2015;385:1293–4. doi:10.1016/S0140-6736(15)60680-8

Supplementary Materials

bmjopen-2017-018718supp001.pdf (459KB, pdf)

bmjopen-2017-018718supp002.pdf (536.3KB, pdf)