Abstract

Background

Informed by our prior work indicating that therapists do not feel recognized or rewarded for implementation of evidence-based practices, we tested the feasibility and acceptability of two incentive-based implementation strategies that seek to improve therapist adherence to cognitive-behavioral therapy for youth, an evidence-based practice.

Methods

This study was conducted over 6 weeks in two community mental health agencies with therapists (n = 11) and leaders (n = 4). Therapists were randomized to receive either a financial or social incentive if they achieved a predetermined criterion on adherence to cognitive-behavioral therapy. In the first intervention period (block 1; 2 weeks), therapists received the reward they were initially randomized to if they achieved criterion. In the second intervention period (block 2; 2 weeks), therapists received both rewards if they achieved criterion. Therapists recorded 41 sessions across 15 unique clients over the project period. Primary outcomes were feasibility and acceptability. Feasibility was assessed quantitatively, and fifteen semi-structured interviews were conducted with therapists and leaders to assess acceptability. Differences in therapist adherence by condition were examined as an exploratory outcome. Adherence ratings were obtained using an established and validated observational coding system for cognitive-behavioral therapy.

Results

Both implementation strategies were feasible and acceptable; however, modifications to the study design will be necessary for the larger trial based on participant feedback. With respect to our exploratory analysis, we found a trend suggesting the financial reward may have had a more robust effect on therapist adherence than the social reward.

Conclusions

Incentive-based implementation strategies can be feasibly administered in community mental health agencies with good acceptability, although iterative pilot work is essential. Larger, fully powered trials are needed to compare the effectiveness of implementation strategies to incentivize and enhance therapists’ adherence to evidence-based practices such as cognitive-behavioral therapy.

Electronic supplementary material

The online version of this article (10.1186/s13012-017-0684-7) contains supplementary material, which is available to authorized users.

Keywords: Evidence-based practices, Incentives, Behavioral economics, Community mental health

Background

The importance of implementing evidence-based practice (EBP) in community mental health agencies has been well established [1], yet EBP is not widely used in these settings [2]. Use of evidence-based psychosocial treatments that have been systematically and rigorously evaluated [3] in public mental health systems results in better therapeutic outcomes and a cost-benefit advantage over treatment as usual [4]. In recent years, policy makers have invested resources to develop infrastructure to support EBP in many large mental health systems [1]; yet the integration of EBP into practice continues to be highly variable [5, 6].

There is a growing interest in identifying effective implementation strategies that will increase adoption, implementation, and sustainment of EBP. However, most prior implementation studies in community mental health settings have focused on training and consultation, despite evidence that these strategies do not produce sustainable changes in therapist behavior [7, 8]. This pilot study seeks to understand the feasibility and acceptability [9] of two incentive-based implementation strategies that have been used in health settings and have direct relevance to and implications for policy and practice in public mental health systems across the USA.

One of the most robust findings from our efforts to study EBP implementation over the past 5 years in the Philadelphia public mental health system is that therapists do not feel rewarded or recognized for using EBP, which may limit their motivation to incorporate these interventions into their practice [5, 10–12]. Recent work in healthcare has used incentive-based strategies to change provider and patient behavior, drawing from behavioral economics, which offers insights for designing incentives that account for the psychological biases that drive human behavior [13]. These strategies have shown robust effects on behavior change for both patients and providers [14–18]. Two incentive-based implementation strategies that could be deployed in mental health are financial and social incentives [19]. Incentives and rewards fall under the “reward and threat” category of the Behavior Change Technique Taxonomy. Specifically, incentives refer to informing individuals that money, vouchers, or valued objects will be delivered if there is an effort to perform a behavior, whereas rewards refer to giving an individual money, vouchers, or valued objects when an effort to perform a behavior occurs [20].

Financial incentives assume that variation in clinician performance is caused by variability in motivation and that financial incentives will add to motivation [21]. Across healthcare more broadly, a Cochrane systematic review found that financial incentives may be effective in changing healthcare professional practice, particularly when improving processes of care [22]. A few studies have examined the effectiveness of financial incentives for implementation of substance abuse interventions in drug and alcohol settings [23, 24] and suggest that financial incentives may be a powerful lever to change substance abuse counselor behavior in the short term [23]. More recently, the National Child Traumatic Stress Network called for the use of financial incentives in the delivery of trauma-focused cognitive-behavioral therapy (TF-CBT) nationally [25], although such incentives for delivery of EBP have not been studied.

An alternative strategy, social incentives, also assumes that variation in performance is caused by variability in motivation, but that the basis of this motivation is a desire to uphold personal, internalized professional standards and a high level of quality care. When public recognition of a peer reveals discrepancies between an individual’s behavior and that of her peers, particularly in the presence of strong professional norms, she is motivated to reduce the discrepancy by changing her behavior. This activation of peer comparisons [19] can be particularly salient for professions that have strong norms such as mental health providers. Leveraging social incentives to change therapist behavior, such as having a supervisor or leader of an agency publicly recognize therapist performance, has been lauded as a potentially effective strategy in the healthcare literature [19, 26, 27]. However, while early research suggests this approach is promising [28], social incentives have not been studied in mental health.

The primary aims of this pilot study were to evaluate the feasibility and acceptability of two incentive-based implementation strategies in mental health settings. An exploratory aim was to examine the preliminary comparative effectiveness of the implementation strategies on therapist adherence to cognitive-behavioral therapy (CBT), an EBP. Pilot results from two community mental health agencies will inform the design of a large cluster randomized trial that will be powered to test the effectiveness of the implementation strategies on adherence to CBT. Specifically, we hypothesized that the two implementation strategies would be feasible and acceptable in community mental health agencies from the perspectives of therapists and leaders.

Methods

This study served as a pilot study to inform the design of a larger trial. Data from this pilot study will not be included in the main study.

Setting

This study took place in the child outpatient programs of two community mental health agencies that were actively implementing CBT as part of a larger implementation initiative in the city of Philadelphia [29]. We elected to approach two agencies that were representative of the landscape of providers in the city of Philadelphia based on the number of therapists employed and youth served annually. Our goal was to recruit approximately ten therapists so that we could ascertain the feasibility and acceptability of the study procedures, consistent with the goals of a pilot study.

Participants

Therapist participants

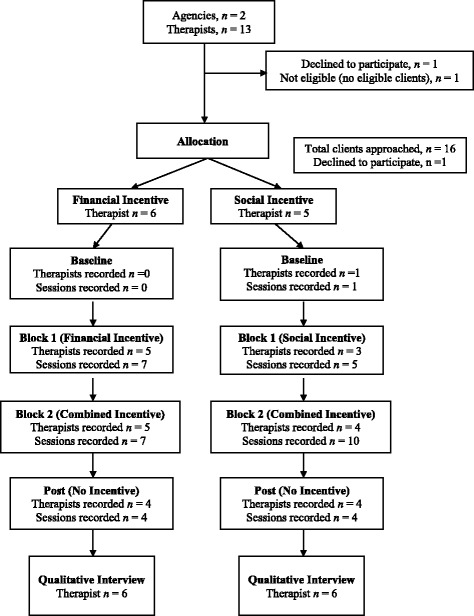

Therapists (n = 11) were predominantly female (n = 9, 81.8%) and were an average of 37.1 years old (SD = 10.6). Therapists identified as White (n = 7, 63.6%), Hispanic/Latino (n = 4, 36.4%), and Black (n = 1, 9.1%). All therapists held master’s degrees and had an average of 6.64 years of full-time clinical experience (SD = 5.26). See Fig. 1.

Fig. 1.

Flow chart of the inclusion and allocation of agencies and participants

Client participants

Clients (n = 15) averaged 12.8 years of age (SD = 4.13) and were predominantly Black (n = 13, 86.7%). One client identified as Hispanic/Latino and another identified as American Indian. The primary diagnosis of most clients as reported by their therapist was post-traumatic stress disorder (n = 10, 66.7%); three clients had adjustment disorder, and two had depressive disorder diagnoses. The average number of completed sessions at the time that clients were first enrolled in the study was 10.73 sessions (SD = 5.62).

Leader participants

Leaders (n = 4) were all female and identified as White. Leaders included clinic directors and direct supervisors, were an average of 45.3 years old (SD = 15.42), and had an average of 20.50 years of full-time clinical experience (SD = 16.05); all held master’s degrees.

Study design

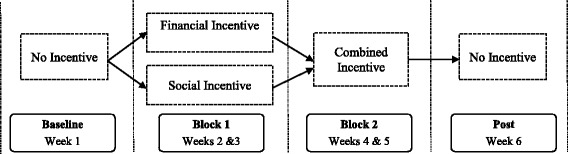

We collected data at each agency for 6 weeks (see Fig. 2). In week 1 (i.e., baseline), therapists were not eligible to receive an incentive or a feedback report. In weeks 2–3 (block 1), therapists were randomized to receive either the financial incentive (FI) or the social incentive (SI); six therapists were randomized to FI and five to SI. In weeks 4–5 (block 2), therapists were eligible to receive both FI and SI (i.e., a dual incentive). One audio-recorded client session within each block was randomly selected per therapist; therapists received the reward if this randomly selected session met the criterion for adherence to CBT (described below). In week 6 (i.e., post-incentive), therapists were not eligible to receive an incentive or a feedback report but still provided recordings of their in-session behavior.
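The per-block selection rule can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study's procedure: the record layout `(session_id, therapist_id, block)`, the block labels, and the function name `select_reward_sessions` are all hypothetical, and the seeded generator stands in for whatever randomization the research team actually used.

```python
import random
from collections import defaultdict

# Hypothetical block labels; only the two incentive blocks carry a reward.
REWARD_BLOCKS = ("block1", "block2")


def select_reward_sessions(sessions, rng, reward_blocks=REWARD_BLOCKS):
    """Pick one recorded session at random per (therapist, block) pair.

    `sessions` is an iterable of (session_id, therapist_id, block) tuples.
    Baseline and post-incentive recordings are ignored because no reward
    was at stake in those weeks.
    """
    groups = defaultdict(list)
    for session_id, therapist_id, block in sessions:
        if block in reward_blocks:
            groups[(therapist_id, block)].append(session_id)
    # Sort IDs so the draw depends only on the seed, not on logging order.
    return {key: rng.choice(sorted(ids)) for key, ids in groups.items()}


sessions = [
    ("s1", "T1", "block1"), ("s2", "T1", "block1"), ("s3", "T1", "block2"),
    ("s4", "T2", "block1"), ("s5", "T2", "post"), ("s6", "T1", "baseline"),
]
picked = select_reward_sessions(sessions, random.Random(2015))
print(picked)
```

In this sketch a therapist with no recording in a block simply gets no entry for that block, so no session can be evaluated for that block's reward.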

Fig. 2.

Overview of study design

Procedure

We contacted agency leaders to ascertain their interest in participation and then met with each leader to describe the study and obtain their feedback on its design. To launch the study, we held a 1-h meeting with all eligible therapists, described the incentives in depth, and ensured that participants understood the criteria that would be used to determine incentive eligibility. Therapists were thus aware of the behaviors they needed to engage in to receive the incentive before the interventional component of the study (blocks 1 and 2) began. During this meeting, therapists were told that they would be randomly assigned to FI or SI during block 1 and that they would be eligible for both FI and SI during block 2. This approach was selected at the request of agency leaders who wanted all therapists to have the opportunity to earn the financial incentive at some point in the study. Participants were randomly assigned to one of the two conditions using simple randomization procedures. The randomization sequence was generated by the principal investigator with a 1:1 allocation. Conditions were written on folded pieces of paper that therapists drew out of a hat (i.e., neither therapists nor research staff could see which condition a therapist had selected until the paper was opened). Research staff and therapists were not blind to condition. A trained research assistant was assigned to each agency to facilitate client recruitment. All clients aged 7–24 years who were receiving individual face-to-face CBT from an enrolled therapist at either participating agency were eligible for the study. Study participation for clients entailed granting permission for their sessions to be recorded. Recording required youth consent or assent and, for clients under 18, guardian consent. Youth were paid $10 for each recorded session.

Following study completion, all participating therapists and leaders in the two agencies completed individual qualitative interviews with trained research assistants that asked about the acceptability and feasibility of the conditions, study design, and research procedures. Data were collected from September to December 2015. The pilot trial ended when data collection was complete at each agency.

Clinical intervention

The clinical intervention of interest is CBT. CBT has amassed a large body of evidence supporting its effectiveness as a treatment for a wide range of youth psychiatric disorders [30–32]. CBT refers to a group of interventions that share the premise that psychiatric disorders are maintained by cognitive and behavioral factors. These interventions target maladaptive cognitions and behaviors to reduce symptoms and improve functioning [30]. Common strategies used in CBT include managing negative thoughts (e.g., cognitive restructuring), changing maladaptive behaviors (e.g., exposure), managing maladaptive mood and arousal (e.g., behavioral activation), and general skill training (e.g., problem-solving; [33]).

Implementation strategies

The financial incentive condition was designed based on principles of behavioral economics [19, 21]. Specifically, financial rewards were paid separately from regular salary to increase saliency [14, 34] and were delivered quickly following the desired behavior [19]. Therapists in this condition could earn $100 if a randomly selected session from the 2-week period met criterion for adherent CBT delivery; the reward was provided in cash within 1 week. The social incentive was designed to activate peer comparisons via public recognition within the therapist’s agency. Therapists in the social incentive condition received public recognition if a randomly selected session from the 2-week period met criterion for adherent CBT delivery. Specifically, their leader sent an email to the entire team celebrating that the therapist had met the target behavior (i.e., social reward). The email (see Additional file 1) was drafted by the research team to ensure consistency.

Based on initial conversations with leaders and therapists, we also provided therapists in both arms with a feedback report on the randomly selected session from each block of study participation (i.e., weeks 2–3 and 4–5). These one-page feedback reports were written by doctoral-level clinical psychologists and described areas of strength and opportunities for improvement in therapist delivery of CBT.

Target behavior for rewards

The target behavior for receipt of reward was therapist adherence to CBT. To determine if therapists were implementing CBT in an adherent manner, we used the Therapy Process Observational Coding System for Child Psychotherapy-Revised Strategies Scale (TPOCS-RS) [35]. The TPOCS-RS is the gold-standard observational coding system designed to capture the extensiveness of adherence to a range of psychosocial interventions for youth, including CBT, and shows good internal consistency and validity [35, 36]. The tape from every recorded session (including the one randomly selected for evaluation) was assessed by a doctoral-level rater trained in the TPOCS-RS by the instrument developer. Training followed established procedures [35]. Prior to initiating coding in this study, the rater independently coded 30 certification sessions and calculated intraclass correlation coefficients (ICCs) against gold-standard ratings. The rater exceeded the TPOCS-RS certification standard of ICCs > .59 for all items (ICC range = .76–.97).

We focused on the TPOCS-RS CBT subscale, which includes 12 CBT interventions (see Table 1 for interventions). Each component was rated from 1 (not at all) to 7 (extensively). While the TPOCS-RS has several options for scoring (see [37] for discussion), we used the maximum score approach, in which the highest score across the 12 intervention components is used. We chose the maximum score approach because we would not expect a therapist to use all 12 components in each session; delivering any CBT model component with extensive adherence is the goal behavior. For example, a therapist might use cognitive education (extensiveness = 7) and relaxation (extensiveness = 3), but not use psychoeducation (extensiveness = 1) or exposure (extensiveness = 1). The therapist would receive a 7 for that session. Thus, each therapy session received a single maximum score ranging from 1 to 7. To be eligible for the reward, the maximum CBT score for the therapist’s randomly selected session had to be 4 or greater. We selected this benchmark based on empirical literature demonstrating that the average extensiveness score obtained by therapists trained in CBT in usual care is 4 out of 7 [37]. During the initial recruitment meeting, we presented this information in detail so that therapists were aware of the scoring system and benchmark.
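The maximum score approach and the reward benchmark reduce to a short rule. In the Python sketch below, the dictionary keys are free-text item labels and `max_cbt_score` / `meets_criterion` are hypothetical names; it assumes that items not coded in a session sit at the scale floor of 1 ("not at all").

```python
# Benchmark described in the text: a session qualifies when its highest
# TPOCS-RS CBT item rating (1 = not at all, 7 = extensively) is 4 or more.
REWARD_THRESHOLD = 4
SCALE_MIN, SCALE_MAX = 1, 7


def max_cbt_score(item_ratings):
    """Maximum score approach: highest extensiveness rating across CBT items.

    `item_ratings` maps item labels to ratings; items that were not coded are
    assumed to sit at the scale floor (1), so an empty mapping scores 1.
    """
    for item, rating in item_ratings.items():
        if not SCALE_MIN <= rating <= SCALE_MAX:
            raise ValueError(f"{item}: rating {rating} is outside {SCALE_MIN}-{SCALE_MAX}")
    return max(item_ratings.values(), default=SCALE_MIN)


def meets_criterion(item_ratings, threshold=REWARD_THRESHOLD):
    """True when the session's maximum CBT score reaches the reward benchmark."""
    return max_cbt_score(item_ratings) >= threshold


example = {"cognitive education": 7, "relaxation": 3, "psychoeducation": 1, "exposure": 1}
print(max_cbt_score(example), meets_criterion(example))  # 7 True
```

Because only the maximum matters, a session with many lightly delivered components (all rated 2 or 3) still falls short of the benchmark, whereas a single component delivered at 4 or above qualifies.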

Table 1.

Number of times each CBT intervention was scored

| TPOCS-RS item | Times scored, n (% of sessions) | Average rating when scored, M (SD; range) |
|---|---|---|
| Any intervention scored | 37 (100) | |
| Psychoeducation | 21 (56.8) | 2.57 (.81; 2–4) |
| Cognitive education | 22 (59.5) | 3.00 (1.54; 2–7) |
| Cognitive distortion | 10 (27.0) | 2.40 (.97; 2–5) |
| Functional analysis | 4 (10.8) | 2.00 (0.0; 2–2) |
| Relaxation strategies | 17 (45.9) | 3.88 (1.80; 2–7) |
| Respondent strategies | 4 (10.8) | 5.75 (1.26; 4–7) |
| Behavioral activation | 0 (0) | N/A |
| Coping skills | 7 (18.9) | 2.71 (1.50; 2–6) |
| Skill building | 7 (18.9) | 2.00 (0.0; 2–2) |
| Operant strategies (child) | 19 (51.4) | 2.21 (.42; 2–3) |
| Operant strategies (parent) | 3 (8.1) | 3.00 (1.00; 2–4) |
| Parenting skills | 2 (5.4) | 2.50 (.71; 2–3) |

TPOCS-RS Therapy Process Observational Coding System for Child Psychotherapy-Revised Strategies Scale. Percentages reflect the proportion of all coded sessions (n = 37) in which each intervention was scored

N/A not applicable

Analytic plan

We were interested in two primary outcomes (i.e., feasibility and acceptability) and an exploratory outcome (adherence to CBT). Feasibility and adherence were investigated quantitatively whereas acceptability was investigated using qualitative methods.

Feasibility

To ascertain feasibility, we calculated the ratio of (a) number of therapists agreeing to participate divided by number of eligible therapists in each program and (b) number of clients agreeing to be recorded divided by number of eligible clients approached to participate.
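Both ratios are simple proportions. The Python sketch below applies them to the counts reported in the Results (2 of 2 agencies, 11 of 13 therapists, 15 of 16 clients); the helper name `participation_rate` is illustrative only.

```python
def participation_rate(agreed, eligible):
    """Feasibility ratio: number who agreed divided by number eligible (or approached)."""
    if eligible <= 0 or not 0 <= agreed <= eligible:
        raise ValueError("expected eligible > 0 and 0 <= agreed <= eligible")
    return agreed / eligible


# Counts taken from the Results: agencies, therapists, and clients.
rates = {
    "agency": participation_rate(2, 2),
    "therapist": participation_rate(11, 13),
    "client": participation_rate(15, 16),
}
for level, rate in rates.items():
    print(f"{level}: {rate:.0%}")
# agency: 100%
# therapist: 85%
# client: 94%
```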

Acceptability

All qualitative interviews with therapists and leaders were audiotaped and transcribed verbatim. Transcripts were analyzed in an iterative process based upon an integrated approach that incorporated both inductive and deductive features [38]. Through a close reading of four transcripts, the investigators developed a set of codes that were applied to the data (i.e., inductive approach). A priori codes derived from the original research questions and previous literature were also applied (i.e., deductive approach). Specifically, acceptability, or how palatable or satisfactory participants found the implementation strategies to be, was of interest. A random subset of transcripts (20%) was coded by two investigators, and inter-rater reliability was found to be excellent (κ = .94) [39]. Through the approach described above, the first author produced memos including examples and commentary regarding themes that emerged from the codes to interpret the data [38].
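Cohen's kappa compares the coders' observed agreement with the agreement expected by chance from each coder's marginal code frequencies. The Python sketch below assumes each transcript segment receives exactly one code from each coder; the unit of analysis and software used in the study are not described, so this illustrates the statistic rather than reproducing the reported κ = .94. The function name and example codes are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assign one code per segment."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("code lists must be non-empty and the same length")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement from each coder's marginal code proportions.
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / n**2
    if expected == 1:
        return 1.0  # both coders used one identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes for four transcript segments.
coder_1 = ["acceptable", "acceptable", "ethical concern", "feedback"]
coder_2 = ["acceptable", "ethical concern", "ethical concern", "feedback"]
print(round(cohens_kappa(coder_1, coder_2), 3))  # 0.636
```

Here the coders agree on 3 of 4 segments (0.75), but chance agreement is 5/16, so kappa is (0.75 - 0.3125) / (1 - 0.3125), roughly 0.64, noticeably lower than raw agreement.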

Adherence

First, we examined whether rates of reward receipt (i.e., the randomly selected tape received a score of 4 or higher on at least one TPOCS-RS item) differed between therapists initially randomized to financial incentive (FI; n = 6) or social incentive (SI; n = 5) using Pearson chi-square tests or Fisher’s exact tests. Second, we compared CBT adherence rates by initial randomization status across the full sample of sessions. Third, we compared CBT adherence rates between the active incentive project phase (i.e., blocks 1 and 2) and the post-period for each intervention arm.
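A 2 × 2 Pearson chi-square comparison of this kind can be computed in closed form as N(ad - bc)² divided by the product of the row and column totals, with the 1-df p-value obtained from the complementary error function. The Python sketch below is an illustrative, uncorrected version (no Yates continuity correction) with a hypothetical function name. Applied to the active-incentive versus post-incentive counts implied by the Results (21 of 29 vs 2 of 8 sessions meeting criterion), it gives χ² ≈ 5.99 with p ≈ .014. With expected cell counts this small, an exact test is often preferred.

```python
import math


def pearson_chi2_2x2(a, b, c, d):
    """Uncorrected Pearson chi-square for the 2x2 table [[a, b], [c, d]].

    Rows are the two groups being compared; columns are "met criterion" and
    "did not meet criterion". Returns (statistic, p) on 1 degree of freedom.
    """
    n = a + b + c + d
    margins = (a + b) * (c + d) * (a + c) * (b + d)
    if margins == 0:
        raise ValueError("a row or column total is zero")
    chi2 = n * (a * d - b * c) ** 2 / margins
    # With 1 df the statistic is a squared standard normal, so
    # P(X >= chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Active incentive blocks: 21 of 29 sessions met criterion; post-incentive: 2 of 8.
chi2, p = pearson_chi2_2x2(21, 8, 2, 6)
print(f"chi2 = {chi2:.2f}, p = {p:.3f}")  # chi2 = 5.99, p = 0.014
```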

Ethics

All procedures were approved by the city of Philadelphia and University of Pennsylvania institutional review boards. Leaders within each community mental health agency were informed and gave permission for the study to be conducted within their respective sites. All therapist, leader, and client participants completed written, informed consent prior to initiating study participation. All participants were informed that they could withdraw from the study at any time.

Results

Feasibility

Agency feasibility

We contacted leaders of two community mental health agencies in Philadelphia, and both agencies (100%) agreed to participate.

Therapist feasibility

Eleven out of the 13 (85%) eligible therapists agreed to participate in the study. One therapist declined participation because s/he did not have any clients eligible for the study. The second therapist declined participation because s/he was a part-time clinician (i.e., independent contractor) and did not feel comfortable recording patient sessions. Therapists recorded 41 sessions across 15 unique clients over the project period (M = 3.73 sessions per therapist, SD = 1.95); one therapist who was randomized did not record any sessions.

Client feasibility

Fifteen out of the 16 (94%) eligible clients agreed to participate in the study. One client declined to participate.

Acceptability

Therapist and leader study participation

All participants, including therapist and leader participants, reported that they found their overall experience with the study to be highly acceptable. A number of participants described the work that they do as “thankless work,” and remarked that receiving a financial or social reward for their contributions was motivating. One therapist said, “I get paid very little for a lot of work, and a lot of hard and heavy work,” further reflecting that the reward she received made her feel appreciated and acknowledged in a way that she rarely experienced.

Acceptability of the financial incentive

The majority of therapists and leaders reported that they found the financial incentive condition to be acceptable. One therapist reported, “It was awesome having money. It was nice and instant, and handing you the money was pretty cool, you know it was Christmas time so it was awesome.” A number of therapists noted that receiving actual cash immediately was also motivating. At the same time, some participants struggled with the ethics of receiving additional remuneration for activities that they perceived as part of their daily job. This quote, which was echoed almost verbatim by a few individuals, illustrates this concern: “I think you should be delivering your best work whether you are incentivized or not.” One leader also reflected the tension around this ethical issue by saying, “The business part of me is saying yes, absolutely incentivize them. The ethical side of me is like, oh that’s a good question. And then the business side is like, shut up ethical side.”

Acceptability of social incentive

The majority of therapists and leaders also reported that the social incentive condition was acceptable, because “being recognized by your supervisor for the work that you’ve done is great.” One of the leaders reported that s/he and the therapists found the social incentive so appealing that they picked it up as a department and started sending out emails recognizing outstanding therapists after the pilot study. Additionally, the leader reported that the social incentive condition had the unintended consequence of making independent contractor therapists “feel part of the group” in a way that they had not felt previously. A leader in the other agency reported that social incentives were already a part of the fabric of their agency; thus, they did not have as much of an impact on the therapists. Both leaders reported that the content of the email felt inauthentic because they did not draft it themselves.

Client study participation

Six of the participants (five therapists and one leader) reported that youth clients and their legal guardians found study participation acceptable (the other participants did not comment on client acceptability). One therapist reported an unintended consequence: participating in the study anecdotally increased client engagement and session attendance. Additionally, two therapists expressed surprise at how interested their clients and guardians were in participating in research.

Acceptability of the feedback report

The majority of therapists and leaders reported that the feedback reports provided to the therapists as part of study participation were a very important feature of the study. One leader noted, “I think what was the most surprising was the feedback report. Initially, the financial incentive was the most exciting, but I think what turned out to be more exciting was the feedback.” Participants reported that they seldom had the opportunity to receive feedback on their in-session behavior and that receiving brief feedback reports gave them an opportunity to improve their practice.

Adherence

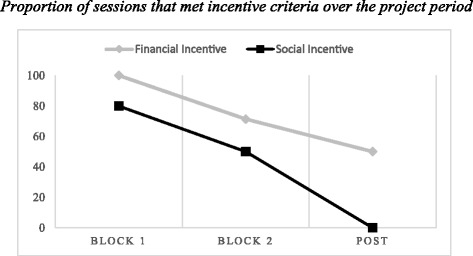

Table 1 shows the number of times each CBT intervention was scored using the TPOCS-RS items in this sample and the average rating when scored. The average maximum TPOCS-RS score across sessions was 4.08 (SD = 1.77; range = 2–7). First, we examined whether there were differences in adherence between therapists originally randomized to FI (n = 6 therapists) and those randomized to SI (n = 5 therapists) in block 1 only, using the intent-to-treat sample. A higher proportion of therapists randomized to FI received the reward (n = 5 of 6 therapists, 83.3%; 1 therapist did not recruit clients in block 1) relative to those randomized to SI (n = 2 of 5 therapists, 40%; two therapists did not recruit clients in block 1), although this difference was not significant (Fisher’s exact test p = .24). Initial randomization status was not associated with receiving the combined reward in block 2 (FI n = 5 of 6 therapists, 83.3%; SI n = 4 of 5 therapists, 80%; χ² = .02, p = .89). Across all time points in the full sample of sessions (n = 37), 78.3% of CBT sessions delivered by therapists initially randomized to FI met criteria for the reward compared to 47.4% of those delivered by therapists initially randomized to SI (χ² = 3.63, p = .06; see Fig. 3).
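The block 1 comparison uses Fisher's exact test, which sums hypergeometric probabilities over all 2 × 2 tables with the same margins. In the Python sketch below (function name hypothetical), the counts assume that therapists who did not record a session in block 1 are treated as not receiving the reward, giving 5 of 6 FI therapists versus 2 of 5 SI therapists (40%); under that assumption the two-sided p is 80/330, about .24.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p for the 2x2 table [[a, b], [c, d]].

    Holds row and column totals fixed and sums the hypergeometric
    probabilities of every table that is no more likely than the observed one.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Probability that the top-left cell equals x given the margins.
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    # A small relative tolerance keeps tables tied with the observed one.
    return sum(
        prob(x) for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-7)
    )


# Block 1: FI 5 rewarded / 1 not; SI 2 rewarded / 3 not.
p = fisher_exact_two_sided(5, 1, 2, 3)
print(f"p = {p:.2f}")  # p = 0.24
```

The "no more likely than observed" rule is one common definition of the two-sided exact p-value; doubling the one-sided p is an alternative that can give different values for asymmetric tables.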

Fig. 3.

Figure displays proportions of sessions that met incentive criteria as a function of initial randomization status. In block 1 (weeks 2 and 3), therapists were randomized to receive either the financial or social incentive. In block 2 (weeks 4 and 5), therapists received both incentives. In post (week 6), therapists did not receive incentives

Next, we examined whether adherence was higher during the incentive periods than during the post-incentive period. Compared to the active incentive periods (blocks 1 and 2 combined, n = 29 sessions across ten therapists), sessions recorded at post-incentive (n = 8 sessions across eight therapists) were significantly less likely to meet the minimum threshold for the incentive (72.4% vs 25%, χ² = 5.99, p = .01). When sessions recorded in the post-phase only were examined by initial randomization status, 2 of 4 (50%) sessions delivered by therapists randomized to the financial incentive met criteria for the incentive, compared with 0 of 4 (0%) sessions delivered by therapists randomized to the social incentive (public recognition); this difference was not significant (χ² = 2.67, p = .10).

Discussion

The aims of this pilot study were to evaluate the feasibility and acceptability of two incentive-based implementation strategies and to explore their preliminary effectiveness on CBT adherence. This is consistent with the primary role of a pilot study, which is to examine the feasibility of a research endeavor [9]. Findings suggest that both implementation strategies were feasible and acceptable, but that some modifications will be necessary in a larger trial. Additionally, both implementation strategies have the potential to change therapist behavior, although financial incentives may be slightly more effective than social incentives.

Use of implementation strategies based upon principles of behavioral economics [40] was feasible, as indicated by high recruitment rates at the agency (100%), therapist (85%), and client (94%) levels, which are notably higher than those in previous work [5]. However, we faced challenges in the initial recruitment of clients, resulting in only one session recording during the baseline period. We learned from conversations with participants that therapists would benefit from having time to introduce the study to youth in the week preceding recruitment. This was especially necessary because adolescents typically came to session without their guardian, so advance notice was needed to obtain caregiver consent. Future studies will add an extra week before the baseline week to facilitate client engagement, either in person or, with IRB permission, through verbal consent with guardians over the telephone, as we did in a recent study [41].

We also gained information about the feasibility of the study design. In our early conversations with agency leaders, they shared their concern about randomizing therapists within agencies. They were wary of having half of their therapists in a financial incentive condition and the other half in a social incentive condition, given the less than optimal financial environment [12] in community mental health (i.e., they did not want therapists to feel resentful of their colleagues). Thus, we elected to use a design in which all therapists had the opportunity to earn the financial incentive. Although this addressed leader concerns, it also proved to be a complex design that was difficult to explain to study participants, limiting the feasibility of conducting a scaled-up version of this trial [42]. Future studies will randomize at the organization level so that providers receive a single implementation strategy, thus mitigating leader concerns.

In addition to positive indicators of feasibility, both the implementation strategies and participation in research were acceptable from the perspective of therapists and leaders. Corroborating principles from behavioral economics [21], therapists reported appreciating that they received the financial reward as cash (i.e., separate from their paycheck, to increase saliency) [14], promptly, and around the holidays [19]. They generally reported finding both incentive types to be motivating, which is consistent with the underlying theory supporting the use of such strategies [21] and is particularly salient in public mental health settings, where therapists are often underappreciated, overworked, and underpaid [10]. Interestingly, no therapists or leaders noted any negative implications of being subject to incentive strategies within the context of the broader EBP implementation efforts simultaneously taking place in their agencies [11, 29]. This may further support the feasibility of layering incentive-based strategies on top of standard implementation strategies (e.g., training and consultation), although this will need to be tested in future work.

There are limitations to this pilot trial. First, we were unable to obtain baseline measures of therapist behavior, limiting our ability to draw conclusions about how the incentives changed their behavior. Second, we are unable to disentangle the effect of the feedback reports alone on therapist behavior. Third, we did not randomly select the agencies we approached for this study; thus, the findings may not generalize to other agencies.

Several future directions merit consideration regarding both financial and social incentives in community mental health settings. First, with regard to financial incentives, a number of participants raised concerns about the ethics of receiving additional remuneration for work that they perceived to be within the scope of their daily practice. These concerns have been raised in the broader healthcare literature (see [43, 44]), and there may be additional considerations for clinicians serving vulnerable populations such as youth with mental health difficulties. One particular area of interest is the potential impact of such incentives on the therapeutic alliance [45]. For example, clients' awareness that their therapists receive extra compensation for high-quality services may affect their willingness to trust their therapist; alternatively, therapists may use CBT when it is not clinically indicated in order to receive the incentive. However, if incentives are designed ethically and with appropriate safeguards (i.e., maintaining freedom to make informed choices, minimizing existing healthcare inequities, and having rigorous monitoring and evaluation plans), the benefits are likely to outweigh the risks [44].

Another area of interest is the potential impact of financial incentives on clinicians' intrinsic or internalized motivation to deliver high-fidelity CBT [46]. Behavioral economics theory relies on a unitary, expectancy-based view of motivation. In contrast, research on self-determination theory distinguishes among types of motivation along a continuum: purely intrinsic motivation, which is the inherent satisfaction of engaging in an activity; autonomous, internalized forms of extrinsic motivation, in which a behavior is performed not for its own sake but because it aligns strongly with one's closely held values and goals; and completely non-autonomous, externally regulated extrinsic motivation, in which a behavior is performed solely to satisfy an external demand or obtain a contingent reward (e.g., a financial incentive). Meta-analytic research on these types of motivation indicates that external rewards can undermine intrinsic motivation if they are not structured properly [47]. Furthermore, although financial incentives can increase the quantity of targeted behaviors, their effect on the quality of those behaviors is much more modest, particularly compared with the strong effects of internalized motivation on the quality of behavioral performance [48, 49]. This research demonstrates the importance of structuring incentives carefully and of examining both their positive and their potentially iatrogenic effects on providers' behavior. It also highlights the importance of using measures that assess CBT fidelity (i.e., quality) rather than CBT quantity when testing the effects of financial incentives on provider behavior.

Second, a few therapists reported that youth participation in the study may have increased client engagement and session attendance because youth were paid $10 for participating. This suggests that future studies testing incentives targeted at clients to increase engagement may be warranted [14]. However, the clients in this study were highly experienced in therapy, having attended 10 sessions on average, compared with a national modal number of one session [50]. Thus, the current study provides little information about engagement and retention in an EBP, and future studies should include a balanced sample of new and experienced clients.

Third, with regard to the social incentives, leaders indicated that the condition may not have been robust enough to influence therapist motivation. Specifically, leaders noted that the language drafted by the research team was not authentic and, in one agency that already engaged in frequent public recognition, did not carry as much weight. These comments will be carefully considered when planning the social incentive condition for the larger trial, in order to increase the salience of the incentive. Studies to date on non-financial incentives for healthcare providers have primarily used either peer comparisons (showing providers how their performance compares with that of their peers [51]) or public reporting of provider or health system performance (e.g., [52, 53]). Social incentives have been widely tested in other industries and settings to enhance performance and productivity. For example, in a study at a Korean broadband internet firm, specific comments to employees about their positive behaviors and results were as effective as financial incentives in improving performance [54]. Within healthcare, social incentives have most commonly been tested among community health workers in low- and middle-income settings, where they typically take the form of written or verbal recognition from a superior for outstanding performance or improvement (e.g., [55]). To our knowledge, our study is among the first to use public recognition (rather than peer comparison or public reporting) as a social incentive in a mental health setting in a high-income country.

Additionally, the results of this study suggest the importance of assessing organizational context before selecting and evaluating implementation strategies [56]. Organizational characteristics that may have influenced the study results include the extent to which agencies already use social incentives; the degree and quality of clinical supervision, which shapes therapists' perceptions of the usefulness of feedback; the extent to which agencies engender proficient cultures that prioritize improvement in client well-being and clinician competence in up-to-date treatment practices; and the degree of innovation-values fit between CBT and agency leadership's preferred theoretical orientation. We were surprised to learn that the feedback report was such a motivating feature of the study; participants reported that it was potentially more motivating than the financial incentive. Given the power of audit and feedback to change behavior [57] and the lack of opportunities for therapists in the community to receive feedback on their session behavior, we have elected to include these reports in our future study as a stand-alone condition.

As a pilot, the study was not powered to detect the impact of the implementation strategies on changes in CBT adherence over the course of the study [58]. However, in exploratory analyses, we found a trend suggesting that the financial incentive condition may have had a stronger effect on CBT adherence than the social incentive condition. If replicated in the larger study, this finding would corroborate previous work suggesting that financial incentives increase implementation of an EBP for substance use disorders in youth [23]. Our preliminary results suggest that it may be possible to change clinicians' EBP implementation behavior using incentives. One consideration based on the observed pattern of results is the duration of the active intervention. In our study, the incentive-oriented implementation strategies were delivered only briefly (i.e., for 4 weeks). Adherence to CBT was initially high and then decreased over the course of the 4 weeks. One possible explanation is that the initially high adherence reflected reactivity to being observed (i.e., the Hawthorne effect). Given this trend of decreasing adherence over a brief study, future research examining the long-term effects of incentive-oriented strategies is critical. To put the adherence rates into context, observations of therapist practices in usual care settings indicate that when therapists use EBPs, average adherence is of low intensity (i.e., equivalent to a score of 2 or 3 on the TPOCS-RS) [35, 59, 60]. In this sample, therapist CBT adherence varied across CBT interventions; however, the average maximum score on the TPOCS-RS suggested that most therapists delivered CBT at least at a moderate intensity in the recorded sessions, which is promising.

Conducting this pilot work has informed the design of a larger cluster randomized trial in which we plan to test the comparative effectiveness of two incentive-based implementation strategies versus implementation-as-usual for increasing clinician use of CBT; a proposal for this trial will be submitted to the National Institute of Mental Health. If funded, we plan to randomize Philadelphia public mental health agencies implementing CBT to one of three implementation strategies: (1) implementation-as-usual (IAU), in which therapists receive written performance feedback only, consistent with the approach of the EBP initiatives in the city of Philadelphia; (2) financial incentive, consisting of a weekly financial payment plus feedback; and (3) social incentive, consisting of public ranking on a weekly “leaderboard” listing all enrolled therapists, plus feedback. Randomization will occur at the agency level to address contamination concerns, as well as leader and ethical concerns about giving all therapists within a single agency the same opportunity to earn extra compensation. Therapists in conditions 2 and 3 will receive rewards if they deliver CBT at a prescribed fidelity criterion. Outcomes will include adherence to CBT and the costs and cost-effectiveness of the three implementation strategies; examining costs and cost-effectiveness is important for informing the scalability of such an approach. The design of this trial was directly informed by our experiences in this pilot study and includes randomization at the organization rather than the clinician level, as well as a stronger social incentive condition that is more directly driven by principles of behavioral economics [26].
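The agency-level design described above can be illustrated with a short Python sketch. It is a hypothetical example, not the trial's actual randomization procedure: the arm labels, agency and therapist identifiers, seed, and allocation scheme (shuffle the agencies, then deal them into the three arms in rotation so arm sizes differ by at most one) are all assumptions. What it shows is the key property of cluster randomization: therapists are never randomized individually, and each inherits the condition of their agency.

```python
import random

# Hypothetical labels for the three planned conditions.
ARMS = ("IAU", "FI", "SI")


def randomize_agencies(agencies, seed=None):
    """Assign each agency (the cluster) to exactly one arm.

    Agencies are shuffled and then dealt into the arms in rotation, so arm
    sizes differ by at most one agency; any leftover agencies go to the
    arms listed first.
    """
    rng = random.Random(seed)  # seeded RNG so the allocation is reproducible
    order = list(agencies)
    rng.shuffle(order)
    return {agency: ARMS[i % len(ARMS)] for i, agency in enumerate(order)}


def therapist_arms(therapist_agency, agency_arm):
    """Give every therapist the arm of their agency.

    No therapist is randomized individually, so colleagues within one agency
    always share the same condition.
    """
    return {therapist: agency_arm[agency]
            for therapist, agency in therapist_agency.items()}


if __name__ == "__main__":
    agencies = [f"agency_{k}" for k in range(1, 10)]  # hypothetical IDs
    allocation = randomize_agencies(agencies, seed=2017)
    staff = {"t01": "agency_1", "t02": "agency_1", "t03": "agency_4"}  # hypothetical
    for agency, arm in sorted(allocation.items()):
        print(agency, arm)
    print(therapist_arms(staff, allocation))
```

A real trial might instead stratify or constrain the randomization on agency characteristics (e.g., size); the simple rotation here only keeps arm sizes balanced.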

Based on our experience and results from this pilot study, we will also measure and test agency-level factors that may moderate the effects of incentives on clinicians' CBT fidelity. Innovation-values fit [61] is an important potential agency-level moderator, given Philadelphia's long history as a home for evidence-based family therapy approaches and the possibility that agencies and their leadership may be more aligned with these approaches than with CBT. Other important potential moderators include proficient organizational culture, which encompasses norms and behavioral expectations that clinicians prioritize improvement in client well-being and exhibit competence in up-to-date treatment practices; molar organizational climate, which describes clinicians' shared perceptions of the extent to which the work environment supports their personal well-being; and the extent to which agencies currently use social incentives [62]. Both proficient culture and molar climate have been linked to improved implementation of evidence-based practices in behavioral health services [5, 63–65]. As a result, we expect that incentives may have a less powerful effect on clinicians' behavior in agencies with these positive organizational characteristics, where implementation may already be relatively strong. Alternatively, proficient organizational cultures and supportive climates may increase the effects of incentives, particularly social incentives, by framing them as a consistent part of an overall organizational priority on improving client well-being. Positive innovation-values fit, in which CBT is concordant with leaders' and clinicians' preferred approaches to treatment, should also enhance the effects of incentives on therapists' practice behavior.

Implications for implementation science

This pilot study has several implications for advancing the field of implementation science. First, the findings demonstrate the utility of iterative pilot work: insights gleaned from the mixed-methods pilot informed the design of the larger trial, underscoring the value of piloting before launching a fully powered trial. Second, this pilot work grew out of observational work [5, 10–12] suggesting the importance of tailored implementation strategies that address therapists' lack of motivation using the behavior change components of reward and threat [20]. Third, this pilot work uses theory to delineate the targets and mechanisms of the implementation strategies, which moves the field toward building causal theory [66]. In the forthcoming trial, we will test the comparative effectiveness of these implementation strategies while also measuring mechanisms, which will further advance this goal.

Conclusions

The aim of this pilot study was to test the feasibility and acceptability of two incentive-based implementation strategies and to conduct a preliminary examination of therapist adherence to CBT. We were able to administer the implementation strategies feasibly, and semi-structured interviews indicated good acceptability. The findings underscore the importance of conducting iterative pilot work to inform the design of future trials.

Acknowledgements

We are grateful for the support that the Department of Behavioral Health and Intellectual disAbility Services has provided us to conduct this work within their system, for the Evidence Based Practice and Innovation (EPIC) group, and for the partnership provided to us by participating agencies, therapists, youth, and families. We thank the following individuals on our research team for assisting with the data collection and analysis: Kyle Chen, Tara Fernandez, Adina Lieberman, Gayatri Nangia, Lauren Shaffer, Amy Summer, and Kyle Szarzynski. We appreciate the contributions provided by Michael Richards, MD, PhD, and Iwan Barankay, PhD, in conceptualizing this work early in the planning of this study.

Funding

Funding for this research project was provided by the University of Pennsylvania Implementation Science Working Group Pilot Fund and by the following NIMH grants: K23MH099179 (Beidas), F32MH103960 (Stewart), and F32MH103955 (Wolk).

Availability of data and materials

The datasets used and analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- CBT: Cognitive-behavioral therapy
- EBP: Evidence-based practice
- FI: Financial incentive
- IAU: Implementation-as-usual
- SI: Social incentive
- TF-CBT: Trauma-focused cognitive-behavioral therapy
- TPOCS-RS: Therapy Process Observational Coding System for Child Psychotherapy-Revised Strategies Scale

Additional file

Email from leaders to therapists. (DOCX 21 kb)

Authors’ contributions

RB is the principal investigator for the study and thus takes responsibility for the study design, data collection, and the integrity of the data. RB, EH, DA, LS, RS, CW, AB, NW, PI, ER, and SC made substantial contributions to the study conception, design, and interpretation. RB and EH analyzed the data. RSB drafted the first version of the manuscript. All authors critically reviewed, edited, and approved the final manuscript.

Ethics approval and consent to participate

The institutional review board (IRB) at the city of Philadelphia approved this study on August 19, 2015 (Protocol Number 2015-12). The city of Philadelphia IRB serves as the IRB of record. The University of Pennsylvania also approved all study procedures on May 21, 2015 (Protocol Number 822526). Written informed consent and/or assent was obtained from all study participants.

Consent for publication

Not applicable

Competing interests

All authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Footnotes

Electronic supplementary material

The online version of this article (10.1186/s13012-017-0684-7) contains supplementary material, which is available to authorized users.

References

1. McHugh RK, Barlow DH. The dissemination and implementation of evidence-based psychological treatments: a review of current efforts. Am Psychol. 2010;65(2):73. doi: 10.1037/a0018121.

2. Gotham HJ, White MK, Bergethon HS, Feeney T, Cho DW, Keehn B. An implementation story: moving the GAIN from pilot project to statewide use. J Psychoactive Drugs. 2008;40(1):97–107. doi: 10.1080/02791072.2008.10399765.

3. Chambless DL, Ollendick TH. Empirically supported psychological interventions: controversies and evidence. Annu Rev Psychol. 2001;52(1):685–716. doi: 10.1146/annurev.psych.52.1.685.

4. Hemmelgarn AL, Glisson C, James LR. Organizational culture and climate: implications for services and interventions research. Clin Psychol-Sci Pr. 2006;13(1):73–89. doi: 10.1111/j.1468-2850.2006.00008.x.

5. Beidas RS, Marcus S, Aarons GA, Hoagwood KE, Schoenwald S, Evans AC, et al. Predictors of community therapists’ use of therapy techniques in a large public mental health system. JAMA Pediatr. 2015;169(4):374–382. doi: 10.1001/jamapediatrics.2014.3736.

6. Kazdin AE. Treatment as usual and routine care in research and clinical practice. Clin Psychol Rev. 2015;42:168–178. doi: 10.1016/j.cpr.2015.08.006.

7. Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev. 2012;69(2):123–157. doi: 10.1177/1077558711430690.

8. Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21. doi: 10.1186/s13012-015-0209-1.

9. Leon AC, Davis LL, Kraemer HC. The role and interpretation of pilot studies in clinical research. J Psychiatr Res. 2011;45(5):626–629. doi: 10.1016/j.jpsychires.2010.10.008.

10. Beidas RS, Stewart RE, Adams DR, Fernandez T, Lustbader S, Powell BJ, et al. A multi-level examination of stakeholder perspectives of implementation of evidence-based practices in a large urban publicly-funded mental health system. Adm Policy Ment Hlth. 2016;43(6):893–908. doi: 10.1007/s10488-015-0705-2.

11. Beidas RS, Aarons G, Barg F, Evans A, Hadley T, Hoagwood K, et al. Policy to implementation: evidence-based practice in community mental health—study protocol. Implement Sci. 2013;8:38. doi: 10.1186/1748-5908-8-38.

12. Stewart RE, Adams DR, Mandell DS, Hadley TR, Evans AC, Rubin R, et al. The perfect storm: collision of the business of mental health and the implementation of evidence-based practices. Psychiatr Serv. 2016;67(2):159–161. doi: 10.1176/appi.ps.201500392.

13. Volpp KG, Asch DA, Galvin R, Loewenstein G. Redesigning employee health incentives—lessons from behavioral economics. N Engl J Med. 2011;365(5):388–390. doi: 10.1056/NEJMp1105966.

14. Asch DA, Troxel AB, Stewart WF, Sequist TD, Jones JB, Hirsch AG, et al. Effect of financial incentives to physicians, patients, or both on lipid levels: a randomized clinical trial. JAMA. 2015;314(18):1926–1935. doi: 10.1001/jama.2015.14850.

15. Troxel AB, Asch DA, Volpp KG. Statistical issues in pragmatic trials of behavioral economic interventions. Clin Trials. 2016. doi: 10.1177/1740774516654862.

16. Halpern SD, French B, Small DS, Saulsgiver K, Harhay MO, Audrain-McGovern J, et al. Randomized trial of four financial-incentive programs for smoking cessation. N Engl J Med. 2015;372(22):2108–2117. doi: 10.1056/NEJMoa1414293.

17. Loewenstein G, Asch DA, Volpp KG. Behavioral economics holds potential to deliver better results for patients, insurers, and employers. Health Affair. 2013;32(7):1244–1250. doi: 10.1377/hlthaff.2012.1163.

18. Volpp KG, Troxel AB, Pauly MV, Glick HA, Puig A, Asch DA, et al. A randomized, controlled trial of financial incentives for smoking cessation. N Engl J Med. 2009;360(7):699–709. doi: 10.1056/NEJMsa0806819.

19. Emanuel EJ, Ubel PA, Kessler JB, Meyer G, Muller RW, Navathe AS, et al. Using behavioral economics to design physician incentives that deliver high-value care. Ann Intern Med. 2016;164(2):114–119. doi: 10.7326/M15-1330.

20. Michie S, Richardson M, Johnston M, Abraham C, Francis J, Hardeman W, et al. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann Behav Med. 2013;46(1):81–95. doi: 10.1007/s12160-013-9486-6.

21. Arnold DR. Countervailing incentives in value-based payment. Healthcare. 2017;5(3):125–8. doi: 10.1016/j.hjdsi.2016.04.009.

22. Flodgren G, Eccles MP, Shepperd S, Scott A, Parmelli E, Beyer FR. An overview of reviews evaluating the effectiveness of financial incentives in changing healthcare professional behaviours and patient outcomes. Cochrane Libr. 2011. doi: 10.1002/14651858.CD009255.

23. Garner BR, Godley SH, Dennis ML, Hunter BD, Bair CM, Godley MD. Using pay for performance to improve treatment implementation for adolescent substance use disorders: results from a cluster randomized trial. Arch Pediatr Adolesc Med. 2012;166(10):938–944. doi: 10.1001/archpediatrics.2012.802.

24. Vandrey R, Stitzer ML, Acquavita SP, Quinn-Stabile P. Pay-for-performance in a community substance abuse clinic. J Subst Abus Treat. 2011;41(2):193–200. doi: 10.1016/j.jsat.2011.03.001.

25. The National Child Traumatic Stress Network. NCTSN Financing and Sustainability Survey Report. 2016:1–17. http://www.nctsn.org/sites/all/modules/pubdlcnt/pubdlcnt.php?file=/sites/default/files/assets/pdfs/nctsn_financing_and_sustainability_survey_report.pdf&nid=1784. Accessed: April 28, 2017.

26. Navathe AS, Sen AP, Rosenthal MB, Pearl RM, Ubel PA, Emanuel EJ, et al. New strategies for aligning physicians with health system incentives. Am J Manag Care. 2016;22(9):610.

27. Liao JM, Fleisher LA, Navathe AS. Increasing the value of social comparisons of physician performance using norms. JAMA. 2016;316(11):1151–1152. doi: 10.1001/jama.2016.10094.

28. Kolstad JR, Lindkvist I. Pro-social preferences and self-selection into the public health sector: evidence from an economic experiment. Health Policy Plan. 2013;28(3):320–327. doi: 10.1093/heapol/czs063.

29. Powell BJ, Beidas RS, Rubin RM, Stewart RE, Wolk CB, Matlin SL, et al. Applying the policy ecology framework to Philadelphia’s behavioral health transformation efforts. Adm Policy Ment Hlth. 2016;43(6):909–926. doi: 10.1007/s10488-016-0733-6.

30. Hofmann SG, Asnaani A, Vonk IJ, Sawyer AT, Fang A. The efficacy of cognitive behavioral therapy: a review of meta-analyses. Cognitive Ther Res. 2012;36(5):427–440. doi: 10.1007/s10608-012-9476-1.

31. Weisz JR, Kuppens S, Ng MY, Eckshtain D, Ugueto AM, Vaughn-Coaxum R, et al. What five decades of research tells us about the effects of youth psychological therapy: a multilevel meta-analysis and implications for science and practice. Am Psychol. 2017;72(2). doi: 10.1037/a0040360.

32. Dorsey S, McLaughlin KA, Kerns SE, Harrison JP, Lambert HK, Briggs EC, et al. Evidence base update for psychosocial treatments for children and adolescents exposed to traumatic events. J Clin Child Adolesc Psychol. 2017;46(3):303–330. doi: 10.1080/15374416.2016.1220309.

33. Sburlati ES, Schniering CA, Lyneham HJ, Rapee RM. A model of therapist competencies for the empirically supported cognitive behavioral treatment of child and adolescent anxiety and depressive disorders. Clin Child Fam Psychol Rev. 2011;14(1):89–109. doi: 10.1007/s10567-011-0083-6.

34. Read D, Loewenstein G, Rabin M, Keren G, Laibson D. Choice bracketing. In: Fischhoff B, Manski CF, editors. Elicitation of preferences. Norwell, MA: Springer; 1999. pp. 171–202.

35. McLeod BD, Smith MM, Southam-Gerow MA, Weisz JR, Kendall PC. Measuring treatment differentiation for implementation research: The Therapy Process Observational Coding System for Child Psychotherapy Revised Strategies scale. Psychol Assessment. 2015;27(1):314. doi: 10.1037/pas0000037.

36. McLeod BD, Weisz JR. The therapy process observational coding system for child psychotherapy strategies scale. J Clin Child Adolesc Psychol. 2010;39(3):436–443. doi: 10.1080/15374411003691750.

37. Smith MM, McLeod BD, Southam-Gerow MA, Jensen-Doss A, Kendall PC, Weisz JR. Does the delivery of CBT for youth anxiety differ across research and practice settings? Behav Ther. 2016. doi: 10.1016/j.beth.2016.07.004.

38. Bradley EH, Curry LA, Devers KJ. Qualitative data analysis for health services research: developing taxonomy, themes, and theory. Health Serv Res. 2007;42(4):1758–1772. doi: 10.1111/j.1475-6773.2006.00684.x.

39. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159–74. doi: 10.2307/2529310.

40. Eisenberg D, Druss BG. Time preferences, mental health and treatment utilization. J Ment Health Policy Econ. 2015;18(3):125–136.

41. Beidas RS, Maclean JC, Fishman J, Dorsey S, Schoenwald SK, Mandell DS, et al. A randomized trial to identify accurate and cost-effective fidelity measurement methods for cognitive-behavioral therapy: project FACTS study protocol. BMC Psychiatry. 2016;16(1):323. doi: 10.1186/s12888-016-1034-z.

42. Mills EJ, Chan A-W, Wu P, Vail A, Guyatt GH, Altman DG. Design, analysis, and presentation of crossover trials. Trials. 2009;10(1):27. doi: 10.1186/1745-6215-10-27.

43. Blumenthal-Barby JS, Burroughs H. Seeking better health care outcomes: the ethics of using the “nudge”. Am J Bioeth. 2012;12(2):1–10. doi: 10.1080/15265161.2011.634481.

44. Lunze K, Paasche-Orlow MK. Financial incentives for healthy behavior: ethical safeguards for behavioral economics. Am J Prev Med. 2013;44(6):659–665. doi: 10.1016/j.amepre.2013.01.035.

45. Heyman J, Ariely D. Effort for payment: a tale of two markets. Psychol Sci. 2004;15(11):787–793. doi: 10.1111/j.0956-7976.2004.00757.x.

46. Ryan RM, Deci EL. Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. Am Psychol. 2000;55(1):68. doi: 10.1037/0003-066X.55.1.68.

47. Deci EL, Koestner R, Ryan RM. A meta-analytic review of experiments examining the effects of extrinsic rewards on intrinsic motivation. Psychol Bull. 1999;125(6):627–68. doi: 10.1037/0033-2909.125.6.627.

48. Cerasoli CP, Nicklin JM, Ford MT. Intrinsic motivation and extrinsic incentives jointly predict performance: a 40-year meta-analysis. Psychol Bull. 2014;140(4):980. doi: 10.1037/a0035661.

49. Jenkins Jr GD, Mitra A, Gupta N, Shaw JD. Are financial incentives related to performance? A meta-analytic review of empirical research. J Appl Psychol. 1998;83(5):777–87. doi: 10.1037/0021-9010.83.5.777.

50. Gibbons MBC, Rothbard A, Farris KD, Stirman SW, Thompson SM, Scott K, et al. Changes in psychotherapy utilization among consumers of services for major depressive disorder in the community mental health system. Adm Policy Ment Hlth. 2011;38(6):495–503. doi: 10.1007/s10488-011-0336-1.

51. Navathe AS, Emanuel EJ. Physician peer comparisons as a nonfinancial strategy to improve the value of care. JAMA. 2016;316(17):1759–1760. doi: 10.1001/jama.2016.13739.

52. Alexander JA, Maeng D, Casalino LP, Rittenhouse D. Use of care management practices in small- and medium-sized physician groups: do public reporting of physician quality and financial incentives matter? Health Serv Res. 2013;48(2pt1):376–397. doi: 10.1111/j.1475-6773.2012.01454.x.

53. Wiley JA, Rittenhouse DR, Shortell SM, Casalino LP, Ramsay PP, Bibi S, et al. Managing chronic illness: physician practices increased the use of care management and medical home processes. Health Affair. 2015;34(1):78–86. doi: 10.1377/hlthaff.2014.0404.

54. Luthans F, Rhee S, Luthans BC, Avey JB. Impact of behavioral performance management in a Korean application. Leadership Org Dev J. 2008;29(5):427–443. doi: 10.1108/01437730810887030.

55. Ashraf N, Bandiera O, Lee SS. Awards unbundled: evidence from a natural field experiment. J Econ Behav Organ. 2014;100:44–63. doi: 10.1016/j.jebo.2014.01.001.

56. Powell BJ, Proctor EK, Glass JE. A systematic review of strategies for implementing empirically supported mental health interventions. Res Soc Work Pract. 2014;24(2):192–212. doi: 10.1177/1049731513505778.

57. Ivers N, Jamtvedt G, Flottorp S, Young JM, Odgaard-Jensen J, French SD, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;6. doi: 10.1002/14651858.CD000259.pub3.

58. Kraemer HC, Mintz J, Noda A, Tinklenberg J, Yesavage JA. Caution regarding the use of pilot studies to guide power calculations for study proposals. Arch Gen Psychiat. 2006;63(5):484–489. doi: 10.1001/archpsyc.63.5.484.

59. Haine-Schlagel R, Fettes DL, Garcia AR, Brookman-Frazee L, Garland AF. Consistency with evidence-based treatments and perceived effectiveness of children’s community-based care. Community Ment Hlt J. 2014;50(2):158–163. doi: 10.1007/s10597-012-9583-1.

60. Garland AF, Brookman-Frazee L, Hurlburt MS, Accurso EC, Zoffness RJ, Haine-Schlagel R, et al. Mental health care for children with disruptive behavior problems: a view inside therapists’ offices. Psychiatr Serv. 2010;61(8):788–795. doi: 10.1176/ps.2010.61.8.788.

61. Jacobs SR, Weiner BJ, Reeve BB, Hofmann DA, Christian M. The missing link: a test of Klein and Sorra’s proposed relationship between implementation climate, innovation-values fit and implementation effectiveness. Implement Sci. 2015;10:A18. doi: 10.1186/1748-5908-10-S1-A18.

62. Glisson C, Landsverk J, Schoenwald S, Kelleher K, Hoagwood KE, Mayberg S, et al. Assessing the organizational social context (OSC) of mental health services: implications for research and practice. Adm Policy Ment Hlth. 2008;35(1–2):98. doi: 10.1007/s10488-007-0148-5.

63. Williams NJ, Glisson C, Hemmelgarn A, Green P. Mechanisms of change in the ARC organizational strategy: increasing mental health clinicians’ EBP adoption through improved organizational culture and capacity. Adm Policy Ment Hlth. 2017;44(2):269–283. doi: 10.1007/s10488-016-0742-5.

64. Williams NJ, Glisson C. The role of organizational culture and climate in the dissemination and implementation of empirically supported treatments for youth. In: Beidas RS, Kendall PC, editors. Dissemination and implementation of evidence-based practices in child and adolescent mental health. 2014. pp. 61–81.

65. Edmondson A. Psychological safety and learning behavior in work teams. Adm Sci Q. 1999;44(2):350–383. doi: 10.2307/2666999.

66. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8(1):139. doi: 10.1186/1748-5908-8-139.
