Abstract

Background:

In recent years, medical schools have provided students access to video recordings of course lectures, but few studies have investigated the impact of this on ratings of courses and teachers. This study investigated whether the method of viewing lectures was related to student ratings of the course and its components and whether the method used changed over time.

Methods:

Preclinical medical students indicated whether ratings of course lectures were based primarily on lecture attendance, video capture, or both. Students were categorized into Lecture, Video, or Both groups based on their responses to this question. The data consisted of 7584 student evaluations collected over 2 years.

Results:

Students who attended live lectures rated the course and its components higher than students who only viewed the video or used both methods, although these differences were very small. Students increasingly watched lectures exclusively by video over time: in comparison with first-year students, second-year students were more likely to watch lectures exclusively by video; in comparison with students in the first half of the academic year, students in the second half of the academic year were more likely to watch lectures exclusively by video.

Conclusions:

With the increase in use of lecture video recordings across medical schools, attention must be paid to student attitudes regarding these methods.

Keywords: Evaluation, lecture capture, student ratings, technology, attendance

Introduction

In recent years, medical schools have begun providing their students with access to video recordings of course lectures.1 Students generally welcome this added resource because it provides them with choice over the location, pace, and frequency of viewing lecture content.2–6 In contrast, faculty instructors tend to be more skeptical of this technology, especially regarding its presumed impact on lecture attendance.7–9 Romanelli et al10 argue that declining attendance at lectures has both professional and educational consequences. Although some studies have explored the relationship between method of viewing lectures and learning outcomes, few studies have investigated its relationship with student ratings of courses and teachers.11

In the medical education literature, 5 studies have reported lower ratings by medical students who viewed lectures by video when compared with students who viewed the same lectures live (of note, no studies have reported higher ratings when medical students view lectures by video). Paegle et al12 found that fourth-year students who attended lectures rated them higher than students who viewed the recordings in an adjacent room via closed-circuit television. However, student ratings of other aspects of the clerkship did not differ. Pohl et al13 randomized second-year students to lecture, video, and simulation methods of teaching the mental status exam. Students in the lecture condition rated the session higher than those in the video condition. Kline et al presented fourth-year students with live and videotaped lectures. They reported that students were less accepting of videotape as a teaching method.14 Leamon et al15 randomized second-year medical students to a lecture or video group and found that students who attended the lectures rated characteristics of the teacher and the lecturer’s overall effectiveness higher than the students in the video condition. Callas et al compared the ratings of clerkship students who attended a lecture in person with students at rural sites who viewed the lecture by videoconference. They reported that lecture attendees rated the lecturer, presentation, and slides higher than students who viewed the lectures by videoconference.16

Three aspects of these studies may limit their generalizability. First, 3 of the 5 took place before the development of the Internet, which now allows students to access video recordings wherever and whenever they wish. Second, the quality of the recordings in all 5 studies was probably inferior to what is typical today. Third, in all but one of these studies, the students rated the lecturer(s) and/or the session(s) but did not rate other aspects of the course or clerkship.

Holbrook and Dupont17 reported that first-year college students were more likely than their more senior peers to miss lectures due to the availability of podcasts. In contrast, a meta-analytic study that looked at the relationship between attendance and year of study found that junior and senior students were less likely than freshman and sophomore students to attend class.18 Moulton studied 10 courses at Harvard and found a consistent and sharp drop in attendance during the period of each course.19 All of these studies looked at university students; whether their findings generalize to a medical student population is not clear.

The goals of this study are to investigate whether the method of viewing preclinical lectures is related to medical students’ ratings of the course overall and its components and whether the method of viewing used differs over time. In contrast to the studies cited above, we will consider student ratings of the course as a whole, the lectures and instructors in the course, along with several other course components. Three research questions are posed:

1. Do medical students’ course ratings differ by viewing method?
2. Does viewing method differ for first- and second-year medical students?
3. Does viewing method change over the course of the medical school year?

Methods

Albert Einstein College of Medicine (Einstein) is a private, not-for-profit medical school located in the Bronx, NY, USA. Each medical school class consists of about 180 students, of whom approximately one-half are women, one-fifth were born outside of the United States, and one-eighth self-identify as belonging to groups traditionally underrepresented in medicine. The average age of matriculating students is about 24 years. At the end of each preclinical course, students are assigned an anonymous course evaluation form that contains standard rating items along with several additional course-specific items. Completion of course evaluation forms is mandatory (a policy that leads to response rates of close to 100%) and is required within 2 weeks of the end of each course. Students are not required to attend lectures, and attendance at lectures is not taken.

In the 2013-2014 and 2014-2015 academic years, students were asked to rate the following 7 aspects of the course using a 5-point response scale (which ranged from 1 = “unsatisfactory” to 5 = “excellent”): Learning Objectives, Use of Appropriate Clinical Correlations, Lectures as a Learning Experience, Case Conferences as a Learning Experience, Availability and Responsiveness of Course Director (or Faculty), Course Examination as a Fair Assessment of the Material Taught, and Course Overall as a Positive Learning Experience. All items were phrased positively, so higher scores indicate better ratings.

Video recordings of preclinical lectures, with variable speed playback, were first made available to students using Panopto software at the beginning of the 2013-2014 academic year. To investigate the impact of this new resource, an additional item was added to the evaluation form for most courses, namely, “Is your rating of lectures based primarily on lecture attendance, video capture, or both?” Within each course, students were categorized into Lecture, Video, or Both groups based on their responses to this question.

Analysis of variance (ANOVA) tests were conducted comparing students in the Lecture, Video, or Both groups on several ratings of course components. If the overall test for an item was found to be significant at the .05 level, it was followed by a set of t tests to determine whether the ratings for each pair of groups (ie, Lecture vs Video, Lecture vs Both, and Both vs Video) also differed. A measure of effect size (Cohen d) was calculated for each significant finding between the Lecture and Video groups.
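As an illustration of the effect size measure, the following minimal sketch computes Cohen d for two groups of ratings. It assumes the pooled standard deviation variant, which the text does not specify; the function name `cohen_d` and the sample ratings are hypothetical and are not study data.

```python
from math import sqrt
from statistics import mean, variance


def cohen_d(group_a, group_b):
    """Cohen d using the pooled standard deviation (assumed variant)."""
    n_a, n_b = len(group_a), len(group_b)
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical 5-point ratings, for illustration only.
lecture_ratings = [5, 4, 4, 5, 3, 4]
video_ratings = [4, 4, 3, 5, 3, 4]
print(round(cohen_d(lecture_ratings, video_ratings), 2))
```

A positive value indicates that the first group rated the item higher than the second group.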

To control for the effect of conducting multiple t tests, a Sidak correction was applied by setting the alpha level for these follow-up tests at 0.004. We also conducted a linear regression to explore the relationship between the proportion of students in a course who watched lectures exclusively by video and the overall course rating. The coefficient of determination (R²) obtained from this test provides an additional measure of effect size.
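The Sidak-corrected alpha level can be sketched as follows. The number of tests in the family is not stated in the text, so `n_tests` is an assumption; with a familywise alpha of .05, family sizes of 12 to 14 each round to the reported 0.004. The function name `sidak_alpha` is hypothetical.

```python
def sidak_alpha(family_alpha, n_tests):
    """Per-test alpha that keeps the familywise error rate at family_alpha."""
    return 1 - (1 - family_alpha) ** (1 / n_tests)


# The family size is an assumption; the text reports only the resulting 0.004.
for n_tests in (12, 13, 14):
    print(n_tests, round(sidak_alpha(0.05, n_tests), 4))
```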

The χ² tests were conducted to determine whether the method of viewing lectures differed between first- and second-year students and to determine whether viewing method changed over the course of the academic year. The academic year was split into halves, and the latter test determined whether students were more likely to view lectures by video in the second half of the year than in the first half of the year. These tests were performed separately for first- and second-year students.
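A test of this kind can be sketched with a contingency table of viewing method (Lecture, Video, Both) by half of the academic year. The counts below are hypothetical, since the text reports percentages rather than cell counts, and the function names are illustrative. For a 2 × 3 table the test has 2 degrees of freedom, in which case the P value has the closed form exp(−χ²/2).

```python
from math import exp


def chi_square_independence(table):
    """Return the Pearson chi-square statistic and degrees of freedom."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


def p_value_two_dof(statistic):
    """Upper-tail probability of a chi-square variable with exactly 2 df."""
    return exp(-statistic / 2)


# Hypothetical counts: rows are halves of the year; columns are
# Lecture, Video, and Both.
counts = [
    [350, 250, 400],  # first half
    [250, 400, 350],  # second half
]
statistic, dof = chi_square_independence(counts)
print(round(statistic, 2), dof, p_value_two_dof(statistic))
```

The closed-form P value applies only when the table has 2 degrees of freedom; other table sizes would need a general chi-square distribution function.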

Einstein’s institutional review board determined that the study was exempt from review for human subjects’ protection.

Results

Do course ratings differ by viewing method?

The exact number of students who provided data is unknown because the evaluations are anonymous. However, 3 medical school classes of approximately 180 students each participated in the study (ie, the Class of 2016 contributed data for the first year of the study, the Class of 2017 contributed data for both years of the study, and the Class of 2018 contributed data for the second year of the study). Because the response rate was close to 100%, the total N was approximately 540 students. These students completed 7584 evaluations of first- and second-year courses during the 2-year period of the study. On 2091 of these evaluations (27%), students reported that they attended the lectures; on 2548 of these evaluations (34%), students reported that they watched the videos; and on 2945 of these evaluations (39%), students reported that they did both.

The t tests revealed significant differences between the 2013-2014 and 2014-2015 academic years on 2 of the 7 rating questions, namely, lectures (P = .04) and faculty (P = .02). P values for the other rating questions ranged from P = .06 to P = .49. Because these differences were due to the ratings for a single second-year course improving a full point from the earlier academic year to the next, all analyses looking at student ratings used data combined across both years.

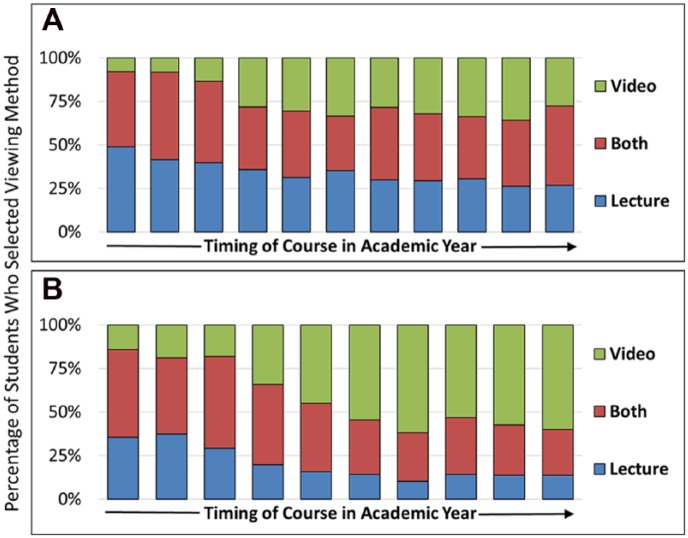

Figure 1 presents the mean ratings of the course overall and its components by method of viewing lectures. The Lecture group rated the course overall and most of its components higher than the Video group, whereas ratings of the Both group were located between the other 2 groups. This pattern was seen for ratings of course learning objectives, clinical correlations, lectures, case conferences, faculty, and the course overall. The ANOVAs for all of these comparisons were significant. The one exception to this pattern was seen for the rating of course exams; no difference was found between the 3 groups on that item (Figure 1).

Figure 1.

Ratings of course components by viewing method*. *The 5-point response scale ranged from 1 = “unsatisfactory” to 5 = “excellent.”

Table 1 displays the P values for the ANOVAs and follow-up t tests performed on the 7 rating items. Significant differences between the Lecture and Video groups were found on ratings of learning objectives, clinical correlations, lectures, case conferences, faculty, and the course overall. A significant difference between the Lecture and Both groups was found on the rating of clinical correlations. Finally, significant differences were found between the Both and Video groups on learning objectives, clinical correlations, lectures, faculty, and the course overall. The bottom row of Table 1 shows the effect sizes (Cohen d) when comparing the Lecture and Video groups. Although the differences between these 2 groups were significant across 6 ratings, the effect sizes (which ranged from 0.10 to 0.19) were very small (Table 1).

Table 1.

Results of analyses comparing the groups on ratings of course components.

| P values | Learning objectives | Clinical correlations | Lectures | Case conferences | Faculty | Exam | Course overall |
|---|---|---|---|---|---|---|---|
| ANOVA (all 3 groups) | .0001 | .0001 | .0006 | .0048 | .0001 | NS | .0001 |
| t test (Lecture vs Video) | .0001 | .0001 | .0009 | .0012 | .0001 | — | .0001 |
| t test (Lecture vs Both) | .29 | .0036 | .74 | .032 | .0064 | — | .07 |
| t test (Both vs Video) | .0008 | .0024 | .0009 | .20 | .0001 | — | .0019 |
| Effect size (Cohen d)* | d = 0.11 | d = 0.17 | d = 0.11 | d = 0.10 | d = 0.19 | — | d = 0.14 |

Abbreviations: ANOVA, analysis of variance; NS, nonsignificant.

*The effect sizes apply to comparisons between the Lecture and Video groups.
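The pairwise results in Table 1 can be checked against the corrected alpha level of 0.004 with a short sketch such as the one below. The P values are copied from the table; the variable and function names are hypothetical, and treating significance as P strictly below 0.004 is an assumption.

```python
ALPHA = 0.004  # corrected alpha level reported in the Methods

ITEMS = [
    "Learning objectives",
    "Clinical correlations",
    "Lectures",
    "Case conferences",
    "Faculty",
    "Course overall",
]

# Follow-up t test P values from Table 1 (the Exam item had no follow-up tests).
P_VALUES = {
    "Lecture vs Video": [0.0001, 0.0001, 0.0009, 0.0012, 0.0001, 0.0001],
    "Lecture vs Both": [0.29, 0.0036, 0.74, 0.032, 0.0064, 0.07],
    "Both vs Video": [0.0008, 0.0024, 0.0009, 0.20, 0.0001, 0.0019],
}


def significant_items(pair):
    """List the rating items whose P value falls below the corrected alpha."""
    return [item for item, p in zip(ITEMS, P_VALUES[pair]) if p < ALPHA]


for pair in P_VALUES:
    print(pair, significant_items(pair))
```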

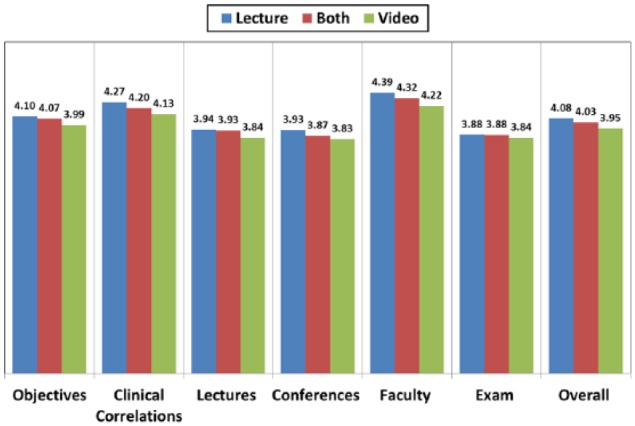

A scatterplot showing the relationship between the proportion of students who watched lectures exclusively by video and the overall course rating is shown in Figure 2 (each dot represents a single course). The coefficient of determination (R²) was 0.04 (corresponding to a correlation of −0.20), indicating that only 4% of the variance in overall course rating can be explained by the proportion of students who watched videos exclusively. The regression equation (included in the figure) indicates that for every 10% increase in this proportion, the overall course rating is expected to fall 0.042 points. It is clear from the scatterplot that the variability in the overall rating across courses is considerably greater than the variability associated with the proportion of students who watched videos exclusively (Figure 2).
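The arithmetic behind these figures can be sketched as follows. The sketch uses only the values reported in the text and assumes that "10% increase" means 10 percentage points; the intercept appears only in the figure, so only changes in rating are computed. The names are hypothetical.

```python
from math import sqrt

R_SQUARED = 0.04                # reported coefficient of determination
CHANGE_PER_10_POINTS = -0.042   # reported change in rating per 10-point increase

# In simple linear regression, the correlation is the square root of R squared,
# carrying the sign of the slope (negative here).
correlation = -sqrt(R_SQUARED)


def expected_rating_change(percentage_point_increase):
    """Expected change in overall rating for a given rise in video-only share."""
    return CHANGE_PER_10_POINTS * percentage_point_increase / 10


print(round(correlation, 2))
print(round(expected_rating_change(10), 3))
print(round(expected_rating_change(50), 3))
```

Under these assumptions, even a 50-point difference in the share of video-only viewers corresponds to a change of about one-fifth of a point on the 5-point scale.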

Figure 2.

Scatterplot showing the relationship between the percentage of students who watched video exclusively and the overall course rating*. *Each blue dot represents a separate course.

Does viewing method differ for first- and second-year medical students?

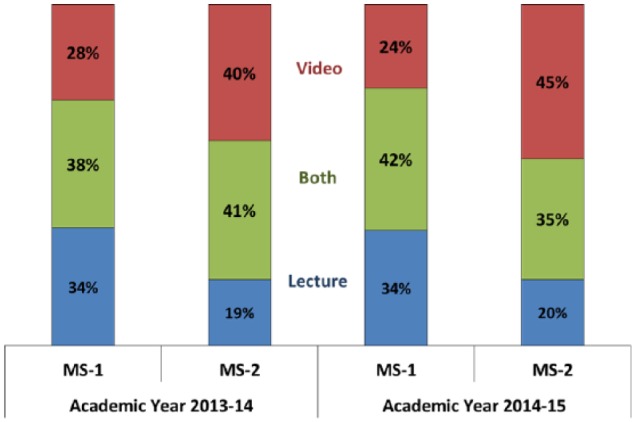

As shown in Figure 3, second-year students (MS-2s) were much more likely than their first-year counterparts (MS-1s) to watch lectures exclusively by video (40% vs 28% in academic year 2013-2014, P = .0001 and 45% vs 24% in academic year 2014-2015, P = .0001). These increases seemed to occur primarily at the expense of live lecture attendance. Because we had 2 continuous years’ worth of data, we were also able to follow the Class of 2017 (members of whom were first-year students in 2013-2014 and second-year students in 2014-2015) over time. Members of this cohort were more likely to watch lectures exclusively by video in their second year than in their first year (45% vs 28%, P = .0001) (Figure 3).

Figure 3.

Method of viewing lectures by medical student (MS) year and academic year*. *Column values represent the percentage of students who selected each method.

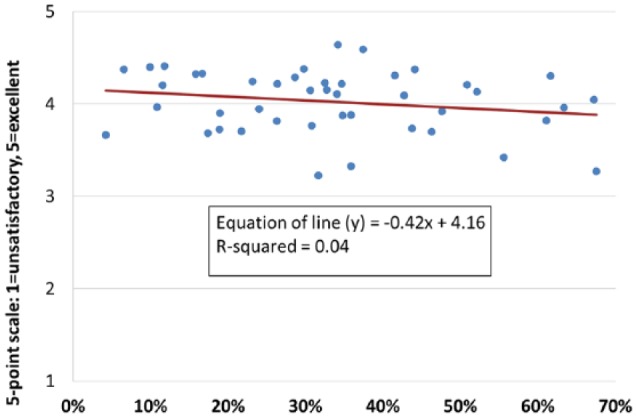

Does viewing method change over the course of the medical school year?

Figure 4 illustrates the relationship between the timing of courses in the academic year and the method of viewing lectures. Separate graphs are shown for first- and second-year students. The graphs show that the percentage of students who viewed lectures exclusively by video increased over the course of the academic year. Both χ² tests comparing viewing method in the 2 halves of the year were significant, with P < .001. The biggest increases in the use of video occurred during the first half of each academic year, with a leveling off seeming to occur near the beginning of the second half. This may be due to the clustering of final exams and review lectures at the end of the academic year and, for second-year students, preparations for the Step 1 exam (Figure 4).

Figure 4.

Method of viewing lectures over time: (A) first-year students and (B) second-year students.

Discussion

Our results demonstrate that medical students who attended lectures live rated the overall course, various course components, and faculty teachers higher than students who watched the same lectures by video, whereas the ratings of students who used both methods fell in between those 2 groups. These findings are largely consistent with the 5 studies cited earlier.12–16 However, our study expands on those earlier studies by demonstrating that differences in ratings between lecture and video conditions (1) are still found, despite major improvements in video and Internet technology in the years since those studies were conducted and (2) are found for a wide variety of course attributes, including lectures, faculty, and the course overall.

It is unclear what accounts for the differences in ratings between students in our groups, which were consistent across most items. It could be argued that students who attend lectures are somehow “better” than students who choose not to, and this explains the former group’s higher ratings.18 However, the fact that the Lecture, Video, and Both groups in our study were quite fluid over time would seem to weaken this argument. Students who fail to attend lectures may give lower ratings because they miss the presentations’ (potentially) most rewarding aspects, including any interactivity and other nuances of the social setting that cannot be conveyed through recordings. This argument receives support from a recent study in which medical students mentioned 3 key benefits of lectures—the opportunity to interact with faculty, a sense of social support, and the provision of additional motivation to learn.20

Although the differences in ratings between the Lecture and Video groups were consistent, all of the effect sizes were less than 0.20, which is considered quite small.21 The linear regression analysis also provides evidence that video watching alone plays a minor role in determining course ratings.

The second and third research questions dealt with choice of viewing method by time. The extent to which Einstein students used video recordings of lectures increased substantially across the academic year for both first- and second-year students. These increases seemed to take place primarily in the first half of each academic year, as the percentage of students who watched lectures exclusively by video seemed to level off during the second half of each year. However, the particular ceiling that was reached appeared to be higher for second-year students than for first-year students. The reasons for the increased use of video recordings are undoubtedly complex. Students may shift over time to an increased reliance on other course resources (eg, posted slides, assigned readings, and practice problems) to learn the material and prepare for their exams, which results in a decreasing dependence on lecture attendance.

Although satisfaction appears to be higher when students attend live lectures, it is not enough to counter the trend against live attendance. Rather than questioning whether video recordings of lectures should be introduced or continued, the focus should be on how to make better use of them, thereby better meeting the needs of online learners. Joyner et al22 emphasize the importance of instructor presence, which can be advanced or hindered through technology. Moulton19 stresses the importance of promoting engagement with online learners. As medical schools work to tailor their teaching methods to meet the individualized needs of students, greater focus should be placed on training faculty in the creation of high-quality educational videos.

This study has several limitations. Because students self-selected into one of the 3 groups, it is impossible to know the precise reasons for their choice of viewing method. The data come from students at a single, private medical school located in the Northeastern United States during a 2-year period, so the findings may be context specific. Because the data were anonymous, we were not able to investigate demographic or academic differences between the Lecture, Video, and Both groups. The method of viewing lectures was based on student self-report, and we had no objective data to check the validity of their responses. However, because the surveys were anonymous, we assume that students accurately reported on the methods they used to view lectures. Finally, our classification of students into Lecture, Video, and Both groups was a gross measure, in the sense that it applied to each course overall rather than being lecture specific.

With the widespread use of lecture video recordings in medical schools, attention must be paid to student attitudes regarding the use of these methods and possible impacts on lecture attendance. Although student attitudes are one key outcome, the ultimate outcomes are the knowledge, skills, and behaviors obtained when students use these new instructional methods during medical school. Additional research should explore the impact of video recordings on exam performance and other outcomes. The goal should be to harness these methods to achieve a higher level of competency for our future physicians, so the decision on whether or not to record lectures should be based primarily on the extent to which it facilitates student learning.

Acknowledgments

The authors would like to thank the course directors at Einstein, some of whom provided valuable feedback on an earlier version of this manuscript.

Footnotes

Peer review: Five peer reviewers contributed to the peer review report. Reviewers’ reports totaled 1734 words, excluding any confidential comments to the academic editor.

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

Declaration of conflicting interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Author Contributions: WBB, TPM, and MSG conceived and designed the study; wrote the first draft of the manuscript; agree with manuscript results and conclusion; jointly developed the structure and arguments for the paper; and made critical revisions and approved final manuscript. WBB analyzed the data.

Disclosures and Ethics: Drs Burton, Ma, and Grayson each contributed substantially to the design and execution of the study. They agree to be accountable for all aspects of the paper, in terms of issues of accuracy and integrity. The research described in this paper was deemed exempt from review for human subjects’ protection by Einstein’s institutional review board. The authors confirm that this article is unique and is not under consideration by or published in any other publication. Finally, the authors have read and confirmed their agreement with the ICMJE authorship and conflict of interest criteria.

References

1. Cardall S, Krupat E, Ulrich M. Live lecture versus video-recorded lecture: are students voting with their feet? Acad Med. 2008;83:1174–1178.
2. Whatley J, Ahmad A. Using video to record summary lectures to aid students’ revision. IJELL. 2007;3:185–196.
3. Lovell K, Plantegenest G. Student utilization of digital versions of classroom lectures. Med Sci Educ. 2008;19:20–25.
4. Traphagan T, Kucsera JV, Kishi K. Impact of class lecture webcasting on attendance and learning. Educ Technol Res Dev. 2009;58:19–37.
5. Bacro TRH. Evaluation of a lecture recording system in a medical curriculum. Anat Sci Educ. 2010;3:300–308.
6. Pale P, Petrovic J, Jeren B. Assessing the learning potential and students’ perception of rich lecture captures. J Comput Assist Learn. 2014;30:187–195.
7. Fernandes L, Maley M, Cruickshank C. The impact of online lecture recordings on learning outcomes in pharmacology. Med Sci Educ. 2007;18:62–70.
8. Young JR. The lectures are recorded, so why go to class? Chron High Educ. 2008;54:A1.
9. Maynor LM, Barrickman AL, Stamatakis MK, Elliot DP. Student and faculty perceptions of lecture recording in a doctor of pharmacy curriculum. Am J Pharm Educ. 2013;77:Article 165.
10. Romanelli F, Cain J, Smith KM. To record or not to record? Am J Pharm Educ. 2011;75:Article 149.
11. Paul S, Pusic M, Gillespie G. Medical student lecture attendance versus iTunes U. Med Educ. 2015;49:530–531.
12. Paegle RD, Wilkinson EJ, Donnely MB. Videotaped vs traditional lectures for medical students. Med Educ. 1980;14:387–393.
13. Pohl R, Lewis R, Niccolini R, Rubenstein R. Teaching the mental status examination: comparison of three methods. J Med Educ. 1982;57:626–629.
14. Kline P, Shesser R, Smith M, et al. Comparison of a videotape instructional program with a traditional lecture series for medical student emergency medicine teaching. Ann Emerg Med. 1986;15:39–41.
15. Leamon MH, Servis ME, Canning RD, Searles RC. A comparison of student evaluations and faculty peer evaluations of faculty lectures. Acad Med. 1999;74:S22–S24.
16. Callas PW, Bertsch TF, Caputo MP, Flynn BS, Doheny-Farina S, Ricci MA. Medical student evaluations of lectures attended in person or from rural sites via interactive videoconferencing. Teach Learn Med. 2004;16:46–50.
17. Holbrook J, Dupont C. Profcasts and class attendance—does year in program matter? Biosci Edn. 2009;13:A2.
18. Credé M, Roch SG, Kieszczynka UM. Class attendance in college: a meta-analytic review of the relationship of class attendance with grades and student characteristics. Rev Educ Res. 2010;80:272–295.
19. Moulton S. Undergraduate lecture attendance. Presentation given at the Harvard Initiative for Learning and Teaching Conference; 2014. http://hilt.harvard.edu/file/285446
20. Rysavy M, Christine P, Lenoch S, Pizzimenti MA. Student and faculty perspectives on the use of lectures in the medical school curriculum. Med Sci Educ. 2015;25:431–437.
21. Portnoy LG, Watkins MP. Foundations of Clinical Research: Applications to Practice. 2nd ed. Upper Saddle River, NJ: Prentice Hall; 2000.
22. Joyner SA, Fuller MB, Holzweiss PC, Henderson S, Young R. The importance of student-instructor connections in graduate level online courses. J Online Learn Teach. 2014;10:436–445.