Abstract

Purpose

The study addressed three research questions: Does lipreading improve between the ages of 7 and 14 years? Does hearing loss affect the development of lipreading? How do individual differences in lipreading relate to other abilities?

Method

Forty children with normal hearing (NH) and 24 with hearing loss (HL) were tested using four lipreading instruments plus measures of perceptual, cognitive, and linguistic abilities.

Results

For both groups, lipreading performance improved with age on all four measures of lipreading, with the HL group performing better than the NH group. Scores from the four measures loaded strongly on a single principal component. Only age, hearing status, and visuospatial working memory were significant predictors of lipreading performance.

Conclusions

Results show that children’s lipreading ability is not fixed, but rather improves between 7 and 14 years of age. The finding that children with HL lipread better than those with NH suggests that experience plays an important role in the development of this ability. In addition to age and hearing status, visuospatial working memory predicts lipreading performance in children, just as it does in adults. Future research on the developmental time course of lipreading could allow interventions and pedagogies to be targeted at the periods in which improvement is most likely to occur.

The ability to encode and use visual speech information is often critical to effective spoken communication. This ability is obviously of particular importance for individuals with hearing loss (HL). In fact, the improvement in speech understanding that results when visual speech information is available can be equal to or greater than that obtained from amplification, and even in the absence of hearing loss, access to visual speech information offers one of the most effective means of overcoming the negative effects of degraded listening environments (e.g., Sommers, Tye-Murray, & Spehar, 2005; Summerfield, 1987). Despite the importance of visual speech information for spoken communication, however, little is known about the development of children’s ability to lipread (i.e., their vision-only speech perception) or about the relations between lipreading and other perceptual, cognitive, and linguistic abilities.

One of the hallmarks of lipreading in both adults and children is extensive individual variability (Andersson, Lyxell, Roennberg, & Spens, 2001; Blager & Alpiner, 1981; Feld & Sommers, 2011). Even among a relatively homogeneous group, such as college students with normal hearing, lipreading performance can vary over a 60–70% range (Feld & Sommers, 2011), whereas much greater consistency is observed in their auditory speech perception, at least under good listening conditions. Interestingly, individual variation in lipreading ability appears to be even greater among children and adults with HL than among those with NH (Auer & Bernstein, 2007; Lyxell & Holmberg, 2000).

In contrast to the consistency with which large individual differences in lipreading are reported, the evidence regarding age differences in lipreading is scant and equivocal. Although Ross et al. (2011) reported that the ability of children with NH to lipread isolated words did not improve between the ages of 5 and 14 years, others have reported that the ability to visually discriminate phonological contrasts, which presumably underlies the ability to lipread, does improve with age (Desjardins, Rogers, & Werker, 1997; Hnath-Chisolm, Laipply, & Boothroyd, 1998). With regard to group differences in lipreading, the few studies of children and adolescents with HL also present a mixed picture. Arnold and Köpsel (1996) found no difference in lipreading ability between 10-year-old children with HL and those with NH, and Conrad (1977) reported similar findings for 15-year-old children. However, Jerger, Tye-Murray, and Abdi (2009) found that children with HL, aged 5 to 12 years, were significantly better lipreaders than those with NH. Beattie and Markides (1992) also found a lipreading advantage for 10- to 11-year-old children with HL compared to those with NH, and Lyxell and Holmberg (2000) reported similar results with 11- to 14-year-old children.

Previously, similar confusion existed regarding whether adults with NH differed from those with HL in lipreading ability, but more recent studies have established that adults with prelingual HL are better lipreaders than those with NH (Auer & Bernstein, 2007; Bernstein, Demorest, & Tucker, 2000). Thus, with respect to adults, the question is no longer whether those with prelingual HL are better lipreaders: they are. Rather, the question now is how they became so, and progress on answering that question would appear to depend in part on understanding the nature of both age and individual differences in lipreading ability. Specifically, researchers need to resolve whether children’s lipreading ability improves as they get older, and whether the difference in lipreading ability between those with HL and those with NH is already present in childhood. Finally, although adults with prelingual HL may be, on average, better lipreaders than those with NH, extensive variation is observed in both groups. Determining the perceptual, cognitive, and linguistic correlates of lipreading ability may yield important insights into what makes an individual a good or poor lipreader, as well as into the consequences of that ability.

Only relatively recently have researchers begun to examine the cognitive predictors of successful lipreading in adults (e.g., Feld & Sommers, 2009; Lyxell, Andersson, Borg, & Ohlsson, 2003), and the same is true of cognitive predictors (e.g., working memory) of lipreading in children (e.g., Lyxell & Holmberg, 2000; Pisoni & Cleary, 2003). This gap is particularly unfortunate because, according to Lyxell and Holmberg, lipreading may be more cognitively demanding for children than for adults. Accordingly, the goal of the present investigation was to determine how children’s lipreading is affected by development and hearing loss, as well as by individual differences in perceptual, cognitive, and linguistic abilities.

One reason for the inconsistencies in the child literature may be that few instruments exist that are appropriate for assessing lipreading in children. To assess lipreading performance, we developed three new measures that were specifically designed to avoid both floor and ceiling effects, making them suitable for use across a relatively broad age range. In addition, we used the well-established Children’s Audiovisual Enhancement Test (CAVET; Tye-Murray & Geers, 2001). This battery made possible not only a broad assessment of children’s lipreading ability but also identification of age and individual differences in specific uses of visual speech information. The three research questions addressed by the current study were as follows: First, does lipreading performance improve with age for children between 7 and 14 years? Second, does the presence of hearing loss influence the development of lipreading ability? Third, how are age and individual differences in lipreading ability related to other perceptual, cognitive, and linguistic abilities, such as visuospatial perception, working memory, phonological awareness, and receptive and expressive vocabulary?

Method

Participants

Forty children (22 female) with NH and a mean age of 10 years and 9 months (SD = 28.6 months; range = 84–179 months) and 24 children (16 female) with HL and a mean age of 10 years and 10 months (SD = 27.0 months; range = 91–177 months) served as participants. All children’s first spoken language was English, and none had any disability other than hearing loss. Participants were paid for their participation and received a toy at the completion of the study. All procedures were approved by the Washington University School of Medicine’s Institutional Review Board.

Of the children in the HL group, 16 used hearing aids and 8 used cochlear implants. On average, these children were 24.5 months of age (SD = 21.6 months; range = 0–60 months) at the time their hearing loss was diagnosed. Their mean unaided pure tone average (PTA) for the better ear (based on thresholds for 500, 1000, and 2000 Hz) was 68 dB hearing level (SD = 30 dB), and their mean aided soundfield PTA was 22 dB (SD = 8 dB). Aided word recognition for the HL group was assessed with the Word Intelligibility by Picture Identification (WIPI) test (Ross & Lerman, 1971). On average, the HL participants scored 92.3% words correct (SD = 8.8). Twenty of the participants with HL were mainstreamed with children who have NH, and four were in a special classroom or attended an oral school for children with HL. All NH participants were screened for bilateral PTAs of 20 dB hearing level or less.
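The better-ear PTA and the NH screening criterion described above reduce to simple arithmetic. The following minimal sketch assumes that thresholds are stored per ear as a mapping from frequency (Hz) to threshold (dB HL); the function names and the example audiogram are hypothetical and do not come from the study's data or software.

```python
from statistics import fmean

# Frequencies (Hz) averaged for the pure tone average (PTA), as in the text.
PTA_FREQUENCIES = (500, 1000, 2000)


def pure_tone_average(thresholds_db_hl: dict[int, float]) -> float:
    """Mean threshold (dB HL) at 500, 1000, and 2000 Hz for one ear."""
    return fmean(thresholds_db_hl[f] for f in PTA_FREQUENCIES)


def better_ear_pta(left: dict[int, float], right: dict[int, float]) -> float:
    """Lower thresholds mean better hearing, so the better ear has the smaller PTA."""
    return min(pure_tone_average(left), pure_tone_average(right))


def passes_nh_screen(left: dict[int, float], right: dict[int, float],
                     limit_db_hl: float = 20.0) -> bool:
    """True if the PTA in BOTH ears is at or below the screening limit."""
    return all(pure_tone_average(ear) <= limit_db_hl for ear in (left, right))


# Hypothetical audiogram (dB HL), for illustration only.
left_ear = {500: 60, 1000: 70, 2000: 80}
right_ear = {500: 55, 1000: 65, 2000: 75}
print(better_ear_pta(left_ear, right_ear))    # 65.0
print(passes_nh_screen(left_ear, right_ear))  # False
```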

Lipreading Battery

One likely reason for the paucity of information about the development of lipreading is that few satisfactory instruments exist for assessing children. For this reason, in addition to the existing Children’s Audiovisual Enhancement Test (CAVET; Tye-Murray & Geers, 2001), we developed three new measures for our lipreading battery: the Tri-BAS, the Illustrated Sentence Test (IST), and the Gist Test, all of which are described below. (To prevent these measures from being confused with standardized tests, we refer to them throughout as measurement ‘instruments’ or ‘measures’, but we include the term ‘test’ in their names because we plan to collect normative data in the future.) Development of the three new instruments was guided by the following considerations. First, each measure was designed to avoid floor and ceiling effects in young participants. Second, the instruments were designed to assess competence across a wide range of linguistic levels, including separate measures of word and sentence recognition. Third, the battery was constructed to keep shared method variance from masquerading as construct variance by varying the response format (open- versus closed-set) and task requirements (verbatim repetition versus general comprehension) across the instruments.

The lipreading battery required participants to identify the final word in a carrier phrase (CAVET), identify multiple words in a carrier sentence (Tri-BAS), repeat a meaningful sentence (IST), or select an illustration that best captured the meaning of a sentence (Gist Test). Because we plan to develop the battery into a comprehensive set of measures of auditory, visual, and audiovisual speech perception, the measures were administered in auditory-only, vision-only, and audiovisual conditions; only the vision-only results are reported here. Items in the vision-only condition were presented without the recorded sound track. For the instruments with multiple lists, participants were assigned to lists in rotation. For the CAVET, items were presented in quiet; items in the vision-only conditions of the three new measures were presented in speech noise (62 dB SPL). Participants received no feedback on the accuracy of their responses to any of the items.

CAVET

This instrument assesses visual word recognition in a carrier phrase format. The instrument was digitized from the original laser video disc recordings (Tye-Murray & Geers, 2001). Participants viewed 20 words embedded in the carrier phrase, Say the word ____, spoken by a woman with a general American dialect. They were instructed to watch the woman speak a phrase and then to repeat the final word. Performance on this instrument was measured as the percentage of the 20 embedded words that were correctly identified, and no practice items were provided. Only verbatim responses were scored as correct. One half of the words on the CAVET have high visibility on the face (e.g., elephant) and one half of the words have low visibility (e.g., hill). The instrument took approximately 5 minutes to administer.

Tri-BAS

This measure was adapted from the Build-A-Sentence (BAS) instrument for adults (Tye-Murray et al., 2008). The Tri-BAS was designed with a closed set of responses that appeared as a matrix on the computer screen. Target words were always presented in the same sentence frame: The ____ watched the ____. To make the carrier sentences sensible in English, the high-frequency target words (Balota et al., 2007) all referred to people or animals with visual capabilities. The closed-set format was used to prevent floor effects, and nine separate word-picture matrices appeared in random order to prevent ceiling effects. Three matrices depicted monosyllabic words (e.g., goat, shark, dog), three depicted bisyllabic words (e.g., zebra, tiger, baby), and three depicted trisyllabic words (e.g., fireman, kangaroo, elephant). Five educators of children with HL employed by the Central Institute for the Deaf reviewed candidate words for the Tri-BAS to ensure that children as young as 5 years with significant HL would recognize the pictured nouns. The sentences were recorded by a professional actress with a general American accent. Participants were instructed to touch the two correct pictures (out of nine) that corresponded to the two target words in each sentence (see Figure 1). Each sentence was scored as the number of target words correctly identified, regardless of order. Participants completed either 81 or 45 sentences (preceded by 10 practice sentences) because, partway through the experiment, we reduced the number of items to shorten the time required to administer this task. There was no significant difference between the percent-words-correct scores of those who viewed 81 sentences (M = 49.8, SD = 21.5) and those who viewed 45 sentences (M = 41.0, SD = 16.0).
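The order-insensitive Tri-BAS scoring rule can be sketched as follows. The sketch assumes that each response is recorded as the list of picture labels the child touched; the function names and example trials are hypothetical. A multiset intersection (Python's `collections.Counter`) is one reasonable reading of "regardless of order", since the original scoring software is not described.

```python
from collections import Counter


def score_sentence(targets: list[str], touched: list[str]) -> int:
    """Count target words correctly identified, ignoring order.

    The multiset intersection ensures that touching the same picture twice
    earns credit no more often than that word actually occurred as a target.
    """
    return sum((Counter(targets) & Counter(touched)).values())


def percent_words_correct(trials: list[tuple[list[str], list[str]]]) -> float:
    """Percent of all target words identified across (targets, touched) trials."""
    correct = sum(score_sentence(targets, touched) for targets, touched in trials)
    total = sum(len(targets) for targets, _ in trials)
    return 100.0 * correct / total


# Hypothetical trials; the first is "The whale watched the goat."
trials = [
    (["whale", "goat"], ["goat", "whale"]),  # 2 correct (order ignored)
    (["crab", "owl"], ["crab", "clown"]),    # 1 correct
    (["duck", "hen"], ["ant", "cook"]),      # 0 correct
]
print(percent_words_correct(trials))  # 50.0
```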

Figure 1.

One of the 3 × 3 response screens for the one-syllable Tri-BAS (Build-A-Sentence) test. The pictured items, beginning at the upper left-hand corner, are crab, goat, duck, hen, owl, whale, ant, cook, and clown. This screen appeared when the talker spoke a typical test sentence, such as “The whale watched the goat.”

IST

This measure was inspired by a lipreading instrument for adults developed by Pelson and Prather (1974). The IST used an open-set sentence format with three lists of 40 sentences each. For each participant, only one of the lists (counterbalanced across participants) was presented in the vision-only condition. A context-rich illustration of the sentence was presented on the video monitor before a video clip of the talker speaking the target sentence. For example, the sentence The family ate dinner at the table was preceded by the illustration shown in Figure 2. The IST and Gist Test items were recorded by a different actress from the one who recorded the Tri-BAS items. On each IST trial, the participant first looked at the illustration that appeared on the monitor and then watched the actress speak the corresponding sentence. Participants were instructed simply to repeat the sentence aloud. Responses were scored as percent words correct, with credit given only for verbatim repetitions. The sentences used in the IST and the Gist Test (described next) were constructed with vocabulary from the Bamford-Kowal-Bench Sentences (Bench, Kowal, & Bamford, 1979) and reviewed by five educators at the Central Institute for the Deaf. To ensure that each picture illustrated its sentence, 10 adults with NH were shown each sentence and asked to indicate which of four pictures best corresponded to its meaning. This instrument measured performance on 40 items, which were preceded by 10 practice items. The IST required approximately 5 minutes to administer.

Figure 3.

Sample four-choice response screen for the Gist Test. This screen appeared while the talker spoke the sentence, “The doll is on the shelf.”

Gist

This measure was based on one of the early lipreading instruments developed for children (Craig, 1964). Following presentation of a sentence, participants responded in a multiple-choice format by touching the illustration on the touch-sensitive video monitor that best matched the meaning (i.e., the gist) of the sentence. To avoid ceiling performance, illustrations were grouped into response sets of four, each selected so that participants could not respond correctly by lipreading only a few words of the sentence. For example, Figure 3 presents the response screen for the sentence The doll is on the shelf. One foil shows a doll on a bed, another shows a doll in a pool, and a third shows a dog in a pool. During administration, a talker appeared on the video monitor and spoke a sentence, after which the response options appeared. This instrument measured performance on 40 sentences, which were preceded by 10 practice sentences, and required approximately 10 minutes to administer.

Figure 2.

Sample item from the Illustrated Sentence Test (IST). This illustration was presented before the talker spoke the sentence, “The family ate dinner at the table.”

Cognitive and Linguistic Battery

A measure of visual perception was included as a potential predictor of lipreading performance, as suggested by the work of Mead and Lapidus (1989), and a measure of phonological awareness was included because we reasoned that familiarity with the phonological system of the language might be useful in decoding the visual speech signal. We also included measures of receptive and expressive vocabulary based on previous suggestions (e.g., Jeffers & Barley, 1971). Finally, we included a measure of visuospatial working memory because Feld and Sommers (2009) found it to be the cognitive variable most predictive of lipreading performance in adults.

Receptive and expressive vocabulary were measured using the Peabody Picture Vocabulary Test-4 (Dunn & Dunn, 2007) and the Expressive One-Word Picture Vocabulary Test (Brownell, 2000), respectively. Because these instruments were strongly correlated (r = .93), scores on each were converted to z-scores and averaged to form a composite vocabulary score. The Visual Perception subtest of the Beery-Buktenica Developmental Test of Visual-Motor Integration (Beery & Beery, 2004) requires a participant to pick, from a choice of two to seven similar geometric drawings, the one that best matches a sample drawing. The Blending subtest of the Comprehensive Test of Phonological Processing (CTOPP; Wagner, Torgesen, & Rashotte, 1999) was used to assess phonological awareness. Participants listened to a recording of a series of separated sounds presented at 54 dB hearing level via a calibrated audiometer and were then asked to “blend” the sounds together to make a meaningful word. For example, a participant might hear “/m/,” “/u/,” and “/n/,” and then be asked to put the parts together to make a whole word (moon). Finally, visuospatial working memory was measured using a laboratory instrument adapted from the Dot Matrix portion of the Automated Working Memory Assessment battery (Alloway, 2007), which required participants to remember the locations of a series of red dots presented one by one in a 4 × 4 grid. At the end of each series, participants were presented with a blank grid on the touch-sensitive video monitor and asked to touch the locations of the dots presented earlier. Participants were also told that the order in which they reported the dot locations did not matter (i.e., they could indicate the locations without regard to the order in which they were presented).
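The composite vocabulary score, like the composite lipreading score described in the Results, amounts to standardizing each measure and then averaging across measures. A minimal sketch follows. It assumes complete data for every participant and z-scores based on the sample standard deviation. The text does not specify which SD was used, but with complete data the choice rescales every z-score by the same factor and does not change participants' rank order. The names and numbers are illustrative only.

```python
from statistics import fmean, stdev


def z_scores(values: list[float]) -> list[float]:
    """Standardize raw scores: (score - mean) / sample SD."""
    mean, sd = fmean(values), stdev(values)
    return [(v - mean) / sd for v in values]


def composite_score(*measures: list[float]) -> list[float]:
    """Equal-weight mean of each participant's z-scores across measures.

    Each argument holds one measure's raw scores, with participants listed
    in the same order in every list.
    """
    standardized = [z_scores(m) for m in measures]
    return [fmean(per_participant) for per_participant in zip(*standardized)]


# Hypothetical raw scores for three children on two vocabulary tests.
receptive = [90, 100, 110]
expressive = [80, 100, 120]
print(composite_score(receptive, expressive))  # [-1.0, 0.0, 1.0]
```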

Procedure

All of the instruments were administered in our laboratory, which resembles a child-friendly audiological clinic. Participants completed auditory-only (A), visual-only (V), and auditory-visual (AV) conditions, with condition order counterbalanced so that each modality was presented first, second, or third an equal number of times across participants. Only data from the V condition are presented in the current manuscript.

All of the instruments were administered during one or two sessions. Ample rest periods and a snack time were interleaved with administration of the different measures. Participants completed the paper-and-pencil measures at a table and sat in front of a 17-inch ELO touch-sensitive computer monitor in a single-walled sound-attenuating booth for the remaining instruments. An audiologist or graduate research assistant sat in the room with the participant when necessary. Participants were given as much time as they needed to respond. Because of participant time constraints, one participant with NH did not complete the Illustrated Sentence Test, and one participant with NH and one with HL did not complete the Gist Test.

Results

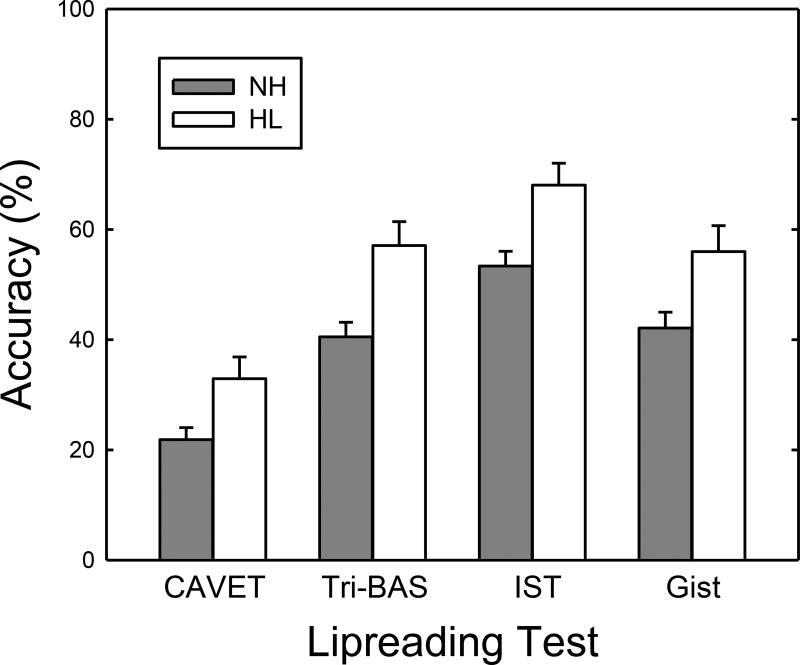

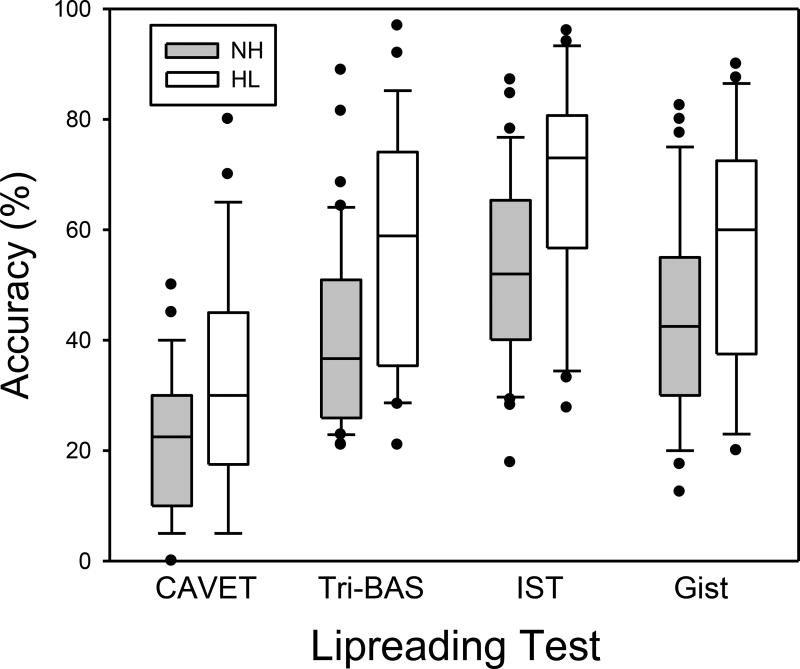

Figure 4 presents accuracy (percent correct) on the four lipreading measures for the NH and HL groups; as may be seen, the HL group scored higher on all measures (all ts > 2.67, all ps < .011). Figure 5 presents box plots depicting the distributions of scores on the lipreading measures for the NH and HL groups; as may be seen, the three new instruments all successfully avoided ceiling effects. Two of the three (the Tri-BAS and the IST) also clearly avoided floor effects, whereas the third, the multiple-choice Gist measure for which chance was 25% correct, was slightly less successful in this regard, although more than 90% of the participants scored above chance. The three new lipreading measures also all had Cronbach’s alphas of .80 or greater, attesting to their high reliability as assessment instruments. Notably, performance on all four instruments increased systematically as a function of age (all rs > .33; see Table 1). Consistent with these results, step-wise multiple regression analyses, in which Age was entered in the first step and Group (NH vs. HL) in the second step, revealed significant effects of both Age and Group on all four measures of lipreading.
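Cronbach's alpha compares the summed variances of the individual items with the variance of participants' total scores: the more the items covary, the larger the total-score variance relative to the item variances, and the closer alpha is to 1. The sketch below assumes item-level scores (e.g., 1 = correct, 0 = incorrect) arranged as one row per participant; the toy matrix is hypothetical and is not study data.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a participants-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals)

    Population variances are used throughout; because the same definition is
    used in numerator and denominator, sample variances would give the same alpha.
    """
    k = len(scores[0])                     # number of items
    items = list(zip(*scores))             # one column of scores per item
    totals = [sum(row) for row in scores]  # each participant's total score
    item_var_sum = sum(pvariance(col) for col in items)
    return k / (k - 1) * (1 - item_var_sum / pvariance(totals))


# Four hypothetical participants answering three items (1 = correct).
toy = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
print(round(cronbach_alpha(toy), 3))  # 0.75
```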

Figure 4.

Mean accuracy of performance by the NH and HL groups on each of the four lipreading instruments. Error bars are the standard errors of the mean.

Figure 5.

Box plots depicting the distributions of scores on the four lipreading measures for the NH and HL groups. Horizontal lines within the rectangles represent median scores, and the bottoms and tops of the rectangles correspond to the first and third quartiles, respectively; the horizontal lines at the ends of the vertical “whiskers” represent tenth and ninetieth percentile scores, and the solid circles represent scores outside this range.

Table 1.

Principal Component Loadings and Correlations among the Four Lipreading Instruments and Age

| Measure | Loading | Tri-BAS | IST | Gist | Age |
|---|---|---|---|---|---|
| CAVET | .862 | .766 | .792 | .647 | .334 |
| Tri-BAS | .943 | | .866 | .839 | .394 |
| IST | .940 | | | .801 | .544 |
| Gist | .903 | | | | .366 |

On the CAVET, for example, multiple regression revealed that Age alone accounted for 11.1% of the variance, F(1,62)=7.78, p=.007, and adding Group to the regression model increased the variance accounted for by 10.0%, F(2,61)=7.78, p=.007. With both factors in the model, the percentage of words correct on the CAVET increased at a rate of 2.4% per year, and overall, participants with HL scored 10.9% better than participants with NH. Similar results were obtained for the other three instruments. On the Tri-BAS, Age accounted for 15.5% of the variance, F(1,62)=11.38, p=.001, with Group accounting for an additional 15.7%, F(2,61)=13.94, p<.001; the percentage of words correct increased by 3.4% per year, and participants with HL scored 16.3% better than participants with NH. On the IST, Age accounted for 29.6% of the variance, F(1,61)=25.67, p<.001, with Group adding 14.0%, F(2,60)=14.91, p<.001; accuracy increased by 4.5% per year, and participants with HL scored 14.6% better than participants with NH. Finally, Age accounted for 13.4% of the variance in Gist Test accuracy, F(1,60)=9.30, p=.003, with Group adding 11.3% to the variance accounted for by the regression model, F(2,59)=8.82, p=.004; accuracy increased by 3.4% per year, and participants with HL scored 14.3% better than participants with NH.
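Each R² increment above can be tested with the standard F-change statistic for nested regression models. The sketch below applies it to the rounded percentages reported for the Tri-BAS (n = 64). The function name is hypothetical, and small discrepancies from the published F values are expected because the inputs are rounded. When a single predictor is added, the numerator degrees of freedom equal 1.

```python
def f_change(r2_reduced: float, r2_full: float, n: int,
             k_full: int, k_added: int = 1) -> tuple[float, int, int]:
    """F test for the gain in R^2 when k_added predictors join a regression.

    The full model has k_full predictors plus an intercept, fitted to n cases.
    Returns (F, numerator df, denominator df).
    """
    df_num = k_added
    df_den = n - k_full - 1
    f = ((r2_full - r2_reduced) / df_num) / ((1.0 - r2_full) / df_den)
    return f, df_num, df_den


# Tri-BAS, n = 64: Age alone (R^2 = .155), then Age + Group (R^2 = .155 + .157).
f_age, d1_age, d2_age = f_change(0.0, 0.155, n=64, k_full=1)
f_grp, d1_grp, d2_grp = f_change(0.155, 0.155 + 0.157, n=64, k_full=2)
print(f"Age:   F({d1_age},{d2_age}) = {f_age:.2f}")  # F(1,62) = 11.37
print(f"Group: F({d1_grp},{d2_grp}) = {f_grp:.2f}")  # F(1,61) = 13.92
```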

The similar patterns of results for the four lipreading measures suggested that these instruments could all be assessing the same construct. Accordingly, we examined the correlations among the four measures and conducted a principal component analysis (PCA) in order to determine whether they all load on a single common factor or whether more than one significant factor is involved. The former result would indicate that they all tap the same set of perceptual and cognitive processes and would justify creation of a single composite measure of lipreading ability for use in further analyses, whereas the latter result would imply that in addition to general lipreading ability, different measures also tap different processes, perhaps as a function of the type of stimuli and/or response format used.

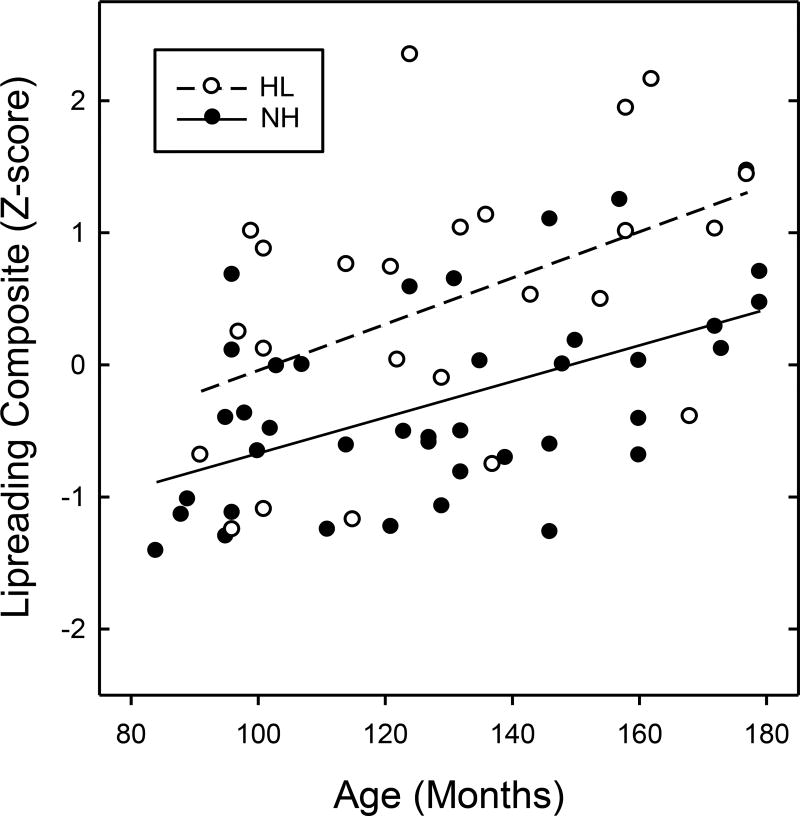

The PCA revealed only a single significant factor (eigenvalue = 3.33) that accounted for 83.3% of the variance. As may be seen in Table 1, the intercorrelations among the four measures of lipreading were all very high (all rs > .64, all ps < .001), and the measures all loaded very strongly (all loadings > .86) on a general lipreading factor (i.e., the first principal component). Therefore, a composite lipreading score was created for each participant by converting the raw (percent correct) scores on each of the lipreading instruments into z-scores and then taking the mean of the four z-scores. Raw scores were used to calculate the z-scores for all variables so as to make it possible to determine the contribution of age to lipreading ability in subsequent analyses. As may be seen in Figure 6, composite lipreading scores increased significantly as a function of age for participants with NH (r = .522) and HL (r = .464). Notably, multiple regression revealed that there was no significant difference between the groups in the rate of improvement with age (i.e., the Age × Group interaction term was not significant, F < 1.0).
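The principal component step can be illustrated with the rounded intercorrelations from Table 1. The sketch below assumes that the PCA was carried out on the correlation matrix (i.e., on standardized scores) and finds the first component by power iteration; a statistics package would normally be used instead. With these rounded inputs it yields a first eigenvalue of roughly 3.36 (about 84% of the four units of standardized variance) and loadings close to those in Table 1. The published eigenvalue of 3.33 equals the sum of the squared Table 1 loadings (.862² + .943² + .940² + .903² ≈ 3.33). The small difference is plausibly due to rounding or to the slightly different numbers of participants available for each measure.

```python
import math

# Intercorrelations among the lipreading measures, from Table 1
# (order: CAVET, Tri-BAS, IST, Gist).
MEASURES = ["CAVET", "Tri-BAS", "IST", "Gist"]
R = [
    [1.000, 0.766, 0.792, 0.647],
    [0.766, 1.000, 0.866, 0.839],
    [0.792, 0.866, 1.000, 0.801],
    [0.647, 0.839, 0.801, 1.000],
]


def mat_vec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a square matrix by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def first_component(corr: list[list[float]], iterations: int = 500):
    """Largest eigenvalue and loadings of a correlation matrix via power iteration."""
    v = [1.0] * len(corr)
    for _ in range(iterations):
        w = mat_vec(corr, v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]  # keep the vector at unit length
    eigenvalue = sum(a * b for a, b in zip(v, mat_vec(corr, v)))  # Rayleigh quotient
    loadings = [math.sqrt(eigenvalue) * x for x in v]  # loading = sqrt(eigenvalue) * weight
    return eigenvalue, loadings


eigenvalue, loadings = first_component(R)
share = eigenvalue / len(R)  # proportion of total standardized variance
print(f"eigenvalue = {eigenvalue:.2f}, variance explained = {share:.1%}")
for name, loading in zip(MEASURES, loadings):
    print(f"{name:8s} loading = {loading:.3f}")
```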

Figure 6.

Individual participants’ composite lipreading scores plotted as a function of age; solid and open circles represent data from the children with NH and HL, respectively. Lines represent the regression of lipreading on age for participants from both the NH (solid line) and HL (dashed line) groups.

Step-wise regression analyses were conducted to determine the percentage of variance in the lipreading composite scores that could be attributed to participants’ age, hearing status, and the other potential predictor variables: visual perception, vocabulary, phonological awareness, and visuospatial working memory. The NH and HL groups differed on only one of these potential predictors: the NH group scored higher on vocabulary than the HL group, t(62) = 3.41, p = .001.

Age was entered into the regression model first, and subsequent variables were entered based on their partial correlations with lipreading, controlling for variables already in the model. Age alone accounted for 20.6% of the variance in the lipreading composite, F(1,62)=16.10, p<.001, Group accounted for an additional 14.2%, F(2,61)=14.48, p<.001, and visuospatial working memory accounted for a further 4.5%, F(3,60)=4.18, p=.045, bringing the total variance accounted for to 39.3%. With these three predictors in the regression model, none of the remaining constructs (visual perception, vocabulary, or phonological awareness) accounted for any additional (unique) variance in the composite lipreading scores.
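The entry rule described above, which adds next whichever candidate has the largest partial correlation with lipreading given the predictors already in the model, can be sketched from a table of zero-order correlations using the recursive formula for partial correlations. The variable names and correlations below are hypothetical and were chosen only to show how a predictor that correlates strongly with Age can lose its advantage once Age is controlled. The sketch also omits the F test on the resulting R² increment.

```python
import math


def partial_corr(r: dict[frozenset, float], x: str, y: str, controls: list[str]) -> float:
    """Partial correlation of x and y, controlling for every variable in `controls`.

    `r` maps frozenset({a, b}) to the zero-order correlation of a and b.
    Each recursion step removes the influence of one control variable.
    """
    if not controls:
        return r[frozenset((x, y))]
    z, rest = controls[0], controls[1:]
    r_xy = partial_corr(r, x, y, rest)
    r_xz = partial_corr(r, x, z, rest)
    r_yz = partial_corr(r, y, z, rest)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def next_predictor(r: dict[frozenset, float], outcome: str,
                   in_model: list[str], candidates: list[str]) -> str:
    """Candidate with the largest |partial r| with the outcome, given in_model."""
    return max(candidates, key=lambda c: abs(partial_corr(r, outcome, c, in_model)))


# Hypothetical zero-order correlations (NOT data from this study).
pairs = {
    ("lipreading", "age"): 0.45, ("lipreading", "wm"): 0.35,
    ("lipreading", "vocab"): 0.40, ("age", "wm"): 0.20,
    ("age", "vocab"): 0.80, ("wm", "vocab"): 0.20,
}
corr = {frozenset(k): v for k, v in pairs.items()}

# Vocabulary has the larger zero-order r, but most of it is shared with age.
print(next_predictor(corr, "lipreading", ["age"], ["wm", "vocab"]))  # wm
```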

Finally, step-wise regression was used to assess the contribution of the degree of hearing loss to lipreading performance in the HL group. Participants’ current age accounted for 21.5% of the variance in the composite lipreading scores, F(1,22)=6.02, p=.023. However, once Age was statistically controlled, degree of hearing loss (whether measured as unaided PTA for the better ear or aided soundfield PTA) failed to predict individual differences in lipreading by children with HL (both Fs <1.0).

Discussion

The goal of the present study was to answer three questions. The first was whether lipreading performance improves with age in children between 7 and 14 years. The answer to this question was clear: Children’s lipreading improved significantly across this age range, and this improvement was apparent in both the NH and HL groups. This finding is contrary to that reported by Ross et al. (2011), who studied children’s ability to lipread isolated words over a similar age range and found no evidence of age differences, despite prior evidence for age differences in children’s ability to visually discriminate phonological contrasts (Desjardins et al., 1997; Hnath-Chisolm et al., 1998). Although the discrepancy in published results could be construed as reflecting a difference between discriminating lip movements and identifying whole words, the present study yielded consistent evidence of age-related improvement in lipreading words, based on results obtained with four different measures of lipreading ability (the CAVET and three newly developed instruments with high reliability) that enabled assessment of different uses of visual speech information. In addition, the new instruments were relatively free of floor and ceiling effects, even in the youngest and oldest children.

The second research question addressed by the present study was whether the presence of hearing loss influences the development of lipreading ability. Again, the answer was very clear: Children with HL performed significantly better than those with NH on all four lipreading measures across the age range under consideration. As with the question of age differences in lipreading, the question of group differences (NH versus HL) in children’s lipreading has also been controversial, with some researchers reporting no difference (Arnold & Köpsel, 1996; Conrad, 1977) and others reporting better lipreading by children with HL (Beattie & Markides, 1992; Jerger et al., 2009; Lyxell & Holmberg, 2000). The present results are consistent with the studies reporting an advantage for children with HL, similar to that recently established in adults (Auer & Bernstein, 2007; Bernstein et al., 2000), and replicate Jerger et al. in showing that this advantage is present at least as early as age 7 years.

Our conclusions regarding the answers to our questions about age and group differences were reinforced by a multiple regression analysis using a composite lipreading measure based on all four instruments: Age accounted for more than 20% of the variance in the lipreading composite, and adding group (NH versus HL) to the regression model brought the total variance accounted for to nearly 35%. This analysis also addressed the third research question: How is the ability to lipread related to other perceptual, cognitive, and linguistic abilities? With age and group already in the model, adding visuospatial working memory as a predictor brought the total variance in lipreading accounted for to almost 40%. In contrast, measures of children’s visual perception, vocabulary, and phonological awareness all failed to predict lipreading performance once age and group were statistically controlled. Finally, among the children in the HL group, degree of hearing loss was not a significant predictor of lipreading ability.

The lack of a relation between degree of hearing loss and lipreading ability in the present study is consistent with previous findings in adults with early-onset hearing loss (Auer & Bernstein, 2007). It may be the case that any clinically significant hearing impairment increases even an aided listener’s reliance on visual speech information, particularly under degraded listening conditions, and that the presence, but not the degree, of hearing loss is the critical factor contributing to the improved lipreading abilities of participants with early HL.

The finding that only visuospatial working memory, of all the perceptual, cognitive, and linguistic variables measured, accounted for significant unique variance in lipreading performance is consistent with results obtained with both younger and older adult groups by Feld and Sommers (2009). Feld and Sommers measured adults’ verbal working memory and visuospatial working memory as well as perceptual ability and processing speed. They found that of these measures, only visuospatial working memory and processing speed accounted for significant unique variance in lipreading. Because lipreading requires temporarily storing and processing visual speech information, it is perhaps not surprising that visuospatial working memory is predictive of lipreading performance, although other interpretations are possible.

This finding is contrary to the conclusion reached by McGrath and Summerfield (1985), who argued that beyond minimal levels of intelligence and verbal ability, no other abilities were important for lipreading. It is also contrary to the view expressed by Summerfield (1992), whose review of the literature on lipreading and AV speech perception likewise concluded that lipreading was independent of other cognitive abilities. It should be noted, however, that visuospatial working memory accounted for a relatively small proportion of the variance in the current study, raising the possibility that other cognitive abilities (e.g., processing speed) also contribute to individual differences in children’s lipreading. Future research is needed to explore this possibility, particularly because the existence of multiple determinants might explain the large individual variability that is one of the hallmarks of lipreading in both children and adults (e.g., Auer & Bernstein, 2007; Lyxell & Holmberg, 2000) and might also provide clues as to how to improve lipreading performance.

Although individual differences in multiple cognitive abilities may contribute to lipreading, and despite the enormous individual differences in lipreading performance, lipreading itself appears to be a remarkably homogeneous ability. The PCA revealed that a child’s lipreading performance, relative to his or her peers, was relatively consistent across measures that varied considerably in what the child was required to do based on visual speech information: identify the final word in a carrier phrase, identify multiple words in a carrier sentence, repeat a meaningful sentence, or select an illustration that best captured the meaning of a sentence. Despite these diverse task and response requirements, children who performed well on one type of lipreading measure generally performed well on the other instruments, as evidenced by the very strong correlations among the measures and by the finding that individual differences in performance on all four measures could be described in terms of a single significant principal component reflecting a common lipreading ability factor. We should note, however, that in referring to lipreading as an ability we do not mean to imply that it is either immutable or innate. Indeed, the present results show that lipreading is not fixed, but rather improves with age. Moreover, the finding that children with HL, on average, show superior lipreading performance implies that experience plays an important role in the development of this ability.
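The single-component result can be illustrated with a principal component analysis of the correlation matrix of four measures. In the sketch below, four hypothetical scores per child are simulated as one shared ability plus measure-specific noise (the loading of 0.85 and the noise level are arbitrary assumptions, not the study’s data), the eigenvalues of their correlation matrix are computed with the classical cyclic Jacobi rotation method, and components are counted with the Kaiser criterion (eigenvalue greater than 1). The Kaiser rule is used here only as an illustrative retention criterion; it is our assumption, not necessarily the one applied in the study.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def jacobi_eigenvalues(S, tol=1e-12, max_sweeps=50):
    """Eigenvalues of a symmetric matrix by cyclic Jacobi rotations, largest first."""
    A = [list(row) for row in S]
    n = len(A)
    for _ in range(max_sweeps):
        off_diag = sum(A[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off_diag < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(A[p][q]) < 1e-15:
                    continue
                # Rotation angle chosen so that the rotated A[p][q] becomes zero.
                theta = (A[q][q] - A[p][p]) / (2.0 * A[p][q])
                t = (1.0 if theta >= 0 else -1.0) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                J = [[float(i == j) for j in range(n)] for i in range(n)]
                J[p][p], J[q][q], J[p][q], J[q][p] = c, c, s, -s
                Jt = [list(r) for r in zip(*J)]
                A = matmul(matmul(Jt, A), J)  # similarity transform keeps eigenvalues
    return sorted((A[i][i] for i in range(n)), reverse=True)


# Four hypothetical lipreading scores per child: shared ability + measure-specific noise.
rng = random.Random(11)
ability = [rng.gauss(0, 1) for _ in range(64)]
measures = [[0.85 * f + rng.gauss(0, 0.5) for f in ability] for _ in range(4)]

R = [[pearson(a, b) for b in measures] for a in measures]
eigenvalues = jacobi_eigenvalues(R)
retained = sum(1 for ev in eigenvalues if ev > 1.0)  # Kaiser criterion (illustrative)
print("eigenvalues:", [round(ev, 2) for ev in eigenvalues])
print("components retained:", retained)
print(f"variance explained by the first component: {eigenvalues[0] / len(R):.0%}")
```

Because the eigenvalues of a correlation matrix sum to the number of measures, the first eigenvalue divided by 4 gives the proportion of variance captured by the common component; strongly intercorrelated measures produce one large eigenvalue and several small ones.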

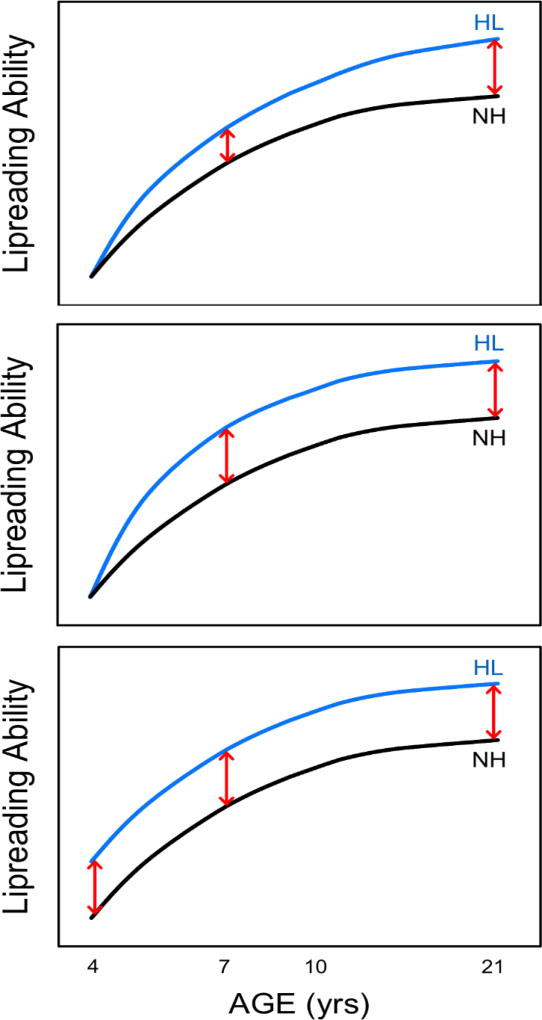

The present investigation focused on lipreading by children between 7 and 14 years of age with NH and with HL. In future work, we propose to establish the developmental trajectories of lipreading from early childhood (as young as 4 years of age) to early adulthood, and to elaborate on how prelingual hearing loss affects lipreading. As already noted, it is now fairly well established that adults with prelingual HL lipread better, on average, than adults with NH, and the present results establish that the superior lipreading performance of individuals with HL is present as early as 7 years of age. The size of the present samples, however, did not provide sufficient statistical power to distinguish between possible developmental trajectories leading to the observed difference between NH and HL groups in adults. There are at least three such trajectories, depicted in the three panels of Figure 7. Note the logarithmic age scale, which reflects the fact that perceptual and cognitive development is typically nonlinear, usually approaching an asymptote around the beginning of adulthood.

Figure 7.

Hypothetical developmental trajectories of Lipreading Ability for HL and NH groups. See the text for details concerning the hypothesis depicted in each of the three panels. Also note the logarithmic age scale.

One possibility is that because children with HL need to attend more to visual speech information, they get more practice lipreading than those with NH, who may only attend to visual speech information when the listening environment is very poor. The fact that those with HL continue to get more practice than those with NH as they get older might result in an ever-increasing difference in lipreading skill, as depicted in the top panel (note that the double-headed arrow at age 21 years, which indicates the difference between lipreaders with HL and NH, is nearly twice the size of the arrow at age 7 years).

Another possibility is that children may be especially sensitive to visual speech information at particular times in their lives. For example, they might be especially sensitive when they are becoming phonologically aware or when learning to read (typically between the ages of 4 and 7 years). This might lead to a developmental trajectory like that shown in the middle panel, in which the difference between children with HL and NH emerges during a sensitive period between 4 and 7 years, and is maintained (but importantly, does not increase) during later development (note that the arrows indicating the difference between lipreaders with HL and NH are the same size at ages 7 and 21 years).

A third possibility is that prelingual hearing loss exerts its effect on lipreading during initial language acquisition prior to age 4 years, resulting in a very early difference in lipreading skill that is then maintained throughout childhood and on into adulthood, as shown in the bottom panel (note that the arrows indicating the difference between lipreaders with HL and NH are the same length at 4, 7, and 21 years of age). This early difference could occur because hearing loss affects neural organization directly (e.g., depriving neurons of auditory input could lead to enhanced sensitivity to visual input) or indirectly via learning (e.g., being deprived of auditory information could force even very young children to rely more on visual information, thus promoting early acquisition of lipreading skills).
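The three hypotheses can be stated compactly as different functions describing how the HL-minus-NH gap in lipreading changes with age. The sketch below is purely illustrative: the function names, functional forms, and parameter values are our own assumptions, chosen only to mimic the qualitative shapes described for the three panels of Figure 7, and none is fitted to data.

```python
import math

# Hypothetical HL-minus-NH lipreading gaps in arbitrary units; the functional
# forms and parameter values are illustrative assumptions, not fitted to data.


def diverging(age, origin=2.0):
    """Top panel: continued extra practice keeps widening the gap (linear in log age)."""
    return max(0.0, math.log(age / origin))


def sensitive_period(age, start=4.0, end=7.0, gap=1.0):
    """Middle panel: the gap opens between start and end, then stays constant."""
    if age <= start:
        return 0.0
    if age >= end:
        return gap
    return gap * math.log(age / start) / math.log(end / start)


def early_onset(age, onset=4.0, gap=1.0):
    """Bottom panel: the gap (assumed to ramp up linearly from birth) is fully present by onset."""
    return gap * min(1.0, age / onset)


MODELS = {"diverging": diverging, "sensitive period": sensitive_period, "early onset": early_onset}

for name, gap_at in MODELS.items():
    gaps = {age: gap_at(age) for age in (4, 7, 21)}
    print(f"{name:17s}" + "  ".join(f"age {a}: {g:.2f}" for a, g in gaps.items()))
```

Written this way, only the top-panel hypothesis predicts a larger gap at 21 years than at 7 years, while the middle and bottom hypotheses differ only in whether a gap already exists at 4 years; distinguishing them therefore requires testing children younger than 7.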

Other trajectories are possible, of course, but whatever form the developmental trajectories for NH children and those with HL take, they will have important implications not only for understanding the processes underlying the development of lipreading, but also for efforts to help children with HL. For example, findings consistent with the trajectories shown in the middle panel, indicating that differences in lipreading emerge during a sensitive period, would suggest that differences in experience during this period play an important role and that aural rehabilitation interventions should be targeted at this period. Alternatively, evidence for trajectories such as those shown in the bottom panel would suggest that the critical sensitive period occurs prior to 4 years of age, and that interventions should be directed at very young children.

Although educators, physicians, and audiologists often confidently assume that children with HL can extract and use visual speech information from an early age, this is currently only an assumption with very little research to support it. We would suggest that if the developmental time-course of lipreading were better understood, it might provide clues as to the source of the extensive differences observed between individuals, and at the same time, enable us to tailor our interventions and pedagogies so as to exploit natural trends, such as developmental periods in which improvement is most likely to occur.

Acknowledgments

The development of the new instruments used in this work was supported by National Institutes of Health grant R01DC008964-01A1. We thank Elizabeth Mauze and Catherine Schroy, the project’s audiologists, for their assistance throughout the conduct of this work and Emily Yonker for her artwork.

References

- Alloway TP. Automated Working Memory Assessment (AWMA). London, England: Harcourt; 2007.

- Andersson U, Lyxell B, Roennberg J, Spens K-E. Cognitive correlates of visual speech understanding in hearing-impaired individuals. Journal of Deaf Studies & Deaf Education. 2001;6(2):103–116. doi: 10.1093/deafed/6.2.103.

- Arnold P, Köpsel A. Lipreading, reading and memory of hearing and hearing-impaired children. Scandinavian Audiology. 1996;25(1):13–20. doi: 10.3109/01050399609047550.

- Auer ET, Jr, Bernstein LE. Enhanced visual speech perception in individuals with early-onset hearing impairment. Journal of Speech, Language, and Hearing Research. 2007;50(5):1157–1165. doi: 10.1044/1092-4388(2007/080).

- Balota DA, Yap MJ, Cortese MJ, Hutchison KA, Kessler B, Loftis B, et al. The English lexicon project. Behavior Research Methods. 2007;39(3):445–459. doi: 10.3758/BF03193014.

- Beattie R, Markides A. The degree of hearing loss and lipreading ability: An example for the doctrine of sensory compensation? ACEHI Journal. 1992;18(2–3):110–114.

- Beery KE, Beery NA. The Beery-Buktenica Developmental Test of Visual-Motor Integration. Bloomington, Minnesota: Pearson Assessments; 2004.

- Bench J, Kowal A, Bamford J. The BKB (Bamford-Kowal-Bench) sentence lists for partially-hearing children. British Journal of Audiology. 1979;13(3):108–112. doi: 10.3109/03005367909078884.

- Bernstein LE, Demorest ME, Tucker PE. Speech perception without hearing. Perception & Psychophysics. 2000;62(2):233–252. doi: 10.3758/BF03205546.

- Blager FB, Alpiner JG. Correlation between visual-spatial ability and speechreading. Journal of Communication Disorders. 1981;14(4):331–339. doi: 10.1016/0021-9924(81)90017-4.

- Brownell R. Expressive One-Word Picture Vocabulary Test Manual. Novato, California: Academic Therapy Publications; 2000.

- Conrad R. Lip-reading by deaf and hearing children. British Journal of Educational Psychology. 1977;47(1):60–65. doi: 10.1111/j.2044-8279.1977.tb03001.x.

- Craig WN. Effects of preschool training on the development of reading and lipreading skills of deaf children. American Annals of the Deaf. 1964;109:280–296.

- Desjardins RN, Rogers J, Werker JF. An exploration of why preschoolers perform differently than do adults in audiovisual speech perception tasks. Journal of Experimental Child Psychology. 1997;66(1):85–110. doi: 10.1006/jecp.1997.2379.

- Dunn LM, Dunn DM. Peabody Picture Vocabulary Test - Fourth Edition (PPVT-4). Minneapolis, Minnesota: Pearson Education, Inc; 2007.

- Feld J, Sommers M. Lipreading, processing speed, and working memory in younger and older adults. Journal of Speech, Language, and Hearing Research. 2009;52:1555–1565. doi: 10.1044/1092-4388(2009/08137).

- Hall DA, Fussell C, Summerfield AQ. Reading fluent speech from talking faces: Typical brain networks and individual differences. Journal of Cognitive Neuroscience. 2005;17(6):939–953. doi: 10.1162/0898929054021175.

- Hnath-Chisolm TE, Laipply E, Boothroyd A. Age-related changes on a children's test of sensory-level speech perception capacity. Journal of Speech, Language, and Hearing Research. 1998;41(1):94–106. doi: 10.1044/jslhr.4101.94.

- Jeffers J, Barley M. Speechreading (lipreading). Springfield, Illinois: Thomas; 1971.

- Jerger S, Tye-Murray N, Abdi H. Role of visual speech in phonological processing by children with hearing loss. Journal of Speech, Language, and Hearing Research. 2009;52(2):412–434. doi: 10.1044/1092-4388(2009/08-0021).

- Lyxell B, Andersson U, Borg E, Ohlsson IS. Working-memory capacity and phonological processing in deafened adults and individuals with a severe hearing impairment. International Journal of Audiology. 2003;42(Suppl 1):S86–S89. doi: 10.3109/14992020309074628.

- Lyxell B, Holmberg I. Visual speechreading and cognitive performance in hearing-impaired and normal hearing children (11–14 years). British Journal of Educational Psychology. 2000;70(4):505–518. doi: 10.1348/000709900158272.

- Mattys SL, Bernstein LE, Auer ET. Stimulus-based lexical distinctiveness as a general word-recognition mechanism. Perception & Psychophysics. 2002;64(4):667–679. doi: 10.3758/BF03194734.

- McGrath M, Summerfield Q. Intermodal timing relations and audio-visual speech recognition by normal-hearing adults. Journal of the Acoustical Society of America. 1985;77(2):678–685. doi: 10.1121/1.392336.

- Mead RA, Lapidus LB. Psychological differentiation, arousal, and lipreading efficiency in hearing-impaired and normal children. Journal of Clinical Psychology. 1989;45(6):851–859. doi: 10.1002/1097-4679(198911)45:6<851::aid-jclp2270450604>3.0.co;2-6.

- Pisoni DB, Cleary M. Measures of working memory span and verbal rehearsal speed in deaf children after cochlear implantation. Ear and Hearing. 2003;24(1 Suppl):106S–120S. doi: 10.1097/01.AUD.0000051692.05140.8E.

- Ross LA, Molholm S, Blanco D, Gomez-Ramirez M, Saint-Amour D, Foxe JJ. The development of multisensory speech perception continues into the late childhood years. European Journal of Neuroscience. 2011;33(12):2329–2337. doi: 10.1111/j.1460-9568.2011.07685.x.

- Ross M, Lerman JW. Word Intelligibility by Picture Identification (WIPI). Pittsburgh, Pennsylvania: Stanwix House; 1971.

- Sommers MS, Tye-Murray N, Spehar B. Auditory-visual speech perception and auditory-visual enhancement in normal-hearing younger and older adults. Ear and Hearing. 2005;26(3):263–275. doi: 10.1097/00003446-200506000-00003.

- Summerfield Q. Speech perception in normal and impaired hearing. British Medical Bulletin. 1987;43(4):909–925. doi: 10.1093/oxfordjournals.bmb.a072225.

- Summerfield Q. Lipreading and audio-visual speech perception. Philosophical Transactions of the Royal Society of London B: Biological Sciences. 1992;335(1273):71–78. doi: 10.1098/rstb.1992.0009.

- Turner TH, Fridriksson J, Baker J, Eoute D, Jr, Bonilha L, Rorden C. Obligatory Broca's area modulation associated with passive speech perception. Neuroreport. 2009;20(5):492–496. doi: 10.1097/WNR.0b013e32832940a0.

- Tye-Murray N, Geers A. The Children's Audiovisual Enhancement Test (CAVET). St. Louis, MO: Central Institute for the Deaf; 2001.

- Tye-Murray N, Sommers MS, Spehar B. Audiovisual integration and lipreading abilities of older adults with normal and impaired hearing. Ear and Hearing. 2007;28(5):656–668. doi: 10.1097/AUD.0b013e31812f7185.

- Wagner RK, Torgesen JK, Rashotte CA. The Comprehensive Test of Phonological Processing (CTOPP). Austin, Texas: ProEd; 1999.