Abstract

Objective

Intracortical brain-machine interfaces (BMIs) are a promising source of prosthesis control signals for individuals with severe motor disabilities. Previous BMI studies have primarily focused on predicting and controlling whole-arm movements; precise control of hand kinematics, however, has not been fully demonstrated. Here, we investigate the continuous decoding of precise finger movements in rhesus macaques.

Approach

In order to elicit precise and repeatable finger movements, we have developed a novel behavioral task paradigm which requires the subject to acquire virtual fingertip position targets. In the physical control condition, four rhesus macaques performed this task by moving all four fingers together in order to acquire a single target. This movement was equivalent to controlling the aperture of a power grasp. During task performance, we recorded neural spikes from intracortical electrode arrays in primary motor cortex.

Main Results

Using a standard Kalman filter, we reconstructed continuous finger movement offline with an average correlation of ρ = 0.78 between actual and predicted position across four rhesus macaques. For two of the monkeys, this movement prediction was performed in real time to enable direct brain control of the virtual hand. Compared to physical control, neural control performance was slightly degraded; however, the monkeys were still able to perform the task successfully, with an average target acquisition rate of 83.1%. The monkeys’ ability to arbitrarily specify fingertip position was also quantified using an information throughput metric. During brain control task performance, the monkeys achieved an average throughput of 1.01 bits/s, similar to that achieved in previous studies that decoded whole-arm movements to control computer cursors using a standard Kalman filter.

Significance

This is, to our knowledge, the first demonstration of brain control of finger-level fine motor skills. We believe that these results represent an important step towards full and dexterous control of neural prosthetic devices.

Introduction

Intracortical brain-machine interfaces (BMIs) are a promising source of prosthesis control signals for individuals with severe motor disabilities. By decoding neural activity into intended upper-limb movement, BMIs have enabled both able-bodied monkeys and humans with tetraplegia to control computer cursors (Gilja et al., 2012; Jarosiewicz et al., 2015; Kim et al., 2008) and high degree-of-freedom robotic arms (Hochberg et al., 2012; Velliste et al., 2008). These studies have primarily focused on predicting and controlling whole-arm movements; restoration of precise hand movement, however, has not been fully demonstrated.

Previous studies have enabled the continuous control of one (Collinger et al., 2013; Velliste et al., 2008) or several (Wodlinger et al., 2015) hand shapes, though subjects were asked only to fully open or fully close each hand shape. Though this type of binary grasp control is useful in the short term, providing the ability to interact with simple objects in the environment, true restoration of natural movement requires continuous, volitional control of hand and finger kinematics. Further, activities of daily living such as manipulation of small objects, handwriting, and dressing all require complex, dexterous movements of the fingers. One participant in an ongoing clinical trial has been able to use imagined and physical index and thumb movements to control a computer cursor (Gilja et al., 2015), but the fidelity of finger movement decoding, rather than the ability to provide two-dimensional cursor control, has not been evaluated.

Several groups have investigated offline decoding of continuous finger-level movements from primary motor cortex in monkeys. After recording neural activity during reach-to-grasp behavioral tasks, in which the monkey reaches for different objects using unique grasps, these groups have reconstructed up to 18 (Aggarwal et al., 2013), 25 (Vargas-Irwin et al., 2010), and 27 (Menz et al., 2015) joint angles of the arm and hand simultaneously offline. The ability to decode many degrees of freedom with relatively high accuracy is encouraging for future dexterous BMIs, but the controllability of such systems in an online setting has not been evaluated. Further, hand and finger movements during these tasks are not isolated; rather, they are coincident with, and possibly highly correlated with, more proximal movements of the elbow and shoulder due to the stereotyped task behavior (Schaffelhofer et al., 2015). It is thus unclear whether decoding accuracy would be maintained in other behavioral contexts. Some studies have successfully decoupled arm and hand kinematics in offline analyses (Vargas-Irwin et al., 2010), but did not directly demonstrate decoding of isolated hand movement.

To study isolated finger movements in the early 1990s, Schieber introduced a manipulandum allowing the measurement of individual finger flexion and extension over a range of a few millimeters by actuating force sensors and micro-switches (Schieber, 1991). This paradigm has been used successfully by several groups to classify multiple hand and wrist movements (Egan et al., 2012; Hamed et al., 2007), and continuously decode the instantaneous position of all five digits simultaneously offline (Aggarwal et al., 2009). The high accuracy of such results is promising; however, as the range of movement measured by the manipulandum is severely limited, generalization to natural movement is not possible.

These studies have demonstrated the potential for extracting finger-level information from neural activity, but the extracted information has not been used to evaluate actual functional control. This requires a transition to brain controlled task performance, in which the task is completed using the decoded rather than physical movements and the subject can act to change and correct the decoded movement in real time. In order to enable the evaluation of BMI-controlled finger and hand movements, virtual reality simulators have been developed in which behavioral tasks can be performed through an avatar (Aggarwal et al., 2011; Putrino et al., 2015). In these systems, avatars could be actuated via either physical movements or decoded movements, enabling both offline and online, brain controlled assessment of BMI performance. In one study, a monkey controlled a virtual hand avatar in brain control mode, though in this case the avatar was controlled via neural activity generated as the monkey moved a joystick with wrist and arm movements, and did not directly involve physical movement of the fingers (Rouse, 2016).

Though promising, to our knowledge these systems have not yet been used to decode finger movements in order to enable brain control of the avatar.

We have used this virtual reality paradigm to develop a novel finger-level behavioral task in which a monkey acquires fingertip position targets. Here, we used this task to investigate the continuous decoding of precise finger movements from primary motor cortex, isolated from confounding movements of the upper arm. We analyzed the resulting data in order to study the inherent kinematic tuning of motor cortex neurons during finger movements and identify the optimal parameters for decoding those movements. We used these results to present the first demonstration of brain control of fingertip position.

Methods

All procedures were approved by the University of Michigan Institutional Animal Care and Use Committee.

A. Behavioral task

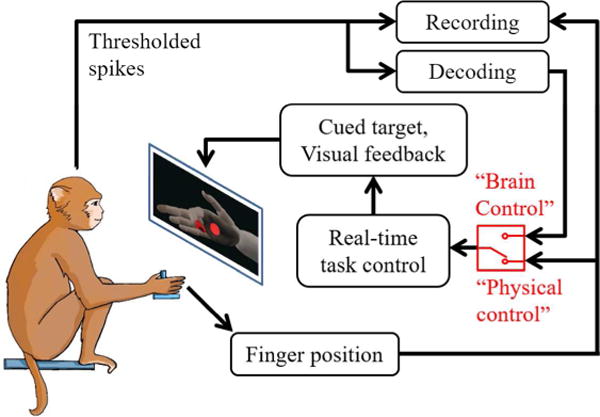

We trained four rhesus macaques, Monkeys S, P, L, and W, to perform simultaneous movements of all four fingers together to hit fingertip position targets in a virtual environment, as illustrated in Figure 1. This is equivalent to varying the aperture of a power grasp. The monkey sat in a shielded chamber with its right arm at its side, forearm flexed 90 degrees and resting on a table. The monkey’s palm was lightly restrained facing to the left, with the fingers free to move unimpeded. A flex sensor (FS-L-0073-103-ST, Spectra Symbol) was attached to the index finger, covering all three joints, in order to measure the finger position. Position data were read by a real-time computer running xPC Target (Mathworks). A computer monitor directly in front of the monkey displayed a virtual model of a monkey hand (MusculoSkeletal Modeling Software; Davoodi et al., Online), which was actuated by the xPC in order to mirror the monkey’s movements.

Figure 1.

Behavioral task illustration. The monkey performed flexion and extension movements of the four fingers together in order to hit virtual targets on a computer screen. The virtual hand could be controlled either through physical movements (“Physical control”), measured via flex sensor, or through decoded movements (“Brain control”) based on the thresholded neural spikes. Only one finger of the virtual hand was actuated on the screen, despite the monkey moving all four fingers together. This did not appear to affect the monkey’s behavior.

At the start of each trial, the xPC cued a spherical target to appear in the path of the virtual finger, and the monkey was required to move its fingers in order to hit the target and hold for a set period (100–500 ms, depending on the stage of training). Only the index finger was instrumented with a flex sensor, but the monkeys made movements with all four fingers simultaneously (as verified by visual monitoring of behavior). Targets could be generated in one of two patterns, flex-extend or center-out. In the flex-extend pattern, which was the first task learned by each monkey, targets were presented in positions requiring either full flexion or full extension in alternating trials. In the center-out pattern, a target was initially presented in the neutral or rest position, half-way between flexed and extended. Once the monkey successfully acquired this target, a second target was pseudorandomly presented in one of six positions requiring differing degrees of flexion or extension. After this target was successfully acquired or the trial timed out, the neutral target was again presented until successful acquisition.

B. Electrophysiology

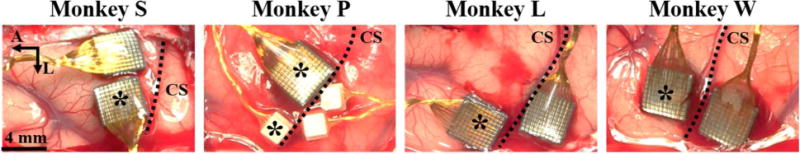

We implanted each monkey with intracortical electrode arrays in the hand area of primary motor cortex, as identified by surface landmarks. The genu of the arcuate sulcus was identified in the craniotomy, and a line was traced posteriorly to central sulcus. Arrays were placed on this line just anterior to central sulcus, as allowed by vasculature. Diagrams of each implantation are shown in Figure 2. Monkey S received two 96-channel Utah arrays (Blackrock Microsystems) in motor cortex. Monkey P received one 96-channel Utah array and one 16-channel FMA (MicroProbes) in motor cortex, and two 16-channel FMAs in sensory cortex. Monkeys L and W each received two 96-channel Utah arrays, one in primary motor and one in primary sensory cortex.

Figure 2.

Surgical photos of each monkey’s electrode array placement. Asterisks indicate arrays used for analysis. CS – central sulcus, A – anterior, L – lateral.

During experimental sessions, we recorded broadband data at 30 kS/s using a Cerebus neural signal processor (Blackrock Microsystems). Neural spikes were detected by thresholding at −4.5 times the RMS voltage on each channel, after high-pass filtering the broadband data at 250 Hz. Thresholded spikes were simultaneously recorded for offline analysis and streamed to the xPC for online decoding.
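As an illustration of the spike detection step above, the following Python sketch high-pass filters one channel of broadband data at 250 Hz, computes the channel RMS, marks negative-going crossings of −4.5 × RMS, and bins the crossings into firing rates. It is not the Cerebus implementation: the 4th-order Butterworth filter, causal filtering with `scipy.signal.sosfilt`, computing the RMS over the whole recording, and the helper names are all assumptions made for illustration.

```python
import numpy as np
from scipy.signal import butter, sosfilt


def detect_threshold_crossings(raw, fs=30_000.0, cutoff_hz=250.0, k=-4.5):
    """Return sample indices where the high-pass-filtered signal first drops below k * RMS.

    raw: 1-D array of broadband voltage from a single channel.
    """
    # Assumed filter design: 4th-order Butterworth high-pass (order not given in the text)
    sos = butter(4, cutoff_hz, btype="highpass", fs=fs, output="sos")
    filtered = sosfilt(sos, raw)  # causal filtering, as an online system would require
    threshold = k * np.sqrt(np.mean(filtered ** 2))  # assumed: RMS over the whole record
    below = filtered < threshold
    # A crossing is a sample below threshold whose predecessor was at or above it
    return np.flatnonzero(below[1:] & ~below[:-1]) + 1


def bin_counts(crossings, n_samples, fs=30_000.0, bin_ms=100.0):
    """Convert crossing indices into firing rates (spikes/s) in non-overlapping bins."""
    bin_len = int(round(fs * bin_ms / 1000.0))
    n_bins = n_samples // bin_len
    counts = np.bincount(crossings // bin_len, minlength=n_bins)[:n_bins]
    return counts / (bin_ms / 1000.0)
```

Detecting only the first sample of each excursion below threshold means that a single spike waveform is counted once, even if it stays below threshold for several samples.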

C. Decoding

Offline, we used a linear Kalman filter (Wu et al., 2006) to decode continuous finger position from the thresholded neural spikes. Mean hand kinematics and neural firing rates were computed in consecutive, non-overlapping time bins. Hand kinematics at time bin $t$ are collectively described by the hand state vector $X_t = [p, v, a, 1]^T$, where $p$ is the finger position as directly measured by the flex sensor, and $v$ and $a$ are the finger velocity and acceleration, calculated as the first and second differences of position. Firing rates for each channel at time $t$ are collected in the neural activity vector $Y_t = [y_1, \ldots, y_N]^T$, where $y_k$ is the firing rate of the $k$th channel. In the Kalman framework, hand state is described by a linear dynamical system, and neural activity is modeled as a noisy transformation of the current hand state:

$$X_t = A X_{t-1} + w_t \tag{1}$$

$$Y_t = C X_t + q_t \tag{2}$$

where A is the state transition matrix and C is the observation transformation matrix, as below,

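$$A = \begin{bmatrix} a_{11} & a_{12} & a_{13} & a_{14} \\ a_{21} & a_{22} & a_{23} & a_{24} \\ a_{31} & a_{32} & a_{33} & a_{34} \\ 0 & 0 & 0 & 1 \end{bmatrix} \tag{3}$$

$$C = \begin{bmatrix} c_{1,p} & c_{1,v} & c_{1,a} & c_{1,0} \\ \vdots & \vdots & \vdots & \vdots \\ c_{N,p} & c_{N,v} & c_{N,a} & c_{N,0} \end{bmatrix} \tag{4}$$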

The noise terms $w_t$ and $q_t$ are assumed to be drawn from independent zero-mean Gaussian distributions with covariances $W$ and $Q$, respectively.
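To make the model above concrete, the following Python sketch builds the hand state from a position trace, fits A, W, C, and Q by least squares in the manner of Wu et al. (2006), and runs the standard (non-steady-state) filter. It is a simplified illustration under stated assumptions, not the code used in this study: fitting via `np.linalg.lstsq`, a zero initial state covariance, and a known initial state are assumptions, and all function names are hypothetical.

```python
import numpy as np


def build_state(position, dt):
    """Hand state X_t = [p, v, a, 1] per bin; v and a are first and second differences of p."""
    velocity = np.diff(position, prepend=position[0]) / dt
    acceleration = np.diff(velocity, prepend=velocity[0]) / dt
    return np.column_stack([position, velocity, acceleration, np.ones_like(position)])


def train_kalman(X, Y):
    """Least-squares estimates of A, W (state model) and C, Q (observation model).

    X: (T, d) hand states; Y: (T, N) binned firing rates.
    """
    # State model X_t = A X_{t-1} + w_t, solved as X[1:] ~ X[:-1] @ A.T
    A = np.linalg.lstsq(X[:-1], X[1:], rcond=None)[0].T
    W = np.cov((X[1:] - X[:-1] @ A.T).T)
    # Observation model Y_t = C X_t + q_t, solved as Y ~ X @ C.T
    C = np.linalg.lstsq(X, Y, rcond=None)[0].T
    Q = np.cov((Y - X @ C.T).T)
    return A, W, C, Q


def kalman_decode(Y, A, W, C, Q, x0):
    """Standard Kalman filter; returns the decoded state for every row of Y."""
    d = A.shape[0]
    x = np.asarray(x0, dtype=float).copy()
    P = np.zeros((d, d))  # assumption: the initial state is known exactly
    decoded = np.empty((Y.shape[0], d))
    for t in range(Y.shape[0]):
        x = A @ x                          # predict state
        P = A @ P @ A.T + W                # predict covariance
        S = C @ P @ C.T + Q                # innovation covariance
        K = np.linalg.solve(S, C @ P).T    # Kalman gain P C^T S^-1
        x = x + K @ (Y[t] - C @ x)         # correct with the observed firing rates
        P = (np.eye(d) - K @ C) @ P
        decoded[t] = x
    return decoded
```

Because the constant 1 is part of the state, each channel's baseline firing rate is absorbed into the last column of C rather than being subtracted beforehand.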

In all offline decoding, we used 10-fold cross-validation, training and testing the Kalman filter on separate sets of contiguous trials from the same day, so that performance was never measured on the data used for training. For each monkey, one experimental day was set aside for optimizing decoding parameters, and the optimal settings learned on this day were then applied to each testing day. The optimized parameters were the bin size; the time lag (the physiological delay between neural firing rates and kinematic measurements); and the kinematic tuning (whether modeling firing rates as tuned only to position, velocity, or acceleration, or to a combination of these, resulted in more accurate movement prediction).
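A minimal sketch of the contiguous-trial split in Python, assuming trials are indexed in recording order: each of the 10 folds holds out one contiguous block of trials for testing and trains on the remaining trials. The function name and the use of `np.linspace` to size the folds are illustrative choices, not the authors' code; simply concatenating the training trials on either side of the held-out block is also an assumption.

```python
import numpy as np


def contiguous_folds(n_trials, n_folds=10):
    """Yield (train_idx, test_idx) pairs; each test fold is one contiguous block of trials."""
    edges = np.linspace(0, n_trials, n_folds + 1).astype(int)
    all_idx = np.arange(n_trials)
    for lo, hi in zip(edges[:-1], edges[1:]):
        test = all_idx[lo:hi]
        # Training set: every trial before and after the held-out block
        train = np.concatenate([all_idx[:lo], all_idx[hi:]])
        yield train, test
```

Holding out contiguous blocks, rather than randomly chosen bins, keeps temporally adjacent and therefore correlated samples from appearing in both the training and testing sets.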

D. Online brain control

To determine the functional utility of the offline finger decoding, we enabled two monkeys to perform the behavioral task under brain control, using a real-time prediction of finger position. For each experimental session, Monkeys L and W performed the center-out task using physical movements for ~300 trials. The algorithm was trained on this set of data, and was run in real-time for the rest of the experiment. During brain control trial blocks, the virtual hand was controlled using the decoded finger position instead of the monkey’s actual finger movements. The task remained the same, occasionally with slightly larger targets or shorter target hold time to provide motivation for the monkey to continue.

For computational efficiency, we used the steady-state Kalman filter (Dethier et al., 2013; Malik et al., 2011) for online decoding. The only difference between the steady-state filter and the standard filter used offline is that the steady-state Kalman gain is pre-computed during training. Although the standard filter updates the Kalman gain at every timestep, the gain depends only on the constant matrices A, C, W, and Q (and the initial state covariance), and it quickly converges to a steady-state value. In comparisons with the standard filter, both offline and online in brain control mode, the gain matrices and the kinematic predictions generally converged within 5 s (Malik et al., 2011). Thus, to compute the gain matrix during training, we iterated the gain update step of the Kalman algorithm for the equivalent of 5 s and saved the final matrix for online use.
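The pre-computation of the steady-state gain can be sketched in Python as follows, assuming the standard Kalman covariance recursion started from a zero state covariance and a bin width `dt` in seconds; the number of iterations corresponds to 5 s of bins, as in the text. The zero initial covariance and the function names are assumptions for illustration.

```python
import numpy as np


def steady_state_gain(A, W, C, Q, dt, settle_s=5.0):
    """Iterate the Kalman covariance/gain recursion for settle_s seconds of bins.

    Assumes the recursion starts from a zero state covariance (illustrative choice).
    """
    d = A.shape[0]
    P = np.zeros((d, d))
    K = np.zeros((d, C.shape[0]))
    for _ in range(int(round(settle_s / dt))):
        P_pred = A @ P @ A.T + W                 # a priori covariance
        S = C @ P_pred @ C.T + Q                 # innovation covariance
        K = np.linalg.solve(S, C @ P_pred).T     # K = P_pred C^T S^-1
        P = (np.eye(d) - K @ C) @ P_pred         # a posteriori covariance
    return K


def steady_state_step(x_prev, y, A, C, K):
    """One online decoding step with the fixed, pre-computed gain."""
    x_pred = A @ x_prev
    return x_pred + K @ (y - C @ x_pred)
```

Online, each bin then requires only one prediction and one fixed-gain correction, with no matrix inversion.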

To evaluate the performance of the online movement decoding during brain control, we computed several metrics including trial success rate, time to target acquisition, and bit rate via Fitts’s law (Thompson et al., 2014), and compared them to those computed during physical task control.

Results

A. Neural and kinematic data

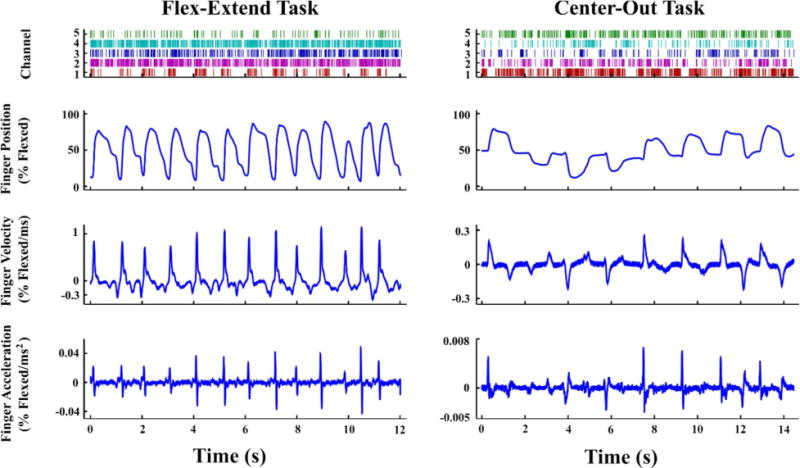

Examples of kinematic and neural data recorded during task behavior are shown in Figure 3, for both the flex-extend and center-out tasks. Neural modulation can be seen in both tasks, though the exact relationship between neural firing rates and behavior is unclear and seems to vary between channels. The flex-extend task paradigm was used as an intermediate step in training, and thus the behavior is both simpler and generally performed faster than the center-out task. The flex-extend task was performed by all four monkeys, while the center-out task was performed only by Monkeys L and W.

Figure 3.

Finger kinematics and associated neural spikes from (left) Monkey P performing the flex-extend task and (right) Monkey L performing the center-out task. Each spike raster displays five separate channels, chosen to be exemplary of modulated activity in each monkey. Within a raster row, the time of each individual spike event is represented by a tick.

To examine the kinematic tuning of each channel, we computed the correlation coefficient (Pearson’s r) between the kinematic data (position, velocity, and acceleration) and each single neural channel binned at 100 ms. This was performed on an experimental day reserved for this purpose. To account for the preferred time lag of each channel, we repeated the calculation with multiple lags, from 0 ms to 200 ms, for each kinematic variable and recorded the maximum correlation. Figure 4 shows the distribution of correlation values with each kinematic variable.
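The lagged correlation analysis above can be sketched in Python for a single channel and a single kinematic variable, with firing rates and kinematics already binned. Two details are assumptions rather than statements from the text: lags are expressed in whole bins, and the "maximum correlation" is taken at the lag with the largest |r|, keeping its sign so that negatively tuned channels fall below zero. The function names are hypothetical.

```python
import numpy as np


def lagged_r(rate, kin, lag):
    """Pearson r between the firing rate at bin t - lag and the kinematics at bin t."""
    rate = np.asarray(rate, dtype=float)
    kin = np.asarray(kin, dtype=float)
    if lag > 0:
        rate, kin = rate[:-lag], kin[lag:]  # neural activity leads movement by `lag` bins
    return np.corrcoef(rate, kin)[0, 1]


def best_lagged_r(rate, kin, lags):
    """Return (r, lag) at the lag with the largest |r|, keeping the sign of r."""
    rs = np.array([lagged_r(rate, kin, lag) for lag in lags])
    i = int(np.argmax(np.abs(rs)))
    return rs[i], lags[i]
```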

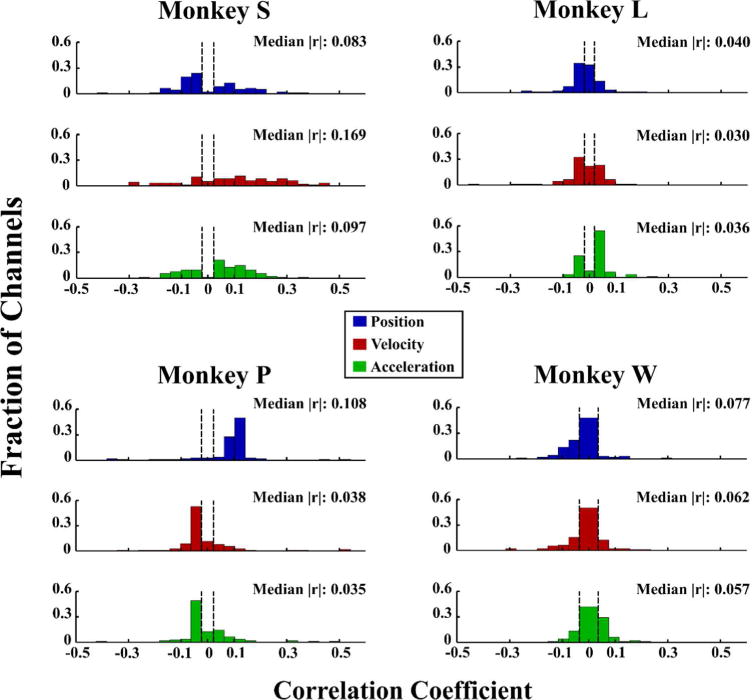

Figure 4.

Distribution of kinematic tuning for each monkey, as a histogram of correlation values for all single neural channels. Monkeys S and P performed the flex-extend task, while Monkeys L and W performed the center-out task. Dashed lines represent the statistical significance threshold for each monkey (p < .05, based on a two-tailed t-test of the transformed correlation coefficient). Reported medians are based only on statistically significant correlation values.
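Under one reading of "transformed correlation coefficient", the significance threshold in Figure 4 follows from the standard transform t = r√(n − 2)/√(1 − r²) with a two-tailed test at p < .05, which gives a critical |r| that shrinks as the number of bins n grows. The Python sketch below assumes this t transform (a Fisher z transform would give a slightly different threshold) and uses `scipy.stats.t.ppf`; the function name is hypothetical.

```python
import numpy as np
from scipy.stats import t as t_dist


def critical_r(n_bins, alpha=0.05):
    """Smallest |r| that is significant in a two-tailed test with n_bins paired samples.

    Assumes the t transform t = r * sqrt(n - 2) / sqrt(1 - r**2), inverted at the
    two-tailed critical t value with n - 2 degrees of freedom.
    """
    df = n_bins - 2
    t_crit = t_dist.ppf(1.0 - alpha / 2.0, df)
    return float(t_crit / np.sqrt(df + t_crit ** 2))
```

For example, `critical_r(10)` is about 0.632, the familiar tabulated value for ten samples; with thousands of 100 ms bins per session the threshold falls well below 0.1, so even weakly tuned channels can reach significance.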

Kinematic correlations with single channel firing rates were generally low, as expected due to the stochastic nature of the neural data. Though tuning was widely variable between monkeys, finger position was the best represented parameter in three of four animals (with channels from Monkey S being more tuned to velocity). However, within each monkey, at least some channels were tuned to each kinematic parameter. Both Monkey L and Monkey W had generally lower levels of tuning than Monkeys S and P, as well as more symmetric distributions about zero.

We also compared tuning distributions across two days per monkey and between tasks for Monkeys L and W, as shown in supplementary Figure S1. Distributions varied somewhat across both days and tasks, though position remained the best represented parameter in all cases except Monkey S (for whom velocity was best represented on the first day, and was equal to position on the second). In Monkeys L and W, distributions shifted more across tasks than across days, though more study is needed to determine whether these task-related changes are outside of the normal day-to-day variance. Finally, all monkeys displayed unique tuning distributions even when performing the same task, suggesting that these differences are intrinsic to the monkey rather than being solely related to the task. Whether this is caused by differences in array placement or simply by unique brain structure is unknown and requires further study. As the relationship between neural firing rates and movement remains unclear and appears to vary widely, we investigated the functional impact of including various combinations of kinematic parameters in the Kalman filter model during optimization.

B. Parameter optimization

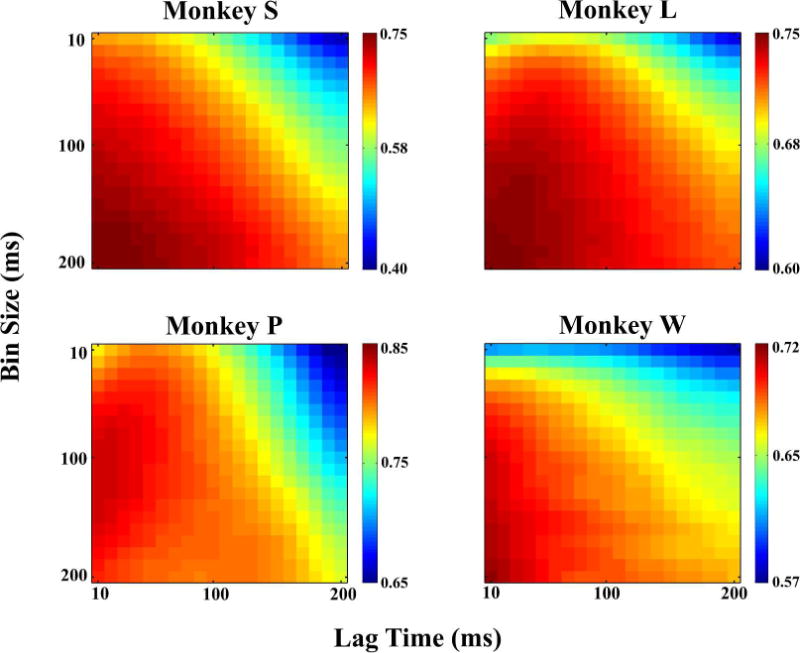

To optimize decoding parameters for each monkey, we performed a grid search over bin size and time lag (10–200 ms for each parameter, with a single time lag for all channels) on the reserved experimental day. For each parameter set, the Kalman filter was trained and tested with 10-fold cross-validation. The results of this grid search for each monkey are shown in Figure 5 as a heatmap of correlation coefficients between actual and predicted finger position.

Figure 5.

Offline movement decoding performance (correlation coefficient) as determined by the bin size and time lag parameters. Each monkey displayed a unique pattern of optimal parameters, though performance tended to increase as bin size increased and lag time decreased (Monkey P being an exception). One parameter setting which was near optimal for all monkeys was 100 ms bin size and zero time lag.
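The bin-size and time-lag grid search described above can be organized as in the following Python sketch. `bin_with_lag` pairs each kinematic bin with the neural bin that precedes it by `lag_ms`, and `grid_search` fills a (bin sizes × lags) table from any scoring function, such as the cross-validated decoding correlation. Both helpers are hypothetical, and the assumptions that spike counts and kinematics have been resampled to 1 ms and that parameters are whole milliseconds are ours, not the text's.

```python
import numpy as np


def bin_with_lag(counts_1ms, kin_1ms, bin_ms, lag_ms):
    """Bin 1-ms spike counts (channels x T) and kinematics (T,), with neural data leading.

    Kinematic bin j covers [lag_ms + j*bin_ms, lag_ms + (j+1)*bin_ms); the paired neural
    bin covers the same window shifted back by lag_ms. Returns rates (bins x channels)
    in spikes/s and the mean kinematics per bin.
    """
    n_ch, T = counts_1ms.shape
    n_bins = (T - lag_ms) // bin_ms
    stop = n_bins * bin_ms
    neural = counts_1ms[:, :stop].reshape(n_ch, n_bins, bin_ms).sum(axis=2)
    kin = kin_1ms[lag_ms:lag_ms + stop].reshape(n_bins, bin_ms).mean(axis=1)
    return neural.T / (bin_ms / 1000.0), kin


def grid_search(score_fn, bin_sizes_ms, lags_ms):
    """Evaluate score_fn(bin_ms, lag_ms) for every combination; returns a bins x lags array."""
    scores = np.empty((len(bin_sizes_ms), len(lags_ms)))
    for i, b in enumerate(bin_sizes_ms):
        for j, lag in enumerate(lags_ms):
            scores[i, j] = score_fn(b, lag)
    return scores
```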

In general, performance tended to increase with larger bin size, though Monkey P’s decoding performance was optimal in a restricted range around 100 ms. This discrepancy may be explained by the speed with which Monkey P performed the task: bins longer than ~100 ms may have smoothed out the behavior and discarded valuable information. Time lags close to zero also tended to be better, but there was more variability between monkeys than for bin size. In each monkey, there was a wide range of “optimal” parameter settings with similar performance. One setting that was close to optimal for all monkeys (within 4% of the maximum performance) was a bin size of 100 ms with zero time lag. These parameters are similar to those used previously for decoding both arm (Cunningham et al., 2011; Kim et al., 2008) and hand (Aggarwal et al., 2013; Menz et al., 2015) movements, and were used for all further offline decoding. Because optimal decoding parameters typically differ between offline and online brain control decoding, with shorter time bins generally being better for online control (Cunningham et al., 2011), online brain control decoding parameters were optimized separately on each experimental day.

We also investigated the impact of including each kinematic parameter in the Kalman filter observation model; that is, we modeled neural firing rates as related solely to position, velocity, or acceleration; to a combination of position and velocity; or to all three kinematic parameters. This was accomplished by setting to zero the columns of the C matrix corresponding to the excluded kinematics. For example, modeling neural firing rates as related only to velocity would modify C to the following:

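$$C = \begin{bmatrix} 0 & c_{1,v} & 0 & c_{1,0} \\ \vdots & \vdots & \vdots & \vdots \\ 0 & c_{N,v} & 0 & c_{N,0} \end{bmatrix} \tag{5}$$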

indicating that neither position nor acceleration can affect the firing rate of any channel. In this case, the predicted velocity at each timestep is affected by both the previous hand state and the current neural activity, while position is estimated solely via temporal integration of the predicted velocity. Because the neural data are explicitly modeled as containing no acceleration information, acceleration could likewise be estimated only by differentiating the predicted velocity. However, this would not serve the ultimate goal of predicting finger position (its only effect on velocity would be detrimental, owing to errors in differentiation and integration), so acceleration is not estimated at all in this model.
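The zeroed-column models above can be fit by ordinary least squares on only the retained columns of the hand state, as in this Python sketch. It assumes the state ordering [p, v, a, 1] from the Decoding section and that the constant (baseline) column is always kept; both that choice and the function and argument names are assumptions for illustration.

```python
import numpy as np

STATE_NAMES = ("p", "v", "a", "1")  # order of the hand state X_t = [p, v, a, 1]^T


def fit_restricted_observation(X, Y, tuned):
    """Least-squares C for Y_t ~ C X_t, with columns of untuned kinematics fixed at zero.

    X: (T, 4) hand states; Y: (T, N) firing rates; tuned: subset of {"p", "v", "a"}.
    Assumption: the constant column is always kept so each channel retains a baseline.
    """
    keep = [i for i, name in enumerate(STATE_NAMES) if name in tuned or name == "1"]
    C = np.zeros((Y.shape[1], X.shape[1]))
    coef = np.linalg.lstsq(X[:, keep], Y, rcond=None)[0]  # shape (len(keep), N)
    C[:, keep] = coef.T
    return C
```

For the "V only" model, `fit_restricted_observation(X, Y, {"v"})` returns a C whose position and acceleration columns are exactly zero, so the observed firing rates can update only the velocity estimate directly.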

The movement decoding performance resulting from each neural tuning model is presented in Table 1. Here, performance is measured by the correlation coefficient between predicted and actual kinematics. Correlation coefficients are listed separately for each individual kinematic parameter under each model (not including parameters which are not estimated for a given model).

Table 1.

Offline Kalman filter movement decoding performance (correlation coefficient, ρ, between predicted and actual kinematic parameters) under different neural tuning models.

| Neural model | Monkey S ρ (P) | Monkey S ρ (V) | Monkey S ρ (A) | Monkey P ρ (P) | Monkey P ρ (V) | Monkey P ρ (A) | Monkey L ρ (P) | Monkey L ρ (V) | Monkey L ρ (A) | Monkey W ρ (P) | Monkey W ρ (V) | Monkey W ρ (A) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| P only | 0.65 | – | – | 0.56 | – | – | 0.47 | – | – | 0.56 | – | – |
| V only | 0.53 | 0.75 | – | 0.77 | 0.80 | – | 0.66 | 0.63 | – | 0.67 | 0.56 | – |
| A only | 0.49 | 0.61 | 0.64 | 0.66 | 0.64 | 0.61 | 0.25 | 0.10 | 0.25 | 0.26 | 0.13 | 0.25 |
| P+V | 0.73 | 0.79 | – | 0.83 | 0.82 | – | 0.73 | 0.65 | – | 0.71 | 0.58 | – |
| P+V+A | 0.74 | 0.80 | 0.62 | 0.83 | 0.82 | 0.66 | 0.74 | 0.65 | 0.33 | 0.70 | 0.58 | 0.29 |

Under the single-parameter models, both position and velocity were more accurately predicted when neural activity was modeled as related only to velocity (the “V only” model, with position estimated purely as integrated velocity), with Monkey S being an exception. However, including both parameters in the tuning model (the “P+V” model) substantially increased decoding performance over all single-parameter models, whereas adding acceleration (the “P+V+A” model) did not appreciably increase performance over the position and velocity model. Thus, the two-parameter model (i.e., neural firing rates are related to both position and velocity, and acceleration is ignored) was used for all further analysis.

C. Offline decoding

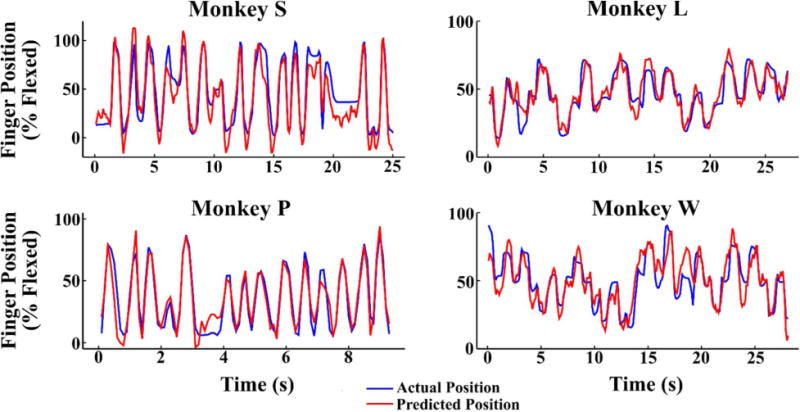

Using a standard Kalman filter with these optimal parameters, we decoded three days of experiments for each monkey with an average correlation coefficient of 0.803, 0.858, 0.709, and 0.740 (for Monkey S, P, L, and W, respectively) between true and predicted finger position. This performance level is very similar to that reported by previous studies during both reach-to-grasp tasks and limited isolated finger movement tasks (Aggarwal et al., 2009; Menz et al., 2015; Vargas-Irwin et al., 2010). Movement decoding performance for each session is shown in Table 2 as correlation coefficient and root-mean-squared error (RMSE), and example traces are shown in Figure 6 for each monkey. For these experimental sessions, Monkeys S and P performed the flex-extend task, while Monkeys L and W performed the center-out task.

Table 2.

Offline decoding performance.

| Session | Monkey S ρ (RMSE) | Monkey P ρ (RMSE) | Monkey L ρ (RMSE) | Monkey W ρ (RMSE) |
|---|---|---|---|---|
| 1 | 0.827 (0.176) | 0.868 (0.121) | 0.659 (0.143) | 0.776 (0.130) |
| 2 | 0.772 (0.209) | 0.894 (0.119) | 0.705 (0.135) | 0.734 (0.134) |
| 3 | 0.810 (0.182) | 0.812 (0.161) | 0.763 (0.123) | 0.709 (0.149) |
| Mean | 0.803 (0.189) | 0.858 (0.134) | 0.709 (0.134) | 0.740 (0.139) |

Figure 6.

Sample decoded movements for each monkey, with the blue trace indicating the true finger position and the red trace indicating the predicted position. Monkeys S and P performed the flex-extend task, while Monkeys L and W performed the center-out task.

Performance was noticeably lower for Monkeys L and W, which was likely due to the more complex behavioral task. To explicitly compare decoding performance between task types, we also decoded two sessions in which Monkeys L and W performed both the center-out and the flex-extend tasks. As shown in Table 3, decoding correlation for the flex-extend task increased relative to the center-out task performed on the same day, though RMSE also increased due to the decoded movement “overshooting” near the minimum and maximum finger positions.
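The divergence between correlation and RMSE noted above can be illustrated with a short, purely synthetic Python sketch: Pearson's ρ is unchanged when a prediction is scaled about its mean, so a decode that overshoots both movement extremes can keep a perfect correlation while its RMSE grows. The trace and the 25% overshoot are invented for illustration and are not data from this study; the function name is hypothetical.

```python
import numpy as np


def corr_and_rmse(actual, predicted):
    """Pearson correlation and root-mean-squared error between two position traces."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    rho = np.corrcoef(actual, predicted)[0, 1]
    rmse = np.sqrt(np.mean((actual - predicted) ** 2))
    return rho, rmse


# Synthetic flex-extend trace alternating between fully extended (0) and fully flexed (1)
actual = np.repeat([0.0, 1.0] * 5, 20)
# Illustrative decode that overshoots both extremes by 25% of the movement range
overshoot = 0.5 + 1.5 * (actual - 0.5)
rho, rmse = corr_and_rmse(actual, overshoot)
```

Here ρ is 1 while the RMSE is 0.25, which is why both metrics are reported in Tables 2 and 3.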

Table 3.

Offline task comparison.

| Session | Task | Monkey L ρ (RMSE) | Monkey W ρ (RMSE) |
|---|---|---|---|
| 1 | Center-out | 0.659 (0.143) | 0.749 (0.144) |
| 1 | Flex-extend | 0.795 (0.220) | 0.838 (0.208) |
| 2 | Center-out | 0.733 (0.123) | 0.736 (0.164) |
| 2 | Flex-extend | 0.758 (0.189) | 0.793 (0.242) |

These differences indicate that care must be taken in the design of behavioral tasks so that the accuracy of movement prediction is not overstated. Though performance may be higher in a simpler task that requires only fully flexed and fully extended positions (at least offline), natural movement is necessarily more complex.

D. Online brain control

Following offline testing in Monkeys L and W, we used a steady-state position/velocity Kalman filter to decode movements in real-time during task performance. The filter was optimized and trained on the first ~300 trials of behavior, with a number of parameters tested both offline and during online brain control. In two days of experiments for Monkey L and three days for Monkey W, both 50 and 100 ms bins were used, with 50 and 0 ms of lag. In subsequent blocks of trials, the monkey used either physical movements or the predicted movements (brain control) in order to perform the task. The required hold time for each target ranged from 400–500 ms, the target sizes ranged from 17.3–21.0% of the finger movement space, and trials had a timeout of 5–10 s. These parameters were adjusted 1–3 times within the experiment in order to maintain the monkey’s motivation, with brain control trials generally requiring larger targets and shorter hold times. These parameter changes are accounted for in the target acquisition time and bit rate metrics calculated in Table 4.

Table 4.

Online task performance metrics for Monkeys L and W.

| Monkey | Session | Success rate (%), physical | Success rate (%), brain | Mean acquisition time (s), physical | Mean acquisition time (s), brain | Mean index of difficulty (bits), physical | Mean index of difficulty (bits), brain | Bit rate (bits/s), physical | Bit rate (bits/s), brain |
|---|---|---|---|---|---|---|---|---|---|
| L | 1 | 99.2 | 81.1 | 0.62 | 1.88 | 1.06 | 0.88 | 2.24 | 1.10 |
| L | 2 | 98.0 | 73.7 | 0.73 | 2.23 | 1.06 | 0.89 | 2.11 | 0.79 |
| L | Mean | 98.6 | 77.4 | 0.68 | 2.06 | 1.06 | 0.89 | 2.18 | 0.95 |
| W | 1 | 95.5 | 80.5 | 0.71 | 2.09 | 1.04 | 1.08 | 2.38 | 1.00 |
| W | 2 | 100 | 96.0 | 0.58 | 2.48 | 1.14 | 1.09 | 2.48 | 1.02 |
| W | 3 | 98.8 | 90.2 | 0.73 | 1.77 | 1.07 | 1.07 | 2.25 | 1.19 |
| W | Mean | 98.1 | 88.9 | 0.67 | 2.11 | 1.08 | 1.08 | 2.37 | 1.07 |
| Overall | Mean | 98.4 | 83.1 | 0.68 | 2.09 | 1.07 | 0.99 | 2.28 | 1.01 |

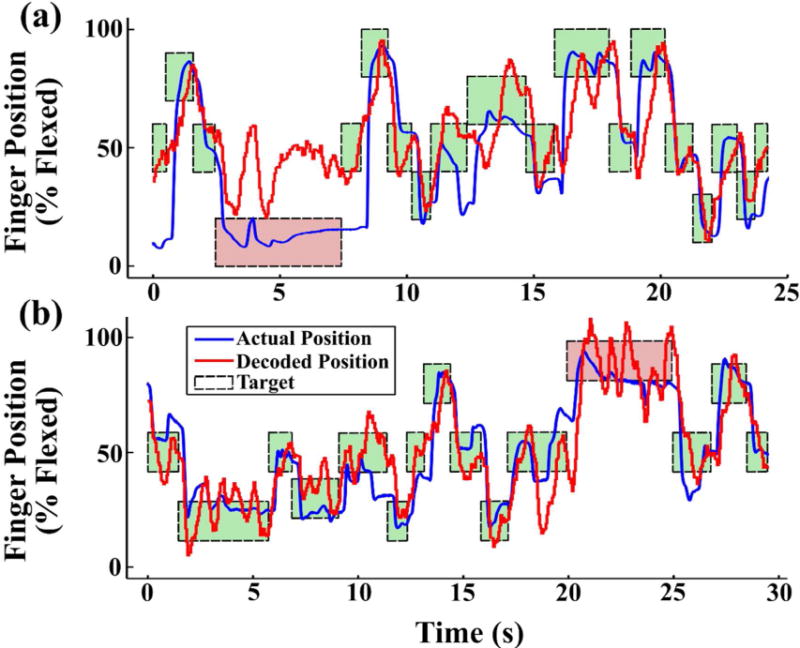

A sample of online brain control task performance for each monkey is shown in Figure 7, with both the monkeys’ actual movements and predicted movements. The movements decoded online appeared to be heavily influenced by the monkey’s finger velocity, often predicting a return to the rest position when the finger was actually held at a constant flexed or extended position, though this was more evident in Monkey L. Despite the often large errors in decoded position, the monkeys were able to successfully complete an average of 83.1% of brain control trials (with a minimum of 73.7% success by Monkey L on one experimental day), learning to compensate for the erroneous virtual finger motion. See supplemental video 1 for a video of brain control task performance by Monkey L.

Figure 7.

Online movement decoding during brain control of the behavioral task for (a) Monkey L, and (b) Monkey W. The monkeys were required to keep the decoded finger position (red trace) within the target zone (dashed boxes) for 500 ms, regardless of the true finger position (blue trace). Target background color indicates the trial success (green) or failure (red).

Several performance metrics for both physical control and brain control are shown in Table 4. As expected, performance was clearly lower in brain control mode than with physical control; however, the animals were still able to complete the task successfully. The average time to acquire a target was less than 1.5 s longer during brain control trials and remained well below the minimum trial timeout of 5 s. Similar to Gilja et al. (2012), the mean target acquisition time excludes the target hold time but includes unsuccessful trials, so that a 0% success rate would yield a mean acquisition time equal to the average trial timeout.
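The acquisition-time convention above, in which failed trials count at their timeout, can be expressed in a few lines of Python. The argument layout (per-trial times excluding the hold period, a success flag, and a scalar or per-trial timeout) is a hypothetical choice for illustration.

```python
import numpy as np


def mean_acquisition_time(acq_time_s, success, timeout_s):
    """Mean time to target acquisition, counting each failed trial at its timeout.

    acq_time_s should exclude the target hold period; timeout_s may be a scalar or a
    per-trial array (hypothetical argument layout, for illustration only).
    """
    t = np.where(success, acq_time_s, timeout_s)
    return float(np.mean(t))
```

With this convention, a session in which every trial fails reports the mean of the trial timeouts, matching the limiting case described in the text.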

An informative metric for continuous selection tasks is the Fitts’s law bit rate, which takes into account both the index of difficulty of the task (how hard the target is to acquire in space) and how quickly the target is acquired. This metric is fairly robust to variations in task design (Thompson et al., 2014) and can therefore be compared across algorithms and laboratory procedures. The index of difficulty of this behavioral task is comparable to that of previous whole-arm tasks, in both physical and brain control. With a simple Kalman filter, we achieved an average bit rate of 1.01 bits/s across the two monkeys in brain control mode, compared to 2.28 bits/s when using the physical hand. This result is similar to bit rates reported in the literature for whole-arm BMI performance using similarly simple algorithms; see Gilja et al. (2012), Supplemental Materials, Table 3.1, for estimated bit rates from Ganguly and Carmena (2009), Kim et al. (2008), and Taylor et al. (2002). Here, unsuccessful trials were included in the bit rate calculation, with their bit rate set to zero to indicate that no information was gained.
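A per-trial Fitts's law bit rate of the kind described above can be sketched in Python as follows. The Shannon formulation ID = log2(1 + D/W), with D the distance from the start position to the target center and W the target width in the same normalized units, is an assumption on our part, as are the function name and argument layout; failed trials contribute 0 bits/s, as stated in the text.

```python
import numpy as np


def fitts_bit_rate(distance, width, acq_time_s, success):
    """Mean per-trial Fitts's law bit rate (failed trials count as 0 bits/s) and mean ID.

    Assumes the Shannon formulation ID = log2(1 + D / W) and that acq_time_s excludes
    the target hold time.
    """
    distance = np.asarray(distance, dtype=float)
    width = np.asarray(width, dtype=float)
    acq_time_s = np.asarray(acq_time_s, dtype=float)
    index_of_difficulty = np.log2(1.0 + distance / width)
    per_trial = np.where(success, index_of_difficulty / acq_time_s, 0.0)
    return float(per_trial.mean()), float(index_of_difficulty.mean())
```

Averaging per-trial rates, rather than dividing the mean index of difficulty by the mean acquisition time, generally yields a different value, so the order of operations should be stated when bit rates are compared across studies.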

Discussion

Here, we have presented a novel virtual behavioral task paradigm designed to enable detailed investigation and control of isolated hand movements. We used this task to perform the first successful demonstration of continuous decoding of grasp aperture over the full range of motion. Previous continuous decoding studies either involved confounding simultaneous movement of the upper arm (Aggarwal et al., 2013; Menz et al., 2015) or decoded only very limited isolated finger movements (Aggarwal et al., 2009). Our offline decoding performance was similar to these previous reports despite the absence of upper-arm movement, confirming that robust information specific to finger-level movements can be extracted from motor cortex. We also demonstrated the first online brain control of finger-level fine motor skills, enabling two monkeys to perform a behavioral task using only neural activity recorded from primary motor cortex.

Although these results represent important steps toward restoring normal upper-limb function, many challenges remain that can be addressed with our task paradigm. Most critically, offline decoding accuracy and online task performance must improve to better approximate the abilities of the normal hand. For online decoding, a state-of-the-art algorithm such as the ReFIT Kalman filter (Gilja et al., 2012) or the neural dynamical filter (Kao et al., 2015) would likely improve BMI performance. Even with simpler algorithms such as the one reported here, the monkeys could likely learn to use the BMI more effectively given sufficient practice (Carmena et al., 2003; Ganguly and Carmena, 2009).

In both cases, however, it is currently unknown whether such techniques will translate to finger-level BMIs, and some improvement in the underlying neural tuning model will likely be necessary. The offline reconstruction of finger position presented here appears to capture periods of movement accurately but tends to overshoot the actual position at the extremes of the range of motion. Further, during periods when the fingers are held in a flexed or extended position, the reconstruction tends to develop a constant offset. These relatively consistent errors, along with low neural tuning rates, may indicate that the assumption of a linear relationship between neural activity and finger kinematics is not realistic. Multiple studies have shown that decoding performance generalizes better to new task contexts (e.g., reaching with a different arm posture or within a force field) when neural activity is modeled as relating to non-kinematic parameters such as intended joint torque or muscle activity (Cherian et al., 2013; Morrow et al., 2007; Oby et al., 2013). This may indicate that non-linearities are present in the path from neural firing to kinematic output, arising either from inherently non-linear encoding in the neural activity itself (Pohlmeyer et al., 2007) or from the musculoskeletal mechanics underlying physical movement (Park and Durand, 2008). Including musculoskeletal dynamics in the decoding algorithm may be necessary to increase accuracy in unrestricted environments (Kim et al., 2007).
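To make explicit where the linearity assumption enters, the sketch below fits and runs a standard linear-Gaussian Kalman filter of the kind described by Wu et al. (2006). It is an illustration, not the decoder used in this study: the state layout (for example `[position, velocity, 1]`), the bin width, and the absence of any neural lag are our assumptions, and the names `fit_kalman` and `kalman_decode` are hypothetical. Each row of `H` is a linear tuning model for one unit, so systematic errors such as overshoot at the extremes or a constant offset during holds may reflect a mismatch between this observation model and the true mapping from firing to finger kinematics.

```python
import numpy as np


def fit_kalman(X, Y):
    """Least-squares fit of x_t = A x_{t-1} + w_t and y_t = H x_t + q_t.

    X: (T, d) kinematic states per time bin, e.g. columns [position, velocity, 1].
    Y: (T, n) binned firing rates or spike counts for n units.
    Returns A (d, d), W (d, d), H (n, d), Q (n, n).
    """
    X0, X1 = X[:-1], X[1:]
    # State transition: solve X0 @ A.T ~= X1, then take the residual covariance.
    A = np.linalg.lstsq(X0, X1, rcond=None)[0].T
    W = np.atleast_2d(np.cov(X1 - X0 @ A.T, rowvar=False))
    # Observation (tuning) model: each unit's rate is assumed linear in the state.
    H = np.linalg.lstsq(X, Y, rcond=None)[0].T
    Q = np.atleast_2d(np.cov(Y - X @ H.T, rowvar=False))
    return A, W, H, Q


def kalman_decode(Y, A, W, H, Q, x0, P0):
    """Run the standard predict/update recursion; returns (T, d) state estimates."""
    x = np.asarray(x0, dtype=float).copy()
    P = np.asarray(P0, dtype=float).copy()
    I = np.eye(len(x))
    estimates = []
    for y in Y:
        # Predict from the kinematic dynamics.
        x = A @ x
        P = A @ P @ A.T + W
        # Update with the neural observation through the linear tuning model H.
        S = H @ P @ H.T + Q
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ (y - H @ x)
        P = (I - K @ H) @ P
        estimates.append(x.copy())
    return np.array(estimates)
```

Replacing the linear observation y_t = H x_t with a non-linear or muscle-based model, as the studies cited above suggest, would require a different filter (for example an extended or unscented Kalman filter) rather than new parameter values alone.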

The movements demonstrated here consisted of simultaneous flexion and extension of all four fingers together. This is equivalent to continuous control of a power grasp and is a useful function in its own right, but it is unclear how this control would change when applied to different grasps or individuated finger movements. Given the complex biomechanical constraints of the hand (Lang and Schieber, 2004), difficulties will likely arise when simple linear algorithms are applied directly to these movements. Modeling these constraints (Kim et al., 2007; Schaffelhofer et al., 2015) and incorporating them into the decoding process may be necessary. Another approach, used successfully by Rouse (2016), might be to incorporate a dimension selector into the BMI so that the subject controls a single dimension of the grasp at any given time, possibly mitigating the need for constraint modeling.

Finally, applying the functional control demonstrated with our virtual task to the control of a physical hand may introduce new challenges by altering neural tuning patterns during object manipulation (Wodlinger et al., 2015). Despite this, we believe that the virtual task paradigm presented here represents an important method for exploring neural control of fine motor skills, both for answering basic science questions and for developing BMI algorithms which can be applied to control physical effectors in the future.

Supplementary Material

Acknowledgments

This work was supported in part by the Craig H. Neilsen Foundation, the National Institutes of Health (grant R01GM111293), and the A. Alfred Taubman Medical Research Institute. P. Vu, D. Tat, A. Bullard, and C. Nu were supported by NSF Graduate Research Fellowships.

References

- Aggarwal V, Kerr M, Davidson AG, Davoodi R, Loeb GE, Schieber MH, Thakor NV. Cortical control of reach and grasp kinematics in a virtual environment using musculoskeletal modeling software. 2011 5th International IEEE/EMBS Conference on Neural Engineering (NER). 2011:388–391. doi: 10.1109/NER.2011.5910568.

- Aggarwal V, Mollazadeh M, Davidson AG, Schieber MH, Thakor NV. State-based decoding of hand and finger kinematics using neuronal ensemble and LFP activity during dexterous reach-to-grasp movements. J Neurophysiol. 2013;109:3067–3081. doi: 10.1152/jn.01038.2011.

- Aggarwal V, Tenore F, Acharya S, Schieber MH, Thakor NV. Cortical decoding of individual finger and wrist kinematics for an upper-limb neuroprosthesis. Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC 2009). 2009:4535–4538.

- Carmena JM, Lebedev MA, Crist RE, O’Doherty JE, Santucci DM, Dimitrov DF, Patil PG, Henriquez CS, Nicolelis MAL. Learning to Control a Brain–Machine Interface for Reaching and Grasping by Primates. PLoS Biol. 2003;1:e42. doi: 10.1371/journal.pbio.0000042.

- Cherian A, Fernandes HL, Miller LE. Primary motor cortical discharge during force field adaptation reflects muscle-like dynamics. J Neurophysiol. 2013;110:768–783. doi: 10.1152/jn.00109.2012.

- Collinger JL, Wodlinger B, Downey JE, Wang W, Tyler-Kabara EC, Weber DJ, McMorland AJC, Velliste M, Boninger ML, Schwartz AB. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet. 2013;381:557–564. doi: 10.1016/S0140-6736(12)61816-9.

- Cunningham JP, Nuyujukian P, Gilja V, Chestek CA, Ryu SI, Shenoy KV. A closed-loop human simulator for investigating the role of feedback control in brain-machine interfaces. J Neurophysiol. 2011;105:1932–1949. doi: 10.1152/jn.00503.2010.

- Davoodi R, Urata C, Loeb GE. Musculoskeletal modeling software (MSMS) [Online]. doi: 10.1109/IEMBS.2004.1404281. http://mddf.usc.edu:85/?page_id=94.

- Dethier J, Nuyujukian P, Ryu SI, Shenoy KV, Boahen K. Design and validation of a real-time spiking-neural-network decoder for brain–machine interfaces. J Neural Eng. 2013;10:036008. doi: 10.1088/1741-2560/10/3/036008.

- Egan J, Baker J, House PA, Greger B. Decoding Dexterous Finger Movements in a Neural Prosthesis Model Approaching Real-World Conditions. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2012;20:836–844. doi: 10.1109/TNSRE.2012.2210910.

- Ganguly K, Carmena JM. Emergence of a Stable Cortical Map for Neuroprosthetic Control. PLoS Biol. 2009;7:e1000153. doi: 10.1371/journal.pbio.1000153.

- Gilja V, Nuyujukian P, Chestek CA, Cunningham JP, Yu BM, Fan JM, Churchland MM, Kaufman MT, Kao JC, Ryu SI, Shenoy KV. A high-performance neural prosthesis enabled by control algorithm design. Nat Neurosci. 2012;15:1752–1757. doi: 10.1038/nn.3265.

- Gilja V, Pandarinath C, Blabe CH, Nuyujukian P, Simeral JD, Sarma AA, Sorice BL, Perge JA, Jarosiewicz B, Hochberg LR, Shenoy KV, Henderson JM. Clinical translation of a high-performance neural prosthesis. Nat Med. 2015;21:1142–1145. doi: 10.1038/nm.3953.

- Hamed SB, Schieber MH, Pouget A. Decoding M1 Neurons During Multiple Finger Movements. J Neurophysiol. 2007;98:327–333. doi: 10.1152/jn.00760.2006.

- Hochberg LR, Bacher D, Jarosiewicz B, Masse NY, Simeral JD, Vogel J, Haddadin S, Liu J, Cash SS, van der Smagt P, Donoghue JP. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012;485:372–375. doi: 10.1038/nature11076.

- Jarosiewicz B, Sarma AA, Bacher D, Masse NY, Simeral JD, Sorice B, Oakley EM, Blabe C, Pandarinath C, Gilja V, Cash SS, Eskandar EN, Friehs G, Henderson JM, Shenoy KV, Donoghue JP, Hochberg LR. Virtual typing by people with tetraplegia using a self-calibrating intracortical brain-computer interface. Science Translational Medicine. 2015;7:313ra179. doi: 10.1126/scitranslmed.aac7328.

- Kao JC, Nuyujukian P, Ryu SI, Churchland MM, Cunningham JP, Shenoy KV. Single-trial dynamics of motor cortex and their applications to brain-machine interfaces. Nat Commun. 2015;6:7759. doi: 10.1038/ncomms8759.

- Kim HK, Carmena JM, Biggs SJ, Hanson TL, Nicolelis MAL, Srinivasan MA. The Muscle Activation Method: An Approach to Impedance Control of Brain-Machine Interfaces Through a Musculoskeletal Model of the Arm. IEEE Transactions on Biomedical Engineering. 2007;54:1520–1529. doi: 10.1109/TBME.2007.900818.

- Kim SP, Simeral JD, Hochberg LR, Donoghue JP, Black MJ. Neural control of computer cursor velocity by decoding motor cortical spiking activity in humans with tetraplegia. Journal of Neural Engineering. 2008;5:455–476. doi: 10.1088/1741-2560/5/4/010.

- Lang CE, Schieber MH. Human Finger Independence: Limitations due to Passive Mechanical Coupling Versus Active Neuromuscular Control. J Neurophysiol. 2004;92:2802–2810. doi: 10.1152/jn.00480.2004.

- Malik WQ, Truccolo W, Brown EN, Hochberg LR. Efficient Decoding With Steady-state Kalman Filter in Neural Interface Systems. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2011;19:25–34. doi: 10.1109/TNSRE.2010.2092443.

- Menz V, Schaffelhofer S, Scherberger H. Representation of continuous hand and arm movements in macaque areas M1, F5, and AIP: a comparative decoding study. J Neural Eng. 2015;12. doi: 10.1088/1741-2560/12/5/056016.

- Morrow MM, Jordan LR, Miller LE. Direct Comparison of the Task-Dependent Discharge of M1 in Hand Space and Muscle Space. J Neurophysiol. 2007;97:1786–1798. doi: 10.1152/jn.00150.2006.

- Oby ER, Ethier C, Miller LE. Movement representation in the primary motor cortex and its contribution to generalizable EMG predictions. J Neurophysiol. 2013;109:666–678. doi: 10.1152/jn.00331.2012.

- Park H, Durand DM. Motion control of musculoskeletal systems with redundancy. Biol Cybern. 2008;99:503–516. doi: 10.1007/s00422-008-0258-5.

- Pohlmeyer EA, Solla SA, Perreault EJ, Miller LE. Prediction of upper limb muscle activity from motor cortical discharge during reaching. J Neural Eng. 2007;4:369–379. doi: 10.1088/1741-2560/4/4/003.

- Putrino D, Wong YT, Weiss A, Pesaran B. A training platform for many-dimensional prosthetic devices using a virtual reality environment. Journal of Neuroscience Methods (special issue: Brain Computer Interfaces; Tribute to Greg A. Gerhardt). 2015;244:68–77. doi: 10.1016/j.jneumeth.2014.03.010.

- Rouse AG. A four-dimensional virtual hand Brain-Machine interface using active dimension selection. J Neural Eng. 2016;13:036021. doi: 10.1088/1741-2560/13/3/036021.

- Schaffelhofer S, Sartori M, Scherberger H, Farina D. Musculoskeletal Representation of a Large Repertoire of Hand Grasping Actions in Primates. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2015;23:210–220. doi: 10.1109/TNSRE.2014.2364776.

- Schieber MH. Individuated finger movements of rhesus monkeys: a means of quantifying the independence of the digits. J Neurophysiol. 1991;65:1381–1391. doi: 10.1152/jn.1991.65.6.1381.

- Taylor DM, Tillery SIH, Schwartz AB. Direct Cortical Control of 3D Neuroprosthetic Devices. Science. 2002;296:1829–1832. doi: 10.1126/science.1070291.

- Thompson DE, Quitadamo LR, Mainardi L, Laghari KuR, Gao S, Kindermans PJ, Simeral JD, Fazel-Rezai R, Matteucci M, Falk TH, Bianchi L, Chestek CA, Huggins JE. Performance measurement for brain–computer or brain–machine interfaces: a tutorial. J Neural Eng. 2014;11:035001. doi: 10.1088/1741-2560/11/3/035001.

- Vargas-Irwin CE, Shakhnarovich G, Yadollahpour P, Mislow JMK, Black MJ, Donoghue JP. Decoding Complete Reach and Grasp Actions from Local Primary Motor Cortex Populations. Journal of Neuroscience. 2010;30:9659–9669. doi: 10.1523/JNEUROSCI.5443-09.2010.

- Velliste M, Perel S, Spalding MC, Whitford AS, Schwartz AB. Cortical control of a prosthetic arm for self-feeding. Nature. 2008;453:1098–1101. doi: 10.1038/nature06996.

- Wodlinger B, Downey JE, Tyler-Kabara EC, Schwartz AB, Boninger ML, Collinger JL. Ten-dimensional anthropomorphic arm control in a human brain–machine interface: difficulties, solutions, and limitations. J Neural Eng. 2015;12:016011. doi: 10.1088/1741-2560/12/1/016011.

- Wu W, Gao Y, Bienenstock E, Donoghue JP, Black MJ. Bayesian population decoding of motor cortical activity using a Kalman filter. Neural Comput. 2006;18:80–118. doi: 10.1162/089976606774841585.
