Abstract

Current cancer diagnosis employs various nuclear morphometric measures. While these have allowed accurate late-stage prognosis, early diagnosis is still a major challenge. Recent evidence highlights the importance of alterations in mechanical properties of single cells and their nuclei as critical drivers for the onset of cancer. We here present a method to detect subtle changes in nuclear morphometrics at single-cell resolution by combining fluorescence imaging and deep learning. This assay includes a convolutional neural net pipeline and allows us to discriminate between normal and human breast cancer cell lines (fibrocystic and metastatic states) as well as normal and cancer cells in tissue slices with high accuracy. Further, we establish the sensitivity of our pipeline by detecting subtle alterations in normal cells when subjected to small mechano-chemical perturbations that mimic tumor microenvironments. In addition, our assay provides interpretable features that could aid pathological inspections. This pipeline opens new avenues for early disease diagnostics and drug discovery.

Introduction

Recent studies have revealed that cells within the tissue microenvironment are subjected to a variety of mechanical and chemical signals that regulate cellular homeostasis1–3. The cell nucleus operates as an integrator of these signals via an elaborate meshwork of cytoskeletal-to-nuclear links4–6. In normal cells, these links maintain the nuclear mechanical homeostasis to regulate genomic programs. Alterations in mechano-chemical signals to the cell nucleus have been shown to play a critical role for the onset of various diseases7–11. Importantly, cancer cells exhibit structural, mechanical, and conformational aberrations in their nuclear architecture, including altered nuclear envelope organization12–14, distinct chromatin arrangements15,16, and telomere stability17. Cancer tissues have been conjectured to arise from single cells within the tissue microenvironment, in which impaired nuclear mechanotransduction resulted in altered genomic programs18–20.

Nuclear morphometric information is one of the major clinical diagnostic approaches used by pathologists to determine the malignant potential of cancer cells21–25. However, since the tissue microenvironment is highly heterogeneous, such morphometric assays are currently subjective and depend on the interpretation of the pathologist26. To circumvent this subjectivity, recent advances in imaging combined with machine learning have provided new avenues27–32. Given the prominent role of nuclear structure changes in cancer cells, various machine learning techniques based on quantitative nuclear morphometric information such as nuclear size, shape, nucleus-to-cytoplasm ratio, and chromatin texture, and various non-parametric methods such as deep learning have been applied for classifying histopathology images and diagnosing various cancers including breast, skin and thyroid cancer33–41. While this line of research has allowed accurate late-stage prognosis, early diagnosis remains a major challenge. This is primarily due to the difficulty of detecting a small number of abnormal cells in a heterogeneous population of normal cells as this is the case for example in fine-needle biopsies or blood smears. In addition, while there are accurate deep learning approaches, they don’t provide any interpretable features and functional annotations that could be used by pathologists for early disease diagnosis.

In this paper, we first present a pipeline combining single-cell imaging with machine learning algorithms to discriminate between normal and cancer cell lines. In particular, we develop a convolutional neural net pipeline that not only provides high classification accuracy but also interpretable features. This is achieved by analyzing small regions within the nucleus using our deep learning pipeline and correlating these with changes in heterochromatin to euchromatin ratios in normal and cancer cell lines. In addition, we validate our pipeline by discriminating between normal and cancer tissue slices. Importantly, we also evaluate the sensitivity of our method on normal cells subjected to small mechanical and biochemical perturbations to mimic the chromatin condensation patterns in early stages of cancer. Collectively, our platform has potential applications for drug discovery and early disease diagnostics in human populations.

Results

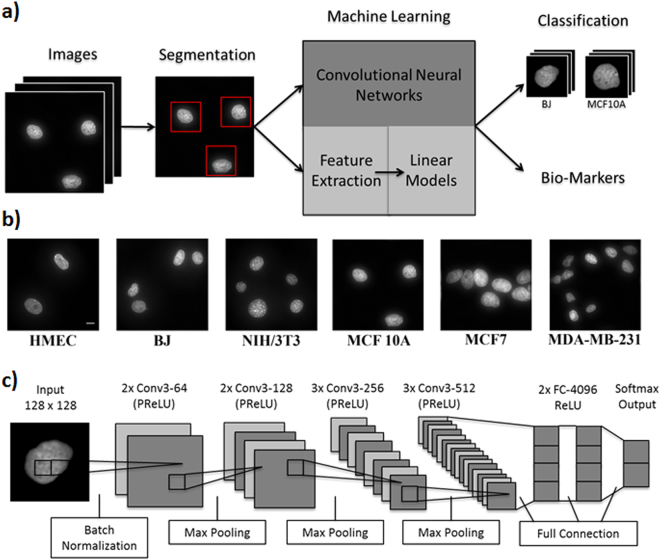

SCENMED: A combined fluorescence imaging and convolutional neural net platform for classifying nucleus images

We developed a single-cell nuclear mechanical diagnostics (SCENMED) platform consisting of single-cell fluorescence imaging and machine learning to discriminate between normal and cancer cells. Our pipeline is shown in Fig. 1a. First, cells were seeded in glass bottom dishes and were allowed to grow overnight. The cells were then fixed, permeabilized, and stained with DAPI (4′,6-diamidino-2-phenylindole). Several thousand wide-field images of the nucleus were acquired using a 60X objective. Representative nucleus images of the different cell types (NIH/3T3, BJ, MCF 10A, MCF7, MDA-MB-231, HMEC) are shown in Fig. 1b. The individual nuclei were identified using a 4-step image processing procedure to remove overexposure, edge blur and drift blur (see Methods and Supplementary Fig. 1a and Fig. 1b). These images were then classified using an approach based on an adaptation of the VGG (Visual Geometry Group) Convolutional Neural Network architecture (see Fig. 1c) and a supervised approach using linear models based on morphometric features that were extracted from each nucleus. These morphometric features capture shape and texture of the nucleus (see Methods) and have also been used in previous cancer classification studies21,30.

Figure 1.

Single-cell nuclear mechanical diagnostics (SCENMED) platform for cancer prognosis. (a) Analysis pipeline to identify nuclei and extract nuclear morphometric features. Cells are first fixed and imaged. The images are then classified using a linear model based on the extracted nuclear morphometric features and a convolutional neural network based on the segmented images. (b) Representative images of nuclei for NIH/3T3, BJ, HMEC, MCF10A, MCF7, and MDA-MB-231. (c) Adaptation of the VGG Convolutional Neural Network architecture consisting of an initial batch normalization layer followed by 10 layers of convolutions, 4 max pooling layers (kernel size 2, stride size 2), 2 fully connected layers, and a softmax output layer. Each convolution is followed by PReLU activations, and the fully connected layers are followed by ReLU activations.

SCENMED discriminates between normal and cancer cell lines with high accuracy

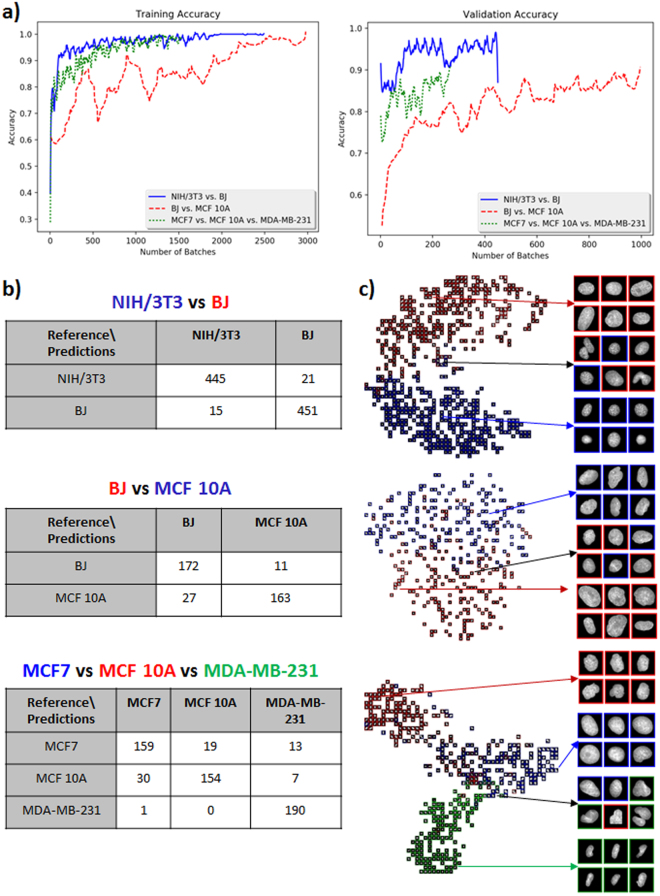

As a first step, we used our pipeline to discriminate between normal mouse and normal human fibroblast cells (NIH/3T3 and BJ) and various human breast cancer cell lines (MCF10A, MCF7 and MDA-MB-231). These representative breast cancer cell lines comprise of fibrocystic as well as metastatic states. The number of cell nuclei imaged in each condition and the split into training and validation is given in Supplementary Fig. 3a. As shown in Fig. 2a, validation accuracies for the neural model revealed that mouse versus human cell lines and normal versus cancer cell lines can be robustly discriminated with a large margin (NIH/3T3 versus BJ: 96.1% validation accuracy and 97.6% training accuracy; BJ versus MCF10A: 88.2% validation accuracy and 93.8% training accuracy). As expected, the progression of the training accuracy over the different batches shows that the neural model learns more quickly to distinguish between NIH/3T3 and BJ cells as compared to BJ versus MCF10A cells. In addition, Fig. 2a also shows that the three breast cancer cell lines can be discriminated with high accuracy (MCF7 versus MCF10A versus MDA-MB-231: 87.8% validation accuracy and 96.2% training accuracy. Counter intuitively, validation accuracy and validation loss might both increase, since the model is simply oscillating between class predictions for the cases that are difficult to distinguish (see also Supplementary Fig. 3b). A confusion matrix can be used to further quantify the discriminative power of classification methods and is shown in Fig. 2b. As expected, the most aggressive cancer cell line (MDA-MB-231) is easy to distinguish from the fibrocystic (MCF10A) and less aggressive cancer cell line (MCF7). Further, we used t-distributed stochastic neighbor embedding (t-SNE) to embed the high-dimensional data resulting from the neural net model into two dimensions and visualize the classification between the various cell lines. In these t-SNE plots similar cell nuclei are represented by nearby points, whereas dissimilar cell nuclei correspond to distant points (Fig. 2c). As can be clearly seen in the confusion matrices and the t-SNE plots, our method can easily distinguish between fibroblasts of mouse and human origin, human fibroblast and cancer cells, as well as the three cancer cell lines.

Figure 2.

Classification of NIH/3T3 versus BJ, BJ versus MCF10A, and MCF10A versus MCF7 versus MDA-MB-231 nuclei. (a) Training and validation accuracies of the neural model for the classification tasks of NIH/3T3 versus BJ, BJ versus MCF10A, and MCF7 versus MCF 10A versus MDA-MB-231 nuclei. (b) Confusion matrices for the three different classification tasks. (c) t-SNE visualizations for the three different classification tasks.

SCENMED platform applied to patches provides interpretable features for cancer diagnosis

The importance of different morphometric features for each classification task was determined using a regularized logistic regression analysis and is displayed in Table 1. Our analysis of various texture and shape features shows that texture features were most discriminative for classifying mouse versus human fibroblasts and for the different cancer cell lines, whereas shape features were most discriminative for classifying BJ versus MCF10A nuclei. As to be expected, the regularized logistic regression approach yields slightly lower discriminative power as compared to the convolutional neural net approach. However, it has the advantage that it provides a list of interpretable features together with their importance for classification.

Table 1.

Feature ablation tables based on a linear model for all three classification tasks indicating the effect on training and validation accuracy when removing each feature (or set of features).

| NIH/3T3 vs. BJ | BJ vs. MCF 10 A | MCF7 vs. MCF 10 A vs. MDA-MB-231 | |||

|---|---|---|---|---|---|

| Features Used | Training/ Validation Accuracy | Features Used | Training, Validation Accuracy | Features Used | Training/Validation Accuracy |

| Features Used | Training/ Validation Accuracy | Features Used | Training, Validation Accuracy | Features Used | Training/Validation Accuracy |

| All features | 96.4%,95.1% | All features | 89.1%, 83.9% | All features | 93.7%, 89.0% |

| - Texture features | 81.5%, 76.5% | - Shape features | 72.9%, 61.1% | - Texture features | 80.0%, 69.8% |

| - Shape features | 95.7%, 94.9% | - Texture features | 87.8%, 82.1% | - Shape features | 81.1%, 79.8% |

| All features | 96.4%, 95.1% | All features | 89.1%, 83.9% | All features | 93.7%, 89.0% |

| - PFTAS | 93.1%, 90.6% | - PFTAS | 85.7%, 81.3% | - area | 80.7%, 79.2% |

| - LBP | 95.6%, 93.3% | - LBP | 85.5%, 82.4% | - PFTAS | 89.4%, 82.7% |

| - roundness | 96.3%, 94.8% | - area | 88.9%, 82.9% | - LBP | 92.2%, 86.6% |

| -Major axis length | 96.4%, 94.8% | - eccentricity | 88.9%, 83.2% | - Zernike Moments | 92.2%, 87.1% |

| -eccentricity | 96.4%, 94.8% | - roundness | 88.8%, 83.9% | - Minor axis length | 93.4%, 88.8% |

| - Minor axis length | 96.4%, 95.0% | - Major axis length | 88.9%, 83.9% | - Major axis length | 93.5%, 88.5% |

| - Zernike Moments | 95.5%, 95.1% | - Minor axis length | 88.9%, 83.9% | - roundness | 93.7%, 88.5% |

| - area | 96.4%, 95.1% | - Zernike Moments | 88.7%, 84.2% | - eccentricity | 93.5%, 89.0% |

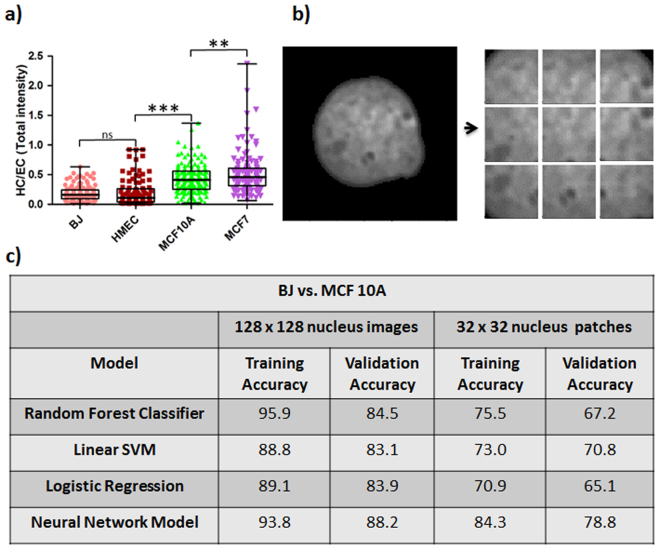

Particular known features for cancer progression are alterations in chromatin condensation patterns42. We measured chromatin condensation by plotting the heterochromatin (more condensed chromatin) to euchromatin (more open chromatin) ratio (HC/EC) as explained in Methods and in Supplementary Fig. 4. The threshold value for identifying heterochromatin was calculated using equation (2). The HC/EC ratio was found to be lower in normal BJ fibroblast cells and Human Mammary Epithelial Cells (HMECs) as compared to fibrocystic MCF10A cells and metastatic MCF7 cells (Fig. 3a). In agreement with previous studies there was a progressive increase in HC/EC ratio from normal to metastatic cell lines12.

Figure 3.

Identification of informative nuclear morphometric features for cancer prognosis. (a) Whisker box plot for HC/EC of normal and cancer cell lines (BJ: n = 134, HMEC: n = 101, MCF10A: n = 156, MCF7: n = 112; Student’s unpaired t-test ***p < 0.0001, **p < 0.0001 and ns = not significant). (b) Analysis of texture features via extraction of nuclear patches of size 32 × 32 pixels with stride size of 16 pixels in either direction. (c) Table indicating differences in accuracy between patch-based classification and classification based on full nuclear images for both neural and linear models.

The HC/EC ratio only provides a global view of chromatin condensation. Since the typical length scale of individual heterochromatin foci is in the range of a few hundred nanometers, we partitioned the nucleus into patches of 32 × 32 pixels (each pixel being 120 nm in width) as shown in Fig. 3b. These patches were then fed into our machine learning pipeline. The number of patches in each condition and the split into training and validation is given in Supplementary Fig. 5. As shown in Fig. 3c, both the neural model and the linear models (logistic regression, linear kernel support vector machine (SVM), and random forest classifier) did slightly worse on the patches (BJ versus MCF10A: 78.8% validation accuracy and 84.3% training accuracy for the neural model versus 70.8% validation accuracy and 73.0% training accuracy for the best linear model), since when analyzing patches the shape of the nucleus cannot be used for classification. This analysis confirms the discriminatory power of texture features at the length scale of heterochromatin foci for classification of normal versus cancer cell lines.

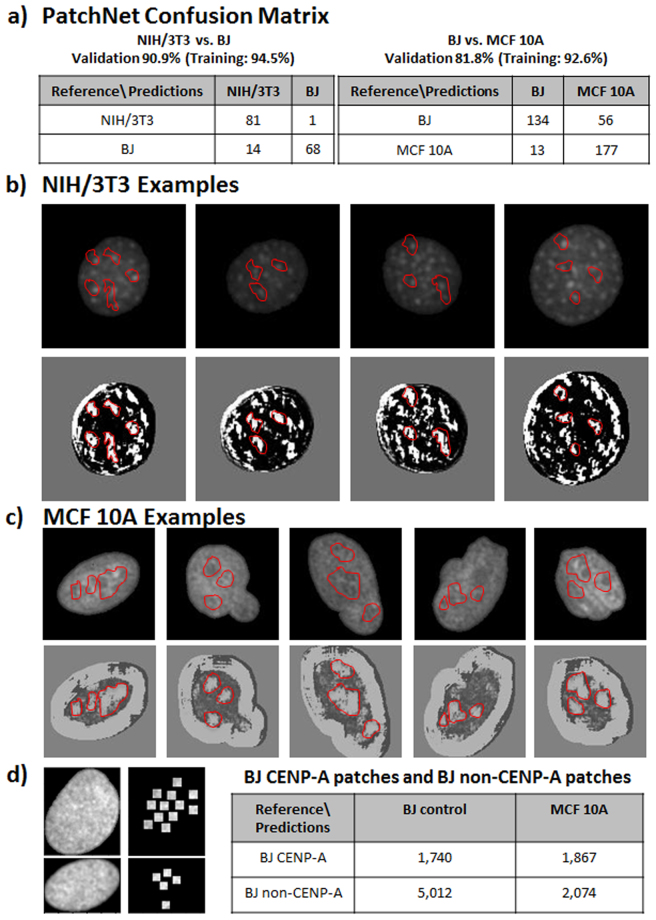

In general, tuning the patch size allows for trading off classification accuracy versus interpretability of the features. In order to better understand the features learned by our neural model when classifying between patches, we further reduced the patch size. To classify between NIH/3T3 and BJ nuclei we used patches of size 11 × 11 pixels and for classifying between BJ versus MCF10A nuclei we used patches of size 17 × 17 pixels to allow for more context in this more difficult classification task. The theoretical foundations for such an approach are described in PatchNet43. As suggested by the theory, Fig. 4a shows that the classification accuracies were slightly reduced when using PatchNet as compared to classification based on the full nucleus image (NIH/3T3 versus BJ: 90.9% validation accuracy and 94.5% training accuracy; BJ versus MCF10A: 81.8% validation accuracy and 92.6% training accuracy). A particular filter resulting from the PatchNet analysis is shown in Fig. 4b and c. It shows that significant features used to classify between NIH/3T3 and BJ nuclei as well as BJ and MCF10A nuclei are regions of high luminance contrast. Such regions in NIH/3T3 nuclei correspond to the heterochromatin regions, whereas in MCF10A nuclei PatchNet identified large interface regions between euchromatin and heterochromatin as important features for classification. A complete list of all filters is given in Supplementary Fig. 6a and Fig. 6b.

Figure 4.

Functional annotation of the features identified based on a patch-analysis. (a) The confusion matrices for PatchNet in classifying between NIH/3T3 and BJ as well as between BJ and MCF10A. (b) Representative examples of NIH/3T3 nuclei (upper row) together with the features indicative of NIH/3T3 (shown in white) as learned by PatchNet (bottom row). The red masks show examples of regions that were identified by PatchNet as being indicative of NIH/3T3. The predicted NIH/3T3 indicative regions correspond to heterochromatin foci. (c) Representative examples of MCF10A nuclei (upper row) together with the features indicative of MCF10A (shown in white) as learned by PatchNet (bottom row). The red masks show examples of regions that were identified by PatchNet as being indicative of MCF10A. The predicted MCF10A indicative regions correspond to interfaces between heterochromatin and euchromatin regions. (d) BJ nuclei were stained with CENP-A and patches of size 11 × 11 pixels were extracted around the CENP-A marks (left image). The table compares the classification accuracy between CENP-A and non-CENP-A patches, showing that CENP-A patches are more often misclassified as MCF10A.

In previous studies, Centromere Protein A (CENP-A) has been established as a biomarker for peri-centeromeric heterochromatin regions44. In order to provide a functional annotation of the features learned by the convolutional neural net trained on BJ and MCF10A nuclei, we analyzed the classification of our model on BJ patches of size 11 × 11 pixels that contained CENP-A markers as well as BJ patches of size 11 × 11 pixels that did not contain CENP-A markers (see Fig. 4d). As seen in this figure, the model learned to correctly classify a significant portion of the patches not containing CENP-A markers as BJ, while it incorrectly classified the majority of patches containing CENP-A markers as MCF10A. Importantly, this shows that our platform identified heterochromatin regions as early cancer biomarkers. This is in accordance with domain expertise as CENP-A stains are indicative of regions of DNA damage45,46.

SCENMED platform discriminates between normal and cancer cells in breast tissue slices with high accuracy

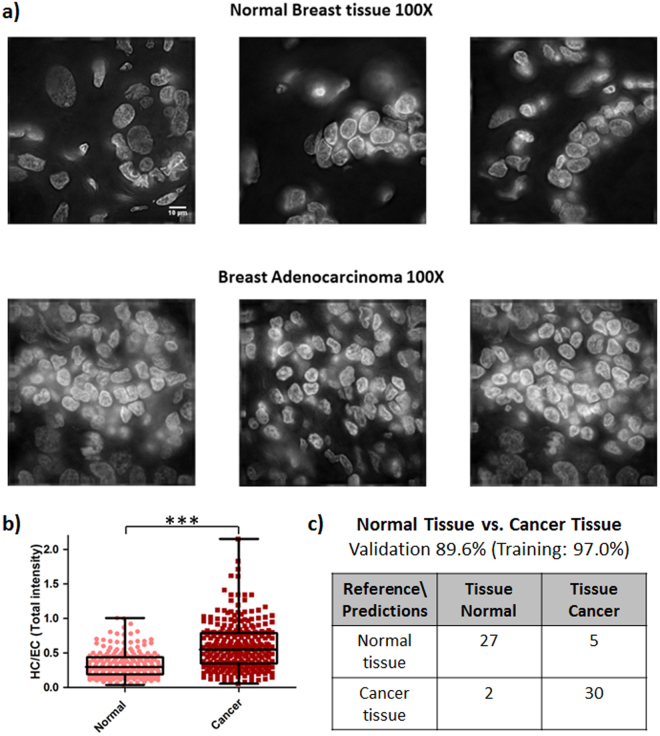

We next validated our platform on normal and cancer tissue slices of human origin. Normal breast tissue slices used in our study consist of 10% glandular epithelium, 20% stroma and 70% adipose tissue, whereas the breast adenocarcinoma tissue slices consist of 100% cancer cells as per the pathology report provided in the datasheet. Representative wide-field images obtained using 100x magnification of both normal and cancer tissues stained with DAPI are shown in Fig. 5a; for representative images of the tissue sections in 20x magnification see Supplementary Fig. 7a. The nuclei were cropped from the DAPI stained images obtained using 100X objective as explained in Methods and Supplementary Fig. 7b. As shown in Fig. 5b, the nuclei from cancer tissue slices have a higher HC/EC ratio as compared to nuclei from normal tissue slices. We also validated the SCENMED pipeline on these nuclear images. As seen in the confusion matrices in Fig. 5c, SCENMED is able to accurately classify between nuclei from cancer and normal tissues (89.6% validation accuracy and 97.0% training accuracy).

Figure 5.

SCENMED analysis on tissue slices. (a) Representative DAPI-stained slices of normal and adenocarcinoma breast tissue (scale bar: 10 μm). (b) Whisker box plot of HC/EC ratio for normal (n = 217) and cancer tissue (n = 286); Student’s unpaired t-test ***p < 0.0001. (c) Confusion matrix for classification between nuclei cropped from cancerous and normal tissue slices.

SCENMED’s sensitivity is benchmarked using nuclear mechanical perturbations that mimic cancer tissue microenvironments

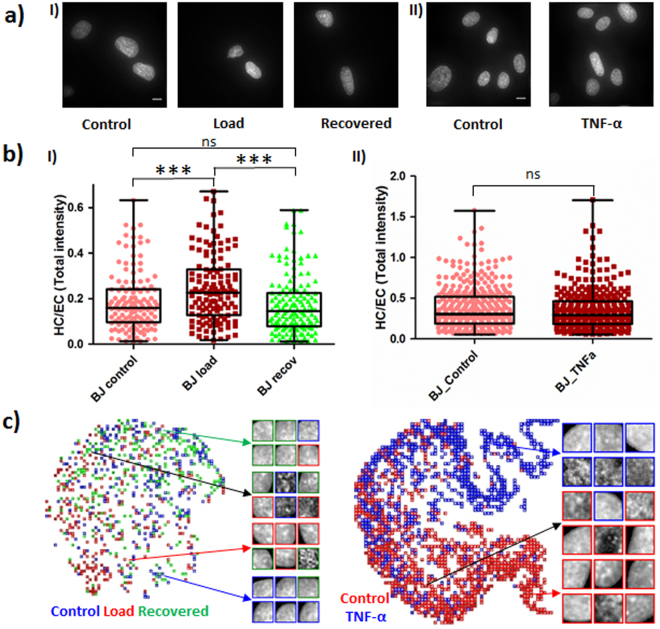

To test the sensitivity and benchmark our machine learning pipeline, the BJ cells were subjected to typical compressive forces as experienced by cells in the tissue microenvironment (in the order of micro-newtons of force per cell) for one hour and allowed to recover for one hour. The magnitude of compressive force applied was calculated using equation (1). In addition, we stimulated (for 30 min) BJ cells with tumor necrosis factor α (TNF-α), a cytokine that is known to play an important role in cancer progression and inflammation. The cells in each condition (Control, Load, Recovered, and after 30 min TNF-α stimulation) were fixed, permeabilized, and stained with DAPI (Fig. 6a). The resulting HC/EC ratios for these conditions are shown in Fig. 6b. Compressive load causes chromatin compaction as shown by the significantly elevated HC/EC ratio of load versus control cells. Interestingly, cells recovered their original HC/EC ratio after one hour of recovery, suggesting that normal cells possess an inherent structural memory in chromatin organization. Table 2, Fig. 6c and supplementary Fig. 8 show that the platform made more mistakes in distinguishing between recovered and control BJ nuclei than between load and control or recovered and control nuclei. This is especially apparent from the confusion matrix based on patches, since nearly half of the BJ control nuclei were wrongly classified as recovered nuclei, while the majority of load nuclei were classified correctly. The major reason for the indistinguishibility between BJ control and recovered nuclei is due to the highly reversible nature of the nuclear and chromatin patterns upon removal of load on these cells. While stimulation of BJ cells with TNF-α does not lead to a significant increase in the HC/EC ratio, our SCENMED platform was able to clearly distinguish between these two conditions. The classification accuracies and confusion matrices for these mechanical and chemical perturbations are shown in Table 2 and supplementary Fig. 8. In addition, the corresponding t-SNE plots are shown in Fig. 6c. These analyses indicate the sensitivity of our pipeline to detect even subtle changes in chromatin organization. Our mechanical and TNF-α stimulation assays can be seen as a model for disease progression, suggesting that our platform is applicable for early disease diagnostics.

Figure 6.

SCENMED analysis on cells subjected to nuclear mechanical perturbations. (a) Representative images of BJ nuclei under control, load and recovery conditions (in I) and under 30 minutes of TNF-α treatment (in II). (b) Whisker box plot for HC/EC ratio for BJ control (n = 134), load (n = 129) and recovery (n = 149) in (I) and control (n = 364) and TNF-α treated (n = 308) in (II); Student’s unpaired t-test ***p < 0.0001 and ns = not significant. (c) Corresponding t-SNE visualizations for BJ control, load, recovered nuclei patches as well as for BJ control and BJ TNF-α nuclei patches.

Table 2.

Confusion matrices for classification between BJ control, load, and recovered nuclei as well as confusion matrices for classification between BJ control and BJ TNF-α nuclei.

| BJ control vs. BJ TNF-α | |||||||

|---|---|---|---|---|---|---|---|

| Validation 86.1% (Training: 88.9%) (Full nuclei) | Validation 79.4% (Training: 76.8%) (Patches) | ||||||

| Reference\Predictions | BJ control | BJ TNF-α | Reference\Predictions | BJ control | BJ TNF-α | ||

| BJ control | 125 | 23 | BJ control | 1,801 | 499 | ||

| BJ TNF-α | 18 | 130 | BJ TNF-α | 445 | 1,855 | ||

| BJ control vs. BJ load vs. BJ recovered | |||||||

| Validation 55.8% (Training: 61.0%) (Full Nuclei) | Validation 45.4% (Training: 48.5%) (Patches) | ||||||

| Reference\Predictions | BJ control | BJ load | BJ recovered | Reference\Predictions | BJ control | BJ load | BJ recovered |

| BJ control | 22 | 4 | 14 | BJ control | 90 | 68 | 148 |

| BJ load | 10 | 19 | 11 | BJ load | 50 | 173 | 83 |

| BJ recovered | 12 | 2 | 26 | BJ recovered | 65 | 59 | 182 |

Discussion

A number of recent studies have shown that various diseases such as Cancer, Fibrosis, Alzheimer’s, and Premature Aging are accompanied by nuclear morphological alterations. These alterations often are a result of defective cytoskeletal-to-nuclear links as well as impaired mechanotransduction pathways12,47–50. The tissue microenvironment comprises of a large cellular heterogeneity in nuclear architecture. A growing number of evidence highlights that changes in the microenvironmental signals could potentially trigger a small group of cells into disease progression18,51–54. While detection of tissue-scale abnormalities have been easier to diagnose, early diagnostics of small groups of defective cells for example in tissue biopsies has been a major challenge. While challenging, early disease diagnostics has been identified as a major goal since it carries the promise of greatly increasing survival rates as well as reducing health care costs55.

Current approaches include genomic analyses to annotate mutations and alterations in the epigenome56,57. These methods are often based on population studies and hence their efficiency to detect abnormalities in single cells within tissue biopsies is low. The current approaches to diagnose abnormalities in tissue biopsies therefore include a combination of morphometric assays and immunohistochemistry, combined with genomic analyses. In order to reduce the subjectivity of the pathologist in classifying morphometric assays, recent efforts have introduced machine learning methods to this problem30,31. Such digital pathology approaches have included either using parametric or non-parametric approaches to detect alterations in tissue biopsies. While such efforts have proven to be beneficial in diagnosing late-stage diseases, early detection still poses a number of challenges. In addition, the deep learning approaches that often yield the highest accuracy, do not provide any interpretable or functional features that could aid the pathologist.

In this paper, we provided SCENMED, a single-cell nuclear mechano-morphometric diagnostic platform for cancer diagnosis. To benchmark our pipeline we subjected single cells to subtle microenvironmental alterations, thereby mimicking tumor microenvironments. Our platform robustly detected these subtle alterations that were reflected in chromatin condensation states. We also provided a quantitative analysis of our platform to discriminate normal and cancer cell lines as well as different kinds of cancer cell lines and cells in tissue biopsies. In addition to achieving high classification accuracy, our platform also provides interpretable features by performing the analysis on small regions within the cell nucleus. The nuclear mechano-morphometric features that we identified indicate typical length scales of chromatin condensation that are potentially important for early cancer diagnosis. Collectively, our platform has applications for early disease diagnosis in fine-needle biopsies and blood smears. Low-cost and fully automated methods based on nuclear mechano-morphometric biomarkers such as the one proposed here could also be combined with single-cell genomic methods and are attractive not only for clinical applications such as cancer diagnosis, but also open new avenues for drug discovery programs as well as the development of therapeutic interventions at single-cell resolution.

Methods

Cell culture, tissue slice preparation, immunofluorescence staining, and imaging

All experiments were performed in accordance with relevant guidelines and regulations at National University of Singapore (NUS). NIH/3T3 (CRL-1658), BJ (CRL-2522), MCF10A (CRL-10317), MCF7 (HTB22) and MDA-MB-231 (HTB-26) cells were obtained from ATCC. They were cultured in DMEM (ThermoFisher Scientific 11885092) media supplemented with 10% Fetal Bovine Serum (FBS) (ThermoFisher Scientific 16000044) and 1% pen-strep (Sigma P4333) antibiotic. Antibodies used: CENP-A (Cell Signalling Technology 2048 S), Histone H2A.X Phospho S139 (Abcam 184520). Human breast tissue sections within normal limits (CS708873, OriGene) and metastatic breast adenocarcinoma tissue sections (CS548359, OriGene) were purchased from OriGene Technologies where these samples were obtained according to the relevant ethical consenting practices (for more information visit http://www.origene.com/Tissue). Other reagents: Histozyme (H3292-15ML, Sigma) and ProLong® Gold Antifade Mountant with DAPI (P36941, ThermoFischer Scientific).

Cells were fixed with 4% parafoldehyde (PFA) (Sigma, 252549-500 ml) for 10 minutes. For compressive force experiments, cells were fixed in the presence of load, where the load and coverslip were removed after the fixation step. Cells were then permeabilized with 0.5% triton (Sigma, ×100-100 ml) for 10 minutes and rinsed with PBS after each step. Cells were then blocked with 5% BSA for 30 minutes and incubated with primary antibody followed by the secondary antibody as per the manufacturer’s instructions. Cells were then labeled with DAPI solution (ThermoFisher Scientific R37606) in PBS as recommended by the supplier. For experiments that did not involve antibody labeling, DAPI solution was added after the permeabilization step.

Formalin-fixed, paraffin-embedded (FFPE) tissue sections (5 μm thickness) on slides were deparaffinized by heating them in an oven at 60 °C for 5 minutes and subsequently washing them with xylene. The sections were then rehydrated in serially diluted ethanol solutions (100% − 50%) as per standard protocols and rinsed with water. Antigen retrieval was performed using Histozyme solution as per manufacturer’s protocol and then rinsed with water. DAPI was then added onto these sections and covered with a coverslip. The slides were incubated for 24 hours after which the coverslips were sealed and taken for imaging.

Images were obtained using an Applied Precision DeltaVision Core microscope. Wide field images were obtained using 60X objective (air, NA 0.7) with a pixel size of 0.2150 µm. These 512 × 512 12-bit images were deconvolved (enhanced ratio, 10 cycles) and saved in tiff format. The images and their corresponding labels (e.g. NIH/3T3, NIH/3T3_Load) were then stored in HDF5 format for subsequent analysis.

Compressive loading assay

1000 cells were seeded on glass bottom dishes (Ibidi 81158) and allowed to attach overnight. Then a pluronic acid treated coverslip was placed on the cells. The compressive force was applied by placing parafilm-wrapped metallic nuts (mass = 22 g) on the coverslip. This corresponds to each cell experiencing a force in the order of micro-newtons (calculation shown below). For recovery, the metallic nut was removed and the cells were allowed to recover for one hour before being fixed for immunofluorescence staining.

The force was computed as follows:

Mass = 22 g (22 × 10−3 kg); force applied (weight) = mass × acceleration due to gravity = 22 × 10−3 × 10 = 220 mN;

| 1 |

Buoyant force = weight of volume of media displaced; volume of media displaced by a single nut = 1 ml (1 g); mass equivalent to 1/20th of the nut that is submerged in the media during the experiment = 1.1 g; number of cells = 1000.

Image Processing and Segmentation

Our goal in segmentation was to automatically segment cleanly imaged nuclei from potentially noisy microscope images. Hence we focussed on precision in our segmentation methods.

We identified three types of noise introduced by the microscope imaging procedure. We refer to these types of noise as overexposure, edge blur, and drift blur. We define a nucleus to be overexposed when at least 25% of the nucleus has a pixel value higher than 3700. We define edge blur as the noise introduced by de-convolution at nuclei along the edge of the 512 × 512 image frame. We define drift blur to be the noise introduced by nucleus motion during the imaging process. We filtered out overexposed nuclei and removed edge blurred nuclei with software methods as described in the following paragraph, while drift blurred image sets were identified and removed by testing if a given image set for a particular nucleus line was distinguishable from the other image sets for the same nucleus line using a neural net approach (see implementation section for more details).

We compared a variety of segmentation algorithms (Supplementary Fig. 2) and found that the following procedure provided the best results for identifying the nuclei, namely marker-based watershed segmentation to segment the nuclei followed by software filtering techniques to remove nuclei that are affected by noise. To select appropriate markers for watershed, we first used morphological reconstruction, which resulted in better nucleus separation. Then, we used a Sobel edge detector to identify nucleus edges, noting that this detector also identified intra-nucleus edges. Next, we marked edges identified by the edge detector as foreground, and correspondingly marked pixels with values within the lowest 1% of image pixel values as background. Finally, we ran marker-based watershed segmentation to identify the nuclei.

After segmentation, we removed any nuclei that were larger than 128 × 128 pixels as well as any nuclei that were overexposed. For nuclei along the edges of an image, we cropped a depth of 15 pixels along the blurred edge. Nuclei patches were extracted from 128 × 128 crops by using a 32 × 32 sliding window approach with a stride of 16 in either direction. We only used patches that consisted of at least 85% nucleus. We also removed overexposed patches using the same technique that we used to remove overexposed nuclei.

For tissue slices, the image analysis was performed using FijiImageJ. The schematic is shown in Supplementary Fig. 7b. The nuclei in the original image were first selected using auto-threshold “Shanbag dark.” Nuclei were separated by applying a Gaussian blur to the selected regions, converting them into a binary image, and finally applying watershed. Each identified nucleus was then saved as a separate image for further analysis.

HC: EC calculation

First, the nucleus was identified by the Otsu thresholding method (Supplementary Fig. 4). To measure chromatin condensation in each nucleus a custom macro was written in FijiImageJ.

The threshold for identifying heterochromatin was calculated as:

| 2 |

The HC/EC value is then calculated where HC is the total intensity (heterochromatin regions) and EC is the difference between the total intensity of the entire nucleus and the total intensity of the heterochromatin regions.

Deep Learning

Inspired by the recent success of deep learning in image classification58,59, we modified state-of-the-art convolutional neural networks (CNNs) to perform nucleus classification on both, full nucleus images and nucleus patches. CNNs are particularly suited for this problem, since the convolution operation provides translational invariance and hence the output is invariant with respect to the position of a feature within a nucleus. In particular, we implemented an adaptation of the Visual Geometry Group (VGG) architecture with state-of-the-art layers, activations, and initializations60.

For our CNN, we first used batch normalization (with scaling) to normalize input images. Then we used 10 convolutional layers interspersed with max pooling following the conventions in VGG. Namely, we only used convolutions with kernel size 3 and began convolutions with 32 filters, doubling the number of filters each time after using a max pooling operation. We used two fully connected layers with dropout connections after the convolution layers. Each of our convolution layers has Parametric Rectified Linear Unit (PReLU) activations, the fully connected layers have Rectified Linear Unit (ReLU) activations, and the output layer has a softmax activation.

We used a weighted cross entropy loss and trained our network using the Adam optimizer, a variant of stochastic gradient descent61. We set class weights proportional to the amount of each class in the training set in order to maintain higher precision and recall. We initialized the learning rate to 8e-5. We used batch sizes of 32 for full nucleus images and batch sizes of 128 for nucleus patches. We initialized all weights according to the variant of the Xavier initialization proposed in He et al.58 and used the patience method described in Goodfellow et al.60 with a patience limit of 20 steps to enforce early stopping, which prevents overfitting.

PatchNet

In order to visualize and interpret features learned by convolutional neural networks, we used PatchNet43. PatchNet is composed of a small classifier CNN that is applied across patches of the original image. The classification decisions across all patches are then averaged in order to predict the class of the entire input image. PatchNet provides feature interpretability by providing a global heat-map (based on classification decisions from each patch) as well as filter heat-maps (based on filter activations from the last convolutional layer). By hand-selecting patch sizes for each of our classification problems, we were able to extract interpretable heat-maps from PatchNet without sacrificing accuracy.

In classifying between NIH/3T3 and BJ nuclei, we used patches of size 11 × 11 since the model was able to achieve a high accuracy by simply using areas of high luminance contrast to identify NIH/3T3 nuclei. However, in classifying between BJ nuclei and MCF10A nuclei, we used larger patches of size 17 × 17 to allow the network to incorporate more local context to improve classification at the expense of heatmap granularity.

Linear Models and Feature Selection

In order to better understand the effects of different features on learning, we also trained three linear models (logistic regression, linear SVM, and random forest classifier) on hand-selected features (as described below).

We studied the impact of both, shape-based features and texture-based features, for nucleus classification by using the following feature set (containing 104 features for full nucleus images and 99 features for nucleus patches). For shape-based features, we used area, eccentricity, major length axis, minor length axis, and roundness as features. For texture-based features, we used local binary patterns with a radius of up to 3 and 8-bit point codes (256 possible codes), parameter free Threshold Adjacency Statistics (PFTAS), and Zernike moments. We also considered gray level run length and co-occurrence matrices as well Gabor wavelet features. But since extracting these features is highly computationally intensive and did not improve accuracy, we did not include these features in the analysis.

We trained a weighted L2-Regularized Logistic Regression with the L-BFGS solver on our datasets. For BJ vs. MCF 10 A nucleus classification, we also trained a linear SVM as well as a random forest classifier. The linear SVM was trained using the default parameters in the python sci-kit learn library. The random forest classifier was trained using 100 estimators and a maximum depth of 8. To search across the parameter space for random forest model selection, we used a randomized search across a subset of possible parameters. Kernel SVMs (such as radial basis SVMs and kernels based on optimal transport) were too computationally expensive to train on the nucleus patches datasets, but also tended to over-fit on full nucleus datasets. Hence, we did not include these models in the results.

Evaluation of model performance

We used a held-out validation set to determine model generalizability. We selected our validation set using a fair split of examples among the classes to total at most 15% of the entire data set. Performance of the neural model was determined based on the accuracy on the validation set. We chose not to use area under the receiving operating characteristic curve or precision and recall as metrics, but instead to explicitly list out the confusion matrices. Performance of the linear model was determined solely by accuracy on the validation set. More importantly, we used feature ablation tables for the linear model to analyze the significance of each feature in classification.

Implementation

A key challenge in automatically segmenting cleanly imaged nuclei was to account for drift blur. The main challenge with drift blur is that the texture is destroyed, but the pixel value histograms for such images are indistinguishable from those of cleanly imaged nuclei. Thus, to identify drift blur in an image set, we applied our neural models to attempt to distinguish between nuclei that were segmented from different image sets (taken on different days), but are from the same cell line. We used a pairwise comparison across all image sets from the same cell line, and if the model was able to distinguish between one image set and all the others with a validation accuracy of consistently greater than 60%, we discarded such datasets. We used 60% as our threshold, even though we would ideally prefer complete indistinguishability (corresponding to a threshold of 50%), since some variability between the different image sets is to be expected. In addition, we manually verified that the files that were discarded contained a majority of nuclei with drift blur.

We now describe the relevant technologies used in our dataset and model development. Our image processing and segmentation pipeline was implemented in python using the libraries scikit-image62 and scipy63. Our neural models were implemented in python using Tensorflow and trained on an NVIDIA Titan X Pascal 12GB GPU. Our logistic regression model was implemented in python using scikit-learn and feature extraction for the linear model was done using the mahotas python library. Image segmentation on an NVIDIA Titan X Pascal 12GB GPU took roughly 0.5 seconds per 512 × 512 image containing several nuclei. As an example, training our CNN for classifying BJ vs. NIH/3T3 nuclei based on the full 128 × 128 nucleus images took less than 900 seconds on an NVIDIA Titan X Pascal 12GB GPU. More precisely, the model required 56 epochs until convergence, where each epoch consisted of 20 mini-batches composed of 128 images split equally among the two classes, and a complete forward and backward pass across all 20 mini-batches in an epoch took 14.66 seconds.

Data availability

Data and code for segmentation and neural network training will be made available on github upon publication of this paper.

Electronic supplementary material

Acknowledgements

K.D. and G.V.S. thank the Mechanobiology Institute (MBI), National University of Singapore (NUS), Singapore and the Ministry of Education (MOE) Tier-3 Grant Program for funding. A.R., A.C.S and C.U. were partially supported by NSF (1651995), DARPA (W911NF-16-1-0551), and ONR (N00014-17-1-2147).

Author Contributions

A.R., K.D., A.C.S., C.U., and G.V.S. designed the research; A.R., K.D., and A.C.S. performed the research; A.R., K.D., A.C.S., C.U., and G.V.S. analyzed the data, prepared the figures, and wrote the manuscript.

Competing Interests

The authors declare that they have no competing interests.

Footnotes

Adityanarayanan Radhakrishnan and Karthik Damodaran contributed equally to this work.

Electronic supplementary material

Supplementary information accompanies this paper at 10.1038/s41598-017-17858-1.

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Caroline Uhler, Email: cuhler@mit.edu.

G. V. Shivashankar, Email: shiva.gvs@gmail.com

References

- 1.Humphrey JD, Dufresne ER, Schwartz MA. Mechanotransduction and extracellular matrix homeostasis. Nat. Rev. Mol. Cell Biol. 2014;15:802–812. doi: 10.1038/nrm3896. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Przybyla L, Muncie JM, Weaver VM. Mechanical control of epithelial-to-mesenchymal transitions in development and cancer. Ann. Rev. Cell Dev Biol. 2016;32:527–554. doi: 10.1146/annurev-cellbio-111315-125150. [DOI] [PubMed] [Google Scholar]

- 3.Iskratsch T, Wolfenson H, Sheetz MP. Appreciating force and shape-the rise of mechanotransduction in cell biology. Nat. Rev. Mol. Cell Biol. 2014;15:825–833. doi: 10.1038/nrm3903. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Wang N, Tytell JD, Ingber DE. Mechanotransduction at a distance: mechanically coupling the extracellular matrix with the nucleus. Nat. Rev. Mol. Cell Biol. 2009;10:75–82. doi: 10.1038/nrm2594. [DOI] [PubMed] [Google Scholar]

- 5.Shivashankar G. Mechanosignaling to the cell nucleus and gene regulation. Annu. Rev. Biophys. 2011;40:361–378. doi: 10.1146/annurev-biophys-042910-155319. [DOI] [PubMed] [Google Scholar]

- 6.Burke B, Stewart CL. The nuclear lamins: flexibility in function. Nat. Rev. Mol. Cell Biol. 2013;14:13–24. doi: 10.1038/nrm3488. [DOI] [PubMed] [Google Scholar]

- 7.Isermann P, Lammerding J. Nuclear mechanics and mechanotransduction in health and disease. Curr. Biol. CB. 2013;23:R1113–1121. doi: 10.1016/j.cub.2013.11.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Halder G, Dupont S, Piccolo S. Transduction of mechanical and cytoskeletal cues by YAP and TAZ. Nat. Rev. Mol. Cell Biol. 2012;13:591–600. doi: 10.1038/nrm3416. [DOI] [PubMed] [Google Scholar]

- 9.Zanconato F, Cordenonsi M, Piccolo S. YAP/TAZ at the roots of cancer. Cancer cell. 2016;29:783–803. doi: 10.1016/j.ccell.2016.05.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Frost B. Alzheimer’s disease: An acquired neurodegenerative laminopathy. Nucleus. 2016;7:275–283. doi: 10.1080/19491034.2016.1183859. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Scaffidi P, Misteli T. Lamin A-dependent nuclear defects in human aging. Science. 2006;312:1059–1063. doi: 10.1126/science.1127168. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Zink D, Fischer AH, Nickerson JA. Nuclear structure in cancer cells. Nature reviews. Cancer. 2004;4:677–687. doi: 10.1038/nrc1430. [DOI] [PubMed] [Google Scholar]

- 13.Liu F, Wang L, Perna F, Nimer SD. Beyond transcription factors: how oncogenic signalling reshapes the epigenetic landscape. Nat. Rev. Cancer. 2016;16:359–372. doi: 10.1038/nrc.2016.41. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Hatch E, Hetzer M. Breaching the nuclear envelope in development and disease. J. Cell Biol. 2014;205:133–141. doi: 10.1083/jcb.201402003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Hnisz D, et al. Activation of proto-oncogenes by disruption of chromosome neighborhoods. Science. 2016;351:1454–1458. doi: 10.1126/science.aad9024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Flavahan, W. A., Gaskell, E. & Bernstein, B. E. Epigenetic plasticity and the hallmarks of cancer. Science357, doi:10.1126/science.aal2380 (2017). [DOI] [PMC free article] [PubMed]

- 17.Maciejowski J, de Lange T. Telomeres in cancer: tumour suppression and genome instability. Nat. Rev. Mol. Cell Biol. 2017;18:175–186. doi: 10.1038/nrm.2016.171. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Slattum GM, Rosenblatt J. Tumour cell invasion: an emerging role for basal epithelial cell extrusion. Nat. Rev. Cancer. 2014;14:495–501. doi: 10.1038/nrc3767. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Umar A, Dunn BK, Greenwald P. Future directions in cancer prevention. Nat. Rev. Cancer. 2012;12:835–848. doi: 10.1038/nrc3397. [DOI] [PubMed] [Google Scholar]

- 20.Lee HO, Park WY. Single-cell RNA-Seq unveils tumor microenvironment. BMB Rep. 2017;50:283–284. doi: 10.5483/BMBRep.2017.50.6.086. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Nandakumar V, et al. Isotropic 3D nuclear morphometry of normal, fibrocystic and malignant breast epithelial cells reveals new structural alterations. PloS one. 2012;7:e29230. doi: 10.1371/journal.pone.0029230. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Nyirenda N, Farkas DL, Ramanujan VK. Preclinical evaluation of nuclear morphometry and tissue topology for breast carcinoma detection and margin assessment. Breast Cancer Res. Treat. 2011;126:345–354. doi: 10.1007/s10549-010-0914-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Mueller JL, et al. Rapid staining and imaging of subnuclear features to differentiate between malignant and benign breast tissues at a point-of-care setting. J. Cancer Res. Clin. Oncol. 2016;142:1475–1486. doi: 10.1007/s00432-016-2165-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Underwood, J. C. E. & Crocker, J. Pathology of the Nucleus. (Springer-Verlag, 1990).

- 25.Liu Y, Uttam S, Alexandrov S, Bista RK. Investigation of nanoscale structural alterations of cell nucleus as an early sign of cancer. BMC biophys. 2014;7:1. doi: 10.1186/2046-1682-7-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Faridi, P., Danyali, H., Helfroush, M. S. & Jahromi, M. A. An automatic system for cell nuclei pleomorphism segmentation in histopathological images of breast cancer. In IEEE Signal Processing in Medicine and Biology Symposium (SPMB). 1–5 (2016).

- 27.Irshad H, Veillard A, Roux L, Racoceanu D. Methods for nuclei detection, segmentation, and classification in digital histopathology: a review-current status and future potential. IEEE Rev. Biomed. Eng. 2014;7:97–114. doi: 10.1109/RBME.2013.2295804. [DOI] [PubMed] [Google Scholar]

- 28.Ghaznavi F, Evans A, Madabhushi A, Feldman M. Digital imaging in pathology: whole-slide imaging and beyond. Annu. Rev. Pathol. 2013;8:331–359. doi: 10.1146/annurev-pathol-011811-120902. [DOI] [PubMed] [Google Scholar]

- 29.Xing F, Yang L. Robust nucleus/cell detection and segmentation in digital pathology and microscopy images: A comprehensive review. IEEE Rev. Biomed. Eng. 2016;9:234–263. doi: 10.1109/RBME.2016.2515127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Sommer C, Gerlich DW. Machine learning in cell biology - teaching computers to recognize phenotypes. J. Cell Sci. 2013;126:5529–5539. doi: 10.1242/jcs.123604. [DOI] [PubMed] [Google Scholar]

- 31.Grys BT, et al. Machine learning and computer vision approaches for phenotypic profiling. J. Cell Biol. 2017;216:65–71. doi: 10.1083/jcb.201610026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539. [DOI] [PubMed] [Google Scholar]

- 33.Paramanandam M, et al. Automated segmentation of nuclei in breast cancer histopathology images. PloS one. 2016;11:e0162053. doi: 10.1371/journal.pone.0162053. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Gann PH, et al. Development of a nuclear morphometric signature for prostate cancer risk in negative biopsies. PloS One. 2013;8:e69457. doi: 10.1371/journal.pone.0069457. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Huang H, et al. Cancer diagnosis by nuclear morphometry using spatial information. Pattern Recognit. Lett. 2014;42:115–121. doi: 10.1016/j.patrec.2014.02.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Wolfe P, et al. Using nuclear morphometry to discriminate the tumorigenic potential of cells: a comparison of statistical methods. Cancer Epidemiol. Biomarkers Prev. 2004;13:976–988. [PubMed] [Google Scholar]

- 37.Esteva A, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Chen CL, et al. Deep learning in label-free cell classification. Sci Rep. 2016;6:21471. doi: 10.1038/srep21471. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Yu KH, et al. Predicting non-small cell lung cancer prognosis by fully automated microscopic pathology image features. Nat. Commun. 2016;7:12474. doi: 10.1038/ncomms12474. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Wienert S, et al. Detection and segmentation of cell nuclei in virtual microscopy images: a minimum-model approach. Sci. Rep. 2012;2:503. doi: 10.1038/srep00503. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Michał, Ż., Marek, K., Józef, K. & Roman, M. Classification of breast cancer cytological specimen using convolutional neural network. J. Phys. Conf. Ser. 783, 012060 (2017).

- 42.Morgan MA, Shilatifard A. Chromatin signatures of cancer. Genes & Development. 2015;29:238–249. doi: 10.1101/gad.255182.114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Radhakrishnan A, Durham C, Soylemezoglu A, Uhler C. PatchNet: Interpretable neural networks for image classification. arXiv. 2017;1705:08078. [Google Scholar]

- 44.Bloom KS. Centromeric heterochromatin: the primordial segregation machine. Ann. Rev. Genet. 2014;48:457–484. doi: 10.1146/annurev-genet-120213-092033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Slee RB, et al. Cancer-associated alteration of pericentromeric heterochromatin may contribute to chromosome instability. Oncogene. 2012;31:3244. doi: 10.1038/onc.2011.502. [DOI] [PubMed] [Google Scholar]

- 46.Zeitlin SG, et al. Double-strand DNA breaks recruit the centromeric histone CENP-A. Proc. Natl. Acad. Sci. USA. 2009;106:15762–15767. doi: 10.1073/pnas.0908233106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Korf B. Hutchinson-Gilford progeria syndrome, aging, and the nuclear lamina. N. Engl. J. Med. 2008;358:552–555. doi: 10.1056/NEJMp0800071. [DOI] [PubMed] [Google Scholar]

- 48.Schreiber KH, Kennedy BK. When lamins go bad: nuclear structure and disease. Cell. 2013;152:1365–1375. doi: 10.1016/j.cell.2013.02.015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Uhler, C. & Shivashankar, G. V. Chromosome intermingling: Mechanical hotspots for genome regulation. Trends Cell. Biol., doi:10.1016/j.tcb.2017.06.005 (2017). [DOI] [PubMed]

- 50.Jain RK, Martin JD, Stylianopoulos T. The role of mechanical forces in tumor growth and therapy. Annu. Rev. Biomed. Eng. 2014;16:321–346. doi: 10.1146/annurev-bioeng-071813-105259. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Quail DF, Joyce JA. Microenvironmental regulation of tumor progression and metastasis. Nat. Med. 2013;19:1423–1437. doi: 10.1038/nm.3394. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Joyce JA, Pollard JW. Microenvironmental regulation of metastasis. Nat. Rev. Cancer. 2009;9:239–252. doi: 10.1038/nrc2618. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Butcher DT, Alliston T, Weaver VM. A tense situation: forcing tumour progression. Nat. Rev. Cancer. 2009;9:108–122. doi: 10.1038/nrc2544. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Lambert AW, Pattabiraman DR, Weinberg RA. Emerging biological principles of metastasis. Cell. 2017;168:670–691. doi: 10.1016/j.cell.2016.11.037. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.World Health Organization. Cancer control: knowledge into action: WHO guide for effective programmes Vol. 2. World Health Organization (2007). [PubMed]

- 56.Gerdes MJ, et al. Highly multiplexed single-cell analysis of formalin-fixed, paraffin-embedded cancer tissue. Proc. Natl. Acad. Sci. USA. 2013;110:11982–11987. doi: 10.1073/pnas.1300136110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Tsoucas D, Yuan GC. Recent progress in single-cell cancer genomics. Curr. Opin. Genet. Dev. 2017;42:22–32. doi: 10.1016/j.gde.2017.01.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.He, K., Zhang, X., Ren, S. & Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. IEEE International Conference on Computer Vision (ICCV), 1026–1034 (2015).

- 59.Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. International Conference on Learning Representations (ICLR) (2015).

- 60.Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning. MIT Press (2016).

- 61.Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. International Conference on Learning Representations (ICLR) (2015).

- 62.Van der Walt, S. et al. scikit-image: image processing in Python. PeerJ 2, e453, doi:10.7717/peerj.453 (2014). [DOI] [PMC free article] [PubMed]

- 63.Van der Walt S, Colbert SC, Varoquaux G. The NumPy array: A structure for efficient numerical computation. Computing in Science & Engineering. 2011;13:22–30. doi: 10.1109/MCSE.2011.37. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

Data and code for segmentation and neural network training will be made available on github upon publication of this paper.