Abstract

Background

Medical students may not be able to identify the essential elements of situational awareness (SA) necessary for clinical reasoning. Recent studies suggest that students have little insight into cognitive processing and SA in clinical scenarios. Objective Structured Clinical Examinations (OSCEs) could be used to assess certain elements of situational awareness. The purpose of this paper is to review the literature with a view to identifying whether levels of SA based on Endsley’s model can be assessed utilising OSCEs during undergraduate medical training.

Methods

A systematic search was performed for literature pertaining to SA and OSCEs, to identify studies published in English in peer-reviewed international journals between January 1975 (first paper describing an OSCE) and February 2017. PubMed, EMBASE, PsycINFO Ovid and SCOPUS were searched for papers that described the assessment of SA using OSCEs among undergraduate medical students. Key search terms included “objective structured clinical examination”, “objective structured clinical assessment” or “OSCE” and “non-technical skills”, “sense-making”, “clinical reasoning”, “perception”, “comprehension”, “projection”, “situation awareness”, “situational awareness” and “situation assessment”. Boolean operators (AND, OR) were used to combine these terms and narrow the search to papers relevant to the research question. Areas of interest were elements of SA that can be assessed by these examinations.

Results

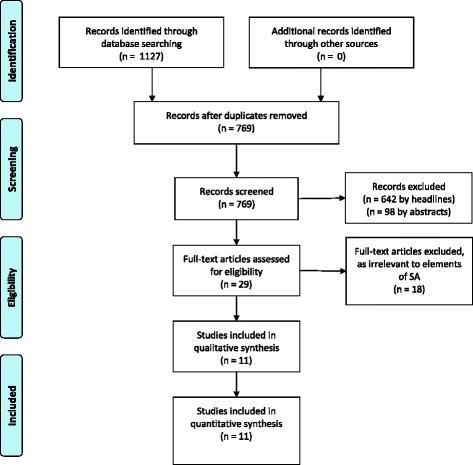

The initial search of the literature retrieved 1127 publications. After duplicates were removed and papers relating to nursing, paramedical disciplines, pharmacy and veterinary education were excluded on the basis of title, abstract or full text, 11 articles relating to the assessment of elements of SA in undergraduate medical students were eligible for inclusion.

Discussion

Review of the literature suggests that whole-task OSCEs enable the evaluation of SA associated with clinical reasoning skills. If they address the levels of SA, these OSCEs can provide supportive feedback and strengthen educational measures associated with higher diagnostic accuracy and reasoning abilities.

Conclusion

Based on these findings, early exposure of medical students to SA is recommended, using OSCEs to evaluate and facilitate SA in dynamic environments.

Keywords: Situational awareness, Undergraduate medical education, Objective structured clinical examination, Diagnostic reasoning, Clinical reasoning

Background

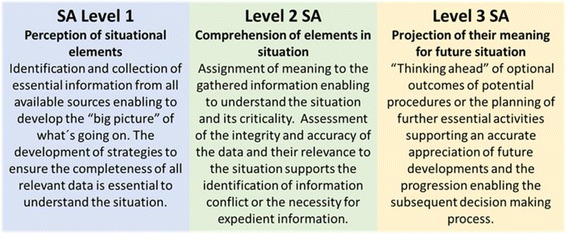

Diagnostic and treatment errors have gained increasing attention over recent decades [1, 2]. It has been suggested that these errors are intensely personal and are influenced by physicians’ knowledge and by cognitive factors such as faulty information processing and verification [3–5]. Clinical reasoning (CR), the cognitive process underlying diagnostic and therapeutic decision making, is directed by the situation and context of the patient’s condition [6]. CR requires the recognition and incorporation of multiple individual aspects of a patient, which enables selection of the best treatment option in any given clinical presentation [7]. The accumulation of cognitive errors within CR has been suggested to predict harmful events to the patient [8]. Despite the implementation of innovative teaching and assessment methods, such as simulation-based learning [9, 10] and problem-based learning [11, 12], in medical education curricula, errors in identifying the clinical presentation and in selecting appropriate therapeutic options continue to be reported [13–15]. Situational awareness (SA) was described by Endsley, in relation to aviation, as "a person’s mental model of the world around them" [16]. Knowledge of the current state of a given situation is central to effective decision making and ongoing assessment in dynamic systems [6, 17, 18]. The ability to integrate successive information and to identify conflicting perceptions is an essential precondition for maintaining adequate SA [17, 19]. Perceiving the surrounding circumstances and the current state of the situation, understanding them, and anticipating their possible impact on future outcomes have been divided into three levels of SA: Level 1 Perception, Level 2 Comprehension and Level 3 Projection [17]. In healthcare, SA has been identified as a key element of medical practice, involving multiple cognitive capacities such as perception, understanding, reasoning and meta-cognition [20].
In clinical practice, SA is believed to be essential for recognising and interpreting the symptoms and signs of a patient’s illness, thereby enabling accurate CR [21–24]. The WHO identified inadequate SA as a primary factor associated with deficient clinical performance [25] and recommended that “human factors” training, as practised in other high-risk environments, be implemented in undergraduate medical education [26]. Furthermore, SA has been emphasised as one of four fundamental cornerstones of patient safety education incorporated into an undergraduate medical curriculum [27].

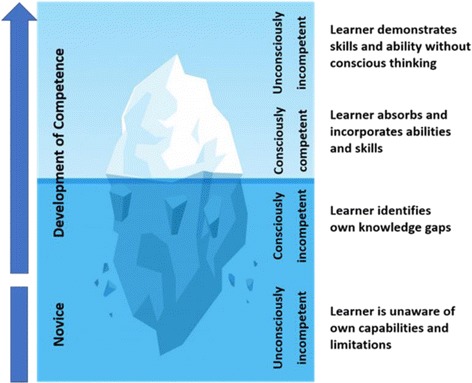

The development of clinical expertise is commonly described in four stages [28, 29]. Students, initially characterised as “unconsciously incompetent”, learn clinically from experienced doctors who apply pattern recognition in their daily practice when assessing patients [30, 31]. Novices are often cognitively overburdened by the vast amount of available information and by the need to prioritise essential data, resulting in an incomplete or defective perception of the situation [32]. Experienced clinicians, who have developed their mental models by integrating knowledge and expertise over many years, are termed “unconsciously competent” [33]. Their use of illness scripts and schemata enables fast, non-analytical thinking (System 1), producing a rapid “big picture” of the patient’s clinical presentation that is more comprehensive, and better projects possible outcomes, than the mental models of novices [32]. If the situation is not completely understood, clinical experts are able to switch to analytical thinking (System 2) [34]. However, they are commonly unaware of the elements of SA and therefore generally cannot convey or teach the sequence of data gathering and its incorporation into the reasoning process [22, 35]. As a result, observing senior tutors might not enable students to progress from conscious incompetence towards conscious competence by perceiving the essential steps of identifying and integrating relevant information for CR [33, 36]. Furthermore, Kiesewetter et al. emphasised that very little is known about cognitive processing in medical students, which may limit instruction on the incremental steps of CR in medical education [37]. Twenty years ago, Goss highlighted that medical students enter their third year of training competent in information gathering and in facilitating patient care, but with deficient diagnostic reasoning ability [18].
Using a paper-based examination presented in either a clinical vignette format or a chief complaint format, Nendaz and colleagues compared the abilities of students, residents and general internists to consider differential diagnoses (SA Level 2), select basic diagnostic investigations (SA Level 1) and consider treatment options (SA Level 3). They noted that students were able to demonstrate knowledge and carry out examinations but struggled to incorporate the data into further diagnostic processes [38]. Because data gathering is closely linked with subsequent reasoning, both should be addressed and evaluated together. More recently, Schuwirth argued that outcome-based assessment does not reflect CR abilities and that alternative techniques for evaluating the intermediate steps should therefore be explored [39]. Singh et al. suggested changing the current framework of the analytical diagnostic process in order to identify breakdowns in SA; by distinguishing the level at which SA was lacking, targeted measures can be applied in subsequent training [40]. This suggests the need to emphasise the understanding of SA in the medical context and to develop novel approaches to teaching and evaluating the use of SA in educational healthcare settings.

Objective structured clinical examinations (OSCEs) are, in theory, intended to function as an educational measure during medical training, allowing students’ competence to be assessed under variable circumstances [41, 42]. Fida and Kassab showed that scores achieved by medical students in OSCE stations had strong predictive value for the students’ ability to identify and integrate relevant information and competently manage a patient [7]. OSCEs therefore have the potential to identify and remediate deficits in selecting and integrating essential parameters, which is pivotal for CR [31]. In contrast, Martin et al. found no significant correlation between OSCE scores, data interpretation and CR [43]. These conflicting findings raise the question of whether aviation-like SA training and assessment could be purposefully reflected in medical education and assessment. OSCEs may be a suitable instrument for teaching and evaluating students’ use of SA as part of their clinical reasoning. The purpose of this paper is to review the literature with a view to identifying whether levels of SA based on Endsley’s model can be assessed using OSCEs during undergraduate medical training.

Methods

A systematic search of the literature pertaining to SA and OSCEs was performed to identify studies published in English in peer-reviewed international journals between January 1975 (first paper describing an OSCE) and February 2017. PubMed, EMBASE, PsycINFO Ovid and SCOPUS were searched for papers that described the assessment of CR using OSCEs among undergraduate medical students. Key search terms included “Objective Structured Clinical Examination”, “Objective Structured Clinical Assessment” or “OSCE” and “non-technical skills”, “sense-making”, “clinical reasoning”, “perception”, “comprehension”, “projection”, “situation awareness”, “situational awareness” and “situation assessment”. Boolean operators (AND, OR) were used to combine these terms and narrow the search to papers relevant to the research question (Table 1). Publications relating to undergraduate medical training and ‘situational awareness’ or information processing as part of clinical reasoning were included. Because of their different cognitive demands and scopes of practice, publications relating to nursing, paramedical disciplines, pharmacy and veterinary education were excluded. The abstracts of the remaining papers were manually reviewed to ensure their relevance. Areas of particular interest were elements of SA within OSCEs and the assessment of SA within these examinations. Additionally, the reference lists of the remaining publications were reviewed manually, and any publications of potential interest were retrieved and reviewed (selection process described in Fig. 1).

Table 1.

Steps of the initial literature search used to retrieve papers for critical appraisal of their relevance to SA and OSCEs in undergraduate medical education

| Step | Search term or combination |
|---|---|
| 1 | Objective structured clinical examination |
| 2 | OSCE |
| 3 | OR 1–2 |
| 4 | Objective structured clinical assessment |
| 5 | OR 3–4 |
| 6 | Non-technical skills |
| 7 | AND 5–6 |
| 8 | Sense-making |
| 9 | AND 5–8 |
| 10 | Clinical reasoning |
| 11 | AND 5–10 |
| 12 | Perception |
| 13 | Comprehension |
| 14 | Projection |
| 15 | OR 12–13–14 |
| 16 | AND 5–15 |
| 17 | Situation awareness |
| 18 | Situational awareness |
| 19 | Situation assessment |
| 20 | OR 17–18–19 |
| 21 | AND 5–20 |
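The combination steps in Table 1 can be read as a small query-building procedure. The Python sketch below is an illustration only, not the authors’ actual database syntax: it assumes that an entry such as “AND 5–8” combines line 5 with line 8 (rather than every line from 5 to 8) and that “OR 12–13–14” joins lines 12, 13 and 14. The names `TERMS`, `COMBINATIONS` and `build_search_lines` are hypothetical, and the generic quoted-phrase syntax would need adapting to each database searched.

```python
from typing import Dict

# Hypothetical sketch: rebuild the 21 search lines of Table 1 as query strings.
# Assumption: "AND 5–8" means line 5 AND line 8, and "OR 12–13–14" means
# line 12 OR line 13 OR line 14 (database-specific syntax is not modelled).

# Free-text search terms, keyed by their line number in Table 1.
TERMS: Dict[int, str] = {
    1: "objective structured clinical examination",
    2: "OSCE",
    4: "objective structured clinical assessment",
    6: "non-technical skills",
    8: "sense-making",
    10: "clinical reasoning",
    12: "perception",
    13: "comprehension",
    14: "projection",
    17: "situation awareness",
    18: "situational awareness",
    19: "situation assessment",
}

# Combination lines: (operator, lines combined), keyed by Table 1 line number.
COMBINATIONS = {
    3: ("OR", (1, 2)),
    5: ("OR", (3, 4)),
    7: ("AND", (5, 6)),
    9: ("AND", (5, 8)),
    11: ("AND", (5, 10)),
    15: ("OR", (12, 13, 14)),
    16: ("AND", (5, 15)),
    20: ("OR", (17, 18, 19)),
    21: ("AND", (5, 20)),
}


def build_search_lines() -> Dict[int, str]:
    """Return the query string for every numbered line of Table 1."""
    lines: Dict[int, str] = {n: f'"{term}"' for n, term in TERMS.items()}
    # Process combinations in ascending order so each referenced line exists.
    for number, (operator, parts) in sorted(COMBINATIONS.items()):
        joined = f" {operator} ".join(lines[p] for p in parts)
        lines[number] = f"({joined})"
    return dict(sorted(lines.items()))


if __name__ == "__main__":
    for number, query in build_search_lines().items():
        print(f"{number:>2}  {query}")
```

Each printed line corresponds to one row of Table 1; line 21, for example, is the OSCE block (line 5) combined by AND with the three SA synonyms joined by OR (line 20).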

Fig. 1.

PRISMA Flowchart

Results

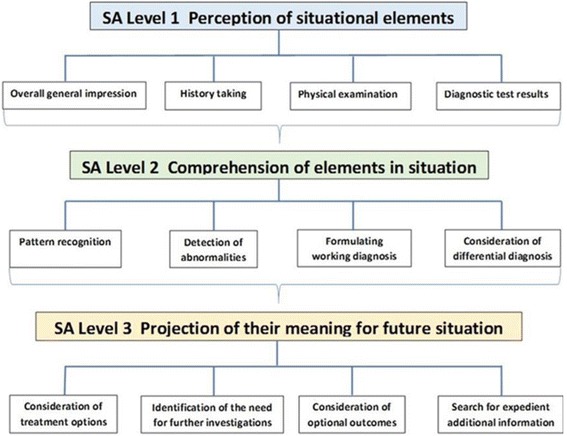

The search of the literature retrieved 11 articles eligible for inclusion (Table 2). Only one publication demonstrated an association between the OSCE and SA. An appraisal of the design of the simulation scenario used, however, revealed that the medical students undertook a root cause analysis to identify a prescription error [44]. Part of the examination focused on SA Level 1, when students were asked to take a history of the incident, and on SA Level 2, when they integrated these data into their understanding of the situation. The authors suggested that OSCEs reflect the utilisation of SA; however, they provided neither a definition of SA nor the model of SA on which this conclusion was based. Evaluation of SA Level 1 was identified in all 11 publications, mostly in elements such as physical examination and history taking, but also in obtaining an overall impression of the patient and retrieving diagnostic test results. All 11 studies also evaluated elements of SA Level 2, namely the integration of the parameters gathered at SA Level 1 into further information processing steps. Only two studies assessed the selection of diagnostic and treatment options, categorised as SA Level 3.
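The level counts reported above can be cross-checked against Table 2 with a simple tally. In this Python sketch the per-study level sets are transcribed by hand from the SA Level 1, 2 and 3 columns of Table 2, treating a level as assessed when its column has an entry; the names `SA_LEVELS_BY_STUDY`, `count_studies_per_level` and `studies_covering` are illustrative only and were not part of the original analysis.

```python
from collections import Counter
from typing import Dict, List, Set

# SA levels with an entry in Table 2, per study (hand-transcribed, illustrative).
SA_LEVELS_BY_STUDY: Dict[str, Set[int]] = {
    "Volkan 2004": {1, 2, 3},
    "Durak 2007": {1, 2, 3},
    "Varkey 2007": {1, 2},
    "Durning 2012": {1, 2},
    "Myung 2013": {1, 2},
    "Lafleur 2015": {1, 2},
    "LaRochelle 2015": {1, 2},
    "Park 2015": {1, 2},
    "Sim 2015": {1, 2},
    "Stansfield 2016": {1, 2},
    "Furmedge 2016": {1, 2},
}


def count_studies_per_level(table: Dict[str, Set[int]]) -> Counter:
    """Count how many studies assess each SA level (1 perception, 2 comprehension, 3 projection)."""
    counts: Counter = Counter()
    for levels in table.values():
        counts.update(levels)  # adds 1 for each level present in this study
    return counts


def studies_covering(table: Dict[str, Set[int]], level: int) -> List[str]:
    """List the studies whose Table 2 entry includes the given SA level."""
    return [study for study, levels in table.items() if level in levels]


if __name__ == "__main__":
    print(count_studies_per_level(SA_LEVELS_BY_STUDY))
    print(studies_covering(SA_LEVELS_BY_STUDY, 3))
```

The tally gives 11 studies for Level 1, 11 for Level 2 and 2 for Level 3 (Volkan 2004 and Durak 2007), matching the counts stated in the text.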

Table 2.

Results of the analysis of 11 identified papers concerning SA in undergraduate medical training evaluated by OSCEs (SA Levels 1, 2 and 3 in columns 5, 6 and 7, respectively)

| Author / year of publication | Year of study | Number of students | Level of education | SA Level 1 | SA Level 2 | SA Level 3 | Feedback | Assessment tool for SA | Educational tool for SA | Research interest |
|---|---|---|---|---|---|---|---|---|---|---|
| Volkan 2004 [49] | 1999 | 169 | year three | History taking, physical examination | Differential diagnosis | Consideration of treatment options | | X | | Factor analysis of OSCE constructs |
| Durak 2007 [51] | 2000–2001 | 382 | year six | Overall impression, history taking, diagnostic test results | Differential diagnosis | Consideration of treatment options, identification of the need for further investigations | X | | X | Case-based stationary examination |
| Varkey 2007 [44] | 2003 | 42 | year three | History taking | Identification of root cause of error | | X | | X | Root-cause analysis of error |
| Durning 2012 [45] | 2010 | 170 | year two | History taking, physical examination | Differential diagnosis | | | X | | Feasibility, reliability, and validity of the evaluation of clinical reasoning utilising OSCEs |
| Myung 2013 [53] | 2011 | 145 | year four | Physical examination | Differential diagnosis | | | | X | OSCE evaluation of the impact of pre-encounter analytical reasoning training |
| Lafleur 2015 [52] | 2013 | 40 | year five | Physical examination | Diagnostic reasoning | | | | X | Influence of OSCE design on diagnostic reasoning |
| LaRochelle 2015 [46] | 2009–2011 | 514 | year four | History taking, physical examination | Clinical reasoning | | | X | | Impact of pre-clerkship clinical reasoning training |
| Park 2015 [47] | 2011 | 65 | year four | Overall impression, history taking, physical examination | Differential diagnosis | | | X | | Comparison of clinical reasoning scores and diagnostic accuracy |
| Sim 2015 [48] | 2013 | 185 | year five | History taking, physical examination | Data interpretation, clinical reasoning | | | X | | Assessment of different clinical skills using OSCE |
| Stansfield 2016 [50] | 2012 | 45 | year four | Physical examination | Diagnostic reasoning | | | X | | Evaluation of embedding clinical examination results into diagnostic reasoning |
| Furmedge 2016 [54] | 2013/2014 | 1280 | year one/two | Information gathering | Predefined focus on integration of basic and clinical science | | X | | X | Acceptability and educational impact of OSCEs in early years |

Six papers described the OSCE as a potential assessment tool for CR [45–50], a method that might correspond to those used to assess SA in high-risk environments or simulation scenarios (as described in Fig. 2). Furthermore, five papers suggested that the OSCE is a valuable means of teaching medical students information gathering and processing as they identify the clinical presentation and incorporate the findings into their decision tree [44, 51–54].

Fig. 2.

Levels of SA based on Endsley’s model [17]

Situational awareness as part of the evaluation of clinical reasoning

Six studies concluded that OSCE stations allow assessment of students’ use of CR abilities in diagnostic thinking [45–50]. In a study by Durning et al. based on three successive stations, students were asked to take a history from a patient, synthesise the data and provide the most likely diagnosis and a problem list; in the last step, the patient had to be presented to an attending colleague [45]. LaRochelle and colleagues detected a correlation between clinical and reasoning skills during pre-clerkship and the abilities observed during internship [46]. They therefore suggested that OSCEs could be used to identify and support students who are experiencing difficulties with diagnostic reasoning, and so possibly prevent problems in subsequent clinical performance. Park et al., in contrast, found that OSCE scores did not correlate with CR abilities [47]; however, scores achieved in CR OSCEs correlated strongly with diagnostic accuracy. Assessing students across 16 OSCE stations, Sim et al. found that, of six evaluation criteria (history taking, physical examination, communication skills, CR skills, procedural skills and professionalism), procedural skills were the strongest and CR abilities the weakest [48]. They suggested that the low mean scores could result from students’ lack of biomedical knowledge, their inability to incorporate the collected information into the clinical presentation of the patient, or a combination of both. Volkan et al. suggested two fundamental constructs for OSCEs: information gathering, represented by history-taking and physical examination, and reasoning and dissemination, which included hypothetico-deductive testing and differential diagnostic thinking [49].
Based on previous studies in which students’ CR declined when they focused on history-taking and physical examination, they highlighted the importance of comprehensive OSCEs that assess the ability to apply both processes simultaneously. In an innovative OSCE assessing the relationship between CR and physical examination abilities, Stansfield and colleagues identified a discrepancy between students’ acquired knowledge and its integration into the selection of physical examination manoeuvres [50]. In addition, students who were able to embed their findings into the CR process showed fewer deficits in applying adequate physical examination skills.

The OSCE as an educational tool for situational awareness

Five research groups identified the potential of OSCE stations as teaching tools for SA within medical education [44, 51–54]. In general, studies demonstrated better diagnostic accuracy and reasoning abilities among students who used an underlying analytical approach. Direct feedback, or the addition of supportive information between incremental OSCE scenarios, showed good educational value. Durak et al. described a model using hybrid forms of OSCE stations [51]. Based on patient scenarios, students were asked to develop a treatment plan and were guided in a stepwise manner. The initial step included collecting relevant data from history-taking, evaluating signs and symptoms, and identifying underlying pathophysiological changes. After identifying the most likely diagnosis, students were prompted to extract relevant information from the clinical notes and diagnostic results. Subsequently, students created a treatment plan for the patient based on the chosen diagnosis. Between these steps, corrective feedback was provided and incorporated into subsequent decision making. This method was found to motivate students to improve their CR. Lafleur et al. investigated the impact of OSCE station design on students’ learning behaviour [52]. They found that students applied more diagnostic reasoning when studying for whole-task OSCEs than for OSCEs that focused purely on physical examination. Backward and forward associations, that is, looking for evidence to support a suspected diagnosis or aggregating all identified symptoms and signs to reach a diagnosis, respectively, are both tasks that demand higher-order cognitive processing, and both were strengthened when students studied collaboratively for comprehensive OSCEs. Myung et al. compared analytical reasoning ability and diagnostic accuracy in a randomised controlled study [53].
They compared two groups of students, one of which had received prior training in analytical reasoning and one of which had not; OSCE scores for information gathering did not differ between the two cohorts. However, diagnostic accuracy was higher in the group that had been trained in analytical reasoning strategies. Citing the similarity of OSCEs to real clinical situations, Varkey et al. suggest that OSCEs in general are an ideal tool for assessing and teaching SA [44]. However, they did not state what they meant by SA or how it relates to the healthcare environment. In their study, students were asked to identify pivotal information in an error-induced patient encounter. The tutor provided formative feedback on information gathering, root cause analysis and task completion. Furmedge and colleagues explored students’ acceptance of a novel, formative OSCE. The clinical scenario was designed to enable candidates to demonstrate the integration of skills and knowledge into the understanding of a situation, rather than the mere recall of memorised text. In this study, OSCEs were seen as a learning environment in which to develop cognitive strategies through exposure to clinical scenarios mirroring reality [54].

Discussion

We suggest that OSCE stations could be used to assess elements of SA (Fig. 3) in medical students, using whole-task simulation scenarios. So far, no clear methodology has been described that is universally accepted as a fundamental measure of SA. Furthermore, the assumption that accurate SA automatically correlates with adequate performance, and vice versa, has been disproven. Although students may demonstrate history-taking, physical examination and procedural skills, the literature suggests that they are frequently unable to embed their findings in subsequent steps and decisions. This may be because novices often merely recite large amounts of information from their “knowledge database”. The reduced diagnostic accuracy of medical students underlines the primary need for efficient data gathering and processing [29, 38]. Diagnostic excellence has been suggested to originate from a sound understanding of the fundamental anatomical and physiological context, in conjunction with pathophysiological changes potentially identifiable within elements of SA in any given clinical presentation [55]. Borleffs et al. described the objective of teaching CR as the ability to make correct decisions in the process of establishing a diagnosis [56]. Alexander concluded that students must be able to demonstrate not only how to do something but also why it should be done [57]. Zwaan et al. suggested implementing interventions with a proven track record to enhance SA within the diagnostic reasoning process [8]. Gruppen and colleagues described how the use of hypotheses and information differs according to clinical experience and expertise [58]. In their study, collecting and appropriately selecting data proved more difficult than simply integrating the information available.
This imbalance between efficient information gathering and successive data integration suggests that educational measures should aim to enhance procedures in collecting and processing relevant information (Fig. 4).

Fig. 3.

Elements of SA in the clinical context

Fig. 4.

Developmental stages in competence according to Scott [69] (designed by Vv studio)

The OSCE as a learning approach for SA for medical students

OSCE stations can serve as educational tools for CR, pattern recognition and problem-based learning [59]. To foster the ability to put it all together, Furmedge et al. suggested early exposure of students to OSCEs [54]. However, they also highlighted the need to identify how early OSCE exposure could contribute to the development of non-analytical reasoning skills. Analysing feedback on completion of the OSCE cycle, Haider and colleagues summarised students’ appreciation of this type of assessment, which helped them identify areas of clinical weakness and thus inspired their interest in developing information processing skills [60]. Baker et al. introduced three strategies for developing CR: hypothesis testing, forward thinking and pattern recognition [61]. They developed a specific assessment tool covering the interpretative summary, differential diagnosis, explanation of reasoning and alternative diagnoses (IDEA). OSCEs have been described as a valuable source of feedback for both examinee and educator [62], allowing the importance of SA as an underlying requirement for well-informed CR in all disciplines to be reinforced [19, 29]. Feedback provided on completion of OSCE scenarios could support the faculty’s appraisal and the examinees’ self-rating of the sense-making process when selecting the best clinical diagnosis and therapeutic options [51]. Providing individualised feedback after an OSCE has been described as complex [63]. Nevertheless, establishing a cognitive map of the underlying information processing could help identify why selected parameters and criteria either made sense to the candidate during the CR process or were neglected [64–66]. Remedial teaching at undergraduate level could be considered if a deficiency within any of the three levels of SA were identified during OSCE assessments [67]. Gregory et al. described an innovative method of teaching aspects of situational awareness in undergraduate medical training by exposing students not only to hazards but also to additional indications of a patient’s condition [68]. On entry into undergraduate training, students are placed in a clinical area without a patient, such as the bed space, and are evaluated collectively on their ability to recognise any hazards and clues that provide supporting information about the patient’s clinical status. Students are also expected to extract additional parameters from clinical notes and diagnostic results. The positive feedback from students and tutors suggests that this approach is a promising tool for teaching SA to medical students.

Conclusion

Assessment of elements of SA, as adapted from Endsley’s model, might be translatable into certain aspects of CR evaluation using OSCEs. Given that assessment is a fundamental driver of adult learning, incorporating the measurement of SA use into OSCEs during undergraduate medical training could develop and strengthen teaching on information gathering and efficient processing. However, further research is needed to establish whether different levels of SA can be identified throughout the medical curriculum and its assessment, including the use of paper cases and the review of medical records. If so, are these levels of assessment congruent with the learning outcomes of the preclinical and clinical years? In order to teach students how to perceive and incorporate relevant data, it is essential to provide focussed and informative feedback related to each level of SA and the associated steps of CR. Once the ability to assess levels of SA within a curriculum (e.g. using OSCEs) has been established, we suggest that students be exposed, in a staged format, to the concept of SA early in their training, before they encounter complex and challenging clinical situations in their later medical careers. Efforts to convey the underlying elements of SA during undergraduate education could be reflected in an enhanced ability to read and understand clinical scenarios in subsequent clinical practice.

Acknowledgements

The authors wish to thank P O’Connor and E Doherty for their input as experts for human factors in healthcare.

Funding

No funding was received to carry out the study.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- CR: Clinical Reasoning

- OSCE: Objective Structured Clinical Examination

- SA: Situational Awareness

Authors’ contributions

TJBK contributed to the design of the study, analysis and interpretation of the data, drafting and revising the manuscript. KMK contributed to the interpretation of the data and revising the manuscript. SD, MPS, JK, PO’C and ED were involved in interpreting the data and revising the manuscript. All authors have given final approval of the version to be published.

Authors’ information

Markus Fischer is a PhD student at the School of Medicine, National University of Ireland Galway. His research interest is human factors in medical education.

Dr. Kieran Kennedy is a Lecturer in Clinical Methods and Clinical Practice in the School of Medicine at the National University of Ireland Galway. He is involved in teaching undergraduate medical students at all stages of their training. He is a General Practitioner (family doctor) in Galway City, Ireland.

Prof Steven Durning is a Professor of medicine and pathology at the Uniformed Services University (USU) and is the Director of Graduate Programs in Health Professions Education. As an educator, he mentors graduate students and faculty, teaches in the HPE program and directs a second-year medical school course on clinical reasoning. As a researcher, his interests include enhancing our understanding of clinical reasoning and its assessment.

Prof M Schijven, MD PhD MHSc is a Surgeon at the Academic Medical Center Amsterdam, The Netherlands, and a past President of the Dutch Society of Simulation in Healthcare. She is one of the AMC Principal Investigators. Her research focuses on simulation, serious gaming, applied mobile healthcare and virtual reality simulation.

Prof Jean Ker is the Associate Dean of Innovation and Professor in Medical Education at the University of Dundee and the Director of the Institute of Health Skills and Education at the University of Dundee.

Dr. Paul O’Connor is a Lecturer in primary care at the National University of Ireland Galway. His research is concerned with human performance in high risk work domains with a focus on patient safety, human factors and human error.

Dr. Eva Doherty is the Director of the Human Factors and Patient Safety (HFPS) training, research and assessment programme at the Royal College of Surgeons in Ireland (RCSI).

Dr. Thomas Kropmans is a Senior Lecturer in Medical Informatics and Medical Education at the National University of Ireland Galway. His research interests include postgraduate medical education and continuing professional development.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Markus A. Fischer, Email: m.fischer2@nuigalway.ie

Kieran M. Kennedy, Email: kieran.kennedy@nuigalway.ie

Steven Durning, Email: steven.durning@usuhs.edu

Marlies P. Schijven, Email: m.p.schijven@amc.uva.nl

Jean Ker, Email: j.s.ker@dundee.ac.uk

Paul O’Connor, Email: paul.oconnor@nuigalway.ie

Eva Doherty, Email: edoherty@rcsi.ie

Thomas J. B. Kropmans, Email: thomas.kropmans@nuigalway.ie

References

- 1. Kohn LT, Corrigan JM, Donaldson MS. Institute of Medicine Committee on quality of health care. To err is human: building a safer health system. Washington (DC): National Academies Press; 2000.

- 2. La Pietra L, Calligaris L, Molendine L, Quattrin R, Brusaferro S. Medical errors and clinical risk management: state of the art. Acta Otorhinolaryngol Ital. 2005;25(6):339–346.

- 3. Graber M, Gordon R, Franklin N. Reducing diagnostic errors in medicine: what's the goal? Acad Med. 2002;77(10):981–992. doi: 10.1097/00001888-200210000-00009.

- 4. Graber ML, Wachter RM, Cassel CK. Bringing diagnosis into the quality and safety equations. JAMA. 2012;308(12):1211–1212. doi: 10.1001/2012.jama.11913.

- 5. Nendaz M, Perrier A. Diagnostic errors and flaws in clinical reasoning: mechanisms and prevention in practice. Swiss Med Wkly. 2012;142(w13706):1–9. doi: 10.4414/smw.2012.13706.

- 6. De Jong T, Ferguson-Hessler MG. Types and qualities of knowledge. Educ Psychol. 1996;31(2):105–113. doi: 10.1207/s15326985ep3102_2.

- 7. Fida M, Kassab SE. Do medical students' scores using different assessment instruments predict their scores in clinical reasoning using a computer-based simulation? Adv Med Educ Pract. 2015;6:135–141. doi: 10.2147/AMEP.S77459.

- 8. Zwaan L, Thijs A, Wagner C, van der Wal G, Timmermans DR. Relating faults in diagnostic reasoning with diagnostic errors and patient harm. Acad Med. 2012;87(2):149–156. doi: 10.1097/ACM.0b013e31823f71e6.

- 9. Ziv A, Ben-David S, Ziv M. Simulation based medical education: an opportunity to learn from errors. Med Teach. 2005;27(3):193–199. doi: 10.1080/01421590500126718.

- 10. Cass GK, Crofts JF, Draycott TJ. The use of simulation to teach clinical skills in obstetrics. Semin Perinatol. 2011;35(2):68–73. doi: 10.1053/j.semperi.2011.01.005.

- 11. Reid WA, Evans P, Duvall E. Medical students’ approaches to learning over a full degree programme. Med Educ Online. 2012. doi: 10.3402/meo.v17i0.17205.

- 12. Davis P, Kvern B, Donen N, Andrews E, Nixon O. Evaluation of a problem-based learning workshop using pre- and post-test objective structured clinical examinations and standardized patients. J Contin Educ Heal Prof. 2000;20(3):164–170. doi: 10.1002/chp.1340200305.

- 13. Makary MA, Daniel M. Medical error-the third leading cause of death in the US. BMJ. 2016. doi: 10.1136/bmj.i2139.

- 14. McDonald KM, Matesic B, Contopoulos-Ioannidis DG, Lonhart J, Schmidt E, Pineda N, Ioannidis JP. Patient safety strategies targeted at diagnostic errors: a systematic review. Ann Intern Med. 2013;158(5 Pt 2):381–389. doi: 10.7326/0003-4819-158-5-201303051-00004.

- 15. Newman-Toker DE, Pronovost PJ. Diagnostic errors—the next frontier for patient safety. JAMA. 2009;301(10):1060–1062. doi: 10.1001/jama.2009.249.

- 16. Endsley MR. Situation awareness in aviation systems. In: Wise JA, Hopkin VD, Garland DJ, editors. Handbook of Aviation Human Factors. Boca Raton: CRC Press; 2009. pp. 12-1–12-22.

- 17. Endsley MR, Garland DJ. Theoretical underpinnings of situation awareness: a critical review. In: Situation awareness analysis and measurement. Mahwah: Taylor & Francis e-Library; 2000. pp. 3–28.

- 18. Goss JR. Teaching clinical reasoning to second-year medical students. Acad Med. 1996;71(4):349–352. doi: 10.1097/00001888-199604000-00009.

- 19. Endsley MR, Jones WM. A model of inter- and intrateam situation awareness: implications for design, training and measurement. In: McNeese M, Salas E, Endsley M, editors. New trends in cooperative activities: understanding system dynamics in complex environments. Santa Monica, CA: Human Factors and Ergonomics Society; 2001.

- 20. Parush A, Campbell C, Hunter A, Ma C, Calder L, Worthington J, Frank JR. Situational awareness and patient safety: a primer for physicians. Ottawa: The Royal College of Physicians and Surgeons of Canada; 2011.

- 21. Fore AM, Sculli GL. A concept analysis of situational awareness in nursing. J Adv Nurs. 2013;69(12):2613–2621. doi: 10.1111/jan.12130.

- 22. Graafland M, Schraagen JM, Boermeester MA, Bemelman WA, Schijven MP. Training situational awareness to reduce surgical errors in the operating room. Br J Surg. 2015;102(1):16–23. doi: 10.1002/bjs.9643.

- 23. Leonard MM, Kyriacos U. Student nurses’ recognition of early signs of abnormal vital sign recordings. Nurse Educ Today. 2015;35(9):e11–e18. doi: 10.1016/j.nedt.2015.04.013.

- 24. Wassef ME, Terrill E, Yarzebski J, Flaherty H. The significance of situation awareness in the clinical setting: implications for nursing education. Austin J Nurs Health Care. 2014;1(1):1005.

- 25. WHO. Human factors in patient safety. Review of topics and tools. Report for methods and measures working group of WHO Patient Safety. 2009.

- 26. Walton M, Woodward H, Van Staalduinen S, Lemer C, Greaves F, Noble D, Ellis B, Donaldson L, Barraclough B. The WHO patient safety curriculum guide for medical schools. Qual Saf Health Care. 2010;19(6):542–546. doi: 10.1136/qshc.2009.036970.

- 27. Armitage G, Cracknell A, Forrest K, Sandars J. Twelve tips for implementing a patient safety curriculum in an undergraduate programme in medicine. Med Teach. 2011;33(7):535–540. doi: 10.3109/0142159X.2010.546449.

- 28. Launer J. Unconscious incompetence. Postgrad Med J. 2010;86(1020):628. doi: 10.1136/pgmj.2010.108423.

- 29. Cutrer WB, Sullivan WM, Fleming AE. Educational strategies for improving clinical reasoning. Curr Probl Pediatr Adolesc Health Care. 2013;43(9):248–257. doi: 10.1016/j.cppeds.2013.07.005.

- 30. Pinnock R, Welch P. Learning clinical reasoning. J Paediatr Child Health. 2014;50(4):253–257. doi: 10.1111/jpc.12455.

- 31. Kuldas S, Ismail HN, Hashim S, Bakar ZA. Unconscious learning processes: mental integration of verbal and pictorial instructional materials. SpringerPlus. 2013;2(1):105. doi: 10.1186/2193-1801-2-105.

- 32. Endsley MR. Expertise and situation awareness. In: Ericsson KA, Charness N, Feltovich P, Hoffman R, editors. The Cambridge handbook of expertise and expert performance. Cambridge: Cambridge University Press; 2006. pp. 633–652.

- 33. Schmidt HG, Rikers RM. How expertise develops in medicine: knowledge encapsulation and illness script formation. Med Educ. 2007;41(12):1133–1139. doi: 10.1111/j.1365-2923.2007.02915.x.

- 34. Croskerry P, Nimmo GR. Better clinical decision making and reducing diagnostic error. J R Coll Physicians Edinb. 2011;41(2):155–162. doi: 10.4997/JRCPE.2011.208.

- 35. Ilgen JS, Humbert AJ, Kuhn G, Hansen ML, Norman GR, Eva KW, Charlin B, Sherbino J. Assessing diagnostic reasoning: a consensus statement summarizing theory, practice, and future needs. Acad Emerg Med. 2012;19(12):1454–1461. doi: 10.1111/acem.12034.

- 36. Schmidt HG, Norman GR, Boshuizen HP. A cognitive perspective on medical expertise: theory and implication. Acad Med. 1990;65(10):611–621. doi: 10.1097/00001888-199010000-00001.

- 37. Kiesewetter J, Ebersbach R, Görlitz A, Holzer M, Fischer MR, Schmidmaier R. Cognitive problem solving patterns of medical students correlate with success in diagnostic case solutions. PLoS One. 2013;8(8):e71486. doi: 10.1371/journal.pone.0071486.

- 38. Nendaz M, Raetzo MA, Junod AF, Vu NV. Teaching diagnostic skills: clinical vignettes or chief complaints? Adv Health Sci Educ Theory Pract. 2000;5(1):3–10. doi: 10.1023/A:1009887330078.

- 39. Schuwirth L. Is assessment of clinical reasoning still the holy grail? Med Educ. 2009;43(4):298–300. doi: 10.1111/j.1365-2923.2009.03290.x.

- 40. Singh H, Giardina TD, Petersen LA, Smith MW, Paul LW, Dismukes K, Bhagwath G, Thomas EJ. Exploring situational awareness in diagnostic errors in primary care. BMJ Qual Saf. 2012;21(1):30–38. doi: 10.1136/bmjqs-2011-000310.

- 41. Watson R, Stimpson A, Topping A, Porock D. Clinical competence assessment in nursing: a systematic review of the literature. J Adv Nurs. 2002;39(5):421–431. doi: 10.1046/j.1365-2648.2002.02307.x.

- 42. Chumley HS. What does an OSCE checklist measure? Fam Med. 2008;40(8):589–591.

- 43. Martin IG, Stark P, Jolly B. Benefiting from clinical experience: the influence of learning style and clinical experience on performance in an undergraduate objective structured clinical examination. Med Educ. 2000;34(7):530–534. doi: 10.1046/j.1365-2923.2000.00489.x.

- 44. Varkey P, Natt N. The objective structured clinical examination as an educational tool in patient safety. Jt Comm J Qual Patient Saf. 2007;33(1):48–53. doi: 10.1016/S1553-7250(07)33006-7.

- 45. Durning SJ, Artino A, Boulet J, La Rochelle J, Van der Vleuten C, Arze B, Schuwirth L. The feasibility, reliability, and validity of a post-encounter form for evaluating clinical reasoning. Med Teach. 2012;34(1):30–37. doi: 10.3109/0142159X.2011.590557.

- 46. LaRochelle JS, Dong T, Durning SJ. Preclerkship assessment of clinical skills and clinical reasoning: the longitudinal impact on student performance. Mil Med. 2015;180(4 Suppl):43–46. doi: 10.7205/MILMED-D-14-00566.

- 47. Park WB, Kang SH, Lee YS, Myung SJ. Does objective structured clinical examinations score reflect the clinical reasoning ability of medical students? Am J Med Sci. 2015;350(1):64–67. doi: 10.1097/MAJ.0000000000000420.

- 48. Sim JH, Abdul Aziz YF, Mansor A, Vijayananthan A, Foong CC, Vadivelu J. Students' performance in the different clinical skills assessed in OSCE: what does it reveal? Med Educ Online. 2015;20:26185. doi: 10.3402/meo.v20.26185.

- 49. Volkan K, Simon SR, Baker H, Todres ID. Psychometric structure of a comprehensive objective structured clinical examination: a factor analytic approach. Adv Health Sci Educ Theory Pract. 2004;9(2):83–92. doi: 10.1023/B:AHSE.0000027434.29926.9b.

- 50. Stansfield RB, Diponio L, Craig C, Zeller J, Chadd E, Miller J, Monrad S. Assessing musculoskeletal examination skills and diagnostic reasoning of 4th year medical students using a novel objective structured clinical exam. BMC Med Educ. 2016;16(1):268–274. doi: 10.1186/s12909-016-0780-4.

- 51. Durak HI, Caliskan SA, Bor S, Van der Vleuten C. Use of case-based exams as an instructional teaching tool to teach clinical reasoning. Med Teach. 2007;29(6):e170–e174. doi: 10.1080/01421590701506866.

- 52. Lafleur A, Côté L, Leppink J. Influences of OSCE design on students' diagnostic reasoning. Med Educ. 2015;49(2):203–214. doi: 10.1111/medu.12635.

- 53. Myung SJ, Kang SH, Phyo SR, Shin JS, Park WB. Effect of enhanced analytic reasoning on diagnostic accuracy: a randomized controlled study. Med Teach. 2013;35(3):248–250. doi: 10.3109/0142159X.2013.759643.

- 54. Furmedge DS, Smith LJ, Sturrock A. Developing doctors: what are the attitudes and perceptions of year 1 and 2 medical students towards a new integrated formative objective structured clinical examination? BMC Med Educ. 2016;16(1):32. doi: 10.1186/s12909-016-0542-3.

- 55. Flin R. Measuring safety culture in healthcare: a case for accurate diagnosis. Safety Sci. 2007;45(6):653–667. doi: 10.1016/j.ssci.2007.04.003.

- 56. Borleffs JC, Custers EJ, van Gijn J, ten Cate OT. "Clinical reasoning theater": a new approach to clinical reasoning education. Acad Med. 2003;78(3):322–325. doi: 10.1097/00001888-200303000-00017.

- 57. Alexander EK. Perspective: moving students beyond an organ-based approach when teaching medical interviewing and physical examination skills. Acad Med. 2008;83(10):906–909. doi: 10.1097/ACM.0b013e318184f2e5.

- 58. Gruppen LD, Wolf FM, Billi JE. Information gathering and integration as sources of error in diagnostic decision making. Med Decis Mak. 1991;11(4):233–239. doi: 10.1177/0272989X9101100401.

- 59. Salinitri FD, O'Connell MB, Garwood CL, Lehr VT, Abdallah K. An objective structured clinical examination to assess problem-based learning. Am J Pharm Educ. 2012;76(3):Article 44. doi: 10.5688/ajpe76344.

- 60. Haider I, Kahn A, Imam SM, Ajmal F, Khan M, Ayub M. Perceptions of final professional MBBS students and their examiners about objective structured clinical examination [OSCE]: a combined examiner and examinee survey. J Med Sci. 2016;24(4):206–221.

- 61. Baker EA, Ledford CH, Fogg L, Way DP, Park YS. The IDEA assessment tool: assessing the reporting, diagnostic reasoning, and decision-making skills demonstrated in medical students' hospital admission notes. Teach Learn Med. 2015;27(2):163–173. doi: 10.1080/10401334.2015.1011654.

- 62. Daud-Gallotti RM, Morinaga CV, Arlindo-Rodrigues M, Velasco IT, Martins MA, Tiberio IC. A new method for the assessment of patient safety competencies during a medical school clerkship using an objective structured clinical examination. Clinics. 2011;66(7):1209–1215. doi: 10.1590/S1807-59322011000700015.

- 63. Ashby SE, Snodgrass SH, Rivett DA, Russell T. Factors shaping e-feedback utilization following electronic objective structured clinical examinations. Nurs Health Sci. 2016;18(3):362–369. doi: 10.1111/nhs.12279.

- 64. Siddiqui FG. Final year MBBS students' perception for observed structured clinical examination. J Coll Physicians Surg Pak. 2013;23(1):20–24.

- 65. Khairy GA. Feasibility and acceptability of objective structured clinical examination [OSCE] for a large number of candidates: experience at a university hospital. J Family Community Med. 2004;11(2):75–78.

- 66. Hammad M, Oweis Y, Taha S, Hattar S, Madarati A, Kadim F. Students’ opinions and attitudes after performing a dental OSCE for the first time: a Jordanian experience. J Dent Educ. 2013;77(1):99–104.

- 67. Pugh D, Touchie C, Humphrey-Murto S, Wood TJ. The OSCE progress test–measuring clinical skill development over residency training. Med Teach. 2016;38(2):168–173. doi: 10.3109/0142159X.2015.1029895.

- 68. Gregory A, Hogg G, Ker J. Innovative teaching in situational awareness. Clin Teach. 2015;12:331–335. doi: 10.1111/tct.12310.

- 69. Scott RB, Dienes Z. The conscious, the unconscious, and familiarity. J Exp Psychol-Learn Mem Cogn. 2008;34(5):1264–1288. doi: 10.1037/a0012943.


Data Availability Statement

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.