Abstract

Objectives

Although speech perception is the gold standard for measuring cochlear implant (CI) users’ performance, speech perception tests often require extensive adaptation to obtain accurate results, particularly after large changes in maps. Spectral ripple tests, which measure spectral resolution, are an alternate measure that has been shown to correlate with speech perception. A modified spectral ripple test, the spectral-temporally modulated ripple test (SMRT), has recently been developed, and the objective of this study is to compare speech perception and performance on the SMRT for a heterogeneous population of unilateral CI users, bilateral CI users, and bimodal users.

Design

Twenty-five CI users (eight using unilateral CIs, nine using bilateral CIs, and eight using a CI and a hearing aid) were tested on the Arizona Biomedical Institute Sentence Test (AzBio) with a +8 dB signal-to-noise ratio, and on the SMRT. All participants were tested with their clinical programs.

Results

There was a significant correlation between SMRT and AzBio performance. After a practice block, an improvement of 1 ripple per octave for SMRT corresponded to an improvement of 12.1 percentage points for AzBio. Additionally, there was no significant difference in slope or intercept between any of the CI populations.

Conclusion

The results indicate that performance on the SMRT correlates with speech recognition in noise when measured across unilateral, bilateral, and bimodal CI populations. These results suggest that SMRT scores are strongly associated with speech recognition in noise ability in experienced CI users. Further studies should focus on increasing both the size and diversity of the tested participants, and on determining whether the SMRT technique can be used for early predictions of long-term speech scores, or for evaluating differences among different stimulation strategies or parameter settings.

Introduction

Originally developed as an aid for lip-reading, cochlear implants (CI) have rapidly progressed to a point where many users have a high level of speech perception and sound recognition (Wilson & Dorman 2007). Improvements in speech recognition in noise are a key goal both clinically and in many research studies. However, such measures often need extended experience to obtain asymptotic performance because they rely on a learned association between a stimulation pattern and a phoneme. This presents a problem: with no immediate measure of how a patient will perform with a particular map, it is difficult to determine how one should adjust the map.

Measures of spectral resolution, such as the traditional spectral ripple test (Henry & Turner 2003) or spectral tilt measures (Winn & Litovsky 2015), which do not rely on such learned associations, offer a solution to this problem. In general, it has been shown that better spectral resolution correlates with better speech recognition in noise for CI users (Drennan et al. 2016; Henry & Turner 2003; Holden et al. 2016; Won et al. 2007; however, see Langner et al. 2017). It has also been shown that simulating broad activation patterns in normal hearing listeners via a vocoder results in deterioration of both spectral resolution and vowel recognition (Litvak et al. 2007). Additionally, spectral resolution tests involve minimal learning (Drennan et al. 2016; Won et al. 2007), indicating the potential use for these tests after remapping in predicting which adjustments may result in optimal speech perception in noise.

Recently, a modified version of the traditional spectral ripple test, the Spectral-temporally Modulated Ripple Test (SMRT; Aronoff & Landsberger 2013), has been used in a number of studies (Vickers et al. 2016; Holden et al. 2016; Aronoff et al. 2016b; Kirby et al. 2015; Zhou 2017). The SMRT uses a spectral ripple with a ripple phase that drifts over time. Each ripple phase has a 5 Hz repetition rate, creating a detectable temporal modulation with a changing phase across the spectrum (see Supplemental Digital Content 1). Holden et al. (2016) previously found that SMRT scores correlate with speech perception in noise for a relatively homogeneous group of users tested with a unilateral CI. Similarly, Zhou (2017) found a correlation between SMRT and speech recognition in noise for users tested with a unilateral CI. However, many CI users have two CIs or a CI and a contralateral hearing aid. SMRT involves frequency-specific temporal modulations that align across ears, and the interaural place mismatches common for bilateral CI users (Aronoff et al. 2016a) may result in a misalignment of the temporal modulations across ears. This could potentially result in interference or spurious cues that could alter the relationship between SMRT and speech scores. For bimodal users, the hearing aid and CI are likely to alter the SMRT signal differently, also potentially resulting in interference or spurious cues when the two ears are combined that could alter the relationship between SMRT and speech scores.

Whether the SMRT will have broad clinical applicability will depend in part on the strength of its association with speech perception in noise for a wide variety of patients. The goal of this study is to explore the relationship between SMRT scores and performance on the Arizona Biomedical Institute sentence test (AzBio) in noise for a group comprising unilateral CI users, bilateral CI users, and bimodal users.

Materials and Methods

Subjects

There were a total of 25 participants. Nine participants were tested with bilateral CIs, eight were tested with unilateral CIs, and eight were tested with a CI in one ear and a hearing aid in the other (see Table 1). In patients tested with a unilateral CI, the contralateral side was not blocked or altered in any way. Participants were all 18 years or older and native English speakers. Participants were chosen based on availability with no consideration or evaluation of previous CI performance. Although the majority of participants had no experience with SMRT, most had experience with AzBio in routine clinical testing. All participants had at least 6 months of CI listening experience.

Table 1.

Participant characteristics.

| Subject | Age | Gender | Hearing Loss Onset | Pre/post lingual onset of hearing loss | Cause | Cochlear implant | Cochlear implant experience | Hearing Aid | SMRT (RPO) | AzBio (% correct) |

|---|---|---|---|---|---|---|---|---|---|---|

| I02 | 63 | Female | 2 y/o | Prelingual | Meningitis | Advanced Bionics (bilateral) | 4 years (left), 7 years (right) | ----- | 4.9 | 53.3 |

| I03 | 72 | Female | Congenital | Prelingual | Unknown | Advanced Bionics (bilateral) | 14 years (left), 9 years (right) | ----- | 2.8 | 6.8 |

| I16 | 51 | Female | 6 mo | Prelingual | Rubella | Advanced Bionics (bilateral) | 2 months (right), 9 years (left) | ----- | 0.5 | 3.3 |

| I26 | 45 | Female | Congenital | Prelingual | Congenital | Advanced Bionics (bilateral) | 2 years (bilateral) | ----- | 8.3 | 76.7 |

| I30 | 26 | Female | Congenital | Prelingual | Congenital | Cochlear (right) | 21 years | None | 3.1 | 21.8 |

| I44 | 54 | Male | Congenital | Prelingual | Congenital | Cochlear (right) | 5 years | Starkey (left) | 4.2 | 76.5 |

| I04 | 58 | Female | 39 y/o | Postlingual | Autoimmune | Advanced Bionics (bilateral) | 2 years (left), 2 years (right) | ----- | 5 | 80.4 |

| I05 | 58 | Male | 5 y/o | Postlingual | Congenital | Advanced Bionics (bilateral) | 13 years (bilateral) | ---- | 1.4 | 8.8 |

| I17 | 74 | Male | 66 y/o | Postlingual | Unknown | Advanced Bionics (right) | 3 years | Audibel (left) | 2.3 | 17.4 |

| I18 | 48 | Male | 20 y/o | Postlingual | Unknown | Cochlear (bilateral) | 3 years (left), 11 years (right) | ----- | 5 | 74.9 |

| I19 | 65 | Male | 44 y/o | Postlingual | Unknown | Med-El (bilateral) | 8 months (bilateral) | ----- | 1.6 | 3.7 |

| I20 | 59 | Female | 53 y/o | Postlingual | Autoimmune | Cochlear (left) | 6 years | None | 4.8 | 47.1 |

| I23 | 50 | Female | 20 y/o | Postlingual | Ototoxicity | Cochlear (right) | 13 years | * Unknown | 1.4 | 17.8 |

| I24 | 85 | Male | 65 y/o | Postlingual | Noise Exposure | Advanced Bionics (right) | 7 years | * Phonak (left) | 0.5 | 1.1 |

| I29 | 65 | Female | 63 y/o | Postlingual | Noise Exposure | Cochlear (left) | 6 months | ReSound (right) | 5.6 | 44.6 |

| I31 | 55 | Male | 37 y/o | Postlingual | Trauma | Cochlear (bilateral) | 16 years (left), 2 years (right) | ----- | 2.5 | 11.2 |

| I32 | 64 | Female | 39 y/o | Postlingual | Familial | Cochlear (left) | 2 years | None | 5.3 | 83.3 |

| I33 | 69 | Male | 44 y/o | Postlingual | Familial | Med-El (left) | 2 years | Oticon (right) | 3 | 38.1 |

| I45 | 68 | Female | 18 y/o | Postlingual | Genetic/progressive | Advanced Bionics (left) | 5 years | Phonak (right) | 6.3 | 77 |

| I46 | 81 | 2/1/17 | ~75 y/o | Postlingual | Chemotherapy | Advanced Bionics (left) | 1 year | Oticon (right) | 2.3 | 7.9 |

| I47 | 70 | Female | 30 y/o | Postlingual | Familial | Cochlear (right) | 2 years | Phonak (left) | 2 | 44.2 |

| I22 | 50 | Female | Unknown | Unclear | Chronic otitis | Cochlear (left) | 1 year | None | 1.6 | 20.2 |

| I25 | 18 | Female | Childhood | Unclear | Unknown | Med-El (right) | 5 years | * Oticon (left) | 0.3 | 0 |

| I27 | 66 | Male | Childhood | Unclear | Meningitis/otitis | Cochlear (right) | 9 years | None | 2.1 | 21.6 |

| I48 | 67 | Female | Birth (left), 59 y/o | Mixed | Congenital/ototoxicity | Advanced Bionics (left) | 8 years | Phonak (right) | 1.6 | 49.8 |

An asterisk (*) in the Hearing Aid column indicates that the participant was tested without the hearing aid.

Equipment

The stimuli were presented using either an Edirol UA-25 or MOTU 24I/O soundcard, amplified by a Behringer A500 Reference Amplifier, and presented in the sound field via a Pyle PCB5BK speaker placed 1 meter from the participant’s head at ear-level. The same soundcard was always used for both the SMRT and AzBio testing for a given participant.

Stimuli and Procedures

Participants underwent testing with both AzBio and SMRT. AzBio consists of twenty-nine sentence lists, each with twenty sentences, ten from two female speakers and ten from two male speakers. The sentences range from four to twelve words and are spoken in a conversational style in a background of continuous ten-talker babble. For this study, the eight lists included in the Minimum Speech Test Battery were used. The noise was presented at 60 dB (A) and a +8 dB signal-to-noise ratio was used. Participants were asked to repeat the sentences to the best of their ability. The total number of words correct for all sentences was counted and divided by the total number of words in each list. Each subject was tested with two separate AzBio sentence lists and the sentence list selection was randomized across participants. No AzBio training in quiet or noise was provided to participants, although many of them had extensive experience with AzBio given its prevalence in clinical assessment.

SMRT consists of three spectrally rippled (i.e., amplitude modulated in the frequency domain) stimuli, two reference stimuli and one target stimulus. Each stimulus is generated with a 44.1 kHz sampling rate and is 500 ms long with 100 ms onset and offset linear ramps. The stimuli are composed of 202 pure tones with random starting phases, spaced every 1/33.33 octaves (from 100–6400 Hz). The amplitudes of the pure tones are temporally modulated by a half-wave rectified sinewave with a starting phase that differs systematically across pure tones. The temporal modulation of the stimulus is controlled by the frequency of that sinewave. As is standard for SMRT, the sinewaves used to add the temporal modulation had a frequency of 5 Hz. The shift in the starting phase of the modulating sinewaves across frequencies causes a drift in the ripples in the frequency domain (see Supplemental Digital Content 1).
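The stimulus description above can be sketched in a few lines of Python. This is only an illustrative approximation, not the SMRT software itself: the function name, the rule that the modulator phase advances by 2π × RPO per octave, the peak normalization, and the seeded random carrier phases are all assumptions made for the sketch, while the tone count, spacing, sampling rate, duration, ramps, and 5 Hz modulator follow the text.

```python
import math
import random

def smrt_like_stimulus(rpo, fs=44100, dur=0.5, ramp=0.1, n_tones=202,
                       tones_per_octave=33.33, f0=100.0, mod_rate=5.0, seed=0):
    """Return one SMRT-like stimulus as a list of samples in [-1, 1]."""
    rng = random.Random(seed)
    n = int(round(dur * fs))
    ramp_n = max(1, int(round(ramp * fs)))
    out = [0.0] * n
    for i in range(n_tones):
        freq = f0 * 2.0 ** (i / tones_per_octave)          # log-spaced pure tones
        carrier_phase = rng.uniform(0.0, 2.0 * math.pi)    # random starting phase
        # Assumption: modulator phase advances by 2*pi*rpo per octave.
        mod_phase = 2.0 * math.pi * rpo * i / tones_per_octave
        for k in range(n):
            t = k / fs
            # Half-wave rectified 5 Hz sinewave sets the tone's amplitude.
            env = max(0.0, math.sin(2.0 * math.pi * mod_rate * t + mod_phase))
            out[k] += env * math.sin(2.0 * math.pi * freq * t + carrier_phase)
    for k in range(n):
        # Linear onset and offset ramps.
        out[k] *= min(1.0, k / ramp_n, (n - 1 - k) / ramp_n)
    peak = max(abs(v) for v in out)
    return [v / peak for v in out] if peak > 0 else out

# Short excerpt for a quick check; the defaults give the full 500 ms stimulus.
target = smrt_like_stimulus(rpo=0.5, dur=0.05, ramp=0.01)
```

The pure-Python loops keep the sketch dependency-free but are slow at the full 500 ms duration; a vectorized array library would be the natural choice in practice.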

The reference stimuli have ripple densities of 20 ripples per octave (RPO). The target stimulus initially has a ripple density of 0.5 RPO that is varied using a 1 up/1 down adaptive procedure with a step size of 0.2 RPO. Subjects are asked to “choose which of the three noises sounds different” and to use a keyboard to indicate whether it was the first, second, or third stimulus. The test is completed after ten reversals. Spectral-ripple thresholds (in RPOs) are calculated from the average of the last six reversals. For this study, stimuli were presented at a fixed intensity of 60 dB (A). Each participant was tested twice with the SMRT. No training was provided for the SMRT.
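The adaptive track described above can be sketched as follows. The listener is abstracted as a hypothetical `respond(rpo)` callback that returns `True` when the target is correctly identified; the lower bound on ripple density and the trial cap are assumptions added so the sketch always terminates, and they are not taken from the SMRT software.

```python
def run_staircase(respond, start=0.5, step=0.2, n_reversals=10, n_average=6,
                  min_rpo=0.1, max_trials=1000):
    """1-up/1-down track: harder after a correct response, easier after an error."""
    rpo = start
    last_direction = 0
    reversals = []
    for _ in range(max_trials):
        direction = 1 if respond(rpo) else -1
        if last_direction != 0 and direction != last_direction:
            reversals.append(rpo)          # track changed direction at this density
        last_direction = direction
        if len(reversals) == n_reversals:
            break
        # Rounding avoids floating-point drift; min_rpo is a hypothetical floor.
        rpo = round(max(min_rpo, rpo + direction * step), 10)
    tail = reversals[-n_average:]
    threshold = sum(tail) / len(tail) if tail else None
    return threshold, reversals

# Idealized listener who is correct only below 3 RPO (illustrative only).
threshold, reversals = run_staircase(lambda rpo: rpo < 3.0)
```

With a real listener the responses near threshold are probabilistic, so the reversal values scatter more widely than in this idealized case.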

This study was approved by the University of Illinois at Urbana-Champaign Institutional Review Board.

Results

Robust statistical techniques were used to analyze the data (see the Appendix in the Supplemental Digital Content in Aronoff et al. 2016b; re-printed with permission as Supplemental Digital Content 2), including bootstrap analyses, least trimmed squares regressions and trimmed means. Overall, these techniques serve to minimize the problems that outliers and non-normality cause for traditional statistical techniques without resorting to non-parametric methods which ignore important magnitude information. Trimmed means retain some characteristics of means and some characteristics of medians. With medians, the upper and lower approximately 50% of the data are treated as ordinal values. The mean of the remaining interval data is then calculated. With the 20% trimmed means used here, the upper and lower 20% of the data are treated as ordinal values and the mean of the remaining interval data is calculated. Least trimmed squares regressions are similar to least squares regressions, except that they minimize a subset of the errors to reduce the effects of outliers.
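For readers less familiar with trimmed means, a minimal sketch is given here. The function name is illustrative, and the convention of dropping the floor of 20% of the sample from each end is one common choice, assumed rather than taken from the analysis code used in the study.

```python
import math

def trimmed_mean(values, proportion=0.2):
    """Mean of the data after dropping the lowest and highest `proportion`."""
    ordered = sorted(values)
    g = math.floor(proportion * len(ordered))   # observations trimmed per tail
    kept = ordered[g:len(ordered) - g]
    return sum(kept) / len(kept)

# One extreme value barely matters once the tails are trimmed.
print(trimmed_mean([1, 2, 3, 4, 100]))   # 3.0 (the ordinary mean is 22.0)
```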

To determine if performance improved from the first to second SMRT test, a percentile bootstrap pairwise comparison using 20% trimmed means was conducted comparing the first and second SMRT test. The results indicated a small but significant improvement for the second SMRT test (95% confidence interval: 0.06 to 0.93 RPO, 20% trimmed mean: 0.5 RPO). This indicates that a practice test is needed. As such, comparisons between SMRT and AzBio excluded the results from the first (i.e., practice) SMRT test.
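A percentile bootstrap on paired scores can be sketched as below. This is an assumption-laden illustration: it resamples the per-participant difference scores, uses 599 resamples, and takes symmetric order statistics for the interval, none of which is confirmed as the exact procedure used for this comparison. The example scores are made up and are not the study's data.

```python
import math
import random

def trimmed_mean(values, proportion=0.2):
    ordered = sorted(values)
    g = math.floor(proportion * len(ordered))
    kept = ordered[g:len(ordered) - g]
    return sum(kept) / len(kept)

def paired_bootstrap_ci(first, second, n_boot=599, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the trimmed mean of (second - first)."""
    rng = random.Random(seed)
    diffs = [b - a for a, b in zip(first, second)]
    stats = sorted(
        trimmed_mean([rng.choice(diffs) for _ in diffs]) for _ in range(n_boot)
    )
    k = int(alpha / 2 * n_boot)            # resamples cut from each tail
    return stats[k], stats[n_boot - 1 - k]

# Made-up thresholds (RPO) for two test runs, for illustration only.
run1 = [1.2, 2.8, 0.9, 4.1, 3.3, 2.0, 5.2, 1.7]
run2 = [1.9, 3.0, 1.6, 4.4, 4.1, 2.2, 5.5, 2.6]
low, high = paired_bootstrap_ci(run1, run2)
```

An interval that excludes zero would be read as a significant change between the two runs.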

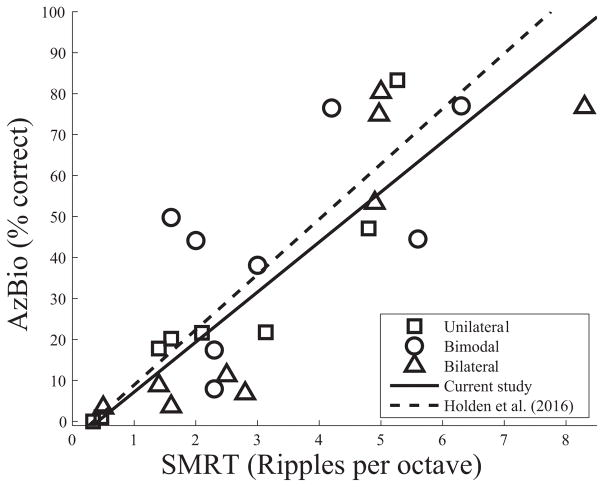

A bootstrap Pearson correlation with outlier correction based on the minimum generalized variance outlier detection method was calculated. There was a significant correlation between the SMRT scores and the AzBio scores (r=0.83, 95% confidence interval: 0.63 to 0.94; see Figure 1). The one outlier detected by the outlier detection method was the bilateral CI user with the highest SMRT score. A least trimmed squares regression was used to characterize the relationship between SMRT and AzBio scores. This indicated that an increase of one RPO on SMRT corresponds to an increase of 12.1 percentage points on AzBio (slope=12.1, intercept = −5).
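As a worked illustration of the fitted line reported above, consider a hypothetical listener with an SMRT threshold of 3 RPO. The fit describes a group-level trend, so the value is an expected score along the regression line rather than a prediction for any individual:

$$
\widehat{\text{AzBio}} = 12.1 \times \text{SMRT} - 5 = 12.1 \times 3 - 5 = 31.3\%
$$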

Figure 1.

SMRT scores significantly correlate with AzBio in noise scores. Each data point represents one participant. The solid line reflects a linear fit of the data from the current study. The dashed line reflects a linear fit of the data from Holden et al. (2016).

Bootstrap comparisons of least trimmed squares regressions were used to compare the relationship between SMRT and AzBio across groups. For these analyses, two groups were compared at a time. For example, a bootstrap sample was generated for both the bilateral and the bimodal groups and a least trimmed squares regression analysis was conducted separately on each group. The slope for the bilateral group was subtracted from the slope for the bimodal group, creating a difference score for the slopes. The intercept for the bilateral group was also subtracted from the intercept for the bimodal group, creating a difference score for the intercepts. This process was repeated 599 times and the resulting difference scores were ordered by magnitude and the 95% confidence interval consisted of the 14th and 585th largest values. This same process was repeated comparing the bilateral and unilateral groups as well as the unilateral and bimodal groups. The results indicated that there was no significant difference between the slopes and intercepts for any of the groups (bilateral vs. bimodal groups: 95% confidence interval for slope = −56 to 27, 95% confidence interval for the intercept= −59 to 156; bilateral vs. unilateral groups: 95% confidence interval for slope = −17 to 16, 95% confidence interval for the intercept= −25 to 53; unilateral vs. bimodal groups: 95% confidence interval for slope = −24 to 32, 95% confidence interval for the intercept= −152 to 56).
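The group comparison procedure can be sketched as follows. To keep the example short and dependency-free, ordinary least squares is swapped in for the least trimmed squares regression used in the study, so this illustrates the bootstrap logic only. The function names are illustrative, and reading "14th and 585th" as ranks in ascending order is an assumption.

```python
import random

def ols_fit(x, y):
    """Ordinary least squares slope and intercept (stand-in for LTS)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx

def resample(x, y, rng):
    """Draw participants with replacement, keeping (x, y) pairs together."""
    while True:
        idx = [rng.randrange(len(x)) for _ in x]
        xs = [x[i] for i in idx]
        if max(xs) > min(xs):              # a line needs two distinct x values
            return xs, [y[i] for i in idx]

def bootstrap_diff_ci(group1, group2, n_boot=599, lo_rank=14, hi_rank=585, seed=0):
    """CIs for (group2 - group1) differences in slope and intercept."""
    rng = random.Random(seed)
    slope_d, icpt_d = [], []
    for _ in range(n_boot):
        s1, i1 = ols_fit(*resample(*group1, rng))
        s2, i2 = ols_fit(*resample(*group2, rng))
        slope_d.append(s2 - s1)
        icpt_d.append(i2 - i1)
    slope_d.sort()
    icpt_d.sort()
    return ((slope_d[lo_rank - 1], slope_d[hi_rank - 1]),
            (icpt_d[lo_rank - 1], icpt_d[hi_rank - 1]))
```

Each group is passed as a pair of lists, `(smrt_scores, azbio_scores)`. A confidence interval that includes zero indicates no significant difference between the two groups for that parameter.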

The effects of participant characteristics were also analyzed. Although there were only a small number of participants with a prelingual onset of hearing loss, those individuals received similar scores as those with a postlingual onset of hearing loss (see Table 1). Additionally, the slope and intercept for the relationship between SMRT and AzBio scores were similar for the two groups (prelingual: slope = 11.1, intercept = −5.2; postlingual: slope = 12.8, intercept= −7.8). There was no significant correlation between the age of onset of hearing loss or length of CI use for either ear with either SMRT or AzBio scores.

Discussion

The results indicated that SMRT scores are correlated with speech perception in noise scores even with a diverse group of participants, including those tested with unilateral CIs, bilateral CIs, and a CI combined with a hearing aid. These results are consistent with previous work showing that spectral resolution, as determined by spectral-ripple tasks, strongly correlates with speech perception in noise and in quiet (Holden et al. 2016; Litvak et al. 2007; Won et al. 2007; Zhou 2017) as well as the results from biologically inspired models (Chi et al. 1999).

Our results were similar to those found in Holden et al. (2016). Holden et al. (2016) included the same testing conditions as the current study but a much more homogeneous group of participants, all tested with a unilateral CI. As with the results from the current study, they also found a significant correlation between SMRT and AzBio in noise (+8 dB SNR in both studies). The range of SMRT and AzBio scores was largely similar across the two studies, although the range of SMRT scores was slightly larger in the current study. Holden et al. (2016) found a range of 1.5 to 6.2 RPO. In the current study, the range was 0.3 to 8.3 RPO (although excluding the outlier identified by the minimum generalized variance outlier detection method, it was more comparable, ranging from 0.3 to 6.3 RPO). Additionally, participants’ AzBio scores spanned nearly the entire range in both studies (0 to 83% in the current study compared to 0 to 96% in Holden et al., 2016). However, both our mean SMRT scores (3.1 RPO) and mean AzBio scores (36%) were lower than those in Holden et al. (2016) (3.8 RPO and 52%, respectively), suggesting, on average, better performers in the Holden et al. (2016) study.

Importantly, while Holden et al. took steps to limit the acoustic hearing for their participants, the current study specifically included a group of bimodal patients. Given that an aim of this study is to provide evidence for the clinical utility of the SMRT, a broad range of patients, similar to what would be found in a typical clinic, were included. Notably, the linear fit for the current data and that from Holden et al. (2016) is strikingly similar, as shown in Figure 1. This, coupled with the lack of a significant difference between the slopes and intercepts between any of the groups, suggests that the relationship between SMRT and AzBio is similar across different CI populations. Further research is needed to determine if a similar relationship would be found if other hearing impaired populations are tested.

Although the results from this study and others suggest that measures of spectral resolution are significantly correlated with performance on speech tests, the correlation only accounts for a portion of the variability in performance on speech tests. This is likely because a number of other factors that affect speech understanding, such as implant experience and age of implantation (e.g., Lazard et al. 2012; Eapen et al. 2009), may not have a comparable effect on spectral resolution. However, as spectral resolution is improved by adding additional channels, both vocoder and CI studies show an improvement in speech perception performance (Shannon et al. 1995; Fishman et al. 1997; Friesen et al. 2001). It is possible that the correlation between spectral resolution and speech perception may be caused by spectral resolution affecting the upper limit of speech perception performance.

Potential limitations of the SMRT

One limitation of the SMRT found in this study is that, despite the relatively simple nature of the task for SMRT, the significant improvement from the first to the second SMRT test suggests that, at minimum, a practice test is needed. However, it is possible that learning continues over additional tests. This suggests that the test-retest reliability for the SMRT needs to be further examined.

Another possible limitation of the SMRT is that it may have an upper range that could limit the utility of the test for extremely high performing participants. Excluding the one outlier, both the current study and Holden et al. (2016) had a maximum SMRT score of approximately 6 RPO. Although this may have reflected limitations in the samples in each study, the similarity across the two studies suggests that this may reflect a true limitation of the SMRT with CI users. When normal hearing listeners were tested (without vocoders) in Aronoff & Landsberger (2013), scores were typically above 8 RPO, suggesting that the 6 RPO upper limit may reflect limitations induced by the CI processor, such as the bandwidth of the frequency bands.

The correlation between SMRT and AzBio is also likely to be affected by a number of factors not specifically manipulated in this study. For example, there may be effects of factors such as pre/postlingual deafness, pre/postlingual implantation, native language, etc. Although the similarities between the results found here and those in Holden et al. (2016) suggest that the correlation is likely to be found for a wide range of participants, many of these factors were not manipulated in either study.

Possible cues used for SMRT by CI users

CI processors will invariably distort the cues in the acoustic SMRT stimuli. However, the temporal cue caused by the 5 Hz modulation that changes in phase across frequencies would be expected to be at least partially retained after processing. Because multiple ripples that fall within one filter will be added together and the temporal modulation for adjacent ripples is slightly offset in time, the expectation is that the greater the ripple density, the greater the temporal smearing of the amplitude modulation within a frequency band. Similarly, the more channel interaction, resulting in signals from multiple filters adding together at the neural level, the more temporal smearing will occur. With increasing temporal smearing, the modulation depth of the amplitude modulation is reduced, increasing the difficulty of detecting it.
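The predicted effect of ripple density on within-band modulation can be illustrated with a simplified sketch. It averages the 5 Hz half-wave rectified modulators of the stimulus components falling in a single analysis band (the 1597 to 1937 Hz band is used as an example) and ignores carrier interactions and all processor details. The phase rule (2π × RPO per octave) and the peak-to-trough measure are assumptions made for the sketch.

```python
import math

def band_envelope_swing(rpo, f_lo=1597.0, f_hi=1937.0, mod_rate=5.0,
                        tones_per_octave=33.33, f0=100.0, n_tones=202):
    """Peak-to-trough swing of the averaged modulators within one band."""
    idx = [i for i in range(n_tones)
           if f_lo <= f0 * 2.0 ** (i / tones_per_octave) < f_hi]
    summed = []
    for k in range(400):                       # 0.4 s in 1 ms steps (two cycles)
        t = k / 1000.0
        total = sum(
            max(0.0, math.sin(2.0 * math.pi * mod_rate * t
                              + 2.0 * math.pi * rpo * i / tones_per_octave))
            for i in idx
        )
        summed.append(total / len(idx))
    return max(summed) - min(summed)

for density in (0.5, 2.0, 8.0):
    print(density, round(band_envelope_swing(density), 3))
```

In this simplified model the swing shrinks as the ripple density rises, because the modulators within the band are increasingly out of phase with one another.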

To investigate if this occurs with CI processors, electrodograms were recorded using a 16 channel map programmed on an Advanced Bionics Harmony processor using Bionic Ear Programming System (BEPS+; Advanced Bionics, Valencia, CA). The map used a continuous interleaved sampling (CIS) processing strategy without current steering, with a phase duration of 48 μs and a pulse rate of 657 pulses per second. The Standard Extended Low clinical frequency allocation was used, which ranged from 238 to 8054 Hz (see Table 2 for the corner frequencies).

Table 2.

Corner frequencies for maps used to generate electrodograms.

| Channel | Lower bound (Hz) | Upper bound (Hz) |

|---|---|---|

| 1 | 238 | 442 |

| 2 | 442 | 510 |

| 3 | 510 | 646 |

| 4 | 646 | 714 |

| 5 | 714 | 850 |

| 6 | 850 | 986 |

| 7 | 986 | 1189 |

| 8 | 1189 | 1393 |

| 9 | 1393 | 1597 |

| 10 | 1597 | 1937 |

| 11 | 1937 | 2277 |

| 12 | 2277 | 2617 |

| 13 | 2617 | 3093 |

| 14 | 3093 | 3704 |

| 15 | 3704 | 4316 |

| 16 | 4316 | 8054 |

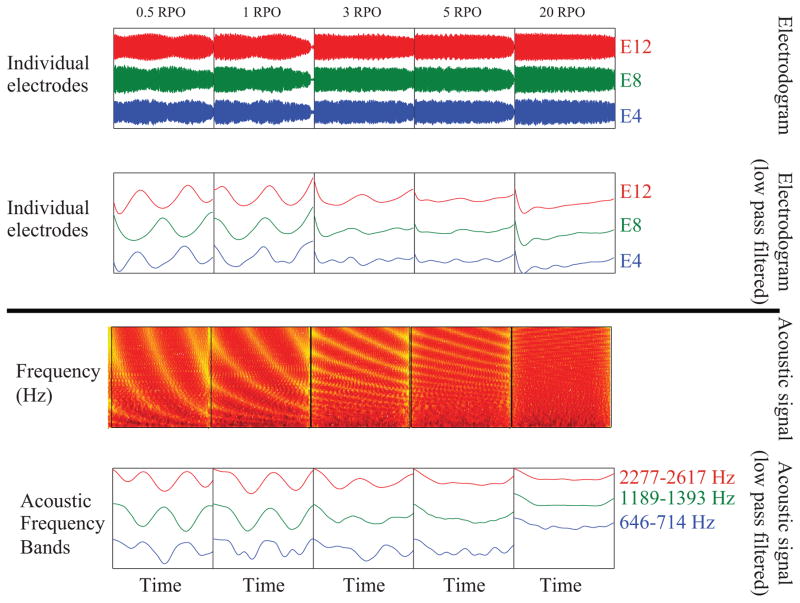

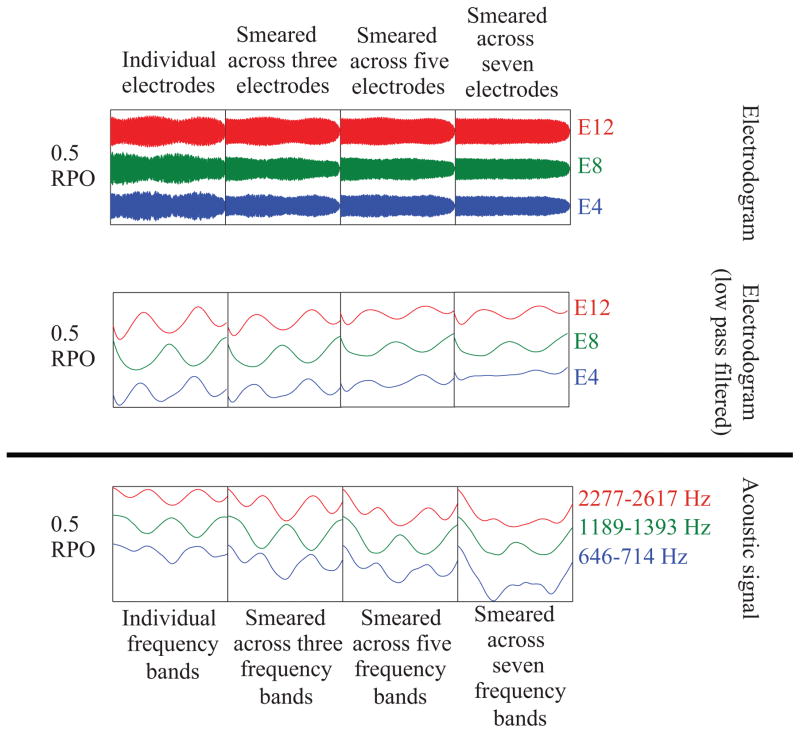

Because the amplitude modulation rate for each ripple is 5 Hz, versions of the electrodograms were also generated that were low-pass filtered at 10 Hz using a 4th order Butterworth filter to highlight the temporal modulation in different channels. The top portion of Figure 2 shows the effect of increasing the ripple density. With a ripple density of 0.5 RPO, a clear amplitude modulation can be seen, shifted in time across channels. As predicted, increasing the ripple density decreased the amplitude modulation depth within each channel. The top portion of Figure 3 shows the effect of simulated increased channel interaction, by averaging the amplitude of the signal from each electrode over one, three, five, or seven electrodes. As predicted, increased simulated channel interaction also results in a reduction of the amplitude modulation depth. This is consistent with previous vocoder data indicating that increasing spectral smearing, by decreasing the number of vocoder channels, results in poorer SMRT scores (Aronoff & Landsberger 2013). Electrodograms for a wide range of SMRT stimuli are available in Supplemental Digital Content 1 and the raw data underlying the electrodograms are available in Supplemental Digital Content 3.
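The channel-averaging step used to simulate channel interaction can be sketched on toy envelopes. The envelopes here are idealized 5 Hz modulations with a hypothetical phase shift of π/4 between adjacent channels, not recorded electrodograms, and the handling of edge channels (averaging over whichever neighbours exist) is an assumption.

```python
import math

def smear_channels(envelopes, width):
    """Average each channel's envelope over `width` neighbouring channels."""
    half = width // 2
    n_ch = len(envelopes)
    smeared = []
    for ch in range(n_ch):
        group = envelopes[max(0, ch - half):min(n_ch, ch + half + 1)]
        smeared.append([sum(col) / len(group) for col in zip(*group)])
    return smeared

def modulation_depth(env):
    hi, lo = max(env), min(env)
    return (hi - lo) / (hi + lo) if hi + lo > 0 else 0.0

# Toy 16-channel envelopes: 5 Hz modulation, phase shifted across channels.
times = [k / 1000.0 for k in range(400)]
toy = [[1.0 + math.sin(2.0 * math.pi * 5.0 * t - ch * math.pi / 4) for t in times]
       for ch in range(16)]
depths = {w: modulation_depth(smear_channels(toy, w)[8]) for w in (1, 3, 5, 7)}
```

In this toy case the modulation depth of a middle channel falls steadily as the averaging window widens from one to seven channels, mirroring the trend described for Figure 3.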

Figure 2.

Electrodograms (top panel) and acoustic signals (bottom panel) for SMRT stimuli demonstrating the effects of increased ripple density on the amplitude modulation in different frequency bands.

Figure 3.

Electrodograms (top panel) and acoustic signals (bottom panel) for SMRT stimuli demonstrating the effects of increased spectral smearing on the amplitude modulation in different frequency bands. For the electrodograms, channels were slightly shifted in time to align pulses across channels so they could be added together.

For bimodal users, the properties of the acoustic signal are also critical. The bottom portions of Figures 2 and 3 show acoustic SMRT stimuli. For comparison with the electrodograms, versions of the stimuli were also generated that were band-pass filtered with a 4th order Butterworth filter using the corner frequencies from Table 2. The signals were then low-pass filtered at 10 Hz with a 4th order Butterworth filter to examine the effects of increasing the RPO of the stimuli or increasing spectral smearing. Both the low-pass filtered electrodograms and the low-pass filtered acoustic signals show similar patterns. The similarity likely results from the preservation of envelope information in CIS-based strategies. This suggests that amplitude modulation detection may be key for both the CI and HA ear for the bimodal participants.

Given the reliance on detecting amplitude modulations for the SMRT, it is possible that, in addition to differences in spectral resolution, differences in amplitude modulation sensitivity could also affect SMRT performance. However, such differences are also likely to influence speech perception. Single-channel amplitude modulation detection has been found to correlate with speech perception (Fu 2002; Luo et al. 2008). Additionally, removing channels with poor amplitude modulation sensitivity can improve speech perception (Zhou & Pfingst 2012).

Although both spectral resolution and temporal amplitude modulation sensitivity could potentially account for the correlation with speech recognition (Friesen et al. 2001; Won et al. 2007; Fu 2002; Luo et al. 2008; Zhou & Pfingst 2012), it is not possible to distinguish the contributions of each based on the current experiment. Future studies will need to independently manipulate these factors to determine how they each contribute. Although the role of spectral resolution and temporal amplitude modulation sensitivity may differ across participants, the results of the current study suggest that, if they do, their effects are similar on both SMRT and speech recognition abilities.

Differences between SMRT and traditional ripple tests

Although both traditional ripple discrimination tests and SMRT scores are expressed in ripples per octave, numbers from the two tests are not interchangeable. Studies using traditional ripple tests with CI users have typically found average scores of less than 3 RPO (Henry et al. 2005; Drennan et al. 2014; Anderson et al. 2011; Won et al. 2007). In contrast, studies using SMRT with CI users, including the current study, have typically found average scores greater than 3 RPO (Holden et al. 2016; Zhou 2017). These differences in scores likely reflect differences in both the stimuli and the tasks. Although both the traditional ripple test and the SMRT have amplitude modulations in the frequency domain, the SMRT also provides a temporal cue that varies across the spectrum and has a low modulation rate. These temporal modulations are not used in the traditional ripple test. In terms of task, the traditional ripple discrimination test typically asks participants to distinguish two ripples that differ in phase. In contrast, the SMRT asks participants to distinguish a stimulus with few RPOs, where the within-channel temporal modulation is likely audible, from a stimulus with a high ripple density, where the within-channel temporal modulation is unlikely to be preserved or audible. These differences may explain the different ranges of scores in the two tasks and suggest that the scores are unlikely to be interchangeable.

Conclusion

The results indicated that SMRT scores are strongly associated with speech recognition in noise ability in a diverse group of experienced CI users, including those using unilateral CIs, those with bilateral CIs, and those using a CI combined with a hearing aid. This increases the likelihood that SMRT may become a useful tool to evaluate map changes in the clinic, but further studies will be needed to provide a definitive answer.

Acknowledgments

Source of Funding:

Justin Aronoff is currently receiving NIH/NIDCD grant RO3-DC013380.

Portions of this work were presented at the 14th International Conference on Cochlear Implants 2016. This work was supported by NIH/NIDCD grant RO3-DC013380. We would like to thank Laura Holden for providing the data from Holden et al. 2016.

Footnotes

Conflicts of Interest

We declare no conflicts of interest.

References

- Anderson ES, Nelson DA, Kreft H, et al. Comparing spatial tuning curves, spectral ripple resolution, and speech perception in cochlear implant users. J Acoust Soc Am. 2011;130:364–375. doi: 10.1121/1.3589255.

- Aronoff JM, Landsberger DM. The development of a modified spectral ripple test. J Acoust Soc Am. 2013;134:EL217–EL222. doi: 10.1121/1.4813802.

- Aronoff JM, Padilla M, Stelmach J, et al. Clinically Paired Electrodes Are Often Not Perceived as Pitch Matched. Trends Hear. 2016a;20. doi: 10.1177/2331216516668302.

- Aronoff JM, Stelmach J, Padilla M, et al. Interleaved Processors Improve Cochlear Implant Patients’ Spectral Resolution. Ear Hear. 2016b;37:e85–e90. doi: 10.1097/AUD.0000000000000249.

- Chi T, Gao Y, Guyton MC, et al. Spectro-temporal modulation transfer functions and speech intelligibility. J Acoust Soc Am. 1999;106:2719–2732. doi: 10.1121/1.428100.

- Drennan WR, Anderson ES, Won JH, et al. Validation of a clinical assessment of spectral-ripple resolution for cochlear implant users. Ear Hear. 2014;35:e92–e98. doi: 10.1097/AUD.0000000000000009.

- Drennan WR, Won JH, Timme AO, et al. Nonlinguistic Outcome Measures in Adult Cochlear Implant Users Over the First Year of Implantation. Ear Hear. 2016;37:354–364. doi: 10.1097/AUD.0000000000000261.

- Eapen RJ, Buss E, Adunka MC, et al. Hearing-in-noise benefits after bilateral simultaneous cochlear implantation continue to improve 4 years after implantation. Otol Neurotol. 2009;30:153–159. doi: 10.1097/mao.0b013e3181925025.

- Fishman KE, Shannon RV, Slattery WH. Speech recognition as a function of the number of electrodes used in the SPEAK cochlear implant speech processor. J Speech Lang Hear Res. 1997;40:1201–1215. doi: 10.1044/jslhr.4005.1201.

- Friesen LM, Shannon RV, Baskent D, et al. Speech recognition in noise as a function of the number of spectral channels: comparison of acoustic hearing and cochlear implants. J Acoust Soc Am. 2001;110:1150–1163. doi: 10.1121/1.1381538.

- Fu QJ. Temporal processing and speech recognition in cochlear implant users. Neuroreport. 2002;13:1635–1639. doi: 10.1097/00001756-200209160-00013.

- Henry BA, Turner CW. The resolution of complex spectral patterns by cochlear implant and normal-hearing listeners. J Acoust Soc Am. 2003;113:2861–2873. doi: 10.1121/1.1561900.

- Henry BA, Turner CW, Behrens A. Spectral peak resolution and speech recognition in quiet: Normal hearing, hearing impaired, and cochlear implant listeners. J Acoust Soc Am. 2005;118:1111–1121. doi: 10.1121/1.1944567.

- Holden LK, Firszt JB, Reeder RM, et al. Factors Affecting Outcomes in Cochlear Implant Recipients Implanted With a Perimodiolar Electrode Array Located in Scala Tympani. Otol Neurotol. 2016;37:1662–1668. doi: 10.1097/MAO.0000000000001241.

- Kirby BJ, Browning JM, Brennan MA, et al. Spectro-temporal modulation detection in children. J Acoust Soc Am. 2015;138:EL465–EL468. doi: 10.1121/1.4935081.

- Langner F, Saoji AA, Büchner A, et al. Adding simultaneous stimulating channels to reduce power consumption in cochlear implants. Hear Res. 2017;345:96–107. doi: 10.1016/j.heares.2017.01.010.

- Lazard DS, Vincent C, Venail F, et al. Pre-, Per- and Postoperative Factors Affecting Performance of Postlinguistically Deaf Adults Using Cochlear Implants: A New Conceptual Model over Time. PLoS One. 2012;7:1–11. doi: 10.1371/journal.pone.0048739.

- Litvak LM, Spahr AJ, Saoji AA, et al. Relationship between perception of spectral ripple and speech recognition in cochlear implant and vocoder listeners. J Acoust Soc Am. 2007;122:982–991. doi: 10.1121/1.2749413.

- Luo X, Fu QJ, Wei CG, et al. Speech recognition and temporal amplitude modulation processing by Mandarin-speaking cochlear implant users. Ear Hear. 2008;29:957–970. doi: 10.1097/AUD.0b013e3181888f61.

- Shannon RV, Zeng FG, Kamath V, et al. Speech recognition with primarily temporal cues. Science. 1995;270:303–304. doi: 10.1126/science.270.5234.303.

- Vickers D, Degun A, Canas A, et al. Deactivating cochlear implant electrodes based on pitch information for users of the ACE strategy. Adv Exp Med Biol. 2016;894:115–123. doi: 10.1007/978-3-319-25474-6_13.

- Wilson BS, Dorman MF. The surprising performance of present-day cochlear implants. IEEE Trans Biomed Eng. 2007;54:969–972. doi: 10.1109/TBME.2007.893505.

- Winn MB, Litovsky RY. Using speech sounds to test functional spectral resolution in listeners with cochlear implants. J Acoust Soc Am. 2015;137:1430–1442. doi: 10.1121/1.4908308.

- Won JH, Drennan WR, Rubinstein JT. Spectral-Ripple Resolution Correlates with Speech Reception in Noise in Cochlear Implant Users. J Assoc Res Otolaryngol. 2007;8:384–392. doi: 10.1007/s10162-007-0085-8.

- Zhou N. Deactivating stimulation sites based on low-rate thresholds improves spectral ripple and speech reception thresholds in cochlear implant users. J Acoust Soc Am. 2017;141:EL243–EL248. doi: 10.1121/1.4977235.

- Zhou N, Pfingst BE. Psychophysically based site selection coupled with dichotic stimulation improves speech recognition in noise with bilateral cochlear implants. J Acoust Soc Am. 2012;132:994–1008. doi: 10.1121/1.4730907.
