Abstract

Background:

Decision analysis—a systematic approach to solving complex problems—offers tools and frameworks to support decision making that are increasingly being applied to environmental challenges. Alternatives analysis is a method used in regulation and product design to identify, compare, and evaluate the safety and viability of potential substitutes for hazardous chemicals.

Objectives:

We assessed whether decision science may assist the alternatives analysis decision maker in comparing alternatives across a range of metrics.

Methods:

A workshop was convened that included representatives from government, academia, business, and civil society, as well as experts in toxicology, decision science, alternatives assessment, engineering, and law and policy. Participants were divided into two groups and were prompted with targeted questions. Throughout the workshop, the groups periodically came together in plenary sessions to reflect on each other’s findings.

Results:

We concluded that further incorporation of decision science into alternatives analysis would advance the ability of companies and regulators to select alternatives to harmful ingredients and would also advance the science of decision analysis.

Conclusions:

We advance four recommendations: a) engaging in the systematic development and evaluation of decision approaches and tools; b) using case studies to advance the integration of decision analysis into alternatives analysis; c) supporting transdisciplinary research; and d) supporting education and outreach efforts. https://doi.org/10.1289/EHP483

Introduction

Policy makers are faced with choices among alternative courses of action on a regular basis. This is particularly true in the environmental arena. For example, air quality regulators must identify the best available control technologies from a suite of options. In the federal program for remediation of contaminated sites, government project managers must propose a clean-up method from a set of feasible alternatives based on nine selection criteria (U.S. EPA 1990). Rule makers in the Occupational Safety and Health Administration (OSHA) compare a variety of engineering controls and work practices in light of technical feasibility, economic impact, and risk reduction to establish permissible exposure limits (Malloy 2014). At present, as we describe below, some agencies must identify safer, viable alternatives to chemicals for consumer and industrial applications. Such evaluation, known as alternatives analysis, requires balancing numerous, often incommensurable, decision criteria and evaluating the trade-offs among those criteria presented by multiple alternatives.

The University of California Sustainable Technology and Policy Program, in partnership with the University of California Center for Environmental Implications of Nanotechnology (CEIN), hosted a workshop on integrating decision analysis and predictive toxicology into alternatives analysis (CEIN 2015). The workshop brought together approximately 40 leading decision analysts, toxicologists, law and policy experts, and engineers who work in national and state government, academia, the private sector, and civil society for two days of intensive discussions. To provide context for the discussions, the workshop organizers developed a case study regarding the search for alternatives to copper-based marine antifouling paint, which is used to protect the hulls of recreational boats from barnacles, algae, and other marine organisms. Participants received data regarding the health, environmental, technical, and economic performance of a set of alternative paints (see Supplemental Material, “Anti-Fouling Paint Case Study Performance Matrix”). Participants worked in breakout groups that periodically came together in plenary sessions to reflect on each other’s findings. This article focuses on the workshop discussion and on conclusions regarding decision making.

We first review regulatory decision making in general, and we provide background on selection of safer alternatives to hazardous chemicals using alternatives analysis (AA), also called alternatives assessment. We then summarize relevant decision-making approaches and associated methods and tools that could be applied to AA. The next section outlines some of the challenges associated with decision making in AA and the role that various decision approaches could play in resolving them. After setting out four principles for integrating decision analysis into AA, we advance four recommendations for driving integration forward.

Regulatory Decision Making and Selection of Safer Alternatives

Regulatory decisions can have broad consequences in areas such as human health and the environment. Yet within the regulatory context, these complex decision tasks are traditionally performed using an ad hoc approach, that is to say, without the aid of formal decision analysis methods or tools (Eason et al. 2011). As we discuss later, such ad hoc approaches raise serious concerns regarding the consistency of outcomes across different cases; the transparency, predictability, and objectivity of the decision-making process; and human cognitive capacity in managing and synthesizing diverse, rich streams of information. Identifying a systematic framework for making effective, transparent, and objective decisions within the dynamic and complex regulatory milieu can significantly mitigate those concerns (NAS 2005). In its 2005 report, the NAS called for a program of research in environmental decision making focused on:

[I]mproving the analytical tools and analytic-deliberative processes necessary for good environmental decision making. It would include three components: developing criteria of decision quality; developing and testing formal tools for structuring decision processes; and creating effective processes, often termed analytic-deliberative, in which a broad range of participants take important roles in environmental decisions, including framing and interpreting scientific analyses. (NAS 2005)

Since that call, significant research has been performed regarding decision making related to environmental issues, particularly in the context of natural resource management, optimization of water and coastal resources, and remediation of contaminated sites (Gregory et al. 2012; Huang et al. 2011; Yatsalo et al. 2007). This work has begun the process of evaluating the application of formal decision approaches to environmental decision making, but numerous challenges remain, particularly with respect to the regulatory context. In fact, very few studies have focused on the application of decision-making tools and processes in the context of formal regulatory programs, taking into account the legal, practical, and resource constraints present in such settings (Malloy et al. 2013; Parnell et al. 2001). We focus upon the use of decision analysis in the context of environmental chemicals.

The challenge of making choices among alternatives is central in an emerging approach to chemical policy, which turns from conventional risk management to embrace prevention-based approaches to regulating chemicals. Conventional risk management essentially focuses upon limiting exposure to a hazardous chemical to an acceptable level through engineering and administrative controls. In contrast, a prevention-based approach seeks to minimize the use of toxic chemicals by mandating, directly incentivizing, or encouraging the adoption of viable safer alternative chemicals or processes (Malloy 2014). Thus, for a plating process that relies on a hazardous chemical, a regulatory agency following a prevention-based approach would not simply limit exposure; it would encourage or even mandate use of what it views as an inherently safer process, such as a viable alternative plating technique. Adopting a prevention-based approach, however, presents its own challenging choice: identifying a safer, viable alternative. Effective prevention-based regulation requires a regulatory AA methodology for comparing and evaluating the regulated chemical or process and its alternatives across a range of relevant criteria.

AA is a scientific method for identifying, comparing, and evaluating competing courses of action. In the case of chemical regulation, it is used to determine the relative safety and viability of potential substitutes for existing products or processes that use hazardous chemicals (NAS 2014; Malloy et al. 2013). For example, a business manufacturing nail polish containing a resin made using formaldehyde would compare its product with alternative formulations using other resins. Alternatives may include drop-in chemical substitutes, material substitutes, changes to manufacturing operations, and changes to component/product design (Sinsheimer et al. 2007). The methodology compares the alternatives with the regulated product and with one another across a variety of attributes, typically including public health impacts, environmental effects, and technical performance, as well as economic impacts on the manufacturer and on the consumer. The methodology identifies trade-offs between the alternatives and evaluates the relative overall performance of the original product and its alternatives.

In the regulatory setting, multiple parties may be involved to varying degrees in the generation of an AA. Typically, the regulated firm is required to perform the AA in the first instance, as in the California Safer Consumer Products program and the Registration, Evaluation, Authorisation and Restriction of Chemicals (REACH) authorization process (DTSC 2013; European Parliament and Council 2006). The AA, which may be done within the firm or by an outside consultant retained by the firm, is generally performed by an interdisciplinary team of experts (hereafter collectively referred to as the “analyst”) (DTSC 2013). The firm submits the AA to the regulatory agency for review. The regulatory agency will often propose a final decision regarding whether a viable, safer alternative exists and the appropriate regulatory action to take (DTSC 2013; European Parliament and Council 2006). Possible regulatory actions include a ban on the existing product, adoption of an alternative, product labeling, use restrictions, or end-of-life management. Stakeholders such as other government agencies, environmental groups, trade associations, and the general public may provide comments on the AA and regulatory response. Ultimately, the agency retains the authority to require revisions to the analysis and has the final say over the regulatory response (Malloy 2014).

Development of effective regulatory AA methods is a pressing and timely public policy issue. Regulators in California, Maine, and Washington are implementing new programs that call for manufacturers to identify and evaluate potential safer alternatives to toxic chemicals in products (DTSC 2013; MDEP 2012; Department of Ecology, Washington State 2015). At the federal level, in the last few years, the U.S. Environmental Protection Agency (EPA) began to use AA as part of “chemical action plans” in its chemical management program (Lavoie et al. 2010). In the European Union, the REACH program imposes AA obligations upon manufacturers seeking authorization for the continued use of certain substances of very high concern (European Parliament and Council 2006). The stakes in developing effective approaches to regulatory AA are high. A flawed AA methodology can inhibit the identification and adoption of safer alternatives or support the selection of an undesirable alternative (often termed “regrettable substitution”). An example of the former is the U.S. EPA’s attempt in the late 1980s to ban asbestos, which was rejected by a federal court that concluded, among other things, that the AA method used by the agency did not adequately evaluate the feasibility and safety of the alternatives (Corrosion Proof Fittings v. EPA 1991). Regrettable substitution is illustrated by the case of antifouling paints used to combat the buildup of bacteria, algae, and invertebrates such as barnacles on the hulls of recreational boats. As countries throughout the world banned highly toxic tributyltin in antifouling paints in the late 1980s, manufacturers turned to copper as an active ingredient (Dafforn et al. 2011). The cycle is now being repeated as regulatory agencies have begun efforts to phase out copper-based antifouling paint because of its adverse impacts on the marine environment (Carson et al. 2009).

AA frameworks and methods abound, yet few directly address how decision makers should select or rank the alternatives. As the 2014 NAS report on AA observed, “[m]any frameworks … do not consider the decision-making process or decision rules used for resolving trade-offs among different categories of toxicity and other factors (e.g., social impact), or the values that underlie such trade-offs” (NAS 2014). Similarly, a recent review of 20 AA frameworks and guides identified methodological gaps regarding the use of explicit decision frameworks and the incorporation of decision-maker values (Jacobs et al. 2016). The lack of attention to the decision-making process is particularly problematic in regulatory AA, in which the regulated entity, the government agency, and the stakeholders face significant challenges related to the complexity of the decisions, uncertainty of data, difficulty in identifying alternatives, and incorporation of decision-maker values. We discuss these challenges in detail below.

A variety of decision analysis tools and approaches can assist policy makers, product and process designers, and other stakeholders who face the challenging decision environment presented by AA. For these purposes, decision analysis is “a systematic approach to evaluating complex problems and enhancing the quality of decisions” (Eason et al. 2011). Although formal decision analysis methods and tools suitable for such situations are well developed (Linkov and Moberg 2012), for the reasons discussed below, they are rarely applied in existing AA practice. The range of decision analysis methods and tools is quite broad, requiring development of principles for selecting and implementing the most appropriate ones for varied regulatory and private settings. Following an overview of the architecture of decision making in AA, we examine how various formal and informal decision approaches can assist decision makers in meeting the four challenges identified above. We conclude by offering a set of principles for developing effective AA decision-making approaches and steps for advancing the integration of decision analysis into AA practice.

Overview of Decision Making in Alternatives Analysis

In the case of regulatory AA, the particular decision or decisions to be made will depend significantly upon the requirements and resources of the regulatory program in question. For example, the goal may be to identify a single optimal alternative, to rank the entire set of alternatives, or to simply differentiate between acceptable and unacceptable alternatives (Linkov et al. 2006). As a general matter, however, the architecture of decision making is shaped by two factors: the decision framework adopted and the decision tools or methods used. For our purposes, the term “decision framework” means the overall structure or order of the decision making, consisting of particular steps in a certain order. Decision tools and methods are defined below.

Decision Frameworks

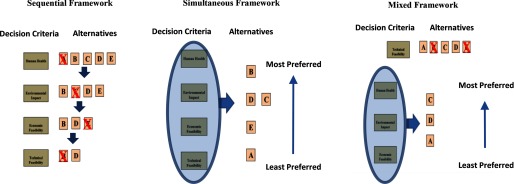

Existing AA approaches that explicitly address decision making draw on three general decision frameworks: sequential, simultaneous, and mixed (Figure 1). The sequential framework includes a set of attributes, such as human health, environmental impacts, economic feasibility, and technical feasibility, which are addressed in succession. The first attribute addressed is often human health or technical feasibility because it is assumed that any alternative that does not meet minimum requirements on that attribute should not proceed to further evaluation. Only the most favorable alternatives proceed to the next step for evaluation, which continues until one or more acceptable alternatives are identified (IC2 2013; Malloy et al. 2013).

Figure 1.

Decision frameworks. Compares the process for decision making under sequential, simultaneous, and mixed frameworks.
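As a minimal sketch of the sequential framework, the Python below screens a set of hypothetical alternatives one attribute at a time. The alternative names, the 0 to 1 scores, the attribute order, and the minimum thresholds are all illustrative assumptions, and "most favorable" is modeled simply as meeting a fixed threshold; actual AA programs define their own screening rules.

```python
# Hypothetical alternatives scored 0 (worst) to 1 (best); illustrative data only.
ALTERNATIVES = {
    "baseline": {"technical": 0.9, "health": 0.2, "environment": 0.3, "economic": 0.8},
    "alt_A": {"technical": 0.8, "health": 0.7, "environment": 0.6, "economic": 0.5},
    "alt_B": {"technical": 0.4, "health": 0.9, "environment": 0.9, "economic": 0.9},
    "alt_C": {"technical": 0.7, "health": 0.5, "environment": 0.7, "economic": 0.4},
}

# Attributes in the order they are addressed, each with an assumed minimum score.
SEQUENCE = [("technical", 0.6), ("health", 0.6), ("environment", 0.5), ("economic", 0.4)]


def sequential_screen(alternatives, sequence):
    """Drop alternatives that miss each threshold in turn; no cross-attribute compensation."""
    remaining = dict(alternatives)
    for attribute, minimum in sequence:
        remaining = {name: s for name, s in remaining.items() if s[attribute] >= minimum}
        if not remaining:
            break  # nothing survived this step
    return sorted(remaining)


print(sequential_screen(ALTERNATIVES, SEQUENCE))  # ['alt_A']
```

Here alt_B is eliminated at the first step despite strong scores on every other attribute, because the sequential framework offers no compensation across major criteria.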

The simultaneous framework considers all or a set of the attributes at once, allowing good performance on one attribute to offset less favorable performance on another for a given alternative. Thus, one alternative’s lackluster performance in terms of cost might be offset by its superior technical performance, a concept known as compensation (Giove et al. 2009). This type of trade-off is not generally available in the sequential framework across major decision criteria. Nevertheless, it is important to note that even within a sequential framework, the simultaneous framework may be lurking where a major decision criterion consists of sub-criteria. For example, in most AA approaches, the human health criterion has numerous sub-criteria reflecting various forms of toxicity such as carcinogenicity, acute toxicity, and neurotoxicity. Even within a sequential framework, the decision maker may consider all of those sub-criteria simultaneously when comparing the alternatives with respect to human health (NAS 2014; IC2 2013).
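Compensation can be illustrated with a simple weighted sum, one of several possible aggregation rules and chosen here only for brevity. The scores are hypothetical, and the equal weights are an assumption, not a recommendation.

```python
# Hypothetical alternatives scored 0 (worst) to 1 (best); illustrative data only.
ALTERNATIVES = {
    "baseline": {"technical": 0.9, "health": 0.2, "environment": 0.3, "economic": 0.8},
    "alt_A": {"technical": 0.8, "health": 0.7, "environment": 0.6, "economic": 0.5},
    "alt_B": {"technical": 0.4, "health": 0.9, "environment": 0.9, "economic": 0.9},
    "alt_C": {"technical": 0.7, "health": 0.5, "environment": 0.7, "economic": 0.4},
}
# Equal weights are an illustrative assumption; they sum to 1.
WEIGHTS = {"technical": 0.25, "health": 0.25, "environment": 0.25, "economic": 0.25}


def weighted_score(scores, weights):
    """Compensatory aggregation: a high score on one attribute can offset a low one."""
    return sum(weight * scores[attribute] for attribute, weight in weights.items())


def simultaneous_rank(alternatives, weights):
    """Rank all alternatives at once by aggregate score, best first."""
    return sorted(
        alternatives,
        key=lambda name: weighted_score(alternatives[name], weights),
        reverse=True,
    )


print(simultaneous_rank(ALTERNATIVES, WEIGHTS))  # ['alt_B', 'alt_A', 'alt_C', 'baseline']
```

Under these assumed weights, alt_B ranks first because its strong health, environmental, and economic scores offset its weak technical score.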

The mixed or hybrid framework, as one might expect, is a combination of the sequential and simultaneous approaches (NAS 2014; IC2 2013; Malloy et al. 2013). For example, if technical feasibility is of particular importance to an analyst, she may screen out certain alternatives on that basis, and subsequently apply a simultaneous framework to the remaining alternatives regarding the other decision criteria. A recent study of 20 existing AA approaches observed substantial variance in the framework adopted: no framework (7 approaches), mixed (6 approaches), simultaneous (4 approaches), menu of all three frameworks (2 approaches), and sequential (1 approach) (Jacobs et al. 2016).
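A mixed framework can be sketched by combining the two ideas: a hypothetical technical-feasibility screen followed by a weighted sum over the remaining criteria. The threshold, the weights, and the scores are illustrative assumptions.

```python
# Hypothetical alternatives scored 0 (worst) to 1 (best); illustrative data only.
ALTERNATIVES = {
    "baseline": {"technical": 0.9, "health": 0.2, "environment": 0.3, "economic": 0.8},
    "alt_A": {"technical": 0.8, "health": 0.7, "environment": 0.6, "economic": 0.5},
    "alt_B": {"technical": 0.4, "health": 0.9, "environment": 0.9, "economic": 0.9},
    "alt_C": {"technical": 0.7, "health": 0.5, "environment": 0.7, "economic": 0.4},
}
# Assumed equal weights over the criteria left after screening.
WEIGHTS = {"health": 1 / 3, "environment": 1 / 3, "economic": 1 / 3}


def mixed_rank(alternatives, screen_attribute, minimum, weights):
    """Screen on one attribute first, then rank the survivors with a weighted sum."""
    survivors = {n: s for n, s in alternatives.items() if s[screen_attribute] >= minimum}

    def score(name):
        return sum(w * survivors[name][a] for a, w in weights.items())

    return sorted(survivors, key=score, reverse=True)


print(mixed_rank(ALTERNATIVES, "technical", 0.6, WEIGHTS))  # ['alt_A', 'alt_C', 'baseline']
```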

Decision Methods and Tools

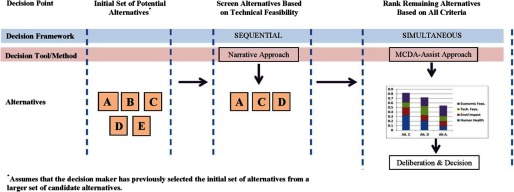

There is a wide range of decision tools and methods, that is to say, formal and informal aids, rules, and techniques that guide particular steps within a decision framework (NAS 2014; Malloy et al. 2013). These methods and tools range from informal rules of thumb to highly complex, statistically based methodologies. The various methods and tools have diverse approaches and distinctive theoretical bases, and they address data uncertainty, the relative importance of decision criteria, and other issues differently. For example, some methods quantitatively incorporate the decision maker’s relative preferences regarding the importance of decision criteria (a process sometimes called “weighting”), whereas others make no provision for explicit weighting. For our purposes, they can be broken into four general types: a) narrative, b) elementary, c) multicriteria decision analysis (MCDA), and d) robust scenario analysis. Each type can be used for various decisions in an AA, such as winnowing down the initial set of potential alternatives or ranking the alternatives. As Figure 2 illustrates, in the context of a mixed decision framework, two different decision tools or methods could even be used at different decision points within a single AA.

Figure 2.

Multiple decision tool use in mixed decision framework. Demonstrates one potential scenario for using multiple decision tools in one chemical selection process. (Derived from Jacobs et al. 2016).

Narrative Approaches

In the narrative approach, also known as the ad hoc approach, the decision maker engages in a holistic, qualitative balancing of the data and associated trade-offs to arrive at a selection (Eason et al. 2011; Linkov et al. 2006). In some cases, the analyst may rely on explicitly stated informal decision principles or on expert judgment to guide the process. No quantitative scores are assigned to alternatives for the purposes of the comparison. Similarly, no explicit quantitative weighting is used to reflect the relative importance of the decision criteria, although in some instances, qualitative weighting may be provided for the analyst by the firm charged with performing the AA. The AA methodology developed by the European Chemicals Agency (ECHA) for substances that are subject to authorization under REACH is illustrative (ECHA 2011). Similarly, the AA requirements set out in the regulations for the California Safer Consumer Products program, which mandates that manufacturers complete AAs for certain priority products, adopt the ad hoc approach, setting out broad, narrative decision rules without explicit weighting (DTSC 2013). This approach may be particularly susceptible to various biases in decision making, which we address later.

Elementary Approaches

Elementary approaches apply a more systematic overlay to the narrative approach, providing the analyst with specific guidance about how to make a decision. Such approaches provide an observable path for the decision process but typically do not require sophisticated software or specialized expertise. For example, Hansen and colleagues developed the NanoRiskCat tool for prioritization of nanomaterials in consumer products (Hansen et al. 2014). The structure may take the form of a decision tree that takes the analyst through an ordered series of questions. Alternatively, it may offer a set of checklists, specific decision rules, or simple algorithms to assist the analyst in framing the issues and guiding the evaluation. Elementary approaches can make use of both quantitative and qualitative data and may incorporate implicit or explicit weighting of the decision criteria (Linkov et al. 2004).
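An elementary decision tree of the kind described above might look like the following sketch. The questions, their order, and the outcome labels are hypothetical and are not taken from NanoRiskCat or any regulatory program; the point is only that each step in the path is explicit and observable.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical yes/no facts an analyst might record for one alternative."""
    name: str
    listed_carcinogen: bool
    priority_hazard_data_complete: bool
    meets_performance_spec: bool


def classify(candidate: Candidate) -> str:
    """Walk a hypothetical ordered series of questions and return an outcome label."""
    if candidate.listed_carcinogen:
        return "reject: listed carcinogen"
    if not candidate.priority_hazard_data_complete:
        return "hold: gather missing hazard data"
    if not candidate.meets_performance_spec:
        return "reject: fails performance specification"
    return "acceptable: proceed to detailed comparison"


for c in [
    Candidate("alt_A", False, True, True),
    Candidate("alt_B", False, False, True),
    Candidate("alt_C", True, True, True),
]:
    print(c.name, "->", classify(c))
```

Because the questions are ordered, an early "yes" on the carcinogen question ends the path regardless of the other answers, mirroring the ordered-question structure of a decision tree.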

MCDA Approaches

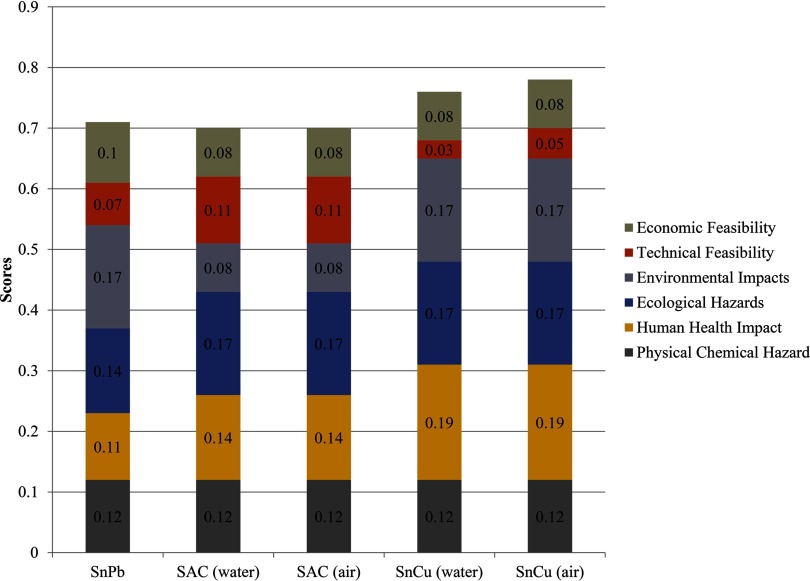

The MCDA approach couples a narrative evaluation with mathematically based formal decision analysis tools, such as multi-attribute utility theory (MAUT) and outranking. The output of the selected MCDA analysis is intended as a guide for the decision maker and as a reference for stakeholders affected by or otherwise interested in the decision. MCDA itself consists of a range of different methods and tools, reflecting various theoretical bases and methodological perspectives. Accordingly, those methods and tools tend to assess the data and generate rankings in different ways (Huang et al. 2011). However, they generally share certain common features, setting them apart from the type of informal decision making present in the narrative approach. Each MCDA approach provides a systematic, observable process for evaluating alternatives in which an alternative’s performance across the decision criteria is aggregated to generate a score. Each alternative is then ranked relative to the other alternatives based on its aggregate score. Figure 3 provides an example of the type of ranking generated from an MAUT tool. In most of these types of ranking approaches, the individual criteria scores are weighted to reflect the relative importance of the decision criteria and sub-criteria (Kiker et al. 2005; Belton and Stewart 2002).

Figure 3.

Sample output from MAUT decision tool comparing alternatives to lead solder. SnPb is a solder alloy composed of 63% Sn/37% Pb. SAC (water) is a solder alloy composed of 95.5% Sn/3.9% Ag/0.6% Cu, with water quenching used to cool and harden the solder; SAC (air) is the same alloy, with air used to cool and harden the solder. SnCu (water) is a solder alloy composed of 99.2% Sn/0.8% Cu, with water quenching used to cool and harden the solder; SnCu (air) is the same alloy, with air used to cool and harden the solder. [Malloy et al. 2013, with permission from Wiley Online Library (http://onlinelibrary.wiley.com/journal/10.1002/(ISSN)1551-3793/homepage/Permissions.html)]. Note: Ag, silver; Cu, copper; Pb, lead; Sn, tin.
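The aggregation step in an additive MAUT model can be sketched as follows: each raw measurement is converted to a 0 to 1 utility, and the utilities are then combined with weights that sum to 1. This sketch assumes linear utility functions scaled to the best and worst observed values (a simple form of internal normalization); practical MAUT studies often elicit nonlinear utility curves from the decision maker instead. The attributes, units, data, and weights are hypothetical and are not taken from the solder study shown in Figure 3.

```python
# Hypothetical raw performance in natural units; not data from any published study.
RAW = {
    "alt_A": {"cost_usd_per_kg": 40.0, "tensile_mpa": 45.0, "hazard_index": 3.0},
    "alt_B": {"cost_usd_per_kg": 25.0, "tensile_mpa": 30.0, "hazard_index": 5.0},
    "alt_C": {"cost_usd_per_kg": 60.0, "tensile_mpa": 50.0, "hazard_index": 1.0},
}
HIGHER_IS_BETTER = {"cost_usd_per_kg": False, "tensile_mpa": True, "hazard_index": False}
WEIGHTS = {"cost_usd_per_kg": 0.3, "tensile_mpa": 0.3, "hazard_index": 0.4}  # sum to 1


def linear_utility(value, worst, best):
    """Map a raw value linearly onto [0, 1]: 0 at the worst observed value, 1 at the best."""
    if best == worst:
        return 1.0  # attribute does not discriminate among alternatives
    return (value - worst) / (best - worst)


def maut_scores(raw, higher_is_better, weights):
    """Additive MAUT: weighted sum of single-attribute utilities for each alternative."""
    scores = {name: 0.0 for name in raw}
    for attribute, weight in weights.items():
        values = [perf[attribute] for perf in raw.values()]
        if higher_is_better[attribute]:
            best, worst = max(values), min(values)
        else:
            best, worst = min(values), max(values)
        for name, perf in raw.items():
            scores[name] += weight * linear_utility(perf[attribute], worst, best)
    return scores


scores = maut_scores(RAW, HIGHER_IS_BETTER, WEIGHTS)
for name in sorted(scores, key=scores.get, reverse=True):
    print(f"{name}: {scores[name]:.3f}")
```

With these assumed inputs, alt_C ranks first (about 0.70), followed by alt_A (about 0.60) and alt_B (0.30). Changing the weights or the shape of the utility functions can reorder the list, which is why MAUT results are usually paired with sensitivity analysis.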

Some MCDA tools, such as MAUT, are optimization tools that seek to maximize the achievement of the decision maker’s preferences. These optimization approaches use utility functions, dimensionless scales that range from 0 to 1, to convert the measured performance of an alternative for a given decision criterion to a score between 0 and 1 (Malloy et al. 2013). In contrast, outranking methods do not create utility functions or seek optimal alternatives. Instead, outranking methods seek the alternative that outranks other alternatives in terms of overall performance, also known as the dominant alternative (Belton and Stewart 2002). The diverse MCDA tools use various approaches to address uncertainty regarding the performance of alternatives and the relative importance to be placed on respective attributes. Some, such as MAUT, use point values for performance and weighting and rely upon sensitivity analysis to evaluate the impact of uncertainty (Malloy et al. 2013). Sensitivity analysis evaluates how different values of uncertain attributes or weights would affect the ranking of the alternatives. Others, such as stochastic multi-criteria acceptability analysis (SMAA), represent performance information and relative weights as probability distributions (Lahdelma and Salminen 2010). Still others, such as multi-criteria mapping, rely on a part-quantitative, part-qualitative approach in which the analyst facilitates structured evaluation of alternatives by the ultimate decision maker, eliciting judgments from the decision maker regarding the performance of the respective alternatives on relevant attributes and on the relative importance of those attributes. The analyst then generates a ranking based upon that input (SPRU 2014; Hansen 2010). MCDA has been used, though not extensively, in the related field of life-cycle assessment (LCA) (Prado et al. 2012). For example, Wender et al. (2014) integrated LCA with MCDA methods to compare existing and emerging photovoltaic technologies.
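Weight uncertainty, one of the uncertainties SMAA addresses, can be sketched with Monte Carlo sampling: draw many weight vectors uniformly from the set of nonnegative weights summing to 1, rank the alternatives under each draw, and report how often each alternative ranks first (its first-rank acceptability). This simplified sketch treats performance as fixed point values rather than probability distributions, and the utilities are hypothetical.

```python
import random

# Hypothetical utilities already on a 0-1 scale for three criteria (illustrative only).
UTILITIES = {
    "alt_A": [0.6, 0.8, 0.3],
    "alt_B": [0.9, 0.2, 0.7],
    "alt_C": [0.4, 0.5, 0.9],
    "alt_D": [0.3, 0.1, 0.2],  # worse than alt_C on every criterion
}


def random_weights(rng, n):
    """Draw weights uniformly from the simplex by normalizing exponential draws."""
    draws = [rng.expovariate(1.0) for _ in range(n)]
    total = sum(draws)
    return [d / total for d in draws]


def first_rank_acceptability(utilities, samples=10_000, seed=0):
    """Share of sampled weight vectors under which each alternative ranks first."""
    rng = random.Random(seed)
    n_criteria = len(next(iter(utilities.values())))
    wins = {name: 0 for name in utilities}
    for _ in range(samples):
        w = random_weights(rng, n_criteria)
        best = max(utilities, key=lambda name: sum(wi * ui for wi, ui in zip(w, utilities[name])))
        wins[best] += 1
    return {name: count / samples for name, count in wins.items()}


print(first_rank_acceptability(UTILITIES))
```

alt_D never ranks first because it is worse than alt_C on every criterion, so no positive weighting can favor it; the other three alternatives share the first rank in proportions that reflect how much of the weight space favors each.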

Robust Scenario Approaches

Robust scenario analysis is particularly useful when decision makers face deep uncertainty, meaning situations in which the decision makers do not know or cannot agree upon the likely performance of one or more alternatives on important criteria (Lempert and Collins 2007). Robust scenario analysis uses large ensembles of scenarios to represent a wide range of plausible, relevant futures for each alternative. With this range of potential futures in mind, it helps decision makers to compare the alternatives in search of the most robust alternative. A robust alternative is one that performs well across a wide range of plausible scenarios even though it may not be optimal or dominant in any particular one (Kalra et al. 2014).
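One common way to operationalize robustness, used here as an illustrative choice rather than the only one, is minimax regret: in each scenario, an alternative's regret is the gap between its performance and the best performance achieved by any alternative in that scenario, and the robust choice minimizes its worst-case regret. The performance values are hypothetical.

```python
# Hypothetical performance (higher is better) of each alternative in three scenarios.
PERFORMANCE = {
    "alt_A": [10, 2, 9],
    "alt_B": [3, 10, 2],
    "alt_C": [8, 8, 7],
}


def max_regret(performance):
    """Worst-case regret of each alternative across all scenarios."""
    n_scenarios = len(next(iter(performance.values())))
    best = [max(p[s] for p in performance.values()) for s in range(n_scenarios)]
    return {
        name: max(best[s] - p[s] for s in range(n_scenarios))
        for name, p in performance.items()
    }


def most_robust(performance):
    """Alternative with the smallest maximum regret."""
    regrets = max_regret(performance)
    return min(regrets, key=regrets.get)


print(max_regret(PERFORMANCE))   # {'alt_A': 8, 'alt_B': 7, 'alt_C': 2}
print(most_robust(PERFORMANCE))  # alt_C
```

alt_C is not the best performer in any single scenario, yet it has the smallest maximum regret, which is the sense of robustness described above.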

Robust scenario decision making consists of four iterative steps. First, the decision makers define the decision context, identifying goals, uncertainties, and potential alternatives under consideration. Second, modelers generate ensembles of hundreds, thousands, or even more scenarios, each reflecting an outcome flowing from different plausible assumptions about how each alternative may perform. Third, quantitative analysis and visualization software is used to explore the benefits and drawbacks of the alternatives across the range of scenarios. Finally, trade-off analysis (i.e., comparative assessment of the relative pros and cons of the alternatives) is used to evaluate the alternatives and to identify a robust strategy (Lempert et al. 2013).
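Steps two through four can be sketched with a small ensemble: sample many scenarios from plausible ranges for each alternative's uncertain outcomes, then count the share of scenarios in which each alternative meets minimum performance thresholds. The ranges, thresholds, and uniform sampling are assumptions for illustration; robust decision-making studies often use richer simulation models and sampling designs such as Latin hypercube sampling.

```python
import random

# Hypothetical plausible (low, high) ranges for each alternative's uncertain outcomes.
PLAUSIBLE = {
    "alt_A": {"efficacy": (0.50, 0.95), "annual_cost": (100.0, 300.0)},
    "alt_B": {"efficacy": (0.70, 0.85), "annual_cost": (150.0, 200.0)},
}


def generate_ensemble(plausible, n_scenarios, seed=1):
    """Step 2: each scenario draws one value per uncertain outcome, uniformly within its range."""
    rng = random.Random(seed)
    return [
        {
            alt: {outcome: rng.uniform(lo, hi) for outcome, (lo, hi) in ranges.items()}
            for alt, ranges in plausible.items()
        }
        for _ in range(n_scenarios)
    ]


def satisficing_share(ensemble, min_efficacy, max_cost):
    """Step 3: share of scenarios in which each alternative meets both assumed thresholds."""
    shares = {}
    for alt in ensemble[0]:
        ok = sum(
            1
            for scenario in ensemble
            if scenario[alt]["efficacy"] >= min_efficacy
            and scenario[alt]["annual_cost"] <= max_cost
        )
        shares[alt] = ok / len(ensemble)
    return shares


ensemble = generate_ensemble(PLAUSIBLE, n_scenarios=5000)
print(satisficing_share(ensemble, min_efficacy=0.65, max_cost=250.0))
```

alt_B meets both thresholds in every sampled scenario, while alt_A meets them in only part of the ensemble even though its best case is better; weighing that contrast is the trade-off analysis of the final step.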

Decision-Making Challenges Presented by Alternatives Analysis

Like many decisions involving multiple criteria, identifying a safer, viable alternative or set of alternatives is often difficult. Finding potential alternatives, collecting information about their performance, and evaluating the trade-offs posed by each alternative all present problems. Those difficulties are exacerbated in the regulatory setting by additional constraints, such as the need for accountability, transparency, and consistency across similar cases (Malloy et al. 2015). In this review, we focus on four challenges that are recognized in the decision analysis field to be of particular importance to regulatory AA:

• Dealing with large numbers of attributes
• Uncertainty in performance data
• Poorly understood option space
• Incorporating decision-maker values (sometimes called weighting of attributes)

Large Numbers of Attributes

In its essential form, AA focuses upon human health, environmental impacts, technical performance, and economic impact. But in fact, AA involves many more than four attributes. Each of the four major attributes, particularly human health, includes numerous sub-attributes, many more than any human can process without some form of heuristic or computational aid. The California Safer Consumer Products regulations, for example, require that an AA consider all relevant “hazard traits” (DTSC 2013). Hazard traits are “properties of chemicals that fall into broad categories of toxicological, environmental, exposure potential, and physical hazards that may contribute to adverse effects …” (DTSC 2013). For human health alone, the California regulations identify twenty potentially relevant hazard traits (DTSC 2013). Similarly, the U.S. EPA considers a total of twelve hazard end points in assessing impacts to human health in its alternatives assessment guidance (U.S. EPA 2011).

Large numbers of attributes raise two types of difficulties. First, as the number of attributes increases, data collection regarding the performance of the baseline product and its alternatives becomes increasingly difficult, time-consuming, and expensive. Because not all attributes listed in regulations or guidance documents will be salient or have an impact in every case, decision-making approaches that judiciously sift out irrelevant or less-important attributes are desirable. Second, given humans’ cognitive limitations, larger numbers of relevant attributes complicate the often inevitable trade-off analysis that is needed in AA. Consider two alternative solders, one of which performs best in terms of low carcinogenicity, neurotoxicity, acute aquatic toxicity, and wettability (a very desirable feature for solders), but not so well with respect to endocrine disruption, respiratory toxicity, chronic aquatic toxicity, and tensile strength (another advantageous feature for solders). Suppose the second alternative presents the opposite profile. Now, add dozens of other attributes relating to human health and safety, environmental impacts, and technical and economic performance to the mix. Even in the relatively simple case of one baseline product and two potential alternatives, evaluating and resolving the trade-offs can be treacherous. In assessing the alternatives, decision makers must determine whether and how to compensate for poor performance on some attributes with superior performance on other attributes. Similarly, the nature and scale of the performance data for the attributes vary widely; using fundamentally different metrics for diverse attributes generates a mixture of quantitative and qualitative information.
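The solder example can be made concrete with a Pareto dominance check: one alternative dominates another if it is at least as good on every attribute and strictly better on at least one. The 1 to 5 scores below are hypothetical, with higher always meaning better (for toxicity attributes, lower toxicity).

```python
ATTRIBUTES = [
    "carcinogenicity", "neurotoxicity", "acute_aquatic", "wettability",
    "endocrine", "respiratory", "chronic_aquatic", "tensile_strength",
]
# Hypothetical 1-5 performance scores; higher is better on every attribute.
SOLDER_X = dict(zip(ATTRIBUTES, [5, 5, 4, 5, 2, 2, 1, 2]))
SOLDER_Y = dict(zip(ATTRIBUTES, [2, 3, 2, 2, 4, 5, 4, 5]))


def dominates(a, b):
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    return all(a[k] >= b[k] for k in a) and any(a[k] > b[k] for k in a)


def split_wins(a, b):
    """Attributes on which a beats b, and attributes on which b beats a."""
    return sorted(k for k in a if a[k] > b[k]), sorted(k for k in a if b[k] > a[k])


print(dominates(SOLDER_X, SOLDER_Y), dominates(SOLDER_Y, SOLDER_X))  # False False
print(split_wins(SOLDER_X, SOLDER_Y))
```

Neither solder dominates the other, so no choice can be made without deciding how much a gain on one group of attributes is worth relative to a loss on the other.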

Decision frameworks and methods should provide principled approaches to integrating or normalizing such information to support trade-off analysis. Elementary approaches often use ordinal measures of performance to normalize diverse types of data. For example, the U.S. EPA AA methodology under the Design for the Environment program characterizes performance on a variety of human health and environmental attributes as “low,” “medium,” or “high” (U.S. EPA 2011). The increased tractability comes with some decrease in precision, potentially obscuring meaningful differences in performance or exaggerating differences at the margins. As the number of relevant attributes increases, it becomes more difficult to rely upon narrative and elementary approaches to manage the diverse types of data and to evaluate the trade-offs presented by the alternatives. MCDA approaches are well suited for handling large numbers of attributes and diverse forms of data (Kiker et al. 2005). In an AA case study using an MCDA method to evaluate alternatives to lead-based solder, researchers used an internal normalization approach to convert an alternative’s scores on each criterion to dimensionless units ranging from 0 to 1 and then applied an optimization algorithm to evaluate trade-offs across more than fifty attributes (Malloy et al. 2013).
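The precision cost of ordinal scales can be shown with a sketch that bins hypothetical hazard values into low, medium, and high and compares them with min-max normalization onto a 0 to 1 scale. The cutoffs and values are illustrative assumptions, not the thresholds used in the Design for the Environment methodology.

```python
# Hypothetical hazard values (higher = more hazardous) and assumed ordinal cutoffs.
LOW_CUTOFF = 1.0
HIGH_CUTOFF = 10.0
VALUES = {"alt_A": 0.9, "alt_B": 1.1, "alt_C": 9.5}


def to_ordinal(value, low_cutoff=LOW_CUTOFF, high_cutoff=HIGH_CUTOFF):
    """Bin a continuous value into 'low', 'medium', or 'high'."""
    if value < low_cutoff:
        return "low"
    if value < high_cutoff:
        return "medium"
    return "high"


def min_max(values):
    """Rescale values linearly onto [0, 1] using the observed minimum and maximum."""
    lo, hi = min(values.values()), max(values.values())
    if hi == lo:
        return {k: 0.0 for k in values}  # no spread to normalize
    return {k: (v - lo) / (hi - lo) for k, v in values.items()}


normalized = min_max(VALUES)
for name, value in VALUES.items():
    print(name, to_ordinal(value), round(normalized[name], 3))
```

Values of 0.9 and 1.1 land in different bins although they are nearly equal, while 1.1 and 9.5 share the "medium" bin despite differing roughly ninefold; min-max normalization preserves their relative spacing.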

Uncertain Data Regarding Attributes

Uncertainty is not unique to AA; it presents challenges in conventional risk assessment and in many environmental decision-making situations. However, the diversity and number of the relevant data streams and potential trade-offs faced in AA exacerbate the problem of uncertainty. In thinking through uncertainty in this context, three considerations stand out: defining it, responding to it methodologically, and communicating about it to stakeholders.

The meaning of the term “uncertainty” is itself uncertain; definitions abound (NAS 2009; Ascough et al. 2008). For our purposes, uncertainty includes a complete or partial lack of information, or the existence of conflicting information or variability, regarding an alternative’s performance on one or more attributes, such as health effects, potential exposure, or economic impact (NAS 2009). Uncertainty includes “data gaps” resulting from a lack of experimental studies, measurements, or other empirical observations, along with situations in which available studies or modeling provide a range of differing data for the same attribute (NAS 2014; Ascough et al. 2008). It also includes limitations inherent in data generation and modeling such as measurement error and use of modeling assumptions, as well as naturally occurring variability caused by heterogeneity or diversity in the relevant populations, materials, or systems. Uncertainty regarding the strength of the decision maker’s preferences, also known as value uncertainty, is discussed below.

There are a variety of methodological approaches for dealing with uncertainty. Some approaches (typically within narrative or elementary approaches) simply call for identification and discussion of missing data or use simple heuristics to deal with uncertainties, for example by assuming a worst-case performance for that attribute (DTSC 2013; Rossi et al. 2006). Others rely upon expert judgment (often in the form of expert elicitation) to fill data gaps (Rossi et al. 2012). Although MCDA approaches can make similar use of simple heuristics and expert estimations, they also provide a variety of more sophisticated mechanisms for dealing with uncertainty (Malloy et al. 2013; Hyde et al. 2003). Simple forms of sensitivity analysis in which single input values are modified to observe the effect on the MCDA results are also often used at the conclusion of the decision analysis process—the lead-based solder study used this approach to assess the robustness of its outcomes—although this type of ad hoc analysis has significant limitations (Malloy et al. 2013; Hyde et al. 2003).
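A one-at-a-time sensitivity analysis of the kind described above might look like the following sketch, which assumes a simple weighted-sum aggregation of already-normalized scores. The alternatives, scores, weights, and function names are hypothetical.

```python
# Hypothetical normalized scores (higher is better) and weights.
ALTERNATIVES = {
    "solder_A": [0.9, 0.4, 0.7],
    "solder_B": [0.5, 0.8, 0.6],
}
WEIGHTS = [0.5, 0.3, 0.2]

def weighted_sum(scores, weights):
    return sum(s * w for s, w in zip(scores, weights))

def ranking(alternatives, weights):
    """Alternative names ordered from best to worst aggregate score."""
    return sorted(alternatives,
                  key=lambda n: weighted_sum(alternatives[n], weights),
                  reverse=True)

def one_at_a_time(alternatives, weights, name, criterion, deltas):
    """Shift a single input score by each delta (clipped to [0, 1]) and record the ranking."""
    results = {}
    for d in deltas:
        perturbed = {n: list(s) for n, s in alternatives.items()}
        perturbed[name][criterion] = min(1.0, max(0.0, perturbed[name][criterion] + d))
        results[d] = ranking(perturbed, weights)
    return results

results = one_at_a_time(ALTERNATIVES, WEIGHTS, "solder_A", 0, [-0.3, -0.1, 0.0, 0.1])
for delta, order in results.items():
    print(f"delta={delta:+.1f}: {order}")
```

In this hypothetical, the ranking reverses when solder_A's first score falls by 0.3 but not by 0.1. Because only one input changes at a time, the check cannot reveal interactions among several simultaneously uncertain inputs, which is one limitation of ad hoc sensitivity analysis.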

Diverse MCDA methods also offer a variety of quantitative probabilistic approaches relying upon such tools as Monte Carlo analysis, fuzzy sets, and Bayesian networks to investigate the range of outcomes associated with different values for the uncertain attribute (Lahdelma and Salminen 2010). Canis and colleagues used a stochastic decision-analytic technique to address uncertainty in an evaluation of four different processes for synthesizing carbon nanotubes (arc, high-pressure carbon monoxide, chemical vapor deposition, and laser) across five performance criteria. Rather than generating an ordered ranking of the alternatives from first to last, the method provided an estimate of the probability that each alternative would occupy each rank (Canis et al. 2010). Robust scenario analysis takes a different approach, using large ensembles of scenarios in an attempt to visualize all plausible, relevant futures for each alternative. With this range of potential futures in mind, decision makers can compare the alternatives in search of the one that is most robust to the uncertainties (Lempert and Collins 2007).
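The idea of reporting rank probabilities rather than a single ordering can be sketched with a generic Monte Carlo simulation. This is not the stochastic multiattribute method of Canis et al. (2010); it assumes, for illustration only, that each uncertain normalized score is uniformly distributed between hypothetical bounds and that scores are aggregated by a weighted sum.

```python
import random
from collections import Counter

# Hypothetical (low, high) bounds on normalized scores for two criteria,
# sampled uniformly. Bounds and weights are invented for illustration.
UNCERTAIN_SCORES = {
    "process_1": [(0.6, 0.9), (0.2, 0.5)],
    "process_2": [(0.4, 0.8), (0.4, 0.7)],
    "process_3": [(0.1, 0.4), (0.7, 0.9)],
}
WEIGHTS = [0.6, 0.4]

def rank_probabilities(uncertain, weights, n_draws=10_000, seed=1):
    """Estimate the probability that each alternative occupies each rank."""
    rng = random.Random(seed)
    names = list(uncertain)
    counts = {name: Counter() for name in names}
    for _ in range(n_draws):
        # Draw one plausible score vector per alternative and aggregate it.
        totals = {
            name: sum(w * rng.uniform(lo, hi)
                      for w, (lo, hi) in zip(weights, uncertain[name]))
            for name in names
        }
        ordered = sorted(names, key=totals.get, reverse=True)
        for position, name in enumerate(ordered, start=1):
            counts[name][position] += 1
    return {name: {r: counts[name][r] / n_draws for r in range(1, len(names) + 1)}
            for name in names}

probs = rank_probabilities(UNCERTAIN_SCORES, WEIGHTS)
for name, dist in probs.items():
    print(name, {r: round(p, 2) for r, p in dist.items()})
```

Each row of the output is a probability distribution over ranks, so overlapping alternatives show split probabilities instead of an artificially crisp ordering.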

Choosing among these approaches to uncertainty is not trivial. Studies in the decision analysis literature (and in the context of multi-criteria choices in particular) demonstrate that the approach taken with respect to uncertainty can substantially affect decision outcomes (Hyde et al. 2003; Durbach and Stewart 2011). For example, one heuristic approach—called the “uncertainty downgrade”—essentially penalizes an alternative with missing data by assuming the worst with respect to the affected attribute. In some cases, such a penalty default may encourage proponents of the alternative to generate more complete data, but it may also lead to the selection of less-safe but more-studied alternatives (NAS 2014).
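The effect of an uncertainty downgrade can be shown with a small hypothetical example, assuming normalized scores (higher is better), a weighted-sum aggregation, and a worst-case value of 0 for any data gap. The numbers are invented to illustrate the mechanism described above and are not drawn from NAS (2014).

```python
WORST_CASE = 0.0  # assumed worst-case normalized score substituted for a data gap

def downgrade(scores, worst=WORST_CASE):
    """Uncertainty downgrade: treat every missing value (None) as worst-case performance."""
    return [worst if s is None else s for s in scores]

def weighted_sum(scores, weights):
    return sum(s * w for s, w in zip(scores, weights))

WEIGHTS = [0.4, 0.4, 0.2]
well_studied = [0.5, 0.5, 0.5]  # complete data, middling performance
data_poor = [0.8, None, 0.8]    # better where data exist, one gap

downgraded = {
    "well_studied": weighted_sum(downgrade(well_studied), WEIGHTS),
    "data_poor": weighted_sum(downgrade(data_poor), WEIGHTS),
}
print(downgraded)

# If the gap were later filled with a moderately favorable value (hypothetical 0.7):
filled = weighted_sum([0.8, 0.7, 0.8], WEIGHTS)
print(filled)
```

Under the downgrade, the complete-data alternative ranks first, even though the data-poor alternative would rank first if its missing value turned out to be moderately favorable.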

How the evaluation of uncertainties is presented to the decision maker can be as important as the substance of the evaluation itself. Decision-making methods and tools are, of course, meant to assist the decision maker; thus, the results of the uncertainty analysis must be salient and comprehensible. In simple cases, a comprehensive assessment of uncertainty may not be necessary. In complicated situations, however, simply identifying data gaps without providing qualitative or quantitative analysis of the scope or impact of the uncertainty can leave decision makers adrift. It can also leave the door open to strategic assessment of the uncertainties aimed at advancing the interests of the regulated entity rather than achieving the goals of the regulatory program. Providing point estimates for uncertain data can bias decision making, and presenting ranges of data in probability distributions without supporting analysis designed to facilitate understanding can lead to information overload (Durbach and Stewart 2011). Decision-analytic approaches such as MCDA can provide insightful, rigorous treatment of uncertainty, but that rigor comes at some potential cost in terms of resource intensity, complexity, and reduced transparency (NAS 2009).

Poorly Understood Option Space

The range of alternatives considered in AA (often referred to as the “option space” in decision analysis and engineering) can be quite wide (Frye-Levine 2012; de Wilde et al. 2002). Alternatives may involve a) the use of “drop‐in” chemical or material substitutes, b) a redesign of the product or process to obviate the need for the chemical of concern, or c) changes regarding the magnitude or nature of the use of the chemical (Sinsheimer et al. 2007). Option generation is a core aspect of decision making; identifying an overly narrow set of alternatives undermines the value of the ultimate decision (Del Missier et al. 2015; Adelman et al. 1995). Accordingly, existing regulatory programs emphasize the importance of considering a broad range of relevant potential alternatives (DTSC 2013; ECHA 2011).

We highlight three issues that complicate the identification of viable alternatives. For these purposes, viability refers to technical and economic feasibility. First, information regarding the existence and performance of alternatives is often difficult to uncover, particularly when searching for alternatives other than straightforward drop-in chemical replacements. Existing government, academic, and private publications do offer general guidance on searching for alternatives (NAS 2014; U.S. EPA 2011; IC2 2013; Rossi et al. 2012), and databases and reports provide specific listings of chemical alternatives for limited types of products [U.S. EPA Safer Chemical Ingredients List (SCIL)]. However, for many other products, information regarding chemical and nonchemical alternatives may not be available to the regulated firm. Rather, the information may reside with vendors, manufacturers, consultants, or academics outside the regulated entity’s normal commercial network.

Second, for any given product or process, alternatives will be at different stages of development: Some may be readily available, mature technologies, whereas others are emerging or in early stages of commercialization. Indeed, selection of a technology through a regulatory alternatives analysis can itself accelerate commercialization or market growth of that technology. Because the option space can be so dynamic, AA frameworks that assume a static set of options may exclude innovative alternatives that could be available in the near term (ECHA 2011). Thus, identifying the set of potential alternatives for consideration can itself be a difficult decision made under conditions of uncertainty.

Third, the regulated entity (or rather, its managers and staff) may be unable or reluctant to cast a broad net in identifying potential alternatives. Individuals face cognitive and disciplinary limitations that can substantially shape their evaluation of information and decision making. For example, cognitive biases and mental models that lead us to favor the status quo and to discount the importance of new information are well documented (Samuelson and Zeckhauser 1988), even in business settings with high stakes (Kunreuther et al. 2002); this status quo bias is amplified when executives have longer tenure within their industry (Hambrick et al. 1993). These unconscious biases can be mitigated to some degree through training and the use of well-designed decision-making processes and aids. Thaler and Benartzi (2004) demonstrated how changing the default can influence behavior in the context of saving for retirement, and Croskerry (2002) provided an overview of biases that occur in clinical decision making, along with strategies for avoiding them. However, such training, processes, and aids are largely ineffective when the decision maker is acting strategically to limit the set of alternatives to circumvent the goals of the regulatory process. Many regulated firms have strong business reasons to resist externally driven alterations to successful products, including costs, disruption, and the uncertainty of customer response to the revised product.

Incorporating Decision-Maker Preferences/Weighting of Attributes

By its very nature, AA involves the balancing of attributes against one another in evaluating potential alternatives. Consider the example of antifouling paint for marine applications: One paint may be safer for boatyard workers, whereas another may be more protective of aquatic vegetation. In most multi-criteria decision situations, however, the decision maker is not equally concerned about all decision attributes. An individual decision maker may place more importance on whether a given paint kills aquatic vegetation than on whether it contributes to smog formation. Weighting is a significant challenge. In many cases, the individual decision maker’s preferences are not clear, even to that individual. This so-called “value uncertainty” is compounded in situations such as regulatory settings, in which many stakeholders (and thus many sets of preferences) are involved (Ascough et al. 2008).
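How value uncertainty can change the outcome is easy to see in a sketch of the antifouling paint example. The two paints, their normalized scores, and the two weight sets are hypothetical, and a weighted sum is assumed as the aggregation rule.

```python
# Hypothetical normalized scores (higher is better):
# [worker safety, protection of aquatic vegetation, low smog formation]
PAINTS = {
    "paint_X": [0.9, 0.3, 0.6],
    "paint_Y": [0.4, 0.9, 0.5],
}

def weighted_sum(scores, weights):
    total = sum(weights)
    # Rescale weights to sum to 1 so different weight sets are comparable.
    return sum(s * w / total for s, w in zip(scores, weights))

def preferred(alternatives, weights):
    """Return the alternative with the highest weighted score."""
    return max(alternatives, key=lambda name: weighted_sum(alternatives[name], weights))

worker_focused = [0.6, 0.2, 0.2]
aquatic_focused = [0.2, 0.7, 0.1]
print(preferred(PAINTS, worker_focused))   # paint_X
print(preferred(PAINTS, aquatic_focused))  # paint_Y
```

The performance data are identical in both runs; only the weights differ, yet the preferred paint changes.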

Existing approaches to AA vary significantly in how they address incorporation of preferences/weighting. Narrative approaches typically provide no explicit weighting of the decision attributes, although in some instances, qualitative weighting may be provided for the analyst. More often, whether and how to weight the relevant attributes is left to the discretion of the analyst (Jacobs et al. 2016; Linkov et al. 2005). Elementary approaches usually incorporate either implicit or explicit weighting of the decision attributes. For example, decision rules in elementary approaches that eliminate alternatives based on particular attributes by definition place greater weight upon those attributes. Most MCDA approaches confront weighting explicitly, using various methods to derive weights. Generally speaking, there are three methods for eliciting or establishing explicit attribute weights: the use of existing generic weights such as the set in the National Institute of Standards and Technology’s life cycle assessment software for building products; calculation of weights using objective criteria such as the distance-to-target method; or elicitation of weights from experts or stakeholders (Hansen 2010; Zhou and Schoenung 2007; Gloria et al. 2007; SPRU 2004; Lippiatt 2002). The robust scenario approach does not attempt to weight attributes; instead, it generates outcomes reasonably expected from a set of plausible scenarios for each alternative, allowing the decision maker to select the most robust alternative, that is, the alternative that offers the best range of outcomes across the scenarios.
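The text does not prescribe how “the best range of outcomes across the scenarios” should be measured. Two common readings are the maximin rule (choose the alternative with the best worst-case outcome) and minimax regret (choose the alternative whose largest shortfall from the best performer in any scenario is smallest). The sketch below implements both for a hypothetical outcome table; neither is presented as the method of Lempert and Collins (2007).

```python
# Hypothetical outcome table: normalized performance of each alternative
# (higher is better) under three plausible scenarios.
OUTCOMES = {
    "A": [0.8, 0.7, 0.2],
    "B": [0.7, 0.6, 0.5],
    "C": [0.9, 0.3, 0.4],
}

def maximin(outcomes):
    """Choose the alternative whose worst-case outcome is best."""
    return max(outcomes, key=lambda name: min(outcomes[name]))

def minimax_regret(outcomes):
    """Choose the alternative whose largest shortfall from the scenario-best outcome is smallest."""
    n_scenarios = len(next(iter(outcomes.values())))
    best = [max(o[s] for o in outcomes.values()) for s in range(n_scenarios)]

    def max_regret(name):
        return max(b - x for b, x in zip(best, outcomes[name]))

    return min(outcomes, key=max_regret)

print(maximin(OUTCOMES))         # B
print(minimax_regret(OUTCOMES))  # B
```

Alternative C has the best outcome in the first scenario but performs poorly elsewhere, so neither robustness rule selects it.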

Each strategy for addressing value uncertainty raises its own issues. For example, in regulatory programs such as Superfund and the Clean Air Act, which use narrative decision making, weighting is typically performed on a largely ad hoc basis, generally without any direct, systematic discussion of the relative weights to be accorded to the relevant decision criteria (U.S. EPA 1994; U.S. EPA 1990). Such ad hoc treatment of weighting raises concerns regarding the consistency of outcomes across similar cases. Over time, regulators may develop standard outcomes or rules of thumb, which provide some consistency in outcome, but such conventions and the tacit weighting embedded in them can undermine transparency in decision making. Moreover, a lack of clear guidance regarding the relative weight to be accorded to criteria could allow political or administrative factors to influence the decision. However, incorporation of explicit weighting in regulatory decisions creates complex political and methodological questions beyond dealing with value uncertainty. For example, agencies generating explicit weightings would have to deal with potentially inconsistent preferences among the regulated entity, the various stakeholder groups, and the public at large. Similarly, they would have to consider whether pragmatic and strategic considerations related to implementation and enforcement of the program are relevant in establishing weighting (Department for Communities and Local Government 2009).

Principles for Developing Effective Alternatives Analysis Decision-Making Approaches

The previous section focused on the ways in which the various decision-making approaches can be used to address the four challenges presented by AA. However, integrating such decision making into AA itself raises thorny questions: for example, which of the decision approaches and tools should be used and in what circumstances. In this section, we propose four interrelated principles regarding the application of those approaches and tools in regulatory AA.

Different Decision Points within Alternatives Analysis May Require Different Decision Approaches and Tools

In the course of an AA, one must make a series of decisions. These decisions include selecting relevant attributes; identifying potential alternatives; assessing the alternatives’ performance on attributes concerning human health impacts, ecological and environmental impacts, technical performance, and economic impacts; and ranking or selecting the preferred alternatives. Different approaches and tools may be best suited for each of these decisions, rather than a one-size-fits-all methodology. Consider decisions regarding the relative performance of alternatives on particular attributes. For some attributes, such as production costs or technical performance, there may be well-established methods in industry for evaluating relative performance that can be integrated into a broader AA framework. Similarly, GreenScreen® is a hazard assessment tool that is used by a variety of AA frameworks (IC2 2013; Rossi et al. 2012). However, these individual tools are not designed to assist in the trade-off analysis across all of the disparate attributes; for this task, other approaches and tools will be needed. Some researchers recommend applying multiple approaches to the same analysis to generate more robust results to inform the decision maker (Kiker et al. 2005; Yatsalo et al. 2007).
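Applying more than one approach to the same data can expose how much the result depends on the method. The sketch below, using hypothetical scores, compares a compensatory weighted sum with a simple non-compensatory screening rule that eliminates any alternative scoring below a threshold on a designated hazard attribute before aggregation. The threshold, attribute order, and function names are assumptions made for illustration.

```python
# Hypothetical normalized scores (higher is better):
# [carcinogenicity, technical performance, cost]
ALTERNATIVES = {
    "alt_1": [0.2, 1.0, 1.0],
    "alt_2": [0.7, 0.6, 0.6],
}
WEIGHTS = [0.4, 0.3, 0.3]

def weighted_sum(scores, weights):
    return sum(s * w for s, w in zip(scores, weights))

def best_by_weighted_sum(alternatives, weights):
    """Compensatory: strong performance on some attributes offsets weak performance on others."""
    return max(alternatives, key=lambda name: weighted_sum(alternatives[name], weights))

def best_after_screening(alternatives, weights, hazard_index=0, threshold=0.5):
    """Non-compensatory screen: drop alternatives below a hazard threshold, then aggregate."""
    survivors = {name: s for name, s in alternatives.items() if s[hazard_index] >= threshold}
    if not survivors:
        return None  # every alternative fails the screen
    return best_by_weighted_sum(survivors, weights)

print(best_by_weighted_sum(ALTERNATIVES, WEIGHTS))  # alt_1
print(best_after_screening(ALTERNATIVES, WEIGHTS))  # alt_2
```

The two rules disagree on identical data because the screen, like the elimination rules used in elementary approaches, implicitly gives the hazard attribute overriding weight.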

Decision-Making Approaches and Tools Should Be as Simple as Possible

Not every AA will require sophisticated analysis. In some cases, the analyst may conclude after careful assessment that the data are relatively complete and the trade-offs fairly clear. In such cases, basic decision approaches and uncomplicated heuristics may be all that are necessary to support a sound decision. Thus, a simple case involving a drop-in chemical substitute with substantially better performance across most attributes may not call for sophisticated MCDA approaches. Other situations will present high uncertainty and complex trade-offs and will therefore require more advanced approaches and tools. The evaluation of alternative processes for synthesizing carbon nanotubes, which involved substantial uncertainty regarding technical performance and health impacts, was better suited to probabilistic MCDA (Canis et al. 2010). Similarly, not every regulated business or regulatory agency will have the resources or the capacity to use high-level analytical tools. Accordingly, the decision-making approach or tool should be scaled to reflect the capacity of the decision maker and the task at hand while seeking to maximize the quality of the ultimate decision. Where a decision will have a major impact but the regulated entity is not currently equipped to apply the appropriate sophisticated tools, other entities such as nongovernmental organizations, trade associations, or regulatory agencies should support that firm with technical advice or resources rather than run the risk of regrettable outcomes.
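One inexpensive check for whether sophisticated analysis is needed is a dominance test: if an alternative is at least as good as the incumbent on every attribute and strictly better on at least one, no trade-off analysis is required to prefer it over the incumbent. This is stricter than the “better across most attributes” case described above; the sketch, with hypothetical scores and function names, simply flags the alternatives for which trade-offs remain.

```python
# Hypothetical normalized scores (higher is better) on three attributes.

def dominates(a, b):
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def triage(incumbent, alternatives):
    """Split alternatives into those that dominate the incumbent and those needing trade-off analysis."""
    dominating = [name for name, s in alternatives.items() if dominates(s, incumbent)]
    needs_tradeoffs = [name for name in alternatives if name not in dominating]
    return dominating, needs_tradeoffs

incumbent = [0.3, 0.6, 0.7]
alternatives = {
    "drop_in": [0.8, 0.6, 0.9],   # no worse anywhere, better on two attributes
    "redesign": [0.9, 0.4, 0.8],  # better on two attributes, worse on one
}
dominating, needs_tradeoffs = triage(incumbent, alternatives)
print(dominating)       # ['drop_in']
print(needs_tradeoffs)  # ['redesign']
```

Only the alternatives in the second list would need escalation to a more sophisticated approach.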

The Decision-Making Approach and Tools Should Be Crafted to Reflect the Decision Context

Context matters in structuring decision processes. In particular, it is important to consider who will be performing the analysis and who will be making the decision. As discussed above, when AA is used in a regulatory setting, the regulated business will typically perform the initial alternatives analysis and present a decision to the agency for review. These businesses will have a range of capabilities and objectives. Some will engage in a good faith or even a fervent effort to seek out safer alternatives. Others will reluctantly do the minimum required, and still others may engage in strategic behavior, appearing to perform a good faith AA but assiduously avoiding changes to their product. The decision-making process should be designed with all of these behaviors in mind. For example, it might include meaningful minimum standards to ensure rigor and consistency in the face of strategic behavior while incorporating flexibility to foster innovation among those firms more committed to adopting safer alternatives.

Multicriteria Decision Analysis Should Support but Not Supplant Deliberation

The output of MCDA is meant to inform rather than to replace deliberation, defined for these purposes as the process for communication and consideration of issues in which participants “discuss, ponder, exchange observations and views, reflect upon information and judgments concerning matters of mutual interest, and attempt to persuade each other” (NAS 1996). MCDA provides analytical results that systematically evaluate the trade-offs between alternatives, allowing those engaged in deliberation to consider how their preferences and the alternatives’ respective performance on different attributes affect the decision (Perez 2010). MCDA augments professional, political, and personal judgment as a guide and as a reference point for stakeholders affected by or otherwise interested in the decision. However, the output of many MCDA tools can appear conclusive, setting out quantified rankings and groupings of alternatives and striking visualizations. Therefore, care must be taken to ensure that MCDA does not supplant or distort the deliberative process and that decision makers and stakeholders understand the assumptions embedded in the MCDA tool used, as well as the tool’s limitations. Multicriteria mapping methods, for example, are specifically designed to facilitate such deliberation through an iterative, facilitated process involving a series of interviews with identified stakeholders (SPRU 2004; Hansen 2010). Moreover, although MCDA tools summarize the performance of alternatives under clearly defined metrics and preferences, they do not define standards for determining when a difference between the performance of alternatives is sufficient to justify making a change. Consider a case in which a manufacturer finds an alternative that exhibits lower aquatic toxicity by an order of magnitude but does somewhat worse in terms of technical performance. Without explicit input regarding the preferences of the decision maker, the MCDA tool cannot answer the question of whether the distinction is sufficiently large to justify product redesign. Ultimately, the decision maker must determine whether the differences between the incumbent and an alternative are significant enough to justify a move to the alternative.

With these challenges and principles in mind, we now turn to the question of how decision analysis and related disciplines can best be incorporated into the developing field of AA.

Next Steps: Advancing Integration of Alternatives Analysis and Decision Analysis

Decision science is a well-developed discipline, offering a variety of tools to assist decision makers. However, many of those tools are not widely used in the environmental regulatory setting, much less in the emerging area of AA. The process of integration is complicated by several factors. First, AA is by nature deeply transdisciplinary, requiring extensive cross-discipline interaction. Second, choosing among the wide range of available approaches and tools, each with its own benefits and limitations, can be daunting to regulators, businesses, and other stakeholders. Moreover, many of the tools require significant expertise in decision analysis and are not within the existing capacities of entities engaged in AA. Third, given the limited experience with formal decision tools in AA (and in environmental regulation more generally), there is skepticism among some regarding the value added by the use of such tools. Nonetheless, we see value in exploring the integration of decision analysis and its tools into AA, and we provide four recommendations to advance this integration.

Recommendation 1: Engage in Systematic Development, Assessment, and Evaluation of Decision Approaches and Tools

Although there is a rich body of literature in decision science concerning the development and evaluation of various decision tools, there has been relatively little research focused on applications in the context of AA in particular or in regulatory settings more broadly. Recent studies of decision making in AA provide some insights, but they ultimately call for further attention to the question of how decision tools can be integrated (NAS 2014; Jacobs et al. 2015). Such efforts may include, among other things:

- Developing or adapting user-friendly decision tools specifically for use in AA, taking into account the capacities and resources of the likely users and the particular decision task at hand.
- Analyzing how existing and emerging decision approaches and tools address the four decision challenges of dealing with large numbers of attributes, uncertainty in performance data, poorly understood option space, and weighting of attributes.
- Evaluating the extent to which such approaches and tools are worthwhile and amenable to use in a regulatory setting by agencies, businesses, and other stakeholders.
- Considering how to better bridge the gap between analysis (whether human health, environmental, engineering, economic, or other forms) and deliberation, with particular focus on the potential role of decision analysis and tools.
- Articulating objective technical and normative standards for selecting decision approaches and tools for particular uses in AA.

The results of this effort could be guidance for selecting and using a decision approach or even a multi-tiered tool that offers increasing levels of sophistication depending on the needs of the user. The experience gained over the years with the implementation of LCA could be useful here. For instance, methods such as top-down and streamlined LCA have emerged in response to the recognition that many entities do not have the capacity (or the need) to conduct a full-blown process-based LCA, and standards such as the International Organization for Standardization (ISO) 14040 series have emerged for third-party verification of LCA studies.

Recommendation 2: Use Case Studies to Advance the Integration of Decision Analysis into AA

Systematic case studies offer the opportunity to answer specific questions about how to integrate decision analysis into AA, and they demonstrate the potential value and limitations of different decision tools in AA to stakeholders. Case studies could also build upon and test outcomes from the activities discussed in “Recommendation 1.” For example, a case study may apply different decision tools to the same data set to evaluate differences in the performance of the tools with respect to previously developed technical and normative standards. To ensure real-world relevance, the case studies should be based upon actual commercial products and processes of interest to regulators, businesses, and other stakeholders. Currently relevant case-study topics that could be used to examine one or more of the decision challenges discussed above include marine antifouling paint, chemicals used in hydraulic fracturing (fracking), flame retardant alternatives, carbon nanotubes, and bisphenol A alternatives.

Recommendation 3: Support Trans-Sector and Trans-Disciplinary Efforts to Integrate Decision Analysis and Other Relevant Disciplines into Alternatives Analysis

AA brings a range of disciplines to bear in evaluating the relative benefits and drawbacks of a set of potentially safer alternatives, including toxicology, public health, engineering, economics, chemistry, environmental science, decision analysis, computer science, business management and operations, risk communication, and law. Existing tools and methods for AA do not integrate these disciplines in a systematic or rigorous way. Advancing AA will require constructing connections across those disciplines. Although this paper focuses on decision analysis, engagement with other disciplines will also be needed. Existing initiatives such as the AA Commons, the Organisation for Economic Co-operation and Development (OECD) Working Group, the Health and Environmental Sciences Institute (HESI) Committee, and others provide a useful starting point, but more systematic, research-focused, broadly transdisciplinary efforts are also needed (BizNGO 2016; OECD 2016). The AA case studies from Recommendation 2 could promote transdisciplinary efforts by creating a vehicle for practitioners to combine data from different sectors into a decision model. A research coordination network would provide the necessary vehicle for systematic collaboration across disciplines and public and private entities and institutions.

Recommendation 4: Support Undergraduate, Graduate, and Postgraduate Education and Outreach Efforts Regarding Alternatives Analysis, Including Attention to Decision Making

Advancing AA research and application in the mid-to-long term will require training the next generation of scientists, policy makers, and practitioners regarding the scientific and policy aspects of this new field. With very limited exceptions (Schoenung et al. 2009), existing curricula in relevant undergraduate, graduate, and professional programs do not cover AA or prevention-based regulation. Curricular development will be particularly challenging for two reasons: the emerging nature of AA and the transdisciplinary nature of the undertaking. Because the field is emerging, there are few curricular materials to begin with, requiring significant start-up efforts. In addition, the subject matter is something of a moving target as new research and methods become available and as regulatory programs develop. Given the many disciplines that bear on AA and prevention-based policy, effective education will itself have to be transdisciplinary, drawing readings and exercises from across those disciplines and engaging students and faculty from each of them.

The societal value of research regarding AA methods depends largely on the extent to which research is accessible to and understood by its end users—policy makers at every level, nongovernmental organizations (NGOs), and businesses. Ultimately, adoption of the frameworks, methods, and tools developed by researchers also requires broader acceptance by the public. This acceptance requires systematic education and outreach: namely, nonformal education in structured learning environments such as in-service training and continuing education outside of formal degree programs and informal or community education facilitating personal and community growth and sociopolitical engagement (Bell 2009). For some, the education and outreach will be at the conceptual level alone, informing stakeholders about the general scope and nature of AA. For others who are more deeply engaged in chemicals policy, the education and outreach will focus upon more technical and methodological aspects.

Conclusions

There is immediate demand for robust, effective approaches to regulatory AA to select alternatives to chemicals of concern. Translation of decision analysis tools used in other areas of environmental decision making to the chemical regulation sphere could strengthen existing AA approaches but also presents unique questions and challenges. For instance, AAs must meet evolving regulatory standards but also be nimble enough for the private sector to employ as a tool during product development. To be useful, different tools crafted for the particular context may be required. The decision approaches employed should be as simple as possible and should support rather than supplant deliberation. Transdisciplinary work, mainly organized around case studies designed to address specific questions, and increased access to education and training would advance the use of decision analysis to improve AA.

Supplemental Material

Acknowledgments

This paper came from discussions at a workshop that was supported by the University of California (UC) Sustainable Technology and Policy Program, a joint collaboration of the University of California, Los Angeles (UCLA) School of Law and the Center for Occupational and Environmental Health at the UCLA Fielding School of Public Health in partnership with the UC Center for Environmental Implications of Nanotechnology (UC CEIN). UC CEIN is funded by a cooperative agreement from the National Science Foundation and the U.S. Environmental Protection Agency (NSF DBI-0830117; NSF DBI-1266377). Support for this workshop was also provided by the Institute of the Environment and Sustainability and the Emmett Institute on Climate Change and the Environment, both at UCLA.

References

- Adelman L, Gualtieri J, Stanford S. 1995. Examining the effect of causal focus on the option generation process: An experiment using protocol analysis. Organ Behav Hum Decis Process 61:54–66, 10.1006/obhd.1995.1005.

- Ascough JC, Maier HR, Ravalico JK, Strudley MW. 2008. Future research challenges for incorporation of uncertainty in environmental and ecological decision-making. Ecol Modell 219:383–399, 10.1016/j.ecolmodel.2008.07.015.

- Bell DVJ. 2009. Education for Sustainable Development: Cure Or Placebo? In: Innovation, Science, Environmental, Special Edition: Charting Sustainable Development in Canada 1987–2007. Toner G, Meadowcroft J, eds. Montreal, Quebec, Canada: McGill-Queen’s University Press, 106–130.

- Belton V, Stewart TJ. 2002. Multiple Criteria Decision Analysis: An Integrated Approach. Norwell, MA: Kluwer Academic Publishers.

- BizNGO. 2016. The Commons Principles for Alternatives Assessment. http://www.bizngo.org/alternatives-assessment/commons-principles-alt-assessment [accessed August 22, 2016].

- Canis L, Linkov I, Seager TP. 2010. Application of stochastic multiattribute analysis to assessment of single walled carbon nanotube synthesis processes. Environ Sci Technol 44:8704–8711, PMID: 20964398, 10.1021/es102117k. [DOI] [PubMed] [Google Scholar]

- Carson RT, Damon M, Johnson LT, Gonzalez JA. 2009. Conceptual issues in designing a policy to phase out metal-based antifouling paints on recreational boats in San Diego Bay. J Environ Manage 90(8):2460–2468, 10.1016/j.jenvman.2008.12.016. [DOI] [PubMed] [Google Scholar]

- CEIN (Center for Environmental Implications of Nanotechnology). 2015. Project KNO-2: Developing or Transforming Nano Regulatory Approaches. http://www.cein.ucla.edu/new/p177.php [accessed 12 August 2016].

- Corrosion Proof Fittings v. EPA, 947 F.2d 1201 (5th Cir. 1991).

- Croskerry P. 2002. Achieving quality in clinical decision making: Cognitive strategies and detection of bias. Acad Emerg Med 9:1184–1204, PMID: 12414468. [DOI] [PubMed] [Google Scholar]

- Dafforn KA, Lewis JA, Johnston EL. 2011. Antifouling strategies: History and regulation, ecological impacts and mitigation. Mar Pollut Bull 62:453–465, 10.1016/j.marpolbul.2011.01.012. [DOI] [PubMed] [Google Scholar]

- de Wilde P, Augenbroe G, van der Voorden M. 2002. Design analysis integration: Supporting the selection of energy saving building components. Build Environ 37:807–816, 10.1016/S0360-1323(02)00053-7. [DOI] [Google Scholar]

- Del Missier F, Visentini M, Mäntylä T. 2015. Option generation in decision making: Ideation beyond memory retrieval. Front Psychol 5:1584, 10.3389/fpsyg.2014.01584. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Department for Communities and Local Government. 2009. Multi-Criteria Analysis: A Manual. http://eprints.lse.ac.uk/12761/1/Multi-criteria_Analysis.pdf [accessed 14 October 2015].

- Department of Ecology, Washington State. 2015. Alternatives Assessment Guide for Small and Medium Businesses. https://fortress.wa.gov/ecy/publications/documents/1504002.pdf [accessed 14 December 2015].

- DTSC (California Department of Toxic Substances Control). 2013. Safer Consumer Product Regulations (codified at 22 CCR Section 69506.2-.8.); R-2011 http://www.dtsc.ca.gov/LawsRegsPolicies/Regs/upload/Text-of-Final-Safer-Consumer-Products-Regulations-2.pdf [accessed 23 May 2017].

- Durbach IN, Stewart TJ. 2011. An experimental study of the effect of uncertainty representation in decision making. Eur J Oper Res 214:380–392, 10.1016/j.ejor.2011.04.021. [DOI] [Google Scholar]

- Eason T, Meyer DE, Curran MA, Upadhyayula V. 2011. “Guidance to Facilitate Decisions for Sustainable Nanotechnology.” EPA/600/R-11/107.

- ECHA (European Chemicals Agency). 2011. “Guidance on the Preparation of an Application for Authorisation.” ECHA-11-G-01 https://echa.europa.eu/documents/10162/23036412/authorisation_application_en.pdf/6571a0df-9480-4508-98e1-ff807a80e3a9 [accessed 23 May 2017].

- European Parliament and Council. 2006. Regulation (EC) No 1907/2006 of the European Parliament and of the Council of 18 December 2006 concerning the Registration, Evaluation, Authorisation and Restriction of Chemicals (REACH), OJ L 396 (30 December 2006):1–849. [Google Scholar]

- Frye-Levine LA. 2012. Sustainability through design science: Re-imagining option spaces beyond eco-efficiency. Sustain Dev 20:166–179, 10.1002/sd.1533. [DOI] [Google Scholar]

- Giove S, Brancia A, Satterstrom FK Linkov I.. 2009. Decision support systems and environment: Role of MCDA. In: Decision Support Systems for Risk-Based Management of Contaminated Sites. Marcomini A; Suter GW Jr.; Critto, eds. New York, NY: Springer, 53–74. [Google Scholar]

- Gloria TP, Lippiatt BC, Cooper J. 2007. Life cycle impact assessment weights to support environmentally preferable purchasing in the United States. Environ Sci Technol 41:7551–7557, PMID: 18044540. [DOI] [PubMed] [Google Scholar]

- Gregory R, Failing L, Harstone M, Long G, McDaniels T, Ohlson D. 2012. Structured Decision Making: A Practical Guide to Environmental Management Choices. Oxford, UK: Wiley-Blackwell. [Google Scholar]

- Hambrick DC, Geletkanycz MA, Fredrickson JW. 1993. Top executive commitment to the status quo. Strat Mgmt J 14:401–418, 10.1002/smj.4250140602. [DOI] [Google Scholar]

- Hansen SF, Jensen KA, Baun A. 2014. NanoRiskCat: A conceptual tool for categorization and communication of exposure potentials and hazards of nanomaterials in consumer products. J Nanopart Res 16:2195, 10.1007/s11051-013-2195-z. [DOI] [Google Scholar]

- Hansen SF. 2010. Multicriteria mapping of stakeholder preferences in regulating nanotechnology. J Nanopart Res 12:1959–1970, PMID: 21170118, 10.1007/s11051-010-0006-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huang IB, Keisler J, Linkov I. 2011. Multi-Criteria Decision Analysis in environmental sciences: Ten years of applications and trends. Sci Total Environ 409:3578–3594, PMID: 21764422, 10.1016/j.scitotenv.2011.06.022. [DOI] [PubMed] [Google Scholar]

- Hyde K, Maier HR, Colby C. 2003. Incorporating uncertainty in the PROMETHEE MCDA method. J Multi-Crit Decis Anal 12:245–259, 10.1002/mcda.361. [DOI] [Google Scholar]

- IC2 (Interstate Chemicals Clearinghouse). 2013. Alternatives Assessment Guide. http://www.newmoa.org/prevention/ic2/IC2_AA_Guide-Version_1.pdf [accessed 14 Oct 2015].

- Jacobs MM, Malloy TF, Tickner JA, Edwards S. 2016. Alternatives assessment frameworks: Research needs for the informed substitution of hazardous chemicals. Environ Health Perspect 124:265–280, 10.1289/ehp.1409581. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kalra N; Hallegatte S; Lempert RJ; Brown C; Fozzard A; Gill S; Shah A. 2014. “Agreeing on Robust Decisions: New Processes for Decision Making Under Deep Uncertainty. World Bank Policy Research Paper No. 6906.” http://papers.ssrn.com/sol3/papers.cfm?abstract_id=2446310&download=yes## [accessed 24 November 2015].

- Kiker GA, Bridges TS, Varghese A, Seager TP, Linkov I. 2005. Application of multicriteria decision analysis in environmental decision making. Integr Environ Assess Manage 1:95–108, 10.1897/IEAM_2004a-015.1.

- Kunreuther H, Meyer R, Zeckhauser R, Slovic P, Schwartz B, et al. 2002. High stakes decision making: Normative, descriptive and prescriptive considerations. Mark Lett 13:259–268, 10.1023/A:1020287225409.

- Lahdelma R, Salminen P. 2010. Stochastic Multicriteria Acceptability Analysis (SMAA). In: Trends in Multiple Criteria Decision Analysis. Ehrgott M, Figueira JR, Greco S, eds. New York, NY: Springer, 285–316.

- Lavoie ET, Heine LG, Holder H, Rossi MS, Lee RE, Connor EA, et al. 2010. Chemical alternatives assessment: Enabling substitution to safer chemicals. Environ Sci Technol 44:9244–9249, 10.1021/es1015789.

- Lempert R, Collins M. 2007. Managing the risk of uncertain threshold responses: Comparison of robust, optimum, and precautionary approaches. Risk Anal 27(4):1009–1026.

- Lempert RJ, Popper SW, Groves DG, Kalra N, Fischbach JR, Bankes SC. 2013. Making good decisions without predictions: Robust decision making for planning under deep uncertainty. http://www.rand.org/pubs/research_briefs/RB9701.html [accessed 14 October 2015].

- Linkov I, Sahay S, Kiker G, Bridges T, Seager TP. 2005. Multi-Criteria Decision Analysis: A framework for managing contaminated sediments. In: Strategic Management of Marine Ecosystems. Levner E, Linkov I, Proth J-M, eds. Dordrecht, The Netherlands: Springer, 271–297.

- Linkov I, Satterstrom FK, Kiker G, Batchelor C, Bridges T, Ferguson E. 2006. From comparative risk assessment to multi-criteria decision analysis and adaptive management: Recent developments and applications. Environ Int 32:1072–1093, 10.1016/j.envint.2006.06.013.

- Linkov I, Moberg E. 2012. Multi-Criteria Decision Analysis: Environmental Applications and Case Studies. Boca Raton, FL: CRC Press.

- Linkov I, Varghese A, Jamil S, Seager T, Kiker G, Bridges T. 2004. Multi-criteria decision analysis: A framework for structuring remedial decisions at contaminated sites. In: Comparative Risk Assessment and Environmental Decision Making. Linkov I, Ramadan AB, eds. The Netherlands: Kluwer Academic Publishing, 15–54.

- Lippiatt B. 2002. “Building for Environmental and Economic Sustainability Technical Manual and User Guide” (NISTIR 6916). https://nepis.epa.gov/Exe/ZyPDF.cgi?Dockey=9400081C.PDF [accessed 23 May 2017].

- Malloy TF, Blake A, Linkov I, Sinsheimer PJ. 2015. Decisions, science and values: Crafting regulatory alternatives analysis. Risk Anal 35(12):2137–2151, 10.1111/risa.12466.

- Malloy TF, Sinsheimer PJ, Blake A, Linkov I. 2013. Use of multi-criteria decision analysis in regulatory alternatives analysis: A case study of lead free solder. Integr Environ Assess Manag 9(4):652–664, PMID: 23703936, 10.1002/ieam.1449.

- Malloy TF. 2014. Design for regulation: Integrating sustainable production into mainstream regulation. In: Law and the Transition to Business Sustainability. Cahoy DR, Colburn JE, eds. Cham, Switzerland: Springer International Publishing AG, 1–23.

- Malloy TF. 2014. Principled Prevention. Ariz State Law J 46:105–190.

- MDEP (Maine Department of Environmental Protection). 2012. Regulation of Chemical Use in Children’s Products (codified at 06 096 CMR Chapter 880-888). http://www.maine.gov/dep/safechem/rules.html [accessed 19 August 2016].

- NAS (National Academy of Sciences). 2014. A Framework to Guide Selection of Chemical Alternatives. Washington, DC: National Academies Press.

- NAS. 1996. Understanding Risk: Informing Decisions in a Democratic Society. Washington, DC: National Academies Press.

- NAS. 2005. Decision Making for the Environment: Social and Behavioral Research Priorities. Washington, DC: National Academies Press.

- NAS. 2009. Science and Decisions: Advancing Risk Assessment. Washington, DC: National Academies Press.

- OECD (Organisation for Economic Co-operation and Development) Ad Hoc Group on Substitution of Harmful Chemicals. 2016. Substitution of hazardous chemicals. http://www.oecd.org/chemicalsafety/testing/substitution-of-hazardous-chemicals.htm [accessed 23 May 2017].

- Parnell GS, Metzger RE, Merrick J, Eilers R. 2001. Multiobjective decision analysis of theater missile defense architectures. Syst Engin 4(1):24–34.

- Perez O. 2010. Precautionary governance and the limits of scientific knowledge: A democratic framework for regulating nanotechnology. UCLA J Environ Law Policy 28:30–76.

- Prado V, Rogers K, Seager TP. 2012. Integration of MCDA Tools in Valuation of Comparative Life Cycle Assessment. In: Life Cycle Assessment Handbook: A Guide for Environmentally Sustainable Products. Curran MA, ed. Beverly, MA: Scrivener, 413–432.

- Rossi M, Peele C, Thorpe B. 2012. BizNGO Chemical Alternatives Assessment Protocol. http://www.bizngo.org/alternatives-assessment/chemical-alternatives-assessment-protocol [accessed 14 October 2015].

- Rossi M, Tickner J, Geiser K. 2006. Alternatives Assessment Framework of the Lowell Center for Sustainable Production. http://www.sustainableproduction.org/downloads/FinalAltsAssess06_000.pdf [accessed 24 November 2015].

- Samuelson W, Zeckhauser R. 1988. Status quo bias in decision making. J Risk Uncertain 1:7–59, 10.1007/BF00055564.

- Schoenung JM, Ogunseitan OA, Eastmond DA. 2009. Research and education in green materials: A multi-disciplinary program to bridge the gaps. In: ISSST 2009, IEEE International Symposium on Sustainable Systems and Technology, 18–20 May 2009, Tempe, AZ, 1–6, 10.1109/ISSST.2009.5156760.

- Sinsheimer PJ, Grout C, Namkoong A, Gottlieb R, Latif A. 2007. The viability of professional wet cleaning as a pollution prevention alternative to perchloroethylene dry cleaning. J Air Waste Manage 57:172–178.

- SPRU (Science Policy Research Unit). 2004. Briefing 5: Using the Multi-criteria Mapping Technique. Deliberative Mapping, University of Sussex. http://users.sussex.ac.uk/∼prfh0/DM%20Briefing%205.pdf [accessed 14 October 2015].

- Thaler RH, Benartzi S. 2004. Save More Tomorrow™: Using Behavioral Economics to Increase Employee Saving. J Polit Econ 112:S164–S187, 10.1086/380085.

- U.S. EPA (U.S. Environmental Protection Agency). 1990. National oil and hazardous substances pollution contingency plan. Final rule. Fed Reg 55:8665–8865.

- U.S. EPA. 2011. “Design for the Environment Program Alternatives Assessment Criteria for Hazard Evaluation, Version 2.0.” https://www.epa.gov/sites/production/files/2014-01/documents/aa_criteria_v2.pdf [accessed 23 May 2017].

- U.S. EPA. 1994. National oil and hazardous substances pollution contingency plan. Final rule. Fed Reg 59:13044–13161.

- U.S. EPA. “Safer Chemical Ingredients List (SCIL).” http://www2.epa.gov/saferchoice/safer-ingredients#scil [accessed 14 October 2015].

- Wender BA, Foley RW, Prado-Lopez V, Ravikumar D, Eisenberg DA, Hottle TA, et al. 2014. Illustrating anticipatory life cycle assessment for emerging photovoltaic technologies. Environ Sci Technol 48:10531–10538, 10.1021/es5016923.

- Yatsalo BI, Kiker GA, Kim SJ, Bridges TS, Seager TP, Gardner K, et al. 2007. Application of multicriteria decision analysis tools to two contaminated sediment case studies. Integr Environ Assess Manag 3:223–233, 10.1897/IEAM_2006-036.1.

- Zhou X, Schoenung JM. 2007. An integrated impact assessment and weighting methodology: Evaluation of the environmental consequences of computer display technology substitution. J Environ Manage 83:1–24, PMID: 16714079, 10.1016/j.jenvman.2006.01.006.
