Abstract

This study investigated eye-movement patterns during emotion perception in children with hearing aids and hearing children. Seventy-eight participants aged 3 to 7 years watched videos in which a facial expression was followed by an oral statement; the two cues were either congruent or incongruent in emotional valence. After the oral statement was presented, hearing children paid more attention to the upper part of the face, whereas children with hearing aids paid more attention to the lower part of the face, especially in the neutral facial expression/neutral oral statement condition. These results suggest that children with hearing aids have an altered pattern of eye contact with others and difficulty matching visual and voice cues in emotion perception. Early rehabilitation for hearing-impaired children with assistive devices should aim to prevent the negative causes and consequences of these gaze patterns.

Keywords: hearing-impaired, emotion perception, eye-movement, auditory-visual perception, oral statement

Introduction

Decoding other people’s emotional expressions is essential for successful social interaction (Lopes et al., 2004; Smith et al., 2005). Emotion can be judged from the face, voice, and body (Rosenberg and Ekman, 1994; Scherer, 2003; Banziger et al., 2009). Human faces, especially the eyes, are the primary and most powerful medium for the perception and communication of emotion (O’Donnell and Bruce, 2001; Schyns et al., 2002; Vinette et al., 2004; Adams and Nelson, 2016). However, one cannot always interpret others’ emotions accurately from facial cues alone, because voice cues may carry conflicting information; sarcasm is one example (Zupan, 2013). By matching auditory and visual information, people can better understand others’ emotional and mental states. Consequently, the development of social competence depends largely on learning to integrate and interpret facial and voice cues of emotion (Mayer et al., 2004).

Because of early sensory impairment, young children with severe and profound hearing loss lack auditory information in emotional communication and have difficulties in adult-child interactions. This places hearing-impaired children at risk of deficits in understanding emotional expressions (Rieffe and Terwogt, 2000). An increasing number of hearing-impaired children (in China, an average annual growth rate of 25% since 1995; Liang and Brendan, 2013) now wear assistive devices [e.g., digital hearing aids (HAs) or cochlear implants (CIs)] to improve hearing and speech. There is therefore a need to explore the effects of assistive device use on children’s emotional development.

Previous research on emotion perception in children with assistive devices has mainly used emotion matching or labeling tasks to measure accuracy in recognizing facial expressions of different emotions. Although some studies have found that hearing children show no advantage in facial expression recognition over severely and profoundly hearing-impaired children with CIs or HAs (Hosie et al., 1998), a growing body of research has shown that hearing children recognize facial expressions significantly better than children with CIs or HAs (Most et al., 1993; Gray et al., 2001; Dyck et al., 2004). These findings suggest that early intervention for severely and profoundly hearing-impaired children should target not only language but also emotional skills (Wang et al., 2011; Wiefferink et al., 2013).

Facial muscle movements provide a perceptual basis for identifying different emotional expressions. For example, the facial expression of happiness is characterized by a contraction of the muscles around the mouth and a narrowing of the eyes (Ekman and Friesen, 1978). To measure how people deploy attention while decoding facial expressions, researchers have used eye-movement techniques to investigate gaze tendencies toward different parts of the face (e.g., Aviezer et al., 2008; Schurgin et al., 2014). Eye-movement studies of individuals with autism spectrum disorder and social anxiety disorder have demonstrated that these individuals look less at others’ faces, particularly the eye regions, than control participants do (Klin et al., 2002; Horley et al., 2003, 2004; Riby and Hancock, 2008; Nakano et al., 2010; Weeks et al., 2013; Falck-Ytter et al., 2015). These results suggest that deficits in emotional and social communication are associated with gaze avoidance (i.e., avoiding looking at others’ eyes). People with hearing loss have also been found to show different eye-movement patterns in face-to-face communication. While some research has indicated that deaf people rely more than hearing people on visual cues from the eye region (Luciano, 2001), other studies have found that severely and profoundly hearing-impaired people pay more attention to the lower part of the face (e.g., Muir and Richardson, 2005; Letourneau and Mitchell, 2011).

Deficits in emotion perception among severely and profoundly hearing-impaired people are also associated with deprivation of auditory-linguistic experience (Gray et al., 2001; Geers et al., 2013). On the one hand, auditory experience early in life is critical for the development of speech perception and production (Kral, 2007), which are fundamental for voice emotion recognition. Some studies have reported that voice emotion recognition in both congenitally and progressively hearing-impaired individuals with assistive devices is rather poor compared with that of their hearing peers (Hopyan-Misakyan et al., 2009; Volkova et al., 2013; Wang et al., 2013). On the other hand, auditory deprivation may also influence the remaining visual and somatosensory modalities (Gori et al., 2017), which are fundamental for facial expression perception.

Emotion perception requires matching visual and auditory information (Mayer et al., 2004). However, studies of emotion perception in hearing-impaired children have examined visual face recognition and voice emotion recognition separately. Without considering emotional aspects, previous studies have found that deaf individuals with restored hearing showed different behavioral patterns from hearing controls in audio-visual integration tasks (e.g., Doucet et al., 2006; Rouger et al., 2008; Bergeson et al., 2010; Gori et al., 2017), suggesting cross-modal reorganization at the cortical level (Doucet et al., 2006). Similarly, it is necessary to investigate hearing-impaired people’s behavior when both visual and auditory cues of emotion are available, as in typical social contexts (Ziv et al., 2013). Most et al. (1993) presented emotional expressions (e.g., anger, disgust, surprise, and sadness) in auditory-only, visual-only, and auditory-visual modalities to study emotion perception in hearing-impaired adolescents with HAs and in hearing adolescents. For the hearing participants, recognition accuracy was higher in the auditory-visual mode than in either the auditory or the visual mode alone, whereas for the hearing-impaired participants there was no difference between the auditory-visual and visual-only modes. Most and Aviner (2009) replicated this result, which suggests that adolescents with profound and congenital hearing loss rely mainly on visual information to interpret emotional expressions.
However, when Most and Michaelis (2011) tested preschoolers (4.0–6.6 years) as participants, they found that the accuracy of emotion perception in auditory-visual conditions was significantly higher than in auditory or visual modes alone for both hearing children and children with hearing loss ranging from moderate to profound, indicating that hearing-impaired young children utilized both visual and auditory information for emotion perception.

As indicated above, different studies have used different age samples (e.g., preschoolers and adolescents), stimulus modalities (e.g., auditory only, visual only, and auditory-visual), and measures (e.g., response accuracy and eye-movement patterns) to investigate hearing-impaired children’s deficits in emotion perception. However, research is still lacking on the eye-movement patterns of hearing-impaired preschoolers during emotion perception in a relatively ecological context. To construct a more valid context for emotion perception, Weeks et al. (2013) adopted a dynamic social evaluation task in which participants watched a video of a static facial expression followed by a talking face delivering an oral statement, in order to assess gaze behavior in social anxiety disorder. We used similar materials and a similar task in the current study. The stimuli comprised positive or neutral facial expressions as visual cues and positive or neutral oral statements as voice cues, which were either congruent or incongruent in emotional valence. If hearing-impaired children with HAs have deficits in emotional expression recognition, they should show different gaze patterns (e.g., reduced eye contact) from hearing children. Specifically, group differences in gaze patterns during the static-face period would point to a deficit in the visual aspect alone, whereas group differences during the oral statement period would point to a deficit in matching visual and auditory information. In addition, a modulation of the group effect by the congruency between visual and voice cues would provide further evidence of an interactive deficit in emotion perception among hearing-impaired children.

Materials and Methods

Participants

Thirty-nine preschool-aged hearing-impaired children with HAs (mean age = 60 months, SD = 12; 24 boys and 15 girls) and 39 hearing children (mean age = 62 months, SD = 8; 23 boys and 16 girls), recruited from rehabilitation centers in Hebei and kindergartens in Beijing, participated in the current study. The hearing-impaired children had severe, congenital hearing loss. Participant characteristics are shown in Table 1. The groups did not differ significantly in age (t = -0.889, p = 0.377) or gender (χ2 = 0.054, p = 0.817). On the PPVT-IV (Dunn and Dunn, 2007), the hearing-impaired children scored significantly lower in receptive vocabulary than the hearing children (t = -18.26, p < 0.001). The hearing-impaired children received 1 h of training in voice production and voice recognition every day and were encouraged to use oral language in daily communication. The study was reviewed and approved by the Ethics Committee of the School of Psychology, Capital Normal University, and the parents of all participants signed written informed consent before the experiment.
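The group-matching statistics can be re-derived from the reported summary values. The following is a minimal Python sketch that assumes the gender test was a Pearson chi-square without Yates’ continuity correction and the age test a pooled-variance independent-samples t test; the paper does not state which variants were used. Under these assumptions the chi-square reproduces the reported 0.054, and the t value computed from the rounded means and SDs comes out at about -0.87, close to the reported -0.889 (the gap is plausibly due to rounding of the summary values).

```python
import math

def chi_square_2x2(table):
    """Pearson chi-square without continuity correction for a 2x2 table of counts.

    table: [[a, b], [c, d]] with groups as rows and categories as columns.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    return chi2

def t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t with pooled variance, from means, SDs and group sizes."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se

# Boys and girls per group (hearing-impaired first, hearing second), from the Participants paragraph.
chi2_gender = chi_square_2x2([[24, 15], [23, 16]])
# Rounded mean ages and SDs in months, from the Participants paragraph.
t_age = t_from_summary(60, 12, 39, 62, 8, 39)
print(f"chi2 = {chi2_gender:.3f}, t = {t_age:.3f}")
```

Applying Yates’ correction here would give a chi-square of 0, because every observed count differs from its expected count by exactly 0.5; the uncorrected statistic is the one consistent with the reported value.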

Table 1.

Characteristics of participants in each group.

| | Hearing-impaired | Hearing |
|---|---|---|
| Number of children | 39 | 39 |
| Mean age (SD) (months) | 60 (12) | 62 (8) |
| Range of age (months) | 44–90 | 44–72 |
| Mean age of using hearing aids (SD) (months) | 29 (12) | n/a |
| Range of unaided hearing loss, left/right (dB HL) | 60–125/60–125 | n/a |
| Ratio of males:females | 24:15 | 23:16 |

Materials and Design

Twenty-four video clips were created. The actors were graduate students (mean age = 24.7 years) at Capital Normal University. Each clip began with a 4.5-s period in which the actor looked silently at the camera with either a neutral or a positive facial expression, followed by a 7.5-s period in which the actor, still gazing at the camera, spoke either a neutral statement (e.g., “you are a child with hair”) or a positive statement (e.g., “you are a cute child”) (see Weeks et al., 2013 for similar video clips). Crossing the valence of the facial expression with the valence of the oral statement yielded four conditions: two congruent (neutral expression/neutral statement, positive expression/positive statement) and two incongruent (neutral expression/positive statement, positive expression/neutral statement). Each condition included six videos created by six actors (three males and three females). The actors’ faces were normalized to a uniform size for presentation. All statements were recorded by the same speaker and then converted with Cool Edit Pro 2.1 into a male or a female voice to match each actor’s gender. Sixty-five students rated the emotional valence of the facial expressions and oral statements in the videos, and the percentage of agreement with the valence designated by the experimenters was calculated. Agreement exceeded 95% for both the facial expressions and the oral statements.
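The stimulus set is a full 2 (expression valence) × 2 (statement valence) crossing with six actors per cell. The sketch below enumerates that design and shows one way to compute a percentage of agreement for a single item. The actor labels and function names are hypothetical illustrations, not part of the study’s materials.

```python
from itertools import product

VALENCES = ("neutral", "positive")
# Hypothetical actor labels; the study used six actors (three male, three female).
ACTORS = [f"actor{i}" for i in range(1, 7)]

def build_design():
    """Cross expression valence x statement valence x actor (2 x 2 x 6 = 24 clips)."""
    clips = []
    for expression, statement, actor in product(VALENCES, VALENCES, ACTORS):
        clips.append({
            "expression": expression,
            "statement": statement,
            "actor": actor,
            # A clip is congruent when both cues carry the same valence.
            "congruent": expression == statement,
        })
    return clips

def percent_agreement(ratings, designated):
    """Percentage of raters whose valence label matches the experimenter-designated one."""
    matches = sum(1 for r in ratings if r == designated)
    return 100.0 * matches / len(ratings)

clips = build_design()
print(len(clips), sum(c["congruent"] for c in clips))
```

With four conditions of six clips each, half of the 24 clips are congruent and half are incongruent.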

Apparatus and Procedure

Participants’ eye movements were recorded with a Tobii X120 eye tracker at a sampling rate of 120 Hz. Children viewed the 24 video clips, presented in random order, on a 21.5-inch Samsung monitor. Each video clip measured 1280 × 720 pixels, and the viewing distance was 75 cm. Before the experiment, all participants completed a nine-point calibration. Each video clip was preceded by a central fixation cross for 2 s and then a blank screen for 2 s. All children were told orally: “After looking at each central fixation cross, you will see a video with voice. Please watch and listen carefully.” To ensure that the hearing-impaired participants understood the instructions, their language teacher helped the experimenter explain them. The mean retention rate of eye-movement data during video viewing was 92% for hearing-impaired children and 96% for hearing children.
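For converting between pixels and degrees of visual angle, the sketch below estimates the visual angle subtended by the 1280 × 720 clip at the 75-cm viewing distance, together with the 120-Hz sampling interval. It assumes a 16:9 panel with a native width of 1920 pixels, which the study does not report; the angles change if the monitor resolution differs.

```python
import math

def screen_width_cm(diagonal_inch, aspect_w=16, aspect_h=9):
    """Physical screen width from its diagonal (inches) and aspect ratio."""
    diagonal_cm = diagonal_inch * 2.54
    return diagonal_cm * aspect_w / math.hypot(aspect_w, aspect_h)

def visual_angle_deg(size_cm, distance_cm):
    """Full visual angle subtended by an object of size_cm centred at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

# ASSUMPTION: 16:9 panel with a native width of 1920 px (not reported in the study).
cm_per_px = screen_width_cm(21.5) / 1920
clip_w_deg = visual_angle_deg(1280 * cm_per_px, 75)
clip_h_deg = visual_angle_deg(720 * cm_per_px, 75)
sample_ms = 1000 / 120  # interval between gaze samples at 120 Hz
print(f"clip: {clip_w_deg:.1f} x {clip_h_deg:.1f} deg; sample interval {sample_ms:.2f} ms")
```

Under this assumption the clip spans roughly 24° × 14°, and a 100-ms minimum fixation corresponds to about 12 consecutive gaze samples.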

Data Analysis

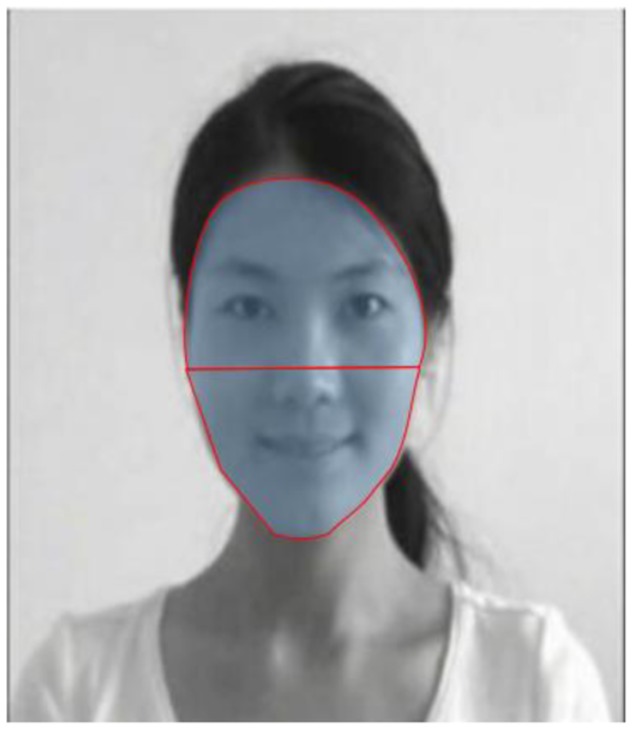

Fixations were identified with an algorithm using a dispersion-threshold criterion (maximum fixation radius = 1) and a duration-threshold criterion (minimum fixation duration = 100 ms) (Salvucci and Goldberg, 2000; Borgi et al., 2014). The upper and lower parts of the face, approximating the eye and mouth regions, respectively, were defined as areas of interest (AOIs) (see Figure 1 for an example). Trials with no fixations on the AOIs (6% of trials) were excluded from the analyses. We computed the number of saccades between the upper and lower parts of the face, the number of fixations within the AOIs, and the viewing time (i.e., the sum of the individual fixation durations) within the AOIs. The number of fixations and the viewing time were converted to percentages by dividing them by the total number of fixations and the total viewing time on the stimuli, respectively. Because the measures for the upper and lower parts of the face were highly interdependent, we mainly report the results for the upper part of the face.
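The fixation-detection step can be illustrated with the dispersion-threshold identification (I-DT) procedure described by Salvucci and Goldberg (2000). This is a minimal sketch, not the authors’ implementation: it uses the I-DT dispersion measure (range of x plus range of y) rather than a radius, assumes gaze samples are time-stamped in milliseconds with coordinates in degrees of visual angle, and passes a limit of 1 only as an illustrative reading of the reported “maximum fixation radius = 1”, whose unit the paper does not state. The names `idt_fixations` and `Fixation` are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    x: float  # centroid of the samples in the fixation
    y: float

    @property
    def duration_ms(self):
        return self.end_ms - self.start_ms

def dispersion(window):
    """I-DT dispersion: (max x - min x) + (max y - min y) over (t, x, y) samples."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))

def idt_fixations(samples, max_dispersion=1.0, min_duration_ms=100.0):
    """Detect fixations in time-ordered (time_ms, x, y) gaze samples."""
    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Grow an initial window until it spans at least the minimum duration.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j >= n:
            break  # not enough data left for another fixation
        if dispersion(samples[i:j + 1]) <= max_dispersion:
            # Extend the window while the dispersion stays within the limit.
            while j + 1 < n and dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            fixations.append(Fixation(
                start_ms=window[0][0],
                end_ms=window[-1][0],
                x=sum(s[1] for s in window) / len(window),
                y=sum(s[2] for s in window) / len(window),
            ))
            i = j + 1  # continue after the fixation
        else:
            i += 1  # drop the first sample and slide the window forward
    return fixations
```

Recomputing the dispersion each time the window grows keeps the code short at the cost of quadratic work per fixation, which is acceptable for 12-s trials sampled at 120 Hz.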

FIGURE 1.

An example of AOIs.
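Once fixations are identified, the three dependent measures can be derived for each trial. The sketch below is hypothetical in its details. It assumes a rectangular face box split horizontally at `split_y` into upper and lower AOIs (screen y increasing downward). It counts an inter-regional saccade whenever two consecutive on-face fixations fall in different AOIs, skipping fixations off the face. It also returns proportions, as tabulated in Table 3, rather than values multiplied by 100.

```python
def aoi_of(fix, face_box, split_y):
    """Return 'upper', 'lower', or None for a (duration_ms, x, y) fixation.

    face_box = (left, top, right, bottom) in screen pixels, y growing downward.
    """
    _, x, y = fix
    left, top, right, bottom = face_box
    if not (left <= x <= right and top <= y <= bottom):
        return None
    return "upper" if y < split_y else "lower"

def upper_face_metrics(fixations, face_box, split_y):
    """Proportion of fixations and of viewing time on the upper face, plus AOI switches."""
    labels = [aoi_of(f, face_box, split_y) for f in fixations]
    total_n = len(fixations)
    total_t = sum(f[0] for f in fixations)  # total viewing time on the stimulus
    upper_n = sum(1 for lab in labels if lab == "upper")
    upper_t = sum(f[0] for f, lab in zip(fixations, labels) if lab == "upper")
    # Count upper<->lower transitions between consecutive on-face fixations.
    face_seq = [lab for lab in labels if lab is not None]
    switches = sum(1 for a, b in zip(face_seq, face_seq[1:]) if a != b)
    return {
        "prop_fix_upper": upper_n / total_n if total_n else float("nan"),
        "prop_time_upper": upper_t / total_t if total_t else float("nan"),
        "inter_regional_saccades": switches,
    }
```

Trials in which no fixation lands on either AOI would still return numbers here, so the exclusion rule described in the text has to be applied separately.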

We analyzed two periods separately: the 4.5-s period with only the facial expression and the 7.5-s period after the oral statement began. For each period, three repeated-measures ANOVAs were conducted on (a) the number of saccades between the upper and lower parts of the face, (b) the percentage of fixations within the upper part of the face, and (c) the percentage of viewing time within the upper part of the face, with group as a between-subjects factor and the emotional valence of the facial expression and of the oral statement as within-subjects factors. Age and gender were entered as covariates in all ANOVAs.
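The error degrees of freedom follow from the design: 78 children minus two groups minus two covariates leaves 74, which matches the reported F(1,74). The sketch below recovers partial eta squared from an F value as F·df1 / (F·df1 + df2). The values it yields are within about 0.001 of the reported η2. This suggests, but does not confirm, that the paper reports partial eta squared.

```python
def error_df(n_participants, n_groups, n_covariates):
    """Error df for an effect in a group x within-subjects design with covariates.

    With two-level within-subjects factors, the within-subjects error df
    equals the between-subjects error df.
    """
    return n_participants - n_groups - n_covariates

def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic."""
    return f_value * df_effect / (f_value * df_effect + df_error)

df2 = error_df(78, 2, 2)  # 78 children, 2 groups, age and gender as covariates
for label, f in [("saccades, group", 4.169),
                 ("fixations, three-way", 5.055),
                 ("viewing time, three-way", 6.202)]:
    print(f"{label}: F(1,{df2}) = {f} -> partial eta^2 = {partial_eta_squared(f, 1, df2):.3f}")
```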

Results

The Number of Saccades between the Upper and Lower Parts of the Face

Table 2 illustrates the number of saccades between the upper and lower parts of the face for each condition. During the period with the facial expression only, no significant main effects or interactions were found (ps > 0.05).

Table 2.

The mean (and standard deviations) for the number of saccades between the upper and lower parts of the face in each condition.

| Period | Group | NE-NS | NE-PS | PE-NS | PE-PS |
|---|---|---|---|---|---|
| Facial expression | Hearing-impaired | 1.7 (1.4) | 1.7 (1.3) | 1.8 (1.4) | 1.6 (1.2) |
| Facial expression | Hearing | 1.9 (1.5) | 1.9 (1.1) | 2.2 (1.2) | 2.1 (1.3) |
| Oral statement | Hearing-impaired | 1.9 (1.4) | 1.9 (1.4) | 1.8 (1.3) | 1.6 (1.3) |
| Oral statement | Hearing | 2.5 (1.6) | 2.5 (1.6) | 2.6 (1.7) | 2.1 (1.4) |

NE, neutral expression; NS, neutral statement; PE, positive expression; PS, positive statement.

During the period with the oral statement, we observed a significant group effect, F(1,74) = 4.169, p = 0.045, η2 = 0.054, indicating that hearing children produced more inter-regional saccades as compared to hearing-impaired children. The other main effects and interactions during the period with the oral statement were not significant (ps > 0.05).

The Percentage for the Number of Fixations within the Upper Part of the Face

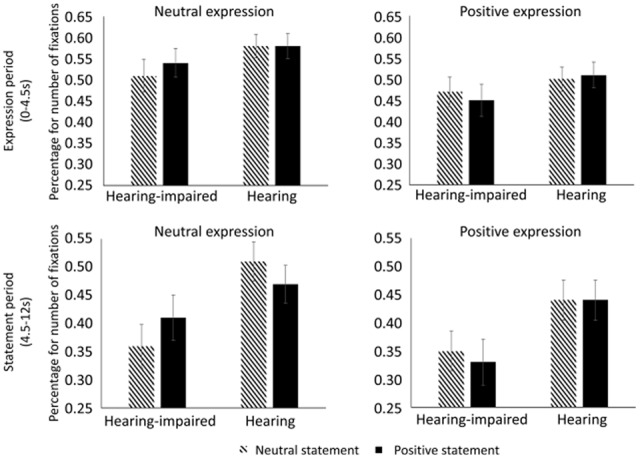

Figure 2 illustrates the percentage for the number of fixations within the upper part of the face for each condition. During the period with the facial expression only, no significant main effects or interactions were found (ps > 0.05).

FIGURE 2.

Percentage of fixations within the upper part of the face during the period with the facial expression only (top panel) and the period with the oral statement (bottom panel) in each condition. The ordinate (Y axis) shows the number of fixations within the upper part of the face divided by the total number of fixations on the stimuli.

During the period with the oral statement, we found that hearing-impaired children fixated less on the upper part of the face than hearing children, F(1,74) = 4.261, p = 0.043, η2 = 0.055. In addition, there was a significant three-way interaction among group, valence of expression, and valence of statement, F(1,74) = 5.055, p = 0.028, η2 = 0.065. Further analyses indicated that hearing-impaired children produced fewer fixations on the upper part of the face in the neutral expression/neutral statement condition relative to the neutral expression/positive statement condition (p = 0.033), whereas hearing children produced numerically more fixations in the neutral expression/neutral statement condition relative to the neutral expression/positive statement condition (p = 0.055). There was no significant difference between the positive expression/neutral statement condition and the positive expression/positive statement condition for either group (ps > 0.05). The other main effects and interactions during the period with the oral statement were not significant (ps > 0.05).

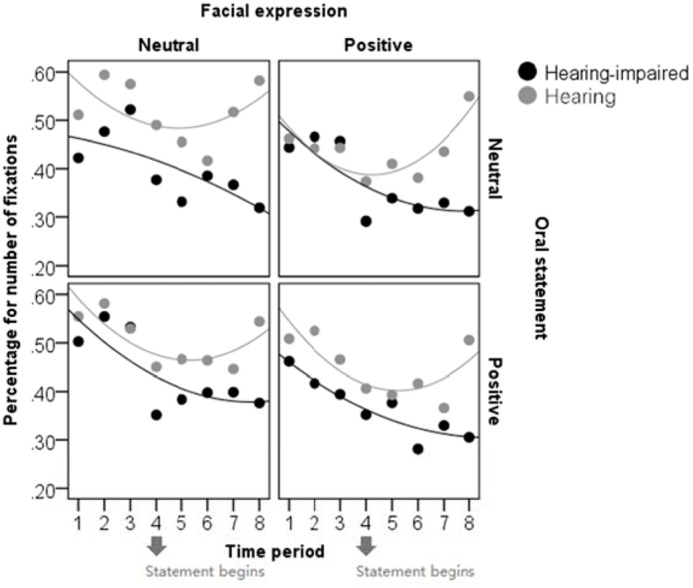

To illustrate how attention allocation changed over the course of a trial, we divided each 12-s trial into eight 1.5-s time periods and plotted the percentage of fixations within the upper part of the face against time period, separately by group, valence of facial expression, and valence of oral statement (see Figure 3). Each scatter plot was fitted with a quadratic curve. Consistent with the ANOVA results, the plots showed that, after the oral statement was presented, hearing children looked more at the upper part of the face than hearing-impaired children did, and that hearing-impaired children looked less at the upper part of the face in the neutral expression/neutral statement condition than in the neutral expression/positive statement condition.

FIGURE 3.

The scatter plots between time period and percentage for number of fixations in each condition. The abscissa (X axis) presents eight time periods with 1.5 s for each one. The ordinate (Y axis) presents the quotient of the fixation number within the upper part of the face divided by the total fixation number on the stimuli.
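The time-course analysis behind Figure 3 can be sketched as follows: assign each fixation to one of eight 1.5-s bins, compute the share of upper-face fixations per bin, and fit a quadratic by least squares. Assigning a fixation to the bin that contains its onset and numbering the bins 1 to 8 on the x axis are assumptions; the paper does not say how fixations spanning two bins were handled. The fit solves the 3 × 3 normal equations directly so the example needs only the standard library.

```python
def bin_upper_share(fixations, trial_ms=12000.0, bin_ms=1500.0):
    """Share of upper-face fixations per time bin.

    fixations: (onset_ms, is_upper) pairs with onsets relative to clip start.
    Empty bins are returned as NaN.
    """
    n_bins = int(trial_ms // bin_ms)  # 12 s / 1.5 s = 8 bins
    counts = [0] * n_bins
    upper = [0] * n_bins
    for onset, is_upper in fixations:
        b = int(onset // bin_ms)
        if 0 <= b < n_bins:
            counts[b] += 1
            upper[b] += int(is_upper)
    return [u / c if c else float("nan") for u, c in zip(upper, counts)]

def fit_quadratic(xs, ys):
    """Least-squares fit of y = a + b*x + c*x^2; returns (a, b, c)."""
    s = [sum(x ** k for x in xs) for k in range(5)]               # sums of x^k
    t = [sum((x ** k) * y for x, y in zip(xs, ys)) for k in range(3)]  # sums of x^k * y
    m = [[s[0], s[1], s[2], t[0]],
         [s[1], s[2], s[3], t[1]],
         [s[2], s[3], s[4], t[2]]]
    # Gaussian elimination with partial pivoting on the augmented matrix.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for k in range(col, 4):
                m[r][k] -= factor * m[col][k]
    coef = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):  # back substitution
        coef[r] = (m[r][3] - sum(m[r][k] * coef[k] for k in range(r + 1, 3))) / m[r][r]
    return tuple(coef)

def fit_binned_curve(shares):
    """Fit a quadratic to the non-empty bins, using bin numbers 1..8 as x."""
    points = [(i + 1, s) for i, s in enumerate(shares) if s == s]  # s == s is False for NaN
    xs, ys = zip(*points)
    return fit_quadratic(xs, ys)
```

A quadratic needs at least three non-empty bins; with fewer points the normal equations are singular.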

The Percentage for Viewing Time within the Upper Part of the Face

As shown in Table 3, the percentage of viewing time showed a pattern generally similar to that of the percentage of fixations. During the period with the facial expression only, no significant main effects or interactions were found (ps > 0.05).

Table 3.

The mean (and standard deviations) for the percentage of viewing time within the upper part of the face in each condition.

| Period | Group | NE-NS | NE-PS | PE-NS | PE-PS |
|---|---|---|---|---|---|
| Facial expression | Hearing-impaired | 0.50 (0.25) | 0.55 (0.22) | 0.47 (0.22) | 0.44 (0.24) |
| Facial expression | Hearing | 0.61 (0.18) | 0.61 (0.19) | 0.51 (0.21) | 0.53 (0.23) |
| Oral statement | Hearing-impaired | 0.33 (0.25) | 0.37 (0.28) | 0.32 (0.24) | 0.30 (0.26) |
| Oral statement | Hearing | 0.49 (0.25) | 0.46 (0.24) | 0.41 (0.25) | 0.45 (0.26) |

NE, neutral expression; NS, neutral statement; PE, positive expression; PS, positive statement.

During the period with the oral statement, hearing-impaired children spent less time on the upper part of the face than hearing children, F(1,74) = 4.652, p = 0.034, η2 = 0.060. In addition, there was a significant three-way interaction among group, valence of expression, and valence of statement, F(1,74) = 6.202, p = 0.015, η2 = 0.078. Further analyses indicated that hearing-impaired children showed a nonsignificant trend toward shorter viewing times on the upper part of the face in the neutral expression/neutral statement condition than in the neutral expression/positive statement condition (p = 0.085), whereas hearing children showed no significant difference in viewing time between these two conditions (p = 0.163). There was no significant difference between the positive expression/neutral statement condition and the positive expression/positive statement condition for either group (ps > 0.05). The other main effects and interactions during the period with the oral statement were not significant (ps > 0.05).

In addition, neither the participants’ age nor the age of using hearing aids was correlated with the eye-movement measures (the percentage of fixations and the percentage of viewing time within the upper part of the face; ps > 0.05), suggesting that the gaze effects were not modulated by these variables.

Discussion

The results showed that children with HAs have different gaze patterns in emotion perception relative to hearing children. Although there was no group effect or experimental effect on gaze patterns when there was only a facial expression, children with HAs produced fewer fixations and shorter viewing time on the upper part of the face and fewer inter-regional saccades than hearing children after the oral statement was presented. In addition, children with HAs produced fewer fixations and shorter viewing time on the upper part of the face for the neutral expression/neutral statement condition relative to the neutral expression/positive statement condition. These results indicated that children with HAs were less likely to explore different parts of the face and preferred to look at the lower part of the face when there was an oral statement. This result is consistent with previous studies on deaf people without assistive devices (Agrafiotis et al., 2003; Muir and Richardson, 2005; Letourneau and Mitchell, 2011). Although young children with HAs are encouraged and trained to use verbal-auditory communication, their gaze patterns during social interactions are still different from hearing controls.

According to the scatter plots, hearing children’s attention was also drawn to the lower part of the face when the oral statement began, but they subsequently looked back at the upper part of the face. This typical gaze pattern suggests that eye contact plays an important role in emotion perception and social interaction. A preference for faces making eye contact is present from birth (Farroni et al., 2002). Gaze behavior and eye contact are conspicuous aspects of human interaction, and the eye region is often used as a cue for attributing emotional and mental states to others (Kleinke, 1986), an ability referred to as “theory of mind” (Premack and Woodruff, 1978). Early social experience, in turn, affects the development of eye gaze processing (Corkum and Moore, 1998; Senju and Csibra, 2008; Senju et al., 2015). Because children with HAs experienced auditory deprivation in early childhood, they may have had fewer of the oral-communication-based social interactions that are necessary for understanding emotional expressions and situations (Rieffe and Terwogt, 2000). To compensate for limited oral communication, children with HAs may adopt a different gaze strategy to integrate the visual cues that help them understand the minds of others. For instance, hearing-impaired children might look at the lower face in an attempt to lip-read, that is, to process speech from the visible movements of the mouth (Campbell et al., 1986). Lipreading can support phonological processing when hearing is lacking (Kyle et al., 2016). Hearing-impaired people may also take in upper-face information through peripheral vision, as previous studies have indicated that deaf people have better peripheral vision (Codina et al., 2017).
However, even if children with HAs register upper-face information through peripheral vision, peripheral processing is inefficient for detecting the fine details of the eyes. The adapted gaze pattern of children with HAs, which arises from language delay and limited oral communication, can therefore reduce their opportunities to process detailed information from the eyes. Because eye contact is very important for attributing emotional states to others, this altered gaze pattern may in turn delay the development of emotion perception.

Interestingly, children with HAs looked more at the upper part of the face when a positive oral statement followed a neutral facial expression than when a neutral oral statement followed a neutral facial expression. This difference between the visual-auditory congruent and incongruent conditions indicates that children with HAs attend to both auditory and visual information when interpreting emotional expressions. When the facial and voice cues were incongruent, children with HAs directed their gaze to the upper part of the face for confirmation. There has been debate on whether people with HAs or CIs can make adequate use of auditory information in response to incongruent visual-auditory stimuli (Schorr and Knudsen, 2005; Zupan and Sussman, 2009). Most et al. (1993) and Most and Aviner (2009) demonstrated that hearing-impaired adolescents with HAs or CIs relied on visual rather than auditory information in perceiving emotional expressions. With a different paradigm and younger participants, the present study found that hearing-impaired children with HAs could use both auditory and visual information to interpret emotional expressions. However, children with HAs may not be skilled at matching auditory and visual information, which results in gaze behavior that differs from that of hearing children. When a neutral oral statement followed a neutral facial expression, children with HAs did not move their gaze back to the speaker’s eyes for further confirmation. This lack of eye contact may pose a risk to the development of emotion perception.

Much of the literature on children with CIs or HAs has focused on the effectiveness of these devices for auditory development and speech perception (Sharma et al., 2002; Lee et al., 2010). More recently, researchers have begun to explore the broader effects of CI or HA use on children’s emotional and social development. These two research fields are not independent of each other. For instance, improved speech in children with CIs or HAs seems to support language-based concepts related to emotion (Rieffe and Terwogt, 2000; Dyck and Denver, 2003; Peters et al., 2009). The present study further demonstrated that the gaze patterns of children with HAs on facial expressions deviated from those of hearing children when additional cues were presented in the form of speech. These results indicate that speech perception and emotion perception interact in children with HAs. The absence of reliable effects during the period with only a static facial expression implies that the gaze deviation of hearing-impaired children differs from that seen in autism spectrum disorder and social anxiety disorder. That is, the gaze avoidance of hearing-impaired people may have more complex causes related to speech perception. Notably, because we used only two types of facial expressions (neutral and positive) and a short period with a static facial expression, whether hearing-impaired people show different gaze patterns for static facial expressions remains open to further investigation.

Much of the research on the recognition of emotional facial expressions has been conducted in ways that minimize contextual information, but an emerging literature has shown that context is encoded and required during emotion perception (e.g., Barrett and Kensinger, 2010). In daily life, a completely context-free presentation of facial expressions is impossible, and facial muscle movements alone are insufficient for perceiving internal emotion. By around 3 years of age, children can express their emotions orally and understand the situations and circumstances surrounding emotions (Denham et al., 1994; Brown and Dunn, 1996). Although the present study emphasized the linguistic context in the perception of emotional facial expressions, observers can attribute emotions more accurately by using non-verbal social context such as postures, gestures, and a priori knowledge about the situation and the protagonist (Wiefferink et al., 2013; Hess and Hareli, 2015). Non-verbal social context might be particularly useful in emotion perception for hearing-impaired individuals. Because integrating facial expressions with various kinds of contextual information would help young children interpret others’ emotions, the role of context in emotion perception needs further investigation.

Owing to the absence of auditory signals, cortical reorganization of the auditory and visual systems in hearing-impaired individuals has frequently been observed in previous studies that did not consider emotional aspects (e.g., Doucet et al., 2006; Campbell and Sharma, 2016). Beyond the auditory and visual systems, more extensive brain regions are involved in face-voice emotional matching. For instance, the posterior superior temporal sulcus is considered a neural basis for processing gaze, facial expression, and lipreading (Haxby et al., 2000; Nummenmaa et al., 2009) and a “multisensory” region for face-voice integration (Watson et al., 2014). Limbic structures, such as the amygdala, are associated with emotional processing (Dolan et al., 2001; Klasen et al., 2011). In the present study, the altered gaze patterns of hearing-impaired children in the auditory-visual perception of emotion suggest that cortical reorganization might also occur among sensory-, gaze-, and emotion-related brain regions. Investigating the interactions among these regions would provide insight into the cortical mechanisms underlying reduced eye gaze in hearing-impaired children.

Conclusion

The present study revealed distinctive gaze patterns in the auditory-visual perception of emotion in children with assistive devices. While hearing children paid more attention to the upper part of the face, children with HAs paid more attention to the lower part of the face after the oral statement was presented, especially in the neutral facial expression/neutral oral statement condition. Early rehabilitation for hearing-impaired children with assistive devices should aim to prevent the negative causes and consequences of overlooking the upper part of the face, especially in the neutral expression/neutral statement condition.

Ethics Statement

All procedures performed in the study involving human participants were conducted in accordance with the ethical standards of the institutional and national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. Written informed consent was obtained from all participants included in the study.

Author Contributions

YW: substantial contributions to the conception or design of the work. WZ: drafting the work or revising it critically for important intellectual content and final approval of the version to be published. YC: acquisition and analysis of data for the work. XB: analysis and interpretation of data for the work.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

Funding. This research was funded by the National Natural Science Foundation of China (grant no. 31371058 to YW), the State Administration of Press, Publication, Radio, Film and Television of the People’s Republic of China (grant no. GD1608 to YW), the National Natural Science Foundation of China (grant no. 31500886 to WZ), and the Research Fund for the Talented Person of Beijing City (grant no. 2014000020124G238 to WZ).

References

- Adams R. B., Nelson A. J. (2016). “Eye behavior and gaze,” in APA Handbook of Nonverbal Communication, eds Matsumoto D., Hwang H. C., Frank M. G. (Washington, DC: American Psychological Association), 335–362. doi: 10.1037/14669-013

- Agrafiotis D., Canagarajah N., Bull D. R., Dye M. (2003). Perceptually optimised sign language video coding based on eye tracking analysis. Electr. Lett. 39 1703–1705. doi: 10.1049/el:20031140

- Aviezer H., Hassin R. R., Ryan J., Grady C., Susskind J., Anderson A., et al. (2008). Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychol. Sci. 19 724–732. doi: 10.1111/j.1467-9280.2008.02148.x

- Banziger T., Grandjean D., Scherer K. R. (2009). Emotion recognition from expressions in face, voice, and body: the multimodal emotion recognition test (MERT). Emotion 9 691–704. doi: 10.1037/a0017088

- Barrett L. F., Kensinger E. A. (2010). Context is routinely encoded during emotion perception. Psychol. Sci. 21 595–599. doi: 10.1177/0956797610363547

- Bergeson T. R., Houston D. M., Miyamoto R. T. (2010). Effects of congenital hearing loss and cochlear implantation on audiovisual speech perception in infants and children. Restor. Neurol. Neurosci. 28 157–165. doi: 10.3233/RNN-2010-0522

- Borgi M., Cogliati-Dezza I., Brelsford V., Meints K., Cirulli F. (2014). Baby schema in human and animal faces induces cuteness perception and gaze allocation in children. Front. Psychol. 5:411. doi: 10.3389/fpsyg.2014.00411

- Brown J. R., Dunn J. (1996). Continuities in emotion understanding from three to six years. Child Dev. 67 789–802. doi: 10.2307/1131861

- Campbell J., Sharma A. (2016). Visual cross-modal re-organization in children with cochlear implants. PLOS ONE 11:e0147793. doi: 10.1371/journal.pone.0147793

- Campbell R., Landis T., Regard M. (1986). Face recognition and lipreading: a neurological dissociation. Brain 109 509–521. doi: 10.1093/brain/109.3.509

- Codina C. J., Pascalis O., Baseler H. A., Levine A. T., Buckley D. (2017). Peripheral visual reaction time is faster in deaf adults and British Sign Language interpreters than in hearing adults. Front. Psychol. 8:50. doi: 10.3389/fpsyg.2017.00050

- Corkum V., Moore C. (1998). The origins of joint visual attention in infants. Dev. Psychol. 34 28–38. doi: 10.1037/0012-1649.34.1.28

- Denham S. A., Zoller D., Couchoud E. A. (1994). Socialization of preschoolers’ emotion understanding. Dev. Psychol. 30 928–936. doi: 10.1037/0012-1649.30.6.928

- Dolan R. J., Morris J. S., de Gelder B. (2001). Crossmodal binding of fear in voice and face. Proc. Natl. Acad. Sci. U.S.A. 98 10006–10010. doi: 10.1073/pnas.171288598

- Doucet M. E., Bergeron F., Lassonde M., Ferron P., Lepore F. (2006). Cross-modal reorganization and speech perception in cochlear implant users. Brain 129 3376–3383. doi: 10.1093/brain/awl264

- Dunn L. M., Dunn D. M. (2007). PPVT-IV: Peabody Picture Vocabulary Test, 4th Edn. Bloomington, MN: Pearson Assessments.

- Dyck M. J., Denver E. (2003). Can the emotion recognition ability of deaf children be enhanced? A pilot study. J. Deaf Stud. Deaf Educ. 8 348–356. doi: 10.1093/deafed/eng019

- Dyck M. J., Farrugia C., Shochet I. M., Holmesbrown M. (2004). Emotion recognition/understanding ability in hearing or vision-impaired children: do sounds, sights, or words make the difference? J. Child Psychol. Psychiatry 45 789–800. doi: 10.1111/j.1469-7610.2004.00272.x

- Ekman P., Friesen W. V. (1978). Facial action coding system: a technique for the measurement of facial movement. Riv. Psichiatr. 47 126–138.

- Falck-Ytter T., Carlstrom C., Johansson M. (2015). Eye contact modulates cognitive processing differently in children with autism. Child Dev. 86 37–47. doi: 10.1111/cdev.12273

- Farroni T., Csibra G., Simion F., Johnson M. H. (2002). Eye contact detection in humans from birth. Proc. Natl. Acad. Sci. U.S.A. 99 9602–9605. doi: 10.1073/pnas.152159999

- Geers A. E., Davidson L. S., Uchanski R. M., Nicholas J. G. (2013). Interdependence of linguistic and indexical speech perception skills in school-age children with early cochlear implantation. Ear Hear. 34 562–574. doi: 10.1097/AUD.0b013e31828d2bd6

- Gori M., Chilosi A., Forli F., Burr D. (2017). Audio-visual temporal perception in children with restored hearing. Neuropsychologia 99 350–359. doi: 10.1016/j.neuropsychologia.2017.03.025

- Gray C., Hosie J., Russell P., Ormel E. A. (2001). “Emotional development in deaf children: understanding facial expressions, display rules and theory of mind,” in Context, Cognition, and Deafness, eds Marschark M., Clark M. D., Karchmer M. (Washington, DC: Gallaudet University Press), 135–160.

- Haxby J. V., Hoffman E. A., Gobbini M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4 223–233. doi: 10.1016/S1364-6613(00)01482-0

- Hess U., Hareli S. (2015). “The influence of context on emotion recognition in humans,” in Proceedings of 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG) Vol. 3 (Piscataway, NJ: IEEE), 1–6. doi: 10.1109/FG.2015.7284842

- Hopyan-Misakyan T. M., Gordon K. A., Dennis M., Papsin B. C. (2009). Recognition of affective speech prosody and facial affect in deaf children with unilateral right cochlear implants. Child Neuropsychol. 15 136–146. doi: 10.1080/09297040802403682

- Horley K., Williams L. M., Gonsalvez C., Gordon E. (2003). Social phobics do not see eye to eye: a visual scanpath study of emotional expression processing. J. Anxiety Disord. 17 33–44. doi: 10.1016/S0887-6185(02)00180-9

- Horley K., Williams L. M., Gonsalvez C., Gordon E. (2004). Face to face: visual scanpath evidence for abnormal processing of facial expressions in social phobia. Psychiatry Res. 127 43–53. doi: 10.1016/j.psychres.2004.02.016

- Hosie J. A., Gray C. D., Russell P. A., Scott C., Hunter N. (1998). The matching of facial expressions by deaf and hearing children and their production and comprehension of emotion labels. Motiv. Emot. 22 293–313. doi: 10.1023/A:1021352323157

- Klasen M., Kenworthy C. A., Mathiak K. A., Kircher T. T., Mathiak K. (2011). Supramodal representation of emotions. J. Neurosci. 31 13635–13643. doi: 10.1523/JNEUROSCI.2833-11.2011

- Kleinke C. L. (1986). Gaze and eye contact: a research review. Psychol. Bull. 100 78–100. doi: 10.1037/0033-2909.100.1.78

- Klin A., Jones W., Schultz R., Volkmar F., Cohen D. (2002). Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch. Gen. Psychiatry 59 809–816. doi: 10.1001/archpsyc.59.9.809

- Kral A. (2007). Unimodal and cross-modal plasticity in the ‘deaf’ auditory cortex. Int. J. Audiol. 46 479–493. doi: 10.1080/14992020701383027

- Kyle F. E., Campbell R., MacSweeney M. (2016). The relative contributions of speechreading and vocabulary to deaf and hearing children’s reading ability. Res. Dev. Disabil. 48 13–24. doi: 10.1016/j.ridd.2015.10.004

- Lee Y. M., Kim L. S., Jeong S. W., Kim J. S., Chung S. H. (2010). Performance of children with mental retardation after cochlear implantation: speech perception, speech intelligibility, and language development. Acta Otolaryngol. 130 924–934. doi: 10.3109/00016480903518026

- Letourneau S. M., Mitchell T. V. (2011). Gaze patterns during identity and emotion judgments in hearing adults and deaf users of American Sign Language. Perception 40 563–575. doi: 10.1068/p6858

- Liang Q., Brendan M. (2013). Enter the dragon – China’s journey to the hearing world. Cochlear Implants Int. 14(Suppl. 1), S26–S31. doi: 10.1179/1467010013Z.00000000080

- Lopes P. N., Brackett M. A., Nezlek J. B., Schutz A., Sellin I., Salovey P. (2004). Emotional intelligence and social interaction. Pers. Soc. Psychol. Bull. 30 1018–1034. doi: 10.1177/0146167204264762

- Luciano J. M. (2001). Revisiting Patterson’s paradigm: gaze behaviors in deaf communication. Am. Ann. Deaf 146 39–44. doi: 10.1353/aad.2012.0092

- Mayer J. D., Salovey P., Caruso D. R. (2004). Emotional intelligence: theory, findings, and implications. Psychol. Inq. 15 197–215. doi: 10.1207/s15327965pli1503_02

- Most T., Aviner C. (2009). Auditory, visual, and auditory-visual perception of emotions by individuals with cochlear implants, hearing aids, and normal hearing. J. Deaf Stud. Deaf Educ. 14 449–464. doi: 10.1093/deafed/enp007

- Most T., Michaelis H. (2011). Auditory, visual and auditory-visual perception of emotions by young children with hearing loss in comparison to children with normal hearing. J. Speech Lang. Hear. Res. 55 1148–1162. doi: 10.1044/1092-4388(2011/11-0060)

- Most T., Weisel A., Zaychik A. (1993). Auditory, visual and auditory-visual identification of emotions by hearing and hearing-impaired adolescents. Br. J. Audiol. 27 247–253. doi: 10.3109/03005369309076701

- Muir L. J. G., Richardson I. E. (2005). Perception of sign language and its application to visual communications for deaf people. J. Deaf Stud. Deaf Educ. 10 390–401. doi: 10.1093/deafed/eni037

- Nakano T., Ota H., Kato N., Kitazawa S. (2010). Deficit in visual temporal integration in autism spectrum disorders. Proc. R. Soc. B Biol. Sci. 277 1027–1030. doi: 10.1098/rspb.2009.1713

- Nummenmaa L., Passamonti L., Rowe J., Engell A. D., Calder A. J. (2009). Connectivity analysis reveals a cortical network for eye gaze perception. Cereb. Cortex 20 1780–1787. doi: 10.1093/cercor/bhp244

- O’Donnell C., Bruce V. (2001). Familiarisation with faces selectively enhances sensitivity to changes made to the eyes. Perception 30 755–764. doi: 10.1068/p3027

- Peters K., Remmel E., Richards D. (2009). Language, mental state vocabulary, and false belief understanding in children with cochlear implants. Lang. Speech Hear. Serv. Schools 40 245–255. doi: 10.1044/0161-1461(2009/07-0079)

- Premack D., Woodruff G. (1978). Does the chimpanzee have a theory of mind? Behav. Brain Sci. 1 515–526. doi: 10.1017/S0140525X00076512

- Riby D. M., Hancock P. J. B. (2008). Eyes on autism. Am. Sci. 96 465–465.

- Rieffe C., Terwogt M. M. (2000). Deaf children’s understanding of emotions: desires take precedence. J. Child Psychol. Psychiatry 41 601–608. doi: 10.1111/1469-7610.00647

- Rosenberg E. L., Ekman P. (1994). Coherence between expressive and experiential systems in emotion. Cogn. Emot. 8 201–229. doi: 10.1080/02699939408408938

- Rouger J., Fraysse B., Deguine O., Barone P. (2008). McGurk effects in cochlear-implanted deaf subjects. Brain Res. 1188 87–99. doi: 10.1016/j.brainres.2007.10.049

- Salvucci D. D., Goldberg J. H. (2000). “Identifying fixations and saccades in eye-tracking protocols,” paper presented at the Eye Tracking Research & Application Symposium, ETRA 2000, Palm Beach Gardens, FL. doi: 10.1145/355017.355028

- Scherer K. R. (2003). Vocal communication of emotion: a review of research paradigms. Speech Commun. 40 227–256. doi: 10.1016/S0167-6393(02)00084-5

- Schorr E. A., Knudsen E. I. (2005). Auditory-visual fusion in speech perception in children with cochlear implants. Proc. Natl. Acad. Sci. U.S.A. 102 18748–18750. doi: 10.1073/pnas.0508862102

- Schurgin M. W., Nelson J., Iida S., Ohira H., Franconeri S. L. (2014). Eye movements during emotion recognition in faces. J. Vis. 14 1–16. doi: 10.1167/14.13.14

- Schyns P. G., Bonnar L., Gosselin F. (2002). Show me the features! Understanding recognition from the use of visual information. Psychol. Sci. 13 402–409. doi: 10.1111/1467-9280.00472

- Senju A., Csibra G. (2008). Gaze following in human infants depends on communicative signals. Curr. Biol. 18 668–671. doi: 10.1016/j.cub.2008.03.059

- Senju A., Vernetti A., Ganea N., Hudry K., Tucker L., Charman T., et al. (2015). Early social experience affects the development of eye gaze processing. Curr. Biol. 25 3086–3091. doi: 10.1016/j.cub.2015.10.019

- Sharma A., Dorman M. F., Spahr A. J. (2002). A sensitive period for the development of the central auditory system in children with cochlear implants: implications for age of implantation. Ear Hear. 23 532–539. doi: 10.1097/00003446-200212000-00004

- Smith M. L., Cottrell G. W., Gosselin F., Schyns P. G. (2005). Transmitting and decoding facial expressions. Psychol. Sci. 16 184–189. doi: 10.1111/j.0956-7976.2005.00801.x

- Vinette C., Gosselin F., Schyns P. G. (2004). Spatio-temporal dynamics of face recognition in a flash: it’s in the eyes. Cogn. Sci. 28 289–301. doi: 10.1207/s15516709cog2802_8

- Volkova A., Trehub S. E., Schellenberg E. G., Papsin B. C., Gordon K. A. (2013). Children with bilateral cochlear implants identify emotion in speech and music. Cochlear Implants Int. 14 80–91. doi: 10.1179/1754762812Y.0000000004

- Wang D. J., Trehub S. E., Volkova A., Lieshout P. V. (2013). Child implant users’ imitation of happy- and sad-sounding speech. Front. Psychol. 4:351. doi: 10.3389/fpsyg.2013.00351

- Wang Y., Su Y., Fang P., Zhou Q. (2011). Facial expression recognition: can preschoolers with cochlear implants and hearing aids catch it? Res. Dev. Disabil. 32 2583–2588. doi: 10.1016/j.ridd.2011.06.019

- Watson R., Latinus M., Noguchi T., Garrod O., Crabbe F., Belin P. (2014). Crossmodal adaptation in right posterior superior temporal sulcus during face-voice emotional integration. J. Neurosci. 34 6813–6821. doi: 10.1523/JNEUROSCI.4478-13.2014

- Weeks J. W., Howell A. N., Goldin P. R. (2013). Gaze avoidance in social anxiety disorder. Depress. Anxiety 30 749–756. doi: 10.1002/da.22146

- Wiefferink C. H., Rieffe C., Ketelaar L., De Raeve L., Frijns J. H. M. (2013). Emotion understanding in deaf children with a cochlear implant. J. Deaf Stud. Deaf Educ. 18 175–186. doi: 10.1093/deafed/ens042

- Ziv M., Most T., Cohen S. (2013). Understanding of emotions and false beliefs among hearing children versus deaf children. J. Deaf Stud. Deaf Educ. 18 161–174. doi: 10.1093/deafed/ens073

- Zupan B. (2013). “The role of audition in audiovisual perception of speech and emotion in children with hearing loss,” in Integrating Face and Voice in Person Perception, eds Belin P., Campanella S., Ethofer T. (New York, NY: Springer), 299–324.

- Zupan B., Sussman J. E. (2009). Auditory preferences of young children with and without hearing loss for meaningful auditory-visual compound stimuli. J. Commun. Disord. 42 381–396. doi: 10.1016/j.jcomdis.2009.04.002