Abstract

The function of empathic concern in processing pain is a product of evolutionary adaptation. Focusing on 5- to 6-year-old children, the current study employed eye-tracking in an odd-one-out task (searching for the emotional facial expression among neutral expressions, N = 47) and a pain evaluation task (evaluating the pain intensity of a facial expression, N = 42) to investigate the relationship between children’s empathy and their behavioral and perceptual responses to facial expressions of pain. We found that children detected painful expressions faster than other expressions (angry, sad, and happy); that children high in empathy performed better when searching for facial expressions of pain and gave higher ratings of pain intensity; and that ratings of pain in painful expressions were best predicted by a self-reported empathy score. As for eye-tracking during pain detection, children fixated on pain more quickly, less frequently, and for shorter times. Among facial clues, children fixated on the eyes and mouth more quickly, more frequently, and for longer times. These results imply that painful facial expressions differ from other expressions in a cognitive sense, and that children’s empathy might facilitate their search and lead them to perceive observed pain as more intense.

Keywords: child, pain, empathy, facial expression, eye tracking

Introduction

A human face expressing pain may indicate the need for help or the presence of threat, to which observers should react as quickly and accurately as possible; this highlights the importance of the capacity to “read” pain. Studies have suggested important factors that influence our reading of, as well as our reactions to, pain, among which an essential one is empathy (Goubert et al., 2005). Empathy refers to the process of perceiving and being responsive to others’ emotions, which enables us to react appropriately (Decety et al., 2016). Studies have found that higher empathy was associated with better performance and deeper involvement in a task of facial expression recognition (Choi and Watanuki, 2014). Despite the findings on the relationship between empathy and reading of pain in adults, little attention has been given to children. Empathy can be divided into a cognitive and an affective facet, and children’s empathy mostly takes the form of affective empathy, such as emotion contagion and emotion sharing (de Waal, 2008; Decety, 2010; Huang and Su, 2012). Here, we used eye-tracking in combination with a computerized odd-one-out task and a pain evaluation task to investigate the relationship between children’s empathy and their perception and evaluation of facial expressions of pain.

Indeed, facial expressions are one of the most important visual signals of pain (Kunz et al., 2012; Schott, 2015). Previous research has suggested that pain is a highly affective state that is accompanied by typical facial expressions (Williams, 2002; Goubert et al., 2005). As evolutionary psychologists suggest, pain may involve two functions. Pain attracts the observer’s attention and evokes approaching or avoiding behaviors in him or her (Williams, 2002); for the signal sender, pain signals threat and alerts the observer, and may evoke empathic and pro-social behaviors in the observer (Decety, 2010; Prkachin, 2011). The current research was intended to provide insight as to how children decoded visually expressed pain as a distinct emotion.

First, the facial expression of pain is characterized by some facial clues that could be detected visually and contribute to the evaluation of pain (Deyo et al., 2004; Prkachin, 2011). Deyo et al. (2004) found that 5- to 6-year-old children could discriminate facial expressions of pain from neutral ones, and children were able to make better use of facial clues for this discrimination as they grew up. Other researchers also found that 3- and 12-year-old children could detect pain in others and assess pain intensity indexed by facial expression (Grégoire et al., 2016). Roy et al. (2015) employed the bubbles method and found that the facial clues of frown lines and the mouth carry the most reliable information for adults to decide whether a facial expression shows pain or not, but researchers are still far from answering the same question in children (Craig et al., 2010; Prkachin, 2011). For vulnerable children to survive, facial expressions convey some of the most salient clues that children can display to attract caregivers’ attention (Cole and Moore, 2014; Finlay and Syal, 2014). Besides, because they rely more on bottom-up processing, children are better subjects than adults for investigating which perceptual features of a facial expression contribute to the search and evaluation of the expression (LoBue and DeLoache, 2010; LoBue et al., 2010).

Second, some individual factors, such as empathy, would influence how efficiently and accurately observers decode pain (Goubert et al., 2005; Jackson et al., 2005). Specifically, decoding capability may be related to observers’ abilities of affective sharing and cognitive regulation, which are core components of empathy (Decety and Svetlova, 2012). Perception and evaluation of pain are closely linked with empathy in adults (Grynberg and Maurage, 2014; Schott, 2015). The empathy-altruism hypothesis indicates that empathy may induce altruistic motivation (Batson and Shaw, 1991; Decety et al., 2016), which in turn may be closely related to our reaction to the signals of pain. However, few studies have focused on how empathy influences children’s recognition of pain. It is widely believed that cognitive empathy and affective empathy do not develop in parallel (Decety, 2010; Huang and Su, 2012), with affective empathy developing first, or even being present to a certain degree from birth (Preston and de Waal, 2002; Decety, 2010). Current research suggests that the cognitive component may be dominant in adults’ empathy (Chen et al., 2014; Cheng et al., 2014). Grégoire et al. (2016) investigated children’s empathy for pain but failed to find any relationship, presumably because they adapted the self-report Interpersonal Reactivity Index (Davis, 1983) to a teacher-reported format. Therefore, the current study measured empathy with a questionnaire widely used with children, and adapted the experimental tasks for use with children.

To sum up, we designed two experiments to assess the influence of children’s empathy upon their behavioral patterns in perception and evaluation of pain shown by facial expression. In the first experiment, we planned to test how pain facial expression was perceived differently from others and what the relationship was between children’s empathy and their perception performance. A 2 (Empathy: high, low) × 4 (Facial expression type: painful, happy, sad, angry) two-factor mixed experiment was used. In the second, we planned to test what facial clues were essential for children’s evaluation of others’ pain and what the relationship was between children’s empathy and their evaluated level of pain. A 2 (Empathy: high, low) × 3 (Facial expression type: painful, sad, angry) two-factor mixed experiment was employed. We used eye-tracking devices as in previous research (Vervoort et al., 2013; LoBue et al., 2014) in order to provide data with high temporal resolution to investigate the dynamics of the attentional processing stage. We predicted that painful faces would be searched for more quickly than others and evaluated as more painful than others, and children’s empathy would be positively associated with their performance in searching, and with their perceived intensity of pain. As for attentional processing, children’s empathy would influence the later stage of attention (attentional maintenance), and facial clues of mouth and eyes would be more helpful for them to view the faces.

Experiment 1

Participants

Sixty-two 5- to 6-year-old children were recruited from a local kindergarten. According to the kindergarten’s official records, these children were normally developing and showed no signs of mental disease. This experiment was approved by the Ethics Committee of the School of Psychological and Cognitive Sciences at Peking University, and we obtained informed consent from the children’s guardians. In accordance with the Declaration of Helsinki, we provided parents of each participant with a written description of the experiment before it began. All parents stated in written informed consent that they allowed their child to participate. Fifteen participants did not meet the data quality criteria (e.g., eye movements tracked for < 75% of total viewing time in the task; Vervoort et al., 2013) and were excluded, but this exclusion did not affect the nature of the results in terms of significance and directionality. The final sample comprised 47 children (Mage = 71.21 months, SDage = 5.72; 21 males). Participants scoring lower and higher than the median on a self-reported questionnaire (see below) formed the low and the high empathy groups, respectively. There was no difference in gender composition between the two groups [χ2(1, N = 47) = 1.53, p > 0.05]. Consistent with previous research (Zaitchik et al., 2014; Freier et al., 2015; Grégoire et al., 2016), preliminary analysis revealed no significant effects of gender on either task; thus gender was not analyzed further.

Design and Material

Differences between facial expression of pain and other emotions would contribute to the recognition of pain; therefore, we intended to address the specificity of pain by including four emotional face types. A 2 (Empathy: high, low) × 4 (Facial expression type: painful, happy, sad, angry) two-factor mixed experiment was planned.

In the current study with young children, cartoon faces were preferred to real ones as the emotional information in the former was more easily accessed (Kendall et al., 2015). Thus, we collected thirteen neutral real faces as raw material from the gallery of Simon et al. (2008) and invited professional artists to convert them into cartoons with PaintTool SAI, removing any clue for gender. Then, according to results from Williams (2002) on facial action units (FAUs), we designed emotional faces that were morphed from each neutral one into the four emotional expressions, making a total of thirteen sets of five expressions each (for a sample set, see Figure 1). Each picture was 200 pixels × 250 pixels, or 7.1 cm × 8.8 cm in size, and luminance was controlled. In order to evaluate the FAUs, two graduates in psychology coded them with the facial action coding system (Ekman et al., 2002, Chapter 12, p. 174, Table 1; Williams, 2002), with Cronbach’s α equal to 0.90. Next, we invited eighteen undergraduates majoring in psychology to evaluate these pictures on five dimensions with Likert scales. They were instructed to “rate how the person in the picture might feel, with respect to valence: 1 = clearly unpleasant to 9 = clearly pleasant; and arousal: 1 = highly relaxed to 9 = high level of arousal”; to “judge the type of facial expression (happy, neutral, sad, angry, and painful)” and “indicate how confident you are about your judgment on expression type: 1 = not sure to 9 = totally sure”; and lastly to “rate the intensity of each emotion in the picture (such as intensity of happiness in a happy face) from 1 = not at all to 6 = the most intense possible.” Statistics on all 65 pictures are shown in Table 1. Just as in previous research (Kappesser and Williams, 2002; Simon et al., 2008), significant variations were found on all dimensions.
Pairwise analysis showed that painful (M = 4.53, SE = 0.19, ps < 0.01) and happy (M = 4.42, SE = 0.14, ps < 0.01) faces were greater in the intensity of emotion than angry (M = 3.45, SE = 0.21), sad (M = 3.67, SE = 0.22) and neutral (M = 1.71, SE = 0.16) ones. Happy faces (M = 6.88, SE = 0.26, ps < 0.001) were greater in the valence of emotion than angry (M = 3.54, SE = 0.21), sad (M = 3.02, SE = 0.22), painful (M = 2.87, SE = 0.28) and neutral (M = 4.93, SE = 0.09) ones. Happy faces (M = 6.48, SE = 0.28, ps < 0.01) were greater in the arousal of emotion than angry (M = 4.95, SE = 0.34), sad (M = 4.93, SE = 0.39), and neutral (M = 3.47, SE = 0.35) ones, and painful faces (M = 5.49, SE = 0.44, p < 0.05) were greater than neutral ones (M = 3.47, SE = 0.35). Happy faces (M = 7.27, SE = 0.26, ps < 0.01) were associated with greater confidence in emotion judgment than angry (M = 5.50, SE = 0.43), sad (M = 5.25, SE = 0.53), painful (M = 4.76, SE = 0.46) and neutral (M = 5.77, SE = 0.36) ones. Happy faces (M = 0.99, SE = 0.004, ps < 0.01) had a greater hit rate than angry (M = 0.65, SE = 0.06), painful (M = 0.61, SE = 0.06) and neutral (M = 0.83, SE = 0.04) ones; sad faces (M = 0.92, SE = 0.04) had a greater hit rate than angry and painful ones. To keep the task to a reasonable length, we selected four sets of faces as task stimuli.

FIGURE 1.

A sample set of five faces used in Experiment 1. From left to right: sad, painful, happy, angry, and neutral.

Table 1.

Experimental material rating results.

Facial expression type: M (SD)

| | Neutral | Happy | Angry | Painful | Sad | F | ηp2 |
|---|---|---|---|---|---|---|---|
| Valence | 4.93 (0.39) | 6.88 (1.08) | 3.54 (0.87) | 2.87 (1.20) | 3.02 (0.93) | 62.93∗∗ | 0.79 |
| Arousal | 3.47 (1.50) | 6.48 (1.19) | 4.95 (1.45) | 5.49 (1.88) | 4.93 (1.65) | 14.28∗∗ | 0.46 |
| Confidence | 5.77 (1.51) | 7.27 (1.12) | 5.50 (1.82) | 4.76 (1.96) | 5.25 (2.24) | 18.04∗∗ | 0.52 |
| Emotion intensity | 1.71 (0.68) | 4.42 (0.61) | 3.45 (0.90) | 4.53 (0.82) | 3.67 (0.92) | 51.59∗∗ | 0.75 |
| Hit rate (%) | 82.91 (16.17) | 99.57 (1.81) | 65.38 (27.07) | 60.68 (23.58) | 91.45 (16.45) | 15.59∗∗ | 0.48 |

∗∗p < 0.01. Hit rate refers to percentage of correct judgment of a given face type.

Apparatus and Measures

A Tobii X120 Eye-tracker (Tobii Technology AB, Sweden) was used.

The stimuli were shown on a ThundeRobot T150 computer (screen size = 15.6′′, resolution = 1600 × 900). The experiment was programmed with MATLAB and the Tobii SDK, and the eye-tracking data were analyzed with the EyeMMV toolbox (Krassanakis et al., 2014).

To measure children’s empathy, we translated the 6-item self-reported questionnaire from Zhou et al. (2003), which has been validated against various external criteria of social behavior in developmental studies (e.g., Catherine and Schonert-Reichl, 2011), and adapted the vignette empathy story task from Strayer (1993). The Empathy Continuum Scoring System developed by the latter study integrated the degree of affective sharing experienced (i.e., degree of match between one’s own emotion and that of the stimulus person) with the child’s cognitive attribution for his or her own emotions.

To develop a localized version of the self-reported empathy questionnaire, we employed two undergraduates majoring in English to translate all items into the native language and another two to translate them back into English. Two Ph.D.s in psychology then rated the similarity between the original and the back-translated versions, which was high at 0.88. The translated version was effectively equivalent in meaning to the original one. Participants rated how well the statements described them, such as “I feel sorry for other kids who don’t have toys and clothes,” on a scale of 1 (not like him/her), 2 (sort of like him/her), or 3 (like him/her). In the vignette empathy story task, we used a videotaped version of the vignette stories (Wu, 2013, The Association between Oxytocin Receptor Gene and Prosocial Behavior: Role of Person and Situation Factors. Unpublished doctoral dissertation, Peking University). Participants were invited to watch four video clips. Each video clip was 1 to 3 min long and showed a child performing some action, such as singing while playing with one parent in a daily, real-life scene, and displaying one type of emotion, such as happiness. Participants then answered four orally asked questions: (1) “How are you feeling now?”; (2) “Why do you feel this way?”; (3) “How do you think the kid in the story feels?”; and (4) “Why do you think the kid would feel like that?” The answers were coded by two graduate students majoring in psychology with the Empathy Continuum Scoring System (Strayer, 1993).

The experimental task followed the odd-one-out visual search paradigm (Krysko and Rutherford, 2009). Children were seated about 60 cm from the computer screen and a chin rest was used to restrict head movement. In each trial, the stimuli consisted of three copies of a neutral face and one emotional facial expression (angry, happy, painful, or sad) morphed from it (see Figure 2). Participants were asked to find the different one (the target) and to indicate its position by pushing the joystick in the corresponding direction (forward for a target at the top, backward for one at the bottom, and left or right accordingly). Eye movements were recorded while the participant was viewing the target picture. A gaze that remained stable within a 35-pixel radius for at least 100 ms on a defined area of interest (AOI) counted as a fixation on that AOI (Yang et al., 2012; Vervoort et al., 2013). Each type of emotional expression was presented in four trials, resulting in a session of sixteen trials in random order. The sequence was re-randomized with regard to target position for each participant, yet each type-position combination (e.g., painful target on the left) appeared once and only once. Reaction time (from stimulus onset until the joystick was displaced from the origin) and accuracy were recorded as dependent variables, and values more than three SDs above or below the mean were replaced by the mean.
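The fixation criterion described above (gaze stable within a 35-pixel radius for at least 100 ms) can be illustrated with a minimal dispersion-style sketch. This is not the algorithm of the toolbox used in the study; the function name `detect_fixations`, the `(t_ms, x, y)` sample format, and the choice to measure the radius from the running centroid of the candidate window are all assumptions made for illustration.

```python
from math import hypot


def detect_fixations(samples, radius_px=35.0, min_duration_ms=100.0):
    """Group consecutive gaze samples into fixations.

    samples: list of (t_ms, x, y) tuples sorted by time (hypothetical format).
    A run of samples is a fixation if every sample lies within radius_px of
    the run's centroid and the run lasts at least min_duration_ms.
    """
    fixations = []
    window = []  # consecutive samples that may form one fixation

    def close_window():
        # Keep the current run only if it lasted long enough.
        if len(window) >= 2 and window[-1][0] - window[0][0] >= min_duration_ms:
            cx = sum(s[1] for s in window) / len(window)
            cy = sum(s[2] for s in window) / len(window)
            fixations.append(
                {"start": window[0][0], "end": window[-1][0], "x": cx, "y": cy}
            )

    for sample in samples:
        candidate = window + [sample]
        cx = sum(s[1] for s in candidate) / len(candidate)
        cy = sum(s[2] for s in candidate) / len(candidate)
        if all(hypot(s[1] - cx, s[2] - cy) <= radius_px for s in candidate):
            window = candidate  # still stable: extend the run
        else:
            close_window()      # gaze moved away: finish the previous run
            window = [sample]
    close_window()
    return fixations
```

In this sketch a brief stop that lasts less than 100 ms is simply discarded rather than merged with a neighboring fixation; a production pipeline would also need to handle missing samples and blinks.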

FIGURE 2.

Odd-one-out schematic diagram.

As in previous research (Yang et al., 2012; Vervoort et al., 2013), three eye-tracking indices were used. The first, time to first fixation, was defined as the time (in ms) from the onset of a picture set to the first fixation on a specific AOI. The second, fixation count, was defined as the total number of fixations that a participant made within the rectangular picture containing a particular facial expression as a stimulus. The third, total fixation time, was defined as the total duration for which a participant’s gaze remained fixated within the boundaries of a particular facial expression category. All three indices applied to the target face only.
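As a rough illustration of the three indices defined above, the sketch below derives them from a list of already-detected fixations and a rectangular AOI. The function name `aoi_indices`, the fixation dictionary keys, and the `(left, top, right, bottom)` AOI format are hypothetical; the study's own analysis was run with a dedicated toolbox.

```python
def aoi_indices(fixations, aoi, onset_ms=0.0):
    """Compute the three eye-tracking indices for one rectangular AOI.

    fixations: list of dicts with keys "start", "end" (ms) and "x", "y" (px).
    aoi: (left, top, right, bottom) in pixels (assumed format).
    onset_ms: time at which the picture set appeared.
    """
    left, top, right, bottom = aoi
    inside = [
        f for f in fixations
        if left <= f["x"] <= right and top <= f["y"] <= bottom
    ]
    if not inside:
        # The AOI was never fixated in this trial.
        return {
            "time_to_first_fixation": None,
            "fixation_count": 0,
            "total_fixation_time": 0.0,
        }
    return {
        # Time from stimulus onset to the earliest fixation on the AOI.
        "time_to_first_fixation": min(f["start"] for f in inside) - onset_ms,
        # Number of fixations that landed inside the AOI.
        "fixation_count": len(inside),
        # Summed duration of those fixations.
        "total_fixation_time": sum(f["end"] - f["start"] for f in inside),
    }
```

For example, with a 200 × 250 pixel target picture placed at the screen origin, `aoi_indices(fixations, (0, 0, 200, 250))` would return the three indices for that target.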

Procedure

Protocol for the current study was in accordance with the ethical standards of the institutional and national research committee. Upon arrival, children were invited to complete the two measurements of empathy. Then, they would begin the odd-one-out task. They were told that the screen would present a fixation picture first, and then it would present four facial expression pictures, among which they needed to find the different one. No time limit was specified, and the stimuli would not disappear until a response was made with the joystick (see above), and the next trial would begin after an interval of 1s. Before they began the experiment, they needed to pass a calibration procedure and do eight practice trials to make sure they understood the instruction. Besides the task stimuli, another 16 internet-sourced cartoons were used as training materials (see Figure 3). Upon completion, they would get a toy for participation.

FIGURE 3.

Stimuli in a sample training trial in Experiment 1, with the happy target at the bottom.

Results

The measures showed good reliability, with a Cronbach’s α of 0.80 for the questionnaire (Q), and inter-rater agreement of 0.89 for the empathy story (ES) task, and the two measures were positively correlated (r = 0.40, p < 0.01). We analyzed group difference to ensure the grouping was meaningful, and there were significant differences between high empathy group (ES: M = 23.22, SD = 10.51; Q: M = 14.96, SD = 1.80) and low empathy group (ES: M = 15.04, SD = 7.50; Q: M = 9.25, SD = 1.80), with high empathy group scoring higher on both empathy story task [t(45) = 3.08, p < 0.01, Cohen’s d = 0.90] and self-reported empathy questionnaire [t(45) = 10.88, p < 0.001, Cohen’s d = 3.17] as expected.
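The Cohen’s d values reported for the group comparison can be checked from the group means and standard deviations alone. The sketch below assumes a pooled standard deviation that weights the two groups equally (the group sizes are not reported here); the function name `cohens_d` is illustrative.

```python
from math import sqrt


def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d with an equally weighted pooled SD (assumes similar group sizes)."""
    pooled_sd = sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# Empathy story task: high vs. low empathy group
print(round(cohens_d(23.22, 10.51, 15.04, 7.50), 2))  # 0.9
# Self-reported questionnaire: high vs. low empathy group
print(round(cohens_d(14.96, 1.80, 9.25, 1.80), 2))    # 3.17
```

Under this assumption the computed values agree with the reported d = 0.90 and d = 3.17.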

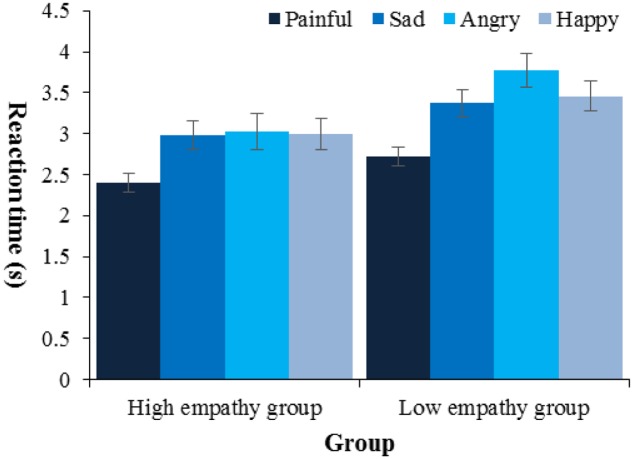

We first wanted to ensure that participants did not trade off speed against accuracy; a by-subject correlation analysis between reaction time and accuracy across all trials showed no significant correlation (p > 0.05). We then tested the relationship between empathy and performance in the odd-one-out task. First, the difference in accuracy between the high empathy group (M = 0.93, SD = 0.07) and the low empathy group (M = 0.93, SD = 0.06) was non-significant [t(45) = 0.45, p > 0.05, Cohen’s d = 0.13]. Second, a 2 (Empathy: high, low) × 4 (Facial expression type: painful, happy, sad, angry) mixed-design ANOVA was conducted on reaction time (Figure 4). There were two main effects: facial expression type [F(3,135) = 16.25, p < 0.001, ηp2 = 0.27] and empathy [F(1,45) = 6.24, p < 0.05, ηp2 = 0.12]. Follow-up pairwise comparisons (all pairwise comparisons in the current article were Bonferroni-corrected) showed that children were faster when they searched for painful facial expressions (M = 2.56 s, SE = 0.08) than for any other type (ps < 0.001), and that the high empathy group (M = 2.85 s, SE = 0.14) was faster than the low empathy group (M = 3.33 s, SE = 0.14, p < 0.05) when searching for the odd one in a crowd of faces. No other effects were found. Because four trials per face type were relatively few, and the degree to which children’s performance stabilized within these few trials might vary with face type or empathy, we ran a 2 (Empathy: high, low) × 4 (Facial expression type: painful, happy, sad, angry) mixed-design ANOVA on the standard deviations of reaction time. There were two main effects: facial expression type [F(3,135) = 4.46, p < 0.01, ηp2 = 0.09] and empathy [F(1,45) = 6.41, p < 0.05, ηp2 = 0.13]. Follow-up pairwise comparisons showed that children performed more stably on pain (MSD = 0.77, SE = 0.08) than on anger (MSD = 1.39, SE = 0.18, p < 0.05), and that the high empathy group (MSD = 0.88, SE = 0.11) was more stable than the low empathy group (MSD = 1.26, SE = 0.11, p < 0.05).
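The effect sizes reported next to the F statistics for reaction time are numerically consistent with partial eta squared, which can be recovered from an F value and its degrees of freedom. The sketch below shows that relationship; the function name `partial_eta_squared` is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effect of facial expression type on reaction time: F(3,135) = 16.25
print(round(partial_eta_squared(16.25, 3, 135), 2))  # 0.27
# Main effect of empathy on reaction time: F(1,45) = 6.24
print(round(partial_eta_squared(6.24, 1, 45), 2))    # 0.12
```

Because the published F values are themselves rounded, recomputed effect sizes for other tests can differ from the reported ones in the second decimal place.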

FIGURE 4.

Reaction time by type of target and empathy. Error bars show standard errors in this and the following figures.

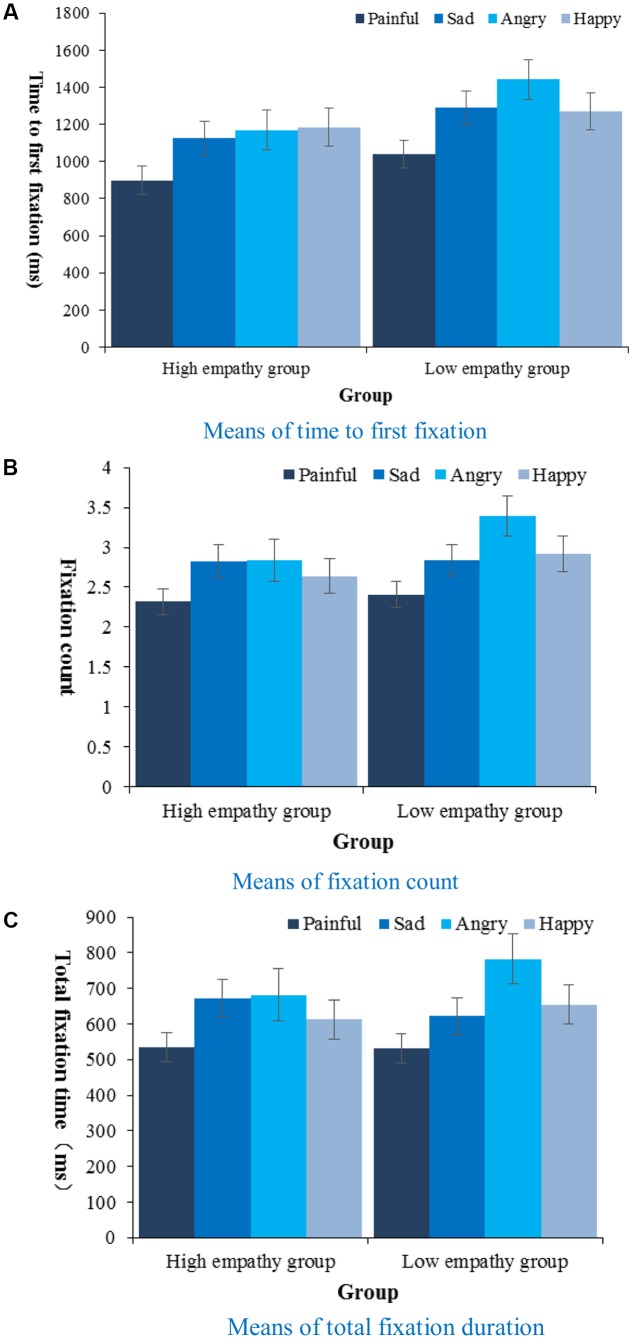

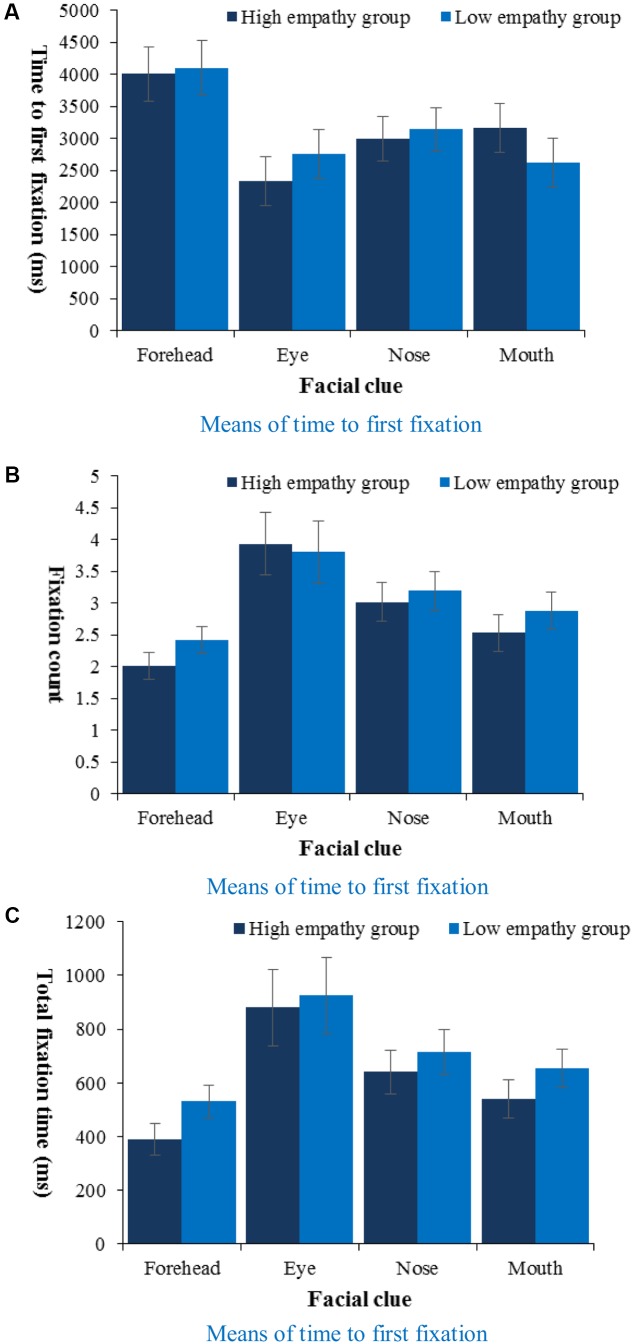

The AOI for analysis of the eye-tracking data was each picture containing the target face; all eye fixations within the frame counted. We conducted 2 (Empathy: high, low) × 4 (Facial expression type: painful, happy, sad, angry) mixed-design ANOVAs on the three eye-tracking indices (Figure 5). On time to first fixation, there was a main effect of facial expression type [F(3,135) = 6.18, p < 0.01, ηp2 = 0.12] and a marginal main effect of empathy [F(1,45) = 3.46, p = 0.069, ηp2 = 0.07]. Follow-up pairwise comparisons showed that pain (M = 969.70 ms, SE = 54.27) drew attention faster than other emotions (ps < 0.01), and that the high empathy group (M = 1094.44 ms, SE = 63.47) fixated marginally faster than the low empathy group (M = 1259.72 ms, SE = 62.13, p = 0.069). On fixation count and total fixation time, there was only a main effect of facial expression type [F(3,135) = 6.59, p < 0.001, ηp2 = 0.13; F(3,135) = 7.24, p < 0.001, ηp2 = 0.14, respectively]. Follow-up pairwise comparisons showed a smaller fixation count on pain (M = 2.36, SE = 0.11) than on other emotions (ps < 0.05), and a shorter total fixation duration on pain (M = 532.66 ms, SE = 28.98) than on other emotions (ps < 0.05). As with reaction time, we also ran 2 (Empathy: high, low) × 4 (Facial expression type: painful, happy, sad, angry) mixed-design ANOVAs on the standard deviations of the three eye-tracking indices to test their stability, and found main effects of facial expression type on all three [F(3,135) = 2.71, p < 0.05, ηp2 = 0.06; F(3,135) = 6.20, p < 0.01, ηp2 = 0.12; F(3,135) = 3.13, p < 0.05, ηp2 = 0.07, respectively]. Follow-up analysis showed that fixation count on pain (MSD = 0.97, SE = 0.07, ps < 0.05) was associated with a smaller standard deviation than those on angry (MSD = 1.60, SE = 0.15), happy (MSD = 1.30, SE = 0.09) and sad (MSD = 1.32, SE = 0.09) faces, and that total fixation duration on pain (MSD = 243.89 ms, SE = 20.84) was associated with a smaller standard deviation than that on angry faces (MSD = 362.50 ms, SE = 38.00, p < 0.05).

FIGURE 5.

Means of time to first fixation (A), fixation count (B), and total fixation duration (C) during face searching task by type of target and empathy.

Discussion

Results from Experiment 1 indicated that children aged 5 and 6 could search for painful facial expression faster than for angry, happy and sad expressions. These results also agree with those found in previous research (Priebe et al., 2015). Given pain is a signal of threat and warning, participants need to notice it quickly, because doing so is essential to our health and safety (Williams, 2002; Williams and Craig, 2006). Smaller fixation count and shorter fixation duration may reflect threat aversion (LoBue, 2013; Thrasher and LoBue, 2016), for which neuroscience provided a range of evidence (Benuzzi et al., 2009; Hayes and Northoff, 2012; Kobayashi, 2012). Still, the results could be at least partly attributed to the perceptual distinctiveness of pain (Williams, 2002), and one should be cautious when interpreting them as showing differences across emotions.

Meanwhile, we found that the high empathy group fixated faster than the low empathy group did. This can be explained by Decety and Svetlova’s (2012) theory, which states that empathy may contribute to affective sharing and emotion recognition; in other words, empathy would help improve our performance in emotion recognition. For example, Balconi and Canavesio (2016) have shown that empathy could affect adults’ face detection performance and attentional process. Some researchers have also found that empathy is positively related to performance in emotion recognition, an ability which requires shared representation and mirror neurons, and which helps children react appropriately (Kosonogov et al., 2015; Lamm and Majdandžić, 2015).

No difference in reaction time to and eye movement on sad, angry and happy faces was found. This is noteworthy because previous research has found that angry facial expressions are detected faster, and elicit fewer fixations and a shorter total fixation duration, than happy, sad, and neutral ones (LoBue and Larson, 2010; Hunnius et al., 2011). One explanation for the inconsistency is that, as the length of the task had to be limited for young children, each emotion was presented in only four trials; the means of the behavioral indices were therefore less reliable. Other methodological differences should also be taken into consideration. Like LoBue et al. (2014), we presented an array of four pictures in a trial, but they were positioned in a cross arrangement rather than a matrix and were equally close to the center. Moreover, the participants were 5- to 6-year-old children and the facial stimuli were designed in cartoon format. Additionally, there was no time pressure in the visual search task. ANOVAs on the standard deviations of both reaction time and the eye-tracking indices found similar patterns of results (with pain showing the smallest SDs), which suggests that, with just a few observations, how children perceived angry, happy and sad faces might not have stabilized as much as with the painful ones. One explanation is that pain was perceived in a more stable way because it is evolutionarily crucial, and each individual should have his or her own well-established ways of processing painful stimuli.

In order to investigate whether children would perceive painful faces as indeed showing a higher intensity of pain than other expressions, what facial clues children would use, and what role empathy played in the process, we conducted Experiment 2, in which we narrowed our focus to the negative facial expressions, namely sad, angry and painful.

Experiment 2

Participants

Forty-six 5- and 6-year-old children who did not take part in Experiment 1 were recruited from a local kindergarten. According to the kindergarten’s official records, these children were normally developing and showed no signs of mental disease. This experiment was approved by the Ethics Committee of the School of Psychological and Cognitive Sciences at Peking University. In accordance with the Declaration of Helsinki, we provided parents of each participant with a written description of the experiment before it began. All parents stated in written informed consent that they allowed their child to participate. Four children were excluded because they failed to meet the same data quality criteria as in Experiment 1, resulting in a sample of 42 (Mage = 69.20 months, SDage = 5.49; 22 males) for analysis. There was no difference in gender composition between the two groups [χ2(1, N = 42) = 0.10, p > 0.05].

Design and Material

This experiment employed a 2 (Empathy: high, low) × 3 (Facial expression type: painful, sad, angry) two-factor mixed experimental design.

Stimuli for Experiment 2 were eight sets of faces chosen from the collection prepared for Experiment 1, excluding the happy faces.

Apparatus and Measures

Equipment used was the same as in Experiment 1.

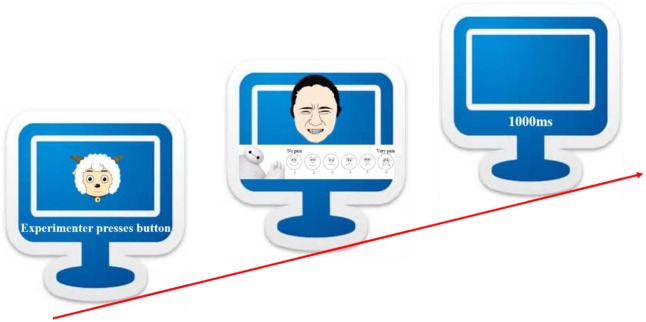

The experimental task was a pain evaluation task (see Figure 6), adapted from the paradigm of Deyo et al. (2004). In order to make the evaluation more manageable for children, we used the FACES scale, ranging from 0 to 10 (Wong and Baker, 1988), which has been validated by many studies (Yu et al., 2009; Garra et al., 2013). Participants were told to evaluate the intensity of pain seen in a facial expression of one of the three types plus neutrality, from 1 (not at all) to 6 (very much), and their ratings were transformed to 0–2–4–6–8–10 for analysis. Eight neutral faces and their 24 emotional morphs were presented one at a time, yielding a session of 32 trials in a preset random order. The pain intensity of an emotional facial expression was obtained by subtracting the score of its neutral prototype from its raw score. For example, a score of 8 on a painful face would be adjusted to 7 if the neutral face from which the painful one was morphed was rated 1.
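The rating transformation and baseline correction described above can be sketched as follows. The mapping from the six response options to 0–2–4–6–8–10 is taken from the text; whether the neutral baseline is subtracted before or after this rescaling is not stated, so the sketch assumes it is applied to the rescaled scores. The function names `rescale_rating` and `adjusted_pain_intensity` are hypothetical.

```python
def rescale_rating(raw):
    """Map a 1-6 response onto the 0-2-4-6-8-10 scale used for analysis."""
    if raw not in range(1, 7):
        raise ValueError("raw rating must be an integer from 1 to 6")
    return 2 * (raw - 1)


def adjusted_pain_intensity(raw_emotional, raw_neutral):
    """Pain intensity of an emotional face relative to its neutral prototype.

    Assumption: the subtraction is done on the rescaled scores.
    """
    return rescale_rating(raw_emotional) - rescale_rating(raw_neutral)


# A painful face rated 5 whose neutral prototype was rated 2
print(adjusted_pain_intensity(5, 2))  # 6
```

Subtracting the neutral prototype's score removes any pain a child reads into the underlying face itself, so the adjusted value reflects only what the morphed expression adds.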

FIGURE 6.

Pain evaluation task diagram.

Procedure

Participants were invited to complete the empathy questionnaire as in Experiment 1, and then the pain intensity rating task. Participants were instructed that the screen would first present a fixation picture, then simultaneously present a facial expression (painful, sad, angry, or neutral) and a pain evaluation scale in the form of a row of face icons. The aim was to ensure that the children would stay attentive to pain (Saarela et al., 2007). There was no time limit and the next trial began 1 s after the participant made a response. Eye movements were recorded in the same way as in Experiment 1, and we divided the facial expression into four parts (sub-AOIs) for further analysis: forehead, eyes (including eyebrows), nose, and mouth.

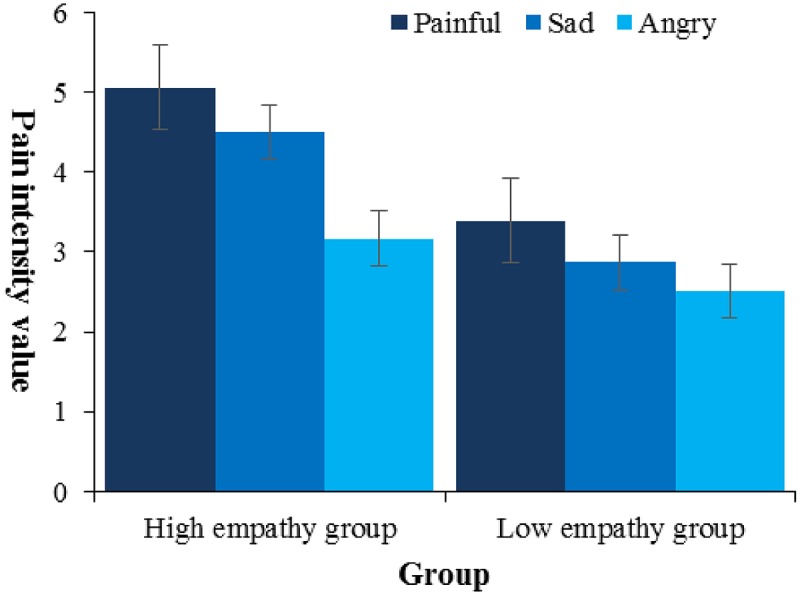

Results

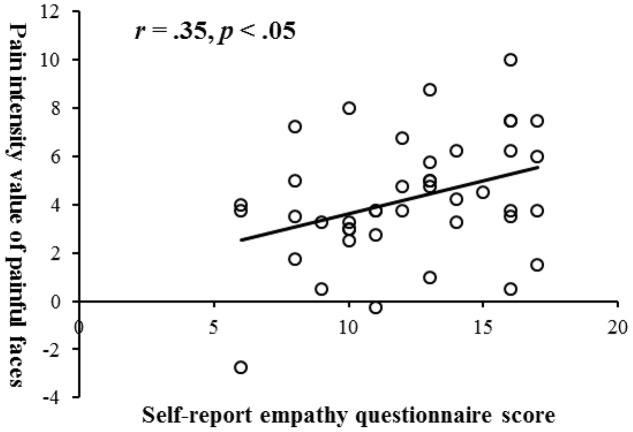

The questionnaire yielded a Cronbach’s α of 0.78. Subsequently, a 2 × 3 ANOVA evaluated the variation in pain intensity rating associated with the participant’s level of empathy and the type of facial expression (Figure 7). There were two main effects: facial expression type [F(2,80) = 9.13, p < 0.001, ηp2 = 0.19] and empathy [F(1,40) = 6.02, p < 0.05, ηp2 = 0.13]. Follow-up pairwise comparisons showed that, first, painful faces (M = 4.23, SE = 0.38) were evaluated as showing an equal intensity of pain to sad faces (M = 4.19, SE = 0.24), both of them higher than angry faces (M = 2.84, SE = 0.24, ps < 0.01); second, the high empathy group (M = 4.24, SE = 0.28) gave higher evaluations than the low empathy group (M = 3.26, SE = 0.28, p < 0.05). By-participant correlation analysis (Figure 8) showed that only the rating scores of pain intensity seen in the painful faces, not in the sad (r = 0.23, p > 0.05) or angry faces (r = 0.24, p > 0.05), were positively related to the questionnaire score of empathy (r = 0.35, p < 0.05).

FIGURE 7.

Pain intensity rating scores by type of emotion and empathy.

FIGURE 8.

Scatterplot for the correlation between empathy and pain intensity value of painful facial expression with linear regression line.

To analyze the eye-tracking patterns reflecting how children took advantage of facial clues to evaluate pain intensity, we separately analyzed data from the 8 trials with a painful face. To investigate the effects of facial clue, a series of 2 (Empathy: high, low) × 4 (Facial clue: forehead, eyes, nose, mouth) mixed-design ANOVAs on three indices was employed (Figure 9). On all indices, only facial clue had a main effect. On time to first fixation [F(3,120) = 7.46, p < 0.001, ηp² = 0.16], follow-up pairwise comparisons showed that children fixated on eyes (M = 2547.81 ms, SE = 270.33), nose (M = 3064.76 ms, SE = 243.35) and mouth (M = 2892.79 ms, SE = 268.41) each more quickly than on forehead (M = 4053.12 ms, SE = 299.69, ps < 0.05). On fixation count [F(3,120) = 9.47, p < 0.001, ηp² = 0.19], follow-up pairwise comparisons showed that children fixated on eyes (M = 3.86, SE = 0.35, ps < 0.05) more times than on forehead (M = 2.22, SE = 0.15) and mouth (M = 2.70, SE = 0.21), and fixated on nose (M = 3.11, SE = 0.21) and mouth more times than on forehead (ps < 0.05). On total fixation duration [F(3,120) = 8.85, p < 0.001, ηp² = 0.18], follow-up pairwise comparisons showed that children fixated for a longer time on eyes (M = 903.50 ms, SE = 100.60, ps < 0.05) than on forehead (M = 459.90 ms, SE = 42.02) and mouth (M = 596.84 ms, SE = 50.45), and fixated for a longer time on nose (M = 677.56 ms, SE = 58.01) and mouth than on forehead (ps < 0.05). We then ran twelve by-subject correlation analyses between pain rating and the three eye-tracking indices on the face’s four clues, and found that time to first fixation on eyes (r = -0.33, p < 0.05), fixation count on mouth (r = -0.47, p < 0.01), and total fixation duration on mouth (r = -0.46, p < 0.01) were negatively related to pain rating.
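The significance of a Pearson correlation can be checked by converting r to a t statistic with N − 2 degrees of freedom, t = r√(N − 2) / √(1 − r²). The sketch below applies this to the three correlations reported above. It assumes that all 42 participants of Experiment 2 contributed to each correlation, and it uses the tabled two-tailed critical value of t for df = 40 at α = 0.05; the function and variable names are hypothetical.

```python
import math


def t_from_r(r: float, n: int) -> float:
    """t statistic for testing a Pearson correlation against zero (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


N = 42               # assumed: every Experiment 2 participant contributes
T_CRIT_DF40 = 2.021  # two-tailed critical t for df = 40, alpha = .05

for label, r in [("time to first fixation on eyes", -0.33),
                 ("fixation count on mouth", -0.47),
                 ("total fixation duration on mouth", -0.46)]:
    t = t_from_r(r, N)
    print(f"{label}: t(40) = {t:.2f}, significant = {abs(t) > T_CRIT_DF40}")
```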

FIGURE 9.

Means of time to first fixation (A), fixation count (B), and total fixation duration (C) on painful faces during pain rating task by facial clue and empathy.

To find the most predictive index, a hierarchical regression was conducted (see Table 2). The results showed that the best predictor was the self-reported empathy score. In other words, the higher an individual was in empathy, the higher she/he would rate the pain observed.

Table 2.

Hierarchical regression on pain rating (with Enter method, N = 42).

| Variable | B | β | t | p |
|---|---|---|---|---|
| Step 1 | | | | |
| Self-reported empathy score | 0.27 | 0.35 | 2.39 | <0.05 |
| Step 2 | | | | |
| Self-reported empathy score | 0.23 | 0.29 | 2.21 | <0.05 |
| Time to first fixation on eyes | 0.00 | -0.20 | -1.50 | >0.05 |
| Fixation count on mouth | -0.64 | -0.34 | -1.10 | >0.05 |
| Total fixation duration on mouth | -0.00 | -0.08 | -0.27 | >0.05 |

Step 1: R² change = 0.13; adjusted R² = 0.10; Step 2: R² change = 0.24; adjusted R² = 0.30 (ps < 0.05).
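The note under Table 2 reports an R² change and an adjusted R² for each step. The relation between the two can be illustrated with the standard formula, adjusted R² = 1 − (1 − R²)(N − 1)/(N − k − 1), where k is the number of predictors. The sketch below is an arithmetic illustration under stated assumptions: the cumulative R² at Step 2 is taken to be the sum of the two reported changes, and the function name is hypothetical.

```python
def adjusted_r2(r2: float, n: int, k: int) -> float:
    """Adjusted R^2 for a model with k predictors fitted to n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


N = 42
r2_step1 = 0.13             # reported R^2 change at Step 1
r2_step2 = r2_step1 + 0.24  # assumed cumulative R^2 after Step 2

print(round(adjusted_r2(r2_step1, N, k=1), 2))
print(round(adjusted_r2(r2_step2, N, k=4), 2))
```

Because the inputs are rounded to two decimals, the Step 1 value can differ from the reported one in the second decimal place; using 0.35² ≈ 0.12 as the Step 1 R² brings it to about 0.10.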

Discussion

This study found that children fixated on the eyes and mouth more quickly, more frequently, and for longer times. The findings imply that children were most sensitive to these parts, which might be the most important clues for judging pain intensity in facial expressions. Previous studies have suggested that they are also the most important facial clues for decoding other emotional expressions in adults and children (Eisenbarth and Alpers, 2011; Guarnera et al., 2015). These results agree to a certain extent with previous studies suggesting that visual perception of the brows, orbits, nose, and eyes was the best predictor of pain judgments in facial expressions (Williams, 2002; Prkachin, 2011).

One potential explanation for why children relied primarily on the mouth and eyes is as follows. Facial expressions contain many clues, but children’s limited cognitive capacities mean that they can use only one or two major ones (Eisenbarth and Alpers, 2011; Cowan et al., 2014) to evaluate pain intensity, which is an economical strategy for them. The study by LoBue and Larson (2010) showed that infants may always identify the angry facial expression by the same clue, V-shaped brows, which carry the most useful information. Deyo et al. (2004) found that the ability to take advantage of facial clues was related to age, and that children aged 5 and 6 years performed poorly, with brows and mouth being the most useful facial clues for them to rate pain.

Importantly, Experiment 2 agrees with our results from Experiment 1 because both suggested that children high in empathy had a lower threshold in the perception of pain. Children’s rating of pain intensity in observed faces was related to their empathy as measured by a self-reported questionnaire, which extended the finding of Allen-Walker and Beaton (2015) that averaged ratings of intensity across six emotions in Facial Expressions of Emotion: Stimuli and Tests were significantly correlated with participants’ Empathy Quotient. Previous research in adults showed that people scoring higher in empathy would exhibit higher pain-related brain activity (Singer et al., 2004; Saarela et al., 2007), and observers’ brain activity was in turn related to the rated intensity of pain shown (Saarela et al., 2007). Hence, empathy and evaluation of pain might share a common neural basis, which could have developed considerably by the preschool years.

It was unexpected, however, that children’s evaluations of pain intensity for sad and painful facial expressions did not differ. Given the accuracy of children’s self-report about pain (Spagrud et al., 2003), one possible explanation is, as Kappesser and Williams (2002) proposed, that patterns of FAUs and patterns in the messages conveyed by the two expressions were the same. Additionally, the face icons in the FACES scale in this study could be more easily perceived as sad than as painful facial expressions. As a result, they could have distracted children and interfered with their judgments.

General Discussion

Taken together, these findings suggest that children’s empathy had a positive influence on their perception of pain and an “amplifying” effect on their evaluation of pain in facial expressions, and that pain may be distinct from other facial expressions in terms of eye-tracking indices. The present study provided evidence for a visual profile for facial expression of pain: faster orientation, shorter fixation duration, and lower fixation count. It may reflect the natural implication of pain, namely threat (Yamada and Decety, 2009; Todd et al., 2016), which may be imprinted through evolution and anchored in facial clues. Other similar facial expressions, e.g., threat-related expressions (such as angry and fearful ones), also elicit the same visual profile (Hunnius et al., 2011), but they are more closely associated with social threats (Fox et al., 2000; Lobue et al., 2016) and are not a major sign of a life-threatening situation, possibly because these expressions differ in the later stages of visual processing. Some researchers suggested that attention might be more biased toward painful facial expressions than angry ones at the beginning (up to 1000 ms), and become increasingly less so thereafter (Priebe et al., 2015). Also notably, pain, like sadness, induces personal distress in the observer (Luo et al., 2015), but facial expression of pain conveys more information regarding the physical environment.

Moreover, most researchers divide empathy into two facets, cognitive empathy and affective empathy (Shamay-Tsoory et al., 2009; Wu et al., 2012). From the developmental perspective, humans are born with the primary component of empathy, emotional contagion (Preston and de Waal, 2002), which mainly reflects the affective facet; the ability to understand emotions, which mainly reflects the cognitive facet, develops by 36 months (Decety, 2010). In view of these facts, we propose that cognitive empathy and affective empathy may play different roles in the feeling and perception of pain. A previous study of ours in adults (Yan et al., 2016) found that, across the stages of attentional processing of painful facial expressions, empathy influenced only the attentional maintenance stage, but here no influence of empathy was found on this stage in children. This can be explained by the fact that, in children, the affective component of empathy develops earlier than the cognitive component and functions as the dominant one, and their immature cognitive abilities make it hard for them to regulate their emotional reactions. It is also notable that although Experiment 1 showed that painful faces attracted children’s attention most quickly, and Experiment 2 showed that only the rating of pain intensity seen in the painful faces was positively related to empathy, the advantage of high empathy in children’s behavioral and perceptual responses was manifested across emotions. First, this is consistent with previous findings that empathy facilitated the processing of more than one type of face (Dimberg et al., 2011; Choi and Watanuki, 2014). Second, the affective component of empathy consists of affective arousal or emotional contagion (Preston and de Waal, 2002; Decety, 2010). This component would help lower our threshold for emotional stimuli, and it would affect the processing of all types of facial expression rather than a specific one.

Some researchers theorized that, because painful stimuli are associated with a potential threat, and perception of others’ pain alone does not automatically activate an empathic process, a threat-detection system appears to be activated first, with a possibly general aversive response in the observer, instead of an empathic response (Ibáñez et al., 2011). Since threats might mean more serious harm to children than to adults, avoiding perceived threat might, as a result of evolution, be given a higher priority in children, which might explain why we failed to find any relationship between empathy and later attentional processing in Experiment 2. Yet other researchers suggested that observing others’ pain would trigger empathy for their pain (Decety and Lamm, 2006).

Strengths, Limitations, and Conclusion

Future studies can address several limitations of the present study. First, it has yet to be answered whether the cognitive or the affective facet of empathy influenced children’s performance. Larger sample sizes and more refined measurements of empathy would allow for analysis of empathy by facet. Second, to obtain a fuller picture of development, future research could cover people of all ages (Deyo et al., 2004), which would help clarify how empathy and pain perception work together. Third, we suggest that researchers address the potential measurement issue in the pain rating task by precluding the confusion that may be caused by the sad-looking faces in the instrument and by using a wider range of methodologies, such as the Faces Pain Scale-Revised (Hicks et al., 2001). Fourth, further research should consider the influence of IQ and language level to make the findings more reliable. Finally, the format of the material should be taken into consideration. In the cartoonized facial expressions used in the study, many details essential to real faces, such as texture, were absent. Although we had removed or neutralized common gender clues, there might still be individual differences in how people perceived the gender of the faces. While cartoons made it easier for young children to access the emotional information (Kendall et al., 2015), the ecological validity of the findings could be reduced because the cartoons differed substantially from real faces seen daily.

To conclude, the current study suggested that 5- to 6-year-old children could detect painful facial expression in the shortest time and with the lowest visual effort, and individuals high in empathy performed better in the search than those low in empathy. In addition, 5- to 6-year-old children primarily relied on eyes and mouth as clues to evaluate pain intensity of a painful facial expression. The observed pain was perceived to be stronger by raters higher in empathy.

Ethics Statement

All procedures performed in the study involving human participants were conducted in accordance with the ethical standards of the institutional and national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. Informed consent was obtained from all participants included in the study.

Author Contributions

ZY and YS contributed to the conception and design of the work. ZY collected and analyzed the data. ZY, MP, and YS contributed to the writing of the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We are grateful to the children and teachers in the Peking University kindergarten. We appreciate our lab members who provided invaluable ideas in discussion.

Footnotes

Funding. This research was supported by the National Natural Science Foundation of China [31371040, 31571134 to YS].

References

- Allen-Walker L., Beaton A. A. (2015). Empathy and perception of emotion in eyes from the FEEST/Ekman and Friesen faces. Pers. Individ. Dif. 72 150–154. 10.1016/j.paid.2014.08.037

- Balconi M., Canavesio Y. (2016). Is empathy necessary to comprehend the emotional faces? The empathic effect on attentional mechanisms (eye movements), cortical correlates (N200 event-related potentials) and facial behaviour (electromyography) in face processing. Cogn. Emot. 30 210–224. 10.1080/02699931.2014.993306

- Batson C. D., Shaw L. L. (1991). Evidence for altruism: toward a pluralism of prosocial motives. Psychol. Inq. 2 107–122. 10.1207/s15327965pli0202_1

- Benuzzi F., Lui F., Duzzi D., Nichelli P. F., Porro C. A. (2009). Brain networks responsive to aversive visual stimuli in humans. Magn. Reson. Imaging 27 1088–1095. 10.1016/j.mri.2009.05.037

- Catherine N. L. A., Schonert-Reichl K. (2011). Children’s perceptions and comforting strategies to infant crying: relations to age, sex, and empathy-related responding. Br. J. Dev. Psychol. 29 524–551. 10.1348/026151010X521475

- Chen Y. C., Chen C. C., Decety J., Cheng Y. (2014). Aging is associated with changes in the neural circuits underlying empathy. Neurobiol. Aging 35 827–836. 10.1016/j.neurobiolaging.2013.10.080

- Cheng Y., Chen C., Decety J. (2014). An EEG/ERP investigation of the development of empathy in early and middle childhood. Dev. Cogn. Neurosci. 10 160–169. 10.1016/j.dcn.2014.08.012

- Choi D., Watanuki S. (2014). Effect of empathy trait on attention to faces: an event-related potential (ERP) study. J. Physiol. Anthropol. 33 1–8. 10.1186/1880-6805-33-4

- Cole P. M., Moore G. A. (2014). About face! Infant facial expression of emotion. Emot. Rev. 7 116–120. 10.1177/1754073914554786

- Cowan D. G., Vanman E. J., Nielsen M. (2014). Motivated empathy: the mechanics of the empathic gaze. Cogn. Emot. 28 1522–1530. 10.1080/02699931.2014.890563

- Craig K. D., Versloot J., Goubert L., Vervoort T., Crombez G. (2010). Perceiving pain in others: automatic and controlled mechanisms. J. Pain 11 101–108. 10.1016/j.jpain.2009.08.008

- Davis M. H. (1983). Measuring individual differences in empathy: evidence for a multidimensional approach. J. Pers. Soc. Psychol. 44 113–126. 10.1037/0022-3514.44.1.113

- de Waal F. B. (2008). Putting the altruism back into altruism: the evolution of empathy. Annu. Rev. Psychol. 59 279–300. 10.1146/annurev.psych.59.103006.093625

- Decety J. (2010). The neurodevelopment of empathy in humans. Dev. Neurosci. 32 257–267. 10.1159/000317771

- Decety J., Bartal I. B.-A., Uzefovsky F., Knafo-Noam A. (2016). Empathy as a driver of prosocial behaviour: highly conserved neurobehavioural mechanisms across species. Philos. Trans. R. Soc. B Biol. Sci. 371:20150077. 10.1098/rstb.2015.0077

- Decety J., Lamm C. (2006). Human empathy through the lens of social neuroscience. ScientificWorldJournal 6 1146–1163. 10.1100/tsw.2006.221

- Decety J., Svetlova M. (2012). Putting together phylogenetic and ontogenetic perspectives on empathy. Dev. Cogn. Neurosci. 2 1–24. 10.1016/j.dcn.2011.05.003

- Deyo K. S., Prkachin K. M., Mercer S. R. (2004). Development of sensitivity to facial expression of pain. Pain 107 16–21. 10.1016/s0304-3959(03)00263-x

- Dimberg U., Andréasson P., Thunberg M. (2011). Emotional empathy and facial reactions to facial expressions. J. Psychophysiol. 25 26–31. 10.1027/0269-8803/a000029

- Eisenbarth H., Alpers G. W. (2011). Happy mouth and sad eyes: scanning emotional facial expressions. Emotion 11 860–865. 10.1037/a0022758

- Ekman P., Friesen W. V., Hager J. C. (2002). Facial Action Coding System (FACS). Salt Lake City, UT: A Human Face.

- Finlay B. L., Syal S. (2014). The pain of altruism. Trends Cogn. Sci. 18 615–617. 10.1016/j.tics.2014.08.002

- Fox E., Lester V., Russo R., Bowles R. J., Pichler A., Dutton K. (2000). Facial expressions of emotion: are angry faces detected more efficiently? Cogn. Emot. 14 61–92. 10.1080/026999300378996

- Freier L., Cooper R. P., Mareschal D. (2015). Preschool children’s control of action outcomes. Dev. Sci. 20:e12354. 10.1111/desc.12354

- Garra G., Singer A. J., Domingo A., Thode H. C. (2013). The Wong-Baker pain FACES scale measures pain, not fear. Pediatr. Emerg. Care 29 17–20. 10.1097/pec.0b013e31827b2299

- Goubert L., Craig K. D., Vervoort T., Morley S., Sullivan M. J., de C Williams A. C., et al. (2005). Facing others in pain: the effects of empathy. Pain 118 285–288. 10.1016/j.pain.2005.10.025

- Grégoire M., Bruneau-Bhérer R., Morasse K., Eugène F., Jackson P. L. (2016). The perception and estimation of others’ pain according to children. Pain Res. Manag. 2016:9097542. 10.1155/2016/9097542

- Grynberg D., Maurage P. (2014). Pain and empathy: the effect of self-oriented feelings on the detection of painful facial expressions. PLOS ONE 9:e100434. 10.1371/journal.pone.0100434

- Guarnera M., Hichy Z., Cascio M. I., Carrubba S. (2015). Facial expressions and ability to recognize emotions from eyes or mouth in children. Eur. J. Psychol. 11 183–196. 10.5964/ejop.v11i2.890

- Hayes D. J., Northoff G. (2012). Common brain activations for painful and non-painful aversive stimuli. BMC Neurosci. 13:60. 10.1186/1471-2202-13-60

- Hicks C. L., von Baeyer C. L., Spafford P. A., van Korlaar I., Goodenough B. (2001). The faces pain scale – revised: toward a common metric in pediatric pain measurement. Pain 93 173–183. 10.1016/S0304-3959(01)00314-1

- Huang H., Su Y. (2012). The development of empathy across the lifespan: a perspective of double processes. Psychol. Dev. Educ. 28 434–441.

- Hunnius S., de Wit T. C., Vrins S., von Hofsten C. (2011). Facing threat: infants’ and adults’ visual scanning of faces with neutral, happy, sad, angry, and fearful emotional expressions. Cogn. Emot. 25 193–205. 10.1080/15298861003771189

- Ibáñez A., Hurtado E., Lobos A., Escobar J., Trujillo N., Baez S., et al. (2011). Subliminal presentation of other faces (but not own face) primes behavioral and evoked cortical processing of empathy for pain. Brain Res. 1398 72–85. 10.1016/j.brainres.2011.05.014

- Jackson P. L., Meltzoff A. N., Decety J. (2005). How do we perceive the pain of others? A window into the neural processes involved in empathy. Neuroimage 24 771–779. 10.1016/j.neuroimage.2004.09.006

- Kappesser J., Williams A. C. (2002). Pain and negative emotions in the face: judgements by health care professionals. Pain 99 197–206. 10.1016/S0304-3959(02)00101-X

- Kendall W., Kingstone A., Todd R. (2015). Detecting emotions is easier in less realistic faces. J. Vis. 15:1379. 10.1167/15.12.1379

- Kobayashi S. (2012). Organization of neural systems for aversive information processing: pain, error, and punishment. Front. Neurosci. 6:136. 10.3389/fnins.2012.00136

- Kosonogov V., Titova A., Vorobyeva E. (2015). Empathy, but not mimicry restriction, influences the recognition of change in emotional facial expressions. Q. J. Exp. Psychol. 68 2106–2115. 10.1080/17470218.2015.1009476

- Krassanakis V., Filippakopoulou V., Nakos B. (2014). EyeMMV toolbox: an eye movement post-analysis tool based on a two-step spatial dispersion threshold for fixation identification. J. Eye Mov. Res. 7 1–10.

- Krysko K. M., Rutherford M. D. (2009). The face in the crowd effect: threat-detection advantage with perceptually intermediate distractors. Vis. Cogn. 17 1205–1217. 10.1080/13506280902767789

- Kunz M., Lautenbacher S., LeBlanc N., Rainville P. (2012). Are both the sensory and the affective dimensions of pain encoded in the face? Pain 153 350–358. 10.1016/j.pain.2011.10.027

- Lamm C., Majdandžić J. (2015). The role of shared neural activations, mirror neurons, and morality in empathy – A critical comment. Neurosci. Res. 90 15–24. 10.1016/j.neures.2014.10.008

- LoBue V. (2013). What are we so afraid of? How early attention shapes our most common fears. Child Dev. Perspect. 7 38–42. 10.1111/cdep.12012

- Lobue V., Buss K. A., Taber-Thomas B. C., Pérez-Edgar K. (2016). Developmental differences in infants’ attention to social and nonsocial threats. Infancy 22 403–415. 10.1111/infa.12167

- LoBue V., DeLoache J. S. (2010). Superior detection of threat-relevant stimuli in infancy. Dev. Sci. 13 221–228. 10.1111/j.1467-7687.2009.00872.x

- LoBue V., Larson C. L. (2010). What makes an angry face look so ...angry? Examining visual attention to the shape of threat in children and adults. Vis. Cogn. 18 1165–1178. 10.1080/13506281003783675

- LoBue V., Matthews K., Harvey T., Stark S. L. (2014). What accounts for the rapid detection of threat? Evidence for an advantage in perceptual and behavioral responding from eye movements. Emotion 14 816–823. 10.1037/a0035869

- LoBue V., Rakison D. H., DeLoache J. S. (2010). Threat perception across the life span: evidence for multiple converging pathways. Curr. Dir. Psychol. 19 375–379. 10.1177/0963721410388801

- Luo P., Wang J., Jin Y., Huang S., Xie M., Deng L., et al. (2015). Gender differences in affective sharing and self-other distinction during empathic neural responses to others’ sadness. Brain Imaging Behav. 9 312–322. 10.1007/s11682-014-9308-x

- Preston S. D., de Waal F. B. M. (2002). Empathy: its ultimate and proximate bases. Behav. Brain Sci. 25 1–20; discussion 20–71.

- Priebe J. A., Messingschlager M., Lautenbacher S. (2015). Gaze behaviour when monitoring pain faces: an eye-tracking study. Eur. J. Pain 19 817–825. 10.1002/ejp.608

- Prkachin K. M. (2011). Facial pain expression. Pain Manag. 1 367–376. 10.2217/pmt.11.22

- Roy C., Blais C., Fiset D., Rainville P., Gosselin F. (2015). Efficient information for recognizing pain in facial expressions. Eur. J. Pain 19 852–860. 10.1002/ejp.676

- Saarela M. V., Hlushchuk Y., Williams A. C., Schürmann M., Kalso E., Hari R. (2007). The compassionate brain: humans detect intensity of pain from another’s face. Cereb. Cortex 17 230–237. 10.1093/cercor/bhj141

- Schott G. D. (2015). Pictures of pain: their contribution to the neuroscience of empathy. Brain 138 812–820. 10.1093/brain/awu395

- Shamay-Tsoory S. G., Aharon-Peretz J., Perry D. (2009). Two systems for empathy: a double dissociation between emotional and cognitive empathy in inferior frontal gyrus versus ventromedial prefrontal lesions. Brain 132 617–627. 10.1093/brain/awn279

- Simon D., Craig K. D., Gosselin F., Belin P., Rainville P. (2008). Recognition and discrimination of prototypical dynamic expressions of pain and emotions. Pain 135 55–64. 10.1016/j.pain.2007.05.008

- Singer T., Seymour B., O’Doherty J., Kaube H., Dolan R. J., Frith C. D. (2004). Empathy for pain involves the affective but not sensory components of pain. Science 303 1157–1162. 10.1126/science.1093535

- Spagrud L. J., Piira T., Von Baeyer C. L. (2003). Children’s self-report of pain intensity. Am. J. Nurs. 103 62–64. 10.1097/00000446-200312000-00020

- Strayer J. (1993). Children’s concordant emotions and cognitions in response to observed emotions. Child Dev. 64 188–201. 10.2307/1131445

- Thrasher C., LoBue V. (2016). Do infants find snakes aversive? Infants’ physiological responses to “fear-relevant” stimuli. J. Exp. Child Psychol. 142 382–390. 10.1016/j.jecp.2015.09.013

- Todd J., Sharpe L., Colagiuri B., Khatibi A. (2016). The effect of threat on cognitive biases and pain outcomes: an eye-tracking study. Eur. J. Pain 20 1357–1368. 10.1002/ejp.887

- Vervoort T., Trost Z., Prkachin K. M., Mueller S. C. (2013). Attentional processing of other’s facial display of pain: an eye tracking study. Pain 154 836–844. 10.1016/j.pain.2013.02.017

- Williams A. C. (2002). Facial expression of pain: an evolutionary account. Behav. Brain Sci. 25 439–455; discussion 455–488. 10.1017/S0140525X02000080

- Williams A. C., Craig K. D. (2006). A science of pain expression? Pain 125 202–203. 10.1016/j.pain.2006.08.004

- Wong D. L., Baker C. M. (1988). Pain in children: comparison of assessment scales. Pediatr. Nurs. 14 9–17.

- Wu N., Li Z., Su Y. (2012). The association between oxytocin receptor gene polymorphism (OXTR) and trait empathy. J. Affect. Disord. 138 468–472. 10.1016/j.jad.2012.01.009

- Yamada M., Decety J. (2009). Unconscious affective processing and empathy: an investigation of subliminal priming on the detection of painful facial expressions. Pain 143 71–75. 10.1016/j.pain.2009.01.028

- Yan Z., Wang F., Su Y. (2016). The influence of empathy on the attention process of facial pain expression: evidence from eye tracking. J. Psychol. Sci. 39 573–579.

- Yang Z., Jackson T., Gao X., Chen H. (2012). Identifying selective visual attention biases related to fear of pain by tracking eye movements within a dot-probe paradigm. Pain 153 1742–1748. 10.1016/j.pain.2012.05.011

- Yu H., Liu Y., Li S., Ma X. (2009). Effects of music on anxiety and pain in children with cerebral palsy receiving acupuncture: a randomized controlled trial. Int. J. Nurs. Stud. 46 1423–1430. 10.1016/j.ijnurstu.2009.05.007

- Zaitchik D., Iqbal Y., Carey S. (2014). The effect of executive function on biological reasoning in young children: an individual differences study. Child Dev. 85 160–175. 10.1111/cdev.12145

- Zhou Q., Valiente C., Eisenberg N. (2003). “Empathy and its measurement,” in Positive Psychological Assessment: A Handbook of Models and Measures, eds Lopez S. J., Snyder C. R. (Washington, DC: American Psychological Association), 269–284. 10.1037/10612-017