Abstract

Background

Traditional evaluation methods are not keeping pace with rapid developments in mobile health. More flexible methodologies are needed to evaluate mHealth technologies, particularly simple, self-help tools. One approach is to combine a variety of methods and data to build a comprehensive picture of how a technology is used and its impact on users.

Objective

This paper aims to demonstrate how analytical data and user feedback can be triangulated to provide a proportionate and practical approach to the evaluation of a mental well-being smartphone app (In Hand).

Methods

A three-part process was used to collect data: (1) app analytics; (2) an online user survey and (3) interviews with users.

Findings

Analytics showed that >50% of user sessions counted as ‘meaningful engagement’. User survey findings (n=108) revealed that In Hand was perceived to be helpful on several dimensions of mental well-being. Interviews (n=8) provided insight into how these self-reported positive effects were understood by users.

Conclusions

This evaluation demonstrates how different methods can be combined to complete a real world, naturalistic evaluation of a self-help digital tool and provide insights into how and why an app is used and its impact on users’ well-being.

Clinical implications

This triangulation approach to evaluation provides insight into how well-being apps are used and their perceived impact on users’ mental well-being. This approach is useful for mental healthcare professionals and commissioners who wish to recommend simple digital tools to their patients and evaluate their uptake, use and benefits.

Keywords: mental health

Introduction

Mobile health (mHealth) involves using handheld and typically internet-connected digital devices, such as smartphones and tablets, for the purpose of healthcare. These devices run a wide range of software applications (apps). Evaluation of digital technologies for young people’s mental health has focused largely on internet and computer-delivered cognitive behaviour therapy (eCBT) for depression and anxiety and computerised treatments for diagnosed conditions, for example, attention deficit hyperactivity disorder.1 Researchers have typically adopted traditional health technology assessment approaches for eCBT, such as randomised controlled trials (RCTs), because of the need to demonstrate the promised benefits and to justify the healthcare resources that are required to deliver them. While the literature regularly states that eCBT is more cost-effective than traditionally delivered CBT, the recent Randomised Evaluation of the Effectiveness and Acceptability of Computerised Therapy (REEACT) trial found little difference in cost-effectiveness between two eCBT programmes and usual GP care.2 Because of the resources and time needed to plan, undertake and implement findings from traditional evaluations, RCTs and other ‘big’ trial designs are also considered out of proportion to the rapid development and obsolescence of digital technologies,3 4 especially self-help tools (often ‘apps’) that users can access directly and may not use in conjunction with clinical services. Several methods have been used to evaluate apps,4 and it is unlikely that one methodology will fit all.
It is also argued that formal mHealth trials may not represent their intended real-world use, and evaluations should appraise technologies within the settings where they are intended to be used.3 In particular, the emergence of relatively unregulated (non-medical device) apps aimed at public mental health and well-being indicates the need for alternative approaches to evaluation.

The focus of this article is evaluation methods for simpler digital tools, such as mobile apps for assisting well-being, which are widely and publicly available and intended for use without direct clinical supervision.5 These products may help young people overcome some of the traditional barriers to accessing support and may reduce stigma.6 7 Although there has been discussion of the need to stratify evaluation methods for healthcare apps based on complexity and risk,8 there has been little research investigating how best to evaluate simple, self-help digital tools. Conceptualisation of engagement with digital health interventions has recently been recognised as an important area for research.9 We propose that the quality and value of these digital tools can be assessed through analysis of real-world usage data and assessment of user experience, methods that match the relative simplicity of these tools and the anticipated size of their effect on users’ mental health. Furthermore, this work addresses the need for better-quality early evaluation of new digital health products, whether as an initial summative evaluation of an established product to help decide whether it is worth adopting or, as in this case, a formative evaluation of an app during its development.

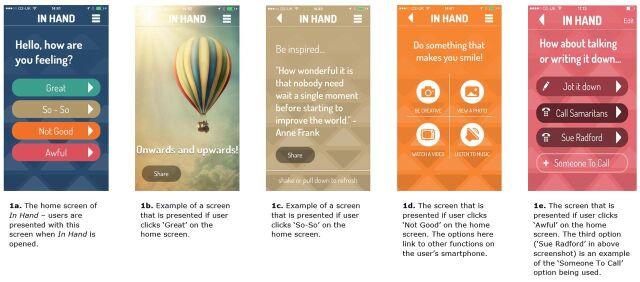

Using the context of an independent evaluation of In Hand, a mental well-being smartphone app, this paper aims to show how a proportionate evaluation can be realised using elements of web-based and app-based software design that product developers can readily introduce into existing applications. In Hand (www.inhand.org.uk, launched 2014) is an app developed by young people with experience of mental health problems to support well-being through focusing the user on the current moment and bringing balance to everyday life. In Hand is a simple, free-to-download digital self-help tool publicly available on iOS and Android, intended to be used independently of healthcare services. Using a traffic light inspired system, the app takes the user through different activities depending on how they are feeling (see figure 1). Its development was led by a UK arts organisation, working with a digital agency and a public mental health service provider. The project’s clinical lead drew on principles of cognitive behaviour therapy and Five Ways to Well-being10 during the development process, but the primary influence on the content arose from needs derived in the co-design process that explored coping strategies used by young people at times of stress or low mood.

Figure 1.

Screenshots and description of In Hand app.

NIHR MindTech HTC was commissioned to undertake an independent evaluation of a suite of digital resources produced by Innovation Labs11; In Hand was one of these products. As these digital resources were about to be publicly launched when the evaluation stage commenced, the team proposed to observe how users engaged with the tools by capturing background usage data and seeking feedback directly from users through user elicitation embedded in the tools. This approach would capture insights from naturally occurring users and show how people interact with the tools in the ‘real world’.

Methods

Research design

We wanted to evaluate how In Hand was engaged with in real life, and what kind of benefits users gained through use. Three methods of data collection were used to gain insight into naturalistic use of In Hand: (1) sampling and analysis of quantitative mobile analytical data (eg, number of individual user sessions, number of interactions with each section of the app); (2) a user survey with questions adapted from a validated well-being measure (the Short Warwick-Edinburgh Mental Well-being Scale12 (SWEMWBS)) and (3) semistructured individual interviews with a subsample of survey respondents.

Procedure

Mobile analytical data

Flurry Analytics (now part of Yahoo!, http://developer.yahoo.com/analytics), a tool that captures usage data for smartphone/tablet apps, was used to securely access anonymous, aggregated data about users’ interactions: this captured time spent using the app, frequency of use, retention over time and key app-related events such as visits to specific content. No identifying information for any user was directly available to the research team, and interactions across sessions by individual users could not be tracked. Data were captured and analysed for the iOS and Android versions of In Hand between May and October 2014.

User survey

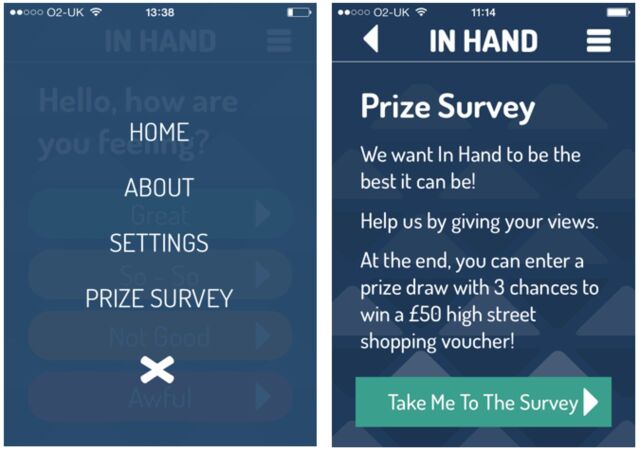

A software update to In Hand was implemented in August 2014 to add an invitation to complete the survey to the app. On first opening the app after the update, users were presented with a ‘splash’ page inviting them to give feedback on the app; the same invitation was also added to the app’s menu (see figure 2). Users who clicked the invitation were taken to the In Hand website (via an internet connection outside the app), where further information about the survey was presented. Interested participants then clicked a URL to access the survey (hosted on SurveyMonkey) and consented to complete it. As an incentive to participate, respondents could opt into a prize draw to win a shopping voucher on survey completion. The online survey was open for 6 weeks.
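The ‘show once after the update, then keep in the menu’ behaviour described above can be expressed as a small piece of launch logic. The sketch below is a platform-neutral illustration in Python, not the In Hand code: the version number `SURVEY_PROMPT_VERSION`, the `LocalStore` class standing in for on-device storage, and the screen and menu labels are all hypothetical.

```python
from dataclasses import dataclass


def parse_version(version):
    """Turn '1.10' into (1, 10) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in version.split("."))


# Hypothetical: the app version whose update introduced the survey invitation.
SURVEY_PROMPT_VERSION = "1.1"


@dataclass
class LocalStore:
    """Stand-in for the app's persistent on-device storage (hypothetical)."""
    survey_prompt_shown: bool = False


def screens_on_launch(store, app_version):
    """Screens to display when the app opens.

    The splash invitation appears on the first launch of any version at or
    after SURVEY_PROMPT_VERSION, and never again on that device.
    """
    screens = []
    if (parse_version(app_version) >= parse_version(SURVEY_PROMPT_VERSION)
            and not store.survey_prompt_shown):
        screens.append("survey_splash")
        store.survey_prompt_shown = True  # remember locally; no user identity needed
    screens.append("home")  # the 'Hello, how are you feeling?' screen
    return screens


def menu_items(app_version):
    """The invitation also stays available as a permanent menu entry."""
    items = ["home"]
    if parse_version(app_version) >= parse_version(SURVEY_PROMPT_VERSION):
        items.append("feedback_survey")  # hypothetical label; links to the survey information page
    return items


store = LocalStore()
print(screens_on_launch(store, "1.1"))  # first launch after the update
print(screens_on_launch(store, "1.1"))  # later launches skip the splash page
```

Keeping the ‘already shown’ flag on the device means the prompt needs no server-side record of who has seen it.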

Figure 2.

Screenshots showing the invitation to complete the online survey, as it appeared in the menu within In Hand.

Semistructured interviews

At the end of the online survey, users could enter their email address to register interest in participating in a semistructured interview about their experience of In Hand. Twenty-four users registered their interest and were emailed an information sheet, which included an invitation to an informal chat with a researcher to explain the interview procedure and answer any questions. Of these, eight people chose to participate: an interview was arranged, and an online consent form was emailed to them and completed before the interview. Six interviews were carried out by telephone and audio recorded, and two were conducted asynchronously by email. Participants received a £15 voucher as an inconvenience allowance.

Sampling and recruitment

The target group for In Hand is young people (aged up to 25 years), but as the app was publicly available, people of all ages could access and use it and therefore take part in the evaluation. All In Hand users aged 16 years or older were eligible to participate in the survey and interview. Those who entered their age as 15 or younger at the start of the survey (meaning parental consent would be required) were automatically directed to an exit web page. The target was 100 survey responses: based on data from another digital tool evaluation,11 we conservatively estimated that 10% of all app users would complete the survey, suggesting that the survey needed to run for 6 weeks to achieve 100 responses.
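The usage figure behind the 6-week estimate is not reported, but it can be recovered by inverting the calculation. The sketch below assumes responses accrue at a constant rate and that the 10% applies to distinct users who reach the invitation. That second point is an assumption, since the analytics count sessions rather than individuals. The function names are illustrative.

```python
import math

TARGET_RESPONSES = 100   # responses sought (from the text)
RESPONSE_FRACTION = 0.10  # conservative share of app users expected to complete the survey
SURVEY_WEEKS = 6          # planned survey duration


def users_needed_per_week(target, fraction, weeks):
    """Average number of distinct users per week needed to reach the target."""
    return math.ceil(target / (fraction * weeks))


def weeks_needed(target, fraction, users_per_week):
    """Whole weeks the survey must stay open for a given weekly user count."""
    return math.ceil(target / (fraction * users_per_week))


implied_users = users_needed_per_week(TARGET_RESPONSES, RESPONSE_FRACTION, SURVEY_WEEKS)
print(implied_users)  # about 167 users per week implied by the plan
print(weeks_needed(TARGET_RESPONSES, RESPONSE_FRACTION, implied_users))
```

Rounding up in both directions keeps the estimate conservative, so a planner would never undershoot the response target because of a fractional week or user.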

For the interviews, a purposeful sample of 12 respondents with varied demographic characteristics was sought to gain a range of perspectives. Survey respondents who opted in to the interviews were sampled according to their age, gender, ethnicity, sexuality, disability, geographical location and their use and experience with In Hand.

Survey and interview guide design

The user survey and semistructured interview topic guide were developed specifically for this study. Young people involved with Innovation Labs and In Hand development collaborated with the NIHR MindTech Team in generating the study’s design and areas to be explored in the survey and interview, reviewing and testing out the online survey and interview schedule and finalising the study materials. To explore the kinds of benefits In Hand offered users, the survey questions were based on the SWEMWBS,12 an evidence-based measurement tool with seven dimensions of mental well-being which has been shown to be valid and reliable with young people.13 In this real-world, observational evaluation, it was not possible to assess participants’ well-being before and after use of the app; instead, users were asked to rate to what extent In Hand had helped them on specific dimensions of mental well-being. During the co-design process, one of these dimensions was reworded to be more accessible to young people (‘feel optimistic’ changed to ‘have a positive outlook’), and three other dimensions were selected for inclusion in the survey to reflect the young people’s experiences (‘feel ready to talk to someone else’, ‘feel less stressed’, ‘feel more able to take control’). These dimensions were also used to guide the interview topics.

Data analysis

Aggregated analytical data from Flurry were tabulated and summary statistics calculated. To assess users’ engagement, the In Hand team were asked to advise on the time a user would need to spend on the app to have a ‘meaningful engagement’: that is, open the app, make a selection of how they were feeling and perform at least one activity based on their response to the front screen (eg, take a photo). Flurry gives data on session length in fixed ranges (see table 1). It was likely that many sessions recorded as less than 30 s would have been too short for the user to have completed an activity. Therefore, user sessions in the range 30–60 s and above were classed as ‘meaningful engagement’.

Table 1.

Time spent on In Hand at each user session

| Length of time for each user session | Number of sessions, n (%) |
| --- | --- |
| 0–3 s | 2188 (10.9) |
| 3–10 s | 2230 (11.1) |
| 10–30 s | 3993 (19.8) |
| 30–60 s | 4185 (20.8) |
| 1–3 min | 5621 (27.9) |
| 3–10 min | 1717 (8.5) |
| 10–30 min | 213 (1.1) |
| 30+ min | 10 (0.1) |
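The ‘meaningful engagement’ rule defined above can be applied directly to the Table 1 counts. The sketch below reproduces the shares reported in the Findings. Percentages are computed over the 20 157 sessions in the table, which is the denominator that reproduces its percentages and is fewer than the 22 357 sessions reported overall. The dictionary layout and the `share_of_sessions` helper are illustrative.

```python
# Session counts by Flurry duration band, copied from Table 1 (iOS and Android combined).
SESSION_BANDS = {
    # label: (lower bound of the band in seconds, number of sessions)
    "0-3 s": (0, 2188),
    "3-10 s": (3, 2230),
    "10-30 s": (10, 3993),
    "30-60 s": (30, 4185),
    "1-3 min": (60, 5621),
    "3-10 min": (180, 1717),
    "10-30 min": (600, 213),
    "30+ min": (1800, 10),
}

# Bands starting at 30 s or later count as 'meaningful engagement'.
MEANINGFUL_THRESHOLD_S = 30


def share_of_sessions(bands, predicate):
    """Percentage of all sessions whose band lower bound satisfies `predicate`."""
    total = sum(n for _, n in bands.values())
    selected = sum(n for lower, n in bands.values() if predicate(lower))
    return 100 * selected / total


meaningful = share_of_sessions(SESSION_BANDS, lambda lower: lower >= MEANINGFUL_THRESHOLD_S)
borderline = share_of_sessions(SESSION_BANDS, lambda lower: lower == 10)  # the 10-30 s band
very_short = share_of_sessions(SESSION_BANDS, lambda lower: lower < 10)   # 10 s or less
over_3_min = share_of_sessions(SESSION_BANDS, lambda lower: lower >= 180)

print(f"{meaningful:.0f}% {borderline:.0f}% {very_short:.0f}% {over_3_min:.1f}%")
```

Because Flurry reports only bands, the threshold can only be placed at a band boundary. Moving it inside the 10–30 s band would require assumptions about how sessions are spread within that band.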

Survey data were downloaded from SurveyMonkey and imported into a database. Descriptive statistics were calculated using SPSS V22 (Chicago, Illinois, USA). For the demographic description of the sample, the whole dataset is reported (n=131), but for data relating to whether In Hand helped with mental well-being, only data from respondents who ran the app once or more are reported (n=108).

Audio-recorded interviews were transcribed verbatim. A top-down, deductive approach to thematic analysis14 was taken, focusing on: how the user discovered In Hand, their use of In Hand, whether it was helpful, any risks in using In Hand and potential areas for improvement. Each transcript was reviewed and codes were assigned to content reflecting the defined areas of interest. These codes were then reviewed to identify any overlap and grouped into overarching themes.

Findings

Analytics

From the launch of In Hand on 14 May to 31 October 2014, there were 22 357 user sessions on In Hand across both mobile platforms (14 981 on iPhone; 7376 on Android). Seventy-five per cent of these sessions were from returning users. Sixteen per cent of users remained active 1 week after first use (which is likely to coincide with installation), 7% after 4 weeks and 2% after 20 weeks. Around half of users (52%) opened In Hand once a week, with 34% using it 2–3 times per week, 10% 4–6 times and 4% more than six times per week.

Table 1 shows engagement with In Hand measured by the length of time of each user session (data were provided by the analytics in the ranges shown). More than half of users’ sessions (58%) were in the ‘meaningful engagement’ ranges of 30–60 s or longer and a further fifth of sessions (20%) were somewhere in the range of 10–30 s where some users may have had time to have meaningful engagement. Around a fifth (22%) were in the lower ranges of 10 s or less session length. Less than 10% of sessions were in a range over 3 min. Overall, the median session duration was 43 s for iPhone users and 40 s for Android users (Flurry reports the average as the median, rather than the mean).
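Because Table 1 pools both platforms into bands, the pooled median cannot be read off directly. It can, however, be approximated by linear interpolation within the band that contains the halfway point (a grouped median). This sketch is a plausibility check under the assumption that sessions are spread uniformly within each band. It is not how Flurry computes its medians. The band boundaries follow Table 1, and the helper name is illustrative.

```python
# (lower bound s, upper bound s, sessions) from Table 1; the top band is open-ended.
BANDS = [
    (0, 3, 2188), (3, 10, 2230), (10, 30, 3993), (30, 60, 4185),
    (60, 180, 5621), (180, 600, 1717), (600, 1800, 213), (1800, None, 10),
]


def grouped_median(bands):
    """Estimate the median of binned data by interpolating inside the median band."""
    total = sum(n for _, _, n in bands)
    half = total / 2
    cumulative = 0
    for lower, upper, n in bands:
        if cumulative + n >= half:
            if upper is None:
                return float(lower)  # cannot interpolate inside an open-ended band
            # Assume sessions are spread uniformly across this band.
            return lower + (half - cumulative) / n * (upper - lower)
        cumulative += n
    raise ValueError("empty distribution")


print(round(grouped_median(BANDS), 1))  # 42.0
```

The estimate of about 42 s falls between the platform medians Flurry reported (40 s for Android, 43 s for iPhone), which suggests the banded data and the reported medians are consistent. Python’s `statistics.median_grouped` assumes equal-width classes, so it does not fit these unequal bands.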

The opening screen of In Hand presents the question ‘Hello, how are you feeling?’ with four options, ‘Great’, ‘So-So’, ‘Not Good’ and ‘Awful’, each with associated suboptions (see figure 1). The most frequent selection on this opening screen was ‘So-So’ (11 751 occurrences), followed by ‘Not Good’ (9958 occurrences), ‘Great’ (9048 occurrences) and ‘Awful’ (8614 occurrences). In general, users accessed the entire set of suboptions, but with some clear preferences depending on which of the four options they chose: for example, reading multiple inspirational quotes, viewing a personal photo they had added to the app or using the ‘Jot it down’ function (akin to a journal, where users could type their thoughts into the app).

Findings from user survey and interviews

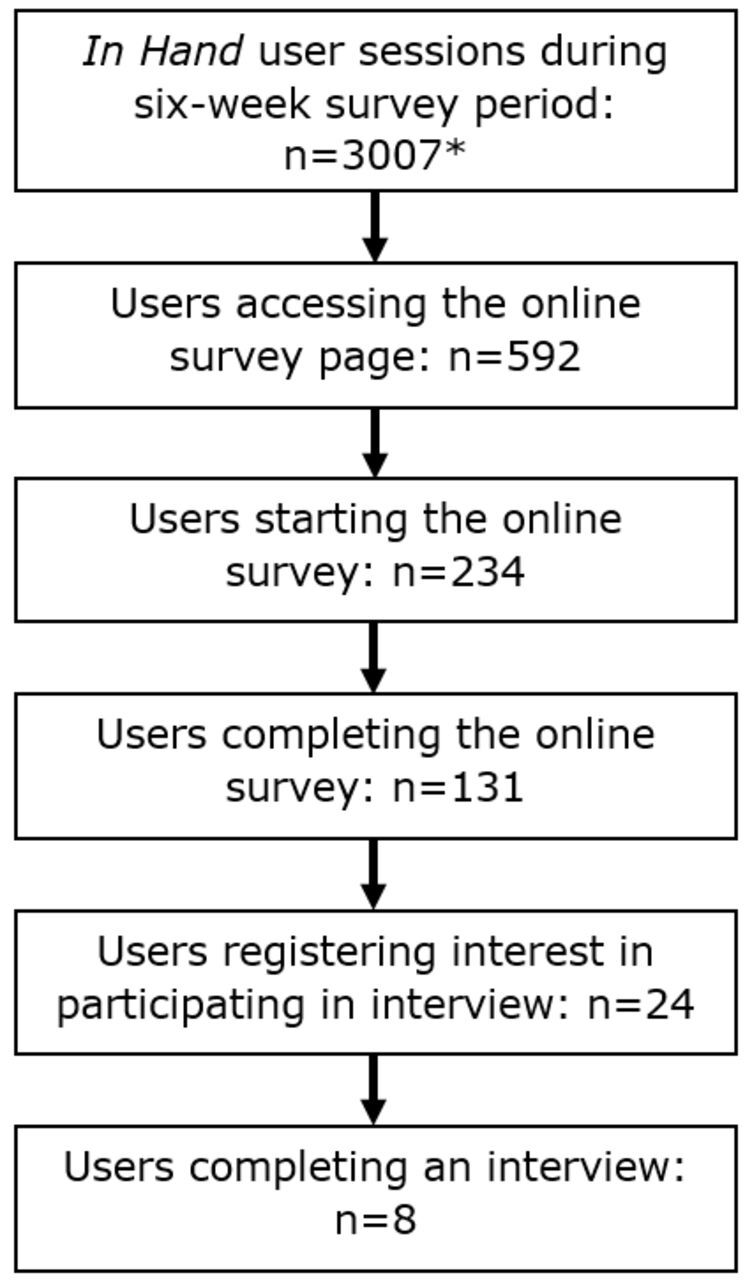

The survey information web page was accessed 592 times, with 131 users following through to complete the survey—a response rate of 22% from those who accessed the survey information (figure 3). The sample was predominantly female (n=100, 76%), ethnically white (n=122, 93%), and 75% (n=98) were aged 16–25 years (total range 16–54 years; mean 23±10 years). Three-fifths (n=79, 60%) reported they had experienced mental health problems.

Figure 3.

Diagram outlining the number of user sessions (*does not necessarily reflect individual users, but the number of individual sessions) and the flow of participants during the study.
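The participant flow can be summarised as stage-to-stage conversion rates. The counts below are taken from the text (592 visits to the survey information page, 131 completed surveys, 24 registrations of interest, 8 interviews), not read from figure 3 itself. The stage labels and the `stage_conversions` helper are illustrative.

```python
# Participant flow counts as reported in the text.
FUNNEL = [
    ("Viewed survey information page", 592),
    ("Completed survey", 131),
    ("Registered interest in an interview", 24),
    ("Interviewed", 8),
]


def stage_conversions(funnel):
    """Return (stage, count, % of previous stage) for every stage after the first."""
    return [
        (name, count, 100 * count / previous_count)
        for (_, previous_count), (name, count) in zip(funnel, funnel[1:])
    ]


conversions = stage_conversions(FUNNEL)
for name, count, pct in conversions:
    print(f"{name}: {count} ({pct:.1f}% of previous stage)")

# End-to-end: interviewees as a share of everyone who viewed the survey information.
overall = 100 * FUNNEL[-1][1] / FUNNEL[0][1]
print(f"Overall: {overall:.1f}%")
```

The first conversion reproduces the 22% survey response rate. The 108 respondents who had used the app are a subset analysed for well-being, not a further stage in this flow.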

Table 2 summarises how survey respondents reported In Hand helped their mental well-being. The 10 mental well-being dimensions, including the seven from the SWEMWBS, are ranked by the number of respondents reporting that In Hand helped ‘a lot’. For seven dimensions, over 60% of the survey respondents reported that In Hand had offered them some help (‘a little bit’ or ‘a lot’); the remaining three were reported as helpful by fewer than 60% of respondents. The four dimensions most often rated as helped ‘a lot’ (by 23–31% of respondents) were ‘More able to take control’, ‘Think clearly’, ‘Feel relaxed’ and ‘Deal with problems well’. These top four dimensions relate most closely to the primary purpose of In Hand. For all of the dimensions, respondents who reported help more often said In Hand had helped them ‘a little bit’ than ‘a lot’.

Table 2.

Participants’ ratings of whether In Hand helped with their mental well-being (n=108), ranked by the number reporting that it helped ‘a lot’

| Would you say In Hand has helped you… | No way, n (%) | Not really, n (%) | Yes, a little bit, n (%) | Yes, a lot, n (%) |
| --- | --- | --- | --- | --- |
| More able to take control | 4 (3.7) | 28 (25.9) | 43 (39.8) | 33 (30.6) |
| Think clearly* | 3 (2.8) | 25 (23.1) | 51 (47.2) | 29 (26.9) |
| Feel relaxed* | 2 (1.9) | 23 (21.3) | 58 (53.7) | 25 (23.1) |
| Deal with problems well* | 5 (4.6) | 37 (34.3) | 41 (38.0) | 25 (23.1) |
| Less stressed | 4 (3.7) | 20 (18.5) | 60 (55.6) | 24 (22.2) |
| Make my own mind up about things* | 6 (5.6) | 34 (31.5) | 47 (43.5) | 21 (19.4) |
| Have a positive outlook* | 3 (2.8) | 17 (15.7) | 68 (63.0) | 20 (18.5) |
| Feel useful* | 5 (4.6) | 39 (36.1) | 49 (45.4) | 15 (13.9) |
| Ready to talk to someone else | 15 (13.9) | 38 (35.2) | 41 (38.0) | 14 (13.0) |
| Feel close to other people* | 18 (16.7) | 43 (29.0) | 29 (26.9) | 14 (13.0) |

*These items are taken from the Short Warwick-Edinburgh Mental Well-being Scale.
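The ‘over 60%’ statement rests on combining the two positive answers, whereas the table is ranked by ‘a lot’ alone. The sketch below recomputes the combined share from the Table 2 counts with n=108 as the denominator and re-ranks the items on that basis. It also checks that each row sums to n. As transcribed here, the ‘Feel close to other people’ counts sum to 104, so the check flags that row. The variable names are illustrative.

```python
N_RESPONDENTS = 108

# Counts copied from Table 2: (No way, Not really, Yes a little bit, Yes a lot).
RATINGS = {
    "More able to take control": (4, 28, 43, 33),
    "Think clearly": (3, 25, 51, 29),
    "Feel relaxed": (2, 23, 58, 25),
    "Deal with problems well": (5, 37, 41, 25),
    "Less stressed": (4, 20, 60, 24),
    "Make my own mind up about things": (6, 34, 47, 21),
    "Have a positive outlook": (3, 17, 68, 20),
    "Feel useful": (5, 39, 49, 15),
    "Ready to talk to someone else": (15, 38, 41, 14),
    "Feel close to other people": (18, 43, 29, 14),
}


def any_help_pct(counts, n=N_RESPONDENTS):
    """Share answering 'Yes, a little bit' or 'Yes, a lot'."""
    return 100 * (counts[2] + counts[3]) / n


# Rows whose counts do not add up to the stated sample size.
inconsistent = [item for item, counts in RATINGS.items() if sum(counts) != N_RESPONDENTS]

ranked = sorted(RATINGS, key=lambda item: any_help_pct(RATINGS[item]), reverse=True)
above_60 = [item for item in ranked if any_help_pct(RATINGS[item]) > 60]

for item in ranked:
    print(f"{item}: {any_help_pct(RATINGS[item]):.1f}%")
print(len(above_60), "dimensions above 60%; rows not summing to n:", inconsistent)
```

Ranked by any help, ‘Have a positive outlook’ comes first, even though it is sixth by ‘a lot’. This is why the two orderings should not be conflated when describing the table.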

The eight interviewed participants were mostly female (n=7), aged 16–44 years (mean 25±9 years), white (n=7) and had experience of mental health problems (n=7). Interviewees elaborated on how In Hand had helped the dimensions of mental well-being identified in the survey, including how it helped them to feel relaxed or less stressed:

"I can get a bit panicky quite quickly, so if I just stop and go on something like In Hand, the ease of using it and the colours and everything, sort of calms you and takes your mind off what you are feeling… looking at a quote helps you to feel more calm and relaxed." (Interviewee 8)

It also helped them to think clearly and feel more able to take control:

"In Hand is nice, so simple and it’s just common sense, the things it asks you about how you are feeling. But that then leads you on to thinking about a lot of things. So for me, it gives me more independence with my emotional well-being." (Interviewee 7)

And it helped to foster a positive outlook:

"The little sayings like ‘keep going’ I found helpful because it’s something a friend might say if they were supporting you and it makes you realise that you can’t just give up." (Interviewee 3)

The interviewees further expanded on why In Hand was useful to them. They talked about In Hand being discreet, private, and not requiring them to be in specific locations to access it. Interviewees described how the anonymity and perceived non-judgmental nature of a digital tool was important to them. It gave them the ability to think about how they were feeling at any time without having to involve other people:

"There’s no-one to trust on your app – it’s just asking you how you are feeling. There’s no kind of come back or no-one’s going to say anything back." (Interviewee 1)

Discussion

Strengths of the evaluation approach

First, we believe the approach to evaluation set out in this paper is useful because it was designed to be proportionate to this type of digital tool and its anticipated impact on health outcomes: a simple, non-clinical tool intended for personal, unsupervised use as one part of an individual’s self-management strategies for their emotional well-being. The evidence generated by this approach, informed by the principles of Health Technology Assessment, has provided quantifiable insights into app usage and patterns of engagement, an assessment of how the app has supported users with their mental well-being, and some descriptive insights into how the tool works to support users. The approach has value because it goes beyond user ratings in app stores, while being timely and cost-efficient to implement.

Second, the approach was able to gather data from actual users of the tool in real-world settings. By accessing the analytical data of all users within a specified time period, we were able to analyse, in aggregate, how people used the tool and how this changed over time. This may differ from how people interact with a tool within a controlled setting: digital interventions in formal trials tend to have greater adherence than in naturalistic evaluations.15 Moreover, the survey and interview respondents were people who had selected the tool independently from a range available (via the app stores) and used it in naturally occurring ways, rather than a sample of volunteers using the tool under experimental, controlled conditions.

Third, the evaluation plan (along with the app) was guided by a team of young people and proved achievable within a timescale that fitted the development cycles of digital tools. Evaluation commenced at the end of beta testing, when the app first became available from app stores; data and analysis were communicated to the development team for implementation at their 6-month review point. The co-design process helped to ensure that the evaluation methods were understandable and used language and a design familiar to the target audience. The methods adopted did not require extensive digital development (which would have been beyond the resources of the development and evaluation teams), and the target number of survey responses was exceeded. Nor did the approach require retention of participants over time to generate useful insights.

Fourth, while the approach could be criticised for being unable to determine the extent to which any changes in mental health are attributable to using In Hand, we would argue that it was not intended to measure effect, but rather to assess the type of impact In Hand may have on users’ mental well-being. Measuring the effect of In Hand, and the resources this would require, would, we argue, be out of proportion to the nature of the tool and its anticipated effect on mental well-being. In Hand is a self-help tool, comparable to other self-help resources such as books or online information, rather than a clinical intervention, treatment or psychological therapy, and it is not a medical device. As such, the level of evidence required to provide assurance of quality should not be expected to be as extensive as that required for clinical interventions or medical devices. In this regard, we believe the methods adopted, including survey items adapted from a validated measure of mental well-being to explore the nature of the effect, were sufficient for this purpose and can be considered a strength of the approach. This assertion is supported by data from an associated evaluation of another simple digital tool, DocReady (www.docready.org), which used the same standardised measure in a similar manner.11 That evaluation found that DocReady was reported as useful in different domains of mental well-being from In Hand: the top three rated domains were ‘Able to think clearly’, ‘Ready to talk to someone else’ and ‘More able to take control’, which reflects how DocReady aimed to benefit its users by changing preparatory intentions and behaviour in seeking help from a GP. As with In Hand, most users rated the help as ‘a little bit’ rather than ‘a lot’. This suggests that both tools have a limited, specific effect and, as would be expected, that no single mHealth app would provide all the functions required for overall mental health.16

Limitations of the evaluation approach

First, in common with other evaluation methods, the survey and interview sample relied on people opting in to participate, so it is self-selecting. Such respondents are likely to be those with the most positive experiences of the tool or those wanting to feed back their dissatisfaction (the so-called ‘TripAdvisor effect’), while people who ‘fall in the middle’ may not provide feedback. Likewise, users who found benefits in using the tool may have been more likely to be those who had ‘stuck with’ it over time. In addition, the sample is relatively homogeneous: predominantly white and female (the target audience for In Hand was young people aged up to 25 years, so the limited age range was to be expected). This gender imbalance is observed in other research on similar tools,16–18 but whether it reflects a bias in mHealth usage or in research participation is not clear.

Second, while responses to the survey were good and numbers exceeded our initial target (see figure 3), this probably represents a small proportion of overall users: because usage of the app is recorded in user sessions, rather than individuals, it was not possible to accurately estimate the response rate to the survey.

Third, as figure 3 shows, interest in taking part in the interviews was very limited. In the event, we interviewed all those who agreed, achieving only eight interviews in total: this was lower than our target number, and it was not possible to sample according to the specified criteria of interest (eg, gender, different experience with the tool). The interview findings should therefore be interpreted with caution, as they are based on a small number of experiences within a homogeneous sample. Users of other backgrounds and demographics may have different perspectives on In Hand.

Fourth, the time period between users first interacting with In Hand and the completion of the survey was not recorded. Therefore, an assessment of the influence of recall bias on the reliability of the results is not possible. However, users would have accessed the survey through the hyperlinks within the app, so it is probable that they completed it during an app session.

Finally, Flurry Analytics is designed for developers rather than as a research tool, which brings limitations. In particular, data were aggregated into predefined numerical ranges, which limited the analysis: for example, Flurry categorises each user session into a time range rather than reporting the actual time spent on the app. The precise format of the data returned by Flurry depended on the smartphone operating system, and the formats for the two platforms did not always align well. Moreover, it was not possible to track an individual user’s interactions with the app over time, for example, to assess how consistent their usage was. The importance of a more sophisticated individualised metric combining different aspects of user engagement (an ‘App Engagement Index’) is a current topic in the mHealth literature,19 and such a metric could be adopted in future research.

Evaluation of In Hand

As a result of this evaluation, we can describe how people used In Hand and the nature of its benefit on users’ mental well-being:

- Each interaction with the app was brief, but the majority of interactions were long enough to allow active engagement with the app.
- The majority of users interacted with the app more than once and, although use tapered off over time, there was a level of sustained use.
- In Hand supported users’ mental well-being by changing attitude and point of view, decreasing feelings of stress, increasing feelings of relaxation and supporting clear thinking.
- Users attributed these benefits to the app prompting them to actively reflect on their current mental state, providing an easily available strategy to ease anxiety and helping to build confidence and empowerment in how they coped with their mental health day to day.

These usage patterns are similar to those seen in other studies of digital interventions,20 especially the low retention rate for many but sustained use for some,4 which resembles the 1-9-90 rule observed in online forums.21 This rule suggests that, in online communities, the majority (90%) are passive users (‘lurkers’ who observe but do not actively participate), a minority (9%) are occasional contributors and an even smaller proportion (1%) are the most active participants, who are responsible for the majority of contributions.21 While other study designs can identify whether an effect is achieved, for example, increased emotional self-awareness,18 the methods adopted in this evaluation provide an understanding of how users perceive the benefits of a digital tool. The next stage in our proposed proportionate approach would be a closer examination of any adverse effects, together with further evaluation of value and benefit, if uptake of the tool proved sufficient to warrant it.

Clinical implications

This paper has demonstrated how simple, self-help digital tools can be evaluated in a proportionate and practical way. Triangulating data sources provided an understanding of how In Hand was used and the ways it can support mental well-being. In particular, the survey of naturally occurring users provided insights into the value of the app from the user perspective and, in this case, provided evidence that users perceived the app as having its intended effect. This is important for healthcare decision-makers, who need to be assured of the quality of an app before recommending it to patients. In addition, analytical data provide evidence of how and when a tool is being used (or not), which enables health providers and commissioners to judge the value of a tool and ensure that any cost is justified (not applicable in this case, as the tool is free to use). Furthermore, we have demonstrated cost-efficient, timely methods that can be easily incorporated into digital tools, providing scope for audits and service evaluations.

Acknowledgments

We would like to thank the In Hand team and their young persons' panel for their support and all the participants for their input and time. The research reported in this paper was conducted by the NIHR MindTech HTC and supported by the NIHR Nottingham BRC. The views represented are the views of the authors alone and do not necessarily represent the views of the Department of Health in England, the NHS or the National Institute for Health Research.

Footnotes

Contributors: MPC, JLM and LS conceived the study, collected and analysed the data and interpreted the results. All authors drafted and revised the manuscript and gave final approval of the version submitted for publication.

Funding: This study was funded by Comic Relief as part of Innovation Labs (a consortium of Comic Relief, Mental Health Foundation, Paul Hamlyn Trust and Nominet Trust).

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Hollis C, Falconer CJ, Martin JL, et al. Annual research review: digital health interventions for children and young people with mental health problems: a systematic and meta-review. J Child Psychol Psychiatry 2017;58:474–503. 10.1111/jcpp.12663

- 2. Littlewood E, Duarte A, Hewitt C, et al. A randomised controlled trial of computerised cognitive behaviour therapy for the treatment of depression in primary care: the Randomised Evaluation of the Effectiveness and Acceptability of Computerised Therapy (REEACT) trial. Health Technol Assess 2015;19:1–174. 10.3310/hta191010

- 3. Mohr DC, Weingardt KR, Reddy M, et al. Three problems with current digital mental health research… and three things we can do about them. Psychiatr Serv 2017;68:427–9. 10.1176/appi.ps.201600541

- 4. Pham Q, Wiljer D, Cafazzo JA. Beyond the randomized controlled trial: a review of alternatives in mHealth clinical trial methods. JMIR Mhealth Uhealth 2016;4:e107. 10.2196/mhealth.5720

- 5. Van Ameringen M, Turna J, Khalesi Z, et al. There is an app for that! The current state of mobile applications (apps) for DSM-5 obsessive-compulsive disorder, posttraumatic stress disorder, anxiety and mood disorders. Depress Anxiety 2017;34:526–39. 10.1002/da.22657

- 6. Hollis C, Morriss R, Martin J, et al. Technological innovations in mental healthcare: harnessing the digital revolution. Br J Psychiatry 2015;206:263–5. 10.1192/bjp.bp.113.142612

- 7. Kauer SD, Mangan C, Sanci L. Do online mental health services improve help-seeking for young people? A systematic review. J Med Internet Res 2014;16:e66. 10.2196/jmir.3103

- 8. Lewis TL, Wyatt JC. mHealth and mobile medical apps: a framework to assess risk and promote safer use. J Med Internet Res 2014;16:e210. 10.2196/jmir.3133

- 9. Perski O, Blandford A, West R, et al. Conceptualising engagement with digital behaviour change interventions: a systematic review using principles from critical interpretive synthesis. Transl Behav Med 2017;7:254–67. 10.1007/s13142-016-0453-1

- 10. Foresight Mental Capital and Wellbeing Project. Final project report – executive summary. London: The Government Office for Science, 2008.

- 11. Simons L, Craven M, Martin J. Learning from the labs 2: evaluating effectiveness. Nottingham: NIHR MindTech Healthcare Technology Co-operative, 2015.

- 12. Stewart-Brown S, Tennant A, Tennant R, et al. Internal construct validity of the Warwick-Edinburgh Mental Well-being Scale (WEMWBS): a Rasch analysis using data from the Scottish Health Education Population Survey. Health Qual Life Outcomes 2009;7:15. 10.1186/1477-7525-7-15

- 13. Clarke A, Friede T, Putz R, et al. Warwick-Edinburgh Mental Well-being Scale (WEMWBS): validated for teenage school students in England and Scotland. A mixed methods assessment. BMC Public Health 2011;11:487. 10.1186/1471-2458-11-487

- 14. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol 2006;3:77–101. 10.1191/1478088706qp063oa

- 15. Christensen H, Griffiths KM, Farrer L. Adherence in internet interventions for anxiety and depression. J Med Internet Res 2009;11:e13. 10.2196/jmir.1194

- 16. Bidargaddi N, Musiat P, Winsall M, et al. Efficacy of a web-based guided recommendation service for a curated list of readily available mental health and well-being mobile apps for young people: randomized controlled trial. J Med Internet Res 2017;19:e141. 10.2196/jmir.6775

- 17. Kenny R, Dooley B, Fitzgerald A. Feasibility of “CopeSmart”: a telemental health app for adolescents. JMIR Ment Health 2015;2:e22. 10.2196/mental.4370

- 18. Reid SC, Kauer SD, Hearps SJ, et al. A mobile phone application for the assessment and management of youth mental health problems in primary care: a randomised controlled trial. BMC Fam Pract 2011;12:131. 10.1186/1471-2296-12-131

- 19. Taki S, Lymer S, Russell CG, et al. Assessing user engagement of an mHealth intervention: development and implementation of the growing healthy app engagement index. JMIR Mhealth Uhealth 2017;5:e89. 10.2196/mhealth.7236

- 20. Hilvert-Bruce Z, Rossouw PJ, Wong N, et al. Adherence as a determinant of effectiveness of internet cognitive behavioural therapy for anxiety and depressive disorders. Behav Res Ther 2012;50:463–8. 10.1016/j.brat.2012.04.001

- 21. Nielsen J. The 90-9-1 rule for participation inequality in social media and online communities, 2006. www.nngroup.com/articles/participation-inequality/.