Abstract.

Reproducibly achieving proper implant alignment is a critical step in total hip arthroplasty procedures that has been shown to substantially affect patient outcome. In current practice, correct alignment of the acetabular cup is verified in C-arm x-ray images that are acquired in an anterior–posterior (AP) view. Favorable surgical outcome is, therefore, heavily dependent on the surgeon’s experience in understanding the 3-D orientation of a hemispheric implant from 2-D AP projection images. This work proposes an easy-to-use intraoperative component planning system based on two C-arm x-ray images. The system is combined with a 3-D augmented reality (AR) visualization that provides a real-time RGBD data overlay and simplifies impactor and cup placement according to the plan. We evaluate the feasibility of our system in a user study comprising four orthopedic surgeons at the Johns Hopkins Hospital and report errors in translation, anteversion, and abduction as low as 1.98 mm, 1.10 deg, and 0.53 deg, respectively. The promising performance of this AR solution suggests that deploying this system could eliminate the need for excessive radiation, simplify the intervention, and enable reproducibly accurate placement of acetabular implants.

Keywords: intraoperative planning, x-ray, augmented reality, RGBD camera, total hip arthroplasty

1. Introduction

1.1. Clinical Background

In total hip arthroplasty (THA), also referred to as total hip replacement, the damaged bone and cartilage are replaced with prosthetic components. The procedure relieves pain and disability with a high success rate. In 2010, there were THAs performed in the US. This number is projected to increase to 570,000 THAs by 2030, as younger patients and patients in developing countries are considered for THA.1 Together with a prolonged life expectancy, the consideration of younger, more active patients for THA suggests that implant longevity is of increasing importance, as it is associated with the time to revision arthroplasty.1 The time to repeat surgery is affected by the wear of the implants, which is correlated with their physical properties as well as with acetabular component positioning. Poor placement leads to increased impingement and dislocation, which promote accelerated wear. Conversely, proper implant placement that restores the hip anatomy and biomechanics decreases the risk of dislocation, impingement, loosening, and limb length discrepancy and thus reduces implant wear and revision rate.2–6 Steps to ensure accuracy and repeatability of acetabular component positioning are therefore essential. Due to the large volume of THA procedures, even small improvements in the risk-benefit profile of this procedure enabled by better implant positioning will have a significant impact at scale.

Unfortunately, optimal placement of the acetabular component is challenging for two main reasons. First, the ideal position of the implant with respect to the anatomy is unknown, although a general guideline exists7 and is widely accepted in clinical practice. This guideline defines a so-called safe zone of abduction and anteversion angles, measured with respect to bony landmarks, that is indicative of an acceptable outcome. Recent studies suggest that an even narrower safe zone may be necessary to minimize the risk of hip dislocation.8,9 When a large population is considered, defining the ideal implant position is not as straightforward as specifying a range of abduction and anteversion angles.10 A static definition of the safe zone seems even more prone to error given that the position of the pelvis varies dramatically among individuals between supine, sitting, and standing postures.11,12

Second, even if a clinically acceptable safe zone is known, it is questionable whether surgeons are, in fact, able to place the acetabular component within the suggested margin.9 Previous studies report malpositioning rates of 30% to 75%13–15 when free-hand techniques are used, so addressing this challenge seems imperative.

Most computer-assisted methods consider the direct anterior approach (DAA) to the hip for THA as it allows for convenient integration of intraoperative fluoroscopy to guide the placement of the acetabular component.16 The guidance methods reviewed below proved effective in reducing outliers and variability in component placement, which equates to more accurate implant positioning.17–20

1.2. Related Work

External navigation systems commonly rely on points on the anatomy of interest, selected by the surgeon, that are matched to a “map” of the known morphology of that anatomy. Although THA commonly uses x-ray images for navigation and preoperative patient CT may not be available, several computer-assisted THA solutions plan the desired pose of the acetabular component preoperatively on a CT scan of the patient.21,22 Preoperative CT imaging allows planning of the implants in 3-D, automatic estimation of the orientation of the natural acetabular opening, and prediction of the appropriate size of the cup implant.23

Navigation-based THA with external trackers is performed using either preoperative patient CT or image-less computer-assisted approaches. In a CT-based navigation approach, the planning outcome is used intraoperatively with an external optical navigation system to estimate the pose of the implant relative to the patient anatomy during the procedure. Tracking of the patient is commonly performed using fiducials that are drilled into the patient’s bones. Registration of the preoperative CT data to the patient on the surgical bed is performed by manually touching anatomical landmarks on the surface of the patient with a tracked tool.21 In addition to the paired-point transformation estimated from these few anatomical landmarks, several points are sampled on the surface of the pelvis and matched to the segmentation of the pelvis in the CT data.24 CT-based navigation showed statistically significant improvement in orienting the acetabular component and eliminating malpositioning, but resulted in increased blood loss, cost, and time for surgery.25,26 Combined simultaneous navigation of the acetabulum and the femur was used in 10 clinical tests, where postoperative imaging showed errors of 2.98 mm in cup position and 4.25 deg in cup orientation.27

Image-less navigation systems do not require any preoperatively acquired radiology data. In this method, the pelvic plane is located in 3-D solely by identifying anatomical landmarks on the surface of the patient with a tracked pointer tool and optically visible markers attached to the patient.28 This approach improved cup positioning.29 However, the small number of sample points used for registration, as well as pelvic tilt, resulted in unreliable registration.30

Robotic systems have been developed to provide the surgical team with additional confidence in placing implants during THA.31,32 In a robotic system, pins are implanted into the patient’s femur before a preoperative CT scan is acquired. After the surgeon has performed the planning on the CT data, the robot is introduced into the operating room. To close the registration loop between patient, robot, and CT volume, each preoperatively implanted pin is touched by the robot with manual support. To eliminate the need for fiducial implantation, registration is achieved either by selecting several points on the surface of the bone with a digitizer and registering them to the patient CT data with an iterative closest point algorithm,33 or by using intraoperative C-arm fluoroscopy and performing 2-D/3-D registration between the x-ray image and the CT volume.34 After the preoperative CT data are registered to the patient, the robot assists the surgeon in placing the femoral stem and the acetabular component according to the plan. The outcome of 97 robot-assisted THA procedures indicates performance similar to the conventional technique;35 however, in some cases, additional complications, such as nerve damage, postoperative knee effusion, incorrect orientation of the acetabular component, and deep reaming resulting in leg length discrepancy, were reported when the robotic system was used. To assist the surgeon in placing implants for joint replacement procedures, haptic technology was integrated into robotic solutions to maintain the orientation of the cup according to the preoperative plan and to control the operator’s movement.36

If preoperative CT is available, intensity-based 2-D/3-D registration can be used to evaluate and verify the positioning of the acetabular component postoperatively.22 This is done by recovering the spatial relation between a postoperative radiograph (2-D) and the preoperative patient CT data (3-D), followed by a registration of the 3-D CAD model of the component to the 2-D representation of the cup in the postoperative radiograph. To overcome the large variability in individual patient pelvic orientations and to eliminate the need for preoperative 3-D imaging, the use of deformable 2-D/3-D registration with statistical shape models was suggested.37

The aforementioned solutions perform well but require preoperative CT, which increases the time and cost of surgery and requires intraoperative registration to the patient.25,32 Zheng et al.38 proposed a CT-free approach for navigation in THA. The method relies on an external navigation system to track the C-arm, the surgical instruments used for placing the femoral and acetabular components, and the patient’s femur and pelvis. Multiple stereo C-arm fluoroscopy images are acquired intraoperatively. Anatomical landmarks are then identified both in these x-ray images and percutaneously using a point-based digitizer. Because the C-arm is tracked, the relative pose between the x-ray images is known; therefore, the anatomical landmarks can be triangulated from the images and reconstructed in 3-D. These points are later used to define the anterior pelvic plane and the center of rotation of the acetabulum. After the pelvis coordinate frame is estimated, the surgeon moves the impactor until the cup reaches the desired orientation in the anterior pelvic plane coordinate frame. This work reported subdegree and submillimeter accuracy in antetorsion, varus/valgus, and leg length discrepancy. Later, this system was tested in 236 hip replacement procedures, where maximum inclination and anteversion errors of 5 deg and 6 deg, respectively, were observed.39

The state-of-the-art approaches that provide guidance using image-less or image-based methods have certain drawbacks. Image-less methods require complex navigation and may provide unreliable registration.30 Image-based solutions rely on preoperative CT scans or intraoperative fluoroscopy and often use external navigation systems for tracking.40,41 Systems based on external navigation are expensive and increase the operative time due to the added complexity. Preoperative CT scans increase the radiation exposure of, and cost to, the patient. Moreover, many of the methods used for registering CT to the patient solve ill-posed problems that require manual interaction for initialization or landmark identification and thus disrupt the surgical workflow. Manual annotation can take 3 to 5 min per image registration during the intervention.21 Although proven beneficial for the surgical outcome, none of these costly and labor-intensive navigation techniques has been widely adopted in clinical practice.

Partly due to the above drawbacks, surgeons who use the DAA often rely solely on fluoroscopic image guidance.16,42 These images, however, are 2-D representations of a 3-D reality and have inherent flaws that complicate the assessment. The challenges include finding the true anterior pelvic plane and visually estimating the acetabular component position on the image. Therefore, a technique that provides a quantitative and reliable representation of the pelvis and acetabular component intraoperatively, without increasing radiation dose or cost and while largely preserving the procedural workflow, is highly desirable.

1.3. Proposed Solution

This work proposes an augmented reality (AR) solution for intraoperative guidance of THA using the DAA, where the C-arm is kept in place until the correct alignment of the acetabular cup is confirmed.43,44 With the proposed solution, the surgeon first plans the position of the acetabular cup intraoperatively on two fluoroscopy images that are acquired after dislocation of the femoral head and reaming of the acetabulum. The orientation of the cup in the x-ray images can be either preset automatically from desired angles relative to the anterior pelvic plane (APP) (or other known pelvic coordinate frames) or adjusted by the surgeon. Once the desired pose of the acetabular cup is estimated relative to the C-arm, we use optical information from the cocalibrated RGBD camera mounted on the C-arm to provide an AR overlay45–47 that enables placement of the cup according to the plan. As the cup is not visible to the RGBD camera, we exploit the fact that it is placed using an impactor that is rigidly attached to the cup and is well perceived by the RGBD camera. For accurate cup placement, the surgeon aligns the optical information of the impactor (a point cloud provided by the RGBD camera) with the planned virtual impactor and cup, which are visualized simultaneously in our AR environment. A schematic of the proposed clinical workflow is shown in Fig. 1.

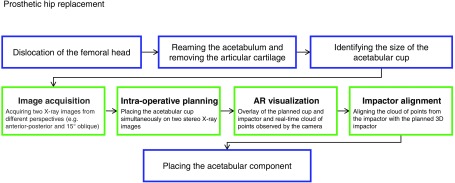

Fig. 1.

After the femoral head is dislocated, the size of the acetabular implant is identified based on the size of the reamer. Next, two C-arm x-ray images are acquired from two different perspectives. While the C-arm is repositioned to acquire a new image, the relative poses of the C-arm are estimated using the RGBD camera on the C-arm and a visual marker on the surgical bed. The surgeon then plans the cup position intraoperatively based on these two stereo x-ray images simultaneously. Next, the pose of the planned cup and impactor is estimated relative to the RGBD camera. This pose is used to place the cup in a correct geometric relation with respect to the RGBD camera and visualize it in an AR environment. Finally, the surgeon observes real-time optical information from the impactor and aligns it with the planned impactor using the AR visualization. The green boxes in this figure highlight the contributions of this work.

2. Methodology

The AR environment for THA requires a cocalibrated RGBD-C-arm (Sec. 2.1). Whenever the C-arm is repositioned, the RGBD camera on the C-arm tracks and estimates C-arm relative extrinsic parameters (Sec. 2.2). During the intervention, two x-ray images are recorded at different poses together with the respective extrinsic parameters and are used for intraoperative planning of the component (Sec. 2.3). Last, an AR environment is provided for the placement of the cup that comprises surface meshes of a virtual cup and impactor displayed in the pose obtained by intraoperative planning, overlaid with the real-time cloud of points from the surgical site acquired by the RGBD camera (Sec. 2.4).

2.1. Cocalibration of the RGBD-C-Arm Imaging Devices

The cocalibration of the RGBD camera and the x-ray imaging devices is performed using a multimodal checkerboard pattern. In this hybrid checkerboard pattern, each black square is backed with a radiopaque thin metal square of the same size.48 Calibration data are acquired by simultaneously recording RGB and x-ray image pairs of the checkerboard at various poses. Next, we estimate the intrinsic parameters for both the RGB channel of the RGBD sensor and the x-ray imaging device. Using these intrinsic parameters, we estimate the 3-D locations of each checkerboard corner $i$, $\mathbf{x}_i^{\mathrm{RGB}}$ and $\mathbf{x}_i^{X}$, in the RGB and x-ray coordinate frames, respectively. The stereo relation between the x-ray and RGB imaging devices is then estimated via least-squares minimization:

$$ {}^{X}T_{\mathrm{RGB}} = \arg\min_{T} \sum_{i} \left\| \mathbf{x}_i^{X} - T\,\mathbf{x}_i^{\mathrm{RGB}} \right\|_2^2 \tag{1} $$

The stereo relation between the RGB and IR (or depth) channels of the RGBD sensor is provided by the manufacturer. To simplify the notation, we use ${}^{X}T_{\mathrm{RGBD}}$, which embeds the relation between the RGB, depth, and x-ray imaging devices. We assume that both the extrinsic parameters between the x-ray and RGBD devices and the intrinsic parameters of the x-ray device remain constant; however, both quantities are subject to minor changes as the C-arm rotates to different angles, an observation that is further discussed in Sec. 4. Figure 2(a) illustrates the spatial relation between the RGBD camera and the x-ray source.
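Eq. (1) is a rigid point-set registration problem, so it admits a closed-form least-squares solution. The sketch below uses the SVD-based (Kabsch) method as one standard way to solve it; the source does not state which solver was used, and the function name `estimate_rigid_transform` is hypothetical. It assumes that the corner coordinates in both frames are correctly paired and expressed in the same metric units.

```python
import numpy as np


def estimate_rigid_transform(p_src, p_dst):
    """Least-squares rigid transform T (4x4) with p_dst ~= R @ p_src + t.

    p_src: (N, 3) checkerboard corners in the source frame (e.g. RGB)
    p_dst: (N, 3) the same corners in the target frame (e.g. x-ray)
    """
    p_src = np.asarray(p_src, dtype=float)
    p_dst = np.asarray(p_dst, dtype=float)
    c_src, c_dst = p_src.mean(axis=0), p_dst.mean(axis=0)

    # Cross-covariance of the centred point sets
    H = (p_src - c_src).T @ (p_dst - c_dst)
    U, _, Vt = np.linalg.svd(H)

    # Guard against a reflection so that det(R) = +1
    d = 1.0 if np.linalg.det(Vt.T @ U.T) > 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = c_dst - R @ c_src

    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T
```

Called with RGB-frame corners as `p_src` and x-ray-frame corners as `p_dst`, the function returns an estimate of ${}^{X}T_{\mathrm{RGB}}$. A closed form suits this step because the calibration is computed offline and needs no initial guess.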

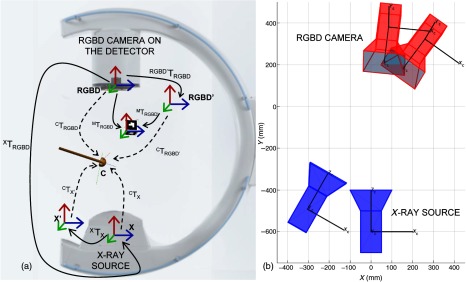

Fig. 2.

In the transformation chain of the RGBD-C-arm system for THA (a), the RGBD, x-ray, visual marker, and acetabular cup coordinate frames are denoted as RGBD, X, M, and C, respectively. In an offline calibration step, the extrinsic relation between the RGBD and x-ray (${}^{X}T_{\mathrm{RGBD}}$) is estimated. Once this constant relation is known, the pose of the x-ray source can be estimated for every C-arm repositioning (b) by identifying displacements in the RGBD camera coordinate frame.

2.2. Vision-Based Estimation of C-Arm Extrinsic Parameters

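The stereo relation between C-arm x-ray images acquired at different poses is estimated by first tracking visual markers in the RGBD camera coordinate frame and then transforming the tracking outcome to the x-ray coordinate frame:

$$ {}^{X_2}T_{X_1} = {}^{X}T_{\mathrm{RGBD}}\;{}^{\mathrm{RGBD}_2}T_{M}\;\left({}^{\mathrm{RGBD}_1}T_{M}\right)^{-1}\left({}^{X}T_{\mathrm{RGBD}}\right)^{-1} \tag{2} $$

where ${}^{\mathrm{RGBD}_k}T_{M}$ is the pose of the visual marker M observed by the RGBD camera at C-arm pose $k$, and ${}^{X_1}T_{\mathrm{RGBD}_1} = {}^{X_2}T_{\mathrm{RGBD}_2} = {}^{X}T_{\mathrm{RGBD}}$ due to the rigid mounting of the RGBD camera on the C-arm gantry. In Fig. 2(b), the rigid movement of the x-ray source together with the RGBD camera origin is shown for an arbitrary C-arm orbit.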
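Eq. (2) is a chain of 4x4 homogeneous transforms. As a sketch, assuming the convention that ${}^{A}T_{B}$ maps points from frame B into frame A, the relative x-ray pose can be composed from the constant calibration and the two marker poses reported by the tracker. The tracker itself is not modeled here, and the function names are hypothetical.

```python
import numpy as np


def invert(T):
    """Invert a 4x4 rigid transform using R^T and -R^T t."""
    R, t = T[:3, :3], T[:3, 3]
    Ti = np.eye(4)
    Ti[:3, :3] = R.T
    Ti[:3, 3] = -R.T @ t
    return Ti


def relative_xray_pose(T_x_rgbd, T_rgbd1_m, T_rgbd2_m):
    """Return X2_T_X1, mapping points from x-ray frame 1 into x-ray frame 2.

    T_x_rgbd  : constant RGBD-to-x-ray calibration (Eq. 1)
    T_rgbd1_m : marker pose in the RGBD frame at C-arm pose 1
    T_rgbd2_m : marker pose in the RGBD frame at C-arm pose 2
    """
    # X1 -> RGBD1 -> M -> RGBD2 -> X2
    return T_x_rgbd @ T_rgbd2_m @ invert(T_rgbd1_m) @ invert(T_x_rgbd)
```

The rigid inverse uses the transpose of the rotation block instead of a general matrix inversion. If the marker pose does not change between the two acquisitions, the result is the identity, as expected for an unmoved C-arm.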

2.3. Intraoperative Planning of the Acetabular Cup on Two x-ray Images

Planning of the acetabular component is performed in a user interface in which the surgeon rotates and translates the cup in 3-D with six degree-of-freedom (DoF) rigid parameters; the cup is forward projected ($\mathbf{u}_{1,i}$ and $\mathbf{u}_{2,i}$) onto the planes of the two x-ray images acquired from different perspectives:

$$ \mathbf{u}_{j,i} = K_j\,P\,{}^{X_j}T_{W}\,\mathbf{v}_i, \qquad j \in \{1, 2\}, \qquad {}^{X_2}T_{W} = {}^{X_2}T_{X_1}\,{}^{X_1}T_{W} \tag{3} $$

where $K_1$ and $K_2$ are the intrinsic perspective projection parameters for each C-arm image, $P$ is a projection operator, ${}^{X_j}T_{W}$ maps the world coordinate frame into x-ray frame $j$ (with ${}^{X_2}T_{X_1}$ from Eq. (2)), and $\mathbf{v}_i$ is the position of vertex $i$ of the cup in the world coordinate frame. Relying on two x-ray views not only makes it possible to plan the orientation of the acetabular component such that it is aligned in both images but, more importantly, also allows the depth of the cup to be adjusted correctly, which is not possible with a single x-ray image. Note that the size of the acetabular cup does not require adjustment, as it is known at this stage of the procedure: it is selected to match the size of the reamer.
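The forward projection in Eq. (3) can be sketched with an ideal pinhole model; ignoring lens and image-intensifier distortion is an assumption of this sketch. It also illustrates why a second view is needed to fix the cup depth: two points on the same ray through the first x-ray source land on the same pixel in view 1 but on different pixels in a second, displaced view. The function name `project` is hypothetical.

```python
import numpy as np


def project(K, T_x_w, pts_w):
    """Project (N, 3) world points into one x-ray image (ideal pinhole).

    K     : 3x3 intrinsic matrix of this view
    T_x_w : 4x4 pose mapping world points into this x-ray source frame
    Returns (N, 2) pixel coordinates.
    """
    pts_w = np.asarray(pts_w, dtype=float)
    pts_h = np.hstack([pts_w, np.ones((len(pts_w), 1))])  # homogeneous coords
    pts_x = (T_x_w @ pts_h.T)[:3]   # 3xN points in the x-ray source frame
    uvw = K @ pts_x                 # perspective projection
    return (uvw[:2] / uvw[2]).T     # divide by depth to obtain pixels
```

For instance, with view 1 as the world frame (`T_x_w = np.eye(4)`), the points `(10, 5, 500)` and `(15, 7.5, 750)` project to the same pixel in view 1, whereas a second view rotated by 0.5 rad about the y-axis and shifted by 200 mm separates them.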

In addition, if the desired orientation of the cup is known relative to an anatomical coordinate frame (e.g., the APP) and an x-ray image is acquired from a known perspective in relation to that anatomical frame (e.g., an AP view), then the orientation of the cup can be adjusted automatically for the user (equivalent to presetting the rotational parameters of the cup's six-DoF pose). Notably, in several image-guided orthopedic procedures, x-ray images are frequently acquired from the AP view.

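The transparency of the cup is adjusted by the surgeon in the user interface such that the ambiguity between the front and the back of the cup is best resolved. Finally, the contours around the edge of the cup are estimated and visualized by thresholding the dot product of the unit surface normal $\hat{\mathbf{n}}_i$ at vertex $i$ and the unit intersecting ray $\hat{\mathbf{r}}_i$ from the x-ray source through that vertex:

$$ \left| \hat{\mathbf{n}}_i \cdot \hat{\mathbf{r}}_i \right| < \epsilon \tag{4} $$

where $\epsilon$ is a small threshold; vertices satisfying Eq. (4) are viewed at grazing incidence and therefore lie on the projected contour of the cup.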
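Eq. (4) can be sketched as a per-vertex test: a vertex belongs to the contour when the viewing ray from the x-ray source grazes the surface, i.e., when the normal is nearly perpendicular to the ray. The threshold value and the function name below are hypothetical; the source does not report the threshold that was used.

```python
import numpy as np


def contour_mask(vertices, normals, source, eps=0.1):
    """Flag mesh vertices that lie on the projected contour of the cup.

    vertices, normals : (N, 3) vertex positions and normals in the x-ray frame
    source            : (3,) x-ray source position in the same frame
    eps               : threshold on |n . r| (hypothetical default)
    """
    rays = np.asarray(vertices, dtype=float) - np.asarray(source, dtype=float)
    rays = rays / np.linalg.norm(rays, axis=1, keepdims=True)
    n = np.asarray(normals, dtype=float)
    n = n / np.linalg.norm(n, axis=1, keepdims=True)
    # Near-zero dot product: the ray grazes the surface, so the vertex is on the contour
    return np.abs(np.einsum("ij,ij->i", n, rays)) < eps
```

Taking the absolute value makes the test independent of whether a normal points toward or away from the source, so front- and back-facing rim vertices are treated alike.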

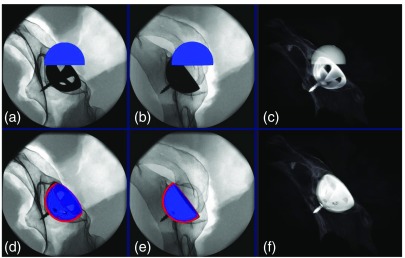

The planning of an acetabular cup based on two x-ray images is shown in Fig. 3.

Fig. 3.

(a) and (b) The acetabular component is forward projected from an initial 3-D pose onto the respective x-ray image plane. (c) and (d) The surgeon moves the cup until satisfied with the alignment in both views. The x-ray images shown here are acquired from a dry pelvis phantom encased in gelatin. (e) A cubic visual marker is placed near the phantom but outside the x-ray field of view to track the C-arm.

2.4. Augmented-Reality Visualization

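Once the desired cup position is known, guidance of cup placement with the impactor through an AR visualization is needed to ensure positioning in agreement with the plan. To construct the AR environment, we first estimate the pose of the RGBD sensor relative to the planned cup as follows:

$$ {}^{C}T_{\mathrm{RGBD}} = \left({}^{X}T_{C}\right)^{-1}\,{}^{X}T_{\mathrm{RGBD}} \tag{5} $$

where ${}^{X}T_{C}$ is the planned cup pose expressed in the x-ray source frame of the current C-arm pose.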

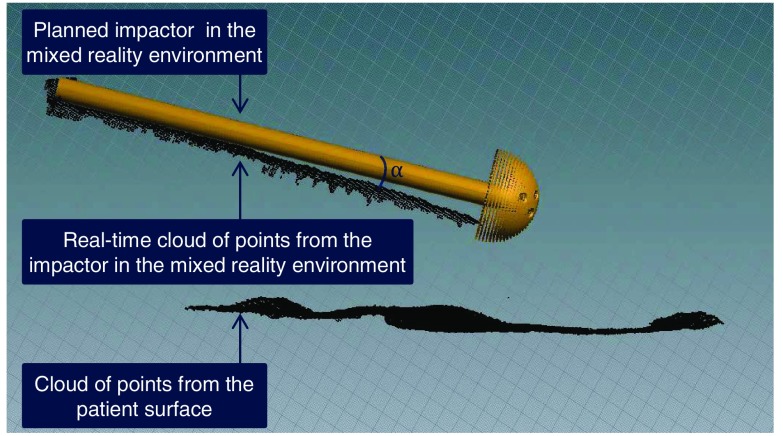

Within the AR environment, we then render 3-D meshes of the cup and impactor superimposed on the real-time point cloud observed by the camera, all in the RGBD coordinate frame. In the interventional scenario, the acetabular cup is hidden under the skin and only the impactor is visible. Therefore, the surgeon aligns only the point cloud of the impactor, a cylindrical object, with the 3-D virtual representation of the planned impactor.

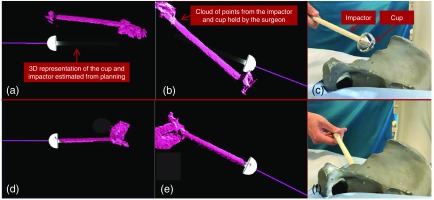

Ambiguities in the AR environment, such as occlusions or those caused by rendering a 3-D scene on a 2-D display, are resolved by showing different perspectives of the scene simultaneously. Once the current point cloud of the impactor and the planned model are aligned in all perspectives, the surgeon’s execution matches the plan. We provide an intuitive illustration of these relations in Fig. 4.

Fig. 4.

(a) and (b) Multiple virtual perspectives of the surgical site are shown to the surgeon (c) before the cup is aligned. (d)–(f) The impactor is then moved by the user until it completely overlaps with the virtual planned impactor.

To visualize only the moving objects (e.g., the surgeon’s hands and tools), background subtraction of point clouds is performed with respect to the first static frame observed by the RGBD camera. Note that in an image-guided DAA procedure, most tools other than the impactor, such as retractors, are removed before the acetabular component is placed; therefore, important details on the fluoroscopy image are not occluded.
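The background subtraction described above operates on point clouds. The sketch below swaps in a simpler per-pixel variant on organized depth images, which is an assumption rather than the authors' method: a pixel is kept as foreground when it has valid depth in both frames and is noticeably closer to the camera than in the first static frame. The 15 mm threshold and the function name are hypothetical.

```python
import numpy as np


def foreground_mask(depth, background, min_change_mm=15.0):
    """Mark depth pixels that moved toward the camera relative to a static frame.

    depth, background : (H, W) depth images in millimetres, 0 = invalid
    min_change_mm     : hypothetical threshold separating noise from motion
    """
    depth = np.asarray(depth, dtype=float)
    background = np.asarray(background, dtype=float)
    valid = (depth > 0) & (background > 0)
    # Hands and tools occlude the static scene, so they appear closer than it
    closer = (background - depth) > min_change_mm
    return valid & closer
```

Keeping only pixels that moved toward the camera suppresses small depth fluctuations in the static scene while retaining hands and the impactor; the masked pixels can then be back-projected into the point cloud that is rendered in the AR view.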

3. Evaluation and Results

3.1. System Setup

In the DAA for THA, the detector is commonly positioned above the surgical bed, an orientation of the C-arm that is considered to reduce scattered radiation to the surgical crew. We therefore modified the C-arm by mounting the RGBD camera near the detector plane, which allows the detector to remain above the bed. The mount for the RGBD camera extends nearly 5.00 cm from the C-arm detector in the XY plane (Z being the principal axis of the x-ray camera) and is screwed to a straight metal plate that is rigidly attached to the image intensifier. Because the RGB camera is used for pose estimation of the C-arm while the depth camera is used for point cloud observation, the RGBD camera must be angled such that as much of the surgical site as possible is visible in both the RGB and depth views. The RGBD sensor is therefore angled to have a direct view of the surgical site, with its principal axis passing close to the isocenter of the C-arm.

The impactor used for testing is a straight, cylindrical acetabular trialing impactor from Smith and Nephew. For intraoperative x-ray imaging, we use an Arcadis Orbic 3-D C-arm (Siemens Healthcare GmbH, Forchheim, Germany) with an isocentric design and an image intensifier. The RGBD camera is a short-range Intel RealSense SR300 (Intel Corporation, Santa Clara, California), which combines depth sensing with HD color imaging. Data are transferred from the C-arm and the RGBD camera to the development PC via Ethernet and powered USB 3.0 connections, respectively.

The AR visualization is implemented as a plug-in application in ImFusion Suite using the ImFusion software development kit (ImFusion GmbH, Germany). We use ARToolKit for visual marker tracking.49

3.2. Experimental Results

3.2.1. Stereo cocalibration of the RGBD and x-ray cameras

Offline stereo cocalibration between the x-ray source and the RGBD camera using 22 image pairs yields a mean reprojection error of 1.10 pixels. Individual mean reprojection errors for x-ray and RGBD cameras are 1.46 and 0.74 pixels, respectively.

3.2.2. Accuracy in tracking x-ray poses

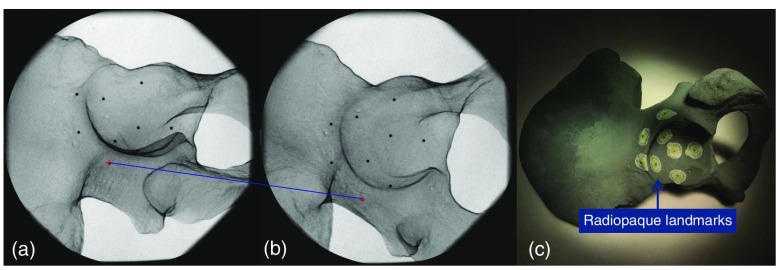

Tracking accuracy is computed by acquiring x-ray images from a phantom with several radiopaque landmarks and measuring the stereo error between the corresponding landmark points in different images.

The phantom is constructed by attaching nine radiopaque mammography skin markers (bbs), each 1.5 mm in diameter, inside and near the acetabulum of a pelvis model, as shown in Fig. 5. Next, we acquired 11 x-ray images from to along the C-arm oblique rotation and 9 x-ray images from to in the cranial/caudal direction, at intervals of 10 deg. In the planning software, we placed a virtual sphere with the same diameter as the bbs on each bb landmark and measured the distance between the bb in the second image and the epipolar line induced by the center of the corresponding virtual sphere in the first image. The error distance is measured as (values reported as ) in an x-ray image with pixel size of and pixel spacing of . In addition, we acquired a cone-beam CT (CBCT) scan of the phantom and measured a root-mean-square error of 1.37 mm between the bbs in the CBCT volume and those reconstructed from two x-ray images.
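Both measurements in this experiment can be sketched with standard two-view geometry: the pixel distance from a bb to the epipolar line induced by its counterpart in the other image, and a linear (DLT) triangulation that reconstructs a bb in 3-D for comparison with CBCT. The sketch assumes ideal pinhole intrinsics and the relative pose ${}^{X_2}T_{X_1}$ from Eq. (2); the function names are hypothetical, and the source does not state which reconstruction method the authors used.

```python
import numpy as np


def skew(t):
    """Cross-product matrix [t]_x."""
    return np.array([[0.0, -t[2], t[1]], [t[2], 0.0, -t[0]], [-t[1], t[0], 0.0]])


def fundamental(K1, K2, T21):
    """F with x2^T F x1 = 0; T21 maps frame-1 points into frame 2."""
    R, t = T21[:3, :3], T21[:3, 3]
    E = skew(t) @ R                                   # essential matrix
    return np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)


def epipolar_distance(F, x1, x2):
    """Pixel distance from x2 (image 2) to the epipolar line of x1 (image 1)."""
    l2 = F @ np.append(x1, 1.0)                       # line a*u + b*v + c = 0
    return abs(l2 @ np.append(x2, 1.0)) / np.hypot(l2[0], l2[1])


def triangulate(K1, K2, T21, x1, x2):
    """Linear (DLT) triangulation of one point, returned in frame 1."""
    P1 = K1 @ np.hstack([np.eye(3), np.zeros((3, 1))])
    P2 = K2 @ T21[:3, :]
    A = np.vstack([x1[0] * P1[2] - P1[0], x1[1] * P1[2] - P1[1],
                   x2[0] * P2[2] - P2[0], x2[1] * P2[2] - P2[1]])
    X = np.linalg.svd(A)[2][-1]                       # null vector of A
    return X[:3] / X[3]
```

With noise-free correspondences, the epipolar distance is zero and the triangulated point coincides with the true bb position; in practice, both quantities absorb calibration and tracking errors, which is what the experiment measures.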

Fig. 5.

(a) and (b) The geometric error is measured using radiopaque bbs viewed in the stereo x-ray images. (c) The blue line highlights a pair of corresponding bbs in the two images. The phantom is shown in panel (c).

3.2.3. Planning accuracy in placing the acetabular component using two views

To measure 3-D errors and to ensure that the cup can be placed precisely in two x-ray images during planning, we construct a dry phantom in which an implant cup is screwed into the acetabulum. The implant cup is therefore clearly visible in the x-ray images and serves as a reference for the desired placement. In our experiments, a virtual cup of the same size as the implant, shown in Fig. 6, must be aligned precisely with the previously implanted cup that is visible in the x-ray images. To evaluate the 3-D error, we acquire a CBCT scan of the phantom and measure the error between the planning outcome and the ground-truth pose. This yields a mean translation error of 1.71 mm and anteversion and abduction errors of 0.21 deg and 0.88 deg, respectively.
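The anteversion and abduction errors above compare two cup axes. The source does not spell out its angle convention; the sketch below assumes the radiographic definitions commonly attributed to Murray (inclination: angle between the longitudinal axis and the cup axis projected onto the coronal plane; anteversion: angle between the cup axis and the coronal plane), expressed in a hypothetical APP-aligned pelvic frame with x lateral, y anterior, and z longitudinal. Signs are discarded with `abs`, so the axis may point either way. The function names are hypothetical.

```python
import numpy as np


def cup_axis(inclination_deg, anteversion_deg):
    """Unit cup axis in the assumed pelvic frame from radiographic angles."""
    ri, ra = np.radians([inclination_deg, anteversion_deg])
    return np.array([np.sin(ri) * np.cos(ra),   # lateral component
                     np.sin(ra),                # anterior component
                     np.cos(ri) * np.cos(ra)])  # longitudinal component


def radiographic_angles(axis):
    """Inverse of cup_axis: (inclination, anteversion) in degrees."""
    a = np.asarray(axis, dtype=float)
    a = a / np.linalg.norm(a)
    anteversion = np.degrees(np.arcsin(abs(a[1])))              # angle to coronal plane
    inclination = np.degrees(np.arctan2(abs(a[0]), abs(a[2])))  # coronal projection vs. z
    return inclination, anteversion
```

For the ground-truth cup of Sec. 3.2.4 (abduction 40 deg, anteversion 25 deg), `radiographic_angles(cup_axis(40.0, 25.0))` recovers both values up to floating-point rounding; the angular errors are then differences of these angles between the planned and achieved axes.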

Fig. 6.

An implant cup is placed inside the acetabulum, and two x-ray images and a CBCT scan are acquired using the C-arm. (a)–(c) The x-ray and CBCT images before planning. (d)–(f) The overlay of the real and virtual cup after proper alignment is shown.

3.2.4. Preclinical feasibility study of acetabular component planning using stereo x-ray imaging

In image-guided DAA hip arthroplasty, the proper alignment of the acetabular component is frequently inferred from AP x-ray images.50 Thus, the accuracy of estimating the 3-D pose from a single 2-D image depends heavily on the surgeon’s experience. In this experiment, we seek to demonstrate the clinical feasibility of our solution, which is based on stereo x-ray imaging, and compare the outcome with image-guided DAA solutions that use only AP x-ray images for guidance. We refer to the latter as “classic DAA.” Although the use of a single AP radiograph and the anterior pelvic plane coordinate system has certain drawbacks, the anterior pelvic plane is the frame of reference most commonly used in computer-assisted THA solutions.51 While alternatives exist (e.g., the coronal plane), using the anterior pelvic plane as the frame of reference enables direct comparison with the current literature.

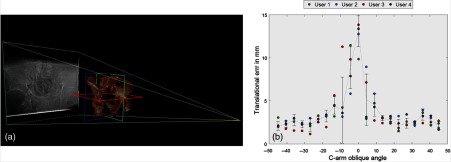

We conduct a preclinical user study, where medical experts use the planning software to place acetabular cups on simulated stereo x-ray images. These results are then compared with the conventional AP-based method considering orientational error in abduction and anteversion.

For the user study, simulated x-ray images, so-called digitally reconstructed radiographs (DRRs), are produced from cadaver CT data. We generate 21 DRRs of the hip area, starting at and ending at with increments of on the orbital oblique axis of the C-arm, where 0 deg refers to an AP image. In each trial, the users are given a randomly selected DRR together with the DRR corresponding to the AP view and are asked to place the acetabular cup such that it is properly aligned in both views.

Because the spatial configuration of the DRRs is known relative to the APP, we can compute the correct rotation of the acetabular component and preset this orientation for the cup in the planning software. This is possible whenever an AP image is acquired during the intervention and the desired orientation of the component is known relative to the anterior pelvic plane, which allows the rotational DoF to be locked. When the orientation is preset, the user only has to adjust the translational components, substantially reducing the task load. Presetting the orientation of the cup is, evidently, only possible if the x-ray pose is known relative to the APP or the AP image.

Four orthopedic surgery residents from the Johns Hopkins Hospital participated in the user study. The translational error in placing the cup is shown in Fig. 7. The abduction and anteversion errors are zero as a result of presetting the desired angles. The abduction and anteversion adjusted by the users solely on the AP image (classic DAA) are and , respectively. The ground truth for these statistics is the five-DoF pose of the cup in the CT data (because the cup is a symmetric hemisphere, one DoF, i.e., rotation about the symmetry axis, is redundant), with abduction and anteversion angles of 40 deg and 25 deg, respectively.

Fig. 7.

(a) DRRs were generated from to around the AP view. Participants were each time given two images, where one was always AP and the other one was generated from a different view. (b) The translational errors are shown for all four participants. Note that 0 deg in the horizontal axis refers to where the user performed planning on only the AP x-ray image.

3.2.5. Error evaluation in the augmented reality environment

To evaluate the agreement between surgeons’ actions in the AR environment with their intraoperative planning, we measure the orientational error of the impactor after placement with respect to its planning.

The angle between the principal axes of the observed and planned impactors in the AR environment is measured, as shown in Fig. 8. We repeat this experiment for 10 different poses, each time using four virtual perspectives of the surgical site. The orientational error is .
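The orientational error in the AR environment is the angle between two axes. How the impactor axis is extracted from the point cloud is not stated; the sketch below assumes a principal component analysis (PCA) of the segmented impactor points, which suits a long cylindrical object, and compares the result with the planned axis while ignoring the axis direction. The function names are hypothetical.

```python
import numpy as np


def principal_axis(points):
    """Dominant direction of an (N, 3) point cloud (first principal component)."""
    pts = np.asarray(points, dtype=float)
    centred = pts - pts.mean(axis=0)
    # Right singular vector with the largest singular value
    return np.linalg.svd(centred, full_matrices=False)[2][0]


def axis_angle_deg(a, b):
    """Unsigned angle in degrees between two axes; direction sign is ignored."""
    c = abs(np.dot(a, b)) / (np.linalg.norm(a) * np.linalg.norm(b))
    return np.degrees(np.arccos(np.clip(c, 0.0, 1.0)))
```

Because an SVD may return either sign of a singular vector, taking the absolute value of the dot product keeps the error in the range 0 to 90 deg; clipping protects `arccos` against rounding slightly above 1.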

Fig. 8.

The angle between the principal axis of the virtual impactor and the cloud of points represents the orientation error in the AR environment.

After the cup is placed in the acetabulum using AR guidance, we acquire a CBCT scan of the cup and measure the translation, abduction, and anteversion errors compared with a ground-truth CBCT as 1.98 mm, 1.10 deg, and 0.53 deg, respectively.

4. Discussion and Conclusion

We propose an AR solution based on intraoperative planning for easy and accurate placement of acetabular components during THA. Planning requires no preoperative data and is performed on only two stereo x-ray images. If either of the two x-ray projections is acquired from an AP perspective, the correct orientation of the cup can be set automatically, thus reducing the task load of the surgeon and promoting more accurate implant placement.

Our AR environment is built upon an RGBD enhanced C-arm, which enables visualization of 3-D optical information from the surgical site superimposed with the planning target. Ultimately, accurate cup placement is achieved by moving the impactor until it is fully aligned with the desired planning.

Experimental results indicate that the anteversion and abduction errors are reduced substantially compared with the classic DAA approach. The translational error is below 3 mm provided that the lateral opening between the two images is larger than 18 deg. All surgeons participating in the user study considered presetting the cup orientation useful and valid, since access to AP images in the operating room (OR) is a well-founded assumption. Nonetheless, the authors believe that a pose-aware RGBD augmented C-arm52 could, in the future, help the surgeon acquire and confirm true AP images while accounting for tilt of the supine pelvis in different planes.

The translational and orientational errors of the proposed AR solution are 1.98 mm and 1.22 deg, respectively. These are lower than those of the navigation-based system proposed by Sato et al.,27 which reported a translational error of 2.98 mm and an orientational error of 4.25 deg. These results motivate further research. This includes user studies on cadaveric specimens and quantification of changes in operating time, number of required x-ray images, dose, accuracy, and surgical task load compared with classic image-guided approaches.

In classic DAA hip arthroplasty, correct translation of the cup is achieved naturally by placing the acetabular component inside the acetabulum. The impactor is then pivoted about the acetabulum until the cup reaches the proper orientation. For our proposed solution to provide reliable guidance, however, both the translational and the orientational alignment need to be planned.

In addition to presetting the cup orientation during planning, the surgeon can adjust all six rigid-body DoF of the component. In the proposed AR paradigm, however, two DoF are redundant: (1) rotation about the symmetry axis of the cup and (2) translation along the acetabular axis.
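The first redundancy can be checked numerically. A rotation about the cup's symmetry axis maps that axis onto itself, so the axis is unchanged, and so is any orientation measure derived from it. The sketch uses Rodrigues' rotation formula; the axis and angle values are arbitrary examples.

```python
import math


def rotation_about_axis(axis, angle_rad):
    """Rodrigues' formula: 3x3 rotation by angle_rad about a unit axis."""
    x, y, z = axis
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    C = 1.0 - c
    return [
        [c + x * x * C, x * y * C - z * s, x * z * C + y * s],
        [y * x * C + z * s, c + y * y * C, y * z * C - x * s],
        [z * x * C - y * s, z * y * C + x * s, c + z * z * C],
    ]


def rotate(R, v):
    """Apply a 3x3 rotation matrix to a 3-D vector."""
    return tuple(sum(R[i][j] * v[j] for j in range(3)) for i in range(3))


# Example: an arbitrary unit symmetry axis is invariant under any roll about it.
cup_axis = (1 / 3, 2 / 3, 2 / 3)
print(rotate(rotation_about_axis(cup_axis, 0.7), cup_axis))
```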

The RGBD camera on the C-arm is a short-range camera that allows detection at close distances. Its RGB channel is used for tracking visual markers, and its depth channel enables AR. The field of view of the RGBD camera is larger than that of the x-ray imaging system. Visual markers can therefore be placed outside the x-ray field of view so that they do not obscure the anatomy in the x-ray image.
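The depth channel enables AR by turning each depth pixel into a 3-D point that can be rendered together with the virtual plan. The sketch below back-projects a depth image under stated assumptions: an ideal pinhole depth camera with hypothetical intrinsics fx, fy, cx, cy, depth values in millimetres, and zero marking invalid pixels. A real sensor pipeline may additionally need lens-distortion correction and depth-to-color registration. No specific sensor SDK is assumed.

```python
def depth_to_points(depth, fx, fy, cx, cy, depth_scale=0.001):
    """Back-project a depth image into 3-D points in the depth camera frame.

    depth: 2-D list of raw depth values indexed as depth[v][u] (row-major);
    zero marks invalid pixels, which are skipped. depth_scale converts raw
    units to metres (0.001 assumes millimetre-valued depth maps).
    """
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d <= 0:
                continue  # no valid measurement at this pixel
            z = d * depth_scale
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


# Hypothetical 2x2 depth map and intrinsics, for illustration only.
print(depth_to_points([[0, 1000], [2000, 0]], fx=500.0, fy=500.0, cx=0.5, cy=0.5))
```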

The visual marker is introduced into the surgical scene only for a short interval between the acquisitions of the two x-ray images. External visual markers could be avoided altogether by incorporating RGBD-based simultaneous localization and mapping to track the surgical site.52 Alternatively, the impactor, which is a cylindrical object, could serve as a fiducial for vision-based inside-out tracking. Note that surgical tools with shiny surfaces reflect the infrared (IR) beam of the depth sensor. The impactor can therefore only be tracked reliably if its surface has a matte finish or is covered with a nonreflective adhesive material.
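The marker is only needed between the two acquisitions, so its role can be summarized as providing the relative pose of the C-arm between them. The following is a minimal sketch under three assumptions: the marker stays static, a marker tracker returns its 4x4 pose in the camera frame at both C-arm positions, and a fixed camera-to-x-ray-source cocalibration transform X is known. All names are ours, for illustration only.

```python
def matmul(A, B):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rigid_inverse(T):
    """Inverse of a 4x4 rigid transform [R t; 0 1], i.e. [R^T -R^T t; 0 1]."""
    R = [[T[j][i] for j in range(3)] for i in range(3)]  # transpose of R
    t = [-sum(R[i][k] * T[k][3] for k in range(3)) for i in range(3)]
    return [R[0] + [t[0]], R[1] + [t[1]], R[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def relative_xray_pose(T_c1_m, T_c2_m, X):
    """Transform mapping points from x-ray source frame 1 to frame 2.

    T_ci_m: marker-to-camera transform observed at C-arm pose i (static marker).
    X: fixed camera-to-x-ray-source cocalibration transform.
    """
    T_c2_c1 = matmul(T_c2_m, rigid_inverse(T_c1_m))  # camera 1 -> camera 2
    return matmul(matmul(X, T_c2_c1), rigid_inverse(X))  # conjugate into x-ray frames
```

Conjugating with X works because the camera is rigidly mounted on the C-arm. The camera motion and the x-ray source motion are then the same rigid motion, expressed in two different frames.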

The 3-D hemispheric virtual cup is projected onto the x-ray image plane using the intrinsic parameters of the x-ray imaging system, which are estimated during checkerboard calibration. However, the focal length and principal point can change slightly across C-arm arrangements due to gravity and mechanical flex of the C-arm. We quantified the drift of the principal point for , , and of C-arm lateral opening; the average shifts were 5.17, 7.3, and 17 pixels on a x-ray image. Given the pixel spacing of the detector, these correspond to drifts of 1.16 mm, 1.64 mm, and 3.82 mm in the detector plane coordinate frame. To overcome this limitation in the future, a look-up table could be constructed by precalibrating the C-arm at different angulations. The correct intrinsic parameters could then be retrieved from the table by matching the corresponding extrinsics obtained from inside-out tracking of the C-arm. To avoid small inaccuracies due to image-intensifier distortion, we placed the acetabulum near the image center, where distortion is minimal.
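The reported drifts imply a detector pixel spacing of roughly 0.2245 mm (for example, 1.16 mm / 5.17 pixels). This value is inferred from the ratios and is not stated in the text. The sketch converts pixel drift to millimetres and illustrates the proposed look-up table by linearly interpolating precalibrated intrinsics between C-arm angulations. Two simplifications apply. The table entries in the usage example are placeholders, not measured calibration data. Indexing by a single angulation angle is also simpler than the full extrinsics matching described above.

```python
import bisect
from dataclasses import astuple, dataclass

# Inferred from the reported values (e.g. 1.16 mm / 5.17 px); an assumption.
PIXEL_SPACING_MM = 0.2245


def drift_mm(drift_px, spacing_mm=PIXEL_SPACING_MM):
    """Convert a principal-point drift from pixels to millimetres on the detector."""
    return drift_px * spacing_mm


@dataclass(frozen=True)
class Intrinsics:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point x in pixels
    cy: float  # principal point y in pixels


def interpolate_intrinsics(table, angle_deg):
    """Look up intrinsics precalibrated at discrete C-arm angulations.

    Linearly interpolates between the two nearest calibrated angles and
    clamps to the end entries outside the calibrated range.
    """
    angles = sorted(table)
    if angle_deg <= angles[0]:
        return table[angles[0]]
    if angle_deg >= angles[-1]:
        return table[angles[-1]]
    i = bisect.bisect_right(angles, angle_deg)
    a0, a1 = angles[i - 1], angles[i]
    t = (angle_deg - a0) / (a1 - a0)
    k0, k1 = astuple(table[a0]), astuple(table[a1])
    return Intrinsics(*(v0 + t * (v1 - v0) for v0, v1 in zip(k0, k1)))


# Placeholder table: illustrative values, not measured calibration data.
table = {0.0: Intrinsics(1000.0, 1000.0, 512.0, 512.0),
         90.0: Intrinsics(1000.0, 1000.0, 522.0, 512.0)}
print(interpolate_intrinsics(table, 45.0))
print([round(drift_mm(px), 2) for px in (5.17, 7.3, 17)])
```

Linear interpolation assumes the intrinsics vary smoothly between calibrated angulations. A nearest-neighbour lookup would be a simpler alternative if the table is sampled densely.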

During the clinical intervention, sterility of the imaging device must be ensured. One option is to cover the RGBD camera with transparent self-adhesive sterile covers. The other is to extend the camera mount so that the camera is located outside the sterile zone. While both options are conceivable, the latter reduces the range of free motion when rearranging the C-arm.

The RGBD sensor is not embedded in the C-arm gantry; therefore, the surgical crew could inadvertently hit the camera and invalidate the calibration. Repeating the cocalibration of the imaging devices is not feasible while the patient is in the OR. We therefore plan to mount an additional cocalibrated camera on the opposite side of the detector, so that if the calibration of one camera becomes invalid, the other can be used as a substitute.

In the proposed solution, the patient is assumed to be static while placing the cup. However, if the patient moves, either the planning needs to be repeated or the surgeon ought to continue with classic fluoroscopy-based guidance.

This AR solution for THA uses a self-contained C-arm that needs only a one-time offline calibration, requires no external trackers, and does not depend on outdated preoperative patient data. We believe that this system, by enabling quick planning and visualization, can contribute to reducing radiation exposure, procedure time, and frustration, and can increase the efficiency and accuracy of acetabular component placement. Ultimately, this approach may aid in reducing the risk of revision surgery in patients with diseased hip joints.

Acknowledgments

The authors thank Wolfgang Wein and his team from ImFusion GmbH, Munich, for the opportunity to use the ImFusion Suite. They also thank Gerhard Kleinzig and Sebastian Vogt from Siemens Healthineers for their support and for making a Siemens ARCADIS Orbic 3-D available.

Biographies

Javad Fotouhi has been a PhD student of computer science at Johns Hopkins University since 2014. Prior to joining the Laboratory for Computer Aided Medical Procedures at Johns Hopkins University, he earned his MSc degree in biomedical computing from Technical University of Munich and his BSc degree in electrical engineering from the University of Tehran. His research focus includes x-ray interventional imaging, medical image registration and reconstruction, and augmented reality.

Clayton P. Alexander earned his MD from the University of Maryland, School of Medicine, in 2014 and is currently a resident physician in his fourth year of the Johns Hopkins Orthopaedic Surgery Residency Training Program. He plans to complete a fellowship in hand and upper extremity surgery after completion of his residency. His research interests include imaging solutions for component placement for total hip arthroplasty.

Mathias Unberath is a postdoctoral fellow at Johns Hopkins University. He received his BSc degree in physics, MSc degree in optical technologies, and PhD in computer science from the Friedrich-Alexander-Universität Erlangen-Nürnberg in 2012, 2014, and 2017, respectively. In 2014, he was appointed graduate research scholar at Stanford University. He is interested in medical image processing and image reconstruction and visualization to improve diagnostic assessment and interventional guidance.

Giacomo Taylor is an undergraduate student at the Johns Hopkins University studying computer science and applied mathematics. He works with the Laboratory for Computer Aided Medical Procedures at Johns Hopkins University and is interested in applying computer vision techniques to improving medical imaging and intervention.

Sing Chun Lee has been a PhD student in the Computer Science Department at Johns Hopkins University since 2016. He received dual degrees in mathematics and information engineering from the Chinese University of Hong Kong in 2009 and 2010, respectively. He earned his master’s degree in biomedical computing from Technical University Munich in 2016. His research interests include computer-assisted intervention, medical data visualization, and augmented reality.

Bernhard Fuerst was a research engineer at the Johns Hopkins University and is now with Verb Surgical Inc. He received his bachelor’s degree in biomedical computer science in 2009 and his master’s degree in biomedical computing in 2011. During his studies, he joined Siemens Corporate Research to investigate compensation of respiratory motion and Georgetown University to research metaoptimization. At the Johns Hopkins University, his research focus was on robotic ultrasound, minimally invasive nuclear imaging, and bioelectric sensing.

Alex Johnson is a resident physician with the Department of Orthopaedic Surgery at Johns Hopkins. He is currently conducting research investigating advanced visualization methods for orthopaedic surgical applications in collaboration with the CAMP Laboratory at Johns Hopkins University.

Greg Osgood is an orthopedic trauma surgeon at Johns Hopkins Hospital. He graduated medical school from Columbia University College of Physicians and Surgeons and then completed residency training at New York Presbyterian Hospital. He completed his subspecialty orthopaedic trauma fellowship at Harborview Medical Center. At Johns Hopkins, he was the first chief of orthopaedic trauma in the hospital’s history. His research focuses are advancing 2-D/3-D imaging, head mounted displays, and advanced guidance for surgery.

Russell H. Taylor received his PhD in computer science from Stanford in 1976. After spending 1976 to 1995 as a research staff member and research manager at IBM research, he moved to Johns Hopkins University, where he is the John C. Malone professor of computer science and director of the Laboratory for Computational Sensing and Robotics. His research interests include medical robotics and computer-integrated interventional medicine.

Harpal Khanuja is the chief of hip and knee reconstruction with the Department of Orthopaedic Surgery at Johns Hopkins Medical Center. He is also the Chairman of Orthopaedic Surgery at Johns Hopkins Bayview Medical Center. His clinical interest is hip and knee replacement surgery, and his research interests include clinical outcomes and applying newer technologies including imaging and robotics to orthopaedic procedures.

Mehran Armand is a principal scientist at the Johns Hopkins University Applied Physics Laboratory and an associate research professor in the Departments of Mechanical Engineering and Orthopaedic Surgery. He currently directs the Laboratory for Biomechanical- and Image-Guided Surgical Systems (BIGSS) at Whiting School and AVICENNA center for advancing surgical technologies at Bayview Medical Center. His lab encompasses research in continuum manipulators, biomechanics, and medical image analysis for translation to clinical application of integrated surgical systems.

Nassir Navab, MICCAI fellow, is a professor and director of the Laboratory for Computer Aided Medical Procedures at Technical University of Munich and Johns Hopkins University. He completed his PhD at INRIA and University of Paris XI in 1993. He received the Siemens inventor of the year award in 2001, the SMIT Society Technology award in 2010, and the “10 years lasting impact award” of IEEE ISMAR in 2015. His research interests include medical AR, computer vision, and machine learning.

Disclosures

The authors declare that they have no conflict of interest. Research reported in this publication was partially supported by NIH/NIBIB under the Award Number R21EB020113 and Johns Hopkins University internal funding sources.

References

1. Kurtz S., et al., “Projections of primary and revision hip and knee arthroplasty in the United States from 2005 to 2030,” J. Bone Joint Surg. 89(4), 780–785 (2007). 10.2106/00004623-200704000-00012

2. Barrack R. L., et al., “Virtual reality computer animation of the effect of component position and design on stability after total hip arthroplasty,” Orthop. Clin. North Am. 32(4), 569–577 (2001). 10.1016/S0030-5898(05)70227-3

3. Charnley J., Cupic Z., “The nine and ten year results of the low-friction arthroplasty of the hip,” Clin. Orthop. Relat. Res. 95, 9–25 (1973).

4. D’Lima D. D., et al., “The effect of the orientation of the acetabular and femoral components on the range of motion of the hip at different head-neck ratios,” J. Bone Joint Surg. 82(3), 315–321 (2000). 10.2106/00004623-200003000-00003

5. Scifert C. F., et al., “A finite element analysis of factors influencing total hip dislocation,” Clin. Orthop. Relat. Res. 355, 152–162 (1998). 10.1097/00003086-199810000-00016

6. Yamaguchi M., et al., “The spatial location of impingement in total hip arthroplasty,” J. Arthroplasty 15(3), 305–313 (2000). 10.1016/S0883-5403(00)90601-6

7. Lewinnek G. E., et al., “Dislocations after total hip-replacement arthroplasties,” J. Bone Joint Surg. 60(2), 217–220 (1978). 10.2106/00004623-197860020-00014

8. Elkins J. M., Callaghan J. J., Brown T. D., “The 2014 Frank Stinchfield Award: The ‘landing zone’ for wear and stability in total hip arthroplasty is smaller than we thought: a computational analysis,” Clin. Orthop. Relat. Res. 473(2), 441–452 (2015). 10.1007/s11999-014-3818-0

9. Danoff J. R., et al., “Redefining the acetabular component safe zone for posterior approach total hip arthroplasty,” J. Arthroplasty 31(2), 506–511 (2016). 10.1016/j.arth.2015.09.010

10. Esposito C. I., et al., “Cup position alone does not predict risk of dislocation after hip arthroplasty,” J. Arthroplasty 30(1), 109–113 (2015). 10.1016/j.arth.2014.07.009

11. DiGioia A. M., III, et al., “Functional pelvic orientation measured from lateral standing and sitting radiographs,” Clin. Orthop. Relat. Res. 453, 272–276 (2006). 10.1097/01.blo.0000238862.92356.45

12. Zhu J., Wan Z., Dorr L. D., “Quantification of pelvic tilt in total hip arthroplasty,” Clin. Orthop. Relat. Res. 468(2), 571–575 (2010). 10.1007/s11999-009-1064-7

13. Callanan M. C., et al., “The John Charnley award: risk factors for cup malpositioning: quality improvement through a joint registry at a tertiary hospital,” Clin. Orthop. Relat. Res. 469(2), 319–329 (2011). 10.1007/s11999-010-1487-1

14. Bosker B., et al., “Poor accuracy of freehand cup positioning during total hip arthroplasty,” Arch. Orthop. Trauma Surg. 127(5), 375–379 (2007). 10.1007/s00402-007-0294-y

15. Saxler G., et al., “The accuracy of free-hand cup positioning: a CT based measurement of cup placement in 105 total hip arthroplasties,” Int. Orthop. (SICOT) 28(4), 198–201 (2004). 10.1007/s00264-004-0542-5

16. Anterior Total Hip Arthroplasty Collaborative (ATHAC) Investigators, “Outcomes following the single-incision anterior approach to total hip arthroplasty: a multicenter observational study,” Orthop. Clin. North Am. 40(3), 329–342 (2009). 10.1016/j.ocl.2009.03.001

17. Domb B. G., et al., “Comparison of robotic-assisted and conventional acetabular cup placement in THA: a matched-pair controlled study,” Clin. Orthop. Relat. Res. 472(1), 329–336 (2014). 10.1007/s11999-013-3253-7

18. Dorr L. D., et al., “Precision and bias of imageless computer navigation and surgeon estimates for acetabular component position,” Clin. Orthop. Relat. Res. 465, 92–99 (2007). 10.1097/BLO.0b013e3181560c51

19. Moskal J. T., Capps S. G., “Acetabular component positioning in total hip arthroplasty: an evidence-based analysis,” J. Arthroplasty 26(8), 1432–1437 (2011). 10.1016/j.arth.2010.11.011

20. Murphy S. B., Ecker T. M., Tannast M., “THA performed using conventional and navigated tissue-preserving techniques,” Clin. Orthop. Relat. Res. 453, 160–167 (2006). 10.1097/01.blo.0000246539.57198.29

21. DiGioia A. M., et al., “Surgical navigation for total hip replacement with the use of HipNav,” Op. Tech. Orthop. 10(1), 3–8 (2000). 10.1016/S1048-6666(00)80036-1

22. Jaramaz B., Eckman K., “2D/3D registration for measurement of implant alignment after total hip replacement,” in Medical Image Computing and Computer-Assisted Intervention (MICCAI ’06), pp. 653–661 (2006).

23. Nikou C., et al., “POP: preoperative planning and simulation software for total hip replacement surgery,” in Int. Conf. on Medical Image Computing and Computer-Assisted Intervention, pp. 868–875, Springer (1999).

24. Leenders T., et al., “Reduction in variability of acetabular cup abduction using computer assisted surgery: a prospective and randomized study,” Comput. Aided Surg. 7(2), 99–106 (2002). 10.3109/10929080209146021

25. Widmer K.-H., Grützner P. A., “Joint replacement-total hip replacement with CT-based navigation,” Injury 35(1), 84–89 (2004). 10.1016/j.injury.2004.05.015

26. Haaker R. G., et al., “Comparison of conventional versus computer-navigated acetabular component insertion,” J. Arthroplasty 22(2), 151–159 (2007). 10.1016/j.arth.2005.10.018

27. Sato Y., et al., “Intraoperative simulation and planning using a combined acetabular and femoral (CAF) navigation system for total hip replacement,” in MICCAI: Int. Conf. on Medical Image Computing and Computer-Assisted Intervention, pp. 1114–1125, Springer (2000).

28. Sarin V. K., et al., “Non-imaging, computer assisted navigation system for hip replacement surgery,” U.S. Patent No. 6,711,431 (2004).

29. Kalteis T., et al., “Imageless navigation for insertion of the acetabular component in total hip arthroplasty,” Bone Joint J. 88(2), 163–167 (2006). 10.1302/0301-620X.88B2.17163

30. Lin F., et al., “Limitations of imageless computer-assisted navigation for total hip arthroplasty,” J. Arthroplasty 26(4), 596–605 (2011). 10.1016/j.arth.2010.05.027

31. Taylor R. H., et al., “An image-directed robotic system for precise orthopaedic surgery,” IEEE Trans. Rob. Automat. 10(3), 261–275 (1994). 10.1109/70.294202

32. Sugano N., “Computer-assisted orthopaedic surgery and robotic surgery in total hip arthroplasty,” Clin. Orthop. Surg. 5(1), 1–9 (2013). 10.4055/cios.2013.5.1.1

33. Nakamura N., et al., “Robot-assisted primary cementless total hip arthroplasty using surface registration techniques: a short-term clinical report,” Int. J. Comput. Assisted Radiol. Surg. 4(2), 157–162 (2009). 10.1007/s11548-009-0286-1

34. Yao J., et al., “A C-arm fluoroscopy-guided progressive cut refinement strategy using a surgical robot,” Comput. Aided Surg. 5(6), 373–390 (2000). 10.3109/10929080009148898

35. Schulz A. P., et al., “Results of total hip replacement using the Robodoc surgical assistant system: clinical outcome and evaluation of complications for 97 procedures,” Int. J. Med. Robot. Comput. Assist. Surg. 3(4), 301–306 (2007).

36. Nawabi D. H., et al., “Haptically guided robotic technology in total hip arthroplasty: a cadaveric investigation,” Proc. Inst. Mech. Eng. H 227(3), 302–309 (2013). 10.1177/0954411912468540

37. Zheng G., “Statistically deformable 2D/3D registration for accurate determination of post-operative cup orientation from single standard x-ray radiograph,” in Medical Image Computing and Computer-Assisted Intervention (MICCAI ’09), pp. 820–827 (2009).

38. Zheng G., et al., “A hybrid CT-free navigation system for total hip arthroplasty,” Comput. Aided Surg. 7(3), 129–145 (2002). 10.3109/10929080209146024

39. Grützner P. A., et al., “C-arm based navigation in total hip arthroplasty: background and clinical experience,” Injury 35(1), 90–95 (2004). 10.1016/j.injury.2004.05.016

40. Xu K., et al., “Computer navigation in total hip arthroplasty: a meta-analysis of randomized controlled trials,” Int. J. Surg. 12(5), 528–533 (2014). 10.1016/j.ijsu.2014.02.014

41. Reininga I. H., et al., “Minimally invasive and computer-navigated total hip arthroplasty: a qualitative and systematic review of the literature,” BMC Musculoskeletal Disord. 11(1), 92 (2010). 10.1186/1471-2474-11-92

42. Barrett W. P., Turner S. E., Leopold J. P., “Prospective randomized study of direct anterior vs postero-lateral approach for total hip arthroplasty,” J. Arthroplasty 28(9), 1634–1638 (2013). 10.1016/j.arth.2013.01.034

43. Slotkin E. M., Patel P. D., Suarez J. C., “Accuracy of fluoroscopic guided acetabular component positioning during direct anterior total hip arthroplasty,” J. Arthroplasty 30(9), 102–106 (2015). 10.1016/j.arth.2015.03.046

44. Masonis J., Thompson C., Odum S., “Safe and accurate: learning the direct anterior total hip arthroplasty,” Orthopedics 31(12 Suppl. 2), 1417–1426 (2008).

45. Lee S. C., et al., “Calibration of RGBD camera and cone-beam CT for 3D intra-operative mixed reality visualization,” Int. J. Comput. Assisted Radiol. Surg. 11(6), 967–975 (2016). 10.1007/s11548-016-1396-1

46. Fotouhi J., et al., “Interventional 3D augmented reality for orthopedic and trauma surgery,” in 16th Annual Meeting of the Int. Society for Computer Assisted Orthopedic Surgery (CAOS) (2016).

47. Fischer M., et al., “Preclinical usability study of multiple augmented reality concepts for k-wire placement,” Int. J. Comput. Assisted Radiol. Surg. 11(6), 1007–1014 (2016). 10.1007/s11548-016-1363-x

48. Fotouhi J., et al., “Can real-time RGBD enhance intraoperative cone-beam CT?” Int. J. Comput. Assisted Radiol. Surg. 12(7), 1211–1219 (2017). 10.1007/s11548-017-1572-y

49. Kato H., Billinghurst M., “Marker tracking and HMD calibration for a video-based augmented reality conferencing system,” in Proc. 2nd IEEE and ACM Int. Workshop on Augmented Reality (IWAR ’99), pp. 85–94, IEEE (1999). 10.1109/IWAR.1999.803809

50. Ji W., Stewart N., “Fluoroscopy assessment during anterior minimally invasive hip replacement is more accurate than with the posterior approach,” Int. Orthop. (SICOT) 40(1), 21–27 (2016). 10.1007/s00264-015-2803-x

51. Rousseau M.-A., et al., “Optimization of total hip arthroplasty implantation: is the anterior pelvic plane concept valid?” J. Arthroplasty 24(1), 22–26 (2009). 10.1016/j.arth.2007.12.015

52. Fotouhi J., et al., “Pose-aware C-arm for automatic re-initialization of interventional 2D/3D image registration,” Int. J. Comput. Assisted Radiol. Surg. 12(7), 1221–1230 (2017). 10.1007/s11548-017-1611-8