Abstract

Purpose

Language comprehension in people with aphasia (PWA) is frequently evaluated using multiple-choice displays: PWA are asked to choose the image that best corresponds to the verbal stimulus in a display. When a nontarget image is selected, comprehension failure is assumed. However, stimulus-driven factors unrelated to linguistic comprehension may influence performance. In this study we explore the influence of physical image characteristics of multiple-choice image displays on visual attention allocation by PWA.

Method

Eye fixations of 41 PWA were recorded while they viewed 40 multiple-choice image sets presented with and without verbal stimuli. Within each display, 3 images (majority images) were the same and 1 (singleton image) differed in terms of 1 image characteristic. The mean proportion of fixation duration (PFD) allocated across majority images was compared against the PFD allocated to singleton images.

Results

PWA allocated significantly greater PFD to the singleton than to the majority images in both nonverbal and verbal conditions. Those with greater severity of comprehension deficits allocated greater PFD to nontarget singleton images in the verbal condition.

Conclusion

When using tasks that rely on multiple-choice displays and verbal stimuli, one cannot assume that verbal stimuli will override the effect of visual-stimulus characteristics.

Auditory and written language comprehension is vital to virtually every aspect of interpersonal interaction. It also supports decision making and planning for social, educational, and professional goals, desires, and needs. Valid and reliable assessment of language comprehension is therefore crucial for people with aphasia (PWA). Language comprehension for PWA is frequently evaluated using multiple-choice image displays and verbal stimuli. Participants are asked to choose the image that best corresponds to the verbal stimulus in a display comprising a target and one or more nontarget (foil) images. It is assumed that choosing a foil reflects a failure to comprehend the verbal stimulus. This assumption is problematic due to the many potentially confounding factors not related to comprehension that may influence performance on a multiple-choice task. For example, deficits in visual perception (Berryman, Rasavage, & Politzer, 2010) and visual processing efficiency (Azouvi et al., 2006; Beaudoin et al., 2013; Fisk, Owsley, & Mennemeier, 2002; Lachapelle, Bolduc-Teasdale, Ptito, & McKerral, 2008) are common confounding factors in individuals with neurological disorders. We use the term potentially confounding factors because during the actual process of assessment we often cannot be sure of just which factors are influencing a given person's responsiveness. It is always important to consider whether attributing a lack of response or an incorrect response to poor comprehension is valid.

Potentially Confounding Factors When Multiple-Choice Displays Are Used to Index Comprehension

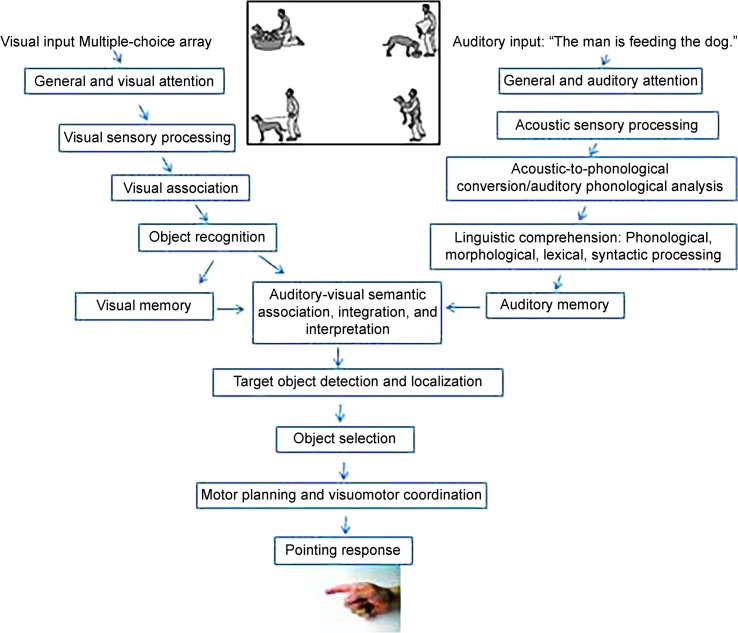

Accurate responses during language-comprehension tasks that rely on multiple-choice image displays require several abilities in addition to intact comprehension ability. A process-analysis approach allows one to consider all of the component processes required to respond during an assessment task, analyze the abilities required to carry out those processes, and consider which of those abilities are and are not truly relevant to the construct under study (Hallowell, 2016). Several published studies have indicated how potentially confounding factors may threaten the validity of multiple-choice assessment for PWA (e.g., Hallowell, 1999; Hallowell, Wertz, & Kruse, 2002; Heuer & Hallowell, 2009). Several processes occur simultaneously at different stages of multiple-choice task completion (see Figure 1 for a summary of these processes and abilities). For example, processing of visual and auditory stimuli may occur simultaneously but precede processes related to target-image selection. Potential confounds include a host of visual, auditory, motoric, memory, attention, speed-of-processing, and executive-function deficits. Those deficits tend to occur with greater frequency and severity in individuals who have had a stroke or brain injury in general, and PWA in particular (Azouvi et al., 2006; Beaudoin et al., 2013; Fisk et al., 2002; Robin & Rizzo, 1989; Tatemichi et al., 1994).

Figure 1.

Diagram of processes involved in multiple-choice task performance during auditory comprehension assessment. Adapted from Aphasia and Other Acquired Neurogenic Language Disorders: A Guide for Clinical Excellence (p. 325), by B. Hallowell, 2016. San Diego, CA: Plural. Copyright 2016 by Plural Publishing. Adapted with permission.

Some potentially confounding deficits in people with neurological disorders may not influence performance during simple multiple-choice tasks but become apparent during more complex tasks (Villard & Kiran, 2015). Such deficits can compromise performance, especially when nonlinguistic aspects of the task are not well controlled. To ensure that valid inferences regarding language deficits can be made, the linguistic and nonlinguistic processes required to successfully perform such a task must be evaluated. Given that visual attention is a fundamental prerequisite to accurate multiple-choice task performance, it is especially important to explore its influence on tasks that are purported to index comprehension. The purpose of this study was to explore the influence of physical image characteristics of multiple-choice image displays on allocation of visual attention for PWA.

Top-Down and Bottom-Up Influences on Visual-Search Tasks

Multiple-choice assessment using multiple images or written words entails visual search, which comprises top-down and bottom-up processes. Top-down processes refer to image selection on the basis of the observer's knowledge (in the case of multiple-choice assessment, semantic interpretation of a spoken or written verbal stimulus). Top-down selection of a target image is typically considered to be under the voluntary control of the observer during visual search (Theeuwes, 2010), although this is not necessarily the case (Hallowell et al., 2002; Hutchison, Balota, & Ducheck, 2010; Rush, Barch, & Braver, 2006; Wolfe, Butcher, Lee, & Hyle, 2003). In the case of a multiple-choice task, the respondent intentionally uses linguistic information to guide the selection of the corresponding image. In contrast, bottom-up processes are driven by physical image characteristics, which determine stimulus salience and affect early visual processing (Theeuwes, 2010). Examples of bottom-up influences on visual-search performance include color (Green & Anderson, 1956; Wolfe, 2000), size (Nagy & Sanchez, 1990), orientation (Bergen & Julesz, 1983; Foster & Ward, 1991; Moraglia, 1989), and luminance (Itti & Koch, 2001; Nothdurft, 2002) of the visual stimuli. Bottom-up processing may influence visual attention during a multiple-choice task regardless of the verbal stimulus, and the respondent may be unaware of that influence.

In auditory and written comprehension-assessment tasks that include multiple-choice image displays and verbal stimuli, the goal is to index the top-down influence of the verbal stimulus. However, it is important to consider that target-image selection is based on a combination of top-down and bottom-up processes. For example, in response to the auditory verbal stimulus “red” in a simple task of searching for a red target among green distractors, a person ideally deliberately searches for an item that matches the semantic content associated with the spoken word. In actuality, he or she may select the target solely on the basis of the salience of the bottom-up physical stimulus property of color (red target) in the display without even attending to or comprehending the verbal stimulus. When PWA are assessed using multiple-choice tasks, it is commonly assumed that top-down processes completely override the bottom-up processes involved in image selection. However, especially without careful visual-stimulus control, this assumption may not necessarily be valid.

There are several factors that influence stimulus salience of target images, including specific image characteristics and image-display design factors. For a review of specific image characteristics that have been shown to influence visual attention, see Heuer and Hallowell (2007). Issues related to image-display design may influence visual-search demands. For example, visual-search demands increase when more physical stimulus characteristics are shared between targets and foils; the more similar the stimuli, the greater the attention allocation required to distinguish them from each other (Duncan & Humphreys, 1989; Treisman & Gormican, 1988). Conversely, target salience may be increased by reducing the similarity between distractors and the target stimulus, which reduces visual-search demands (Duncan & Humphreys, 1989; Nagy & Sanchez, 1990; Wolfe et al., 2003). Target salience may also be increased by reducing the number of foil items in a display (Wolfe et al., 2003). Increasing the number of stimuli presented in a multiple-choice display yields greater complexity overall, and thus greater potential for distraction (Bennett & Jaye, 1995). Increasing the visual complexity of images within the display also increases visual-search demands in multiple-choice tasks (Davis, Shikano, Peterson, & Michel, 2003), a fact that is often not effectively addressed in the design of image displays that are part of traditional assessment batteries (Heuer & Hallowell, 2007).

Given that PWA tend to have challenges in allocating limited attentional resources (Murray, 1999, 2012; Robin & Rizzo, 1989), it is especially important to consider how visual-attention demands may confound multiple-choice task performance during comprehension assessment. Overall, PWA have been shown to be less likely than people without neurogenic impairment to make use of explicit visual and auditory cues that might help them allocate attention more efficiently to linguistic targets (Murray, 1999; Robin & Rizzo, 1989). Compared with people without neurologic deficits, PWA are less likely to strategically allocate cognitive resources according to varying task demands (Tseng, McNeil, & Milenkovic, 1993). They are less likely to apply strategies such as trading off speed of processing to maintain accuracy (Heuer & Hallowell, 2015; Murray, Holland, & Beeson, 1997). Attention deficits may interact with deficits in working-memory capacity (Caplan & Waters, 1995; Caspari, Parkinson, LaPointe, & Katz, 1998; Friedmann & Gvion, 2003; Ivanova, Dragoy, Kuptsova, Ulicheva, & Laurinavichyute, 2015; Ivanova & Hallowell, 2012, 2014) and executive functions (Frankel, Penn, & Ormond-Brown, 2007; Fridriksson, Nettles, Davis, Morrow, & Montgomery, 2006; Murray, 2012; Penn, Frankel, Watermeyer, & Russell, 2010; Purdy, 2002) to influence actual language-comprehension abilities. Such deficits may affect performance on language-comprehension assessment tasks in ways that may not be relevant to true receptive abilities.

Eye-Tracking Measures of Top-Down and Bottom-Up Processes

Eye-tracking methods have been shown to be effective for indexing top-down and bottom-up influences during language processing as well as free viewing. Top-down processes are highlighted in the visual-world paradigm (e.g., Cooper, 1974; Tanenhaus, Spivey-Knowlton, Eberhard, & Sedivy, 1995) and comprehension-assessment studies (e.g., Hallowell, 1999, 2012; Hallowell et al., 2002). People tend to look at visual stimuli that correspond to a spoken message when images and the spoken message are presented simultaneously. Bottom-up processes have been described and indexed using eye tracking in studies about the impact of physical stimulus characteristics on the allocation of visual attention in multiple-choice image displays. The proportion of total viewing time that a person allocates to images within a multiple-choice display when there is no accompanying verbal stimulus serves as an index of the degree to which physical stimulus characteristics influence eye-fixation patterns (Heuer & Hallowell, 2007, 2009, 2015). Several studies have used eye-tracking measures to yield information about processing demands of visual scenes and multiple-image displays by varying those visual displays in terms of levels of complexity (Henderson & Hollingworth, 1999; Henderson, Weeks, & Hollingworth, 1999; Heuer & Hallowell, 2015; Judd, Ehinger, Durand, & Torralba, 2009; Thiessen, Beukelman, Ullman, & Longenecker, 2014; Wilkinson & Light, 2011; Yarbus, 1967).

Heuer and Hallowell (2009) examined how the control and manipulation of four physical image characteristics affect visual attention when those images are viewed with and without an accompanying verbal stimulus. They indexed allocation of visual attention by studying eye fixations in individuals without neurological impairment. The task differed from a typical word–picture matching task in that only one physical image characteristic was manipulated in one of the three images at a time, strategically controlling bottom-up processes. By contrast, in typical word–picture matching tasks, top-down processes are manipulated via the verbal stimulus; bottom-up characteristics tend not to be methodically manipulated. In fact, word–picture matching tasks tend to involve multiple complex images that differ from one another in visual complexity. Characteristics manipulated included size, color, orientation, and luminance. Those characteristics were selected because they are objectively quantifiable and can be controlled independently of semantic-content conveyance factors.

The authors presented multiple-choice image displays composed of three simple images. For each display, two of these images were identical simple shapes (majority images) and one image varied from these two with respect to one image characteristic (singleton image). Displays were presented under a nonverbal and a verbal condition. In the nonverbal condition, only the visual stimuli were presented. In the verbal condition, a word was presented auditorily at display onset and participants were instructed to look at the image that corresponded best with the word. For half of the displays the word corresponded to the singleton, and for the other half of the displays the word corresponded to the majority images. Participants fixated longer on the singleton than the majority images in both conditions. Thus, the singleton image received greater visual attention than the majority images even in the presence of a verbal stimulus. Allocation of attention was influenced by visual salience of individual images within multiple-choice displays even when the verbal stimulus was easily understood. The fact that a verbal stimulus may not completely override the influence of stimulus-driven aspects even in individuals without neurogenic impairment is important. The risk of bottom-up confounds on comprehension assessment is likely to be greater in PWA due to the common concomitant nonlinguistic deficits already described and to deficits in correctly perceiving the incoming linguistic input.

Aims of the Current Study

The purpose of this study was to investigate the influence of bottom-up (stimulus-driven) processes on visual attention, as indexed by eye tracking, in PWA during a verbal and a nonverbal condition. The stimuli and procedures are similar to those in a study by Heuer and Hallowell (2009), which incorporated adults without neurological disorders. The same physical image characteristics (size, luminance, color, and orientation) were manipulated to explore the effects of bottom-up influences on visual attention with and without verbal stimuli. Indices of visual attention are quantified as the proportion of fixation duration (PFD) allocated to singleton and majority images within each display.

The following hypotheses were addressed:

1. In the nonverbal condition, PWA will allocate greater PFD to a singleton image than to the three majority images in a four-image set.

2. In the verbal condition, PWA will allocate greater PFD to target singleton images than to target majority images.

3. In the verbal condition, PWA will allocate greater PFD to nontarget singleton images than to nontarget majority images.

4. The severity of comprehension deficits and overall severity of aphasia in PWA will be related to the degree to which the singleton image attracts visual attention. People with greater language-comprehension deficits and greater overall severity of aphasia will allocate greater PFD to the minority image in the verbal and the nonverbal conditions.

Findings as predicted in the first three hypotheses would complement findings from previous studies with people without neurological disorders, indicating that physical stimulus characteristics influence visual attention during viewing of multiple-choice displays in verbal and nonverbal conditions (Heuer & Hallowell, 2007, 2009). It is important to confirm whether such influences are observed in PWA. Findings as predicted in the fourth hypothesis would suggest that the greater the severity of a person's language-comprehension deficits, the more likely it is that bottom-up influences (physical stimulus characteristics) will compete with the top-down influence of the verbal stimulus.

Method

Participants

Approval for this research was granted by the institutional review boards at Ohio University and the Center for Speech Pathology and Neurorehabilitation in Moscow, Russia. Forty-one participants with aphasia due to stroke or traumatic brain injury were recruited from the Center for Speech Pathology and Neurorehabilitation in Moscow. The diagnosis was based on a definition of aphasia as an acquired neurogenic impairment of “language modalities including speaking, listening, reading, and writing; it is not the result of a sensory deficit, a general intellectual deficit, or a psychiatric disorder” (Hallowell & Chapey, 2008, p. 3). The diagnosis of aphasia was documented by an independent certified speech-language pathologist and/or neuropsychologist who was a native speaker of Russian. The presence of lesions in participants with aphasia was verified by radiologists' interpretation of neuroimaging scans (computed tomography and/or magnetic resonance imaging).

Inclusion criteria for all PWA included passing vision and hearing screenings. Passing the visual-acuity screening required that participants identify symbols presented at a visual angle of 2° at a viewing distance of 2 ft on the Lea Symbols Line Test (Precision Vision, Woodstock, IL; Hyvärinen, Näsänen, & Laurinen, 1980). Participants had to achieve a 100% score on a color-vision screening test (Color Vision Testing Made Easy, Home Vision Care, Gulf Breeze, FL). Intactness of visual fields was screened using the Amsler grid, and functional bilateral peripheral vision was screened using a confrontation finger-counting task. Extraocular motor function and pupillary reflexes were also assessed (per Hallowell, 2008). Eyes were inspected for any pathological signs (e.g., redness, swelling, asymmetry of pupils, ocular drainage) that might suggest poor eye health and thus lead to unreliable eye tracking. Visual neglect was screened via the eye-tracking calibration procedure, which requires that participants fixate on a dot that appears in varied locations across the full horizontal and vertical range of the stimulus display area. Participants were allowed to use glasses or contact lenses, the use of which was documented. Hearing screening criteria included pure-tone responses at 500, 1000, and 2000 Hz at 30 dB SPL and accurate repetition of two examples of the auditory verbal stimuli used in the experiment, presented at 65–70 dB. All participants passed the hearing screening. One participant exhibited signs of hemianopsia identified with the confrontation finger-counting task. That participant was not excluded, because the hemianopsia did not interfere with eye-tracking calibration and recording procedures. No participants showed signs of visual neglect.

Participant ages ranged from 15 to 69 years (mean: 49.4). Education ranged from 9 to 19 years (mean: 13.9). Nine participants were women and 32 were men. All were right-handed and had one or more lesions confined to the left hemisphere. Twenty-two (54%) of the participants had ischemic strokes, six (14%) had hemorrhagic strokes, four (10%) had strokes of mixed origin, and nine (22%) had traumatic brain injury. All were at least 2 months postonset (range: 2 months–14 years 6 months; mean: 2 years 2 months). All were native speakers of Russian. Twenty-one participants (51%) had right-sided hemiparesis. Aphasia subtype was assigned on the basis of Luria's classification (for background on Luria's classification and its correspondence with Western classification systems, see Akhutina, 2016; Ivanova et al., 2015); fifteen participants (37%) were classified as having the efferent motor subtype, five (12%) sensory, nine (22%) dynamic, 10 (24%) acoustic-mnestic, one (2.4%) semantic, and one (2.4%) amnestic. (See Appendix for a description of all participants.)

The modified short form of the Russian Bilingual Aphasia Test (BAT; Ivanova & Hallowell, 2009) was administered to all participants. The revised Russian BAT is based on the short form by Paradis and Zeiber (1987). In that version, seven test items were modified from the original (for a detailed description of modified items, see Ivanova & Hallowell, 2009). The BAT is a well-known published aphasia battery that is generally used to assess expressive and receptive language abilities in individuals with bilingual aphasia. Its individual forms in various languages can be used to assess language impairment in monolingual speakers with aphasia when other standardized assessment tools are not available (Ivanova & Hallowell, 2009; Paradis & Zeiber, 1987). The modified short form of the Russian version of the BAT is one of the few published tests for aphasia (Paradis & Zeiber, 1987) for which psychometric properties have been documented in Russian (Ivanova & Hallowell, 2009). The test was administered in Russian only; participants were not expected to be bilingual to take the test. Group scores across subtests are summarized in Table 1. Although some participants achieved 100% accuracy on some of the subtests, they were included in the study because their communication characteristics met the definition of aphasia and each had a history of a left-hemisphere lesion.

Table 1.

Group performance on the subtests of the Russian Bilingual Aphasia Test indexed in raw scores.

| Subtest | M (proportion) | M (%) | SD (%) | Range (%) |
|---|---|---|---|---|
| Auditory Comprehension | 65.14/80 | 81.43 | 13.13 | 47.5–100 |
| Reading | 32.17/46 | 69.94 | 30.06 | 0–100 |
| Overall Receptive Skills ᵃ | 97.32/126 | 77.24 | 18.57 | 37–57 |
| Repetition | 25.93/40 | 64.82 | 33.62 | 0–100 |
| Naming | 22.61/35 | 64.60 | 33.29 | 0–100 |
| Metalinguistic Skills | 31.24/40 | 78.11 | 25.58 | 0–100 |
| Overall | 177.35/241 | 73.59 | 22.50 | 20.75–99.17 |

ᵃ Overall Receptive Skills score is a combination of Auditory Comprehension and Reading scores.

Stimuli

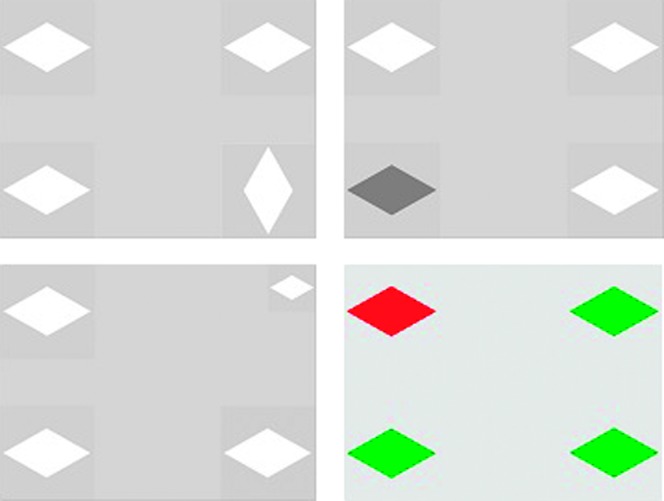

Forty multiple-choice image displays were created, each with four images. One of the four images within each display was manipulated with regard to one stimulus feature: color, orientation, luminance, or size. The three other images within the set were controlled to have exactly the same physical image characteristics as one another. (See Figure 2 for an example of each type of image display.)

Figure 2.

Examples of image displays for each type of image characteristic (clockwise from upper left): orientation, luminance, color, and size. (In the color display, the upper left diamond is red.) The three identical images within each display are the majority images. The different image is the singleton image.

Each display contained four instances of the same shape, each shown in one of the four corners of the screen. The number of images per display was revised from three to four to create more balanced image displays and to avoid a gap in one corner of the display, which could in itself create a visual distraction. Simple symmetrical shapes were used (diamond, square, triangle, ellipse, rectangle, hexagon, rotated square, pentagon, trapezoid, or rhombus). The four image characteristics were objectively controlled for each shape, per Heuer and Hallowell (2009). Luminance was defined as the degree of light reflected from an image (Zwahlen, 1984), measured in candelas per square meter (cd/m²). In the 10 displays in which luminance was manipulated, one image was darker than the others. The mean intensity for low-luminosity images was 46.3 cd/m², and for high-luminosity images it was 174.7 cd/m². For the 10 displays in which orientation was manipulated, one image had a vertical orientation and the remaining images had an identical horizontal orientation. Image size was the same for all display images, 1,161 mm² (subtending 6.62° of visual angle at a viewing distance of 60 cm), except in the 10 displays in which size was manipulated; in those, one image had a size of 581 mm² (1.31° of visual angle). For the 10 displays in which color was manipulated, one image was red and the remaining three were green. Displays were presented at a viewing distance of 60 cm.

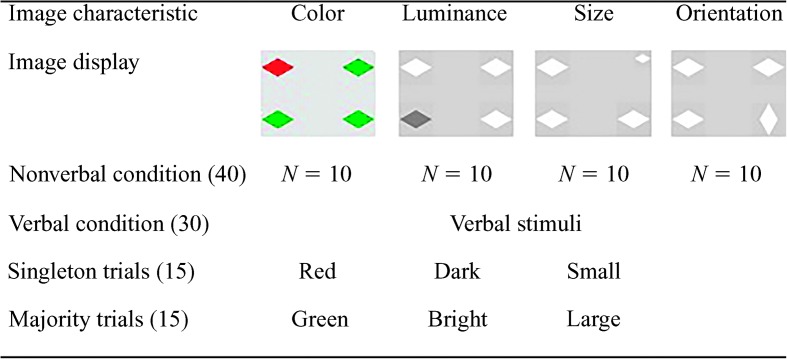

During the nonverbal condition, all 40 image displays were presented without a verbal stimulus. The verbal condition consisted of 30 trials: Orientation was not manipulated during the verbal trials, because there was no single word that unambiguously differentiated the two orientations. For instance, the terms horizontal and vertical did not consistently differentiate the two orientations of each shape. In half (15) of the 30 trials the verbal stimuli matched the singleton image (singleton trials), and in the other half (15) they matched the majority image (majority trials). See Figure 3 for a visualization of the different presentation conditions.

Figure 3.

Overview of the different presentation conditions, number of trials, and types of stimuli.

The verbal stimuli were prerecorded and digitized. Verbal stimuli were spoken by a male native speaker of Russian and were recorded in a soundproof booth using a high-quality dynamic microphone that was directly connected to a computer. Each verbal stimulus was digitized (22 kHz, low-pass filtered at 10.5 kHz), normalized for intensity, and stored on a computer using Cool Edit Pro 2.1. Recordings were edited to eliminate any noise at the start and end of each stimulus. The verbal stimuli were the following single words translated into Russian: green, red, small, large, bright, and dark. They were presented at approximately 60 dB SPL, as assessed by a sound-level meter, simultaneously with each accompanying image set.

Procedure

Peripheral vision was controlled by presenting the images in the four corners of the screen, at least 20° of visual angle apart. The nonverbal condition was presented first. The 40 multiple-choice image sets were presented for 4 s each on a 43-cm computer screen in a randomized order. Each participant was given the following instruction by the experimenter, a native speaker of Russian: “Look at the images on the computer screen in whichever way comes naturally to you. You do not have to remember any of the images.” This instruction was given to discourage participants from developing an expectation that they had to perform in any particular way. Prior to the verbal condition, each participant was instructed to look at the images that corresponded best to the words (identical to instructions provided in Heuer & Hallowell, 2009). Verbal stimuli and visual displays were presented with a simultaneous onset.

Prior to stimulus presentation, participants' eye movements were calibrated using an automatic nine-point procedure that involved looking sequentially at blinking yellow dots on a black screen from a distance of 60 cm (LC Technologies, 2011). Participants' eye movements were monitored and recorded at 60 samples per second using an LC Technologies Eyegaze remote pupil center/corneal reflection system.

Analysis

The initial 3 s of eye-fixation recordings were used for analysis. This time window was used for consistency with the previous study (Heuer & Hallowell, 2009). Heuer and Hallowell demonstrated that this interval allows capture of the greatest difference in PFD between singleton and majority images evoked in individuals free of neurological impairment when they view similar types of multiple-choice displays. Further, a 3,000-ms time window was sufficient for PWA to engage in visual-search tasks without a verbal stimulus (Heuer & Hallowell, 2015). A fixation was defined as a stable eye position of at least 100 ms (Manor & Gordon, 2003), having relative stability within 4° vertically and 6° horizontally. Customized software (Kruse, 2008) was used to extract and calculate the eye-tracking measure. Only fixations that could be assigned to a particular image located in each quadrant in the display were considered. Fixations allocated to the center of the screen were excluded from analysis. Fixation time associated with blink artifacts and movements of the eyes between fixations was eliminated prior to analysis.
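The fixation criterion above (at least 100 ms of stable gaze within 6° horizontally and 4° vertically, sampled at 60 Hz) can be illustrated with a simple dispersion-based detector. This is only a sketch under stated assumptions: the study used customized software (Kruse, 2008) whose algorithm is not described here, so the windowing strategy, the function names `detect_fixations` and `_is_stable`, and the convention that a fixation's duration equals its sample count times the sampling period are all hypothetical.

```python
import math
from typing import List, Tuple

Sample = Tuple[float, float]  # (horizontal, vertical) gaze position in degrees


def _is_stable(window: List[Sample], max_h_deg: float, max_v_deg: float) -> bool:
    """True if the gaze samples stay within the horizontal and vertical limits."""
    xs = [s[0] for s in window]
    ys = [s[1] for s in window]
    return (max(xs) - min(xs) <= max_h_deg) and (max(ys) - min(ys) <= max_v_deg)


def detect_fixations(
    samples: List[Sample],
    sample_rate_hz: float = 60.0,    # sampling rate reported for the eye tracker
    min_duration_ms: float = 100.0,  # minimum fixation duration from the text
    max_h_deg: float = 6.0,          # horizontal stability limit from the text
    max_v_deg: float = 4.0,          # vertical stability limit from the text
) -> List[Tuple[int, int, float]]:
    """Return (start_index, end_index_exclusive, duration_ms) per fixation.

    Assumed algorithm (not the study's software): slide a minimum-length
    window over the samples and grow it while the gaze remains stable.
    """
    min_samples = math.ceil(min_duration_ms * sample_rate_hz / 1000.0)
    fixations = []
    start, n = 0, len(samples)
    while start + min_samples <= n:
        end = start + min_samples
        if _is_stable(samples[start:end], max_h_deg, max_v_deg):
            # Extend the fixation one sample at a time while it stays stable.
            while end < n and _is_stable(samples[start:end + 1], max_h_deg, max_v_deg):
                end += 1
            duration_ms = (end - start) * 1000.0 / sample_rate_hz
            fixations.append((start, end, duration_ms))
            start = end
        else:
            start += 1
    return fixations
```

At 60 samples per second, the 100-ms minimum corresponds to six consecutive samples, so shorter stable runs and samples recorded during eye movements between fixations are not counted.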

Trials in which there were no fixation data on any of the singleton or majority images were excluded. This can happen when participants look away from the images or when they fail to reach the stability threshold required for the a priori definition of fixations. Of a total of 2,870 trials across all participants and conditions, 40 trials were excluded.

Eye-tracking methods such as those implemented in this study allow for natural, spontaneous responses and are based on the assumption that participants look at the image that corresponds to the verbal stimulus regardless of whether they were instructed to or not (Hallowell et al., 2002; Henderson, Shinkareva, Wang, Luke, & Olejarczyk, 2013). For the singleton and majority target images in the verbal condition, only data from trials for which comprehension of the verbal stimulus was likely were considered. Trials with a PFD on the target of less than .28 were excluded. This cutoff value is based on a 90% confidence interval for PFD on the target being greater than .25, which is the ideal chance value if the proportion of fixation duration were equally distributed across the target and the three remaining images in the display (Heuer & Hallowell, 2015). For PFD on the target below .28, it was unlikely that participants had understood the verbal stimulus. A total of 195 out of 1,230 trials of the verbal condition were excluded for this analysis. The excluded trials were distributed across participants, with one exception: For Participant 9, all luminance and color majority trials were excluded.
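The exclusion rule for the verbal condition amounts to a threshold filter on target PFD. The sketch below assumes each trial is represented as a dictionary with a `target_pfd` key; that data layout and the function name `filter_comprehended_trials` are illustrative assumptions rather than part of the study's software.

```python
from typing import Dict, List

CHANCE_PFD = 0.25  # chance level with four images per display
CUTOFF_PFD = 0.28  # exclusion cutoff reported in the text


def filter_comprehended_trials(
    trials: List[Dict[str, float]], cutoff: float = CUTOFF_PFD
) -> List[Dict[str, float]]:
    """Keep trials whose PFD on the target is at least the cutoff.

    Trials with a target PFD below the cutoff are dropped, mirroring the
    rule that such trials are unlikely to reflect comprehension.
    """
    return [trial for trial in trials if trial["target_pfd"] >= cutoff]
```

A trial with a target PFD of exactly .28 is retained under this reading, because the text excludes only values of less than .28.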

Visual attention allocated to each image was indexed in terms of PFD allocated to that particular image. PFD was calculated as the total duration of all fixations allocated to a particular image divided by the total of fixation durations on all images in the display (Dahan, Magnuson, & Tanenhaus, 2001; Hallowell, 1999; Hallowell et al., 2002; Heuer & Hallowell, 2007, 2009, 2015; Ivanova & Hallowell, 2012; Knoblich, Ohlsson, & Raney, 2001; Odekar et al., 2009). Dependent measures included the mean PFD across the three majority images and the mean PFD for the singleton image. The mean PFD across the three majority images was compared against the PFD allocated to the singleton image. Paired-samples t tests were conducted to address the first three hypotheses. Partial correlation coefficients were computed to determine the relationship between severity of comprehension deficits and the stimulus-driven influences as indexed by PFD allocated to nontarget and target singletons (controlling for age). Data in the nonverbal and verbal conditions, as well as BAT comprehension scores, were normally distributed on the basis of visual examination of the SPSS 22 output of the Q–Q plot and the nonsignificant results of the Shapiro–Wilk test.
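The PFD measure and the singleton-versus-majority comparison can be sketched as follows. The representation of fixations as `(image_label, duration_ms)` pairs, the quadrant labels in the usage note, and the function names are assumptions made for illustration; fixations are presumed to have already been assigned to an image, with center-of-screen fixations removed.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def proportion_fixation_duration(
    fixations: Iterable[Tuple[str, float]]
) -> Dict[str, float]:
    """Return PFD per image: fixation time on that image divided by the
    total fixation time on all images in the display."""
    totals: Dict[str, float] = defaultdict(float)
    for image_label, duration_ms in fixations:
        totals[image_label] += duration_ms
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}  # no usable fixation data; such trials were excluded
    return {label: total / grand_total for label, total in totals.items()}


def singleton_and_majority_pfd(
    pfd: Dict[str, float], singleton: str, majority: List[str]
) -> Tuple[float, float]:
    """Return (PFD on the singleton, mean PFD across the majority images).

    Images that received no fixations contribute a PFD of 0.
    """
    singleton_pfd = pfd.get(singleton, 0.0)
    majority_mean = sum(pfd.get(label, 0.0) for label in majority) / len(majority)
    return singleton_pfd, majority_mean
```

For example, fixations of 600 ms and 400 ms on a hypothetical `upper_left` singleton, with 1,000 ms spread across the other three quadrants, give a singleton PFD of .50 and a mean majority PFD of about .17.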

Results

Comparison of PFD for Singleton and Majority Images in the Nonverbal Condition

Analyses were conducted for singleton versus majority images in each condition across all the manipulated stimulus characteristics. The mean PFD allocated to the singleton images (M = .36, SD = .12) was significantly greater than the mean PFD allocated to the majority images in the nonverbal condition (M = .20, SD = .04), t(40) = 7.16, p < .001 (two-tailed).

Comparison of PFD for Target Singleton Images and Target Majority Images in the Verbal Condition

The mean PFD allocated to the target singleton images (M = .74, SD = .11) was significantly greater than the mean PFD allocated to the target majority images (M = .50, SD = .11) in the verbal condition, t(40) = 11.83, p < .001 (two-tailed).

Comparison of PFD for Nontarget Singleton Images and Nontarget Majority Images in the Verbal Condition

The mean PFD allocated to the nontarget singleton images (M = .28, SD = .15) was significantly greater than the mean PFD allocated to the nontarget majority images (M = .10, SD = .05) in the verbal condition, t(40) = 7.28, p < .001 (two-tailed).

Relationship Between Severity of the Comprehension Deficit and PFD on the Singleton Image in the Verbal and Nonverbal Conditions

Partial correlation coefficients, controlling for age, were computed between the BAT comprehension score, the BAT overall score, and the PFD allocated to the singleton image (a) in the nonverbal condition, (b) in the verbal condition when the singleton image was the target, and (c) in the verbal condition when the singleton image was not the target. A significant negative relationship was observed between the BAT comprehension and overall scores and PFD allocated to the nontarget singleton image in the verbal condition. Zero-order (full) correlations showed a similar statistically significant negative correlation between the BAT comprehension and overall scores and PFD allocated to the nontarget singleton image, indicating that age had very little influence on the relationship between BAT and PFD. Results suggest that as severity of language deficits increased, more visual attention was allocated to nontarget singleton images. Results are summarized in Table 2.

Table 2.

Partial correlation coefficients for the singleton image and Bilingual Aphasia Test (BAT) comprehension scores in the nonverbal and verbal conditions when controlling for age.

Values are correlations with the mean proportion of fixation duration allocated to the singleton image (N = 41).

| Control variable | Measure | Nonverbal condition: r | Nonverbal condition: p | Verbal condition (target): r | Verbal condition (target): p | Verbal condition (nontarget): r | Verbal condition (nontarget): p |
|---|---|---|---|---|---|---|---|
| None | BAT Auditory Comprehension | −.25 | .15 | .20 | .22 | −.67** | < .001 |
| None | Overall BAT aphasia score | −.23 | .15 | .09 | .56 | −.67** | < .001 |
| Age | BAT Auditory Comprehension | −.21 | .19 | .30 | .06 | −.63** | < .001 |
| Age | Overall BAT aphasia score | −.21 | .20 | .14 | .38 | −.67** | < .001 |

**p < .01.
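For readers who want to see how a partial correlation controlling for a single variable relates to the zero-order correlations, the following Python sketch uses the standard first-order formula. It is an illustration only: the analyses reported here were run in SPSS, the function and variable names are hypothetical, and the numbers are made up.

```python
import math


def pearson_r(x, y):
    """Zero-order Pearson correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_r(x, y, z):
    """Correlation between x and y with z (e.g., age) partialed out."""
    r_xy, r_xz, r_yz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Made-up values (not data from the study).
bat_score = [61, 64, 76, 77, 38]
nontarget_singleton_pfd = [0.30, 0.28, 0.15, 0.18, 0.45]
age = [68, 19, 59, 66, 30]
r_zero_order = pearson_r(bat_score, nontarget_singleton_pfd)
r_partial = partial_r(bat_score, nontarget_singleton_pfd, age)
```

When the control variable is only weakly related to both measures, the partial and zero-order coefficients stay close to each other, which is the pattern described for age in the text.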

Discussion

The first hypothesis, that greater visual attention (as indexed by PFD) would be allocated to the singleton than to majority images within the nonverbal condition, was confirmed. Images that differed from the remaining images in the display with respect to one image characteristic were fixated proportionally longer. This result is consistent with results of previous studies indicating that physical stimulus characteristics influence visual attention during viewing of multiple-choice displays in people without neurological impairment (Heuer & Hallowell, 2007, 2009).

The second hypothesis, that greater visual attention (as indexed by PFD) would be allocated to target singleton images than to target majority images in the verbal condition, was also confirmed. Despite explicit instructions to look at the image(s) that corresponded to the verbal stimulus, physical stimulus characteristics had a significant influence on visual attention in the presence of a verbal stimulus, as indicated by the significantly greater PFD allocated to the singleton target images than to majority target images. If visual attention were driven solely by the verbal stimulus (which is assumed in comprehension assessments that rely on multiple-choice image displays), no significant difference would have been observed in the allocation of PFD to singleton versus majority images in the verbal condition. This finding is consistent with results reported by Heuer and Hallowell (2009) for people without neurological impairment.

The third hypothesis, that greater PFD would be allocated to nontarget singleton images than to nontarget majority images in the verbal condition, was also confirmed. The nontarget singleton elicited significantly greater PFD than the nontarget majority images. This suggests that a singleton image is more likely than a majority image to draw the viewer's visual attention in a multiple-choice image display, even when it does not correspond to the verbal stimulus. This provides evidence that the verbal stimulus does not completely override the influence of image salience in tasks that present both verbal and visual stimuli. Although it is possible that participants ignored the verbal stimulus, it is unlikely. Application of the eye-tracking method relies on the assumption that viewers will look most naturally and automatically at the image that corresponds to the verbal stimulus regardless of the absence or presence of explicit instructions. This is a robust finding among studies incorporating the visual-world paradigm (e.g., Cooper, 1974; Tanenhaus et al., 1995) and comprehension-assessment studies (e.g., Hallowell, 1999, 2012; Hallowell et al., 2002) in people with and without neurologic impairment.

With regard to the fourth hypothesis, people with more severe comprehension deficits (indexed by lower comprehension scores on the BAT) and more severe aphasia overall (indexed by lower overall BAT scores) allocated greater PFD to the nontarget singleton images. This is consistent with previous findings that PWA tend to have challenges in allocating limited attentional resources (Murray, 1999, 2012; Robin & Rizzo, 1989). It appears that with increasing severity of aphasia and increasing comprehension deficits in particular, the top-down influence of the linguistic input might have less influence on allocation of visual attention by PWA as they process a verbal stimulus. Participants with more severe aphasia overall were potentially also more likely to be distracted by the bottom-up influence of the nontarget singleton images. These findings are consistent with those of Murray (2012), who reported a significant correlation between aphasia severity and performance on the Test of Everyday Attention (Robertson, Ward, Ridgeway, & Nimmo-Smith, 1994) in a sample of 39 PWA. However, in that study Murray also highlighted that six PWA performed within normal limits on the test. The current finding of an association between aphasia severity and apparent bottom-up influences of stimulus characteristics is not consistent with findings by Heuer and Hallowell (2015), who reported no significant correlation between aphasia severity and eye-tracking measures used to index visual-attention allocation in a dual task. In sum, deficits in attention allocation may contribute to poorer responses in a multiple-choice comprehension task, but our findings cannot necessarily be attributed to a strong relationship between attention-allocation deficits and aphasia severity (Murray, 2004, 2012). 
Also, it is important to keep in mind that the overall aphasia severity score incorporates the comprehension score, so the two measures of severity are not independent, a fact that likely contributed to the similar magnitude of the correlations for the two measures.

That physical stimulus characteristics influenced visual attention in PWA even during the verbal condition shows that one cannot assume that the verbal stimulus will completely override the influence of visual-stimulus characteristics during auditory verbal tasks that rely on multiple-choice displays. Overall, this study, conducted with native speakers of Russian with aphasia using Russian verbal stimuli, replicates the findings of a study with native speakers of American English without aphasia (Heuer & Hallowell, 2009). Results highlight that the observed visual stimulus–driven influences, even in the presence of a verbal stimulus, are not language specific. They also suggest that all people, regardless of the presence or absence of neurogenic comprehension deficits, are susceptible to the influence of image salience in multiple-choice image displays even in the presence of verbal stimuli. The experimental paradigms of these studies were not typical word–picture matching tasks commonly used to assess comprehension. Instead, the task was designed to strategically manipulate how participants allocate visual attention to simple geometric shapes (by altering one image characteristic in one of the images in each display) and to determine the influence of a verbal stimulus on visual-attention allocation. The results still have implications for testing in clinical and research settings.

First, when an individual selects a nontarget image instead of a target image during a multiple-choice task, it is important to consider whether this might be attributable (at least in part) to poor control of physical stimulus properties among items within a display as opposed to being attributable to a comprehension deficit. This is especially the case when a person being assessed is likely to have concomitant nonlinguistic cognitive deficits. The greater the severity of comprehension deficits and overall severity of aphasia, the more susceptible PWA may be to the influence of poorly controlled physical stimulus properties among target and nontarget images, which may further confound the validity of comprehension assessment. Careful control of stimulus-driven aspects in multiple-choice image displays is vital to valid indexing of auditory processing.

Second, all people are susceptible to the influences of physical image characteristics on visual attention, regardless of the presence or severity of deficits. Visual stimulus characteristics can be strategically manipulated in assessment and treatment materials as a way to influence visual attention and to affect the likelihood that participants will identify target images within multiple-choice displays.

Limitations

The images and the verbal stimuli used in the current study were intentionally simplistic, for the sake of controlling image characteristics objectively. Typical multiple-choice images have greater complexity and involve a combination of stimulus characteristics that might disproportionately influence visual attention. In the future, generalization to more typical types of test images should be established. Further, it is important to recognize that the experimental paradigm was not the same as a typical word–picture matching task, not only in terms of response modality but also in that typical multiple-choice tasks include overt instructions regarding how participants are to respond (both of which are strengths of the proposed method). Studies including more complex and ecologically valid visual stimuli, along with carefully controlled linguistic stimuli designed to evaluate comprehension abilities, will be important in further exploration of the influence of visual attention on linguistic processes and their interaction with cognitive and linguistic deficits in PWA. Last, although visual attention was monitored online by observing participants' fixations in real time, no measures of visual or auditory attention were collected.

Acknowledgments

This study was supported in part by the Ohio University School of Hearing, Speech and Language Sciences (graduate fellowships) and Ohio University College of Health and Human Services Student Research and Creative Activity Awards to Sabine Heuer and Maria V. Ivanova, and by National Institute on Deafness and Other Communication Disorders Grant R43DC010079 and National Science Foundation Biomedical Engineering Research to Aid Persons with Disabilities Program Grant 0454456 awarded to Brooke Hallowell. The article was also prepared within the framework of the Basic Research Program at the National Research University Higher School of Economics (HSE) and supported within the framework of a subsidy by the Russian Academic Excellence Project ‘5-100’ (Maria Ivanova). We extend our gratitude to Marina Emelianova and Victor Shklovsky from the Center for Speech Pathology and Neurorehabilitation, Moscow, Russia, for assistance with participant recruitment. We thank Hans Kruse for software design.

Appendix

Individual Characteristics of Participants With Aphasia

| Participant | Age (years) | Gender | Education (years) | Time since onset (years;months) | Site of lesion | Types of aphasia (according to Luria's classification) | BAT Auditory Comprehension (a) | BAT Reading (b) | BAT Overall Receptive Skills (c) | BAT Overall (d) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 68 | M | 16 | 0;11 | Parietal, occipital, posterior temporal lobe | Sensory, acoustic-mnestic, semantic | 61 | 42 | 103 | 187 |
| 2 | 19 | M | 9 | 5;5 | Frontal lobe | Dynamic, acoustic-mnestic, efferent motor | 64 | 36 | 100 | 189 |
| 3 | 59 | M | 13 | 0;6 | Temporal lobe | Sensory, acoustic-mnestic | 76 | 45 | 121 | 219 |
| 4 | 66 | F | 16 | 0;7 | Frontal, anterior temporal lobe | Acoustic-mnestic, efferent motor | 77 | 40 | 117 | 224 |
| 5 | 45 | M | 16 | 0;4 | Parietal, occipital lobe | Acoustic-mnestic, efferent motor | 73 | 43 | 116 | 229 |
| 6 | 59 | M | 16 | 0;2 | Temporal lobe | Acoustic-mnestic, efferent motor | 79 | 43 | 122 | 233 |
| 7 | 30 | M | 13 | 0;11 | Parietal, occipital, temporal lobe, subcortical area | Efferent motor, afferent motor, sensory | 38 | 10 | 48 | 50 |
| 8 | 52 | M | 16 | 3;4 | Frontal, temporal lobe | Dynamic, acoustic-mnestic, efferent motor | 58 | 29 | 87 | 172 |
| 9 | 29 | M | 16 | 0;3 | Parietal, occipital lobe | Dynamic, efferent motor, afferent motor | 80 | 45 | 125 | 239 |
| 10 | 53 | M | 16 | 0;2 |  | Acoustic-mnestic | 68 | 39 | 107 | 202 |
| 11 | 54 | M | 11 | 3;3 | Frontal, temporal lobe | Dynamic | 68 | 41 | 109 | 213 |
| 12 | 36 | M | 13 | 0;10 | Temporal, occipital lobe | Acoustic-mnestic, sensory, amnestic | 58 | 24 | 82 | 152 |
| 13 | 58 | F | 9 | 0;10 | Parietal, anterior temporal lobe | Efferent motor | 73 | 42 | 115 | 215 |
| 14 | 57 | F | 13 | 7;6 |  | Efferent motor, dynamic | 68 | 43 | 111 | 210 |
| 15 | 55 | F | 13 | 2;6 | Frontal, anterior temporal lobe | Acoustic-mnestic, sensory | 77 | 43 | 120 | 232 |
| 16 | 58 | M | 12 | 2;8 | Prefrontal, parietal lobe | Efferent motor | 70 | 35 | 105 | 207 |
| 17 | 45 | M | 16 | 1;9 | Frontal lobe, basal ganglia | Efferent motor, afferent motor, dynamic | 62 | 11 | 73 | 128 |
| 18 | 50 | M | 12 | 1;4 | Parietal, occipital, posterior temporal lobe | Sensory, acoustic-mnestic | 61 | 35 | 96 | 180 |
| 19 | 52 | M | 13 | 1;7 | Parietal, posterior temporal lobe | Acoustic-mnestic, efferent motor, dynamic | 71 | 45 | 116 | 215 |
| 20 | 50 | M | 13 | 2;5 | Parietal, temporal lobe | Sensory, acoustic-mnestic | 51 | 23 | 74 | 135 |
| 21 | 54 | M | 16 | 2;5 | Frontal lobe | Efferent motor, afferent motor | 66 | 40 | 106 | 177 |
| 22 | 31 | M | 16 | 2;8 |  | Acoustic-mnestic, efferent motor, semantic | 66 | 43 | 109 | 210 |
| 23 | 52 | M | 16 | 2;0 |  | Dynamic, efferent motor, afferent motor | 71 | 30 | 101 | 146 |
| 24 | 69 | M | 16 | 6;7 | Frontal, anterior temporal lobe | Efferent motor, afferent motor | 66 | 35 | 101 | 184 |
| 25 | 48 | M | 16 | 0;2 | Frontal, posterior temporal | Acoustic-mnestic | 76 | 44 | 120 | 232 |
| 26 | 34 | M | 11 | 0;5 | Frontal lobe | Efferent motor, afferent motor | 45 | 13 | 58 | 62 |
| 27 | 66 | M | 16 | 0;10 | Parietal, temporal lobe | Dynamic, acoustic-mnestic, afferent motor | 72 | 35 | 107 | 201 |
| 28 | 31 | M | 9 | 14;6 | Frontal, temporal lobe | Acoustic-mnestic, efferent motor, dynamic | 68 | 33 | 101 | 187 |
| 29 | 49 | F | 13 | 0;8 | Frontal lobe | Efferent motor, afferent motor | 80 | 46 | 126 | 238 |
| 30 | 57 | M | 19 | 1;5 | Frontal, temporal, parietal lobe | Efferent motor, afferent motor, dynamic | 44 | 9 | 53 | 87 |
| 31 | 50 | M | 11 | 0;11 |  | Sensory, acoustic-mnestic, dynamic | 57 | 11 | 68 | 109 |
| 32 | 60 | F | 16 | 1;0 | Parietal, temporal lobe | Efferent motor | 73 | 42 | 115 | 224 |
| 33 | 52 | M | 13 | 4;4 | Prefrontal, parietal, temporal lobe | Dynamic, efferent motor, acoustic-mnestic | 66 | 34 | 100 | 190 |
| 34 | 61 | M | 16 | 2;7 | Left temporal lobe, right occipital lobe | Amnestic | 75 | 43 | 118 | 231 |
| 35 | 58 | M | 16 | 3;3 | Occipital, posterior temporal lobe | Dynamic, efferent motor | 47 | 0 | 47 | 85 |
| 36 | 47 | M | 11 | 1;3 | Temporal, parietal, occipital lobe | Efferent motor, afferent motor | 59 | 18 | 77 | 81 |
| 37 | 53 | F | 13 | 1;2 | Frontal lobe | Efferent motor, afferent motor | 48 | 0 | 48 | 79 |
| 38 | 55 | M | 11 | 1;4 | Prefrontal, parietal, temporal lobe | Dynamic, efferent motor | 65 | 41 | 106 | 189 |
| 39 | 26 | F | 16 | 0;10 | Frontal, parietal, temporal lobe | Efferent motor, afferent motor, acoustic-mnestic | 62 | 37 | 99 | 165 |
| 40 | 15 | M | 9 | 1;1 | Parietal, temporal lobe | Semantic, acoustic-mnestic, efferent motor | 74 | 43 | 117 | 226 |
| 41 | 63 | F | 16 | 0;10 |  | Efferent motor, afferent motor | 58 | 8 | 66 | 133 |

(a) The Auditory Comprehension subtest of the Bilingual Aphasia Test (BAT) included the following subtests: Word–Picture Matching, Understanding of Simple and Semicomplex Commands, Verbal Auditory Discrimination, Syntactic Comprehension, and Listening Comprehension. The maximum score was 80.

(b) Maximum score: 46.

(c) Maximum score: 126.

(d) Maximum score: 241.

References

- Akhutina T. (2016). Luria's classification of aphasias and its theoretical basis. Aphasiology, 30, 878–897. https://doi.org/10.1080/02687038.2015.1070950

- Azouvi P., Bartolomeo P., Beis J.-M., Perennou D., Pradat-Diehl P., & Rousseaux M. (2006). A battery of tests for the quantitative assessment of unilateral neglect. Restorative Neurology and Neuroscience, 24, 273–285.

- Beaudoin A. J., Fournier B., Julien-Caron L., Moleski L., Simard J., Mercier L., & Desrosiers J. (2013). Visuoperceptual deficits and participation in older adults after stroke. Australian Occupational Therapy Journal, 60, 260–266.

- Bennett P. J., & Jaye P. D. (1995). Letter localization, not discrimination, is constrained by attention. Canadian Journal of Experimental Psychology/Revue canadienne de psychologie expérimentale, 49, 460–504.

- Bergen J. R., & Julesz B. (1983). Rapid discrimination of visual patterns. IEEE Transactions on Systems, Man, and Cybernetics, SMC-13, 857–863. https://doi.org/10.1109/TSMC.1983.6313080

- Berryman A., Rasavage K., & Politzer T. (2010). Practical clinical treatment strategies for evaluation and treatment of visual field loss and visual inattention. NeuroRehabilitation, 27, 261–268.

- Caplan D., & Waters G. S. (1995). On the nature of the phonological output planning processes involved in verbal rehearsal: Evidence from aphasia. Brain and Language, 48, 191–220.

- Caspari I., Parkinson S. R., LaPointe L. L., & Katz R. C. (1998). Working memory and aphasia. Brain and Cognition, 37, 205–223. https://doi.org/10.1006/brcg.1997.0970

- Cooper R. M. (1974). The control of eye fixation by the meaning of spoken language: A new methodology for the real-time investigation of speech perception, memory, and language processing. Cognitive Psychology, 6, 84–107.

- Dahan D., Magnuson J. S., & Tanenhaus M. K. (2001). Time course of frequency effects in spoken-word recognition: Evidence from eye movements. Cognitive Psychology, 42, 317–367.

- Davis E. T., Shikano T., Peterson S. A., & Michel R. K. (2003). Divided attention and visual search for simple versus complex features. Vision Research, 43, 2213–2232.

- Duncan J., & Humphreys G. W. (1989). Visual search and stimulus similarity. Psychological Review, 96, 433–458.

- Fisk G. D., Owsley C., & Mennemeier M. (2002). Vision, attention, and self-reported driving behaviors in community-dwelling stroke survivors. Archives of Physical Medicine and Rehabilitation, 83, 469–477.

- Foster D. H., & Ward P. A. (1991). Asymmetries in oriented-line detection indicate two orthogonal filters in early vision. Proceedings of the Royal Society B: Biological Sciences, 243, 75–81.

- Frankel T., Penn C., & Ormond-Brown D. (2007). Executive dysfunction as an explanatory basis for conversation symptoms of aphasia: A pilot study. Aphasiology, 21, 814–828. https://doi.org/10.1080/02687030701192448

- Fridriksson J., Nettles C., Davis M., Morrow L., & Montgomery A. (2006). Functional communication and executive function in aphasia. Clinical Linguistics & Phonetics, 20, 401–410.

- Friedmann N., & Gvion A. (2003). Sentence comprehension and working memory limitation in aphasia: A dissociation between semantic-syntactic and phonological reactivation. Brain and Language, 86, 23–39.

- Green B. F., & Anderson L. K. (1956). Color coding in a visual search task. Journal of Experimental Psychology, 51, 19–24.

- Hallowell B. (1999). A new way of looking at auditory linguistic comprehension. In Becker W., Deubel H., & Mergner T. (Eds.), Current oculomotor research: Physiological and psychological aspects (pp. 287–291). New York, NY: Springer.

- Hallowell B. (2008). Strategic design of protocols to evaluate vision in research on aphasia and related disorders. Aphasiology, 22, 600–617.

- Hallowell B. (2012). Exploiting eye-mind connections for clinical applications in language disorders. In Goldfarb R. (Ed.), Translational speech-language pathology and audiology (pp. 335–341). San Diego, CA: Plural.

- Hallowell B. (2016). Aphasia and other acquired neurogenic language disorders: A guide for clinical excellence. San Diego, CA: Plural.

- Hallowell B., & Chapey R. (2008). Introduction to language intervention strategies in adult aphasia. In Chapey R. (Ed.), Language intervention strategies in aphasia and related communication disorders (5th ed.; pp. 3–19). Philadelphia, PA: Lippincott, Williams & Wilkins.

- Hallowell B., Wertz R. T., & Kruse H. (2002). Using eye movement responses to index auditory comprehension: An adaptation of the Revised Token Test. Aphasiology, 16, 587–594.

- Henderson J. M., & Hollingworth A. (1999). High-level scene perception. Annual Review of Psychology, 50, 243–271.

- Henderson J. M., Shinkareva S. V., Wang J., Luke S. G., & Olejarczyk J. (2013). Predicting cognitive state from eye movements. PLoS One, 8(5), e64937. https://doi.org/10.1371/journal.pone.0064937

- Henderson J. M., Weeks P. A. Jr., & Hollingworth A. (1999). The effects of semantic consistency on eye movements during complex scene viewing. Journal of Experimental Psychology: Human Perception and Performance, 25, 210–228.

- Heuer S., & Hallowell B. (2007). An evaluation of multiple-choice test images for comprehension assessment in aphasia. Aphasiology, 21, 883–900.

- Heuer S., & Hallowell B. (2009). Visual attention in a multiple-choice task: Influences of image characteristics with and without presentation of a verbal stimulus. Aphasiology, 23, 351–363.

- Heuer S., & Hallowell B. (2015). A novel eye-tracking method to assess attention allocation in individuals with and without aphasia using a dual-task paradigm. Journal of Communication Disorders, 55, 15–30.

- Hutchison K. A., Balota D. A., & Ducheck J. M. (2010). The utility of Stroop task switching as a marker for early-stage Alzheimer's disease. Psychology and Aging, 25, 545–559.

- Hyvärinen L., Näsänen R., & Laurinen P. (1980). New visual acuity test for pre-school children. Acta Ophthalmologica, 58, 507–511.

- Itti L., & Koch C. (2001). Computational modelling of visual attention. Nature Reviews Neuroscience, 2, 194–203.

- Ivanova M. V., Dragoy O. V., Kuptsova S. V., Ulicheva A. S., & Laurinavichyute A. K. (2015). The contribution of working memory to language comprehension: Differential effect of aphasia type. Aphasiology, 29, 645–664. https://doi.org/10.1080/02687038.2014.975182

- Ivanova M. V., & Hallowell B. (2009). Short form of the Bilingual Aphasia Test in Russian: Psychometric data of persons with aphasia. Aphasiology, 23, 544–556.

- Ivanova M. V., & Hallowell B. (2012). Validity of an eye-tracking method to index working memory in people with and without aphasia. Aphasiology, 26, 556–578.

- Ivanova M. V., & Hallowell B. (2014). A new modified listening span task to enhance validity of working memory assessment for people with and without aphasia. Journal of Communication Disorders, 52, 78–98. https://doi.org/10.1016/j.jcomdis.2014.06.001

- Judd T., Ehinger K., Durand F., & Torralba A. (2009, September). Learning to predict where humans look. In 2009 IEEE 12th International Conference on Computer Vision (pp. 2106–2113). Piscataway, NJ: Institute of Electrical and Electronics Engineers.

- Knoblich G., Ohlsson S., & Raney G. E. (2001). An eye movement study of insight problem solving. Memory & Cognition, 29, 1000–1009.

- Kruse H. (2008). iAnalyze [Computer software]. Athens: Ohio University Neurolinguistics Laboratory.

- Lachapelle J., Bolduc-Teasdale J., Ptito A., & McKerral M. (2008). Deficits in complex visual information processing after mild TBI: Electrophysiological markers and vocational outcome prognosis. Brain Injury, 22, 265–274.

- LC Technologies. (2011). Eyegaze Edge Analysis System user manual. Fairfax, VA: Author.

- Manor B. R., & Gordon E. (2003). Defining the temporal threshold for ocular fixation in free-viewing visuocognitive tasks. Journal of Neuroscience Methods, 128, 85–93.

- Moraglia G. (1989). Display organization and the detection of horizontal line segments. Perception & Psychophysics, 45, 265–272.

- Murray L. L. (1999). Attention and aphasia: Theory, research and clinical implications. Aphasiology, 13, 91–111.

- Murray L. L. (2004). Cognitive treatments for aphasia: Should we and can we help attention and working memory problems? Journal of Medical Speech-Language Pathology, 12, xxv–xl.

- Murray L. L. (2012). Attention and other cognitive deficits in aphasia: Presence and relation to language and communication measures. American Journal of Speech-Language Pathology, 21, S51–S64.

- Murray L. L., Holland A. L., & Beeson P. M. (1997). Auditory processing in individuals with mild aphasia: A study of resource allocation. Journal of Speech, Language, and Hearing Research, 40, 792–808.

- Nagy A. L., & Sanchez R. R. (1990). Critical color differences determined with a visual search task. Journal of the Optical Society of America A: Optics, Image Science, and Vision, 7, 1209–1217.

- Nothdurft H.-C. (2002). Attention shifts to salient targets. Vision Research, 42, 1287–1306.

- Odekar A., Hallowell B., Kruse H., Moates D., & Lee C.-Y. (2009). Validity of eye movement methods and indices for capturing semantic (associative) priming effects. Journal of Speech, Language, and Hearing Research, 52, 31–48.

- Paradis M., & Zeiber T. (1987). Bilingual Aphasia Test (Russian version). Hillsdale, NJ: Erlbaum.

- Penn C., Frankel T., Watermeyer J., & Russell N. (2010). Executive function and conversational strategies in bilingual aphasia. Aphasiology, 24, 288–308. https://doi.org/10.1080/02687030902958399

- Purdy M. (2002). Executive function ability in persons with aphasia. Aphasiology, 16, 549–557. https://doi.org/10.1080/02687030244000176

- Robertson I. H., Ward T., Ridgeway V., & Nimmo-Smith I. (1994). The Test of Everyday Attention. Bury St. Edmunds, UK: Thames Valley Test Company.

- Robin D. A., & Rizzo M. (1989). The effect of focal cerebral lesions on intramodal and cross-modal orienting of attention. Clinical Aphasiology, 18, 61–74.

- Rush B. K., Barch D. M., & Braver T. S. (2006). Accounting for cognitive aging: Context processing, inhibition or processing speed? Aging, Neuropsychology, and Cognition, 13, 588–610.

- Tanenhaus M. K., Spivey-Knowlton M. J., Eberhard K. M., & Sedivy J. C. (1995). Integration of visual and linguistic information in spoken language comprehension. Science, 268, 1632–1634.

- Tatemichi T. K., Desmond D. W., Stern Y., Paik M., Sano M., & Bagiella E. (1994). Cognitive impairment after stroke: Frequency, patterns, and relationship to functional abilities. Journal of Neurology, Neurosurgery & Psychiatry, 57, 202–207.

- Theeuwes J. (2010). Top-down and bottom-up control of visual selection. Acta Psychologica, 135, 77–99.

- Thiessen A., Beukelman D., Ullman C., & Longenecker M. (2014). Measurement of the visual attention patterns of people with aphasia: A preliminary investigation of two types of human engagement in photographic images. Augmentative and Alternative Communication, 30, 120–129.

- Treisman A., & Gormican S. (1988). Feature analysis in early vision: Evidence from search asymmetries. Psychological Review, 95, 15–48.

- Tseng C. H., McNeil M. R., & Milenkovic P. (1993). An investigation of attention allocation deficits in aphasia. Brain and Language, 45, 276–296.

- Villard S., & Kiran S. (2015). Between-session intra-individual variability in sustained, selective, and integrational non-linguistic attention in aphasia. Neuropsychologia, 66, 204–212.

- Wilkinson K. M., & Light J. (2011). Preliminary investigation of visual attention to human figures in photographs: Potential considerations for the design of aided AAC visual scene displays. Journal of Speech, Language, and Hearing Research, 54, 1644–1657.

- Wolfe J. M. (2000). Visual attention. In De Valois K. K. (Ed.), Seeing (2nd ed.; pp. 335–386). San Diego, CA: Academic Press.

- Wolfe J. M., Butcher S. J., Lee C., & Hyle M. (2003). Changing your mind: On the contributions of top-down and bottom-up guidance in visual search for feature singletons. Journal of Experimental Psychology: Human Perception and Performance, 29, 483–502.

- Yarbus A. L. (1967). Eye movements and vision. New York, NY: Plenum.

- Zwahlen H. (1984). Light and optics. In Kaufman J. E. (Ed.), IES lighting handbook: 1984 reference volume (pp. 2–7). New York, NY: Illuminating Engineering Society of North America.