Abstract

Increasingly popular touch-screen electronic tablets offer clinics a new medium for collecting adolescent health screening data in the waiting area before visits, but there has been limited evaluation of interactive response modes. This study investigated the clarity, comprehensibility and utility of icon-driven and gestural response functions employed in one such screening tool, TickiT™. We conducted cognitive processing interviews with 30 adolescents from Vancouver (ages 14–20, 60% female, 30% ESL) as they completed the TickiT survey. Participants used 7 different interactive functions to respond to questions across 30 slides, while being prompted to articulate their thoughts and reactions. The audio-recorded, transcribed interviews were analyzed for evidence of comprehension, nuances in response choices, and youth interest in the modes. Participants were quite receptive to the icon response modes. Across demographics and cultural backgrounds they indicated question prompts were clear, response choices appropriate, and response modes intuitive. Most said they found the format engaging, and would be more inclined to fill out such a screening tool than a paper-and-pencil form in a clinical setting. Given the positive responses and ready understanding of these modes among youth, clinicians may want to consider interactive icon-driven approaches for screening.

Keywords: adolescent health screening, assessment technology, questionnaire, TickiT

A good survey or screening tool provides reliable and accurate information1, and the advantages of using computer-aided self-reports in research and clinical settings have been well documented2–9. Foremost of these benefits is an improvement in eliciting relatively truthful responses; studies have shown that, compared to face-to-face interviews, computer-based self-surveys decrease public self-awareness while increasing private self-awareness2, eliciting higher levels of spontaneous self-disclosure and more frequent admissions of stigmatized behavior3,4. This phenomenon is most acutely manifested in the “online disinhibition effect,” a term that describes the ease with which individuals disclose personal information over the Internet5.

Computer-based programs show other benefits relative to pencil-and-paper formats. When given a choice between computer or paper surveys, research participants have indicated they prefer electronic media6, and perceive the process as less intimidating7. Further, computerized data collection offers a) limited response options that prevent out-of-range answers; b) minimal errors in the recording and scoring of responses; c) the swift generation of results; and d) the capacity to automatically store and index data for future use8,9. This swift collection and display of results is particularly valuable for health care providers who use self-surveys as a screening tool before meeting with the patient, allowing them to address disclosed risk or protective factors during face-to-face sessions. This screening strategy has been shown to improve outcomes following adolescent interventions10, and to enhance young patients’ views of their visits.

For the reasons listed above, computer-based tools are becoming popular for such screening assessments11,12. In recent years, the increased availability of new devices, such as interactive electronic tablets, has offered promising utility for even more effective and reliable data collection. For example, in a study of school-aged children, 61% found tablets more private and confidential than laptops, and found it easier to answer truthfully on them13. Adult users have likewise rated tablet-aided surveys as easy to read and easy to respond to14. It is important to note, though, that these studies evaluated surveys that merely transposed the visuals of a paper or computer survey onto the tablet screen, with solely textual questions and response options. This is in contrast to the growing number of programs that take advantage of the interactive technology of tablets and have shifted to icon, pictogram, or gestural response options, in which users swipe or tap on the screen to move bars, buttons, and icons to enter responses.

While this departure from strictly text-based questionnaires has the potential to lower the literacy required of users and so increase accessibility15, it must first be established that such methods are equally well understood and are valid substitutes for text-based approaches. We were unable to find any published evaluations or validations of icon-based and interactive response modes used on electronic tablets as part of an adolescent health screening tool or survey. This study therefore aimed to evaluate the clarity, comprehensibility, and feasibility of using icon-driven and gestural response modes with adolescents of varying ages and ethnocultural backgrounds in health screening assessments and survey research. Three research questions guided our study: 1) Do diverse adolescents similarly understand how to operate icon and gestural response options for survey questions without instructions? 2) Do diverse adolescents attribute the same or similar meanings to question stems and to icon or gestural response options when choosing their answers? and 3) Do diverse adolescents express predominantly positive or negative views about, or a preference for, completing health screens that involve electronic tablets and interactive response options?

Methods

This study involved cognitive processing interviews using the “think-aloud” technique of data collection16, a common approach for evaluating how measures are perceived and understood by the target population. The think-aloud method has been used for more than 30 years17 as one of the primary means of assessing survey item clarity, acceptability, and comprehension across a diverse range of age groups18. It has also been widely recommended for usability studies of computer software and internet use19, including interactive e-health software16,19. These methods elicit information about how people think about and respond to survey items during or immediately after the initial cognitive process occurs, and so theoretically tap into working memory rather than retrospective cognitions and interpretations. In icon- or gesture-based interactive computer surveys, responding requires decoding the icon and knowing what action is required to answer, often quite quickly and intuitively16,19; a method that engages youth in expressing their understanding in the moment is therefore more likely to capture variations in understanding or problems in use than relying solely on retrospective interviews, even those conducted soon after the activity16. However, several researchers have suggested that the cognitive burden of thinking aloud while performing a complex task, especially when cognitions occur much more quickly than they can be spoken, may call for further probing after the task is completed rather than during it19. Our procedure therefore included follow-up interviews asking about preferences, the overall experience of this form of surveying, and specific recommendations for improvement.

Sample

Thirty participants aged 14–20 (median age 16) were recruited from community recreation programs, campus groups, clubs, and other community sites across the greater Vancouver area during June and July 2012. Nearly two-thirds of the sample (63%) were female, and participants represented varied ethnic backgrounds, including European heritage, East Asian (Chinese, Japanese, Taiwanese), and South Asian (Indian/Pakistani); 9 of 30 participants (30%) had learned English as a second language, with first languages including Punjabi, Mandarin, Cantonese, Taiwanese, and Japanese.

Participants 18 years and under participated with parental consent, and all participants provided written consent or assent. Ethics approval was obtained from the Behavioral Research Ethics Board of the University of British Columbia.

Instrument

TickiT™ (Shift Health Paradigms, Inc., Vancouver, Canada) is a tablet-based adolescent health screening tool designed to obtain a psychosocial review from youths in a clinic waiting room or school counseling office20. It was derived from the Adolescent Screening Questionnaire21, a paper-and-pencil self-report survey developed to collect data used to flag both risk and protective factors for health care providers (sample question: “Do you have a friend you can talk to if you have any problems or worries?” with the options “yes,” “sometimes, depending on the problem,” and “no”). TickiT uses seven groups of response options, detailed below, that participants interact with to enter information. Response types vary by visual cue (icons vs. numbers), by the nature of gestural input (slide vs. tap), and by the number of items in the response set. The application is organized around a central “home page” screen, from which subsequent topical sections are launched. Within each section, slides are displayed in a fixed order, and users must tap the “next” button at the bottom right of each screen to move on; questions can be skipped by pressing “next” without entering a response.

Patient information is linked to a randomized patient ID, transmitted to the provider’s personalized, password-protected online account, and then removed from the tablet device. Providers can then view the survey results, which are classified and color-coded as protective or risk factors according to the provider’s preferences.

Procedures

During digitally recorded interviews, participants filled out the screening tool while being prompted to say their thoughts aloud as they read each question, considered the choices, and selected their response. This technique aimed to elucidate how users interpreted the icons employed in the tool, whether item wording was culturally and developmentally appropriate, and whether the question prompts were clear and congruent with the possible response choices. Follow-up questions after the think-aloud portion assessed participants’ overall opinion of the approach and clarified points raised during the interview. The TickiT sections completed by participants included questions on demographics and family relationships, educational experiences, chronic conditions and health self-care, and nutrition. Participants’ actual survey responses were neither saved on the tablet device nor transmitted via the internet.

Analysis

Interviews were transcribed, and responses were analyzed with a focus on four general areas: a) interpretation of the question prompt; b) understanding of how to use the response mode, and the saliency of the mode; c) the clarity and appropriateness of the response options; and d) the positive or negative value of this mode of health screening, overall and for individual response modes. Before coding the transcripts, the required action and the intended meaning of each icon or gestural action were specified for each mode and each question to ensure consistency between the primary and secondary coders. In addition, a set of a priori codes was developed16,19 to flag whether participants struggled to understand how to use the icon or gesture, and whether their vocalized understanding of the question prompt, the icon meaning, and the response options fit the intended meaning. These were coded in an item-by-item review within interviews18 to assess comprehension, and also across questions with similar response modes to determine understanding of the action each mode required. Additional inductive codes for emotive responses, value statements, and potential variations across demographic characteristics, such as participant age, gender, and English as a second language (ESL) status, were developed during the reading and rereading of the transcripts and field notes across individuals and item areas, to identify inconsistencies or commonalities in responses and to flag potential issues of acceptability in addition to clarity.

Results

Participants responded to a total of 30 screens (with 10 additional screens if they indicated they had a chronic health condition), each containing a question prompt and interactive response function. Their reactions to and thoughts about the seven different types of response modes and their functions (see Table 1) are discussed below.

Table 1.

Types of interactive or non-textual response modes evaluated.

| # | Response Mode | Function |
|---|---|---|
| 1 | Sliding Scale | Four choice intervals written along a horizontal shaft |
| 2 | Pick an Icon | Choose from an array of symbol icons with descriptor terms below |
| 3 | Moving Thumb | Swing the hand between three positions: thumbs up, thumbs down, and neutral |
| 4 | Scrolling Number List | Swipe finger vertically to scroll through a number list |
| 5 | Tap a Word Response | Text becomes highlighted when pressed |
| 6 | The Three Blocks | Blocks of varying size denote “too little,” “enough,” and “too much” |
| 7 | Emoticons | Four faces expressing different emotions, with descriptor terms below |

1) Sliding Scale

The sliding scale mode offered users a spectrum of four choices written along a horizontal shaft (see Figure 1). The scale began at the mid-mark (no response) by default and then filled with color as the user moved a finger along it, “almost like a thermometer,” as one 18-year-old male ESL participant put it. Although the marker could be moved continuously along the scale, it automatically shifted to the closest of the four named intervals once the finger was lifted from the screen. In questions about the frequency of behaviors, the usual set of choices was “never, rarely, often, always,” although other sets of options were also used with this response mode elsewhere in the survey.
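The snapping behavior described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that the four labels sit at evenly spaced positions along a normalized track from 0.0 (left end) to 1.0 (right end); the function name `snap_to_interval` and the clamping of out-of-range touches are hypothetical and are not taken from TickiT's implementation.

```python
from typing import Optional, Sequence

# The usual frequency scale reported for this mode; other label sets also existed.
FREQUENCY_LABELS = ("never", "rarely", "often", "always")


def snap_to_interval(
    position: Optional[float], labels: Sequence[str] = FREQUENCY_LABELS
) -> Optional[str]:
    """Return the named interval closest to where the finger was lifted.

    position is the assumed normalized location of the lift on the track
    (0.0 = left end, 1.0 = right end), or None if the user never touched the
    scale, which leaves the question unanswered (it can be skipped).
    """
    if position is None:
        return None
    if len(labels) < 2:
        raise ValueError("a scale needs at least two labels")
    # Clamp touches that land slightly past either end of the track.
    position = min(max(position, 0.0), 1.0)
    # Assume the labels are evenly spaced from one end of the track to the other.
    anchors = [i / (len(labels) - 1) for i in range(len(labels))]
    # Pick the label whose anchor is nearest to the lift point.
    nearest = min(range(len(labels)), key=lambda i: abs(anchors[i] - position))
    return labels[nearest]
```

For example, under these assumptions `snap_to_interval(0.4)` returns `"rarely"`, because 0.4 lies closer to the second anchor (about 0.33) than to any other, while an untouched scale returns `None`.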

Figure 1.

Slide response mode example from TickiT™ screening tool (used with permission).

All participants found the gestural function of the scale clear, and readily moved their finger on the screen to fill the scale to the desired point. These slides were the most frequently used, and participants quickly became familiar with the format. As one 17-year-old ESL youth said, “this is really simple, so it’s really easy for me to answer right away…I like that.” Participants also generally agreed that the set of response options on each slide appropriately matched the question prompt, with one exception.

The screen “I will see the doctor by myself,” with slide options of “in 12 months,” “in 6 months,” “I already do,” and “I don’t know,” caused confusion, and age appeared to be a factor in participants’ understanding of the question. Among the 19 participants under the age of 17, 89% chose the “I don’t know” option, and many expressed uncertainty as to whether the question referred to seeing the doctor alone during a clinic visit or, alternatively, to “start making my own appointments…taking care of my personal health independently” (female, age 15). Although a majority (64%) of participants aged 17 and older chose one of the other three options, they too expressed uncertainty about what the question was asking. For example, one 17-year-old male responded: “Um, in…(pause)…12 months I’d say. Or I don’t even know, because, like, I never see the doctor by myself.”

2) Pick an Icon

These screens displayed an array of symbols, each paired with a descriptive term below; both became highlighted when tapped (see Figure 2). The number of items in the icon set ranged from two (“I am: male/female”) to over twenty, depending on the slide. For example, the screen “My favorite foods:” had 18 symbols to choose from and garnered one of the most enthusiastic responses among participants. Participants indicated they clearly understood how to operate this response mode, and many stated they actively enjoyed searching through and highlighting the pictures; as one 15-year-old male said: “It’s fun to click the icons.” In the follow-up interviews, the colorful and playful design of the icons was mentioned as particularly engaging.

Figure 2.

Pick an Icon mode example from TickiT™ screening tool (used with permission).

One point of uncertainty among participants was whether they could select multiple icons or just one; most screens allowed multiple selections, but some restricted users to a single choice. The first icon-based screen in the survey explicitly stated “(tick all that apply)”; some subsequent screens permitted multiple responses without saying so, while others allowed only one icon to be highlighted at a time. One such single-response example was the slide “When I leave school I plan to:” with the choices “Travel” (an orange airplane), “Post-secondary school” (an open book, fuchsia), “Work” (a purple stick figure laboring), and “Not sure” (a large black question mark). Seven participants attempted multiple responses on this slide; as one 17-year-old female remarked, “Well, I figured I could choose more than one option… I guess in this case it’s not.”
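The inconsistency participants ran into can be made concrete with a small sketch contrasting the two selection rules. The `IconScreen` class and its `allow_multiple` flag are hypothetical, and the toggle-off behavior on a second tap is an assumption; the source does not describe how TickiT implements selection, only that some screens accepted several icons and others only one.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class IconScreen:
    """Hypothetical selection state for one 'Pick an Icon' screen."""

    icons: List[str]
    allow_multiple: bool  # assumed per-screen flag; True for "tick all that apply"
    selected: List[str] = field(default_factory=list)

    def tap(self, icon: str) -> None:
        if icon not in self.icons:
            raise ValueError(f"unknown icon: {icon!r}")
        if icon in self.selected:
            # Assumed behavior: tapping a highlighted icon clears its highlight.
            self.selected.remove(icon)
        elif self.allow_multiple:
            # Multi-select screen: the new icon is added to existing highlights.
            self.selected.append(icon)
        else:
            # Single-select screen: the new icon silently replaces the previous one.
            self.selected = [icon]


# The "When I leave school I plan to:" slide allowed only one choice.
plans = IconScreen(["Travel", "Post-secondary school", "Work", "Not sure"], allow_multiple=False)
plans.tap("Travel")
plans.tap("Work")
print(plans.selected)  # ['Work']
```

Because the single-select rule replaces the earlier highlight without any message, a participant expecting multi-select behavior would see "Travel" quietly disappear, which is one plausible reading of the surprise the 17-year-old participant voiced.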

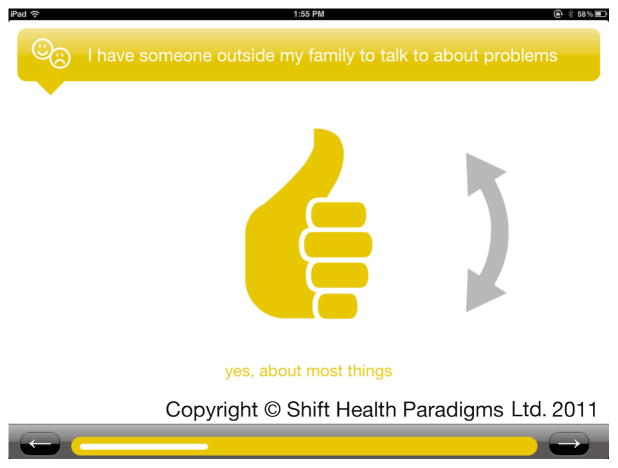

3) Moving Thumb

This function displayed a hand with an upright thumb that could be rotated and locked into one of three positions: thumbs up, thumbs down, or neutral (Figure 3). The slide opened with the thumb at a 45-degree angle and no words shown, just a curved arrow indicating movement. As the user pressed the screen and moved the thumb upward or downward, words appeared at the bottom of the screen corresponding to the thumb’s position.
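The three-position thumb, including its unanswered opening state, can be sketched as a snap to the nearest of three detents. The detent angles (90° for thumbs up, 0° for neutral, −90° for thumbs down), the function name `thumb_response`, and the example wording are all assumptions for illustration; the source states only that the thumb locks into three positions and that the displayed phrase varies by question.

```python
from typing import Dict, Optional

# Assumed detent angles in degrees; the source says only that the thumb
# locks into three positions (up, neutral, down).
DETENTS: Dict[str, float] = {"up": 90.0, "neutral": 0.0, "down": -90.0}


def thumb_response(release_angle: Optional[float], wording: Dict[str, str]) -> Optional[str]:
    """Snap the released thumb to the nearest detent and return its wording.

    release_angle is None when the thumb was never moved, matching the
    unanswered opening state (thumb at 45 degrees, no words shown).
    wording maps each detent name to the question-specific phrase.
    """
    if release_angle is None:
        return None
    nearest = min(DETENTS, key=lambda name: abs(DETENTS[name] - release_angle))
    return wording[nearest]


# Hypothetical wording for one question; the real phrases varied by question.
example_wording = {"up": "yes", "neutral": "sometimes", "down": "no"}
print(thumb_response(-30.0, example_wording))  # an in-between release snaps to neutral
```

In a snapping design like this one, an attempt to leave the thumb between two positions would simply resolve to the nearest detent, so the recorded answer could differ from what the participant intended.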

Figure 3.

Thumbs Up response mode example from TickiT™ health screening tool (used with permission).

Only a single phrase was displayed at a time, depending on thumb position, so users had to cycle through all three thumb settings to read the specific wording of each response choice, which varied by question. Participants were divided as to whether they chose their answer based on the thumb position or the text wording. For example, one 15-year-old bilingual male stated, “I read the question first, and then I already have an answer,” while a 14-year-old said the opposite: “I look at the words… I want to know exactly what the thumbs up, thumbs down means.”

Reactions to the thumb function illustrated both the benefits and the drawbacks of the tablet-based interface technology. On the one hand, participants expressed excitement about the novelty of manually swinging the thumb image. As one 16-year-old female explained:

This one… is much better in the aspect that you can actually move the symbols and whatnot with your finger, instead of, rather than clicking or checking a box or whatnot…Way more interactive, so it keeps the person engaged, and actually compelled to finish.

Another 18-year-old ESL male compared it to the Facebook™ social media “like” button.

However, the touch function also required users to learn how to move the thumb, often through trial and error, and this was not equally intuitive for all participants. Some found it “hard to scroll through” (15-year-old ESL female), and others were “confused at first, like I wasn’t too sure what I was supposed to do” (16-year-old female). Most participants quickly adapted to the thumb on the first slide, but seven (23%) showed some difficulty operating it, and two eventually asked the interviewer for assistance. It was also unclear to some participants that response choices were restricted to the three set positions; six (20%) tried to set the thumb between two of the three accepted response points for at least one question.

4) Scrolling Number List

This mode required participants to scroll through a list of numbers by swiping a finger vertically in the inverse direction (Figure 4). It was well received by participants; as one 18-year-old ESL male commented, “It’s actually really cool how this works.” One 15-year-old female likened the format to the scrolling mechanism on Apple™ portable electronic products. Most participants found the mode easy to use, but the questions requiring a precise numeric choice were not all equally clear. For example, the slide “I eat ___ meals/snacks each day” illustrated how an overly inclusive question prompt could lead to divergent interpretations among users. Participants saw it as ambiguous because it combined meals and snacks, and were uncertain how to count the two together. As one 15-year-old female participant said, “I don’t know, if you have a banana or something, I wouldn’t count that here, because it’s comparing it to a meal as well. So if you pick like 5, you might have a banana, but they might think it’s two lunches.”

Figure 4.

Scrolling number list example from TickiT™ health screening tool (used with permission).

5) Tap a Word Response

These slides presented simple word choices, such as “yes” and “no,” as response options; the user pressed the text to highlight it. The function and question prompts of all such screens were clear and unambiguous to participants, and they answered them quickly.

6) The Three Blocks

This response mode displayed three blocks of increasing size bearing the phrases “too little,” “enough,” and “too much,” which users selected by tapping. The number of blocks varied across sections (for example, one binge drinking screen in the “Drugs” section of the survey has six blocks). Participants found these choices very simple and straightforward, and showed no hesitation in selecting them. “I like the blocks, they’re easy…and they show what’s too little, and too much,” commented one 14-year-old.

7) Emoticons

This response mode appeared on only one screen in the sections used in this study, although it appears more frequently in other sections. The screen displayed the prompt “The look of my body makes me feel…” and offered an array of four faces expressing different emotions, with descriptor terms below: “angry,” “sad,” “good,” and “great.” Only one response was allowed. Interviewees stated that they were cued by the emoticons’ expressions more than by the words, and more so with this mode than with any of the other icons or symbols in the survey. For example, one 14-year-old female noted, “I’m looking at the faces more than the words this time.” However, the range of emotions displayed was considered somewhat limited; as one 15-year-old female explained, “It can be OK, I think the smiley faces are good, but we should have an OK (emoticon).” A 17-year-old ESL youth elaborated, “I like icons, cause they match the choices, but I think that the choices are a little, a little extreme, for example, if I think that my body looks, um, self-conscious, then that’s, I’m not feeling angry, but I’m not feeling sad either.”

Follow-up Questions

After completing the TickiT survey, participants were asked additional questions to assess their overall opinion of the survey approach. Responses were encouraging, and many participants stated that they actively enjoyed completing the assessment. As one 15-year-old ESL female said, “Coming into this, I felt that… questions would require me to think a lot more deeply, but I kind of appreciated how it was so simple… I just had to go through everything, and it was, it was kind of fun.”

When asked how the tablet format compared with pencil-and-paper surveys, 23 of 28 (82%) participants preferred the interactive electronic approach, two preferred the standard paper format, and three viewed the two modes as equivalent. Participants also felt the screening took less effort and time than they had anticipated. As one 15-year-old male said, “It was pretty straightforward, but each section was shorter than I expected.”

Participants also noted the single-question-per-screen format as a benefit. One 16-year-old ESL female said, “I like seeing one question at a time because you focus, and you don’t go off.” A 15-year-old bilingual male offered, “on a tablet… once you press ‘next’ you don’t see it again, but on a sheet of paper, people beside you could see the survey.” He added that on “paper surveys, once you look at it, (you think) why are there so many yesses, maybe I should start changing it up.”

All 30 participants indicated that they would be willing to complete such a screening tool at the doctor’s office, and 26 of 29 (90%) said they would also be willing to take it at a school or mall for survey research purposes. Asked why they reacted positively toward the application, one 14-year-old female succinctly put it, “’cause it’s just on an iPad™, iPads are fun.” The interactive presentation of the tool was frequently cited as a primary reason. One 16-year-old female remarked, “It’s for people in the new generation, ‘cause everyone is doing stuff, like, with technology, and they don’t really like to write anymore.” The program’s colorful display and icon-driven graphics were also appealing; one 15-year-old female reacted, “This is really cool, Weeeee!! I like that it changes colors.” The variety of response modes also engaged the adolescents: “You kind of want to know what the next question is like,” said a 16-year-old ESL female participant.

Discussion

A psychosocial assessment is a standard of care in adolescent clinical health care encounters, and groups such as the Canadian Paediatric Society22 and the American Medical Association23 have recommended that health care providers obtain a comprehensive health profile to identify risk factors in younger patients. Such an interview can be time-consuming24, however, and some questions may be uncomfortable for young patients to answer directly25. The paper-based Adolescent Screening Questionnaire21 was developed and validated to provide such an assessment in lieu of a face-to-face interview; unfortunately, the paper format was not well received by youth, with a 25% refusal rate in one clinical trial21. Electronic tablets are becoming commonplace and now offer health care providers another medium for collecting data. If the self-report process is made more engaging through interactive and icon-based responses, adolescents may be more willing to complete a self-survey. The results of our study support this: the diverse young people in our study were generally enthusiastic about the modes.

Previous studies have found that questionnaires requiring participants to answer each question individually, in a specific order, reduce the number of skipped questions26. Presenting a single question per screen on the tablet appears to extend this benefit and, based on some participants’ comments, may also enhance the reliability of responses: it offers greater privacy for prior answers and may reduce response set bias, that is, adapting responses to create (or disrupt) a perceived overall response pattern rather than actually answering each question.

Willingness to use the technology, however, does not guarantee comprehension of how to use each response mode. A risk of any new technology is that its functions may not be clear to unfamiliar users, and testing this possibility was one of the aims of our study. Our findings suggest that participants were overall quite capable of operating interactive touch-screen formats: interviewees indicated that they understood the tool, and these comments were reinforced by the group’s evident ability to enter their intended responses reliably and uniformly. Most participants completed the entire survey without any technical complications.

Those who did encounter hurdles did so at specific slides. Nearly a quarter of the participants either expressed or exhibited some difficulty operating the “Moving Thumb” function the first time it was presented. It should be noted, though, that only two required assistance from the interviewer, and all participants showed clear comprehension by the third time the thumb appeared as a response mode. For the “Pick an Icon” function, there was some uncertainty about whether a given screen allowed single or multiple responses, and the rule was perceived as somewhat arbitrary. More explicit instructions within the prompt could likely resolve this.

Overall, the use of pictorial displays in lieu of text may have contributed to the consistency of comprehension across age, ethnic background, and ESL status. All groups were equally able to manipulate the tablet interface, and proficiency with the thumb function did not divide along any particular demographic line. While one slide did confuse younger participants, this confusion stemmed from what they interpreted as ambiguous question phrasing rather than from the nature of the response mode.

Our study does, however, suggest some caution regarding the blending of graphical displays and corresponding text in response options. Respondents may interpret the interplay between symbol and word in divergent ways, which can influence the validity of a survey’s results. In our study, some participants responded to the “Moving Thumb” according to its specific text, while others said they focused on the generally understood notion of thumbs up, down, and neutral. Both the appearance and the descriptor terms of the emoticons were mentioned in the interviews. Even so, there was general consensus that the text appropriately matched the icons.

Limitations

This study focused primarily on participants’ understanding of the different response modes rather than on the validity of the health topics themselves. Although we evaluated all the types of icon-driven or gestural modes used in the standard TickiT health screening survey, this should not be considered a validation of the overall screening instrument content. We omitted 5 of 9 sections from our cognitive processing interviews: those about sexual behaviors, substance use, abuse and safety, physical activities, and emotional and mental health. Some of the questions in these topic areas are more sensitive than questions about nutrition and health self-care, at least for disclosure during an interview that asks participants to talk aloud about their responses, and we felt that including them would distract from the primary objective: to determine whether adolescents consistently understood the implied meaning of the icons or gestural response modes.

Our study relied on a convenience sample exclusively from the Vancouver metropolitan area in western Canada. Despite the inclusion of ESL participants from diverse cultural backgrounds, it is possible that rural or non-Canadian youth may interpret questions or response modes somewhat differently from our participants. Adolescents younger than fourteen years were not included in our sample, although they may be part of a youth clinic patient population.

Despite these limitations, the findings of our investigation are promising. The use of icon-based and interactive response options appears to be a relevant and engaging approach for obtaining data from adolescents. Further research is needed in other countries, with other languages, and with younger participants. However, among the latest digitally engaged generation of adolescents, updating health-screening tools with icon-based and interactive response modes appears to offer an acceptable (or even preferred) alternative to the standard paper form on a clipboard in the waiting room. Health care providers and future health screen developers should consider enhancing the appeal and effectiveness of the adolescent health screening process through more interactive or icon-driven response modes.

Acknowledgments

We would like to thank Christopher Drozda and Bea Miller of the Stigma and Resilience Among Vulnerable Youth Centre research team for their support and assistance. We would also like to acknowledge Dr. Sandy Whitehouse and Shift Health Paradigms, Inc., for their permission to use the TickiT™ screening tool for this study.

Source of Funding:

Funding was provided in part by the Child Family Research Institute at BC Children's Hospital and in part by grant #CPP 86374 from the Canadian Institutes of Health Research's Institute of Population and Public Health.

Footnotes

Conflicts of Interest:

The authors have no conflicts of interest to declare.

References

1. Ellis A. The validity of personality questionnaires. Psych Bulletin. 1946;43(5):385. doi: 10.1037/h0055483.

2. Joinson AN. Self-disclosure in computer mediated communication: The role of self-awareness and visual anonymity. European J Soc Psych. 2001;31(2):177–192.

3. Newman J, Des Jarlais DC, Turner CF, Gribble J, Cooley P, Paone D. The differential effects of face-to-face and computer interview modes. AJPH. 2002;92(2):294–297. doi: 10.2105/ajph.92.2.294.

4. Kissinger P, et al. Application of computer-assisted interviews to sexual behavior research. Am J Epidemiol. 1999;149(10):950–954. doi: 10.1093/oxfordjournals.aje.a009739.

5. Suler J. The online disinhibition effect. Cyberpsych & Behav. 2004;7(3):321–326. doi: 10.1089/1094931041291295.

6. Vereecken CA, Maes L. Comparison of a computer-administered and paper-and-pencil-administered questionnaire on health and lifestyle behaviors. J Adol Health. 2006;38:426–432. doi: 10.1016/j.jadohealth.2004.10.010.

7. Salgado JF, Moscoso S. Internet-based personality testing: Equivalence of measures and assessees' perceptions and reactions. Intl J Select Assess. 2003;11(2–3):194–205.

8. Synodinos NE, Brennan JM. Computer interactive interviewing in survey research. Psychol & Market. 1988;5(2):117–137.

9. Snyder DK. Computer-assisted judgment: Defining strengths and liabilities. Psychol Assess. 2000;12(1):52–60. doi: 10.1037//1040-3590.12.1.52.

10. Olson AL, et al. Changing adolescent health behaviors: The healthy teens counseling approach. Am J Prev Med. 2008;35(5):S359–S364. doi: 10.1016/j.amepre.2008.08.014.

11. Ahmad F, Hogg-Johnson S, Skinner HA. Assessing patient attitudes to computerized screening in primary care: psychometric properties of the computerized lifestyle assessment scale. J Med Internet Res. 2008;10(2):e11. doi: 10.2196/jmir.955.

12. Rhodes KV, et al. Better health while you wait: a controlled trial of a computer-based intervention for screening and health promotion in the emergency department. Ann Emerg Med. 2001;37(3):284–291. doi: 10.1067/mem.2001.110818.

13. Denny SJ, et al. Hand-held internet tablets for school-based data collection. BMC Res Notes. 2008;1(1):52. doi: 10.1186/1756-0500-1-52.

14. Abernethy AP, et al. Improving health care efficiency and quality using tablet personal computers to collect research quality, patient reported data. Health Serv Res. 2008;43(6):1975–1991. doi: 10.1111/j.1475-6773.2008.00887.x.

15. Borzekowski DL. Considering children and health literacy: A theoretical approach. Peds. 2009;124(Supp3):S282–S288. doi: 10.1542/peds.2009-1162D.

16. Jaspers MWM. A comparison of usability methods for testing interactive health technologies: Methodological aspects and empirical evidence. Intl J Med Informatics. 2009;78:340–353. doi: 10.1016/j.ijmedinf.2008.10.002.

17. Ericsson KA, Simon HA. Verbal reports as data. Psych Rev. 1980;87(3):215–251.

18. Knafl K, et al. The analysis and interpretation of cognitive interviews for instrument development. RINAH. 2007;30:224–234. doi: 10.1002/nur.20195.

19. Nielsen J, Clemmensen T, Yssing C. Getting access to what goes on in people's heads? Reflections on the think-aloud technique. NordiCHI. 2002 Oct:101–110.

20. Whitehouse SR, et al. Co-Creation with TickiT: Designing and evaluating a clinical eHealth platform for youth. JMIR Res Protocol. 2013;2(2):e42. doi: 10.2196/resprot.2865.

21. Lam PY, et al. To pilot the use of the Adolescent Screening Questionnaire (ASQ) in a Canadian Children's Hospital and Exploring Outcomes in Referral Pathways. J Adol Hlth. 2012;50(2):S81.

22. Greig A. Preventive health care visits for children and adolescents aged 6 to 17 years: The Greig Health Record – Technical Report. Paed Child Hlth. 2010;15(3):157–159. doi: 10.1093/pch/15.3.157.

23. Montalto NJ. Implementing the guidelines for adolescent preventive services. Amer Fam Phys. 1998;57:2181–2191.

24. Bagshaw S. Report on the HEADSS assessment on YR 9 and YR 10 students at Linwood College. 2006. Available at http://www.laneresearch.co.nz/files//LANE-chapter8.pdf. Accessed 8-01-2013.

25. Brown JD, Wissow LS. Discussion of sensitive health topics with youth during primary care visits: relationship to youth perceptions of care. J Adol Hlth. 2009;44(1):48–54. doi: 10.1016/j.jadohealth.2008.06.018.

26. Richman W, et al. A meta-analytic study of social desirability distortion in computer-administered questionnaires, traditional questionnaires, and interviews. J Appl Psychol. 1999;84(5):754–775.