Abstract

Objective

We investigated the factors influencing speech perception in babble for 5-year-old children with hearing loss who were using hearing aids (HAs) or cochlear implants (CIs).

Design

Speech reception thresholds (SRTs) for 50% correct identification were measured in two conditions: speech collocated with babble, and speech with spatially separated babble. The difference in SRTs between the two conditions gives a measure of binaural unmasking, commonly known as spatial release from masking (SRM). Multiple linear regression analyses were conducted to examine the influence of a range of demographic factors on outcomes.

Study sample

Participants were 252 children enrolled in the Longitudinal Outcomes of Children with Hearing Impairment (LOCHI) study.

Results

Children using HAs or CIs required a better signal-to-noise ratio to achieve the same level of performance as their normal-hearing peers, but demonstrated SRM of a similar magnitude. For children using HAs, speech perception was significantly influenced by cognitive and language abilities. For children using CIs, age at CI activation and language ability were significant predictors of speech perception outcomes.

Conclusions

Speech perception in children with hearing loss can be enhanced by improving their language abilities. Early age at cochlear implantation was also associated with better outcomes.

Keywords: children, speech perception in noise, hearing aids, audibility, cochlear implants, predictors of speech perception

Introduction

The presence of permanent childhood hearing loss (PCHL) has a negative impact on children’s speech and language development. Acquisition of speech perception relies on access to relevant acoustic and linguistic information early in life (Kuhl, 2000), oftentimes in complex situations where target speech occurs with competing sounds (Barker & Newman, 2004). In typically developing children and adults, it has been observed that speech perception in noise improves when the target source is spatially separated from competing sounds, an advantage commonly referred to as ‘spatial release from masking’ or SRM (Akeroyd, 2006). This improvement has been partly attributed to mechanisms relating to spatial hearing, known to reach maturity in early childhood in typically developing children (Garadat & Litovsky, 2007, Ching et al., 2011b). Little is known about how young children with hearing loss use spatial cues to resolve auditory information in complex environments – a feat that underpins learning in real-world environments.

With the advent of universal newborn hearing screening (UNHS) and advances in technology, early fitting of hearing aids (HAs) or cochlear implants (CIs) to children with PCHL is possible. The influence of age at intervention (defined in this paper as age at HA fitting or cochlear implantation) on the developmental time course over which children learn to use spatial cues is unknown. As speech perception is a major outcome measure of the effectiveness of hearing devices for children with PCHL, a better understanding of the factors influencing speech perception in noise and SRM in these children would contribute to devising evidence-based strategies for optimising outcomes. In this article, we analysed how age at intervention, together with a comprehensive range of language, cognitive and demographic characteristics, influenced speech perception in noise and SRM in a large group of 5-year-old children using HAs or CIs who participated in the Longitudinal Outcomes of Children with Hearing Impairment (LOCHI) study.

Previous studies have shown that many children with PCHL experienced significant difficulties listening to speech in real-world environments (Ching et al., 2010, Scollie et al., 2010). They required a more favourable signal-to-noise ratio (SNR) than their peers with normal hearing (NH) to achieve a comparable level of speech perception performance (e.g. Gravel et al., 1999, Hicks & Tharpe, 2002). The deficit was more pronounced when the competing noise was complex, such as speech babble produced by one or more talkers (e.g. Leibold et al, 2013; Hillock-Dunn et al, 2015). This suggests that assessing perception of speech in babble better approximates listening in real-world environments than evaluating perception of speech in quiet or in steady-state noise maskers.

Studies of adult speech perception in simulated complex auditory environments have shown that SRM draws on monaural ‘head-shadow’ cues (listening with the ear that has the better SNR, or better-ear effect), binaural summation (redundancy from receiving information with two ears), and the squelch effect (binaural integration of the inputs to the two ears using inter-aural time and level difference cues, or binaural unmasking) (for a summary, see Dillon, 2012). In the current literature, SRM has been quantified using listening conditions that contain either a single competing source at one location (so that listeners rely mainly on head shadow cues), or competing sounds distributed simultaneously across multiple locations (so that head shadow cues are minimised and listeners must rely on binaural cues). SRM is generally expressed as the difference in speech reception threshold (SRT), or in percent correct score, between the condition in which target speech and competing sounds are collocated and the condition in which they are spatially separated.

Children with normal hearing (NH) demonstrated the use of spatial hearing by about 4 years of age when asked to identify spondaic words in 2-talker babble presented from a location that was either the same as target speech at 0° azimuth, or separated from target speech at 90° azimuth to one ear (Garadat & Litovsky, 2007, Johnstone & Litovsky, 2006). However, this ability has not been observed in school-aged children with hearing loss who were using bilateral HAs (Nittrouer et al., 2013). Nittrouer et al (2013) assessed word perception of 48 NH children and 18 children with hearing loss using HAs at a mean age of 9 years by presenting words in steady-state noise, with the noise located either at 0° azimuth with target speech (S0N0), or laterally to one side at 90° azimuth (S0N90). For both test conditions, the mean scores of children with NH were significantly higher than those of children with HAs. On average, spatial advantages in terms of SRM were observed in NH children, but not in children using HAs.

Other studies that focussed on the use of binaural processing in SRM have minimised head shadow effects by presenting competing babble on both sides of the head in the spatially separated condition (S0N±90). Ching et al (2011b) evaluated 31 NH children and 27 children with hearing loss using HAs, aged between 3.5 and 11.8 years. On average, there were no significant between-group differences in SRTs when speech was presented in collocated babble at 0° azimuth (S0N0). However, children with HAs performed significantly more poorly than children with NH when competing babble was presented simultaneously from ±90° azimuth (S0N±90). On average, children with NH obtained an SRM of 3 dB, whereas children with HAs did not demonstrate an ability to make use of inter-aural cues for binaural unmasking.

Many previous studies have investigated speech perception in noise and SRM in children using CIs. In studies that focused on head shadow effects by comparing performance in the S0N0 and S0N90 conditions, SRM was generally observed when noise was presented near the ear with ‘poorer’ functional hearing, viz. the ear that received the second CI in children with bilateral CIs, or the ear with an HA in children using a CI and an HA in opposite ears (bimodal fitting). Litovsky et al (2006) compared the performance of children aged between 3 and 14 years who used either bilateral CIs or bimodal fitting. Spondaic words were presented from a loudspeaker in front at 0° azimuth, and competing 2-talker babble was presented either from the front or from one side of the listener (S0N90). On average, there were no significant between-group differences in SRTs for word recognition or in SRM. However, SRM was observed only when competing babble was moved to the side of the second CI (for users of bilateral CIs) or of the HA (for users of bimodal fitting), and not when babble was located on the side of the first CI. Chadha et al (2011) reported similar findings for 12 children who received bilateral CIs sequentially. Van Deun et al (2010) used a similar test paradigm to assess 8 children (aged 5 to 13 years) using bilateral CIs, and found that performance was better when they listened with both CIs than with one, and that SRM was larger when the competing sound was located near the second CI than when it was near the first CI. Misurelli and Litovsky (2012) showed that 6- to 8-year-old children with bilateral CIs required a more favourable SNR than children with NH to achieve 80% correct on a spondaic word test in competing babble, and also exhibited smaller SRMs than children with NH.
In a similar vein, Nittrouer et al (2013) showed that children with bilateral CIs had poorer word scores in steady-state noise than children with NH, and smaller SRMs. There were no significant differences in SRM between children who used unilateral CIs (n = 12) and those who used bilateral CIs (n = 25). More recently, Galvin et al (2017) investigated binaural benefit in 20 children aged between 2.5 and 6 years by comparing detection thresholds for a /baba/ stimulus presented in broadband noise, and likewise found no significant difference in performance between unilateral and bilateral CI conditions.

Other studies have examined the use of both head shadow and binaural cues for speech perception in noise by comparing performance in the S0N0 and the S0N±90 conditions. Whereas NH children showed SRM, demonstrating the ability to use binaural cues at a very young age (Ching et al., 2011b, Misurelli & Litovsky, 2012), school-aged children with bilateral CIs did not (Misurelli & Litovsky, 2012). It has been suggested that children who received simultaneous CIs are likely to develop better skills in using binaural information than those who received their two CIs sequentially (Chadha et al., 2011).

It should not be surprising that measurements of SRM differ according to whether, in the spatially separated condition, competing sounds were presented laterally to one ear only, i.e., either +90° or −90° azimuth; or to both ears, i.e., at ± 90° azimuth (Misurelli & Litovsky, 2012). When competing sounds were presented laterally to one ear only (S0N90), SRM can be achieved by relying mainly on the use of head shadow effects. By selectively attending to the ear with a better SNR and/or aided hearing, listeners demonstrated SRM when competing sounds were presented to the second CI (presumably the poorer ear) but not the first CI for those using bilateral CIs, or to the side of the HA for those using bimodal fitting. When competing sounds were presented on both sides (S0N±90), the overall level at the two eardrums (and both sides of the head) would be about 3 dB greater than if the sources were both at 0° azimuth because the increase at the near ear more than offsets the decrease at the far ear (Festen & Plomp, 1986). Hence, the listener has to rely on binaural processing to partially suppress noise masking for SRM to occur in the S0N±90 condition, but on head shadow cues in the S0N90 condition. As the ability to use binaural cues relies on early stimulation of both ears for the complex auditory neural networks to develop (Moore & Linthicum Jr, 2007), we hypothesized that children who received earlier intervention (fitting of HAs or CIs) would achieve better SRTs and SRMs than those who received later intervention.

In one study that investigated the effect of early intervention on speech perception in noise for children with PCHL, Sininger et al (2010) showed that earlier HA fitting was associated with better speech perception in babble for children aged between 3 and 9 years (28 using HAs, 16 using CIs) whose hearing loss ranged from mild to profound. However, having a CI was linked to poorer speech perception in babble. It is not clear whether this rather unexpected finding could be explained by the duration of CI use (e.g. Killan et al, 2015) or by the relatively late age at cochlear implantation (range: 12.8 to 76.5 months). Several studies have investigated the effect of age at implantation on speech perception in noise for children using CIs, with some reporting a significant effect (e.g. Sarant et al, 2001) and others not (Geers et al., 2003, Nittrouer et al., 2013). Most previous studies, however, included children who were identified before UNHS was implemented and who therefore received intervention later than current standards recommend (JCIH, 2007). The effect of early intervention on speech perception therefore remains to be investigated in the current population of children with PCHL.

A range of factors have also been posited in the literature to contribute to speech perception in noise in children with hearing loss, although the findings were not consistent across studies. Language ability has been associated with speech perception in children using HAs (e.g. McCreery et al., 2015b), but other studies have not found a consistent link (e.g. Eisenberg et al, 2000; Nittrouer et al, 2013). McCreery et al (2015) reported on perception of monosyllabic words in steady-state noise presented at 0° azimuth in 129 children aged between 7 and 9 years, when listening with their HAs or when they were unaided. It was found that on average, higher correct scores were correlated with aiding, more favourable SNR, higher receptive vocabulary, higher aided audibility, higher maternal education level, and higher scores on a test of phonological working memory. On the other hand, Nittrouer et al (2013) did not find a significant effect of language ability on speech perception in noise. Cognitive ability or IQ was a significant factor influencing speech perception of children using CIs (e.g. Geers et al, 2003), but there is also evidence to show that IQ was no longer significant after accounting for the effects of language ability (Sarant et al., 2010). Degree of hearing loss has also been found to be negatively correlated with speech perception ability in noise in some studies (e.g. Blamey et al, 2001), but not others (Sininger et al., 2010, Sarant et al., 2010).

Potential contributing factors influencing speech perception of children using CIs also included communication mode – children who normally communicate using speech scored higher than those who use sign and speech (Sarant et al., 2001); and type of CI speech processor – newer processors performed better (Davidson et al., 2011).

As previous studies have included children spanning a wide age range, age has been found to be a significant factor influencing speech perception: children assessed at an older age typically performed better (higher percent correct scores, or a less favourable SNR needed to reach a criterion level of performance) than those assessed at a younger age (Elliott, 1979, Eisenberg et al., 2000, Fallon et al., 2000, Talarico et al., 2006). Most previous studies have excluded children with additional disabilities or from homes with lower socio-economic status, thereby limiting the applicability of their findings to the wider population of children with hearing loss. However, many children with PCHL have other conditions, including additional disabilities (about 20–40%; Ching et al., 2013b, Gallaudet Research Institute, 2011) and auditory neuropathy spectrum disorder (ANSD; about 10% of children with hearing loss; Ching et al., 2013b), and demographic characteristics are known to influence learning and development. It is therefore important to study population-based cohorts with sufficient statistical power to account for potential confounders when examining the effect of timing of intervention on speech perception development.

Rationale

In the current study, we addressed the question of whether early intervention improves speech perception in noise by drawing on a population cohort that comprised children with PCHL diagnosed via either UNHS or standard care, enrolled in the LOCHI study. We aimed to increase knowledge about listening-in-noise abilities and SRM at 5 years of age, in children using HAs or CIs; and identify factors influencing these outcomes with the ultimate goal of deriving strategies to improve outcomes.

Two research questions were addressed.

How do age at intervention, aided audibility, cognitive, language, and demographic factors influence speech perception ability in children using HAs?

How do age at implantation, cognitive, language, and demographic factors influence speech perception ability in children using CIs?

Method

Participants

Participants were children with PCHL born between April 2002 and August 2007 in the states of New South Wales, Victoria and Queensland in Australia. All children had access to consistent, high-quality post-diagnostic audiological services and technology provided by a government-funded national service provider, Australian Hearing (AH). However, their hearing loss was detected either via UNHS or via standard care, depending on whether UNHS was operating in the state where they were born. Children who presented for services at AH before 3 years of age were invited to participate in the LOCHI study. This study was approved by an institutional human research ethics committee.

Children using HAs: fitting and verification

Hearing assessment, HA fitting and verification, and on-going evaluation and adjustments were carried out by AH paediatric audiologists in accordance with the AH national paediatric amplification protocol (King, 2010). A real-ear-aided gain approach (Ching & Dillon, 2003, Bagatto et al., 2005) was used for fitting. This involved using either individually measured real-ear-to-coupler differences (RECDs) or age-appropriate values to derive gain targets in an HA2–2cc coupler, using the standalone prescriptive software of either the National Acoustic Laboratories (NAL; Byrne et al, 2001; Dillon et al, 2011) or the Desired Sensation Level (DSL; Seewald et al, 1997; Scollie et al, 2005) method. The LOCHI study included a randomised controlled trial of hearing aid prescription (Ching et al., 2013a), in which participants were randomly assigned to fitting with either the NAL or the DSL prescription. Hearing aids were adjusted in an HA2–2cc coupler, using a broad-band speech-weighted stimulus generated by the Aurical or Med-Rx system to measure gain-frequency responses at low, medium and high input levels, and a swept pure tone at 90 dB SPL to measure maximum power output. Verification was achieved by comparing the measured 2cc coupler gain and output with custom target values. Details of the proximity of hearing aid fitting to prescriptive targets are reported in a companion article (Ching et al., this issue). In brief, the root-mean-square (rms) error for fitting to targets across the range from 0.5 to 4 kHz was within 3 dB at the medium input level.
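The rms fitting error summarises, in a single dB value, how far the measured coupler gain deviates from the prescriptive target across frequency. A minimal sketch follows, assuming one value per octave frequency from 0.5 to 4 kHz; the gain values are hypothetical and the function name is illustrative, not part of any fitting software.

```python
import math


def rms_error_db(measured_db, target_db):
    """Root-mean-square deviation (dB) between measured and target gain.

    Each list holds one value per frequency (assumed here: 0.5, 1, 2, 4 kHz).
    """
    if len(measured_db) != len(target_db) or not measured_db:
        raise ValueError("need equal-length, non-empty lists")
    squared = [(m - t) ** 2 for m, t in zip(measured_db, target_db)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical 2cc coupler gains (dB) at 0.5, 1, 2 and 4 kHz for one child
measured = [20.0, 25.0, 30.0, 35.0]
target = [22.0, 24.0, 33.0, 35.0]
error = rms_error_db(measured, target)
print(f"rms error = {error:.2f} dB; within 3 dB: {error <= 3.0}")
```

Squaring before averaging means that one large miss (here 3 dB at 2 kHz) weighs more than several small ones.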

Information about hearing threshold levels and hearing aid characteristics that was current within 6 months of evaluation was retrieved for each child from individual clinical files held at AH hearing centres.

Children using HAs: Calculation of aided audibility

Aided audibility was calculated using the standard method for calculating the Speech Intelligibility Index (SII; ANSI S3.5, 1997), as well as a modification that included a hearing loss desensitization factor (Ching et al., 2013c, Johnson & Dillon, 2011). The latter accounts for the reduction in the amount of speech information that can be extracted from an audible signal as hearing loss increases (Ching et al., 2011a). The SII represents the proportion of the long-term average speech spectrum that is above the listener’s hearing threshold or the noise level, whichever is higher, weighted by the relative importance of each frequency region for speech intelligibility. The importance function for average speech was used (Pavlovic, 1994). The SII was calculated for both the unaided and the aided conditions. To estimate whether, for a given degree of hearing loss, aided audibility that was independent of unaided SII (i.e., the unique effect of aided hearing) was associated with speech perception performance, we calculated a residualised SII (rSII), as proposed by Tomblin et al (2014). The rSII was derived by first regressing aided SII on unaided SII using the quadratic function that provided the best fit, and then taking the difference between the observed aided SII and the aided SII predicted by that fit (see Appendix 1). The rSII gives a ‘normalised HA gain measure where the magnitude of the gain is relative to the gain obtained by children with similar unaided hearing’ (Tomblin et al., 2015).
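The two audibility measures described above can be sketched as follows. This is a simplified band-audibility form of the SII, not a full ANSI S3.5 implementation: it assumes speech peaks 15 dB above the long-term level with a 30-dB dynamic range, treats all levels as band levels on a common dB scale, and omits level distortion, spread of masking and the desensitization modification. The four bands, equal importance weights and cohort values are hypothetical (the study used the Pavlovic, 1994, average-speech importance function). The rSII helper follows the quadratic residualisation described in the text.

```python
import numpy as np


def simplified_sii(speech_db, threshold_db, noise_db, importance):
    """Band-audibility SII: sum over bands of importance * audibility.

    Audibility per band = clip((speech + 15 - max(threshold, noise)) / 30, 0, 1).
    Simplification: no level distortion or hearing-loss desensitization.
    """
    speech = np.asarray(speech_db, dtype=float)
    disturbance = np.maximum(np.asarray(threshold_db, dtype=float),
                             np.asarray(noise_db, dtype=float))
    audibility = np.clip((speech + 15.0 - disturbance) / 30.0, 0.0, 1.0)
    return float(np.sum(np.asarray(importance, dtype=float) * audibility))


def residualised_sii(unaided_sii, aided_sii):
    """rSII: observed aided SII minus aided SII predicted from unaided SII."""
    unaided = np.asarray(unaided_sii, dtype=float)
    aided = np.asarray(aided_sii, dtype=float)
    coeffs = np.polyfit(unaided, aided, deg=2)   # aided ~ a*u^2 + b*u + c
    predicted = np.polyval(coeffs, unaided)
    return aided - predicted                     # > 0: more audibility than peers


# Hypothetical four-band example with equal importance weights
sii = simplified_sii(speech_db=[50, 50, 45, 40],
                     threshold_db=[30, 40, 60, 70],
                     noise_db=[0, 0, 0, 0],
                     importance=[0.25, 0.25, 0.25, 0.25])
print(f"SII = {sii:.3f}")  # SII = 0.458

# Hypothetical cohort lying on a quadratic, except child 4 who has extra gain
unaided = np.linspace(0.0, 0.8, 9)
aided = 0.3 + 0.9 * unaided - 0.4 * unaided ** 2
aided[4] += 0.05
rsii = residualised_sii(unaided, aided)
print(np.round(rsii, 3))
```

Because the quadratic fit includes an intercept, the residuals sum to zero across the cohort, so a child's rSII is read relative to children with similar unaided audibility.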

Cochlear Implants

All children used a Nucleus implant manufactured by Cochlear Limited (Sydney, Australia) (CI24R, CI24RE, CI512 or CI513), with either a straight or a perimodiolar electrode array. All children had their CI sound processors (CP810, Freedom or ESPrit 3G) programmed by their case managers at cochlear implant centres. A companion article provides detailed information about parameter settings (Incerti et al., this issue).

Assessing speech perception

Because the sample under study spanned a wide range of language and cognitive abilities, and in line with common practice (e.g. Geers et al, 2003; McCreery et al, 2015), we selected tests with stimuli of low and high linguistic demand and with response formats ranging from pointing to a picture in response to a stimulus word to verbally repeating a stimulus sentence.

The Northwestern University Children’s Perception of Speech Test (NU-CHIPS, Elliott & Katz, 1980)

This closed-set task requires a picture-pointing response. For each stimulus word, the child is shown a response plate with four pictures depicting words that share the same or a similar vowel sound but differ in their consonants. The test comprises 4 forms of 50 words each.

The Bamford–Kowal–Bench (BKB)–like sentence test material (Bench & Doyle, 1979, Bench & Bamford, 1979)

This open-set task requires a child to repeat as much of the stimulus sentence as possible. Eighty lists of 16 simple sentences, including 50 keywords in each list, were devised to include vocabulary, grammar and sentence length appropriate to the linguistic abilities of young children.

Digital recordings of the test material produced by native Australian English speakers were used as stimuli, and speech babble was used as the competing sound to approximate listening in real-world situations. All testing was carried out in a sound-treated booth. The stimuli were presented in the free field from an array of three loudspeakers, driven by an audiometer whose output channels were connected to amplifiers. The loudspeakers were located 0.75 m from the subject position, at 0° and at ±90° azimuth. Before testing, the height of the chair at the subject position was adjusted so that the centre of the loudspeakers was approximately aligned with the child’s ear level. Speech perception was tested in two listening conditions. In the S0N0 condition, target speech and competing 8-talker babble were presented from the same loudspeaker at 0° azimuth. In the S0N±90 condition, target speech was presented from the front at 0° azimuth, and uncorrelated 4-talker babble was presented simultaneously from each of the two loudspeakers on either side of the subject (effectively 8-talker babble). Target speech and competing babble were calibrated at the test position with the child absent, using a sound level meter.

Each child completed either the NU-CHIPs or the BKB sentence test, depending on the abilities of the child as judged by speech pathologists who completed language assessments. Test conditions and test lists were counterbalanced across participants.

Procedure

The children wore their HAs or CIs at their personal settings. The hearing devices were checked to ensure that they were functioning normally. All noise management features were deactivated, and microphones were set to omnidirectional mode. Prior to testing with the NU-CHIPs material, children were familiarised with all the test items and associated pictures. For both tests, children were given practice before testing. During testing, the research audiologists monitored the child closely and provided verbal encouragement, but did not provide feedback about the correctness of response.

An adaptive procedure was used to measure the SRT for 50% correct, in terms of SNR (Mackie & Dermody, 1986). The level of the babble was varied according to whether a child identified a word correctly (NU-CHIPs) or repeated more than half of the keywords in a sentence correctly (2 out of 3 keywords in a BKB sentence). The test began at an SNR of 10 dB, which was adjusted in 5-dB steps until 2 reversals had occurred, after which the step size was reduced to 1 dB. The test stopped once 12 reversals had been obtained with the 1-dB step size (typically requiring 2 lists). The SRT was the mean of the midpoints of the last 10 reversals, expressed as an SNR. The standard error of the mean for each SRT was within 1 dB. The SRM was calculated as the difference in SRTs between the two test conditions (SRM = S0N0 – S0N±90), expressed in dB. A positive value indicates an advantage when listening to speech in spatially separated babble compared with collocated babble.
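The adaptive track described above can be sketched as a one-down/one-up staircase, which converges on the 50%-correct point. This is an illustrative sketch, not the test software used in the study. Assumptions: a correct response lowers the SNR (equivalent to raising the babble level), a "midpoint" is taken as the mean of each pair of consecutive reversal SNRs, and the noise-free `hypothetical_listener` stands in for a child's responses.

```python
from typing import Callable, List


def adaptive_srt(respond: Callable[[float], bool],
                 start_snr: float = 10.0,
                 big_step: float = 5.0,
                 small_step: float = 1.0,
                 n_big_reversals: int = 2,
                 n_small_reversals: int = 12,
                 n_average: int = 10) -> float:
    """Estimate the 50%-correct SRT (dB SNR) with a 1-down/1-up staircase."""
    snr = start_snr
    step = big_step
    fine = False                # True once the step has been reduced to 1 dB
    last_direction = 0          # 0 before first trial; -1 = SNR down, +1 = SNR up
    big_reversals = 0
    small_reversals: List[float] = []
    while len(small_reversals) < n_small_reversals:
        direction = -1 if respond(snr) else +1   # correct -> harder (lower SNR)
        if last_direction != 0 and direction != last_direction:
            if fine:
                small_reversals.append(snr)      # reversal with the small step
            else:
                big_reversals += 1
                if big_reversals == n_big_reversals:
                    fine, step = True, small_step
        last_direction = direction
        snr += direction * step
    last = small_reversals[-n_average:]
    # Midpoint between each pair of consecutive reversal levels
    midpoints = [(a + b) / 2 for a, b in zip(last[:-1], last[1:])]
    return sum(midpoints) / len(midpoints)


def hypothetical_listener(true_srt: float) -> Callable[[float], bool]:
    """Noise-free listener: correct whenever SNR >= true_srt (illustration only)."""
    return lambda snr: snr >= true_srt


srt_collocated = adaptive_srt(hypothetical_listener(-3.5))   # S0N0
srt_separated = adaptive_srt(hypothetical_listener(-6.5))    # S0N±90
srm = srt_collocated - srt_separated                         # positive = spatial advantage
print(srt_collocated, srt_separated, srm)  # -3.5 -6.5 3.0
```

With a real child the responses are probabilistic, so the reversals scatter around the threshold rather than alternating between two levels; averaging the last reversals is what reduces that variability.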

Other Measures

As part of the children’s 5-year-old assessment battery, research speech pathologists directly assessed each child’s receptive and expressive language, using the Preschool Language Scale, 4th edition (PLS-4; Zimmerman et al., 2002), and nonverbal cognitive ability, using the Wechsler Nonverbal Scale of Ability (WNV; Wechsler & Naglieri, 2006). These tests have been widely used to assess children with hearing loss. The PLS-4 is a standardised test of spoken English. Its verbal tasks enable children to demonstrate understanding and production of English language structures, including semantics, morphology, syntax and phonology. It yields a total language score and two subscale scores: expressive communication and auditory comprehension. The WNV is a standardised test of nonverbal cognitive ability that yields a full-scale IQ score.

Parents were asked to complete demographic questionnaires to provide information regarding children’s birth weight, diagnosed disabilities in addition to hearing loss, communication mode used at home and during early intervention (speech vs sign and speech), and parents’ level of education.

Statistical analysis

The statistical analysis was done using Statistica v.10 (StatSoft Inc., 2011) and SPSS for Windows v.16 (SPSS Inc., 2007). All analyses used two-tailed tests, with statistical significance set at p < 0.05. Children who were deemed unable to complete speech perception testing were assigned the poorest score observed in the sample (n = 67, 26.6%). Missing values in variables were handled by multiple imputation (Rubin, 1987), and the analyses were averaged over 10 imputations.
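Averaging over imputations is usually done with Rubin's (1987) rules: the pooled estimate is the mean across imputed data sets, and its variance adds the average within-imputation variance to an inflated between-imputation variance. The text does not state how standard errors were combined, so the sketch below shows the standard rules under that assumption; the three-imputation numbers are hypothetical (the study used 10).

```python
import statistics


def pool_rubin(estimates, variances):
    """Pool one coefficient across m imputed data sets with Rubin's rules.

    estimates: the coefficient estimated in each imputed data set
    variances: the squared standard error of that estimate in each data set
    Returns (pooled estimate, total variance).
    """
    m = len(estimates)
    if m < 2 or len(variances) != m:
        raise ValueError("need at least two imputations with matching variances")
    q_bar = statistics.fmean(estimates)        # pooled point estimate
    within = statistics.fmean(variances)       # mean within-imputation variance
    between = statistics.variance(estimates)   # between-imputation variance (m - 1 denominator)
    total = within + (1 + 1 / m) * between
    return q_bar, total


# Hypothetical coefficient estimated in three imputed data sets
estimate, total_variance = pool_rubin([1.0, 2.0, 3.0], [0.5, 0.5, 0.5])
print(estimate, round(total_variance, 4))  # 2.0 1.8333
```

The (1 + 1/m) factor penalises using a finite number of imputations; with 10 imputations the inflation of the between-imputation term is 10%.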

Descriptive statistics were used to report quantitative outcomes. Analysis of variance (ANOVA), with repeated measures where appropriate, was used to test for differences within participants (test condition: S0N0 vs S0N±90) and between groups (device group: HA vs CI).

To investigate which variables, including age at intervention, influence speech perception outcomes in terms of SRTs and SRMs, multiple linear regression models were fitted separately to data from children who were using HAs and those who were using CIs at five years of age. Two dependent variables were used: SRTs averaged across both test conditions, and SRMs. For children who had an SRT for only one of the two test conditions (S0N0 or S0N±90), the missing value was predicted from a simple linear regression of S0N±90 on S0N0, fitted separately for each of the two tests. In addition, linear regression models derived from a previously reported data set (Ching et al, 2011b), in which children were assessed on both the NU-CHIPs and the BKB tests using a procedure similar to that of this study, were used to predict BKB scores from NU-CHIPs scores and vice versa for participants in this study. These were averaged to give the SRTs used as a dependent variable in the multiple regression analyses. The SRM was calculated only for participants who had an SRT for both test conditions, as the averaged SRT for S0N0 minus the averaged SRT for S0N±90.
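The within-test steps above (filling one missing condition, averaging across conditions, and computing SRM only for complete cases) can be sketched as follows. Assumptions: a missing S0N0 value is filled from a separate regression in the opposite direction, which the text does not specify, and the SRT values are hypothetical. The cross-test BKB/NU-CHIPs prediction is omitted because its coefficients come from Ching et al (2011b) and are not given here.

```python
import numpy as np


def fill_and_summarise(s0n0, s0n90):
    """Fill a single missing condition by linear regression, then summarise.

    s0n0, s0n90: SRTs (dB SNR) from one test; np.nan marks a missing condition.
    Returns (mean SRT across the two conditions, SRM = S0N0 - S0N±90).
    SRM is left as nan unless both conditions were measured.
    """
    x = np.asarray(s0n0, dtype=float)
    y = np.asarray(s0n90, dtype=float)
    both = ~np.isnan(x) & ~np.isnan(y)
    # Regression of S0N±90 on S0N0, fitted on children with both conditions
    b1, b0 = np.polyfit(x[both], y[both], deg=1)
    # Assumption: a separate reverse regression fills a missing S0N0
    c1, c0 = np.polyfit(y[both], x[both], deg=1)
    y_filled = np.where(np.isnan(y), b0 + b1 * x, y)
    x_filled = np.where(np.isnan(x), c0 + c1 * y, x)
    mean_srt = (x_filled + y_filled) / 2
    srm = np.where(both, x - y, np.nan)
    return mean_srt, srm


# Hypothetical SRTs (dB SNR); child 5 lacks S0N±90, child 6 lacks S0N0
s0n0 = [0.0, 2.0, 4.0, 6.0, 3.0, np.nan]
s0n90 = [-3.0, -1.0, 1.0, 3.0, np.nan, 0.0]
mean_srt, srm = fill_and_summarise(s0n0, s0n90)
print(mean_srt)
print(srm)
```

Filled values feed only the averaged SRT; SRM is never computed from an imputed condition, matching the rule that SRM requires both measured SRTs.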

For each dependent variable, two models were fitted for the CI group, and three models were fitted for the HA group. In the first model, the predictor variables common to both the HA and the CI models included nonverbal cognitive ability (WNV standard score) as a continuous variable, and additional disabilities (presence or absence), ANSD (presence or absence), maternal education (3 categories: university degree, vocational training certificate, ≤12 years of school), and communication mode during early educational intervention (speech only vs combined [speech and sign]) as categorical variables. The HA model also included age at fitting of first HA and four-frequency-average hearing loss (4FAHL, averaged hearing levels at 0.5, 1, 2 and 4 kHz in the better ear) as continuous variables. The CI model also included age at activation of first CI as a continuous variable. In the second model for both device groups, language ability (PLS-4 total language scores) as a continuous variable was added. In the third model for the HA group, the additional predictor was aided audibility (rSII) as a continuous variable. This approach allowed an investigation of the contributions of language to speech perception, after allowing for the effects of other cognitive and demographic characteristics. The third model enabled an investigation of the unique contribution of aided audibility to speech perception after accounting for the effects of other variables.
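The nested-model approach above (adding language, and then aided audibility, to a base model) can be illustrated by comparing R² between nested ordinary least-squares fits, with categorical predictors such as maternal education entered as indicator (dummy) variables against a reference level. This is a sketch on synthetic data, not LOCHI data and not the Statistica or SPSS procedures used in the study; the variable names, effect sizes and sample size are hypothetical, and only a subset of the listed predictors is included.

```python
import numpy as np


def r_squared(predictors, outcome):
    """R^2 of an ordinary least-squares fit with an intercept."""
    design = np.column_stack([np.ones(len(outcome)), predictors])
    beta, *_ = np.linalg.lstsq(design, outcome, rcond=None)
    residuals = outcome - design @ beta
    total = np.sum((outcome - outcome.mean()) ** 2)
    return 1.0 - float(residuals @ residuals) / float(total)


def dummy_code(values, levels):
    """0/1 indicator columns for every level except levels[0] (the reference)."""
    values = np.asarray(values)
    return np.column_stack([(values == level).astype(float) for level in levels[1:]])


# Synthetic data for illustration only
rng = np.random.default_rng(0)
n = 60
wnv = rng.normal(100, 15, n)                   # nonverbal IQ (standard score)
education = rng.choice(["university", "vocational", "school"], size=n)
language = rng.normal(85, 20, n)               # PLS-4 total standard score
srt = 5 - 0.02 * wnv - 0.05 * language + rng.normal(0, 1, n)

levels = ["university", "vocational", "school"]
model1 = np.column_stack([wnv, dummy_code(education, levels)])
model2 = np.column_stack([model1, language])    # model 1 plus language
r2_model1 = r_squared(model1, srt)
r2_model2 = r_squared(model2, srt)
delta_r2 = r2_model2 - r2_model1
print(f"R2 model 1 = {r2_model1:.3f}, model 2 = {r2_model2:.3f}, change = {delta_r2:.3f}")
```

Because model 2 contains every column of model 1, its R² cannot be lower; the increase is the share of variance attributable to language after allowing for the other predictors.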

Results

Table 1 shows the demographic characteristics of children whose results are reported here. The current analysis included data from 252 children, 168 of whom were using HAs and 84 of whom were using CIs at 5 years of age.

Table 1.

Demographic characteristics of participants.

| Characteristic | Hearing aid (HA) (n = 168) | Cochlear implant (CI) (n = 84) |
|---|---|---|
| Gender (male), No. (percentage) | 100 (59.5%) | 35 (41.7%) |
| Hearing loss (4FA HL in better ear), No. (percentage) | | |
| Mild (≤ 40 dB) | 49 (29.2%) | NA |
| Moderate (41–60 dB) | 86 (51.2%) | NA |
| Severe (61–80 dB) | 30 (17.9%) | 7 (8.3%) |
| Profound (> 80 dB) | 3 (1.8%) | 77 (91.7%) |
| Additional disabilities (AD), No. (percentage) | | |
| Presence of AD (without ANSD) | 55 (32.7%) | 26 (31.0%) |
| Presence of ANSD only | 8 (4.8%) | 5 (6.0%) |
| Presence of AD and ANSD | 6 (3.6%) | 6 (7.1%) |
| Nonverbal cognitive ability (WNV standard score) | | |
| Mean (SD) | 104.4 (16.3) | 102.0 (14.1) |
| Median | 105.0 | 102.0 |
| Interquartile range (IQR) | 93.8–117.0 | 93.0–111.5 |
| Missing data (n) | 32 | 23 |
| Age at hearing aid fitting (months) | | |
| Mean (SD) | 10.6 (9.9) | 5.7 (6.2) |
| Median | 6.0 | 2.5 |
| IQR | 2.0–18.0 | 1.8–7.3 |
| Age at cochlear implantation (months) | | |
| Mean (SD) | NA | 16.3 (7.4) |
| Median | NA | 14.0 |
| IQR | NA | 10.0–22.0 |
| Hearing device, No. (percentage) | | |
| Hearing aid, bilateral | 157 (93.5%) | NA |
| Hearing aid, unilateral | 11 (6.5%) | NA |
| Cochlear implant, bilateral | NA | 56 (66.7%) |
| Cochlear implant, unilateral plus hearing aid | NA | 16 (19.0%) |
| Cochlear implant, unilateral only | NA | 12 (14.3%) |
| Maternal education, No. (percentage) | | |
| University qualification | 63 (41.7%) | 34 (47.2%) |
| Vocational training certificate | 33 (21.9%) | 16 (22.2%) |
| 12 years or less of schooling | 55 (36.4%) | 22 (30.6%) |
| Missing data (n) | 17 | 12 |
| Communication mode in early intervention, No. (percentage) | | |
| Oral only | 125 (80.6%) | 51 (69.9%) |
| Combined (sign and speech) | 30 (19.4%) | 22 (30.1%) |
| Missing data (n) | 13 | 11 |
| Language score (PLS-4 total standard score) | | |
| Mean (SD) | 85.7 (19.9) | 78.2 (23.2) |
| Median | 88.0 | 79.0 |
| IQR | 71.0–101.0 | 52.5–94.8 |
| Missing data (n) | 32 | 25 |
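The percentages for maternal education and communication mode in Table 1 appear to use only children with available data as the denominator. This is an inference from the counts, not a statement made in the table. The hypothetical helper below reproduces several Table 1 entries under that assumption.

```python
def percent_of_available(count, group_n, missing):
    """Percentage of a category, using only children with data as the denominator."""
    return round(100 * count / (group_n - missing), 1)


# Maternal education, university qualification (Table 1)
print(percent_of_available(63, 168, 17))   # 41.7 (HA group)
print(percent_of_available(34, 84, 12))    # 47.2 (CI group)
# Communication mode, oral only (Table 1)
print(percent_of_available(125, 168, 13))  # 80.6 (HA group)
print(percent_of_available(51, 84, 11))    # 69.9 (CI group)
# Using the whole group as denominator would give a different figure:
print(round(100 * 63 / 168, 1))            # 37.5
```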

In the HA group, 98 children participated in the randomised controlled trial of hearing aid prescription (50 were assigned to fitting with the NAL prescription and 48 to the DSL prescription). A preliminary repeated-measures ANOVA used SRTs in both test conditions as the dependent variable, prescription (NAL vs DSL) as a between-subjects factor, and test condition (S0N0 vs S0N±90) as a within-subjects factor. It revealed a significant main effect of test condition (F(1,96) = 52.14, p < 0.0001), but no significant main effect of prescription (p = 0.96) and no significant interaction (p = 0.09). Subsequent analyses therefore combined results from all children with HAs, regardless of the prescription used for fitting.

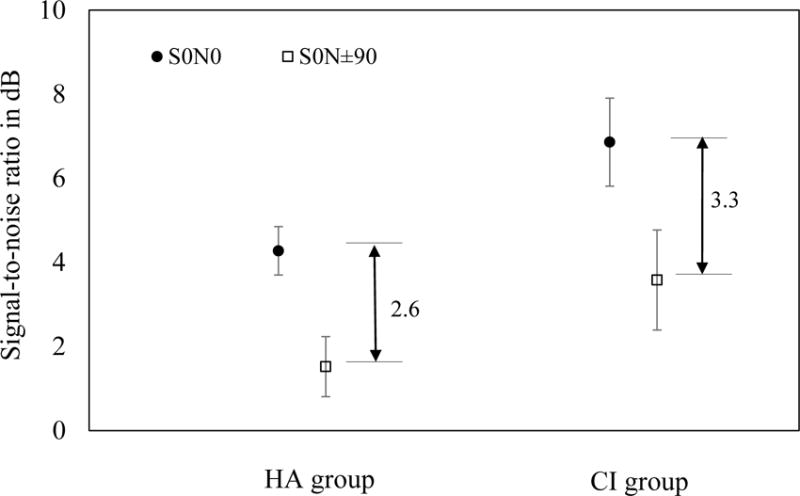

SRTs and SRMs for children using HAs or CIs

Figure 1 shows the mean SRTs for the S0N0 and S0N±90 conditions in children using HAs or CIs. On average, children using CIs required an SNR about 2 dB higher than children using HAs to achieve the same level of speech perception. A repeated-measures ANOVA showed significant main effects of device group (F(1,173) = 21.81, p < 0.0001) and test condition (F(1,173) = 90.05, p < 0.0001), with no significant interaction (p = 0.29).

Figure 1.

Mean SRTs (dB SNR) in the S0N0 (filled circles) and S0N±90 (open squares) conditions for children using hearing aids (HA group) and children using cochlear implants (CI group). The vertical bars denote 95% confidence intervals.

The mean SRMs were 2.6 dB (SD 3.5) and 3.3 dB (SD 4.2) for the HA and CI groups respectively. ANOVA showed that the between-group difference was not significant (F(1,173) = 1.12, p = 0.29).
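SRM is defined as the difference between the SRTs in the two conditions. A positive value means the child benefited from spatial separation of speech and babble. The sketch below shows the per-child calculation and the group summary (mean and sample SD). The SRT values are hypothetical and are not taken from the study.

```python
from statistics import mean, stdev


def spatial_release(srt_colocated, srt_separated):
    """SRM in dB: SRT with collocated babble (S0N0) minus SRT with separated babble (S0N±90)."""
    return srt_colocated - srt_separated


# Hypothetical SRTs in dB SNR for three children: (S0N0, S0N±90)
srts = [(6.0, 3.0), (5.5, 3.5), (7.0, 3.0)]
srms = [spatial_release(a, b) for a, b in srts]
print(srms)                     # [3.0, 2.0, 4.0]
print(mean(srms), stdev(srms))  # 3.0 1.0
```

Because lower SRTs indicate better performance, subtracting the separated-condition SRT from the collocated-condition SRT keeps the benefit positive.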

Factors that influenced outcomes of children using HAs

Table 2 shows the results of the multiple linear regression analyses. In the first model, the full set of predictors accounted for 41% of the total variance in SRTs (adjusted R²). Nonverbal cognitive ability was the only significant predictor (B = −0.12, 95% confidence interval [95% CInt]: −0.16 to −0.09). Adding language ability in the second model accounted for an additional 13% of variance (B = −0.11, 95% CInt: −0.14 to −0.07), and this model accounted for 54% of the total variance. Nonverbal cognitive ability remained significant in this model (B = −0.05, 95% CInt: −0.09 to −0.02). Including rSII in the third model did not explain additional variance after allowing for demographic, cognitive and language factors. Similar results were obtained when the analyses were repeated with rSII derived from the desensitized SII, which incorporates a hearing loss desensitization factor (see Appendix 1).

Table 2.

Effect size (unstandardized coefficient estimates B-values), 95% confidence intervals (95% CInt), and significance levels (p-values) of predictor variables for SRT outcomes of children with HAs (n=144). The rSII calculation was based on the American National Standards Institute (ANSI) S3.5 (1997) method.

| Predictor | Model 1 B | Model 1 95% CInt | Model 1 p | Model 2 B | Model 2 95% CInt | Model 2 p | Model 3 B | Model 3 95% CInt | Model 3 p |
|---|---|---|---|---|---|---|---|---|---|
| Age at hearing aid fitting | 0.07 | −0.60, 0.74 | 0.85 | −0.01 | −0.60, 0.58 | 0.92 | −0.02 | −0.61, 0.57 | 0.91 |
| Additional disabilities (AD) | 1.05 | −0.60, 2.70 | 0.24 | 0.35 | −1.12, 1.82 | 0.59 | 0.49 | −1.00, 1.97 | 0.51 |
| Maternal education | −0.28 | −1.03, 0.48 | 0.47 | 0.29 | −0.39, 0.98 | 0.41 | 0.24 | −0.45, 0.93 | 0.50 |
| Communication mode in early intervention | 0.51 | −0.25, 1.27 | 0.26 | 0.31 | −0.37, 0.98 | 0.46 | 0.32 | −0.36, 0.99 | 0.45 |
| ANSD | 0.75 | −1.55, 3.06 | 0.53 | 0.83 | −1.20, 2.86 | 0.43 | 0.81 | −1.22, 2.83 | 0.44 |
| 4FA HL in better ear | 0.02 | −0.02, 0.07 | 0.33 | 0.02 | −0.02, 0.07 | 0.30 | 0.02 | −0.02, 0.07 | 0.34 |
| Nonverbal cognitive ability | −0.12 | −0.16, −0.09 | 0.00* | −0.05 | −0.09, −0.02 | 0.01* | −0.05 | −0.09, −0.02 | 0.01* |
| PLS-4 averaged score from PLS_AC and PLS_EC | – | – | – | −0.11 | −0.14, −0.07 | 0.00* | −0.10 | −0.14, −0.07 | 0.00* |
| rSII | – | – | – | – | – | – | −4.11 | −10.86, 2.65 | 0.23 |
| Adjusted R² | 0.41 | | | 0.54 | | | 0.54 | | |
| R² change | 0.44 | | | 0.13 | | | 0.00 | | |

Asterisks depict significance at 0.05 probability level.

The analyses were repeated with SRM as the dependent variable, but the models did not account for significant variance in scores.

Factors that influenced outcomes of children using CIs

Table 3 shows the results of multiple linear regression analyses with SRT as the dependent variable. In the first model, age at CI activation, maternal education and nonverbal cognitive ability significantly influenced outcomes, and the model accounted for 46% of the total variance in scores. In the second model, language ability accounted for an additional 8% of variance (p < 0.001), and the full model explained 54% of the total variance. In this model, only age at CI activation and language ability remained significant predictors of SRT outcomes.

Table 3.

Effect size (unstandardized coefficient estimates, B-values), 95% confidence intervals (95% CInt), and significance levels (p-values) of predictor variables for SRT outcomes of children with CIs (n = 80).

| Predictor | Model 1 B | Model 1 95% CInt | Model 1 p | Model 2 B | Model 2 95% CInt | Model 2 p |
|---|---|---|---|---|---|---|
| Age at cochlear implantation | 3.27 | 1.26, 5.27 | 0.00* | 2.47 | 0.57, 4.38 | 0.01* |
| Additional disabilities (AD) | 2.47 | 0.00, 4.95 | 0.06 | 0.86 | −1.60, 3.31 | 0.52 |
| Maternal education | −1.15 | −2.25, −0.06 | 0.04* | −0.86 | −1.89, 0.17 | 0.10 |
| Communication mode in early intervention | −0.36 | −1.52, 0.79 | 0.58 | −0.01 | −1.10, 1.07 | 0.68 |
| ANSD | 1.79 | −0.85, 4.42 | 0.18 | 1.63 | −0.81, 4.06 | 0.19 |
| Nonverbal cognitive ability | −0.09 | −0.13, −0.04 | 0.00* | −0.04 | −0.09, 0.01 | 0.13 |
| PLS-4 averaged score from PLS_AC and PLS_EC | – | – | – | −0.07 | −0.11, −0.03 | 0.00* |
| Adjusted R² | 0.46 | | | 0.54 | | |
| R² change | 0.50 | | | 0.08 | | |

Asterisks depict significance at 0.05 probability level.

The analyses were repeated with SRM as the dependent variable, but the models did not account for significant variance in scores.
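The adjusted R² rows in Tables 2 and 3 can be cross-checked with the standard formula, adjusted R² = 1 − (1 − R²)(n − 1)/(n − p − 1). This sketch rests on three assumptions that the tables do not state directly. First, each model's R² is the running sum of the "R² change" row. Second, n is 144 (HA) and 80 (CI), as given in the captions. Third, p counts each predictor row once; maternal education is treated as a single term because a single coefficient is reported for it. Under these assumptions, the reported adjusted R² values are reproduced to two decimals.

```python
def adjusted_r2(r2, n, p):
    """Adjusted R² for n observations and p predictors (intercept not counted)."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


# Assumed: R² per model = cumulative sum of the "R² change" row.
table2 = {"Model 1": (0.44, 7), "Model 2": (0.44 + 0.13, 8), "Model 3": (0.44 + 0.13 + 0.00, 9)}
table3 = {"Model 1": (0.50, 6), "Model 2": (0.50 + 0.08, 7)}

for label, (r2, p) in table2.items():
    print("Table 2", label, round(adjusted_r2(r2, 144, p), 2))
for label, (r2, p) in table3.items():
    print("Table 3", label, round(adjusted_r2(r2, 80, p), 2))
```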

Discussion

We report an investigation of speech perception in noise in a large sample of 5-year-old children with hearing loss, of whom 168 were using HAs and 84 were using CIs. We aimed to describe their performance and explore the factors contributing to the outcomes.

Outcomes of children with PCHL

We found that 5-year-old children with PCHL required, on average, an SNR of about 4.0 to 6.9 dB to achieve 50% correct speech perception in babble, compared with about −1.2 dB in children with typical hearing on a similar task (Ching et al., 2011b). This is consistent with findings reported for children using HAs (e.g. McCreery et al, 2015) and those using CIs (Misurelli & Litovsky, 2012, Nittrouer et al., 2013): children with hearing loss require a higher SNR than their age-matched peers with normal hearing to achieve similar levels of speech perception in noise.

Despite absolute differences in mean SRTs, the mean SRM obtained by children with PCHL in the current study was of a similar magnitude to that reported for typically developing children (Ching et al., 2011b), whether they used HAs or CIs. This finding suggests that the children with PCHL were able to use spatial and binaural cues for speech perception in noise, as typically developing children do. This contrasts with previous studies that showed no observable or much reduced SRM in children using HAs (Nittrouer et al., 2013, Ching et al., 2011b) or CIs (Nittrouer et al., 2013, Misurelli & Litovsky, 2012). The cohorts in previous studies included children who received late intervention by current standards (JCIH, 2007), so the SRM observed in the present cohort may be related to their earlier age at fitting of hearing devices and enrolment in educational intervention. Early auditory stimulation underpins the development of binaural hearing skills (Gordon et al., 2015) and language development (Moeller, 2011), and better language has been associated with better speech perception (e.g. Vance et al, 2009).

Factors that influenced performance: children using HAs

We found that nonverbal IQ and language abilities were significant predictors of speech perception in babble in children using HAs, and the effect size of language ability was almost double that of nonverbal IQ. This finding supports a focus on language development in early intervention.
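Both predictors are standard scores, so their coefficients are on a comparable scale. Converting them to the predicted SRT change for a 1-SD difference makes the comparison concrete. This sketch uses the Model 2 coefficients from Table 2 and assumes the normative SD of 15 for both the PLS-4 and WNV standard scores, rather than the sample SDs in Table 1. Negative values mean lower, better SRTs.

```python
# Model 2 coefficients for the HA group (Table 2): dB change in SRT per standard-score point.
B_LANGUAGE = -0.11
B_NONVERBAL = -0.05
NORM_SD = 15  # assumed: both standard scores normed to mean 100, SD 15


def srt_change_per_sd(b, sd=NORM_SD):
    """Predicted SRT change (dB) for a 1-SD higher score, other predictors held constant."""
    return b * sd


print(round(srt_change_per_sd(B_LANGUAGE), 2))   # -1.65
print(round(srt_change_per_sd(B_NONVERBAL), 2))  # -0.75
```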

We found that the presence of additional disabilities or ANSD did not have a significant effect on speech perception outcomes, although the effects were in the expected direction, viz, presence of the condition was associated with poorer outcomes.

In contrast to a previous study that showed a significant effect of aided audibility on speech perception (McCreery et al., 2015), we found that rSII did not explain additional variance in speech perception after allowing for the effects of other demographic, cognitive and language characteristics. The earlier study did not control for the dependence of aided audibility on hearing threshold level. Its HA fittings also deviated considerably from prescriptive targets (McCreery et al., 2013), which increased variability in aided audibility. Both factors may have confounded the investigation of the effect of aided audibility on speech perception. The present study quantified aided audibility independently of hearing threshold level, using the residualised SII proposed by Tomblin et al (2014). In the present cohort, HAs were fitted close to prescriptive targets (rms error for fit to target within 3 dB; see Ching et al, this issue). The absence of a significant rSII effect in this cohort reinforces the value of matching validated prescriptive targets as closely as possible using real-ear measures in clinical practice (Bagatto et al., 2005).

Factors that influenced performance: children using CIs

We found a significant effect of age at CI activation on speech perception in babble at 5 years of age, with earlier activation associated with lower (better) SRTs. The effect size was 2.47 (95% CInt: 0.57 to 4.38), a large effect of the order of about 1 SD. The present findings provide strong evidence for the effectiveness of early implantation in improving speech perception outcomes. We also found that better language ability was associated with better speech perception, with an effect size of −0.07 (95% CInt: −0.11 to −0.03). After allowing for the effect of language ability, nonverbal IQ was no longer significant. This is consistent with findings reported by Sarant et al (2010), and supports the interpretation that IQ influences speech perception through its effect on language outcomes. Similarly, maternal education was no longer significant after language ability was added as a predictor.

Consistent with the findings in children with HAs, we found that the presence of additional disabilities and the presence of ANSD were not significant predictors of speech perception outcomes in children using CIs, after allowing for the effects of other demographic characteristics.

Overall findings and Clinical implications

The current study on speech perception in noise emphasises the importance of considering how children function in real-world environments when providing intervention. Not only is it crucial to provide cochlear implantation early, but children's language development must also be a focus of educational intervention. In children using either HAs or CIs, language ability was a significant predictor of speech perception in noise. This is consistent with previous studies indicating an association between speech perception and language ability (Blamey et al, 2001; Davidson et al, 2011). Specific phonological skills have also been associated with children's speech perception (Nittrouer et al., 2013, McCreery et al., 2015). Although we did not investigate the effect of phonological awareness in this study, our earlier reports indicated that many children with PCHL exhibit deficits in phonological awareness at 5 years of age, whether they use HAs or CIs (Ching & Cupples, 2015).

The present findings suggest that optimising HA fitting and ensuring early intervention contribute to improving outcomes.

Limitations, Strengths, and Future Directions

Although there was clear evidence of SRM in children with hearing loss, our regression models that included demographic, cognitive and language characteristics did not account for significant variance in SRM. Future investigations into factors influencing SRM, possibly including objective physiological measures, may shed light on the mechanisms underlying its development.

In children using CIs, device configuration (bilateral CIs or bimodal fitting) has been associated with differences in speech perception performance in some studies (Mok et al., 2007) but not others (Litovsky et al., 2006). Analysis of this effect is beyond the scope of the current study but will be investigated in future work. Previous studies on speech perception with CIs have also found that performance improved with listening experience with the CIs (e.g. Dowell et al, 2002; Geers et al, 2003; Davidson et al, 2011). Furthermore, speech perception in noise has been shown to improve with age, which has been attributed to the utilisation of sensory information and to linguistic and cognitive developmental factors (Eisenberg et al., 2000). This longitudinal study will investigate the development of speech perception in noise when the children reach their next assessment point at 9 years of age.

This study found an association between speech perception ability and language ability. The current literature is inconsistent with regard to the equivalent language age at which this association reaches an asymptote. Some studies suggest a language age of 7 years (Blamey et al., 2001, Blamey & Sarant, 2002) and others 10 years (Davidson et al., 2011). Future follow-up of the present cohort will allow an investigation of this question.

A major strength of the study lies in its population base, which allows the findings to be generalised to the population of children with hearing loss. Data were collected by audiologists who were not involved in the children's clinical care and who were blinded, to the extent possible, to the severity of hearing loss, screening status, and age at intervention. Previous studies assessed children across a wide range of ages at intervention and at assessment, and showed an effect of chronological age. In contrast, this study involved direct assessments of a cohort of children at 5–6 years of age whose hearing loss was identified early and who had access to the same post-diagnostic intervention services. The longitudinal design of the study allows future investigation of the development of speech perception abilities and the factors that may influence it.

Conclusions

Children with hearing loss required a better (higher) signal-to-noise ratio than their normal-hearing peers to achieve the same level of performance. However, they demonstrated binaural unmasking of a similar magnitude, regardless of whether they were using HAs or CIs. Children's speech perception skills can be enhanced by improving their language abilities. Early age at cochlear implantation was also associated with better outcomes.

Acknowledgments

We gratefully thank all the children, their families and their teachers for participation in this study. We are also indebted to the many persons who served as clinicians for the study participants or assisted in other clinical or administrative capacities at Australian Hearing, Catherine Sullivan Centre, Hear and Say Centre, National Acoustic Laboratories, Royal Institute for Deaf and Blind Children, Royal Victorian Eye and Ear Hospital Cochlear Implant Centre, the Shepherd Centre, and the Sydney Cochlear Implant Centre. We also thank Mark Seeto for his support in statistical analysis, and Kirsty Gardner-Berry for her input to this paper. We also thank Angela Wong for input and assistance with data collection.

Sources of funding

This work was partly supported by the National Institute on Deafness and Other Communication Disorders (Award Number R01DC008080). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute on Deafness and Other Communication Disorders or the National Institutes of Health.

The project was also partly supported by the Commonwealth of Australia through the Office of Hearing Services. We acknowledge the financial support of the HEARing CRC, established and supported under the Cooperative Research Centres Program of the Australian Government. We also acknowledge the support provided by New South Wales Department of Health, Australia; Phonak Ltd; and the Oticon Foundation.

Abbreviations

- 4FAHL: Four-frequency average hearing loss (averaged hearing loss at 0.5, 1, 2, and 4 kHz)

- AH: Australian Hearing

- ANSI SII: Speech Intelligibility Index (SII) calculated with the American National Standards Institute (ANSI) S3.5 (1997) method

- BKB: Bamford–Kowal–Bench (BKB) sentence test

- CIs: Cochlear implants

- HAs: Hearing aids

- ILTASS: International long-term average speech spectrum

- LOCHI: Longitudinal Outcomes of Children with Hearing Impairment

- NH: Normal hearing

- NU-CHIPS: Northwestern University Children's Perception of Speech

- PCHL: Permanent childhood hearing loss

- PEACH: Parents' Evaluation of Aural/Oral Performance of Children

- PLS-4: Preschool Language Scale, 4th edition

- RECD: Real-ear-to-coupler difference

- rSII: Residualised Speech Intelligibility Index (SII)

- SD: Standard deviation

- SII: Speech Intelligibility Index

- SNR: Signal-to-noise ratio

- SRM: Spatial release from masking

- SRT: Speech reception threshold

- WNV: Wechsler Nonverbal Scale of Ability

Appendix 1. Calculation of Speech Intelligibility Index (SII) and residualised SII (rSII)

Predicted speech intelligibility was calculated with the Speech Intelligibility Index (SII) model, using two approaches:

- The American National Standards Institute (ANSI) S3.5 (1997) method.

- The ANSI S3.5 method incorporating a hearing loss desensitization factor (Ching et al., 2013c), referred to in this article as the desensitized SII. This approach used the same transforms and steps as ANSI S3.5, with the addition of an empirically derived hearing loss desensitization factor (Ching et al., 2011a) that has been adopted in the NAL prescriptive method (Dillon et al., 2011).

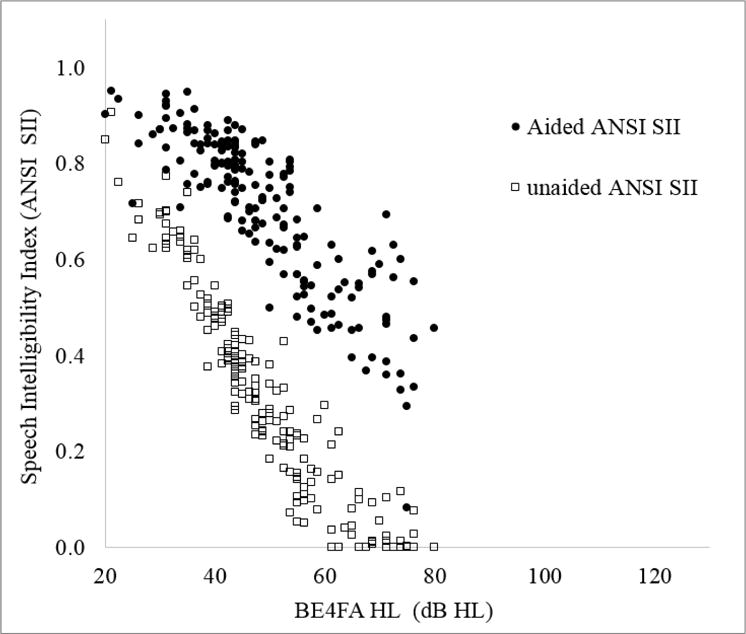

The SIIs were calculated for unaided and aided conditions using a third-octave band method. Unaided SII values were computed from a child's pure-tone hearing thresholds and the international long-term average speech spectrum (ILTASS; Byrne et al, 1994) at an overall level of 65 dB SPL. Aided SII values were calculated from the hearing thresholds and the real-ear aided responses (REAR). The REAR for each ear was calculated by adding two quantities to the third-octave band levels of the ILTASS at 65 dB SPL: the gain of the hearing aid measured in an HA-2 2-cc coupler, and the real-ear-to-coupler difference (RECD) of the ear. An average speech importance function (Pavlovic, 1994) was used to weight audibility across the frequency range from 160 to 8000 Hz. The weighted audibility was summed across bands to give an overall SII value between 0 and 1. A value of 0 indicates that no speech information was audible, and 1 indicates that all speech information was audible. The SIIs were calculated for the better ear of each child. eFigure 1 shows the unaided and aided SII as a function of hearing level in the better ear (BE4FA HL), expressed as the four-frequency-average pure-tone hearing loss (average of hearing levels at 0.5, 1, 2 and 4 kHz).
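The core of the calculation above is an importance-weighted sum of band audibility. In the aided case, the speech level in each band is the ILTASS band level plus coupler gain plus RECD. The following is a simplified sketch only. It uses the ANSI S3.5 band audibility form, in which speech is taken to span 30 dB, from 15 dB below to 15 dB above its long-term level. It omits level distortion, spread of masking, the conversion of thresholds from dB HL to dB SPL at the eardrum (thresholds are assumed to be in the same units as the speech levels), and the desensitization factor. The three bands and their importance weights are hypothetical placeholders, not the Pavlovic (1994) function.

```python
def band_audibility(speech_level_db, threshold_db):
    """Fraction of a band's 30-dB speech range lying above threshold, clipped to [0, 1]."""
    return min(1.0, max(0.0, (speech_level_db - threshold_db + 15.0) / 30.0))


def simplified_sii(speech_levels, thresholds, importance, gains=None, recds=None):
    """Importance-weighted sum of band audibility.

    For the aided case, the speech level at the eardrum (a REAR-like estimate)
    is taken as ILTASS band level + coupler gain + RECD in each band.
    """
    n = len(speech_levels)
    gains = gains or [0.0] * n
    recds = recds or [0.0] * n
    total = 0.0
    for s, t, w, g, r in zip(speech_levels, thresholds, importance, gains, recds):
        total += w * band_audibility(s + g + r, t)
    return total


# Hypothetical 3-band example (dB); importance weights sum to 1.
iltass = [50.0, 45.0, 40.0]
thresholds = [35.0, 45.0, 70.0]
weights = [0.3, 0.4, 0.3]
unaided = simplified_sii(iltass, thresholds, weights)
aided = simplified_sii(iltass, thresholds, weights, gains=[10.0, 10.0, 10.0], recds=[2.0, 3.0, 5.0])
print(round(unaided, 3), round(aided, 3))
```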

eFigure 1.

Unaided Speech Intelligibility Index (SII) (open squares) and aided SII (filled circles) as a function of four-frequency average hearing loss in the better ear (BE4FA HL).

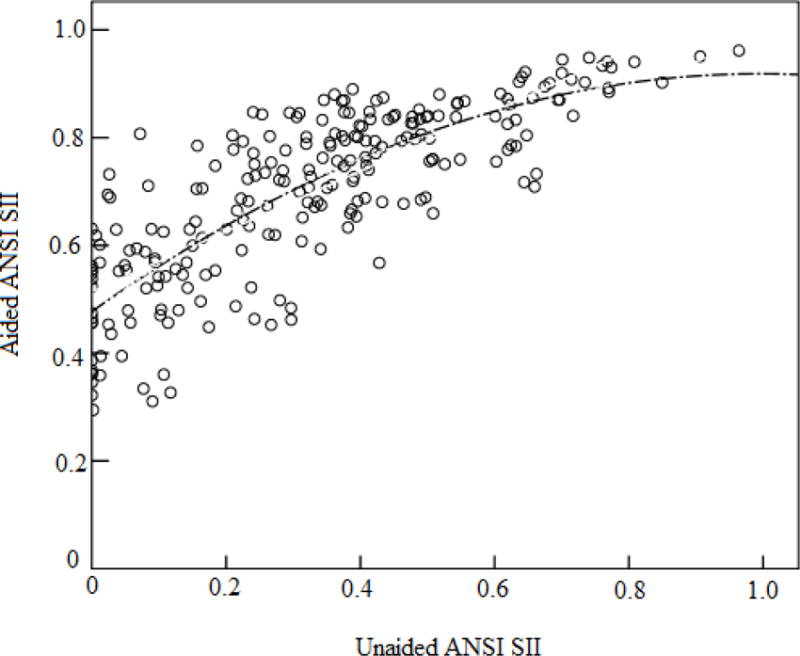

SIIs are necessarily related to hearing threshold level, and unaided and aided SIIs are strongly correlated. Tomblin et al (2014) therefore proposed a residualised SII (rSII) to quantify the unique contribution of aided hearing independent of hearing level. A linear regression method was used to remove the variance of unaided SII that was common to the aided SII. The rSII was then derived as the difference between the predicted aided SII and the observed aided SII. This study followed the same method, but used a quadratic function to characterise the relation between unaided and aided SII as a function of hearing loss (see eFigure 2).
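The residualisation step can be sketched as a least-squares quadratic fit of aided SII on unaided SII, followed by taking residuals. Two choices here are assumptions, not details confirmed by the text. The predictor is taken to be unaided SII, following the description of removing variance shared with unaided SII. The sign convention is taken to be observed minus predicted, so that a positive rSII means more aided audibility than expected. The function names and data are hypothetical.

```python
def _solve3(m, v):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [list(row) + [val] for row, val in zip(m, v)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        x[i] = (a[i][3] - sum(a[i][j] * x[j] for j in range(i + 1, 3))) / a[i][i]
    return x


def residualised_sii(unaided, aided):
    """Residuals of aided SII after a least-squares quadratic fit on unaided SII."""
    rows = [[1.0, u, u * u] for u in unaided]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, aided)) for i in range(3)]
    b0, b1, b2 = _solve3(xtx, xty)
    # Assumed sign convention: observed minus predicted aided SII.
    return [y - (b0 + b1 * u + b2 * u * u) for u, y in zip(unaided, aided)]


# Hypothetical SII values (0 to 1) for five children.
unaided = [0.0, 0.2, 0.4, 0.6, 0.8]
aided = [0.3 + 0.9 * u - 0.2 * u * u + d
         for u, d in zip(unaided, [0.05, -0.02, 0.0, 0.03, -0.01])]
print([round(r, 3) for r in residualised_sii(unaided, aided)])
```

Because the fit includes an intercept, the residuals sum to zero. The rSII therefore expresses each child's aided audibility relative to children with similar unaided audibility.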

eFigure 2.

Curve estimation regression (quadratic function) fitted to unaided and aided SII, calculated using the American National Standards Institute Speech Intelligibility Index model (ANSI SII) (American National Standards Institute, 1997).

Footnotes

Conflicts of interest

None were declared.

References

- Akeroyd MA. The psychoacoustics of binaural hearing. Int J Audiol. 2006;45:25–33. doi: 10.1080/14992020600782626.

- American National Standards Institute. Methods for calculation of the speech intelligibility index, (ANSI S3.5-1997) New York, USA: American National Standards Institute; 1997.

- Bagatto M, Moodie S, Scollie S, Seewald R, Pumford J, et al. Clinical protocols for hearing instrument fitting in the Desired Sensation Level method. Trends Amplif. 2005;9:199–226. doi: 10.1177/108471380500900404.

- Barker BA, Newman RS. Listen to your mother! The role of talker familiarity in infant streaming. Cognition. 2004;94:9. doi: 10.1016/j.cognition.2004.06.001.

- Bench J, Bamford J. Speech-hearing tests and the spoken language of hearing-impaired children. London: Academic Press; 1979.

- Bench J, Doyle J. The BKB/A (Bamford-Kowal-Bench/Australian version) sentence lists for hearing-impaired children. Victoria, Australia: La Trobe University; 1979.

- Blamey P, Sarant J. Speech perception and language criteria for paediatric cochlear implant candidature. Audiol Neurootol. 2002;7:114–121. doi: 10.1159/000057659.

- Blamey PJ, Sarant JZ, Paatsch LE, Barry JG, Bow CP, et al. Relationships among speech perception, production, language, hearing loss, and age in children with impaired hearing. J Speech Lang Hear Res. 2001;44:264–285. doi: 10.1044/1092-4388(2001/022).

- Byrne D, Dillon H, Ching TYC, Katsch R, Keidser G. NAL-NL1 procedure for fitting nonlinear hearing aids: Characteristics and comparisons with other procedures. J Am Acad Audiol. 2001;12:37–51.

- Chadha NK, Papsin BC, Jiwani S, Gordon KA. Speech detection in noise and spatial unmasking in children with simultaneous versus sequential bilateral cochlear implants. Otol Neurotol. 2011;32:1057–1064. doi: 10.1097/MAO.0b013e3182267de7.

- Ching TYC, Crowe K, Martin V, Day J, Mahler N, et al. Language development and everyday functioning of children with hearing loss assessed at 3 years of age. Int J Speech Lang Pathol. 2010;12:124–131. doi: 10.3109/17549500903577022.

- Ching TYC, Cupples L. Phonological awareness at 5 years of age in children who use hearing aids or cochlear implants. Perspect Hear Hear Disord Child. 2015;25:48–59. doi: 10.1044/hhdc25.2.48.

- Ching TYC, Dillon H. Prescribing amplification for children: Adult-equivalent hearing loss, real-ear aided gain, and NAL-NL1. Trends Amplif. 2003;7:1–9. doi: 10.1177/108471380300700102.

- Ching TYC, Dillon H, Hou S, Zhang V, Day J, et al. A randomised controlled comparison of NAL and DSL prescriptions for young children: Hearing aid characteristics and performance outcomes at 3 years of age. Int J Audiol. 2013a;52:17S–28S. doi: 10.3109/14992027.2012.705903.

- Ching TYC, Dillon H, Lockhart F, van Wanrooy E, Flax M. Audibility and speech intelligibility revisited: Implications for amplification. In: Dau T, Dalsgaard JC, Jepsen ML, Poulsen T, editors. International Symposium on Auditory and Audiological Research. Denmark: The Danavox Jubilee Foundation; 2011a. pp. 11–19.

- Ching TYC, Dillon H, Marnane V, Hou S, Day J, et al. Outcomes of early- and late-identified children with hearing loss at 3 years of age: Findings from a prospective population-based study. Ear Hear. 2013b;34:535–552. doi: 10.1097/AUD.0b013e3182857718.

- Ching TYC, Johnson EE, Hou S, Dillon H, Zhang V, et al. A comparison of NAL and DSL prescriptive methods for paediatric hearing aid fitting: Predicted speech intelligibility and loudness. Int J Audiol. 2013c;52:29S–38S. doi: 10.3109/14992027.2013.765041.

- Ching TYC, van Wanrooy E, Dillon H, Carter L. Spatial release from masking in normal-hearing children and children who use hearing aids. J Acoust Soc Am. 2011b;129:368–375. doi: 10.1121/1.3523295.

- Ching TYC, Zhang VW, Johnson EE, Van Buynder P, Hou S, et al. Hearing aid fitting and developmental outcomes of children fit according to either the NAL or DSL prescription: fit-to-target, audibility, speech and language abilities (submitted) Int J Audiol. doi: 10.1080/14992027.2017.1380851. this issue.

- Davidson LS, Geers AE, Blamey PJ, Tobey EA, Brenner CA. Factors contributing to speech perception scores in long-term pediatric cochlear implant users. Ear Hear. 2011;32:19S–26S. doi: 10.1097/AUD.0b013e3181ffdb8b.

- Dillon H. Binaural and bilateral considerations in hearing aid fitting. In: Dillon H, editor. Hearing Aids. 2nd. Sydney, Australia: Boomerang Press; 2012. pp. 430–468.

- Dillon H, Keidser G, Ching TYC, Flax M, Brewer S. The NAL-NL2 prescription procedure. Phonak Focus. 2011:1–10. doi: 10.4081/audiores.2011.e24.

- Dowell RC, Dettman SJ, Blamey PJ, Barker EJ, Clark GM. Speech perception in children using cochlear implants: Prediction of long‐term outcomes. Cochlear Implants Int. 2002;3:1–18. doi: 10.1179/cim.2002.3.1.1.

- Eisenberg LS, Shannon RV, Schaefer Martinez A, Wygonski J, Boothroyd A. Speech recognition with reduced spectral cues as a function of age. J Acoust Soc Am. 2000;107:2704–2710. doi: 10.1121/1.428656.

- Elliott L, Katz D. Northwestern University Children’s Perception of Speech (NU-CHIPS) St. Louis: Auditec; 1980.

- Elliott LL. Performance of children aged 9 to 17 years on a test of speech intelligibility in noise using sentence material with controlled word predictability. J Acoust Soc Am. 1979;66:651–3. doi: 10.1121/1.383691.

- Fallon M, Trehub SE, Schneider BA. Children’s perception of speech in multitalker babble. J Acoust Soc Am. 2000;108:3023–3029. doi: 10.1121/1.1323233.

- Festen JM, Plomp R. Speech reception threshold in noise with one and two hearing aids. J Acoust Soc Am. 1986;79:465–471. doi: 10.1121/1.393534.

- Gallaudet Research Institute. Regional and national summary report of data from the 2009–10 annual survey of deaf and hard of hearing children and youth. Washington, DC: Gallaudet Research Institute, Gallaudet University; 2011.

- Galvin KL, Dowell RC, van Hoesel RJ, Mok M. Speech detection in noise for young bilaterally implanted children: Is there evidence of binaural benefit over the shadowed ear alone? Ear Hear. 2017 doi: 10.1097/AUD.0000000000000442. Advance online publication.

- Garadat SN, Litovsky RY. Speech intelligibility in free field: Spatial unmasking in preschool children. J Acoust Soc Am. 2007;121:1047–1055. doi: 10.1121/1.2409863.

- Geers AE, Brenner C, Davidson L. Factors associated with development of speech perception skills in children implanted by age five. Ear Hear. 2003;24:24–35. doi: 10.1097/01.AUD.0000051687.99218.0F.

- Gordon K, Henkin Y, Kral A. Asymmetric hearing during development: The aural preference syndrome and treatment options. Pediatrics. 2015;136:141–153. doi: 10.1542/peds.2014-3520.

- Gravel JS, Fausel N, Liskow C, Chobot J. Children’s speech recognition in noise using omni-directional and dual-microphone hearing aid technology. Ear Hear. 1999;20:1–11. doi: 10.1097/00003446-199902000-00001.

- Hicks CB, Tharpe AM. Listening effort and fatigue in school-age children with and without hearing loss. J Speech Lang Hear Res. 2002;45:573–584. doi: 10.1044/1092-4388(2002/046).

- Hillock-Dunn A, Taylor C, Buss E, Leibold LJ. Assessing speech perception in children with hearing loss: What conventional clinical tools may miss. Ear Hear. 2015;36:e57–e60. doi: 10.1097/AUD.0000000000000110.

- Incerti P, Ching TYC, Hou S, Van Buynder P, Flynn L, et al. Cochlear implant programming characteristics (submitted) Int J Audiol. doi: 10.1080/14992027.2017.1370139. this issue.

- Johnson EE, Dillon H. A comparison of gain for adults from generic hearing aid prescriptive methods: Impacts on predicted loudness, frequency bandwidth, and speech intelligibility. J Am Acad Audiol. 2011;22:1–19. doi: 10.3766/jaaa.22.7.5.

- Johnstone PM, Litovsky RY. Effect of masker type and age on speech intelligibility and spatial release from masking in children and adults. J Acoust Soc Am. 2006;120:2177–2189. doi: 10.1121/1.2225416.

- Joint Committee on Infant Hearing (JCIH) Year 2007 position statement: Principles and guidelines for early hearing detection and intervention programs. Pediatrics. 2007;120:898–921. doi: 10.1542/peds.2007-2333.

- Killan CF, Killan EC, Raine CH. Changes in children’s speech discrimination and spatial release from masking between 2 and 4 years after sequential cochlear implantation. Cochlear Implants Int. 2015;16:270–276. doi: 10.1179/1754762815Y.0000000001.

- King AM. The national protocol for paediatric amplification in Australia. Int J Audiol. 2010;49:64S–69S. doi: 10.3109/14992020903329422.

- Kuhl PK. A new view of language acquisition. Proc Natl Acad Sci USA. 2000;97:11850–11857. doi: 10.1073/pnas.97.22.11850.

- Leibold LJ, Hillock-Dunn A, Duncan N, Roush PA, Buss E. Influence of hearing loss on children’s identification of spondee words in a speech-shaped noise or a two-talker masker. Ear Hear. 2013;34:575–584. doi: 10.1097/AUD.0b013e3182857742.

- Litovsky RY, Johnstone PM, Godar SP. Benefits of bilateral cochlear implants and/or hearing aids in children. Int J Audiol. 2006;45:78S–91S. doi: 10.1080/14992020600782956.

- Mackie K, Dermody P. Use of a monosyllabic adaptive speech test (MAST) with young children. J Speech Hear Res. 1986;29:275–281. doi: 10.1044/jshr.2902.275.

- McCreery RW, Bentler RA, Roush PA. Characteristics of hearing aid fittings in infants and young children. Ear Hear. 2013;34:701–710. doi: 10.1097/AUD.0b013e31828f1033.

- McCreery RW, Walker EA, Spratford M, Oleson J, Bentler R, et al. Speech recognition and parent ratings from auditory development questionnaires in children who are hard of hearing. Ear Hear. 2015;36:60S–75S. doi: 10.1097/AUD.0000000000000213.

- Misurelli SM, Litovsky RY. Spatial release from masking in children with normal hearing and with bilateral cochlear implants: Effect of interferer asymmetry. J Acoust Soc Am. 2012;132:380–391. doi: 10.1121/1.4725760.

- Moeller MP. Language development: New insights and persistent puzzles. Sem Hear. 2011;32:172–181.

- Mok M, Galvin KL, Dowell RC, McKay CM. Spatial unmasking and binaural advantage for children with normal hearing, a cochlear implant and a hearing aid, and bilateral implants. Audiol Neurootol. 2007;12:295–306. doi: 10.1159/000103210.

- Moore JK, Linthicum FH Jr. The human auditory system: A timeline of development. Int J Audiol. 2007;46:460–478. doi: 10.1080/14992020701383019.

- Nittrouer S, Caldwell-Tarr A, Tarr E, Lowenstein JH, Rice C, et al. Improving speech-in-noise recognition for children with hearing loss: Potential effects of language abilities, binaural summation, and head shadow. Int J Audiol. 2013;52:513–525. doi: 10.3109/14992027.2013.792957.

- Pavlovic CV. Band importance functions for audiological applications. Ear Hear. 1994;15:100–104. doi: 10.1097/00003446-199402000-00012.

- Rubin DB. Multiple imputation for nonresponse in surveys. New York, NY: Wiley; 1987.

- Sarant JZ, Blamey PJ, Dowell RC, Clark GM, Gibson WPR. Variation in speech perception scores among children with cochlear implants. Ear Hear. 2001;22:18–28. doi: 10.1097/00003446-200102000-00003.

- Sarant JZ, Hughes K, Blamey PJ. The effect of IQ on spoken language and speech perception development in children with impaired hearing. Cochlear Implants Int. 2010;11:370–374. doi: 10.1179/146701010X12671177990037.

- Scollie SD, Ching TYC, Seewald RC, Dillon H, Britton L, et al. Children’s speech perception and loudness ratings when fitted with hearing aids using the DSL v.4.1 and the NAL-NL1 prescriptions. Int J Audiol. 2010;49:26S–34S. doi: 10.3109/14992020903121159.

- Scollie SD, Seewald RC, Cornelisse LE, Moodie S, Bagatto M, et al. The desired sensation level multistage input/output algorithm. Trends Amplif. 2005;9:39. doi: 10.1177/108471380500900403.

- Seewald R, Cornelisse LE, Ramji KV. DSL v4.1 for Windows: A software implementation of the Desired Sensation Level (DSL[i/o]) method for fitting linear gain and wide-dynamic-range compression hearing instruments. Users’ manual. London, Ontario: University of Western Ontario Hearing Health Care Research Unit; 1997.

- Sininger YS, Grimes A, Christensen E. Auditory development in early amplified children: Factors influencing auditory-based communication outcomes in children with hearing loss. Ear Hear. 2010;31:166–185. doi: 10.1097/AUD.0b013e3181c8e7b6.

- SPSS Inc. SPSS for Windows (version 16.0). Chicago, USA: SPSS Inc; 2007.

- StatSoft Inc. Statistica Software (version 10.0). Tulsa, OK, USA: StatSoft Inc; 2011.

- Talarico M, Abdilla G, Aliferis M, Balazic I, Giaprakis I, et al. Effect of age and cognition on childhood speech in noise perception abilities. Audiol Neurootol. 2006;12:13–19. doi: 10.1159/000096153.

- Tomblin JB, Harrison M, Ambrose SE, Walker EA, Oleson JJ, et al. Language outcomes in young children with mild to severe hearing loss. Ear Hear. 2015;36:76S–91S. doi: 10.1097/AUD.0000000000000219.

- Tomblin JB, Oleson JJ, Ambrose SE, Walker E, Moeller MP. The influence of hearing aids on the speech and language development of children with hearing loss. JAMA Otolaryngol Head Neck Surg. 2014;140:403–409. doi: 10.1001/jamaoto.2014.267.

- Van Deun L, van Wieringen A, Wouters J. Spatial speech perception benefits in young children with normal hearing and cochlear implants. Ear Hear. 2010;31:12. doi: 10.1097/AUD.0b013e3181e40dfe.

- Vance M, Rosen S, Coleman M. Assessing speech perception in young children and relationships with language skills. Int J Audiol. 2009;48:708–717. doi: 10.1080/14992020902930550.

- Wechsler D, Naglieri JA. Wechsler nonverbal scale of ability. San Antonio, TX: Harcourt Assessment; 2006.

- Zimmerman I, Steiner VG, Pond RE. Preschool Language Scale. 4th ed. San Antonio, TX: The Psychological Corporation; 2002.

- American National Standards Institute. Methods for calculation of the speech intelligibility index (ANSI S3.5-1997). New York, USA: American National Standards Institute; 1997.

- Byrne D, Dillon H, Tran K, Arlinger S, Wilbraham K, et al. An international comparison of long-term average speech spectra. J Acoust Soc Am. 1994;96:2108–2120.

- Ching TYC, Dillon H, Lockhart F, van Wanrooy E, Flax M. Audibility and speech intelligibility revisited: Implications for amplification. In: Dau T, Dalsgaard JC, Jepsen ML, Poulsen T, editors. International Symposium on Auditory and Audiological Research. Denmark: The Danavox Jubilee Foundation; 2011. pp. 11–19.

- Ching TYC, Johnson EE, Hou S, Dillon H, Zhang V, et al. A comparison of NAL and DSL prescriptive methods for paediatric hearing aid fitting: Predicted speech intelligibility and loudness. Int J Audiol. 2013;52:29S–38S. doi: 10.3109/14992027.2013.765041.

- Dillon H, Keidser G, Ching TYC, Flax M, Brewer S. The NAL-NL2 prescription procedure. Phonak Focus. 2011:1–10. doi: 10.4081/audiores.2011.e24.