SUMMARY

In primates, posterior auditory cortical areas are thought to be part of a dorsal auditory pathway that processes spatial information. However, how posterior (and other) auditory areas represent acoustic space remains a matter of debate. Here we provide new evidence based on functional magnetic resonance imaging (fMRI) of the macaque indicating that space is predominantly represented by a distributed hemifield code rather than by a local spatial topography. Hemifield tuning in cortical and subcortical regions emerges from an opponent hemispheric pattern of activation and deactivation that depends on the availability of interaural delay cues. Importantly, these opponent signals allow responses in posterior regions to segregate space in a manner similar to a hemifield code representation. Taken together, our results reconcile seemingly contradictory views by showing that the representation of space closely follows a hemifield code, and they suggest that enhanced posterior-dorsal spatial specificity in primates might emerge from this form of coding.

INTRODUCTION

The ability to localize sounds is essential for an animal’s survival. In primates, auditory spatial information is thought to be processed along a dorsal auditory pathway (Romanski et al., 1999), a hierarchical system with reciprocal connections that includes caudal belt areas CL/CM of the posterior superior temporal (pST) region (Rauschecker and Tian, 2000; Tian et al., 2001). A central question regarding sound localization in primates is whether sound source location is represented in localized areas of the pST region or distributed throughout auditory cortex (AC).

Initial results suggesting regional specificity for sound source localization in posterior regions of AC came independently from neuroimaging studies in humans (Griffiths et al., 1996; Baumgart et al., 1999) and from single-unit recordings in macaque monkeys (Rauschecker and Tian, 2000; Tian et al., 2001). In the macaque, neurons in area CL were found to be sharply tuned to azimuth position in the frontal hemifield and to be significantly more selective than neurons in other fields (Tian et al., 2001; Woods et al., 2006). However, recent data in both monkeys (Werner-Reiss and Groh, 2008) and humans (Salminen et al., 2009; Magezi and Krumbholz, 2010; Młynarski, 2015; Derey et al., 2016) suggest that acoustic space is also represented by broadly tuned neurons distributed more widely across AC.

Such evidence is consistent with a different perspective, according to which acoustic space in AC is coded by opponent neural populations tuned to either side of space (Stecker et al., 2005; Stecker and Middlebrooks, 2003), as also found in subcortical structures (Grothe, 2003; McAlpine et al., 2001). Lesion studies in cats (Jenkins and Masterton, 1982; Malhotra et al., 2004), ferrets (Nodal et al., 2012), and monkeys (Heffner and Masterton, 1975) have demonstrated that unilateral lesions of AC result in severe localization deficits for sound sources contralateral to the lesion. Similarly, previous single-unit studies in cats (Middlebrooks et al., 1994) and optical imaging experiments in ferrets (Nelken et al., 2008) have provided evidence for a more distributed code for sound location in AC, showing that neurons respond maximally, and with broad tuning, to sound sources near the contralateral ear.

To date, it remains challenging to record simultaneously from multiple, distant cortical regions using single-unit and optical imaging methods. Functional magnetic resonance imaging (fMRI) based on blood-oxygen-level-dependent (BOLD) signals provides an alternative and complementary method for studying the functional representation of acoustic space across the cortical regions (CRs) of each hemisphere.

In the present study, we mapped the frequency organization of AC (Formisano et al., 2003; Petkov et al., 2006) and then measured BOLD responses to spatial sounds presented in virtual acoustic space. We further analyzed BOLD responses to spatial sounds with and without interaural time difference (ITD) cues and found that contralateral tuning depended on the presence of ITD cues. Finally, we compared the representations of space across CRs with a hemifield code model using representational similarity analysis (RSA).

RESULTS

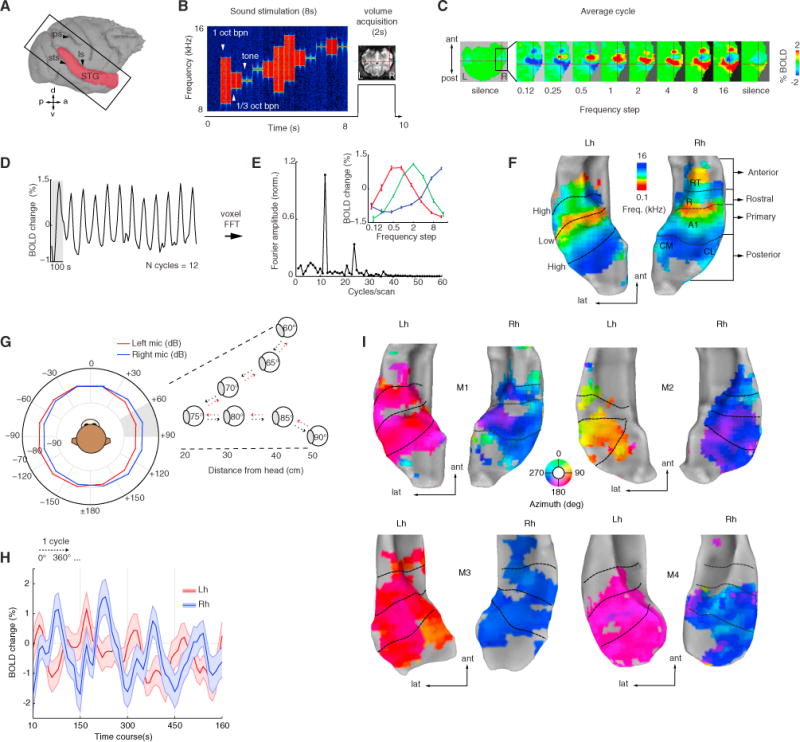

Our first aim was to identify auditory CRs based on their frequency organization and then to map the spatial domain using the same phase-encoding techniques (Barton et al., 2012; Wandell et al., 2007). Auditory stimulation elicited significant BOLD responses along the auditory pathway in both anesthetized and awake monkeys (Figure S1). After optimization, we conducted tonotopic-mapping experiments using tones and narrow-band noise stimuli (Figure 1B) that were presented in one-octave blocks in ascending frequency order and repeated for 12 cycles (Figure S2A). The resulting average BOLD response to each frequency range was narrow and shifted gradually from low-frequency A1 to anterior and posterior regions of AC, with a distinct wave pattern of positive BOLD responses (PBRs) and negative BOLD responses (NBRs) (Figure 1C). The PBR/NBR pattern reversed abruptly around 2 kHz, indicating a shift toward high-frequency regions. Voxels with significant BOLD modulation (coherence > 0.3) at the stimulation rate (0.01 Hz, i.e., 12 cycles/1,200 s) (Figures 1D and 1E) were mapped by their scaled phase values onto the frequency range of the presented stimuli (0.125–16 kHz) (Figure 1F). Subsequently, we defined four CRs bounded by frequency-reversal boundaries, each grouping mediolaterally adjacent fields with the same population response: Posterior, Primary, Rostral, and Anterior. Each region included core fields and adjacent medial and lateral belt fields (which were not separately delineated) as follows: Posterior (including fields CL and CM), Primary (ML, A1, MM), Rostral (AL, R, RM), and Anterior (RTL, RT, RTM).
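The phase-encoding step can be sketched for a single voxel as follows. This is a minimal illustration, not the authors' pipeline: it assumes the run spans exactly 12 whole stimulus cycles, uses one common definition of coherence (amplitude at the stimulation frequency divided by the root-sum-square amplitude of all non-DC frequency bins), ignores the hemodynamic delay unless a hypothetical `hrf_delay_frac` is supplied, and maps the response phase linearly onto the octave-spaced sweep from 0.125 to 16 kHz. The function name `phase_encoded_voxel` is hypothetical.

```python
import numpy as np


def phase_encoded_voxel(ts, n_cycles, f_lo=0.125, f_hi=16.0, hrf_delay_frac=0.0):
    """Coherence and preferred frequency (kHz) of one voxel in a phase-encoded run.

    ts             : BOLD time series spanning exactly `n_cycles` stimulus cycles
    n_cycles       : cycles per run (12 in the text: 0.01 Hz over 1,200 s)
    f_lo, f_hi     : ascending sweep range in kHz, assumed octave-spaced
    hrf_delay_frac : hypothetical hemodynamic delay, as a fraction of one cycle
    """
    x = np.asarray(ts, dtype=float)
    x = x - x.mean()                                  # remove the DC component
    spec = np.fft.rfft(x)
    amp = np.abs(spec)
    # Coherence: amplitude at the stimulation bin relative to the
    # root-sum-square amplitude of all non-DC bins (one common definition)
    coherence = amp[n_cycles] / np.sqrt(np.sum(amp[1:] ** 2))
    # A response peaking at fraction tau of each cycle has FFT phase -2*pi*tau
    tau = ((-np.angle(spec[n_cycles])) % (2 * np.pi)) / (2 * np.pi)
    tau = (tau - hrf_delay_frac) % 1.0
    # Map the cycle position onto the log-frequency sweep f_lo -> f_hi
    pref_khz = f_lo * (f_hi / f_lo) ** tau
    return coherence, pref_khz


# Hypothetical voxel: 240 volumes (20 per cycle), response peaking mid-cycle
t = np.arange(240)
coh, f_pref = phase_encoded_voxel(np.cos(2 * np.pi * 12 * t / 240 - np.pi), n_cycles=12)
print(coh > 0.3, round(f_pref, 2))                    # True 1.41
```

A response peaking halfway through each cycle lands 3.5 octaves above 0.125 kHz, i.e., about 1.41 kHz; in real data the hemodynamic lag would have to be estimated and removed before reading off the preferred frequency.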

Figure 1. Phase-Mapping for Frequency and Space.

(A) Image acquisition plane and extracted surface (red).

(B) Sparse imaging and stimulation design (e.g., high-frequency stimuli, 8–16 kHz).

(C) Average BOLD response to each frequency step in octaves (labeled frequency refers to the upper range of the frequency presented).

(D) Time course of an A1 voxel’s BOLD response (crosshair in C) tuned to high frequency. Gray shading represents one presentation cycle.

(E) Fourier transform of the same voxel’s BOLD response shows a peak at the stimulation rate (0.01 Hz = 12 cycles/1,200 s). Inset panel, mean ± SEM of 3 voxels in A1 at the peak stimulation rate. Response peaks were used to calculate the preferred phase that translates to preferred sound frequency independently at each voxel.

(F) Resulting tonotopic maps rendered onto the STG surface of each hemisphere. Black dotted lines indicate frequency-reversal boundaries of preferred sound frequency between mirror-symmetric regions. For the awake monkeys (M3 and M4), reversal boundaries were obtained from an anatomical reference (Saleem and Logothetis, 2012).

(G) Binaural sound recordings and stimulation design. Mean amplitude of sounds (broad-band noise, 0.125–16 kHz) recorded at each ear (red and blue) plotted in hemifield polar angles. The outer panel illustrates a virtual sector of speaker orientations and distances from the head. Sound bursts (100 ms) were played every 5° in an oscillating leftward, rightward, and distance sequence (dashed red and black arrows) within a 30°-wide spatial sector (shaded gray; n sectors = 12) for 7.2 s.

(H) Mean ± SEM of BOLD signal in all significant voxels (coherence > 0.3) in AC shown for four cycles of the time course to illustrate the overall broad amplitude modulation across hemispheres.

(I) Space maps highlight two phases across hemispheres in all four monkeys. STG, superior temporal gyrus; ls, lateral sulcus; ips, intraparietal sulcus; sts, superior temporal sulcus; Lh, left hemisphere; Rh, right hemisphere; ant, anterior; lat, lateral; post, posterior.

We then mapped the spatial domain using the same analytical methods, but at a stimulation rate of 0.0067 Hz (12 cycles/1,800 s). Prior to these experiments, virtual spatial sounds (broad-band noise bursts, 0.125–16 kHz, 80 dB SPL, 100 ms in duration) were created from binaural sound recordings in each individual monkey (see STAR Methods section binaural recordings). The recorded stimuli contained all individual spatial cues (ITDs, interaural level differences [ILDs], and spectral cues; Figures S3A and S3B). In addition, the virtual noise bursts changed in azimuth (leftward, rightward) and in distance within a 30° sector over time (Figure 1G). This design allowed us to keep space partitioned, so that we could estimate the response to spatial sounds within a constrained sector while avoiding repetition suppression of the BOLD response. A total of 12 sectors (30° each) spanning a virtual plane around the monkey's head were used to image the BOLD signal.
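The sector layout and the back-and-forth azimuth sweep within a sector can be illustrated with a short sketch. It assumes that sector 0 begins straight ahead (0°) and that sectors advance toward positive, right-hemifield azimuths (the sign convention of Figure 2); it models only the 5° azimuth steps, while the distance oscillation and inter-burst timing are not specified here and are omitted. `sector_bounds` and `burst_azimuths` are hypothetical helpers.

```python
N_CYCLES, RUN_S = 12, 1800            # spatial runs: 12 cycles in 1,800 s (from the text)
N_SECTORS, WIDTH, STEP = 12, 30, 5    # 12 sectors of 30 deg; one burst every 5 deg


def sector_bounds(k):
    """Signed azimuth range (lo, lo + 30) of sector k, wrapped to -180..180 deg.

    Assumes sector 0 starts at 0 deg and sectors advance toward positive azimuths."""
    lo = (k * WIDTH + 180) % 360 - 180
    return lo, lo + WIDTH


def burst_azimuths(k, n_bursts):
    """Azimuths of successive bursts oscillating back and forth within sector k.

    Only the azimuth sweep is modeled; the distance oscillation is omitted."""
    lo, hi = sector_bounds(k)
    sweep_right = list(range(lo, hi + 1, STEP))   # lo, lo+5, ..., hi
    sweep_left = sweep_right[-2:0:-1]             # hi-5, ..., lo+5 (endpoints not repeated)
    period = sweep_right + sweep_left
    return [period[i % len(period)] for i in range(n_bursts)]


print(N_CYCLES / RUN_S)        # stimulation rate, ~0.0067 Hz
print(sector_bounds(11))       # (-30, 0): the frontal-left sector
print(burst_azimuths(0, 14))   # 0, 5, ..., 30, 25, ..., 5, 0, 5
```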

Compared with responses to sound frequency, the mean BOLD responses to spatial sounds were broad and shifted between two opposite phases across the cerebral hemispheres (Figure 1H). Overall, the resulting maps showed no clear topographic organization; instead, they reflected a broad peak response to sounds in the contralateral hemifield (Figure 1I). While M2 showed a trend toward a “space map” in the left hemisphere, this trend was less clear in the other seven hemispheres. We therefore examined the phase of the peak response across 10 mm of cortex by plotting voxel regions of interest (ROIs) along trajectories parallel and orthogonal to the primary field, which confirmed a lack of topography in the flat peak responses (Figure S3C; compare with Figure S3D).

In summary, our mapping experiments corroborated previous electrophysiological (Rauschecker et al., 1995) and imaging studies (Formisano et al., 2003; Petkov et al., 2006) of primate AC showing mirror-symmetric tonotopic maps and provided new evidence indicating that the functional representation of auditory space (in the azimuth plane), as measured with fMRI, lacks a clear spatial topography.

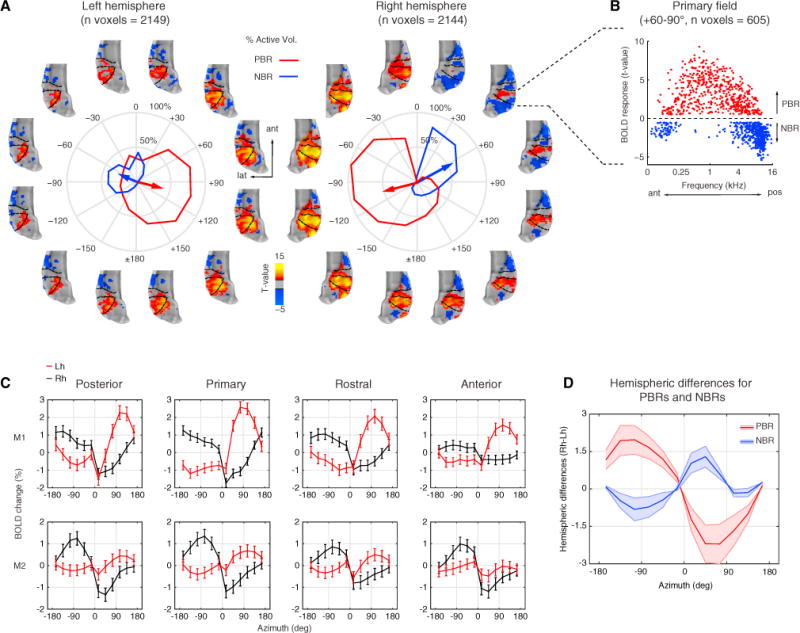

Positive and Negative BOLD Responses across Auditory Regions

How is azimuth space represented in each auditory CR? We investigated our data further by analyzing each time series with a general linear model (GLM) of the BOLD signal. We tested the significance of the model by comparing the measured BOLD responses to each spatial condition (n = 12) with the baseline/silence periods (q FDR < 0.05, p < 10⁻⁶, cluster size > 10 voxels). Surprisingly, we found distinct patterns of PBRs and NBRs within each hemisphere that changed as a function of spatial sector (Figure 2A and Figure S4). Spatial tuning curves calculated from the spatial spread of PBRs and NBRs in the AC of each hemisphere showed that the overall tuning of the two signals was of opposite polarity, with PBRs oriented at approximately ±120° and NBRs at approximately ±60° (the sign depending on the hemisphere). Similarly, average hemispheric differences in PBRs and NBRs showed opposite polarity between the two signals, with NBRs peaking around frontal right sectors (Figure 2D). PBRs peaked for sectors near the contralateral ear (e.g., ±90° to 120°), with clusters extending across much of AC. By contrast, ipsilateral PBRs were greatly reduced in size and were accompanied by an NBR pattern in anterior and posterior regions of AC. In the primary field, the responses exhibited a concentric pattern (e.g., for the +30° to +60° sector), with positive voxels extending along the entire anterior-posterior frequency axis and negative voxels lying mostly anterior and posterior to them (Figures 2A and 2B).
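A per-sector GLM of this kind can be sketched with ordinary least squares. The sketch is illustrative only: it uses unconvolved boxcar regressors (a real analysis would convolve them with a hemodynamic response function and model drift), treats the intercept as the silent baseline, and omits FDR correction and cluster-size thresholding. `glm_condition_tmaps` is a hypothetical name.

```python
import numpy as np


def glm_condition_tmaps(Y, onsets, n_conditions):
    """Per-condition betas and t values versus baseline for many voxels (sketch).

    Y      : (n_timepoints, n_voxels) BOLD data
    onsets : per-timepoint condition index 0..n_conditions-1, or -1 for silence
    Returns (betas, t), each (n_conditions, n_voxels); t > 0 ~ PBR, t < 0 ~ NBR.
    """
    Y = np.asarray(Y, dtype=float)
    onsets = np.asarray(onsets)
    n = Y.shape[0]
    X = np.zeros((n, n_conditions + 1))
    for c in range(n_conditions):
        X[:, c] = onsets == c              # boxcar regressor (no HRF convolution here)
    X[:, -1] = 1.0                         # intercept = silent baseline level
    beta, *_ = np.linalg.lstsq(X, Y, rcond=None)
    resid = Y - X @ beta
    dof = n - np.linalg.matrix_rank(X)
    sigma2 = np.sum(resid ** 2, axis=0) / dof
    xtx_inv = np.linalg.pinv(X.T @ X)
    t = np.empty((n_conditions, Y.shape[1]))
    for c in range(n_conditions):
        con = np.zeros(X.shape[1])
        con[c] = 1.0                       # contrast: condition c minus baseline
        t[c] = (con @ beta) / np.sqrt(sigma2 * (con @ xtx_inv @ con))
    return beta[:n_conditions], t
```

Because the intercept absorbs the silent baseline, each condition beta directly estimates the response relative to silence, so its sign separates positive from negative BOLD responses.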

Figure 2. Positive and Negative BOLD Responses Represent Opposite Hemifields.

(A) Activation t-maps with significant positive (red/yellow) and negative (blue) BOLD responses (q FDR < 0.05, p < 10⁻⁶, cluster size > 10 voxels). Each map is shown around the corresponding spatial sector in polar plots of each hemisphere of monkey M2 (see Figure S4 for a similar plot in monkey M1). The polar plot shows spatial tuning curves obtained from the spatial spread of the positive (red) and negative (blue) BOLD responses (PBRs and NBRs, respectively). Mean resultant vectors (arrows) point toward the preferred angular direction, and their length represents the percentage of active voxels around the mean direction. In the polar plots, negative angles (−180° to 0°) represent the left hemifield and positive angles (0° to +180°) represent the right hemifield.

(B) Scatterplot of voxels in the primary field showing PBRs and NBRs to an exemplar spatial sector (+60° to +90°), plotted as a function of the frequency tuning of each voxel.

(C) Mean ± SEM of BOLD responses (including both PBRs and NBRs) for cortical regions of each hemisphere (Lh, red; Rh, black) of monkey M1 (top) and M2 (bottom).

(D) Average amplitude differences across hemispheres for PBRs and NBRs plotted as a function of azimuth. The differential response shows opposite polarity between hemifields with a peak in NBRs for frontal right sectors. Lh, left hemisphere; Rh, right hemisphere; ant, anterior; lat, lateral; pos, posterior.

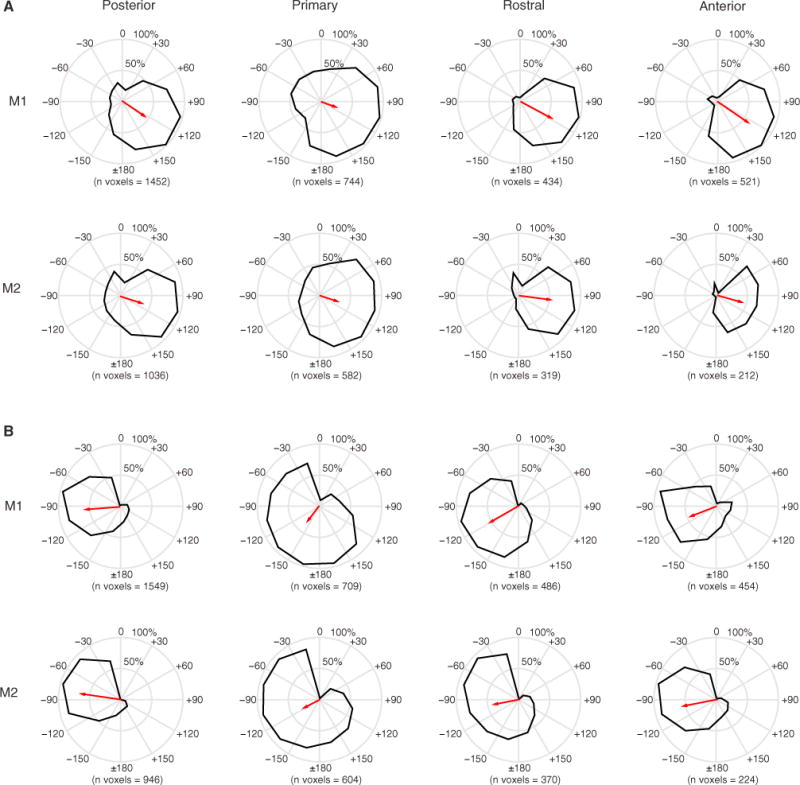

The average BOLD signal in the CRs of each monkey (mean ± SEM, including both PBRs and NBRs) showed a marked shift in amplitude around the midline, further indicating hemifield tuning across all CRs (Figure 2C). Similarly, spatial tuning curves obtained from PBRs deviated highly significantly from circular uniformity (Rayleigh test, p < 0.001), with angular means of opposite polarity in the two hemispheres (~±120°) and similar orientations in all CRs of the same hemisphere (Figure 3). Vector lengths showed that more than half of all voxels were active in response to stimulation of contralateral sectors (~±120°) in every CR. Based on standard deviations, the overall tuning in central regions (primary and rostral) was slightly broader than in anterior and posterior regions of the same hemisphere (see Data S1 for details).
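The circular statistics named here (mean direction, mean resultant length, and the Rayleigh test) can be computed from sector-wise counts of active voxels, as sketched below. Assumptions: each sector is represented by its center azimuth, voxel counts serve as weights, no correction for grouped data is applied, the Rayleigh p value uses the large-sample approximation implemented in the CircStat toolbox's `circ_rtest`, and the circular standard deviation is sqrt(−2 ln r). Note that this resultant length is the plain mean resultant length, not the "percentage of voxels within ±30° of the mean direction" plotted in Figure 3. `circular_tuning` is a hypothetical helper.

```python
import numpy as np


def circular_tuning(centers_deg, counts):
    """Mean direction (deg), mean resultant length r, circular SD (deg), Rayleigh p."""
    theta = np.deg2rad(np.asarray(centers_deg, dtype=float))
    w = np.asarray(counts, dtype=float)
    n = w.sum()
    C = np.sum(w * np.cos(theta))
    S = np.sum(w * np.sin(theta))
    R = np.hypot(C, S)                      # resultant length (unnormalized)
    r = R / n                               # mean resultant length, 0..1
    mean_dir = np.rad2deg(np.arctan2(S, C))
    circ_sd = np.rad2deg(np.sqrt(-2 * np.log(r))) if r > 0 else np.inf
    # Rayleigh test, large-sample approximation (as in CircStat's circ_rtest)
    p = np.exp(np.sqrt(1 + 4 * n + 4 * (n ** 2 - R ** 2)) - (1 + 2 * n))
    return mean_dir, r, circ_sd, min(p, 1.0)


# Hypothetical tuning curve peaking in the right-hemifield sector centered at 105 deg
centers = np.arange(-165, 180, 30)          # 12 sector centers: -165, -135, ..., 165
counts = np.full(12, 2)
counts[[8, 9, 10]] += [18, 38, 18]          # sectors centered at 75, 105, 135 deg
mean_dir, r, circ_sd, p = circular_tuning(centers, counts)
print(round(mean_dir, 1), p < 1e-3)         # mean direction near 105 deg; p far below 0.001
```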

Figure 3. Cortical Fields Are Broadly Tuned to Contralateral Space.

The spatial spread of the positive BOLD response was used to calculate spatial tuning curves (black curves) for each cortical field: posterior, primary, rostral, and anterior.

(A) Left hemisphere for M1 (top) and M2 (bottom).

(B) Right hemisphere for M1 (top) and M2 (bottom). The mean resultant vectors (red) point toward the preferred circular mean direction, and their length represents the percentage of active voxels concentrated within ±30° of the mean direction. All fields were oriented approximately at ±90° to 120°. Overall, cortical fields were broadly tuned, with central fields (primary and rostral) slightly broader than anterior and posterior fields (see Tables S1 and S2).

In summary, our functional analyses showed that azimuth space as measured by fMRI is represented by opponent hemifield responses of positive and negative BOLD across hemispheres. The dynamic change between PBRs and NBRs supports an opponent-channel mechanism (Stecker et al., 2005) for the representation of acoustic space in the macaque monkey.

Contralateral Bias Measured with BOLD Response Contrast

While animal studies have consistently shown a clear contralateral bias in the firing rate of auditory cortical neurons (Tian et al., 2001; Miller and Recanzone, 2009; Stecker and Middlebrooks, 2003; Werner-Reiss and Groh, 2008; Woods et al., 2006), neuroimaging studies in humans have obtained mixed results with respect to the degree of contralaterality (Krumbholz et al., 2007; Werner-Reiss and Groh, 2008; Zatorre et al., 2002) in AC responses to spatial sounds. Whether these discrepancies are due to species differences in neural coding or to methodological differences between studies in animals and humans (e.g., in sound stimulation or in recording method, single-unit versus fMRI) remains a matter of debate (Werner-Reiss and Groh, 2008).

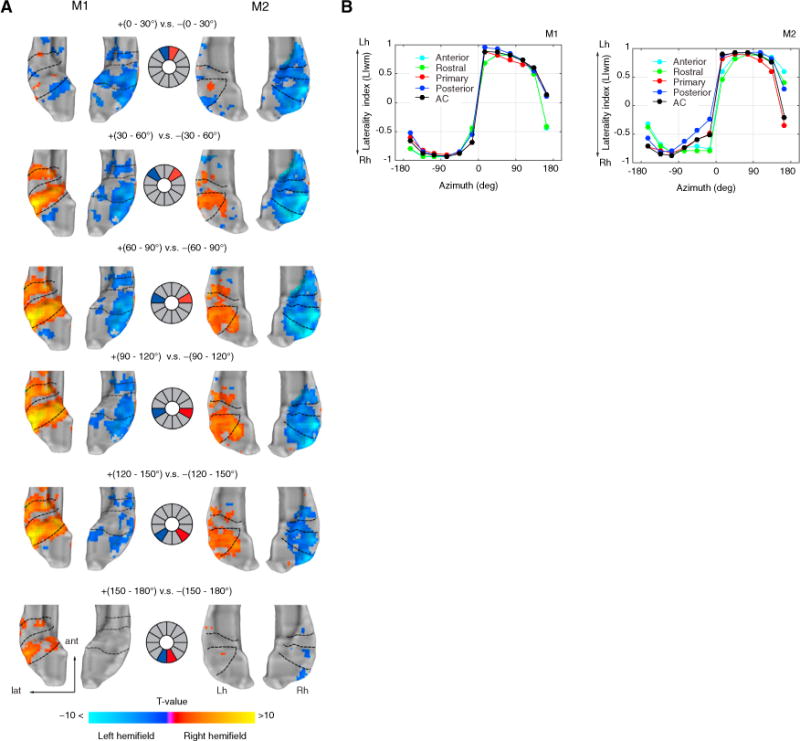

Here, we provide evidence of a contralateral bias in the fMRI BOLD contrast between equidistant spatial sectors (Figure 4A). The differential activation maps (q FDR < 0.05, p < 10⁻³, cluster size > 10 voxels) indicated whether responses were greater for the left (blue to cyan) or the right hemifield (red to yellow). The strength of the BOLD response showed a robust contralateral bias for spatial sectors near the lateral axis (e.g., ~±90° to 120°). The contrast for frontal sectors (±0° to 30°) showed a greater differential response only in the right hemisphere, whereas the contrast for backward sectors (±150° to 180°) showed almost no differential activation at the same threshold (q FDR < 0.05).

Figure 4. Auditory Cortex Represents the Contralateral Hemifield.

(A) Contrast t-maps between equidistant sectors for both monkeys. The middle panel illustrates the contrast design between sectors (left hemifield in blue; right hemifield in red). Voxels preferring left hemifield sectors were mapped as negative (blue-to-cyan), while voxels preferring right hemifield sectors were mapped as positive (red-to-yellow). The range of t values (q FDR < 0.05, p < 10⁻³, cluster size > 10 voxels) in the color bar was scaled according to a maximum t value of 10 to illustrate the strength of the contrast across sectors and monkeys.

(B) Mean-weighted laterality index (LIwm) between hemispheres, calculated from the t value threshold of each spatial sector (see STAR Methods section Laterality index). The index ranges from −1 to +1, with a positive value indicating a Lh bias and a negative value indicating a Rh bias. Index curves are shown for each monkey and for each cortical field, including auditory cortex as a whole (all fields combined). Lh, left hemisphere; Rh, right hemisphere.

We quantified these results further by calculating a hemispheric laterality index, LI = (L − R)/|L + R|, between corresponding CRs of the two hemispheres, including AC as a whole (all CRs included). Because laterality indices in fMRI typically depend on the statistical threshold (Wilke and Lidzba, 2007), we obtained LI curves by bootstrapping LI values as a function of the t value threshold and then calculated a mean weighted laterality index (LIwm) (see STAR Methods section Laterality index). The LIwm ranges between −1 and 1, with a positive index indicating a left-hemisphere bias and a negative index indicating a right-hemisphere bias. The resulting indices for all CRs showed a very strong right-hemisphere bias (LIwm < −0.5) for sectors in the left hemifield and a left-hemisphere bias (LIwm > 0.5) for sectors in the right hemifield, except for a backward sector (±150° to 180°), where LIwm was asymmetrical toward the right hemisphere in three monkeys (Figures 4B and 5C). LIwm changed abruptly around the midline, as shown by the steep slope between frontal sectors, compared with the shallower slopes between sectors within each hemifield.
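A threshold-weighted laterality index in the spirit of Wilke and Lidzba (2007) can be sketched as follows. The details are assumptions rather than the STAR Methods procedure: thresholds are evenly spaced from 0 up to the maximum t value, each hemisphere's voxels are resampled with replacement at every threshold, LI = (L − R)/(L + R) is computed from summed suprathreshold t values, and the resulting curve is averaged with each threshold as its weight. `weighted_li` and its defaults (20 thresholds, 100 bootstrap samples) are hypothetical.

```python
import numpy as np


def weighted_li(t_left, t_right, n_thresh=20, n_boot=100, seed=0):
    """Bootstrapped LI curve over t thresholds and its threshold-weighted mean (sketch).

    t_left, t_right : voxel t values of corresponding regions in each hemisphere
    Returns (thresholds, li_curve, li_wm); positive values indicate a left bias.
    """
    rng = np.random.default_rng(seed)
    tL = np.asarray(t_left, dtype=float)
    tR = np.asarray(t_right, dtype=float)
    t_max = max(tL.max(), tR.max())
    thresholds = np.linspace(0.0, t_max, n_thresh, endpoint=False)
    li_curve = np.full(n_thresh, np.nan)
    for i, thr in enumerate(thresholds):
        vals = []
        for _ in range(n_boot):
            bL = rng.choice(tL, size=tL.size, replace=True)   # resample left voxels
            bR = rng.choice(tR, size=tR.size, replace=True)   # resample right voxels
            L, R = bL[bL > thr].sum(), bR[bR > thr].sum()     # suprathreshold t sums
            if L + R > 0:
                vals.append((L - R) / (L + R))
        if vals:
            li_curve[i] = np.mean(vals)
    ok = ~np.isnan(li_curve)
    # Higher thresholds receive proportionally more weight
    li_wm = np.sum(thresholds[ok] * li_curve[ok]) / np.sum(thresholds[ok])
    return thresholds, li_curve, li_wm
```

Weighting by threshold de-emphasizes the lenient end of the curve, where widespread weak activation tends to pull the index toward zero.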

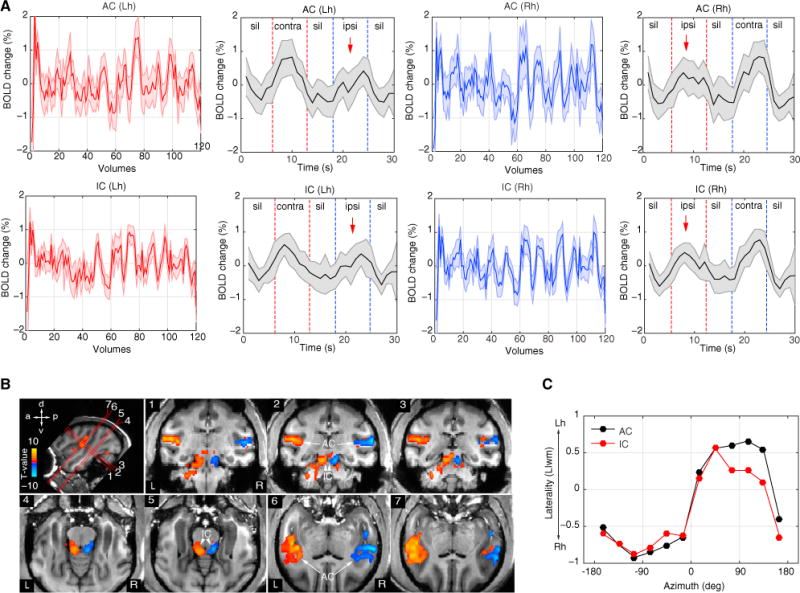

Figure 5. Cortical and Subcortical Hemifield Tuning in the Awake Monkey.

(A) Example time courses and average responses (mean ± SEM) of auditory cortex (AC) and inferior colliculi (IC) in each hemisphere (red, left hemisphere; blue, right hemisphere). Red dashed lines mark the periods during which sounds were presented in the right hemifield, and blue dashed lines mark the periods during which sounds were presented in the left hemifield. Note the amplitude suppression for sound sources on the ipsilateral side (red arrows).

(B) Contrast t-maps (q FDR < 0.05, p < 10⁻³, cluster size > 10 voxels, t value range ±7.8) between all left and all right spatial sectors in awake monkey M3 (see also Figure S5 for M4). The top left image illustrates oblique slice orientations and planes (numbered 1–7) cutting through AC and IC. Voxels preferring left hemifield sectors were mapped as negative (blue-to-cyan), while voxels preferring right hemifield sectors were mapped as positive (red-to-yellow).

(C) Laterality index (LIwm) curves for AC and IC of monkey M3. Lh, left hemisphere; Rh, right hemisphere; contra, contralateral; ipsi, ipsilateral; sil, silence.

Similar contralateral representations were found in AC of the awake monkeys (Figures 5 and S5). In addition, we obtained reliable BOLD signals from the inferior colliculi (IC) of the awake monkeys. As in the anesthetized monkeys, the average time courses showed an overall suppression of responses to sound sources on the ipsilateral side. For contrasts between spatial sectors in the awake monkeys, we collapsed across all left and all right hemifield sectors (q FDR < 0.05, p < 10⁻³, cluster size > 10 voxels) and confirmed a robust contralateral bias (Figures 5B and S5B). LIwm values in the awake monkey resembled those in the anesthetized animals, showing contralateral biases (Figure 5C).

Overall, our analyses showed a robust contralateral bias in both anesthetized and awake monkeys, as measured with fMRI. Moreover, our finding in IC further supports the neurophysiological evidence showing hemifield tuning below the cortical level (Groh et al., 2003) and attests to the feasibility of fMRI as a tool for imaging auditory spatial representations in cortical and subcortical structures of primates.

Removing ITD Cues from Spatial Sounds

Previous work has suggested that NBRs are related to decreases in neuronal activity (Shmuel et al., 2006). Given the strong role of inhibition in ITD coding at subcortical levels (Brand et al., 2002; Pecka et al., 2008), we explored how removing ITD cues from the original recorded sounds affected the PBR/NBR pattern in AC.

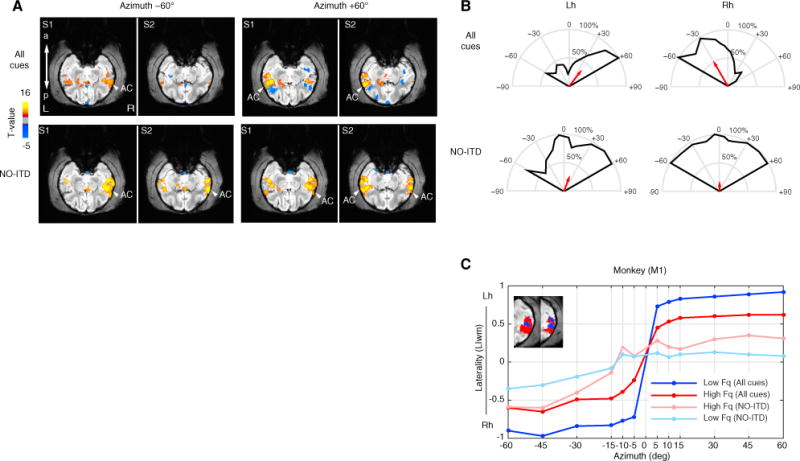

First, we replicated our previous findings (Figure 6A), showing PBRs and NBRs in AC of the anesthetized monkey for spatial sounds carrying all spatial cues in frontal azimuth (±0° to 60°) (All-cues condition; q FDR < 0.01, p < 10⁻⁶, cluster size > 10 voxels, t value range −6.4 to 8.7). Second, we measured BOLD responses to the same sounds without ITD cues, i.e., sounds carrying only the remaining ILD and spectral cues (NO-ITD condition; q FDR < 0.01, p < 10⁻³, cluster size > 10 voxels, t value range −6 to 18.8). The BOLD response to leftward sounds (e.g., NO-ITD at −60°) showed greater activation in the right hemisphere than in the left hemisphere (Figure 6A). However, the BOLD response to rightward sounds (e.g., NO-ITD at +60°) showed bilateral activation in both left and right AC.
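One simple way to remove ITD cues from a binaural recording while keeping ILD and spectral cues is to estimate the broadband interaural lag by cross-correlation and then time-align the two channels. The text does not describe how the NO-ITD stimuli were generated, so this is a hypothetical sketch: it removes only a single broadband delay (not frequency-dependent interaural phase differences), searches an assumed ±1 ms lag range, and uses zero-filled integer-sample shifts. Because shifting a channel leaves its magnitude spectrum essentially unchanged, ILDs and monaural spectral cues are retained. The helper names are hypothetical.

```python
import numpy as np


def delay(x, d):
    """Delay x by d samples (advance if d < 0), zero-filling vacated samples."""
    x = np.asarray(x, dtype=float)
    y = np.zeros_like(x)
    if d > 0:
        y[d:] = x[:-d]
    elif d < 0:
        y[:d] = x[-d:]
    else:
        y[:] = x
    return y


def estimate_itd(left, right, fs, max_itd_s=1e-3):
    """Broadband interaural lag in samples (positive = right ear lags left ear)."""
    max_lag = int(round(max_itd_s * fs))
    xc = np.correlate(right, left, mode="full")   # xc[i] <-> lag i - (len(left) - 1)
    zero = len(left) - 1
    window = xc[zero - max_lag: zero + max_lag + 1]
    return int(np.argmax(window)) - max_lag


def remove_itd(left, right, fs):
    """Time-align the right channel to the left one; level differences are kept."""
    left = np.asarray(left, dtype=float)
    lag = estimate_itd(left, right, fs)
    return left.copy(), delay(right, -lag)
```

For example, a right channel that is an attenuated copy of the left channel delayed by 20 samples at 48 kHz yields an estimated lag of 20 samples; after `remove_itd`, the lag is 0 while the level ratio between the ears is unchanged.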

Figure 6. ITD Cues Are Essential for Contralateral Tuning in Auditory Cortex.

(A) Example t-maps with significant BOLD responses (q FDR < 0.05) to spatial sounds presented in the left (−60°) and right (+60°) hemifields. “All cues” condition (top) and “NO-ITD” condition (bottom), in which ITD cues were removed from the original recorded sounds, leaving ILD and spectral cues. Maps are shown for two pairs of oblique slices (S1, ventral; S2, dorsal) cutting through the superior temporal gyrus. The response to rightward (+60°) sounds in the NO-ITD condition was observed in both auditory cortices (i.e., no contralateral tuning).

(B) Spatial tuning curves for frontal field show a loss of hemifield tuning in the right hemisphere for the NO-ITD condition.

(C) Laterality index (LIwm) as a function of frontal azimuth, plotted for low- and high-frequency voxels, shows a lack of laterality (LIwm near zero) for sounds without ITD at the midline (±15°), with only a slight increase in laterality for high frequencies (LIwm < 0.5) compared with low frequencies. Compare with Figures 3, 4, and 5.

Moreover, while spatial tuning curves for the All-cues condition showed contralateral tuning (~±30°), the NO-ITD condition showed hardly any contralateral tuning (Figure 6B). Compared with the All-cues condition, LIwm values near the midline (−15° to 15°) shifted markedly toward zero and increased only slightly (LIwm < 0.5) for more rightward sounds. In addition, we investigated the effect of frequency by analyzing LIwm values from both the All-cues and NO-ITD conditions in voxels identified as belonging to either low-frequency (0.125–1 kHz) or high-frequency (2–16 kHz) regions (Figure 6C). In the NO-ITD condition, laterality decreased similarly around the midline in both low- and high-frequency regions; at more lateral positions, contralaterality increased in high-frequency regions (LIwm > 0.25), whereas LIwm values in low-frequency regions decreased overall (LIwm < 0.25). Thus, removal of ITD cues facilitated the responses of the right hemisphere across frequency regions, and consequently the difference in BOLD activity between the two hemispheres plateaued near the midline (Figure 6C).

Taken together, our results suggest that the suppression effects (in the form of smaller positive clusters and/or negative BOLD responses) are likely due to inhibitory interhemispheric processes driven by ITD cues. In addition, our results show that the lack of suppression caused by the removal of ITD cues particularly affected the right-hemisphere response necessary for contralateral tuning. Overall, we provide new evidence for the role of ITD mechanisms in the representation of azimuth space at the cortical level in the macaque.

Relating Cortical Representations to the Hemifield Model

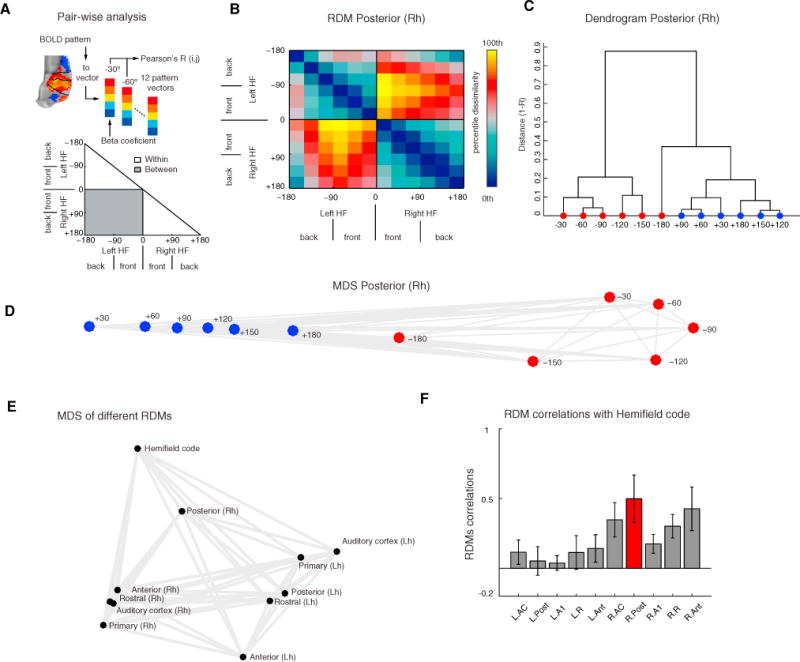

Previous work has suggested that the representation of azimuth space in AC follows a hemifield rate code (Salminen et al., 2009; Stecker et al., 2005; Werner-Reiss and Groh, 2008). Here, we used representational similarity analysis (RSA) (Kriegeskorte et al., 2008) to measure the dissimilarity between BOLD response patterns to each spatial sector and then to compare the resulting spatial representations across CRs and with a hemifield model.

For each stimulus condition (i.e., each sector of space; n = 12), the beta coefficients (β) obtained from the fitted GLM were subjected to pairwise Pearson’s correlation (R), and the distance (1 − R) between responses to all possible pairs of conditions was ordered into a 12 × 12 representational dissimilarity matrix (RDM). The RDM characterizes the BOLD response patterns to each spatial sector and captures distinctions within and between hemifield responses (Figure 7A).
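The first-order RDM described above takes only a few lines to compute. The text does not specify how the hemifield-model RDM was constructed (see Figure S6), so the model below is a hypothetical categorical stand-in: dissimilarity 0 for sectors in the same hemifield and 1 for sectors in opposite hemifields. Sector centers at ±15°, ±45°, ..., ±165° are assumed so that no sector straddles the midline; the function names are hypothetical.

```python
import numpy as np


def rdm_from_betas(betas):
    """RDM as 1 - Pearson R between condition patterns.

    betas: (n_conditions, n_voxels), one row of GLM coefficients per spatial sector."""
    return 1.0 - np.corrcoef(np.asarray(betas, dtype=float))


def hemifield_model_rdm(centers_deg):
    """Hypothetical categorical hemifield model: 0 within a hemifield, 1 across."""
    side = np.sign(np.sin(np.deg2rad(centers_deg)))   # -1 = left, +1 = right
    return (side[:, None] != side[None, :]).astype(float)


centers = np.arange(-165, 180, 30)                    # 12 assumed sector centers (deg)
model = hemifield_model_rdm(centers)
```

`np.corrcoef` treats each row as one variable, so passing the sectors-by-voxels beta matrix directly yields the 12 × 12 correlation matrix between sector patterns.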

Figure 7. Posterior Superior Temporal Region Represents Space Similarly to a Hemifield Code.

(A) For each field, we extracted the response patterns to each spatial sector, yielding 12 response patterns. We then calculated pairwise Pearson’s correlations (R) across all spatial sectors and then assigned the dissimilarity measure (1 − R) to a 12 × 12 representational dissimilarity matrix (RDM). This analysis was repeated for each cortical field and the hemifield model for all runs and monkeys (see Figure S6).

(B) Mean RDM of the right posterior superior temporal (pST) region. The color bar reflects dissimilarity in percentiles (low dissimilarity, blue; high dissimilarity, red/yellow).

(C and D) Hierarchical clustering (C) and multidimensional scaling (MDS) (D) of fMRI responses in right pST. Unsupervised hierarchical clustering (criterion: average dissimilarity) revealed a hierarchical structure dividing left and right hemifields. MDS (criterion: metric stress) resulted in apparent segregation of data derived from each hemifield (red versus blue).

(E) MDS based on dissimilarity (1 – Spearman’s correlation) between RDMs (see Figure S6E and STAR Methods section fMRI dissimilarity analyses for second-order RDM). Visual inspection of the MDS structure reveals that the right pST RDM lies closer to the hemifield model than any other cortical region.

(F) Mean ± SEM of Spearman’s correlation coefficients between the RDM of each CR and the hemifield code RDM, obtained from all monkeys and runs (n = 65) (see Figure S7 for individual runs and monkeys). The RDM from the right pST relates more closely to the hemifield code than any other RDM.

This analysis was repeated for each CR, for AC (all CRs combined), and for the hemifield model, providing a total of 11 RDMs. Visual inspection of the RDMs from the left hemisphere revealed small dissimilarities (blue) between spatial sectors within the right hemifield, while RDMs from the right hemisphere showed small dissimilarities between spatial sectors within the left hemifield (Figure S6). These results largely confirm our previous results showing contralateral preference (Figures 4 and 5). More importantly, these analyses revealed that while most regions showed variability within ipsilateral sectors, the pST region of the right hemisphere showed small dissimilarities within hemifields and graded dissimilarities (red) across hemifields (Figure 7B and Figure S6), indicating that the right pST region carried spatial information in the NBRs to ipsilateral sound sources. By subjecting the right pST RDM to clustering analysis and multidimensional scaling (MDS), we showed that the response patterns segregated in an orderly manner, largely replicating the spatial arrangement of the stimuli that evoked them (Figures 7C and 7D). Interestingly, the response patterns elicited by the frontal sectors (±30°) were highly dissimilar, generating a large distance between them, which further indicates abrupt changes in responses around the midline.
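The clustering and MDS steps can be illustrated on any RDM with the self-contained sketch below. Two substitutions should be flagged: the clustering is a plain average-linkage agglomeration stopped at two clusters (the text reports the full hierarchy), and the embedding uses classical (Torgerson) MDS rather than the metric-stress MDS reported for Figure 7D. Both function names are hypothetical.

```python
import numpy as np


def average_linkage_two_clusters(D):
    """Merge clusters by smallest average dissimilarity until two remain; return labels."""
    D = np.asarray(D, dtype=float)
    clusters = [[i] for i in range(len(D))]
    while len(clusters) > 2:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = D[np.ix_(clusters[a], clusters[b])].mean()   # average linkage
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]                                           # b > a, so a stays valid
    labels = np.empty(len(D), dtype=int)
    for k, members in enumerate(clusters):
        labels[members] = k
    return labels


def classical_mds(D, n_components=2):
    """Classical (Torgerson) MDS: eigendecomposition of the double-centered matrix."""
    D = np.asarray(D, dtype=float)
    n = len(D)
    J = np.eye(n) - np.ones((n, n)) / n
    B = -0.5 * J @ (D ** 2) @ J
    evals, evecs = np.linalg.eigh(B)                  # ascending eigenvalues
    idx = np.argsort(evals)[::-1][:n_components]      # keep the largest
    return evecs[:, idx] * np.sqrt(np.clip(evals[idx], 0, None))
```

Applied to an RDM with low within-hemifield and high across-hemifield dissimilarities, the final two clusters correspond to the two hemifields and the first MDS dimension separates them.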

We also examined the dissimilarity between the spatial representations obtained from individual CRs, AC, and the hemifield model by computing Spearman’s rank-order correlations between RDMs (1 – Spearman’s R). This analysis resulted in a second-order RDM (see STAR Methods section fMRI dissimilarity analyses and Figure S6E). When subjected to MDS, the second-order RDM showed that the right pST clustered closer to the hemifield code than the other CRs did (Figure 7E). The correlation coefficient between the right pST region’s RDM and the hemifield code model, averaged across individual runs and monkeys, was numerically higher than that between any other CR and the hemifield model (Figure S7A). This result was replicated in most individual fMRI runs of individual animals: 17/23 runs (74%) in M1, 13/14 (93%) in M2, 14/14 (100%) in M3, and 10/14 (71%) in M4 (Figure S7B). The lower 95% confidence bounds for these proportions are 53%, 66%, 75%, and 45%, respectively, well above the chance level of 12.5% (1 in 8 CRs). Using a sign-rank permutation test (FDR p < 0.01; 95% confidence intervals by bootstrap), we determined that the right pST RDM was significantly more similar to the hemifield model RDM than any other cortical RDM (Figure S6F). Overall, our dissimilarity analyses show that the representation of space in the right pST region closely follows a hemifield code.
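The second-order comparison and the reported confidence bounds can be checked with the sketch below. Spearman's correlation is computed as Pearson's correlation of average ranks (so ties, common in categorical model RDMs, are handled) and applied to the upper triangles of the RDMs. The text does not name the interval used for the run proportions; the Agresti–Coull interval, assumed here, reproduces the reported lower bounds of 53%, 66%, 75%, and 45%. The sign-rank permutation test is not reproduced. Function names are hypothetical.

```python
import math
from statistics import NormalDist

import numpy as np


def _ranks(x):
    """Ranks 1..n, with tied values replaced by their average rank."""
    x = np.asarray(x, dtype=float)
    ranks = np.empty(x.size)
    ranks[np.argsort(x, kind="mergesort")] = np.arange(1, x.size + 1)
    for value in np.unique(x):
        tied = x == value
        ranks[tied] = ranks[tied].mean()
    return ranks


def spearman(a, b):
    """Spearman's rho as the Pearson correlation of (average) ranks."""
    return np.corrcoef(_ranks(a), _ranks(b))[0, 1]


def second_order_rdm(rdms):
    """1 - Spearman R between the upper triangles of a list of square RDMs."""
    iu = np.triu_indices(np.asarray(rdms[0]).shape[0], k=1)
    vecs = [np.asarray(r, dtype=float)[iu] for r in rdms]
    out = np.zeros((len(vecs), len(vecs)))
    for i in range(len(vecs)):
        for j in range(i + 1, len(vecs)):
            out[i, j] = out[j, i] = 1.0 - spearman(vecs[i], vecs[j])
    return out


def agresti_coull_lower(successes, n, conf=0.95):
    """Lower bound of the Agresti-Coull interval for a binomial proportion."""
    z = NormalDist().inv_cdf(0.5 + conf / 2)
    n_t = n + z ** 2
    p_t = (successes + z ** 2 / 2) / n_t
    return p_t - z * math.sqrt(p_t * (1 - p_t) / n_t)


runs = [(17, 23), (13, 14), (14, 14), (10, 14)]       # M1..M4, from the text
print([round(100 * agresti_coull_lower(x, n)) for x, n in runs])   # [53, 66, 75, 45]
```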

DISCUSSION

Using fMRI and multivariate analytical methods, we mapped auditory cortical regions (CRs) in the macaque on the basis of their tonotopic organization and then measured BOLD responses to spatial auditory stimuli. We showed that the functional representation of azimuth in AC, as measured by the BOLD signal, is not organized topographically but is distributed, with a strong contralateral bias. We further demonstrated that the opponent pattern of positive and negative BOLD responses across the cerebral hemispheres depends on the presence of ITD cues. Taken together, our main findings support the existence of an opponent-channel mechanism (Stecker et al., 2005) based on contralateral inhibition (Grothe, 2003) for coding auditory space in primates.

ITD Cues Modulate the BOLD Response across Hemispheres

While it was originally thought that ITDs (the most salient spatial cues) were coded exclusively by a topographic arrangement of coincidence detectors in the auditory brainstem, as in the barn owl (Jeffress, 1948; Knudsen and Konishi, 1978), research in multiple mammalian species has revealed an additional mechanism (McAlpine et al., 2001), in which ITDs are coded by an opponent hemifield code based on neuronal inhibition (Brand et al., 2002; Pecka et al., 2008).

Given the profound role that inhibition plays in the coding of spatial cues in the auditory brainstem (Grothe, 2003), we investigated the effect of removing ITD cues from the original spatial sounds on BOLD responses in AC. The lack of ipsilateral suppression caused by the removal of ITD cues particularly affected the right-hemisphere response necessary for a contralateral representation. Spatial measures and laterality indices further indicated that contralateral tuning was lost after removal of ITD cues (Figure 6). Thus, removing ITD cues from the original spatial sounds facilitated the response of the right hemisphere to ipsilateral sounds, generating an overall net activation for both ipsilateral and contralateral sounds and consequently no shift in hemifield tuning around the midline. The lack of contralaterality was more pronounced in low-frequency than in high-frequency regions, suggesting a similar hemifield-tuned representation for ILD cues driven by high-frequency regions (Magezi and Krumbholz, 2010). Overall, our findings indicate that ITD cues are necessary to preserve the abrupt shift in hemifield representations across auditory cortices and that ILD cues might provide additional contralateral tuning information for spatial localization in the high-frequency range.

Response Tuning: Effects of Anesthesia, Attention, and Stimulus History

Functional analyses of the positive BOLD response in both anesthetized and awake monkeys showed maximal amplitude and spatial spread for contralateral sectors, in agreement with previous lesion (Heffner and Masterton, 1975; Jenkins and Masterton, 1982; Nodal et al., 2012), single-unit (Middlebrooks et al., 1994; Tian et al., 2001; Miller and Recanzone, 2009; Stecker et al., 2005; Werner-Reiss and Groh, 2008; Woods et al., 2006), and optical imaging data (Nelken et al., 2008). Although previous single-unit studies in mammals reported contralateral tuning centered around ±90°, our spatial tuning measures derived from the BOLD responses show a rearward shift in contralateral tuning (e.g., ±90° to ±120°). While LI shifts toward more rearward sound positions could potentially reflect the expectancy of subsequent sound sources (Stange et al., 2013), they are consistent with previous population measures of neuronal tuning in awake monkey AC (Woods et al., 2006). Interestingly, shifts in amplitude tuning around the frontal midline were much more pronounced than around the rear midline sectors, indicating sharp slope tuning in frontal space relative to rear sound positions and suggesting a potentially different mechanism for coding backward space.

In monkeys, single-unit studies have found neurons in posterior regions (particularly area CL) with significantly sharper tuning to spatial position (Kuśmierek and Rauschecker, 2014; Miller and Recanzone, 2009; Tian et al., 2001; Woods et al., 2006). However, we found only very small differences in the spatial tuning curves between CRs. This could reflect the averaging of activity across large neuronal populations inherent to the BOLD signal; it is thus conceivable that sharply tuned neurons can be detected only at the single-unit level. Sharply tuned neurons may also be more common in frontal auditory space, where behavioral spatial resolution is highest in most species and where most single-unit recordings have taken place. One important caveat is that our experimental design did not include an auditory spatial attention task, which has been shown to sharpen cortical responses after learning (Lee and Middlebrooks, 2011). While biases in spatial attention can be detected from the average gaze shift in visual paradigms (Caspari et al., 2015), our auditory stimuli included sound sources beyond the visual field, limiting our ability to reliably infer gaze shifts between frontal and backward space. In addition, part of our data was acquired under general anesthesia combined with eye-muscle paralysis. While anesthesia could potentially hinder the cortical mechanisms involved in coding auditory space, and paralytics could obscure eye-movement modulations in auditory cortex (Werner-Reiss et al., 2003), this approach allowed us to image the bottom-up driven response in cortex, presumably without top-down attention effects. Given that fMRI indirectly measures the pooled activity of cortical neurons and that responses were similar in the awake and anesthetized conditions, we believe that eye movements and attention were not a source of response bias in our data from awake animals.

Contralateral and Asymmetrical Bias: Implications for Human and Monkey Neuroimaging Studies

While single-unit studies consistently report a contralateral bias in the firing rate of cortical neurons (Tian et al., 2001; Miller and Recanzone, 2009; Stecker and Middlebrooks, 2003; Werner-Reiss and Groh, 2008; Woods et al., 2006), neuroimaging studies in humans have obtained mixed results with respect to the degree of contralaterality (Krumbholz et al., 2007; Werner-Reiss and Groh, 2008; Zatorre et al., 2002). Here we found a robust contralateral bias in the BOLD contrast between equidistant hemifield sectors in both anesthetized and awake monkeys. This suggests that the lack of contralaterality in some previous human neuroimaging studies might be due to differences in sound stimulation, i.e., sounds relying on ITD (Krumbholz et al., 2007) or ILD cues alone, rather than to an inherent lack of functional sensitivity in fMRI (Werner-Reiss and Groh, 2008). Furthermore, our stimulation design used individualized (in-ear) binaural sound recordings, and the bias we obtained in our contralaterality measures is in accordance with human neuroimaging studies using individualized spatial sounds (Derey et al., 2016; Młynarski, 2015; Palomäki et al., 2005; Salminen et al., 2009).

One interesting observation relates to laterality indices for right-backward sounds (e.g., +150° to +180°), which were asymmetrically shifted to the right hemisphere in three of the animals. These results could be due to greater spectral and motion sensitivity in the right hemisphere (Zatorre and Belin, 2001), especially for backward space. In humans, the right hemisphere is generally more involved in spatial auditory processing (Baumgart et al., 1999; Krumbholz et al., 2007) and motion detection (Warren et al., 2002; Griffiths et al., 1996). Furthermore, lesions of the right hemisphere in humans can result in spatial hemineglect (Bisiach et al., 1984). In monkeys, previous neuroimaging work has focused on hemispheric biases for vocal sounds (Gil-da-Costa et al., 2006; Ortiz-Rios et al., 2015; Petkov et al., 2008; Poremba et al., 2004); however, until now, no fMRI study in monkeys had used spatial sounds. In the present study, we found a dynamic BOLD modulation in both hemispheres for spatial (non-vocal) broadband noise. Interestingly, the right-hemisphere response was strongly modulated across hemifield sectors, particularly in the pST region, as shown by the small activation surrounded by deactivation in posterior and anterior regions of AC (Figures 2A and 2B). These small ipsilateral patches could correspond to EE (excitatory-excitatory) binaural regions (Imig and Brugge, 1978; Reser et al., 2000) receiving callosal input (Hackett et al., 1998; Pandya and Rosene, 1993), while the negative BOLD responses (NBRs) could be due to subcortical inhibition (Grothe, 2003) or cortico-cortical lateral inhibition (Shmuel et al., 2006) from EE cells in low-frequency, ITD-sensitive regions (Brugge and Merzenich, 1973). In humans, the ability to localize sounds based on ITD cues alone has been found to depend on an intact right hemisphere (Bisiach et al., 1984; Spierer et al., 2009).
Our results are in accordance with the role of ITD cues in the right hemisphere of humans and support these findings by showing that the pST region could segregate response patterns in an orderly manner, similar to a hemifield rate code (Salminen et al., 2009; Werner-Reiss and Groh, 2008). Our dissimilarity analyses also support the notion of a posterior region particularly sensitive to spatial sounds (Rauschecker and Tian, 2000; Tian et al., 2001) and coincide with previous single-unit and behavioral studies in cats (Stecker et al., 2005; Lomber and Malhotra, 2008) and single-unit studies in monkeys (Kuśmierek and Rauschecker, 2014; Miller and Recanzone, 2009; Tian et al., 2001; Woods et al., 2006) showing that posterior regions carry more spatial information than primary cortical regions, in agreement with a posterior-dorsal auditory “where” pathway (Rauschecker and Tian, 2000; Romanski et al., 1999).

Conclusion

Taken together, our results reconcile seemingly contradictory views of auditory space coding by showing that the representation of space closely follows a hemifield code (Salminen et al., 2009; Stecker et al., 2005; Werner-Reiss and Groh, 2008) and that this representation depends on the availability of ITD cues. Moreover, our data suggest that the cortical activation pattern across each AC, resulting from the hemifield-tuned response, generates the right-posterior dorsal sensitivity for space commonly seen in spatial studies (Baumgart et al., 1999; Krumbholz et al., 2007; Rauschecker and Tian, 2000; Tian et al., 2001) of primate auditory cortex.

STAR★METHODS

Detailed methods are provided in the online version of this paper and include the following:

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Deposited Data | | |
| Auditory-Space/data | Echo Planar Imaging datasets, https://dx.doi.org/10.6084/m9.figshare.4508576.v4 | https://figshare.com/articles/Auditory_Space_data/4508576 |
| Software and Algorithms | | |
| AFNI/SUMA | Cox, 1996 | RRID: SCR_005927 |
| FreeSurfer | Dale et al., 1999 | RRID: SCR_001847 |
| ParaVision 4 | Bruker, BioSpin GmbH, Ettlingen, Germany | RRID: SCR_001964 |
| (MATLAB) Circular Statistics Toolbox | Berens, 2009 | https://philippberens.wordpress.com/code/circstats/ |
| (MATLAB) LI-toolbox | Wilke and Lidzba, 2007 | http://www.medizin.uni-tuebingen.de/kinder/en/research/neuroimaging/software/ |
| (MATLAB) toolbox for RSA | Nili et al., 2014 | http://www.mrc-cbu.cam.ac.uk/methods-and-resources/toolboxes/ |
| Custom scripts | This paper | https://github.com/ortizriosm/Auditory-space |

CONTACT FOR REAGENT AND RESOURCE SHARING

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Dr. Michael Ortiz Rios (michael.ortiz-rios@newcastle.ac.uk).

EXPERIMENTAL MODEL AND SUBJECT DETAILS

All neuroimaging data were obtained from four rhesus monkeys (Macaca mulatta), two males and two females, housed in groups of two or more. Experiments under anesthesia were performed in the two male monkeys (M1 and M2, 6–7 years of age, weighing 6–8 kg), while awake-fMRI experiments were performed in the two female monkeys (M3 and M4, 7–8 years of age, weighing 8 kg each). Monkeys designated for awake experiments were implanted with a head holder under general anesthesia with isoflurane (1%–2%) following pre-anesthetic medication with glycopyrrolate (0.01 mg/kg i.m.) and ketamine (13 mg/kg). All surgical procedures under anesthesia were approved by the local authorities (Regierungspräsidium Tübingen) and were carried out in accordance with the German law for the protection of animals and the guidelines of the European Community (EUVD 86/609/EEC) for the care and use of laboratory animals.

METHOD DETAILS

Auditory stimuli

Stimuli for tonotopic mapping consisted of 250-ms pure tones (PT) and 1/3-octave and 1-octave band-pass noise bursts with center frequencies spaced one octave apart from 0.125 to 16 kHz. These sounds were further filtered with an inverted macaque audiogram to account for differences in ear sensitivity across frequencies. The stimuli were equalized to produce equal maximum root mean square (RMS) amplitude (using a 200-ms sliding window) in filtered recordings. During experiments, all stimuli were played using a QNX real-time operating system (QNX Software Systems, Ottawa, Canada), amplified (Yamaha AX-496), and delivered at a calibrated RMS amplitude of ~80 dB SPL through electrostatic in-ear headphones (SRS-005S + SRM-252S, STAX, Ltd., Japan) attached to a customized silicone earmold.
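
The stimulus bookkeeping above can be made concrete with a short Python sketch. It is an illustration only (the stimuli themselves were produced with the authors' own MATLAB/QNX setup): it lists the octave-spaced center frequencies from 0.125 to 16 kHz, computes band edges for 1/3- and 1-octave noise, and scales stimuli to equal maximum RMS over a 200-ms sliding window. The 48-kHz sampling rate, the target RMS, and all function names are assumptions, and the inverted-audiogram filter is not modeled.

```python
import numpy as np

FS = 48_000  # sampling rate in Hz (assumed for the tonotopy stimuli)


def octave_centers(lo_hz=125.0, hi_hz=16_000.0):
    """Center frequencies spaced one octave apart from lo_hz to hi_hz inclusive."""
    n = int(round(np.log2(hi_hz / lo_hz))) + 1
    return lo_hz * 2.0 ** np.arange(n)


def band_edges(fc_hz, width_octaves):
    """Lower/upper edges of a band `width_octaves` wide, geometrically centered on fc_hz."""
    half = 2.0 ** (width_octaves / 2.0)
    return fc_hz / half, fc_hz * half


def max_sliding_rms(x, fs=FS, win_s=0.2):
    """Largest RMS value over all rectangular windows of win_s seconds."""
    n = int(round(win_s * fs))
    csum = np.concatenate(([0.0], np.cumsum(np.asarray(x, float) ** 2)))
    mean_power = (csum[n:] - csum[:-n]) / n  # mean power at each window position
    return float(np.sqrt(mean_power.max()))


def equalize(stimuli, target_rms=0.1, fs=FS):
    """Scale each stimulus so that its maximum sliding-window RMS equals target_rms."""
    return [s * (target_rms / max_sliding_rms(s, fs)) for s in stimuli]
```

For example, `equalize([tone, noise])` returns copies of both signals whose loudest 200-ms stretch has the same RMS; calibration to ~80 dB SPL would still happen at the playback stage.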

Binaural sound recordings

Monkeys were anesthetized (ketamine 0.2 mL + medetomidine 0.4 mL) and placed in an MRI chair inside a sound-insulated acoustic chamber (Illtec, Illbruck Acoustic GmbH, Germany). In-ear miniature microphones (Danish Pro Audio 4060) were placed at the entrance of the animal's ear canals. A broadband noise signal (0.125–16 kHz, 100 ms in duration) was generated in MATLAB (MATLAB 7.10) at a sampling rate and resolution of 48 kHz/16-bit and played through a loudspeaker (Apple Pro M653170, 2.2 cm radius) mounted on a circular frame around the MRI chair. The microphone signals were pre-amplified (Saffire Pro 40, Focusrite) and recorded using Adobe Audition CS6 (Adobe, San Jose, CA).

The noise bursts were played every 5° (72 horizontal angle steps) from −180° to +180° at 0° elevation (the interaural plane). A full horizontal plane was recorded at each of four distances (20, 30, 40, and 50 cm) from the head center, for a total of 72 recordings per distance. The signals measured ~82 dB SPL at 20 cm and ~70 dB SPL at 50 cm from the center of the monkey's head (Brüel and Kjær 2238 Mediator SPL meter with a 4188 microphone). The recorded sounds contained all individual spatial cues (ITDs, interaural level differences [ILDs], and spectral cues; Figures S3A and S3B).

Offline, the noise bursts recorded every 5° were concatenated to form 12 spatial sectors (Figure 2G). For example, for the 0° to 30° sector, the stimuli were concatenated from 0° at a distance of 50 cm to 15° at a distance of 20 cm in 5° steps to form a looming pattern, and from 15° at 20 cm to 30° at 50 cm to form a receding pattern (total duration = 1200 ms). The same pattern was applied inversely (30°/50 cm to 15°/20 and from 15°/20 to 30°/50). This pattern of shifting directionality (2400 ms) was repeated three times (total duration = 7200 ms). Such patterns were used to avoid adaptation of the BOLD responses (Dahmen et al., 2010), to control for directionality (e.g., toward versus away from the ear), and to introduce dynamic and amplitude modulation into the perception of horizontal positions. For the ITD manipulation, we calculated the interaural delay between the left and right microphone signals using cross-correlation and subtracted the computed lag from either the left or the right microphone signal.
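
A minimal sketch of the ITD manipulation described above, assuming equal-length left/right recordings stored as NumPy arrays: the lag that maximizes the interaural cross-correlation is searched within ±max_lag samples, and the lagging channel is advanced by that amount, with vacated samples zero-filled. The function names, the zero-filling, and the choice of max_lag are illustrative assumptions rather than the authors' scripts; level and spectral differences between the channels are left untouched.

```python
import numpy as np


def estimate_lag(left, right, max_lag):
    """Lag (samples) maximizing the cross-correlation of left with right.

    Positive values mean the left signal is delayed relative to the right.
    """
    left = np.asarray(left, float)
    right = np.asarray(right, float)
    # In "full" mode, index len(right) - 1 + k holds sum_n left[n + k] * right[n].
    xc = np.correlate(left, right, mode="full")
    zero = len(right) - 1
    window = xc[zero - max_lag: zero + max_lag + 1]
    return int(np.argmax(window)) - max_lag


def shift(x, k):
    """Delay x by k samples (advance if k < 0), zero-filling vacated samples."""
    y = np.zeros_like(x)
    if k > 0:
        y[k:] = x[:-k]
    elif k < 0:
        y[:k] = x[-k:]
    else:
        y[:] = x
    return y


def remove_itd(left, right, max_lag):
    """Advance whichever channel lags so that the broadband interaural delay is ~0."""
    left = np.asarray(left, float)
    right = np.asarray(right, float)
    lag = estimate_lag(left, right, max_lag)
    if lag > 0:        # left lags: advance the left channel
        left = shift(left, -lag)
    elif lag < 0:      # right lags: advance the right channel
        right = shift(right, lag)
    return left, right, lag
```

At 48 kHz one sample corresponds to about 20.8 µs, so max_lag should be chosen to span the animal's physiological ITD range.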

Behavioral training for awake-monkey fMRI

Monkeys assigned to awake-fMRI experiments (M3 and M4) were trained to sit still in an MRI-compatible primate chair placed inside an acoustically shielded box simulating the scanner environment. Inside the box, the animals were habituated to wearing the headphone equipment and to hearing simulated scanner noise presented through a loudspeaker. Eye movements were monitored using an infrared eye-tracking system (iView, SensoMotoric Instruments GmbH, Teltow, Germany). Typically, during training or scanning in darkness without any visual stimulation, the monkeys kept their eyes closed, resembling a state of light sleep.

Anesthesia for fMRI

Anesthesia procedures have been described elsewhere (Logothetis et al., 1999). In brief, anesthesia was induced with a cocktail of short-acting drugs (fentanyl at 3 μg/kg, thiopental at 5 mg/kg, and the muscle relaxant succinylcholine chloride at 3 mg/kg) after premedication with glycopyrrolate (0.01 mg/kg i.m.) and ketamine (15 mg/kg i.m.). Anesthesia was then maintained with remifentanil (0.5–2 μg/kg/min) and the muscle relaxant mivacurium chloride (5 mg/kg/h). Physiological parameters (heart rate, blood pressure, blood oxygenation, expiratory CO2, and temperature) were monitored and kept within the desired ranges with fluid supplements. Data acquisition started approximately 2 h after the start of sedation.

MRI data acquisition

Images for anesthetized experiments were acquired with a vertical 7T magnet running ParaVision 4 (Bruker, BioSpin GmbH, Ettlingen, Germany) and equipped with a 12-cm quadrature volume coil covering the whole head. All images were acquired using a sparse acquisition design with an in-plane resolution of 0.75 × 0.75 mm² and 2-mm axial slices aligned parallel to the superior temporal gyrus (STG) (Figures 1A and 1B).

For functional data, gradient-echo echo-planar images (GE-EPI) were acquired with four-segment shots (TR = 500 ms, TE = 18 ms, flip angle = 40°, FOV = 96 × 96 mm², matrix = 128 × 128 voxels, slices = 9–11, slice thickness = 2 mm, resolution = 0.75 × 0.75 × 2 mm³ voxel size) with slices aligned to the STG. Following the functional scans, two in-session volumes (FLASH and RARE) were acquired with the following parameters: for RARE (TE = 48 ms, TA = 24 ms, TR = 4000 ms, flip angle = 180°, FOV = 96 × 96 mm², matrix = 256 × 256 voxels, resolution = 0.375 × 0.375 mm², slice thickness = 2 mm, slices = 9–11); for FLASH (TE = 15 ms, TA = 24 ms, TR = 2000 ms, flip angle = 69°, FOV = 96 × 96 mm², matrix = 256 × 256 voxels, resolution = 0.375 × 0.375 mm², slice thickness = 2 mm, slices = 9–11). For tonotopic mapping experiments in anesthetized animals, 14 EPI runs (120 volumes each) were acquired for M1 in one experimental session (day) and 16 runs (120 volumes each) for M2 in one session, whereas for azimuth space experiments, 14 runs (150 time points each) were acquired for M1 over two sessions and 23 runs for M2 over two sessions.

Anatomical images were acquired with a high-resolution T1-weighted three-dimensional (3D) MDEFT pulse sequence (4 segments, TR = 15 ms, TE = 5.5 ms, flip angle = 16.7°, FOV = 112 × 112 × 60.2 mm³; matrix = 320 × 320 × 172 voxels, number of slices = 172, resolution = 0.35 × 0.35 × 0.35 mm³ voxel size). A total of 6 scans were acquired and averaged to form a high-resolution MDEFT volume.

Measurements for awake experiments were made on a vertical 4.7T magnet (Bruker, BioSpin GmbH, Ettlingen, Germany) equipped with a 12-cm quadrature volume coil. We acquired functional images with 360 volumes per run for each monkey (GE-EPI sequence: TR = 1000 ms, TE = 18 ms, flip angle = 53°, FOV = 96 × 96 mm², matrix = 96 × 96 voxels, number of slices = 18, slice thickness = 2 mm, resolution = 1.0 × 1.0 × 2 mm³). For azimuth space experiments in awake monkeys, 5 runs (360 volumes each) were acquired for M3 in one session. Because three volumes were acquired per sparse-sampling block, and the emitted power differed between successive volumes within a block but was comparable across blocks, we separated the first, second, and third volumes and created three separate time courses (120 volumes each) per run. Thus, a total of 15 runs per monkey were analyzed.
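
The volume separation step can be sketched as strided indexing, assuming the 360 volumes of a run are stored in acquisition order with exactly three consecutive volumes per sparse block (volume 0 being the first volume of the first block). The function name is hypothetical.

```python
import numpy as np


def split_sparse_volumes(run, vols_per_block=3):
    """Split a (n_volumes, ...) run into vols_per_block interleaved time courses.

    Time course i collects the i-th volume of every sparse block.
    """
    run = np.asarray(run)
    if run.shape[0] % vols_per_block:
        raise ValueError("number of volumes is not a multiple of vols_per_block")
    return [run[i::vols_per_block] for i in range(vols_per_block)]
```

Applied to 5 runs of 360 volumes, this yields 15 time courses of 120 volumes each.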

Anatomical images were acquired with an MDEFT sequence customized for awake experiments (TE = 15 ms, TA = 840 ms, TR = 2320 ms, flip angle = 20°, FOV = 96 × 96 × 80 mm³, matrix = 192 × 192 × 80 voxels, slice thickness = 1 mm, resolution = 0.5 × 0.5 × 1 mm³).

QUANTIFICATION AND STATISTICAL ANALYSIS

GLM analyses

fMRI data analyses were performed using AFNI (Cox, 1996), FreeSurfer (Dale et al., 1999), SUMA (Saad et al., 2004), and MATLAB (MathWorks). Preprocessing included slice-timing correction (3dTshift), spatial smoothing (3dmerge, 1.5-mm full width at half-maximum Gaussian kernel), and scaling of the time series at each voxel by its mean. Subsequent analyses were performed on both smoothed and unsmoothed data.

Smoothed data were used mainly for visualization, whereas most second-order analyses (e.g., dissimilarity analyses) were performed on unsmoothed data. For awake-fMRI data, motion correction (3dvolreg) was used to exclude from further analyses any volumes that contained shifts > 0.5 mm and/or rotations > 0.5°. Lastly, we used 3dDeconvolve for linear least-squares detrending to remove nonspecific variations (i.e., scanner drift) and for regression. Following preprocessing, data were submitted to general linear model (GLM) analyses, which included 12 spatial-condition-specific regressors and, for awake-fMRI data, six estimated motion regressors of no interest. For each stimulus condition (sectors 1 to 12), we estimated a regressor by convolving a one-parameter gamma-distribution estimate of the hemodynamic response function with the square-wave stimulus function. We then performed t tests contrasting each azimuth sector condition with baseline (“silent” trials). To identify auditory-responsive voxels, we first contrasted all sounds versus silent conditions and selected voxels for further analyses (see also Figure S1). Subsequently, contrasts between equidistant spatial sectors were computed to quantify hemifield biases.
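
To illustrate the regressor construction and contrast testing described above, the sketch below convolves a boxcar with a gamma-variate HRF, samples the result at each TR, fits the GLM by ordinary least squares, and returns the t statistic for a contrast vector. The HRF form and its parameters (p = 8.6, q = 0.547 s) are assumed to match AFNI's default GAM basis, the 0.1-s convolution grid is arbitrary, and plain OLS with white-noise errors is a simplification of what 3dDeconvolve does. All function names are hypothetical.

```python
import numpy as np


def gamma_hrf(t, p=8.6, q=0.547):
    """Gamma-variate HRF (t/(p*q))**p * exp(p - t/q); equals 1 at its peak t = p*q.

    p and q are assumed values (AFNI-style GAM defaults), not taken from the text.
    """
    t = np.asarray(t, float)
    h = np.zeros_like(t)
    pos = t > 0
    h[pos] = (t[pos] / (p * q)) ** p * np.exp(p - t[pos] / q)
    return h


def make_regressor(onsets_s, duration_s, n_vols, tr_s, dt=0.1):
    """Convolve a square-wave (boxcar) stimulus function with the HRF; sample at each TR."""
    t = np.arange(0.0, n_vols * tr_s, dt)
    box = np.zeros_like(t)
    for onset in onsets_s:
        box[(t >= onset) & (t < onset + duration_s)] = 1.0
    hrf = gamma_hrf(np.arange(0.0, 30.0, dt))
    conv = np.convolve(box, hrf)[: len(t)] * dt
    idx = np.round(np.arange(n_vols) * tr_s / dt).astype(int)
    return conv[idx]


def glm_contrast_t(y, X, c):
    """OLS betas for design X and the t statistic for contrast vector c."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = resid @ resid / dof
    se = np.sqrt(sigma2 * c @ np.linalg.pinv(X.T @ X) @ c)
    return beta, float(c @ beta / se)
```

With a design matrix whose columns are [sector A, sector B, constant, linear drift], contrasting two sectors amounts to the contrast vector `[1, -1, 0, 0]`, and a sector-versus-baseline test to `[1, 0, 0, 0]`.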

The average anatomical scans (n = 6) were spatially normalized (3dAllineate), the head and skull were removed (3dSkullStrip), and the extracted brains were corrected for intensity non-uniformities from the radiofrequency coil (3dUniformize). After intensity correction, the volumes were segmented into white and gray matter. Whole-brain surfaces were then rendered along with the extracted surfaces of the STG using FreeSurfer (Figure 1B). Finally, we illustrated the results on a semi-inflated cortical surface extracted with SUMA to facilitate visualization and identification of cortical regions and boundaries.

FMRI phase-mapping analyses

3dRetinoPhase scripts from AFNI were used for phase-mapping analyses. The coherence of the fMRI time series at the stimulus presentation cycle was used to measure the strength of the BOLD response amplitude in each voxel. Coherence measures the ratio of the amplitude at the fundamental frequency to the signal variance, ranging between 0 and 1 (Barton et al., 2012; Wandell et al., 2007). The measure of coherence is

$$\text{coherence} = \frac{A(f_0)}{\left(\sum_{f = f_0 - \Delta f}^{f_0 + \Delta f} A(f)^2\right)^{1/2}}$$

where f0 is the stimulus frequency, A(f0) the amplitude of the signal at that frequency, A(f) the amplitude of the harmonic term at voxel temporal frequency f, and Δf the bandwidth of frequencies (in cycles/scan) around the fundamental frequency f0. For all tonotopy stimuli, f0 corresponds to twelve cycles (12/1200 s = 0.01 Hz), and Δf corresponds to the frequencies around the fundamental, excluding the second and third harmonics (see Figure 2A for an example of voxel harmonics). For spatial mapping, f0 corresponds to twelve cycles (12/1800 s ≈ 0.0067 Hz). A coherence threshold of 0.3 was applied to each voxel. The phase of the response at f0 encodes the sound frequency (or, in the spatial domain, azimuth in degrees) (see Figure 1E for an example of three voxels in A1). Phase peaks were plotted across cortical space for qualitative comparison between sound-frequency and space maps (see Figure S3). Volumes acquired during silent periods were excluded from this analysis.
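
The coherence and phase computation can be sketched with an FFT, assuming a time series containing an integer number of stimulus cycles (n_cycles, e.g. 12) that is mean-removed before the transform. The band over which amplitudes are summed is given in frequency bins (cycles/scan); when `half_band` is None every non-DC bin is used. Excluding the second and third harmonics follows the text, but the exact band used by 3dRetinoPhase may differ, and all names are hypothetical.

```python
import numpy as np


def coherence_and_phase(ts, n_cycles, half_band=None, exclude_harmonics=(2, 3)):
    """Coherence and phase of a voxel time series at the stimulus frequency.

    n_cycles: stimulus cycles per run (f0 in cycles/scan).
    half_band: half-width of the band in bins; None uses all non-DC bins.
    """
    ts = np.asarray(ts, float)
    spectrum = np.fft.rfft(ts - ts.mean())
    amp = np.abs(spectrum)
    if half_band is None:
        band = np.arange(1, len(amp))
    else:
        band = np.arange(max(1, n_cycles - half_band),
                         min(len(amp), n_cycles + half_band + 1))
    # Drop the listed harmonics of f0 from the denominator.
    band = band[~np.isin(band, [h * n_cycles for h in exclude_harmonics])]
    coherence = amp[n_cycles] / np.sqrt(np.sum(amp[band] ** 2))
    return float(coherence), float(np.angle(spectrum[n_cycles]))
```

A voxel would then be kept if its coherence reaches 0.3, and its phase (in radians, relative to the start of the run) mapped onto sound frequency or azimuth; that mapping, which also depends on the hemodynamic delay, is not modeled here.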

Laterality index

Significant activations (q FDR < 0.05) from the two hemispheres were used to calculate a laterality index (LI). Because LIs depend on the statistical threshold, we computed LI curves to obtain a more comprehensive estimate over a whole range of thresholds and to ensure that lateralization effects were not driven by small numbers of highly activated voxels. The LI curves were based on the t-values obtained for each condition and were calculated using the LI-toolbox (Wilke and Lidzba, 2007) with the following options: +5 mm mid-sagittal exclusive mask, clustering with a minimum of 5 voxels, and default bootstrapping parameters (min/max sample size 5/10,000 and bootstrapping set to 25% of the data). The bootstrapping method computes LIs 10,000 times at thresholds ranging from 0 to the maximum t-value for each condition. For each threshold, a cut-off mean value is obtained, from which a weighted mean index (LIwm) is calculated (Wilke and Lidzba, 2007). This analysis returns a single value between −1 and +1, with negative values indicating a right-hemisphere bias and positive values a left-hemisphere bias. Indices between −0.25 and +0.25 were taken to indicate no lateralization bias, and indices above +0.5 or below −0.5 were designated strongly lateralized.
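
The threshold-dependence argument can be illustrated with a simplified LI curve: LI = (L − R)/(L + R) is computed from suprathreshold voxel counts at a series of thresholds, and the values are averaged with each threshold used as its weight, so higher thresholds count more. This is a sketch only; it omits the LI-toolbox's bootstrapping, mid-sagittal masking, and clustering, and the toolbox's exact weighting may differ. The labels "left"/"right" for |LI| between 0.25 and 0.5 are hypothetical, since the text names only the outer categories.

```python
import numpy as np


def laterality_index(n_left, n_right):
    """(L - R) / (L + R): +1 means fully left-lateralized, -1 fully right-lateralized."""
    total = n_left + n_right
    return float("nan") if total == 0 else (n_left - n_right) / total


def threshold_weighted_li(t_left, t_right, n_thresholds=20):
    """Average LI over thresholds from 0 up to (not including) the max t,
    weighting each threshold's LI by the threshold value itself."""
    t_left = np.asarray(t_left, float)
    t_right = np.asarray(t_right, float)
    t_max = max(t_left.max(), t_right.max())
    thresholds = np.linspace(0.0, t_max, n_thresholds, endpoint=False)
    lis = [laterality_index(np.sum(t_left > thr), np.sum(t_right > thr))
           for thr in thresholds]
    return float(np.average(lis, weights=thresholds))


def classify_li(li):
    """Categories from the text (|LI| <= 0.25 none, |LI| > 0.5 strong); middle labels hypothetical."""
    if -0.25 <= li <= 0.25:
        return "no lateralization"
    if abs(li) > 0.5:
        return "strongly left" if li > 0 else "strongly right"
    return "left" if li > 0 else "right"
```

Because every threshold lies below the maximum t-value, at least one voxel always survives, so each per-threshold LI is defined.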

Spatial tuning curves

Circular statistics and spatial tuning curves were computed using the CircStat toolbox for MATLAB (Berens, 2009). The spatial spread of the BOLD response to each azimuth sector was used to calculate spatial tuning curves: for each CR, the percentage of significantly active voxels (q FDR < 0.05), either positive or negative, was computed per azimuth sector relative to the total number of voxels in that CR. Descriptive statistics (mean, resultant vector length, variance, standard deviation, and confidence intervals; see Table S1) were calculated using the functions circ_mean, circ_r, circ_var, stats, and circ_confmean, respectively. A Rayleigh test (circ_rtest) was applied to all circular data to test whether they were uniformly distributed around the circle or had a common mean direction. All deviations from circular uniformity were highly significant (Rayleigh test, p < 0.001) for all CRs, so the null hypothesis of uniformity was rejected in favor of a non-uniform distribution.
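
A Python counterpart of the main CircStat quantities named above (circular mean, resultant vector length, circular variance, and the Rayleigh test), assuming angles in radians and optional non-negative weights such as the percentage of active voxels per sector. The p-value uses Zar's approximation with n taken as the sum of the weights, which is intended to mirror circ_rtest; the angular deviation sqrt(2(1 − r)) is one of two common standard-deviation definitions. Function names and the sector centers in the test are hypothetical.

```python
import numpy as np


def circ_summary(angles_rad, weights=None):
    """Weighted circular mean, resultant length r, circular variance 1 - r,
    and angular deviation sqrt(2 * (1 - r))."""
    a = np.asarray(angles_rad, float)
    w = np.ones_like(a) if weights is None else np.asarray(weights, float)
    resultant = np.sum(w * np.exp(1j * a))
    r = np.abs(resultant) / np.sum(w)
    return (float(np.angle(resultant)), float(r), float(1 - r),
            float(np.sqrt(2 * (1 - r))))


def rayleigh_test(angles_rad, weights=None):
    """Rayleigh test for non-uniformity (Zar's p-value approximation)."""
    a = np.asarray(angles_rad, float)
    w = np.ones_like(a) if weights is None else np.asarray(weights, float)
    n = np.sum(w)
    big_r = np.abs(np.sum(w * np.exp(1j * a)))  # R = n * r
    z = big_r ** 2 / n
    p = np.exp(np.sqrt(1 + 4 * n + 4 * (n ** 2 - big_r ** 2)) - (1 + 2 * n))
    return float(min(p, 1.0)), float(z)
```

For a tuning curve, the angles would be the sector centers and the weights the per-sector percentages of active voxels.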

FMRI dissimilarity analyses

Representational similarity analyses (RSA) were performed using the MATLAB toolbox for RSA (Nili et al., 2014). The beta coefficients (b) obtained from the GLM fit for each stimulus condition (n = 12) were subjected to pairwise Pearson's correlation (R), and the distances (1 − R) between spatial sectors were arranged into a 12 × 12 representational dissimilarity matrix (RDM) (Figure 7). This analysis was repeated for each CR, AC, and the hemifield model, providing a total of 11 RDMs (Figures S6A and S6B). For further analyses, we averaged the RDMs across sessions and monkeys, resulting in one RDM for each CR or hemisphere and model. To visualize the geometry of the responses without assuming any categorical structure, we used multidimensional scaling (MDS). MDS arranges the sound-source positions in two dimensions such that the distances between them reflect the dissimilarities between the response patterns they elicited. Similarly, hierarchical clustering was used to visualize the subdivisions in response patterns; unlike MDS, however, this method assumes the existence of some structure, though not a particular arrangement.
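
The RDM and MDS steps can be sketched as follows, assuming a (12 × n_voxels) array of beta estimates for one CR. Classical (Torgerson) MDS is used here for simplicity; the RSA toolbox may apply a different MDS variant, and because 1 − r is not a Euclidean distance, the two-dimensional embedding is only approximate. Function names are hypothetical.

```python
import numpy as np


def rdm(betas):
    """Representational dissimilarity matrix: 1 - Pearson r between condition patterns.

    betas: array (n_conditions, n_voxels), e.g. 12 sectors x voxels of one CR.
    """
    return 1.0 - np.corrcoef(np.asarray(betas, float))


def classical_mds(d, n_dims=2):
    """Classical (Torgerson) MDS: coordinates whose Euclidean distances approximate d."""
    d = np.asarray(d, float)
    n = d.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    b = -0.5 * centering @ (d ** 2) @ centering   # double-centered squared distances
    evals, evecs = np.linalg.eigh(b)
    top = np.argsort(evals)[::-1][:n_dims]        # largest eigenvalues first
    return evecs[:, top] * np.sqrt(np.clip(evals[top], 0.0, None))
```

`classical_mds(rdm(betas))` then yields one 2-D point per sector, which can be plotted with the sector labels.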

For the hemifield code RDM, we used the ITD delay functions for pairwise correlations (Figure S6C) and linearly combined noisy estimates of the ITD RDM with a categorical-model RDM (Figure S6D). We then measured the relationships between the matrices by calculating the dissimilarity distance (1 − Spearman's R), obtaining a second-order dissimilarity matrix (Figure S6E). We used Spearman's correlation coefficient so as not to assume a linear match between the RDMs from CRs and the hemifield model (Kriegeskorte et al., 2008). MDS was then performed on the second-order RDM to visualize the similarity distances between cortical representations and the hemifield model (Figure 7E).
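
The second-order comparison can be sketched as Spearman correlations between the off-diagonal upper triangles of the RDMs, with tied dissimilarities (common in a categorical model) given average ranks. The categorical hemifield RDM (0 within a hemifield, 1 across) and all function names are illustrative assumptions; the noisy linear combination with the ITD-based RDM is not reproduced.

```python
import numpy as np


def upper_triangle(m):
    """Off-diagonal upper-triangle entries of a square matrix, as a vector."""
    m = np.asarray(m, float)
    return m[np.triu_indices_from(m, k=1)]


def average_ranks(x):
    """Ranks 1..n, with tied values sharing their average rank."""
    x = np.asarray(x, float)
    ranks = np.empty(len(x))
    ranks[np.argsort(x, kind="mergesort")] = np.arange(1, len(x) + 1)
    for value in np.unique(x):
        tied = x == value
        ranks[tied] = ranks[tied].mean()
    return ranks


def spearman(a, b):
    """Spearman rho as the Pearson correlation of average ranks."""
    return float(np.corrcoef(average_ranks(a), average_ranks(b))[0, 1])


def second_order_rdm(rdms):
    """Pairwise 1 - Spearman rho between the upper triangles of several RDMs."""
    vecs = [upper_triangle(m) for m in rdms]
    k = len(vecs)
    out = np.zeros((k, k))
    for i in range(k):
        for j in range(i + 1, k):
            out[i, j] = out[j, i] = 1.0 - spearman(vecs[i], vecs[j])
    return out


def categorical_hemifield_rdm(labels):
    """0 for conditions in the same hemifield, 1 otherwise (labels e.g. 'L'/'R')."""
    labels = np.asarray(labels)
    return (labels[:, None] != labels[None, :]).astype(float)
```

Because only rank order matters, any monotonic rescaling of an RDM leaves its second-order distance to other RDMs unchanged.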

DATA AND SOFTWARE AVAILABILITY

The accession number for the imaging data reported in this paper is http://dx.doi.org/10.6084/m9.figshare.4508576. Custom scripts have been deposited at https://github.com/ortizriosm/Auditory-space.

ADDITIONAL RESOURCES

Additional information about brain research on non-human primates can be found at http://hirnforschung.kyb.mpg.de/en/homepage.html.

Supplementary Material

Highlights.

Auditory cortex lacks a topographical representation of space

Each cortical field of the same hemisphere is tuned to the contralateral hemifield

Hemifield tuning depends on the availability of interaural delay cues

Posterior-dorsal sensitivity emerges from hemifield tuning

Acknowledgments

This work was supported by the Max Planck Society and by a PIRE Grant from the National Science Foundation (OISE-0730255 to Josef P. Rauschecker). We would like to thank Xin Yu for extensive discussion on the nature of BOLD signals. We would also like to thank Thomas Steudel for help during anesthetized experiments and Mirko Lindig for animal handling and anesthesia. We also thank Vishal Kapoor and Michael C. Schmid for comments on previous versions of the manuscript.

Footnotes

SUPPLEMENTAL INFORMATION

Supplemental Information includes seven figures, one table, and neuroimaging files and can be found with this article online at http://dx.doi.org/10.1016/j.neuron.2017.01.013.

AUTHOR CONTRIBUTIONS

Conceptualization, M.O.R., N.K.L., and J.P.R.; Methodology, M.O.R., F.A.C.A., G.A.K., P.K., and D.Z.B.; Investigation, M.O.R.; Formal Analysis, M.O.R.; Writing – Original Draft, M.O.R.; Writing – Review & Editing, M.O.R., F.A.C.A., G.A.K., P.K., M.H.M., N.K.L., and J.P.R.; Funding Acquisition, N.K.L. and J.P.R.; Resources, N.K.L.; Supervision, J.P.R., G.A.K., M.H.M., and N.K.L.

References

- Barton B, Venezia JH, Saberi K, Hickok G, Brewer AA. Orthogonal acoustic dimensions define auditory field maps in human cortex. Proc Natl Acad Sci USA. 2012;109:20738–20743. doi: 10.1073/pnas.1213381109.

- Baumgart F, Gaschler-Markefski B, Woldorff MG, Heinze HJ, Scheich H. A movement-sensitive area in auditory cortex. Nature. 1999;400:724–726. doi: 10.1038/23390.

- Berens P. CircStat: A MATLAB toolbox for circular statistics. J Stat Softw. 2009;31(10). doi: 10.18637/jss.v031.i10.

- Bisiach E, Cornacchia L, Sterzi R, Vallar G. Disorders of perceived auditory lateralization after lesions of the right hemisphere. Brain. 1984;107:37–52. doi: 10.1093/brain/107.1.37.

- Brand A, Behrend O, Marquardt T, McAlpine D, Grothe B. Precise inhibition is essential for microsecond interaural time difference coding. Nature. 2002;417:543–547. doi: 10.1038/417543a.

- Brugge JF, Merzenich MM. Responses of neurons in auditory cortex of the macaque monkey to monaural and binaural stimulation. J Neurophysiol. 1973;36:1138–1158. doi: 10.1152/jn.1973.36.6.1138.

- Caspari N, Janssens T, Mantini D, Vandenberghe R, Vanduffel W. Covert shifts of spatial attention in the macaque monkey. J Neurosci. 2015;35:7695–7714. doi: 10.1523/JNEUROSCI.4383-14.2015.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Dahmen JC, Keating P, Nodal FR, Schulz AL, King AJ. Adaptation to stimulus statistics in the perception and neural representation of auditory space. Neuron. 2010;66:937–948. doi: 10.1016/j.neuron.2010.05.018.

- Dale AM, Fischl B, Sereno MI. Cortical surface-based analysis. I. Segmentation and surface reconstruction. Neuroimage. 1999;9:179–194. doi: 10.1006/nimg.1998.0395.

- Derey K, Valente G, de Gelder B, Formisano E. Opponent Coding of Sound Location (Azimuth) in Planum Temporale is Robust to Sound-Level Variations. Cereb Cortex. 2016;26:450–464. doi: 10.1093/cercor/bhv269.

- Formisano E, Kim DS, Di Salle F, van de Moortele PF, Ugurbil K, Goebel R. Mirror-symmetric tonotopic maps in human primary auditory cortex. Neuron. 2003;40:859–869. doi: 10.1016/s0896-6273(03)00669-x.

- Gil-da-Costa R, Martin A, Lopes MA, Muñoz M, Fritz JB, Braun AR. Species-specific calls activate homologs of Broca’s and Wernicke’s areas in the macaque. Nat Neurosci. 2006;9:1064–1070. doi: 10.1038/nn1741.

- Griffiths TD, Rees A, Witton C, Shakir RA, Henning GB, Green GG. Evidence for a sound movement area in the human cerebral cortex. Nature. 1996;383:425–427. doi: 10.1038/383425a0.

- Groh JM, Kelly KA, Underhill AM. A monotonic code for sound azimuth in primate inferior colliculus. J Cogn Neurosci. 2003;15:1217–1231. doi: 10.1162/089892903322598166.

- Grothe B. New roles for synaptic inhibition in sound localization. Nat Rev Neurosci. 2003;4:540–550. doi: 10.1038/nrn1136.

- Hackett TA, Stepniewska I, Kaas JH. Subdivisions of auditory cortex and ipsilateral cortical connections of the parabelt auditory cortex in macaque monkeys. J Comp Neurol. 1998;394:475–495. doi: 10.1002/(sici)1096-9861(19980518)394:4<475::aid-cne6>3.0.co;2-z.

- Heffner H, Masterton B. Contribution of auditory cortex to sound localization in the monkey (Macaca mulatta). J Neurophysiol. 1975;38:1340–1358. doi: 10.1152/jn.1975.38.6.1340.

- Imig TJ, Brugge JF. Sources and terminations of callosal axons related to binaural and frequency maps in primary auditory cortex of the cat. J Comp Neurol. 1978;182:637–660. doi: 10.1002/cne.901820406.

- Jeffress LA. A place theory of sound localization. J Comp Physiol Psychol. 1948;41:35–39. doi: 10.1037/h0061495.

- Jenkins WM, Masterton RB. Sound localization: effects of unilateral lesions in central auditory system. J Neurophysiol. 1982;47:987–1016. doi: 10.1152/jn.1982.47.6.987.

- Knudsen EI, Konishi M. A neural map of auditory space in the owl. Science. 1978;200:795–797. doi: 10.1126/science.644324.

- Kriegeskorte N, Mur M, Bandettini P. Representational similarity analysis - connecting the branches of systems neuroscience. Front Syst Neurosci. 2008;2:4. doi: 10.3389/neuro.06.004.2008.

- Krumbholz K, Hewson-Stoate N, Schönwiesner M. Cortical response to auditory motion suggests an asymmetry in the reliance on inter-hemispheric connections between the left and right auditory cortices. J Neurophysiol. 2007;97:1649–1655. doi: 10.1152/jn.00560.2006.

- Kuśmierek P, Rauschecker JP. Selectivity for space and time in early areas of the auditory dorsal stream in the rhesus monkey. J Neurophysiol. 2014;111:1671–1685. doi: 10.1152/jn.00436.2013.

- Lee CC, Middlebrooks JC. Auditory cortex spatial sensitivity sharpens during task performance. Nat Neurosci. 2011;14:108–114. doi: 10.1038/nn.2713.

- Logothetis NK, Guggenberger H, Peled S, Pauls J. Functional imaging of the monkey brain. Nat Neurosci. 1999;2:555–562. doi: 10.1038/9210.

- Lomber SG, Malhotra S. Double dissociation of ‘what’ and ‘where’ processing in auditory cortex. Nat Neurosci. 2008;11:609–616. doi: 10.1038/nn.2108.

- Magezi DA, Krumbholz K. Evidence for opponent-channel coding of interaural time differences in human auditory cortex. J Neurophysiol. 2010;104:1997–2007. doi: 10.1152/jn.00424.2009.

- Malhotra S, Hall AJ, Lomber SG. Cortical control of sound localization in the cat: unilateral cooling deactivation of 19 cerebral areas. J Neurophysiol. 2004;92:1625–1643. doi: 10.1152/jn.01205.2003.

- McAlpine D, Jiang D, Palmer AR. A neural code for low-frequency sound localization in mammals. Nat Neurosci. 2001;4:396–401. doi: 10.1038/86049.

- Middlebrooks JC, Clock AE, Xu L, Green DM. A panoramic code for sound location by cortical neurons. Science. 1994;264:842–844. doi: 10.1126/science.8171339.

- Miller LM, Recanzone GH. Populations of auditory cortical neurons can accurately encode acoustic space across stimulus intensity. Proc Natl Acad Sci USA. 2009;106:5931–5935. doi: 10.1073/pnas.0901023106.

- Młynarski W. The opponent channel population code of sound location is an efficient representation of natural binaural sounds. PLoS Comput Biol. 2015;11:e1004294. doi: 10.1371/journal.pcbi.1004294.

- Nelken I, Bizley JK, Nodal FR, Ahmed B, King AJ, Schnupp JWH. Responses of auditory cortex to complex stimuli: functional organization revealed using intrinsic optical signals. J Neurophysiol. 2008;99:1928–1941. doi: 10.1152/jn.00469.2007.

- Nili H, Wingfield C, Walther A, Su L, Marslen-Wilson W, Kriegeskorte N. A toolbox for representational similarity analysis. PLoS Comput Biol. 2014;10:e1003553. doi: 10.1371/journal.pcbi.1003553.

- Nodal FR, Bajo VM, King AJ. Plasticity of spatial hearing: behavioural effects of cortical inactivation. J Physiol. 2012;590:3965–3986. doi: 10.1113/jphysiol.2011.222828.

- Ortiz-Rios M, Kuśmierek P, DeWitt I, Archakov D, Azevedo FAC, Sams M, Jääskeläinen IP, Keliris GA, Rauschecker JP. Functional MRI of the vocalization-processing network in the macaque brain. Front Neurosci. 2015;9:113. doi: 10.3389/fnins.2015.00113.

- Palomäki KJ, Tiitinen H, Mäkinen V, May PJC, Alku P. Spatial processing in human auditory cortex: the effects of 3D, ITD, and ILD stimulation techniques. Brain Res Cogn Brain Res. 2005;24:364–379. doi: 10.1016/j.cogbrainres.2005.02.013.

- Pandya DN, Rosene DL. Laminar termination patterns of thalamic, callosal, and association afferents in the primary auditory area of the rhesus monkey. Exp Neurol. 1993;119:220–234. doi: 10.1006/exnr.1993.1024.

- Pecka M, Brand A, Behrend O, Grothe B. Interaural time difference processing in the mammalian medial superior olive: the role of glycinergic inhibition. J Neurosci. 2008;28:6914–6925. doi: 10.1523/JNEUROSCI.1660-08.2008.

- Petkov CI, Kayser C, Augath M, Logothetis NK. Functional imaging reveals numerous fields in the monkey auditory cortex. PLoS Biol. 2006;4:e215. doi: 10.1371/journal.pbio.0040215.

- Petkov CI, Kayser C, Steudel T, Whittingstall K, Augath M, Logothetis NK. A voice region in the monkey brain. Nat Neurosci. 2008;11:367–374. doi: 10.1038/nn2043.

- Poremba A, Malloy M, Saunders RC, Carson RE, Herscovitch P, Mishkin M. Species-specific calls evoke asymmetric activity in the monkey’s temporal poles. Nature. 2004;427:448–451. doi: 10.1038/nature02268.

- Rauschecker JP, Tian B. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci USA. 2000;97:11800–11806. doi: 10.1073/pnas.97.22.11800.

- Rauschecker JP, Tian B, Hauser M. Processing of complex sounds in the macaque nonprimary auditory cortex. Science. 1995;268:111–114. doi: 10.1126/science.7701330.

- Reser DH, Fishman YI, Arezzo JC, Steinschneider M. Binaural interactions in primary auditory cortex of the awake macaque. Cereb Cortex. 2000;10:574–584. doi: 10.1093/cercor/10.6.574.

- Romanski LM, Tian B, Fritz J, Mishkin M, Goldman-Rakic PS, Rauschecker JP. Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci. 1999;2:1131–1136. doi: 10.1038/16056.

- Saad ZS, Reynolds RC, Cox RJ, Argall B, Japee S. SUMA: an interface for surface-based intra- and inter-subject analysis with AFNI. Proc IEEE Int Symp Biomed Imaging (ISBI). 2004. doi: 10.1109/ISBI.2004.1398837.

- Saleem KS, Logothetis NK. A Combined MRI and Histology Atlas of the Rhesus Monkey Brain in Stereotaxic Coordinates: Horizontal, Coronal and Sagittal Series. 2nd ed. Elsevier/Academic Press; 2012.

- Salminen NH, May PJC, Alku P, Tiitinen H. A population rate code of auditory space in the human cortex. PLoS ONE. 2009;4:e7600. doi: 10.1371/journal.pone.0007600.

- Shmuel A, Augath M, Oeltermann A, Logothetis NK. Negative functional MRI response correlates with decreases in neuronal activity in monkey visual area V1. Nat Neurosci. 2006;9:569–577. doi: 10.1038/nn1675.

- Spierer L, Bellmann-Thiran A, Maeder P, Murray MM, Clarke S. Hemispheric competence for auditory spatial representation. Brain. 2009;132:1953–1966. doi: 10.1093/brain/awp127.

- Stange A, Myoga MH, Lingner A, Ford MC, Alexandrova O, Felmy F, Pecka M, Siveke I, Grothe B. Adaptation in sound localization: from GABA(B) receptor-mediated synaptic modulation to perception. Nat Neurosci. 2013;16:1840–1847. doi: 10.1038/nn.3548.

- Stecker GC, Middlebrooks JC. Distributed coding of sound locations in the auditory cortex. Biol Cybern. 2003;89:341–349. doi: 10.1007/s00422-003-0439-1.

- Stecker GC, Harrington IA, Middlebrooks JC. Location coding by opponent neural populations in the auditory cortex. PLoS Biol. 2005;3:e78. doi: 10.1371/journal.pbio.0030078.

- Tian B, Reser D, Durham A, Kustov A, Rauschecker JP. Functional specialization in rhesus monkey auditory cortex. Science. 2001;292:290–293. doi: 10.1126/science.1058911.

- Wandell BA, Dumoulin SO, Brewer AA. Visual field maps in human cortex. Neuron. 2007;56:366–383. doi: 10.1016/j.neuron.2007.10.012.

- Warren JD, Zielinski BA, Green GGR, Rauschecker JP, Griffiths TD. Perception of sound-source motion by the human brain. Neuron. 2002;34:139–148. doi: 10.1016/s0896-6273(02)00637-2.

- Werner-Reiss U, Groh JM. A rate code for sound azimuth in monkey auditory cortex: implications for human neuroimaging studies. J Neurosci. 2008;28:3747–3758. doi: 10.1523/JNEUROSCI.5044-07.2008.

- Werner-Reiss U, Kelly KA, Trause AS, Underhill AM, Groh JM. Eye position affects activity in primary auditory cortex of primates. Curr Biol. 2003;13:554–562. doi: 10.1016/s0960-9822(03)00168-4.

- Wilke M, Lidzba K. LI-tool: a new toolbox to assess lateralization in functional MR-data. J Neurosci Methods. 2007;163:128–136. doi: 10.1016/j.jneumeth.2007.01.026.

- Woods TM, Lopez SE, Long JH, Rahman JE, Recanzone GH. Effects of stimulus azimuth and intensity on the single-neuron activity in the auditory cortex of the alert macaque monkey. J Neurophysiol. 2006;96:3323–3337. doi: 10.1152/jn.00392.2006.

- Zatorre RJ, Belin P. Spectral and temporal processing in human auditory cortex. Cereb Cortex. 2001;11:946–953. doi: 10.1093/cercor/11.10.946.

- Zatorre RJ, Bouffard M, Ahad P, Belin P. Where is ‘where’ in the human auditory cortex? Nat Neurosci. 2002;5:905–909. doi: 10.1038/nn904.
