Abstract

Background

The Centers for Disease Control and Prevention (CDC) recommends one-time hepatitis C virus (HCV) antibody testing for “Birth Cohort” adults born during 1945–1965.

Objective

To examine the impact of an electronic health record (EHR)-embedded best practice alert (BPA) for HCV testing among Birth Cohort adults.

Design

A cluster-randomized trial conducted from April 29, 2013 to March 29, 2014.

Subjects and Setting

Ten community and hospital-based primary care practices. Participants were attending physicians and medical residents during 25,620 study-eligible visits.

Intervention

Physicians in all practices received a brief introduction to the CDC testing recommendations. At visits for eligible patients at intervention sites, physicians received a BPA through the EHR to order HCV testing or medical assistants were prompted to post a testing order for the physician. Physicians in control sites did not receive the BPA.

Main Outcomes

HCV testing; the incidence of HCV antibody positive tests was a secondary outcome.

Results

Testing rates were greater among Birth Cohort patients in intervention sites (20.2% vs. 1.8%, P < 0.0001) and the odds of testing were greater in intervention sites after controlling for imbalances of patient and visit characteristics between comparison groups [odds ratio (OR), 9.0; 95% confidence interval, 7.6–10.7]. The adjusted OR of identifying HCV antibody positive patients was also greater in intervention sites (OR, 2.1; 95% confidence interval, 1.3–11.2).

Conclusions

An EHR-embedded BPA markedly increased HCV testing among Birth Cohort patients, but the majority of eligible patients did not receive testing, indicating a need for more effective methods to promote uptake.

Keywords: hepatitis C screening, electronic health records, baby boomer birth cohort

BACKGROUND

In 2012, the United States Centers for Disease Control and Prevention (CDC) recommended one-time testing for hepatitis C virus (HCV) among individuals born during 1945–1965 (the baby boomer Birth Cohort), without ascertainment of risk of possible exposure to, or symptomatic indications of, infection.1 Shortly thereafter, the United States Preventive Services Task Force put forth a similar guideline, and effective January 1, 2014, testing baby boomers for HCV became public health law in New York State.2 With growing evidence to support birth-cohort testing3–6 and the advent of more effective HCV treatment options with fewer side effects,7 widespread testing has become imperative. Testing baby boomers could detect as many as 800,000 more cases of HCV than testing based solely on other risk factors, lead to treatment for up to 260,000 patients, and avert nearly 50,000 cases of hepatocellular carcinoma and 15,000 liver transplants.8,9

Baby boomers have been included in earlier studies of screening involving higher risk populations but their age group has not been specifically targeted for screening until recently,10,11 in a few studies limited to specialty and emergency department settings.12–14 Moreover, some methods used to engage patients in screening place considerable demand on personnel resources and may not be sustainable.12–14

Electronic health records (EHR) can increase HCV testing in settings that reach large numbers of patients, like primary care, by alerting clinicians to current guidelines and facilitating test ordering.15 EHR-based alerts and interventions have improved screening rates for colorectal cancer and osteoporosis, uptake of appropriate vaccinations,16,17 and testing for other viral infections among at-risk patients18–21 in primary care settings. On the basis of these observations, we sought to test the impact of EHR-based alerts on HCV testing among members of the 1945–1965 Birth Cohort.

METHODS

Study Settings

The study took place from April 29, 2013 through March 29, 2014 in adult primary care practices of the Mount Sinai Healthcare System. These were academically affiliated medical practices which included a hospital-based clinic and a hospital-based faculty practice, both located in the Upper East Side and East Harlem communities of New York City, and community-based faculty practices in Nassau County, Long Island, New York. The hospital clinic has 4 independently operating practices, employs 20 faculty physicians and is the outpatient training venue for 140 medical residents. It has approximately 55,000 visits each year. The hospital-based faculty practice consists of 12 full-time physicians who provide care during 35,000 patient visits annually. Mount Sinai’s North Shore (Nassau County) community-based faculty practice group consists of 4 practices with 12 primary care physicians and 3 nurse practitioners. They have approximately 62,000 visits annually. All practices used the Epic EHR system at the time of the study (Epic Systems, Madison, WI).

A research assistant approached all physicians, nurse practitioners, nurses, and medical assistants at all sites, in person or by email, described the study and requested their consent to receive the EHR-based alerts. The study was approved by the Institutional Review Board of the Icahn School of Medicine at Mount Sinai.

Study Design

The study was designed as a practice-level cluster randomized controlled trial, with clinical sites subdivided into 10 clusters defined by geography and functionality to decrease potential cross-contamination. The hospital-based clinic was divided by its 3 practice areas, the hospital-based faculty practice by its 2 separate practice floors, and the community-based faculty practice by its 5 independently operating practice areas. Providers in these sites consistently worked in the same physical area of their practice so the risk of contamination across clusters was low. Randomization of clusters was performed by an investigator at the CDC (A.Y.) and resulted in the following study arm assignments: in the hospital clinic there were 2 intervention clusters and 1 control; in the hospital-based faculty practice there was 1 intervention cluster and 1 control; and in the community-based faculty practice there were 3 intervention clusters and 2 controls. Study personnel were not blinded to cluster assignment.

An eligible study visit was defined as a scheduled visit with a consented clinician of a Birth Cohort patient who had no prior HCV antibody test, hepatitis C viral load result, or prior diagnosis of hepatitis C as documented in the EHR.
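The eligibility definition above can be read as a simple predicate over visit data. The following Python sketch is illustrative only: the `Visit` record and all of its field names are hypothetical and do not reflect the Epic data model or the queries used in the study.

```python
from dataclasses import dataclass

# Birth Cohort as defined in the text: born during 1945-1965, inclusive.
BIRTH_COHORT_YEARS = range(1945, 1966)


@dataclass
class Visit:
    # All field names are hypothetical, chosen for illustration.
    patient_birth_year: int
    scheduled: bool
    clinician_consented: bool
    prior_hcv_antibody_test: bool
    prior_hcv_viral_load: bool
    prior_hcv_diagnosis: bool


def is_eligible_visit(v: Visit) -> bool:
    """Return True if the visit matches the stated eligibility definition."""
    in_cohort = v.patient_birth_year in BIRTH_COHORT_YEARS
    no_prior_hcv_evidence = not (
        v.prior_hcv_antibody_test
        or v.prior_hcv_viral_load
        or v.prior_hcv_diagnosis
    )
    return (
        v.scheduled
        and v.clinician_consented
        and in_cohort
        and no_prior_hcv_evidence
    )
```

Each condition maps to one clause of the definition, so a visit fails eligibility as soon as any single clause is not met.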

Intervention Design and Implementation

The study intervention was a best practice alert (BPA) programmed in the EHR to appear in yellow highlight on the EHR-clinician interface during visits to inform clinicians of the patients’ eligibility for HCV testing and to facilitate testing by providing a testing order set. Before creating the alert, we conducted an informal focus group with attending physicians from the hospital-based practices to get their input on BPA design. The most consistent theme that emerged was efficiency, as exemplified by suggestions for prepopulated laboratory orders, minimal navigation through the chart (ie, few clicks of the mouse), and minimal disruption to work flow. These recommendations were integrated into the design of the BPA. The BPA was pilot tested with staff at each site and adjustments were made to ensure fit with local workflow.

The intervention consisted of 2 pathways. Pathway 1: if a medical assistant opened the chart for an eligible patient before the clinician did (eg, to enter the patient’s vital signs data), the medical assistant would encounter a BPA prompting him or her to order an HCV test. The order would appear as “pended” when the clinician opened the chart, at which time the clinician could choose to discuss testing with the patient, sign the order, or delete it. This pathway required just 1 click of the mouse by the clinician.

Pathway 2: if the clinician opened the chart before the medical assistant or if the medical assistant bypassed the alert presented through pathway 1, a different BPA appeared for the clinician. This BPA briefly outlined the Birth Cohort testing guidelines and suggested HCV testing for the patient. The alert also presented an order, known as a “smart set,” for HCV testing which the clinician could accept or bypass. An order pended by the medical assistant could not be placed unless the clinician ultimately signing the order associated it with the appropriate diagnosis (also pended through the BPA). This step ensured that no HCV tests were ordered inadvertently. Furthermore, the clinician could delete the pended test based on clinical judgment in the same manner that any order in the EHR could be removed. The HCV testing BPAs for both pathways would reappear at subsequent visits until HCV testing was ordered or the clinician selected the option to delay testing or to permanently exclude the patient from testing. Data on the reasons why physicians chose to exclude patients from testing were not included in the study data.
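The two pathways amount to a small decision rule evaluated each time a chart is opened. The sketch below is a simplified illustration under stated assumptions, not the Epic BPA configuration: the function name, the `Role` enum, the returned labels, and the single `suppressed` flag (standing in for an active delay or a permanent exclusion) are all hypothetical.

```python
from enum import Enum
from typing import Optional


class Role(Enum):
    MEDICAL_ASSISTANT = "medical_assistant"
    CLINICIAN = "clinician"


def alert_on_chart_open(
    role: Role,
    eligible: bool,
    order_pended: bool = False,
    order_signed: bool = False,
    suppressed: bool = False,
) -> Optional[str]:
    """Return a label for what the user sees, or None if nothing is shown.

    Labels are hypothetical names for the behaviors described in the text.
    """
    # No alert for ineligible patients, once testing has been ordered,
    # or while the clinician has delayed or excluded the patient.
    if not eligible or order_signed or suppressed:
        return None
    if role is Role.MEDICAL_ASSISTANT:
        # Pathway 1: prompt the medical assistant to pend an order.
        return None if order_pended else "PATHWAY_1_PEND_ORDER"
    # Clinician opens the chart.
    if order_pended:
        # Pended order awaits the clinician: sign, discuss, or delete.
        return "REVIEW_PENDED_ORDER"
    # Pathway 2: guideline summary plus the smart set order.
    return "PATHWAY_2_SMART_SET"
```

Because the rule is re-evaluated at every visit, an unsigned and unsuppressed eligible patient keeps triggering an alert, which mirrors the reappearance behavior described above.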

The clinical staff members of all practices were blinded to study assignment and all underwent the same standardized education about HCV infection, Birth Cohort testing recommendations, and HCV treatment referral resources within the Mount Sinai Healthcare System.

A research assistant on the study team was assigned to contact physicians if patients who tested positive for HCV antibody did not receive a confirmatory hepatitis C viral load test within 90 days of the positive test. This was not a feature of the intervention design.

Measures

The primary outcome was the rate of HCV antibody testing during eligible study visits in the intervention and control practices. HCV antibody testing was identified through automated reports from the EHR. The secondary outcome was HCV antibody positivity rates among tested individuals. Explanatory variables were also identified through the EHR and included patient sociodemographic and health status characteristics (age, sex, race and ethnicity, insurance type, quartile of median income from zip code data matched to census blocks, and comorbidities). Age was categorized for ease of interpretation. Clinicians were characterized as attending physicians or medical residents using administrative data.

We hypothesized that HCV testing would be less likely to occur during complex encounters or encounters that required more work of the clinician. To represent encounter complexity, we used level of service and number of diagnosis codes [International Classification of Diseases, Ninth Revision (ICD-9)] listed for the encounter. To represent provider workload, we measured counts of active BPAs for actions other than HCV testing and the number of orders placed during visits. Counts of blood test orders excluded orders placed for HCV antibody or viral load testing. Further, we separated blood testing from orders for other services (other testing, medications or supplies, procedures, referrals) on the assumption that clinicians might be more inclined to order HCV tests if they were already ordering blood work.

Statistical Analysis

The main outcomes were HCV testing and HCV antibody positivity. Visits were the unit of analysis. Visits that occurred after a patient had been tested for HCV were censored because the CDC recommendations call for one-time testing only. We used generalized estimating equations (GEE) to model the outcomes as well as to test differences in characteristics of visits between intervention and control sites. We chose GEE to account for the potential correlations arising from repeated visits by individual patients and clustering of patients by physician. All models used a binomial distribution, logit link function, and a robust covariance matrix. The models included the proxy measures of visit complexity and workload intensity and were adjusted for patient and site characteristics that differed significantly between the intervention and control sites (P < 0.05). We estimated a minimum sample size of 440 visits per cluster to detect an absolute difference in testing between intervention and control sites of 2.5% (eg, 8.5% vs. 6.0%)14 with a type I error of 5%, power of 80%, and intracluster correlation coefficient of 0.005.22
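For readers unfamiliar with cluster-trial sample size reasoning, the Python sketch below shows two standard building blocks: the unadjusted per-arm sample size for comparing two proportions (normal approximation, two-sided test) and the design effect 1 + (m − 1) × ICC that inflates it for clustering. It is a generic illustration, not a reconstruction of the calculation behind the 440-visit figure, whose full inputs are not given in the text; the function names are our own.

```python
import math
from statistics import NormalDist


def n_per_arm_two_proportions(p1: float, p2: float,
                              alpha: float = 0.05,
                              power: float = 0.80) -> int:
    """Unadjusted per-arm sample size for a two-sided test of two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


def design_effect(cluster_size: float, icc: float) -> float:
    """Variance inflation factor due to clustering: 1 + (m - 1) * ICC."""
    return 1.0 + (cluster_size - 1.0) * icc


# Inputs quoted in the text: 8.5% vs. 6.0%, alpha 5%, power 80%, ICC 0.005.
n_unadjusted = n_per_arm_two_proportions(0.085, 0.060)
deff = design_effect(cluster_size=440, icc=0.005)
n_inflated = math.ceil(n_unadjusted * deff)
```

The design effect grows with both cluster size and ICC, which is why the observed ICC reported in the Results matters for interpreting the achieved power.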

In a set of exploratory analyses, we separately modeled HCV antibody testing in intervention and control sites to identify the practice and visit-level factors associated with testing in those settings. Statistical analyses were performed using the GENMOD procedure in SAS (version 9.4; SAS Institute Inc., Cary, NC).

RESULTS

Study Enrollment

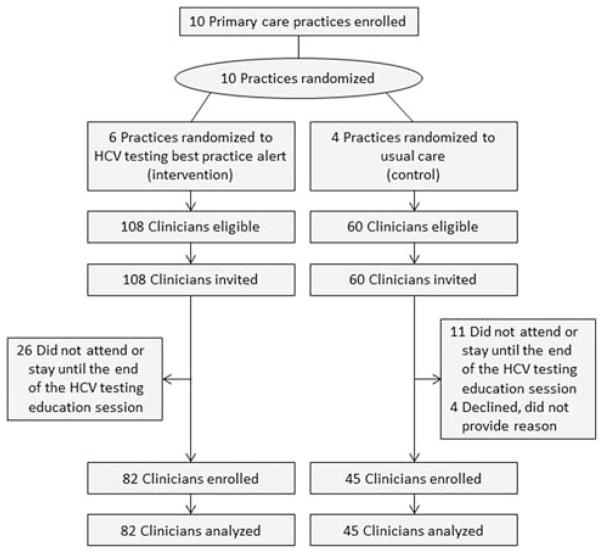

Study enrollment is shown in Figure 1. In the intervention sites, 26 clinicians did not attend one or any of the HCV education sessions required for study participation or attended but left before the session was completed. In the control sites, 11 clinicians did not attend or remain until the end of the session. In addition, 4 clinicians who attended the entire educational session at control sites refused to provide written consent for study participation. They provided no reason for their refusal. Data on visits made to 82 clinicians in the intervention arm and 45 in the control arm were used in the final analyses.

FIGURE 1.

Study enrollment. HCV indicates hepatitis C virus.

Visit and Setting Characteristics

Across the 10 sites, 14,151 study-eligible patients made 25,821 visits between April 29, 2013 and March 29, 2014 (intervention arm, n = 15,010; control arm, n = 10,811). We censored 201 visits. The final sample consisted of 25,620 visits, with 14,825 visits to the intervention sites and 10,795 visits to the control sites. The number of unique individuals seen during these visits was 8713 in the intervention sites and 5438 in the control sites. The majority of visits were made by patients who were female (61.8%), white non-Hispanic (75.0%), and privately insured (76.1%) and most occurred in community-based practice settings (70.4%) (Table 1).

TABLE 1.

Patient, Setting, and Service Characteristics, by Study Arm

| Characteristic | All Visits (N = 25,620) (%) | Intervention Arm (N = 14,825) (%) | Control Arm (N = 10,795) (%) | P |
|---|---|---|---|---|
| Patient age (y) | | | | 0.94 |
| 49–54 | 29.2 | 29.2 | 29.2 | |
| 55–64 | 47.8 | 48.0 | 47.7 | |
| ≥65 | 23.0 | 22.8 | 23.1 | |
| Patient sex, male | 38.2 | 36.7 | 40.3 | 0.0005 |
| Patient race | | | | < 0.0001 |
| White, non-Hispanic | 75.0 | 71.6 | 79.7 | |
| Black, non-Hispanic | 9.6 | 11.9 | 6.4 | |
| Hispanic | 9.3 | 10.0 | 8.4 | |
| Other | 6.2 | 6.6 | 5.6 | |
| Median income by zip code | | | | < 0.0001 |
| ≥$108,000 | 27.5 | 25.4 | 30.3 | |
| $93,000–$107,999 | 26.4 | 22.3 | 32.0 | |
| $65,000–$92,999 | 25.3 | 28.3 | 21.1 | |
| < $65,000 | 20.9 | 24.1 | 16.6 | |
| Patient insurance | | | | < 0.0001 |
| Private | 76.1 | 75.2 | 77.2 | |
| Medicare | 18.5 | 18.2 | 18.8 | |
| Medicaid/uninsured | 5.5 | 6.5 | 4.0 | |
| Practice setting | | | | < 0.0001 |
| Hospital-based faculty practice | 17.1 | 18.6 | 15.1 | |
| Hospital-based clinic | 12.4 | 16.0 | 7.6 | |
| Community-based faculty practice | 70.4 | 65.4 | 77.3 | |
| Physician level* | | | | 0.06 |
| Attending | 94.3 | 94.1 | 94.7 | |
| Resident | 5.7 | 6.0 | 5.3 | |
| # EHR alerts presented to physician during visit | | | | < 0.0001 |
| 1–2 | 7.8 | 6.0 | 10.4 | |
| 3–5 | 67.0 | 63.8 | 71.3 | |
| ≥6 | 25.2 | 30.3 | 18.3 | |
| # Diagnosis codes listed for the visit | | | | < 0.0001 |
| 0–2 | 17.1 | 23.0 | 9.1 | |
| 3–5 | 32.2 | 30.7 | 34.2 | |
| 6–8 | 22.5 | 20.4 | 25.3 | |
| ≥9 | 28.2 | 25.9 | 31.4 | |
| # Blood tests ordered during visit† | | | | < 0.0001 |
| 0 | 82.1 | 76.1 | 90.3 | |
| 1–4 | 9.7 | 12.6 | 5.7 | |
| ≥5 | 8.2 | 11.3 | 4.0 | |
| # Orders other than blood tests placed during visit‡ | | | | < 0.0001 |
| 0 | 22.4 | 21.1 | 24.1 | |
| 1–3 | 59.5 | 51.8 | 69.9 | |
| ≥4 | 18.2 | 27.1 | 6.0 | |
| Level of service coded for visit | | | | 0.003 |
| Established patient/low-moderate complexity | 72.5 | 72.6 | 72.3 | |
| Established patient/high complexity | 20.5 | 19.8 | 21.3 | |
| New patient/low-moderate complexity | 5.2 | 5.7 | 4.6 | |
| New patient/high complexity | 1.8 | 1.9 | 1.7 | |

Some values in columns do not sum to 100% owing to rounding error.

*Among sites that included residency training. Total numbers of patients at control sites, n = 509; intervention sites, n = 1498.

†Excludes hepatitis C virus antibody and RNA testing.

‡Orders for referrals, procedures, medications, supplies, and any testing except blood testing.

EHR indicates electronic health record.

Visit characteristics differed between intervention and control sites for all variables except patient age and proportion of resident physicians. Notably, visits at intervention sites included fewer white, non-Hispanic patients (71.6% vs. 79.7%, P < 0.0001), fewer privately insured patients (75.2% vs. 77.2%, P < 0.0001), and fewer visits to community-based faculty practices (65.4% vs. 77.3%, P < 0.0001) (Table 1).

HCV Antibody Testing

Testing occurred 18.4 percentage points more frequently at visits in intervention sites (2995/14,825 eligible visits, 20.2%) than at visits in control sites (198/10,795 visits, 1.8%) (P < 0.0001). After adjustment for visit-level characteristics, the odds ratio (OR) for testing at intervention versus control sites was 9.0 (95% confidence interval (CI), 7.6–10.7; P < 0.0001) (Table 2). The intraclass correlation coefficient for the clustering effect was 0.173. HCV testing was more likely to be ordered when more blood tests or other types of orders were placed and when visit complexity was coded as high. Visits involving nonwhite or publicly insured patients, and visits occurring outside the hospital-based faculty practice, were significantly less likely to involve HCV testing, whereas visits by patients residing in zip code areas with median incomes between $65,000 and $92,999 were more likely to involve testing than visits by patients from the highest-income areas.
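As a worked check on the unadjusted figures, the Python sketch below derives the crude risk difference, risk ratio, and odds ratio from the visit counts quoted above. It ignores clustering and covariates, so its crude odds ratio (roughly 13.5) is not expected to match the GEE-adjusted OR of 9.0; the function name is our own.

```python
def crude_effects(events_a: int, n_a: int, events_b: int, n_b: int) -> dict:
    """Crude (unadjusted) effect measures for group A versus group B."""
    risk_a = events_a / n_a
    risk_b = events_b / n_b
    odds_a = events_a / (n_a - events_a)
    odds_b = events_b / (n_b - events_b)
    return {
        "risk_a": risk_a,
        "risk_b": risk_b,
        "risk_difference": risk_a - risk_b,
        "risk_ratio": risk_a / risk_b,
        "odds_ratio": odds_a / odds_b,
    }


# Tested visits / eligible visits: intervention 2995/14,825; control 198/10,795.
effects = crude_effects(2995, 14825, 198, 10795)
for name, value in effects.items():
    print(f"{name}: {value:.4f}")
```

The risk difference reproduces the 18.4 percentage point gap, while the risk ratio (about 11) and the odds ratio differ from each other because testing was not rare in the intervention arm.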

TABLE 2.

Adjusted ORs for Hepatitis C Virus Antibody Testing During Visits for Screening-eligible Birth Cohort Members in Intervention Versus Control Sites (n = 25,620)

| Characteristic | OR (95% CI) | P |
|---|---|---|
| Study arm | | |
| Control | Ref. | |
| Intervention | 8.99 (7.57–10.7) | < 0.0001 |
| Age (y) | | |
| 49–54 | Ref. | |
| 55–64 | 0.96 (0.85–1.07) | 0.46 |
| ≥65 | 0.98 (0.84–1.14) | 0.76 |
| Patient sex, male | 1.27 (1.15–1.41) | < 0.0001 |
| Race | | |
| White, non-Hispanic | Ref. | |
| Black, non-Hispanic | 0.76 (0.64–0.91) | 0.002 |
| Hispanic | 0.81 (0.67–0.97) | 0.02 |
| Other | 0.79 (0.66–0.96) | 0.02 |
| Median income by zip code | | |
| ≥$108,000 | Ref. | |
| $93,000–$107,999 | 1.20 (1.02–1.41) | 0.02 |
| $65,000–$92,999 | 1.54 (1.33–1.79) | < 0.0001 |
| < $65,000 | 1.06 (0.89–1.26) | 0.54 |
| Insurance | | |
| Private | Ref. | |
| Medicare | 0.65 (0.56–0.76) | < 0.0001 |
| Medicaid/uninsured | 0.53 (0.42–0.66) | < 0.0001 |
| Practice setting | | |
| Hospital-faculty practice | Ref. | |
| Hospital-clinic | 0.51 (0.43–0.61) | < 0.0001 |
| Community-faculty practice | 0.06 (0.05–0.07) | < 0.0001 |
| # EHR alerts presented to the physician | | |
| 1–2 | Ref. | |
| 3–5 | 1.36 (1.12–1.66) | 0.002 |
| ≥6 | 1.12 (0.91–1.39) | 0.29 |
| # Diagnosis codes listed for the visit | | |
| 0–2 | Ref. | |
| 3–5 | 1.10 (0.96–1.26) | 0.19 |
| 6–8 | 1.01 (0.87–1.8) | 0.90 |
| ≥9 | 1.00 (0.86–1.17) | 0.97 |
| # Blood tests ordered during visit* | | |
| 0 | Ref. | |
| 1–4 | 5.01 (4.19–5.99) | < 0.0001 |
| ≥5 | 11.2 (9.42–13.3) | < 0.0001 |
| # Orders other than blood tests placed during visit† | | |
| 0 | Ref. | |
| 1–3 | 1.77 (1.54–2.04) | < 0.0001 |
| ≥4 | 3.39 (2.87–4.00) | < 0.0001 |
| Level of service coded for visit | | |
| Established/low-moderate complexity | Ref. | |
| Established/high complexity | 1.45 (1.28–1.65) | < 0.0001 |
| New/low-moderate complexity | 1.98 (1.62–2.41) | < 0.0001 |
| New/high complexity | 1.81 (1.33–2.46) | 0.0001 |

*Excludes hepatitis C virus antibody and RNA testing.

†Orders for referrals, procedures, medications, supplies, and any testing except blood testing.

CI indicates confidence interval; EHR, electronic health record; OR, odds ratio; Ref., reference.

Identification of HCV Antibody-positive Cases

The number of HCV antibody-positive patients identified at visits for Birth Cohort patients was greater in intervention than in control sites [27 HCV antibody-positive cases among 8713 unique patients (0.31%) in the intervention arm vs. 6 cases among 5438 unique patients (0.11%) in the control arm; P < 0.0001]. Overall, the OR for identifying an HCV antibody-positive Birth Cohort patient in intervention sites was 2.1 (95% CI, 1.33–11.2; P = 0.01) compared with control sites, adjusting for sex, race, and insurance status.

Patient and Visit Characteristics Associated With HCV Testing Within Intervention and Control Sites

Within intervention sites, testing was more likely at visits involving male patients (OR, 1.22; 95% CI, 1.09–1.35) and patients residing in zip codes with median incomes of $65,000–$107,999 compared with the highest-income zip codes (Table 3). It was also more likely to occur during visits in which multiple blood tests or other orders were placed (blood tests: ≥5 vs. 0; OR, 10.9; 95% CI, 9.10–13.2 and orders other than blood tests: ≥4 vs. 0; OR, 3.28; 95% CI, 2.72–3.96), and when the level of service was of high complexity and/or involved a new patient. Testing was less likely to occur during visits made by black patients (OR, 0.80; 95% CI, 0.67–0.96) and non-Hispanic patients of other races (OR, 0.81; 95% CI, 0.67–0.99) compared with visits by white patients, visits by patients with public versus private insurance, and visits to hospital clinics and community-based practices compared with hospital-based faculty practices.

TABLE 3.

Adjusted ORs for Hepatitis C Virus Antibody Testing During Visits for Screening-eligible Birth Cohort Members, Stratified by Intervention (n = 14,825) and Control Sites (n = 10,795)

| Characteristics | Intervention Sites OR (95% CI) | P | Control Sites OR (95% CI) | P |
|---|---|---|---|---|
| Patient age (y) | | | | |
| 49–54 | Ref. | | Ref. | |
| 55–64 | 1.00 (0.86–1.09) | 0.58 | 0.87 (0.60–1.26) | 0.45 |
| ≥65 | 1.04 (0.88–1.22) | 0.67 | 0.49 (0.29–0.83) | 0.008 |
| Patient sex, male | 1.22 (1.09–1.35) | 0.0004 | 1.84 (1.31–2.58) | 0.0005 |
| Patient race/ethnicity | | | | |
| White, non-Hispanic | Ref. | | Ref. | |
| Black, non-Hispanic | 0.80 (0.67–0.96) | 0.02 | 0.53 (0.31–0.92) | 0.02 |
| Hispanic | 0.88 (0.73–1.07) | 0.21 | 0.51 (0.31–0.84) | 0.009 |
| Other | 0.81 (0.67–0.99) | 0.04 | 0.69 (0.39–1.22) | 0.20 |
| Median income by zip code | | | | |
| ≥$108,000 | Ref. | | Ref. | |
| $93,000–$107,999 | 1.24 (1.05–1.47) | 0.01 | 0.92 (0.55–1.55) | 0.77 |
| $65,000–$92,999 | 1.65 (1.41–1.92) | < 0.0001 | 0.59 (0.34–1.03) | 0.06 |
| < $65,000 | 1.12 (0.93–1.34) | 0.23 | 0.60 (0.36–1.00) | 0.05 |
| Patient insurance | | | | |
| Private | Ref. | | Ref. | |
| Medicare | 0.64 (0.54–0.75) | < 0.0001 | 0.82 (0.51–1.31) | 0.41 |
| Medicaid/uninsured | 0.59 (0.47–0.75) | < 0.0001 | 0.04 (0.01–0.32) | 0.002 |
| Practice setting | | | | |
| Hospital-based faculty practice | Ref. | | Ref. | |
| Hospital-based clinic | 0.47 (0.39–0.56) | < 0.0001 | 1.23 (0.77–1.96) | 0.38 |
| Community-based faculty practice | 0.07 (0.05–0.08) | < 0.0001 | 0.01 (0.01–0.05) | < 0.0001 |
| # EHR alerts presented to the physician | | | | |
| 1–2 | Ref. | | Ref. | |
| 3–5 | 1.29 (1.03–1.60) | 0.02 | 1.84 (1.10–3.08) | 0.02 |
| ≥6 | 1.05 (0.83–1.32) | 0.71 | 1.81 (1.03–3.17) | 0.04 |
| # Diagnosis codes listed for the visit | | | | |
| 0–2 | Ref. | | Ref. | |
| 3–5 | 1.10 (0.95–1.26) | 0.20 | 1.39 (0.69–2.81) | 0.36 |
| 6–8 | 0.97 (0.83–1.14) | 0.73 | 1.86 (0.92–3.76) | 0.08 |
| ≥9 | 0.98 (0.83–1.15) | 0.78 | 1.48 (0.75–2.93) | 0.26 |
| # Blood tests ordered during the visit* | | | | |
| 0 | Ref. | | Ref. | |
| 1–4 | 4.94 (4.08–5.97) | < 0.0001 | 1.81 (0.50–6.59) | 0.38 |
| ≥5 | 10.9 (9.10–13.2) | < 0.0001 | 6.89 (1.82–26.14) | 0.005 |
| # Orders other than blood tests placed during visit† | | | | |
| 0 | Ref. | | Ref. | |
| 1–3 | 1.68 (1.44–1.97) | < 0.0001 | 1.18 (0.71–1.96) | 0.52 |
| ≥4 | 3.28 (2.72–3.96) | < 0.0001 | 0.99 (0.43–2.28) | 0.98 |
| Level of service coded for visit | | | | |
| Established patient/low-moderate complexity | Ref. | | Ref. | |
| Established patient/high complexity | 1.48 (1.29–1.69) | < 0.0001 | 1.67 (1.09–2.55) | 0.02 |
| New patient/low-moderate complexity | 1.87 (1.53–2.30) | < 0.0001 | 3.54 (2.02–6.18) | < 0.0001 |
| New patient/high complexity | 1.75 (1.25–2.44) | 0.001 | 2.16 (1.03–4.53) | 0.04 |

*Excludes hepatitis C virus antibody and RNA testing.

†Orders for referrals, procedures, medications, supplies, and any testing except blood testing.

CI indicates confidence interval; EHR, electronic health record; OR, odds ratio; Ref., reference.

In sites without the EHR-based HCV testing BPAs, testing was more likely for men, at visits with ≥3 EHR alerts, at visits where ≥5 blood tests were ordered, and at visits billed as other than established patient/low-moderate complexity (control sites, Table 3). Testing was less likely for patients who were aged 65 years and older, black or Hispanic, Medicaid covered or uninsured, or who received their care in a community-based faculty practice.

DISCUSSION

In this cluster-randomized trial, prompting physicians to test Birth Cohort patients with alerts that appeared in the EHR resulted in 9-fold higher odds of testing compared with those of patients in sites where no BPA was provided. Moreover, more patients with HCV antibodies were identified in the intervention sites compared with the control sites.

To date, no randomized controlled trials of HCV testing have been conducted in primary care settings, whether targeting Birth Cohort patients or others. Before this work, observational studies suggested that EHR-directed testing was a promising strategy even though these studies could not fully determine the impact of EHR-based testing.4,23–25 White et al23 reported the results of HCV testing in an emergency department in which a triage nurse offered HCV testing to patients with history of injection drug use or who were born between 1945 and 1964 when prompted by an EHR-based alert. Approximately half of age-eligible patients were offered HCV testing and 29% of them received testing, among whom 11% were positive. In another emergency department study that followed a similar protocol, 87% of eligible patients accepted testing and 11% of tested subjects were HCV antibody-positive.12 Notably, testing rates in these 2 studies were higher than what we observed in our study. Clinicians may be more likely to test for HCV in settings like emergency departments where more people are likely to have undiagnosed HCV infection than in general primary care.26

Testing has also been evaluated in primary care and in-patient settings. Litwin and colleagues used a multi-component intervention to promote testing among at-risk patients in primary care, including Birth Cohort patients, that involved physician-targeted HCV education supported by regular follow-up communication about the testing process, visual reminders to prompt testing (buttons, posters, and pocket cards), and flagging charts of qualified patients. This approach increased testing from 6.0% to 9.9%.14 Turner et al24 tested hospital in-patients identified by the EHR using an opt-out approach. Orders were automatically placed in most cases, although one third of orders had to be manually entered by a clinician. Testing was performed on 49% of eligible patients, among whom 7.6% were positive.

Taken together, these observational studies demonstrate that EHR-embedded BPAs can boost HCV testing in varied clinical settings. Our study moves beyond this work by comparing brief physician education combined with EHR-embedded BPAs for HCV testing to physician education alone in primary care in a large scale, cluster-randomized trial. This approach enables a direct assessment of the impact of this testing modality, and clearly demonstrates the benefit of the BPA.

Despite the success of the intervention, the overall rate of testing using EHR prompts was low: only one fifth of eligible patients were tested. This finding is consistent with literature on clinical decision support, which shows that clinical decision support in various settings, including primary care, often has only a moderate impact on physician action,27,28 preventive care testing rates,29–31 adherence to treatment guidelines,32,33 and meeting quality of care standards.34 A number of factors may be at play, including the physicians’ workload, perceived inapplicability of the recommendations, inadequate time to document why recommendations were not followed, a lack of training to prepare or prime the end-users, and distraction from the doctor-patient interaction by the electronic reminder.35,36 Similar barriers have been reported elsewhere.30,37

Given that workload limits physicians’ responses to electronic reminders, we were surprised to find that testing occurred more often during visits of greater complexity, as indicated by more orders placed (blood work and others) and higher level of service codes, although not by the number of diagnoses. As the automated reminder prepopulated the order set, signing off on it may have been easier for physicians if they already had other orders present. Alternatively, physicians may have been more inclined to test individuals who had greater morbidity. However, these considerations are speculative.

We also identified disparities in testing, with lower rates of HCV testing among black patients and those with Medicaid coverage in both the intervention and control sites, consistent with observations elsewhere.38 However, disparities were less pronounced in intervention sites, suggesting that automated prompts for testing may reduce them. Examining physicians’ and patients’ experiences with the BPA testing procedure was beyond the scope of this project, and the data collected did not enable us to determine whether disparities were the result of physician practice, patient preference, or both. Regardless, more research is needed to understand patterns of test ordering and to ultimately improve on the suboptimal rate of testing produced by this intervention.

Although there was variation in the type of clinical primary care practices involved in this study, our study is limited in generalizability by the exclusive participation of practices from a single health care system. In addition, covariate balance between intervention and control sites was not fully achieved, although we controlled for imbalances between these groups using multivariable GEE. Clinicians who did not attend the educational sessions on the CDC Birth Cohort testing recommendations were not consented to participate in the study and data from their patient encounters were not included in our analyses. Whether there was a general bias in favor or against Birth Cohort testing among these clinicians is unknown.

Testing high-prevalence cohorts for HCV is an increasingly important service that primary care providers can offer. This study demonstrates that EHR-based reminders can increase HCV antibody testing among Birth Cohort patients. Nonetheless, overall testing rates were low, suggesting that this strategy needs more study, including a better understanding of the role of physician beliefs and knowledge about Birth Cohort testing, to improve its efficacy. Still, EHR-based reminders should perhaps be coupled with other strategies to achieve widespread testing of Birth Cohort patients.

Acknowledgments

Supported by the CDC Foundation-organized Viral Hepatitis Action Coalition (VHAC), which, in 2015, was funded by 10 corporate members: Abbott Laboratories; AbbVie; Bristol-Myers Squibb; CDC Foundation; Gilead Sciences Inc.; Janssen; Merck Sharp & Dohme Corp.; OraSure Technologies Inc.; Quest Diagnostics Inc.; and Siemens Healthcare Inc. During the trial, VHAC also received funding from Vertex Pharmaceuticals. In addition to the funding organizations, 2 nonprofit organizations, the Association of State and Territorial Health Officials and the National Viral Hepatitis Roundtable (NVHR), and a representative from the US Department of Health and Human Services (HHS), Office of HIV/AIDS and Infectious Disease Policy, participated in VHAC activities.

Footnotes

ClinicalTrials.gov identifier NCT02123212.

The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention.

The authors declare no conflict of interest.

References

- 1. United States Preventive Services Task Force. Hepatitis C screening: recommendation summary. 2013. Available at: www.uspreventiveservicestaskforce.org/Page/Topic/recommendation-summary/hepatitis-c-screening. Accessed May 3, 2016.

- 2. New York State Department of Health. NYS hepatitis C testing law: frequently asked questions. 2014. Available at: www.health.ny.gov/diseases/communicable/hepatitis/hepatitis_c/rapid_antibody_testing/docs/testing_law_faqs.pdf. Accessed May 3, 2016.

- 3. McGarry LJ, Pawar VS, Panchmatia HR, et al. Economic model of a birth cohort screening program for hepatitis C virus. Hepatology. 2012;55:1344–1355. doi: 10.1002/hep.25510.

- 4. Rein DB, Smith BD, Wittenborn JS, et al. The cost-effectiveness of birth-cohort screening for hepatitis C antibody in US primary care settings. Ann Intern Med. 2012;156:263–270. doi: 10.7326/0003-4819-156-4-201202210-00378.

- 5. Denniston MM, Jiles RB, Drobeniuc J, et al. Chronic hepatitis C virus infection in the United States, National Health and Nutrition Examination Survey 2003 to 2010. Ann Intern Med. 2014;160:293–300. doi: 10.7326/M13-1133.

- 6. Rein DB, Wittenborn JS, Weinbaum CM, et al. Forecasting the morbidity and mortality associated with prevalent cases of pre-cirrhotic chronic hepatitis C in the United States. Dig Liver Dis. 2011;43:66–72. doi: 10.1016/j.dld.2010.05.006.

- 7. Kohli A, Shaffer A, Sherman A, et al. Treatment of hepatitis C: a systematic review. JAMA. 2014;312:631–640. doi: 10.1001/jama.2014.7085.

- 8. Smith BD, Morgan RL, Beckett GA, et al. Recommendations for the identification of chronic hepatitis C virus infection among persons born during 1945–1965. MMWR Recomm Rep. 2012;61:1–32.

- 9. Rein DB, Wittenborn JS, Smith BD, et al. The cost-effectiveness, health benefits, and financial costs of new antiviral treatments for hepatitis C virus. Clin Infect Dis. 2015;61:157–168. doi: 10.1093/cid/civ220.

- 10. Cartwright EJ, Rentsch C, Rimland D. Hepatitis C virus screening practices and seropositivity among US veterans born during 1945–1965. BMC Res Notes. 2014;7:449. doi: 10.1186/1756-0500-7-449.

- 11. Mahajan R, Liu SJ, Klevens RM, et al. Indications for testing among reported cases of HCV infection from enhanced hepatitis surveillance sites in the United States, 2004–2010. Am J Public Health. 2013;103:1445–1449. doi: 10.2105/AJPH.2013.301211.

- 12. Galbraith JW, Franco RA, Donnelly JP, et al. Unrecognized chronic hepatitis C virus infection among baby boomers in the emergency department. Hepatology. 2015;61:776–782. doi: 10.1002/hep.27410.

- 13. Sears DM, Cohen DC, Ackerman K, et al. Birth cohort screening for chronic hepatitis during colonoscopy appointments. Am J Gastroenterol. 2013;108:981–989. doi: 10.1038/ajg.2013.50.

- 14. Litwin AH, Smith BD, Drainoni ML, et al. Primary care-based interventions are associated with increases in hepatitis C virus testing for patients at risk. Dig Liver Dis. 2012;44:497–503. doi: 10.1016/j.dld.2011.12.014.

- 15. Fisher DA. Electronic medical records and improving the quality of the screening process. J Gen Intern Med. 2011;26:683–684. doi: 10.1007/s11606-011-1722-9.

- 16. Green BB, Wang CY, Anderson ML, et al. An automated intervention with stepped increases in support to increase uptake of colorectal cancer screening: a randomized trial. Ann Intern Med. 2013;158:301–311. doi: 10.7326/0003-4819-158-5-201303050-00002.

- 17. Loo TS, Davis RB, Lipsitz LA, et al. Electronic medical record reminders and panel management to improve primary care of elderly patients. Arch Intern Med. 2011;171:1552–1558. doi: 10.1001/archinternmed.2011.394.

- 18. Broach V, Day L, Barenberg B, et al. Use of electronic health record-based tools to improve appropriate use of the human papillomavirus test in adult women. J Low Genit Tract Dis. 2014;18:26–30. doi: 10.1097/LGT.0b013e31828cde2a.

- 19. Clarke E, Bhatt S, Patel R, et al. Audit of the effect of electronic patient records on uptake of HIV testing in a level 3 genitourinary medicine service. Int J STD AIDS. 2013;24:661–665. doi: 10.1177/0956462413478877.

- 20. Hsu L, Bowlus CL, Stewart SL, et al. Electronic messages increase hepatitis B screening in at-risk Asian American patients: a randomized, controlled trial. Dig Dis Sci. 2013;58:807–814. doi: 10.1007/s10620-012-2396-9.

- 21. White P, Kenton K. Use of electronic medical record-based tools to improve compliance with cervical cancer screening guidelines: effect of an educational intervention on physicians’ practice patterns. J Low Genit Tract Dis. 2013;17:175–181. doi: 10.1097/LGT.0b013e3182607137.

- 22. Friedman LM, DeMets DL, editors. Fundamentals of Clinical Trials. 3rd ed. St Louis, MO: Mosby; 1996.

- 23. White DA, Anderson ES, Pfeil SK, et al. Results of a rapid hepatitis C virus screening and diagnostic testing program in an urban emergency department. Ann Emerg Med. 2016;67:119–128. doi: 10.1016/j.annemergmed.2015.06.023.

- 24. Turner BJ, Taylor BS, Hanson JT, et al. Implementing hospital-based baby boomer hepatitis C virus screening and linkage to care: strategies, results, and costs. J Hosp Med. 2015;10:510–516. doi: 10.1002/jhm.2376.

- 25. Smith BD, Yartel AK, Krauskopf K, et al. Hepatitis C virus antibody positivity and predictors among previously undiagnosed adult primary care outpatients: cross-sectional analysis of a multisite retrospective cohort study. Clin Infect Dis. 2015;60:1145–1152. doi: 10.1093/cid/civ002.

- 26. Lyons MS, Kunnathur VA, Rouster SD, et al. Prevalence of diagnosed and undiagnosed hepatitis C in a midwestern urban emergency department. Clin Infect Dis. 2016;62:1066–1071. doi: 10.1093/cid/ciw073.

- 27. Bright TJ, Wong A, Dhurjati R, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157:29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

- 28. Bryan C, Boren SA. The use and effectiveness of electronic clinical decision support tools in the ambulatory/primary care setting: a systematic review of the literature. Inform Prim Care. 2008;16:79–91. doi: 10.14236/jhi.v16i2.679.

- 29. Goetz MB, Hoang T, Bowman C, et al. A system-wide intervention to improve HIV testing in the Veterans Health Administration. J Gen Intern Med. 2008;23:1200–1207. doi: 10.1007/s11606-008-0637-6.

- 30. Sequist TD, Zaslavsky AM, Marshall R, et al. Patient and physician reminders to promote colorectal cancer screening: a randomized controlled trial. Arch Intern Med. 2009;169:364–371. doi: 10.1001/archinternmed.2008.564.

- 31. Sundaram V, Lazzeroni LC, Douglass LR, et al. A randomized trial of computer-based reminders and audit and feedback to improve HIV screening in a primary care setting. Int J STD AIDS. 2009;20:527–533. doi: 10.1258/ijsa.2008.008423.

- 32. Gill JM, Mainous AG, 3rd, Koopman RJ, et al. Impact of EHR-based clinical decision support on adherence to guidelines for patients on NSAIDs: a randomized controlled trial. Ann Fam Med. 2011;9:22–30. doi: 10.1370/afm.1172.

- 33. Peiris D, Usherwood T, Panaretto K, et al. Effect of a computer-guided, quality improvement program for cardiovascular disease risk management in primary health care: the treatment of cardiovascular risk using electronic decision support cluster-randomized trial. Circ Cardiovasc Qual Outcomes. 2015;8:87–95. doi: 10.1161/CIRCOUTCOMES.114.001235.

- 34. Romano MJ, Stafford RS. Electronic health records and clinical decision support systems: impact on national ambulatory care quality. Arch Intern Med. 2011;171:897–903. doi: 10.1001/archinternmed.2010.527.

- 35. Patterson ES, Nguyen AD, Halloran JP, et al. Human factors barriers to the effective use of ten HIV clinical reminders. J Am Med Inform Assoc. 2004;11:50–59. doi: 10.1197/jamia.M1364.

- 36. Saleem JJ, Patterson ES, Militello L, et al. Exploring barriers and facilitators to the use of computerized clinical reminders. J Am Med Inform Assoc. 2005;12:438–447. doi: 10.1197/jamia.M1777.

- 37. Peiris DP, Joshi R, Webster RJ, et al. An electronic clinical decision support tool to assist primary care providers in cardiovascular disease risk management: development and mixed methods evaluation. J Med Internet Res. 2009;11:e51. doi: 10.2196/jmir.1258.

- 38. Tohme RA, Xing J, Liao Y, et al. Hepatitis C testing, infection, and linkage to care among racial and ethnic minorities in the United States, 2009–2010. Am J Public Health. 2013;103:112–119. doi: 10.2105/AJPH.2012.300858.