Abstract

Background:

Beaker is a relatively new laboratory information system (LIS) offered by Epic Systems Corporation as part of its suite of health-care software and bundled with its electronic medical record (EMR), EpicCare. It is divided into two modules, Beaker Anatomic Pathology (Beaker AP) and Beaker Clinical Pathology (Beaker CP). In this report, we describe our experience implementing Beaker AP version 2014 at an academic medical center, with go-live in October 2015.

Methods:

This report covers preimplementation preparations and challenges beginning in September 2014, issues discovered soon after go-live in October 2015, and some post-go-live optimizations, drawing on data from meetings, debriefings, and the project closure document.

Results:

We share specific issues that we encountered during implementation, including difficulties with the proposed frozen section workflow, developing a shared specimen source dictionary, and implementing the standard Beaker workflow in a large institution with trainees. We also share the specific strategies that we used to overcome these issues for a successful Beaker AP implementation. Several areas of the laboratory required adaptation of the default Beaker build parameters to meet the workflow needs of a busy academic medical center. In a few areas, our laboratory was unable to use Beaker functionality to support our workflow, and we have continued to use paper or have altered our workflow. In spite of several difficulties that required creative solutions before go-live, the implementation has been successful based on satisfaction surveys completed by pathologists and other users of the software. However, optimization of Beaker workflows has remained an ongoing process since go-live.

Conclusions:

The Beaker AP LIS can be successfully implemented at an academic medical center but requires significant forethought, creative adaptation, and continued shared management of the ongoing product by institutional and departmental information technology staff as well as laboratory managers to meet the needs of the laboratory.

Keywords: Beaker anatomic pathology, implementation, laboratory information system

INTRODUCTION

Beaker is a laboratory information system (LIS) offered by Epic Systems Corporation (Verona, WI) and can be installed as two separate modules, Beaker Anatomic Pathology (Beaker AP) and Beaker Clinical Pathology (Beaker CP). Beaker is a relatively new LIS operating within the Epic suite of software and is an example of using a single vendor for both the LIS and the electronic medical record (EMR).[1] Given that Epic is a common EMR in the United States, clinical laboratories evaluating LIS options might consider Beaker if their institution currently uses the Epic EMR or will do so in the future. Beaker and other software are often included in institutional enterprise licenses for Epic software. We have previously described our implementation of the Beaker CP module at the University of Iowa Hospitals and Clinics, and more recently, another group has described their implementation of Beaker CP at an academic medical center.[2,3] In this report, we describe the implementation of the Beaker AP module at the University of Iowa.

TECHNICAL BACKGROUND

Institutional details

Our institution is a tertiary/quaternary teaching hospital with 728 inpatient beds. Each year, our Pathology Department processes 50,000 surgical pathology accessions, 6000 nongynecologic cytology specimens, 10,000 gynecologic cytology specimens, 2200 bone marrow biopsies, and 600 autopsies. We have an outreach laboratory that performs primarily AP consultation and testing for institutions regionally and nationally. All of the pathology laboratories use Epic's Resolute billing module except the outreach laboratory, which uses a third-party revenue cycle management product.

Because our AP system at the time (Cerner Classic) would no longer be supported, we began investigating other LIS configurations. Because we already used Beaker CP, and because Beaker AP would integrate in a known, predictable way with the final report formats in the institution's Epic EMR, we elected to use Beaker AP over other LIS products.

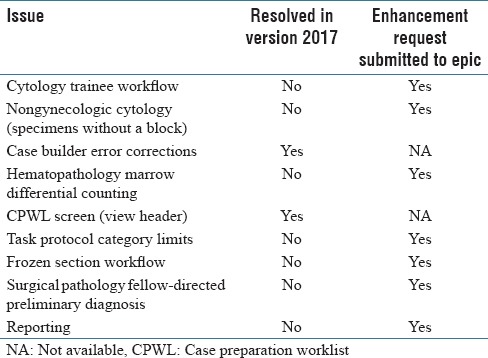

This manuscript is based on Beaker AP v2014. We are currently in the process of upgrading to v2017. Table 1 summarizes the Beaker AP issues described below and whether each has been resolved in v2017. Of nine significant issues, only two appear to have been resolved in the newest version [Table 1].

Table 1.

Summary of issues and status of resolution

Scope of the laboratory information system project

The LIS implementation affected all of the AP laboratories, including surgical pathology, cytopathology, ocular pathology, and autopsy. In addition, portions of what some would consider CP testing were deemed to fit better with the Beaker AP module and were deferred to the implementation of that module. These tests included the bone marrow examination portion of hematopathology, qualitative flow cytometry testing, and molecular pathology testing performed on surgical pathology specimens, since these require a narrative sign-out. Before the Beaker implementation, the Cytogenetics Laboratory, which is based in the Department of Pediatrics, used the Cerner Classic PathNet LIS only for specimens received through the outreach laboratory and used its own custom system for internal cases. It was determined that the Beaker AP project scope would cover only those Cytogenetics Laboratory activities that were performed in the Cerner Classic PathNet system and that other testing would be moved into Beaker as part of a later project.

The project scope included more than a simple transfer of old workflows to a new system. We used the change of LIS as an opportunity to improve our practice with techniques that were not possible in our legacy system, such as specimen tracking and just-in-time printing/etching of cassettes and slide labels. Four LIS specialists from our hospital's central information technology (IT) department, University of Iowa Health Care Information Systems, carried out the main portion of the build. Their work was directed by our Pathology Informatics unit, which consisted of one pathology IT faculty member, one manager, and three pathology informatics specialists. The build was done in collaboration with subject-matter experts (SMEs): laboratory supervisors from the frozen section/gross room, histology, cytology, immunopathology, bone marrow, autopsy, and electron microscopy. A separate team of two reporting specialists from the central IT department built the reports for our department.

The institution electronically tracked, on a weekly basis and to the extent possible, the number of individuals directly involved and the time they spent. Although this tracking system was available to everyone, including pathologists and administrators, generally only laboratory and central IT personnel used it. Nevertheless, 24 employees recorded their time, logging 4744 h. In more tangible terms, the lead scientists (working supervisors) in histology, the frozen section/gross room, bone marrow, autopsy, and immunopathology spent approximately 30% of their overall time during the 13-month build process working on the build. During the initial phases, they spent approximately 10%–15% of their time on this activity, but in the final few months before go-live, nearly 100% of their time was consumed, taking them out of the laboratory and, understandably, affecting staffing.

Sources of data

These data were collected from personal experience, meeting notes and agendas, and satisfaction surveys from the preimplementation period and 6 months after go-live.

RESULTS

Project timeline

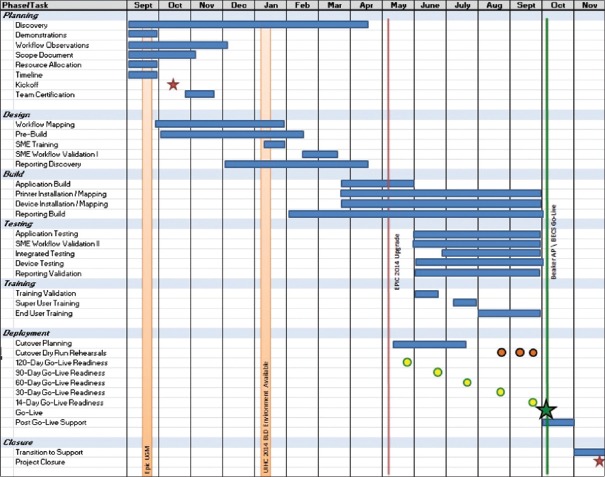

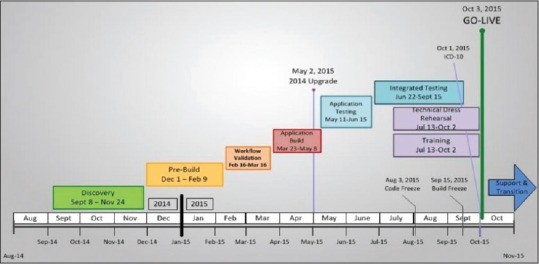

The project overall took 13 months from the beginning of the planning stage to the day of go-live [Figure 1]. Postimplementation optimization officially continued as part of the project for several months after go-live, and optimization has continued through routine departmental quality improvement activities to the present. Implementation activities were divided into several phases, including planning, design, build, training, and testing [Figure 2]. The project was complicated by a concurrent upgrade of our live Epic environment from version 2012 to version 2014, which required validation by our SMEs during the build phase of the project.

Figure 1.

Beaker AP Timeline

Figure 2.

Beaker anatomic pathology timeline with demonstration of task phases

Preimplementation challenges

Electrical power concerns

One unexpected problem that we encountered in the pre-go-live period was that the additional computer workstations and label printers needed for specimen tracking and just-in-time label generation workflows required more electrical power than was available. Our AP laboratory is housed in a facility that was designed 40 years before the implementation. Working with our department's facilities manager, we identified unused outlets in our histology laboratory and nearby rooms that could be relocated to meet the electrical power needs of the histology laboratory. This issue highlights the importance of evaluating early the physical space, power, and network connectivity requirements of the new computers and equipment needed to support LIS workflows in the laboratory.

Data conversion

Anatomic pathologists require easy access to previous AP result text when viewing new material. During our institution's transition to Epic's EMR in 2009, most of our AP reports had been imported from our LIS into the Epic EMR. The exceptions were cases that came through the department's outreach laboratory, because those patients were not considered University of Iowa patients. Because Beaker and the Epic EMR are integrated, those cases needed to be imported into Epic. This involved matching them to existing patients, or verifying that they did not already exist in our EMR and generating new medical record numbers. We found several tens of thousands of records to convert. We worked with a consulting service (S&P Consultants, Braintree, MA) to import the data into the Epic EMR. The process was not completely smooth: hand-entered demographics had inevitably created duplicate patients over the years, and joining tables in old database systems always involves some uncertainty. We began with small batches and worked up to extracting one year of results per batch. For the initial small batches, we verified 100% of transferred results by comparing screens in the old and new systems. This thorough validation of the early small batches allowed the consulting service to improve their algorithm. After a few false starts, we were able to verify that all of our old records were being transferred satisfactorily into the Epic EMR and were confident enough to reduce our validation to checking 10 records of each case type in each year-sized batch.
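The reduced spot-check on year-sized batches can be sketched as stratified sampling. This is a minimal illustration, not the procedure the consulting service or our team actually ran: the record fields (`year`, `case_type`, `id`) and the function name `validation_sample` are hypothetical, and a fixed random seed is assumed so that the audit sample can be reproduced.

```python
import random
from collections import defaultdict


def validation_sample(records, per_group=10, seed=0):
    """Pick up to `per_group` records from each (year, case type) group of a batch.

    `records` is a list of dicts with hypothetical keys "year" and "case_type".
    Groups with fewer than `per_group` records are returned in full.
    """
    groups = defaultdict(list)
    for rec in records:
        groups[(rec["year"], rec["case_type"])].append(rec)

    rng = random.Random(seed)  # fixed seed so the same audit sample can be regenerated
    sample = []
    for key in sorted(groups):  # deterministic group order
        members = groups[key]
        sample.extend(rng.sample(members, min(per_group, len(members))))
    return sample
```

With a batch of 25 surgical and 4 cytology records from one year, the sketch returns 14 records to compare screen by screen: 10 surgical and all 4 cytology.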

Specimen source dictionary

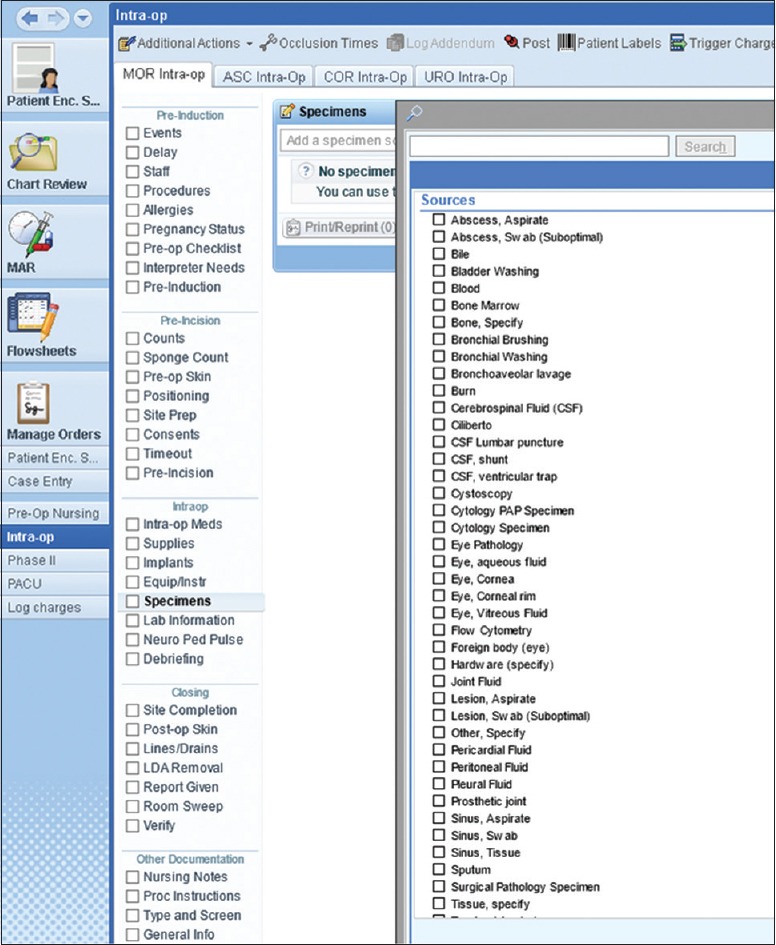

Specimen Source Dictionary ORD325 is a single, shared list used by all Epic applications. We found that the groups using the source list have different goals. For instance, microbiology requires a list sophisticated enough to dictate which plate labels and testing protocols should be generated, whereas the surgical pathology laboratory prefers a simplified list with limited sources to reduce errors in the downstream selection of specimen protocols, which trigger specific cassette and slide protocols and billing codes.

Because we had already implemented Beaker CP in our institution, the specimen source dictionary had been built around the microbiology schema; however, the source list required substantial expansion (to >200 sources) to incorporate sources applicable to AP. In addition to the increased list size, common sources, such as “tissue,” have overlapping schemata in which one source could be linked to more than one pathology order and/or test. In this section, we focus on the challenges of using the specimen source dictionary in OpTime (the Epic module for the surgical suite) and the downstream implications for the Surgical Pathology Laboratory [Figure 3].

Figure 3.

Specimen source dictionary in OpTime

In our meetings with operating room (OR) staff during the Beaker AP implementation project, nursing staff expressed a strong desire not to have overlapping schemata in the same list. Moreover, the OR nurses expressed concerns about selecting specimen sources from a single, comprehensive list. Without the ability to limit the available sources by laboratory or test, the nurses believed that selecting specific sources would significantly complicate their workflow and lead to inadvertent ordering errors. Their preference was not to be required to select a specimen source, but simply to choose the desired test or destination laboratory and then type the source description into the free-text specimen description field (the equivalent of handwriting the source on a blank specimen label, as had been done in the legacy system).

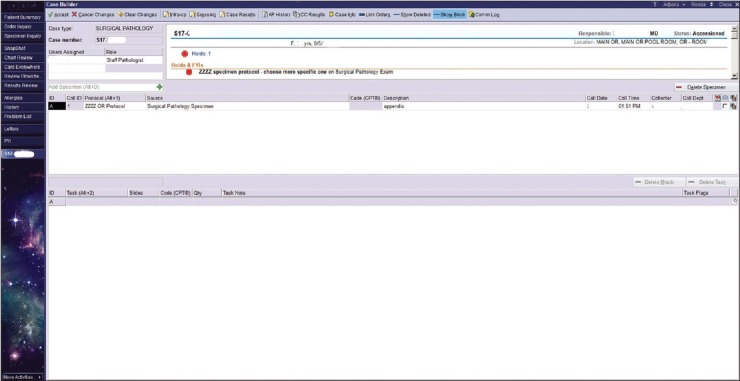

One argument in favor of using the source list could have been that choosing individual sources would allow automated selection of specimen protocols for AP specimens. However, this is not the case: selecting a specimen source in OpTime does not automatically populate a default specimen protocol. The term “appendix” is an illustrative example [Figure 4]. If an OR nurse selects the tissue source “appendix,” the source does not default to the specimen protocol “appendix.” The specimen protocol must be selected manually by someone in the surgical pathology gross room to generate cassettes, slides, and billing codes.

Figure 4.

Case builder screen seen from the Surgical Pathology Laboratory. In this example, if the OR had chosen “appendix,” the possible protocols would have been narrowed to just three

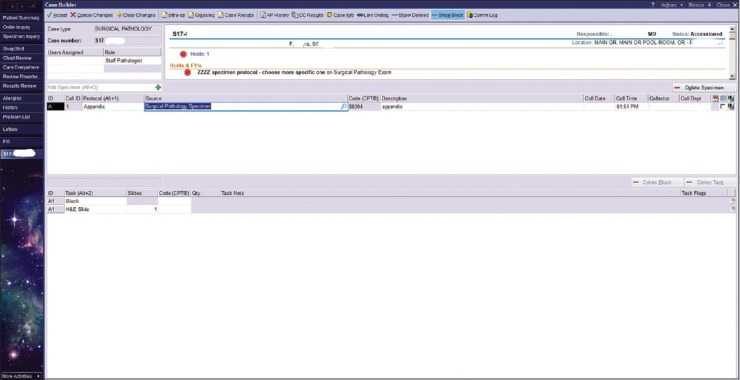

The compromise that we created involved OR nurses choosing a dummy specimen source, “Surgical Pathology Examination,” for specimens destined for the Surgical Pathology Laboratory [Figure 5]. This specimen source is then updated based on the free-text specimen description by the laboratory accessioner or the individual grossing the specimen.

Figure 5.

Case builder screen seen from the Surgical Pathology Laboratory. A compromise was reached with the OR nurses in which a “dummy” source was created for specimens destined for Surgical Pathology. This specimen source is then updated in the Surgical Pathology Laboratory by the accessioner or the individual grossing the specimen

To track specimens coming from the ORs, we had to create a report that looks for specimens “marked as sent” in the OR OpTime navigator but not yet received. This report is inelegant and does not adapt well when errors are made. For instance, if the OR nurse enters duplicate specimens into the OpTime navigator in error, and we catch the error and cancel the duplicate part, the report will still show the erroneously ordered specimen as not received. The report looks only at specimens in a 24-h period and must be refreshed manually. We do not run this report routinely because we have not found it useful.
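The logic of the outstanding-specimen report, and its blind spot for cancelled duplicates, can be modeled in a few lines. This is a hypothetical sketch, not the Epic report itself: the `Specimen` fields and the `exclude_cancelled` switch are assumptions introduced for illustration. With the switch off (the default), the model reproduces the behavior described above, in which a cancelled duplicate still appears as not received.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Specimen:
    specimen_id: str
    sent_at: datetime        # when the OR marked the specimen as sent (hypothetical field)
    received: bool = False   # accepted in the laboratory
    cancelled: bool = False  # part cancelled in the laboratory, e.g., an OR duplicate


def outstanding_specimens(specimens, now, window=timedelta(hours=24), exclude_cancelled=False):
    """Return IDs of specimens marked as sent within `window` before `now` but not received."""
    start = now - window
    outstanding = []
    for s in specimens:
        if not (start <= s.sent_at <= now):
            continue  # outside the fixed look-back window
        if s.received:
            continue
        if exclude_cancelled and s.cancelled:
            continue  # only skipped when cancellation status is consulted
        outstanding.append(s.specimen_id)
    return outstanding
```

A specimen sent 30 h earlier and never received falls outside the window entirely, which is another reason a fixed 24-h look-back needs manual follow-up.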

Areas not using the OpTime navigator must enter orders through Order Entry or Managed Orders, which is referred to as the “clinic collect” method of ordering. Users first select the appropriate encounter and then, through Order Entry/Managed Orders, select the desired order; they do not have to select a specific source first. For instance, if a clinic wants to send a skin biopsy using a dermatopathology order, the user can select the appropriate office visit, open Order Entry, type “Dermatopathology,” and select the dermatopathology order. Each order is tailored with specific questions and required fields that must be completed before final submission (see screenshots). The Surgical Pathology Examination order has only two required fields and does not require the user to select a tissue source from an expansive dictionary.

The “clinic collect” method of ordering does not account for each specimen being collected and does not communicate directly with Beaker AP. For instance, if the clinician or nurse enters a tissue source in the order, the source does not carry over into Case Builder as it does in the OR collection process. The collection information does, however, display in Case Builder with both collection methods.

One advantage of the “clinic collect” method is that any pertinent clinical information/history typed into the order by the clinician/nurse can be automatically populated into the “Clinical History” section of the pathology report. This information can also be displayed in the information window of Case Builder. The OR collection method does not provide a convenient section for the surgeon to enter pertinent information to communicate to pathology.

Cytology trainee workflow

Our cytopathology workflow is divided into gynecologic specimens, primarily liquid-based Pap-stained preparations, and nongynecologic specimens, encompassing fluids and fine-needle aspiration specimens. Several significant issues arose during the build of the cytopathology workflow that affected both specimen types.

Gynecologic cytology

An issue that we discovered during the build of our gynecologic cytopathology workflow involved the recording of preliminary interpretations. Because we are a teaching institution, numerous people may look at each case. The people reviewing a gynecologic case can include a cytotechnologist screener and a mandated rescreener (for gynecologic cases only), a pathology resident, a medical student, and up to two cytopathology fellows. Our cytopathologists wanted an easy way to see the interpretation of each person who viewed the case. The gynecologic cytology case type in Beaker AP is built to record two preliminary interpretations (screener and rescreener) but could not accommodate the additional reviewers who are part of a teaching workflow. At go-live, and still currently, we can record only two interpretations. Another shortfall for gynecologic cases is that we must manually enter the pathologist attestation statement of review for any case that needs to be signed out by a pathologist. This problem has not been resolved in Beaker AP v2017.

Nongynecologic cytology

After exploring several options, we rebuilt the nongynecologic cytology case types on a surgical pathology template because the proposed nongynecologic template (1) would not allow ordering of cell blocks from cytologic material, since “tissue” blocks could not be added to any cytology specimen (although preparing cell blocks is a universal practice in pathology laboratories), and (2) would not allow free text in the interpretation field. Building on the surgical pathology template allows free text and the use of canned text phrases, which is highly useful in cytology, where there is considerable overlap between surgical pathology and nongynecologic cytology diagnoses. Unfortunately, from a teaching standpoint, the Surgical Pathology template does not allow any previous interpretation other than the cytotechnologist's and a cytopathology fellow's opinion to be saved into the final diagnosis discrete field. We have worked around this by entering our signature lines in an “Internal Comment” field. The cytotechnologist's name has to be the first line in this field for our statistical reports to count the cytotechnologist as having screened these cases for regulatory purposes. The downside of this choice was that automatic counting of the number of cases viewed by our cytotechnologist screeners to maintain regulatory compliance is not functional, and nongynecologic cases must be tallied manually.

A significant functional downside of using a Surgical Pathology template for every nongynecologic cytology case, however, is that each case shows that a paraffin tissue block exists, even though the majority of our nongynecologic cases do not have tissue blocks. Another downside of the Surgical Pathology template is that discrete values cannot be chosen automatically; instead, users must select from a dictionary of separate discrete values that do not always match the final diagnosis exactly. This problem has not been resolved in Beaker AP v2017.

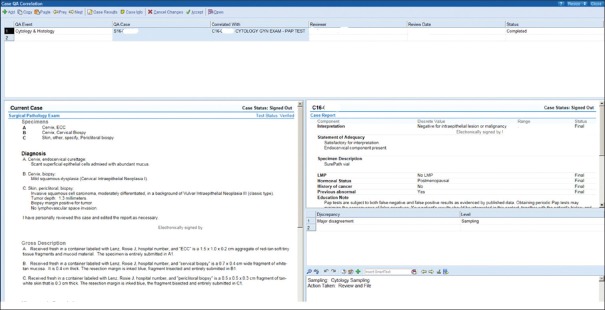

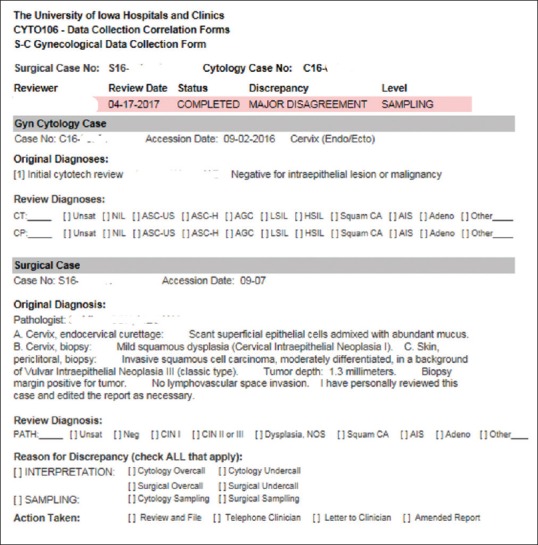

Cytology/histology correlation

Using HEDI reports, we identify patients with a cytology case who have also had a surgical pathology case within a specified period, as well as patients with a surgical pathology case who have had a cytology case within a specified period. We review this report for cases that are candidates for cytology/histology correlation, looking for truly matching body sites. Once a candidate is identified, we use a correlation form to document the case review and compile all the information in Case QA in Beaker. A statistical report can then be run in Case QA using HEDI [Figures 6 and 7].
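The candidate search behind the correlation report amounts to a per-patient join of cytology and surgical cases within a time window. The sketch below is a hypothetical illustration, not the HEDI report: the tuple layout, the `cyto`/`surg` labels, and the 90-day default window are assumptions. Because it uses the absolute date difference, it finds pairs in either order (cytology first or surgery first); confirming that the body sites truly match remains a manual review step.

```python
from datetime import date


def correlation_candidates(cases, window_days=90):
    """Pair each cytology case with surgical cases on the same patient within window_days.

    cases: iterable of (patient_id, case_id, case_type, collected_date) tuples,
    where case_type is "cyto" or "surg" (hypothetical labels).
    Returns sorted (patient_id, cyto_case_id, surg_case_id) tuples.
    """
    by_patient = {}
    for patient_id, case_id, case_type, collected in cases:
        by_patient.setdefault(patient_id, []).append((case_id, case_type, collected))

    pairs = []
    for patient_id, items in by_patient.items():
        cytos = [c for c in items if c[1] == "cyto"]
        surgs = [c for c in items if c[1] == "surg"]
        for cyto_id, _, cyto_date in cytos:
            for surg_id, _, surg_date in surgs:
                # abs() catches both cytology-then-surgery and surgery-then-cytology
                if abs((surg_date - cyto_date).days) <= window_days:
                    pairs.append((patient_id, cyto_id, surg_id))
    return sorted(pairs)


if __name__ == "__main__":
    example = [
        ("P1", "C1", "cyto", date(2016, 1, 10)),
        ("P1", "S1", "surg", date(2016, 2, 1)),
    ]
    print(correlation_candidates(example))
```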

Figure 6.

Data collection screen for cytology/histology correlation

Figure 7.

Cytology/histology case correlation report

Frozen section

We encountered difficulty moving the frozen section workflow from paper to an electronic system early in our build process. Epic's proposed generic workflow for frozen sections was as follows:

The process would begin in OpTime, with the OR nurses selecting the frozen section indicator to signal downstream that the specimen was for frozen section. This indicator would appear as a checkmark under a “snowflake” icon in Case Builder, and checking the snowflake box would automatically generate a cassette and frozen section slide labels in the task field. Once received, the specimen would move to a prosector, who could enter a quick gross description into Beaker and then prepare the frozen section slides at the cryostat and staining bench. Next, the surgical pathology fellow, with faculty oversight, would review the slides and type his or her interpretation into Case Results. The “Preliminary Verify” (Prelim Verify) button would then send any text already entered into the report to the EMR. If the pathologist reviewing the frozen section slides was not the pathologist who would ultimately sign out the final diagnosis, he or she would be assigned as an “intraoperative consultant” to meet regulatory requirements and to receive billing credit for the frozen section interpretation.

Overall, we believe that this process could work well in an institution that sees very few frozen sections, but the proposed workflow breaks down when there are multiple frozen section specimens on a single case, and it does not incorporate requests for touch preparations or intraoperative “gross only” assessments, both common at our institution. Of note, the snowflake icon indicates only a frozen section, whereas most large institutions must accommodate requests for all types of intraoperative consultation. Beaker does offer rapid frozen section accessioning through an option to default frozen section slide tasks when the frozen section icon is selected in OpTime. For instance, if the default is two frozen section slide labels, then once the laboratory receives the specimen, two slide labels will default in Case Builder and print automatically when the laboratory technician clicks “Accept.” However, if the prosector cutting the frozen section needs more than two slides, a new frozen section task must be added in Case Builder to print additional slide labels.

A significant problem with Epic's design of the frozen section function is that the accessioning, grossing, and frozen interpretation activities are mutually exclusive: an individual entering data in one of these activities for a specific case locks the case for the other activities. The same is true for Case Results; entering text on a specific case in Case Results locks activity in Case Builder and vice versa. If multiple parts of a single case arrived in the laboratory at the same time, it would be impossible to accession, gross, and interpret the frozen sections in a timely manner. We encountered similar locking issues in our prior analysis of the Beaker CP module.
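The effect of case-level locking on simultaneous frozen sections can be illustrated with a toy model. This is an assumption-laden sketch of the behavior described above, not Epic's implementation: `CaseLockRegistry`, its methods, and the case number format are hypothetical. One lock covers the whole case, so while one user holds a case in any activity, every other user is refused, even if they are working on a different part.

```python
class CaseLockRegistry:
    """Toy model: one exclusive lock per case, shared by all activities."""

    def __init__(self):
        self._holders = {}  # case_id -> (user, activity) currently holding the case

    def open(self, case_id, user, activity):
        """Try to open a case in an activity; return False if another user holds it."""
        holder = self._holders.get(case_id)
        if holder is None or holder[0] == user:
            self._holders[case_id] = (user, activity)
            return True
        return False  # locked, regardless of which activity or part is requested

    def close(self, case_id, user):
        """Release the case if `user` is the current holder."""
        if self._holders.get(case_id, (None,))[0] == user:
            del self._holders[case_id]
```

In the model, two parts of the same case that arrive together must be handled one after another. A lock keyed by part rather than by case would let them proceed in parallel; that is our illustration of the trade-off, not a Beaker configuration option.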

Frozen section interpretation requires rapid turnaround to support timely decision-making in the OR, and our faculty and fellows felt that this locking system would lead to gridlock and delays. In addition, the surgical pathology faculty members were uncomfortable with preliminary information going to the chart, where any clinician could see it; they worried that clinicians would make decisions based on incomplete information without knowing whom to contact if the final diagnosis differed significantly from the preliminary frozen section diagnosis. After much deliberation, we decided to keep our paper system of recording intraoperative interpretations and calling the OR to report the results. The frozen section paperwork is then scanned into Beaker by our transcriptionists. Issues with the frozen section workflow have not been resolved in Beaker AP v2017.

Integration with cassette etchers

At our facility, we use barcoded cassette etchers and a multicolor cassette scheme that provides downstream visual cues for processing in Histology. For example, pink cassettes signify cases that require immediate (STAT) processing, and gray cassettes signify dermatopathology cases. Barcode cassette etching was not integrated with our legacy system but was handled by the cassette vendor's etching software. That software allowed us to create print jobs from various workstations, send print jobs to any of the several cassette etchers in use, designate specific colors, and create notes that would print on each cassette. All functions were user-controlled and user-friendly. With the implementation of Beaker AP, we lost most of this functionality.

In Beaker AP, cassette color is determined by the specimen protocol. In some cases, we had to develop duplicate or triplicate specimen protocols to account for various cassette color options. For instance, “lipoma” specimens sent from our Dermatology Clinic (whose specimens are interpreted and billed separately by faculty members of the Dermatology Department) required a specimen protocol that produces a gray cassette, while “lipoma” specimens sent from the main OR (interpreted and billed by faculty members of the Pathology Department) required a protocol that produces a peach cassette.
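Because cassette color is tied to the specimen protocol, each additional color for the same specimen type costs another protocol. The sketch below models that constraint with a lookup table; the protocol names, the `(specimen type, submitting area)` key, and both functions are hypothetical, and only the gray/peach lipoma example comes from our build.

```python
# Hypothetical protocol table. In this model the cassette color is a fixed
# attribute of the specimen protocol, so it cannot be chosen independently.
PROTOCOLS = {
    # (specimen type, submitting area) -> (protocol name, cassette color)
    ("lipoma", "Dermatology Clinic"): ("Lipoma - Dermatology", "gray"),
    ("lipoma", "Main OR"): ("Lipoma", "peach"),
}


def select_protocol(specimen_type, submitting_area):
    """Return (protocol name, cassette color) for a specimen type and submitting area."""
    try:
        return PROTOCOLS[(specimen_type, submitting_area)]
    except KeyError:
        raise KeyError(
            f"no protocol built for {specimen_type!r} from {submitting_area!r}"
        ) from None


def protocols_per_specimen_type():
    """Count protocols per specimen type, i.e., the duplication needed for colors."""
    counts = {}
    for specimen_type, _area in PROTOCOLS:
        counts[specimen_type] = counts.get(specimen_type, 0) + 1
    return counts
```

Every new color requirement for an existing specimen type adds a row, and therefore a protocol, rather than a per-case option.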

Beaker AP also requires that a specific cassette etcher be designated for each workstation, and users cannot easily reroute print jobs. This is troublesome for users who must share cassette etchers and has often resulted in printing delays.

Specimens without a block

Each case created in Beaker AP requires the assignment of a specimen protocol. The typical surgical specimen generates a block/cassette task and a slide task. However, a block task is not needed for gross pathology specimens, for most surgical pathology consultation cases sent to our department from other institutions, or for the nongynecologic cytopathology specimens described above, which use a Surgical Pathology template so that a narrative report can be provided. Unfortunately, each specimen protocol assigns at least one block. Our in-house IT builders created workarounds so that the tracking function does not show a block as having been produced; however, the block tasks are still visible as pending tasks in Beaker AP's Case Preparation Work List (CPWL). To keep CPWL from becoming bogged down with incomplete tasks, these unneeded block tasks must be cleared from the pending list.

Histology

A significant improvement over our legacy LIS was the ability to print slide labels just in time. Printing labels on demand at the microtome required that each station be equipped with a label printer and a full computer workstation. However, there was not enough physical space adjacent to each microtome for this additional equipment. Our solution was to install touchscreen monitors, eliminating the need for a keyboard and mouse. We mounted each touchscreen on a movable monitor arm that swivels to a convenient position for each histotechnologist.

During the build, we discovered that task protocols had to be assigned to one of four categories: immunohistochemistry (IHC), recut, special stain, and others. We could neither change these categories nor add new ones. As a result, the “Others” category became a catch-all for tasks that did not fit into the other three. The downside was that if users were not familiar with a specific task name, finding the task in a long list of possible task protocols was time-consuming.

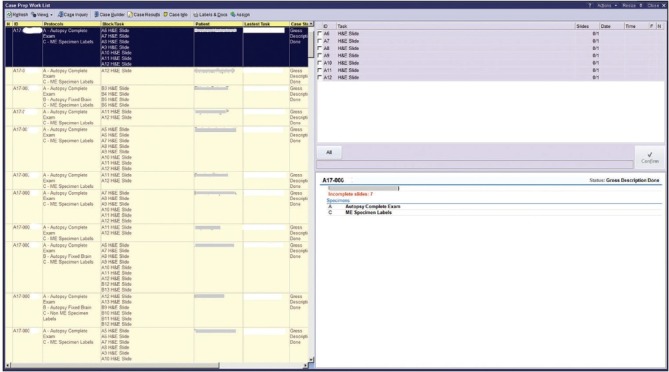

It was decided early in the build that the histology laboratory would assign tasks to various lists in CPWL [Figure 8]. Depending on bench assignment, the histotechnologist could then choose the appropriate view to direct their work. However, the CPWL screen does not show users which view they are working in; for example, no header indicates to the histotechnologist whether they are in the main, IHC, or special stains view. To make it easier for histotechnologists to tell the views apart, we assigned a color to each view so that one could tell at a glance which screen was open. This problem in CPWL has now been addressed in Beaker AP v2017.

Figure 8.

Case preparation worklist

Of note, in the year preceding go-live, while we were using our legacy LIS, the histology laboratory encountered 21 errors in which tissue from a patient other than the one named on the slide label was mounted on the slide. Since go-live, the laboratory has had only two such labeling errors, both related to a histotechnologist not following the standardized procedure for scanning blocks and printing labels.

Immunopathology

We found that Beaker AP offered advantages in our Immunopathology laboratory, a separate laboratory within the AP section. The major advantage of Beaker AP for IHC was the implementation of an interface with our IHC stainer. Before Beaker AP, each case number and stain had to be typed manually into the stainer, a time-consuming and error-prone task that required numerous pre- and post-staining checks to detect clerical errors.

However, as supplied, Beaker AP had no interface with our IHC stainer (DAKO). Overcoming this very large problem required significant dedicated preimplementation resources from institutional IT, departmental IT, and the vendor, similar to our experience integrating middleware vendors during the Beaker CP implementation at our institution. With the interface established, confirming an IHC task at the point of microtomy in the Histology laboratory generates a barcoded label and downloads the task to the IHC stainer. We have found that this interface saves approximately 60 min on each of our twice-daily IHC staining runs.

For our immunofluorescence staining procedures, the main advantage with Beaker AP is in slide labeling. In our legacy system, all slides, up to 16 in renal pathology cases, needed hand labeling. Now barcode labels are generated when the task is confirmed.

Hematopathology marrow counting

The Beaker AP module does not include a widget for counting bone marrow or blood cells. The standard workflow proposed to us by Epic was that each bone marrow case would be broken into three accession numbers: a CP case for counting the bone marrow smears, a CP case for counting the accompanying peripheral blood smear, and an AP case for the morphologic description and diagnosis. The three cases would be linked but would show up as separate results in Epic's Chart Review and Results Review activities, which are commonly used by clinicians. Our hematopathologists wanted a unified report, and the technologists on the service were not pleased with the prospect of tracking slides with three different accession numbers for a single bone marrow report. A standalone piece of software was therefore developed in-house that allows residents, fellows, and hematopathology technologists to perform differentials on bone marrow and peripheral blood slides and to calculate averages over an arbitrary number of cell counts. The software generates rich text tables that can easily be pasted into the appropriate section of the AP bone marrow report through the Windows clipboard. This issue has not been resolved in Beaker AP v.2017.
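The in-house counting tool is described only by its behavior. As a minimal sketch, assuming each count is a mapping from cell type to the number of cells tallied and that "averages" means the mean of the per-count percentages, the core calculation could look like the following. The function names are hypothetical, and the tab-delimited output stands in for the rich text table and clipboard step, which are not reproduced here.

```python
from __future__ import annotations


def differential_percentages(counts: dict[str, int]) -> dict[str, float]:
    """Convert one differential count (cell type -> cells tallied) into percentages."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("a differential count must contain at least one cell")
    return {cell: 100.0 * n / total for cell, n in counts.items()}


def average_differentials(all_counts: list[dict[str, int]]) -> dict[str, float]:
    """Average the per-count percentages over an arbitrary number of counts.

    A cell type missing from one count contributes 0% for that count.
    """
    if not all_counts:
        raise ValueError("at least one count is required")
    cell_types = sorted({cell for counts in all_counts for cell in counts})
    per_count = [differential_percentages(counts) for counts in all_counts]
    return {
        cell: sum(p.get(cell, 0.0) for p in per_count) / len(per_count)
        for cell in cell_types
    }


def format_table(averages: dict[str, float]) -> str:
    """Render a tab-delimited table (a stand-in for the rich text table)."""
    rows = ["Cell type\tAverage %"]
    rows += [f"{cell}\t{pct:.1f}" for cell, pct in averages.items()]
    return "\n".join(rows)


# Two hypothetical 100-cell counts from the same marrow aspirate
marrow_counts = [
    {"blasts": 2, "neutrophils": 60, "lymphocytes": 38},
    {"blasts": 4, "neutrophils": 56, "lymphocytes": 40},
]
print(format_table(average_differentials(marrow_counts)))
```

Averaging percentages weights every count equally; pooling the raw cell numbers instead would give larger counts more weight, so which convention a laboratory uses is a design choice worth stating in the report.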

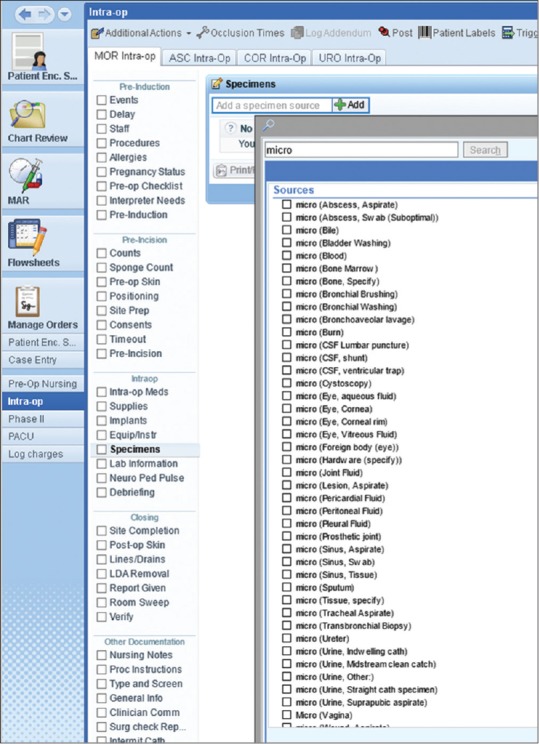

Microbiology

Microbiology sources in Epic Beaker had been built to trigger specific testing in the laboratory. These were supplemented with free-text “site” specifications; for example, the source for a blood culture would be “venipuncture,” which triggers the blood culture laboratory workflow, with the “site” specified as “left antecubital” for documentation in the Epic EMR. “Source,” therefore, consisted of a limited number of microbiology selections without equivalents in other workflows such as AP [Figure 9]. With Beaker AP, a number of redundant or near-neighbor sources were added, and the source list expanded to a very large number (>200). This was manageable in the regular Epic ordering workflow, in which providers were presented only with the sources appropriate to the specific microbiological test being ordered. However, in Epic OpTime, the ordering workflow is source-driven rather than culture-driven, forcing providers to parse several hundred potential sources to find the correct one. We, therefore, determined which sources were commonly used by surgeons and built these as segregated “micro” sources that can be found by searching for a synonym. There are approximately 35 such sources at present, all of which fit on one screen for ease of ordering when “micro” is entered as a keyword during ordering. This approach mitigated the impact of the ordering change, which would otherwise have presented an inconvenient number of sources, slowed work during surgery, and resulted in misordering. Sources not in OpTime are accommodated by the routine Epic ordering process outside OpTime, in which providers select a culture, then a source for the culture, and then add the “site” designation, at the expense of extra time.
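The OpTime workaround amounts to tagging a curated subset of sources with a shared synonym so that one keyword returns a short list. The sketch below illustrates that idea only; the `Source` class, the lower-case synonym storage, and the matching rule (substring match on the name, exact match on a synonym) are assumptions for illustration, not Epic's actual search logic.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    """A specimen source entry in a hypothetical shared source dictionary."""
    name: str
    synonyms: frozenset = frozenset()  # lower-case search synonyms


def search_sources(sources: list[Source], term: str) -> list[Source]:
    """Return sources whose name contains the term or whose synonyms include it."""
    term = term.strip().lower()
    return [s for s in sources if term in s.name.lower() or term in s.synonyms]


MICRO = frozenset({"micro"})  # the shared keyword for the curated OR subset
SOURCE_DICTIONARY = [
    Source("Tissue", MICRO),
    Source("Abscess", MICRO),
    Source("Surgical Pathology Examination"),
    Source("Venipuncture"),
]

print([s.name for s in search_sources(SOURCE_DICTIONARY, "micro")])
# prints ['Tissue', 'Abscess']
```

Keeping the curated subset small (about 35 entries in the text) is what lets the keyword result fit on one screen; the synonym does the filtering, so the full dictionary can stay large for other workflows.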

Figure 9.

OR OpTime filtering for microbiology specimens. Typing “micro” in the search field brings up the list of sources

Death log

The autopsy service (Decedent Care Center) used a “Death Log” to document all deceased patients. It tracked next of kin, possessions, the funeral home that receives the body, disposition, death certificate, cause and manner of death, and, if applicable, autopsy date and prosector. The Death Log was a complicated Microsoft Access database that was difficult to support and often became locked or corrupted when more than one user attempted to use it concurrently. Given these limitations, we were eager to implement this functionality in our new LIS. An initial query to Epic about how to implement this functionality pointed to Smart Forms as a way to collect this information, but the information would not be stored discretely and would not support the searching and report generation capabilities that we required. We eventually settled on a solution in which each death generates a CP test and the various data points are test components. This solution permits us to track the data that we need and to generate reports through the Clarity database. Ad hoc searching continues to be a struggle with this system.
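The advantage of modeling each death as a test with discrete components, rather than as free text in a form, is that every data point becomes individually queryable. A minimal sketch of that shape follows; the component names mirror the data points listed above, but the class, field names, and helper functions are hypothetical and do not represent Beaker's internal data model.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date

# Hypothetical component names mirroring the data points the Death Log tracked
COMPONENTS = (
    "next_of_kin", "possessions", "funeral_home", "disposition",
    "death_certificate", "cause_of_death", "manner_of_death",
    "autopsy_date", "prosector",
)


@dataclass
class DeathLogTest:
    """One 'test' per death; each tracked data point is a discrete component."""
    decedent_id: str
    date_of_death: date
    components: dict[str, str] = field(default_factory=dict)


def missing_components(entry: DeathLogTest) -> list[str]:
    """List components that have not yet been resulted."""
    return [name for name in COMPONENTS if not entry.components.get(name)]


def find(entries: list[DeathLogTest], component: str, value: str) -> list[str]:
    """Return decedent IDs whose component exactly matches a value."""
    return [e.decedent_id for e in entries if e.components.get(component) == value]


log = [
    DeathLogTest("D-001", date(2016, 3, 1), {
        "next_of_kin": "spouse", "possessions": "released",
        "funeral_home": "Home A", "disposition": "burial",
        "death_certificate": "signed", "cause_of_death": "pending",
        "manner_of_death": "natural",
    }),
    DeathLogTest("D-002", date(2016, 3, 2), {"funeral_home": "Home B"}),
]
print(missing_components(log[0]))          # ['autopsy_date', 'prosector']
print(find(log, "funeral_home", "Home B"))  # ['D-002']
```

With a free-text form, the same questions would require parsing prose; with discrete components they reduce to simple field lookups, which is what makes Clarity reporting feasible.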

ISSUES ENCOUNTERED SOON AFTER IMPLEMENTATION

Reporting

Beaker offers two methods for data analytics, including report generation and ad hoc searching. The first, Reporting Workbench, allows users to create reports and ad hoc queries themselves. A fairly user-friendly interface facilitates query generation by users who may not be familiar with SQL or other database query languages. Reporting Workbench queries act directly on the production Caché database that underlies the Epic products, allowing for up-to-date results. However, because the queries run against the production database, query run-time at our institution is limited to one hour for users outside the central IT department. In our system, queries with a date range longer than 2–3 weeks tend to time out before completion. This limits the utility of Reporting Workbench when a large amount of data needs to be analyzed. Reporting Workbench is used for the laboratory dashboards to direct workflow and to identify tests that are falling behind the expected turn-around time (TAT).

The second analytic option involves the Clarity database, a SQL-based relational database that is updated with new data nightly. Queries are built with SQL, and reports are generated using Crystal Reports. At our institution, creating queries against the Clarity database is restricted to the central IT department's reporting team. Queries submitted to Clarity complete more quickly because, for the types of queries that we generally perform, the relational database is better optimized than the hierarchical database architecture of the Epic/Beaker production database. On the other hand, because the data are a day old, this system is not suited to dashboard reports or any other report that needs to be real-time. Moreover, because only a few people at our institution can create queries with this method, it is not suitable for ad hoc query generation. This system is used to generate most long-term operational reports, such as TAT.

One area in which both data analytics systems fail is the use most commonly requested by our pathologists: the ability to perform a free-text search of all cases over a wide time range, either to find a specific report or to find a series of reports with similar diagnostic wording. The Clarity database stores the large text blobs that make up a surgical pathology report as smaller blocks of text, which are reconstituted to generate the complete text. This complicates free-text searching: if the query phrase is split across two of the smaller blocks, a simple query will miss that result. Our central IT department has developed a workaround for this problem that involves pulling all of the text from all of the report types in question over the given time period, reconstituting the full text of each report from the individual small text blocks, and then filtering on the presence of the phrase in question. This process is sufficient for some searches but is not user-friendly, requiring much more time to set up search parameters than the legacy LIS did. Many anatomic pathologists now perform quick searches less often and continue to rely on the pre-Beaker AP cases stored in the legacy LIS to find cases in a more timely manner for research, case conference presentations, or personal interest, even though those cases are becoming increasingly dated. Problems associated with reporting have not been addressed in Beaker AP v.2017.
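The difference between searching stored blocks individually and searching reconstituted reports can be shown with a small sketch. It assumes, hypothetically, that the text is available as rows of (report ID, block number, text) and that a block boundary can fall anywhere, even inside a word, so blocks are concatenated with no separator; the actual Clarity table layout is not described here.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical row shape: (report_id, block_number, text_block)
Block = tuple[str, int, str]


def naive_block_search(blocks: list[Block], phrase: str) -> list[str]:
    """Search each stored block independently (misses phrases split across blocks)."""
    phrase = phrase.lower()
    return sorted({rid for rid, _, text in blocks if phrase in text.lower()})


def reconstitute(blocks: list[Block]) -> dict[str, str]:
    """Reassemble each report's full text by concatenating its blocks in order."""
    parts: dict[str, list[tuple[int, str]]] = defaultdict(list)
    for rid, block_no, text in blocks:
        parts[rid].append((block_no, text))
    return {rid: "".join(text for _, text in sorted(chunks)) for rid, chunks in parts.items()}


def full_text_search(blocks: list[Block], phrase: str) -> list[str]:
    """Reconstitute every report first, then filter on the phrase."""
    phrase = phrase.lower()
    return sorted(rid for rid, text in reconstitute(blocks).items() if phrase in text.lower())


BLOCKS = [
    ("S15-0001", 2, "oma, grade 2."),
    ("S15-0001", 1, "Invasive ductal carcin"),
    ("S15-0002", 1, "Benign breast tissue."),
]
print(naive_block_search(BLOCKS, "ductal carcinoma"))  # []
print(full_text_search(BLOCKS, "ductal carcinoma"))    # ['S15-0001']
```

Because every report in the date range must be reassembled before filtering, the cost grows with the size of the range rather than with the number of matches, which is consistent with the slow, cumbersome searches described above.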

Case builder error corrections

Case Builder is the primary application used in the gross Surgical Pathology laboratory for accessioning and gross dissection. The application is used by both full-time staff (pathologists’ assistants) and rotating staff, including pathology residents and medical students. One issue we discovered after go-live was how little forgiveness Case Builder offers for correcting user errors. For instance, if a resident trainee mistakenly selects the specimen protocol for a medical renal biopsy, which includes immunofluorescent stains, multiple slide levels, and electron microscopy, rather than the appropriate protocol with a single routine hematoxylin and eosin slide, the error is difficult to correct. Case Builder treats each task, once saved, as a unique item that cannot be replicated. In this example, Case Builder provides only two options for correcting the error: (1) enter the correct specimen protocol and delete all tasks or (2) enter the correct specimen protocol and keep all tasks. The problem with deleting all of the tasks associated with the incorrect protocol is that the first cassette (A1) is also deleted, so the trainee would have to submit the tissue in the second cassette (A2), which many prosectors find misleading. If the trainee chooses the second option and keeps all tasks, he/she can submit the tissue in the first cassette (A1); however, all of the immunofluorescent stains, slide levels, and electron microscopy tasks are kept as well. The compromise we have adopted is to keep all tasks and delete the unwanted tasks one by one, which in some cases can be rather time-consuming and laborious. However, this procedure allows us to submit tissue in the first cassette, so downstream users are not confused by an out-of-sequence numbering scheme. This issue appears to be resolved in Beaker AP v.2017.
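The trade-off between the two correction options can be illustrated with a toy model. This is a sketch of the behavior as described, not of Case Builder's internals, and it rests on two labeled assumptions: saved cassette numbers are never reissued after deletion (inferred from the description that deleting all tasks forces tissue into A2), and keeping all tasks adds nothing from the newly selected protocol. The protocols and class are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical protocols; "cassette" creates the next numbered block for the part.
RENAL_BIOPSY = ["cassette", "H&E level 1", "H&E level 2", "H&E level 3",
                "immunofluorescence", "electron microscopy"]
ROUTINE = ["cassette", "H&E level 1"]


@dataclass
class SpecimenPart:
    letter: str
    tasks: list[str] = field(default_factory=list)
    next_cassette: int = 1  # assumption: saved cassette numbers are never reissued

    def apply_protocol(self, protocol: list[str]) -> None:
        for task in protocol:
            if task == "cassette":
                self.tasks.append(f"{self.letter}{self.next_cassette}")
                self.next_cassette += 1
            else:
                self.tasks.append(task)

    def change_protocol(self, protocol: list[str], keep_tasks: bool) -> None:
        if keep_tasks:
            return  # option 2 (assumed): all saved tasks, including A1, are retained
        self.tasks.clear()  # option 1: A1 is deleted, but its number stays used
        self.apply_protocol(protocol)

    def delete(self, task: str) -> None:
        self.tasks.remove(task)


# Option 1: delete all tasks, so the tissue must go into A2
option1 = SpecimenPart("A")
option1.apply_protocol(RENAL_BIOPSY)
option1.change_protocol(ROUTINE, keep_tasks=False)
print(option1.tasks)  # ['A2', 'H&E level 1']

# Compromise: keep all tasks, then delete the unwanted ones one by one
compromise = SpecimenPart("A")
compromise.apply_protocol(RENAL_BIOPSY)
compromise.change_protocol(ROUTINE, keep_tasks=True)
for unwanted in ["H&E level 2", "H&E level 3", "immunofluorescence", "electron microscopy"]:
    compromise.delete(unwanted)
print(compromise.tasks)  # ['A1', 'H&E level 1']
```

The number of manual deletions in the compromise grows with the size of the mistaken protocol, which is why it can be laborious for protocols with many levels and ancillary studies.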

Surgical pathology fellow-directed preliminary diagnosis

One workflow that we have struggled to make functional at our institution is preliminary diagnosis. Our workflow differs significantly from that envisioned by the creators of Beaker AP. We have a surgical pathology fellow assigned to a “hot seat,” or preliminary diagnosis, service. The fellows assigned to this service quickly preview all of the surgical pathology slides before they are viewed by residents or pathologists. They record a short diagnosis or impression so that clinicians can call for an early indication of the initial differential diagnosis and of whether the case needs more work-up before a diagnosis can be rendered. In our legacy LIS, their comment would trigger a message to the medical record that a preliminary diagnosis was available at the hot seat phone extension. The fellow manually recorded in the LIS the contact information of any clinician who called for the preliminary diagnosis, so that the clinician could be informed if there was a discrepancy between the preliminary and final diagnoses. In addition to being a satisfier for inpatient clinicians, this service also provides a second opinion and helps ensure high-quality results. As discussed above in the results for “Frozen Section,” Beaker AP has a Prelim Verify button that sends whatever is currently written in the report to the chart in an unfinished state, but we are uncomfortable with unfinished reports being viewable and actionable without the ability to inform those who may have received an incorrect diagnosis from an incomplete report. The flip side is that Prelim Verify is the only way to send anything to the medical record for this report before final verification.
To replicate our previous functionality, we built a very complex set of rules that would prevent the main portions of the report from being sent to the medical record by the Prelim Verify button and would instead insert the message about calling the hot seat service. These rules worked in most scenarios, but occasionally a pathology resident’s version of the report was sent to the medical record by the Prelim Verify button. As a result, a few preliminary reports that were at variance with the final diagnosis were temporarily present in patients’ charts. We could not find a foolproof way to prevent this, so we eventually discontinued using the Prelim Verify function for this workflow. We now send a generic text stating that a preliminary diagnosis is usually available within a certain time period after receipt by Pathology. This problem has not been resolved in Beaker AP v.2017.

Autopsy sign-out glitches

After go-live, an issue arose that prevented preliminary autopsy reports from being signed out. We had implemented a rule that prevented a case from being signed out if it contained placeholders for missing content. Because the preliminary report is a subset of the final report, the preliminary report could not be signed out while there were any blanks anywhere in the final report. Consultation with our Epic representatives determined that a patch could be used to adjust the rule so that the placeholder check evaluates only the fields that will be sent to the chart by the action in question. In this way, only the preliminary portion of the report is checked for placeholders when the Prelim Verify button is pressed.
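The effect of the patch, scoping the placeholder check to the fields an action actually sends, can be sketched as follows. The `***` placeholder marker, the action names, and the field names are assumptions for illustration; the real Beaker rule and patch are not shown in this report.

```python
from __future__ import annotations

PLACEHOLDER = "***"  # assumed marker for unfilled content

# Hypothetical mapping from sign-out action to the report fields it sends to the chart
FIELDS_SENT = {
    "prelim_verify": ["clinical_summary", "preliminary_diagnoses"],
    "final_verify": ["clinical_summary", "preliminary_diagnoses",
                     "final_diagnoses", "microscopic_description"],
}


def fields_with_placeholders(report: dict[str, str], action: str,
                             scoped: bool = True) -> list[str]:
    """Return fields that still contain a placeholder.

    scoped=False checks every field (the original rule); scoped=True checks only
    the fields the action will send to the chart (the behavior after the patch).
    """
    fields = FIELDS_SENT[action] if scoped else list(report)
    return [f for f in fields if PLACEHOLDER in report.get(f, "")]


def can_sign_out(report: dict[str, str], action: str, scoped: bool = True) -> bool:
    return not fields_with_placeholders(report, action, scoped)


autopsy = {
    "clinical_summary": "Hypothetical clinical summary.",
    "preliminary_diagnoses": "Hypothetical preliminary gross findings.",
    "final_diagnoses": "***",
    "microscopic_description": "***",
}
print(can_sign_out(autopsy, "prelim_verify", scoped=False))  # False
print(can_sign_out(autopsy, "prelim_verify"))                # True
print(can_sign_out(autopsy, "final_verify"))                 # False
```

The same placeholder rule still blocks final sign-out of an incomplete report, so scoping relaxes the check only where the blank fields would not reach the chart.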

Measures of success in our anatomic pathology Beaker installation

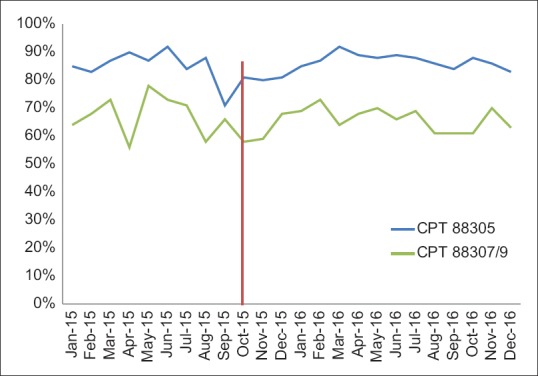

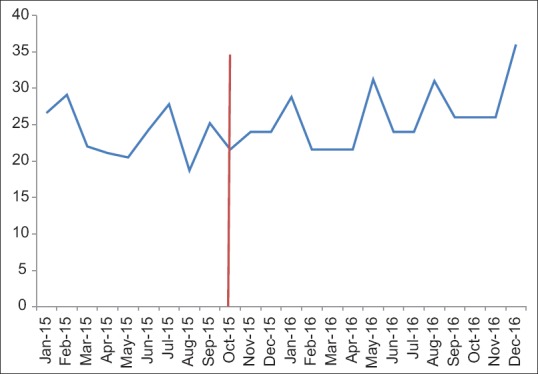

We used TAT data as a measure of the success of our implementation, with the caveat that we could not control for all other variables that might affect TAT metrics. Personnel turnover, concurrent process improvement projects, and variation in specimen volume during the evaluation period were uncontrolled variables that potentially affected TAT metrics. We separated the surgical pathology TAT metrics into two groups: cases billed as CPT 88305 and more complex cases billed as CPT 88307 or 88309 [Figure 10]. The data show no significant change in the percent of cases meeting TAT goal thresholds during and after implementation.
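The metric behind Figure 10, the percent of cases meeting a TAT goal within each CPT group, can be sketched as below. The goal thresholds are not stated in this report, so the 48- and 72-hour values are purely hypothetical, as are the sample cases; TAT is measured here in calendar hours, whereas some metrics (such as the gynecologic cytology TAT in Figure 11) are measured in work days.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical TAT goals; the thresholds used for Figure 10 are not stated.
GOALS = {"88305": timedelta(hours=48), "88307/88309": timedelta(hours=72)}


def cpt_group(cpt: str) -> str:
    """Map a CPT code to one of the two analysis groups."""
    if cpt == "88305":
        return "88305"
    if cpt in ("88307", "88309"):
        return "88307/88309"
    raise ValueError(f"CPT {cpt} is outside both analysis groups")


def percent_meeting_goal(cases, goals=GOALS) -> dict[str, float]:
    """cases: iterable of (cpt, received, verified); TAT = verified - received."""
    met: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for cpt, received, verified in cases:
        group = cpt_group(cpt)
        total[group] += 1
        if verified - received <= goals[group]:
            met[group] += 1
    return {group: 100.0 * met[group] / total[group] for group in total}


cases = [
    ("88305", datetime(2015, 11, 2, 8), datetime(2015, 11, 3, 15)),  # 31 h
    ("88305", datetime(2015, 11, 2, 8), datetime(2015, 11, 5, 8)),   # 72 h
    ("88309", datetime(2015, 11, 2, 8), datetime(2015, 11, 4, 17)),  # 57 h
]
print(percent_meeting_goal(cases))  # {'88305': 50.0, '88307/88309': 100.0}
```

Reporting a percent meeting goal, rather than a mean TAT, keeps a few very long cases from dominating the monthly figure.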

Figure 10.

Surgical pathology turn-around time pre- and post-Beaker anatomic pathology implementation

We did not find a significant impact on amended reports or frozen section discrepancy reporting, but we did find it easier to collect data and prepare reports for these functions.

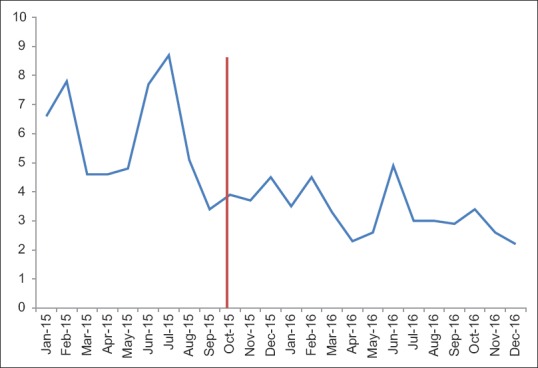

Cytology TAT data are divided into gynecologic cytology (Pap tests) and nongynecologic cytology (all other cases) [Figures 11 and 12]. These metrics vary considerably from month to month, which makes interpretation more difficult. During several months before implementation, a significant portion of our cytotechnologists were busy validating and revalidating the cytology build (see the previous discussion of cytology build issues). Overall, there was no significant change in the postimplementation metrics relative to our institutional historical TAT.

Figure 11.

Gynecologic cytology turnaround time (work days) pre- and post-Beaker anatomic pathology implementation

Figure 12.

Nongynecologic cytology turnaround time (hours) pre- and post-Beaker anatomic pathology implementation

The implementation of Beaker AP was helped by the fact that Beaker CP had been installed 13 months previously and that the pathology residents were familiar with the product from their rotations in CP laboratories. This familiarity was helpful because the residents could act as local “experts” in Beaker AP.

We also collected subjective feedback in the form of comments solicited by E-mail from faculty and staff approximately three months after implementation. Many of the comments focused on the user interface and compared it to our previous LIS. Because the previous LIS had only rudimentary word processing capability, Beaker AP compared much more favorably in ease of word processing. The ease of ordering stains, integrating images into reports, and viewing previous pathology reports was praised. Nevertheless, some users accustomed to a keyboard-based interface called it a “click-fest” (keyboard shortcuts are available in Beaker for those who want to learn them). Many reported that they appreciated how easily they could move from an integrated LIS to the EMR to view clinical data. Other positive attributes were the tracking of each step of specimen-to-slide processing and the ability to use imported synoptic reporting templates. Negative comments mainly focused on issues mentioned previously in this report, such as difficulties with the frozen section workflow.

Ongoing post go-live optimization

Optimization has been an ongoing process since go-live. Significant portions of a weekly AP process improvement committee meeting have been dedicated to exploring how we can use Beaker functionality to improve our quality and efficiency, as well as to fixing glitches in the system. For 16 months after go-live, at least 50% of the meeting time of this committee, which is composed of laboratory section leaders, departmental and institutional IT members, departmental quality assurance members, and faculty, has been devoted to improving Beaker AP. Reporting improvements have been part of this continued optimization.

DISCUSSION

The Beaker AP LIS was successfully implemented in our pathology department, but several areas of the laboratory required significant adaptation of the default Beaker build parameters to meet the needs of the workflow. In a few areas, our laboratory was unable to use the Beaker functionality to support our workflow, and we have continued to use paper or have altered our workflow. Some of these adaptations were due to the presence of trainees in the workflow, but others were due to the inability of the supplied build parameters to fit a large AP practice with numerous clinics and 40 ORs. Other areas were able to use the supplied functionality but still required at least some modification. Below, we discuss the areas with the most problems.

We needed to alter our preliminary diagnosis workflow in surgical pathology, a workflow that had been successfully operating for over 30 years. Clinicians and our surgical pathology fellows have adapted to the inability to send alerts that a preliminary diagnosis was available as soon as possible, but this remains a dissatisfier for clinicians.

Other alterations that were negative for the department included changes to our cytology workflow as it relates to teaching. Our nongynecologic cytology case types had to be rebuilt to allow any number of preliminary interpretations by screeners as well as trainees to be recorded, at the cost of now having to tally manually the number of cases viewed by our cytotechnologist screeners to maintain regulatory compliance.

Because of case “locking,” Beaker AP was not capable of handling our surgical pathology frozen section workflow, in which frozen sections must be rendered on multiple parts of a single case that may be in the laboratory at the same time. Paper was preferred for tracking and recording data on active cases, and we have decided not to use the LIS for frozen sections at this time; instead, we call the surgeon to relay the information obtained from the frozen section. However, independent of any LIS’s capabilities, considerable thought should also be given to whether frozen section reports should be the primary means of communication with the surgeon, as voice communication between pathologist and surgeon can convey critical data that cannot always be reliably provided by text.

Dictionary creation is a known complex aspect of LIS development.[1] This complexity surfaced in our Beaker AP implementation when we developed a shared source dictionary for microbiology and surgical pathology for the ORs. There was confusion as to whether to choose a specimen site (critical for surgical pathology but not for microbiology) or a type of specimen, such as “tissue” or “abscess” (important for microbiology to set up the correct plate labels and protocols in the laboratory). The OR nursing personnel did not want overlapping sources, and the compromise was to have OR nurses choose a dummy specimen source, “Surgical Pathology Examination,” for specimens destined for the Surgical Pathology Gross Room. This specimen source must then be updated on every case coming to surgical pathology by the accessioner or the individual grossing the specimen. Thus, although the proposed workflow would essentially have had OR personnel accession the specimen and direct block etching, we could not use that functionality.

AP LIS are known to have difficulties with data mining.[4] This is related in part to the fact that these data are usually free text, and the problem is evident in Beaker AP. Although we have a search function that was developed by our departmental IT leaders, it is less user-friendly than the legacy system and has resulted in less searching for simple items, such as a list of cases with specific diagnoses. Open source solutions for free-text searching, such as TIES from the University of Pittsburgh (http://TIES.UPMC.COM), are available for consideration, although we have not yet investigated these options. We continue to push for a user-friendly search interface built into the Beaker experience.

Tracking of specimens in an AP LIS has been described as a benefit.[5,6] A very useful aspect of this implementation is the fine-grained barcode tracking of tissues through the surgical pathology laboratory. Every individual who “touched” a specimen in Beaker AP left a record that is easily viewed, and the step-by-step fate of each case, down to the block/slide level, is tracked from accessioning to final diagnosis. This has allowed the department to ascertain and monitor critical data elements in the overall workflow.
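To illustrate why per-scan records make this kind of audit straightforward, the sketch below reconstructs one case's history, including its blocks and slides, from a flat list of scan events. The event structure, the barcode format (accession number as the first token, followed by block or slide), and the user IDs are all hypothetical; Beaker's tracking tables are not described in this report.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ScanEvent:
    when: datetime
    item: str  # hypothetical barcode payload: "case", "case block", or "case block-slide"
    step: str
    user: str


def case_of(item: str) -> str:
    """Assume the case accession number is the first token of the barcode."""
    return item.split()[0]


def case_history(events: list[ScanEvent], case_id: str) -> list[ScanEvent]:
    """All scans for a case (including its blocks and slides), in time order."""
    return sorted((e for e in events if case_of(e.item) == case_id),
                  key=lambda e: e.when)


def who_touched(events: list[ScanEvent], case_id: str) -> list[str]:
    """Distinct users who scanned any item belonging to the case."""
    return sorted({e.user for e in case_history(events, case_id)})


EVENTS = [
    ScanEvent(datetime(2016, 1, 5, 9, 0), "S16-0100 A1", "gross", "res2"),
    ScanEvent(datetime(2016, 1, 5, 8, 0), "S16-0100", "accession", "pa1"),
    ScanEvent(datetime(2016, 1, 6, 7, 30), "S16-0100 A1", "microtomy", "ht3"),
    ScanEvent(datetime(2016, 1, 6, 9, 15), "S16-0100 A1-1", "stain", "ht3"),
    ScanEvent(datetime(2016, 1, 6, 14, 0), "S16-0100", "sign-out", "path4"),
    ScanEvent(datetime(2016, 1, 5, 8, 5), "S16-0101", "accession", "pa1"),
]
print([e.step for e in case_history(EVENTS, "S16-0100")])
# ['accession', 'gross', 'microtomy', 'stain', 'sign-out']
```

Because each scan is recorded independently, the same event list also supports workflow metrics, such as the time between grossing and microtomy, without any extra data entry.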

The implementation of Beaker AP has allowed the department to take advantage of synoptic pathology reporting, a critical element of pathology reporting.[7] This is accomplished in Beaker AP by subscribing to College of American Pathologists synoptic reporting templates which are updated on a regular basis.

Our go-live with Beaker AP was probably aided by our prior implementation of Beaker CP, which had occurred 14 months previously. This allowed key individuals in our departmental and institutional IT sections to become more facile with the structure of a new LIS. It also allowed our pathology residents who had rotated on CP specialties in the previous year to become familiar with the basic functional commands and appearance of a new LIS. Thus, when Beaker AP was implemented, these individuals became embedded SMEs who supported AP faculty in early day-to-day use.

Implementation of the Beaker AP module at an academic medical center required creative thinking to overcome certain mismatches between the laboratory workflow and the standard workflow built into the Beaker LIS. Finding these mismatches early, through demonstrations to end users in the laboratory during the planning phase and hands-on experience early in the build process, helped us identify many of the areas that would require more work to optimize. In some instances, portions of the build were scrapped and begun again after feedback from end users indicated the need for major changes. Decisions to discard portions of the build should be made with the project timeline in mind; after a certain point, rebuilding must be deferred to post go-live optimization.

In addition to soliciting feedback from pathologists and laboratory technicians, it is critical to consult parties both upstream and downstream of pathology workflows. These can include the outpatient, inpatient, and OR clinicians and nursing staff who collect specimens, as well as those who receive our reports.

Finally, involving SMEs in end-to-end validation of the LIS build was a useful tool for us to find errors and ensure that our LIS was ready for go-live. Being able to follow various specimen types through the entire pathology workflow from collection to reporting and billing helped ensure that all of the cogs meshed together correctly.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

Acknowledgments

The authors would like to thank the Executive Oversight Committee (Lee Carmen, Doug Van Daele, Ken Fisher, Amy O’Deen, Rose Meyer) as well as team members from our Health Care Information System (Karmen Dillon, Nick Dreyer, Rick Dyson, Steve Meyer, Jason Smith, Mary Heintz, Patrick Duffy, Kathy Eyres, Peter Kennedy, Bob Stewart, Mary Jo Duffy, Elizabeth Lee, Cass Garrett, Dean Aman, Julie Fahnle, Christine Hillberry, Sharon Lyle, Shel Greek-Lippe, Kurt Wendel, Brian Hegland, Jeffrey Smith, Tom Alt, Tony Castro, Steve Niemela), Department of Pathology (Mary Sue Otis, Jeff Valiga, Connie Floerchinger, Josh Christain), and Epic (Brian Berres, Jenny Neugent, Zak Keir, and Krystal Hsu). We also thank all the other individuals involved in interface development, validation, help support, and other key tasks in this project.

Footnotes

Available FREE in open access from: http://www.jpathinformatics.org/text.asp?2017/8/1/47/220746

REFERENCES

1. Koppel R, Lehmann CU. Implications of an emerging EHR monoculture for hospitals and healthcare systems. J Am Med Inform Assoc. 2015;22:465–71. doi: 10.1136/amiajnl-2014-003023.

2. Krasowski MD, Wilford JD, Howard W, Dane SK, Davis SR, Karandikar NJ, et al. Implementation of Epic Beaker Clinical Pathology at an academic medical center. J Pathol Inform. 2016;7:7. doi: 10.4103/2153-3539.175798.

3. Tan BT, Fralick J, Flores W, Schrandt C, Davis V, Bruynell T, et al. Implementation of Epic Beaker Clinical Pathology at Stanford University Medical Center. Am J Clin Pathol. 2017;147:261–72. doi: 10.1093/ajcp/aqw221.

4. Park SL, Pantanowitz L, Sharma G, Parwani AV. Anatomic pathology laboratory information systems: A review. Adv Anat Pathol. 2012;19:81–96. doi: 10.1097/PAP.0b013e318248b787.

5. Pantanowitz L, Mackinnon AC Jr, Sinard JH. Tracking in anatomic pathology. Arch Pathol Lab Med. 2013;137:1798–810. doi: 10.5858/arpa.2013-0125-SA.

6. Zarbo RJ, Tuthill JM, D’Angelo R, Varney R, Mahar B, Neuman C, et al. The Henry Ford Production System: Reduction of surgical pathology in-process misidentification defects by bar code-specified work process standardization. Am J Clin Pathol. 2009;131:468–77. doi: 10.1309/AJCPPTJ3XJY6ZXDB.

7. Kang HP, Devine LJ, Piccoli AL, Seethala RR, Amin W, Parwani AV, et al. Usefulness of a synoptic data tool for reporting of head and neck neoplasms based on the College of American Pathologists cancer checklists. Am J Clin Pathol. 2009;132:521–30. doi: 10.1309/AJCPQZXR1NMF2VDX.