Abstract

In medicine, cognitive errors form the basis of bias in clinical practice. Several types of bias are common and pervasive, and may lead to inaccurate diagnosis or treatment. Forensic and clinical neurology, even when aided by current technologies, are still dependent on cognitive interpretations and are therefore prone to bias. This article discusses 4 common biases that can lead the clinician astray: confirmation bias (selective gathering of supporting evidence and neglect of contradictory evidence); base rate bias (ignoring or misusing prevailing base rate data); hindsight bias (oversimplification of past causation); and good old days bias (the tendency for patients to misremember and exaggerate their preinjury functioning). We briefly describe strategies adopted from the field of psychology that could minimize bias. While debiasing is not easy, reducing such errors requires awareness and acknowledgment of our susceptibility to these cognitive distortions.

In clinical medicine, bias is a predisposition to form premature or undue impressions that are not based on actual data. This all-too-common human trait may result in cognitive errors with serious consequences. Cognitive errors are debated prominently in social and psychological sciences but only infrequently in clinical literature.1–3 Research methodologists emphasize and caution us against many types of biases that enter epidemiologic and research domains. Neurologic practice, rich in advanced technologies but still heavily dependent on cognitive skills, should be wary of the snares set by at least some of the common biases. The neurologist engaged in forensic consultation, where critical legal judgments depend on unbiased formulations using clinical knowledge, is especially vulnerable to cognitive bias.4 Our aim is to raise awareness of this subject for clinical neurologists, especially those considering forensic practice. In addition to reviewing typical cognitive errors, we discuss debiasing techniques that might curtail the chances of biased forensic evaluations.

Four common biases

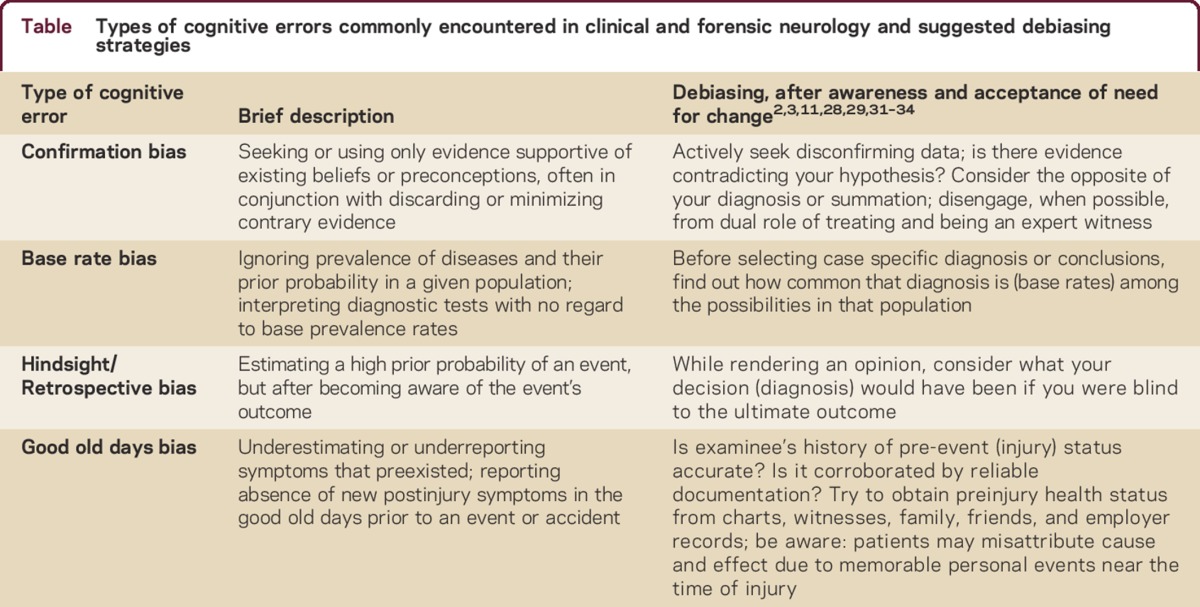

Cognitive bias can occur in any clinical situation. We focus on 4 biases that are common in practice, especially in forensic work: (1) confirmation bias, which includes diagnostic momentum and allegiance bias; (2) bias from ignoring base rates; (3) hindsight bias; and (4) the good old days bias, a recently recognized construct (table). We use the term cognitive bias to mean the tendency to seek information and form conclusions in certain ways, not as a synonym for prejudice. Our discussion of bias in this article is limited; we do not address bias in research methodologies, Bayesian reasoning, or the intuitive vs analytic systems of thinking. Nor do we address potential sources of bias arising from ethical conflicts, such as when treating physicians try to serve as expert witnesses and mix their differing dual responsibilities. Several researchers and ethicists discuss these and related topics in depth in independent publications.2,3,5–7

Table Types of cognitive errors commonly encountered in clinical and forensic neurology and suggested debiasing strategies

Confirmation bias

This ubiquitous and powerful bias commonly leads to one-sided case building. It operates by conscious or unconscious assimilation of evidence that is consistent with our assumptions and rejection of contrary evidence.1 When gathering diagnostic information, an early focus or selective anchoring on the wrong piece of available evidence may prevent the search for alternative possibilities. This leads to a search primarily for data confirming the early hypothesis and dismissal of equally strong contrary information.9,10 Here is a clinical example: A 34-year-old woman reported episodic oral numbness, thick speech, and hand tingling, more on the right. Stressors included financial and marital difficulties. A cousin had a diagnosis of multiple sclerosis (MS). Examination was normal. Brain MRI showed a very small left subcortical T2 hyperintensity. The patient received a course of IV methylprednisolone for a diagnosis of acute MS. Symptoms improved within a week but recurred 6 months later, along with shortness of breath and inability to speak. Repeat MRI showed no changes. Because critical worsening was feared, the patient was transferred by air to a tertiary facility, where revised diagnoses of panic attack, anxiety disorder, and domestic abuse were made. The patient's age, sex, family history, and imaging findings had prematurely narrowed the physician's thinking to a diagnosis of MS, and the stressors and other common symptoms of anxiety were ignored. Confirmation bias had misguided the physician.

Confirmation bias is not unique to medicine: it appears to be common across scientific disciplines, and particularly within the forensic context. Confirmation bias has been found to operate in such diverse fields as fingerprint analysis, forensic anthropology, and the assessment of DNA mixtures.11 For example, false confessions may be more likely if interrogators presume a subject guilty before an interview and then conduct the interview seeking information that confirms their hypothesis while avoiding nonconfirming evidence. In a study of fingerprint analysis, forensic experts tended to develop confirmation bias when told that a particular person was the prime suspect. The experts were more likely to seek commonalities between the prints found at the crime scene and those of the most likely suspect than to seek disconfirming evidence. When this contextual information was manipulated, 15% of fingerprint experts changed their correct match to an incorrect one.12 In forensic anthropology, where experts attempt to determine the sex of skeletal remains, anthropologists were strongly influenced by contextual cues (e.g., clothing) that incorrectly suggested the sex of the remains.

Diagnostic momentum

A type of confirmation bias that can occur in medical settings is termed diagnostic momentum.3 This refers to the tendency of a diagnosis to be accepted and passed on with little examination of the underlying evidence for its validity. Factors that may exacerbate this problem include the clinician's anxiety surrounding diagnostic uncertainty and the time constraints of modern practice. Such momentum impedes the expert's ability to seek out alternative explanations for the patient's symptoms and to explore more beneficial therapeutic strategies, and it delays appropriate treatment.

Allegiance bias

A subtle form of confirmation bias that occurs in adversarial forensic settings is the allegiance bias.13 Experts are susceptible to it in any setting that pits one side against another or creates a potential conflict between experts, and it is particularly common in adversarial legal proceedings, where experts on each side may receive financial incentives for a favorable opinion. Although it has been studied extensively in forensic psychology, it may occur wherever an expert is swayed by financial considerations to express, implicitly or explicitly, a particular opinion. In a comprehensive study of violence risk assessment, experts were ostensibly retained by one side or the other and given instructions suggesting that they might, to some extent, emphasize information favoring that side.13 There was also an implicit promise of lucrative future work. Even on objective measures of future risk, the experts' assessments of potential dangerousness differed dramatically depending on which side had retained them.

Ignoring the base rate

Prevalence of diseases varies by age and context.9 Even with an elevated erythrocyte sedimentation rate, a diagnosis of temporal arteritis is unlikely in a young woman with new-onset headaches, but far more likely in a 70-year-old man. In this setting, ignoring the prior probability, or base rate, could lead to misinterpretation of the diagnostic test. Bayes' theorem tells us that the predictive value of a test result depends not only on the test's sensitivity and specificity but also on the prevalence of the disorder in the population of interest. Applying an expectation based on the prevalence rates of the older population to this young woman will increase the risk of a false-positive diagnosis.
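To make the base rate argument concrete, the short Python sketch below applies Bayes' theorem to compute the post-test probability (positive predictive value) of a positive result at two different pretest probabilities. The sensitivity, specificity, and prevalence figures are hypothetical placeholders chosen for illustration only; they are not published values for the sedimentation rate or for temporal arteritis, and the function name is our own.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Bayes' theorem: probability of disease given a positive test result."""
    true_pos = sensitivity * prevalence                   # P(positive and diseased)
    false_pos = (1.0 - specificity) * (1.0 - prevalence)  # P(positive and not diseased)
    return true_pos / (true_pos + false_pos)


# HYPOTHETICAL test characteristics for an elevated sedimentation rate;
# illustrative values only, not taken from the article or a specific study.
SENSITIVITY = 0.85
SPECIFICITY = 0.70

# HYPOTHETICAL pretest probabilities (base rates) for the two patients.
scenarios = {
    "young woman, new headache": 0.001,
    "70-year-old man, new headache": 0.05,
}

for label, prior in scenarios.items():
    ppv = positive_predictive_value(SENSITIVITY, SPECIFICITY, prior)
    print(f"{label}: pretest {prior:.1%} -> post-test {ppv:.1%}")
```

With identical (assumed) test characteristics, the same positive result leaves the young woman's probability of disease well under 1% while raising the older man's to roughly 13%: the result cannot be interpreted without the base rate.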

Similar errors arise in other high base rate situations when the clinician is unaware of the base rate. A common example is postinjury lumbar pain and diagnostic imaging.15,16 The base rate of spinal abnormalities increases considerably with age, yet there is rarely a direct correlation between the abnormalities visible in the image and the clinical presentation (i.e., correlation does not imply causality). If the diagnostician is not wary, it is easy to attribute common age-related findings to the injury at issue and to infer an illusory correlation between the two.
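One way to see why a common imaging finding says little about causation is to compare its prevalence in injured claimants with its prevalence in asymptomatic people of the same age. The Python sketch below computes the excess (attributable) fraction, (p_claimants - p_background) / p_claimants. The prevalence figures and the function name are hypothetical, and the calculation is only a group-level estimate; it cannot tell whether the finding in any individual patient is injury-related.

```python
def excess_fraction(prevalence_in_claimants: float, background_prevalence: float) -> float:
    """Share of a finding's prevalence in claimants that exceeds the background rate.

    Computes (p_claimants - p_background) / p_claimants, floored at zero.
    This is a group-level quantity; it cannot classify an individual finding.
    """
    if prevalence_in_claimants <= 0:
        raise ValueError("prevalence_in_claimants must be positive")
    excess = max(prevalence_in_claimants - background_prevalence, 0.0)
    return excess / prevalence_in_claimants


# HYPOTHETICAL prevalences of disk degeneration on MRI, age-matched:
# 60% among injured claimants versus 55% among asymptomatic people.
fraction = excess_fraction(0.60, 0.55)
print(f"Share of findings beyond the background rate: {fraction:.0%}")
```

Under these assumed numbers, even if the entire excess were attributed to the injury, only about 8% of the claimants' findings would exceed what age alone predicts.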

Conversely, low base rates can bias experts toward being too conservative in detecting disorders. In low base rate settings, experts must carefully examine their expectations. For example, in evaluating x-rays, physicians who were told the base rate of disease was low often missed positive findings.15,16 Interestingly, those who had high base rate expectations demonstrated the opposite: more false-positive findings.

The usefulness of many tests depends on clearly specifying the prevalence of the disease or disorder beforehand. For example, forensic neuropsychologists commonly use symptom validity testing (SVT) to determine whether patients are putting forth adequate effort during cognitive testing. These empirically validated tests are essential to ensuring that measures of neuropsychological functioning truly reflect the patient's capabilities. SVT is especially useful in cases where litigation is likely (e.g., mild traumatic brain injury [mTBI] due to a motor vehicle accident) or where medical explanations for symptoms have been elusive. In these instances, performance below chance levels (termed SVT failure) may be an index of symptom exaggeration or of somatoform or functional disorders. In a low base rate population (i.e., motivated patients eager to return to work), SVT failure is more likely to be a false-positive; however, when patients are seeking compensation or are in litigation (i.e., a high base rate population), SVT failure has major diagnostic value.17
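"Below chance" has a precise meaning on a forced-choice test: a score so low that random guessing would rarely produce it. The Python sketch below assumes a hypothetical 50-item, two-alternative forced-choice test and finds the highest score that guessing alone would yield with less than 5% probability. The test length, the 50% guessing rate, the 5% threshold, and the function name are illustrative assumptions, not a validated SVT scoring rule.

```python
from math import comb


def prob_at_most(k: int, n: int, p: float = 0.5) -> float:
    """P(X <= k) for X ~ Binomial(n, p): chance of k or fewer correct by guessing."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


# HYPOTHETICAL two-alternative forced-choice test with 50 items.
N_ITEMS = 50

# Highest score that pure guessing would produce with probability < 5%.
cutoff = max(k for k in range(N_ITEMS + 1) if prob_at_most(k, N_ITEMS) < 0.05)
print(f"Scores of {cutoff}/{N_ITEMS} or lower are significantly below chance")
```

The exact binomial distribution is used, so no normal approximation is needed; for this assumed 50-item test, scores of 18 or lower fall below the 5% guessing threshold.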

Hindsight bias

When we know the outcome of an event, we tend to overestimate how likely, and how predictable, that outcome was before it occurred. Our judgment of care rendered by others is subject to such hindsight bias. When giving an expert opinion on an adverse outcome, it is well to remember that knowledge gained through hindsight was a privilege unavailable to our peers when they cared for the patient. Emergency care and forensic evaluations are areas where this error may color judgments about adverse outcomes.19 When hindsight bias is operating, clinicians may lose sight of the complexities and possibilities that confronted the treating physician before the outcome was known. That is, what seems obvious to us today may well have appeared to be just one among a number of equally likely possibilities at the time. Hindsight bias oversimplifies our view of the past, and if this simplified model is inaccurate, it can in turn lead us to make errors in the future.

In forensic medicine, retrospective (hindsight) bias has been studied most extensively in evaluating malpractice claims or personal injury lawsuits.20 For example, when reviewing medical records for adherence to standard of care, reviewers who were told that the patient had a permanent injury were more likely to believe that malpractice occurred, as opposed to those who were told that the injury was only temporary.21 As Roese and Vohs22 note, “Knowledge born of hindsight is appropriate and useful when directed at current actions and future plans, in which it informs ongoing strategy.”

Good old days bias

In our discussion so far, the physician is the active party, formulating a hypothesis by selecting and synthesizing all available data; thus, any bias in this process is largely attributable to the clinician. In a contrasting situation, the patient is mostly responsible for providing a biased history, unwittingly or otherwise. The patient's self-reported symptoms are assumed to be accurate, but this is not always the case. Individuals who have had major illnesses and injuries (e.g., mTBI, pain, and headache) commonly exaggerate their preinjury wellness and functioning, rating it as better than that of healthy, uninjured controls. Gunstad and Suhr24 termed this the good old days bias.25 For instance, patients with persistent self-reported axial pain after motor vehicle accidents reported having had no preinjury symptoms, a historically inaccurate account. Although a patient attributed pain and several other symptoms to the accident, these symptoms had been noted in the chart before the event.26 The good old days effect is particularly pronounced in patients who fail neuropsychological effort tests (SVTs). This bias not only causes patients to overestimate the effect of the injury or accident on current functioning, but also alters the subjective information provided to the neurologist in the history. The bias has proven quite robust, occurring in mTBI, axial pain, and even in parents describing the functioning of their injured children.27

Good old days bias affects litigation in personal injury and Workers' Compensation cases, which involve a comparison between the patient's preinjury and postinjury functioning. By exaggerating preinjury functioning, this bias can inflate the apparent postinjury loss. It should not be misconstrued as malingering; it may be a relatively normal cognitive reaction to injury. That is, it appears common to view symptoms that are present near the time of the injury as having been caused by the injury, perhaps because the event provides a salient anchor for the patient. The neurologist must be aware of this, especially when evaluating the cause of later disability. This awareness should make the forensic evaluator cautious in accepting patients' characterization of their preinjury functioning, especially in a compensation-seeking context.

Debiasing

Recent descriptive literature suggests that debiasing, although neither easy nor standardized, is feasible. It starts with recognizing that bias is omnipresent and pervades many aspects of the clinical sciences. Awareness of our own susceptibility, together with a willingness to change, is the essential first step in minimizing bias. Some debiasing strategies have been studied and, when used consistently, could mitigate bias.2,3,28

Confirmation bias

Debiasing strategies that have been studied include considering the opposite, by actively seeking information that counters the initial hypothesis.11 In addition, it is helpful to review data and form opinions in the absence of outcome information, and not allow referral sources to overframe the case.

Base rate bias

Clinicians should acquaint themselves with the base rate of an illness or condition in their population and examine their assumptions about it. Gigerenzer and Edwards29 encouraged the use of natural frequencies to minimize this bias in diagnostic reasoning (e.g., stating that “3 out of 10 patients experience the drug's side effect” rather than that the patient has “a 30% chance of side effects”).
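Natural frequencies are especially helpful for the base rate problem described earlier for SVT failure. The Python sketch below restates a hypothetical test (sensitivity 0.70 and specificity 0.90, both assumed for illustration only) as whole-number counts out of 1,000 patients in a low and a high base rate population. The function name, the base rates, and the test characteristics are our own illustrative choices, not published SVT figures.

```python
def natural_frequency_tree(population: int, base_rate: float,
                           sensitivity: float, specificity: float) -> dict:
    """Express a test result as whole-number counts out of `population`."""
    with_condition = round(population * base_rate)
    without_condition = population - with_condition
    true_pos = round(with_condition * sensitivity)              # condition present, test failed
    false_pos = round(without_condition * (1 - specificity))    # condition absent, test failed
    return {
        "with_condition": with_condition,
        "true_positives": true_pos,
        "false_positives": false_pos,
        "all_positives": true_pos + false_pos,
    }


# HYPOTHETICAL SVT-like test: sensitivity 0.70, specificity 0.90 (illustrative only).
for label, base_rate in [("low base rate", 0.05), ("high base rate", 0.40)]:
    tree = natural_frequency_tree(1000, base_rate, 0.70, 0.90)
    print(f"{label}: of {tree['all_positives']} failures out of 1000, "
          f"{tree['true_positives']} reflect true inadequate effort and "
          f"{tree['false_positives']} are false alarms")
```

With these assumed figures, 95 of 130 failures in the low base rate group are false alarms, compared with 60 of 340 in the high base rate group, which is the pattern the SVT discussion describes.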

Hindsight bias

The real world is complex; in retrospect, we have 20-20 vision. Hindsight bias impairs learning and gives a false sense of confidence.22,28 Roese and Vohs22 state that “… knowledge born of hindsight may involve error when directed at past moments in time, as in evaluating the skill of decision makers who had no crystal ball and so could not possibly have known what is known now.” There is some evidence that, in negligence trials, bifurcation (considering the defendant's prior conduct separately from the plaintiff's resulting injury) was effective in reducing hindsight bias.30 Cost and time are barriers to widespread adoption of bifurcation; however, when formulating opinions, neurologists can strive to decouple the prior conduct under scrutiny from its later consequences.

Good old days bias

This bias is a natural reaction of patients to injury or traumatic events. To counter it, the expert can seek information from before and after the time of the injury. Such sources include paper and electronic trails, medical records, employment records, family, friends, and social networks. The evaluator should try to ascertain the objectivity and neutrality of these sources. If such verification is not ethically possible, or if access to records is denied, the validity of claimed new-onset symptoms becomes uncertain or indeterminate.

CONCLUSION

We present some typical biases that may confront the neurologist conducting forensic evaluations. Although not an exhaustive list, this article attempts to illustrate common ways in which the physician (or patient) may be led astray. Many biases originate within the neurologist (i.e., the confirmation, base rate, and hindsight biases), whereas others find their origin in the patient's perception of injury (the good old days bias). Effective debiasing strategies used in some other disciplines can assist the neurologist in examining assumptions and improving clinical decision-making skills.

AUTHOR CONTRIBUTIONS

Saty Satya-Murti: drafting/revising the manuscript, study concept or design, analysis or interpretation of data. Joseph J. Lockhart: drafting/revising the manuscript, concept or design.

STUDY FUNDING

No targeted funding reported.

DISCLOSURES

S. Satya-Murti has participated in telephone consultations or in-person medical advisory board meetings for United BioSource Corporation (UBC), Simon-Kucher consultants, AstraZeneca, Avalere LLC, Evidera consulting group, Covidien, Michael J. Fox Foundation, Foley Hoag LLP, Baxter, and Abiomed; has received funding for travel from UBC, AstraZeneca, Avalere LLC, Evidera consulting group, Covidien, Michael J. Fox Foundation, Foley Hoag LLP, and Baxter; serves on the editorial board of Neurology®: Clinical Practice; served on the American Academy of Neurology Payment Policy Subcommittee; and served as a panelist and later (2010–2011) as Vice-Chair of the CMS Medicare Evidence Development and Coverage Advisory Committee (MEDCAC). For the duration of each MEDCAC meeting, usually 1–2 days, S. Satya-Murti was considered a Special Government Employee (SGE). J.J. Lockhart has received funding for travel from SAMHSA/NIDA for training and developing Veterans' Treatment Courts and serves on the editorial advisory board for Open Access Journal of Forensic Psychology. Full disclosure form information provided by the authors is available with the full text of this article at http://cp.neurology.org/lookup/doi/10.1212/CPJ.0000000000000181.

Correspondence to: josephjlockhart@gmail.com

Funding information and disclosures are provided at the end of the article. Full disclosure form information provided by the authors is available with the full text of this article at http://cp.neurology.org/lookup/doi/10.1212/CPJ.0000000000000181.


REFERENCES

1. Nickerson RS. Confirmation bias: a ubiquitous phenomenon in many guises. Rev Gen Psychol. 1998;2:175–220.

2. Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med. 2003;78:775–780. doi: 10.1097/00001888-200308000-00003.

3. Croskerry P. From mindless to mindful practice: cognitive bias and clinical decision making. N Engl J Med. 2013;368:2445–2448. doi: 10.1056/NEJMp1303712.

4. Woodcock JH. Medical legal consultation in neurologic practice. Neurol Clin Pract. 2014;4:323–334. doi: 10.1212/01.CPJ.0000437696.56006.94.

5. Kaptchuk TJ. Effect of interpretive bias on research evidence. BMJ. 2003;326:1453. doi: 10.1136/bmj.326.7404.1453.

6. Delgado-Rodríguez M, Llorca J. Bias. J Epidemiol Community Health. 2004;58:635–641. doi: 10.1136/jech.2003.008466.

7. Kahneman D. Thinking, Fast and Slow. New York: Farrar, Straus and Giroux; 2011.

8. Durant W, Durant A. The Lessons of History, 1st ed. New York: Simon & Schuster; 1968.

9. Vickrey BG, Samuels MA, Ropper AH. How neurologists think: a cognitive psychology perspective on missed diagnoses. Ann Neurol. 2010;67:425–433. doi: 10.1002/ana.21907.

10. Neale G, Hogan H, Sevdalis N. Misdiagnosis: analysis based on case record review with proposals aimed to improve diagnostic processes. Clin Med. 2011;11:317–321. doi: 10.7861/clinmedicine.11-4-317.

11. Kassin SM, Dror IE, Kukucka J. The forensic confirmation bias: problems, perspectives, and proposed solutions. J Appl Res Mem Cogn. 2013;2:42–52.

12. Dror IE, Champod C, Langenburg G, Charlton D, Hunt H, Rosenthal R. Cognitive issues in fingerprint analysis: inter- and intra-expert consistency and the effect of a “target” comparison. Forensic Sci Int. 2011;208:10–17. doi: 10.1016/j.forsciint.2010.10.013.

13. Murrie DC, Boccaccini MT, Guarnera LA, Rufino KA. Are forensic experts biased by the side that retained them? Psychol Sci. 2013;24:1889–1897. doi: 10.1177/0956797613481812.

14. Tversky A, Kahneman D. Judgment under uncertainty: heuristics and biases. Science. 1974;185:1124–1131. doi: 10.1126/science.185.4157.1124.

15. Willis BH. Empirical evidence that disease prevalence may affect the performance of diagnostic tests with an implicit threshold: a cross-sectional study. BMJ Open. 2012;2:e000746. doi: 10.1136/bmjopen-2011-000746.

16. Brinjikji W, Luetmer PH, Comstock B. Systematic literature review of imaging features of spinal degeneration in asymptomatic populations. AJNR Am J Neuroradiol. 2015;36:811–816. doi: 10.3174/ajnr.A4173.

17. Sollman MJ, Berry DTR. Detection of inadequate effort on neuropsychological testing: a meta-analytic update and extension. Arch Clin Neuropsychol. 2011;26:774–789. doi: 10.1093/arclin/acr066.

18. Morris M. Quotable Churchill. Chichester, UK: Summersdale Publishers; 2014.

19. Annunziata A. Retrospective bias in expert evidence: effects on patient and doctor safety. Emerg Med Australas. 2009;21:80–83. doi: 10.1111/j.1742-6723.2009.01155.x.

20. Caplan RA, Posner KL, Cheney FW. Effect of outcome on physician judgments of appropriateness of care. JAMA. 1991;265:1957–1960.

21. Gupta M, Schriger DL, Tabas JA. The presence of outcome bias in emergency physician retrospective judgments of the quality of care. Ann Emerg Med. 2011;57:323–328.e9. doi: 10.1016/j.annemergmed.2010.10.004.

22. Roese NJ, Vohs KD. Hindsight bias. Perspect Psychol Sci. 2012;7:411–426. doi: 10.1177/1745691612454303.

23. Young D. Great Funny Quotes. Round Rock, TX: Wind Runner Press; 2012.

24. Gunstad J, Suhr JA. “Expectation as etiology” versus “the good old days”: postconcussion syndrome symptom reporting in athletes, headache sufferers, and depressed individuals. J Int Neuropsychol Soc. 2001;7:323–333. doi: 10.1017/s1355617701733061.

25. Iverson GL, Lange RT, Brooks BL, Rennison VLA. “Good old days” bias following mild traumatic brain injury. Clin Neuropsychol. 2010;24:17–37. doi: 10.1080/13854040903190797.

26. Don AS, Carragee EJ. Is the self-reported history accurate in patients with persistent axial pain after a motor vehicle accident? Spine J. 2009;9:4–12. doi: 10.1016/j.spinee.2008.11.002.

27. Brooks BL, Kadoura B, Turley B, Crawford S, Mikrogianakis A, Barlow KM. Perception of recovery after pediatric mild traumatic brain injury is influenced by the “good old days” bias: tangible implications for clinical practice and outcomes research. Arch Clin Neuropsychol. 2014;29:186–193. doi: 10.1093/arclin/act083.

28. Arkes HR. The consequences of the hindsight bias in medical decision making. Curr Dir Psychol Sci. 2013;22:356–360.

29. Gigerenzer G, Edwards A. Simple tools for understanding risks: from innumeracy to insight. BMJ. 2003;327:741–744. doi: 10.1136/bmj.327.7417.741.

30. Smith AC, Greene E. Conduct and its consequences: attempts at debiasing jury judgments. Law Hum Behav. 2005;29:505. doi: 10.1007/s10979-005-5692-5.

31. Croskerry P. Achieving quality in clinical decision making: cognitive strategies and detection of bias. Acad Emerg Med. 2002;9:1184–1204. doi: 10.1111/j.1553-2712.2002.tb01574.x.

32. Croskerry P, Singhal G, Mamede S. Cognitive debiasing 2: impediments to and strategies for change. BMJ Qual Saf. 2013;22:65–72. doi: 10.1136/bmjqs-2012-001713.

33. Lilienfeld SO, Ammirati R, Landfield K. Giving debiasing away. Perspect Psychol Sci. 2009;4:390. doi: 10.1111/j.1745-6924.2009.01144.x.

34. Strasburger LH, Gutheil TG, Brodsky A. On wearing two hats: role conflict in serving as both psychotherapist and expert witness. Am J Psychiatry. 1997;154:448–456. doi: 10.1176/ajp.154.4.448.