Abstract

Machine learning and artificial intelligence have strong roots in principles of neural computation. Some examples are the structure of the first perceptron, inspired by the retina, neuroprosthetics based on ganglion cell recordings, and Hopfield networks. In addition, machine learning provides a powerful set of tools to analyze neural data, which has already proved its efficacy in fields of research as distant as speech recognition, behavioral state classification, and LFP recordings. However, despite the huge technological advances in dimensionality reduction, pattern selection, and clustering of neural data during the last years, there has not been a proportional development of the analytical tools used for Time–Frequency (T–F) analysis in neuroscience. Bearing this in mind, we argue for the convenience of using non-linear, non-stationary tools, Empirical Mode Decomposition (EMD) algorithms in particular, to transform oscillatory neural data (EEG, EMG, spike oscillations…) into the T–F domain prior to its analysis with machine learning tools. We maintain that, to achieve meaningful conclusions, the transformed data we analyze has to be as faithful as possible to the original recording, so that the distortions forced into the data by restrictions in the T–F computation do not propagate to the results of the machine learning analysis. Moreover, bioinspired computation such as brain–machine interfaces may benefit from a more precise definition of neuronal coding in which the non-linearities of neuronal dynamics are considered.

Keywords: neuronal coding, non-linear signals, NA-MEMD, machine learning classification, single trial classification

Introduction

The mutual benefits of the interplay between natural and artificial computation are well-known. Moreover, the increasing volume and complexity of the data generated in neuroscience exceed the capacity of classical analysis, and these data are becoming more and more difficult to analyze. In this scenario, artificial computation and machine learning (ML) techniques are becoming crucial for the interpretation and analysis of these complex data. Some examples are the interaction between networks and behavior (Bathellier et al., 2012), stimulus coding (Nikolić et al., 2009; Klampfl et al., 2012), population dynamics in neural networks (Buonomano and Merzenich, 1995), classification of behavioral states (Kabra et al., 2012), and spike sorting procedures (Bongard et al., 2014; Carlson et al., 2014; Dimitriadis et al., 2016). On the other hand, artificial computation has received inspiration from neuroscience since the first artificial neuron was developed in the 1940s (McCulloch and Pitts, 1943), continuing with the first perceptron, inspired by the circuitry of the retina (Rosenblatt, 1957), Hopfield networks (Hopfield, 1982), and Self-Organizing maps (Kohonen, 1982), and this inspiration is still widely present nowadays.

The scope of this perspective paper is to highlight the reliability and usefulness of ML techniques for the analysis of electrophysiological recordings. In particular, we address the manipulation of the data prior to its analysis and classification, specifically with regard to Time–Frequency (T–F) features. In this framework, we think that T–F analysis tools have not been as extensively implemented as other ML algorithms in neuroscience research. To facilitate the analysis of relevant T–F information using ML, we propose to use data-driven Empirical Mode Decomposition (EMD) algorithms (Huang et al., 1998) to extract the relevant T–F features to be studied. This procedure is widely used in signal analysis and has been applied successfully to the analysis of electrophysiological data (Li, 2006; Huang et al., 2013; Hu and Liang, 2014; Al-Subari et al., 2015; Alegre-Cortés et al., 2016); nevertheless, these algorithms have not yet come into common use and are sparsely found in neuroscience publications. As a result, we still use linear and stationary techniques that unavoidably bias and blur relevant information, since they are not able to accurately depict the intermittency and non-linearity of the data. This approach usually leads to the underperformance of classification or pattern extraction using ML algorithms, and hence limits the strength of the subsequent analysis (Mandic et al., 2013). The general idea behind this suggestion is that a more precise transformation of the data into the T–F domain will improve the result of the classification and/or pattern search performed by the ML algorithms.

Synergy between EMDs, machine learning, and brain processes

Most brain processes are non-linear and non-stationary. Hence, the selected analytical tools must be able to deal with these properties of the data. EMDs (Huang et al., 1998) are data-driven algorithms designed to extract oscillatory information without projecting it onto any predefined function, converting the original signal into a sum of oscillatory components called Intrinsic Mode Functions (IMFs). In this way, they facilitate the extraction of meaningful information from the data without temporal or waveform restrictions, usually by applying the Hilbert transform to the IMFs (Huang et al., 1998). In addition, there exist multivariate variations of these algorithms (Rehman and Mandic, 2010; Ur Rehman and Mandic, 2011) that allow multiple recorded neuronal signals to be analyzed together. This is possible thanks to the simultaneous decomposition of all dimensions of the data, which ensures the same number of IMFs containing the information in the same frequency ranges (Rehman and Mandic, 2010). Thus, thanks to the advantages of EMD algorithms over classic linear analysis, they are being increasingly used in neuronal analysis (Liang et al., 2005; Huang et al., 2013; Al-Subari et al., 2015; Alegre-Cortés et al., 2016), and they are helping us to achieve a better understanding of the oscillatory properties of neuronal activity (Buzsáki and Draguhn, 2004).
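As an illustration of the Hilbert step mentioned above, the following minimal sketch computes the instantaneous amplitude and instantaneous frequency (IF) of a single oscillatory component. It does not perform the EMD itself: the "IMF" here is a synthetic 40 Hz cosine, and the function names, sampling rate, and signal are assumptions chosen only for the example. The analytic signal is built with a plain FFT, which is the usual construction behind Hilbert-transform routines.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal of a real 1-D array, built by zeroing negative frequencies."""
    n = x.size
    spectrum = np.fft.fft(x)
    weights = np.zeros(n)
    weights[0] = 1.0                      # keep the DC term once
    if n % 2 == 0:
        weights[n // 2] = 1.0             # keep the Nyquist term once
        weights[1:n // 2] = 2.0           # double the positive frequencies
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(spectrum * weights)

def instantaneous_features(imf, fs):
    """Instantaneous amplitude and frequency (Hz) of one oscillatory component."""
    z = analytic_signal(imf)
    amplitude = np.abs(z)
    phase = np.unwrap(np.angle(z))
    frequency = np.diff(phase) * fs / (2.0 * np.pi)
    return amplitude, frequency

# Hypothetical stand-in for an IMF: a 40 Hz cosine of amplitude 2, sampled at 1 kHz.
fs = 1000.0
t = np.arange(0.0, 1.0, 1.0 / fs)
toy_imf = 2.0 * np.cos(2.0 * np.pi * 40.0 * t)

amp, freq = instantaneous_features(toy_imf, fs)
mean_amp = amp.mean()   # mean amplitude over the window
mean_if = freq.mean()   # mean instantaneous frequency over the window
```

Averaging `amp` and `freq` over a time window gives the kind of per-IMF summary features (mean amplitude, mean IF) that can then be passed to a classifier.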

Despite the advantages of this approach, we should take into account that EMD algorithms increase the dimensionality of the data, since they convert the original signal into a set of IMFs. Hence, these procedures make it more difficult to manage the data in order to extract useful results or perform any desired classification.

In this context, ML techniques are well suited to analyze and classify the decomposed neuronal activity. ML is a subfield of statistics and computer science that takes advantage of the power of computers to perform iterative computations in order to identify the existing patterns in the data, build models, and make predictions. Furthermore, the projection of the data into a higher dimensional space provides an additional advantage, since it helps to improve discrimination (Cover, 1965).
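The benefit of a higher dimensional representation can be seen in a toy case that is unrelated to the recordings discussed here. In the sketch below, four XOR-labeled points cannot be split by any line in two dimensions, but become linearly separable once a third, non-linear feature (the product of the two coordinates) is added. The perceptron routine, the data, and the chosen extra feature are all assumptions made for illustration.

```python
import numpy as np

def perceptron_separates(X, y, epochs=1000):
    """Return True if a perceptron finds a hyperplane that classifies every point."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])  # append a bias column
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        errors = 0
        for xi, yi in zip(Xb, y):
            if yi * np.dot(w, xi) <= 0:   # misclassified or on the boundary
                w += yi * xi
                errors += 1
        if errors == 0:                   # a full pass with no mistakes
            return True
    return False

# XOR-style labels (+1 / -1) on the four corners of a square.
X = np.array([[-1.0, -1.0], [-1.0, 1.0], [1.0, -1.0], [1.0, 1.0]])
y = np.array([-1, 1, 1, -1])

separable_2d = perceptron_separates(X, y)

# Lift to three dimensions by adding the product x1 * x2 as a new feature.
X_lifted = np.hstack([X, (X[:, 0] * X[:, 1])[:, None]])
separable_3d = perceptron_separates(X_lifted, y)
```

Here `separable_2d` is `False` and `separable_3d` is `True`: the same four points become separable by a plane once the extra dimension is available.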

To support these ideas and the advantages of the proposed approach, we will briefly introduce a couple of real examples based on different experimental approaches and electrophysiological techniques.

Texture discrimination from vibrissal nerve recordings

The first example consists of electrophysiological recordings from the rat vibrissal nerve during a texture discrimination task (see Albarracín et al., 2006 for details). Previous work with these data (Lucianna et al., 2016), using linear techniques for T–F feature extraction (Root Mean Square value to estimate signal energy and the Burg parametric estimation method to compute the Power Spectral Density) and a simple perceptron (Hertz et al., 1991), concluded that five sweeps were required for an adequate texture classification. To test our proposal, we performed a similar analysis on the pair of materials that is hardest to discriminate, wood vs. L1000 sandpaper (Figure 1A), using information from single sweeps on the surface. Previous results on this pair of textures had reported that a single sweep provided just 70% correct texture classification, with great variability.

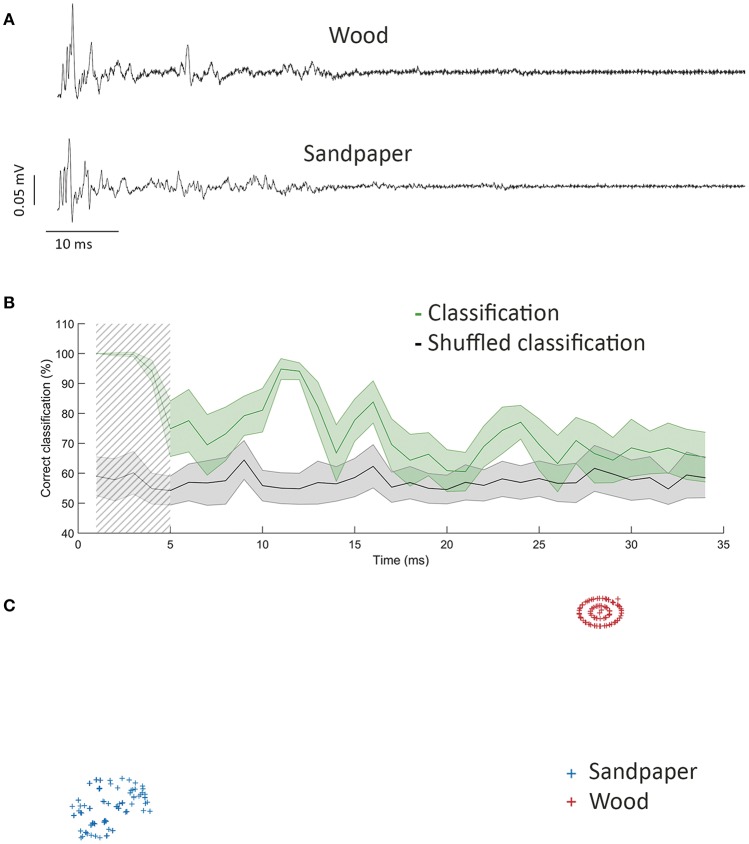

Figure 1.

Texture discrimination using NA-MEMD plus MLP. (A) Mean vibrissal nerve response to sweeping wood (top) and sandpaper (bottom). (B) Percentage of correct classification (green) and classification after shuffling (gray). Shadow square represents maximum discrimination window, used in (C). Error displayed as s.e.m. (C) t-SNE representation of vibrissal nerve activity during the first 5 ms of the response.

We decomposed the data using Noise Assisted Multivariate Empirical Mode Decomposition (NA-MEMD, Ur Rehman and Mandic, 2011) to obtain the T–F spectrum of the response to each texture (see Alegre-Cortés et al., 2016 for details). The standard stopping criterion is described in Rilling et al. (2003). The mean amplitude and mean instantaneous frequency (IF) of the different IMFs between 115 and 384 Hz were used to train a multilayer perceptron (MLP) to perform the classification (Cybenko, 1989). We used a single hidden layer of 14 neurons and scaled conjugate gradient as the supervised training algorithm (Powell, 1977). We repeated this analysis on a sliding window to compute the temporal profile of discrimination between these textures during the 100 ms after stimulus offset. To guard against biased results due to the finite number of experiments, we shuffled the texture labels across our data to determine the average classification performance expected by chance. This process was repeated 100 times in each window.
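The label-shuffling control described above can be sketched as follows. This is not the analysis pipeline used for the figures: the features are synthetic Gaussian data, the classifier is a simple nearest-centroid rule standing in for the MLP, and the hold-out fraction, random seed, and all function names are assumptions made for the example. Only the number of repetitions (100) follows the text.

```python
import numpy as np

def nearest_centroid_accuracy(X_train, y_train, X_test, y_test):
    """Accuracy of a nearest-centroid rule (stand-in for the MLP) on two classes."""
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in (0, 1)])
    dists = np.linalg.norm(X_test[:, None, :] - centroids[None, :, :], axis=2)
    return np.mean(dists.argmin(axis=1) == y_test)

def holdout_accuracy(X, y, rng, test_fraction=0.3):
    """Train on a random split of the trials and score on the held-out trials."""
    order = rng.permutation(len(y))
    n_test = int(len(y) * test_fraction)
    test, train = order[:n_test], order[n_test:]
    return nearest_centroid_accuracy(X[train], y[train], X[test], y[test])

rng = np.random.default_rng(0)

# Synthetic features for one time window: 100 trials per class, 4 features each.
n_trials = 100
X = np.vstack([rng.normal(0.0, 1.0, (n_trials, 4)),
               rng.normal(3.0, 1.0, (n_trials, 4))])
y = np.repeat([0, 1], n_trials)

n_repeats = 100
true_acc = np.array([holdout_accuracy(X, y, rng) for _ in range(n_repeats)])
# Shuffling the labels breaks any link between features and class.
shuffled_acc = np.array([holdout_accuracy(X, rng.permutation(y), rng)
                         for _ in range(n_repeats)])

# Mean and standard error of the mean (s.e.m.) for both conditions.
true_mean, true_sem = true_acc.mean(), true_acc.std(ddof=1) / np.sqrt(n_repeats)
chance_mean, chance_sem = shuffled_acc.mean(), shuffled_acc.std(ddof=1) / np.sqrt(n_repeats)
```

With labels shuffled, accuracy should hover around 50% for two balanced classes. Repeating the same comparison in each position of a sliding window would give a temporal profile of real versus chance-level discrimination.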

Figure 1 shows the main results. Discrimination was maximal (99.5 ± 0.5%, shadow square, Figure 1B) during a 5 ms window starting 5 ms after stimulus offset. Discrimination performance decreased over time, but a second peak of discrimination was seen 15–20 ms after stimulation, coinciding in time with the second contact with the surface during the withdrawal of the whisker. When we compared this maximum value of discrimination (Figure 1B) with the previous results obtained on this dataset (≈70% on average, Lucianna et al., 2016), we found a clear increase in texture discrimination with the combined use of NA-MEMD followed by ML classification. Moreover, the classification was based on single-trial recordings and showed almost no variability at the peak of discrimination (Figure 1B), providing an additional improvement over previous results.

We used the t-distributed stochastic neighbor embedding algorithm (t-SNE) (van der Maaten and Hinton, 2008) as an additional ML technique to differentiate the vibrissal nerve response to the different stimulating textures (wood and sandpaper), starting from the same parameters we used to train the MLP in a time window of 5 ms length starting 5 ms after stimulation, coinciding with maximum discrimination in Figure 1B. This technique is useful to reduce the dimensionality of the data and allowed us to classify our complex data into two different and well-separated clusters, each one corresponding to one of the stimulating textures: wood and sandpaper (Figure 1C).

Stimulation electrode discrimination from multielectrode recordings of primary cortical neuron cultures

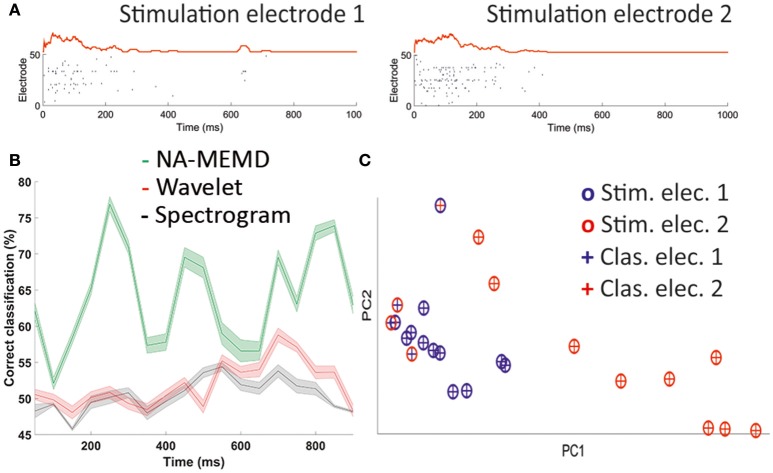

To further illustrate the power and potential of this approach, we carried out an additional analysis of simultaneous recordings in primary cortical neuron cultures (see Calvo et al., 2016 for details). Briefly, embryonic primary cortical neurons were cultured on a multielectrode array; then, population activity was recorded simultaneously at 60 points of the culture while it was electrically stimulated at two different electrodes of the array (Figure 2A). We decomposed the averaged activity present in the electrode to obtain the mean oscillatory activity during 100 stimulations in each of the stimulation electrodes independently using NA-MEMD. Then, we extracted different values of mean amplitude and mean IF at different T–F windows (IMFs ranging from 30 to 90 Hz) to train an MLP to discriminate the stimulation electrode from the recorded activity, using trials in which a minimum number of spikes were evoked in the whole response window. We used a single hidden layer of 15 neurons and scaled conjugate gradient as the supervised training algorithm. An equivalent shuffling procedure was carried out to subtract chance-level classification. This process was repeated 100 times.

Figure 2.

Stimulation electrode discrimination. (A) Example of a single stimulation in each stimulation electrode. Raster plot of the whole electrode and mean activity vector. (B) Percentage of correct classification using NA-MEMD (green), Morlet wavelet (red), and spectrogram (gray). Error displayed as s.e.m. (C) Distribution of individual trials after using PCA (crosses) and clusterization using DBSCAN algorithm (circles).

Once we subtracted chance-level classification, discrimination of the stimulation electrode peaked 200–300 ms after stimulation, exceeding 75% successful classification when we used NA-MEMD as the feature extraction tool (Figure 2B). Therefore, we were able to identify the electrode on which a single stimulation had occurred by analyzing the oscillatory properties of the generated response. This was not possible when we extracted the T–F features to train the MLP using either spectrogram or wavelet (Morlet) analysis. When we used these linear techniques, stimulation electrode classification was similar to chance-level classification (Figure 2B).

We performed an additional analysis applying DBSCAN, a density-based algorithm designed to discover clusters of arbitrary shape in large spatial databases with noise (Ester et al., 1996). This algorithm was applied to the parameters extracted using NA-MEMD during the window of maximum discrimination in Figure 2B (200–300 ms after stimulus onset). We found two clusters (Figure 2C), corresponding to the two stimulation electrodes. A total of 83% of the trials were in the correct clusters, in close agreement with the mean percentage of correct classification of the MLP in that time window before the subtraction of the chance-level classification.
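One possible way to quantify how many trials fall in the "correct" cluster is sketched below. How this percentage was computed is not detailed here, so the majority-label rule, the function name, and the toy arrays are assumptions for illustration only. Cluster id -1 is treated as noise, following a common convention for DBSCAN output, and noise trials are counted as incorrect.

```python
import numpy as np

def cluster_agreement(cluster_ids, true_labels):
    """Fraction of trials whose label matches the majority label of their cluster.

    Trials with cluster id -1 (assumed here to mean 'noise') count as incorrect.
    """
    cluster_ids = np.asarray(cluster_ids)
    true_labels = np.asarray(true_labels)
    correct = 0
    for c in np.unique(cluster_ids):
        if c == -1:
            continue
        members = true_labels[cluster_ids == c]
        _, counts = np.unique(members, return_counts=True)
        correct += counts.max()           # trials agreeing with the majority label
    return correct / true_labels.size

# Toy example: 10 trials, two clusters, one trial flagged as noise.
clusters = np.array([0, 0, 0, 0, 1, 1, 1, 1, 1, -1])
electrode = np.array([0, 0, 0, 1, 1, 1, 1, 1, 0, 1])

agreement = cluster_agreement(clusters, electrode)  # 7 of 10 trials agree
```

A caveat of this simple rule is that two clusters could share the same majority label; with two well-separated clusters, as in the case described above, that should not arise.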

Concluding remarks

Over the last decade, many technical and conceptual issues related to the analysis of neuronal recordings have been addressed, but there are still some problems related to the analysis of T–F data. We suggest that the combination of T–F signal decomposition via EMD algorithms (NA-MEMD, in our case) and a subsequent classification of the obtained parameters using ML techniques is a powerful tool in this framework. Therefore, the implementation of this combination of analytical tools in day-to-day neuroscience research would improve the information extracted from recordings of single or multiple neuronal activities and, ultimately, increase our understanding of the nervous system. Furthermore, although more studies are still needed, these tools could also be useful for a better understanding of some pathological processes of the brain.

Ethics statement

All the procedures carried out at the Institute for Biological Research (INSIBIO)/Instituto Superior de Investigaciones Biológicas were in accordance with the recommendations of the Guide for the Care and Use of Laboratory Animals (National Research Council, NRC). All the experimental procedures carried out at the Miguel Hernandez University conformed to the directive 2010/63/EU of the European Parliament and of the Council and to the RD 53/2013 Spanish regulation on the protection of animals used for scientific purposes, and were approved by the Miguel Hernandez University Committee for Animal use in Laboratory.

Author contributions

Data have been provided by AA, FF, and MV-C. Data have been analyzed by JA-C. Paper was written by JA-C, CS-S, JF, and EF.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work has been supported in part by the Spanish national research program (MAT2015-69967-C3-1), by Research Chair Bidons Egara and by a research grant of the Spanish Blind Organization (ONCE).

References

- Albarracín A. L., Farfán F. D., Felice C. J., Décima E. E. (2006). Texture discrimination and multi-unit recording in the rat vibrissal nerve. BMC Neurosci. 7:42. 10.1186/1471-2202-7-42

- Alegre-Cortés J., Soto-Sánchez C., Pizá A. G., Albarracín A. L., Farfán F. D., Felice C. J., et al. (2016). Time-frequency analysis of neuronal populations with instantaneous resolution based on noise-assisted multivariate empirical mode decomposition. J. Neurosci. Methods 267, 35–44. 10.1016/j.jneumeth.2016.03.018

- Al-Subari K. S. A., Al-Baddai S. M. H., Tomé A. M., Goldhacker M., Faltermeier R., Lang E. W. (2015). EMDLAB: a toolbox for analysis of single-trial EEG dynamics using empirical mode decomposition. J. Neurosci. Methods 253, 1–14. 10.1016/j.jneumeth.2015.06.020

- Bathellier B., Ushakova L., Rumpel S. (2012). Discrete neocortical dynamics predict behavioral categorization of sounds. Neuron 76, 435–449. 10.1016/j.neuron.2012.07.008

- Bongard M., Micol D., Fernández E. (2014). NEV2lkit: a new open source tool for handling neuronal event files from multi-electrode recordings. Int. J. Neural Syst. 24:1450009. 10.1142/S0129065714500099

- Buonomano D. V., Merzenich M. M. (1995). Temporal information transformed into a spatial code by a neural network with realistic properties. Science 267, 1028–1030. 10.1126/science.7863330

- Buzsáki G., Draguhn A. (2004). Neuronal oscillations in cortical networks. Science 304, 1926–1929. 10.1126/science.1099745

- Calvo M., Ferrández J., De la Paz López F., Álvarez Sánchez J., Troncoso J., Eduardo F. (2016). Functional connectivity graphs in hippocampal cultures using tetanic stimulation for real time robotic control. Front. Neurosci. 10:104 10.3389/conf.fnins.2016.93.0010427047326

- Carlson D. E., Vogelstein J. T., Wu Q., Lian W., Zhou M., Stoetzner C. R., et al. (2014). Multichannel electrophysiological spike sorting via joint dictionary learning and mixture modeling. IEEE Trans. Biomed. Eng. 61, 41–54. 10.1109/TBME.2013.2275751

- Cover T. M. (1965). Geometric and statistical properties of systems of linear in-equalities with applications in pattern recognition. IEEE Trans. Electron. Comput. 14, 326–334. 10.1109/PGEC.1965.264137

- Cybenko G. (1989). Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 2, 303–314. 10.1007/BF02551274

- Dimitriadis G., Neto J., Kampff A. (2016). T-SNE visualization of large-scale neural recordings. bioRxiv 1–22. 10.1101/087395

- Ester M., Kriegel H. P., Sander J., Xu X. (1996). A density-based algorithm for discovering clusters in large spatial databases with noise, in Proceedings of 2nd International Conference on Knowledge Discovery and Data Mining, (Munich: ), 226–231.

- Hertz J. A., Krogh A. S., Palmer R. G. (1991). Introduction to the Theory of Neural Computation, New Edn. Redwood City, CA: Westview Press.

- Hopfield J. J. (1982). Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. U.S.A. 79, 2554–2558. 10.1073/pnas.79.8.2554

- Hu M., Liang H. (2014). Search for information-bearing components in neural data. PLoS ONE 9:e99793. 10.1371/journal.pone.0099793

- Huang J. R., Fan S. Z., Abbod M. F., Jen K. K., Wu J. F., Shieh J. S. (2013). Application of multivariate empirical mode decomposition and sample entropy in EEG signals via artificial neural networks for interpreting depth of anesthesia. Entropy 15, 3325–3339. 10.3390/e15093325

- Huang N. E., Shen Z., Long S., Wu M., Shih H., Zheng Q., et al. (1998). The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. R. Soc. Lond. A 454, 903–995. 10.1098/rspa.1998.0193

- Kabra M., Robie A. A., Rivera-Alba M., Branson S., Branson K. (2012). JAABA: interactive machine learning for automatic annotation of animal behavior. Nat. Methods 10, 64–67. 10.1038/nmeth.2281

- Klampfl S., David S. V., Yin P., Shamma S. A., Maass W. (2012). A quantitative analysis of information about past and present stimuli encoded by spikes of A1 neurons. J. Neurophysiol. 108, 1366–1380. 10.1152/jn.00935.2011

- Kohonen T. (1982). Self-organized formation of topologically correct feature maps. Biol. Cybern. 43, 59–69. 10.1007/BF00337288

- Li X. (2006). Temporal structure of neuronal population oscillations with empirical model decomposition. Phys. Lett. A 356, 237–241. 10.1016/j.physleta.2006.03.045

- Liang H., Bressler S. L., Desimone R., Fries P. (2005). Empirical mode decomposition: a method for analyzing neural data. Neurocomputing 65–66, 801–807. 10.1016/j.neucom.2004.10.077

- Lucianna F. A., Farfán F. D., Piz G. A., Albarracín A. L., Felice C. J. (2016). Functional specificity of rat vibrissal primary afferents. Physiol. Rep. 4, 1–6. 10.14814/phy2.12810

- Mandic D. P., Ur Rehman N., Wu Z., Huang N. E. (2013). Empirical mode decomposition-based time-frequency analysis of multivariate signals: the power of adaptive data analysis. IEEE Signal Process. Mag. 30, 74–86. 10.1109/MSP.2013.2267931

- McCulloch W. S., Pitts W. A. (1943). A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 5, 115–133. 10.1007/BF02478259

- Nikolić D., Häusler S., Singer W., Maass W. (2009). Distributed fading memory for stimulus properties in the primary visual cortex. PLoS Biol. 7:e1000260. 10.1371/journal.pbio.1000260

- Powell M. J. D. (1977). Restart procedures for the conjugate gradient method. Math. Program. 12, 241–254. 10.1007/BF01593790

- Rehman N., Mandic D. P. (2010). Multivariate empirical mode decomposition. Proc. R. Soc. A 466, 1291–1302. 10.1098/rspa.2009.0502

- Rilling G., Flandrin P., Goncalves P. (2003). On empirical mode decomposition and its algorithms, in IEEE EURASIP Work Nonlinear Signal Image Process NSIP 3 (Grado; ), 8–11.

- Rosenblatt F. (1957). The Perceptron: A Perceiving and Recognising Automaton. Report 85-460-1, Project PARA.

- Ur Rehman N., Mandic D. P. (2011). Filter bank property of multivariate empirical mode decomposition. IEEE Trans. Signal Process. 59, 2421–2426. 10.1109/TSP.2011.2106779

- van der Maaten L., Hinton G. (2008). Visualizing data using t-SNE. J. Mach. Learn. Res. 9, 2579–2605.