Abstract

Enhanced interpersonal relationships and meaningful resident engagement in daily life are central to nursing home cultural transformation, yet these critical components of person-centered care may be difficult for frontline staff to measure using traditional research instruments. To address the need for easy-to-use instruments to help nursing home staff members evaluate and improve person-centered care, the psychometric method of cognitive-based interviewing was used to adapt a structured observation instrument originally developed for researchers and nursing home surveyors. Twenty-eight staff members from 2 Veterans Health Administration (VHA) nursing homes participated in 1 of 3 rounds of cognitive-based interviews, using the instrument in real-life situations. Modifications to the original instrument were guided by a cognitive processing model of instrument refinement. Following 2 rounds of cognitive interviews, pre-testing of the revised instrument, and another round of cognitive interviews, the resulting set of 3 short instruments mirrored the concepts of the original longer instrument but were substantially easier for frontline staff to understand and use. Final results indicated frontline staff found the revised instruments feasible to use and clinically relevant in measuring and improving the lived experience of a changing culture. This paper provides a framework for developing or adapting other measurement tools for frontline culture change efforts in nursing homes, in addition to reporting on a practical set of instruments to measure aspects of person-centered care.

Keywords: person-centered care, cognitive interview, nursing home, quality improvement, instrument development

Introduction

Since 2008, the Veterans Health Administration (VHA) has sought to transform the culture of care across its 135 nursing homes, known as Community Living Centers (CLCs; United States [U.S.] Department of Veterans Affairs, 2008). This cultural transformation has focused on a shift in care principles, from a medical care model driven by diagnoses to a person-centered care model driven by individual residents’ needs and desires. Core concepts of person-centered care include individualized care; family engagement; respect, dignity, and compassion; communication; shared decision-making; self-management; and access to care (National Quality Forum, 2014). Implementation of person-centered care models in nursing homes has not been universally shown to be beneficial (Shier et al., 2014), but it has been associated with, for example, improved psychosocial outcomes among residents (Hill, Kolanowski, Milone-Nuzzo & Yevchak, 2011), less restraint use and better self-performed eating ability among residents (Chang, Li & Porock, 2013), fewer resident distress symptoms (Burack, Weiner & Reinhardt, 2012), higher quality as measured by the Minimum Data Set (Sullivan et al., 2013a), and fewer health-related survey deficiencies (Grabowski et al., 2014).

The initial policy directive for CLCs (U.S. Department of Veterans Affairs, 2008) focused on four primary areas of cultural transformation: resident needs (providing medical and daily care services in an individualized manner within a person-centered environment), work practices (supporting employee engagement and empowerment in decision making, particularly for employees who work most closely with residents), care practices (supporting resident choice, autonomy, and preference across the resident experience, such as dining, resident activity programming, and resident schedules), and environment of care (spaces that reflect a home-like environment, privacy, and comfort). This paper focuses specifically on care practices, an important component of which is prioritizing enhanced resident-staff interactions and the engagement of residents in meaningful activities. That is, a necessary but not sufficient aspect of person-centered care is staff proactively and positively interacting with residents and creating opportunities for residents to be meaningfully occupied.

Positive resident-staff interactions and resident engagement through behavioral interventions are associated with better resident well-being, improved affect and quality of life, and longer survival (Gilbart & Hirdes, 2000; Kiely, Simon, Jones, & Morris, 2000; McKee, Houston, & Barnes, 2002; Meeks, Shah, & Ramsey, 2009; Meeks & Looney, 2011; Schreiner, Yamamoto, & Shiotani, 2005; Wood, Womack, & Hooper, 2009). Yet learning to engage meaningfully with residents has traditionally not been part of nursing home staff training or practice. Changing individual and collective staff behavior is, however, essential to the success of a person-centered care initiative (Gaudet, 2011). Work in other areas, such as measuring the fulfillment of nursing home residents’ preferences, has documented the need for tools that are easy to use and give frontline staff a quick overview (Van Haitsma et al., 2014; Van Haitsma et al., 2016). Simple person-centered care tools are therefore also needed to help frontline staff assess how well they perform these skills and identify potential areas for improvement.

Current Measures of Resident-staff Interaction and Engagement

Measurement of the quality of interpersonal relationships and patient engagement has been identified as a priority for person-centered care (National Quality Forum, 2014). The Resident Assessment Instrument in the Minimum Data Set, while assessing resident preferences for routines and activities, does not gather information on resident preferences for meaningful engagement and interactions or provide tools for staff to measure these constructs. The few existing tools designed to assess interaction and engagement largely rely on self-report approaches (Bowman & Schoeneman, 2006; Edvardsson & Innes, 2010; Sullivan et al., 2013b; Zimmerman et al., 2015). Though these tools are useful for gathering data, their findings are limited by the respondents’ potential biases, both in terms of what they choose not to report and in terms of what they are unaware of and so cannot report. Observational tools that exist for measuring engagement in nursing homes are often computer-based and not designed for staff use (Allen, Burgio, Fisher, Hardin & Shuster, 2005; Allen-Burge, Burgio, Bourgeois, Sims & Nunnikhoven, 2001; Burgio et al., 2001). To overcome these existing limitations, it is critical to develop objective measures of resident-staff interaction and engagement that can easily be used by frontline staff in their day-to-day work lives.

The Resident-centered Assessment of Interactions with Staff and Engagement (RAISE) tool

To begin addressing this need, we developed a structured, systematic, observation-based research tool to better identify behavior concordant with person-centered processes and outcomes. The development of the Resident-centered Assessment of Interactions with Staff and Engagement (RAISE) tool is described elsewhere (Blinded for peer review). Briefly, the RAISE instrument is a paper-based tool that quantifies the number, type, and quality of interactions between and among staff and residents; the level and type of engagement of residents; and the types of activities in which both staff and residents are involved. It has 8 columns containing numbered codes that capture detailed information about 8 variables: target, who is nearby, staff activity, resident activity, staff interaction type, emotional tone, social role, and resident engagement. Each variable is coded by choosing from among up to 30 codes. Observations with the instrument are conducted during a 20-minute period, using 5-second observation intervals, and are recorded on consecutive rows, one row per observation interval. The original RAISE instrument was designed for use primarily by researchers or professional nursing home surveyors and is not practical for use by frontline staff. It has adequate inter-rater reliability, with Fleiss kappas ≥ .70 for all 8 variables. An expert panel positively evaluated its face and construct validity.
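The reliability statistic reported above, Fleiss’ kappa, extends Cohen’s kappa to more than two raters. It compares the mean observed agreement across items (P_bar, the average proportion of agreeing rater pairs per item) with the agreement expected by chance from the overall category proportions (P_e), as kappa = (P_bar − P_e) / (1 − P_e). The Python sketch below only illustrates that standard formula; it is not the authors’ analysis code, and the function name and input layout (one row per observation interval, one column per code, each cell counting the raters who chose that code) are assumptions made for illustration. For scale, one 20-minute RAISE session coded at 5-second intervals yields 20 × 60 / 5 = 240 rows per variable.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for a fixed number of raters per item.

    counts[i][j] is the number of raters who assigned item i (for example,
    one 5-second observation interval) to category j (one code). Every row
    must sum to the same number of raters n >= 2.
    """
    if not counts:
        raise ValueError("need at least one item")
    n = sum(counts[0])          # raters per item
    k = len(counts[0])          # number of categories (codes)
    if n < 2:
        raise ValueError("need at least two raters per item")
    for row in counts:
        if len(row) != k or sum(row) != n:
            raise ValueError("every row must have k categories summing to n")

    N = len(counts)
    # p_j: share of all N * n assignments that went to category j.
    p = [sum(row[j] for row in counts) / (N * n) for j in range(k)]
    # P_i: proportion of agreeing rater pairs on item i.
    P_i = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in counts]
    P_bar = sum(P_i) / N             # mean observed agreement
    P_e = sum(pj * pj for pj in p)   # agreement expected by chance
    if P_e == 1.0:
        raise ValueError("kappa is undefined when every rating is in one category")
    return (P_bar - P_e) / (1 - P_e)
```

For example, if three raters agree on every interval, `fleiss_kappa([[3, 0], [0, 3], [3, 0]])` returns 1.0. Rows whose counts do not sum to the same number of raters are rejected, because the formula assumes every item was rated by the same number of observers.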

This manuscript describes the adaptation of the RAISE instrument into a set of simplified, staff-friendly tools for nursing home quality improvement efforts. We used the psychometric approach of cognitive-based interviewing to simplify the original structured observation tool while retaining the categories that were pertinent to staff. Nursing homes can apply the same methods to adapt other research instruments into staff-friendly culture change measurement tools.

Methods

Study Design

Initial instrument simplification

Researchers initially simplified the original RAISE instrument by eliminating variables deemed tangential to measuring the key concepts of resident-staff interaction and resident engagement: who is nearby, emotional tone, and social role. The initial simplified version of the tool thus included only 5 columns: observation target, staff activity, staff interaction type, resident activity, and resident engagement. The codes for observation target were reduced to a choice of 2 checkboxes on each row, but the other columns kept the original instrument’s numeric codes. For example, to code the staff activity variable, a rater still had to choose from among 30 different codes.

Cognitive interviews

Cognitive-based interviewing is a well-established psychometric method for instrument refinement that helps identify how respondents or users understand materials by capturing and analyzing their thought processes (Beatty & Willis, 2007; Biemer & Lyberg, 2003; Presser et al., 2004; Willis, 2005). It differs from other evaluation methods, such as split-sample experiments, experimental designs, and statistical modeling, in that it focuses on the target audience’s mental processes (Presser et al., 2004; Willis, 2005). Users may have problems with materials for many reasons, including lack of information to make a judgment, unclear or ambiguous terms, items that do not measure the intended constructs, or items designed to make discriminations that are too subtle for respondents. The results of cognitive interviews are framed in terms of participants’ descriptions of how they “understand, mentally process, and respond” (Willis, 2005, p. 3) to the tasks required. In a cognitive interview, a researcher prompts a participant to talk through, for example, an instrument item by item, recording the participant’s impressions. Methods include asking the participant to “think aloud” and then probing for more concrete and descriptive information (Beatty & Willis, 2007; Levine, Fowler & Brown, 2005; Presser et al., 2004; Willis, 2005). Specifically, thinking aloud entails participants verbalizing their thoughts as they read each item, a process that may not be intuitive for participants at first and is often modeled by the researcher. Targeted probes focusing on particular aspects of an item then allow the researcher to understand participants’ thoughts more clearly. This information helps pinpoint problems in the instrument that only actual users can identify. The established methodology for cognitive interviewing, therefore, does not have random sampling or generalizability as its goal (Presser et al., 2004).
Studies using cognitive interviewing have demonstrated that only a few participants (e.g., 4–8) are necessary to identify critical problems with instrument items that can have large effects on data quality (Forsyth, Rothgeb & Willis, 2004; Fowler, 1992; Willis, 2005).

The current study included three rounds of cognitive interviews with CLC staff, together with one round of instrument piloting. Specifically, we recruited staff participants from six units in two CLCs (Site A and Site B) located in different U.S. geographic regions and serving different resident populations. Round 1 consisted of phone cognitive interviews and Round 2 of in-person cognitive interviews, both with participants from Site A. The two research team leaders then piloted the instrument resulting from Round 2 in one community-based nursing home, recording their impressions in notes immediately after the observations. Finally, in Round 3, we conducted both phone and in-person cognitive interviews with staff from Site B to test the multiple instruments that resulted from the piloting.

Numerous published articles and books on cognitive interviewing were used to construct an initial cognitive interview guide and train research staff on cognitive interviewing procedures (Beatty & Willis, 2007; Levine, Fowler & Brown, 2005; Presser et al., 2004; Willis, 2005). Training on becoming an effective cognitive interviewer is not time intensive compared with other methods for testing and evaluating instruments, and we were able to train various levels of research staff effectively with minimal use of time. Throughout the study, we modified the cognitive interview guide as the content of the RAISE instrument changed based on feedback. Each version of the interview guide contained probes relating to general impressions of the instrument, its perceived strengths and weaknesses, and specific items and categories (see examples below).

At each site, all CLC staff members with direct resident interaction as part of their jobs were eligible to participate. Staff were recruited via an email invitation from the researchers for both the phone and in-person interviews. Opportunities for in-person cognitive interviews were also announced at staff meetings and through posted flyers. Phone interviews were conducted at a convenient time selected by the participant. In-person interviews were conducted on the CLC units during a 4-day site visit. All cognitive interviews were recorded and, when possible, a note taker was present. After each cognitive interview, the note taker and/or interviewer recorded their impressions.

Other procedures differed somewhat for phone and in-person cognitive interviews. Phone participants were sent a packet containing the following: an introductory summary of the cognitive interview procedures, a detailed summary of the RAISE tool that included information on how it would be used in a real-life setting, and a copy of the tool itself. The phone interviewer began by reviewing information in the packet, concentrating on the procedures for using the RAISE tool and the explanation of the actual tool. The interviewer then used the interview guide to elicit comments about the tool. Questions included, “Can you tell me what was going through your mind as you looked at the instrument? What were your main impressions?” “What do you see as the strengths of the tool in using it?” and “What do you see as the weaknesses of the tool in using it?” During in-person cognitive interviews, the researchers took a CLC staff member out on the floor of a unit, demonstrated how to use the RAISE tool, and then had the participant use the tool while observing activity in the CLC and think aloud while doing so. The staff person thus used the tool in a real situation and gave feedback. The researcher used probes to elicit the participant’s impressions of specific items. Probes included questions such as, “We hope to improve resident engagement with this tool. What do you think of when you hear the words ‘resident engagement’? Does this instrument capture that for you?” and “Let’s go through each column on the tool. Which words were unclear in their meaning? For those words that were unclear, what did the word mean to you?” Approval was obtained for all study procedures from the Department of Veterans Affairs Central IRB.

Data analysis

Data analysis was iterative. After each round of cognitive interviews was completed, all recordings were reviewed, as were the notes from instrument piloting. One member of the study team constructed a site-level summary matrix based on the recordings and notes, as is usual in cognitive interviewing data analysis (Willis, 2005). This matrix summarized the findings and indicated where the tool needed modification. Findings included, but were not limited to, suggestions about unclear wording, unclear organization, multiple interpretations, suggested changes, and problems encountered during implementation. We organized the matrix around the 5 domains for categorizing end-user feedback outlined by Willis (2005): Comprehension/Communication, Response Category, Bias/Sensitivity, Recall/Computation, and Logic. These domains relate to interviewees’ cognitive processing when reviewing an instrument. We also remained open to data that did not fit these domains, which led us to add an Ease of Use domain to capture tool usability. The entire research team reviewed the completed site-level matrix iteratively, returning to notes and interview recordings as necessary for clarification, until consensus on coding was reached. The final matrix then served as the basis for subsequent modifications to the RAISE tool.
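A site-level summary matrix of this kind is typically kept in a spreadsheet or word-processing table. The Python sketch below only illustrates its structure under stated assumptions: the `Feedback` record fields, the participant codes, and the example comments are hypothetical, and sending comments with an unrecognized domain to a review bucket loosely mirrors, rather than reproduces, the practice of staying open to feedback outside the Willis (2005) domains.

```python
from collections import defaultdict
from dataclasses import dataclass

# Willis (2005) domains plus the Ease of Use domain added in this study.
DOMAINS = (
    "Comprehension/Communication",
    "Response Category",
    "Bias/Sensitivity",
    "Recall/Computation",
    "Logic",
    "Ease of Use",
)
# Hypothetical bucket for comments that fit none of the domains above.
OTHER = "Other (needs team review)"


@dataclass(frozen=True)
class Feedback:
    participant: str  # hypothetical de-identified participant code
    element: str      # tool column or item the comment concerns
    domain: str       # domain assigned by the coder
    note: str         # summarized comment


def build_matrix(records):
    """Group feedback into a domain x tool-element matrix.

    Comments whose domain is not in DOMAINS are kept under OTHER rather than
    discarded, so the team can decide whether a new domain is needed.
    """
    matrix = {d: defaultdict(list) for d in (*DOMAINS, OTHER)}
    for r in records:
        domain = r.domain if r.domain in DOMAINS else OTHER
        matrix[domain][r.element].append(r)
    return matrix


def summarize(matrix):
    """Count distinct participants in each non-empty (domain, element) cell."""
    return {
        (domain, element): len({r.participant for r in items})
        for domain, cells in matrix.items()
        for element, items in cells.items()
    }
```

For instance, two hypothetical comments from participant "P01" and one from "P02" about the staff activity column, all coded Ease of Use, produce a cell holding three comments from two distinct participants. Counting distinct participants rather than raw comments keeps one talkative interviewee from dominating a cell.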

Results

In total, we conducted 5 semi-structured telephone interviews and 23 in-person cognitive interviews with CLC leaders and clinical and nursing staff members between April and November 2014. All in-person cognitive interviews took place with participants conducting observations using the tool on CLC units. These data collection efforts represent cognitive interview Rounds 1, 2, and 3, as described above. Cognitive interviews lasted between 30 and 60 minutes. Instrument piloting took 1 hour.

All data from the four rounds of data collection (three rounds of cognitive interviews and the piloting) fit into one of the Willis (2005) domains or into the additional domain we termed Ease of Use.

Round 1 Cognitive Interviews

Table 1 highlights Round 1’s suggested and incorporated tool changes, organized by domain. Round 1’s interviewee feedback pertained mostly to changes in the Response Category and Ease of Use domains. Response Category changes included consolidating response options, specifying the meaning of response options, and improving response options (e.g., creating response options to be circled rather than written in). Suggested changes related to Ease of Use involved organizing response options under subheadings, improving instructions with directions to choose all that apply, adding visuals to supplement text, and using larger print. Based on the cognitive interviews, we also made one change related to Comprehension/Communication: adding definitions for various terms directly on the tool. Suggestions in the Logic domain led us to combine two columns into one column.

Table 1.

Round 1 Suggested Modifications by Domain

| Domainᵃ | Suggested Modification |
|---|---|
| Comprehension/Communication | Define key terms (e.g., “positive interaction”; “actively engaged”)ᵇ |
| Response Category | Present target individual column response options so they can be circled (rather than written in) |
| | Change “N/A” response option to “not interacting” |
| | Minimize number of staff/resident activity response optionsᵇ |
| | Collapse response options in resident activity columnᵇ |
| Logic | Combine the columns for “staff doing what with whom” and “staff activity” |
| Ease of Use | Add subheadings to staff activity column |
| | Add the instruction “choose all that apply” to staff activity column |
| | Add visuals to supplement textᵇ |
| | Use larger printᵇ |

ᵃ These domains are modified from those presented in Willis (2005). Domains not included in the table were not mentioned by participants or did not result in changes to the tool.

ᵇ This suggestion was made in Round 1 but addressed between Round 2 and the piloting.

Within the Bias/Sensitivity domain, participants made several general comments that were not associated with specific changes in this round. One interviewee, for example, proposed making the tool look less like a report card. We addressed this suggestion by adding other visuals and making additional changes, described below under Round 3’s results. Two other participants commented on the meaning of the tool to its end users. One pointed to potential reluctance among staff to rate their coworkers negatively; the other appreciated that data collected about resident engagement were not based on complaints. We modified the instrument based on this feedback, along with additional feedback from Round 2, by shifting to a strengths-based, rather than a deficiency-based, assessment approach at the end of Round 3.

Round 2 Cognitive Interviews

Round 2 cognitive interviews reflected theoretical saturation; that is, the interviewees in Round 2 did not perceive the need for any new substantive changes to the instrument, and thus none were made. However, 2 global themes from Round 1 persisted in Round 2: (a) the tool was perceived as imposing an excessive cognitive load (e.g., learning to use it would involve a steep learning curve), and (b) a deficiency-based assessment would produce suboptimal results (e.g., some staff might perceive the concept of “missed opportunity for relationship” in the Round 2 tool as a criticism).

Piloting

Because staff comments did not lead to substantive changes in Round 2, we piloted the Round 2 tool in one community-based nursing home. Resulting changes fell into the Comprehension/Communication, Response Category, Bias/Sensitivity, Logic, and Ease of Use domains (see Table 2).

Table 2.

Suggested Modifications by Domain Based on Piloting

| Domainᵃ | Suggestion |
|---|---|
| Comprehension/Communication | Change “interaction” to “communication” |
| | Change “passively engaged” to “only receiving input” |
| | Add non-verbal communication category (in addition to verbal communication) |
| | Define non-verbal and verbal communication |
| | Define the various levels of engagement users are asked to code |
| | Define positive and neutral communication types |
| Response Category | Consolidate levels of engagement into fewer response options |
| | Remove non-essential response options (i.e., collapse “small group activity” and “large group activity” into “group activity”) |
| | Replace response option “cannot rate” with “can’t see/can’t hear” (i.e., can’t see or hear what the targeted individual is doing/saying) |
| Bias/Sensitivity | Change “missed opportunity for relationship” to “realized opportunity for relationship” |
| | Remove the response options “not attending resident distress,” “ignoring,” and “non-work” |
| Logic | Develop one tool per overarching measurement goal (i.e., divide the existing single tool into 3 separate tools) |
| | Represent only one construct per column (i.e., separate staff activities and staff communication with resident into two columns) |
| | Organize column order so tool logic is explicit (i.e., components adding up to a realized opportunity for relationship are presented in the appropriate sequence) |
| Ease of Use | Add instructions to top of tool |
| | Replace open-ended code boxes with categorical check-off boxes |
| | Remove response option numbering |

ᵃ These domains are modified from those presented in Willis (2005). Domains not included in the table were not mentioned by participants or did not result in changes to the tool.

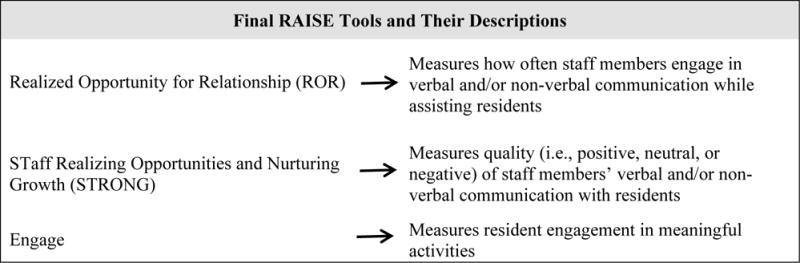

Suggestions in the Logic domain resulted in a major restructuring of the tool. The Round 2 tool was transformed into three primary tools, each focused on 1 construct and 1 type of target individual (staff member or resident). These new tools were named the Realized Opportunity for Relationship (ROR), the Staff Realizing Opportunities and Nurturing Growth through communication (STRONG), and the Engage tool (see Figure 1). The ROR and STRONG tools targeted staff members for observation. The former assessed whether staff members took advantage of opportunities to engage in verbal and/or non-verbal communication with a resident while assisting that resident. The latter assessed the quality (i.e., positive, neutral, or negative) of a staff member’s verbal and/or non-verbal communication with a resident. The Engage tool had residents as the focus of the observations and assessed whether a resident was engaged in a meaningful activity. A resident could be rated as actively engaged (e.g., playing a card game), receiving input only (e.g., watching television in a passive manner), or not engaged (e.g., staring at the floor). Each tool had columns that represented only 1 construct, and column order was organized to make each tool’s logic explicit.

Figure 1. Final RAISE Tool Descriptions.
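The paper does not specify how a completed Engage sheet is scored. Purely as a hypothetical illustration, a unit could tally the code on each row and report the share of ratable intervals at each engagement level. The sketch below assumes that rows where the observer could not see or hear the resident carry a separate “can’t see/can’t hear” code and are left out of the denominator; the exact code labels and the `summarize_engage` function are illustrative assumptions, not part of the published tool.

```python
from collections import Counter

# Engagement levels described for the Engage tool.
LEVELS = ("actively engaged", "receiving input only", "not engaged")
# Assumed code for rows that could not be rated; excluded from the denominator.
UNRATABLE = "can't see/can't hear"


def summarize_engage(rows):
    """Return the proportion of ratable rows at each engagement level.

    `rows` holds one code per observation row, in sheet order.
    """
    counts = Counter(rows)
    unknown = set(counts) - set(LEVELS) - {UNRATABLE}
    if unknown:
        raise ValueError(f"unrecognized codes: {sorted(unknown)}")
    ratable = sum(counts[level] for level in LEVELS)
    if ratable == 0:
        # Nothing could be rated, so no proportion is meaningful.
        return {level: None for level in LEVELS}
    return {level: counts[level] / ratable for level in LEVELS}
```

With three “actively engaged” rows, one “receiving input only” row, two “not engaged” rows, and two unratable rows, the sketch reports 0.5 actively engaged out of six ratable rows. Excluding unratable rows keeps missing observations from being read as non-engagement; a unit could instead report them as a separate count.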

Responding to suggestions in the Bias/Sensitivity domain from cognitive interviewing Rounds 1 and 2 and the instrument piloting, the research team also switched the focus of the tools from identifying deficiencies to identifying strengths in resident care. We substituted the strengths-based concept, realized opportunity for relationship (ROR), for the previous deficiencies-based concept, missed opportunity for relationship. The revised tool was designed to capture instances when staff capitalized on the potential for creating relationships (e.g., talking to and smiling at a resident while feeding her/him), rather than instances when the potential for relationship was overlooked (e.g., not communicating while feeding the resident). A second change was to remove response options with deficiency-based connotations (e.g., deleting “not attending to resident distress”).

We also made language changes that related to the Comprehension/Communication domain, based on feedback from Rounds 1 and 2 and the piloting. This included altering and defining terms more explicitly. For example, the word “interaction” was changed to “communication” in the ROR and STRONG tools to improve tool clarity and accuracy in recording observations. For the same reasons, definitions of key terms were merged directly into the tools’ text.

Round 3 Cognitive Interviews

The Round 3 cognitive interviews revealed a few issues with the ROR and STRONG tools pertaining to the Comprehension/Communication, Logic, and Ease of Use domains (see Table 3). Interviewees pointed out that these tools needed explicit indicators that talking and nonverbal communication could, and should, both be selected when both were observed. Interviewees also suggested some formatting changes, such as adding row numbers and reducing text crowding. Interviewees recommended no changes to the Engage tool.

Table 3.

Round 3 Suggested Modifications by Domain

| Domainᵃ | Suggestion |
|---|---|
| Comprehension/Communication | Describe what is meant by “negative communication” |
| Logic | Clarify that the “talking” and “nonverbal” response options can both be checked (i.e., add the instruction “check all that apply”) |
| | Separate staff communication column from staff activity column |
| Ease of Use | Add row numbers |
| | Make tools easier to read by reducing crowding of text |

ᵃ These domains are modified from those presented in Willis (2005). Domains not included in the table were not mentioned by participants or did not result in changes to the tool.

Beyond these minor suggestions, interviewees responded favorably to the set of tools. After using them in real-life situations, participants said they believed the tools had clinical value for reinforcing key constructs of person-centered care. Participants stated that the tools’ constructs were important to track and that the tools would motivate and empower staff to incorporate the measured concepts in resident care. Participants also indicated they found all tools easy to use when conducting observations. They found having examples and definitions directly on the tools helpful and described the tools as simple, self-explanatory, and requiring little training.

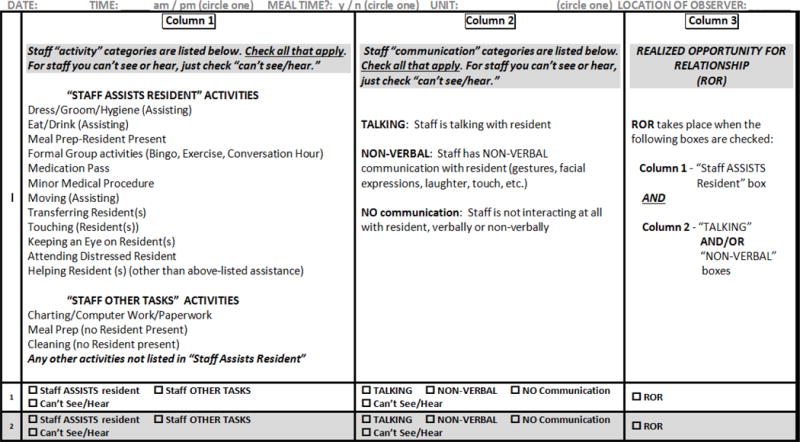

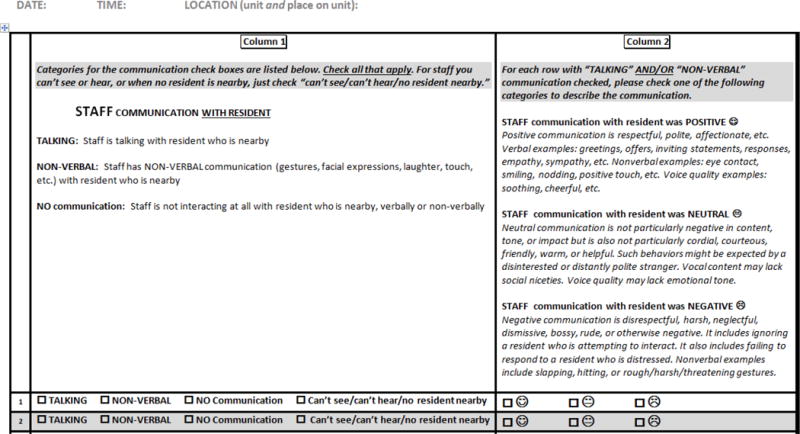

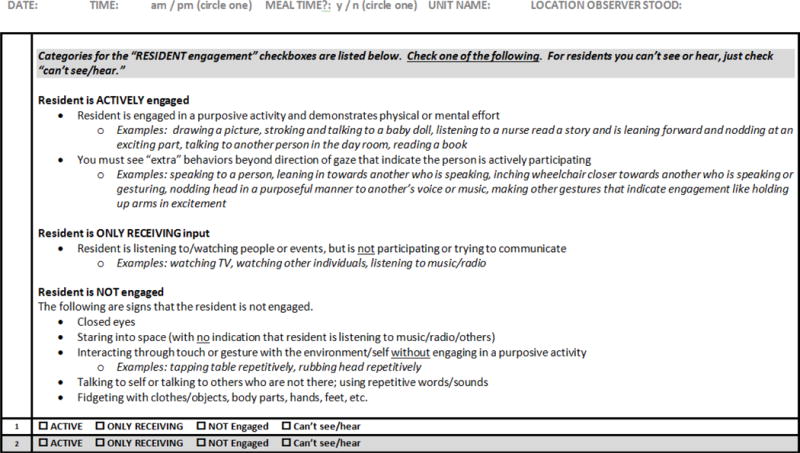

Final Instruments

The final set of 3 tools, the Realized Opportunity for Relationship (ROR) tool, the STaff Realizing Opportunities and Nurturing Growth (STRONG) tool, and the Engage tool, is shown in Figures 2, 3, and 4, respectively.

Figure 2.

Realized Opportunity for Relationship (ROR) Tool, Final Version

Figure 3.

Staff Realizing Opportunities and Nurturing Growth (STRONG) Tool, Final Version

Figure 4.

Engage Tool, Final Version

Discussion

There is a strong, documented need for improving long-term care settings by using quality improvement projects. But frontline staff may lack opportunities to participate in such quality improvement work because research instruments often require substantial time for training and practice and may not be readily comprehensible to non-researchers. We therefore set out to adapt a research instrument into a staff-friendly quality improvement tool by using methods that are easily transferable to other such endeavors. In our work, iterative rounds of cognitive interviewing with end users enabled us to develop a set of tools that met the needs of frontline staff. The final set of tools measures different aspects of resident-staff interactions and resident engagement. The tools are designed to be used separately or in conjunction with each other, depending on the focus of the quality improvement work being undertaken. The methods outlined are particularly important for culture change, where accurately capturing the experiences of nursing home residents and staff is critical to philosophical as well as practical success.

Implementing aspects of culture change may provide nursing homes with numerous benefits, such as positive effects on resident outcomes, including quality of life, quality of care indicators, and activities of daily living (Colorado Foundation for Medical Care, 2006; Grant, 2008; Hill et al., 2011; Kane, Lum, Cutler, Degenholtz & Yu, 2007). There are also potential beneficial effects for staff, including lower turnover and higher satisfaction (Craft Morgan, Haviland, Woodside & Konrad, 2007; Deutschman, 2001; Stone et al., 2002; Tellis-Nayak, 2007). Yet without accurate measurement there is no clear information about strategies that work well and areas that present challenges. Enabling staff to participate in meaningful quality improvement activities represents a potentially powerful and inherently meaningful aspect of culture change. Using the procedures described in this article (i.e., cognitive interviewing), staff can become engaged in the first steps of such quality improvement projects. Numerous tools exist to measure various aspects of culture change (Bowman & Schoeneman, 2006; Edvardsson & Innes, 2010; Sullivan et al., 2013b; Zimmerman et al., 2015), but we are aware of none that have been adapted for use by the individual staff members involved in the day-to-day experience of changing a culture.

One finding from our cognitive interviews pointed to the importance of minimizing cognitive load for busy frontline staff. Before Round 1, we developed our draft of a staff-friendly version of the RAISE research tool with staff time constraints in mind. But we quickly learned that a tool we deemed relatively easy to use was, in fact, still much too difficult for staff to understand without a lengthy training period. This highlights a potential problem for researchers: accurately predicting what will be easy to use in the clinical setting without direct input from staff. Our misjudgment was borne out in staff members’ repeated suggestions across cognitive interviewing rounds. Learning which areas staff found problematic enabled us to revise the tool in ways we could not have predicted beforehand. Staff helped us understand the practical benefits of modularizing a longer, comprehensive instrument into smaller components that could be mastered and administered more quickly. This process also revealed useful information about the number of options staff find manageable when using rating and coding scales. What is now a hallmark of the RAISE set of tools, their focus on staff strengths, would also not have emerged had we not solicited staff members’ input.

Many participants commented on the tool's initial focus on deficits. They felt frontline staff would receive the tool unfavorably if it focused on areas in which they were not doing well, and they suggested reworking it to focus on areas of staff strength. The literature supports this contention. Studies in other settings show, for example, that strengths-based approaches to professional development can improve the work environment for clinicians and increase nurse retention (Challis, 2009; Vogt et al., 2015). Strengths-based approaches have also been used to overcome staff and organizational resistance to person-centered culture change in inpatient mental health care settings (Beckett et al., 2013). The strengths-based, or positive deviance, approach is also consistent with the mission of culture change, which is to focus on and celebrate individuals for their abilities and to promote harmonious relationships among staff and between staff and residents. Such nurturing environments lower the risk that residents will develop behavioral and psychological problems and have positive effects on resident well-being (Biglan, Flay, Embry & Sandler, 2012). Without end-user input, our tool would likely have remained deficit-focused.

The final round of cognitive interviews confirmed that staff felt the instruments helped them step back from daily tasks and gain a novel perspective on life in the CLC. Staff considered the tools clinically relevant, easy to use, and congruent with their own definitions of engagement and positive resident-staff interactions. Nursing staff, however, are typically not trained in measurement and may not be aware of potential sources of bias (e.g., reluctance to rate friends negatively, or inconsistent ratings of liked and disliked peers), and cognitive interviewing is not designed to probe such biases in depth. Nevertheless, our experience with staff using the instruments on the floor gave us confidence in their ability to understand the protocol and follow it consistently. Staff remarked that watching daily life as an observer rather than a participant gave them a valuable perspective on their practice. In further work beyond the scope of this paper, we used this set of tools in 6 CLCs with staff from varying disciplines as part of a comprehensive quality improvement program focused on improving resident-staff interactions and resident engagement. This CLC program is now being rolled out nationally across the VA.

Discussions about bias can be a valuable part of the overall quality improvement process, which would also include discussing the data, identifying successful practices, and brainstorming ways to spread those practices. The tools we developed through this process, however, are not designed for benchmarking. Rather, they provide input for unit-based quality improvement activities. The strengths-based approach does not track deficits numerically over time; instead, it uses the numeric data to pinpoint successful outliers. These processes are consistent with the mandate in the Patient Protection and Affordable Care Act (ACA) of 2010 to implement quality assurance and quality improvement programs that enable cross-discipline and cross-hierarchy problem solving and to develop and test improvement strategies.

Cognitive interviewing is well suited to adapting research instruments for use in quality improvement efforts. It is a well-established psychometric technique, and numerous guides describe how to conduct cognitive interviews (Presser et al., 2004; Willis, 2005). Small sample sizes are the norm, and iterative rounds of interviews can be completed in a relatively short time. Data analysis requires no special background in statistics, only attention to capturing all participants' viewpoints, preferably within a theoretical or analytic framework. Rigorous cognitive interviewing, while neither especially taxing nor time-consuming, can yield an instrument with a high probability of truly matching the needs of its intended population, particularly when, as in this study, saturation of responses is reached. That is, when successive rounds eventually yield no significant new information, one can be relatively confident that the instrument will be well understood by its intended users. For all these reasons, we recommend wider use of cognitive interviewing techniques to adapt research instruments for culture change and quality improvement.
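The saturation criterion described above can be made concrete with a small bookkeeping sketch. This is an illustration under stated assumptions, not the procedure used in this study: it assumes that each interview round has already been coded into a set of issue labels (the labels and the function name `reached_saturation` are hypothetical), and it treats a round as saturated when it contributes no label not already identified in earlier rounds.

```python
def reached_saturation(rounds: list[set[str]]) -> list[bool]:
    """For each round, report whether it added no issue codes beyond
    those identified in earlier rounds.

    Assumption: `rounds` holds one set of hypothetical issue codes per
    cognitive interviewing round, in chronological order.
    """
    seen: set[str] = set()     # codes identified in all earlier rounds
    flags: list[bool] = []
    for codes in rounds:
        new_codes = codes - seen          # codes first raised in this round
        flags.append(len(new_codes) == 0) # True if the round added nothing new
        seen |= codes                     # carry this round's codes forward
    return flags


# Hypothetical example: the third round raises nothing new.
rounds = [
    {"too_many_codes", "unclear_terms"},
    {"unclear_terms", "deficit_focus"},
    {"deficit_focus"},
]
print(reached_saturation(rounds))  # [False, False, True]
```

In practice, judging whether information is "significant" remains a qualitative decision by the analysts; a tally like this only records which concerns were new in each round.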

Although the methods employed in this study were more rigorous than those traditionally used in cognitive interviewing studies (Forsyth et al., 2004; Fowler, 1992; Willis, 2005), the findings still have some limitations. First, the study was conducted in VHA CLCs, which may limit the generalizability of specific results to non-VHA nursing homes. The general methods for simplifying a research instrument into a staff-friendly version for quality improvement, however, rely on techniques that are not context specific. Second, although we had more than enough participants for cognitive interviewing purposes, the sample was drawn from only two study sites, potentially limiting its representativeness. Again, this limitation pertains only to the specific results, not to the methods employed. Third, although the original RAISE tool has been established as psychometrically sound, the staff-friendly versions developed through the cognitive interviewing process have not undergone formal reliability or validity testing. Finally, future work of this kind would benefit from incorporating resident perspectives.

Culture change measurement is a relatively new field, and the use of measurement tools in quality improvement for culture change is still in its infancy. But great potential exists as these two areas merge, because the premise of incorporating end-user feedback is highly consistent with the culture change mission. Our work adapting an intricate and difficult culture change measurement tool into a set of easy-to-use, meaningful quality improvement tools for use by frontline staff in their day-to-day work provides a framework for similar adaptation of other instruments. Enabling staff and residents to be part of the process of developing the measures by which aspects of their lives will be assessed provides a strong foundation for any quality improvement work, and particularly that founded on the principles of culture change.

Contributor Information

Christine W. Hartmann, Edith Nourse Rogers Memorial VA Hospital, Bedford, Massachusetts, and Boston University

Jennifer A. Palmer, Edith Nourse Rogers Memorial VA Hospital, Bedford, Massachusetts

Whitney L. Mills, Michael E. DeBakey Veterans Affairs Medical Center, Houston, Texas, and Baylor College of Medicine

Camilla B. Pimentel, University of Massachusetts Medical School

Rebecca S. Allen, University of Alabama

Nancy J. Wewiorski, Edith Nourse Rogers Memorial VA Hospital, Bedford, Massachusetts

Kristen R. Dillon, Edith Nourse Rogers Memorial VA Hospital, Bedford, Massachusetts

A. Lynn Snow, Tuscaloosa Veterans Affairs Medical Center, Tuscaloosa, Alabama, and University of Alabama

References

- Allen RS, Burgio LD, Fisher SE, Hardin JM, Shuster JL. Behavioral characteristics of agitated nursing home residents with dementia at the end of life. The Gerontologist. 2005;45:661–666. doi: 10.1093/geront/45.5.661.

- Allen-Burge R, Burgio LD, Bourgeois MS, Sims R, Nunnikhoven J. Increasing communication among nursing home residents. Journal of Clinical Geropsychology. 2001;7:213–230.

- Beatty PC, Willis GB. Research synthesis: The practice of cognitive interviewing. Public Opinion Quarterly. 2007;71:287–312.

- Beckett P, Field J, Molloy L, Yu N, Holmes D, Pile E. Practice what you preach: Developing person-centered culture in inpatient mental health settings through strengths-based, transformational leadership. Issues in Mental Health Nursing. 2013;34:595–601. doi: 10.3109/01612840.2013.790524.

- Biemer PP, Lyberg LE. Introduction to survey quality. Hoboken, NJ: John Wiley & Sons, Inc; 2003.

- Biglan A, Flay BR, Embry DD, Sandler IN. The critical role of nurturing environments for promoting human well-being. American Psychologist. 2012;67:257–271. doi: 10.1037/a0026796.

- Blinded for peer review.

- Bowman CS, Schoeneman K. Development of the Artifacts of Culture Change Tool. Washington, DC: Centers for Medicare & Medicaid Services; 2006. (Report of Contract HHSM-500-2005-00076P).

- Burack OR, Weiner AS, Reinhardt JP. The impact of culture change on elders’ behavioral symptoms: A longitudinal study. Journal of the American Medical Directors Association. 2012;13:522–528. doi: 10.1016/j.jamda.2012.02.006.

- Burgio LD, Allen-Burge R, Roth DL, Bourgeois MS, Dijkstra K, Gerstle J, Bankester L. Come talk with me: Improving communication between nursing assistants and nursing home residents during care routines. The Gerontologist. 2001;41:449–460. doi: 10.1093/geront/41.4.449.

- Challis AM. An appreciative inquiry approach to RN retention. Nursing Management. 2009;40(7):9–13. doi: 10.1097/01.NUMA.0000357795.15645.1c.

- Chang YP, Li J, Porock D. The effect on nursing home resident outcomes of creating a household within a traditional structure. Journal of the American Medical Directors Association. 2013;14:293–299. doi: 10.1016/j.jamda.2013.01.013.

- Colorado Foundation for Medical Care. Measuring culture change: Literature review. 2006. Retrieved from http://www.cfmc.org/files/nh/MCC%20Lit%20Review.pdf.

- Craft Morgan J, Haviland SB, Woodside MA, Konrad TR. Fostering supportive learning environments in long-term care: The case of WIN A STEP UP. Gerontology and Geriatrics Education. 2007;28(2):55–75. doi: 10.1300/J021v28n02_05.

- Deutschman M. Interventions to nurture excellence in the nursing home culture. Journal of Gerontological Nursing. 2001;27(8):37–43. doi: 10.3928/0098-9134-20010801-11.

- Edvardsson D, Innes A. Measuring person-centered care: A critical comparative review of published tools. The Gerontologist. 2010;50:834–846. doi: 10.1093/geront/gnq047.

- Forsyth B, Rothgeb J, Willis G. Does pretesting make a difference? New York, NY: John Wiley & Sons, Inc; 2004.

- Fowler FJ Jr. How unclear terms affect survey data. Public Opinion Quarterly. 1992;56(2):218–231. doi: 10.1086/269312.

- Gaudet TW. VA’s new office of patient centered care represents an ongoing commitment to transform VA’s healthcare culture. 2011. Retrieved from http://www.prevention.va.gov/Publications/Newsletters/2011/HealthPOWER_Prevention_News_Spring_2011_Feature_Article.asp.

- Gilbart EE, Hirdes JP. Stress, social engagement and psychological well-being in institutional settings: Evidence based on the Minimum Data Set 2.0. Canadian Journal on Aging. 2000;19(Supplement 2):50–66.

- Grabowski DC, O’Malley AJ, Afendulis CC, Caudry DJ, Elliot A, Zimmerman S. Culture change and nursing home quality of care. The Gerontologist. 2014;54(S1):S35–S45. doi: 10.1093/geront/gnt143.

- Grant LA. Culture change in a for-profit nursing home chain: An evaluation. New York, NY: Commonwealth Fund; 2008.

- Hill NL, Kolanowski AM, Milone-Nuzzo P, Yevchak A. Culture change models and resident health outcomes in long-term care. Journal of Nursing Scholarship. 2011;43(1):30–40. doi: 10.1111/j.1547-5069.2010.01379.x.

- Kane RA, Lum TY, Cutler LJ, Degenholtz HB, Yu TC. Resident outcomes in small-house nursing homes: A longitudinal evaluation of the initial Green House program. Journal of the American Geriatrics Society. 2007;55:832–839. doi: 10.1111/j.1532-5415.2007.01169.x.

- Kiely DK, Simon SE, Jones RN, Morris JN. The protective effect of social engagement on mortality in long-term care. Journal of the American Geriatrics Society. 2000;48:1367–1372. doi: 10.1111/j.1532-5415.2000.tb02624.x.

- Levine RE, Fowler FJ Jr, Brown JA. Role of cognitive testing in the development of the CAHPS Hospital Survey. Health Services Research. 2005;40:2037–2056. doi: 10.1111/j.1475-6773.2005.00472.x.

- McKee KJ, Houston DM, Barnes S. Methods for assessing quality of life and well-being in frail older people. Psychology & Health. 2002;17:737–751.

- Meeks S, Shah SN, Ramsey SK. The Pleasant Events Schedule—nursing home version: A useful tool for behavioral interventions in long-term care. Aging & Mental Health. 2009;13:445–455. doi: 10.1080/13607860802534617.

- Meeks S, Looney SW. Depressed nursing home residents’ activity participation and affect as a function of social engagement. Behavior Therapy. 2011;42:22–29. doi: 10.1016/j.beth.2010.01.004.

- National Quality Foundation. Priority setting for healthcare performance measurement: Addressing performance measure gaps in person-centered care and outcomes. 2014. Retrieved from http://www.qualityforum.org/Publications/2014/08/Priority_Setting_for_Healthcare_Performance_Measurement__Addressing_Performance_Measure_Gaps_in_Person-Centered_Care_and_Outcomes.aspx.

- Presser S, Rothgeb JM, Couper MP, Lessler JT, Martin E, Martin J, Singer E. Methods for testing and evaluating survey questionnaires. Hoboken, NJ: John Wiley & Sons, Inc; 2004.

- Schreiner AS, Yamamoto E, Shiotani H. Positive affect among nursing home residents with Alzheimer’s dementia: The effect of recreational activity. Aging & Mental Health. 2005;9:129–134. doi: 10.1080/13607860412331336841.

- Shier V, Khodyakov D, Cohen LW, Zimmerman S, Saliba D. What does the evidence really say about culture change in nursing homes? The Gerontologist. 2014;54:S6–S16. doi: 10.1093/geront/gnt147.

- Stone RI, Reinhard SC, Bowers B, Zimmerman D, Phillips CD, Hawes C, Jacobson N. Evaluation of the Wellspring model for improving nursing home quality. New York, NY: The Commonwealth Fund; 2002.

- Sullivan JL, Shwartz M, Burgess JF, Peko EA, Christiansen CL, Genera-Melia M, Berlowitz D. Person-centered care practices and quality in Department of Veterans Affairs nursing homes: Is there a relationship? Medical Care. 2013a;51:165–171. doi: 10.1097/MLR.0b013e3182763230.

- Sullivan JL, Meterko M, Baker E, Stolzmann J, Adjognon O, Ballah K, Parker VA. Reliability and validity of a person-centered care staff survey in Veterans Health Administration Community Living Centers. The Gerontologist. 2013b;53:596–607. doi: 10.1093/geront/gns140.

- Tellis-Nayak V. A person-centered workplace: The foundation for person-centered caregiving in long-term care. Journal of the American Medical Directors Association. 2007;8:46–54. doi: 10.1016/j.jamda.2006.09.009.

- U.S. Department of Veterans Affairs. Criteria and standards for VA Community Living Centers. 2008. Retrieved from http://www.va.gov/vhapublications/publications.cfm?pub=2&order=asc&orderby=pub_Number.

- Van Haitsma K, Crespy S, Humes S, Elliot A, Mihelic A, Scott C, Abbott K. New toolkit to measure quality of person-centered care: Development and pilot evaluation with nursing home communities. Journal of the American Medical Directors Association. 2014;15:671–680. doi: 10.1016/j.jamda.2014.02.004.

- Van Haitsma K, Abbott KM, Heid AR, Spector A, Eshraghi KL, Duntzee C, Van Valkenburgh-Schultz M. Honoring nursing home resident preferences for recreational activities to advance person-centered care. Annals of Long-Term Care: Clinical Care and Aging. 2016;24(2):25–33.

- Vogt K, Johnson F, Fraser V, Koh JC, McQueen K, Thornhill J, Verbowski V. An innovative, strengths-based, peer mentoring approach to professional development for registered dieticians. Perspectives in Practice. 2015;76:185–189. doi: 10.3148/cjdpr-2015-027.

- Willis GB. Cognitive interviewing: A tool for improving questionnaire design. Thousand Oaks, CA: Sage Publications, Inc; 2005.

- Wood W, Womack J, Hooper B. Dying of boredom: An exploratory case study of time use, apparent affect, and routine activity situations on two Alzheimer’s special care units. American Journal of Occupational Therapy. 2009;63:337–350. doi: 10.5014/ajot.63.3.337.

- Zimmerman S, Allen J, Cohen LW, Pinkowitz J, Reed D, Coffey WO, Sloane PD. A measure of person-centered practices in assisted living: The PC-PAL. Journal of the American Medical Directors Association. 2015;16:132–137. doi: 10.1016/j.jamda.2014.07.016.