Supplemental digital content is available in the text.

Key Words: Simulation training, mechanical ventilation, computer simulations, acute respiratory failure, critical care

Abstract

Introduction

Advances in knowledge regarding mechanical ventilation (MV), in particular lung-protective ventilation strategies, have been shown to reduce mortality. However, the translation of these advances in knowledge into better therapeutic performance in real-life clinical settings continues to lag. High-fidelity simulation with a mannequin allows students to interact in lifelike situations; this may be a valuable addition to traditional didactic teaching. The purpose of this study is to compare computer-based and mannequin-based approaches for training residents on MV.

Methods

This prospective randomized single-blind trial involved 50 residents. All participants attended the same didactic lecture on respiratory pathophysiology and were subsequently randomized into two groups: the mannequin group (n = 25) and the computer screen–based simulator group (n = 25). One week later, each group underwent a training assessment using five scenarios of acute respiratory failure of different etiologies. Three weeks later, both groups underwent further testing of patient management, using in situ high-fidelity simulation of a patient with acute respiratory distress syndrome.

Results

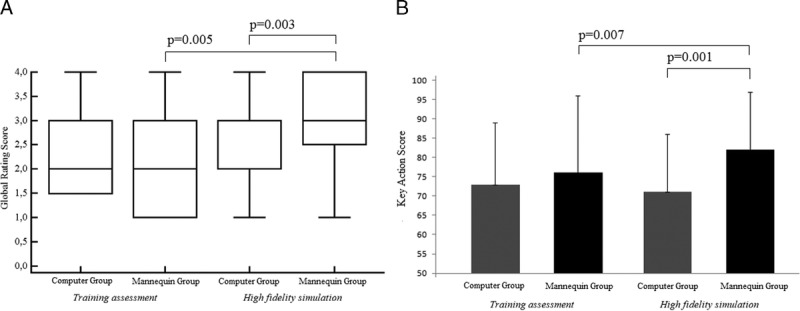

Baseline knowledge was not significantly different between the two groups (P = 0.72). No significant differences were detected between the groups in the training assessment. In the final assessment, the mannequin group scored significantly higher than the computer group in both global rating score [3.0 (2.5–4.0) vs. 2.0 (2.0–3.0), P = 0.005] and percentage of key action score (82% vs. 71%, P = 0.001), and only the mannequin group improved significantly between the training and final sessions.

Conclusions

Mannequin-based simulation has the potential to improve skills in managing MV.

In recent years, advances in knowledge regarding mechanical ventilation (MV), in particular lung-protective ventilation strategies with low tidal volume, have been shown to reduce mortality.1 However, despite positive clinical trials and widespread access to recommendations, the translation of these advances into better therapeutic performance in real-life clinical settings continues to lag. Indeed, acute respiratory distress syndrome (ARDS) is underrecognized,2 and protective ventilation is therefore underused.3 Needham et al4 recently found that only 41% of the observed ventilator settings in 13 intensive care units were compliant with low tidal volume ventilation guidelines for ARDS. Similarly, in a large observational study, Bellani et al5 found that ARDS was undertreated in terms of both recommended approaches to MV and adjunctive measures, and that in-hospital mortality remained unacceptably high. Even agreement among physicians appears to be low: when presented with the same patient cases, ten experienced Danish doctors selected different ventilator settings, and 33% considered the settings selected by other doctors to be unacceptable.6 These findings highlight the need for greater compliance with the recommendations of the ARDS Network and the importance of enhancing the training of clinical residents.
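Compliance with low tidal volume ventilation is usually judged as tidal volume per kilogram of predicted body weight (PBW). The sketch below uses the ARDS Network PBW formula, the 6 mL/kg PBW target, and a plateau pressure ceiling of 30 cmH2O. The function names and the 6.5 mL/kg adherence cutoff are illustrative assumptions, not the definition of compliance used by Needham et al4.

```python
from typing import Optional

# Minimal sketch (assumption): screening one ventilator setting against ARDS Network
# low tidal volume targets. The 6.5 mL/kg adherence cutoff is a hypothetical parameter.


def predicted_body_weight_kg(height_cm: float, male: bool) -> float:
    """ARDS Network predicted body weight (PBW) in kg."""
    base = 50.0 if male else 45.5
    return base + 0.91 * (height_cm - 152.4)


def target_tidal_volume_ml(height_cm: float, male: bool, ml_per_kg: float = 6.0) -> float:
    """Tidal volume in mL at the given mL/kg PBW (6 mL/kg by default)."""
    return ml_per_kg * predicted_body_weight_kg(height_cm, male)


def is_lung_protective(set_vt_ml: float, height_cm: float, male: bool,
                       plateau_cmh2o: Optional[float] = None,
                       max_ml_per_kg: float = 6.5,
                       max_plateau_cmh2o: float = 30.0) -> bool:
    """True if tidal volume per kg PBW and, when measured, plateau pressure are within limits."""
    vt_per_kg = set_vt_ml / predicted_body_weight_kg(height_cm, male)
    if vt_per_kg > max_ml_per_kg:
        return False
    if plateau_cmh2o is not None and plateau_cmh2o > max_plateau_cmh2o:
        return False
    return True
```

For a 175 cm man, PBW is about 70.6 kg, so the 6 mL/kg target is about 423 mL; a set tidal volume of 500 mL (about 7.1 mL/kg PBW) would fail the check.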

Simulation-based training may be a valuable addition to traditional didactic teaching.7–9 Several studies have described the benefits of simulation-based training on physician performance in clinical areas such as critical intraoperative events,10,11 airway management,12 noncardiac critical care ultrasonography,13,14 and obstetric emergencies.15 However, few studies have investigated the use of simulation training for teaching MV, and the existing studies report mixed results.16–18 Simulation-based education was shown to be more effective than traditional teaching16 and comparable with a live-animal laboratory.17 However, not all researchers reached the same conclusion: Corbridge et al18 found no significant improvement when comparing simulation-based education with traditional didactic education. Moreover, it is unclear whether training using computer-based simulation provides the same benefit as the more expensive, lifelike, mannequin-based simulation.

The purpose of the present study was therefore to compare two approaches to simulation-based training in MV: computer based and mannequin based. Our hypothesis was that introducing the mannequin into training would provide a more interactive learning experience than computer-based training, which would translate into better assessment and management performance. We also developed two new assessments of MV skills: a training assessment consisting of different clinical scenarios aimed at specific MV problems, and a final assessment representing a complex clinical case of ARDS. Instructors assessed performance during simulation by evaluating key actions and the overall resolution of each scenario. For the final assessment of the two groups, we implemented a high-fidelity mannequin-based simulation system, using a mannequin with an integrated lung simulator capable of changing pulmonary resistance and compliance.

MATERIALS AND METHODS

Study Design

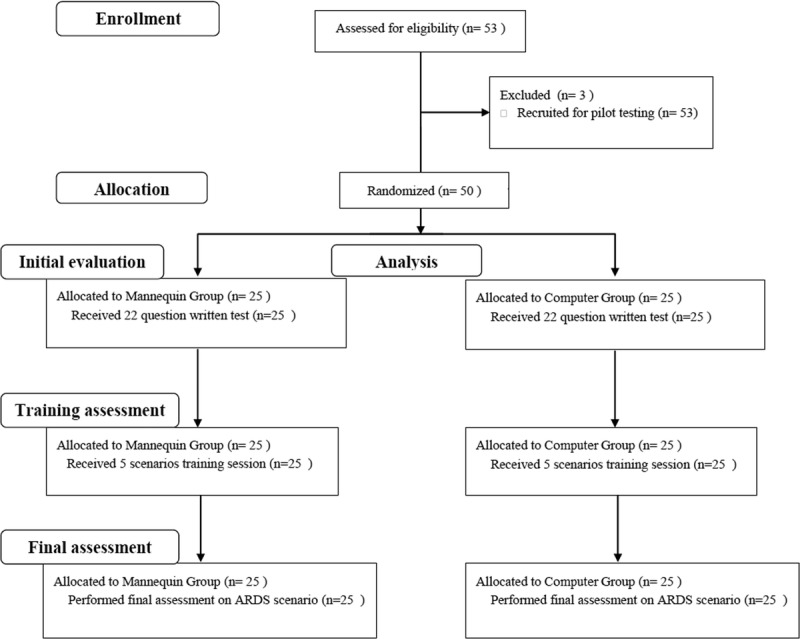

A prospective randomized trial was performed at the Simulation Centre of the University of Ferrara (Italy) from January 2013 to April 2013, evaluating the training outcome of 53 anesthesiology residents. As summarized in Figure 1, all participants attended the same didactic lecture session on respiratory pathology and respiratory failure applied to MV, which was specifically aimed at creating a homogeneous background of knowledge. During the didactic lecture, the learning objectives for the entire study period were explained; of note, these learning objectives were the main topics of the lecture (management of chronic obstructive pulmonary disease [COPD], near-fatal asthma, and ARDS). No further learning objectives were given before the final assessment, to avoid influencing results through independent study by the residents. Residents were then randomized into two groups: the mannequin group (MG), to be trained on the mannequin-based simulator, and the computer group (CG), to be trained on the computer screen–based simulator. One week later, both groups performed simulation training, with the MG and CG each being exposed to five different training scenarios (see Appendix, Supplemental Digital Content (SDC) 1, http://links.lww.com/SIH/A335, which contains all scenarios explained in detail). During the training scenarios, the instructors assessed the skills of each participant (training assessment). Training was followed by a debriefing session for both groups, aimed at discussing correct actions and the best approaches to solving each scenario. Three weeks later (with no additional clinical or theoretical training), both groups underwent a final skills assessment using in situ high-fidelity simulation (HFS), administered individually. All participants were challenged with the same ARDS scenario, this time in a vacant room in the intensive care area of the emergency department.
The study protocol was approved by our institutional ethics committee (protocol number 161088), and residents provided written informed consent before participating.

FIGURE 1.

Flowchart of the study.

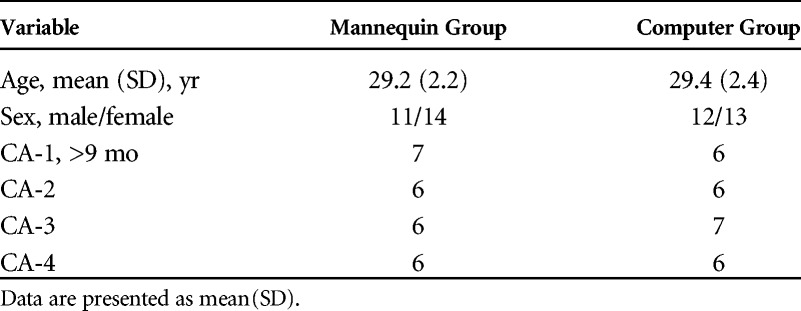

The study cohort comprised anesthesiology residents from junior to senior year, at clinical anesthesia (CA) levels CA-1 to CA-4 (Table 1). Each resident spent 2 months in the intensive care unit (ICU) for each year of residency. All trainees knew that the purpose of the training was to improve their ability to manage MV. Participation was not mandatory, but all recruited residents participated in the study. However, the three residents recruited for pilot testing were not included in the study, leaving 50 residents. Residents were allocated to the MG or CG by computer-generated randomization stratified by year of residency, to ensure an equal distribution of less and more experienced residents in each group.
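Randomization stratified by year of residency can be sketched as follows: residents are shuffled within each year, then alternated between arms. This is a minimal illustration under assumed inputs (resident IDs paired with a year); the article does not describe the software or algorithm actually used, and the function name is hypothetical.

```python
import random
from collections import defaultdict


def stratified_randomize(residents, seed=None):
    """Assign residents to 'MG' or 'CG' within strata defined by year of residency.

    residents: iterable of (resident_id, year) pairs (hypothetical format).
    Returns a dict mapping resident_id -> arm.
    """
    rng = random.Random(seed)  # seeded generator makes the allocation reproducible
    strata = defaultdict(list)
    for resident_id, year in residents:
        strata[year].append(resident_id)

    allocation = {}
    for year in sorted(strata):
        ids = list(strata[year])
        rng.shuffle(ids)  # random order within the stratum
        # Randomly choose which arm receives the extra resident in odd-sized strata.
        arms = ["MG", "CG"]
        rng.shuffle(arms)
        for i, resident_id in enumerate(ids):
            allocation[resident_id] = arms[i % 2]
    return allocation
```

Within each stratum the arms differ by at most one resident; across strata the totals can differ by up to the number of odd-sized strata, so a real allocation scheme may need an extra balancing step to reach an exact 25/25 split.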

TABLE 1.

Demographic Characteristics of Participating Residents

Baseline Assessment

After the didactic lecture, but before simulation training, we administered a test to assess whether the two groups of residents had equal distributions of knowledge and training. The test consisted of 22 multiple choice questions that covered principles of MV (see Appendix, SDC 2, http://links.lww.com/SIH/A336, for the complete questionnaire).

Simulation Training

The CG was trained on a free online software program, Virtual MV v4.0 (Mechanical Ventilation Simulator), a Microsoft Excel 2007 tool developed by Iotti et al.19 Virtual MV allowed residents to set up the ventilator in the presence of various diseases, modifying both respiratory mechanics (resistance and compliance) and ventilator settings (tidal volume, respiratory rate, and inspiratory and expiratory pause). Any resulting changes in the respiratory variables, including pressure, flow, and volume traces, were displayed on the computer screen. This allowed residents to practice calculating dynamic and static compliance, resistance, and intrinsic positive end-expiratory pressure (PEEP) simply by pushing the hold button on the worksheet.
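The calculations residents practiced with the hold maneuvers follow standard bedside formulas for volume-controlled, constant-flow ventilation. The sketch below is illustrative and does not reproduce the Virtual MV worksheet; the data structure and names are hypothetical, and conventions differ on whether dynamic compliance subtracts set or total PEEP (total PEEP is assumed here).

```python
from dataclasses import dataclass


@dataclass
class HoldReadings:
    """Readings from inspiratory/expiratory hold maneuvers (hypothetical structure)."""
    tidal_volume_ml: float
    peak_pressure: float       # Ppeak, cmH2O
    plateau_pressure: float    # Pplat at end-inspiratory hold, cmH2O
    set_peep: float            # PEEP set on the ventilator, cmH2O
    total_peep: float          # total PEEP at end-expiratory hold, cmH2O
    insp_flow_l_s: float       # constant inspiratory flow, L/s


def respiratory_mechanics(r: HoldReadings) -> dict:
    """Single-breath respiratory mechanics under volume control with constant flow."""
    return {
        # Intrinsic PEEP: pressure above set PEEP still present at end-expiration
        "peep_i": r.total_peep - r.set_peep,
        # Static compliance (mL/cmH2O): uses the plateau, ie, elastic pressure only
        "c_stat": r.tidal_volume_ml / (r.plateau_pressure - r.total_peep),
        # Dynamic compliance (mL/cmH2O): uses the peak, so it includes resistive pressure
        "c_dyn": r.tidal_volume_ml / (r.peak_pressure - r.total_peep),
        # Inspiratory resistance (cmH2O/L/s): resistive pressure drop divided by flow
        "resistance": (r.peak_pressure - r.plateau_pressure) / r.insp_flow_l_s,
    }
```

For example, with a 500 mL breath, Ppeak 30, Pplat 25, set PEEP 5, total PEEP 8 cmH2O, and a flow of 1 L/s, intrinsic PEEP is 3 cmH2O, static compliance about 29 mL/cmH2O, dynamic compliance about 23 mL/cmH2O, and resistance 5 cmH2O/L/s.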

Simulation for the MG was performed using a SimMan mannequin and Advanced Video System (Laerdal, Stavanger, Norway) and a Servo 300 ventilator (Siemens-Elema, Solna, Sweden).

Training Assessment

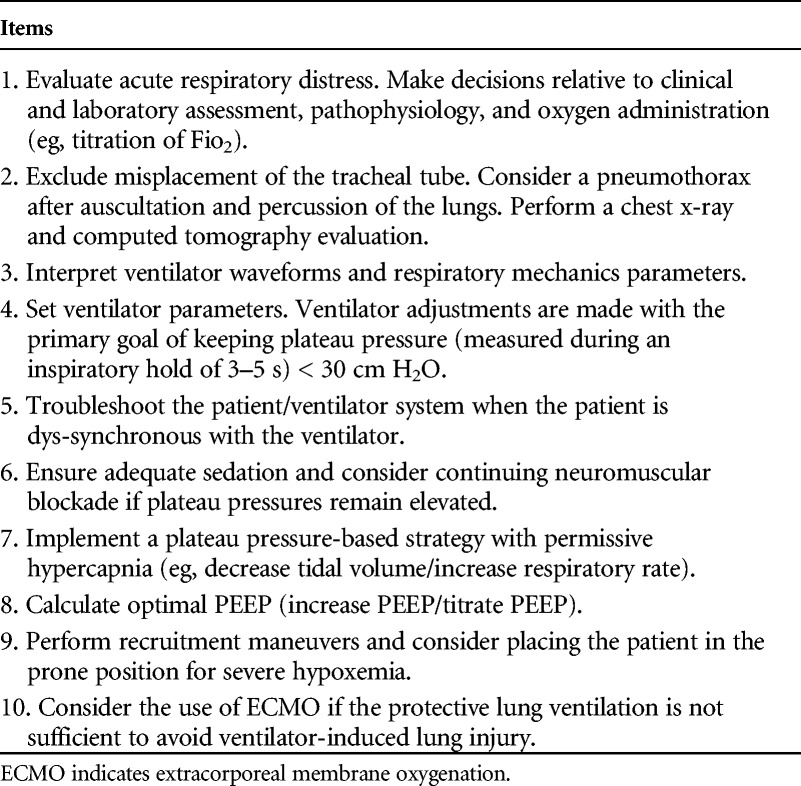

Performance in managing the training scenarios (training assessment) was evaluated by two instructors (C.V. and R.R.), with each scenario covering tasks reflecting relevant areas of best practice in MV. All instructors involved in the study were anesthetists with at least 10 years of ICU experience. Scenarios were presented to each group in random order, and 15 minutes were allowed for completion, followed by a 15-minute debriefing.20,21 The presentation of each scenario given by the instructors is provided in Appendix 1. After the presentation, each resident performed the scenario individually. All residents performed all five scenarios and participated in the respective debriefings. Scenarios were specifically designed to cover most of the items necessary for the final assessment (ie, titration of the fraction of inspired oxygen [FiO2] to improve oxygenation, titration of optimal PEEP to ensure alveolar recruitment and stabilization, calculation of static compliance [using PPlat and PEEPi], and use of recruitment maneuvers to open collapsed lung regions and improve oxygenation) (Table 2).
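One common bedside way to titrate PEEP, shown here only as an illustration, is a decremental PEEP trial in which the PEEP step with the highest static compliance is selected. The article does not state which titration method the scenarios expected; the data format and function names below are hypothetical.

```python
def static_compliance(vt_ml: float, plateau: float, peep: float) -> float:
    """Static compliance in mL/cmH2O."""
    return vt_ml / (plateau - peep)


def best_compliance_peep(steps, vt_ml):
    """Return (peep, compliance) for the step with the highest static compliance.

    steps: list of (peep, plateau) pairs in cmH2O recorded at a fixed tidal
    volume during a decremental PEEP trial (hypothetical data format).
    Ties keep the first step listed, ie, the higher PEEP in a decremental trial.
    """
    best_peep, best_c = None, float("-inf")
    for peep, plateau in steps:
        c = static_compliance(vt_ml, plateau, peep)
        if c > best_c:
            best_peep, best_c = peep, c
    return best_peep, best_c
```

With a 400 mL tidal volume and hypothetical steps of PEEP 20, 16, 12, and 8 cmH2O giving plateaus of 40, 34, 29, and 27 cmH2O, compliance peaks at PEEP 12 cmH2O (about 23.5 mL/cmH2O).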

TABLE 2.

Scoring System for the Final Assessment Test Scenario on Refractory Hypoxemia

The performance of each resident during training was assessed by a key action score and a global rating score (GRS). The design of the key action score was inspired by the specific items investigated by Cox et al.22 These actions reflect guidelines that are generally accepted both universally and locally. The score reflected the number of correct actions performed (diagnosis, initial treatment, and final treatment). Key actions, that is, those needed to achieve successful patient management, were identified by an expert panel that provided a checklist used to create training scenarios assessing resident skills.22 The checklist for each scenario included ten items that instructors recorded as done or not done, yielding one point or no points, respectively. The SDC shows the key action score checklist for the five training scenarios.
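The checklist scoring described above (ten items, one point per item done) and its conversion to the percentage reported in the Results can be sketched as follows. The item labels and function name are hypothetical; the actual checklist items are in the SDC.

```python
def key_action_score(checklist, expected_items=10):
    """Score a checklist: 1 point per item done, 0 per item not done.

    checklist: dict mapping item description -> bool (done / not done).
    Returns (points, percentage of items done).
    """
    if len(checklist) != expected_items:
        raise ValueError(f"expected {expected_items} items, got {len(checklist)}")
    points = sum(1 for done in checklist.values() if done)
    return points, 100.0 * points / len(checklist)
```

A resident who completes 8 of the 10 items receives 8 points, ie, a key action score of 80%.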

The GRS was used to assess overall performance (both technical and nontechnical skills) with instructors using a 1 to 4 scale (1 = poor: problem not identified; 2 = marginal: problem identified but not solved; 3 = acceptable: problem identified and partially solved; and 4 = good: problem identified and completely solved). As an overall score, the GRS addressed the participant's ability, attitude, and confidence in identifying and solving the problem.
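The four GRS anchors form a simple decision rule, sketched below. This captures only the written anchor descriptions; the raters' holistic judgment of ability, attitude, and confidence is not modeled, and the string encoding of "resolution" is a hypothetical choice.

```python
from enum import IntEnum


class GRS(IntEnum):
    POOR = 1        # problem not identified
    MARGINAL = 2    # problem identified but not solved
    ACCEPTABLE = 3  # problem identified and partially solved
    GOOD = 4        # problem identified and completely solved


def grs_anchor(identified: bool, resolution: str) -> GRS:
    """Map the rubric anchors to a 1-4 score.

    resolution: 'none', 'partial', or 'complete' (hypothetical encoding);
    ignored when the problem was not identified.
    """
    if not identified:
        return GRS.POOR
    return {"none": GRS.MARGINAL, "partial": GRS.ACCEPTABLE, "complete": GRS.GOOD}[resolution]
```

Using IntEnum keeps the scores ordinal integers, so they can be summarized with medians and interquartile ranges as in the Results.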

Final Assessment

Three weeks after performing the training scenarios, participants from both groups underwent a final assessment using HFS in situ, as individual 20-minute sessions. All the residents continued their usual clinical practice between training and final assessment, without other theoretical or practical training. In situ HFS was conducted with a SimMan (Laerdal Corporation, Stavanger, Norway) mannequin placed in an intensive care unit bed, within a fully equipped intensive care room. To obtain an HFS environment, we equipped the mannequin with a lung simulator (Adult/Pediatric Lung Model; Pulmonary Mechanics Graphics Module PMG 3000; and SB2000 Spontaneous Breathing Module, Pittsburgh, Pa) (Fig. 2). The simulator allowed changes in compliance and resistance, and it could trigger the ventilator and display flow, volume, and pressure curves by connection via RS-232 to a personal computer. The system was equipped with software for data acquisition and could display loops and waveforms. As such, the feedback from the mannequin equipped with the lung simulator was new for both MG and CG participants. The mannequin's clinical parameters (blood pressure, electrocardiography, oxygen saturation, and expired carbon dioxide) were displayed on a monitor (Elo Touch Solutions, Milpitas, Calif). To avoid favoring the MG, who had trained on the Servo 300 ventilator, the ICU bed was equipped with a Dräger Evita XL ventilator (Dräger Medical AG & Co, Lübeck, Germany).
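How changes in compliance and resistance on the lung simulator show up on the ventilator can be illustrated with the single-compartment equation of motion, P = V/C + R × flow + PEEP. This is a minimal sketch assuming a linear model and constant inspiratory flow; it is not the vendor's implementation, and the function names are hypothetical.

```python
def volume_control_pressures(vt_ml, flow_l_s, compliance_ml_cmh2o,
                             resistance_cmh2o_l_s, peep_cmh2o):
    """Peak and plateau airway pressure (cmH2O) for a constant-flow breath
    in a single-compartment linear lung model: P = V/C + R*flow + PEEP."""
    elastic = vt_ml / compliance_ml_cmh2o          # pressure to distend the lung
    resistive = resistance_cmh2o_l_s * flow_l_s    # pressure to drive flow through airways
    peak = elastic + resistive + peep_cmh2o        # end-inspiration, flow still on
    plateau = elastic + peep_cmh2o                 # end-inspiratory hold, flow = 0
    return peak, plateau


def pressure_trace(vt_ml, flow_l_s, compliance_ml_cmh2o,
                   resistance_cmh2o_l_s, peep_cmh2o, steps=10):
    """Airway pressure sampled at `steps` points during constant-flow inflation."""
    t_insp = vt_ml / (flow_l_s * 1000.0)           # inflation time in seconds
    trace = []
    for i in range(1, steps + 1):
        t = t_insp * i / steps
        volume_ml = flow_l_s * 1000.0 * t          # volume delivered so far
        p = volume_ml / compliance_ml_cmh2o + resistance_cmh2o_l_s * flow_l_s + peep_cmh2o
        trace.append((t, p))
    return trace
```

With a 500 mL breath at 0.5 L/s, resistance 10 cmH2O/L/s, and PEEP 5 cmH2O, halving compliance from 50 to 25 mL/cmH2O raises peak and plateau pressures from 20 and 15 to 30 and 25 cmH2O, the kind of pattern a low-compliance ARDS setting produces.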

FIGURE 2.

High-fidelity simulation in situ.

Before the assessment, residents were allowed to familiarize themselves with the equipment. The assessment started with a briefing on the patient's history and physical condition (see Appendix, SDC 3, http://links.lww.com/SIH/A337, for a description of the final assessment scenario). The instructors were present in the room during the assessment. All residents were instructed to ask for any examination, analysis, or device not present in the room. Key action score and GRS were used to assess resident performance as in the training scenarios (Table 2). All residents were evaluated by two raters (S.S. and E.M.), both blinded to study group. Scoring was supported by digital audio and video recording of each participant's performance, using a four-quadrant screen with two different video views of the provider and the mannequin. The raters were able to review the recorded videos when assigning the final score.

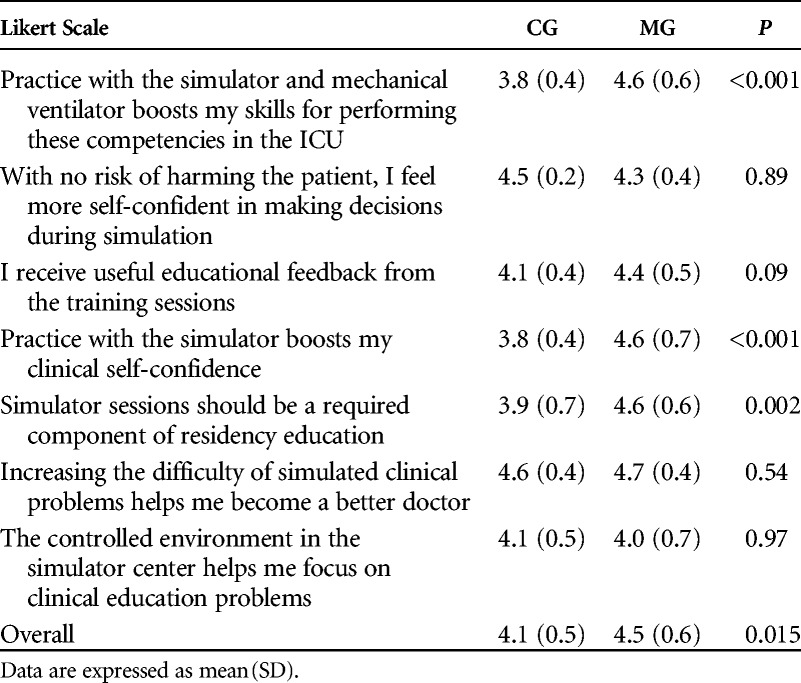

Resident Evaluation of Training

Residents' opinions about the usefulness of the simulation were assessed using an online survey at the end of the final assessment. To avoid bias, opinions were collected before the residents were told their key action score and GRS. Responses were recorded on a Likert-type scale (1 = strongly disagree, 2 = disagree, 3 = uncertain, 4 = agree, and 5 = strongly agree). A Likert-type scale was also used to assess perceived satisfaction with the type of simulation training.

Statistical Analysis

A convenience sample of 50 residents was chosen, including all anesthesiology residents from our department. The normality of the variables was tested using the Chen-Shapiro test. All variables were expressed as mean (SD) or as median (interquartile range), when appropriate. A P value of 0.05 or less was considered statistically significant for all tests.

At baseline assessment, the difference in knowledge between CG and MG was evaluated using two-tailed independent-samples t tests. Key action score and GRS were compared between groups using independent-samples t tests for normally distributed data or Mann–Whitney tests for nonnormally distributed data. Paired t tests (for normally distributed data) or Wilcoxon signed-rank tests (for nonnormally distributed data) were used to test for improvement in key action score and GRS from the training to the final assessment within the MG and CG.
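For readers unfamiliar with the Mann–Whitney test used for nonnormally distributed scores, a standard-library sketch follows. It uses the normal approximation with a tie correction and no continuity correction, so p-values will differ slightly from exact or continuity-corrected implementations; for real analyses a vetted package such as SciPy's `scipy.stats.mannwhitneyu` is preferable. Function names are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected,
    no continuity correction). Returns (U for x, z, p)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2   # U statistic for the first sample
    mu = n1 * n2 / 2
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    var = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var <= 0:
        raise ValueError("all observations are tied; the test is undefined")
    z = (u1 - mu) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))       # two-sided p = 2 * (1 - Phi(|z|))
    return u1, z, p
```

Ordinal GRS values produce many ties, which is why the tie correction matters for data like those in this study.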

Validity Evidence for Training and Final Assessments

As noted above, we developed two new assessments, the training and final assessments. We gathered the following validity evidence in accordance with Kane's framework.23 The purpose of the assessments, as also described in SDC 1, was correct diagnosis and treatment using appropriate MV settings. We aimed to establish scoring validity through the description of the procedures involved, the descriptions of the key action score and GRS, and the level of expertise of the instructors performing the rating. These details are provided in the Methods section as well as in SDC 1 and SDC 3 for the training and final assessment, respectively. Furthermore, we established generalization validity through our sampling strategy and by empirically evaluating the interrater reliability of the GRSs assigned by the two training assessment instructors and by the two final assessment raters. The interrater reliability of the raters' GRSs was evaluated using the intraclass correlation coefficient (ICC) and Cohen κ, in accordance with Cicchetti,24 as well as Cronbach α coefficient. We considered ICCs greater than 0.75 to indicate excellent agreement, whereas κ had to be 0.80 or higher for a scale to be considered reliable, in accordance with Altman.25 We established extrapolation evidence by providing authentic clinical cases in the training and final assessment scenarios, with authenticity differing by design between MG and CG. We gathered empirical extrapolation evidence using linear regression analysis. Univariate linear regression was performed to assess the influence of the training scenario (1–5) on key action score and GRS at the training assessment, as well as the influence of year of residency (CA-1 to CA-4) on key action score and GRS at the training and final assessments. Pass/fail considerations were outside the scope of our study; hence, we collected no evidence for implication validity.
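The two interrater statistics named above can be computed as sketched below. The article does not state which ICC form was used, so ICC(2,1) (two-way random effects, absolute agreement, single rater, in the Shrout and Fleiss notation) is an assumption; data formats and function names are hypothetical.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical scores to the same cases."""
    n = len(rater_a)
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance from each rater's marginal frequencies
    p_expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    return (p_observed - p_expected) / (1 - p_expected)


def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: list of rows, one per subject, each with one score per rater.
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ms_rows = k * sum((m - grand) ** 2 for m in row_means) / (n - 1)   # between subjects
    ms_cols = n * sum((m - grand) ** 2 for m in col_means) / (k - 1)   # between raters
    sse = sum(
        (ratings[i][j] - row_means[i] - col_means[j] + grand) ** 2
        for i in range(n) for j in range(k)
    )
    ms_error = sse / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
```

Because ICC(2,1) measures absolute agreement, a rater who consistently scores one point higher than the other lowers the coefficient even though the rankings agree perfectly; a consistency-type ICC would not penalize that offset.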

RESULTS

Resident Characteristics and Baseline Knowledge Assessment

All 50 residents consented to participate in the study and completed the protocol. Demographic characteristics of participants are summarized in Table 1. A written test assessing baseline knowledge did not reveal differences between the two groups (P = 0.72).

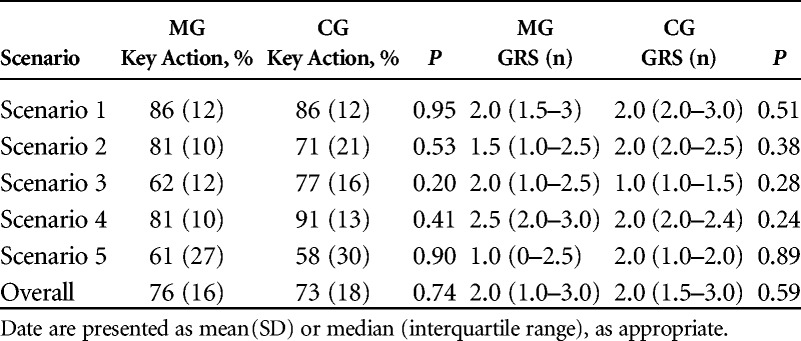

Training Assessment

No significant differences were detected between the two groups in either GRS (P = 0.59) or percentage of key action score (P = 0.74), as shown in Table 3. Univariate linear regression across both groups showed statistically significant relationships between score and year of training (P < 0.001), with a strong effect size for key action score (β = 0.47, P < 0.05) and a moderate effect size for GRS (β = 0.36). There were no significant linear relationships between training scenario and key action score or GRS (P > 0.05).
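Assuming the β values reported above are standardized coefficients (the article calls them effect sizes but does not say so explicitly), the sketch below shows how they relate to an ordinary least-squares fit of score on year of training. With a single predictor the standardized β equals the Pearson correlation coefficient. The function name is hypothetical.

```python
import math


def univariate_regression(x, y):
    """Ordinary least squares fit y = intercept + slope * x.

    Returns (slope, intercept, standardized beta). With a single predictor
    the standardized beta equals the Pearson correlation coefficient r.
    """
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    beta_std = sxy / math.sqrt(sxx * syy)
    return slope, intercept, beta_std
```

Here x would be year of residency (1 to 4) and y a key action score or GRS; the unstandardized slope gives the score change per year, while the standardized β is unit-free and is what is usually interpreted as an effect size.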

TABLE 3.

Key Action and Global Rating Scores for Training Assessment

The interrater reliability between the GRSs of the two instructors was excellent, with an ICC of 0.87 [95% confidence interval (CI) = 0.74–1.00]. The two evaluators agreed exactly on the classification 87.8% of the time, and the chance-corrected agreement was very good (Cohen κ = 0.82, z = 8.14, P < 0.001).
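Cohen κ corrects the observed proportion of agreement, p_o, for the agreement expected by chance, p_e. Rearranging the definition and substituting the two values reported above gives the implied chance agreement. Because both reported values are rounded, the result is approximate and is shown only as a worked example.

$$
\kappa = \frac{p_o - p_e}{1 - p_e}
\quad\Longrightarrow\quad
p_e = \frac{p_o - \kappa}{1 - \kappa} = \frac{0.878 - 0.82}{1 - 0.82} = \frac{0.058}{0.18} \approx 0.32
$$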

Final Assessment HFS in situ for ARDS Scenario

When the ability of the two groups to manage the same HFS scenario was compared, a statistically significant difference was found in both GRS [MG: 3.0 (2.5–4.0) vs. CG: 2.0 (2.0–3.0), P = 0.005] and key action score (MG: 82% vs. CG: 71%, P = 0.001) (Figs. 3A, B). After simulation training, linear regression between year of training and final assessment score was not statistically significant (GRS: P = 0.9; key action score: P = 0.8). Only residents in the MG showed significant improvement in GRS (P = 0.003) and key action score (P = 0.007) from training to final assessment (Fig. 3). The interrater reliability between the GRSs of the two raters was excellent, with an ICC of 0.89 (95% CI = 0.77–1.00). Cronbach α coefficient for the checklist was 0.73, indicating good internal consistency. Residents' ratings of the training were generally high, indicating good satisfaction for both MG and CG (Table 4); however, the MG reported a significantly higher overall satisfaction score (P = 0.015).
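Cronbach α for a checklist treats each item as a variable and compares the sum of item variances with the variance of the total score. The sketch below assumes a residents × items matrix of 0/1 (not done/done) entries; the data format and function name are hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents x items matrix (eg, 0/1 checklist items).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(scores[0])
    items = [[row[j] for row in scores] for j in range(k)]  # one column per item
    totals = [sum(row) for row in scores]                  # total score per respondent
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(variance(item) for item in items) / total_var)
```

Items that always move together push α toward 1, whereas unrelated items push it toward 0. A value of 0.73 therefore means the checklist items tended to be completed or missed together, although not uniformly.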

FIGURE 3.

A and B, Global rating score and key action score for training assessment and high-fidelity simulation.

TABLE 4.

Resident Satisfaction About Training

DISCUSSION

The main finding of our study was that among anesthesiology residents, mannequin-based simulation seemed more effective than computer-based simulation for improving knowledge and skills related to MV. Indeed, the MG, when compared with the CG, improved on both the key action score and GRS.

To our knowledge, no studies have compared the effectiveness of a computer screen–based simulator with that of a mannequin-based simulator in meeting learning objectives for MV. Our results seem to support the conclusion that mannequin-based training contributes toward strengthening residents' skills in treating patients in difficult clinical scenarios.18 The difference between the two groups may be due to the manner of interaction with the simulators: the MG participants actively operated the ventilator, whereas the CG performed the corresponding actions only conceptually on the computer screen. Furthermore, the observed difference could be related to the MG's experience of dealing with a “real” patient, including observing continuous variations in clinical parameters. In contrast, the CG interacted only with a computer screen.

Validity of Training and Final Assessments

Scoring validity of the assessments was supported by a fair evaluation based on standardized key actions and by the use of experienced raters blinded to study group. Videotaping of sessions was also used to improve rating accuracy. The absence of a relationship between training assessment scenario and score also indicates that evaluation was similar across scenarios. Both training and final assessment scenarios were developed to cover a broad range of the skills required for MV management, with the final assessment scenario representing a considerably more complex problem that drew on skills developed across multiple training scenarios. The broad scope of the scenarios supports generalization validity, which is further supported by the very good to excellent interrater reliability observed during both training and final assessments. Scenarios were developed by instructors with certified experience in MV and simulator programming, with scoring checklists provided by an expert panel, so that the evaluation reflected an authentic clinical context as closely as possible, supporting extrapolation validity. The relationship between experience level and performance during the training assessment indicates that the scenarios discriminated between different skill levels, further supporting extrapolation validity. However, future studies are required to investigate whether scores in the training and final assessments correlate with performance at the bedside. We consider the combined validity evidence to support rating and comparing MV skills in the investigated resident sample. However, we did not consider thresholds for passing or failing the assessments or any consequent actions; further studies are therefore required to investigate the implication validity of the presented training and final assessments.

Relation Between Experience and Performance

This study included residents with different levels of experience. The four levels (CA-1 to CA-4) were equally distributed between the two study groups. Our results showed a significant linear relationship between year of residency and both GRS and key action score at the training assessment; however, no such relationship was observed at the final assessment. The relationship between experience level and performance during simulation training indicates that traditional didactic lectures alone do not sufficiently raise the skill levels of less experienced residents. In contrast, mannequin-based simulation seemed to improve the skills of residents at all experience levels in managing a complex MV scenario. The training assessment probably reflects baseline knowledge (experienced residents performed better), whereas after training the MG performed better irrespective of year of residency. We believe that this result may be due to the more realistic practice during the training assessment. Previous studies have reported mixed results, either observing no difference in performance between experience levels26 or reporting a difference between the least and most experienced levels.27,28

The Role of High-Fidelity Simulation

We chose an ARDS scenario representative of a complex scenario for the final assessment. Acute respiratory distress syndrome is characterized by a sharp reduction of compliance, increased airway resistance, and lung inhomogeneity.29,30 The lung simulator connected to the mannequin allowed us to recreate an HFS environment in a field in which simulation is still scarcely practiced.

Recently, Khanduja et al31 advocated more widespread use of simulation. Regarding simulation in MV, the Harmonising Education in Respiratory Medicine for European Specialists Task Force has begun working toward a standard training curriculum for European clinicians in respiratory critical care, establishing a training framework that recommends simulation as an assessment tool.32 Indeed, there is general agreement that assessment through simulation should be viewed as a learning tool, in which effective real-time feedback from the instructor supports the learning process and translates into improved clinical skills.33,34 Hence, our HFS model could play a fundamental role, providing a valid training and assessment experience at a relatively affordable cost for university departments and clinical facilities. Moreover, HFS could reveal errors in clinical practice and help focus residents' teaching on the issues they were unable to accomplish. Although our study was not designed to identify specific gaps, two key items were frequently missed in the CG (ie, “troubleshoot the patient/ventilator system when the patient is dys-synchronous with the ventilator” and “perform recruitment maneuvers and consider placing the patient in the prone position for severe hypoxemia”). These results suggest that these skills may warrant more specific teaching.

Limitations

Our study has some limitations. First, the evaluation of skills in the final assessment scenario may not predict performance in other scenarios.27,35 However, the final assessment scenario was intentionally designed to be more complex and to require additional actions that were not covered by any of the training scenarios. Second, this was a single-center study, and performance and responses to the scenarios may reflect the teaching strategies of our department. Third, we used novel outcome tools (GRS and key action score) to evaluate the residents; even though interrater agreement between the two raters was excellent, we cannot exclude that the novelty of our rating tools influenced the reliability of our results. Finally, although we changed the ventilator, scenario, and level of fidelity between the training and final assessments, the MG might have had a test-retest advantage compared with the CG. However, this is one of the justifications for using HFS to teach residents in the field of MV; moreover, any test-retest advantage is likely limited, because both groups had interacted with the same mannequin in previous simulation teaching in other critical settings (ie, airway management), as is usual practice in our department.

CONCLUSIONS

Our results indicate that, compared with computer-based training, mannequin-based training provides skills that transfer better to an in situ high-fidelity simulated patient with ARDS. Residents also reported greater satisfaction with mannequin-based training. Our findings support the idea that mannequin-based simulation has the potential to improve MV skills. Future studies should address whether this also translates into better performance in the clinical setting.

Supplementary Material

ACKNOWLEDGMENTS

The authors thank the Ferrara University anesthesiology and intensive care medicine residents for their dedication to patient care and education.

Footnotes

The authors declare no conflict of interest.

Supported by the University of Ferrara, Italy.

Supplemental digital content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal’s Web site (www.simulationinhealthcare.com).

REFERENCES

- 1.Acute Respiratory Distress Syndrome Network, Brower RG, Matthay MA, Morris A, Schoenfeld D, Thompson BT, Wheeler A. Ventilation with lower tidal volumes as compared with traditional tidal volumes for acute lung injury and the acute respiratory distress syndrome. N Engl J Med 2000;342:1301–1308. [DOI] [PubMed] [Google Scholar]

- 2.Ferguson ND, Frutos-Vivar F, Esteban A, et al. Acute respiratory distress syndrome: underrecognition by clinicians and diagnostic accuracy of three clinical definitions. Crit Care Med 2005;33(10):2228–2234. [DOI] [PubMed] [Google Scholar]

- 3.Kalhan R, Mikkelsen M, Dedhiya P, et al. Underuse of lung protective ventilation: analysis of potential factors to explain physician behavior. Crit Care Med 2006;34(2):300–306. [DOI] [PubMed] [Google Scholar]

- 4.Needham DM, Colantuoni E, Mendez-Tellez PA, Dinglas VD, Sevransky JE, Dennison Himmelfarb CR. Lung protective mechanical ventilation and two year survival in patients with acute lung injury: prospective cohort study. BMJ 2012;344:e2124. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Bellani G, Laffey JG, Pham T, et al. Epidemiology, patterns of care, and mortality for patients with acute respiratory distress syndrome in intensive care units in 50 countries. JAMA 2016;315(8):788–800. [DOI] [PubMed] [Google Scholar]

- 6.Allerød C, Karbing DS, Thorgaard P, Andreassen S, Kjærgaard S, Rees SE. Variability of preference toward mechanical ventilator settings: a model-based behavioral analysis. J Crit Care 2011;26:637.e5–637.e12. [DOI] [PubMed] [Google Scholar]

- 7.Tainter CR, Wong NL, Bittner EA. Innovative strategies in critical care education. J Crit Care 2015;30(3):550–556. [DOI] [PubMed] [Google Scholar]

- 8.Cook DA, Hatala R, Brydges R, et al. Technology-enhanced simulation for health professions education: a systematic review and meta-analysis. JAMA 2011;306(9):978–988. [DOI] [PubMed] [Google Scholar]

- 9.Wax RS, Kenny L, Burns P. Educating providers of mechanical ventilation: an update. Curr Opin Crit Care 2006;12(1):61–66. [DOI] [PubMed] [Google Scholar]

- 10.Park CS, Rochlen LR, Yaghmour E. Acquisition of critical intraoperative event management skills in novice anesthesiology residents by using high-fidelity simulation-based training. Anesthesiology 2010;112:202–211. [DOI] [PubMed] [Google Scholar]

- 11.Aggarwal R, Mytton OT, Derbrew M, Hananel D, Heydenburg M, Issenberg B. Training and simulation for patient safety. Qual Saf Health Care 2010;19(Suppl 2):i34–i43. [DOI] [PubMed] [Google Scholar]

- 12.Kennedy CC, Cannon EK, Warner DO, Cook DA. Advanced airway management simulation training in medical education: a systematic review and meta-analysis. Crit Care Med 2014;42(1):169–178. [DOI] [PubMed] [Google Scholar]

- 13.Sekiguchi H, Bhagra A, Gajic O, Kashani KB. A general Critical Care Ultrasonography workshop: results of a novel Web-based learning program combined with simulation-based hands-on training. J Crit Care 2013;28(2):217.e7–217.e12. [DOI] [PubMed] [Google Scholar]

- 14. Farsoni S, Astolfi L, Bonfè M, Spadaro S, Volta CA. A versatile ultrasound simulation system for education and training in high-fidelity emergency scenarios. IEEE J Transl Eng Health Med 2017;27(5):1800109.

- 15. Draycott TJ, Crofts JF, Ash JP, et al. Improving neonatal outcome through practical shoulder dystocia training. Obstet Gynecol 2008;112(1):14–20.

- 16. Schroedl CJ, Corbridge TC, Cohen ER, et al. Use of simulation-based education to improve resident learning and patient care in the medical intensive care unit: a randomized trial. J Crit Care 2012;27(2):219.e7–219.e3.

- 17. Keegan R, Henderson T, Brown G. Use of the virtual ventilator, a screen-based computer simulation, to teach the principles of mechanical ventilation. J Vet Med Educ 2009;36(4):436–443.

- 18. Corbridge SJ, Robinson FP, Tiffen J, Corbridge TC. Online learning versus simulation for teaching principles of mechanical ventilation to nurse practitioner students. Int J Nurs Educ Scholarsh 2010;7.

- 19. Iotti GA. Virtual-MV. Mechanical ventilation simulator. In: Iotti GA, Patroniti N. Mechanical ventilation. Skills and techniques. Patient-centred Acute Care Training (PACT). Brussels: European Society of Intensive Care Medicine; 2011.

- 20. Dismukes RK, Gaba DM, Howard SK. So many roads: facilitated debriefing in healthcare. Simul Healthc 2006;1:23–25.

- 21. Fanning RM, Gaba DM. The role of debriefing in simulation-based learning. Simul Healthc 2007;2:115–125.

- 22. Cox CE, Carson SS, Ely EW, et al. Effectiveness of medical resident education in mechanical ventilation. Am J Respir Crit Care Med 2003;167(1):32–38.

- 23. Cook DA, Brydges R, Ginsburg S, Hatala R. A contemporary approach to validity arguments: a practical guide to Kane's framework. Med Educ 2015;49:560–575.

- 24. Cicchetti DV. Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychol Assess 1994;6:284–290.

- 25. Altman DG. Practical Statistics for Medical Research. Boca Raton, FL: Chapman and Hall/CRC; 1991.

- 26. Nyssen AS, Larbuisson R, Janssens M, Pendeville P, Mayné A. A comparison of the training value of two types of anesthesia simulators: computer screen-based and mannequin-based simulators. Anesth Analg 2002;94:1560–1565.

- 27. Murray D, Boulet J, Avidan M, et al. Performance of residents and anesthesiologists in a simulation-based skill assessment. Anesthesiology 2007;107:705–713.

- 28. Fehr JJ, Boulet JR, Waldrop W, Kras JF, Henrichs B, Woodhouse J. Simulation-based assessment of pediatric anesthesia skills. Anesthesiology 2011;115(6):1308–1315.

- 29. Gattinoni L, Pesenti A. The concept of “baby lung.” Intensive Care Med 2005;31(6):776–784.

- 30. Mauri T, Eronia N, Abbruzzese C, Marcolin R, Coppadoro A, Spadaro S. Effects of sigh on regional lung strain and ventilation heterogeneity in acute respiratory failure patients undergoing assisted mechanical ventilation. Crit Care Med 2015;43(9):1823–1831.

- 31. Khanduja PK, Bould MD, Naik VN, Hladkowicz E, Boet S. The role of simulation in continuing medical education for acute care physicians: a systematic review. Crit Care Med 2015;43(1):186–193.

- 32. Artigas A, Noe J, Brochard L. Defining a training framework for clinicians in respiratory critical care. Eur Respir J 2014;44:572–577.

- 33. Mitchell S, Bloch K, Butiene I, Cooper BG, Steenbruggen I, Hare A. Education is the passport to the future: enabling today's medical teachers to prepare tomorrow's respiratory health practitioners. Eur Respir J 2014;44:578–584.

- 34. Gordon JA. As accessible as a book on a library shelf: the imperative of routine simulation in modern health care. Chest 2012;141(1):12–16.

- 35. Boulet JR, Murray DJ, Kras J, Woodhouse J, McAllister J, Ziv A. Assessing the acute-care skills of medical students and recent graduates: reliability and validity of patient simulator scores. Anesthesiology 2003;99:1270–1280.
