Abstract

Code-blends (simultaneous words and signs) are a unique characteristic of bimodal bilingual communication. Using fMRI, we investigated code-blend comprehension in hearing native ASL-English bilinguals who made a semantic decision (edible?) about signs, audiovisual words, and semantically equivalent code-blends. English and ASL recruited a similar fronto-temporal network with expected modality differences: stronger activation for English in auditory regions of bilateral superior temporal cortex, and stronger activation for ASL in bilateral occipitotemporal visual regions and left parietal cortex. Code-blend comprehension elicited activity in a combination of these regions, and no cognitive control regions were additionally recruited. Furthermore, code-blends elicited reduced activation relative to ASL presented alone in bilateral prefrontal and visual extrastriate cortices, and relative to English alone in auditory association cortex. Consistent with behavioral facilitation observed during semantic decisions, the findings suggest that redundant semantic content induces more efficient neural processing in language and sensory regions during bimodal language integration.

Keywords: Bimodal bilingualism, American Sign Language, fMRI

1. Introduction

When bilinguals who are fluent in two spoken languages (unimodal bilinguals) mix their languages in conversation, they must alternate between them (i.e., “code-switch”) because both languages rely on a single output channel (the vocal tract) and the same input channel (audition). In contrast, bilinguals who are fluent in a signed and a spoken language (bimodal bilinguals) can code-blend, producing or perceiving their two languages simultaneously. Code-blends are characterized by temporally synchronous onsets of signs and English words that convey equivalent (or closely related) semantic content (Emmorey, Borinstein, Thompson, & Gollan, 2008). They are strongly preferred by bimodal bilinguals over code-switches (Bishop, 2010; Bishop & Hicks, 2005; Emmorey et al., 2008), and are natural and frequent events in bimodal bilingual communication (Baker & Van den Bogaerde, 2008; Bishop, 2010; Petitto et al., 2001).

There is substantial evidence that in unimodal bilinguals, comprehension of each spoken language relies on the same, predominantly left hemisphere network, including the inferior frontal gyrus (IFG) and superior temporal gyrus (STG), particularly when both their languages are acquired early and with comparable levels of proficiency (for reviews see Perani & Abutalebi, 2005; van Heuven & Dijkstra, 2010). However, while comprehension of signed languages also activates this left hemisphere network (e.g., MacSweeney et al., 2002b; Petitto et al., 2000; Sakai, Tatsuno, Suzuki, Kimura, & Ichida, 2005), comparisons between spoken and signed languages in bimodal bilinguals reveal clear sensory-related differences (Emmorey, McCullough, Mehta, & Grabowski, 2014). Most prominently, audiovisual speech leads to greater recruitment of bilateral auditory processing regions in more anterior, perisylvian parts of the STG (in BA 22), whereas posterior motion processing regions in inferior, middle, and superior temporal gyri are more active for signed than spoken language comprehension (Söderfeldt et al., 1997). In addition, bimodal bilinguals show greater parietal and premotor activation during comprehension of sentences in American Sign Language (ASL), compared to audiovisual English sentences (Emmorey et al., 2014). Stronger parietal activation is also found during comprehension of British Sign Language sentences in deaf signers, compared to audiovisual spoken English in hearing speakers (MacSweeney et al., 2002b). Thus, although studies directly comparing signed and spoken languages within the same participants are rare, the available data demonstrate modality-related differences between the functional neuroanatomy of spoken and signed languages in anterior perisylvian, posterior temporal, parietal, and premotor regions.

Our goal in the present study was to further investigate modality-related differences in the language comprehension networks engaged by English and by ASL and to explore the network engaged during the simultaneous comprehension of both languages – a phenomenon unique to bimodal bilinguals. To that end, we examined brain responses in a group of hearing native ASL-English bilinguals making semantic decisions (i.e., is it edible?) to blocks of ASL signs, audiovisual English words, and code-blends (signs and words articulated simultaneously). We expected that comprehension of English or ASL in isolation would activate overlapping regions in left hemisphere fronto-temporal networks and that direct comparison of English and ASL perception would replicate previous language modality-related differences. Less clear, however, is how code-blend comprehension affects the recruitment of regions within these networks or whether additional regions are recruited to support code-blend comprehension.

Clues about the neural basis of code-blend comprehension can be gleaned from behavioral findings in bimodal bilinguals. For example, compared to comprehending audiovisual English or ASL alone, comprehension of code-blends incurs no additional processing cost, suggesting that processing English and ASL simultaneously is no more effortful (and may be easier) than processing one language in isolation (Emmorey, Petrich, & Gollan, 2012). In contrast, when unimodal bilinguals engage in code-switching, reaction times increase (compared to non-switch trials), possibly due to suppression of one language and activation of another (Costa & Santesteban, 2004; Meuter & Allport, 1999; for review, see Bobb & Wodniecka, 2013). Although code-blend comprehension does not appear to require the increased effort associated with code-switching, it does nonetheless require managing two languages. Thus, regions associated with executive functions, recruited to manage switching between two spoken languages in unimodal bilinguals, may also be recruited to support code-blend comprehension (e.g., anterior cingulate and dorsolateral prefrontal cortices; Abutalebi, 2008).

Code-blends not only incur no cost, but in fact they can facilitate comprehension, as evidenced by faster reaction times for semantic decisions (is it edible?) to code-blends than to either English or ASL alone (Emmorey et al., 2012). If this behavioral facilitation reflects reduced processing load, perhaps due to the presence of redundant semantic information, it may be reflected by decreased neural activity within parts of the language network, such as regions associated with semantic processing. Alternatively, if semantic congruency promotes top-down modulation, effects may be found in regions associated with processing lower level features (e.g., lexical and phonological information).

Code-blend comprehension may also be supported, in part, by the neural network that supports co-speech gesture comprehension, as ASL-English code-blends share several properties with co-speech gestures. First, both express semantic content via manual articulations in conjunction with spoken words, and both signs and gestures convey information that tends to closely match that of the accompanying speech (Emmorey et al., 2008; McNeill, 1992). Second, ASL signs and co-speech gestures are both typically synchronized with the onset of the relevant (“affiliated”) spoken word (Emmorey et al., 2008; Kendon, 1980; McNeill, 1992). Third, just as code-switching is rare among bimodal bilinguals (Emmorey et al., 2008), gestures typically co-occur with speech, and switching between gesturing and speaking is rare (McNeill, 1992). Finally, code-blend and co-speech gesture comprehension both require integration at the sensory level (across the visual and auditory modalities), and at the semantic level (across the meaning conveyed by the manual and speech signals).

A region in the posterior superior temporal gyrus and sulcus (STG/S) has been implicated in the sensory integration of audiovisual information related to language, such as auditory speech and mouth movements (Callan et al., 2003; Calvert, Campbell, & Brammer, 2000). However, this region also appears to support multimodal integration of non-linguistic audiovisual information (e.g., objects and their associated sounds; Beauchamp, Lee, Argall, & Martin, 2004) as well as integration within a single modality, such as integrating visual information about form, color and motion into a single representation (e.g., Puce et al., 2003; Vaina, Solomon, Chowdhury, Sinha, & Belliveau, 2001). Thus, posterior STG/S may play a more general role in integrating multiple streams of information, regardless of modality (Beauchamp, 2005). Consistent with this idea, studies of co-speech gesture implicate posterior STS in the semantic integration of gesture with the accompanying speech (e.g., Hubbard, Wilson, Callan, & Dapretto, 2009; Willems, Ozyurek, & Hagoort, 2007).

Semantic integration effects for speech and gesture have been found in the inferior frontal gyrus of the left hemisphere (e.g., Green et al., 2009; Kircher et al., 2009) and the right hemisphere (Dick, Goldin-Meadow, Hasson, Skipper, & Small, 2009), as well as in the inferior parietal lobule (IPL) (e.g., Dick et al., 2009; Willems et al., 2007). This pattern of results suggests a role for the action processing system in the semantic integration of gesture with speech. Observation-execution simulations may aid in interpreting the goal of gestures that are vague until resolved within the context of speech (Green et al., 2009; Holle, Gunter, Rueschemeyer, Hennenlotter, & Iacoboni, 2008). The network of regions implicated in crossmodal integration for co-speech gesture may overlap, at least in part, with the network of regions participating in code-blend integration and comprehension.

There are important distinctions, however, between code-blends and co-speech gestures that make code-blends unique. First, ASL signs used in code-blends are part of an independent linguistic system with phonological and lexical structure (unlike gestures). The neural network supporting code-blend comprehension thus may include phonologically or lexically related activation, in addition to that associated with sensory and semantic integration. Second, ASL signs and English words are each fully conventionalized lexical forms and neither requires contextualization from the other for comprehension. Thus, code-blend comprehension may rely less strongly on parietal action-observation regions that are recruited to interpret less-conventionalized gestures.

In sum, by comparing the neural networks recruited by bimodal bilinguals during English, ASL, and ASL-English code-blend comprehension, we aim to examine modality-related differences in language comprehension networks and explore whether simultaneous comprehension of languages across modalities simply relies on the combination of those networks or recruits additional regions (e.g., cognitive control regions).

2. Materials and methods

2.1. Participants

Twelve right-handed, hearing native ASL users (7 female; mean age = 26.85 years, SD = 5.51; mean education = 15.54 years, SD = 3.50) participated in the study. Data from an additional three participants were not usable due to excessive head movement during scanning. Two additional participants were excluded from analysis: one because he misunderstood task instructions and one due to low accuracy in the ASL condition. All participants were “children of deaf adults” (CODAs) born into deaf signing families. Participants reported no neurological, behavioral, or learning disorders. Proficiency ratings were collected from 11 of the 12 participants using a 7-point Likert scale where 1 indicated not proficient at all and 7 indicated native fluency. The mean rating was 6.64 (SD = .50) for understanding ASL and 6.72 (SD = .65) for understanding English. The language ratings did not differ from each other (p > .1). All but one reported using ASL in daily communication outside of work, seven reported using ASL daily in their work, eight reported working as interpreters, and the average percentage of time reported using ASL on a typical day was 51% (SD = 20). Informed consent was obtained according to procedures approved by the UCSD and SDSU Human Research Protection Programs.

2.1.1. Materials

We selected 180 different English nouns (mean log transformed frequency per million from CELEX = 3.05; SD = 1.49), each of which had a common ASL sign translation. These 180 nouns were split into three lists of 60 items each (List A, B, C), balanced for frequency (pairwise comparisons all p > .1). Each of these 60-item lists was used in the construction of one imaging run (three runs total). Forty words in each list denoted inedible items (e.g., guitar, car) and 20 words denoted edible items (e.g., chocolate, salt). We divided these lists into six blocks of 10 items each, with 3–4 edible items per block.
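The text does not say how frequency balance was achieved. One common way to build balanced lists is a serpentine ("snake") deal of frequency-ranked items, sketched below as a hypothetical illustration rather than the authors' procedure. It assumes edible and inedible items are dealt separately, so that each list receives 20 edible and 40 inedible nouns, and then spreads each list's edible items round-robin over six 10-item blocks. All function names are invented for this example.

```python
from typing import List, Sequence, Tuple

# (noun, log-transformed frequency, is_edible)
Item = Tuple[str, float, bool]


def snake_split(items: Sequence[Item], n_lists: int) -> List[List[Item]]:
    """Deal items to lists in serpentine order of descending frequency.

    Ranks 0, 1, 2 go to lists 0, 1, 2; ranks 3, 4, 5 go to lists 2, 1, 0; and so
    on, which keeps the lists' mean frequencies close to one another.
    """
    ranked = sorted(items, key=lambda it: it[1], reverse=True)
    lists: List[List[Item]] = [[] for _ in range(n_lists)]
    for rank, item in enumerate(ranked):
        cycle, pos = divmod(rank, n_lists)
        idx = pos if cycle % 2 == 0 else n_lists - 1 - pos
        lists[idx].append(item)
    return lists


def build_lists(items: Sequence[Item], n_lists: int = 3) -> List[List[Item]]:
    """Split edible and inedible items separately so each list gets the same mix."""
    edible = snake_split([it for it in items if it[2]], n_lists)
    inedible = snake_split([it for it in items if not it[2]], n_lists)
    return [e + i for e, i in zip(edible, inedible)]


def make_blocks(items: Sequence[Item], n_blocks: int = 6) -> List[List[Item]]:
    """Deal edible items round-robin across blocks, then top up with inedible ones."""
    edible = [it for it in items if it[2]]
    inedible = iter([it for it in items if not it[2]])
    size = len(items) // n_blocks
    blocks: List[List[Item]] = [[] for _ in range(n_blocks)]
    for k, it in enumerate(edible):
        blocks[k % n_blocks].append(it)
    for block in blocks:
        while len(block) < size:
            block.append(next(inedible))
    return blocks
```

With 20 edible and 40 inedible items per list, `make_blocks` yields two blocks with 4 edible items and four blocks with 3, in line with the 3–4 edible items per block described above.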

We filmed a male native ASL-English bilingual (a CODA) producing each of the original 180 items as (1) the spoken English word (ENG); (2) its ASL sign translation (ASL); and (3) the spoken word and its ASL sign translation simultaneously (code-blend or CB), resulting in a total of 540 unique video clips (180 nouns, each filmed in ENG, ASL, and as a CB). As in Emmorey et al. (2012), the sign model was not prevented from mouthing (producing small mouth movements related to the English translation of a sign) because isolated signs produced with a “blank face” and no mouth movements are perceived as unnatural. The video clips were edited with Final Cut Pro (Apple, Cupertino, CA). Video clips showed the sign model, seated in front of a blue background, from the waist to just above the top of his head. Following Emmorey et al. (2012), ASL and CB clips began with the first frame in which the hands appeared on the screen and ended 5 frames (165 ms) after the hands began moving back to the rest position on the lap. A separate group of deaf bilinguals confirmed that each sign video elicited the intended English translation.

Following Emmorey et al. (2012), the onset of the audiovisual ENG clips occurred 9 frames (300 ms) prior to the voice onset of the word (to avoid an abrupt onset of audiovisual speech) and ended 9 frames (300 ms) after the offset of the word. The average duration of ASL, ENG, and CB clips was 1.35 s (SD = 0.26), 1.15 s (SD = 0.20), and 1.42 s (SD = 0.31), respectively (mean duration across all clips = 1.31 s, SD = 0.28). Blue frames (without any stimulus) were added to the end of each clip to extend trial durations to 3 s.

During the control task (based on MacSweeney et al., 2002a, 2002b), participants viewed audiovisual video clips of the sign model at rest (hands on his lap), with a black or white dot superimposed on his chin, and pressed a button to indicate the color of the dot. We matched the control clips for duration with the language clips and added blue frames to extend each to a 3-s trial length (as was done for the language clips). Half of the control task trials included auditory stimulation (a tone, in control blocks for audiovisual English) and the other half did not (control blocks for ASL). Lastly, a video of a black fixation cross centered on a light blue screen served as a low-level baseline.

The edited videos were combined to create three 5 min 56 s QuickTime movies (Apple, Cupertino, CA), each containing 60 language trials and 40 control trials. Each movie began with six seconds of blank screen to allow scanner magnetization to reach steady state prior to stimulus presentation, followed by a 16 s fixation period. Within each movie, language trials were presented in six 30 s blocks (10 trials per block; two blocks each of ENG, ASL, CB), intermixed with four 30 s control task blocks (two 10 trial blocks with tones and two without tones) and two 20 s fixation periods, such that each language block was followed by either a control or a fixation block.

Language assignment for each block was counterbalanced so that each participant saw each block only once, in either English, ASL, or as code-blends, and across the experiment, each item was seen in each language condition equally often. Thus, if one participant saw a 10-item block in ENG, another participant saw those items in ASL and a third participant saw them presented as code-blends. The order of language and control blocks was counterbalanced across runs, and run order was counterbalanced across participants.
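The rotation described above is a Latin-square design. The sketch below shows one way to implement it, assuming participants are divided into three groups (four per group would be consistent with, but is not stated in, the text) and that conditions rotate cyclically across blocks; the function name and the cyclic ordering are hypothetical.

```python
from typing import List

CONDITIONS = ["ENG", "ASL", "CB"]


def assign_conditions(n_blocks: int, group: int) -> List[str]:
    """Condition for each block under a cyclic Latin square.

    Block b is shown in CONDITIONS[(b + group) % 3], so participant groups
    0, 1, and 2 see any given block in three different conditions, and each
    group sees every condition equally often when n_blocks is a multiple of 3.
    """
    k = len(CONDITIONS)
    return [CONDITIONS[(b + group) % k] for b in range(n_blocks)]


if __name__ == "__main__":
    # Six language blocks per run (two per condition).
    for g in range(3):
        print(g, assign_conditions(6, g))
```

Because the shift depends only on the group index, swapping a different mapping in (for example, a shuffled Latin square) would not change the key property: each block appears once per condition across the three groups.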

2.2. Procedure

We presented stimulus movies using QuickTime running on a MacBook Pro computer (Apple, Cupertino, CA) and recorded responses on another MacBook Pro using PsyScope X Build 41 (psy.ck.sissa.it). Stimuli were projected by an XGA video projector to a screen at the foot of the scanner bed, visible through a mirror attached to the head coil. Participants responded via a Lumitouch four-button response box (Photon Control, Burnaby, Canada) connected to the computer running PsyScope. Response hand was counterbalanced across participants. During the six language blocks (two blocks of ENG, two of ASL, two of CB) participants pushed one button on the response box for each item they considered edible and a second button for all non-edible items. During control blocks they pressed one button if a dot on the sign model’s chin was white and the other button if the dot was black. We instructed participants to respond as quickly and as accurately as possible, stressing that speed and accuracy were equally important. Prior to scanning, participants practiced with unique trials (items not seen during scanning).

2.3. MRI Data acquisition

MRI data were collected on a 3-Tesla GE Signa Excite scanner equipped with an eight-element phased-array head coil at the Center for Functional MRI at the University of California, San Diego. For each participant, we collected high-resolution anatomical images using a T1-weighted FSPGR sequence (FOV 256 mm, 106 axial slices, 1.3 mm thick, 256 × 256 matrix, 0.98 mm in-plane resolution, flip angle = 12°, inversion time = 600 ms). For functional images, we acquired T2*-weighted gradient-echo echo planar images (EPI) in the sagittal plane, 30 slices covering the whole brain, with a repetition time (TR) of 2000 ms, an echo time (TE) of 30 ms, flip angle = 90°, FOV = 240 mm, 64 × 64 matrix, 3.75 × 3.75 mm in-plane resolution, and 4.5 mm slice thickness (no gap). Each of the three functional runs included 175 EPI volumes plus three additional volumes at the beginning of the run (removed during preprocessing), for a total run length of 5 min 56 s. Additional functional imaging scans totaling ~33 min were acquired prior to these runs for a separate experiment that will be reported elsewhere.
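The stated functional acquisition parameters are internally consistent, as a quick check of run length, in-plane extent, and slab coverage shows:

```latex
\begin{aligned}
T_{\text{run}} &= (175 + 3)\ \text{volumes} \times 2\ \text{s} = 356\ \text{s} = 5\ \text{min}\ 56\ \text{s},\\
\text{in-plane extent} &= 64 \times 3.75\ \text{mm} = 240\ \text{mm} = \text{FOV},\\
\text{slab thickness} &= 30\ \text{slices} \times 4.5\ \text{mm} = 135\ \text{mm}.
\end{aligned}
```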

2.4. Data processing and analysis

All MRI data were processed and analyzed using AFNI software (Cox, 1996). Anatomical images for each participant were aligned to the EPI data (align_epi_anat.py) and then spatially normalized to standardized space (Talairach & Tournoux, 1988) with a 12-parameter affine registration, using the @auto_tlrc program with the AFNI TT_N27 template (Colin27 brain). This transform was then applied to each participant’s functional data after correction for slice-dependent acquisition timing differences. During the transformation to standardized space, head movements were corrected using Fourier interpolation in 3dvolreg, with the second volume of the first run as the reference, and volumes were smoothed with a 5.5 mm FWHM filter. Functional data were then normalized by dividing each voxel by the mean across all voxels and scaled to a mean of 100.

The regression matrix, generated using 3dDeconvolve, included head motion parameters as nuisance variables (three rotation and three translation estimates output by 3dvolreg) and a third-order Legendre polynomial for each run to account for slow drifts. Regressors of interest included one regressor for each language condition (ENG, ASL, CB) and each control condition (Still model without tone, Still model with tone), modeled using AFNI’s BLOCK basis function with a duration of 30 s; any time points at which the Euclidean norm of head movement exceeded 1 were censored from statistical analysis. Fixation blocks were not explicitly modeled, as they constituted the low-level baseline for the regression analysis. Voxel-wise response estimates for each individual, and their corresponding t-values for each stimulus type and contrast of interest, were entered into group-level mixed-effects analyses contrasting each language condition versus its respective control condition and versus the other language conditions (3dMEMA; Chen, Saad, Nath, Beauchamp, & Cox, 2012). These analyses use both the effect estimates and their precision to weight individual participants’ contributions to group effects. We used a false discovery rate (FDR) correction for multiple comparisons, and all results are FDR corrected at a threshold of q < .05, unless otherwise noted. We report only cortical clusters of at least 10 contiguous voxels; cluster volumes reflect resampling to a 3.5 mm isotropic grid, such that 1 voxel = 42.875 mm³. For figures, data were resampled to 1 mm³ resolution for illustration purposes only. Sub-extrema for clusters larger than 100 voxels are available in Supplementary Materials (identified using 3dExtrema with a minimum separation distance of 10 mm).
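The single-subject model can be sketched in NumPy as a rough illustration under stated assumptions, not a reimplementation of AFNI. The block shape follows the description of `BLOCK(d,1)` in the 3dDeconvolve help (a boxcar of duration d convolved with g(t) = t⁴e⁻ᵗ / (4⁴e⁻⁴), scaled to a peak of 1), the drift terms are Legendre polynomials of degree 0 to 3 over the run, and censoring assumes the Euclidean norm is taken over frame-to-frame motion differences, which the text does not specify. The function names, the 0.1 s convolution grid, and the 20 s kernel length are hypothetical choices.

```python
import numpy as np
from numpy.polynomial import legendre

TR = 2.0  # repetition time (s), from the acquisition parameters
DT = 0.1  # fine time grid used for the convolution (s); an arbitrary choice


def gamma_kernel(t: np.ndarray, q: float = 4.0) -> np.ndarray:
    """g(t) = t^q e^(-t) / (q^q e^(-q)), which peaks at 1 when t = q seconds."""
    t = np.clip(t, 0.0, None)
    return t**q * np.exp(-t) / (q**q * np.exp(-q))


def block_regressor(onsets_s, duration_s: float, n_vols: int) -> np.ndarray:
    """Boxcar of each block convolved with g(t), scaled to a peak of 1."""
    n_fine = int(round(n_vols * TR / DT))
    t_fine = np.arange(n_fine) * DT
    box = np.zeros(n_fine)
    for onset in onsets_s:
        box[(t_fine >= onset) & (t_fine < onset + duration_s)] = 1.0
    kernel = gamma_kernel(np.arange(int(round(20.0 / DT))) * DT)  # 20 s support
    response = np.convolve(box, kernel)[:n_fine] * DT
    response /= response.max()  # peak 1, assuming blocks do not overlap
    return response[:: int(round(TR / DT))]  # one sample per volume


def legendre_drift(n_vols: int, order: int = 3) -> np.ndarray:
    """Legendre polynomials P_0 ... P_order evaluated across one run on [-1, 1]."""
    return legendre.legvander(np.linspace(-1.0, 1.0, n_vols), order)


def censor_mask(motion: np.ndarray, limit: float = 1.0) -> np.ndarray:
    """True for volumes to keep, given (n_vols, 6) rotation/translation estimates.

    Assumption: the Euclidean norm is taken over frame-to-frame differences,
    the convention of AFNI's 1d_tool.py -censor_motion; the text does not say.
    """
    deltas = np.diff(motion, axis=0, prepend=motion[:1])
    return np.linalg.norm(deltas, axis=1) <= limit


def fit_run(y: np.ndarray, regressors, motion: np.ndarray) -> np.ndarray:
    """OLS betas for one voxel: task regressors, then motion, then drift terms."""
    X = np.column_stack([*regressors, motion, legendre_drift(y.shape[0])])
    keep = censor_mask(motion)
    beta, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
    return beta
```

Fixation periods get no regressor here, so, as in the model described above, they form the implicit baseline absorbed by the constant Legendre term.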
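The idea of weighting participants by the precision of their estimates can be illustrated with a random-effects meta-analytic mean for a single voxel. This is a simplified stand-in, not 3dMEMA: 3dMEMA estimates between-subject variance by restricted maximum likelihood, whereas the sketch below substitutes the DerSimonian-Laird moment estimator. It also assumes each participant's sampling variance can be recovered from the beta and its t-value as (beta / t)², which requires nonzero t-values. The function name is hypothetical.

```python
import math
from typing import Sequence, Tuple


def random_effects_mean(betas: Sequence[float], ts: Sequence[float]) -> Tuple[float, float, float]:
    """Precision-weighted group effect for one voxel (DerSimonian-Laird).

    betas: per-participant effect estimates; ts: their (nonzero) t-statistics,
    so each within-participant sampling variance is (beta / t) ** 2.
    Returns (group effect, its standard error, between-participant variance tau^2).
    """
    v = [(b / t) ** 2 for b, t in zip(betas, ts)]
    w = [1.0 / vi for vi in v]
    sw = sum(w)
    fixed = sum(wi * b for wi, b in zip(w, betas)) / sw
    q = sum(wi * (b - fixed) ** 2 for wi, b in zip(w, betas))  # heterogeneity
    c = sw - sum(wi * wi for wi in w) / sw
    tau2 = max(0.0, (q - (len(betas) - 1)) / c)
    w_star = [1.0 / (vi + tau2) for vi in v]  # total-variance weights
    mu = sum(wi * b for wi, b in zip(w_star, betas)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return mu, se, tau2
```

A group-level statistic for the voxel is then mu / se. Participants with noisier estimates (larger (beta / t)²) pull less on mu, which is the property the text attributes to the mixed-effects analysis.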

Lastly, we examined activations common to English and ASL comprehension by thresholding the statistical maps for English and for ASL comprehension, each versus its respective control condition, at an FDR-corrected threshold of q < .05. The intersection of the two thresholded maps identified regions significantly active during both English and ASL comprehension.
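The thresholding and conjunction steps can be sketched with the Benjamini-Hochberg step-up procedure, the standard method for FDR control. Whether the software used here applied exactly this variant is an assumption, and the function names are hypothetical. Each map is thresholded on its own, and the conjunction keeps only voxels that survive in both.

```python
import numpy as np


def bh_reject(p: np.ndarray, q: float = 0.05) -> np.ndarray:
    """Benjamini-Hochberg: boolean mask of p-values significant at FDR level q."""
    p = np.asarray(p, dtype=float)
    m = p.size
    order = np.argsort(p)
    below = p[order] <= q * np.arange(1, m + 1) / m
    mask = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()  # largest rank that meets its bound
        mask[order[: k + 1]] = True  # step-up: reject every p-value up to that rank
    return mask


def conjunction(p_eng: np.ndarray, p_asl: np.ndarray, q: float = 0.05) -> np.ndarray:
    """Voxels surviving FDR in BOTH maps, each map thresholded separately."""
    return bh_reject(p_eng, q) & bh_reject(p_asl, q)
```

Because each map is corrected separately before the intersection, a voxel must be significant at q < .05 for English and, independently, for ASL.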

3. Results

3.1. Behavioral results

Participants were highly accurate on the edible/non-edible decision task performed during scanning: mean percent correct for English alone = 96% (SD = 3.72%), for ASL alone = 92% (SD = 4.78%), and for code-blends = 98% (SD = 1.50%). A repeated measures ANOVA on percent accuracy revealed a main effect of condition (F(2,22) = 8.11, p < .01). Pairwise contrasts indicated that participants were more accurate for code-blends than for ASL alone (p < .001), and there was a trend for English to be more accurate than ASL (p = .053). There was no difference in accuracy between English alone and code-blends (p = .21). Similarly, a repeated measures ANOVA on reaction times revealed a main effect of condition (F(2,22) = 6.93, p < .01) and contrasts indicated faster reaction times (RTs) for code-blends (1318 ms; SD = 188 ms) than for ASL alone (1406 ms; SD = 195 ms), p < .002, and a trend for faster RTs for English alone (1337 ms; SD = 195 ms) than for ASL alone, p = .051. RTs for English alone and code-blends did not differ, p = .335.
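The degrees of freedom follow from the design: with n = 12 participants and k = 3 conditions, a one-way repeated-measures ANOVA has k − 1 = 2 numerator and (k − 1)(n − 1) = 22 denominator degrees of freedom, matching the F(2,22) values reported. A minimal sketch, without any sphericity correction (the text does not say whether one was applied); the function name is hypothetical.

```python
import numpy as np


def rm_anova(data: np.ndarray):
    """One-way repeated-measures ANOVA on an (n_participants, k_conditions) array.

    Between-participant variance is removed from the error term.
    Returns (F, df_conditions, df_error).
    """
    n, k = data.shape
    grand = data.mean()
    ss_cond = n * ((data.mean(axis=0) - grand) ** 2).sum()
    ss_subj = k * ((data.mean(axis=1) - grand) ** 2).sum()
    ss_total = ((data - grand) ** 2).sum()
    ss_err = ss_total - ss_cond - ss_subj
    df_cond, df_err = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_err / df_err)
    return f, df_cond, df_err


# Toy 3 x 3 matrix, invented only to check the arithmetic (not study data):
# SS_cond = 24, SS_subj = 6, SS_err = 4, so F = (24 / 2) / (4 / 4) = 12.
toy = np.array([[1, 3, 5], [2, 3, 7], [3, 6, 6]], dtype=float)
print(rm_anova(toy))
```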

3.2. Neuroimaging results

3.2.1. Language(s) vs. control task

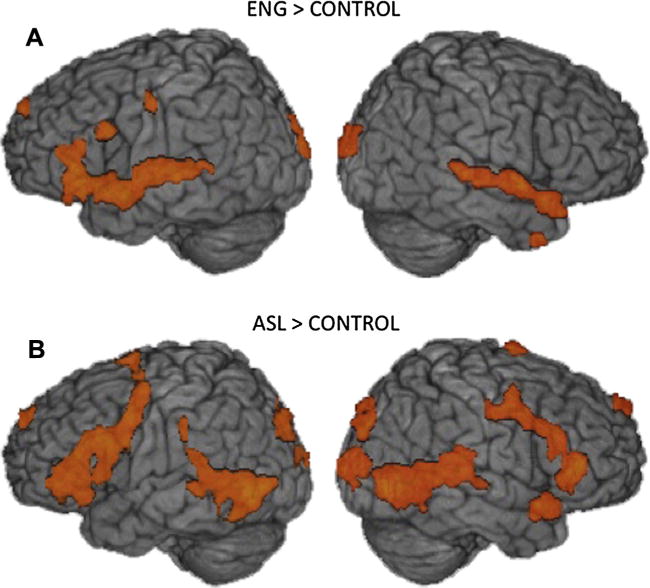

Comprehension of English words, relative to the control task, led to widespread activation, particularly in frontal and temporal cortices (see Table 1 and Fig. 1A). The largest clusters were centered in the left IFG (BA 44, pars opercularis) and anterior STG, with several extrema more posteriorly in BA 22 of STG (auditory cortex) (see Supplementary Materials for tables listing sub-extrema for all contrasts). Somewhat smaller clusters were focused in the left occipital pole, superior frontal gyrus, dorsal precentral gyrus, parahippocampal gyrus, and inferior temporal gyrus. In the right hemisphere, clusters were focused in the auditory association cortex of anterior STG, superior frontal gyrus, middle temporal gyrus close to the temporal pole, and visual extrastriate areas (Table 1 lists some clusters not visible in Fig. 1).

Table 1.

Clusters significantly more active for English perception than the Still model baseline (q < .05). Peak and submaxima Brodmann areas, cluster volumes, peak Talairach coordinates, and peak t-values are listed (see Supplementary Materials for extrema coordinates).

| Region | BA | Vol (mm³) | x | y | z | t-value |
|---|---|---|---|---|---|---|
| English vs. Still | | | | | | |
| L superior frontal gyrus | BA 9 | 600 | −9 | 55 | 31 | 5.62 |
| R superior frontal/cingulate gyrus | BA 32 | 429 | 12 | 20 | 35 | 4.54 |
| L inferior frontal gyrus (pars opercularis) | BA 44, 45, 47 | 15,006 | −37 | 13 | 21 | 9.62 |
| R MTG/temporal pole | BA 38 | 515 | 40 | 10 | −32 | 5.10 |
| L superior temporal gyrus | BA 38, 47, 41, 22, 21 | 13,077 | −30 | 6 | −11 | 6.89 |
| L superior frontal gyrus | BA 6 | 986 | −5 | 3 | 52 | 5.00 |
| R superior temporal gyrus | BA 22, 47 | 7246 | 61 | −1 | −1 | 7.43 |
| R parahippocampal gyrus | | 1672 | 26 | −10 | −15 | 6.59 |
| L precentral gyrus | BA 6 | 515 | −51 | −12 | 45 | 4.38 |
| L parahippocampal gyrus | BA 35 | 429 | −23 | −26 | −18 | 5.16 |
| R fusiform gyrus | | 943 | 37 | −43 | −22 | 5.14 |
| L inferior temporal gyrus | BA 37 | 3301 | −47 | −47 | −4 | 5.07 |
| R lingual gyrus | BA 17 | 2573 | 5 | −61 | 7 | 5.15 |
| R superior occipital gyrus/occipital pole | BA 18 | 429 | 16 | −96 | 17 | 3.74 |
| L superior occipital gyrus/occipital pole | BA 17, 18 | 4416 | −5 | −96 | 14 | 6.15 |
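Because cluster volumes are reported on a 3.5 mm isotropic grid (3.5³ = 42.875 mm³ per voxel), each volume in Table 1 should convert back to a whole number of voxels, and the smallest clusters (429 mm³) correspond to exactly 10 voxels. A short consistency check, with a hypothetical helper name:

```python
VOXEL_MM3 = 3.5 ** 3  # 42.875 mm^3 per resampled voxel


def voxels_from_volume(vol_mm3: float) -> float:
    """Convert a reported cluster volume (mm^3) back to a voxel count."""
    return vol_mm3 / VOXEL_MM3


# Cluster volumes from Table 1, in row order.
table1_volumes = [600, 429, 15006, 515, 13077, 986, 7246, 1672,
                  515, 429, 943, 3301, 2573, 429, 4416]
voxel_counts = [round(voxels_from_volume(v)) for v in table1_volumes]
print(voxel_counts)
```

Every volume lands within 0.01 voxel of an integer, which suggests the tabulated values are voxel counts multiplied by 42.875 and rounded to the nearest mm³.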

Fig. 1.

Group averaged statistical maps show regions activated by bimodal bilinguals for (A) English comprehension and for (B) ASL comprehension, each relative to their respective control condition (q < .05). Activations up to 15 mm below the cortical surface are projected onto a template brain.

During ASL comprehension, relative to the control task, the largest active cluster was also focused in left IFG (BA 44, pars opercularis; Table 2, Fig. 1B), with additional clusters in bilateral extrastriate cortex, left superior frontal and superior parietal cortices, right inferior frontal and superior temporal gyri, and occipital cortex, bilaterally. Thus, overall, relative to the control task, ASL and English comprehension activated similar regions in frontal, temporal, and occipital cortices with activation in right frontal and left superior parietal regions for ASL, but not for English.

Table 2.

Clusters significantly more active for ASL perception than the Still model baseline (q < .05). Peak and submaxima Brodmann areas, cluster volumes, peak Talairach coordinates, and peak t-values are listed (see Supplementary Materials for extrema coordinates).

| Region | BA | Vol (mm³) | x | y | z | t-value |
|---|---|---|---|---|---|---|
| ASL vs. Still | | | | | | |
| L superior frontal gyrus | BA 9, 6 | 1329 | −9 | 59 | 31 | 5.21 |
| R inferior frontal gyrus (pars triangularis) | BA 45, 6 | 12,863 | 54 | 31 | 14 | 7.56 |
| L inferior frontal gyrus (pars opercularis) | BA 44, 45, 6 | 30,913 | −47 | 13 | 28 | 12.84 |
| R superior temporal gyrus | | 2101 | 47 | 10 | −11 | 8.43 |
| L superior parietal lobule | BA 40, 7 | 1158 | −30 | −43 | 38 | 3.92 |
| L fusiform gyrus | BA 37, 36, 22, 19, V5 | 28,683 | −40 | −47 | −8 | 13.63 |
| R middle/lateral occipital gyrus | V5, 22, 42, 37, V4, 18 | 27,097 | 47 | −68 | 3 | 9.50 |
| L lateral occipital gyrus | BA 18 | 4502 | −19 | −82 | −4 | 6.64 |
| R middle occipital gyrus/occipital pole | BA 18, 17 | 6131 | 26 | −89 | 7 | 6.05 |

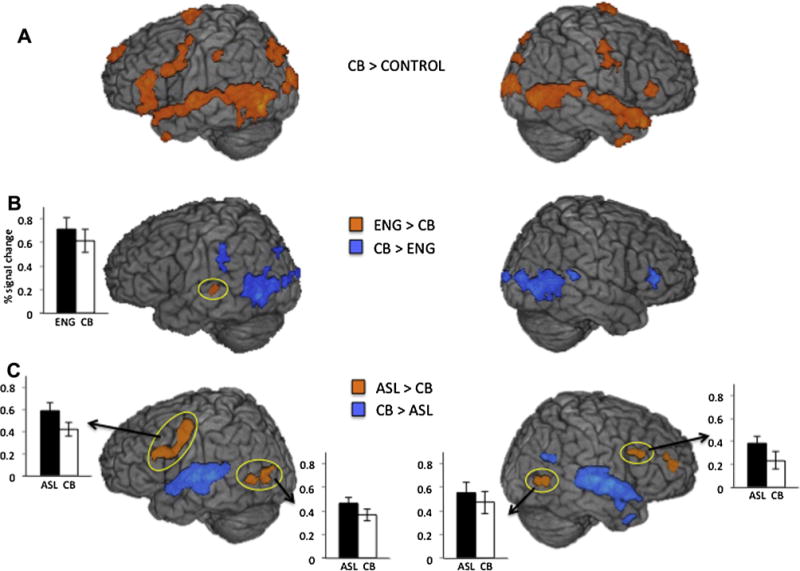

Examination of activation during code-blend comprehension compared to the control task revealed, as might be expected, activity in a combination of the regions active during the comprehension of each language alone, including bilateral frontal language areas, lateral temporal, and ventral occipitotemporal regions (Table 4 and Fig. 3A).

Table 4.

Clusters significantly more active for Code-blend perception than the Still model baseline (q < .05). Peak and submaxima Brodmann areas, cluster volumes, peak Talairach coordinates, and peak t-values are listed (see Supplementary Materials for extrema coordinates).

| Region | BA | Vol (mm³) | x | y | z | t-value |
|---|---|---|---|---|---|---|
| Code-blends vs. Still | | | | | | |
| L superior frontal gyrus | BA 9 | 1415 | −9 | 59 | 35 | 4.93 |
| L medial orbitofrontal/anterior cingulate gyrus | BA 32 | 1758 | −2 | 34 | −8 | 5.12 |
| R inferior frontal gyrus (pars triangularis) | BA 45 | 1243 | 51 | 27 | 10 | 5.38 |
| L inferior frontal gyrus (pars triangularis) | BA 45, 6, 13, 11 | 15,778 | −47 | 27 | 17 | 10.66 |
| R superior temporal gyrus | BA 38, 22 | 8575 | 51 | 17 | −15 | 8.94 |
| R inferior temporal gyrus/temporal pole | BA 21 | 1715 | 40 | 6 | −32 | 5.69 |
| L superior frontal gyrus | BA 6 | 2015 | −12 | −5 | 70 | 6.43 |
| R precentral gyrus | BA 4/6 | 1501 | 54 | −15 | 45 | 4.53 |
| L supramarginal gyrus | BA 40 | 3087 | −40 | −36 | 38 | 6.89 |
| R fusiform gyrus | BA 37, 22, V5 | 19,508 | 40 | −40 | −15 | 9.53 |
| R postcentral gyrus | BA 2 | 729 | 26 | −40 | 45 | 4.89 |
| L inferior temporal gyrus | BA 19, 38, 22, 39, 37 | 30,570 | −51 | −68 | −4 | 12.83 |
| L lateral occipital gyrus/occipital pole | BA 17 | 1929 | −19 | −89 | −1 | 4.84 |
| R lateral occipital gyrus/occipital pole | BA 17/18 | 7803 | 16 | −92 | 10 | 7.64 |

Fig. 3.

Group-averaged statistical maps show regions activated by bimodal bilinguals during code-blend comprehension. Histograms show group-averaged percent change in BOLD signal within the regions circled in yellow. (A) Orange regions indicate greater activity during code-blend comprehension than the control condition. (B) Orange regions indicate activation for comprehension of English > Code-blends (q < .052); blue regions indicate activation for comprehension of Code-blends > English (q < .05). (C) Orange regions indicate activation for comprehension of ASL > Code-blends (q < .05); blue regions indicate activation for comprehension of Code-blends > ASL (q < .05). Activations up to 15 mm below the cortical surface are projected onto a template brain.

Having identified brain regions active in bimodal bilinguals during audiovisual English comprehension, during ASL comprehension, and during simultaneous perception of both languages, we next compared brain responses for English versus ASL comprehension, and for code-blends relative to English and to ASL perceived alone.

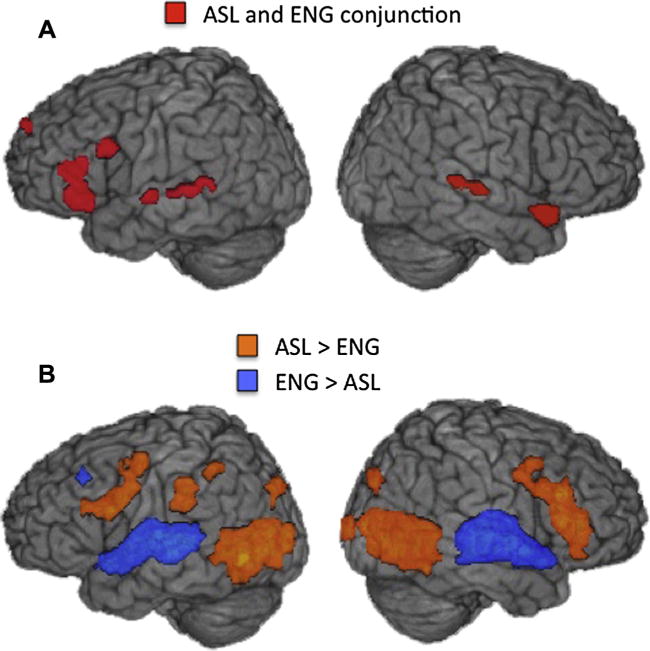

3.2.2. Comparing and contrasting audiovisual English and ASL comprehension

If the same left hemisphere language network mediates comprehension of both languages in bimodal bilinguals, we would expect overlapping activations for English and ASL compared to their respective control tasks. As shown in Fig. 2A, a conjunction analysis revealed that activation for English and ASL comprehension overlapped in the left inferior and middle frontal gyri, extending into premotor cortex. Activation for both languages also overlapped in the right superior temporal cortex and anterior temporal lobe.

Fig. 2.

(A) Group averaged conjunction map shows regions activated by bimodal bilinguals during both English and ASL comprehension (red), each contrasted with its respective control condition (q < .05). (B) Group averaged statistical maps show regions where activation for comprehension of ASL > English (orange) and where activation for comprehension of English > ASL (blue) (direct contrast, q < .05). Activations up to 15 mm below the cortical surface are projected onto a template brain.

The analysis directly contrasting activation during English and ASL comprehension revealed larger responses for English than ASL predominantly in bilateral superior temporal auditory regions (Table 3 and Fig. 2B). In contrast, several left hemisphere regions exhibited greater activation for ASL than for English comprehension, including the precentral gyrus (extending into IFG), fusiform gyrus (extending into MTG and occipital cortex), and superior parietal cortex (including the supramarginal gyrus and intraparietal sulcus, IPS) (Table 3 and Fig. 2B). In the right hemisphere, increased activation for ASL, relative to English, occurred in the middle frontal gyrus (extending into IFG) and fusiform gyrus (extending into STS and occipital cortex).

Table 3.

Significantly active clusters for the contrast of ASL versus English perception (q < .05). Peak and submaxima Brodmann areas, cluster volumes, peak Talairach coordinates, and peak t-values are listed (see Supplementary Materials for extrema coordinates).

| Region | BA | Vol (mm³) | x | y | z | t-value |
|---|---|---|---|---|---|---|
| English > ASL | | | | | | |
| L superior temporal gyrus | BA 22, 40, 41, 42 | 29,841 | −47 | −5 | 3 | 12.00 |
| R superior temporal gyrus | BA 41/22, 43, 21 | 35,929 | 47 | −19 | 7 | 14.73 |
| L parahippocampal gyrus | | 900 | −16 | −26 | −15 | 5.45 |
| ASL > English | | | | | | |
| R middle frontal gyrus | BA 9, 45 | 11,319 | 51 | 24 | 31 | 9.16 |
| L precentral gyrus | BA 6, 44 | 8961 | −51 | 3 | 31 | 6.98 |
| R fusiform gyrus | BA 18, 19, 17, 39, V5 | 29,712 | 40 | −50 | −8 | 14.90 |
| L fusiform gyrus | BA 37, 19, 18, V5 | 25,211 | −40 | −57 | −8 | 10.86 |
| L superior parietal lobule | BA 31, 39, 40, 7 | 13,248 | −19 | −78 | 28 | 8.30 |

3.2.3. Code-blends vs. audiovisual English alone

The direct contrast between responses to ASL-English code-blends and audiovisual English revealed several clusters that were more active during code-blend comprehension than during English comprehension. Foci were located in the right IFG and STG, in bilateral parietal cortex (the inferior parietal lobule on the left and the superior parietal lobule on the right), and in bilateral occipitotemporal cortex (e.g., inferior temporal and fusiform gyri) (Table 5 and Fig. 3B). Each of these clusters overlapped with clusters identified in our previous analysis as more active for ASL than for English (and for ASL relative to the control condition). This suggests that code-blend comprehension relies on a combination of the English and ASL comprehension networks.

Table 5.

Significantly active clusters where English > Code-blend perception (q = .052) and where Code-blend > English perception (q < .05). Peak and submaxima Brodmann areas, cluster volumes, peak Talairach coordinates, and peak t-values are listed (see Supplementary Materials for extrema coordinates).

| Region | BA | Vol (mm³) | x | y | z | t-value |
|---|---|---|---|---|---|---|
| Code-blend > English | | | | | | |
| R inferior frontal gyrus (pars triangularis) | BA 45 | 986 | 51 | 34 | 10 | 7.30 |
| L inferior parietal lobule | BA 40 | 3173 | −40 | −33 | 38 | 7.37 |
| R superior temporal gyrus | BA 22 | 643 | 61 | −40 | 14 | 6.94 |
| R superior parietal lobule | BA 2 | 858 | 26 | −47 | 45 | 5.96 |
| R inferior temporal gyrus | BA 37, 39, V4, V5 | 13,120 | 44 | −50 | −8 | 13.20 |
| L inferior temporal gyrus | BA 37, 22, 19 | 12,605 | −37 | −57 | −4 | 10.74 |
| R middle/lateral occipital gyrus | BA 18/19, 17 | 4459 | 33 | −85 | 10 | 9.43 |
| L middle/lateral occipital gyrus | BA 18 | 858 | −23 | −89 | 3 | 7.40 |
| English > Code-blend | | | | | | |
| L superior temporal gyrus | BA 22 | 429 | −58 | −29 | 7 | 5.00 |

Only one cluster, located in the left STG (BA 22), showed the opposite preference, responding more strongly during English than during code-blend comprehension, although this effect fell just short of our selected statistical threshold (q = .052) (Table 5 and Fig. 3B). This cluster overlapped with a region that was also more active for English than for ASL (and for English relative to the control condition). Thus, in this part of auditory cortex, responses appear to be reduced when English is perceived as part of an ASL-English code-blend.
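Because the left STG cluster narrowly missed the threshold (q = .052), it may help to recall what a q-value expresses. The minimal sketch below converts a list of raw p-values into adjusted values. It assumes the Benjamini-Hochberg false discovery rate procedure, which is one common way to obtain q-values. This section does not specify which FDR method was applied, or whether it was applied voxelwise or clusterwise. The function name and p-values are hypothetical.

```python
from typing import List, Sequence


def bh_qvalues(p_values: Sequence[float]) -> List[float]:
    """Benjamini-Hochberg adjusted p-values (often reported as q-values)."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    q = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down to the smallest, keeping the
    # running minimum of p * m / rank so that q is monotone in p.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        q[idx] = running_min
    return q


# Hypothetical raw p-values for five tests (not data from this study)
p = [0.001, 0.008, 0.039, 0.041, 0.20]
q = bh_qvalues(p)
significant = [qi < 0.05 for qi in q]
print(significant)  # [True, True, False, False, False]
```

In this toy example, the third and fourth tests have raw p-values below .05. Their adjusted values are about .051, however, so they would not survive a q < .05 threshold. Correcting for many simultaneous tests can push a nominally significant effect just past the cutoff.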

3.2.4. Code-blends vs. ASL alone

Compared to ASL alone, code-blend comprehension elicited additional activity in auditory cortex, specifically in bilateral STG (focused in BA 22 on the right and BA 41 on the left) (Table 6 and Fig. 3C). This result is unsurprising, given that code-blend comprehension involves the auditory perception of speech and ASL comprehension does not. These same regions also showed greater activation for English than for ASL comprehension.

Table 6.

Significantly active clusters for the contrast of ASL versus Code-blend perception (q < .05). Peak and submaxima Brodmann areas, cluster volumes, peak Talairach coordinates, and peak t-values are listed (see Supplementary Materials for extrema coordinates).

| Region | BA | Vol (mm³) | x | y | z | t-value |
|---|---|---|---|---|---|---|
| Code-blend > ASL | | | | | | |
| R superior temporal gyrus | BA 22, 41, 42, 43, 21 | 23,067 | 61 | −8 | 7 | 14.90 |
| L superior temporal gyrus | BA 41, 22, 42 | 20,451 | −54 | −15 | 7 | 12.68 |
| ASL > Code-blend | | | | | | |
| R inferior frontal gyrus/insula | BA 47 | 1115 | 33 | 27 | −1 | 6.29 |
| L insula/lateral orbitofrontal gyrus | BA 47 | 515 | −33 | 24 | −1 | 5.16 |
| R middle/inferior frontal gyrus (pars triangularis) | BA 45 | 815 | 51 | 17 | 31 | 4.99 |
| R middle temporal gyrus | BA 37/V5 | 1286 | 47 | −61 | 3 | 6.01 |
| L middle occipital gyrus | V5 | 1415 | −30 | −75 | 7 | 5.59 |

Perhaps more surprisingly, we identified several regions that responded more strongly during ASL comprehension than during code-blend comprehension. Specifically, increased responses to ASL occurred in bilateral frontal language regions (including precentral and inferior frontal gyri) and in bilateral occipitotemporal cortex, overlapping with area V5/MT+ (with peaks in middle occipital gyrus on the left and in MTG on the right; Table 6, Fig. 3C). Each of these regions was also more active for ASL than for English comprehension (and for ASL versus the control condition). Thus, when ASL signs are accompanied by English speech expressing the same lexical information, reduced activation occurs in regions associated with ASL comprehension.

4. Discussion

We examined the neural network engaged during comprehension of audiovisual English words, ASL signs, and ASL-English code-blends in a group of bimodal bilinguals. Behaviorally, participants were faster and more accurate making semantic decisions for code-blends than for ASL signs, replicating results from Emmorey et al. (2012). Participants performed marginally better for English than for ASL in terms of both speed and accuracy, but showed no advantage for English compared to code-blends. In contrast, Emmorey et al. (2012) reported faster semantic decisions for code-blends than for English. However, this facilitation effect was smaller than that for code-blends relative to ASL (93 ms relative to English vs. 138 ms relative to ASL). Emmorey et al. tested a much larger sample (45 participants) than the present study, which may have given them greater statistical power to detect smaller differences between conditions. The pattern of results in both studies may arise, in part, because English is the dominant language of hearing ASL-English bilinguals (Emmorey et al., 2008). Even though participants’ self-rated proficiency was high for both English and ASL, they are immersed in an English-dominant environment. They therefore perceive English more frequently, and through more channels (e.g., radio, television, commercial interactions), than they perceive ASL. English dominance is also consistent with superior performance for English compared to ASL in both studies.

4.1. Modality-independent neural activations for English and ASL comprehension

Very few studies have directly compared perception of audiovisual English and ASL within the same group of participants. This comparison is crucial because within-group designs eliminate variability in a host of factors that can influence imaging results when brain responses are compared across different groups of participants. These factors include physiology, functional organization, and anatomical structure, as well as non-physiological factors such as demographics, education, and linguistic experience. Compared to their respective control tasks, words and signs each engaged a left hemisphere-dominant network that included frontal and temporal regions (e.g., IFG and superior temporal cortex). Overlapping activation for English and ASL comprehension in this left fronto-temporal network is consistent with studies of language comprehension in both unimodal bilinguals (Perani & Abutalebi, 2005; van Heuven & Dijkstra, 2010) and bimodal bilinguals (Söderfeldt et al., 1997; Emmorey et al., 2014). It is also consistent with findings that signed and spoken language comprehension activate the same left hemisphere regions when separate groups of deaf signers and hearing speakers are compared (MacSweeney et al., 2002b; Sakai et al., 2005). Thus, the left hemisphere language network that supports comprehension in monolinguals can represent multiple languages in the same cortical regions, particularly for highly proficient, early-acquired languages (Chee, Hon, Lee, & Soon, 2001; Illes et al., 1999; Mayberry, Chen, Witcher, & Klein, 2011; Perani et al., 1996; Wartenburger et al., 2003).

We also found overlapping activation for English and ASL bilaterally in perisylvian auditory association cortex (BA 21, 22), consistent with Emmorey et al. (2014). However, Emmorey et al. also reported common activation for spoken and signed languages in bilateral inferior frontal gyri, whereas we found overlap in left IFG only. In our data, right prefrontal cortex showed increased activation for ASL comprehension but not for English comprehension. The divergent results may reflect differences in task (e.g., semantic decision vs. anomalous sentence detection) or in stimuli (e.g., semantic content, single words vs. sentences) that lead to differential engagement of right inferior frontal cortex during English comprehension.

4.2. Modality-dependent activation differences between English and ASL comprehension

Although the conjunction analysis demonstrates that English and ASL comprehension rely, in part, on a shared network of regions, the direct contrast between English and ASL comprehension revealed modality-related differences between the languages. As in previous studies of spoken and signed language comprehension, spoken words activated auditory regions in bilateral superior temporal cortex more than ASL signs did (Söderfeldt et al., 1997; Emmorey et al., 2014; MacSweeney et al., 2002b; Sakai et al., 2005). Conversely, ASL signs activated occipitotemporal cortices more than English words did; this activation extended rostrally into STS and encompassed the motion-sensitive area MT+ (Emmorey et al., 2014; MacSweeney et al., 2002b; Sakai et al., 2005; Söderfeldt et al., 1997). This pattern is consistent with the notion that the middle-anterior part of superior temporal cortex is especially tuned to auditory speech in hearing people, regardless of sign language experience (MacSweeney et al., 2002b). It is also consistent with the notion that robust responses to ASL in extrastriate regions reflect the larger hand and arm movements characteristic of signing, compared to the smaller mouth movements of speech (Courtin et al., 2011).

Increased neural responses for ASL, relative to English, also occurred in left parietal and bilateral premotor cortices. The left parietal activation is consistent with previous studies demonstrating that parietal cortex is associated not only with sign language production (e.g., Braun, Guillemin, Hosey, & Varga, 2001; Corina et al., 1999) but also with sign language comprehension (Emmorey et al., 2014; MacSweeney et al., 2002a, 2002b, 2004). The greater engagement of premotor and parietal regions by ASL signs than by English words resembles the premotor-parietal circuit that forms part of the action observation network (AON) described by Buccino et al. (2001) and others (see Grafton, 2009, for review). As suggested by Emmorey et al. (2014), this premotor-parietal circuit may reflect the use of manual internal simulation to predict upcoming signs via forward modeling during ASL sentence comprehension. This network was engaged when participants viewed single ASL signs. This finding further suggests that the premotor-parietal AON helps predict the movement of ASL articulators in the service of comprehension, not only at the sentential level but also for individual lexical items. Although further investigation is required, a recent MEG study reported that deaf signers matching single signs to pictures produced larger parietal responses than hearing speakers matching auditory English words to pictures (Leonard et al., 2012). This result is consistent with the idea that a fronto-parietal circuit is involved in sign comprehension at the lexical level.

4.3. Neural activation during code-blend comprehension

We identified no regions that were uniquely activated by code-blend comprehension. Rather, compared to the control task, code-blend comprehension engaged a network corresponding to a combination of the regions active for English and ASL alone. Thus, code-blend comprehension appears to rely on the fronto-temporal regions revealed by the English-ASL conjunction analysis, in addition to the sensory modality-related regions active for each language alone. Given that code-blend comprehension requires managing two languages, it is striking that no cognitive control regions (e.g., anterior cingulate cortex) were recruited. In contrast, unimodal bilinguals activate the anterior cingulate cortex when perceiving auditory language switches (Abutalebi et al., 2007). This contrast suggests that greater executive control is required to manage two languages within the same modality than across modalities.

Although it may seem surprising that code-blend comprehension did not engage cognitive control regions, this finding is consistent with our behavioral results, which indicated no processing costs for code-blends. In fact, code-blends facilitated comprehension (see also Emmorey et al., 2012). Code-blend facilitation may be analogous to a more general cognitive phenomenon known as the redundant signals effect. In this effect, response times are faster when two stimuli with the same meaning are presented in different modalities than when either stimulus is presented alone (e.g., Miller, 1986). The effect was first reported for very simple stimuli, such as a tone paired with a light signaling a “go” response. It was thought to rely on the proximity of low-level perceptual properties of the stimuli (e.g., Diederich & Colonius, 1987, 2004; Schröger & Widmann, 1998). More recent reports, however, demonstrated that semantic congruence is required to observe such facilitation effects (Laurienti, Kraft, Maldjian, Burdette, & Wallace, 2004; Molholm, Ritter, Javitt, & Foxe, 2004; Suied, Bonneel, & Viaud-Delmon, 2009). In the current study, the redundant lexical-semantic information in code-blends may facilitate both sensory and semantic processing.
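The redundant signals literature commonly uses the race-model inequality to ask whether facilitation exceeds what a simple race between two independent channels would produce. The inequality bounds the cumulative distribution of redundant-target response times by the sum of the single-target distributions: P(RT_CB ≤ t) ≤ P(RT_E ≤ t) + P(RT_A ≤ t). The minimal sketch below applies this bound to code-blends (CB) versus English alone (E) and ASL alone (A). It assumes per-condition lists of correct-trial response times in milliseconds. The function names and response times are hypothetical, and this analysis was not reported in the present study.

```python
from bisect import bisect_right
from typing import List, Sequence


def ecdf(rts: Sequence[float], t: float) -> float:
    """Empirical cumulative probability that a response time is <= t."""
    ordered = sorted(rts)
    return bisect_right(ordered, t) / len(ordered)


def race_model_violations(rt_blend: Sequence[float],
                          rt_english: Sequence[float],
                          rt_asl: Sequence[float],
                          probe_times: Sequence[float]) -> List[float]:
    """Return the probe times at which the code-blend RT distribution
    exceeds the race-model bound F_E(t) + F_A(t), capped at 1."""
    violations = []
    for t in probe_times:
        bound = min(1.0, ecdf(rt_english, t) + ecdf(rt_asl, t))
        if ecdf(rt_blend, t) > bound:
            violations.append(t)
    return violations


# Hypothetical correct-trial RTs in ms for one participant (invented, not study data)
rt_english = [700, 720, 750, 780, 800]
rt_asl = [760, 790, 820, 850, 900]
rt_blend = [600, 640, 700, 730, 760]

print(race_model_violations(rt_blend, rt_english, rt_asl, [600, 650, 700, 750, 800]))
# [600, 650, 700, 750]
```

Published applications usually evaluate the inequality at a set of RT quantiles for each participant and then test it across participants. A reliable violation argues against pure statistical facilitation and in favor of some form of coactivation or integration. Whether code-blend facilitation violates the bound is an empirical question not addressed here.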

We observed reduced activation for code-blends in sensory cortices compared to each language alone, suggesting that code-blend facilitation occurs, at least in part, at the sensory level. Specifically, relative to English alone, code-blend comprehension elicited marginally reduced activity (q = .052) in auditory association cortex (left STG/S, BA 22). Relative to ASL alone, it elicited reduced activity in posterior extrastriate visual regions (e.g., area MT+/V5 and nearby cortex). Decreased activation in these sensory regions may reflect fewer resources being allocated to perceiving language in one modality when redundant cues are available from the other.

Reduced activation for code-blends compared to ASL alone also occurred in bilateral inferior frontal cortex. Notably, these regions are associated with semantic processing (e.g., Binder, Desai, Graves, & Conant, 2009), and bilateral IFG also showed greater activation for ASL signs than for English words. This pattern suggests that semantic processing of ASL signs requires fewer neural resources when the signs are accompanied by spoken word translations. The redundant lexico-semantic information provided by English appears to reduce engagement of prefrontal semantic processing regions. We observed reduced frontal activation for code-blends relative to ASL but not relative to English. This asymmetry is consistent with English being the dominant language of our bimodal bilinguals.

The prefrontal regions we identified as less active during code-blend than ASL comprehension also correspond to a prefrontal semantic integration region reported in studies of co-speech gesture comprehension (e.g., Kircher et al., 2009; Straube, Green, Weis, Chatterjee, & Kircher, 2009; Straube et al., 2013). Several studies have demonstrated that increased semantic load leads to increased IFG activation. This occurs, for example, when speech and gestures are incongruent rather than congruent, or when gestures serve to disambiguate speech (Dick et al., 2009; Green et al., 2009; Willems et al., 2007). For code-blends, we find a complementary effect: identical semantic information conveyed by English and by ASL reduces semantic load, and this semantic congruency leads to reduced activity in bilateral IFG. While our results suggest that these activations reflect multimodal semantic integration, others have posited that IFG activation reflects pre-integration monitoring (Green et al., 2009; Straube, Green, Weis, & Kircher, 2012). Indeed, overlapping subregions of the left IFG have been associated with a host of language-related functions at phonological, lexical, semantic, and syntactic levels (Hagoort, 2005). Future studies could examine ASL-English code-blends in which the semantic content across modalities is incongruent, ambiguous, or complementary. Such studies will help clarify the role of semantic load and redundant signals in modulating activity in these prefrontal regions.

One might ask whether the pattern of activation differences for code-blends, relative to each language alone, can be explained by differences in participants’ task performance across conditions. Semantic decisions were faster and more accurate for code-blends and for English, relative to ASL, with comparable performance for code-blends and English. If activation differences simply tracked task performance, code-blends should differ from ASL but not from English. However, we found reduced responses for code-blends relative to English as well as relative to ASL. Moreover, these decreases occurred in sensory cortices specific to each modality: auditory cortex relative to English, and visual cortex relative to ASL. For this reason, integration across modalities is a more likely explanation.

As mentioned in the introduction, ASL-English code-blends differ from co-speech gestures, in part because signs belong to an independent linguistic system with phonological and lexical structure. Gestures lack such structure and often require speech for interpretation. In contrast to findings for co-speech gesture (Green et al., 2009; Holle et al., 2008), parietal cortex was not differentially active for code-blend comprehension compared to comprehension of either language alone. Increased parietal activation during effortful semantic integration of gesture and speech is thought to reflect attempts to interpret the goal of gestural actions. Because such actions are not conventionalized, they can appear vague until resolved by the context of speech. The parietal action processing system may not be required to the same extent to interpret the fully conventionalized ASL signs and English words that make up code-blends. Future studies that directly contrast code-blend comprehension with co-speech gesture perception will help elucidate the role of parietal cortex in semantic contextualization. They will also help distinguish more clearly between brain regions mediating semantic integration and those mediating sensory modality-related integration.

In summary, ASL-English code-blend comprehension recruited a network that resembled a combination of the regions active for each language alone. Unlike code-switching in unimodal bilinguals, code-blend comprehension did not recruit cognitive control regions. This suggests that there is little or no competition between languages when the same lexical item is perceived simultaneously in speech and sign. Rather, the behavioral facilitation observed during code-blend comprehension is reflected in reduced neural activity in the relevant sensory cortices: auditory regions when code-blends are compared to English, and visual regions when they are compared to ASL. In addition, some prefrontal regions showed reduced activity during code-blend comprehension compared to ASL. These regions are similar to those found for semantic integration during co-speech gesture perception, possibly reflecting shared characteristics of these multimodal inputs.

Although spoken and signed language comprehension rely on similar left hemisphere-dominant fronto-temporal networks, activation differences were evident in sensory cortices associated with the perception of each language modality. These auditory and visual regions, engaged by perception of English and ASL, respectively, were recruited less during comprehension of code-blends. Taken together, our findings indicate that code-blend comprehension is facilitated by redundant cues across languages in different modalities. They further indicate that integration across signed and spoken languages leads to more efficient neural processing in frontal language and posterior temporal sensory regions.

Supplementary Material

Highlights.

fMRI study of code-blend comprehension in bimodal ASL-English bilinguals.

ASL comprehension activates prefrontal-parietal action observation circuit.

Code-blend comprehension did not recruit cognitive control regions.

Reduced activity for code-blends vs. English in auditory association cortex.

Reduced activity for code-blends vs. ASL in prefrontal and posterior temporal cortices.

Acknowledgments

This research was supported by NIH Grant HD047736 to SDSU and Karen Emmorey. We thank Jennifer Petrich and Cindy O’Grady for help with stimulus creation and data collection and all who participated in the study.

Appendix A. Supplementary material

Supplementary data associated with this article can be found, in the online version, at http://dx.doi.org/10.1016/j.bandl.2015.05.006.

References

- Abutalebi J. Neural aspects of second language representation and language control. Acta Psychologica. 2008;128(3):466–478. doi: 10.1016/j.actpsy.2008.03.014.

- Abutalebi J, Brambati SM, Annoni JM, Moro A, Cappa SF, Perani D. The neural cost of the auditory perception of language switches: An event-related functional magnetic resonance imaging study in bilinguals. Journal of Neuroscience. 2007;27(50):13762–13769. doi: 10.1523/JNEUROSCI.3294-07.2007.

- Baker A, Van den Bogaerde B. In: Sign bilingualism: Language development, interaction, and maintenance in sign language contact situations. Plaza-Pust C, Morales Lopéz E, editors. Amsterdam: John Benjamins; 2008.

- Beauchamp MS. See me, hear me, touch me: Multisensory integration in lateral occipital-temporal cortex. Current Opinion in Neurobiology. 2005;15(2):145–153. doi: 10.1016/j.conb.2005.03.011.

- Beauchamp MS, Lee KE, Argall BD, Martin A. Integration of auditory and visual information about objects in superior temporal sulcus. Neuron. 2004;41(5):809–823. doi: 10.1016/s0896-6273(04)00070-4.

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cerebral Cortex. 2009;19(12):2767–2796. doi: 10.1093/cercor/bhp055.

- Bishop M. Happen can’t hear: An analysis of code-blends in hearing, native signers of American Sign Language. Sign Language Studies. 2010;11(2):205–240. doi: 10.1353/sls.2010.0007.

- Bishop M, Hicks S. Orange eyes: Bimodal bilingualism in hearing adults from deaf families. Sign Language Studies. 2005;5(2):188–230. doi: 10.1353/sls.2005.0001.

- Bobb SC, Wodniecka Z. Language switching in picture naming: What asymmetric switch costs (do not) tell us about inhibition in bilingual speech planning. Journal of Cognitive Psychology. 2013;25(5):568–585. doi: 10.1080/20445911.2013.792822.

- Braun AR, Guillemin A, Hosey L, Varga M. The neural organization of discourse – An H₂¹⁵O-PET study of narrative production in English and American Sign Language. Brain. 2001;124:2028–2044. doi: 10.1093/brain/124.10.2028.

- Buccino G, Binkofski F, Fink GR, Fadiga L, Fogassi L, Gallese V, et al. Action observation activates premotor and parietal areas in a somatotopic manner: An fMRI study. European Journal of Neuroscience. 2001;13(2):400–404. http://dx.doi.org/10.1046/j.1460-9568.2001.01385.x. [PubMed] [Google Scholar]

- Callan DE, Jones JA, Munhall K, Callan AM, Kroos C, Vatikiotis-Bateson E. Neural processes underlying perceptual enhancement by visual speech gestures. NeuroReport. 2003;14(17):2213–2218. doi: 10.1097/00001756-200312020-00016. http://dx.doi.org/10.1097/01.wnr.0000095492.38740.8f. [DOI] [PubMed] [Google Scholar]

- Calvert GA, Campbell R, Brammer MJ. Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Current Biology. 2000;10(11):649–657. doi: 10.1016/s0960-9822(00)00513-3. http://dx.doi.org/10.1016/s0960-9822(00)00513-3. [DOI] [PubMed] [Google Scholar]

- Chee MWL, Hon N, Lee HL, Soon CS. Relative language proficiency modulates BOLD signal change when bilinguals perform semantic judgments. Neuroimage. 2001;13(6):1155–1163. doi: 10.1006/nimg.2001.0781. http://dx.doi.org/10.1006/nimg.2001.0781. [DOI] [PubMed] [Google Scholar]

- Chen G, Saad ZS, Nath AR, Beauchamp MS, Cox RW. FMRI group analysis combining effect estimates and their variances. Neuroimage. 2012;60(1):747–765. doi: 10.1016/j.neuroimage.2011.12.060. http://dx.doi.org/10.1016/j.neuroimage.2011.12.060. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corina DP, McBurney SL, Dodrill C, Hinshaw K, Brinkley J, Ojemann G. Functional roles of Broca’s area and SMG: Evidence from cortical stimulation mapping in a deaf signer. Neuroimage. 1999;10(5):570–581. doi: 10.1006/nimg.1999.0499. http://dx.doi.org/10.1006/nimg.1999.0499. [DOI] [PubMed] [Google Scholar]

- Costa A, Santesteban M. Lexical access in bilingual speech production: Evidence from language switching in highly proficient bilinguals and L2 learners. Journal of Memory and Language. 2004;50(4):491–511. http://dx.doi.org/10.1016/j.jml.2004.02.002. [Google Scholar]

- Courtin C, Jobard G, Vigneau M, Beaucousin V, Razafimandimby A, Herve PY, et al. A common neural system is activated in hearing non-signers to process French Sign language and spoken French. Brain Research Bulletin. 2011;84(1):75–87. doi: 10.1016/j.brainresbull.2010.09.013. http://dx.doi.org/10.1016/j.brainresbull.2010.09.013. [DOI] [PubMed] [Google Scholar]

- Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29(3):162–173. doi: 10.1006/cbmr.1996.0014. http://dx.doi.org/10.1006/cbmr.1996.0014. [DOI] [PubMed] [Google Scholar]

- Dick AS, Goldin-Meadow S, Hasson U, Skipper JI, Small SL. Co-speech gestures influence neural activity in brain regions associated with processing semantic information. Human Brain Mapping. 2009;30(11):3509–3526. doi: 10.1002/hbm.20774. http://dx.doi.org/10.1002/hbm.20774. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Diederich A, Colonius H. Intersensory facilitation in the motor component – A reaction-time analysis. Psychological Research-Psychologische Forschung. 1987;49(1):23–29. http://dx.doi.org/10.1007/bf00309199. [Google Scholar]

- Diederich A, Colonius H. Bimodal and trimodal multisensory enhancement: Effects of stimulus onset and intensity on reaction time. Perception & Psychophysics. 2004;66(8):1388–1404. doi: 10.3758/bf03195006. http://dx.doi.org/10.3758/bf03195006. [DOI] [PubMed] [Google Scholar]

- Emmorey K, Borinstein HB, Thompson R, Gollan TH. Bimodal bilingualism. Bilingualism: Language and Cognition. 2008;11(1):43–61. doi: 10.1017/S1366728907003203. http://dx.doi.org/10.1017/S1366728907003203. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Emmorey K, McCullough S, Mehta S, Grabowski TJ. How sensory-motor systems impact the neural organization for language: Direct contrasts between spoken and signed language. Frontiers in Psychology. 2014;5:13. doi: 10.3389/fpsyg.2014.00484. http://dx.doi.org/10.3389/fpsyg.2014.00484. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Emmorey K, Petrich JAF, Gollan TH. Bilingual processing of ASL-English code-blends: The consequences of accessing two lexical representations simultaneously. Journal of Memory and Language. 2012;67(1):199–210. doi: 10.1016/j.jml.2012.04.005. http://dx.doi.org/10.1016/j.jml.2012.04.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Finney EM, Fine I, Dobkins KR. Visual stimuli activate auditory cortex in the deaf. Nature Neuroscience. 2001;4(12):1171–1173. doi: 10.1038/nn763. http://dx.doi.org/10.1038/nn763. [DOI] [PubMed] [Google Scholar]

- Grafton ST. Embodied cognition and the simulation of action to understand others. Year in Cognitive Neuroscience. 2009;2009(1156):97–117. doi: 10.1111/j.1749-6632.2009.04425.x. http://dx.doi.org/10.1111/j.l749-6632.2009.04425.x. [DOI] [PubMed] [Google Scholar]

- Green A, Straube B, Weis S, Jansen A, Willmes K, Konrad K, et al. Neural integration of iconic and unrelated coverbal gestures: A functional MRI study. Human Brain Mapping. 2009;30(10):3309–3324. doi: 10.1002/hbm.20753. http://dx.doi.org/10.1002/hbm.20753. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hagoort P. On Broca, brain, and binding: A new framework. Trends in Cognitive Sciences. 2005;9(9):416–423. doi: 10.1016/j.tics.2005.07.004. http://dx.doi.org/10.1016/j.tics.2006.07.004. [DOI] [PubMed] [Google Scholar]

- Hickok G, Bellugi U, Klima ES. The neurobiology of sign language and its implications for the neural basis of language. Nature. 1996;381(6584):699–702. doi: 10.1038/381699a0. http://dx.doi.org/10.1038/381699a0. [DOI] [PubMed] [Google Scholar]

- Hickok G, Love-Geffen T, Klima ES. Role of the left hemisphere in sign language comprehension. Brain and Language. 2002;82(2):167–178. doi: 10.1016/s0093-934x(02)00013-5. http://dx.doi.org/10.1016/s0093-934x(02)00013-5. [DOI] [PubMed] [Google Scholar]

- Holle H, Gunter TC, Rueschemeyer SA, Hennenlotter A, Iacoboni M. Neural correlates of the processing of co-speech gestures. Neuroimage. 2008;39(4):2010–2024. doi: 10.1016/j.neuroimage.2007.10.055. http://dx.doi.org/10.1016/j.neuroimage.2007.10.055. [DOI] [PubMed] [Google Scholar]

- Hubbard AL, Wilson SM, Callan DE, Dapretto M. Giving speech a hand: Gesture modulates activity in auditory cortex during speech perception. Human Brain Mapping. 2009;30(3):1028–1037. doi: 10.1002/hbm.20565. http://dx.doi.org/10.1002/hbm.20565. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Illes J, Francis WS, Desmond JE, Gabrieli JDE, Glover GH, Poldrack R, et al. Convergent cortical representation of semantic processing in bilinguals. Brain and Language. 1999;70(3):347–363. doi: 10.1006/brln.1999.2186. http://dx.doi.org/10.1006/brln.1999.2186. [DOI] [PubMed] [Google Scholar]

- Kendon A. Gesticulation and speech: Two aspects of the process of utterance. The Hague: Mouton; 1980. [Google Scholar]

- Kircher T, Straube B, Leube D, Weis S, Sachs O, Willmes K, et al. Neural interaction of speech and gesture: Differential activations of metaphoric coverbal gestures. Neuropsychologia. 2009;47(1):169–179. doi: 10.1016/j.neuropsychologia.2008.08.009. http://dx.doi.org/10.1016/j.neuropsychologia.2008.08.009. [DOI] [PubMed] [Google Scholar]

- Laurienti PJ, Kraft RA, Maldjian JA, Burdette JH, Wallace MT. Semantic congruence is a critical factor in multisensory behavioral performance. Experimental Brain Research. 2004;158(4):405–414. doi: 10.1007/s00221-004-1913-2. http://dx.doi.org/10.1007/s00221-004-1913-2. [DOI] [PubMed] [Google Scholar]

- Leonard MK, Ramirez NF, Torres C, Travis KE, Hatrak M, Mayberry RI, et al. Signed words in the congenitally deaf evoke typical late lexicosemantic responses with no early visual responses in left superior temporal cortex. Journal of Neuroscience. 2012;32(28):9700–9705. doi: 10.1523/JNEUROSCI.1002-12.2012.

- MacSweeney M, Campbell R, Calvert GA, McGuire PK, David AS, Suckling J, et al. Dispersed activation in the left temporal cortex for speech-reading in congenitally deaf people. Proceedings of the Royal Society B – Biological Sciences. 2001;268(1466):451–457. doi: 10.1098/rspb.2000.0393.

- MacSweeney M, Campbell R, Woll B, Giampietro V, David AS, McGuire PK, et al. Dissociating linguistic and nonlinguistic gestural communication in the brain. Neuroimage. 2004;22(4):1605–1618. doi: 10.1016/j.neuroimage.2004.03.015.

- MacSweeney M, Woll B, Campbell R, Calvert GA, McGuire PK, David AS, et al. Neural correlates of British Sign Language comprehension: Spatial processing demands of topographic language. Journal of Cognitive Neuroscience. 2002a;14(7):1064–1075. doi: 10.1162/089892902320474517.

- MacSweeney M, Woll B, Campbell R, McGuire PK, David AS, Williams SCR, et al. Neural systems underlying British Sign Language and audiovisual English processing in native users. Brain. 2002b;125:1583–1593. doi: 10.1093/brain/awf153.

- Mayberry RI, Chen JK, Witcher P, Klein D. Age of acquisition effects on the functional organization of language in the adult brain. Brain and Language. 2011;119(1):16–29. doi: 10.1016/j.bandl.2011.05.007.

- McCullough S, Saygin AP, Korpics F, Emmorey K. Motion-sensitive cortex and motion semantics in American Sign Language. Neuroimage. 2012;63(1):111–118. doi: 10.1016/j.neuroimage.2012.06.029.

- McNeill D. Hand and mind: What gestures reveal about thoughts. Chicago, IL: University of Chicago; 1992.

- Meuter RFI, Allport A. Bilingual language switching in naming: Asymmetrical costs of language selection. Journal of Memory and Language. 1999;40(1):25–40. doi: 10.1006/jmla.1998.2602.

- Miller J. Time course of coactivation in bimodal divided attention. Perception & Psychophysics. 1986;40. doi: 10.3758/bf03203025.

- Molholm S, Ritter W, Javitt DC, Foxe JJ. Multisensory visual-auditory object recognition in humans: A high-density electrical mapping study. Cerebral Cortex. 2004;14(4):452–465. doi: 10.1093/cercor/bhh007.

- Neville HJ, Bavelier D, Corina D, Rauschecker J, Karni A, Lalwani A, et al. Cerebral organization for language in deaf and hearing subjects: Biological constraints and effects of experience. Proceedings of the National Academy of Sciences of the United States of America. 1998;95(3):922–929. doi: 10.1073/pnas.95.3.922.

- Nishimura H, Hashikawa K, Doi K, Iwaki T, Watanabe Y, Kusuoka H, et al. Sign language ‘heard’ in the auditory cortex. Nature. 1999;397(6715):116. doi: 10.1038/16376.

- Park HRP, Badzakova-Trajkov G, Waldie KE. Language lateralization in late proficient bilinguals: A lexical decision fMRI study. Neuropsychologia. 2012;50(5):688–695. doi: 10.1016/j.neuropsychologia.2012.01.005.

- Perani D, Abutalebi J. The neural basis of first and second language processing. Current Opinion in Neurobiology. 2005;15(2):202–206. doi: 10.1016/j.conb.2005.03.007.

- Perani D, Abutalebi J, Paulesu E, Brambati S, Scifo P, Cappa SF, et al. The role of age of acquisition and language usage in early, high-proficient bilinguals: An fMRI study during verbal fluency. Human Brain Mapping. 2003;19(3):170–182. doi: 10.1002/hbm.10110.

- Perani D, Dehaene S, Grassi F, Cohen L, Cappa SF, Dupoux E, et al. Brain processing of native and foreign languages. NeuroReport. 1996;7(15–17):2439–2444. doi: 10.1097/00001756-199611040-00007.

- Petitto LA, Katerelos M, Levy BG, Gauna K, Tétreault K, Ferraro V. Bilingual signed and spoken language acquisition from birth: Implications for the mechanisms underlying early bilingual language acquisition. Journal of Child Language. 2001;28(2):453–496. doi: 10.1017/s0305000901004718.

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, Evans AC. Speech-like cerebral activity in profoundly deaf people processing signed languages: Implications for the neural basis of human language. Proceedings of the National Academy of Sciences of the United States of America. 2000;97(25):13961–13966. doi: 10.1073/pnas.97.25.13961.

- Puce A, Syngeniotis A, Thompson JC, Abbott DF, Wheaton KJ, Castiello U. The human temporal lobe integrates facial form and motion: Evidence from fMRI and ERP studies. Neuroimage. 2003;19(3):861–869. doi: 10.1016/s1053-8119(03)00189-7.

- Sakai KL, Tatsuno Y, Suzuki K, Kimura H, Ichida Y. Sign and speech: Amodal commonality in left hemisphere dominance for comprehension of sentences. Brain. 2005;128(Pt 6):1407–1417. doi: 10.1093/brain/awh465.

- Söderfeldt B, Ingvar M, Rönnberg J, Eriksson L, Serrander M, Stone-Elander S. Signed and spoken language perception studied by positron emission tomography. Neurology. 1997;49(1):82–87. doi: 10.1212/wnl.49.1.82.

- Straube B, Green A, Weis S, Chatterjee A, Kircher T. Memory effects of speech and gesture binding: Cortical and hippocampal activation in relation to subsequent memory performance. Journal of Cognitive Neuroscience. 2009;21(4):821–836. doi: 10.1162/jocn.2009.21053.

- Straube B, Green A, Weis S, Kircher T. A supramodal neural network for speech and gesture semantics: An fMRI study. PLoS ONE. 2012;7(11):e51207. doi: 10.1371/journal.pone.0051207.

- Straube B, He YF, Steines M, Gebhardt H, Kircher T, Sammer G, et al. Supramodal neural processing of abstract information conveyed by speech and gesture. Frontiers in Behavioral Neuroscience. 2013;7:120. doi: 10.3389/fnbeh.2013.00120.

- Suied C, Bonneel N, Viaud-Delmon I. Integration of auditory and visual information in the recognition of realistic objects. Experimental Brain Research. 2009;194(1):91–102. doi: 10.1007/s00221-008-1672-6.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme; 1988.

- Vaina LM, Solomon J, Chowdhury S, Sinha P, Belliveau JW. Functional neuroanatomy of biological motion perception in humans. Proceedings of the National Academy of Sciences of the United States of America. 2001;98(20):11656–11661. doi: 10.1073/pnas.191374198.

- van Heuven WJB, Dijkstra T. Language comprehension in the bilingual brain: fMRI and ERP support for psycholinguistic models. Brain Research Reviews. 2010;64(1):104–122. doi: 10.1016/j.brainresrev.2010.03.002.

- Wartenburger I, Heekeren HR, Abutalebi J, Cappa SF, Villringer A, Perani D. Early setting of grammatical processing in the bilingual brain. Neuron. 2003;37(1):159–170. doi: 10.1016/s0896-6273(02)01150-9.

- Weisberg J, Koo DS, Crain KL, Eden GF. Cortical plasticity for visuospatial processing and object recognition in deaf and hearing signers. Neuroimage. 2012;60(1):661–672. doi: 10.1016/j.neuroimage.2011.12.031.

- Willems RM, Ozyurek A, Hagoort P. When language meets action: The neural integration of gesture and speech. Cerebral Cortex. 2007;17(10):2322–2333. doi: 10.1093/cercor/bhl141.
